Abstract

Language models and other recent machine learning paradigms blur the distinction between generative and discriminative tasks, in a continuum that is regulated by the degree of pre- and post-supervision that is required from users, as well as the tolerated level of error. In few-shot inference, we need to find a trade-off between the number and cost of the solved examples that have to be supplied, those that have to be inspected (some of them accurate but others needing correction) and those that are wrong but pass undetected. In this paper, we define a new Supply-Inspect Cost Framework, associated graphical representations and comprehensive metrics that consider all these elements. To optimise few-shot inference under specific operating conditions, we introduce novel algorithms that go beyond the concept of rejection rules in both static and dynamic contexts. We illustrate the effectiveness of all these elements for a transformative domain, data wrangling, for which language models can have a huge impact if we are able to properly regulate the reliability-usability trade-off, as we do in this paper.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

In many machine learning (ML) applications, the degree of automation is partial by definition and dominated by trade-offs. Users need to supervise the process at different stages, mostly through the labelling of training or contextualising examples, and the inspection of some of the results from the ML system, to check that the outcome meets the desired quality. In many tasks, especially those of generative character or when predictions are structured, the creation or labelling of examples is more costly than their inspection. For instance, Fig. 1 shows a transformation where visually inspecting the result is much faster than writing it directly. Many tedious manipulation tasks are of this kind, such as wrangling with spreadsheets and other sources of text and data.

In recent times, few-shot learning [1] has been proposed as a new machine learning paradigm able to learn from only a few supervised examples, thus alleviating the problem of having large labelled training sets. Few-shot approaches have been successfully applied in areas such as fault diagnosis [2] and image semantic segmentation [3, 4].

Example of a name transformation problem. In a few-shot inference session with a language model (prompt not shown), \(n_s\) examples were completely supplied by a human (in blue) and the rest (\(n_o\)) were completed by the system. From these, \(n_i\) were inspected, of which \(n_v\) were correct and validated (in turquoise) and \(n_c\) were wrong (in orange) and corrected (in purple). Finally, the remaining examples \(n_u\) were not inspected, of which \(n_a\) were accurate and \(n_w\) were wrong (in red). The horizontal solid lines represent the thresholds for the two main choices to be made: how many examples are supplied (\(n_s\)), and inspected (\(n_i\)). Note that the only truly rejected instances are the ones in orange, crossed out by the user

Some recent language models (LMs) such as the GPT family [5, 6], PanGu-\(\alpha \) [7], PaLM [8], BLOOM [9] or Llama [10] have excelled at few-shot inference, where a task is solved by supplying a small set of correct examples formatted as a prompt [11]. The quality of the completion usually depends on the number of supplied examples \(n_s\). For instance, 5-shot inference is usually better than 2-shot inference, but requires more effort from the user. Both the cost of supplying and the cost of inspecting each example are elements of the operating condition. On top of this, some tasks or users may have different error tolerances, which is another component of the operating condition. The latter can be adjusted by the use of reject options based on a confidence threshold t [12,13,14]. Some completed examples have sufficient confidence to go through, but others are rejected to another system. However, if the user inspects some of these examples, and decides to correct them, they immediately become good examples that could be used to retrain or tune the model. In the case of few-shot learning with LMs, they could be used to enlarge and rerun the prompt with better accuracy and confidence estimation for new examples. This shows that the traditional reject option approach is insufficient: to account for this situation, we need a new framework and algorithms that can deal interactively in this situation.

We look at the balance between reliability and usability to determine the optimal number of few-shot examples. This approach aims to minimise the total cost of providing and exploring examples, and of accounting for undetected errors. Unlike active learning [15], our exploration does not rely on the training algorithm to select examples. Instead, an external process takes responsibility for selecting the example size and identifying the best cardinality \(n_s\) and threshold t based on the prevailing operating conditions. This departure from active learning is crucial because the examples that add the most value to active learning are the same ones that could potentially increase the frequency of costly \(n_c\) cases. Compared to active learning (where more examples are used for training the model) and threshold choice for reject options (where this is decided after the model has been learned), here we need to play with the prompt (and the number of instances used in the few-shot process, interactively) to reach an optimal trade-off.

To show the effectiveness of our approach, we choose an application where both reliability and usability are critical: data wrangling transformations [16]. Also, the degree of automation is partial by definition and dominated by trade-offs [17]. The user has to give at least one instance of the transformation so that most of the rest are completed by the system, but could give more instances if that compensates for fewer examples the user has to inspect and possibly correct, and of course fewer errors. We apply our algorithms to 123 tasks from 7 different domains, for which we also estimate reasonable operating conditions from a human study. This represents the first benchmark with annotated human reliability-usability conditions for the evaluation of LMs.

The main contributions of this paper are:

-

1.

We formalise a novel methodology for few-shot inference based on the trade-off between reliability and usability through a new cost framework integrating all the relevant elements in few-shot learning, including the number of examples provided, examples inspected, and errors not detected.

-

2.

We analyse how the number of examples provided to the model affects not only the accuracy of the outputs but also the model confidence, represented as logprobs.

-

3.

We devise an original graphical representation called ‘supply-inspect cost surfaces’, as the method for selecting the optimal thresholds (number of supplied examples and the degree for inspection) given the operating condition. We show that the volume under this surface, when the axes conform with the expected operating distribution, is equal to the expected cost.

-

4.

We establish several innovative static and dynamic algorithms to reduce the expected cost given the operating condition. Their performance is presented both experimentally and theoretically, showing that these algorithms approximate the optimal trade-off between reliability and usability.

-

5.

We release a benchmark containing a substantial number of 123 tasks across 7 domains. This benchmark is annotated with information on the plausible range of operating conditions derived from real questionnaires completed by human users.

The paper is structured as follows. Section Supply-inspect cost framework introduces the framework to formalise diverse costs for few-shot learners. In Sect. Threshold choice method, we propose different threshold methods that can be employed for the addressed problem. Section Supply-inspect surfaces and expected cost includes some theoretical results about supply-inspect surfaces and the expected cost. The experimental setting is described in Sect. Experimental design, while the results of the experiments are discussed in Sect. Results. Lastly, we include a section for related work and a final section with the closing remarks, including a discussion about the wide applicability of this work, its limitations and questions for future work.

Supply-inspect cost Framework

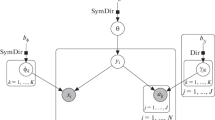

Consider a problem space \({{\mathcal {D}}}\) for which a human user wants to solve a finite set \(D \subset {{\mathcal {D}}}\) of \(n=|D |\) instances \(x \in D\) as accurately and efficiently as possible. The problem may be discriminative or generative. With the help of an AI model M that can do few-shot ‘learning’, the user may choose a small set of examples \(D_s \subset D\), with \(n_s = |D_s |\), add a correct output for each of them, and supply the labelled dataset to the model. M is now contextualised with \(D_s\) (e.g., via a prompt) and outputs the answers for \(D_o = D {\setminus } D_s\). If the user is concerned by the errors of the model, a possible solution could be to increase \(n_s\), since the results for \(D_o\) are expected to be better as we provide more information to M.

However, reaching high reliability with this schema for some few-shot inference systems such as LMs may be infeasible, even with large \(D_s\). If the error tolerance is low, the user may introduce a reject option [14, 18, 19]. In the most common incarnation of this schema, if the model outputs a confidence value \({\hat{p}}(x)\), e.g., the probability of being correct for each instance x, we can define a reject rule: if \({\hat{p}}(x) \le t_r\), with \(t_r\) being the reject threshold, then the user will not use the output of the model. But the rejected examples can be manually inspected by the user and solve them herself. This is what Fig. 2 shows for a dates processing problem. Looking at the left plot (1-shot), for different reject thresholds (shown on the \(x\)-axis) and \(n = 32\) examples, the proportion of accurate, wrong and rejected examples evolves from about 85% accurate vs 12% wrong for \(t_r=0\) (no rejection) and 97% rejected for \(t_r=1\) (being the supplied examples the remaining 3%). The hot spot is found somewhere between \(t_r= 0.4\) and \(t_r=0.6\), with very few errors but the system automating more than 80% of the examples. Of course, this may be considered an insufficient automation with an unacceptable number of errors, and the alternative is to supply the model with further labelled examples. This is what we see in Fig. 2, where \(n_s\) is in (1..4). In this particular example, we see that the improvement saturates for \(n_s = 3\) and a very good spot is found in that plot for \(t_r = 0.7\), giving about 90% accurate results with the rest being rejected.

Reject option behaviour averaged for 10 dates formatting problems with \(n = 32\) instances each. The curves show average proportion of examples (supplied \(\frac{n_s}{n}\) in blue, accurate \(\frac{n_a}{n}\) in green, wrong \(\frac{n_w}{n}\) in red and rejected \(\frac{n_r}{n}\) in grey) as we increase the reject threshold \(t_r\) in the \(x\)-axis. The four plots show the evolution for different values of \(n_s\) in (1..4)

This traditional view of rejection neglects an important aspect: many of the initially rejected examples were actually correct! Rather than rejecting the output of the model, the user has the option of inspecting these unreliable examples. There are two possible outcomes to the inspection: sometimes the user has to correct the example, but in many other cases the user only needs to validate it. The latter scenario generally requires much less effort than the former. In Fig. 1, we can observe this distinction through the examples marked in orange, which represent incorrect instances that required correction, and those marked in turquoise, which were verified as correct and could then be confidently used by the model.

This set of inspected examples we now denote by \(D_i \subset D_o\). The big insight and refinement from the concept of rejection is that we can split \(D_i\) into two different sets, \(D_v\), the examples correctly labelled by M and hence validated by the user, and \(D_c\), the examples incorrectly labelled by M, which must also be corrected (these are the truly rejected ones). Finally, the examples that go uninspected (\(D_u\)) can also be divided into accurate \(D_a\) and wrong \(D_w\). Following the previous notation, we have that \(n_{\bullet }= |D_{\bullet } |\) for \({\bullet }\in \{a,c,u,v,w\}\), where \(n = n_s + n_i + n_u\), \(n_i=n_v+n_c\) and \(n_u=n_a+n_w\), which is what we see in Fig. 1. We usually want \(n_s\) very low and \(n_i\) low for usability, with \(n_w\) very low for reliability.

Now let us consider several cost functions \(f_{\bullet }\) for each of the previous sets \(D_{\bullet }\) as a function of the number of elements \(n_{\bullet }\). The global cost to minimise is:

It is customary to define utility functions that depend linearly on the number of examples. Under this assumption, we have:

Proposition 1

Assuming all functions \(f_\bullet \) are linear in \(n_\bullet \) of the form \(f_\bullet (n_\bullet ) = c_\bullet \cdot n_\bullet \), we have that:

where \(c_s\) is the unitary cost for the user to solve an example, \(c_i\) is the unitary cost for the user to inspect an example and \(c_w\) is the unitary cost of an unspotted wrong example.

The proofs for this and all the other theoretical results in the paper can be found in the appendix.

Proposition 1 establishes that, in principle, we only need to know three cost constants: \(c_s\), \(c_i\) and \(c_w\), which entail only two degrees of freedom, as a multiplicative factor over all costs does not change a selection. This means that we can use ratios instead, and we define the operating condition as:

Similarly, any solution to this problem only needs two thresholds. We can first determine \(n_s\), i.e., how many examples the user supplies, as the quality of the inferences will depend on it (and hence all the other \(n_{\bullet }\)). For this, we will seek for a good threshold \(t_s\) statically and dynamically in the following sections. In a few-shot scenario, the \(n_s\) that derives from \(t_s\) is expected to be a small number. Once \(D_s\) is given to the model, we get the confidence for all the other examples \(D_o\). From here, a static method should determine the inspection threshold \(t_i\) (a confidence below which we decide to inspect, replacing the threshold \(t_r\) in the traditional reject option scenario). This will determine the numbers \(n_a\), \(n_c\), \(n_v\) and \(n_w\). We can integrate both thresholds (the two horizontal lines in Fig. 1) into a vector \(\textbf{t}\):

The supply threshold \(t_s \in [0,1]\) determines

with \(\alpha > 1\) being a large constant so that the scale focuses on small sets \(D_s\). The inspection threshold \(t_i \in [0,1]\) sets

where \({\hat{p}}(x)\) is the model’s confidence for each instance x.

We can now rewrite Eq. 1 as a function of vectors \(\textbf{t}\) and \(\textbf{c}\). Since the operating condition \(\textbf{c}\) in Eq. 2 only has two degrees of freedom, the cost just differs by a multiplicative factor \(c_w\):

Proposition 2

Q can be expressed on the thresholds \(\textbf{t}\) and only the two components of \(\textbf{c}\):

From now on, we will simply consider \(c_w=1\) being the cost unit, so that \(c_s\) and \(c_i\) are the two components in \(\textbf{c}\), which we will call supply cost (ratio) and inspect cost (ratio).

Given \(\textbf{t}\) and \(\textbf{c}\), in order to calculate Q from a test set, we proceed as follows. On the test set of n examples, we denote by p(x) (with \(p(x) \in \{0,1\}\) for all x) whether the model is right (1) or wrong (0) with an example x. We have

and

This completes all the \(n_{\bullet }\) for calculating Q.

Algorithm 1 implements the definition of Q as per Propositions 1 and 2. Note that, this algorithm performs a sample in line 1 (the notation \({\mathop {\sim }\limits ^{n_s}}\) means a sample of size \(n_s\)), so the exact actual cost when given fixed threshold and cost vectors \(\textbf{t}\) and \(\textbf{c}\) would be obtained by considering all possible combinatorial samples of \(n_s\) elements from n without replacement \({n \atopwithdelims ()n_s}\). But as the order of examples for LMs matters [20], the exact Q would be given by considering all permutations. In practice, making the sample multiple times and averaging the results can give a good approximation for Q, which is what the parameter m means (number of samples).

Threshold choice methods

A threshold choice method T takes a cost vector \(\textbf{c}\) and possibly other parameters and returns \(\textbf{t}\) for a given model M.

Optimal method \(T^o\)

If we have access to the true p, we can easily define the optimal threshold choice method:

When we use this method to derive the threshold, we have \(Q(\textbf{t}^*;\textbf{c})\), represented more shortly as \(Q^o(\textbf{c})\). The optimal threshold can be calculated with Algorithm 2 which is an exhaustive grid searchFootnote 1 looking for the optimal threshold \(\mathbf {t^*}\), as defined in the \(T^o\) threshold method. It is implemented by iterating on the number of supplied examples \(s\in [1..n]\) (first loop starting at line 3) and threshold \(\theta \in [0..1]\) using a sufficient resolution \(\epsilon \) (inner loop starting at line 5). \(\epsilon \) must be small enough to find any threshold \(\theta \) that could appear between two consecutive \({\hat{p}}(x)\).

Fixed method \(T^\phi \)

In practice, we do not have access to the true p, so any method will usually give suboptimal results when exploring the trade-offs. For instance, the higher \(t_s\) the higher the part of the cost that comes from \(n_s\). However, this will usually entail better predictions and confidence, reducing the number of examples \(n_i\) that have to be inspected and the final number of wrong examples \(n_w\). So it seems the first choice must be \(t_s\). We can assume a constant \(n_s\) and derive \(t_s\) accordingly, undoing \(\nu \) in Eq. 4. We could do this for both \(t_s\) and \(t_i\), choosing them in a fixed way that is independent of \(\textbf{c}\), such as \(T^\phi \) making \(t_s=\nu ^{-1}_{\alpha ,n}(5)\) and \(t_i= 0.5\). This cost, denoted by \(Q^\phi \), would be obtained if the user always supplies 5 examples and inspects the remaining examples whose estimated confidence is lower than 0.5. The fixed method completely disregards the costs.

Static method \(T^\sigma \)

We call the first family of methods using the costs static, as they derive \(t_s\) just once and then \(t_i\) from it. When the algorithm decides \(t_s\) it still does not have access to the estimated probabilities. This family of methods makes the assumption that for \(n_s=0\) there is a baseline proportion of examples that will be right and this proportion usually increases as \(n_s\) grows. We assume the proportion of corrected over inspected is \(\frac{n_c}{n_i}\)=\(\frac{1}{b_c}(1 - \frac{n_s}{n})\) and wrong over uninspected is \(\frac{n_w}{n_u}\)=\(\frac{1}{b_w}(1- \frac{n_s}{n})\). With this, from Proposition 1 we have:

We are given \(c_s\), \(c_i\) and n, so basically we have to find the pair \(\langle n_s, n_i\rangle \) such that the above expression is minimised, with \(n_s + n_i \le n\), \(n_s > 0\), \(n_i \ge 0\). This can be done with linear programming or any other solver, discard \(n_i\) and then keep \(n_s\) for the next step (and \(t_s\) comes from \(\nu ^{-1}\) in Eq. 4).

Once \(n_s\) has been decided, we choose \(n_s\) examples randomly from D that are labelled (by a human H) and supplied (\(D_s)\) to the model M, getting the results and probabilities for all other examples \(D_o\). We calculate \(n_i\) using Eq. 5. If we take \({\hat{p}}(x)\) as good estimates or at least perfectly calibrated then

and

Using all this in Proposition 2, we just need to minimise:

This is what Algorithm 3 calculates. It first determines the values \(n_s\) and \(n_i\) that minimise function g but only \(n_s\) is kept (line 2) to be used for selecting the sample \(D_s\) (line 4) to be labeled by the user (line 5). This supplied cost is calculated in line 6. The model M is prompted with \(D_s\) (line 7). Then, the model’s confidence for \(D_o\) (the remaining examples in D) is obtained (line 9) and used for ordering the examples in \(D_o\) in increasing order of predicted confidence (line 10). Finally, the for loop (lines 12–20) performs an exhaustive search of the best inspection threshold \(t_i\) (line 18) for which the overall cost (lines 13 to 15) is minimum. Note that, in Algorithm 3 we cannot really consider that the method looks for all subsets \(D_s\) of size \(n_s\) in D, as trying each of them in practice will incur a cost from the human. Consequently, the static Algorithm 3 only has one run. In order to evaluate this and other methods (e.g., the dynamic one), we did repetitions outside the algorithm. Additionally, in Algorithm 3 the calculations using \({\hat{p}}\) consider the sums over decreasing index ranges, i.e., \(x \in D_p[1:0]\) and \(D_o[(n_o+1:n_o)]\) to be empty, and assume no ties (e.g., by adding a small random number to all \({\hat{p}}(x)\)).

Dynamic method \(T^\delta \)

The static algorithm assumes that the examples to be supplied to the model must be sampled at the beginning, and then use the model for the rest of the examples. Instead, we could take an incremental approach, where we supply very few examples \(D_s\) as start (let us say \(|D_s |= s_0\)), and infer the outputs for all the rest (\(D_o\)). While this may be very conservative in terms of \(c_s\) and may give poor results at this point, we can already use the model to rank the examples in \(D_o\) and choose just very few \(D_i\) for inspection (let us say \(|D_i |= i_{\oplus }\)). The insightful observation comes when we realise that some of them will be correct (and hence validated, \(D_v\)) and some of them will be incorrect (and hence corrected, \(D_c\)), but all of them can be reused for another iteration with the model with \(D_s \cup D_v \cup D_c\) examples. Interestingly, while the new \(n_s\) includes all these examples, the elements in \(D_c\) have been inspected and supplied (with cost \(c_i + c_s\)) but the elements in \(D_v\) have only been validated (with cost \(c_i\)). This may represent an important saving, as the human can supply \(n_s\) examples to the model with lower cost than the original \(c_s\cdot n_s\).

This observation leads to Algorithm 4. The algorithm takes the usual parameters but also \(s_0\), \(i_{\oplus }\), \(s_\star \) (maximum number of iterations) and rand (true if the examples to be inspected are selected randomly, and false if it is done following \({\hat{p}}\)), and it returns the thresholds \(t_s\) and \(t_i\) but it also returns two extra values: \(n_v\) and \(n_i\). The value \(n_v\) represents the examples that were validated by the human and hence did not incur the \(c_s\) cost. The value \(n_i\) are the examples that were inspected before they were moved to \(D_s\). These have to be used when calculating the cost of the dynamic algorithm: we have to add the cost of \(n_i\) and remove the cost of \(n_v\) when plugging Algorithms 4 and 1 together:

Regarding the parameters \(s_0\) and \(i_{\oplus }\), the smaller the better, ideally \(s_0=i_{\oplus }=1\).

If there is no tolerance for errors, any algorithm should inspect all examples. In this case, we can prove the following:

Proposition 3

When no wrong results are permitted, the dynamic algorithm \(T^\delta \) with \(s_0=i_{\oplus }=1\) and \(s_\star = |D |\) is optimal up to \(c_i\cdot (n_s-1)\) cost units provided the algorithm always orders examples by decreasing probability of being correct (more likely correct first).

Note that Proposition 3 dictates that in this extreme case where wrong examples are not allowed it could be more beneficial to choose the next \(i_{\oplus }\) by decreasing \({\hat{p}}(x)\) rather than increasing \({\hat{p}}(x)\) as Algorithm 4 does when rand is false. However, this would always increase the few-shot examples for the model with easy examples first, which would be less informative than using other more difficult (and corrected) examples. As using an increasing or decreasing order for this are extreme, we will finally use a random choice.

Supply-inspect surfaces and expected cost

For any threshold choice method \(\mu \), its supply-inspect surface is simply \(Q^\mu (\textbf{c})\) on the \(z\)-axis, where the other two axes are just the two components of the operating condition \(\textbf{c}\). Originally, the two components of \(\textbf{c}\) are ratios (as defined in Eq. 2), and they go from 0 to \(\infty \), but many values will be close to 0, as it is usual that \(c_s \ll c_w\) and \(c_i \ll c_w\). To make the space finite and better accounting for the interesting regions of the space, we introduce two normalisation functions \(h_s\) and \(h_i\), such that the \(x\)-axis is given by \(h_s(c_s)\) (the supply cost coordinate) and the \(y\)-axis is given by \(h_i(c_i)\) (the inspect cost coordinate). In what follows, we consider \(h_s(a) {\mathop {=}\limits ^{\text {def}}}h_i(a) {\mathop {=}\limits ^{\text {def}}}1-\beta ^{-a}\), with \(\beta >1\). With this we also have that the two axes are in [0, 1[ and the volume and the surface will be finite.Footnote 2 Coordinates can be mapped to costs simply by \(h_s^{-1}(x) = -\log _\beta (1-x) = c_s\) and \(h_i^{-1}(y) = -\log _\beta (1-y) = c_i\).

Fig. 3 shows a ‘supply-inspect’ surface using three techniques: the optimal, static and dynamic methods. The \(x\)-axis, ranging from 0 to 1, represents the relative cost of supplying an example compared to an incorrect result, with 0 representing a very low supplying cost. Similarly, the \(y\)-axis, also ranging from 0 to 1, reflects the relative inspection costs, with lower values indicating low inspection costs.

This visualisation helps us to understand the cost dynamics at play under different operating conditions, highlighting trade-offs and guiding the optimisation of models for practical applications. Basically, we can better understand the expectation on varying \(\textbf{c}\) beyond just a single point. If we assume a distribution \(\omega \) on operating conditions and \(\textbf{c} \sim \omega \), we have the expected cost \({\mathbb {E}}_{\textbf{c} \sim \omega } [ Q(\textbf{c}) ]\). The following holds:

Proposition 4

Consider \({{\mathcal {H}}}\) the bivariate distribution that results on applying \(h_s\) and \(h_i\) to the two dimensions of \(\omega \). If \({{\mathcal {H}}}\) is a bivariate uniform distribution, then the volume under the supply-inspect surface is the expected cost.

Corollary 1

The volume in the supply-inspect space under \(h_s(a) = h_i(a) = 1 - e^{-a}\) is equivalent to a weighted integral over the original space assuming an exponential distribution with \(\lambda =1\).

The above corollary suggests that our normalisation of the space is actually assuming an exponential distribution on the costs with parameter \(\lambda =1\). Other parameters could be explored or even other distributions in the exponential family, such as the gamma distribution, but ours serves well as a standard to represent the surfaces in a bounded space [0, 1[.

As usual in other Pareto comparisons (e.g., ROC analysis [21, 22], or objective optimisation problems [23]), when two surfaces cross, both have regions for which one is better than the other. One surface can only be safely discarded below the convex hull of some other surfaces. The volume (or expected loss) only seems an indication of how good a method is in expectation.

So far, we have considered that humans are perfect, but this is usually irrealistic, even if we take them as ground truth. In practice, we need to estimate \(e_s\) and \(e_i\) to account for the proportion of supplied examples and inspected examples respectively a human makes wrong. While this may suggest that we need to redo all our framework because of this, the following proposition and corollary show that we do not, provided we readjust the cost estimations.

Proposition 5

Consider the same conditions as Proposition 1 but we now have a proportion of human error \(e_s\) and \(e_i\) for the supplied examples and inspected examples, respectively. The new cost equation becomes:

Corollary 2

We can express the cost when human errors exist as a readjustment of the normalisation of costs:

where \(c'_s = \frac{c_s}{c_w} +e_s \) and \( c'_i = \frac{c_i}{c_w} + e_i \).

This is a very elegant adjustment, as we only need to estimate the error rates and include them in the calculation of the operating condition. Everything else remains the same.

Experimental design

As discussed in the introduction, many routine tasks involve transforming inputs into outputs, such as converting some pieces of information into some standardised form. These tasks become interesting for (semi-)automation only if humans have to supply very few examples, and the errors in the uninspected results are unlikely. Consequently, these tasks are perfectly suited for few-shot learning under the supply-inspect cost framework introduced in this paper. Accordingly, we will use a repository of tasks built over the most comprehensive benchmark for data-wrangling transformation problems to date, the Data Wrangling Dataset RepositoryFootnote 3 [24, 25], which we have extended considerablyFootnote 4 (see [26] for further information). Overall, the repository contains 123 different tasks divided into 7 different domains (dates, emails, freetext, names, phones, times and units). For every task we have 32 annotated examples where an input string is converted into a corrected or transformed version. The appendix contains full details about the tasks (Table 2) and some illustrative examples (Table 3).

The experimental goals are:

-

1.

Explore whether these problems are solvable with LMs in a few-shot fashion and determine whether there is a saturation point in the number of supplied examples.

-

2.

Study whether the number of examples provided to the model affects not only the accuracy of the outputs but also their confidence (\({\hat{p}}\)), so there is a trade-off between \(n_s\) vs \(n_c\) and \(n_w\).

-

3.

Determine how close the static and dynamic algorithms can get to the optimal cost, in comparison with the fixed method.

-

4.

Derive and use reasonable cost distributions from the human study, and analyse how results differ from the uniform case.

For the experiments, we used four GPT-3 versions: Ada, Babbage, Curie and DaVinci with approximately 350 M, 1.3B, 6.7B, and 175B parameters, respectively. Following the recommendations in the OpenAI APIFootnote 5 we used prompts following an input–output style, where the string “Input:" is used to indicate the start of the input, and the string “Output:" is used to indicate the start of the output. The line break \(\backslash \) n separates the input from the output of an example, as well as the examples in the prompt. The instances have one (one-shot) or more (few-shot) given input–output pairs of the same problem and domain, and one single input ending the prompt. The model has have to provide the output by continuing the prompt. These are two one-shot examples (from different domains: dates and times):

We obtain the confidence \({\hat{p}}\) that the model gives for the output as follows. If the model outputs the sequence of tokens \(a_1, a_2,...\), we trim the part that corresponds to the solution template. For these tokens we simply calculate the sum of the logprobs (or logarithm of probabilities that the model assigns to its generated outputs, which offers a measure of the model’s confidence in its predictions) of all items and then convert this sum into the probability \({\hat{p}}\).

As we cannot really do repetitions without incurring real extra cost to the user, we calculate Q by performing only one sample of the \(n_s\) examples from D (\(n_s\) determined by each threshold method), and a lightweight implementation of \(T^o\) (see Algorithm 5 in the appendix). This ensures that our evaluation reflects practical constraints while still generating meaningful, actionable insights.

With the intention of obtaining optimal results, we carried out experiments with other methods using specific configurations. For the \(T^\phi \) method we used \(t_s=\nu ^{-1}_{\alpha ,n}(5)\) and \(t_i= 0.5\), while for \(T^\sigma \) we used \(b_c=2\) and \(b_w=3\). For \(T^\delta \) the method was run with \(s_0 = i_{\oplus } = 1\) and a fixed number of interactions (\(s_\star =10\)). While we experimented with several other parameter settings and variants for \(T^\phi \), \(T^\sigma \) and \(T^\delta \), the results obtained were either similar or inferior to those configurations. We therefore concluded that the chosen configuration gave the best performance. Also, given the combinatorial nature that would be required to evaluate all possible subsets \(D_s\), our \(T^o\) approach serves as an upper bound estimate — an estimate that closely reflects an ideal baseline. The sensitivity of these parameters plays a crucial role in the outcome.

In order to estimate reasonable operating conditions, we conducted a questionnaire on 31 human subjects where we asked four questions for each of the seven domains. The first two questions measured the actual time for solving an instance (this time \(\tau _s\) being a proxy for \(c_s\)) and the actual time for verifying an instance (this time \(\tau _i\) being a proxy for \(c_i\)), averaged over five instances per question.

This was followed by a third subjective question asking the cost unit per time unit of a person (\(\chi /\tau \)), so that times could be converted into costs, and a fourth subjective question that asked about the cost of each error \(\chi _w\) directly. We just derived \(\chi _s = {\tau _s}\frac{\chi }{\tau }\) and \(\chi _i = {\tau _i}\frac{\chi }{\tau }\). Finally, we divided both by \(\chi _w\) to have the normalised costs in \(\textbf{c}\). That is, the estimate of the operating condition \(\hat{\textbf{c}}\) is given by \({\hat{c}}_s = \frac{\chi _s}{\chi _w}\) and \({\hat{c}}_i = \frac{\chi _i}{\chi _w}\). The results are shown in Table 1.

Mean accuracy per domain for increasing values of \(n_s\). Detailed results in Table 6 in the appendix regarding the accuracy per task for increasing values of \(n_s\)

Finally, we also considered that humans may have errors, as we discussed around Proposition 5. In our questionnaires, humans were just given one example to infer the solution for all the other examples, so the error percentages we obtained are an overestimation of what trained humans would do for these domains. Nevertheless, the adjusted costs as per corollary 2 can be found in the appendix, and the recalculation of the expected costs for all methods. Even in these extreme conditions of human errors, the dynamic method is robust.

Results

We follow the experimental goals sequentially.Footnote 6 In addressing goal (1), our main objectives were to investigate the feasibility of using LMs, to solve the problems illustrated by the Data Wrangling dataset. We also wanted to determine the exact saturation point for few-shot learning. Our analysis revealed interesting dynamics, as shown in Fig. 4. This plot illustrates the fluctuation of the accuracy achieved by the models, with the number of shots ranging from zero to ten across all the established domains. We can see an immediate, sharp increase in accuracy from zero shots to a one-shot scenario. This period of rapid growth then slows down into a more moderate and gradual increase until we reach around the 8 or 9-shot mark. After this point, the growth stabilises, suggesting the onset of a saturation point. From this data, we can confidently conduct our experiments with ten-shot at most for GPT-3, regardless of whether \(n_s\) is higher.

Proportion of \(n_a\) and \(n_w\) examples for increasing \(n_s\) with different thresholds (in colour) for the dates domain (see Fig. 11 in the appendix for all domains)

For goal (2), we focus on the model’s confidence, denoted by \({\hat{p}}\), and its evolution as the number of examples is adjusted. Similar to our findings from goal (1), we observe a stabilisation around the ninth example, as shown in Fig. 5. In Fig. 6, we illustrate the trade-off between the number of examples we provide (\(n_s\)), the correctly predicted examples (\(n_a\)), and the incorrectly predicted examples (\(n_w\)). What we are essentially visualising here is how the ratio of correctly and incorrectly predicted examples increases and decreases as we steadily increase the number of examples provided (\(n_s\)). These fluctuations occur over different thresholds, which are set according to the model’s confidence. It is important to note that within this particular example setting, the proportion of rejected examples, or \(n_r\), would be the difference between 1 and the combined sum of the hit (accurate examples) and miss (inaccurate examples) ratios.

In pursuing goal (3), we use a supply-inspect framework in which the operational conditions of each domain follow a uniform distribution, denoted here as \({{\mathcal {H}}}\) as per Proposition 4. The volumes are calculated using a trapezoidal method over a grid layout. Figure 7 positions and compares the average expected cost for each domain. The cost distributions have been determined using data from human-led responses (opaque bars) and with uniform \({{\mathcal {H}}}\) (transparent bars). This comparison sets the benchmark at an optimal level (\(T^o\)) and measures the performance of static (\(T^\sigma \)), dynamic (\(T^\delta \)) and fixed (\(T^\phi \)) methods against this ideal standard. The transparent bars in this figure show how close both the static \((T^\sigma )\) and dynamic \((T^\delta )\) algorithms are to the optimal cost for each domain. From our data, we see that \(T^\phi \) outperforms \(T^\sigma \) in five of the total domains expressed, but \(T^\delta \) remains superior to both.

Average expected costs per domain using the cost distributions from humans (opaque bars) and with uniform \({{\mathcal {H}}}\) (transparent bars) for the optimal (\(T^o\)), static (\(T^\sigma \)), dynamic (\(T^\delta \)) and fixed (\(T^\phi \)) methods. Detailed information per domain, problem and method in Table 7 in the technical appendix

To create a more realistic distribution of operating conditions, we include the results of \(c_s\) and \(c_i\) from the human-driven questionnaires, in line with our goal (4). Rather than simply averaging these operating conditions, we analyse each human response as a unique operating condition, expressed as \(\langle c_s, c_i\rangle \). Each corresponding result of Q is calculated individually before being averaged together. Figure 7 (opaque bars) shows these results. We see a decrease in the overall magnitudes as the values become skewed towards lower ratios. A visual representation of this skew can be seen in Table 1. In six of the seven domains, \(T^\phi \) lags behind, with the dynamic \(T^\delta \) algorithm outperforming in all seven domains. In particular, \(T^\delta \) comes very close to the optimal result in many cases. It should be emphasised that these data do not suggest that \(T^\delta \) consistently outperforms the rest in all operating conditions. In fact, when compared to \(T^\phi \), which is optimal for a single operating condition, it is impossible to achieve complete dominance with \(T^\delta \). In general, surfaces cross as we saw in Fig. 3.

For a more detailed breakdown of our findings and results by domain, problem and methodology, we refer readers to Table 7 in our technical appendix.

Related work

The tension between reliability and usability goes beyond AI, since usability is related to the type and degree of supervision required from humans while providing a good quality of service [28,29,30]. However, many new tasks in AI, such as those provided by generative models [31,32,33], challenge this assumption. For instance, if a model generates images, inspecting and validating them is much cheaper for the user than creating or correcting them. An illustrative situation is few-shot learning [1, 34]. This is an important and increasingly more common way of using LMs, where template prompts accommodate an arbitrary number of examples [5, 11, 35,36,37,38,39].

The extension of this paradigm to other modalities is expected to happen soon [40]. However, to our knowledge, no previous work on LMs or few-shot inference has considered any realistic cost model to account for the reliability-usability trade-offs of these applications.

One general way to reduce the impact of classification errors is the use of a reject option [14] which determines the examples for which the classifier abstains. Reject options have been extensively studied for binary classification by optimising a certain objective cost function [41,42,43,44,45,46] or based on ROC analysis [47, 48].

On the other hand, a trade-off between performance and number of examples provided is also related to the area of active learning, where a learner iteratively chooses the training data by asking an oracle (usually the user) to label a few unlabelled examples [15, 49, 50]. A common query strategy is uncertainty sampling where the examples with the lowest confidence are selected first. Additionally, many active learning methods try to minimise annotation costs by reducing the number of examples to be labelled at each iteration. Although the most common scenario assumes the annotation cost is the same for all examples, some approaches also consider the cases in that the annotation cost vary between instances [51,52,53,54,55]. Active learning has also been studied for learning classifiers with reject option in an active way [56, 57] as an alternative to other (passive) methods that assume that a large labelled dataset is available. Nevertheless, to our knowledge, no active learning method reuses the classifier outputs to reduce the number of examples to be labelled by the user as our framework does. Finally, in other fields similar problems have been addressed by optimisation [2] or iterative learning [58].

Our static method, based on a cost-based thresholding function, is related to reject option methods and other threshold-choice methods that consider probability estimates [59]. However, in contrast to reject option approaches, the estimated threshold does not select the examples to be rejected but to be inspected, for which the labels estimated by the model are kept and used. Our framework is also general, going beyond any particular supervised task, and being especially applicable to those ML problems of generative character, where inspection costs are much smaller than supervision costs.

The dynamic algorithm might be considered as an active learning method with query strategies based on confidence, but not precisely selecting informative examples first. Again, the key difference of a supply-inspect cost framework is that the user inspects, rather than labels, the examples, and she only corrects those that are wrong, reducing the human cost since inspection is cheaper than correction. Consequently, there is a trade-off between preventing corrections and getting information from the user. In active learning, querying examples for which the model is correct is not informative and hence not pursued.

Even if active learning does not look for a trade-off between inspecting vs supplying costs, and hence the comparison is not really meaningful, we refer the reader to the appendix E for a comparative study of our methods against active learning. We show that even with a perfect example choice strategy having no errors at all (\(Q_w = 0\)), active learning is worse than all the supply-inspect methods introduced in this paper.

Conclusions and future work

The classical reject-option model is inappropriate for many old and new applications in AI, where humans play a more fluid role of pre-supervisors (supplying solved examples) and post-supervisors (inspecting examples provided by the system, and eventually correcting them). The new general supply-inspect framework introduced in this paper captures the need for adjusting the pre- and post-supervision efforts through the supply and inspect thresholds respectively. The dynamic algorithm shows that in scenarios where it is possible (and meaningful, as they are corrected by the user) to increase the number of examples incrementally as they are validated or corrected, we can obtain better results than fixed or static threshold choices. We have shown theoretical results about the framework (contributions 1 & 2), the supply-inspect space (contribution 3) and the algorithms (contribution 4). In practice, the space should be used to analyse how the surfaces from different threshold choice methods cross, helping decisions about their use depending on the operating conditions.

We have evaluated the feasibility of the results presented in this study from both an implementation and computational perspective. From an implementation perspective, we used a repository of tasks from the Data Wrangling Dataset Repository (contribution 5), containing 123 different tasks divided into 7 different domains, providing a broad scope for learning and testing the models. Additionally, the experimental procedures, which include exploring the solvability of tasks, studying the effects of the number of examples on model performance, comparing different algorithms, and deriving cost distributions from human studies, showed the implementability of the framework. From a computational perspective, we have used four well-known public versions of the GPT-3 model with varying numbers of parameters, from 350 M to 175B. This range of model complexity allowed us the study of trade-offs between computational resources and model performance.

The setting fits few-shot inference with LMs perfectly, but it has broad applicability to a range of problems in ML where the degrees of supply and inspect effort are variable, depending on the domain or the user. We have also illustrated that while the space of operating conditions is uncertain, an exponential distribution of \(c_s\) and \(c_i\) is appropriate as an aggregated metric. Nevertheless, we have had the rare determination of estimating realistic ranges of operating conditions from humans. While human questionnaires have many biases and limitations, we leave these estimated costs as meta-data for other researchers to conduct more realistic usability-reliability studies using some new methods.

Indeed, the static and dynamic algorithms may be improved in many ways, depending on the level of sophistication and some other information available during deployment. As presented in this paper, they are foundational for two major families of threshold-choice methods for this new supply-inspect paradigm, but more methods will surely come.

For instance, in the particular use of LMs, we also see potential for more sophisticated ways of choosing examples or prompts, inspired by recent research showing that not only the distribution of examples matters but also their order, or other ways to increase performance like calibration. In more general terms, we think this paper contributes to the recent trend of analysing the deployment of ML systems more holistically and taking human factors into account.

Data availability

All the code and results, questionnaires and responses can be found in https://github.com/nandomp/Trade-OffsFew-Shot.git.

Notes

It could also be used any other optimization algorithm for finding the minimum of a function, such as gradient descent.

In what follows, the integrals will go from 0 to 1, but we have to consider the volumes are open on 1.

https://openai.com/blog/openai-api/

In compliance with the recommendations of the Science paper about reporting of evaluation results in AI [27], all the code, human questionnaire data, and results at the instance level can be found in https://github.com/nandomp/Trade-OffsFew-Shot.git.

Note that \(e_s\) simply accounts for the percentage of examples solved incorrectly, but \(e_i\) only accounts for the percentage of examples that are incorrect and not detected by the human (false negatives), but we do not consider here those examples that are correct and flagged as incorrect by the human (false positives), as they are covered by \(e_s\). We do this, as this is the interpretation in Proposition 5.

References

Wang Y, Yao Q, Kwok JT, Ni LM (2020) Generalizing from a few examples: a survey on few-shot learning. ACM Comput Surv (CSUR) 53(3):1–34

Tao H, Cheng L, Qiu J, Stojanovic V (2022) Few shot cross equipment fault diagnosis method based on parameter optimization and feature mertic. Measur Sci Technol 33(11):115005

Wang K, Liew JH, Zou Y, Zhou D, Feng J (2019) Panet: Few-shot image semantic segmentation with prototype alignment. In: proceedings of the IEEE/CVF international conference on computer vision. p. 9197–9206

Yang B, Liu C, Li B, Jiao J, Ye Q (2020) Prototype mixture models for few-shot semantic segmentation. In: Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VIII 16. Springer. p. 763–778

Brown TB, Mann B, Ryder N, Subbiah M, Kaplan J, Dhariwal P, et al. (2020) Language Models are Few-Shot Learners. In: Advances in Neural Information Processing Systems. p. 1877–1901

OpenAI. GPT-4 technical report. ArXiv. 2023;abs/2303.08774

Zeng W, Ren X, Su T, Wang H, Liao Y, Wang Z, et al (2021) PanGu-\(\alpha \): large-scale autoregressive pretrained chinese language models with auto-parallel computation. arXiv preprint arXiv:2104.12369

Chowdhery A, et al (2022) PaLM: scaling language modeling with pathways. arXiv:2204.02311 [cs]

BigScience, et al (2023) BLOOM: A 176B-parameter open-access multilingual language model. https://doi.org/10.48550/arXiv.2211.05100. arXiv:2211.05100 [cs]

Touvron H, Lavril T, Izacard G, Martinet X, Lachaux MA, Lacroix T, et al (2023) Llama: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971

Schellaert W, Martínez-Plumed F, Vold K, Burden J, Casares PA, Loe BS et al (2023) Your prompt is my command: on assessing the human-centred generality of multimodal models. J Artif Intell Res 77:377–394

Franc V, Prusa D, Voracek V (2023) Optimal strategies for reject option classifiers. J Mach Learn Res 24(11):1–49

Pugnana A, Ruggieri S (2023) A model-agnostic heuristics for selective classification. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 37. p. 9461–9469

Hendrickx K, Perini L, Van der Plas D, Meert W, Davis J (2023) Machine learning with a reject option: a survey. arXiv preprint arXiv:2107.11277

Kumar P, Gupta A (2020) Active learning query strategies for classification, regression, and clustering: a survey. J Comput Sci Technol 35:913–945

Rattenbury T, Hellerstein JM, Heer J, Kandel S, Carreras C (2017) Principles of data wrangling: practical techniques for data preparation. O’Reilly Media, Inc

Jaimovitch-López G, Ferri C, Hernández-Orallo J, Martínez-Plumed F, Ramírez-Quintana MJ (2023) Can language models automate data wrangling? Mach Learn 112(6):2053–2082

Charoenphakdee N, Cui Z, Zhang Y, Sugiyama M (2021) Classification with rejection based on cost-sensitive classification. In: International Conference on Machine Learning. PMLR. p. 1507–1517

Zhou L, Martínez-Plumed F, Hernández-Orallo J, Ferri C, Schellaert W (2022) Reject before you run: small assessors anticipate big language models. In: 1st AI Evaluation Beyond Metrics Workshop (EBEM), CEUR Proceedings, volume 3169

Lu Y, Bartolo M, Moore A, Riedel S, Stenetorp P (2021) Fantastically ordered prompts and where to find them: overcoming few-shot prompt order sensitivity. arXiv preprint arXiv:2104.08786

Flach PA (2016) ROC analysis. In: Encyclopedia of Machine Learning and Data Mining. Springer, p. 1–8

Nakas C, Bantis L, Gatsonis C (2023) ROC analysis for classification and prediction in practice. CRC Press

Tian Y, Si L, Zhang X, Cheng R, He C, Tan KC et al (2021) Evolutionary large-scale multi-objective optimization: a survey. ACM Comput Surv (CSUR) 54(8):1–34

Contreras-Ochando L, Ferri C, Hernández-Orallo J, Martínez-Plumed F, Ramírez-Quintana MJ, Katayama S (2019) Automated data transformation with inductive programming and dynamic background knowledge. In: Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases, ECML PKDD 2019. Springer, p. 735–751

Contreras-Ochando L, Ferri C, Hernández-Orallo J, Martínez-Plumed F, Ramírez-Quintana MJ, Katayama S (2019) BK-ADAPT: dynamic background knowledge for automating data transformation. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer. p. 755–759

Srivastava A, Rastogi A, Rao A, Shoeb AAM, Abid A, Fisch A, et al. (2022) Beyond the imitation game: In: Quantifying and extrapolating the capabilities of language models. arXiv preprint arXiv:2206.04615

Burnell R, Schellaert W, Burden J, Ullman TD, Martinez-Plumed F, Tenenbaum JB et al (2023) Rethink reporting of evaluation results in AI. Science 380(6641):136–138

Virani N, Iyer N, Yang Z (2020) Justification-based reliability in machine learning. In: Proc. of the AAAI Conf. on Artificial Intelligence. vol. 34. p. 6078–6085

Cabitza F, Campagner A, Balsano C (2020) Bridging the “last mile” gap between AI implementation and operation:“data awareness” that matters. Ann Transl Med 8(7). https://doi.org/10.21037/atm.2020.03.63

De A, Koley P, Ganguly N, Gomez-Rodriguez M (2020) Regression under human assistance. In: Proc. of the AAAI Conf. on Artificial Intelligence. vol. 34. p. 2611–2620

Pan Z, Yu W, Yi X, Khan A, Yuan F, Zheng Y (2019) Recent progress on generative adversarial networks (GANs): a survey. IEEE Access 7:36322–36333

Harshvardhan G, Gourisaria MK, Pandey M, Rautaray SS (2020) A comprehensive survey and analysis of generative models in machine learning. Comput Sci Rev 38:100285

Saxena D, Cao J (2021) Generative adversarial networks (GANs) challenges, solutions, and future directions. ACM Comput Surv (CSUR) 54(3):1–42

Sung F, Yang Y, Zhang L, Xiang T, Torr PH, Hospedales TM (2018) Learning to compare: relation network for few-shot learning. In: Proc. of the IEEE Conf. on computer vision and pattern recognition. p. 1199–1208

Xu S, Semnani S, Campagna G, Lam M (2020) AutoQA: from databases to Q &A semantic parsers with only synthetic training data. In: Proc. of the 2020 Conf. on Empirical Methods in Natural Language Processing (EMNLP). p. 422–434

Izacard G, Grave E (2020) Leveraging passage retrieval with generative models for open domain question answering. arXiv preprint arXiv:2007.01282

Hendrycks D, Burns C, Basart S, Zou A, Mazeika M, Song D, et al (2020) Measuring massive multitask language understanding. In: International Conf. on Learning Representations

Reynolds L, McDonell K (2021) Prompt programming for large language models: beyond the few-shot paradigm. arXiv preprint arXiv:2102.07350

Scao TL, Rush AM (2021) How many data points is a prompt worth? arXiv preprint arXiv:2103.08493

Bommasani R, Hudson DA, Adeli E, Altman R, Arora S, von Arx S, et al. (2021) On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258

Chow C (1970) On optimum recognition error and reject tradeoff. IEEE Trans Inform Theory 16(1):41–46

Herbei R, Wegkamp MH (2006) Classification with reject option. In: Canadian Journal of Statistics/La Revue Canadienne de Statistique. p. 709–721

Bartlett PL, Wegkamp MH (2008) Classification with a reject option using a hinge loss. J Mach Learn Res 9(59):1823–1840

Wegkamp M, Yuan M (2011) Support vector machines with a reject option. Bernoulli 17(4):1368–1385

Denis C, Hebiri M, Zaoui A (2020) Regression with reject option and application to kNN. arXiv preprint arXiv:2006.16597

Lee JK, Bu Y, Rajan D, Sattigeri P, Panda R, Das S, et al (2021) Fair selective classification via sufficiency. In: International Conf. on Machine Learning. PMLR. p. 6076–6086

Tortorella F (2000) An optimal reject rule for binary classifiers. In: Joint IAPR International Workshops on Statistical Techniques in Pattern Recognition (SPR) and Structural and Syntactic Pattern Recognition (SSPR). Springer. p. 611–620

Pietraszek T (2007) On the use of ROC analysis for the optimization of abstaining classifiers. Mach Learn 68(2):137–169

Settles B (2011) From theories to queries: active learning in practice. In: Active Learning and Experimental Design workshop In conjunction with AISTATS 2010. JMLR Workshop and Conf. Proc. p. 1–18

Chen X, Price E (2019) Active regression via linear-sample sparsification. In: Beygelzimer A, Hsu D, (eds) Proc. of the Thirty-Second Conf. on Learning Theory. vol. 99 of Proc. of Machine Learning Research. PMLR. p. 663–695

Margineantu DD (2005) Active cost-sensitive learning. In: Proc. of the 19th International Joint Conf. on Artificial Intelligence. p. 1622–1623

Settles B, Craven M, Friedland L (2008) Active learning with real annotation costs. In: Proc. of the NIPS workshop on cost-sensitive learning. vol. 1. Available at https://api.semanticscholar.org/CorpusID:16285026

Haertel RA, Seppi KD, Ringger EK, Carroll JL (2008) Return on investment for active learning. In: Proc. of the NIPS Workshop on cost-sensitive learning. vol. 72

Culotta A, McCallum A (2005) Reducing labeling effort for structured prediction tasks. In: Proc. of the AAAI Conf. on Artificial Intelligence,. vol. 5. p. 746–751

Fu Y, Zhu X, Li B (2013) A survey on instance selection for active learning. Knowl Inform Syst 35(2):249–283

El-Yaniv R, Wiener Y (2012) Active learning via perfect selective classification. J Mach Learn Res 13(2):255–279

Shah K, Manwani N (2020) Online active learning of reject option classifiers. In: Proc. of the AAAI Conf. on Artificial Intelligence. vol. 34. p. 5652–5659

Zhou C, Tao H, Chen Y, Stojanovic V, Paszke W (2022) Robust point-to-point iterative learning control for constrained systems: A minimum energy approach. Int J Robust Nonlinear Control 32(18):10139–10161

Hernández-Orallo J, Flach P, Ferri C (2012) A unified view of performance metrics: translating threshold choice into expected classification loss. J Mach Learn Res 13(Oct):2813–2869

Jeong D, Aggarwal S, Robinson J, Kumar N, Spearot A, Park DS (2023) Exhaustive or exhausting? Evidence on respondent fatigue in long surveys. J Dev Econ 161:102992

Acknowledgements

We thank the anonymous reviewers for their comments.

Funding

The funding has been received from ValgrAI - Valencian Graduate School and Research Network for Artificial Intelligence; the Norwegian Research Council with Grant no. 329745 (Machine Teaching for Explainable AI); Generalitat Valenciana with Grant nos. CIPROM/2022/6 (FASSLOW) and IDIFEDER/2021/05 (CLUSTERIA); the European Commission under H2020-EU with Grant no. 952215 (TAILOR); US DARPA with Grant no. HR00112120007 (RECoG-AI); the Future of Life Institute with Grant no. RFP2-152; the Spanish Ministry of Science and Innovation (MCIN/AEI/10.13039/ 501100011033) with Grant no. PID2021-122830OB-C42 (SFERA) and “ERDF A way of making Europe”; and the Spanish Ministry of Universities with Grant no. PID2022-140110OA-I00 (FISCALTICS) funded by MICIU/AEI/10.13039/501100011033 and by ERDF, EU.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Here we include the proofs of all the theoretical results presented in the paper.

Proposition 1

(in main paper) Assuming all functions \(f_\bullet \) are linear in \(n_\bullet \) of the form \(f_\bullet (n_\bullet ) = c_\bullet \cdot n_\bullet \), we have that:

where \(c_s\) is the unitary cost for the user to solve an example, \(c_i\) is the unitary cost for the user to inspect an example and \(c_w\) is the unitary cost of an unspotted wrong example.

Proof

From the original definition of cost we have:

We can first consider that uninspected but accurate instances have no cost, i.e., \(f_a = 0\). This means:

As both \(D_v\) and \(D_c\) are inspected but only \(D_c\) is corrected, we can separate the cost of inspection \(f_i\) from \(f_v\) and \(f_c\). Hence, \(f_v\) is decomposed into the cost of inspection \(f_i\) and the cost of accurate instances \(f_a\) (we are assuming it is 0), and \(f_c\) is decomposed into the cost of inspection \(f_i\) and the cost of correcting \(f_f\). Thus, we can rewrite the above equation as follows:

As \(f_i\) is linear, it is additive; so we have:

The cost of a user producing the output for an instance that is supplied initially or after detecting an error is equal, as the user has to solve the instance in both cases. So we have that \(f_s = f_f\), and \(n_i = n_v + n_c\) and we finally simplify:

This can now be expressed in terms of cost constants:

\(\square \)

Proposition 2

(in main paper) Q can be expressed on the thresholds \(\textbf{t}\) and only the two components of \(\textbf{c}\):

Proof

We can rewrite the expression in Proposition 1 as a function of the thresholds.

We now simply extract \(c_w\) as a common factor.

Because \(c_w\) is a constant multiplicative factor for any method or model, we get the result by assuming \(c_w = 1\). \(\square \)

Proposition 3

(in main paper) When no wrong results are permitted, the dynamic algorithm with \(s_0=i_{\oplus }=1\) and \(s_\star =|D |\) is optimal up to \(c_i\cdot (n_s-1)\) cost units provided the algorithm always orders examples by decreasing probability of being correct (more likely correct first).

Proof

After calling \(T^\delta \), the cost is calculated as:

In the extreme case where no wrong results are permitted and the given parameters, all inspections and corrections are inside the algorithm, no further inspections or corrections have to do in Q, and no wrong results happen, so plugging Proposition 1 we have:

Since \(s_\star = |D |= n\), all examples will be supplied (except those that are validated) and all inspected but one (the first one, since \(i_\oplus = 1\)). This leads to:

Clearly, the only way of minimising this expression is by maximising \(n_v\), which is achieved if the algorithm picks the elements that are inspected as those of highest probability of being correct first.

In order to say that this approach is almost optimal, we still need to show that Eq. A1 is not far from the optimal method. We start from Proposition 1 again, for the optimal method and full inspection we have:

Since we inspect all except the supplied, we have \(n_i = n - n_s\); plugging this and reorganising we have:

Since \(n_c = n - n_s - n_v\), we get

Comparing this expression with Eq. A1 we get the term \(c_i\cdot (n_s-1)\), which is usually small. \(\square \)

Proposition 4

(in main paper) Consider \({{\mathcal {H}}}\) the bivariate distribution that results on applying \(h_s\) and \(h_i\) to the two dimensions of \(\omega \). If \({{\mathcal {H}}}\) is a bivariate uniform distribution, then the volume under the supply-inspect surface is the expected cost.

Proof

The volume under the supply-inspect surface is given by:

As \({{\mathcal {H}}}\) is a bivariate uniform distribution, this is an expected value:

Since \({{\mathcal {H}}}\) is the result of applying \(h_s\) and \(h_i\) to \(\omega \), we just get: \({\mathbb {E}}_{\textbf{c} \sim \omega } [ Q(\textbf{c}) ]\). \(\square \)

Corollary 1

(in main paper) The supply-inspect space under \(h_s(a) = h_i(a) = 1 - e^{-a}\) is equivalent to a weighted integral over the original space assuming an exponential distribution with \(\lambda =1\).

Proof

In the original space, we can define a weighted integral as follows:

where \(\omega (a)= \lambda e^{-\lambda a}\) is the density function of the exponential distribution.

Doing integration by substitution with \(du = \omega (y)dy\) we get that \(u= \int \omega (b)db = \Omega (b) = -e^{-\lambda b}\) and \(\Omega ^{-1}(u)= \frac{-1}{\lambda }\log (-u)\). This leads to:

Doing similarly with substitution with \(dv = \omega (a)da\) we get that \(v= \int \omega (a)da = \Omega (a) = -e^{-\lambda a}\) and \(\Omega ^{-1}(v)= \frac{-1}{\lambda }\log (-v)\). Now we have:

Just making the variable changes \(x= v+1\) and \(y= u+1\) we get:

We have that \(\Omega ^{-1}(x-1) = -\frac{1}{\lambda }\log (1-x)\). Plugging it above we have:

If we just make \(h_s(a) = 1 - e^{-a}\) we have that \(h_s^{-1}(x) = -\log (1-x)\), and if we just make \(h_i(a) = 1 - e^{-a}\) we have that \(h_i^{-1}(y) = -\log (1-y)\). By plugging these two expressions into Proposition 4, and setting \(\lambda =1\) we have an expression equal to Eq. A2. \(\square \)

Proposition 5

(in main paper) Consider the same conditions as Proposition 1 but we now have a proportion of human error of \(e_s\) and \(e_i\) for the supplied examples and inspected examples respectively. The new cost equation becomes:

Proof

We extend Proposition 1 with the new errors

since the supplying error rate affects \(n_s+n_c\) examples and the inspecting error rate affects \(n_i\). By rearranging we get:

\(\square \)

Corollary 2

(in main paper) We can express the cost when human errors exist as a readjustment of the normalisation of costs, as follows:

where

Proof

From Proposition 5 and dividing by \(c_w\) as in Proposition 2, we get the expression. \(\square \)

Appendix B

In this appendix, we introduce a Lightweight Variant of \(T^o\).

Note that the Algorithm 2 we implemented in our experiments, calls Q, which has m repetitions. Alternatively, we can do a version that is more similar to the other algorithms, and iterates on j from 0 to \(n_o\) to find the optimal cut point. This would allow for higher efficiency escaping long loops for small \(\epsilon \) values. Algorithm 5 is then a lightweight version of Algorithm 2. However, the lightweight version also has a sample from D at the beginning, so a better estimation of \(T^o\) would require repetitions too, between the outer and the inner loop. Since our results for few-shot learning on GPT-3 were fixed, we always chose the same sample, and this is why we do not need the repetitions for our experiments.

Appendix C

This section includes more information about the datasets used in the experiments. Table 2 presents the name and a short description of some of the tasks included in our repository of data wrangling tasks. Some examples of the tasks are shown in 3. In this table we also include information of the number of tasks per domain. Complete details of the tasks and the examples of them can be found in the BigBench [26] benchmark repository.Footnote 7 All the code and results and visualisations can be found in https://github.com/nandomp/Trade-OffsFew-Shot.git.

Appendix D

Here, we present the calculations with human errors.

As we said, humans were not always correct in the questionnaires when supplying or inspecting examples, and this varies per domain. We estimated \(e_s\) and \(e_i\) to account for the proportion of supplied examples and inspected examples respectively a human makes wrong.Footnote 8 The results are summarised in Table 4 and included in the estimations of costs. Namely, this estimate of the operating condition \(\hat{\textbf{c}}\) including error is given by

Average expected costs per domain using the cost distributions from humans for the optimal (\(T^o\)), static (\(T^\sigma \)), dynamic (\(T^\delta \)) and fixed (\(T^\phi \)) methods, using the costs derived including the human errors from Table 5

Table 5 shows the operating conditions (medians) for the seven domains including the errors. In our questionnaires, humans were only given one solved example (they were not given any general rule about how to do the transformation), so it is important that we compare these errors \(e_s\) and \(e_i\), especially the first one, with the results for GPT-3 for one-shot, as shown in Fig. 4. There we see that the domain with highest error is times, followed by units, and the domain with lowest error is emails. We see similar proportions in the human results in Table 5, not in magnitude (one-shot human errors are much lower than one-shot GPT-3) but in the domains that are more or less difficult. For humans, teh worst results are given for times, followed by phones. The best domain is freetext and emails.

Finally, Fig. 8 shows the overall expected costs, calculated in the same way as in Fig. 7, but now including the human errors. We see that in this extreme situation, the dynamic method obtains the best performance (not considering the optimal method since it is not realistic) in all the domains except from times and units. This is caused by \(c_i'\) being higher than \(c_s'\) in Table 5. If we analyse the difference between \(c_s'\) and \(c_i'\) and the performance of the dynamic method with respect to the static and fixed, we observe that the worst results of the dynamic with respect the other two is found in the domains where \(c_i'>c_s'\): emails, times and units. The dynamic method was designed considering the expected situation that the cost of inspect examples is lower than the cost of supply examples for the same task. For the domains where this situation is not found, the static method seems to be a better option. Finally, the fixed method is not a good selection since it (almost) always obtains the worst results.

Appendix E

In this section, we include tables and figures with the complete results that are shown summarised in the paper.

Table 6 shows the accuracy per task for increasing values of \(n_s\). These values are aggregated to produce Fig. 4.

Table 7 includes the aggregated expected costs per domain, problem and method optimal (\(T^o\)), (static \(T^\sigma \)), dynamic (\(T^\delta \)) and fixed (\(T^\phi \)). In this case, these results are used to generate Fig. 7.

Figure 9 shows the results for active learning [15] following a straightforward random query strategy. We use a batch size of 1 (equal to the dynamic algorithm \(s_0 = i_{\oplus } = 1\)), and a fixed number of interactions (10, also equal to the dynamic algorithm, \(s_\star =10\)).

As we can see, for this problem, active learning incurs very high costs for all the domains compared to the optimal (\(T^o\)), static (\(T^\sigma \)), dynamic (\(T^\delta \)) and fixed (\(T^\phi \)) methods, shown in Fig. 7. It could be argued that these high costs are due to the query strategy used. However, if we disaggregate the results by the type of cost incurred (\(c_s\), \(c_i\) and \(c_w\)) as we show in Fig. 10), we see that an important part of the total cost Q is dominated by \(c_s\) and \(c_i\). In particular, for the uniform costs, assuming active learning were perfect in finally reaching a model with \(Q_w = 0\), we would get that \(Q_s + Q_i\) around 24, which is always above the results of Fig. 7 (except for units with \(T^\sigma \) and \(T^\phi \)). Similarly, for human costs, only \(T^\sigma \) could be worse for dates and names, even assume the active learning strategy is perfect and gets a perfect model with \(Q_w\).

Note that the most important difference of active learning with our methods is that active learning does not balance the cost of supplying new examples against the cost of inspecting them by the user and, thus, in many operating conditions in the supply-inspect space, active learning cannot optimise for these different costs. Actually, active learning is dominated by \(c_s \cdot n\), where \(c_s\) is almost never spared, as active learning looks for the most informative examples (usually requiring correction) where n is the number of iterations.

Finally, Fig. 11 illustrates the reject option behaviour for all domain problems and different values of \(n_s\). The curves show the proportion of examples of each category (supplied \(\frac{n_s}{n}\) in blue, accurate \(\frac{n_a}{n}\) in green, wrong \(\frac{n_w}{n}\) in red and rejected \(\frac{n_r}{n}\) in grey) as we increase the reject threshold \(t_r\) in the \(x\)-axis. The eleven columns show the evolution for different values of \(n_s\) in (0..10). As expected, for low values of the threshold few examples are rejected and when we increase the threshold, the number of rejected examples is increased. Usually, the wrong instances are the first to be rejected. This figure is also useful to study the performance of the LM depending on \(n_s\). For 0-shot (\(n_s=0\)), the performance is very low for all the domains. This performance increases significantly when we provide more examples to the LM. A summary of these plots is included in Fig. 4.

Average expected costs per domain using the cost distributions from humans (using the costs derived including the human errors from Table 5) and with uniform \({{\mathcal {H}}}\) using an active learning approach

Reject option behaviour for all domain problems. The curves show the proportion of examples of each category (supplied \(\frac{n_s}{n}\) in blue, accurate \(\frac{n_a}{n}\) in green, wrong \(\frac{n_w}{n}\) in red and rejected \(\frac{n_r}{n}\) in grey) as we increase the reject threshold \(t_r\) in the \(x\)-axis. The eleven plots show the evolution for different values of \(n_s\) in (0..10)

Appendix F

Here we include details about the questionnaires, the way they were distributed and how the estimates for \(c_s\) and \(c_i\) were obtained, as well as the aggregated distributions.

The questionnaires employed in this work are based on the Data Wrangling Dataset Repository,Footnote 9 a benchmark integrating many data wrangling tasks in the literature as well as new manually gathered tasks dealing with daily transformations [24]. Overall, the repository contains 123 different tasks divided into 7 different domains (dates, emails, freetext, names, phones, times and units). For every task we have 32 annotated examples where an input string is converted into a corrected or transformed version.

For each domain we selected randomly one task for ‘supply’ examples, and a different task for ‘inspect’ responses. For each task, we also selected randomly five instances to be completed by the humans who were performing the questionnaire. These first two questionnaires measured the actual time for solving an instance (its supply time \(\tau _s\) being a proxy for \(c_s\)) and the actual time for verifying an instance (its inspect time \(\tau _i\) being a proxy for \(c_i\)), averaged over five instances per question. An example of the questionnaire for the domain names and can be seen in Fig. 12a (supply) and in Fig. 12b (inspect).

This was followed by a third subjective question asking the cost unit per time unit of a person (\(\chi /\tau \)), so that times could be converted into costs, and a fourth subjective question that asked about the cost of each error \(\chi _w\) directly. We just derived \(\chi _s = {\tau _s}\frac{\chi }{\tau }\) and \(\chi _i = {\tau _i}\frac{\chi }{\tau }\). Finally, we divided both by \(\chi _w\) to have the normalised costs in \(\textbf{c}\). That is, the estimate of the operating condition \(\hat{\textbf{c}}\) is given by \({\hat{c}}_s = \frac{\chi _s}{\chi _w}\) and \({\hat{c}}_i = \frac{\chi _i}{\chi _w}\). Before these questions, we used an introductory text to give some context. In Fig. 12c, we show the questionnaire employed in the domain names.

In order to improve the estimation of costs and reduce the effect of respondent fatigue [60] (i.e., poor performance and efficiency for the later items of a questionnaire when respondents get bored, tired, or uninterested with the task), we produced a second version of the questionnaires in which we used the same tasks but swapping problems. The task used for the the supply problem in the first questionnaire was used for the inspect problem in the second version. Likewise, the task used for the inspect problem in the first questionnaire was used in the supply problem in the second version. Additionally, we reversed the order of the domains in the second questionnaire. The first version of the questionnaire was filled by 17 respondents, while the second version was filled by 14, 31 in total.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Martínez-Plumed, F., Jaimovitch-López, G., Ferri, C. et al. A general supply-inspect cost framework to regulate the reliability-usability trade-offs for few-shot inference. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01599-6

Received:

Accepted:

Published: