Abstract

Origin-destination (OD) demand prediction is a critical task in intelligent transportation systems. However, accurately modeling the complex spatial-temporal dependencies is challenging, because they arise from various factors, including spatial, temporal, and external influences such as geographical features, weather conditions, and traffic incidents. Moreover, capturing multi-scale dependencies, namely local and global spatial dependencies as well as short- and long-term temporal dependencies, further complicates the task. To address these challenges, a novel framework called the Spatial-Temporal Memory Enhanced Multi-Level Attention Network (ST-MEN) is proposed. The framework consists of several key components. First, an external attention mechanism is incorporated to efficiently integrate external factors into the prediction process. Second, a dynamic spatial feature extraction module is designed to capture the spatial dependencies among nodes. By incorporating two skip connections, this module preserves the original node information while aggregating information from other nodes. Finally, a temporal feature extraction module is proposed that captures both continuous and discrete temporal dependencies using a hierarchical memory network. In addition, multi-scale feature cascade fusion is incorporated to further enhance the performance of the proposed model. To evaluate the effectiveness of the proposed model, extensive experiments are conducted on two real-world datasets. The experimental results demonstrate that ST-MEN achieves excellent prediction accuracy, with a maximum improvement of 19.1%.



Introduction

In recent years, the rapid advancement of sensing devices in intelligent transportation systems (ITS) has facilitated the collection of large volumes of traffic data. These data not only play an important role in constructing traffic prediction models but also aid in understanding the movement patterns of vehicles or crowds [4, 18]. Within the field of traffic prediction, origin-destination (OD) demand prediction has gradually emerged as an independent and significant research area. Unlike traditional traffic prediction, OD prediction focuses on forecasting traffic demand from specific origins to particular destinations using historical observations [36].

OD flow data is typically sourced from various channels, including sensors, smart card records, and taxi booking information [23]. Each of these channels provides valuable insights into different aspects of transportation behavior. Moreover, OD flows can be categorized into static flows and dynamic flows based on their inherent characteristics [8, 21]. Static flows refer to stable and consistent movement patterns that occur during specific time intervals, such as peak commuting hours between residential and commercial areas [35]. These flows follow regular routines and display predictable traffic demand patterns. In contrast, dynamic flows capture temporal fluctuations in population movement, reflecting changes in individual travel behavior influenced by factors such as the day of the week, the time of day, and city events. Dynamic flows are more variable and pose modeling challenges due to the constantly changing nature of human mobility. Hence, effective OD flow modeling requires incorporating both static and dynamic flow features.

Faced with the challenge of OD demand prediction, traditional statistical methods encounter limitations with nonlinear and complex OD data. As a result, researchers are increasingly turning towards deep learning models to address these spatial-temporal complexities. Convolutional neural networks (CNNs) are commonly employed for spatial dependencies, especially suitable for grid-like data structures [24]. Recurrent neural networks (RNNs), including variants like long short-term memory (LSTM), are favored for capturing temporal patterns and modeling long-term dependencies in sequential data [37]. Moreover, attention mechanisms play a crucial role in OD demand prediction [30], enabling models to focus on relevant spatial or temporal features while reducing irrelevant ones. By directing attention towards specific regions or time steps, models can effectively capture the most significant dependencies for OD demand prediction.

More recently, there has been a growing interest in graph convolutional neural networks (GCNs) because of their impressive capabilities in learning graph representations. By leveraging message-passing mechanisms and aggregating information from neighboring nodes, GCNs extract useful dependencies among nodes and encode complex spatial relationships. Several GCN-based prediction approaches, such as MTGNN [33], DMSTGCN [12], and STHAN [19], have emerged for spatial-temporal prediction. These models exploit the inherent graph structure of transportation networks and demonstrate promising prediction performance.

Although existing models have achieved some success in OD demand prediction, three major issues still need to be addressed:

-

Consideration of static and dynamic external factors: OD demand prediction is influenced by static external factors such as road structure and geographical features, as well as dynamic external factors like weather conditions and traffic accidents. However, existing research in this area has not adequately considered these factors and their simultaneous integration.

-

Effective modeling of spatial-temporal dynamics: Current OD demand prediction models often fail to effectively capture the inherent complex spatial-temporal dynamics of traffic systems. One of the main limitations lies in the oversimplified representation of spatial and temporal dependencies. The complex interactions between different regions are overlooked, thus failing to adapt to the dynamic nature of traffic patterns.

-

Complexity of temporal information and patterns: For OD demand prediction, static flow and dynamic flow represent different temporal information and patterns observed in the movement of people or vehicles between specific OD pairs. However, previous methods face challenges in modeling both types of flows, primarily due to the complexity involved in capturing the overall demand patterns.

Based on the above analysis, we propose ST-MEN, a spatial-temporal memory enhanced multi-level attention network, to improve the accuracy of OD demand prediction. For issue 1, ST-MEN incorporates both static and dynamic external factors as attributes of road segments. These attributes are integrated into the model through a low-complexity external attention mechanism, enabling the model to learn and focus on the relevant features associated with these factors. For issue 2, ST-MEN develops a dynamic spatial feature extraction module, which effectively captures the evolving dependencies among nodes while preserving important OD information. This module enables ST-MEN to flexibly adjust to changing demand patterns and more accurately capture the spatial-temporal dynamics. For issue 3, a continuous temporal feature extraction module is devised to capture multiple temporal patterns, including both discrete-time and continuous-time information, by leveraging an enhanced memory network. Furthermore, we design an enhanced version of ST-MEN with multi-scale feature cascade fusion to further improve the model performance. The contributions of this work are summarized as follows:

-

This paper proposes a novel OD demand prediction model ST-MEN to integrate both static and dynamic external factors within a spatial-temporal framework.

-

A dynamic spatial feature extraction module is developed to effectively capture the dynamic changes among nodes in the traffic network.

-

Complex temporal information and patterns are learned through the application of an enhanced memory network.

-

Multi-scale features cascade fusion is incorporated to enhance the performance of the proposed model.

-

Experiments conducted on real-world datasets demonstrate that ST-MEN outperforms the existing models.

The rest of this paper is structured as follows: “Related work” discusses some related works, “Preliminary” outlines the problem definition, “Methodology” details the proposed model ST-MEN, “Experiments” provides extensive experimental evaluations, and “Conclusion” concludes the paper.

Related work

As ride-hailing services gain popularity, OD demand prediction has become a hot topic among researchers. Various models have been developed to address this challenge, including classic ones such as the autoregressive integrated moving average (ARIMA) [25] and support vector regression (SVR) [28]. However, these traditional models are incapable of handling complex node connections, leading to suboptimal performance in real-world scenarios. With advances in deep learning, researchers have increasingly turned to deep learning-based models. Examples include ST-ResNet [38], residual convolutional models [3], hybrid convolutional networks [34], generative adversarial networks [43], and quantum convolutional neural networks [9]. While these models outperform traditional ones, they are typically suited to standard grid data. When dealing with non-Euclidean structures such as traffic topology graphs, they often fail to produce satisfactory results.

In recent years, graph neural networks and graph attention mechanisms have been widely adopted to address non-Euclidean problems. Wang et al. [31] introduce a recurrent strategy and a global attention network to meet the real-time requirements of autonomous driving. To predict renewable power generation, Damaševičius et al. [7] introduce an attention-based RNN method aided by decomposition techniques. Nayakanti et al. [22] develop a motion prediction framework leveraging simple but effective attention mechanisms. Bacanin et al. [2] present a forecasting model for cloud computing loads that combines decomposition techniques, an LSTM network enhanced with attention mechanisms, and a customized particle swarm optimization algorithm. Zhang et al. [39] devise a multi-head dual sparse self-attention model designed specifically for predicting remaining useful life. Predić et al. [26] introduce a decomposition-assisted attention RNN model optimized with a customized particle swarm optimization algorithm for forecasting cloud loads. To forecast the remaining useful life of aircraft engines, Zhao et al. [42] propose a multi-level integrated self-attention method.

In OD demand prediction research, some models simply treat the OD matrix as a two-dimensional image, as in multi-resolution spatial-temporal deep learning approaches [16, 24]. While spectral and convolutional methods improve on traditional deep learning models, they still fail to accurately capture complex spatial-temporal correlations. With advances in graph neural networks, many researchers have explored representing stations or regions as nodes in a graph [15]. GEML [32] combines GNNs and LSTM to handle spatial-temporal features. DNEAT [37] extracts temporal features at different time granularities. HMOD [41] is built on a dynamic graph representation learning model with a multi-level memory structure. STHAN [19] constructs a spatial-temporal heterogeneous graph incorporating multiple spatial and temporal relationships. GDCF [13] devises an encoder-decoder architecture and adopts a two-phase training mechanism to generate generic node representations. These methods leverage graph neural networks to better handle the spatial-temporal dependencies of non-Euclidean structured data, resulting in superior performance. However, existing models often overlook external factors and fail to model complex spatial-temporal dependencies effectively.

Preliminary

In this section, necessary definitions are provided and the problem of OD demand prediction is formalized (Fig. 1). Additionally, a comparison between classical self-attention and external attention is introduced.

Problem definition

Definition 1

(Dynamic graph) A dynamic graph is defined as \(G = ({V,E})\), where \(V=\{v_i\}_{i=1}^N\) represents the set of nodes deployed on road segments, and \(E=\{e_j\}_{j=1}^M\) represents the set of edges. Each edge \(e_j=(o_j,d_j,t_j, \tau _j, \mathbf {f_j})\) denotes a trip, where \(o_j\) and \(d_j\) are the origin and destination of the trip, and \(t_j\) and \(\tau _j\) are the departure time and travel time of the trip, respectively. \(\mathbf {f_j}\) contains feature information such as weather and wind speed. \(G^t\) denotes the graph learned at time step t, including all trips that occurred before t.

Definition 2

(OD demand matrix) An OD demand matrix from time step \(t-\tau \) to t is denoted as \({Y^{t-\tau :t}} \in \mathbb {R}^{N\times N}\), where \(Y_{ij}\) represents the traffic flow from node \(v_i\) to \(v_j\) during time interval from \(t-\tau \) to t.
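To make Definition 2 concrete, the sketch below counts trips into an OD demand matrix. It is a minimal illustration, not the authors' preprocessing code; the trip tuple layout, the helper name `build_od_matrix`, and the half-open window \([t-\tau, t)\) keyed on departure time are assumptions.

```python
import numpy as np

def build_od_matrix(trips, num_nodes, t_start, t_end):
    """Count trips departing in [t_start, t_end) for every (origin, destination) pair.

    Hypothetical helper: each trip is an (origin, destination, departure_time) tuple,
    with origin/destination given as integer node indices in [0, num_nodes).
    """
    Y = np.zeros((num_nodes, num_nodes), dtype=np.int64)
    for o, d, t in trips:
        if t_start <= t < t_end:  # assumption: half-open window on departure time
            Y[o, d] += 1
    return Y

# Toy example with 3 nodes and a 30-minute window [0, 30).
trips = [(0, 1, 5.0), (0, 1, 12.0), (2, 0, 20.0), (1, 2, 31.0)]
Y = build_od_matrix(trips, num_nodes=3, t_start=0.0, t_end=30.0)
print(Y)
```

The last trip departs at minute 31 and therefore falls outside the window, so only three trips are counted.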

\({\textbf {OD demand prediction problem}}\). Given a dynamic graph \(G^t\) at time step t, the goal of OD demand prediction is to learn a function \(g(\cdot )\) that predicts the OD demand matrix from time step t to \(t+\tau \):

\[\hat{Y}^{t:t+\tau } = g(G^t)\]

Fig. 1 The overall architecture of ST-MEN (Spatial-Temporal Memory Enhanced Multi-Level Attention Network), which consists of three key components: the external feature extraction (EFE) block, the dynamic spatial feature extraction (DSFE) block, and the continuous temporal feature extraction (CTFE) block. An enhanced version, ST-MEN-F, additionally incorporates an enhanced temporal feature extraction (ETFE) component. The top part shows the overall architecture and the bottom part shows the details of each block.

Attention mechanism

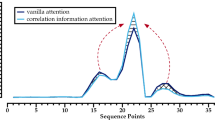

This subsection compares the classic self-attention mechanism with the external attention mechanism [11], as illustrated in Fig. 2, to justify the choice of external attention for modeling external factors in “External feature extraction (EFE)”. The self-attention mechanism takes a feature map \(F \in \mathbb {R}^{N \times d}\) as input, where N is the number of elements and d is the number of feature dimensions. The input is linearly projected into a query matrix \(Q\in \mathbb {R}^{N\times d'}\), a key matrix \(K\in \mathbb {R}^{N\times d'}\), and a value matrix \(V\in \mathbb {R}^{N\times d}\). The self-attention mechanism can then be represented as follows:

\[A = (\alpha )_{i,j} = \mathrm{softmax}(QK^{\top }), \qquad F_{out} = AV\]

Here, \(A \in \mathbb {R}^{N\times N}\) is the attention matrix, \(\alpha _{i,j}\) denotes the similarity between elements i and j, and \(F_{out} \in \mathbb {R}^{N\times d}\) is the output feature map. However, the quadratic computational complexity of self-attention, \(O(dN^2)\), becomes a bottleneck when N is large. In essence, self-attention refines the input features through a linear combination of the values. Yet it is questionable whether an \(N \times N\) self-attention matrix and an N-element self-value matrix are necessary for this linear combination. Moreover, self-attention only considers correlations among elements within a single sample, while ignoring potential correlations among elements in different samples. This limitation restricts its performance potential.
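The NumPy sketch below computes \(A=\mathrm{softmax}(QK^{\top })\) and \(F_{out}=AV\) to make the quadratic cost visible: the attention matrix `A` has \(N \times N\) entries. Random projection matrices stand in for learned weights, and no \(1/\sqrt{d'}\) scaling is applied; both are illustrative choices.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(F, W_q, W_k, W_v):
    """F: (N, d) input feature map; returns F_out (N, d) and A (N, N)."""
    Q, K, V = F @ W_q, F @ W_k, F @ W_v   # (N, d'), (N, d'), (N, d)
    A = softmax(Q @ K.T, axis=-1)        # (N, N) attention matrix: O(d' N^2) work
    return A @ V, A

rng = np.random.default_rng(0)
N, d, d_prime = 6, 4, 3
F = rng.standard_normal((N, d))
F_out, A = self_attention(
    F,
    rng.standard_normal((d, d_prime)),  # W_q (random stand-in for learned weights)
    rng.standard_normal((d, d_prime)),  # W_k
    rng.standard_normal((d, d)),        # W_v
)
print(F_out.shape, A.shape)  # (6, 4) (6, 6)
```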

Methodology

In this section, we introduce the proposed spatial-temporal memory enhanced multi-level attention network ST-MEN in detail. In “External feature extraction (EFE)”, we introduce the external feature extraction (EFE) block, which effectively models external factors for traffic data. In “Dynamic spatial feature extraction (DSFE)”, we design the dynamic spatial feature extraction (DSFE) block, which captures the dynamic spatial dependencies of traffic data. In “Enhanced temporal feature extraction (ETFE)”, we introduce the enhanced temporal feature extraction (ETFE) block, which augments temporal feature extraction at different scales to further improve prediction accuracy. In “Continuous temporal feature extraction (CTFE)”, the continuous temporal feature extraction (CTFE) block is introduced to capture the temporal dependencies of traffic data. The overall framework of ST-MEN and its variant are presented in “Overall architecture”. Finally, the loss function used in the model is presented in “Loss function”.

External feature extraction (EFE)

To comprehensively model the external factors influencing the OD demand, ST-MEN incorporates an external feature extraction module, which integrates both static and dynamic external factors. The selection and extraction of key external factors are based on the consensus reached by previous studies in traffic prediction. However, few methods consider both static and dynamic factors simultaneously in OD demand prediction. Static external factors, like road structure and geographical features [27], significantly influence travel patterns and demand. By capturing and modeling these static factors, the model gains a better understanding of their impact on OD demand. Moreover, dynamic factors, such as weather conditions and traffic incidents [40], are also considered. These factors evolve over time and affect travel demand. By integrating dynamic external factors, ST-MEN can adapt to changing conditions, resulting in more accurate and robust predictions.

Formally, EFE computes the importance of features from the input data F and an external memory unit \(M \in \mathbb {R}^{S \times d}\). The standard form can be formulated as:

\[A = (\alpha )_{i,j} = \mathrm{Norm}(FM^{\top }), \qquad F_{out} = AM\]

where \((\alpha )_{i, j}\) measures the similarity between the i-th element of F and the j-th row of the memory unit M. Note that M is a learnable matrix that acts as a memory for the whole dataset and is independent of the input data. A is the attention map derived from this learned dataset-level prior knowledge. Similar to self-attention, it provides both local and global information to the model, while the memory parameters are shared across all inputs. During training, M is updated based on the similarities in A.

As depicted in Fig. 2b, EFE implements external attention with two separate memory units, \(M_k\in \mathbb {R}^{S\times d}\) and \(M_v\in \mathbb {R}^{S\times d}\), which serve as the key and value memories, respectively. Using separate memories increases the model's capability to extract information from external features, leading to improved experimental results. This process can be formalized as:

\[A = \mathrm{Norm}(FM_k^{\top }), \qquad F_{out} = AM_v\]

The computational complexity of this process is O(dSN), where d and S are hyper-parameters. EFE therefore scales linearly with the number of elements N, so it can handle larger inputs and improve model performance without substantially raising computational costs.
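A minimal NumPy sketch of external attention with separate key and value memories is given below. For brevity it uses a plain row-wise Softmax as the normalization (the double normalization actually adopted by EFE is discussed next), and random matrices stand in for the learned memories \(M_k\) and \(M_v\). The point is the shape bookkeeping: the attention map is \(N \times S\) rather than \(N \times N\), so the cost grows linearly with N.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def external_attention(F, M_k, M_v):
    """F: (N, d) input; M_k, M_v: (S, d) memories shared by all inputs."""
    A = softmax(F @ M_k.T, axis=-1)  # (N, S) attention map: O(d S N) work
    return A @ M_v, A                # F_out: (N, d), another O(d S N) product

rng = np.random.default_rng(0)
S, d = 8, 16
M_k = rng.standard_normal((S, d))  # random stand-ins for the learned memories
M_v = rng.standard_normal((S, d))
for N in (100, 1000):
    F_out, A = external_attention(rng.standard_normal((N, d)), M_k, M_v)
    print(N, A.shape, F_out.shape)
```

Growing N by a factor of ten grows `A` by a factor of ten, whereas an \(N \times N\) self-attention matrix would grow by a factor of one hundred.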

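For the normalization operation, Softmax is commonly used in self-attention to ensure that the attention weights of each element sum to 1, i.e., \(\sum _j \alpha _{i,j}=1\). However, the attention map is computed by matrix multiplication and is therefore sensitive to the scale of the input features. To overcome this issue, the model adopts double normalization [10], which normalizes columns and rows separately, as represented in the following equations:

\[(\tilde{\alpha })_{i,j} = FM_k^{\top }, \qquad \hat{\alpha }_{i,j} = \frac{\exp (\tilde{\alpha }_{i,j})}{\sum _{k}\exp (\tilde{\alpha }_{k,j})}, \qquad \alpha _{i,j} = \frac{\hat{\alpha }_{i,j}}{\sum _{k}\hat{\alpha }_{i,k}}\]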

where \((\tilde{\alpha })_{i, j}\) is the similarity between the i-th element of F and the j-th row of the memory unit \(M_k\), \(\hat{\alpha }_{i, j}\) is the similarity after column normalization, and \(\alpha _{i, j}\) is the final attention weight after both column and row normalization.
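The double normalization can be sketched in NumPy as a Softmax over the first axis (over elements i, for each memory slot j), followed by an L1 normalization of each row. This is an illustrative sketch using the memory shapes defined for \(M_k\) and \(M_v\); the function name `double_normalize` and the random inputs are hypothetical.

```python
import numpy as np

def double_normalize(logits):
    """logits: (N, S) raw similarities F @ M_k.T -> (N, S) attention weights."""
    # Column step: softmax over i (axis 0) for every memory slot j.
    x = logits - logits.max(axis=0, keepdims=True)
    e = np.exp(x)
    a_hat = e / e.sum(axis=0, keepdims=True)
    # Row step: L1-normalize over j (axis 1) for every element i.
    return a_hat / a_hat.sum(axis=1, keepdims=True)

rng = np.random.default_rng(1)
F = rng.standard_normal((10, 16))
M_k = rng.standard_normal((8, 16))
M_v = rng.standard_normal((8, 16))
A = double_normalize(F @ M_k.T)
F_out = A @ M_v
print(A.shape, F_out.shape)             # (10, 8) (10, 16)
print(np.allclose(A.sum(axis=1), 1.0))  # True
```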

Dynamic spatial feature extraction (DSFE)

To efficiently capture time-varying spatial features, a DSFE module is devised, consisting of a linear layer, three parallel ResMLP layers, and an average pooling operation, as illustrated in Fig. 1b. First, the feature map \(F_e \in \mathbb {R}^{N \times N}\) produced by EFE is transformed by the linear layer into a set of \(N^2\) d-dimensional embeddings. These embeddings then pass through three parallel ResMLP layers, which generate a set of \(N^2\) d-dimensional output embeddings. Subsequently, these output embeddings are fed into an average pooling layer to form an \(N^2 d\)-dimensional vector representing the feature map. Finally, this vector passes through a linear layer to yield the output feature map. Figure 3 depicts the details of each ResMLP layer, which uses an Affine operation instead of layer normalization. The Affine operation does not rely on batch statistics and better preserves information from neighboring nodes than standard normalization. It can be denoted as:

\[\mathrm{Aff}_{\boldsymbol{\alpha },\boldsymbol{\beta }}(\mathbf {x}) = \mathrm{Diag}(\boldsymbol{\alpha })\,\mathbf {x} + \boldsymbol{\beta }\]

where \(\boldsymbol{\alpha }\) and \(\boldsymbol{\beta }\) are learnable weight vectors that rescale and shift the input elements, respectively. For simplicity, the Affine operation, applied independently to each column of the matrix X, is denoted as \(\varphi (X)\).

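Overall, the ResMLP layer stacks a set of \(N^2\) d-dimensional input features in a \(d \times N^2\) matrix X and transforms them into a set of \(N^2\) d-dimensional output features stored in the matrix \(F_d\) through the following transformation:

\[Z = X + \varphi \big((W_1 \star \varphi (X)^{\top })^{\top }\big), \qquad F_d = Z + \varphi \big(W_3 \star \tanh (W_2 \star \varphi (Z))\big)\]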

where the symbol \(\star \) denotes the linear operation and tanh is the activation function. \(W_1\), \(W_2\) and \(W_3\) are the weight matrices of the ResMLP layer, with dimensions of \(N^2 \times N^2\), \(4d \times d\), and \(d \times 4d\), respectively. The intermediate matrix Z, input matrix X, and output matrix \(F_d\) all have identical dimensions. Skip connections preserve the feature information from the previous layer and prevent excessive information loss. Since \(W_1\) operates along the \(N^2\) dimension, the first sub-layer exchanges information among nodes, while the second sub-layer, whose weights \(W_2\) and \(W_3\) operate along the d feature dimension, exchanges information across feature channels.
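The ResMLP layer can be sketched in NumPy as below. It follows the dimensions stated in the text (\(X \in \mathbb {R}^{d\times N^2}\), \(W_1 \in \mathbb {R}^{N^2\times N^2}\), \(W_2 \in \mathbb {R}^{4d\times d}\), \(W_3 \in \mathbb {R}^{d\times 4d}\), tanh activation); the placement of the four Affine operations (before each linear map and on each residual branch, as in the original ResMLP design) and the random initialization are assumptions for illustration.

```python
import numpy as np

def affine(X, alpha, beta):
    """phi(X): rescale and shift each column of X (d, n) with alpha, beta of shape (d,)."""
    return alpha[:, None] * X + beta[:, None]

def resmlp_layer(X, W1, W2, W3, params):
    """X: (d, n) with n = N**2; W1: (n, n), W2: (4d, d), W3: (d, 4d).

    params holds four (alpha, beta) pairs, one per Affine operation.
    """
    a1, b1, a2, b2, a3, b3, a4, b4 = params
    # Sub-layer 1: linear mixing along the N^2 axis (across nodes) + skip connection.
    Z = X + affine((W1 @ affine(X, a1, b1).T).T, a2, b2)
    # Sub-layer 2: per-column MLP along the feature axis (d -> 4d -> d) + skip connection.
    return Z + affine(W3 @ np.tanh(W2 @ affine(Z, a3, b3)), a4, b4)

rng = np.random.default_rng(0)
N, d = 3, 4
n = N * N
X = rng.standard_normal((d, n))
W1 = 0.1 * rng.standard_normal((n, n))
W2 = 0.1 * rng.standard_normal((4 * d, d))
W3 = 0.1 * rng.standard_normal((d, 4 * d))
params = (np.ones(d), np.zeros(d)) * 4   # identity Affine parameters at initialization
F_d = resmlp_layer(X, W1, W2, W3, params)
print(F_d.shape)  # (4, 9), same as X
```

With all weight matrices set to zero the layer reduces to the identity, which is how the two skip connections preserve the input information.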

Enhanced temporal feature extraction (ETFE)

To further improve the ability to capture multi-scale continuous and discrete temporal dependencies, we introduce the ETFE module to generate the aggregated feature map. The core of this module is the Multi-scale Features Cascade Fusion (MFCF) structure, whose overall architecture is illustrated in Fig. 4. The bottom of Fig. 4 shows one stage of the multi-scale structure, which is composed of m such stages. Input feature maps of size \(N\times N\) are first processed by a stem module that extracts smaller feature maps of size \(\frac{N}{8}\times \frac{N}{8}\). The stem module consists of two consecutive \(3\times 3\) convolutional layers, each with a stride of 2, followed by \(N_0\) blocks and a \(2\times 2\) convolutional layer with a stride of 2. Each \(3\times 3\) convolutional layer is followed by a LayerNorm [1] layer and a GELU [14] unit. This stem is then followed by multiple stages with identical structures that extract multi-scale features.
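The spatial-size bookkeeping of the stem can be checked with the standard convolution output-size formula. The paddings (1 for the \(3\times 3\) layers, 0 for the \(2\times 2\) layer) and the assumption that the \(N_0\) blocks preserve spatial size are not stated in the text; they are illustrative choices under which the output is \(\lceil N/8 \rceil\) for the region counts of the two datasets (63 and 79).

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

def stem_output_size(n):
    """Spatial size after the stem for an n x n input (paddings are assumptions)."""
    n = conv_out(n, kernel=3, stride=2, padding=1)  # first 3x3 conv, stride 2
    n = conv_out(n, kernel=3, stride=2, padding=1)  # second 3x3 conv, stride 2
    # The N_0 blocks are assumed to keep the spatial size unchanged.
    return conv_out(n, kernel=2, stride=2, padding=0)  # 2x2 conv, stride 2

for n in (63, 79):
    print(n, "->", stem_output_size(n))
```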

After the stem module, cascade feature fusion stages with \(N_0, N_1, N_2, N_3\) blocks, respectively, are applied. Each block has a fundamental structure consisting of a token mixer, a dilated convolution, and an MLP layer, as shown in the top part of Fig. 4. The token mixer uses a lightweight \(7\times 7\) depth-wise convolution from ConvNeXt [20], which facilitates temporal feature interactions. Additionally, each block incorporates a \(7\times 7\) dilated depth-wise convolution along with three skip connections. This configuration captures long-range dependencies among spatial features while keeping the increase in parameters and computational overhead small. The multi-scale features obtained from the final stage are then passed to the temporal feature generation module for further processing.
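A quick calculation illustrates why the dilated depth-wise convolution adds little cost. With kernel size k = 7 and the dilation rate r = 3 reported in the experimental settings, each kernel spans \(k + (k-1)(r-1) = 19\) positions per axis while keeping only \(k^2\) weights per channel. The comparison with a dense \(19\times 19\) depth-wise kernel and the use of the channel dimension S = 128 from the settings are illustrative.

```python
def effective_kernel(k, r):
    """Span along one axis of a k x k kernel with dilation rate r."""
    return k + (k - 1) * (r - 1)

def depthwise_weights(k, channels):
    """Number of weights in a k x k depth-wise convolution (bias ignored)."""
    return k * k * channels

S = 128                       # channel dimension from the experimental settings
span = effective_kernel(7, 3)
print(span)                           # 19
print(depthwise_weights(7, S))        # 6272: dilated 7x7 kernel
print(depthwise_weights(span, S))     # 46208: dense kernel with the same span
```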

The ETFE module, with its MFCF structure, extracts multi-scale features that capture both continuous and discrete temporal dependencies. It incorporates three mechanisms: a multi-scale structure, cascade feature fusion, and a token mixer combined with dilated convolution. First, the multi-scale structure extracts features at different scales: by processing the input feature maps through multiple stages, each with its own set of blocks, the module captures information at various granularities. Second, the cascade feature fusion stage, consisting of multiple blocks, iteratively refines and combines features from different scales. This fusion mechanism propagates information across scales, allowing the model to integrate temporal dependencies at different levels of abstraction. Third, the token mixer uses a lightweight depth-wise convolution to facilitate temporal feature interactions, while the dilated convolution, together with skip connections, captures long-range dependencies among spatial features with minimal parameter increase and computational overhead.

Continuous temporal feature extraction (CTFE)

The CTFE module plays a pivotal role in ST-MEN by capturing both continuous and discrete temporal dependencies. As illustrated in Fig. 1c, it consists of three key components: temporal feature generation, temporal feature fusion, and temporal feature update units. It takes as input the aggregated feature map, which contains historical trips represented by edges with continuous timestamps. These timestamps carry crucial information about OD demand and form the foundation for node representation in the model. To effectively capture temporal dependencies, the model leverages a hierarchical memory structure inspired by [41]. In particular, the memory state maintained at each node is represented as:

\[H = \{H^0, H^1, H^2, \ldots \}\]

where \({H}^0\) is the continuous-time memory, while \({H}^{{d}}(d \ge 1)\) is the macro-discrete-time memory spanning \(\Delta {T}_{{d}}\) time units. CTFE treats time as a continuous feature and updates \(H^0\) whenever an edge appears. In addition, it maintains multiple discrete-time memories that describe the state of each node at coarser granularities. The details of each CTFE component are elaborated below.
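The update schedule of the hierarchical memory can be illustrated with a toy Python class. Following the OD window \(t-2^{d-1}\Delta T\) used in the feature generation step, it assumes \(\Delta T_d = 2^{d-1}\) base time units for level d; the class name `NodeMemory`, the number of levels, and tracking timestamps instead of learned state vectors are hypothetical simplifications.

```python
from dataclasses import dataclass, field

@dataclass
class NodeMemory:
    """Toy stand-in for one node's memory: H^0 plus levels H^1..H^num_levels.

    Only the time of each level's last refresh is stored; real memories are vectors.
    """
    num_levels: int
    last_update: dict = field(default_factory=dict)

    def on_edge(self, t):
        # H^0 treats time as continuous: refresh it whenever a trip (edge) arrives.
        self.last_update[0] = t

    def on_unit_end(self, unit):
        # Assumption: level d spans 2**(d - 1) base units and is refreshed when its span closes.
        refreshed = [d for d in range(1, self.num_levels + 1)
                     if (unit + 1) % (2 ** (d - 1)) == 0]
        for d in refreshed:
            self.last_update[d] = unit
        return refreshed

mem = NodeMemory(num_levels=3)
mem.on_edge(t=0.7)
for unit in range(4):
    print(unit, mem.on_unit_end(unit))
```

Level 1 is refreshed at every unit, level 2 every second unit, and level 3 every fourth unit, while \(H^0\) reacts to individual trips.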

Feature generation

The goal of CTFE is to ensure that node states are updated efficiently and timely. When memory updates occur, a new edge is created for both continuous time memory and macro-discrete time memory. At the end of each time unit, update information is triggered to compute these edges and update the node’s memory. For continuous time memory, this information includes the node state since the last update, a specially encoded time interval, and aggregated information from neighbor nodes. Meanwhile, for macro-discrete time memory, the information includes the most recent node state update, the OD matrix within specific time intervals, and aggregated information from neighboring nodes. For a specific node i, the feature generation is formulated as:

where \(\textbf{M}_d^0 \in \mathbb {R}^{d_M}\) denotes the message containing the above information and \(\odot \) indicates the element-wise product. \(\textbf{t}_i^{-}\) is the time at which node i was last updated, while \(\mathbf {Y}^{i}_{t-2^{d-1} \Delta T,\, t}\) denotes the i-th row of the OD matrix over the interval from \(t-2^{d-1}\Delta T\) to t. \(\textbf{W}^*\) and \(\textbf{b}^*\) are learnable parameters, and \(\sigma \) is the activation function.

Feature fusion

Previous message functions have typically aggregated information from specific tracks, such as continuous information at a particular granularity or discrete information. However, continuous messages may overlook certain global spatial-temporal patterns, while fine-grained messages may miss out on the spatial-temporal information present in discrete messages. To tackle this issue, a temporal feature fusion mechanism is designed to combine specific track information with other data, generating diverse forms of spatial-temporal information. The latest information stored in the corresponding storage memory can be represented as:

where \({M}^0\) represents the output of the information function. When \(\textbf{M}^l\) is calculated, information from other time granularities is initially aggregated and then concatenated with \({M}^{l-1}\). The main objective of this operation is to integrate information from different time granularities while maintaining the corresponding time granularity details as much as possible. Next, the concatenation result is passed through a fully connected layer.
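One possible reading of this fusion step is sketched below: for each memory level d, the messages of all other levels from the previous layer are averaged, concatenated with the level's own previous message, and passed through a fully connected layer. The mean aggregation, the tanh activation, sharing one weight matrix across layers, and the function name `fusion_layer` are assumptions; the text does not specify them.

```python
import numpy as np

def fusion_layer(M_prev, W, b):
    """M_prev: (num_levels, d_M), row d holds M_d^{l-1}; returns M^l of the same shape."""
    out = np.empty_like(M_prev)
    for d in range(M_prev.shape[0]):
        others = np.delete(M_prev, d, axis=0).mean(axis=0)  # aggregate other granularities
        h = np.concatenate([others, M_prev[d]])             # concatenate with M_d^{l-1}
        out[d] = np.tanh(W @ h + b)                          # fully connected layer
    return out

rng = np.random.default_rng(0)
num_levels, d_M, L = 3, 8, 2                 # toy sizes; L = 2 as in the experiments
M = rng.standard_normal((num_levels, d_M))   # M^0: outputs of the message function
W = 0.1 * rng.standard_normal((d_M, 2 * d_M))
b = np.zeros(d_M)
for _ in range(L):
    M = fusion_layer(M, W, b)
print(M.shape)  # (3, 8)
```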

Feature update

The temporal feature update unit aims to update the current information while preserving historical information as much as possible. Inspired by [41], a GRU [6] is employed to update the memory state of nodes. This process is formulated as:

where \({M}_d^L\) denotes the message of the d-th memory at the L-th layer. As the message level increases, multi-scale temporal features are learned.
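For reference, a single step of a standard GRU cell is sketched below in NumPy, with the fused message as input and the previous node memory as hidden state. The parameter names, the dictionary layout, and the random initialization are illustrative assumptions; the gate equations are the usual GRU formulation rather than code from this paper.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_update(m, h, P):
    """One GRU step: message m (d_M,) and previous memory h (d_H,) -> new memory (d_H,)."""
    z = sigmoid(P["W_z"] @ m + P["U_z"] @ h + P["b_z"])        # update gate
    r = sigmoid(P["W_r"] @ m + P["U_r"] @ h + P["b_r"])        # reset gate
    n = np.tanh(P["W_n"] @ m + P["U_n"] @ (r * h) + P["b_n"])  # candidate memory
    return z * h + (1.0 - z) * n                               # blend old and candidate memory

def init_params(d_M, d_H, rng, scale=0.1):
    """Hypothetical random initialization of the GRU weights."""
    P = {}
    for g in ("z", "r", "n"):
        P[f"W_{g}"] = scale * rng.standard_normal((d_H, d_M))
        P[f"U_{g}"] = scale * rng.standard_normal((d_H, d_H))
        P[f"b_{g}"] = np.zeros(d_H)
    return P

rng = np.random.default_rng(0)
d_M = d_H = 128                     # message and memory dimensions from the settings
P = init_params(d_M, d_H, rng)
h = np.zeros(d_H)                   # initial memory of a node
for M_dL in rng.standard_normal((3, d_M)):   # three successive messages M_d^L
    h = gru_update(M_dL, h, P)
print(h.shape)  # (128,)
```

The update gate z controls how much of the old memory is kept, which is how the unit preserves historical information while absorbing new messages.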

Overall architecture

Applying a pyramid framework, we design the spatial-temporal memory enhanced multi-level attention network ST-MEN. In particular, given the topology graph of the road network \(G^t\) at time t, ST-MEN first employs the external attention mechanism to model external factors, such as weather and events, and obtains the feature representation \(F_e\). Then, in the DSFE module, a group of parallel residual MLPs followed by average pooling extracts deep dynamic spatial features \(F_d\), enhancing the feature learning of neighboring nodes within one or two hops. In the CTFE module, both continuous and discrete temporal features \(F_c\) are captured and updated. Finally, the OD demand matrix is predicted from these features.

Based on the above framework, we further design an enhanced version of ST-MEN, ST-MEN-F, as shown in Fig. 1, which introduces an enhanced temporal feature extraction (ETFE) block to further augment the representation power of the CTFE block. The comparison results of the two frameworks are given in Table 2.

Loss function

The OD demand prediction task aims to minimize the difference between the predicted values and the ground truth. For this purpose, the \(\mathcal {L}\) loss function is employed as the objective function:

where \(\hat{y}\) denotes the predicted value and y the corresponding ground truth. Because zeros occur frequently in the OD matrix, predicting them as negative numbers is acceptable in real-world scenarios. However, it is crucial to distinguish heavily negative values from slightly negative ones, as they convey different implications; training directly on these values could result in inferior performance.

Experiments

In this section, we first present the relevant datasets and experimental settings. Next, extensive experiments are conducted to verify the superiority of the proposed model.

Datasets

Experiments are conducted on two popular real-world datasets: New York Taxi and Cheng Du Taxi. The statistical data of the two datasets are summarized in Table 1.

-

New York Taxi: This dataset contains taxi trip data in New York City from January to June 2019, covering 63 regions and 38,498,427 records. We use the first 139 days as the training set, the next 21 days as the validation set, and the remaining 21 days as the test set.

-

Cheng Du Taxi: This dataset contains the taxi trip records in Chengdu, China, spanning from August 3rd, 2014, to August 30th, 2014, covering 79 regions and 3,636,845 records. We allocate 19 days for the training set, 4 days for the validation set, and another 4 days for the testing set.

Experimental settings

Experimental configurations

The proposed model uses historical trip data to predict the OD demand matrix for the next time interval \(\tau \in \{30, 60, 90\}\) minutes. During training, we employ the Adam optimizer with a learning rate of \(10^{-5}\) for both datasets. The model is trained for up to 500 epochs with early stopping (patience of 20). The layer depth L of the model is fixed at 2. The memory dimension \(d_H\) and message dimension \(d_M\) are both set to 128. For the MFCF structure, the dilation rate r of the dilated convolution is uniformly set to 3 across all blocks. All stages share the same channel dimension (\(S = 128\)) but use different numbers of blocks (\(N_0=3, N_1=2, N_2=7, N_3=2\)). The total number of MFCF stages m is 4. The model is implemented in PyTorch, and experiments are conducted on an NVIDIA GeForce RTX 3070 GPU.

Evaluation metrics

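The performance of the different methods is evaluated with two metrics, mean absolute error (MAE) and root mean squared error (RMSE), defined as:

\[ \text{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|, \qquad \text{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2}, \]

where N represents the batch size, and \(y_i\) and \(\hat{y}_i\) are the ground-truth and predicted values in a mini-batch, respectively.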
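A direct translation of the MAE and RMSE definitions is sketched below. For simplicity it assumes the predicted and ground-truth OD values have already been flattened into two equal-length lists, so N is the number of values being averaged; how the averaging runs over OD pairs within a batch is an assumption, and the toy numbers are illustrative, not results from this paper.

```python
import math
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    # Both metrics are only defined for non-empty inputs of equal length.
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: (1/N) * sum |y_i - y_hat_i|."""
    _check(y_true, y_pred)
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error: sqrt((1/N) * sum (y_i - y_hat_i)^2)."""
    _check(y_true, y_pred)
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true))


if __name__ == "__main__":
    # Hypothetical flattened OD demand values.
    y_true = [0.0, 2.0, 1.0, 3.0]
    y_pred = [0.5, 1.0, 1.0, 3.5]
    print(mae(y_true, y_pred))   # 0.5
    print(rmse(y_true, y_pred))  # sqrt(0.375), about 0.612
```

Because RMSE squares the errors before averaging, it penalizes a few large errors more than MAE does, which is why the two metrics are reported together.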

Baselines

This paper compares the ST-MEN model with traditional statistical and machine learning models as well as recently proposed deep neural network models.

-

HA: This conventional model uses the historical average of the OD demand matrix as the predicted value.

-

Linear Regression (LR): This model is a classic regression model that aims to identify the linear correlation between the input and output. The input of the model consists of the historical OD demand of a single OD pair in the latest four consecutive time slots.

-

XGBoost [5]: This model employs gradient-boosted trees to learn from past patterns, and its input is the same as that of linear regression (LR).

-

GEML [32]: This is an OD demand prediction model that utilizes snapshots and pre-defined neighborhoods. The geographical neighborhood in GEML is defined based on distance.

-

DNEAT [37]: This is a snapshot-based OD demand prediction model that incorporates node-edge attention. Like GEML, DNEAT defines neighborhoods based on geographical distance.

-

TGN [29]: This model is a continuous-time dynamic graph representation learning framework.

-

HMOD [41]: This model builds on a dynamic graph representation learning model with a multi-level memory structure. It is the inspiration for this research.

-

STHAN [19]: This model constructs a spatio-temporal heterogeneous graph that incorporates multiple spatial and temporal relationships, and uses meta-paths to characterize complex spatial relationships. To capture this heterogeneity, the model employs hierarchical attention comprising node-level attention and meta-path-level attention.

-

COOL [17]: To capture a range of long-term transitional patterns, this model employs a unified self-attention decoder that combines sequential representations through multi-rank and multi-scale attention branches.
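The two simplest baselines can be sketched in a few lines. The example below is a hypothetical illustration: `historical_average` takes an element-wise mean over all past OD matrices it is given (the averaging window used in the experiments is not stated here), and `lag_windows` builds the LR/XGBoost input for a single OD pair from its latest four consecutive time slots. Fitting the regressor itself is left to any least-squares or boosting library.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def historical_average(history: Sequence[Matrix]) -> Matrix:
    """HA baseline (sketch): element-wise mean of past OD demand matrices."""
    if not history:
        raise ValueError("history must contain at least one OD matrix")
    n_rows, n_cols = len(history[0]), len(history[0][0])
    return [
        [sum(m[i][j] for m in history) / len(history) for j in range(n_cols)]
        for i in range(n_rows)
    ]


def lag_windows(series: Sequence[float], lags: int = 4) -> Tuple[List[List[float]], List[float]]:
    """LR/XGBoost input (sketch): the last `lags` slots of one OD pair predict the next slot."""
    features: List[List[float]] = []
    targets: List[float] = []
    for t in range(lags, len(series)):
        features.append(list(series[t - lags:t]))  # demand in slots t-4 .. t-1
        targets.append(series[t])                   # demand in slot t
    return features, targets


if __name__ == "__main__":
    past = [[[0.0, 2.0], [4.0, 6.0]], [[2.0, 2.0], [0.0, 2.0]]]  # two toy 2x2 OD matrices
    print(historical_average(past))  # [[1.0, 2.0], [2.0, 4.0]]
    print(lag_windows([1.0, 2.0, 3.0, 4.0, 5.0, 6.0]))
```

Because each OD pair is treated independently, these baselines cannot exploit spatial correlations between regions, which is one motivation for the graph-based models in the list.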

Main results and analysis

Table 2 presents the experimental results of the different models on the two real-world datasets. The evaluation metrics are MAE and RMSE, with time intervals \(\tau \in \{30, 60, 90\}\) min. The best results are highlighted in bold, and the second-best results are underlined. Compared with conventional statistical and machine learning models (e.g., HA, LR, and XGBoost), deep learning-based approaches perform better owing to their capacity to capture non-linear relationships and adapt to large-scale data.

The proposed models, ST-MEN and its enhanced version ST-MEN-F, outperform the other deep learning-based approaches across the prediction tasks. Specifically, for 30-minute short-term prediction on the New York Taxi dataset, ST-MEN achieves MAE and RMSE scores of 0.6540 and 1.4244, while ST-MEN-F achieves 0.5923 and 1.3172, respectively. Compared with the best baseline model, HMOD (MAE 0.7317, RMSE 1.5960), ST-MEN-F reduces the MAE by about 19.1% and the RMSE by about 17.5%. Similarly, for 60-minute mid-term prediction, ST-MEN and ST-MEN-F outperform HMOD, with a notable improvement of nearly 13% in both metrics. The trend continues for 90-minute long-term prediction, where ST-MEN and ST-MEN-F improve on HMOD by nearly 10% in MAE and 3% in RMSE.
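The improvement percentages in this comparison are relative error reductions with respect to the baseline, i.e. (baseline − proposed) / baseline. The small helper below (a hypothetical name, not from the paper) reproduces the 30-minute New York Taxi comparison between HMOD and ST-MEN-F from the reported values; the MAE figure agrees with the 19.1% maximum improvement stated in the abstract.

```python
def relative_improvement(baseline: float, proposed: float) -> float:
    """Percentage reduction of an error metric (lower is better) relative to a baseline."""
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return (baseline - proposed) / baseline * 100.0


if __name__ == "__main__":
    # New York Taxi, tau = 30 min: HMOD (baseline) vs. ST-MEN-F, values as reported above.
    print(round(relative_improvement(0.7317, 0.5923), 1))  # MAE: 19.1
    print(round(relative_improvement(1.5960, 1.3172), 1))  # RMSE: 17.5
```

Since the denominator is the baseline error, the same absolute gain yields a larger percentage when the baseline error is small, so MAE and RMSE improvements are best read separately.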

The superior performance of ST-MEN and ST-MEN-F is not limited to the New York Taxi dataset; on the Cheng Du Taxi dataset they also outperform the baseline models. These results highlight the effectiveness of the proposed models in predicting taxi OD demand across prediction horizons, and their consistent advantage over the baselines demonstrates their potential for accurate and reliable short-term, mid-term, and long-term OD demand forecasting. We attribute these improvements to the effective modeling of external influencing factors and the successful capture of spatial-temporal dynamics. Compared with other deep learning models, ST-MEN and ST-MEN-F are therefore more sensitive to external factors and spatial-temporal dependencies, which improves the accuracy of OD demand prediction.

Ablation study

To verify the effect of several key components of the proposed model, this paper conducts ablation studies on both datasets. The variants of ST-MEN are as follows:

-

w/o EFE: It removes the EFE block from the basic ST-MEN framework.

-

w/o DSFE: It removes the DSFE block from the basic ST-MEN framework.

-

ST-MEN-F: It adds the ETFE block to the basic ST-MEN framework.

Table 3 and Figs. 5 and 6 report the MAE and RMSE results of ST-MEN, ST-MEN-F, and the variants on the two real-world datasets. Comparing ST-MEN with the w/o EFE variant shows that the EFE block improves performance by integrating both static and dynamic external factors. For instance, on the Cheng Du Taxi dataset with a time interval of 30 min, adding the EFE block reduces the MAE and RMSE from 1.438 and 2.846 to 1.337 and 2.495, respectively. This suggests that integrating external factors contributes to the model's robustness and stability under various external influences.

Furthermore, the DSFE block emerges as the most influential component, as the comparison with the w/o DSFE variant shows. For instance, on the New York Taxi dataset with a time interval of 60 min, adding the DSFE block reduces the MAE and RMSE from 0.778 and 1.805 to 0.677 and 1.653, respectively. This reduction demonstrates the efficacy of the proposed DSFE block in extracting and learning dynamic spatial features among nodes, thereby improving the overall prediction accuracy.

When both key modules are included, the model consistently achieves better results than either ablated variant across all time intervals. This can be attributed to the effective integration of external factors combined with the ability to capture spatial-temporal dynamics: by considering both static and dynamic external factors and leveraging spatial-temporal relationships, the model produces more accurate and reliable predictions. Moreover, adding the ETFE block further improves the performance of the framework. We attribute this to the multi-scale features, cascade feature fusion, token mixer, and dilated convolution, which collectively enhance the model's ability to exploit temporal dependencies across different scales and time intervals. This helps the model capture complex temporal patterns and yields more accurate predictions.

Parameter sensitivity

Figures 7 and 8 present the impact of the layer depth L on the two datasets. On the New York Taxi dataset with a time interval of \(\tau =30\) minutes, ST-MEN achieves an MAE of 0.722 and an RMSE of 1.648 for \(L=1\). Increasing L to 2 improves performance substantially, giving an MAE of 0.654 and an RMSE of 1.424. This improvement can be attributed to the deeper architecture, which enhances the model's ability to learn representations and capture complex correlations and patterns in the data.

Note that increasing the number of layers beyond \(L=2\) does not further improve performance and can even degrade it. This is likely because the deeper model tends to overfit the noise in the training data, which hinders its generalization to new data.

The experimental results on the Cheng Du Taxi dataset show a similar trend. Therefore, setting the layer depth L to 2 provides a good balance between model complexity and performance on both the New York Taxi and Cheng Du Taxi datasets. This choice allows ST-MEN to capture the underlying patterns and correlations in the data without being overly influenced by noise, resulting in improved prediction accuracy.

Conclusion

This paper introduces a novel approach for OD demand prediction called the spatial-temporal memory enhanced multi-level attention network (ST-MEN). The objective of ST-MEN is to comprehensively consider the influence of external factors on traffic conditions and thereby enhance prediction performance. In particular, ST-MEN integrates external feature extraction with dynamic spatial feature extraction and with continuous and discrete temporal feature extraction. This combination allows the model to capture a wide range of information related to external factors, spatial dependencies between nodes, and temporal dynamics. Furthermore, multi-scale features cascade fusion is incorporated to enhance the performance of the proposed model. To validate the effectiveness of ST-MEN, extensive experiments are conducted on two real-world datasets. The results demonstrate that the proposed model outperforms the compared baseline methods in OD demand prediction. The comprehensive consideration of external factors and the integration of various feature extraction techniques contribute to the success of ST-MEN in accurately predicting OD demand.

The proposed model also has some limitations, which will be the focus of future work to further enhance its capabilities and applicability. First, applying the model to other spatial-temporal forecasting tasks, such as arrival time estimation, requires further investigation. Building on the strengths of ST-MEN, such as its attribute-augmented spatial-temporal framework and dynamic feature extraction, we will investigate whether the model can address other forecasting problems. Second, broader external factors such as points of interest (POI) data can provide valuable insights into the dynamics of transportation systems. Incorporating these factors into the model may help capture the complex correlations between external factors and OD demand, leading to more accurate predictions.

Data availability

Data will be made available on request.

References

Ba JL, Kiros JR, Hinton GE (2016) Layer normalization. arXiv preprint arXiv:1607.06450

Bacanin N et al (2023) Cloud computing load prediction by decomposition reinforced attention long short-term memory network optimized by modified particle swarm optimization algorithm. Ann Oper Res 1–34. https://doi.org/10.1007/s10479-023-05745-0

Chen G, Dai Y, Zhang J (2023) RRCNet: refinement residual convolutional network for breast ultrasound images segmentation. Eng Appl Artif Intell 117:105601

Chen J, Wang W, Yu K, Hu X, Cai M, Guizani M (2023) Node connection strength matrix-based graph convolution network for traffic flow prediction. IEEE Trans Veh Technol 72(9):12063–12074

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. ACM, San Francisco, pp 785–794

Cho K, Van Merriënboer B, Gulcehre C, Bahdanau D, Bougares F, Schwenk H, Bengio Y (2014) Learning phrase representations using RNN encoder–decoder for statistical machine translation. arXiv preprint arXiv:1406.1078

Damaševicius R et al (2024) Decomposition aided attention-based recurrent neural networks for multistep ahead time-series forecasting of renewable power generation. PeerJ Comput Sci 10:1795

Galliani G, Secchi P, Ieva F (2023) Estimation of dynamic origin-destination matrices in a railway transportation network integrating ticket sales and passenger count data. arXiv preprint arXiv:2312.07732

Gong LH, Pei JJ, Zhang TF, Zhou NR (2024) Quantum convolutional neural network based on variational quantum circuits. Opt Commun 550:129993

Guo MH, Cai JX, Liu ZN, Mu TJ, Martin RR, Hu SM (2021) PCT: point cloud transformer. Comput Vis Media 7:187–199

Guo MH, Liu ZN, Mu TJ, Hu SM (2022) Beyond self-attention: external attention using two linear layers for visual tasks. IEEE Trans Pattern Anal Mach Intell 45(5):5436–5447

Han L, Du B, Sun L, Fu Y, Lv Y, Xiong H (2021) Dynamic and multi-faceted spatio-temporal deep learning for traffic speed forecasting. In: Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining. ACM, pp 547–555

Han L, Zhang R, Sun L, Du B, Fu Y, Zhu T (2023) Generic and dynamic graph representation learning for crowd flow modeling. Proc AAAI Conf Artif Intell 37:4293–4301

Hendrycks D, Gimpel K (2016) Gaussian error linear units (GELUs). arXiv preprint arXiv:1606.08415

Huo G, Zhang Y, Wang B, Gao J, Hu Y, Yin B (2023) Hierarchical spatio-temporal graph convolutional networks and transformer network for traffic flow forecasting. IEEE Trans Intell Transp Syst 24(4):3855–3867

Jiang W, Ma Z, Koutsopoulos HN (2022) Deep learning for short-term origin-destination passenger flow prediction under partial observability in urban railway systems. Neural Comput Appl 34:4813–4830

Ju W, Zhao Y, Qin Y, Yi S, Yuan J, Xiao Z, Luo X, Yan X, Zhang M (2024) COOL: a conjoint perspective on spatio-temporal graph neural network for traffic forecasting. Inf Fusion 107:102341

Kong J, Fan X, Jin X, Lin S, Zuo M (2023) A variational Bayesian inference-based en-decoder framework for traffic flow prediction. IEEE Trans Intell Transp Syst 25(3):2966–2975

Ling S, Yu Z, Cao S, Zhang H, Hu S (2023) STHAN: transportation demand forecasting with compound spatio-temporal relationships. ACM Trans Knowl Discov Data 17(4):1–23

Liu Z, Mao H, Wu CY, Feichtenhofer C, Darrell T, Xie S (2022) A ConvNet for the 2020s. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR). IEEE, New Orleans, pp 11976–11986

Mohammed M, Oke J (2023) Origin-destination inference in public transportation systems: a comprehensive review. Int J Transp Sci Technol 12(1):315–328

Nayakanti N et al (2023) Wayformer: motion forecasting via simple and efficient attention networks. In: 2023 IEEE international conference on robotics and automation (ICRA). IEEE

Nie L, Wang X, Zhao Q, Shang Z, Feng L, Li G (2023) Digital twin for transportation big data: a reinforcement learning-based network traffic prediction approach. IEEE Trans Intell Transp Syst 25(1):896–906

Noursalehi P, Koutsopoulos HN, Zhao J (2021) Dynamic origin-destination prediction in urban rail systems: a multi-resolution spatio-temporal deep learning approach. IEEE Trans Intell Transp Syst 23(6):5106–5115

Pan B, Demiryurek U, Shahabi C (2012) Utilizing real-world transportation data for accurate traffic prediction. In: 2012 IEEE 12th international conference on data mining. IEEE, pp 595–604

Predic B et al (2023) Cloud-load forecasting via decomposition-aided attention recurrent neural network tuned by modified particle swarm optimization. Complex Intell Syst 10:1–21

Qu H, Gong Y, Chen M, Zhang J, Zheng Y, Yin Y (2022) Forecasting fine-grained urban flows via spatio-temporal contrastive self-supervision. IEEE Trans Knowl Data Eng 35(8):8008–8023

Ristanoski G, Liu W, Bailey J (2013) Time series forecasting using distribution enhanced linear regression. In: Advances in knowledge discovery and data mining: 17th Pacific-Asia conference, PAKDD 2013, Gold Coast, Australia, April 14–17, 2013, proceedings, part I. Springer, pp 484–495

Rossi E, Chamberlain B, Frasca F, Eynard D, Monti F, Bronstein M (2020) Temporal graph networks for deep learning on dynamic graphs. arXiv preprint arXiv:2006.10637

Sankar A, Wu Y, Gou L, Zhang W, Yang H (2020) DySAT: deep neural representation learning on dynamic graphs via self-attention networks. In: Proceedings of the 13th international conference on web search and data mining. ACM, Houston, pp 519–527

Wang M, Li C, Ke F (2023) Recurrent multi-level residual and global attention network for single image deraining. Neural Comput Appl 35(5):3697–3708

Wang Y, Yin H, Chen H, Wo T, Xu J, Zheng K (2019) Origin-destination matrix prediction via graph convolution: a new perspective of passenger demand modeling. In: Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining. ACM, Anchorage, pp 1227–1235

Wu Z, Pan S, Long G, Jiang J, Chang X, Zhang C (2020) Connecting the dots: multivariate time series forecasting with graph neural networks. In: Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery and data mining. ACM, Virtual Event, pp 753–763

Yao H, Tang X, Wei H, Zheng G, Yu Y, Li Z (2018) Modeling spatial-temporal dynamics for traffic prediction. arXiv preprint arXiv:1803.01254

Yin G, Huang Z, Bao Y, Wang H, Li L, Ma X, Zhang Y (2023) ConvGCN-RF: a hybrid learning model for commuting flow prediction considering geographical semantics and neighborhood effects. GeoInformatica 27(2):137–157

Zeng J, Tang J (2023) Combining knowledge graph into metro passenger flow prediction: a split-attention relational graph convolutional network. Expert Syst Appl 213:118790

Zhang J, Che H, Chen F, Ma W, He Z (2021) Short-term origin-destination demand prediction in urban rail transit systems: a channel-wise attentive split-convolutional neural network method. Transp Res Part C Emerg Technol 124:102928

Zhang J, Zheng Y, Qi D, Li R, Yi X, Li T (2018) Predicting citywide crowd flows using deep spatio-temporal residual networks. Artif Intell 259:147–166

Zhang J et al (2023) An integrated multi-head dual sparse self-attention network for remaining useful life prediction. Reliab Eng Syst Saf 233:109096

Zhang Q, Huang C, Xia L, Wang Z, Li Z, Yiu S (2023) Automated spatio-temporal graph contrastive learning. In: Proceedings of the ACM Web Conference 2023. ACM, Austin, pp 295–305

Zhang R, Han L, Liu B, Zeng J, Sun L (2022) Dynamic graph learning based on hierarchical memory for origin-destination demand prediction. arXiv preprint arXiv:2205.14593

Zhao K et al (2023) Multi-scale integrated deep self-attention network for predicting the remaining useful life of aero-engine. Eng Appl Artif Intell 120:105860

Zhou NR, Zhang TF, Xie XW, Wu JY (2023) Hybrid quantum-classical generative adversarial networks for image generation via learning discrete distribution. Signal Process Image Commun 110:116891

Acknowledgements

Jiawei Lu and Lin Pan contributed equally to the research and the preparation of this manuscript.

Author information

Contributions

Jiawei Lu: Writing - Original Draft, Writing - Review & Editing, Conceptualization, Methodology; Lin Pan: Writing - Original Draft, Writing - Review & Editing, Formal analysis, Visualization; Qianqian Ren: Writing - Review & Editing, Visualization.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lu, J., Pan, L. & Ren, Q. Spatial-temporal memory enhanced multi-level attention network for origin-destination demand prediction. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01494-0

DOI: https://doi.org/10.1007/s40747-024-01494-0