Abstract

Accurate prediction of a lithium-ion battery’s State of Health (SOH) is critical for efficient battery health management. Existing data-driven estimation methods suffer from high model complexity and a lack of guidance from prior knowledge, both of which limit their efficacy. This work introduces a novel cross-modal distillation network for battery SOH estimation, with a TransformerEncoder as the teacher network and a Convolutional Neural Network (CNN) as the student network. First, the teacher model is pre-trained offline on SOH degradation data to learn the degradation patterns. Directly measurable feature data (such as voltage, temperature, and current) are then fed into the student network for online training and computation of a hard loss. The student network’s output is in turn passed to the pre-trained teacher network to compute a soft loss, which supplies prior knowledge of the degradation laws and steers the optimization of the student network. Experiments on several datasets validate the model’s estimation accuracy and its adherence to degradation rules. Among the five models compared, the proposed model performs best on almost all datasets, achieving an RMSE of 0.0097 and an MAE of 0.0065 on Cell1 of the Oxford dataset. The model also performs robustly across different usage scenarios, including multi-battery estimation. In addition, this paper introduces a fine-tuning method for SOH prediction that uses only the first half of the data. Comparative analysis with other models underscores the competitiveness of the proposed model and its potential for broader application.

Introduction

In an era marked by unprecedented dependence on technology, lithium-ion batteries (LIBs) have become a pivotal component of everyday life [1, 2]. They power a wide array of devices, from smartphones and portable electronics to electric vehicles (EVs) and renewable energy storage systems. Monitoring and predicting battery health in real time extends battery life, improves energy efficiency, ensures a stable energy supply, and enhances the user experience [3,4,5]. The health and longevity of these batteries are critical determinants of their optimal functioning and safe operation [6,7,8]. Owing to degradation mechanisms such as irreversible chemical reactions, electrode material degradation, and unwanted byproduct formation on the electrode surfaces, battery performance and safety progressively deteriorate [8].

The State of Health (SOH) is a key parameter that assesses the overall condition and remaining lifespan of a battery by expressing its current capacity as a percentage of its initial capacity [9, 10]. Precise prediction of SOH is integral to ensuring the battery’s reliable performance and prolonging its lifespan, thereby contributing to cost-efficiency and environmental sustainability [11,12,13]. Various methodologies have been proposed for predicting the SOH of LIBs, which can be broadly divided into two categories: model-driven and data-driven strategies.

Model-driven approaches primarily leverage empirical models, physics-electrochemical-based models, and fusion models, combined with prior knowledge or adaptive filters, for SOH prediction [14,15,16,17]. Empirical models construct predictive relationships from historical data and experimental findings, and include exponential models [18, 19], polynomial models [20], and ensemble models [21]. These models are highly adaptable and require minimal domain-specific knowledge. Their theoretical foundation is nonetheless a weakness, since they describe relationships in the data rather than explain the underlying mechanisms. Moreover, the efficacy of empirical models depends heavily on the integrity of the input data and the quality of the model: noise, errors, missing values, or inappropriate modeling assumptions can significantly compromise performance. Real-world SOH data are often noisy and sporadic, and appropriate model assumptions are hard to identify, which makes empirical models poorly suited to realistic SOH prediction.

Physics-electrochemical-based models, by contrast, incorporate both observed data and the underlying physical and electrochemical principles [22,23,24]. This tends to increase their accuracy but also their complexity. Consequently, these models typically demand substantial computational resources, which can be a significant constraint in large-scale SOH prediction scenarios. They also depend on multiple parameters that must be estimated from data, and direct measurement or estimation of these parameters can be difficult in the context of LIB SOH estimation. Their efficacy further hinges on the quality of the battery model employed, yet developing a precise battery model is challenging because it requires extensive prior knowledge and experimental data, which may not always be readily accessible.

Fusion models strive to combine the strengths of empirical and physics-electrochemical-based models [25]. They use a substantial volume of data for training while also accounting for the physical and electrochemical processes underlying the data. Consequently, fusion models often yield more accurate predictions than either type of model alone. However, their considerable computational and data requirements, along with their high parameter sensitivity, limit their applicability to SOH prediction tasks. Furthermore, building and fine-tuning fusion models requires a thorough understanding of both empirical methods and the relevant physics and electrochemistry, which further complicates the modeling process.

In recent years, data-driven methodologies have demonstrated remarkable success across diverse fields. They have also proven pivotal in forecasting battery SOH, since they circumvent the need for an in-depth understanding of complex physical phenomena, which significantly increases adaptability under various real-world conditions. Such methodologies aim to elucidate the relationship between SOH and a representative feature vector during the aging process [26]. By leveraging extensive datasets and applying machine learning algorithms, data-driven methods provide valuable insights into battery SOH, which in turn aids the development of more efficient and reliable energy storage systems.

Data-driven approaches fall broadly into traditional machine learning (ML) methodologies and deep learning (DL) techniques. Traditional ML approaches, including Support Vector Machines (SVM) [27, 28], Random Forest (RF) [29], and Gaussian Process Regression (GPR) [30], are capable of modeling non-linear relationships and recognizing inherent patterns. However, these techniques depend heavily on proper feature selection; irrelevant or redundant features can markedly impair model performance. Since feature selection and extraction are usually manual processes, they can be time-consuming and require a thorough understanding of both the data and the task at hand.

In contrast, deep learning methodologies, such as Deep Neural Networks (DNNs) [31], Convolutional Neural Networks (CNNs) [32], Long Short-Term Memory (LSTM) networks [33], and Transformers [34], alleviate this issue. These techniques can learn and extract high-level features from raw data autonomously, significantly reducing the manual feature extraction burden. Moreover, each of these models brings distinct benefits to SOH prediction. For instance, CNNs have a powerful multi-scale feature extraction ability, which has been demonstrated in image processing and State of Charge (SOC) prediction [35,36,37]. This capability enables CNNs to offer detailed insights into the battery’s condition. Nonetheless, CNNs are limited in battery health estimation by their insensitivity to input sequence order, which hinders their ability to discern temporal dependencies in data sequences [36]. Conversely, time-series models such as LSTM networks and Recurrent Neural Networks (RNNs) are inherently designed to process temporal data, and their effectiveness has been validated in many fields, such as oil price prediction, energy load prediction, and anomaly detection in electroencephalography (EEG) data [38,39,40]. Temporal data, a prevalent aspect of battery SOH prediction involving chronological records of voltage, current, and temperature, have been shown to be well suited to analysis with time-series models [36]. To combine the strengths of various models, researchers have explored hybrid models, such as CNN-LSTM [41], Convolutional-Bidirectional Gated Recurrent network [42], and Bidirectional-LSTM-Attention [43], which merge multiple architectures to leverage their collective advantages. However, these combined models often resemble a simple superposition of several models and do not necessarily exploit the individual advantages of the sub-models fully. This remains a challenge for future research and model development.

The preceding discussion of data-driven models reveals a crucial issue: most SOH predictions from these models do not incorporate prior knowledge, such as the rules of degradation. As a result, while these models may deliver numerically sound results, they may fail to capture actual degradation trends. A vital task of contemporary research is thus to refine the models so that they are numerically accurate while faithfully following real-world degradation trajectories. One promising solution is Knowledge Distillation (K-D), a strategy with potential applicability to SOH estimation tasks [44]. K-D’s fundamental aim is to distill and generalize the knowledge encapsulated within a more sophisticated “teacher” model. This distilled knowledge is then used to guide a simpler “student” model towards optimal performance with reduced computational requirements. In the context of SOH estimation, a well-configured teacher model can provide valuable insights into SOH’s change patterns, directing the student model’s optimization trajectory more accurately [45]. This capability enhances overall model performance even when training uses only a limited set of simple features. K-D uses the teacher model’s predictions as “soft labels,” enabling the student model to be trained without an extensive labeled dataset and thus allowing effective training even when data are scarce [44].

In addition, the estimation of battery SOH is a complex time-series task, influenced by an array of dynamic factors such as charge cycles, current, and temperature. A well-trained teacher model captures these intricate time-series dynamics and transfers this understanding to the student model during the distillation process. Moreover, battery SOH degradation exhibits nonlinearity, long-term dependence, and periodicity, all of which contribute to its complexity. An offline pre-trained teacher model can discern these intricate rules. Meanwhile, the student model, guided by the K-D strategy, learns a simplified representation of these rules during online SOH estimation. This learning approach reduces the model’s complexity and computational requirements while preserving its core predictive power.

Informed by the preceding discussion, this study proposes a cross-modal distillation-based deep learning model that uses a CNN and a TransformerEncoder to estimate the SOH of LIBs. Within this configuration, the TransformerEncoder operates as the teacher model, and the CNN serves as the student model. The K-D framework is employed to transfer the SOH change patterns of LIBs and thereby guide the optimization of the proposed model; this guidance enters the model’s loss function. Consequently, the proposed method integrates both the prior knowledge of SOH degradation contributed by the teacher model and the multi-scale feature extraction ability provided by the student model, which ultimately enhances SOH estimation performance. Prior research on LIB capacity estimation, as well as related studies in machinery, computer vision, and natural language processing, has validated the superior ability of CNNs to extract multi-scale features in comparison to traditional machine learning methods. Concurrently, the TransformerEncoder has displayed remarkable performance in learning temporal dependencies within time-series data, outperforming other models, notably those based on RNNs, in most scenarios. Given this background, the current study employs a K-D framework incorporating a CNN and a TransformerEncoder to estimate the SOH of LIBs, thus introducing a fresh perspective on SOH prediction. The contributions of this paper are as follows:

(1) This study proposes, for the first time, a novel cross-modal K-D strategy and validates its efficiency on the SOH estimation tasks of LIBs. What sets this method apart is its integration of domain-specific knowledge into the machine learning framework, in contrast to prevailing machine learning models that operate largely on data-driven insights without adequately considering the underlying patterns of SOH changes. The K-D strategy not only enhances predictive accuracy but also lends the model interpretability by embedding prior knowledge into its architecture.

(2) The distillation process is designed to not only transfer knowledge from the teacher to the student model but also to enrich the student model’s learning with the unique capabilities of the teacher model. This involves a novel training strategy where the student model learns to mimic the teacher’s output on the same input data while also gaining insights from the teacher model’s internal representations. This dual learning objective encourages the student model to develop a more robust understanding of the data, leading to improved performance on SOH prediction tasks.

(3) The student model, a CNN, exhibits intrinsic robustness in multi-scale feature extraction, effectively mitigating feature extraction challenges. Concurrently, the teacher model, a Transformer Encoder, adeptly discerns and integrates SOH evolution rules into the objective function. This strategic integration capitalizes on the respective strengths of CNN and Transformer Encoder, culminating in superior estimation performance.

(4) The empirical evidence underscores the precision of the proposed model, demonstrating significant improvements over previous models through optimal performance metrics and successful significance testing. This study introduces ‘direct gain’ and ‘effective gain’ as novel metrics to assess the impact of the cross-modal K-D strategy, comparing the baseline CNN model against our proposed architecture. While an anomaly was observed in NASA’s cell B0031, showing negative gains, this exception underscores the cross-modal K-D strategy’s effectiveness, further affirming the proposed network’s superiority and efficacy relative to existing models.

The remainder of this paper is organized as follows. The Methodology section briefly introduces the definition of SOH, the knowledge distillation strategy, the structure of the proposed network, the loss functions, the optimization process, and the SOH prediction process. The Datasets and experimental details section describes the datasets used in this paper and the implementation details. The Results and discussions section presents and analyzes the experimental results. Finally, the Conclusion section summarizes the paper.

Methodology

Definition of SOH

SOH is a commonly used term in battery management systems that refers to the overall condition of a battery compared to its ideal, new condition. Typically, the SOH of LIBs decreases gradually with charge/discharge cycles. Factors such as the battery’s operating conditions, temperature, charging rate, number of charge cycles, and depth of discharge all affect SOH. The SOH of LIBs can be estimated and measured by different methods, the most common of which is to monitor the capacity degradation of the battery. Other methods include voltage degradation analysis, internal resistance measurements, and electrochemical impedance spectroscopy. SOH is very important for the use and management of batteries: it helps users understand the performance status of the battery and perform proper maintenance, and it supports battery reliability and life prediction. When the SOH decreases to a certain level, it may be necessary to take measures such as replacing the battery or performing maintenance. SOH is usually expressed as a percentage, with 100% indicating that the battery is in new or ideal condition. In practice, it is common to set SOH thresholds to indicate the health status of the battery, and these thresholds can be adjusted for different applications and battery types. For example, for lithium batteries in electric vehicles, battery replacement or repair is generally considered when the SOH decreases to about 80%. In essence, SOH measures the battery’s current performance capability relative to when it was new, which helps to predict the remaining useful life of the battery and to decide when it might need to be replaced or serviced. SOH is calculated as Eq. 1, where \(C_{current}\) is the current capacity and \(C_{initial}\) stands for the initial capacity of the battery.

\[ SOH = \frac{C_{current}}{C_{initial}} \times 100\% \qquad (1) \]
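
As a minimal sketch of the capacity-based definition, the ratio of current to initial capacity can be computed and compared against a service threshold. The function names, the capacity values, and the default 80% threshold (taken from the electric-vehicle example in the text) are illustrative assumptions, not part of the proposed method.

```python
def state_of_health(current_capacity_ah: float, initial_capacity_ah: float) -> float:
    """Return SOH as a percentage: current capacity relative to initial capacity."""
    if initial_capacity_ah <= 0:
        raise ValueError("initial capacity must be positive")
    return 100.0 * current_capacity_ah / initial_capacity_ah


def needs_service(soh_percent: float, threshold_percent: float = 80.0) -> bool:
    """Flag a cell whose SOH has dropped to or below the chosen threshold."""
    return soh_percent <= threshold_percent


# Hypothetical capacities in ampere-hours, for illustration only.
soh = state_of_health(current_capacity_ah=0.666, initial_capacity_ah=0.740)
print(f"SOH = {soh:.1f}%", "-> service" if needs_service(soh) else "-> ok")
```

The threshold is a parameter rather than a constant because, as noted above, it depends on the application and battery type.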

Knowledge distillation framework

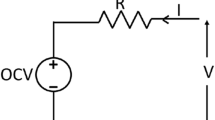

In this study, a novel cross-modal K-D framework is introduced that departs from the traditional paradigm in which the teacher and student networks are typically architecturally similar. Our framework instead employs heterogeneously structured networks, with a Transformer Encoder as the teacher and a CNN as the student, as shown in Fig. 1. By integrating two distinct network architectures, the framework capitalizes on the strengths of each model. The Transformer Encoder, known for its proficiency in capturing long-range dependencies within sequential data, is trained offline on the SOH data and imparts its understanding of complex patterns to the CNN. The CNN, in turn, complements the Transformer’s capabilities with its efficiency in processing spatial hierarchies and extracting local features. This synergistic interaction enhances the overall predictive performance of the student network, enabling a more nuanced and comprehensive representation of the input data.

One of the primary virtues of a cross-modal K-D framework lies in its ability to mitigate model redundancy. Traditional K-D frameworks, which use architecturally identical teacher and student models, risk reinforcing the learning of redundant features, thereby limiting the potential for model enhancement. Our approach, by contrast, encourages the exploration of data from diverse perspectives, reducing model redundancy and complexity without compromising the core predictive capabilities. Moreover, the heterogeneity of the teacher and student networks introduces differential sensitivities to data noise and outliers. Through the knowledge distillation process, the student model inherits the robustness of the teacher model, which improves its stability and reliability under adverse data conditions. This is particularly advantageous in applications where data quality varies or is prone to disturbances. The cornerstone of our framework is knowledge transfer from the TransformerEncoder, which encapsulates intricate patterns and dependencies within the data, to the CNN. This transfer enables the student model to draw on the teacher model’s analytical capability, reducing prediction errors and enhancing the overall efficacy of the K-D process.

To clarify the model’s internal computation, the process is described mathematically as follows. Given an SOH degradation dataset \(D=\left\{ y^{(k)}|k=1,\ldots ,N\right\} \), where \(y^{(k)}\) denotes the SOH at the kth cycle, a pre-training dataset for the teacher network \(D_{T}=\left\{ X^{(k)},y^{(k)} \mid k=L+1,\ldots ,N\right\} \) can be constructed by sliding a time window over the series, where every sample \(X^{(k)}=[y^{(k-1)},\ldots , y^{(k-L)}]\) has one channel and L time steps and is associated with a ground truth \(y^{(k)}\). Then, a simple TransformerEncoder is established as the teacher network to learn a nonlinear mapping \(\mathcal {F}_T:R^{L} \longrightarrow R^{1}\), which reflects the degradation of SOH. Given the teacher network \(\mathcal {F}_T\), a series of estimated SOH values \(\tilde{y}^{(k)}_T\) is derived, calculated as Eq. 2.

\[ \tilde{y}^{(k)}_{T} = \mathcal {F}_T\left( X^{(k)}; \theta _{T}\right) \qquad (2) \]

\(\mathcal {F}_T\) applies a series of transformations with parameters \(\theta _{T}\) to the input sequence to produce an estimate of the ground truth value. Here, \({X}^{(k)}\) is the ground truth series from the \((k-1)\)th cycle back to the \((k-L)\)th cycle, and \(\tilde{y}^{(k)}_{T}\) is the output of the teacher network at the kth time step, that is, the predicted value for the kth cycle. With this design, the important temporal information in the input sequence can be extracted and mapped to the corresponding ground truth values.
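
The window-sliding construction of \(D_T\) can be sketched in a few lines. This is an illustration under stated assumptions: the function name `make_teacher_windows` and the SOH values are hypothetical, and the series is stored with cycle k at list index k-1.

```python
from typing import List, Tuple


def make_teacher_windows(soh: List[float], L: int) -> List[Tuple[List[float], float]]:
    """Build (X^(k), y^(k)) pairs with X^(k) = [y^(k-1), ..., y^(k-L)].

    `soh` holds y^(1)..y^(N) at indices 0..N-1, so cycle k sits at index k-1.
    """
    if L < 1 or L >= len(soh):
        raise ValueError("window length L must satisfy 1 <= L < len(soh)")
    samples = []
    for idx in range(L, len(soh)):           # idx = k - 1 for k = L+1..N
        window = soh[idx - L:idx][::-1]      # most recent cycle first, as in X^(k)
        samples.append((window, soh[idx]))
    return samples


# Hypothetical, made-up SOH values (fractions of initial capacity).
soh_series = [1.00, 0.99, 0.98, 0.96, 0.95, 0.93]
for window, target in make_teacher_windows(soh_series, L=3):
    print(window, "->", target)
```

A series of N cycles yields N - L samples, which matches the index range k = L+1, …, N in the definition of \(D_T\).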

In a similar manner, consider a dataset of directly measurable features (voltage, current, etc.) \(D_{origin}=\left\{ x^{(k)}_{origin}|k=1,2,\ldots ,N\right\} \), where \(x^{(k)}_{origin}\) represents the original feature data for the kth cycle. These \(x^{(k)}_{origin}\) might not lie in the same Euclidean space, because the number of data points varies from cycle to cycle. Consequently, feeding such raw data into certain DL models, particularly CNNs, which demand a fixed input dimension, requires preprocessing. One viable option is to resample each cycle to a fixed length without losing a significant amount of information. Furthermore, disparate units and ranges among the features may hinder a fair comparison and weighting of these features during training: features with larger value ranges could disproportionately influence the model, potentially introducing bias. To rectify this and promote a more equitable contribution from all features, the data are standardized, which eliminates differences in scale and also speeds up model convergence. In light of these considerations, a pre-training dataset, denoted as \(D_{S}=\left\{ x^{(k)},y^{(k)}\right\} \), is constructed for the student network using the resampling and standardization techniques. Here, \(y^{(k)}\) represents the ground truth for the kth cycle. Subsequently, a straightforward CNN is established as the student network, with the aim of learning a nonlinear mapping \(\mathcal {F}_S:R^{C \times W \times H} \longrightarrow R^{1}\) (C, W, and H represent the number of features, the width of each feature block, and the height of each feature block, respectively) that captures the relationship between the measured features and the SOH. With the student network \(\mathcal {F}_S\), the SOH can be estimated. The output of the student network, denoted as \(\tilde{y}_S\), is calculated using Eq. 3,

\[ \tilde{y}^{(k)}_{S} = \mathcal {F}_S\left( x^{(k)}; \theta _{S}\right) \qquad (3) \]

where \(\theta _{S}\) denotes the parameters of the student network, and \(\tilde{y}_S^{(k)}\) is the estimated SOH of the kth cycle.
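
The two preprocessing steps described above, resampling to a fixed length and standardization, might look as follows. This is a sketch under assumptions: the text does not specify the resampling scheme or which statistics are used for standardization, so linear interpolation and per-sequence z-scoring are chosen here purely for illustration, and all names and values are hypothetical.

```python
from statistics import mean, pstdev
from typing import List


def resample_linear(values: List[float], target_len: int) -> List[float]:
    """Resample a variable-length sequence to `target_len` points by linear interpolation."""
    if target_len < 2 or len(values) < 2:
        raise ValueError("need at least two points in both input and output")
    step = (len(values) - 1) / (target_len - 1)
    out = []
    for i in range(target_len):
        pos = i * step
        lo = min(int(pos), len(values) - 2)   # left neighbour index
        frac = pos - lo
        out.append(values[lo] * (1.0 - frac) + values[lo + 1] * frac)
    return out


def standardize(values: List[float]) -> List[float]:
    """Z-score standardization: zero mean, unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Two hypothetical voltage traces of different lengths, aligned to 4 points each.
short_cycle = [3.0, 3.6, 4.2]
long_cycle = [3.0, 3.2, 3.4, 3.6, 3.8, 4.0, 4.2]
fixed = [standardize(resample_linear(c, target_len=4)) for c in (short_cycle, long_cycle)]
print([len(f) for f in fixed])
```

In practice the mean and standard deviation are often computed per feature over the training set rather than per sequence; which variant the authors use is not stated in this section.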

Network description

Teacher network. The choice of a suitable teacher network, which must learn the degradation laws of battery SOH, is a pivotal consideration in this task. This paper uses a TransformerEncoder network as the foundation of the teacher network. Unlike RNN-based architectures, such as LSTM or Gated Recurrent Units (GRU), the TransformerEncoder does not process the sequence recurrently and therefore avoids the vanishing and exploding gradient issues associated with recurrence, which enables it to capture long-term dependencies in the data more effectively [46]. This attribute is paramount in SOH estimation tasks, where the degradation of battery health is driven by an extensive history of operational conditions and usage patterns. Additionally, the TransformerEncoder does not impose any presuppositions regarding the sequential order of the input data, endowing it with a certain degree of scale invariance [46, 47]. In SOH prediction, where degradation rates can differ significantly under varying operational conditions, this characteristic allows the model to concentrate on relative shifts in the health state instead of being excessively affected by the data’s scale. Considering these merits, the TransformerEncoder is selected as the core of the teacher network; its structure is shown in Fig. 2.

Student network. As shown in Fig. 3, the student network in the proposed cross-modal K-D framework employs a CNN as its core. Recognized for its capacity to discern and extract significant features from raw data automatically, the CNN eliminates the need for manual feature engineering [48]. This capability is particularly advantageous in SOH prediction, given the intricate interplay of factors influencing battery degradation. Originally conceived for image processing, where inputs are inherently high-dimensional (e.g., width, height, color channels), the CNN is intrinsically equipped to manage high-dimensional data. This makes it well suited to the variety of data dimensions involved in battery SOH estimation tasks, such as voltage, current, temperature, and charge/discharge cycles. In addition, the CNN’s shared-weights architecture and inherent translation invariance ensure that it is not unduly sensitive to the specific positions of features within the input data. This property is beneficial for SOH prediction, where specific degradation patterns may manifest at different lifecycle stages of the battery. Furthermore, the robustness of CNNs against noise and outliers, which are commonplace in real-world battery usage data, makes them more reliable in delivering consistent prediction performance.

The architecture of the student network is structured around four Conv2d layers, two Maxpool2d layers, a Flatten layer, and two fully connected layers. The Conv2d layers extract local features, while the fully connected layers integrate and compress these features, culminating in an output that represents the estimated SOH for the current cycle. Inputs to the student network are formatted as matrices with dimensions \(C \times W \times H\), where \(W \times H\) defines the size of each input block, and C indicates the depth of the input, or the number of input features.
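
To make the layer stack concrete, the following sketch traces tensor shapes through four Conv2d layers and two Maxpool2d layers and reports the size seen by the Flatten layer. The layer ordering, channel widths, 3x3 kernels with padding 1, 2x2 pooling, and the 3 x 16 x 16 input are all assumptions made for illustration; the section does not give these hyperparameters.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (channels, width, height)


def conv2d_shape(shape: Shape, out_channels: int, kernel: int = 3,
                 stride: int = 1, padding: int = 1) -> Shape:
    """Output shape of a square-kernel 2-D convolution (dilation 1)."""
    _, w, h = shape
    w_out = (w + 2 * padding - kernel) // stride + 1
    h_out = (h + 2 * padding - kernel) // stride + 1
    return (out_channels, w_out, h_out)


def maxpool2d_shape(shape: Shape, kernel: int = 2) -> Shape:
    """Output shape of non-overlapping max pooling (stride equal to kernel)."""
    c, w, h = shape
    return (c, w // kernel, h // kernel)


def student_flatten_size(input_shape: Shape) -> int:
    """Trace shapes through 4 Conv2d + 2 MaxPool2d layers and return the Flatten size.

    The layer order and channel widths below are assumptions, not the paper's settings.
    """
    s = conv2d_shape(input_shape, out_channels=16)
    s = conv2d_shape(s, out_channels=16)
    s = maxpool2d_shape(s)
    s = conv2d_shape(s, out_channels=32)
    s = conv2d_shape(s, out_channels=32)
    s = maxpool2d_shape(s)
    c, w, h = s
    return c * w * h


# Hypothetical input: C=3 features (e.g. voltage, current, temperature), 16 x 16 block each.
print(student_flatten_size((3, 16, 16)))
```

The returned value is the input width of the first fully connected layer; the second fully connected layer would then map down to the single SOH estimate.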

Adopting a matrix rather than a vector representation for each feature’s data presents multiple benefits. Primarily, in SOH prediction the raw data often exhibit temporal correlations (each data point is associated with its adjacent points), and a matrix representation preserves this temporal information. This preservation is critical for CNNs to capture spatial correlations within the raw data through convolutional operations, allowing them to identify local patterns and spatial distributions that enrich feature representation learning.

Furthermore, matrix representation enhances data reusability and parameter efficiency. It facilitates weight sharing across matrix elements under diverse convolution kernels, substantially reducing the model’s parameter count and thereby accelerating computation. In contrast, vector-based feature representation might necessitate distinct weights for each data point, increasing both storage and computational demands.

Moreover, matrix representation adeptly captures the spatial structure and inter-feature correlations. By organizing raw data into a 3D matrix, it leverages the spatial distribution of data points across dimensions. Here, the \(W\times H\) grid organizes each feature’s data points, allowing rows and columns to denote distinct features and their spatial positions. This structure offers insights into the relationships between features, enabling an analysis of feature variations within specific grid regions. Such a 3D data representation not only elucidates feature interrelations but also aligns seamlessly with CNN model inputs, optimizing the data’s suitability for convolutional processing.
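
One way to arrange per-feature sequences into the \(C \times W \times H\) block described above is sketched below. The row-by-row fill order and the function names are assumptions for illustration; the section does not state how samples are laid out within each \(W \times H\) grid.

```python
from typing import List


def to_grid(values: List[float], width: int, height: int) -> List[List[float]]:
    """Reshape a flat sequence of width*height samples into `width` rows of `height` values."""
    if len(values) != width * height:
        raise ValueError("sequence length must equal width * height")
    return [values[r * height:(r + 1) * height] for r in range(width)]


def stack_features(features: List[List[float]], width: int, height: int) -> List[List[List[float]]]:
    """Stack C per-feature grids into a C x W x H nested list."""
    return [to_grid(f, width, height) for f in features]


# Hypothetical resampled traces, 6 samples per feature -> a 3 x 2 x 3 block.
voltage = [3.0, 3.2, 3.4, 3.6, 3.8, 4.0]
current = [1.5, 1.5, 1.5, 1.2, 0.8, 0.3]
temperature = [25.0, 25.4, 26.1, 26.8, 27.0, 26.9]
block = stack_features([voltage, current, temperature], width=2, height=3)
print(len(block), len(block[0]), len(block[0][0]))
```

With this layout, consecutive samples stay adjacent within a row, so a convolution kernel sliding over the grid sees temporally neighbouring points together.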

Loss functions

Teacher loss. The Mean Squared Error (MSE) loss is used to measure the discrepancy between predicted and true values when training the teacher model. Because SOH is a continuous quantity, the quantity of interest during training is the magnitude of the difference between predicted and true values. The MSE measures this difference directly and averages the prediction errors, which allows the performance of the teacher network to be evaluated fully. Minimizing the teacher loss \(\mathcal {L}_{Teacher}\) during training encourages the model to converge towards more accurate predictions. Given a pair of SOH series, namely the predicted output of the teacher network \(\tilde{y}_{T}\) and the true SOH series y (after window sliding), the teacher loss \(\mathcal {L}_{Teacher}\) is calculated as Eq. 4.
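
Written out under the definitions above, the MSE over the windowed samples takes the standard form below. The normalization by \(N-L\) is an assumption that follows from the index range \(k=L+1,\ldots ,N\) of \(D_T\); the authors' own statement of Eq. 4 may use a different but equivalent notation.

```latex
\mathcal{L}_{Teacher} = \frac{1}{N-L} \sum_{k=L+1}^{N} \left( \tilde{y}^{(k)}_{T} - y^{(k)} \right)^{2}
```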

The teacher loss above governs the teacher model’s offline training phase. Online, real-time training of the student network must account for both prediction accuracy and knowledge transfer, which calls for more than one loss term. Two terms are therefore used: a hard loss, which reflects direct learning from ground truth labels, and a soft loss, which reflects knowledge acquired from the teacher model. A composite loss function is formed as a weighted combination of the two.

The following paragraphs describe the soft loss, hard loss, and total loss used in the online training of the student network. The primary aim of these functions is to streamline knowledge transfer from the teacher to the student model, so that the student can recognize patterns and predict accurately on its own. Weighting and combining the hard loss (direct learning from true labels) with the soft loss (insights transferred from the teacher model) yields a single training objective. This combined loss lets the student model both emulate the teacher model and predict the true labels accurately, which improves its generalization to unseen data.

Student loss

The construction of the student loss function is pivotal to the effectiveness of the learning model and comprises two components: hard loss and soft loss. The hard loss is determined by the discrepancy between the student model’s predictions and the actual output, providing a direct learning mechanism from the ground truth labels. Conversely, the soft loss is derived from the student model’s output processed through the teacher model, leveraging the teacher’s pre-learned empirical knowledge, specifically the degradation mechanisms of battery capacity. The overall loss is a weighted combination of these two losses. This balanced approach not only helps the student model mimic the teacher model but also enhances its generalization capabilities. The inclusion of soft loss, informed by the teacher model’s soft labels, allows the student model to grasp nuanced patterns and interdependencies within the data that hard labels may not reveal, and thus to perform better on unseen data. The total loss is given by Eq. 5, with \(\alpha \) representing the weighting coefficient. This construction ensures that the learning process captures both explicit and implicit knowledge.

Soft loss Within the student loss, the soft loss is calculated from the outputs of the TransformerEncoder-based teacher network and the CNN-based student network. It is the MSE between the teacher’s outputs (termed “soft labels”) and the student’s outputs, and measures the discrepancy in their comprehension of SOH patterns. In essence, the soft loss quantifies the gap between the knowledge imparted by the teacher network and the student network’s current understanding. Minimizing it helps the student learn complex SOH patterns from the teacher, which improves the student’s pattern recognition and predictive accuracy and ensures the effective transfer of knowledge during the cross-modal K-D phase. The soft loss thus also serves as a metric to gauge and confirm the student model’s acquisition of knowledge from the teacher model. It is expressed as Eq. 7, with \(\hat{y}^{(k)}_{T}\) denoting the kth output from the teacher network, obtained through \(\mathcal {F}_{T}\), and \(\tilde{y}^{(k)}_{S}\) representing the corresponding output from the student network.

Hard loss The other component of the student loss is the hard loss, calculated for the CNN-based student network. It measures the discrepancy between the CNN’s predictions and the actual ground truth, i.e., the “hard labels.” This discrepancy directs the student network’s learning trajectory, aiming to minimize the errors between predicted and actual SOH values. Minimizing the hard loss optimizes the student model’s parameters and enhances its predictive accuracy. The hard loss is the basis for backpropagation and gradient descent, the fundamental training mechanisms of the CNN, ensuring the student’s capability to understand data patterns and predict SOH on its own. While the teacher network’s knowledge aids the student’s learning, the student’s ability to make precise predictions independently is vital. The hard loss, expressed as Eq. 8 with \(y^{(k)}\) representing the kth ground truth, serves as a critical metric for evaluating the student network’s independent learning and prediction accuracy.
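The interplay of the hard, soft, and total losses described above can be sketched in plain Python. This is a minimal illustration rather than the authors’ implementation: the function names, the `teacher` callable, the alignment of teacher predictions with `student_out[window:]`, and the convex weighting \(\alpha \cdot hard + (1-\alpha )\cdot soft\) are all assumptions, since the text only states that the two losses are weighted by \(\alpha \).

```python
from typing import Callable, List, Sequence, Tuple


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equally long sequences."""
    if len(pred) != len(target):
        raise ValueError("sequences must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def student_losses(
    student_out: List[float],
    ground_truth: List[float],
    teacher: Callable[[List[float]], float],
    window: int = 3,
    alpha: float = 0.5,
) -> Tuple[float, float, float]:
    """Return (hard, soft, total) for one series of student SOH outputs."""
    # Hard loss: student predictions against the ground-truth SOH labels.
    hard = mse(student_out, ground_truth)

    # Soft loss: the frozen teacher reads sliding windows of the student's
    # own output and predicts the next value (its "soft label").
    # Assumption: the soft label for window i is compared with the
    # student output at position i + window.
    soft_labels = [
        teacher(student_out[i:i + window])
        for i in range(len(student_out) - window)
    ]
    soft = mse(soft_labels, student_out[window:])

    # Assumed convex combination; another weighting form is possible.
    total = alpha * hard + (1 - alpha) * soft
    return hard, soft, total
```

With \(\alpha = \frac{1}{2}\), the value selected later in the paper, both terms contribute equally under this assumed weighting.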

Optimizer

With the loss function defined, this section turns to the optimizer, a pivotal component that improves the neural network’s performance by minimizing the loss function. Once the final optimization objective (loss function) is established, the parameters of the model can be updated using the BP algorithm and the optimizer. In this paper, Adam [49] is utilized. Compared with other optimizers such as Stochastic Gradient Descent (SGD) [50] and Root Mean Square Propagation (RMSProp) [50], Adam combines the advantages of RMSProp and Momentum, calculating the exponential moving average of the gradients as well as the exponential moving average of the squared gradients. This allows for finer adjustment of the step size, often leading to faster convergence. Empirically, experiments with different optimizers were conducted: the model’s performance on Cell1, Cell3, and Cell5 of the Oxford dataset when optimized by Adam, SGD, and RMSProp was evaluated, and the results are shown in Table 1. The results show that Adam performs significantly better than the others on all three selected cells. Thus, Adam is adopted.

In the Adam algorithm, the effective learning rate is adapted automatically during training, which helps prevent an ill-suited step size from slowing convergence towards the global optimum. The optimization process with respect to the parameter \(\theta \) is formulated as Eqs. 9–14:

where \(g_q\) is the gradient with respect to \(\theta _q\); over the whole network, it comprises the gradients of the student model and the teacher model. t stands for the current training epoch. \(m_t\) and \(V_t\) represent the momentum (first-moment estimate) and the second-moment estimate that drives the adaptive learning rate, respectively. \(\beta _1\) and \(\beta _2\) are two hyperparameters, which are usually set to 0.9 and 0.999. Because \(\beta _1\) and \(\beta _2\) are close to 1 and both estimates start from zero, \(m_t\) and \(V_t\) are biased towards 0 in the early stage of training. To mitigate this problem, \({\hat{m}}_t\) and \({\hat{V}}_t\) are used for bias correction. \(\eta \) is the learning rate, and \(\varepsilon \) is a smoothing coefficient that prevents the denominator from being 0, set to \(10^{-8}\) in this paper.
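The update described above can be illustrated with a scalar version of the standard Adam rule. The sketch below uses the symbols from the text (\(m_t\), \(V_t\), \(\beta _1\), \(\beta _2\), \(\eta \), \(\varepsilon \)); the function names and the toy objective \(f(\theta ) = \theta ^2\) are illustrative assumptions and are not taken from the paper.

```python
import math
from typing import Tuple


def adam_step(
    theta: float, grad: float, m: float, v: float, t: int,
    lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
    eps: float = 1e-8,
) -> Tuple[float, float, float]:
    """One Adam update for a single scalar parameter (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad          # moving average of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # moving average of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)                # bias-corrected second moment
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


def minimize_quadratic(steps: int = 3000, lr: float = 0.01) -> float:
    """Toy usage: minimize f(theta) = theta**2 starting from theta = 5."""
    theta, m, v = 5.0, 0.0, 0.0
    for t in range(1, steps + 1):
        grad = 2 * theta                        # derivative of theta**2
        theta, m, v = adam_step(theta, grad, m, v, t, lr=lr)
    return theta
```

Because of the bias correction, the very first step has a magnitude close to the learning rate regardless of the gradient scale, which is the behaviour the correction terms are meant to restore.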

SOH prediction process overview

With the proposed model, the SOH estimation process can be roughly divided into four parts: data preparation, offline training of the teacher network, online training of the student network, and SOH prediction, as shown in Fig. 4.

(1) Data Preparation. In data preparation, experimental datasets from batteries subjected to varied charging and discharging protocols are collected and preprocessed. Specifically, the SOH degradation data designated for offline training of the teacher network is processed by a sliding window generator, which constructs a dataset comprising historical state information alongside the corresponding target SOH values. The raw data earmarked for online training of the student model, in contrast, is resampled and then organized into a matrix of dimensions \(C \times W \times H\). The details of this preprocessing procedure are given in Sect. Data pre-processing.

(2) Offline Training of the teacher network. After data preparation, the teacher network is trained offline on the SOH degradation data. All the parameters of the teacher network are iteratively updated by the Adam optimizer using the teacher loss as the optimization objective. The offline training algorithm is summarized as Algo. 1.

(3) Online Training of the student network. After offline training, the parameters of the pre-trained teacher network are frozen, and the student network is then trained on the raw data arranged into input matrices. The online training process can be seen in Fig. 5. For each batch, the output series of the student network is sent to the teacher network as input. Then, the total loss \(\mathcal {L}_{total}\) is calculated from the soft loss \(\mathcal {L}_{soft}\) (calculated using the outputs of the student network \(\tilde{y}_{S}\) and the teacher network \(\hat{y}_{T}\)) and the hard loss \(\mathcal {L}_{hard}\) (calculated using the outputs of the student network \(\tilde{y}_{S}\) and the ground truth y). After that, the parameters of the student network are iteratively updated by the Adam optimizer to minimize the total loss \(\mathcal {L}_{total}\). The online training algorithm is summarized as Algo. 2.

(4) SOH Prediction. After online training of the student network, the trained model is used to predict the SOHs of other batteries. During prediction, the raw data, arranged into input matrices in the same way, is fed to the model to obtain the prediction.
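The online training stage in step (3) can be illustrated with a deliberately small toy example. Everything in the sketch below is hypothetical: the student is a two-parameter linear model instead of a CNN, `frozen_teacher` is a hand-written stand-in for the pre-trained TransformerEncoder (it assumes SOH drops by a fixed 0.04 per step), and plain gradient descent with finite-difference gradients replaces Adam and backpropagation. The convex weighting by `alpha` is likewise an assumption. The point is only the data flow: student output, frozen teacher, soft and hard loss, update of the student alone.

```python
from typing import List, Sequence

DROP_PER_STEP = 0.04  # hypothetical degradation prior held by the teacher


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def frozen_teacher(window: Sequence[float]) -> float:
    """Stand-in teacher: next SOH = last SOH minus a fixed drop (never updated)."""
    return window[-1] - DROP_PER_STEP


def total_loss(params: Sequence[float], xs: Sequence[float],
               ys: Sequence[float], alpha: float = 0.5, window: int = 3) -> float:
    w, b = params
    preds = [w * x + b for x in xs]                       # student forward pass
    hard = mse(preds, ys)                                 # against ground truth
    soft_labels = [frozen_teacher(preds[i:i + window])    # teacher reads student output
                   for i in range(len(preds) - window)]
    soft = mse(soft_labels, preds[window:])
    return alpha * hard + (1 - alpha) * soft              # assumed weighting


def train_student(xs: Sequence[float], ys: Sequence[float],
                  epochs: int = 500, lr: float = 0.5, h: float = 1e-6) -> List[float]:
    params = [0.0, 0.0]                                   # only student parameters
    for _ in range(epochs):
        grads = []
        for i in range(len(params)):
            up, down = list(params), list(params)
            up[i] += h
            down[i] -= h
            # Central finite difference in place of backpropagation.
            grads.append((total_loss(up, xs, ys) - total_loss(down, xs, ys)) / (2 * h))
        params = [p - lr * g for p, g in zip(params, grads)]
    return params
```

For a series that falls linearly from 1.0 to 0.8 over six evenly spaced inputs, both loss terms agree on the same slope, so the student recovers it; with noisy labels the soft term would pull the student towards the teacher’s prior.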

Datasets and experimental details

Data description

The data used in this paper are collected from two different databases: the NASA Ames Prognostics Data Repository [51] (6 cells were selected) and the Oxford Battery Degradation Dataset [52] (8 cells were selected). The two sets of cells were run under different charging/discharging protocols, which are summarized in Table 2. The details are as follows:

NASA: Six batteries (serial numbers B5, B6, B7, B29, B30, and B31) were subjected to numerous charge/discharge cycles to accelerate the aging of the batteries. During charging, a constant current (CC) to constant voltage (CV) procedure was followed. The CC discharge setting was used until the voltage for the various cells dropped to a specific level.

Oxford: Oxford University used eight Kokam (SLPB533459H4) lithium-ion pouch cells (OX–x, where x denotes the battery number) with a nominal capacity of 0.74 Ah to study long-term battery degradation. To simulate urban driving conditions, the eight batteries were repeatedly charged at 2C and discharged using dynamic load profiles in a thermal chamber at \(40\,^{\circ }\text{C}\). To determine the retained capacity, the batteries were tested using the CC cycling technique every 100 charge/discharge cycles.

Data pre-processing

During the offline training phase, the teacher network processed standardized SOH series data via a sliding window technique, as depicted in Fig. 6. This method reshaped the input data into a matrix of dimensions \(L\times (N-L)\), with L being the chosen window size of 3 steps and N the data length, to capture temporal dependencies for prediction. The window slides through the series with a step size of one, generating input–output pairs by taking the values within the window as inputs and the immediately following value as the output. Both the teacher and student networks utilized this data, partitioned into training and testing sets through the Leave-One-Out method for pre-training and performance evaluation of the teacher model. Similarly, the student model’s output at each epoch was processed through this sliding window mechanism before being evaluated by the teacher network, facilitating an integrated training approach.
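The sliding window generator described above can be sketched as follows. The function name is illustrative; the window size of 3 and the step size of one follow the text, and a series of length N yields N − L input–output pairs.

```python
from typing import List, Sequence, Tuple


def sliding_windows(
    series: Sequence[float], window: int = 3
) -> Tuple[List[List[float]], List[float]]:
    """Split an SOH series into (inputs, targets) with a step size of one.

    Each input holds `window` consecutive values; its target is the value
    that immediately follows the window.
    """
    if len(series) <= window:
        raise ValueError("series must be longer than the window size")
    n_pairs = len(series) - window
    inputs = [list(series[i:i + window]) for i in range(n_pairs)]
    targets = [series[i + window] for i in range(n_pairs)]
    return inputs, targets
```

For example, a series of five SOH values with a window of 3 produces two pairs: the first three values predict the fourth, and values two to four predict the fifth.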

The data generation process is illustrated in Fig. 7. In each input sample, each feature consists of a sequence of \(W \times H\) consecutive data points extracted from the kth charging cycle, where k ranges from 1 to the total number of charging cycles recorded for the current battery. To facilitate network input, each input block is structured as a \(C\times W\times H\) matrix, where C denotes the number of features. Following sample generation, the data underwent standardization. Subsequently, the samples were divided into a training set and a testing set using the Leave-One-Out method: the target battery was designated as the testing set, while the remaining batteries constituted the training set. The student model was trained on the training set and validated using the testing set.
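The two steps above, arranging each cycle into a \(C\times W\times H\) block and splitting batteries by Leave-One-Out, can be sketched as below. The function names are illustrative, and the row-major fill order of each \(W \times H\) slice is an assumption, since the text does not state how the consecutive points are laid out.

```python
from typing import Iterator, List, Sequence, Tuple


def to_block(
    features: Sequence[Sequence[float]], width: int, height: int
) -> List[List[List[float]]]:
    """Arrange C feature sequences of W*H points each into a C x W x H block."""
    block = []
    for seq in features:
        if len(seq) != width * height:
            raise ValueError("each feature needs exactly width * height points")
        # Assumed row-major layout: W rows of H consecutive points.
        block.append([list(seq[r * height:(r + 1) * height]) for r in range(width)])
    return block


def leave_one_out(cells: Sequence[str]) -> Iterator[Tuple[List[str], str]]:
    """Yield (training cells, target cell) with each battery held out once."""
    for target in cells:
        yield [c for c in cells if c != target], target
```

Each battery serves once as the testing set, so a dataset with eight cells gives eight train/test splits.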

Evaluation metrics

MAE and RMSE are used to evaluate the error of the estimated SOH. With the predicted SOH denoted as \({\tilde{y}}^{(k)}\) and the true SOH denoted as \(y^{(k)}\), the RMSE and MAE between them are given by Eqs. 15 and 16:

RMSE calculates the square root of the average squared differences between predicted and actual values, emphasizing larger errors. It’s sensitive to outliers and commonly used in regression analysis, with a lower RMSE indicating better model performance. MAE computes the average of absolute differences between predictions and actual values, treating all errors equally and being less affected by outliers. A lower MAE suggests improved accuracy. Both RMSE and MAE are key metrics for evaluating model accuracy and guiding model selection and calibration.
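Following these standard definitions, the two metrics can be computed as in the short sketch below (function names are illustrative):

```python
import math
from typing import Sequence


def rmse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Root mean squared error: square root of the mean squared difference."""
    if len(pred) != len(true):
        raise ValueError("sequences must have the same length")
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(pred))


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error: mean of the absolute differences."""
    if len(pred) != len(true):
        raise ValueError("sequences must have the same length")
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(pred)
```

When a single sample carries all the error, the RMSE exceeds the MAE, which reflects its greater sensitivity to large deviations.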

To further substantiate the efficacy of the proposed cross-modal K-D framework, two additional evaluation metrics are introduced, namely the direct gain and the effective gain of knowledge embedding. These metrics indicate the performance improvement achieved by the K-D strategy compared with a baseline CNN model. The direct gain and effective gain are computed from the metric results obtained from the baseline model (\(\zeta _{1}\)) and the proposed framework (\(\zeta _{2}\)), as well as the results from the proposed teacher network (\(\zeta _{3}\)), according to the following equations:

The direct gain (\(Direct\ Gain\)) is calculated as the difference between the metric results of the baseline model and the proposed framework, as expressed by Eq. 17. It captures the direct improvement achieved by utilizing the framework.

Moreover, the effective gain (\(Effective\ Gain\)) is determined by normalizing the direct gain with respect to the results obtained from the proposed teacher network. This normalization allows us to assess the performance enhancement achieved by considering the knowledge distillation process. Equation 18 presents the calculation for the effective gain.
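Read literally, the two definitions amount to a subtraction and a division. The sketch below assumes the direct gain is \(\zeta _{1} - \zeta _{2}\) and the effective gain is that difference divided by \(\zeta _{3}\); the exact form of the normalization is an assumption, and the numbers in the usage note are placeholders, not values from the paper’s tables.

```python
def direct_gain(zeta_baseline: float, zeta_proposed: float) -> float:
    """Error reduction of the proposed framework relative to the baseline CNN."""
    return zeta_baseline - zeta_proposed


def effective_gain(
    zeta_baseline: float, zeta_proposed: float, zeta_teacher: float
) -> float:
    """Direct gain normalized by the teacher network's metric (assumed form)."""
    if zeta_teacher == 0:
        raise ValueError("teacher metric must be non-zero")
    return direct_gain(zeta_baseline, zeta_proposed) / zeta_teacher
```

With placeholder RMSEs of 0.02 (baseline), 0.01 (proposed), and 0.005 (teacher), the direct gain would be 0.01 and the effective gain 2.0. Since RMSE and MAE are errors, a positive gain means the proposed framework has the lower error.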

These evaluation metrics, direct gain and effective gain, provide deeper insight into the performance improvement resulting from the cross-modal K-D framework. The next section presents and discusses the results.

Results and discussions

In this section, the Oxford and NASA datasets are used to validate the effectiveness of the proposed network. First, the coefficient \(\alpha \) for the total loss function and the evaluation criteria are given. Then, the performance of the proposed model is compared with the CNN, LSTM, and BiLSTM-Attention models, and the improvements of the proposed network in terms of RMSE and MAE are analyzed.

Coefficient selection for the loss function

In the optimization of deep learning models, the careful selection of hyperparameters, especially penalty terms within the loss function, is critical. These terms are instrumental in balancing the contributions of various losses, thereby directly influencing the model’s learning behavior and performance outcomes. This is particularly relevant in contexts involving multi-task learning or multiple loss functions, where penalty coefficients are essential for modulating the impact of each loss component. Furthermore, they serve to prevent overfitting by managing the model’s complexity via regularization strategies. The integration of prior knowledge through these penalty terms also facilitates guided learning, enhancing model efficacy.

In this study, the total loss function, delineated in Sect. Loss functions, incorporates aspects of the SOH degradation trend as prior knowledge, orienting the student model towards optimal learning trajectories. The empirical selection of the penalty coefficient \(\alpha \) is thus pivotal for augmenting model performance and generalization. An experimental evaluation was conducted using seven distinct values of \(\alpha \) to assess their impact on SOH estimation accuracy within the Oxford dataset. The results, presented in Table 3, demonstrated that an \(\alpha \) value of \(\frac{1}{2}\) achieved superior performance, consistently yielding RMSEs below 0.0105 across all tested batteries.

To visually articulate these findings, the prediction results for Cell1 are showcased, illustrating that the SOH estimation curve corresponding to \(\alpha = \frac{1}{2}\) most closely mirrors the actual SOH trajectory. This visual comparison, from Figs. 8a to 9c, alongside the evaluation metrics presented in Fig. 9d, underscores the efficacy of \(\alpha = \frac{1}{2}\) as the optimal penalty coefficient. Consequently, this value was selected for the loss function, significantly enhancing the learning direction and generalization capability of the model in the context of SOH estimation.

Verification of the cross-modal K–D strategy

This section conducts a comparative analysis of prominent SOH estimation methodologies, including CNN, LSTM, and BiLSTM-Attention, to elucidate the efficacy of the proposed model in assessing the SOH of LIBs. Employing two distinct datasets, specifically the NASA and Oxford datasets, this analysis facilitates a comprehensive evaluation. Reference to the Oxford dataset, in particular, allows for the illustration of estimation outcomes across four different models, as depicted in Fig. 11. Subsequent figures, (10a) through (11d), juxtapose actual SOH values against predictions from each model across the lifespan of various cells. The proposed model achieves superior alignment with actual SOH values, outperforming CNN, LSTM, and BiLSTM-Attention, which nonetheless demonstrate commendable capabilities in feature extraction and time-series data processing, respectively. The integration of CNN’s multi-scale feature extraction with the TransformerEncoder’s insights into SOH trend variations enables the proposed model to surpass the performance of individual models and the previously dominant BiLSTM-Attention.

Quantitative evaluations, presented in Table 4, use RMSE and MAE as metrics to assess the precision of SOH predictions for the Oxford dataset. Notably, Cell7’s predictions are the most accurate, with RMSE and MAE values of 0.0065 and 0.0050, respectively. The differences in RMSE and MAE between the proposed model and the other models across cells indicate significant performance improvements, though the results for Cell5 suggest anomalies, potentially due to data collection errors or unique degradation patterns.

Further validation of the proposed model’s effectiveness is evidenced through direct and effective gain calculations, as outlined in Tables 5 and 6, comparing it against the baseline CNN model. The proposed model exhibits positive gains in most cases, affirming the cross-modal K–D strategy’s impact. However, exceptions like B0031, with negligible direct and effective gains, indicate performance parity between models. Overall, the proposed cross-modal K–D strategy confirms its capability for precise SOH estimation, significantly enhancing accuracy as demonstrated by reduced RMSE and MAE metrics.

To ascertain the statistical significance of these improvements, a Shapiro-Wilk test is first applied to evaluate the normality of the data distribution, which determines the appropriate significance testing method. The Shapiro-Wilk test assesses how well the order statistics of the data agree with those expected from a normal distribution. This analysis is pivotal for choosing a statistically valid method to confirm the significance of the improvements.
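For reference, the textbook form of the Shapiro-Wilk statistic, written with the symbols defined in the following paragraph, is:

\[ W = \frac{\left( \sum _{i=1}^{n} a_i x_{(i)}\right) ^{2}}{\sum _{i=1}^{n}\left( x_i - \bar{x}\right) ^{2}} \]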

where n is the sample size (i.e., the number of data points), \(x_{(i)}\) represents the ith observation ordered from smallest to largest, \(a_i\) is a coefficient based on the sample size and the normal distribution, usually obtained by a dedicated algorithm, \(\bar{x}\) is the mean of the sample, and W is the test statistic, which ranges from 0 to 1, with a value close to 1 meaning that the data are more likely to come from a normal distribution. The results are shown in Table 7.

The Shapiro-Wilk test results indicate that, for the RMSE data, the differences between the proposed model and the LSTM, CNN, and BiLSTM-Attention models are not normally distributed (\(p < 0.05\)). For the CNN-LSTM model, however, the p-value is above 0.05, which suggests that the differences might be normally distributed.

For the MAE data, the differences between the proposed model and the CNN model are not normally distributed (\(p < 0.05\)). For the LSTM, BiLSTM-Attention, and CNN-LSTM models, the p-values are either very close to or below 0.05, which may suggest non-normal distribution.

Given these results, it would be more appropriate to use non-parametric tests for most comparisons since the assumption of normality is not met. The Wilcoxon signed-rank test [53] is a suitable choice for these comparisons. The statistic W is calculated as follows: For each pair of matched observations, calculate their difference:

where \(X_i\) and \(Y_i\) are the two observations in the \(i^{th}\) pair, and \(D_i\) is their difference. All cases where \(D_i=0\) are removed, as these indicate that the two samples yielded the same result for the \(i^{th}\) pair of observations. Next, all non-zero differences are sorted by their absolute values \(\left| D_i\right| \) and assigned ranks, with the smallest absolute difference receiving rank 1, and so on. If there are ties (equal absolute differences), each tied value receives the average of the ranks it would have received had it not been tied. Finally, the statistic W can be calculated as:

The Wilcoxon signed-rank test results, shown in Table 8, indicate that the differences between the proposed model and each of the other models are statistically significant for both the RMSE and MAE metrics, with all p-values being less than the standard alpha level of 0.05. This suggests that the improvements of the proposed model over the other models are statistically significant.
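The ranking procedure of the Wilcoxon signed-rank test described earlier can be sketched in plain Python. The function name is illustrative, and because conventions for the final statistic differ, the sketch returns the positive rank sum, the negative rank sum, and their minimum (one common choice for W) without assuming which one the paper reports. It does not compute a p-value.

```python
from typing import List, Sequence, Tuple


def wilcoxon_rank_sums(
    xs: Sequence[float], ys: Sequence[float]
) -> Tuple[float, float, float]:
    """Return (W_plus, W_minus, min of both) for paired samples."""
    # Step 1: paired differences, dropping zero differences.
    diffs = [x - y for x, y in zip(xs, ys) if x - y != 0]

    # Step 2: order by absolute difference.
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))

    # Step 3: assign ranks, giving tied values the average of their ranks.
    ranks: List[float] = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1       # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1

    # Step 4: sum ranks separately for positive and negative differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus, min(w_plus, w_minus)
```

In practice, a statistics library would normally be used to obtain both the statistic and the p-value; the sketch only makes the ranking and tie handling explicit.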

SOH estimation with limited initial data

In the realm of Li-ion battery SOH prediction within practical contexts, challenges often arise due to the limited availability of early-stage charging data, such as access to merely the initial 40% of degradation data. A pivotal metric for gauging a predictive model’s efficacy is its precision in forecasting the remaining SOH trajectory based solely on this early-stage data.

Given the heterogeneity across battery types, fine-tuning methodologies are employed to tailor the model’s parameters, thereby optimizing its predictive performance for each specific battery’s unique characteristics and behavior. This customization enhances the model’s accuracy and reliability in SOH estimation.

This study conducts experimental evaluations across various scenarios, focusing on predicting subsequent SOH segments using differing proportions of initial data from Cell1 in the Oxford dataset. The experiments utilize 10%, 30%, 50%, and 70% of initial SOH degradation data to predict the subsequent 90%, 70%, 50%, and 30% of SOH, respectively. The aim is to ascertain the model’s performance and effectiveness under these conditions. The model is initially trained on the specified early-stage data and subsequently fine-tuned using a pre-established model trained on comparable charging and discharging protocols. The latter-stage data serve as the testing set.

Results, depicted in Fig. 12, demonstrate that the predicted curves consistently mirror the actual SOH trends, underscoring the model’s capability to forecast SOH from partial data. RMSE values of 0.0109, 0.0081, 0.0091, and 0.0095 were observed when using 10%, 30%, 50%, and 70% of the initial data, respectively. All but the 10% case fall below 0.0100, and even that case exceeds the threshold only slightly. This indicates the model’s proficiency in accurately projecting SOH trajectories based exclusively on initial degradation data segments.

Model robustness analysis

In operational environments, data collection is often compromised by factors such as operational discrepancies, instrumental inaccuracies, and environmental perturbations, resulting in noisy data. To evaluate the model’s robustness against such noise, interference noise, specifically a Gaussian random variable with a zero mean, is superimposed on each feature data point prior to online training.
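The noise injection described above can be sketched as follows. The function name, the `sigma` parameter, and the optional seed are assumptions made for illustration; the text specifies a zero-mean Gaussian but not its standard deviation.

```python
import random
from typing import List, Optional, Sequence


def add_gaussian_noise(
    values: Sequence[float], sigma: float, seed: Optional[int] = None
) -> List[float]:
    """Superimpose zero-mean Gaussian noise on every feature data point.

    `sigma` (the noise standard deviation) is a free choice here because
    the noise level is not stated in the text.
    """
    rng = random.Random(seed)   # local generator so results are reproducible
    return [v + rng.gauss(0.0, sigma) for v in values]
```

Applying the function to each feature sequence before online training reproduces the setup described above; fixing the seed makes noisy and clean runs directly comparable.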

Table 9 presents the outcomes of this noise addition. Analyzing RMSE metrics on the Oxford dataset, the proposed model demonstrated resilience, maintaining low RMSE values across all cells both pre- and post-noise introduction. Notably, in Cells 3 and 5, where other models exhibited significant RMSE fluctuations, the proposed model showed minimal variation. Similarly, on the NASA dataset, the proposed model’s RMSE values remained stable post-noise addition, particularly in cells B0007, B0029, B0030, and B0031, surpassing the noise resistance of competing models. MAE metrics paralleled the RMSE findings, with the proposed model exhibiting consistent stability against noise interference.

In summary, the proposed model evidenced robust performance, sustaining low RMSE and MAE levels on both datasets irrespective of noise introduction. This underscores its capability to efficiently mitigate the impact of data noise.

Model complexity

In the realm of online SOH prediction, model size is of paramount importance alongside accuracy. Accuracy benchmarks the model’s performance, while model size, denoted by the parameter count or computational resource demand, is critical for ensuring efficient, real-time predictions. The significance of model size transcends mere accuracy assessments, affecting the model’s practical deployment, feasibility, and computational efficiency. Therefore, a dual focus on optimizing both accuracy and model size is imperative for the development of scalable and effective SOH prediction solutions.

Within the context of K-D, emphasis is placed on the online training phase of the student model, as it is directly trained with the specific dataset. Conversely, the complexity of the teacher model, which is pre-trained offline, is less of a concern, since its training does not rely on the current dataset and its training cost is not incurred during the distillation process. The crux of K-D lies in efficiently transferring knowledge from the teacher to the student model, ensuring the latter’s performance and efficiency during online training.

Using the Oxford dataset, which includes two features with 3200 data points each, as an illustrative example, the output size of each layer is detailed in Table 10. The model, encompassing 139,925 parameters and leveraging the provided features, presents a streamlined yet effective framework for SOH learning and prediction in LIBs.

To further evaluate the complexity of the proposed model, the same data is used to calculate the total parameters of different models. The experimental results are shown in Table 11. It is evident that the size and the number of parameters of the proposed network are both the lowest among the compared models. This indicates that the model not only exhibits better evaluation performance than other methods but is also lighter in terms of computational resources, which is crucial for online SOH estimation.

Limitations

Despite the commendable performance of the proposed framework in SOH prediction, some limitations should be noted. Primarily, the efficacy of this methodology is contingent upon the quality and volume of the training data. In contexts where SOH datasets are sparse, contaminated with noise, or significantly imbalanced, the model’s performance could be compromised. Future research might benefit from exploring advanced data augmentation strategies or semi-supervised learning methods to mitigate these issues. Furthermore, the decision to employ TransformerEncoders as teacher models and CNNs as student models was predicated on their established proficiency in processing time-series and spatial data, respectively. Nonetheless, this configuration may not be the most effective approach across all SOH prediction scenarios. Investigating alternative architectures or combinations thereof for both teacher and student models may reveal more effective or more precise predictive solutions.

Conclusion

This study introduces a novel cross-modal learning framework leveraging a K-D strategy for SOH estimation in LIBs. A Transformer Encoder model, acting as the teacher, elucidates battery capacity deterioration mechanisms, while a CNN serves as the student model, learning the correlation between external battery measurements and SOH predictions. This approach employs a cross-modal K-D strategy to effectively utilize prior knowledge within the SOH prediction model.

Empirical simulations reveal significant findings: the proposed framework outperforms conventional models such as CNN, LSTM, and BiLSTM-Attention, reducing RMSE and MAE by upwards of 25%, with cases exceeding 94%. This demonstrates the model’s superior accuracy in SOH forecasting for LIBs. Additionally, the cross-modal K-D strategy shows notable efficacy, evidenced by positive direct and effective gains in most tested batteries, except for a comparable performance on NASA’s B0031 battery, highlighting the strategy’s advantage in predictive precision. Significance testing confirms these improvements as statistically significant.

The model also features reduced complexity with only 139,925 parameters, offering computational efficiency without sacrificing robustness or accuracy, even under noisy conditions. This underscores the model’s suitability for real-world applications where noise is prevalent.

For future research, the authors will focus on creating a data-model-driven network to further enhance performance and interpretability, and applying transfer learning to adapt the model for batteries under diverse charging/discharging conditions. These efforts aim to advance reliable, efficient, and intelligent LIB management systems, contributing to the dynamic field of energy, including the Electric Vehicle (EV) industry, energy storage systems, and portable electronics.

Data Availability

Data is openly available in public repositories.

References

Khaleghi S, Hosen MS, Karimi D, Behi H, Beheshti SH, Van Mierlo J, Berecibar M (2022) Developing an online data-driven approach for prognostics and health management of lithium-ion batteries. Appl Energy 308:118348. https://doi.org/10.1016/j.apenergy.2021.118348

Scrosati B, Hassoun J, Sun Y-K (2011) Lithium-ion batteries: a look into the future. Energy Environ Sci 4:3287–3295. https://doi.org/10.1039/C1EE01388B

Li Y, Vilathgamuwa M, Choi SS, Xiong B, Tang J, Su Y, Wang Y (2020) Design of minimum cost degradation-conscious lithium-ion battery energy storage system to achieve renewable power dispatchability. Appl Energy 260:114282. https://doi.org/10.1016/j.apenergy.2019.114282

Jing W, Lai CH, Wong WSH, Wong MLD (2018) A comprehensive study of battery-supercapacitor hybrid energy storage system for standalone pv power system in rural electrification. Appl Energy 224:340–356. https://doi.org/10.1016/j.apenergy.2018.04.106

Etacheri V, Marom R, Elazari R, Salitra G, Aurbach D (2011) Challenges in the development of advanced li-ion batteries: a review. Energy Environ Sci 4:3243–3262. https://doi.org/10.1039/C1EE01598B

Qian C, Xu B, Xia Q, Ren Y, Sun B, Wang Z (2023) Soh prediction for lithium-ion batteries by using historical state and future load information with an am-seq2seq model. Appl Energy 336:120793. https://doi.org/10.1016/j.apenergy.2023.120793

Li Y, Li K, Liu X, Li X, Zhang L, Rente B, Sun T, Grattan KTV (2022) A hybrid machine learning framework for joint soc and soh estimation of lithium-ion batteries assisted with fiber sensor measurements. Appl Energy 325:119787. https://doi.org/10.1016/j.apenergy.2022.119787

Li Y, Sheng H, Cheng Y, Stroe D-I, Teodorescu R (2020) State-of-health estimation of lithium-ion batteries based on semi-supervised transfer component analysis. Appl Energy 277:115504. https://doi.org/10.1016/j.apenergy.2020.115504

Li J, Adewuyi K, Lotfi N, Landers RG, Park J (2018) A single particle model with chemical/mechanical degradation physics for lithium ion battery state of health (soh) estimation. Appl Energy 212:1178–1190. https://doi.org/10.1016/j.apenergy.2018.01.011

Wen J, Chen X, Li X, Li Y (2022) Soh prediction of lithium battery based on ic curve feature and bp neural network. Energy 261:125234. https://doi.org/10.1016/j.energy.2022.125234

Qu J, Liu F, Ma Y, Fan J (2019) A neural-network-based method for rul prediction and soh monitoring of lithium-ion battery. IEEE Access 7:87178–87191. https://doi.org/10.1109/ACCESS.2019.2925468

Andre D, Appel C, Soczka-Guth T, Sauer DU (2013) Advanced mathematical methods of soc and soh estimation for lithium-ion batteries. J Power Sour 224:20–27. https://doi.org/10.1016/j.jpowsour.2012.10.001

Yang S, Zhang C, Jiang J, Zhang W, Zhang L, Wang Y (2021) Review on state-of-health of lithium-ion batteries: characterizations, estimations and applications. J Clean Prod 314:128015. https://doi.org/10.1016/j.jclepro.2021.128015

Ouyang M, Feng X, Han X, Lu L, Li Z, He X (2016) A dynamic capacity degradation model and its applications considering varying load for a large format li-ion battery. Appl Energy 165:48–59. https://doi.org/10.1016/j.apenergy.2015.12.063

Khodadadi Sadabadi K, Ramesh P, Tulpule P, Rizzoni G (2019) Design and calibration of a semi-empirical model for capturing dominant aging mechanisms of a pba battery. J Energy Storage 24:100789. https://doi.org/10.1016/j.est.2019.100789

Singh P, Chen C, Tan CM, Huang S-C (2019) Semi-empirical capacity fading model for soh estimation of li-ion batteries. Appl Sci 9(15). https://doi.org/10.3390/app9153012

Ge M-F, Liu Y, Jiang X, Liu J (2021) A review on state of health estimations and remaining useful life prognostics of lithium-ion batteries. Measurement 174:109057. https://doi.org/10.1016/j.measurement.2021.109057

Duong PLT, Raghavan N (2018) Heuristic kalman optimized particle filter for remaining useful life prediction of lithium-ion battery. Microelectron Reliab 81:232–243. https://doi.org/10.1016/j.microrel.2017.12.028

El Mejdoubi A, Chaoui H, Gualous H, Van Den Bossche P, Omar N, Van Mierlo J (2019) Lithium-ion batteries health prognosis considering aging conditions. IEEE Trans Power Electron 34(7):6834–6844. https://doi.org/10.1109/TPEL.2018.2873247

Bertinelli Salucci C, Bakdi A, Glad IK, Vanem E, De Bin R (2022) Multivariable fractional polynomials for lithium-ion batteries degradation models under dynamic conditions. J Energy Storage 52:104903. https://doi.org/10.1016/j.est.2022.104903

Su X, Wang S, Pecht M, Zhao L, Ye Z (2017) Interacting multiple model particle filter for prognostics of lithium-ion batteries. Microelectron Reliab 70:59–69. https://doi.org/10.1016/j.microrel.2017.02.003

Xiong R, Li L, Li Z, Yu Q, Mu H (2018) An electrochemical model based degradation state identification method of lithium-ion battery for all-climate electric vehicles application. Appl Energy 219:264–275. https://doi.org/10.1016/j.apenergy.2018.03.053

Zheng L, Zhang L, Zhu J, Wang G, Jiang J (2016) Co-estimation of state-of-charge, capacity and resistance for lithium-ion batteries based on a high-fidelity electrochemical model. Appl Energy 180:424–434. https://doi.org/10.1016/j.apenergy.2016.08.016

Doyle M, Fuller TF, Newman J (1993) Modeling of galvanostatic charge and discharge of the lithium/polymer/insertion cell. J Electrochem Soc 140(6):1526. https://doi.org/10.1149/1.2221597

Liu K, Zou C, Li K, Wik T (2018) Charging pattern optimization for lithium-ion batteries with an electrothermal-aging model. IEEE Trans Ind Inform 14(12):5463–5474. https://doi.org/10.1109/TII.2018.2866493

Wang Y, Tian J, Sun Z, Wang L, Xu R, Li M, Chen Z (2020) A comprehensive review of battery modeling and state estimation approaches for advanced battery management systems. Renew Sustain Energy Rev 131:110015. https://doi.org/10.1016/j.rser.2020.110015

Zhang J, Wang P, Gong Q, Cheng Z (2021) Soh estimation of lithium-ion batteries based on least squares support vector machine error compensation model. J Power Electron 21(11):1712–1723. https://doi.org/10.1007/s43236-021-00307-8

Álvarez Antón JC, García Nieto PJ, Blanco Viejo C, Vilán Vilán JA (2013) Support vector machines used to estimate the battery state of charge. IEEE Trans Power Electron 28(12):5919–5926. https://doi.org/10.1109/TPEL.2013.2243918

Li Y, Zou C, Berecibar M, Nanini-Maury E, Chan JC-W, van den Bossche P, Van Mierlo J, Omar N (2018) Random forest regression for online capacity estimation of lithium-ion batteries. Appl Energy 232:197–210. https://doi.org/10.1016/j.apenergy.2018.09.182

Feng H, Shi J (2022) Research on battery life prediction method based on gaussian process regression. In: 2022 International Conference on Image Processing, Computer Vision and Machine Learning (ICICML), pp. 313–318. https://doi.org/10.1109/ICICML57342.2022.10009883

Chemali E, Kollmeyer PJ, Preindl M, Emadi A (2018) State-of-charge estimation of li-ion batteries using deep neural networks: a machine learning approach. J Power Sour 400:242–255. https://doi.org/10.1016/j.jpowsour.2018.06.104

Chemali E, Kollmeyer PJ, Preindl M, Fahmy Y, Emadi A (2022) A convolutional neural network approach for estimation of li-ion battery state of health from charge profiles. Energies 15(3). https://doi.org/10.3390/en15031185

Park K, Choi Y, Choi WJ, Ryu H-Y, Kim H (2020) Lstm-based battery remaining useful life prediction with multi-channel charging profiles. IEEE Access 8:20786–20798. https://doi.org/10.1109/ACCESS.2020.2968939

Chen D, Hong W, Zhou X (2022) Transformer network for remaining useful life prediction of lithium-ion batteries. IEEE Access 10:19621–19628. https://doi.org/10.1109/ACCESS.2022.3151975

Gong L-H, Pei J-J, Zhang T-F, Zhou N-R (2024) Quantum convolutional neural network based on variational quantum circuits. Opt Commun 550:129993. https://doi.org/10.1016/j.optcom.2023.129993

Tian J, Chen C, Shen W, Sun F, Xiong R (2023) Deep learning framework for lithium-ion battery state of charge estimation: recent advances and future perspectives. Energy Storage Mater 61:102883. https://doi.org/10.1016/j.ensm.2023.102883

Zhou N-R, Zhang T-F, Xie X-W, Wu J-Y (2023) Hybrid quantum-classical generative adversarial networks for image generation via learning discrete distribution. Signal Process Image Commun 110:116891. https://doi.org/10.1016/j.image.2022.116891

Jovanovic L, Jovanovic D, Bacanin N, Jovancai Stakic A, Antonijevic M, Magd H, Thirumalaisamy R, Zivkovic M (2022) Multi-step crude oil price prediction based on lstm approach tuned by salp swarm algorithm with disputation operator. Sustainability 14(21). https://doi.org/10.3390/su142114616

Pilcevic D, Djuric Jovicic M, Antonijevic M, Bacanin N, Jovanovic L, Zivkovic M, Dragovic M, Bisevac P (2023) Performance evaluation of metaheuristics-tuned recurrent neural networks for electroencephalography anomaly detection. Front Physiol 14. https://doi.org/10.3389/fphys.2023.1267011

Bacanin N, Stoean C, Zivkovic M, Rakic M, Strulak-Wójcikiewicz R, Stoean R (2023) On the benefits of using metaheuristics in the hyperparameter tuning of deep learning models for energy load forecasting. Energies 16(3). https://doi.org/10.3390/en16031434

Song X, Yang F, Wang D, Tsui K-L (2019) Combined cnn-lstm network for state-of-charge estimation of lithium-ion batteries. IEEE Access 7:88894–88902. https://doi.org/10.1109/ACCESS.2019.2926517

Mousapour Mamoudan M, Ostadi A, Pourkhodabakhsh N, Fathollahi-Fard AM, Soleimani F (2023) Hybrid neural network-based metaheuristics for prediction of financial markets: a case study on global gold market. J Comput Design Eng 10(3):1110–1125. https://doi.org/10.1093/jcde/qwad039

Guo Y, Yang D, Zhao K, Wang K (2022) State of health estimation for lithium-ion battery based on bi-directional long short-term memory neural network and attention mechanism. Energy Reports 8:208–215. https://doi.org/10.1016/j.egyr.2022.10.128 (2022 International Conference on the Energy Internet and Energy Interactive Technology)

Hinton G, Vinyals O, Dean J (2015) Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531

Furlanello T, Lipton Z, Tschannen M, Itti L, Anandkumar A (2018) Born again neural networks. In: Dy, J., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 80, pp. 1607–1616. PMLR. https://proceedings.mlr.press/v80/furlanello18a.html

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. In: Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R. (eds.) Advances in neural information processing systems, vol. 30. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

Devlin J, Chang M-W, Lee K, Toutanova K (2018) Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805

Krizhevsky A, Sutskever I, Hinton GE (2017) Imagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90. https://doi.org/10.1145/3065386

Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. In: Bengio, Y., LeCun, Y. (eds.) 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings. http://arxiv.org/abs/1412.6980

Ruder S (2017) An overview of gradient descent optimization algorithms

Saha B, Goebel K (2007) Battery data set. Moffett Field, CA. https://ti.arc.nasa.gov/tech/dash/groups/pcoe/prognostic-data-repository/#battery

Birkl C (2017) Oxford battery degradation dataset 1. University of Oxford

Rey D, Neuhäuser M (2011) Wilcoxon-signed-rank test. In: Lovric, M. (ed.) International encyclopedia of statistical science, pp. 1658–1659. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-04898-2_616

Acknowledgements

This work is supported by the Heilongjiang Provincial Natural Science Foundation (CN) (LH2022F032).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xie, W., Zeng, Y. A knowledge distillation based cross-modal learning framework for the lithium-ion battery state of health estimation. Complex Intell. Syst. 10, 5489–5511 (2024). https://doi.org/10.1007/s40747-024-01458-4

DOI: https://doi.org/10.1007/s40747-024-01458-4