Abstract

Image restoration is a fundamental problem in computer vision whose goal is to recover high-quality images from degraded, low-quality observations. However, the ill-posedness of the restoration problem often leads to artifacts in the results. This motivates us to combine priors so as to restrict the solution space effectively and improve the quality of the restored image. In this paper, a novel hybrid regularization method for image restoration is proposed, which integrates both internal and external image priors into a unified framework. Specifically, a new hybrid regularization model is designed: a total variation (TV) model and a deep image denoiser are inserted into the restoration model through the plug-and-play framework, so that image details are protected while external information is exploited implicitly by the deep denoiser. Moreover, to make the hybrid regularization practical, an adaptive parameter strategy is proposed to balance the TV model and the learned model at each iteration. Experiments show that the proposed method outperforms the compared image restoration techniques.


Introduction

In real scenes, images are affected by noise and blur, which degrades image quality [1]. The image degradation process [2] can usually be described as

\[ y = Ax + n, \tag{1} \]

where y is the observed image, x the underlying high-quality image, A the blur matrix, and n the noise. To improve image quality, model-based methods [3,4,5,6] and learning-based methods [7,8,9,10] are widely used in image restoration.
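As a concrete illustration of Eq. (1), the following minimal NumPy sketch simulates a degraded observation. It assumes that A is a circular (periodic-boundary) convolution with a known, odd-sized kernel and that n is white Gaussian noise; the function name `degrade` is hypothetical and not taken from the paper.

```python
import numpy as np

def degrade(x, kernel, noise_std, rng=None):
    """Simulate y = A x + n, with A a circular convolution (an assumption).

    x         : 2-D float image, e.g. intensities in [0, 1]
    kernel    : odd-sized 2-D blur kernel, assumed to sum to 1
    noise_std : standard deviation of the additive white Gaussian noise n
    """
    kh, kw = kernel.shape
    ch, cw = kh // 2, kw // 2
    blurred = np.zeros(x.shape, dtype=float)
    # A x as a weighted sum of shifted copies of x (periodic boundary).
    # np.roll(x, d)[p] == x[p - d], so this is a convolution, not a correlation.
    for i in range(kh):
        for j in range(kw):
            blurred += kernel[i, j] * np.roll(x, shift=(i - ch, j - cw), axis=(0, 1))
    rng = np.random.default_rng() if rng is None else rng
    return blurred + noise_std * rng.standard_normal(x.shape)

# Usage: blur a random image with a 3 x 3 box kernel and add noise.
rng = np.random.default_rng(0)
x = rng.random((32, 32))
y = degrade(x, np.full((3, 3), 1 / 9), noise_std=0.01, rng=rng)
```

A periodic boundary is chosen here because it matches the FFT-based solvers commonly used for this model; other boundary handling would change A near the image borders.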

In model-based restoration methods, the objective function contains a data fidelity term and a regularization term [11]. The data fidelity term keeps the result consistent with the observed data under the degradation process, while the regularization term introduces prior information into the model for the specific task. Model-based image restoration methods can generally be expressed as the following energy minimization problem [12]:

\[ \hat{x} = \arg\min_{x} E_{\text{data}}(x, y) + \lambda \Phi (x), \tag{2} \]

where x represents the high-quality image, y the degraded image, \(E_{\text{data}}\) the data fidelity term, \(\Phi\) the regularization term, and \(\lambda\) the weight that balances them.

In Eq. (2), the data fidelity term measures the similarity between the observed data and the estimated high-quality image according to the observation model. In recent years, many new fidelity terms [13,14,15,16,17,18,19] have been proposed and have achieved good results. However, image restoration is highly ill-posed, so prior knowledge must be used judiciously. This paper therefore focuses on the prior model, which restricts the solution space and improves reconstruction quality.

The regularization term [20] models prior knowledge and extracts important feature information from the image. It also penalizes solutions that violate the assumed image model, thereby constraining the reconstructed estimate. In [21], Zhou et al. proposed a second-order TV-based coupling model for MR image reconstruction. In [22], Zhang et al. developed a directional-TV iterative algorithm for reconstruction from limited-angular-range data that effectively reduces artifacts. In [23], Sun et al. proposed a fractional Tikhonov framework for imaging with planar array capacitance sensors, with automatic parameter selection to ensure reconstruction accuracy. In [24], Ghulyani et al. designed a generalized Hessian-Schatten norm regularization term and built a new reconstruction model on it. Zhao et al. [25] used a wavelet sparsity-constrained deep image prior, Bhadra et al. [26] applied image-adaptive priors, and Gong et al. [27] applied nonlocal priors to image reconstruction. However, these regularization terms reflect subjective design choices and cannot fully recover image detail.

Although these prior models improve image quality to some extent, they are designed by hand, and their subjective assumptions can suppress some image information, leading to poor restoration performance.

Deep learning methods learn an end-to-end mapping from degraded to high-quality images, and the trained network weights implicitly encode prior knowledge for image restoration. Many learning-based methods [28,29,30,31,32] have been proposed to improve image quality; for example, Shi et al. [28] proposed a real-world character image restoration network, Mei et al. [29] proposed a pyramid attention network, and Mei et al. [31] proposed UIR-Net for underwater image restoration. These methods can produce high-quality images, but they do not effectively exploit the prior information inside the image, and artifacts remain in the reconstructed images.

In summary, model-based methods handle inverse problems with complex degradations flexibly but require carefully designed priors to perform well. Learning-based methods can learn an end-to-end mapping between low- and high-quality images, but they cannot effectively exploit the prior information inside the image. This paper therefore proposes a method that combines the advantages of both approaches for image restoration.

This paper proposes an image restoration method with hybrid regularization inspired by total variation and a deep denoiser prior. Built on the plug-and-play framework, it exploits the strength of the TV prior in preserving image edges and that of the learned prior in preserving image textures, and it uses an adaptive parameter to balance the weights of the two priors. The contributions of this paper are as follows:

(1) An image restoration model based on a hybrid prior with an adaptive parameter is constructed under the plug-and-play framework. Within this framework, the hand-crafted prior and the learned prior jointly constrain the reconstruction process to restore image details effectively.

(2) The TV prior and the fast and flexible denoising convolutional neural network (FFDNet) are introduced. The TV prior preserves edge information and the learned prior preserves texture, so together they protect image details.

(3) To balance the weights of the TV prior and the learned prior, a function based on gradient information is designed to estimate the weight parameter adaptively. For images with sharp outlines, the contribution of TV is increased; otherwise, the contribution of the learned prior is increased.

The remainder of this paper is organized as follows: section “Materials and Methods” introduces the proposed method, section “Experiment” demonstrates its effectiveness through experiments, and section “Conclusion” concludes the paper.

Materials and Methods

This paper proposes an image restoration method with hybrid regularization inspired by the total variation prior and a deep denoiser prior (HRIR for short). In HRIR, the TV prior and the learned prior are introduced to protect image edges and texture, respectively. In addition, a gradient-based method is designed to adaptively estimate the weight parameter that balances the two models.

Proposed reconstruction model

Assume that the observed image y is degraded from the latent high-quality image x. To recover x, the TV-based restoration model is established as follows:

\[ \hat{x} = \arg\min_{x} \frac{1}{2}\|y - Ax\|_2^2 + \lambda \Phi (x), \tag{3} \]

where A is the degradation matrix and \(\lambda \) is the regularization parameter.

In Eq. (3), \(\|y - Ax\|_2^2\) is the data fidelity term, which measures the similarity between y and Ax, and \(\Phi (x)\) is the regularization term. To preserve edge details, the TV model is introduced into Eq. (3), i.e., \(\Phi (x) = \|Dx\|_1\), where D represents the gradient operator. However, the TV model tends to over-smooth images, which is not conducive to preserving texture details. Therefore, another regularization term J(x), representing the prior information introduced by a deep denoising network, is added to the restoration model to supply more information for recovering realistic texture details. The regularization term then becomes \(\Phi (x)=\alpha J(x) + (1 - \alpha )\|Dx\|_1\).
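The TV term \(\|Dx\|_1\) can be made concrete with a short NumPy sketch. The paper does not specify how D is discretized, so the code assumes periodic forward differences in the horizontal and vertical directions and the anisotropic form of TV; `grad`, `grad_adjoint`, and `tv_l1` are hypothetical names. The adjoint \(D^{\top}\) is included because the x-subproblem needs it.

```python
import numpy as np

def grad(x):
    """D x: periodic forward differences (assumed discretization)."""
    dh = np.roll(x, -1, axis=1) - x   # horizontal: x[i, j+1] - x[i, j]
    dv = np.roll(x, -1, axis=0) - x   # vertical:   x[i+1, j] - x[i, j]
    return dh, dv

def grad_adjoint(ph, pv):
    """D^T p: adjoint of grad (a negative backward-difference divergence)."""
    return (np.roll(ph, 1, axis=1) - ph) + (np.roll(pv, 1, axis=0) - pv)

def tv_l1(x):
    """Anisotropic total variation ||D x||_1."""
    dh, dv = grad(x)
    return np.abs(dh).sum() + np.abs(dv).sum()

step = np.zeros((4, 4)); step[:, 2:] = 1.0
print(tv_l1(step))  # 8.0: two unit jumps per row (one from the periodic wrap) x 4 rows
```

An isotropic variant would sum \(\sqrt{(D_h x)^2 + (D_v x)^2}\) per pixel instead; the anisotropic form is used here because it makes the later soft-thresholding step separable.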

Based on the above analysis, a new reconstruction model based on hybrid priors is established as follows:

\[ \hat{x} = \arg\min_{x} \frac{1}{2}\|y - Ax\|_2^2 + \lambda\left[\alpha J(x) + (1 - \alpha)\|Dx\|_1\right], \tag{4} \]

where A is the degradation matrix, and \(\lambda \) and \(\alpha \) are regularization parameters. \(\lambda \) is a fixed constant related to the noise level that constrains the reconstruction process, and \(\alpha \) is an adaptive parameter, updated in each iteration, that balances the weights of the two models.

The optimization

Because Eq. (4) combines a nonsmooth TV term with a prior J that is defined only implicitly by a denoising network, it is difficult to solve directly. Therefore, two auxiliary variables are introduced, and the half-quadratic splitting (HQS) method is used to split Eq. (4) into three subproblems that are solved iteratively, as shown in the HRIR algorithm.

HRIR algorithm

Input: the low-quality image y, the maximum iteration number N, \(\alpha \), \(\lambda \), \(\gamma _1\), \(\gamma _2\);

Output: the high-quality image x;

1. \(k=0\);

2. Use the low-quality image y as the initial image \(x^0\);

3. Calculate the degradation matrix A;

4. \(z_1^0 = x^0, z_2^0 = Dx^0\);

5. for \(k=0:N\)

6. Update \(\alpha \), \(\gamma _1\), \(\gamma _2\);

7. Calculate \(x^{k+1}\) using Eq. (7a);

8. Calculate \(z_1^{k+1}\) using Eq. (7b);

9. Calculate \(z_2^{k+1}\) using Eq. (7c);

10. \(x = x^{k+1}\);

11. \(k=k+1\);

12. end for
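The control flow of the HRIR algorithm can be sketched in Python as below. This is a skeleton under stated assumptions, not the authors' implementation: the three subproblem solvers and the parameter update are passed in as callables (all names are hypothetical), A and \(\lambda\) are assumed to be captured inside those callables (step 3 happens before the loop), and the loop runs `n_iter` times, whereas the listing's `for k = 0:N` would run N + 1 times.

```python
from typing import Callable, Tuple
import numpy as np

Array = np.ndarray

def hrir_loop(
    y: Array,
    n_iter: int,
    grad: Callable[[Array], Tuple[Array, Array]],
    update_params: Callable[[int, Array], Tuple[float, float, float]],
    x_step: Callable[[Array, Array, Tuple[Array, Array], float, float], Array],
    z1_step: Callable[[Array, float, float], Array],
    z2_step: Callable[[Array, float, float], Tuple[Array, Array]],
) -> Array:
    """HQS iterations of the HRIR listing; the solvers are supplied by the caller."""
    x = y.copy()        # step 2: initialize x^0 with the observation y
    z1 = x.copy()       # step 4: z_1^0 = x^0
    z2 = grad(x)        #         z_2^0 = D x^0 (horizontal, vertical parts)
    for k in range(n_iter):
        alpha, gamma1, gamma2 = update_params(k, x)   # step 6
        x = x_step(y, z1, z2, gamma1, gamma2)         # step 7: Eq. (7a)
        z1 = z1_step(x, alpha, gamma1)                # step 8: Eq. (7b), denoiser
        z2 = z2_step(x, alpha, gamma2)                # step 9: Eq. (7c), shrinkage
    return x
```

In a complete implementation, `x_step` would solve Eq. (8) with the FFT, `z1_step` would call a denoiser such as FFDNet, and `z2_step` would apply soft thresholding to \(Dx^{k+1}\).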

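First, two auxiliary variables \(z_1\) and \(z_2\) are introduced, so that Eq. (4) can be rewritten as the constrained problem

\[ \min_{x, z_1, z_2} \frac{1}{2}\|y - Ax\|_2^2 + \lambda\alpha J(z_1) + \lambda(1-\alpha)\|z_2\|_1 \quad \text{s.t.} \quad z_1 = x,\; z_2 = Dx. \tag{5} \]

Following HQS, the constraints in Eq. (5) are replaced by quadratic penalties, which gives the function

\[ \mathcal{L}(x, z_1, z_2) = \frac{1}{2}\|y - Ax\|_2^2 + \lambda\alpha J(z_1) + \lambda(1-\alpha)\|z_2\|_1 + \frac{\gamma_1}{2}\|z_1 - x\|_2^2 + \frac{\gamma_2}{2}\|z_2 - Dx\|_2^2, \tag{6} \]

where \(\gamma _1\) and \(\gamma _2\) are penalty parameters that are updated at each iteration in non-decreasing order.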

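Then, Eq. (6) is minimized alternately over each variable, which splits it into the following subproblems:

\[ x^{k+1} = \arg\min_{x} \frac{1}{2}\|y - Ax\|_2^2 + \frac{\gamma_1}{2}\|z_1^k - x\|_2^2 + \frac{\gamma_2}{2}\|z_2^k - Dx\|_2^2, \tag{7a} \]

\[ z_1^{k+1} = \arg\min_{z_1} \frac{\gamma_1}{2}\|z_1 - x^{k+1}\|_2^2 + \lambda\alpha J(z_1), \tag{7b} \]

\[ z_2^{k+1} = \arg\min_{z_2} \frac{\gamma_2}{2}\|z_2 - Dx^{k+1}\|_2^2 + \lambda(1-\alpha)\|z_2\|_1. \tag{7c} \]

In Eqs. (7a) to (7c), the auxiliary variables \(z_1\) and \(z_2\) separate the data fidelity term and the two regularization terms into subproblems in \(x\), \(z_1\), and \(z_2\), respectively. Figure 1 shows the main steps of the algorithm.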

(a) Solving the x-subproblem

Subproblem (7a) involves the data fidelity term \(\|y - Ax\|_2^2\) and two quadratic (\(L_2\)-norm) penalty terms, so it is a least-squares problem. Thus, Eq. (7a) can be solved by setting \(\frac{\partial F_1}{\partial x} = 0\), where \(F_1(x) = \frac{1}{2}\|y - Ax\|_2^2 + \frac{\gamma_1}{2}\|z_1^k - x\|_2^2 + \frac{\gamma_2}{2}\|z_2^k - Dx\|_2^2\), which gives

\[ \left(A^{\top}A + \gamma_1 I + \gamma_2 D^{\top}D\right)x = A^{\top}y + \gamma_1 z_1^k + \gamma_2 D^{\top}z_2^k. \tag{8} \]

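Under periodic boundary conditions, A and D are block-circulant and are diagonalized by the fast Fourier transform (FFT), so Eq. (8) can be solved in closed form:

\[ x^{k+1} = F^{-1}\left(\frac{\overline{F(A)}\,F(y) + \gamma_1 F(z_1^k) + \gamma_2\,\overline{F(D)}\,F(z_2^k)}{\overline{F(A)}\,F(A) + \gamma_1 + \gamma_2\,\overline{F(D)}\,F(D)}\right), \tag{9} \]

where \(F(\bullet)\) denotes the Fourier transform, \(F^{-1}(\bullet)\) the inverse Fourier transform, and \(\overline{F(\bullet)}\) the complex conjugate of \(F(\bullet)\). Multiplication and division are elementwise, and for \(D = [D_h; D_v]\) the terms involving D are summed over the horizontal and vertical components.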
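A minimal NumPy sketch of the FFT solution of Eq. (8) follows. It assumes periodic boundary conditions, an odd-sized blur kernel `psf` representing A, and \(D = [D_h; D_v]\) built from periodic forward differences, so that \(z_2\) is stored as the pair `z2h`, `z2v`. The helper names `psf2otf`, `diff_otfs`, and `x_update` are hypothetical, not from the paper's code.

```python
import numpy as np

def psf2otf(psf, shape):
    """Zero-pad an odd-sized PSF to `shape`, move its centre to (0, 0), and FFT it."""
    pad = np.zeros(shape)
    kh, kw = psf.shape
    pad[:kh, :kw] = psf
    pad = np.roll(pad, shift=(-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(pad)

def diff_otfs(shape):
    """OTFs of the periodic forward differences D_h and D_v."""
    dh = np.zeros(shape); dh[0, 0], dh[0, -1] = -1.0, 1.0   # x[i, j+1] - x[i, j]
    dv = np.zeros(shape); dv[0, 0], dv[-1, 0] = -1.0, 1.0   # x[i+1, j] - x[i, j]
    return np.fft.fft2(dh), np.fft.fft2(dv)

def x_update(y, z1, z2h, z2v, psf, gamma1, gamma2):
    """Solve (A^T A + g1 I + g2 D^T D) x = A^T y + g1 z1 + g2 D^T z2 in the Fourier domain."""
    FA = psf2otf(psf, y.shape)
    Fh, Fv = diff_otfs(y.shape)
    num = (np.conj(FA) * np.fft.fft2(y)
           + gamma1 * np.fft.fft2(z1)
           + gamma2 * (np.conj(Fh) * np.fft.fft2(z2h) + np.conj(Fv) * np.fft.fft2(z2v)))
    den = np.abs(FA) ** 2 + gamma1 + gamma2 * (np.abs(Fh) ** 2 + np.abs(Fv) ** 2)
    return np.real(np.fft.ifft2(num / den))
```

Because \(\gamma_1 > 0\), the denominator is strictly positive, so the elementwise division is always well defined; the conjugated OTFs implement the adjoints \(A^{\top}\) and \(D^{\top}\).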

(b) Solving the \(z_1\)-subproblem

Subproblem (7b) involves the regularization term J. It can be viewed as a denoising problem, \(x = z_1 + n\), where n represents noise.

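Because J has no explicit form and optimizing it directly is computationally complex, a learning-based method is used instead: \(z_1\) is updated by applying a denoiser to \(x^{k+1}\). Dividing Eq. (7b) by \(\lambda\alpha\), it can be rewritten as

\[ z_1^{k+1} = \arg\min_{z_1} \frac{1}{2\left(\sqrt{\lambda\alpha/\gamma_1}\right)^2}\|z_1 - x^{k+1}\|_2^2 + J(z_1), \tag{10} \]

which is a Gaussian denoising problem with noise level \(\sqrt{\lambda\alpha/\gamma_1}\).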

Eqs. (7b) and (10) show that this subproblem can be handled by a denoising network. The prior model J(x) is thus replaced by the denoising network, which avoids designing a complex regularization model, and subproblem (7b) can be solved directly with an off-the-shelf denoiser.

In this paper, FFDNet [33], which can handle a wide range of noise levels with a single model, is used as the denoiser. As shown in Fig. 2, FFDNet first applies a reversible downsampling operator that reshapes the noisy image into several downsampled subimages. A noise level map M is then concatenated with the subimages to form a tensor \(\tilde{y}\), which is fed to the convolutional layers for feature extraction. Zero-padding keeps the size of the feature maps unchanged after each convolution. After the final layer, an upsampling operation (the inverse of the downsampling) produces the denoised image.
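The input stage of FFDNet described above can be sketched as follows for a grayscale image. The sketch assumes a downsampling factor of 2 (giving four sub-images) and a spatially uniform noise level map M; the function names are hypothetical, and the convolutional layers of the trained network are not reproduced.

```python
import numpy as np

def reversible_downsample(img, r=2):
    """(H, W) -> (r*r, H//r, W//r): the r*r sub-images of a polyphase split."""
    h, w = img.shape
    if h % r or w % r:
        raise ValueError("image height and width must be divisible by r")
    return np.stack([img[i::r, j::r] for i in range(r) for j in range(r)])

def reversible_upsample(subs, r=2):
    """Exact inverse of reversible_downsample: (r*r, h, w) -> (h*r, w*r)."""
    _, h, w = subs.shape
    out = np.empty((h * r, w * r), dtype=subs.dtype)
    for idx in range(r * r):
        i, j = divmod(idx, r)      # same (i, j) order as in reversible_downsample
        out[i::r, j::r] = subs[idx]
    return out

def ffdnet_input(noisy, sigma, r=2):
    """Concatenate the sub-images with a uniform noise level map M to form y~."""
    subs = reversible_downsample(noisy, r)
    noise_map = np.full((1,) + subs.shape[1:], sigma, dtype=subs.dtype)
    return np.concatenate([subs, noise_map], axis=0)

noisy = np.arange(36, dtype=float).reshape(6, 6)
y_tilde = ffdnet_input(noisy, sigma=0.1)
print(y_tilde.shape)  # (5, 3, 3): four sub-images plus the noise level map
```

In a full implementation, `y_tilde` would be fed to the trained convolutional layers, and `reversible_upsample` would be applied to the network output to return to full resolution.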

(c) Solving the \(z_2\)-subproblem

Subproblem (7c) involves the regularization term \(\|Dx\|_1\). It can be viewed as estimating \(z_2\) from the noisy data Dx, that is, \(Dx = z_2 + \varepsilon \), where \(\varepsilon \) represents noise.

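Because the objective in Eq. (7c) is based on the \(L_1\) norm, it is handled with the soft-thresholding (shrinkage) operator used in the iterative shrinkage-thresholding algorithm. Subproblem (7c) then has the closed-form solution

\[ z_2^{k+1} = \operatorname{shrink}\left(Dx^{k+1}, \frac{\lambda(1-\alpha)}{\gamma_2}\right), \tag{11} \]

with

\[ \operatorname{shrink}(v, \tau) = \operatorname{sign}(v)\max(|v| - \tau, 0), \]

applied elementwise.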
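The shrinkage step is short in NumPy. The sketch below assumes that subproblem (7c) takes the form \(\frac{\gamma_2}{2}\|z_2 - Dx^{k+1}\|_2^2 + \lambda(1-\alpha)\|z_2\|_1\), so the threshold is \(\tau = \lambda(1-\alpha)/\gamma_2\); the names `shrink` and `z2_update` are hypothetical. Because this objective is separable across pixels, soft thresholding gives its exact minimizer in a single step.

```python
import numpy as np

def shrink(v, tau):
    """Elementwise soft thresholding: sign(v) * max(|v| - tau, 0)."""
    v = np.asarray(v, dtype=float)
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def z2_update(dx, lam, alpha, gamma2):
    """z_2 update: shrink D x^{k+1} with threshold lam * (1 - alpha) / gamma2."""
    return shrink(dx, lam * (1.0 - alpha) / gamma2)

print(shrink([-3.0, -0.5, 0.0, 0.5, 3.0], 1.0))  # magnitudes below 1 are set to 0
```

Applied to the pair \((D_h x, D_v x)\), `z2_update` is called once per component, which corresponds to the anisotropic TV form.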

Adaptive parameter estimating

To make better use of the TV prior and the learned prior in Eq. (4), a parameter estimation function based on gradient information is defined to balance the two models adaptively, as follows:

where \(N(\bullet)\) denotes the normalization function, G the gradient operator, and l the weight coefficient, set to \(l=7\).

Equation (12) defines the parameter as a function of gradient information. A small gradient magnitude (\(|Gx| \rightarrow 0\)) indicates a weak edge, whereas a large magnitude (\(|Gx| \rightarrow \infty \)) indicates a strong edge, so gradient information is used to estimate the adaptive parameter. Because \(\alpha \) should decrease as the gradient increases, it is expressed through the reciprocal of the gradient, with a constant 1 added to the denominator to keep it nonzero. In the implementation, the square root of the gradient is used in place of the gradient itself to compress its order of magnitude.
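Because the exact form of Eq. (12) is not reproduced here, the sketch below is only one hypothetical reading of the description: the reciprocal of the square root of the gradient magnitude with 1 added to the denominator, with l assumed to scale the gradient term and the normalization \(N(\bullet)\) replaced by a plain mean so that a scalar \(\alpha\) in (0, 1] is returned. The function name `adaptive_alpha` is hypothetical.

```python
import numpy as np

def adaptive_alpha(x, l=7.0):
    """Hypothetical gradient-based weight in the spirit of Eq. (12).

    Assumptions (not the paper's exact formula):
      * |Gx| is the per-pixel gradient magnitude from periodic forward differences;
      * the per-pixel weight is 1 / (1 + l * sqrt(|Gx|)), with l scaling the gradient;
      * the normalization N(.) is replaced by the mean over pixels.
    """
    gh = np.roll(x, -1, axis=1) - x
    gv = np.roll(x, -1, axis=0) - x
    mag = np.sqrt(gh ** 2 + gv ** 2)            # |Gx|
    weight = 1.0 / (1.0 + l * np.sqrt(mag))     # strong edges -> small weight
    return float(weight.mean())

sharp = np.zeros((8, 8)); sharp[:, 4:] = 1.0
soft = 0.1 * sharp
print(adaptive_alpha(sharp) < adaptive_alpha(soft) < adaptive_alpha(np.zeros((8, 8))))  # True
```

Under this reading, a flat image gives \(\alpha = 1\), so only the learned prior is active, and sharper edges lower \(\alpha\), raising the TV weight \(1 - \alpha\) as described in contribution (3).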

Experiment

In this section, the proposed method is compared with several image restoration techniques: FFDNet [33], CBDNet [34], weighted nuclear norm minimization (WNNM) [10], image restoration convolutional neural network (IRCNN) [35], and denoiser prior image restoration (DPIR) [36]. Both objective and subjective criteria are used for evaluation, and the results are presented in the following subsections.

Experiment preparation

The training set consists of 400 images from the BSD dataset and 5000 images from the Paris dataset. The Set5, Set12, Set18, and Set24 datasets are used to evaluate the performance of the proposed algorithm.

Both subjective visual quality and objective metrics are used as evaluation criteria. Subjective evaluation compares the images reconstructed by the different methods. For objective evaluation, peak signal-to-noise ratio (PSNR, in dB) and structural similarity (SSIM) are used; larger values indicate better results. PSNR and SSIM are computed as

\[ \mathrm{PSNR} = 10\log_{10}\frac{MAX_I^2}{\frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\left[I(i,j) - K(i,j)\right]^2}, \tag{13} \]

\[ \mathrm{SSIM}(I, K) = \frac{(2\mu_I\mu_K + c_1)(2\sigma_{IK} + c_2)}{(\mu_I^2 + \mu_K^2 + c_1)(\sigma_I^2 + \sigma_K^2 + c_2)}, \tag{14} \]

where I is the original image, K the reconstructed image, \(m \times n\) the size of I and K, and (i, j) a pixel position. \(MAX_I\) is the maximum possible pixel value of the original image; \(\mu_I\) and \(\mu_K\) are the means of I and K; \(\sigma_I^2\) and \(\sigma_K^2\) are their variances; \(\sigma_{IK}\) is the covariance of I and K; and \(c_1\) and \(c_2\) are small constants that stabilize the division.
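Eqs. (13) and (14) can be computed directly with NumPy, as in the minimal sketch below. It evaluates SSIM once over the whole image, following the description of Eq. (14), and assumes the common constants \(c_1 = (0.01\,MAX_I)^2\) and \(c_2 = (0.03\,MAX_I)^2\) with intensities in [0, 1]; the function names are hypothetical.

```python
import numpy as np

def psnr(I, K, max_i=1.0):
    """Eq. (13): 10 * log10(MAX_I^2 / MSE); infinite for identical images."""
    mse = np.mean((np.asarray(I, float) - np.asarray(K, float)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_i ** 2 / mse))

def ssim_global(I, K, max_i=1.0, k1=0.01, k2=0.03):
    """Eq. (14) evaluated once with whole-image means, variances and covariance."""
    I = np.asarray(I, float)
    K = np.asarray(K, float)
    c1, c2 = (k1 * max_i) ** 2, (k2 * max_i) ** 2
    mu_i, mu_k = I.mean(), K.mean()
    var_i, var_k = I.var(), K.var()
    cov = np.mean((I - mu_i) * (K - mu_k))
    num = (2 * mu_i * mu_k + c1) * (2 * cov + c2)
    den = (mu_i ** 2 + mu_k ** 2 + c1) * (var_i + var_k + c2)
    return float(num / den)

print(psnr(np.zeros((8, 8)), np.full((8, 8), 0.1)))  # about 20.0 dB (MSE = 0.01)
```

Standard SSIM implementations average Eq. (14) over local windows (for example an 11×11 Gaussian window), so values from this global version will differ from those of common toolboxes.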

Low-quality test images are generated by adding different levels of noise and blur to these datasets. Table 1 lists the noise and blur levels used in this paper.

The model parameters are set as follows. \(\lambda \) depends on the noise level and remains fixed during the iterations. For the penalty parameters \(\gamma _1\) and \(\gamma _2\), as many points as there are iterations are sampled from the interval \([7, 49]\), with values decreasing logarithmically, and \(\gamma _1\) and \(\gamma _2\) are then defined as a function of the noise level and these points.
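The text does not give the exact function, so the sketch below follows the DPIR [36] convention as an assumption: the sampled points \(\sigma_k\) decrease logarithmically from 49 to 7 (in 8-bit intensity units), and \(\gamma_k = \lambda\sigma^2/\sigma_k^2\), where \(\sigma\) is the noise level of the observation. The same sequence is used for \(\gamma_1\) and \(\gamma_2\), and the function name `penalty_schedule` is hypothetical.

```python
import numpy as np

def penalty_schedule(n_iter, noise_sigma, lam, sigma_max=49.0, sigma_min=7.0):
    """Hypothetical DPIR-style schedule for gamma_1 and gamma_2.

    noise_sigma, sigma_max and sigma_min are in 8-bit intensity units (0-255).
    Returns the sampled points sigma_k and the penalties gamma_k.
    """
    # n_iter points from sigma_max down to sigma_min, equally spaced in log scale
    sigmas = np.logspace(np.log10(sigma_max), np.log10(sigma_min), n_iter)
    # gamma_k = lam * sigma^2 / sigma_k^2 grows as sigma_k shrinks, giving the
    # non-decreasing penalty sequence described for gamma_1 and gamma_2
    gammas = lam * noise_sigma ** 2 / sigmas ** 2
    return sigmas, gammas

sigmas, gammas = penalty_schedule(n_iter=8, noise_sigma=2.55, lam=0.23)
gamma1 = gamma2 = gammas   # assumption: both penalties share one schedule
```

Tying the penalties to a decreasing sequence of denoiser noise levels is what makes the plug-in denoiser in Eq. (10) see progressively cleaner inputs as the iterations proceed.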

For all compared methods, results are generated with the code released by their authors, with parameters set to the values that give their best results.

Ablation study

We first examine the parameter l in Eq. (12) through a series of experiments on the Set5 dataset under different noise and blur conditions, varying l from 0.1 to 20 in steps of 0.1. Part of the results are given in Table 2; the best reconstructions are obtained with l = 7.

We also conduct ablation experiments to verify the effectiveness of each module of the proposed method, removing the TV model and the FFDNet model in turn and evaluating the remaining modules. The experiments use the Set5 dataset under different noise and blur conditions, and the results are shown in Table 3.

The results confirm the contribution of each module: the full combination of modules achieves the best PSNR (dB) and SSIM.

Experimental results

In this section, the proposed method is evaluated with objective metrics and visual comparisons. First, the test images are blurred with Gaussian kernels (a 3\(\times \)3 kernel with variance 1 and a 5\(\times \)5 kernel with variance 1.6), and Gaussian noise with levels of 0.01 and 0.03 is added to generate low-quality images. Tables 4 and 5 report the average PSNR (dB) and average SSIM under these Gaussian noise and Gaussian blur conditions. In these settings, our method obtains higher average PSNR (dB) and average SSIM than the compared methods, which indicates the superior performance of the proposed method.

We also conduct experiments with average blur kernels of different sizes and different Gaussian noise levels: a 3\(\times \)3 average kernel with Gaussian noise level 0.01 and a 5\(\times \)5 average kernel with Gaussian noise level 0.03. Tables 6 and 7 report the objective metrics; under these conditions, the proposed method achieves the best average PSNR (dB) and average SSIM.
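The blur kernels used in these experiments can be generated as below. The sketch assumes that "variance" refers to \(\sigma^2\) of an isotropic Gaussian sampled on the integer grid and normalized to sum to 1, and that each average kernel is a uniform box; the function names and the `settings` list are hypothetical. The noise levels are listed as given in the text and would be applied as additive white Gaussian noise.

```python
import numpy as np

def gaussian_kernel(size, variance):
    """size x size isotropic Gaussian kernel with the given variance, summing to 1."""
    r = np.arange(size) - (size - 1) / 2.0        # integer offsets centred on 0
    xx, yy = np.meshgrid(r, r)
    k = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * variance))
    return k / k.sum()

def average_kernel(size):
    """size x size box (average) kernel."""
    return np.full((size, size), 1.0 / size ** 2)

# (description, blur kernel, Gaussian noise level). The noise level is assumed to be
# a standard deviation for intensities in [0, 1]; the Gaussian-kernel pairings are
# assumed by analogy with the average-kernel settings.
settings = [
    ("Gaussian 3x3, variance 1", gaussian_kernel(3, 1.0), 0.01),
    ("Gaussian 5x5, variance 1.6", gaussian_kernel(5, 1.6), 0.03),
    ("Average 3x3", average_kernel(3), 0.01),
    ("Average 5x5", average_kernel(5), 0.03),
]
```

Normalizing each kernel to sum to 1 keeps the mean intensity of the blurred image unchanged, so only the added noise alters the overall brightness.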

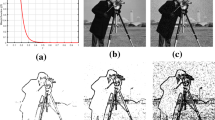

To demonstrate the visual quality of the proposed method, Figs. 3, 4, and 5 show images restored under various noise levels and blur kernels. For detailed comparison, the region inside the red rectangle of each image is enlarged to show more detail. As shown in Fig. 3, the proposed method preserves image details more effectively, and the textures of the restored image are clearer. For the “Baby face” and “Butterfly” images (Figs. 4 and 5, respectively), our method produces results with fewer artifacts and clearer edges. By introducing both the TV prior and the learned prior, the proposed method protects image details and achieves the best visual quality.

These experimental results show that the proposed method outperforms the compared methods in both objective metrics and visual quality. Combining the TV prior and the learned prior in the plug-and-play framework, with an adaptive parameter balancing their weights, yields a reconstruction model that performs well in image restoration.

Conclusion

In this paper, an image restoration method with hybrid regularization inspired by total variation and a deep denoiser prior is proposed. A new reconstruction model is constructed by combining the TV prior and the learned prior in the plug-and-play framework, so that the strength of the TV prior in preserving edges and that of the learned prior in preserving textures are used together to restore image details more effectively. In addition, to better balance the weights of the TV prior and the learned prior, a gradient-based function serves as an adaptive parameter that automatically balances the two at each iteration. Experimental results show that our method achieves the best results among the compared methods.

Data availability

The relevant data and material in this paper are available from the corresponding author.

References

Chen H, He X, Qing L, Wu Y, Ren C, Sheriff RE, Zhu C (2022) Real-world single image super-resolution: A brief review. Inf Fusion 79:124–145

Gothwal R, Tiwari S, Shivani S (2022) Computational medical image reconstruction techniques: a comprehensive review. Arch Comput Methods Eng 29(7):5635–5662

Deng H, Liu G, Zhou L (2023) Ultrasonic logging image denoising algorithm based on variational bayesian and sparse prior. J Electron Imaging 32(1):013004

Dong C, Loy CC, He K, Tang X (2015) Image super-resolution using deep convolutional networks. IEEE Trans Pattern Anal Mach Intell 38(2):295–307

Burger HC, Schuler CJ, Harmeling S (2012) Image denoising: can plain neural networks compete with BM3D? In: 2012 IEEE conference on computer vision and pattern recognition (CVPR)

Roy H, Chaudhury S, Yamasaki T, Hashimoto T (2021) Image inpainting using frequency-domain priors. J Electron Imaging (2)

Zhang M, Desrosiers C (2018) High-quality image restoration using low-rank patch regularization and global structure sparsity. IEEE Trans Image Process 28(2):868–879

Dong W, Zhang L, Shi G, Li X (2012) Nonlocally centralized sparse representation for image restoration. IEEE Trans Image Process 22(4):1620–1630

Dabov K, Foi A, Katkovnik V, Egiazarian K (2007) Image denoising by sparse 3-d transform-domain collaborative filtering. IEEE Trans Image Process 16(8):2080–2095

Gu S, Zhang L, Zuo W, Feng X (2014) Weighted nuclear norm minimization with application to image denoising. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2862–2869

Arvanitopoulos N, Achanta R, Susstrunk S (2017) Single image reflection suppression. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR)

Chen H, He X, Qing L, Teng Q (2017) Single image super-resolution via adaptive transform-based nonlocal self-similarity modeling and learning-based gradient regularization. IEEE Trans Multimedia 19(8):1702–1717

El Helou M, Süsstrunk S (2022) Bigprior: toward decoupling learned prior hallucination and data fidelity in image restoration. IEEE Trans Image Process 31:1628–1640

Shi Y, Wu Y, Wang M, Rao Z, Yang B, Fu F, Lou Y (2022) Image reconstruction of conductivity distribution with combined l1-norm fidelity and hybrid total variation penalty. IEEE Trans Instrum Meas 71:1–12

Thomaz AA, Lima JA, Miosso CJ, Farias MC, Krylov AS, Ding Y (2022) Undersampled magnetic resonance image reconstructions based on a combination of u-nets and l1, l2, and tv optimizations. In: 2022 IEEE international conference on imaging systems and techniques (IST), pp 1–6. IEEE

Kyrillidis A (2018) Simple and practical algorithms for ℓp-norm low-rank approximation

Chen M, Tang C, Zhang J, Lei Z (2018) Image decomposition and denoising based on shearlet and nonlocal data fidelity term. SIViP 12:1411–1418

Liang H, Zhao S (2022) Mlsr: missing information-based fidelity and learned regularization for single-image super-resolution. Comput Electr Eng 98:107674

Liang H, Zhao S, Li N (2022) Multi-fidelity and learning-regularization for single image super resolution. J Franklin Inst 359(9):4489–4512

Zhang X, Lei Y (2020) Super-resolution reconstruction of dual regular items infrared image based on deep plug-and-play. In: Proceedings of the 2020 4th international conference on digital signal processing, pp 22–28

Zhou B, Yang Y-F, Hu B-X (2020) A second-order tv-based coupling model and an admm algorithm for mr image reconstruction. Int J Appl Math Comput Sci 30(1):113–122

Zhang Z, Chen B, Xia D, Sidky EY, Pan X (2021) Directional-tv algorithm for image reconstruction from limited-angular-range data. Med Image Anal

Sun Y, Zhang Y, Wen Y (2022) Image reconstruction based on fractional tikhonov framework for planar array capacitance sensor. IEEE Trans Comput Imaging 8:109–120

Ghulyani M, Skariah DG, Arigovindan M (2022) Generalized hessian-schatten norm regularization for image reconstruction. IEEE Access 10:58163–58180

Zhao D, Huang Y, Zhao F, Qin B, Zheng J (2021) Reference-driven undersampled mr image reconstruction using wavelet sparsity-constrained deep image prior. Comput Math Methods Med

Bhadra S, Zhou W, Anastasio MA (2020) Medical image reconstruction with image-adaptive priors learned by use of generative adversarial networks. In: Medical imaging 2020: physics of medical imaging, vol 11312, pp 206–213. SPIE

Gong K, Catana C, Qi J, Li Q (2021) Direct reconstruction of linear parametric images from dynamic pet using nonlocal deep image prior. IEEE Trans Med Imaging 41(3):680–689

Shi D, Diao X, Tang H, Li X, Xing H, Xu H (2022) Rcrn: Real-world character image restoration network via skeleton extraction. In: Proceedings of the 30th ACM international conference on multimedia, pp 1177–1185

Mei Y, Fan Y, Zhang Y, Yu J, Zhou Y, Liu D, Fu Y, Huang TS, Shi H (2023) Pyramid attention network for image restoration. Int J Comput Vis 131(12):3207–3225

Liu H, Lv H, Han C, Zhao Y (2023) Vibration detection and degraded image restoration of space camera based on correlation imaging of rolling-shutter cmos. Sensors 23(13):5953

Mei X, Ye X, Zhang X, Liu Y, Wang J, Hou J, Wang X (2022) Uir-net: a simple and effective baseline for underwater image restoration and enhancement. Remote Sens 15(1):39

Liu M, Tang L, Fan L, Zhong S, Luo H, Peng J (2022) Carnet: context-aware residual learning for jpeg-ls compressed remote sensing image restoration. Remote Sens 14(24):6318

Zhang K, Zuo W, Zhang L (2018) Ffdnet: toward a fast and flexible solution for cnn-based image denoising. IEEE (9)

Guo S, Yan Z, Zhang K, Zuo W, Zhang L (2019) Toward convolutional blind denoising of real photographs. In: 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR)

Zhang K, Zuo W, Gu S, Zhang L (2017) Learning deep cnn denoiser prior for image restoration. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3929–3938

Zhang K, Li Y, Zuo W, Zhang L, Van Gool L, Timofte R (2021) Plug-and-play image restoration with deep denoiser prior. IEEE Trans Pattern Anal Mach Intell 44(10):6360–6376

Acknowledgements

This work was supported by Natural Science Foundation of Shandong Province (No. ZR2022LZH008), The 20 Planned Projects in Jinan (No. 2021GXRC046), Basic Research enhancement Program of Qilu University of Technology (Shandong Academy of Sciences) (No. 2021JC02015), and Basic research projects of Qilu University of Technology (Shandong Academy of Sciences)(No. 2023PY039).


Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liang, H., Zhang, J., Wei, D. et al. Hybrid regularization inspired by total variation and deep denoiser prior for image restoration. Complex Intell. Syst. 10, 4731–4739 (2024). https://doi.org/10.1007/s40747-024-01405-3
