Abstract

In recent years, graph neural networks (GNNs) have been widely applied in recommender systems. However, existing GNN-based recommendation algorithms still face challenges in node aggregation and feature extraction, because they often fail to capture both the interactions between users and items and users' multiple intents. This hinders an accurate understanding of user needs. To address these issues, we propose a recommendation model called the multi-intent-aware interactive graph convolutional network (Multi-IAIGCN). The model integrates multiple user intents and adopts an interactive convolution approach to better capture information on user–item interactions. First, before users and items interact, user intents are partitioned and mapped into graph structures. Next, interactive convolutions are applied to the user and item trees. Finally, the features of the different user intents are aggregated to predict user preferences. Extensive experiments on four publicly available datasets show that Multi-IAIGCN outperforms, or performs comparably to, existing state-of-the-art methods in terms of recall and NDCG, which verifies its effectiveness.



Introduction

In the era of information explosion, users are confronted with a vast amount of irrelevant information, which makes it difficult to quickly find personalized content that meets their individual needs. Recommender systems have emerged against this background: they predict user preferences and recommend potentially interesting content, relieving users of the burden of choosing from a large amount of information. Collaborative filtering (CF) [1] is one of the most widely used algorithms in recommender systems. Its core idea is to exploit collective behavior, such as users' behaviors and preferences, and to provide personalized recommendations by discovering similarities between users or between items.

The main objective of CF is to learn the latent features of users and items and then predict user preferences by fusing these features. Capturing highly expressive latent features is therefore crucial for the final predictions. Matrix factorization (MF) [2] is a classic model for mining the latent features of users and items. It maps the IDs of users and items directly to vector embeddings and learns their latent features by reconstructing the interaction matrix. This approach effectively addresses the sparse-matrix problem that collaborative filtering algorithms struggle to handle. Subsequently, several studies [3, 4] have used MF and its variants to extract the latent features of users and items for recommendation.
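The MF idea can be made concrete with a minimal sketch. It assumes squared-error loss on the observed entries, stochastic gradient descent, and small random initialization; the names `train_mf` and `predict` and all hyperparameter values are hypothetical and chosen only for illustration, not taken from [2].

```python
import random

def init_factors(n, dim, rng):
    # One latent vector per user (or item) ID: the ID-to-embedding lookup table.
    return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n)]

def predict(p_u, q_i):
    # Predicted preference = inner product of the user and item latent vectors.
    return sum(a * b for a, b in zip(p_u, q_i))

def train_mf(observed, n_users, n_items, dim=4, lr=0.05, reg=0.01, epochs=200, seed=0):
    """Fit P, Q so that P[u] . Q[i] approximates each observed (u, i, r) entry."""
    rng = random.Random(seed)
    P = init_factors(n_users, dim, rng)
    Q = init_factors(n_items, dim, rng)
    for _ in range(epochs):
        for u, i, r in observed:
            err = r - predict(P[u], Q[i])
            for f in range(dim):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 shrinkage.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q

# Usage: a sparse 3x3 interaction matrix with five observed entries.
observed = [(0, 0, 1.0), (0, 1, 1.0), (1, 1, 1.0), (1, 2, 1.0), (2, 0, 1.0)]
P, Q = train_mf(observed, n_users=3, n_items=3)
print(predict(P[0], Q[0]))
```

Unobserved cells of the matrix can then be scored with the same `predict` call, which is how MF fills in the sparse interaction matrix.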

While mapping user and item IDs to embedding vectors can represent nodes, it may not effectively reflect the interactions between users and items. To improve the quality of node features, researchers often use user interactions as model inputs. Several studies have shown that graph neural networks (GNNs) are better at extracting features from structured data and can effectively model complex interaction data. Graph convolutional networks (GCNs) aggregate node features, and their propagation mechanism improves downstream task performance [5, 6].

While neural networks capture high-order signals between neighborhoods, their security also deserves attention, and a number of recent works reflect the vitality of research in this area [7,8,9].

The core idea of a GCN is to represent user–item interactions as a bipartite graph and to propagate user and item embeddings over the graph so as to aggregate high-order collaborative signals from neighborhoods. Aggregating collaborative signals from multi-hop neighbors refines the user and item embeddings. IA-GCN [14] builds on GCN by introducing guidance signals from the counterpart tree when aggregating neighborhood nodes. Based on these guidance signals, weights are allocated among nodes, which strengthens the interactions between users and items and aggregates user intent features. Although existing GCN-based methods have achieved some success, most of them are limited in capturing the multiple user intents that arise from the diversity of user behaviors, which leads to suboptimal recommendation performance. In addition, the lack of explicit guiding signals and guided interactions between the user and item trees yields less expressive user and item embeddings, which limits the accuracy of the recommender system.

To address the issues above, this paper proposes a novel model called Multi-IAIGCN. In summary, we make the following contributions:

1. We propose a module that captures multiple user intents. It integrates and partitions a user's intents, maps them into separate intent trees, and employs an attention mechanism to identify the user's actual intent requirements, thereby reducing the ambiguity of user intents.

2. We propose an interactive intent-aware convolution method. It strengthens the aggregation of node features by adding interactive signals between the multiple user intent trees and the item tree to guide aggregation, and it convolves features locally and interactively within a single user intent tree and the corresponding item tree.

3. We conduct extensive experiments. The results show that Multi-IAIGCN is effective and outperforms, or performs comparably to, the baseline models in terms of recall and NDCG, indicating a clear improvement in recommendation performance.

Compared to previous benchmark models, Multi-IAIGCN can capture a greater number of user intents. During the process of aggregating nodes, it can capture guiding signals from counterpart nodes, contributing to the enrichment of node representations. In summary, the fundamental differences between Multi-IAIGCN and prior work are mainly concentrated in two aspects:

1. Multi-IAIGCN leverages guiding signals from the counterpart tree during node aggregation, introducing an interactive convolution approach that enriches the embeddings of user and item nodes.

2. Multi-IAIGCN focuses on users' diverse intents. It can effectively mine users' actual intents and reduce the ambiguity of user intent.

Related work

Collaborative filtering

Collaborative filtering (CF) is one of the most successful techniques used in recommender systems to date. It is based on the assumption that a user who has shown interest in certain items is likely to maintain similar interests in the future. CF typically models users and items with vector embeddings and historical interaction data: it reconstructs user–item interactions, captures the collaborative signals within these interactions to learn the embeddings, and finally predicts interaction probabilities.

Sedhain et al. [10] proposed AutoRec, which combines collaborative filtering with autoencoders. The model uses an autoencoder to project user–item interactions into latent representations and reconstructs the input from them, producing more expressive embeddings. However, AutoRec has limited generalization ability because it is a neural network with only a single hidden layer. DNN-MF [3] integrates deep neural networks, matrix factorization, and social spider optimization to explore users' multi-criteria preferences; it improves the quality of recommended services by leveraging latent semantics and by accounting for the specificity of those services. DropoutNet, proposed by Volkovs et al. [11], addresses the cold-start problem by randomly dropping out the user/item embeddings learned during training, which implicitly teaches the model to convert the content of cold-start items into warm-start item embeddings. This approach enables end-to-end training and has achieved promising results. More generally, collaborative filtering algorithms suffer from weak generalization and the cold-start problem, and they depend heavily on historical records [12]. Subsequent research has mainly focused on two directions: improving embeddings and optimizing interaction methods [13,14,15,16].

Recent studies have integrated reinforcement learning into recommender systems, enriching node embeddings and improving recommendation performance [17,18,19].

Intent recommendation

The key issue for recommender systems is how to effectively model user behavior and extract adequate embedding signals to ultimately recommend items that align with user intents. Approaches relying solely on historical interactions may not capture the dynamic changes in user preferences because user behavior is often driven by intent. Therefore, it is important to extract the features representing user intents based on user behaviors. In addition, improving the interpretability of recommender systems can enhance user acceptance of recommended results [20, 21].

Existing personalized recommender systems not only need to handle massive real-time data, but they also need to respond to diverse service requirements based on user intents [22]. To capture the true intent of users, ASLI [23] models multiple user behaviors by considering various interactions as encoding information to obtain user feature representations and predicts the next item that a user may choose based on these representations. DSSRec [24] infers intent representations based solely on a single sequential representation, which optimizes intent in the latent space, but it may not fully utilize user intent information.

User intent is often reflected in behavioral data, and because sequential recommendation is sensitive to temporal information, it can better capture the evolution and trends of user interests. Introducing behavioral information into sequential recommendation is therefore important. Chen et al. [25] proposed ICL, which incorporates contrastive learning and intent information into sequential recommendation. Because sequence data reflect user intent to some extent, ICL aligns sequential recommendation with user intent as closely as possible. Moreover, by leveraging contrastive learning, it also mitigates the lack of labeled intent data.

GRU4REC [26] is a sequential model based on recurrent neural networks (RNNs) that uses the gated recurrent unit (GRU), an RNN variant, to capture long-term dependencies in sequence data and mitigate the vanishing gradient problem. The model handles sequences of different lengths, which suits real recommendation scenarios, and it has shown good performance in recommendation tasks [27].

To meet users' diverse needs, Wang et al. adopted a personalized determinantal point process (DPP) [28] to improve user satisfaction with recommendations. It can be deployed after any existing ranking function to increase the diversity of items in the recommendation list.

Fig. 1 Overall architecture of Multi-IAIGCN. The model consists of four main components: the embedding module, the graph convolution module, the intent attention module, and the prediction module. (1) The embedding module takes the user and item interaction sequences \(S_{i}\) and \(S_{j}\) as inputs and, based on the clustering results, maps the user interaction sequence to multiple graph structures (a) and the item interaction sequence to a single graph structure (b). (2) In the graph convolution module, IA-GCN performs interactive convolution between each user intent tree and the item tree and aggregates the intent features (c) and item features (d). (3) In the intent attention module (e), an attention mechanism fuses the multiple user intents into a single embedding. (4) Finally, the prediction module (f) outputs the user's preference scores for items. Here, \(S_{i}\) is the embedding vector of the sequence of items that user i has interacted with, \(S_{j}\) is the sequence of users that have interacted with item j, Agg denotes the aggregation method, k is the number of user intents, and interaction denotes the interaction signal between users and items.

Recommendation based on graph convolution

Although deep learning is effective at capturing latent representations of Euclidean data (such as images and text), its application is limited for non-Euclidean data represented in graph or other structured formats. Recently, graph convolutional networks for processing such data have attracted widespread attention.

GC-MC [29] is a graph autoencoder framework that applies graph convolutional networks to user–item bipartite graphs for matrix completion. However, it aggregates only the feature representations of first-order neighbors, so it does not fully exploit the graph structure and lacks rich feature representations, which limits recommendation quality. GraphSage [30] was the first model to bring inductive learning capability to graph models: it repeatedly aggregates neighbor information and updates node features with shared parameters, thereby reducing the difficulty of model training.

PinSage [5], an improvement over GraphSage, is the first industrial-scale recommender system based on graph convolutional networks (GCNs). PinSage uses random walks to perform local graph convolution on subgraphs in a node's neighborhood and aggregates neighbor features to update node embeddings. It introduces the concept of higher-order connectivity and captures the collaborative signals between higher-order connected nodes by stacking convolutional layers, which is important for learning expressive node features.

Graph attention networks (GAT) [31] were the first to introduce the attention mechanism into graph neural networks, using it to aggregate node embeddings. Hu et al. [32] proposed MCRec, which further introduced a self-attention mechanism to measure the importance of different meta-paths. Wang et al. [6] proposed NGCF, which performs high-order neighborhood aggregation by stacking three GCN layers on the user–item interaction graph and builds its propagation architecture from feature transformation, nonlinear activation, and neighborhood aggregation.

Subsequently, He et al. [33] proposed LightGCN, a simplified, lightweight GCN. Building on NGCF [6], its main innovation is to simplify training by removing feature transformation and nonlinear activation, which reduces the number of model parameters and the training difficulty and significantly improves training efficiency.
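The simplification can be illustrated with a minimal pure-Python sketch of LightGCN-style propagation. It assumes the symmetric degree normalization \(1/\sqrt{|N_u||N_i|}\) and the uniform averaging of layer outputs used by LightGCN; the function name `lightgcn_propagate` is hypothetical. Each layer is only a normalized weighted sum of neighbor embeddings, with no weight matrix and no activation.

```python
from math import sqrt

def lightgcn_propagate(user_emb, item_emb, interactions, num_layers=3):
    """LightGCN-style propagation on a user-item bipartite graph.

    Each layer is a degree-normalized sum of neighbor embeddings, with no
    weight matrix and no nonlinearity. The final embedding of a node is the
    uniform average of its layer-0..num_layers embeddings.
    """
    dim = len(user_emb[0])
    user_nbrs = [[] for _ in user_emb]
    item_nbrs = [[] for _ in item_emb]
    for u, i in interactions:
        user_nbrs[u].append(i)
        item_nbrs[i].append(u)

    def propagate(dst_nbrs, src_nbrs, src_emb):
        # e_dst = sum over neighbors s of e_s / sqrt(|N_dst| * |N_s|)
        out = []
        for nbrs in dst_nbrs:
            vec = [0.0] * dim
            for s in nbrs:
                w = 1.0 / sqrt(len(nbrs) * len(src_nbrs[s]))
                for f in range(dim):
                    vec[f] += w * src_emb[s][f]
            out.append(vec)
        return out

    user_layers, item_layers = [user_emb], [item_emb]
    for _ in range(num_layers):
        next_users = propagate(user_nbrs, item_nbrs, item_layers[-1])
        next_items = propagate(item_nbrs, user_nbrs, user_layers[-1])
        user_layers.append(next_users)
        item_layers.append(next_items)

    def layer_mean(layers):
        n = len(layers)
        return [[sum(layer[node][f] for layer in layers) / n for f in range(dim)]
                for node in range(len(layers[0]))]

    return layer_mean(user_layers), layer_mean(item_layers)

# Usage: two users, two items, three interactions.
users, items = lightgcn_propagate([[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]],
                                  [(0, 0), (0, 1), (1, 1)], num_layers=2)
```

NGCF would additionally multiply each layer's aggregate by a trainable matrix and pass it through a nonlinearity; dropping exactly those two steps is what makes the loop above parameter-free apart from the layer-0 embeddings.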

Multi-intent-aware recommendation model

Overall architecture of the model

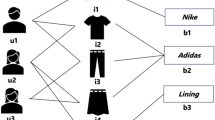

The overall framework of the model is shown in Fig. 1. The model adopts a bipartite graph structure with users and items as nodes and employs a dual-tower model to implement the recommendation function.

Embedding module

This module encodes the historical user–item interaction data (Fig. 1a, b). The interaction data \(S_{i}\) of user i reflect the user's latent intents. The module (Fig. 1a) clusters these latent intents with the K-means algorithm and then maps them to k graph structures, where k is a hyperparameter.

Here, \(S_{i} \in R^{m}\) represents the item sequence with which the user interacts, and \(e_i \in R^{m \times n}\) represents the user embedding, where m is the length of the user interaction sequence and n is the dimension of the embedding.

where \(e^{(j)}_i\) is the embedding representation of the \(j\textrm{th}\) intent sequence of user i, and \(\left[ e^{(1)}_{i}, e^{(2)}_{i}, \cdots, e^{(k)}_{i} \right] \in R^{m \times n}\) is the embedding representation of the user intent sequences obtained after clustering.

We take the item information (e.g., price and category) from all of a user's recent purchase records as the user's embedding. After clustering these embeddings, we reassign the resulting clusters as the user's intent information.
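The clustering step can be sketched with a minimal plain-Python K-means. This is an illustration under assumptions, not the authors' code: each interacted item is assumed to be already encoded as a numeric feature vector (for example, encoded price and category), centroids are initialized by sampling k items, and the function name `kmeans_intents` is hypothetical. Each returned group of items plays the role of one intent sequence \(e^{(j)}_i\).

```python
import random

def sq_dist(a, b):
    # Squared Euclidean distance between two feature vectors.
    return sum((x - y) ** 2 for x, y in zip(a, b))

def centroid(vectors):
    # Component-wise mean of a non-empty list of vectors.
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def kmeans_intents(item_vecs, k, iters=20, seed=0):
    """Split one user's interacted-item vectors into k intent groups.

    Returns k lists of positions into item_vecs, one list per intent.
    """
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(item_vecs, k)]
    groups = [[] for _ in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for idx, v in enumerate(item_vecs):
            nearest = min(range(k), key=lambda c: sq_dist(v, centroids[c]))
            groups[nearest].append(idx)
        for c in range(k):
            if groups[c]:  # keep the old centroid if a cluster becomes empty
                centroids[c] = centroid([item_vecs[i] for i in groups[c]])
    return groups

# Usage: four interacted items forming two well-separated groups.
purchases = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
intent_groups = kmeans_intents(purchases, k=2)
```

Because k is a hyperparameter, each group may be empty or tiny for users with few interactions; how the model handles that case is not stated in the text.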

Graph convolution module

This module (Fig. 1c, d) uses IA-GCN to convolve user intents and items and aggregates the user intent features and item features from the user–item interaction data. Its convolution is distinctive in that it combines guided convolution with multi-intent convolution: when convolving user intent features with IA-GCN, the module aggregates each user intent tree separately, using guidance signals from the item tree. After interactive convolution has been completed for every user intent, the model obtains k feature representations of user intents, each representing a different user demand. Both guided convolution and multi-intent convolution play significant roles in the model's representations.

A distinctive property of IA-GCN's convolution is that node aggregation introduces no additional trainable parameters; it relies solely on the similarity between the guiding node g and the neighbors of a node. This convolution is therefore lightweight and easy to scale, which significantly reduces the complexity of training. IA-GCN is described in detail next. When aggregating multi-layer node features, IA-GCN's convolution module follows the same approach as a traditional GCN, based on the high-order connectivity of the user–item bipartite graph: convolutional layers are stacked and iterated over the bipartite graph until the final-layer node representations are obtained.

In the equation above, \(e_i^l\) is the \(l\textrm{th}\) layer representation of node i, AGG is the aggregation function, and \({\mathbb {C}}_i\) is a set of child nodes of node i.
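Assuming the standard layer-wise form implied by these definitions, in which each layer aggregates the previous layer's representations of a node's children, the aggregation can be written as follows. This is a reconstruction from the surrounding definitions, not necessarily the authors' exact formula.

```latex
e_i^{l} = \mathrm{AGG}\left( \left\{ e_p^{l-1} \;\middle|\; p \in \mathbb{C}_i \right\} \right)
```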

As shown in Fig. 2, according to the guiding principles of IA-GCN, there are two possible scenarios during the interactive convolution of node r, which are listed below.

\(\blacksquare \) \(g = i, p\in {\mathbb {C}}_u\). As shown in Fig. 2, the aggregation of neighbors in the user tree is guided by the item-guiding term, which is one of the guiding principles of IA-GCN.

\(\blacksquare \) \(g = u, p\in {\mathbb {C}}_i\). As shown in Fig. 2, the aggregation of neighbors in the item tree is guided by the user-guiding term, which is another guiding principle of IA-GCN.

It is important to note that, in IA-GCN, the weights for aggregating node features from child nodes are derived from the guiding term of the other tree. Specifically, when aggregating nodes, the weight for each child node is calculated based on the similarity between the node and the guiding term, and then weights are assigned accordingly [14].

where \({\mathcal {L}}\) is the temperature parameter, \(\alpha _{r,p}\) is the weight of neighboring node p with respect to node r, \(p^{'}\) denotes the other nodes neighboring node r, and \(e_g^0\) is the original embedding of the guiding node. The weights must satisfy \(\sum _{p \in {\mathbb {C}}_{r}} \alpha _{r,p \mid g} = 1\). The convolution operation of IA-GCN can be expressed as:

In this paper, a multi-intent interactive convolution approach (Fig. 3) is adopted, which performs interactive convolution between the user’s individual intents and target items. This convolution approach allows the model to extract feature representations of multiple user intents. Meanwhile, IA-GCN is used to perform local convolution operations, which can receive guidance signals from the counterpart tree when aggregating node features. This enables the model to understand multiple user preferences and thus improves the performance of the model.
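The guided, parameter-free aggregation described above can be sketched in plain Python. The sketch assumes that the similarity between a child node and the guiding node is the dot product \(e_p \cdot e_g^0\) and that the weights are a temperature-scaled softmax over the children, so they sum to 1; the function names are hypothetical. In Multi-IAIGCN the same routine would be applied once per user intent tree, with the item as the guide, and symmetrically to the item tree, with the user side as the guide.

```python
from math import exp

def dot(a, b):
    """Similarity used here (an assumption): plain dot product."""
    return sum(x * y for x, y in zip(a, b))

def guided_weights(child_embs, guide_emb, temperature=1.0):
    """alpha_{r,p|g}: softmax over children p of dot(e_p, e_g^0) / temperature."""
    scores = [dot(e_p, guide_emb) / temperature for e_p in child_embs]
    top = max(scores)  # subtract the max before exp for numerical stability
    exps = [exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def guided_aggregate(child_embs, guide_emb, temperature=1.0):
    """New representation of node r: alpha-weighted sum of its children's embeddings.

    No trainable parameters are involved; the weights come only from
    similarity to the guiding node from the counterpart tree.
    """
    weights = guided_weights(child_embs, guide_emb, temperature)
    dim = len(child_embs[0])
    return [sum(w * e[f] for w, e in zip(weights, child_embs)) for f in range(dim)]

def aggregate_per_intent(intent_children, item_guide, temperature=1.0):
    """Apply guided aggregation separately to each of the k user intent trees."""
    return [guided_aggregate(children, item_guide, temperature)
            for children in intent_children]

# Usage: two children; the item guide points along the first axis.
children = [[1.0, 0.0], [0.0, 1.0]]
print(guided_weights(children, [1.0, 0.0], temperature=0.5))
```

Lowering the temperature sharpens the weights toward the child most similar to the guide; raising it moves the aggregation toward a plain average.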

Fig. 4 Architecture of the intent attention module, in which y is the feature representation of the item and \(x_i\) is the feature representation of the \(i^{th}\) user intent, which serves as the Query in the attention mechanism. The k user intents are used as multiple heads in the multi-head attention block, and each head produces one output per intent. Finally, the feature representations of the multiple user intents are concatenated.

Intent attention module

This module weighs the extracted feature representations of the k user intents. For example, suppose user A shows intents to buy both pants and beer. Analyzing the user's interaction behaviors may reveal that the user has already purchased barbecue food, which suggests a stronger desire for beer than for pants. Such reasoning reduces the ambiguity of user intents. With an attention mechanism, the priority of each intent can be inferred from the multiple intent representations, enabling higher-quality recommendations. Figure 4 illustrates the architecture of the intent attention module.

Each intent output corresponds to a head:

The intent attention module plays a crucial role in the model's overall recommendation performance by making recommendations more targeted. Unlike GAT, which applies attention when aggregating neighboring nodes, Multi-IAIGCN applies attention to user intent features. IA-GCN is also more lightweight than GAT because its neighborhood aggregation involves no trainable parameters. Structurally, a distinctive feature of Multi-IAIGCN is its intent attention mechanism, which both enhances the interpretability of the model and aligns it more closely with users' actual needs. Multi-IAIGCN can thus be viewed as a variant of IA-GCN that gains practical significance by incorporating multiple user intent features, and the intent attention module is an indispensable part of it.
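One plausible reading of this module can be sketched as follows, under stated assumptions: each intent vector \(x_i\) acts as a query scored against the item representation y with a scaled dot product, the scores are softmax-normalized across the k intents, each head outputs its intent scaled by its weight, and the heads are concatenated. The function name `intent_attention` is hypothetical, and the exact head equations of Multi-IAIGCN may differ.

```python
from math import exp, sqrt

def intent_attention(intent_vecs, item_vec):
    """Weigh k user-intent vectors (queries) against one item vector.

    Returns the softmax weights over intents and the concatenation of the
    k weighted intent vectors (one "head" per intent).
    """
    d = len(item_vec)
    # Scaled dot-product score of each intent query against the item.
    scores = [sum(a * b for a, b in zip(x, item_vec)) / sqrt(d) for x in intent_vecs]
    top = max(scores)
    exps = [exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Head j = intent j scaled by its weight; the heads are concatenated.
    fused = [w * v for w, x in zip(weights, intent_vecs) for v in x]
    return weights, fused

# Usage with toy vectors: intent A aligns with the candidate item, intent B does not.
intent_a, intent_b = [1.0, 0.0], [0.0, 1.0]
weights, fused = intent_attention([intent_a, intent_b], [2.0, 0.0])
print(weights)
```

The concatenated output has length k times the embedding size, which is what a subsequent fully connected layer would consume.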

Prediction module

This module outputs the final prediction results. It differs from traditional GCN-based recommender systems, most of which rely on a dual-tower structure in which the features of the two sides interact directly: during inference, a traditional GCN model aggregates l-order feature connections separately for user and item nodes.

Then, cross-fusion between user and item nodes is performed:

The fusion method of traditional GCNs has several advantages, such as ease of training and high scalability, but it suffers from accuracy loss. This module does not adopt the traditional GCN interaction method; instead, it performs only fully connected operations on the user tree. The reason is that, in the two preceding modules, the IA-GCN interaction method and the attention mechanism have already allowed the user tree to receive signals from the item tree. To avoid accuracy loss, only fully connected layers are applied after the concatenation operation in the prediction module. Empirical results show that this approach significantly improves model performance.

Because users and items already interact in the earlier graph convolution and attention modules, where user and item features are also fused, the model does not suffer from insufficient interaction. On the contrary, the interaction-guided convolution in the graph convolution module generates additional interaction signals between the user and item trees, which play a crucial role in the model's final prediction.
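The contrast between the two fusion styles can be sketched in plain Python. The inner-product score stands in for the traditional dual-tower fusion; the fully connected head stands in for this module. The layer sizes, the ReLU hidden layer, and the function names are hypothetical, since the text specifies only that fully connected layers follow the concatenation.

```python
def inner_product_score(user_vec, item_vec):
    """Traditional dual-tower fusion: dot product of final user and item embeddings."""
    return sum(a * b for a, b in zip(user_vec, item_vec))

def linear(x, weight_rows, bias):
    """Fully connected layer: one output per row of weights."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight_rows, bias)]

def fc_head_score(concat_intents, W1, b1, w2, b2):
    """Hypothetical prediction head: FC -> ReLU -> FC to a scalar preference score.

    concat_intents is the concatenated output of the intent attention module,
    which already carries item-side signals from the earlier modules.
    """
    hidden = [max(0.0, h) for h in linear(concat_intents, W1, b1)]
    return linear(hidden, [w2], [b2])[0]

# Usage with toy weights.
print(inner_product_score([1.0, 2.0], [3.0, 4.0]))
print(fc_head_score([1.0, -1.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [2.0, 3.0], 0.5))
```

The inner product fixes the fusion rule in advance, whereas the fully connected head learns how to combine the already-interacted intent features, at the cost of extra trainable parameters.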

Loss function

To learn the parameters of the model, we adopt the BPR [34] loss function, which is a pairwise loss function widely used in recommender systems. The loss function is defined as:

The set \(O = \left\{ \left( {u,i^{+},i^{-}} \right) \mid \left( {u,i^{+}} \right) \in R^{+},\left( {u,i^{-}} \right) \in R^{-} \right\} \) is the paired training set, where \(R^{+}\) denotes the positive samples of user–item interactions, \(R^{-}\) denotes the negative samples randomly taken from user–item pairs that have not interacted, \(\Theta \) denotes the trainable parameters of the model, and \(\lambda \) is the \(L_2\) regularization coefficient. Unlike traditional GCN, the interactive convolution module of the model does not involve additional trainable parameters. The main trainable parameters of the model are concentrated in the intent attention and prediction modules.
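In its standard form [34], the BPR loss over the training set O is \(\sum_{(u,i^{+},i^{-}) \in O} -\ln \sigma\left(\hat{y}_{ui^{+}} - \hat{y}_{ui^{-}}\right) + \lambda \lVert \Theta \rVert_2^2\), where \(\hat{y}\) is the predicted score and \(\sigma\) is the sigmoid function. A minimal Python sketch of that objective follows; the `score` callable and the flat list of parameters are assumptions for illustration.

```python
from math import exp, log1p

def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -log1p(exp(-x))
    return x - log1p(exp(x))

def bpr_loss(triples, score, params, lam=1e-4):
    """BPR loss over (u, i_pos, i_neg) triples plus L2 regularization.

    score(u, i) returns the predicted preference y_hat_{ui};
    params is a flat list of trainable scalars (Theta).
    """
    ranking = -sum(log_sigmoid(score(u, ip) - score(u, ineg)) for u, ip, ineg in triples)
    return ranking + lam * sum(p * p for p in params)

# Usage with a toy score table: user 0 prefers item 1 over item 2.
table = {(0, 1): 2.0, (0, 2): -1.0}
print(bpr_loss([(0, 1, 2)], lambda u, i: table[(u, i)], params=[0.5, -0.5]))
```

The loss only depends on score differences, so it pushes each positive item above its sampled negative rather than toward any absolute target value.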

Experimental results and analysis

Datasets

To evaluate the effectiveness of the proposed method, extensive experiments were conducted on four publicly available datasets: Yelp2018, Gowalla, Restaurant, and Amazon-Book. During training, user–item pairs that had interacted were used as positive samples, while negative sampling was performed to obtain user–item pairs that had not interacted. In each dataset, 80% of the interactions were used to construct the training set, and the remaining interactions were used as the test set. Table 1 summarizes the statistics of all datasets.
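The data preparation described above can be sketched as follows. The sketch assumes a uniformly random 80/20 split over all interactions and uniform rejection sampling of one negative item per positive pair; the function names are hypothetical, and the authors' exact split and sampling procedure may differ.

```python
import random

def split_interactions(interactions, train_ratio=0.8, seed=0):
    """Randomly split (user, item) interactions into train/test sets (80/20 by default)."""
    rng = random.Random(seed)
    shuffled = list(interactions)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]

def sample_negative(user, positives_by_user, n_items, rng):
    """Rejection-sample an item the user has not interacted with in training.

    Assumes the user has not interacted with every item (otherwise it never returns).
    """
    seen = positives_by_user.get(user, set())
    while True:
        item = rng.randrange(n_items)
        if item not in seen:
            return item

def build_triples(train, n_items, seed=0):
    """Pair every positive (u, i+) with one sampled negative i- to form BPR triples."""
    rng = random.Random(seed)
    positives_by_user = {}
    for u, i in train:
        positives_by_user.setdefault(u, set()).add(i)
    return [(u, i, sample_negative(u, positives_by_user, n_items, rng)) for u, i in train]

# Usage: 4 users, each with 5 interactions, over a catalogue of 10 items.
interactions = [(u, i) for u in range(4) for i in range(u, u + 5)]
train, test = split_interactions(interactions)
triples = build_triples(train, n_items=10)
```

Because negatives are drawn only against training positives, a sampled negative can occasionally be a held-out test item; this is a common simplification in such pipelines.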

Evaluation metrics

To evaluate top-20 recommendation performance, the average Recall@20 and NDCG@20 scores were used as metrics of the model's ability to recommend relevant items. Extensive experiments were conducted on Multi-IAIGCN and several baseline models with these datasets, and some baseline results were taken directly from the results published by their authors.
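A minimal sketch of the two metrics follows, assuming binary relevance, recall normalized by the number of a user's held-out items, NDCG normalized by the ideal DCG for that user, and a simple mean over users with at least one test item. The function names are hypothetical.

```python
from math import log2

def recall_at_k(ranked, relevant, k=20):
    """Fraction of a user's held-out items that appear in the top-k list."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)

def ndcg_at_k(ranked, relevant, k=20):
    """Binary-relevance NDCG@k: DCG of the top-k list divided by the ideal DCG."""
    dcg = sum(1.0 / log2(pos + 2) for pos, item in enumerate(ranked[:k]) if item in relevant)
    ideal = sum(1.0 / log2(pos + 2) for pos in range(min(len(relevant), k)))
    return dcg / ideal if ideal > 0 else 0.0

def average_metrics(rankings, test_items, k=20):
    """Average Recall@k and NDCG@k over users (dicts keyed by user id)."""
    users = [u for u in test_items if test_items[u]]
    recall = sum(recall_at_k(rankings[u], test_items[u], k) for u in users) / len(users)
    ndcg = sum(ndcg_at_k(rankings[u], test_items[u], k) for u in users) / len(users)
    return recall, ndcg

# Usage: user 0 has its test item ranked first; user 1 misses its test item.
print(average_metrics({0: [1, 2], 1: [3]}, {0: {1}, 1: {4}}))
```

Recall ignores where a hit appears within the top 20, while NDCG discounts hits logarithmically by position, which is why the two metrics can move differently, as in the Gowalla results.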

Hyperparameter settings

The proposed model was implemented in PyTorch with a fixed embedding size of 64. It was trained with the Adam optimizer using a learning rate of \(10^{-4}\) and an \(L_2\) regularization coefficient of \(10^{-4}\). An early stopping strategy was also applied: if Recall@20 on the validation data did not improve for 50 consecutive epochs, training was stopped. For a fair comparison, the experimental settings of the proposed model, including the number of layers, were kept consistent with those of the baseline models.
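The early stopping rule can be sketched as a small helper. It assumes validation is run once per epoch and that only a strict increase in Recall@20 counts as an improvement; the class name `EarlyStopping` is hypothetical. In a PyTorch training loop, `update` would be called after each epoch's evaluation.

```python
class EarlyStopping:
    """Stop training when validation Recall@20 has not improved for `patience` checks."""

    def __init__(self, patience=50):
        self.patience = patience
        self.best = float("-inf")
        self.epochs_without_improvement = 0

    def update(self, recall_at_20):
        """Record one validation result; return True if training should stop."""
        if recall_at_20 > self.best:
            self.best = recall_at_20
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

# Usage: a short patience so the effect is visible on a toy validation curve.
stopper = EarlyStopping(patience=3)
decisions = [stopper.update(r) for r in [0.10, 0.12, 0.12, 0.11, 0.11]]
print(decisions)
```

With the paper's setting, `EarlyStopping(patience=50)` would be used, and the checkpoint with the best validation Recall@20 would typically be kept for testing.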

Baseline models

The proposed model was compared with the baseline models listed below to demonstrate its effectiveness.

BPRMF [34]: BPRMF is a matrix factorization model trained with Bayesian personalized ranking (BPR). Unlike traditional matrix factorization models, BPRMF is trained on triplets that encode users' pairwise preferences between items, so as to optimize each user's personalized ranking.

GC-MC [29]: GC-MC only considers first-order neighbors. The model uses autoencoders to generate user/item feature representations during matrix completion and leverages decoders for link prediction to reconstruct user–item ratings.

PinSage [5]: PinSage has been developed based on GraphSAGE [35]. The model stacks convolutional layers on the user–item bipartite graph to obtain feature representations for nodes.

GRU4REC [26]: GRU4REC is a method based on recurrent neural networks (RNN) that captures the general interest of users in session-based recommendation scenarios. It uses RNN to model the sequences of interactions between users and items in session-based recommendation scenarios.

NGCF [6]: NGCF completes graph initialization using the matrix of interactions between users and items. It consists of two main processes, namely, message construction and message aggregation. Unlike traditional embeddings, NGCF stacks convolutional layers to capture signals between high-order neighboring nodes and then injects the collaborative signals into node representations to obtain more effective embeddings.

LightGCN [33]: One essential difference between Light-GCN and NGCF is that Light-GCN eliminates nonlinear activation and feature transformation processes. This reduces the training difficulty of the model and makes it more lightweight, resulting in improved recommendation performance.

DGCF [36]: By leveraging the adjacency matrix of user behaviors and the adjacency matrix of item attributes, the model obtains information on the mutual interactions between users and items and emphasizes the importance of differentiating user intentions on connected items.

FedGRec [37]: This model integrates federated learning with the graph structure to effectively utilize indirect user-item interactions. More specifically, users and servers explicitly store the latent embeddings of users and items, where the latent embeddings aggregate indirect user–item interactions of different orders, and are used as proxies for the missing interaction graph during local training.

IA-GCN [14]: IA-GCN captures interaction signals more effectively by adding interactive signals between user and item trees and enriching the embeddings of final nodes. The inspiration for this study comes from the unique interactive convolution approach of IA-GCN.

Experimental results and analysis

Comparison of prediction accuracy

To demonstrate the effectiveness of our model, we compared it with several state-of-the-art methods across different categories.

The experimental results in Table 2 demonstrate the effectiveness of Multi-IAIGCN on the four datasets, with notable performance on Yelp2018 and Gowalla. On Yelp2018, Multi-IAIGCN improves Recall@20 by 1.97% over IA-GCN and by nearly 9.3% over FedGRec, and it improves the NDCG metric by 1.7% and 8.55%, respectively. On Gowalla, Multi-IAIGCN achieves no significant improvement in Recall@20, but it improves NDCG@20 by 0.2% and 15.3%, respectively. On Restaurant, the performance of Multi-IAIGCN is also noteworthy: it improves recall by approximately 2.9% over IA-GCN and by around 6.9% over FedGRec, and it improves NDCG by approximately 9% over IA-GCN. Furthermore, Multi-IAIGCN outperforms FedGRec on all four datasets.

Model performance analysis

Multi-IAIGCN performs significantly better than other mainstream recommendation algorithms on three of the datasets, which we attribute to the model's two main structures: guided graph convolution and multi-intent perception. Multi-IAIGCN and recent GNN-based mainstream recommendation algorithms are analyzed separately below.

The experiments of NGCF on the Yelp2018 and Amazon-Book datasets are highly informative. NGCF achieves more diverse node representations and satisfactory results by injecting the captured collaborative signals into node representations through stacking multiple embedding propagation layers. This finding provides guidance for future work and indicates that collaborative signals from different propagation layers play a significant role in diversifying node representations.

Light-GCN reduces the training difficulty of the model by eliminating unnecessary linear transformations and nonlinear activations in NGCF, and it has delivered remarkable experimental results. However, both NGCF and Light-GCN lack interactive signals between user and item trees. This results in the lack of mutual guidance between user and item trees in user/item feature representations, thus hindering the learning of higher-quality features.

Compared with IA-GCN, Multi-IAIGCN pays more attention to user intent information, and by combining interactive convolution with multi-intent perception it achieves the best or near-best performance on three datasets. In particular, the multi-intent perception module diversifies user embeddings, which points to a promising direction for future work.

On the Amazon-Book dataset, Multi-IAIGCN performs slightly worse than LightGCN and IA-GCN, but it performs significantly better than NGCF and greatly reduces the training difficulty. This is because Multi-IAIGCN adopts the interactive convolution approach of IA-GCN, which aggregates the features of a node's neighbors under the guidance of the opposite tree and thereby diversifies node representations. We speculate that Multi-IAIGCN underperforms the other models on Amazon-Book because the interaction data of this dataset are sparse (as shown in Table 1), whereas denser interaction data contribute more to the clustering of user intents in the model. Compared with LightGCN, our model (Multi-IAIGCN) is more effective at incorporating external guidance information. Compared with IA-GCN, our model is more effective at integrating multiple user intents and is more interpretable because it can model multiple user interests simultaneously.
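As a deliberately simplified illustration of "aggregating a node's neighbors under the guidance of the opposite tree", the sketch below weights a user's neighboring items by a softmax over their dot products with the target item's embedding. This scoring rule is our assumption for illustration only; IA-GCN and Multi-IAIGCN may use learned attention parameters, and `guided_aggregate` is a hypothetical name.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def guided_aggregate(guide: Vector, neighbors: Sequence[Vector]) -> Vector:
    """Aggregate one node's neighbor embeddings, weighting each neighbor by its
    affinity to `guide`, an embedding from the opposite tree
    (e.g. the target item when aggregating in the user tree)."""
    weights = softmax([dot(guide, n) for n in neighbors])
    dim = len(neighbors[0])
    return [sum(w * n[d] for w, n in zip(weights, neighbors)) for d in range(dim)]


if __name__ == "__main__":
    user_neighbors = [[1.0, 0.0], [0.0, 1.0]]  # items the user interacted with
    print(guided_aggregate([1.0, 0.0], user_neighbors))  # weighted toward item 0
    print(guided_aggregate([0.0, 1.0], user_neighbors))  # weighted toward item 1
```

The same user thus obtains a different aggregated representation for each candidate item, whereas in LightGCN the aggregation is the same for every candidate.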

Ablation experiments

Impact of the number of intentions

Experiments were conducted to assess the impact of the number of user intents (denoted by K) in Multi-IAIGCN. K affects not only the experimental results but also the complexity of the final model. On all three datasets, the model delivers the best performance when \(K = 4\).

Intuitively, aggregating more user intent features should improve model performance. In practice, however, data sparsity has a significant impact on model performance. Figure 6 shows how the model's performance changes with K: as the number of user intents increases, the data sparsity introduced by dividing features among intents becomes more pronounced.
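This section does not spell out how features are divided among intents. As a hedged illustration, assuming a chunking scheme in the style of disentangled graph collaborative filtering (Wang et al., 2020, in the reference list), the sketch below splits a d-dimensional embedding into K intent chunks and softly routes one user-item interaction across the K intents. Two effects of increasing K are visible: each intent chunk shrinks to d/K dimensions, and each interaction's unit weight is spread over K intent graphs, so each intent graph becomes sparser. The function names and the dot-product routing are hypothetical simplifications.

```python
import math
from typing import List, Sequence

Vector = List[float]


def split_into_intents(embedding: Sequence[float], k: int) -> List[Vector]:
    """Split a d-dimensional embedding into k equal chunks, one per intent."""
    d = len(embedding)
    if d % k != 0:
        raise ValueError(f"embedding size {d} is not divisible by k={k}")
    size = d // k
    return [list(embedding[j * size:(j + 1) * size]) for j in range(k)]


def route_interaction(user_emb: Sequence[float], item_emb: Sequence[float], k: int) -> List[float]:
    """Softly assign one user-item interaction to k intents via a softmax over the
    per-intent dot products of matching user and item chunks. The weights sum
    to 1, so each intent graph receives only a fraction of the edge."""
    u_chunks = split_into_intents(user_emb, k)
    i_chunks = split_into_intents(item_emb, k)
    scores = [sum(a * b for a, b in zip(uc, ic)) for uc, ic in zip(u_chunks, i_chunks)]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    zeros = [0.0] * 8
    for k in (1, 2, 4, 8):
        # With uninformative (all-zero) embeddings the routing is uniform,
        # so each intent receives 1/k of the edge and a chunk of 8/k dimensions.
        weights = route_interaction(zeros, zeros, k)
        print(k, len(split_into_intents(zeros, k)[0]), weights[0])
```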

Impacts of interactive convolution and intent attention

We conducted extensive ablation experiments on the interactive convolution and multi-intent attention modules of Multi-IAIGCN. To ensure a fair comparison, the hyperparameter K was fixed at 4. The experimental results, shown in Table 3, confirm the superiority of our model and verify the importance of user intent diversification and interactive convolution in recommender systems.

A thorough analysis of the experimental results shows that the proposed model performs considerably better than variants that use only IA-GCN or rely solely on multiple user intents to make recommendations, with significantly improved recommendation performance. These results (Fig. 7) further verify the effectiveness of our model.

Conclusion

In view of the limitations of existing recommendation methods, we studied recommendation methods that incorporate multiple user intents. This paper analyzes the structural features of interactive graph convolutional networks in depth and, based on this analysis, proposes a multi-intent-aware recommendation algorithm. The proposed model addresses the low recommendation effectiveness of existing methods when users have multiple intents, and it prevents signals from the counterpart tree from being completely lost during aggregation.

The model consists of two main modules designed to mine users' true intents. The graph convolution module uses an interactive convolution approach to aggregate the features of multiple user intents. The multi-intent attention module uses an attention mechanism to weight the multiple user-intent embeddings, thereby constructing global intent features and resolving ambiguity in user intents. The experimental results show that the proposed model performs better than other mainstream recommendation models on three public datasets, especially on datasets with high interaction density (Restaurant: density \(0.748\%\)). In future work, we will focus on two directions: (a) extending our experiments to datasets with higher interaction density to better demonstrate the effectiveness of our model, and (b) investigating how to further improve the performance of the model on datasets with lower interaction density.
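A minimal sketch of the attention step described above, under the assumption that each of the K intent embeddings is scored against a query vector by a dot product and that the softmax-weighted sum forms the global intent feature. The query vector, the dot-product scoring and the function name are hypothetical illustrations, not the paper's exact formulation.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def intent_attention(intents: Sequence[Vector], query: Vector) -> Tuple[Vector, List[float]]:
    """Weight K intent embeddings by softmax(query . h_k) and sum them into one
    global intent feature. Returns (global_feature, attention_weights)."""
    scores = [sum(q * h for q, h in zip(query, h_k)) for h_k in intents]
    m = max(scores)  # stabilize the softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(intents[0])
    global_feature = [sum(w * h_k[d] for w, h_k in zip(weights, intents)) for d in range(dim)]
    return global_feature, weights


if __name__ == "__main__":
    intents = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.0, 0.0]]  # K = 4 toy intent embeddings
    feature, weights = intent_attention(intents, query=[2.0, 0.0])
    print([round(w, 3) for w in weights])
    print(feature)
```

In a trained model the query (or a small scoring network in its place) would be learned jointly with the embeddings; the per-user attention weights are one place where the multi-intent design could be inspected for interpretability.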

Data availability

The data generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Covington P, Adams JK, Sargin E (2016) Deep neural networks for YouTube recommendations. In: Proceedings of the 10th ACM Conference on Recommender Systems

Koren Y, Bell R, Volinsky C (2009) Matrix factorization techniques for recommender systems. Computer 42(8):30–37

Sinha B, Dhanalakshmi R (2022) DNN-MF: deep neural network matrix factorization approach for filtering information in multi-criteria recommender systems. Neural Comput Appl. https://doi.org/10.1007/s00521-022-07012-y

Li F, Xu G, Cao L (2015) Two-level matrix factorization for recommender systems. Neural Comput Appl. https://doi.org/10.1007/s00521-015-2060-3

Ying R, He R, Chen K, Eksombatchai P, Hamilton WL, Leskovec J (2018) Graph convolutional neural networks for web-scale recommender systems. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. KDD ’18. Association for Computing Machinery, New York, NY, USA, pp 974–983. https://doi.org/10.1145/3219819.3219890

He X, Liao L, Zhang H, Nie L, Hu X, Chua T-S (2017) Neural collaborative filtering. In: Proceedings of the 26th International Conference on World Wide Web. WWW ’17. International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, CHE, pp 173–182. https://doi.org/10.1145/3038912.3052569

Song X, Wu SN et al (2023) Switching-like event-triggered state estimation for reaction-diffusion neural networks against DoS attacks. Neural Process Lett 55:8997–9018. https://doi.org/10.1007/s11063-023-11189-1

Song X, Sun P, Sea Song (2023) Quantized neural adaptive finite-time preassigned performance control for interconnected nonlinear systems. Neural Comput Appl 35:15429–15446. https://doi.org/10.1007/s00521-023-08361-y

Song X, Wu N, Song S, Zhang Y, Stojanovic V (2023) Bipartite synchronization for cooperative-competitive neural networks with reaction-diffusion terms via dual event-triggered mechanism. Neurocomputing 550:126498. https://doi.org/10.1016/j.neucom.2023.126498

Sedhain S, Menon AK, Sanner S, Xie L (2015) AutoRec: autoencoders meet collaborative filtering. In: Proceedings of the 24th International Conference on World Wide Web. WWW ’15 Companion. Association for Computing Machinery, New York, NY, USA, pp 111–112. https://doi.org/10.1145/2740908.2742726

Volkovs M, Yu G, Poutanen T (2017) DropoutNet: addressing cold start in recommender systems. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. NIPS’17. Curran Associates Inc., Red Hook, NY, USA, pp 4964–4973

Ren H, Liu B, Sun J, Dong Q, Qian J (2023) A time and relation-aware graph collaborative filtering for cross-domain sequential recommendation. J Comput Res Dev 60:112–124. https://doi.org/10.7544/issn1000-1239.202110545

Tran D, Sheng Q, Zhang WE, Aljubairy A, Zaib M, Hamad S, Tran N, Nguyen K (2021) HeteGraph: graph learning in recommender systems via graph convolutional networks. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05667-z

Zhang Y, Wang P, Zhao X, Qi H, He J, Jin J, Peng C, Lin Z, Shao J (2022) IA-GCN: interactive graph convolutional network for recommendation

Kim J, Lamb A, Woodhead S, Jones SLP, Zheng C, Allamanis M (2021) Corgi: content-rich graph neural networks with attention. In: Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining

Ren H, Liu B, Sun J, Dong Q, Qian J (2021) Shared-account cross-domain sequential recommendation. J Comput Res Dev 58:2524–2537. https://doi.org/10.7544/issn1000-1239.202110545

Chen S, Qiu X, Tan X, Fang Z, Jin Y (2022) A model-based hybrid soft actor-critic deep reinforcement learning algorithm for optimal ventilator settings. Inf Sci 611:47–64. https://doi.org/10.1016/j.ins.2022.08.028

Qiu X, Tan X, Li Q, Chen S, Ru Y, Jin Y (2022) A latent batch-constrained deep reinforcement learning approach for precision dosing clinical decision support. Knowl-Based Syst 237:107689. https://doi.org/10.1016/j.knosys.2021.107689

Qu C, Tan X, Xue S, Shi X, Zhang J, Mei H (2023) Bellman meets hawkes: model-based reinforcement learning via temporal point processes. Proc AAAI Confer Artif Intell 37:9543–9551. https://doi.org/10.1609/aaai.v37i8.26142

Huang L, Jiang B, Lv S, Liu Y, Li D (2018) Survey on deep learning based recommender systems. Chin J Comput 41(7):29

Gu J, Fan S, Li N, Zhang S (2022) Long and short term recommendation model based on knowledge graph preference attention network and its updating method. J Comput Appl 42:1079–1086

Meng H, Liu Z, Wang F, Xu J, Zhang G (2017) An efficient collaborative filtering algorithm based on graph model and improved KNN. J Comput Res Dev 54:1426–1438. https://doi.org/10.7544/issn1000-1239.2017.20160302

Tanjim MM, Su C, Benjamin E, Hu D, Hong L, McAuley J (2020) Attentive sequential models of latent intent for next item recommendation. In: Proceedings of The Web Conference 2020. WWW ’20. Association for Computing Machinery, New York, NY, USA, pp 2528–2534. https://doi.org/10.1145/3366423.3380002

Ma J, Zhou C, Yang H, Cui P, Wang X, Zhu W (2020) Disentangled self-supervision in sequential recommenders. In: Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. KDD ’20. Association for Computing Machinery, New York, NY, USA, pp 483–491. https://doi.org/10.1145/3394486.3403091

Chen Y, Liu Z, Li J, McAuley J, Xiong C (2022) Intent contrastive learning for sequential recommendation. In: Proceedings of the ACM Web Conference 2022. WWW ’22. Association for Computing Machinery, New York, NY, USA, pp 2172–2182. https://doi.org/10.1145/3485447.3512090

Hidasi B, Karatzoglou A, Baltrunas L, Tikk D (2015) Session-based recommendations with recurrent neural networks. CoRR abs/1511.06939

Elman JL (1990) Finding structure in time. Cogn Sci 14(2):179–211. https://doi.org/10.1016/0364-0213(90)90002-E

Wang Y, Zhang X, Liu Z, Dong Z, Feng X, Tang R, He X (2020) Personalized re-ranking for improving diversity in live recommender systems. CoRR abs/2004.06390

Berg R, Kipf TN, Welling M (2017) Graph convolutional matrix completion

Hamilton WL, Ying R, Leskovec J (2017) Inductive representation learning on large graphs. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. NIPS’17. Curran Associates Inc., Red Hook, NY, USA, pp 1025–1035

Velickovic P, Cucurull G, Casanova A, Romero A, Liò P, Bengio Y (2018) Graph attention networks. In: International Conference on Learning Representations. https://openreview.net/forum?id=rJXMpikCZ

Hu B, Shi C, Zhao WX, Yu PS (2018) Leveraging meta-path based context for top-n recommendation with a neural co-attention model. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. KDD ’18. Association for Computing Machinery, New York, NY, USA, pp 1531–1540. https://doi.org/10.1145/3219819.3219965

He X, Deng K, Wang X, Li Y, Zhang Y, Wang M (2020) LightGCN: simplifying and powering graph convolution network for recommendation. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval. SIGIR ’20. Association for Computing Machinery, New York, NY, USA, pp 639–648. https://doi.org/10.1145/3397271.3401063

Rendle S, Freudenthaler C, Gantner Z, Schmidt-Thieme L (2009) BPR: Bayesian personalized ranking from implicit feedback. In: Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence. UAI ’09. AUAI Press, Arlington, Virginia, USA, pp 452–461

Hamilton WL, Ying R, Leskovec J (2017) Inductive representation learning on large graphs. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. NIPS’17. Curran Associates Inc., Red Hook, NY, USA, pp 1025–1035

Wang X, Jin H, Zhang A, He X, Xu T, Chua T-S (2020) Disentangled graph collaborative filtering. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval. SIGIR ’20. Association for Computing Machinery, New York, NY, USA, pp 1001–1010. https://doi.org/10.1145/3397271.3401137

Li J, Huang H (2022) FedGRec: federated graph recommender system with lazy update of latent embeddings. In: Proceedings of the NeurIPS’22 International Workshop on Federated Learning

Funding

This work was supported by the Natural Science Foundation of Hebei Province (No. F2022511001) and the Hebei University High Level Talent Research Launch Project (521100223212).

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

Ethical approval

We ensure that accepted principles of ethical and professional conduct have been followed.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, J., Gao, H., Xiao, S. et al. A multi-intent-aware recommendation algorithm based on interactive graph convolutional networks. Complex Intell. Syst. 10, 4493–4506 (2024). https://doi.org/10.1007/s40747-024-01366-7

DOI: https://doi.org/10.1007/s40747-024-01366-7