Abstract

Most existing multi-objective evolutionary algorithms that rely on fixed reference vectors originating from an ideal or a nadir point may perform poorly on multi- and many-objective optimization problems whose Pareto fronts have various convexities or shapes. Possible reasons are the inaccurate measurement of the diversity of solutions and the inability of fixed reference vectors to guide a rapidly changing population. To meet this challenge, this work develops an adaptive normal reference vector-based decomposition strategy for guiding the search process, which is able to handle Pareto fronts of various convexities and shapes. Specifically, the normal vector passing through the center of each cluster on a constructed hyperplane is adopted as a reference vector for guiding the search process. Then, an environmental selection strategy is put forward based on the positions of the solutions in the current population relative to the normal vectors. Based on the adaptive normal vectors, the proposed algorithm can not only rapidly adapt to the changing population but also alleviate the influence of the convexity of Pareto fronts on the measurement of diversity. Experimental results show that the proposed algorithm performs consistently well on various types of multi-/many-objective problems with regular or irregular Pareto fronts. In addition, the proposed algorithm is shown to perform well in the optimization of the polyester fiber esterification process.


Introduction

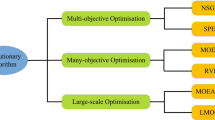

Evolutionary algorithms have proven highly effective in solving multi-objective optimization problems (MOPs) [1]. However, two challenges currently remain in this research field. On the one hand, early multi-objective evolutionary algorithms (MOEAs) were designed to solve problems with two or three objectives [2] and suffer from the curse of dimensionality when handling MOPs with more than three objectives, i.e., many-objective optimization problems (MaOPs) [3]. On the other hand, most MOEAs assume that the Pareto fronts (PFs) of MOPs/MaOPs are regular; thus, they cannot perform well on problems with irregular PFs [4], which are common in the real world.

To meet the above challenges, a variety of new MOEAs have been developed. These algorithms improve their ability to deal with MaOPs mainly in the following four ways [5]. First, some MOEAs enhance selection pressure by modifying the dominance relationship. As the proportion of non-dominated individuals increases rapidly with the number of objectives, Pareto dominance loses its pressure toward the PFs. Therefore, several modified dominance concepts have been proposed to enhance convergence, such as subregion PBI-dominance [6] and D-dominance [7]. Second, some MOEAs add auxiliary selection methods to the traditional non-dominated sorting approach. For example, the corner sort in [8] saves objective comparisons and execution time. Third, some MOEAs use weights, reference points, or reference vectors to decompose MaOPs, such as MOEA/D [9] and its variants, NSGA-III [10], and RVEA [11]. In these algorithms, a set of reference vectors is predefined and the original MOP is partitioned into several subproblems, whose solutions together constitute the final solution set. The preset reference vectors act as fixed convergence directions, which can greatly improve convergence efficiency on MaOPs; however, this approach may be sensitive to the shape of the PFs [12]. Fourth, some MOEAs rely on performance indicators to optimize MaOPs [13]. Since the computational cost of most indicators increases rapidly with the number of objectives, algorithms such as [14] aim to reduce the computational complexity of the indicators. However, the measures taken to improve convergence on MaOPs can degrade diversity when solving MOPs [15].
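The loss of selection pressure under Pareto dominance can be checked numerically. The sketch below is illustrative only and is not part of NRV-MOEA; the helper names `dominates` and `nondominated_fraction` are hypothetical. As an assumption standing in for a real population, it samples points uniformly in the unit hypercube and reports the fraction that no other point dominates (minimization).

```python
import random


def dominates(a, b):
    """True if a Pareto-dominates b (minimization): no worse everywhere, better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated_fraction(n_points, n_obj, seed=0):
    """Fraction of uniformly random points in [0, 1]^n_obj that no other point dominates."""
    rng = random.Random(seed)
    pts = [[rng.random() for _ in range(n_obj)] for _ in range(n_points)]
    count = sum(
        1
        for i, p in enumerate(pts)
        if not any(dominates(q, p) for j, q in enumerate(pts) if j != i)
    )
    return count / n_points


if __name__ == "__main__":
    for m in (2, 3, 5, 10, 15):
        print(m, round(nondominated_fraction(200, m), 3))
```

With 200 random points the fraction is typically small for two objectives and close to 1 for ten or more objectives, so almost every individual becomes mutually non-dominated and dominance alone can no longer rank them.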

Besides, for handling MOPs/MaOPs with irregular PFs (called irregular problems for simplicity; problems with regular PFs are called regular problems), MOEAs have been developed that account for the characteristics of irregular PF shapes. These algorithms can be mainly divided into two categories. The first category adjusts the reference vectors. Since fixed reference vectors waste a lot of computing resources on irregular problems, some algorithms adjust the reference vectors according to the population distribution [11, 16, 17]. Over the past two decades, various approaches to adjusting reference vectors have been developed, such as adding and deleting reference vectors in promising regions [18,19,20], learning the distribution of reference vectors [21,22,23], and regenerating a set of reference vectors after a predefined number of iterations [17] or when a trigger condition is met [24, 25]. However, regardless of how the reference vectors are adjusted, if they originate from the ideal point or the nadir point, they are sensitive to highly convex or concave PFs. An illustrative example is given in Fig. 1. Reference vectors originating from the ideal point have difficulty guiding the search toward well-distributed solutions on problems with convex PFs, as shown in Fig. 1a, where the solutions found by the reference vectors are denoted by red dots. Similarly, reference vectors originating from the nadir point yield poorly distributed solutions on problems with concave PFs, as plotted in Fig. 1b. In short, the convergence and diversity of solutions in reference vector-assisted methods usually depend heavily on the distribution of the reference vectors.
In most decomposition-based works [9, 11], the angle or the perpendicular Euclidean distance between a solution and a reference vector is used to measure the diversity contribution of the solution. This measurement may be inaccurate for problems with convex or concave PFs. Hence, in this work we adopt a set of normal vectors, as plotted in Fig. 1c, to guide the search process. As Fig. 1c shows, the normal vectors help find well-diversified solutions on both convex and concave PFs.

The second category for dealing with irregular problems is clustering. Hua et al. [26] put forward a hierarchical clustering-based environmental selection strategy in which the distances between solutions and cluster centers are used to decide which solutions are promising. Liu et al. [27] suggest a local density value to adaptively and steadily learn the cluster centers of solutions, accounting for both the convergence and the diversity of solutions during the search process. However, these methods do not consider the influence of the convexity of the approximated PFs on the calculation of cluster centers, which results in non-uniformly distributed solutions on highly convex or concave problems. To address this, [28] first estimates the curving rate of the approximated PF and then uses it to normalize the clustered solutions.

To sum up, whether we adjust the reference vectors or directly cluster the solutions during the search process, the following challenges remain. First, most scalarizing functions are designed based on reference vectors originating from the ideal point or the nadir point, which cannot always ensure a fair measurement of the diversity of solutions. Second, the rapidly changing population during the search process poses great challenges to the timing of reference vector adaptation and clustering. Last but not least, strategies that improve an algorithm's ability to handle irregular problems tend to degrade its performance on regular problems to some extent. Thus, handling many-objective irregular problems remains challenging.

Motivated by the above discussion, this paper proposes an adaptive normal reference vector-based multi- and many-objective evolutionary algorithm (NRV-MOEA) to deal with multiple types of MOPs and MaOPs. In NRV-MOEA, the solutions in the last accepted non-dominated front are first normalized and then mapped onto a hyperplane. The mapped solutions are then clustered into a number of groups, and the vectors that pass through each cluster center and are perpendicular to the hyperplane are used as the reference vectors to guide the search process. In addition, based on these normal vectors, we propose a new approach to measuring the quality of solutions for environmental selection.

The main contributions of this work can be summarized as follows:

1. We propose a normal reference vector adaptation method based on mapping and clustering. The individuals in the last accepted non-dominated front are mapped onto a hyperplane along normal vectors, and the mapped solutions are then divided into a number of groups to generate a set of adaptive reference vectors. The normal vectors passing through the cluster centers are adopted as the reference vectors for guiding the optimization process.

2. We further put forward a new way of measuring the quality of solutions based on the adaptive normal reference vectors. Specifically, the convergence of a solution is measured by its projection distance on the hyperplane, and its diversity by its perpendicular distance to the normal vector passing through the corresponding cluster center.

3. The proposed NRV-MOEA is compared with five state-of-the-art algorithms on 74 test instances and is successfully applied to the optimization of the polyester fiber esterification process, an important process in the textile industry. Our experimental results demonstrate that NRV-MOEA has significant advantages in dealing with problems whose PFs have various convexities and shapes.

In the remainder of this paper, we first discuss the main ideas of the proposed NRV-MOEA in the section “Main ideas”. The section “Proposed algorithm” presents the detailed implementations of the proposed algorithm. Then, comparative results on the DTLZ, WFG, MaF, IMOP and DPF benchmark problems and on the polyester fiber esterification process optimization are presented in the sections “Empirical results” and “Optimization of the polyester fiber esterification process”, respectively. Finally, conclusions are provided in the section “Conclusion”.

Main ideas

Mapping by normal vectors

Since a decomposition-based mechanism is used in NRV-MOEA, it is important to divide the population evenly. In NRV-MOEA, individuals are mapped onto a hyperplane constructed by extreme individuals with the maximum value of each objective. By mapping, individuals with the same convergence direction will be mapped to the same point on the hyperplane. In this way, we can effectively distinguish individuals with different convergence directions.

Usually, individuals are mapped onto a hyperplane from the ideal point [29] or the nadir point [30], which consist of the minimum and the maximum value of each objective, respectively. In Fig. 1a, individuals are mapped onto the hyperplane from the ideal point: the square represents the ideal point, the red and black dots are the original individuals, the dotted lines are the mapping directions, and the circles are the mapped individuals on the hyperplane. Individuals that are uniformly distributed on the flat and concave non-dominated fronts remain uniformly distributed after mapping, so mapping from the ideal point reflects the original distribution of individuals on flat and concave non-dominated fronts. By contrast, the individuals represented by red dots lie on a convex non-dominated front: they are crowded in the middle and sparse at both ends, yet they become uniformly distributed after mapping. Hence, mapping from the ideal point cannot reflect the distribution of individuals on a convex non-dominated front. Conversely, as shown in Fig. 1b, mapping from the nadir point reflects the distribution of individuals on flat and convex non-dominated fronts, but not on concave ones. To adapt to more shapes of non-dominated fronts, we propose a normal vector-based mapping method. As shown in Fig. 1c, individuals are mapped onto the hyperplane along the normal vectors, which reflects the distribution of individuals on flat, concave, and convex non-dominated fronts alike.
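To make the contrast concrete, the two-objective sketch below assumes normalized objectives, the line \(f_1 + f_2 = 1\) through the extremes (1, 0) and (0, 1) as the hyperplane, and the ideal point at the origin; both function names are hypothetical. Mapping from the ideal point sends every individual on the same ray from the origin to the same point, whereas mapping along the normal (1, 1) sends every individual on the same line perpendicular to the hyperplane to the same point.

```python
def map_from_ideal(b):
    """Central projection from the ideal point (0, 0) onto the line f1 + f2 = 1."""
    s = b[0] + b[1]
    return (b[0] / s, b[1] / s)


def map_along_normal(b):
    """Orthogonal projection onto the line f1 + f2 = 1, i.e. along its normal (1, 1)."""
    t = (1.0 - b[0] - b[1]) / 2.0  # step along (1, 1) that lands on the line
    return (b[0] + t, b[1] + t)
```

For example, (0.2, 0.4) and (0.4, 0.8) lie on one ray from the ideal point and both map to (1/3, 2/3) under the first function, while (0.2, 0.4) and (0.3, 0.5) lie on one normal line and both map to (0.4, 0.6) under the second. Which individuals count as sharing a convergence direction therefore depends on the mapping.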

Several studies have adopted normal vectors to deal with irregular problems. For instance, in [31], the perpendicular distance from an individual to the unit hyperplane and the harmonic average distance between the individual and its neighboring individuals are weighted to estimate the quality of solutions in environmental selection. In that approach, however, it is hard to specify the number of neighboring individuals that best accounts for diversity. In [32], the convergence and diversity of solutions are measured, respectively, by the Euclidean distances from solutions to mirror points, constructed by decreasing the coordinates of the reference points by 1, and by the angles between solutions and the reference points. However, diversity in [32] is still measured by angles, as in other decomposition-based approaches such as [11], which can be inaccurate on convex or concave PFs.

Environmental selection based on clustering and normal vectors passing through cluster centers

Clustering is an effective way to partition a population evenly on a non-dominated front of unknown shape. As noted in CA-MOEA [26], hierarchical clustering with Ward's linkage measured by the Euclidean distance is well suited for partitioning populations when the PF shape is unknown. NRV-MOEA adopts this clustering method for the population mapped onto the hyperplane. As shown in Fig. 2a, b, A, B, C, D, E, F, and G denote seven individuals in the objective space, where B, C, and E are on the first front and A, D, F, and G are on the second front. Suppose we need to select four of these seven individuals. If the original individuals are clustered directly, as shown in Fig. 2a, individuals with similar objective values are merged into the same cluster: A, which is far from the other individuals, forms a cluster by itself; B and C are merged into one cluster; D, F, and G are merged into another cluster; and E forms a cluster by itself. If the cluster centers are treated as reference points, A, C, E, and F are selected because they are closest to the reference points. However, B contributes to population diversity about as much as A and converges better than A, so B would be the better choice. Because clustering the original population cannot identify individuals that share a convergence direction, A is selected and B is discarded. Meanwhile, E and F lie in the same convergence direction, yet both are selected. The result is therefore unsatisfactory.

To improve the environmental selection, we propose a new selection method based on mapping, clustering, and normal vectors. As illustrated in Fig. 2b, individuals A, B, C, D, E, F, and G are mapped onto the hyperplane to obtain MA, MB, MC, MD, ME, MF, and MG. The dendrogram of the bottom-up hierarchical clustering of MA to MG is shown in Fig. 2c. Cutting the dendrogram at layer i groups all solutions into i clusters: at layer 1, all solutions belong to a single cluster, whereas at layer 7, each of the seven solutions forms its own cluster. Since we want to select four individuals, we cluster the mapped individuals into four clusters by referring to layer 4 of the dendrogram, where MA and MB are merged into one cluster, MC and MD form two separate clusters, and ME, MF, and MG are merged into one cluster. The clusters are represented by dashed circles in Fig. 2b. After clustering, we calculate the center of every cluster, represented by a star, and the normal vector passing through each cluster center. NRV-MOEA then applies a distance-based selection scheme. Taking B as an example, \(d_1\) is the Euclidean distance from the individual to the normal vector, and \(d_2\) is the projection distance of the individual on the hyperplane. The measure \(d = d_1-d_2\) is then calculated: the smaller d is, the better the fitness of the individual. Since solutions in different Pareto fronts have different \(d_2\) values, we adopt \(d_2\) to measure convergence. In turn, \(d_1\) measures the distance of a solution to its nearest reference vector and is therefore used to measure diversity.
Since the normal vectors are relatively uniform, using the perpendicular distance from a solution to the nearest normal vector pushes the solutions toward the directions of the normal vectors, which results in a set of uniformly distributed solutions. In addition, \(d_1\) is free of the influence of the PF shape, as illustrated in Fig. 1c. We then select the individual with the smallest d from each cluster. Since B has a smaller d than A, B is selected from the cluster formed by A and B. Consequently, B, C, D, and E are selected from the seven individuals, as shown in Fig. 2b. This environmental selection scheme thus selects the best-converged individuals along uniformly distributed convergence directions. Moreover, since normal vectors serve as both the mapping directions and the reference vectors, the method can adapt to various PF shapes. Other clustering approaches, such as k-means, could also be used to cluster the mapped solutions.

Proposed algorithm

The details of NRV-MOEA are presented in this section. NRV-MOEA adopts an elite preservation mechanism, in which both the parent and offspring populations participate in the environmental selection. Individuals are selected according to the dominance relationship and the normal vectors passing through the cluster centers of the mapped individuals on the hyperplane. Finally, the selected individuals compete with the archive individuals to further improve the population diversity. In the following, we present the framework and the main components of the proposed algorithm.

The framework

The main loop of NRV-MOEA is presented in Algorithm 1. As in most MOEAs, an initial parent population P of size N is first generated at random according to the constraints on the objective functions and decision variables, and P is also added to an archive Arc. Then, simulated binary crossover [33] and polynomial mutation [34] are used to produce an offspring population Q of size N. Following the elite preservation mechanism, the parent and offspring populations are combined into a population \(P_u = P \cup Q\) of size 2N, from which N elite individuals are selected by environmental selection at each iteration. To this end, the fast non-dominated sorting approach [35] first sorts the 2N individuals into non-dominated fronts \(F_1, F_2, \ldots\). Fronts are then added one by one until the size of \(F^u = F_1 \cup \cdots \cup F_l\) equals or, for the first time, exceeds N, where \(F_l\) is the last accepted non-dominated front. If the size of \(F^u\) is exactly N, all individuals in \(F^u\) form the population of the current iteration. If it is larger than N, N individuals must be selected from \(F^u\). Because the objectives can have different ranges, the individuals are first normalized by \(b_{iN} = (b_i - b_{i\min})/s_i\), where \(b_i\) is the ith objective value of individual b in \(F^u\), \(b_{i\min}\) and \(b_{i\max}\) are the minimum and maximum values of the ith objective in \(F^u\), and \(s_i = b_{i\max} - b_{i\min}\) is recomputed only once every \(f_r \cdot MaxG\) generations and kept fixed in between (MaxG is the maximum number of generations, and \(f_r\) is set to 0.1 in this work).
Then, all the normalized individuals are mapped onto the hyperplane using Algorithm 2, and the mapped individuals are clustered into N clusters by hierarchical clustering with Ward's linkage criterion [36] measured by the Euclidean distance. Afterward, the environmental selection in Algorithm 3 selects N individuals from \(F^u\). These N individuals are compared with the archive individuals through a pruning mechanism to screen out the final N individuals, which become the parents of the next generation. This reproduction and selection procedure repeats until a termination condition is met, and the population P in the last generation is the final solution set.
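The normalization step with a periodically refreshed scale can be sketched as follows. This is a minimal sketch under our own reading of the text: the minimum \(b_{i\min}\) is taken from the current \(F^u\) at every generation, while the scale s (one value per objective, equal to \(b_{i\max} - b_{i\min}\)) is recomputed only when the generation counter is a multiple of \(f_r \cdot MaxG\). The class name `PeriodicNormalizer` and the guard against a zero range are assumptions, not part of the paper.

```python
import numpy as np


class PeriodicNormalizer:
    """Hypothetical helper: min-max normalization with a scale refreshed every f_r * MaxG generations."""

    def __init__(self, max_gen, f_r=0.1):
        self.period = max(1, int(round(f_r * max_gen)))
        self.scale = None  # s, one entry per objective

    def __call__(self, objs, gen):
        objs = np.asarray(objs, dtype=float)
        b_min = objs.min(axis=0)  # minimum of each objective in the current F^u
        if self.scale is None or gen % self.period == 0:
            s = objs.max(axis=0) - b_min
            self.scale = np.where(s > 0, s, 1.0)  # assumed guard for a degenerate objective
        return (objs - b_min) / self.scale
```

Keeping s fixed within each period means that the hyperplane fitted to the normalized extremes changes only every \(f_r \cdot MaxG\) generations rather than at every iteration.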

Mapping

In NRV-MOEA, each individual is mapped onto the hyperplane along the normal vector of the hyperplane that passes through the individual; the mapped point is the intersection of this normal line and the hyperplane. The pseudocode of the mapping algorithm is given in Algorithm 2. The hyperplane is determined by the extreme individuals, i.e., those with the maximum value of each objective. Suppose the equation of the hyperplane is \(A_1 x_1 + A_2 x_2 + \cdots + A_m x_m + D = 0\), where the parameters \((A_1, A_2, \ldots, A_m, D)\) are determined by the extreme solutions. The coordinates of the mapped point \((p_1, p_2, \ldots, p_m)\) of an individual \((b_1, b_2, \ldots, b_m)\) are then calculated as follows:

\[ p_i = b_i + A_i t, \quad i = 1, 2, \ldots, m, \tag{1} \]

where \(t = \frac{-(A_1 b_1 + A_2 b_2 + \cdots + A_m b_m + D)}{A_1^2 + A_2^2 + \cdots + A_m^2}\).
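A NumPy sketch of the two steps of the mapping, fitting the hyperplane through the extreme individuals and applying Eq. (1) row-wise, is given below. Fitting the hyperplane by solving \(E\mathbf{a} = \mathbf{1}\) for the matrix E of extreme points (so that \(A = \mathbf{a}\) and \(D = -1\)), and falling back to \(f_1 + \cdots + f_m = 1\) when the extremes are linearly dependent, are assumptions of this sketch; the text does not specify how the parameters are derived from the extreme solutions. The function names are hypothetical.

```python
import numpy as np


def hyperplane_from_extremes(objs):
    """Return (A, D) of the hyperplane A.x + D = 0 through the extreme individuals.

    Row i of `extremes` is the individual with the largest value of objective i.
    Solving extremes @ a = 1 gives a hyperplane a.x - 1 = 0 through all of them.
    """
    objs = np.asarray(objs, dtype=float)
    m = objs.shape[1]
    extremes = objs[np.argmax(objs, axis=0)]
    try:
        a = np.linalg.solve(extremes, np.ones(m))
    except np.linalg.LinAlgError:
        a = np.ones(m)  # assumed fallback: f_1 + ... + f_m = 1
    return a, -1.0


def map_to_hyperplane(objs, A, D):
    """Eq. (1): p = b + t * A with t = -(A.b + D) / ||A||^2, applied to every row b."""
    objs = np.asarray(objs, dtype=float)
    t = -(objs @ A + D) / np.dot(A, A)
    return objs + t[:, None] * A
```

For the unit extremes (1, 0, 0), (0, 1, 0), and (0, 0, 1), the fitted hyperplane is \(f_1 + f_2 + f_3 = 1\), and the individual (0.2, 0.2, 0.2) is mapped to (1/3, 1/3, 1/3).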

Environmental selection using clustering and normal vectors passing through cluster centers

To perform environmental selection, hierarchical clustering with Ward's linkage criterion measured by the Euclidean distance is applied to the mapped individuals on the hyperplane. The center of each cluster is obtained by averaging each objective over all the individuals in the cluster:

\[ C = \frac{1}{n} \sum_{k=1}^{n} mP_k, \tag{2} \]

where mP is a cluster of n individuals, \(mP_k\) is the kth individual in cluster mP, and C is the cluster center of mP. Since this clustering method divides the mapped individuals evenly into N groups, the N cluster centers are evenly spread among the mapped individuals on the hyperplane.
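For illustration, the following naive implementation of agglomerative clustering with Ward's criterion also returns the cluster centers of Eq. (2). It is a hypothetical O(n³) sketch meant only to show the merge rule (merge the pair whose union increases the within-cluster sum of squares the least); in practice a library routine such as SciPy's `scipy.cluster.hierarchy.linkage(..., method="ward")` followed by `fcluster(..., criterion="maxclust")` would normally be used.

```python
import numpy as np


def ward_clusters(points, k):
    """Naive Ward agglomerative clustering of `points` into k clusters (sketch only).

    Merges the pair of clusters minimizing n_a * n_b / (n_a + n_b) * ||c_a - c_b||^2,
    i.e. the increase in within-cluster sum of squares, until k clusters remain.
    Returns the clusters as index lists and their centers computed by Eq. (2).
    """
    points = np.asarray(points, dtype=float)
    clusters = [[i] for i in range(len(points))]
    centers = [points[i].copy() for i in range(len(points))]
    while len(clusters) > k:
        best, pair = np.inf, None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                na, nb = len(clusters[a]), len(clusters[b])
                cost = na * nb / (na + nb) * np.sum((centers[a] - centers[b]) ** 2)
                if cost < best:
                    best, pair = cost, (a, b)
        a, b = pair
        clusters[a] += clusters[b]
        centers[a] = points[clusters[a]].mean(axis=0)  # Eq. (2): mean of the members
        del clusters[b], centers[b]  # b > a, so index a is unaffected
    return clusters, np.array(centers)
```

On five points spread along \(f_1 + f_2 = 1\), with two tight pairs near the ends and one point in the middle, asking for three clusters merges each pair and leaves the middle point alone.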

Afterward, we calculate the normal vector passing through each cluster center. As illustrated in Fig. 2b, \(d_1\) is the perpendicular distance from an individual to the normal vector passing through its cluster center, and \(d_2\) is the projection distance of the individual on the hyperplane, i.e., its distance to the hyperplane. More specifically, \(d_1\) measures the diversity of an individual: the smaller \(d_1\) is, the closer the individual is to the normal vector passing through the cluster center, indicating better diversity. \(d_2\) reflects the convergence of the individual: the larger \(d_2\) is, the farther the individual is from the hyperplane, indicating better convergence. Note that if the individual lies on the other side of the hyperplane, i.e., the side closer to the nadir point, \(d_2\) is set to be negative. We then calculate \(d = d_1 - d_2\) to measure convergence and diversity simultaneously, where a smaller d indicates better fitness. Since the individuals are normalized before environmental selection, \(d_1\) and \(d_2\) have comparable ranges. Finally, we select the individual with the minimum d in each cluster to obtain N individuals. The pseudocode of the environmental selection algorithm is given in Algorithm 3.
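The fitness \(d = d_1 - d_2\) and the per-cluster choice can be sketched in NumPy as below. The sketch assumes that the normalized objectives place the ideal point at the origin, that the hyperplane is given as \(A \cdot x + D = 0\) with \(D \ne 0\), and that clusters are passed as index lists together with centers already lying on the hyperplane (for example, from Eq. (2)). The function name is hypothetical.

```python
import numpy as np


def select_by_normal_vectors(objs, A, D, clusters, centers):
    """Pick one individual per cluster by minimizing d = d1 - d2 (sketch).

    objs     : (n, m) normalized objective vectors
    A, D     : hyperplane A.x + D = 0 with D != 0; ideal point assumed at the origin
    clusters : list of index lists, one per cluster
    centers  : (k, m) cluster centers on the hyperplane
    """
    objs = np.asarray(objs, dtype=float)
    norm_A = np.linalg.norm(A)
    u = A / norm_A  # unit normal of the hyperplane
    # d2: signed distance to the hyperplane, positive on the side of the origin (ideal point)
    d2 = np.sign(D) * (objs @ A + D) / norm_A
    chosen = []
    for idx, c in zip(clusters, centers):
        v = objs[idx] - c  # vectors from the cluster center to each member
        # d1: distance from each member to the normal line through the center
        d1 = np.linalg.norm(v - np.outer(v @ u, u), axis=1)
        d = d1 - d2[idx]
        chosen.append(idx[int(np.argmin(d))])
    return chosen
```

For example, with the line \(f_1 + f_2 = 1\) and a cluster centered at (0.5, 0.5), the member (0.3, 0.3) lies on the normal line (\(d_1 = 0\)) on the ideal-point side and is preferred over (0.2, 0.45), whereas a member at (0.6, 0.6) lies beyond the hyperplane, receives a negative \(d_2\), and loses to (0.2, 0.45).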

Archive management mechanism

The individuals \(P_c\) chosen in the environmental selection procedure are further compared with the solutions in the archive Arc. In this work, we adopt the \(\epsilon \)-indicator-based archive management used in [22]. Specifically, we first combine \(P_c\) with Arc and then select the non-dominated solutions in the combined set. If the number of non-dominated solutions is larger than the population size N, the \(\epsilon \)-indicator is used to delete the solutions with lower \(\epsilon \)-based fitness values. Otherwise, only the non-dominated solutions are kept in the archive.
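The archive update can be sketched with the additive \(\epsilon\)-indicator and an IBEA-style fitness, which is one common way to realize \(\epsilon\)-based fitness. We have not verified that [22] uses exactly this form; the scaling constant `kappa = 0.05` and the function names are assumptions of this sketch. Both inputs are (k, m) arrays of objective vectors (minimization).

```python
import numpy as np


def eps_plus(x, y):
    """Additive epsilon indicator I(x, y): smallest shift of x that weakly dominates y."""
    return np.max(x - y)


def update_archive(pc, arc, n, kappa=0.05):
    """Merge P_c with Arc, keep non-dominated members, prune to n by eps-based fitness.

    Fitness (IBEA style, assumed): F(x) = sum_{y != x} -exp(-I(y, x) / (c * kappa)),
    with c = max |I|. The member with the lowest fitness is removed one at a time.
    """
    pop = np.vstack([pc, arc]).astype(float)
    keep = [i for i, a in enumerate(pop)
            if not any(np.all(b <= a) and np.any(b < a) for b in pop)]
    pop = pop[keep]
    if len(pop) <= n:
        return pop
    k = len(pop)
    I = np.array([[eps_plus(pop[i], pop[j]) for j in range(k)] for i in range(k)])
    c = np.max(np.abs(I)) or 1.0
    contrib = np.exp(-I / (c * kappa))  # contrib[i, j]: penalty that i imposes on j
    np.fill_diagonal(contrib, 0.0)
    fitness = -contrib.sum(axis=0)
    alive = list(range(k))
    while len(alive) > n:
        worst = min(alive, key=lambda j: fitness[j])
        alive.remove(worst)
        fitness += contrib[worst]  # remove the deleted member's penalty on the others
    return pop[alive]
```

In this form, a member that is nearly \(\epsilon\)-dominated by a close neighbor receives a very low fitness and is deleted first, while isolated extreme solutions are retained.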

Computational complexity of NRV-MOEA

The worst-case computational complexity of the proposed NRV-MOEA in one iteration is analyzed as follows. Let m be the number of objectives and N be the population size. In Algorithm 1, the initialization takes O(mN). The reproduction procedure and fast non-dominated sorting need \(O(mN^2)\). The normalization of the population in line 9 requires O(mN). For mapping individuals onto the hyperplane, finding the extreme individuals (line 2 in Algorithm 2) takes O(mN), and mapping the non-dominated individuals onto the hyperplane using Eq. (1) (lines 4 to 7 in Algorithm 2) needs no more than O(mN). In line 11 of Algorithm 1, the hierarchical clustering needs \(O(N^2)\). In the environmental selection (line 12 in Algorithm 1), calculating the cluster centers by Eq. (2) takes \(O(N^2)\) (line 5 in Algorithm 3), calculating the fitness value d of every individual (lines 6 to 14 in Algorithm 3) requires no more than \(O(N^2)\), and choosing the best individual from every cluster takes O(N). Finally, in the archive management, fast non-dominated sorting needs \(O(mN^2)\), and deleting the most crowded individuals one by one takes \(O(N^2)\). In summary, the worst-case computational complexity of NRV-MOEA in one iteration is \(O(mN^2)\).

Empirical results

In this section, the experimental study of NRV-MOEA is described. To verify the performance of NRV-MOEA, experiments were conducted on 74 test instances with a wide range of objective numbers and various PF shapes. All experiments were implemented in PlatEMO [37] using MATLAB R2022a and run on a PC with an Intel Core i7-12700H CPU (2.70 GHz) and 32.00 GB of RAM.

Benchmark problems and compared algorithms

The adopted benchmark problems include the widely used scalable benchmark problems DTLZ1–DTLZ4 [38], WFG1–WFG9 [39], and IMOP1–IMOP8 [40], four test problems of the MaF [41] test suite, and the recently proposed scalable benchmark problems DPF1–DPF5 [42].

NRV-MOEA is compared with five state-of-the-art MOEAs, namely, MOEA/D-UR [25], MaOEA/SRV [27], VaEA [43], KnEA [44], and CA-MOEA [26]. Among them, CA-MOEA and MaOEA/SRV are algorithms based on clustering, while MOEA/D-UR is based on reference vector adaptation. KnEA is based on knee solutions, in which a hyperplane is constructed for identifying knee solutions. VaEA is a state-of-the-art MOEA for dealing with both regular and irregular problems.

Performance indicators

The inverted generational distance plus (IGD\(^{+}\)) [45] and the hypervolume (HV) [46] are adopted as performance indicators in this work. The number of reference points sampled from the true PFs is 100,000, as recommended in [47]. A lower IGD\(^{+}\) value indicates better performance. When calculating HV, the solution set obtained by each algorithm is first normalized, and the reference point is set to \((1.1,1.1,\ldots ,1.1)\), as in [37]. A higher HV value indicates better performance.
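For reference, IGD\(^{+}\) for minimization problems averages, over the reference points z sampled from the true PF, the distance \(d^{+}(z, a) = \sqrt{\sum_i \max(a_i - z_i, 0)^2}\) from z to the closest obtained solution a. Unlike plain IGD, it ignores the objectives in which a solution is already better than the reference point. A minimal NumPy sketch (the function name is hypothetical):

```python
import numpy as np


def igd_plus(solutions, reference):
    """IGD+ (minimization): mean over reference points z of min over solutions a of d+(z, a)."""
    A = np.asarray(solutions, dtype=float)[None, :, :]  # (1, |A|, m)
    Z = np.asarray(reference, dtype=float)[:, None, :]  # (|Z|, 1, m)
    d_plus = np.sqrt((np.maximum(A - Z, 0.0) ** 2).sum(axis=2))  # (|Z|, |A|)
    return float(d_plus.min(axis=1).mean())
```

With 100,000 reference points, the broadcast array has \(|Z| \times |A| \times m\) entries, so in practice the reference set would be processed in chunks.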

Experimental settings

Following common practice, the population size is set according to \(N = C_{H + m - 1}^{m - 1}\) [9], where H specifies the granularity (resolution) of the reference vectors generated by Das and Dennis's systematic approach [48], and m is the number of objectives. The population sizes for the 2-, 3-, 5-, 10-, and 15-objective test instances are set to 40, 45, 50, 65, and 120, respectively. The maximum number of fitness evaluations for an m-objective test instance is set to \(m \times 10{,}000\).
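The population-size formula and the underlying Das and Dennis lattice can be sketched as follows (function names are hypothetical). The single-layer count reproduces 40, 45, and 120 with H = 39, 8, and 2 for m = 2, 3, and 15. The sizes 50 and 65 are not single-layer counts; two-layer designs give, for example, 35 + 15 = 50 for m = 5 (H = 3 and 2) and 55 + 10 = 65 for m = 10 (H = 2 and 1), but the text does not state how these sizes were obtained.

```python
from itertools import combinations
from math import comb


def das_dennis(m, H):
    """All vectors with components in {0, 1/H, ..., 1} summing to 1 (stars and bars)."""
    vectors = []
    for bars in combinations(range(H + m - 1), m - 1):
        # gaps between consecutive bar positions give the integer parts k_1..k_m (sum = H)
        edges = (-1,) + bars + (H + m - 1,)
        vectors.append([(edges[i + 1] - edges[i] - 1) / H for i in range(m)])
    return vectors


def population_size(m, H):
    """N = C(H + m - 1, m - 1)."""
    return comb(H + m - 1, m - 1)
```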

In the simulated binary crossover and polynomial mutation, the probability of crossover is set to 1 and the probability of mutation is set to 1/V (V is the number of decision variables). The distribution index of both is set to 20. To make a fair comparison, 20 independent runs are performed on each instance for all compared algorithms.

Results on irregular test instances

We first test the performance of NRV-MOEA and the five algorithms under comparison using the IGD\(^{+}\) indicator. Problems with irregular PFs and regular PFs are studied separately. The mean value and standard deviation (in parentheses) on each test instance are presented, and the best result among the compared algorithms is highlighted in bold and shaded. The Wilcoxon rank-sum test at a significance level of 0.05 is used to analyze the results, where the symbol ‘+’ means that a competing algorithm performs significantly better than NRV-MOEA, ‘−’ means that it performs significantly worse than NRV-MOEA, and ‘\(\approx \)’ means that the results obtained by the two algorithms are statistically similar.
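The ‘+’/‘−’/‘\(\approx\)’ labeling can be sketched as below. The rank-sum p-value uses the two-sided normal approximation without tie correction (SciPy's `scipy.stats.ranksums` is a comparable library routine), and deciding the direction by comparing means is our assumption. Function names are hypothetical.

```python
import math


def rank_sum_p(x, y):
    """Two-sided Wilcoxon rank-sum p-value (normal approximation, no tie correction)."""
    pooled = sorted((v, k) for k, v in enumerate(list(x) + list(y)))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for t in range(i, j + 1):  # tied values share the average rank
            ranks[pooled[t][1]] = (i + j) / 2 + 1
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))


def compare(competitor, nrv, alpha=0.05, minimize=True):
    """Label a competitor against NRV-MOEA: '+' better, '-' worse, '≈' no significant difference."""
    if rank_sum_p(competitor, nrv) >= alpha:
        return "≈"
    better = sum(competitor) / len(competitor) < sum(nrv) / len(nrv)
    if not minimize:  # e.g. HV, where larger is better
        better = not better
    return "+" if better else "-"
```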

It can be observed from Table 1 that, in general, NRV-MOEA achieves the best performance on irregular problems in terms of IGD\(^{+}\), followed by MOEA/D-UR and MaOEA/SRV. NRV-MOEA achieves the best performance on 21 out of 44 test instances. Among these irregular test instances, the true PFs of DPF1 to DPF5 and MaF4 are hard to reach, whereas those of MaF1, MaF2, and WFG1 to WFG3 are easy for a multi-objective evolutionary algorithm to find. NRV-MOEA is outperformed by the other competing algorithms on MaF4, suggesting that the normal vectors may slow down its convergence. On easily converged problems such as MaF1, NRV-MOEA is very competitive, suggesting that the solution set it finds is well diversified. Table 2 presents the HV values of the solutions obtained by NRV-MOEA and the other five algorithms. NRV-MOEA is slightly outperformed by MaOEA/SRV but performs better than the other four algorithms in terms of HV. Specifically, NRV-MOEA wins over MaOEA/SRV on 12 out of 44 test instances, while MaOEA/SRV wins over NRV-MOEA on 17. The inconsistency between the IGD\(^{+}\) and HV results could be attributed to the reference point \((1.1,1.1,\ldots ,1.1)\), which may not be suitable for calculating HV on all irregular problems.

Figures 3 and 4 further plot the solution sets with the median IGD\(^{+}\) values over 20 independent runs obtained by NRV-MOEA, CA-MOEA, MOEA/D-UR, and MaOEA/SRV on DPF3, MaF7, IMOP5, and IMOP8. NRV-MOEA reaches the true PFs on all four test instances, and its solution sets are the best distributed.

Results on regular test instances

Table 3 presents the IGD\(^+\) results of CA-MOEA, MOEA/D-UR, MaOEA/SRV, VaEA, KnEA, and NRV-MOEA on the 5-, 10-, and 15-objective regular test instances. It can be observed from Table 3 that NRV-MOEA achieves the best performance on 21 out of 30 test instances. Results show that NRV-MOEA performs the best on all test problems except DTLZ1 and DTLZ3, on which it is outperformed by MOEA/D-UR and MaOEA/SRV. As presented in Fig. 5, NRV-MOEA approximately covers the whole PFs. By contrast, CA-MOEA is not able to approach the true PFs on DTLZ2 and WFG9, while MOEA/D-UR and MaOEA/SRV approach the true PFs but obtain only part of them, especially MOEA/D-UR. The reason could be that the frequency of reference vector adaptation in MOEA/D-UR is so low that some promising solutions may be lost between two adaptations. The HV results of NRV-MOEA in Table 4 are consistent with its IGD\(^+\) results, mainly because the reference point (\(1.1,1.1,\ldots ,1.1\)) used for calculating HV has been shown to be reasonable for regular test problems.

We believe there are two reasons why our algorithm also performs very well on problems with regular PFs. First, our algorithm preserves a set of well-distributed solutions through the hyperplane-based environmental selection, which helps to outline the overall PF. To compensate for the slower convergence caused by the normal vectors, we use the \(\epsilon \) indicator in archive management. Since the \(\epsilon \) indicator focuses more on convergence, as studied in Two_arch2 [49], it is used to select a set of well-converged solutions into the archive. The solutions in both the current population and the archive are then used to generate new offspring, so that the well-converged solutions in the archive also take part in offspring generation, with the expectation that both well-converged and well-distributed solutions are generated during the search. In this way, NRV-MOEA can strike a good balance between convergence and diversity. Second, we update the scales of the objectives used for normalizing solutions only at a fixed frequency, which prevents the hyperplane from changing rapidly and thereby keeps the convergence from slowing down to some extent.
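
As an illustration of \(\epsilon \)-indicator-based archive management, the sketch below uses the additive \(\epsilon \)-indicator \(I_{\epsilon +}(a,b)=\max _i (a_i-b_i)\) with an IBEA-style fitness, similar in spirit to the convergence archive of Two_arch2 [49], to truncate an archive to a given size. The exact archive-update rule of NRV-MOEA is not reproduced here; the function names, the scaling factor `kappa = 0.05`, and the one-at-a-time removal scheme are assumptions for illustration.

```python
import math

def eps_plus(a, b):
    """Additive epsilon indicator between two objective vectors (minimization):
    the smallest shift eps such that a - eps weakly dominates b."""
    return max(x - y for x, y in zip(a, b))

def truncate_archive(F, size, kappa=0.05):
    """Return the indices of `size` members of F kept after repeatedly removing
    the member with the worst IBEA-style epsilon fitness (assumed scheme)."""
    n = len(F)
    I = [[eps_plus(F[i], F[j]) for j in range(n)] for i in range(n)]
    c = max(abs(v) for row in I for v in row) or 1.0  # guard: all points identical
    keep = list(range(n))
    while len(keep) > size:
        # fitness(x) = sum over y != x of -exp(-I(y, x) / (c * kappa));
        # a member that others dominate by a wide margin gets a very low fitness.
        fitness = [sum(-math.exp(-I[y][x] / (c * kappa)) for y in keep if y != x)
                   for x in keep]
        keep.pop(fitness.index(min(fitness)))
    return keep
```

For instance, in the minimized set \(\{(0,1),(1,0),(0.5,0.5),(1,1)\}\), the dominated member \((1,1)\) receives by far the lowest fitness and is removed first. Normalizing by the largest indicator magnitude `c` keeps the exponent bounded, so the exponential cannot overflow.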

From the above experimental results, it can be concluded that the proposed NRV-MOEA can handle MOPs and MaOPs with a wide range of objective numbers, from two or three objectives up to 15 objectives. Moreover, NRV-MOEA performs well on MOPs and MaOPs with various kinds of PFs, whether regular or irregular.

Average performance indicator in terms of IGD\(^+\) and HV

The Friedman test implemented in the software tool KEEL [50] is used to rank NRV-MOEA and the five algorithms under comparison over all regular and irregular test instances. Figure 6 plots the resulting average rankings in terms of IGD\(^+\) and HV. NRV-MOEA ranks best in terms of both IGD\(^+\) and HV, followed by MaOEA/SRV and MOEA/D-UR, which is consistent with the results in Tables 1, 2, 3, and 4.
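
For readers who want to reproduce such a ranking outside KEEL, the sketch below computes Friedman average ranks (rank 1 = best, tied values share the mean rank) and the Friedman statistic \(\chi ^2_F = \frac{12N}{k(k+1)}\left[ \sum _j R_j^2 - \frac{k(k+1)^2}{4}\right] \) for \(N\) test instances and \(k\) algorithms with average ranks \(R_j\). It is a plain re-implementation of the textbook test, not KEEL's code; the function names and the input layout (one row per test instance, one column per algorithm) are assumptions.

```python
def average_ranks(results, higher_is_better=False):
    """results[i][j] is the indicator value of algorithm j on test instance i.
    Returns each algorithm's average rank (1 = best); ties share the mean rank."""
    k = len(results[0])
    totals = [0.0] * k
    for row in results:
        order = sorted(range(k), key=lambda j: -row[j] if higher_is_better else row[j])
        pos = 0
        while pos < k:
            end = pos
            while end + 1 < k and row[order[end + 1]] == row[order[pos]]:
                end += 1  # extend the block of tied values
            for q in range(pos, end + 1):
                totals[order[q]] += (pos + end) / 2 + 1  # 1-based mean rank of the tie block
            pos = end + 1
    return [t / len(results) for t in totals]

def friedman_statistic(avg_ranks, n_instances):
    """Friedman chi-square statistic for k algorithms over n_instances problems."""
    k = len(avg_ranks)
    return 12 * n_instances / (k * (k + 1)) * (
        sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4)
```

For IGD\(^+\) (lower is better) the default `higher_is_better=False` applies; for HV, pass `higher_is_better=True`. A full analysis would also compare the statistic against its null distribution, which this sketch omits.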

Optimization of the polyester fiber esterification process

Polyester fiber has high strength, high modulus, and low water absorption, and is therefore the most widely used in civil and industrial fabrics. The esterification process in polyester polymerization is a key step in polyester fiber production and consists of three main steps, namely esterification, pre-polymerization, and final polymerization. A diagram of the esterification process is shown in Fig. 7.

During the esterification process [51], the reactor pressure, the temperature, and the residence time of the polymeric reaction mass inside the reactor affect three performance indexes of polyester fibers, namely the average molecular mass (\({M_n}\)), the esterification rate (\({E_s}\)), and the percentage of diethylene glycol (\({W_t}\)). The higher \({M_n}\) and \({E_s}\) and the lower \({W_t}\), the better the properties of the polyester fibers. A detailed mechanism model can be found in [51].

Therefore, the optimization of the esterification process is formulated as a three-objective minimization problem, i.e., the minimization of \(1/{M_n}\), \(1/{E_s}\), and \({W_t}\). Here, we compare the performance of NRV-MOEA with MOEA/D-UR and MaOEA/SRV. For all algorithms, the population size is set to 100 and the maximum number of iterations to 300, as recommended in [51]. The simulation results are presented in Fig. 8, from which it can be seen that NRV-MOEA best recovers the distribution of the Pareto surface, while the non-dominated solution sets obtained by MOEA/D-UR and MaOEA/SRV lack spread and uniformity.
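
The formulation above amounts to a small objective mapping plus a Pareto-dominance check. The sketch assumes that the mechanism model of [51] has already produced \({M_n}\), \({E_s}\), and \({W_t}\) for a candidate set of operating conditions; the function names are hypothetical, and the numbers in the example are illustrative values, not process data.

```python
def esterification_objectives(Mn, Es, Wt):
    """Map the three performance indexes to objectives that are all minimized:
    maximizing Mn and Es becomes minimizing 1/Mn and 1/Es."""
    if Mn <= 0 or Es <= 0:
        raise ValueError("Mn and Es must be positive")
    return (1.0 / Mn, 1.0 / Es, Wt)

def dominates(a, b):
    """True if objective vector a Pareto-dominates b under minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

# Illustrative values only: a higher Mn and a lower Wt at equal Es dominate.
a = esterification_objectives(Mn=2.0, Es=0.5, Wt=0.01)
b = esterification_objectives(Mn=1.0, Es=0.5, Wt=0.02)
print(dominates(a, b), dominates(b, a))  # True False
```

Taking reciprocals keeps all three objectives in minimization form, so any standard minimization-based MOEA, including the three compared here, can be applied without modification.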

The results in terms of HV [52] and IGD\(^+\) are listed in Table 5. Since HV values may depend heavily on the reference point [53], we also calculate IGD\(^+\) values for a fair comparison. Here, the reference points for calculating IGD\(^+\) are the non-dominated individuals among all final individuals obtained by the three algorithms over 20 independent runs. Table 5 presents the mean and standard deviation (in parentheses) of the IGD\(^+\) and HV values over 20 independent runs. The performance of these algorithms is further compared using the Wilcoxon rank-sum test at a significance level of 0.05. The results indicate that NRV-MOEA performs significantly better than the compared algorithms in optimizing the polyester fiber esterification process.
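
The IGD\(^+\) computation described above can be sketched as follows: the reference points are the non-dominated members of the union of all final solution sets, and IGD\(^+\) averages, over the reference points \(z\), the smallest dominance-aware distance \(d^+(z,a)=\sqrt{\sum _i \max (a_i-z_i,0)^2}\) from \(z\) to the solution set [45]. The function names and the small two-objective data set are hypothetical, and objectives are assumed to be minimized and comparably scaled.

```python
import math

def nondominated(points):
    """Return the points not Pareto-dominated by any other point (minimization)."""
    def dom(a, b):
        return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))
    return [p for p in points if not any(dom(q, p) for q in points)]

def igd_plus(solutions, reference):
    """Mean over reference points z of min over solutions a of
    d+(z, a) = sqrt(sum_i max(a_i - z_i, 0)^2)."""
    total = 0.0
    for z in reference:
        total += min(math.sqrt(sum(max(a_i - z_i, 0.0) ** 2 for a_i, z_i in zip(a, z)))
                     for a in solutions)
    return total / len(reference)

# Hypothetical final sets of three algorithms (one run each, two objectives for brevity).
runs = [[(0.0, 1.0), (0.6, 0.6)], [(1.0, 0.0), (0.5, 0.5)], [(0.7, 0.7)]]
reference = nondominated([p for run in runs for p in run])
scores = [igd_plus(run, reference) for run in runs]
```

Because \(d^+\) ignores objectives in which a solution is already better than the reference point, a solution set that dominates part of the reference set is not penalized for it, unlike with plain IGD.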

Conclusion

In this paper, a novel evolutionary algorithm named NRV-MOEA is proposed for a wide range of multi-/many-objective optimization problems. The main idea is to cluster the non-dominated individuals mapped onto a hyperplane, generate normal vectors passing through the cluster centers, and then use these normal vectors to select individuals with good convergence and diversity from each cluster. NRV-MOEA is compared with five selected algorithms on 74 test instances with 2–15 objectives and various PF shapes. The results on all test problems demonstrate that NRV-MOEA obtains competitive results on both MOPs and MaOPs with regular or irregular PFs.
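
To make the main idea more concrete, the sketch below maps normalized objective vectors onto the hyperplane \(\sum _i f_i = 1\), takes the normal line through each cluster center, and keeps one member per cluster. Several details are assumptions rather than the paper's definitions: the hyperplane is fixed to the unit simplex, cluster labels are supplied by any clustering routine (for example, Ward's method [36]), and the within-cluster criterion is a PBI-style score \(d_1 + \theta d_2\) with a hypothetical \(\theta = 5\).

```python
import math

def project_to_hyperplane(f):
    """Orthogonal projection of a normalized objective vector onto sum(f) = 1."""
    shift = (sum(f) - 1.0) / len(f)
    return [x - shift for x in f]

def select_per_cluster(F, labels, theta=5.0):
    """For each cluster, pick the member with the smallest PBI-style score
    d1 + theta * d2 (assumed criterion), where d1 is the signed distance along
    the normal line through the cluster center (convergence) and d2 is the
    distance from that line (diversity)."""
    M = len(F[0])
    n = [1.0 / math.sqrt(M)] * M  # unit normal of the hyperplane sum(f) = 1
    chosen = []
    for k in sorted(set(labels)):
        idx = [i for i, lab in enumerate(labels) if lab == k]
        P = [project_to_hyperplane(F[i]) for i in idx]
        c = [sum(col) / len(P) for col in zip(*P)]  # center lies on the hyperplane
        best, best_score = None, math.inf
        for i in idx:
            d = [x - ci for x, ci in zip(F[i], c)]
            d1 = sum(di * ni for di, ni in zip(d, n))  # along the normal
            d2 = math.sqrt(sum((di - d1 * ni) ** 2 for di, ni in zip(d, n)))  # off the normal
            score = d1 + theta * d2
            if score < best_score:
                best, best_score = i, score
        chosen.append(best)
    return chosen
```

For instance, with clusters \(\{(0.2,0.8),(0.3,0.9)\}\) and \(\{(0.9,0.1),(0.8,0.3)\}\), the sketch keeps \((0.2,0.8)\), which lies on the hyperplane at its cluster center, and \((0.9,0.1)\), which is as far from its cluster's normal line as \((0.8,0.3)\) but has the smaller \(d_1\).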

NRV-MOEA is slightly less competitive on multi-modal problems such as DTLZ1 and DTLZ3, which can be attributed to the hyperplane-based approach possibly emphasizing diversity over convergence in the environmental selection. Our future work will therefore focus on designing more competitive hyperplane-assisted environmental selection strategies that better balance diversity and convergence.

Data Availability

The data that support the findings of this study are available from the corresponding author, Dr. Qiqi Liu, upon reasonable request.

References

Zhou A, Qu B-Y, Li H, Zhao S-Z, Suganthan PN, Zhang Q (2011) Multiobjective evolutionary algorithms: a survey of the state of the art. Swarm Evol Comput 1:32–49

Zhao Z, Liu S, Zhou M, Abusorrah A (2021) Dual-objective mixed integer linear program and memetic algorithm for an industrial group scheduling problem. IEEE/CAA J Autom Sin 8:1199–1209

von Lücken C, Barán B, Brizuela C (2014) A survey on multi-objective evolutionary algorithms for many-objective problems. Comput Optim Appl 58:707–756

Hua Y, Liu Q, Hao K, Jin Y (2021) A survey of evolutionary algorithms for multi-objective optimization problems with irregular Pareto fronts. IEEE/CAA J Autom Sin 8:303–318

Li B, Li J, Tang K, Yao X (2015) Many-objective evolutionary algorithms: a survey. ACM Comput Surv 48:1–35

Ming F, Gong W, Wang L (2022) A two-stage evolutionary algorithm with balanced convergence and diversity for many-objective optimization. IEEE Trans Syst Man Cybern Syst 52:6222–6234

Chen L, Liu H-L, Tan KC, Cheung Y-M, Wang Y (2019) Evolutionary many-objective algorithm using decomposition-based dominance relationship. IEEE Trans Cybern 49:4129–4139

Wang H, Yao X (2014) Corner sort for Pareto-based many-objective optimization. IEEE Trans Cybern 44:92–102

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11:712–731

Deb K, Jain H (2014) An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, Part I: solving problems with box constraints. IEEE Trans Evol Comput 18:577–601

Cheng R, Jin Y, Olhofer M, Sendhoff B (2016) A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans Evol Comput 20:773–791

Jiang S, He X, Zhou Y (2019) Many-objective evolutionary algorithm based on adaptive weighted decomposition. Appl Soft Comput 84:105731

Li J, Chen G, Li M, Chen H (2020) An enhanced-indicator based many-objective evolutionary algorithm with adaptive reference point. Swarm Evol Comput 55:100669

Tang W, Liu H-L, Chen L, Tan KC, Cheung YM (2020) Fast hypervolume approximation scheme based on a segmentation strategy. Inf Sci 509:320–342

Li K, Wang R, Zhang T, Ishibuchi H (2018) Evolutionary many-objective optimization: a comparative study of the state-of-the-art. IEEE Access 6:26194–26214

Jain H, Deb K (2014) An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, Part II: handling constraints and extending to an adaptive approach. IEEE Trans Evol Comput 18:602–622

Li M, Yao X (2020) What weights work for you? Adapting weights for any Pareto front shape in decomposition-based evolutionary multiobjective optimisation. Evol Comput 28:227–253

Tian Y, Cheng R, Zhang X, Cheng F, Jin Y (2017) An indicator-based multiobjective evolutionary algorithm with reference point adaptation for better versatility. IEEE Trans Evol Comput 22:609–622

Zou J, Zhang Z, Zheng J, Yang S (2021) A many-objective evolutionary algorithm based on dominance and decomposition with reference point adaptation. Knowl-Based Syst 231:107392

Liu Q, Jin Y, Heiderich M, Rodemann T (2023) Coordinated adaptation of reference vectors and scalarizing functions in evolutionary many-objective optimization. IEEE Trans Syst Man Cybern Syst 53:763–775

Liu Y, Ishibuchi H, Masuyama N, Nojima Y (2019) Adapting reference vectors and scalarizing functions by growing neural gas to handle irregular Pareto fronts. IEEE Trans Evol Comput 24:439–453

Liu Q, Jin Y, Heiderich M, Rodemann T, Yu G (2020) An adaptive reference vector-guided evolutionary algorithm using growing neural gas for many-objective optimization of irregular problems. IEEE Trans Cybern 52:2698–2711

Hong R, Yao F, Liao T, Xing L, Cai Z, Hou F (2023) Growing neural gas assisted evolutionary many-objective optimization for handling irregular Pareto fronts. Swarm Evol Comput 78:101273

Zhou C, Dai G, Zhang C, Li X, Ma K (2018) Entropy based evolutionary algorithm with adaptive reference points for many-objective optimization problems. Inf Sci 465:232–247

de Farias LR, Araújo AF (2022) A decomposition-based many-objective evolutionary algorithm updating weights when required. Swarm Evol Comput 68:100980

Hua Y, Jin Y, Hao K (2019) A clustering-based adaptive evolutionary algorithm for multiobjective optimization with irregular Pareto fronts. IEEE Trans Cybern 49:2758–2770

Liu S, Lin Q, Wong K-C, Coello CAC, Li J, Ming Z, Zhang J (2020) A self-guided reference vector strategy for many-objective optimization. IEEE Trans Cybern 52:1164–1178

Liu S, Yu Q, Lin Q, Tan KC (2020) An adaptive clustering-based evolutionary algorithm for many-objective optimization problems. Inf Sci 537:261–283

Sato H, Nakagawa S, Miyakawa M, Takadama K (2016) Enhanced decomposition-based many-objective optimization using supplemental weight vectors. In: IEEE Congress on Evolutionary Computation (CEC) 2016, pp 1626–1633. https://doi.org/10.1109/CEC.2016.7743983

Wu M, Li K, Kwong S, Zhang Q (2020) Evolutionary many-objective optimization based on adversarial decomposition. IEEE Trans Cybern 50:753–764

Bi X, Wang C (2018) A many-objective evolutionary algorithm based on hyperplane projection and penalty distance selection. Nat Comput 17:877–899

Elarbi M, Bechikh S, Coello Coello CA, Makhlouf M, Said LB (2020) Approximating complex Pareto fronts with predefined normal-boundary intersection directions. IEEE Trans Evol Comput 24:809–823

Deb K, Agrawal RB (1995) Simulated binary crossover for continuous search space. Complex Syst 9:115–148

Deb K, Goyal M (1996) A combined genetic adaptive search (GeneAS) for engineering design. Comput Sci Inform 26:30–45

Long Q, Wu X, Wu C (2021) Non-dominated sorting methods for multi-objective optimization: review and numerical comparison. J Ind Manag Optim 17:1001–1023

Murtagh F, Legendre P (2014) Ward’s hierarchical agglomerative clustering method: which algorithms implement Ward’s criterion? J Classif 31:274–295

Tian Y, Cheng R, Zhang X, Jin Y (2017) PlatEMO: a MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput Intell Mag 12:73–87

Deb K, Thiele L, Laumanns M, Zitzler E (2005) Scalable test problems for evolutionary multiobjective optimization. Springer, London, pp 105–145

Huband S, Barone L, While L, Hingston P (2005) A scalable multi-objective test problem toolkit. In: Coello Coello CA, Hernández Aguirre A, Zitzler E (eds) Evolutionary multi-criterion optimization. Springer, Berlin, pp 280–295

Tian Y, Cheng R, Zhang X, Li M, Jin Y (2019) Diversity assessment of multi-objective evolutionary algorithms: performance metric and benchmark problems [research frontier]. IEEE Comput Intell Mag 14:61–74

Cheng R, Li M, Tian Y, Zhang X, Yang S (2017) A benchmark test suite for evolutionary many-objective optimization. Complex Intell Syst 3:67–81

Zhen L, Li M, Cheng R, Peng D, Yao X (2018) Multiobjective test problems with degenerate Pareto fronts 1–20

Xiang Y, Zhou Y, Li M, Chen Z (2017) A vector angle-based evolutionary algorithm for unconstrained many-objective optimization. IEEE Trans Evol Comput 21:131–152

Zhang X, Tian Y, Jin Y (2015) A knee point-driven evolutionary algorithm for many-objective optimization. IEEE Trans Evol Comput 19:761–776

Ishibuchi H, Masuda H, Tanigaki Y, Nojima Y (2015) Modified distance calculation in generational distance and inverted generational distance. In: International Conference on Evolutionary Multi-criterion Optimization, Springer, pp 110–125

While L, Hingston P, Barone L, Huband S (2006) A faster algorithm for calculating hypervolume. IEEE Trans Evol Comput 10:29–38

Roy PC, Islam MM, Murase K, Yao X (2015) Evolutionary path control strategy for solving many-objective optimization problem. IEEE Trans Cybern 45:702–715

Das I, Dennis JE (1998) Normal-boundary intersection: a new method for generating the Pareto surface in nonlinear multicriteria optimization problems. SIAM J Optim 8:631–657

Wang H, Jiao L, Yao X (2015) Two_arch2: an improved two-archive algorithm for many-objective optimization. IEEE Trans Evol Comput 19:524–541

Alcalá-Fdez J, Sanchez L, Garcia S, del Jesus MJ, Ventura S, Garrell JM, Otero J, Romero C, Bacardit J, Rivas VM et al (2009) KEEL: a software tool to assess evolutionary algorithms for data mining problems. Soft Comput 13:307–318

Zhu X, Hao K, Tang X, Wang T, Hua Y, Liu X (2019) The multi-objective optimization of esterification process based on improved NSGA-III algorithm. In: 2019 12th Asian Control Conference (ASCC), pp 603–608

Bader J, Zitzler E (2011) HypE: an algorithm for fast hypervolume-based many-objective optimization. Evol Comput 19:45–76

Ishibuchi H, Imada R, Setoguchi Y, Nojima Y (2017) Reference point specification in hypervolume calculation for fair comparison and efficient search. In: Proceedings of the Genetic and Evolutionary Computation Conference, GECCO ’17, Association for Computing Machinery, New York, NY, USA, pp 585–592. https://doi.org/10.1145/3071178.3071264

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 62302147, 62136003, and 62306097. The authors would like to thank Prof. Yaochu Jin and A.P. Ran Cheng for their guidance and suggestions on this work, and Prof. Handing Wang and A.P. Ye Tian for helpful discussions. They would also like to thank Xiuli Zhu for her technical assistance with the work in Sect. 5. Finally, Yicun Hua would like to thank A.R. Yuan Cao for his assistance in implementing the algorithm.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hua, Y., Liu, Q. & Hao, K. Adaptive normal vector guided evolutionary multi- and many-objective optimization. Complex Intell. Syst. 10, 3709–3726 (2024). https://doi.org/10.1007/s40747-024-01353-y

DOI: https://doi.org/10.1007/s40747-024-01353-y