Abstract

Light guide plate (LGP) is a key component of liquid crystal display (LCD) display systems, so its quality directly affects the display effect of LCD. However, LGPs have complex background texture, low contrast, varying defect size and numerous defect types, which makes realizing efficient and accuracy-satisfactory surface defect automatic detection of LGPS still a big challenge. Therefore, combining its optical properties, dot distribution, defect imaging characteristics and detection requirements, a surface defect detection algorithm based on LGP-YOLO for practical industrial applications is proposed in this paper. To enhance the feature extraction ability of the network without dimensionality reduction, expand the effective receptive field and reduce the interference of invalid targets, we built the receptive field module (RFM) by combining the effective channel attention network (ECA-Net) and reviewing large kernel design in CNNs (RepLKNet). For the purpose of optimizing the performance of the network in downstream tasks, enhance the network's expression ability and improve the network’s ability of detecting multi-scale targets, we construct the small detection module (SDM) by combining space-to-depth non-strided convolution (SPDConv) and omini-dimensional dynamic convolution (ODConv). Finally, an LGP defect dataset is constructed using a set of images collected from industrial sites, and a multi-round experiment is carried out to test the proposed method on the LGP detect dataset. The experimental results show that the proposed LGP-YOLO network can achieve high performance, with mAP and F1-score reaching 99.08% and 97.45% respectively, and inference speed reaching 81.15 FPS. This demonstrates that LGP-YOLO can strike a good balance between detection accuracy and inference speed, capable of meeting the requirements of high-precision and high-efficiency LGP defect detection in LGP manufacturing factories.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

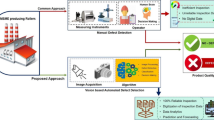

During the process of manufacturing a product, surface defect detection assumes a significant role in on-site quality control. As a vital element of liquid crystal display (LCD) backlight modules, the light guide plate (LGP) possesses noteworthy attributes such as extraordinary thinness, heightened transparency, elevated reflectivity, homogeneous light guidance, and remarkable brightness. Consequently, it finds extensive employment in the display systems of intelligent devices. The configuration of a prototypical LCD screen is shown in Fig. 1 [1]. The lower surface of the LGP is permeated with numerous light guide points, exhibiting distinct sizes and varying densities. As the lateral line of light encounters these light guide points, the resultant reflected rays disperse at diverse angles, thereby ensuring the uniform emission of light by the LGP. In the course of LGP production, it is inevitable that some freshly manufactured LGPs will possess imperfections, such as white spots, black spots, scratch lines, recessed areas, and shadows. Regrettably, these defects significantly impede the performance of the display system.

The detection of LGP defects poses several challenges, including the presence of complex texture background, low contrast, and small defect size. Resorting to conventional detection methods, such as manual inspection, would inevitably result in reduced detection efficiency, while also augmenting the likelihood of errors and omissions. Such discrepancies not only inflict economic losses upon the factory but also engender potential safety hazards for consumers. Hence, it becomes imperative that newly manufactured LGPs undergo rigorous defect detection prior to their departure from the factory.

The primary motivations of the work

In contemporary LGP production factories, the manual inspection of LGP defects remains the predominant method, whereby human testers employ optometry devices. Nonetheless, this approach is marred by several deficiencies, including a notable decline in both the accuracy and speed of defect detection following prolonged periods of continuous testing. With the advent of deep learning within the realm of artificial intelligence, the employment of deep learning algorithms for defect detection has become pervasive across various industries, encompassing solar panels, optical films, LCD screens, magnetic tiles, textiles, and other products. By leveraging machine vision and deep learning, the automated optical detection of LGP defects can profoundly enhance both the accuracy and speed of defect detection, thereby facilitating the optimization of the LGP production process.

Innovation aspects

However, LGP defect detection presents extremely high requirements on the detection method/system for three reasons: (1) LGPs have complex image background texture, and their defects are characterized by rich types and varied sizes. (2) The low contrast between some LGP defects and the background gives rise to missed detection and false detection. (3) The image size of an LGP can exceed 400 MB, so the detection model must have high inference speed to meet the speed requirement of online detection. At present, several detection methods of LGP have been proposed, such as LGP defect detection method based on dynamic weight combination classifier (CCDW) [2], LGP defect detection method based on dense bilinear convolutional neural network (BCNN) [3], LGP defect detection method based on semantic segmentation enabled by deep learning [4], end-to-end multitask learning network for detecting defects of mobile phone LGPs [5], HM-YOLOv5 network for detecting defects of hot-pressed LGPs [6], method for detecting defects of vehicle mounted LGPs based on improved RetinaNet [7], etc. But they all have shortcomings in accuracy and inference speed, especially for the accuracy of multi-scale targets, which makes it difficult to satisfy the actual quality inspection requirements of LGP.

Although two-stage target detection networks such as R-CNN [8], SPP-Net [9], FastR-CNN [10], and FasterR-CNN [11] have relatively high accuracy, their speed is relatively slow. In comparison, single-stage object detection networks such as SSD and YOLO series (YOLOv3 [12], YOLOv4 [13], YOLOv5 [14], YOLOv6 [15], and YOLOv7 [16]) have relatively high inference speed and relatively low accuracy. For this reason, the object detection networks cannot be directly applied in LGP defect detection. To meet this challenge, we constructed the receptive field module (RFM) and the small detection module (SDM) to improve YOLOv7, the single-stage object detection network with the highest performance up to now, obtaining a new LGP defect detection network LGP-YOLO. The LGP-YOLO network can achieve high detection accuracy and high inference speed at the same time, and has demonstrated satisfactory performance in an intelligent visual detection system for detecting surface defects of LGPs.

Contributions

The main contributions of this paper are as follows:

-

1.

The RFM is constructed by combining the ECA-Net channel attention mechanism and RepLKNet. The RFM can integrate channel and spatial information, thereby directing more attention to the defect targets and improving the feature extraction ability of the network. This arrangement can expand the effective receptive field of the network, improve the defect detection ability of the network, and effectively reduce the network parameter quantity and computational complexity.

-

2.

An SDM is constructed by combining the SPD-Conv module and ODConv. This arrangement can effectively reduce the loss of fine-grain feature information, enhance the network expression of features, and improve the small target detection ability of the network and the accuracy in detecting multi-scale targets.

-

3.

A solution for LGP defect detection based on LGP-YOLO is proposed on the basis of constructing the RFM and SDM, achieving a good balance between defect detection accuracy and network inference speed. The solution has been successfully applied in online real-time detection of LGP defects.

Addressing the sections

The remainder of this paper is arranged as follows: Part 2 summarizes relevant research work; Part 3 analyzes the challenges and the corresponding solutions in LGP defect detection; Part 4 analyzes the RFM and SDM, as well as the improved LGP-YOLO detection network; Part 5 describes the dataset of LGP defects used in this study and a series of experiments (ablation, comparison) carried out on the dataset and analyzes the experimental results; Part 6 is the conclusion of this paper. Tables 1 and 2, respectively, summarize the abbreviations and variables mentioned in this paper.

Related work

In recent years, defect detection methods based on deep learning have been widely used to detect defects of various objects such as steel components, wooden products, and LCD screens. Aiming to meet the need of detecting defects of hot-rolled steel plates, He et al. [17] proposed a new object detection framework called classification priority network (CPN) and a new classification network called multi-group convolutional neural network (MG-CNN) for detecting steel surface defects, achieving a mean average precision (mAP) level over 96%. He et al. [18] developed a hybrid fully convolutional neural network for wood defect detection, achieving an mAP of 99.14%. Ding et al. [19] proposed a PCB surface defect detection network that makes use of k-mean values to obtain reasonable anchor frames through clustering. They also introduced a multi-scale pyramid network into Fast RCNN to enhance the fusion of feature information obtained from the bottom layer and improve the accuracy of the network in detecting small defects; Lin et al. [20] proposed an automatic steel surface detection method based on deep learning, which can detect steel surface defects with higher efficiency and accuracy; Zhao et al. [21] developed PB-YOLOv5 lightweight detection network based on YOLOv5 for detecting defects on the surface of particle boards. They successfully reduced the network scale and improved the detection efficiency of YOLOv5 by adding the Ghost Bottleneck lightweight deep convolution module and SELayer module of attention mechanism to the YOLOv5 detection algorithm, and the resulting PB-YOLOv5 network is lightweight enough to be embedded into devices with small computing power. Yang [22] developed an asymptotic tracking with novel integral robust schemes for mismatched uncertain nonlinear systems, extend the robust integral of the sign of the error (RISE) feedback control to handle both matched and mismatched disturbances simultaneously. Xie et al. [23] developed a surface defect detection algorithm based on feature-enhanced YOLO (FE-YOLO) for application in real industrial scenes. They improved detection accuracy under high intersection over union (IoU) threshold by combining depthwise separable convolution and dense connection and establishing a new prediction box regression loss function. Yang et al. [24] developed a neuroadaptive learning algorithm for constrained nonlinear systems with disturbance rejection. On the basis of constraint demands for control input amplitude, output performance, and system states, it effectively reduce endogenous and exogenous disturbances. He et al. [25] developed a floating object detection algorithm based on YOLOv5 to solve the problems of low accuracy and slow speed in floating object detection. By introducing smooth tags and enhancing the extraction of floating object features using the initial topology, they successfully reduced the network burden and suppressed over-fitting in the network training process, achieving an mAP of 96.48% and a detection speed of 30 fps. Zeqiang et al. (2022) [26] proposed Attention-YOLOv5 algorithm to solve the problems of low accuracy and slow speed in the surface defect detection of strip steel products. They improved the algorithm's ability of defect feature extraction by adding a convolutional layer to the tail section of the pooling structure of the spatial pyramid, achieving an mAP of 87.3%.

However, studies dealing with the topic of developing LGP defect detection algorithms based on deep learning are rare in the literature. Ming et al. (2019) [2] proposed an LGP defect detection method based on a dynamic weight combination classifier (CCDW), which has poor network accuracy and slow inference speed, therefore, is not suitable for online LGP defect detection. On the basis of analyzing the edge and regional features of defective LGPs, Hong et al. [3] developed an LGP defect detection method based on dense bilinear convolutional neural network (BCNN). Liu et al. [4] proposed an LGP defect detection method based on semantic segmentation enabled by deep learning, achieving a detection rate of 96% for defects such as white points, black points, and scratches. Li et al. [5] proposed an end-to-end multitask learning network for detecting defects of mobile phone LGPs. They adopted a multitask learning network as the main structure of the detection network, designed a segmentation head for the detection network, and made use of multi-scale feature fusion to accurately locate each defect in the image, achieving an F1-score of 99.67% on a mobile phone LGP dataset and 96.77% on the Kolektor surface defect dataset. Li and Yang [6] proposed HM-YOLOv5 network for detecting defects of hot-pressed LGPs. They reduced information loss and improved the defect detection ability of the network by constructing a hybrid attention module (HAM) and a pyramid-structured multi-unfolding convolutional module (MCM), achieving an mAP of 98.9% on a self-built LGP dataset. Li et al. [7] proposed a method for detecting defects of vehicle-mounted LGPs based on improved RetinaNet. They improved the ability of the detection network in fusing shallow semantic information and deep semantic information and detecting small targets by replacing RetinaNet’s backbone network with ResNeXt50 and inserting lightweight module Ghost_module into the backbone layer, achieving an accuracy of 98.6% on a vehicle LGP defect dataset The above studies have promoted the development of LGP defect detection techniques to some extent, achieving progress in improving detection accuracy, inference speed and robustness of detection networks. A summary of related works is provided in Table 3 to make it clear.

However, to effectively detect and classify defects with finer granularity and strike good balance between detection accuracy and detection speed, we need to find detection models with less parameters and stronger feature extraction ability. Therefore, this article proposes the LGP-YOLO network for high-precision real-time online detection of LGP defects.

Characteristics of LGP defects and process in detecting LGP defects

Characteristics and causes of LGP defects

The defects of the LGPs described in this paper can be divided into five types: white point defects, black point defects, bright line defects, dark line defects, and regional defects, as shown in Fig. 2. Due to the low difficulty of identifying regional defects, defects of this type will not be distinguished by contrast. The characteristics and causes of LGP defects are as follows:

-

1.

Some examples of white point defects are shown in Fig. 2a. White point defects, with a size of 5–20 pixels, have high contrast with the background. A white point defect is formed when multiple light guide points generated in the production process are too close to each other that a contiguous area is formed, so white point defects have a larger area compared with normal light guide points.

-

2.

Some examples of black dot defects are shown in Fig. 2b. Black point defect have a low contrast with the background. They have an average size close to that of white point defects but have a flatter shape than white point defects.

-

3.

Some examples of bright line defects are shown in Fig. 2c. Bright line defects, with a width less than 1 pixel, have high contrast with the background. They are generally caused by scratching on the LGP surface due to improper handling and storage. This type of defects is difficult to discern with the naked eyes, therefore, is difficult to detect.

-

4.

Some examples of dark line defects are shown in Fig. 2d. Dark line defects have low contrast with the background, and their width and shape are similar to those of bright line defects. The width and contrast of dark line defects dictate that this type of defects ranks no.1 among all defect types in terms of both false detection and missed detection.

-

5.

Some examples of regional defects are shown in Fig. 2e. Regional defects have a size greater than 20 pixels. A regional defect is formed when the light guide points in a certain region are damaged during the production process thus forming a large area of shadow.

Difficulties in LGP defect detection

According to the defect imaging characteristics and the defect detection requirements of LGPs, the key difficulties in LGP defect detection are as follows:

-

1.

The distribution of light guide points on an LGP is disorderly and uneven, so LGPs have a very complex image background texture. In addition, the LGP images have low contrast and uneven brightness, which further increase the chance of making errors in detection.

-

2.

White point defects of LGPs look very similar to dust particles on the image in terms of size and brightness, giving rise to errors during detection.

-

3.

LGP defects of different types differ greatly from each other in size, which requires that the detection network has the ability to detect both small and large targets at the same time.

Process in LGP defect detection in real-world

In Fig. 3, we show the operation process in the real-world of LGP defect detection. First, the LGP image is photo by the hardware part and input to the software part. Secondly, the LGP image is preprocessed (such as region division and clipping) in the software part and transferred into the LGP-YOLO network proposed in this paper. Finally, after the detection, the LGP-YOLO network generates the output of image, complete the LGP defect detection in the real-world.

LGP defect detection method based on LGP-YOLO

Algorithmic details of YOLOv7

As one of the latest basic networks in the YOLO series, YOLOv7 outperforms most known real-time object detectors in terms of detection speed and detection accuracy in the range of 5 FPS to 160 FPS. Among known real-time object detectors above 30 FPS, YOLOv7 also has the highest accuracy [27]. The backbone network of YOLOv7 is composed of three parts: backbone network, neck and head. The backbone network mainly consists of multiple convolution layers (CBSes), efficient layer aggregation networks (ELANs), extended ELAN (E-ELAN) modules, MaxPooling and Conv (MPConv) and SPPCSPC modules. The backbone network is responsible for extracting image features, and transferring the extracted image features to the neck. In the neck, YOLOv7 adopts a PAFPN structure and performs stack scaling, which are the same as YOLOv5. The neck fuses high-level features and low-level features to obtain features of three sizes: large, medium and small, and transfers the fused features separately into the IDetect modules responsible for processing large, medium and small features in the detection head to accomplish the integration of high-resolution information and high-level semantic information. The detection head decouples the high-resolution information received from the neck, identifying the category and position of each target. The detection process of YOLOv7 network is shown in Fig. 3: YOLOv7, as a single stage detector, firstly segments the input image into \(S\times S\) (S = 4 in Fig. 4) equally sized grids to facilitate the division of the detection range. Then, each segmented grid is parallelly predicted for the position of the detection border and the category of the target, and the prediction results are output. However, there may be multiple overlapping prediction boxes in the prediction results. To eliminate this phenomenon, we finally introduce a non-maximum suppression algorithm (NMS) to eliminate redundant predictors and complete detection.

Differing from other YOLO series networks, YOLOv7 is furnished with a scalable and high-efficiency layer aggregation network E-ELAN in its backbone network. This structure is in essence equivalent to two parallel ELANs with their outputs connected together. In this way, the problem of the number of computing units increasing indefinitely with the iteration is avoided through expanding, transforming and fusing the cardinal numbers, which destroys the stable state of the original gradient path thus accelerating the convergence of the network. In addition, E-ELAN changes the feature structure of the computing block without changing the structure of the original conversion layer, which can effectively improve the parameter calculation efficiency of the YOLOv7 network.

The detection head of YOLOv7 uses a re-parameterized convolution (RepConv). RepConv consists of identify mappings of 3 × 3 convolutions and 1 × 1 convolutions. It adopts a straight tube structure, combining 3 × 3 and 1 × 1 convolution sum units in one convolution layer. YOLOv7 adopts a RepConv without feature links, which avoids disrupting cross-layer interactions in the residual structure thus achieving faster inference speed and higher detection accuracy.

Furthermore, it is feasible to scale the depth and width of the entire network by implementing a composite scaling mechanism on the YOLOv7 network, and the resulting network is YOLOv7-X.

In this study, we experimented extensively with LGP defect detection using the YOLOv7 network. It was found that certain texture and contour characteristics within the medium and shallow layers were not fully extracted, resulting in a significant loss of intricate details during the detection process. Consequently, the overall accuracy in detecting small objects proved to be suboptimal, with pronounced challenges of missed and false detections. Furthermore, the YOLOv7 network's detection head, RepConv, demonstrated inadequate adaptability in identifying multi-scale targets due to its lack of dynamic adjustment capabilities. This limitation not only hindered the accuracy of LGP defect detection but also led to an increase in parameter quantity. In light of these shortcomings, we embarked on improving YOLOv7 by prioritizing the enhancement of its ability to detect objects of varying scales. Our proposed enhancements encompassed expanding the network's receptive field to comprehensively extract texture and contour information from the medium and shallow layers. Additionally, we introduced a dedicated module for small object detection to minimize the loss of fine-grain information. Moreover, the detection head underwent an upgrade aimed at striking a balance between detection accuracy and inference speed, thereby alleviating the incidence of missed and false detections.

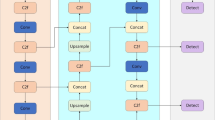

Improved YOLOv7 network

Since the background texture of LGPs is quite complex, strong feature extraction ability is required for achieving high detection accuracy. To this end, we constructed the RFM by combining ECA-Net and RepLKNet. This module was integrated ahead of the final MP (Max-Pooling) module within the backbone network of YOLOv7, so as to expand the effective receptive field, better focus on defect targets and enhance the network's feature extraction ability. Both the point detects and line defects of LGPs belong to small target defects, and the resolution of regional defects is much greater than those of point detects and line defects. These characteristics of LGP defects should be considered in the design of the detection network. Considering the fact that the main reason behind the poor multi-scale target detection performance of YOLOv7 is the loss of a large portion of fine-grain information, we combined SPD Conv and ODConv to construct the SDM and used it to replace the detection head of the YOLOv7 network. This arrangement can effectively reduce the loss of fine-grain feature information without slowing down the inference speed of the network, enhance the network expression of features, and significantly improve the detection accuracy of the network. On the basis of constructing the RFM and SDM, the improved version of the YOLOv7 network shown in Fig. 5 was designed.

RFM

ECA-Net

The structure of ECA-Net is shown in Fig. 6. First, a feature image with a size of \(C\times H\times W\) is input into the network. It undergoes a global average pooling (GAP) process on the spatial dimension to obtain a \(1\times 1\times C\) feature image, thus accomplishing spatial feature compression. Then, the compressed feature image is made to undergo one-dimensional convolution \(k\) (in this paper \(k=5\)), which realizes cross-channel information capturing and assigning different weights to channels. Next, the resulting feature image is processed using an activation function to generate a feature image with a size of \(1\times 1\times C\). Finally, the generated \(1\times 1\times C\) feature image is multiplied by the original input feature image (\(C\times H\times W\)) channel by channel to obtain the final feature image with a size of \(C\times H\times W\). Through cross-channel capturing of interaction information, ECA-Net achieves higher detection accuracy with relatively low network complexity. This approach can improve the performance of various depth CNN architectures. The size of the convolution kernel is adaptively determined by the channel dimension. The calculation formula of the convolution kernel is shown in Eq. (1) [28]:

where \(c\) represents the channel dimension, \(\mathrm{a}\) represents constant 2, \(b\) represents constant 1, and \({\left|n\right|}_{\mathrm{odd}}\) is the odd number closest to n.

In order to reduce the network parameter quantity as much as possible and ensure the lightweight of the network, ECA-Net is also placed in front of the MP module in the backbone network. The improvement on the LGP-YOLO network brought by ECA-Net will be described in detail in “LGP defect detection method based on LGP-YOLO”.

RepLKNet

RepLKNet, as a module with pure CNN architecture, has a convolution kernel size of 31 × 31. As shown in Fig. 7, RepLKNet consists of three parts: stem, stages, and transitions [29]. In addition to deep-wise (DW) super convolution, other modules that make up RepLKNet include DW3 × 3 convolutions, 1 × 1 convolutions and batch normalization modules (BNs). The above modules are mostly small convolution kernels, with a simple structure and a small number of parameters. In addition, RepLKNet uses a re-parameterized structure to increase the size of the convolution kernel and expand the effective receptive field and shape deviation. In addition, a short_cut layer is introduced into RepLKNet to ensure detection efficiency, improve detection accuracy, and enhances the performance of the network in downstream tasks.

RFM

To tackle the difficulties in feature extraction caused by the complex background texture of LGPs, we constructed an RFM by combining ECA-Net, RepLKNet and a 3 × 3 convolution module (Conv). The network structure of the RFM is shown in Fig. 8. The introduction of a 31 × 31 super large convolution kernel through RepLKNet expands the effective receptive field of the network, and ECA-Net reduces the complexity of the network and suppresses the interference of invalid information such as background texture. The spatial information bits extracted by ECA-Net and RepLKNet are added up and input into the Conv module with a convolution kernel size of 3 × 3. In the RFM, the number of channels in the Conv module is set to 256. RFM is placed behind the last ELAN module in the backbone layer, which can expand the effective receptive field and enhance the feature extraction ability of the network.

SDM

SPDConv

SPDConv adopts a CNN architecture, and replaces strided convolution and pooling layer operations with deep-wise convolution (SPD) and non-strided convolution (stripe = 1). This arrangement enables SPDConv to preserve all information on the channel dimensions and effectively avoid some problems caused by the use of stride convolution and pooling layer in the traditional CNN architecture (including the loss of fine-grain feature information and the decrease of the feature expression ability of the network). The working process of SPDConv is shown in Fig. 9. Take the case of scale = 2 as an example, suppose that an intermediate feature mapping with a size of \(S\times S\times {C}_{1}\) is sliced into a series of sub-feature mappings through depth-wise convolution. The definition of sub-feature mapping is shown in Eq. (2):

As shown in Fig. 9, four sub-feature mappings \({f}_{\mathrm{0,0}}\), \({f}_{\mathrm{1,0}}\), \({f}_{\mathrm{0,1}}\) and \({f}_{\mathrm{1,1}}\) are obtained through the processing shown in Eq. (2). The sub-feature mappings are interconnected through channel dimensions to obtain a feature mapping \({X}_{0}\), and this feature mapping is input into the non-strided convolution layer. Feature mapping \({X}_{0}\) is further transformed into feature mapping \({X}^{^{\prime\prime} }(\frac{S}{\mathrm{scale}},\frac{S}{\mathrm{scale}}{,c}_{2})\) through the filtering of C2. Finally, the transformed mapping \({X}^{^{\prime\prime} }\) is output from SPDConv [30].

ODConv

ODConv, as a full-dimension dynamic convolution module, carries out learning along all four dimensions of the kernel space in any convolution layer using a multi-dimension attention mechanism and a parallel strategy. ODConv adds four different types of attention mechanisms to four dimensions. The attention mechanism of ODConv is depicted in Fig. 10. Figure 10 shows the spatial coordinate multiplication operation along the space dimension, Fig. 10 shows the channel multiplication operation along the input channel dimension, Fig. 10 shows the filter multiplication operation along the output channel dimension, and Fig. 10 shows the convolution kernel dimension multiplication operation along the convolution kernel spatial dimension [31]. The introduction of the above-mentioned four attention mechanisms can reduce the number of extra parameters of the network, improve the expression ability of the network, and enhance the feature extraction performance of CNN basic convolution operations. The definition of ODConv is shown in Eq. (3):

where, \({\alpha }_{\mathrm{w}1}\in R\) represents the attention scalar of the convolution kernel \({W}_{1}\); \({\alpha }_{s1}\in {R}_{k}\times k\), \({\alpha }_{ci}\in {R}_{cin}\) and \({\alpha }_{f1}\in {R}_{\mathrm{cout }}\) represent the attention mechanisms calculating along the space dimension, input channel dimension, and output channel dimension, respectively; and \(\odot \) represents the multiplication operation on different dimensions of the convolution kernel space.

SDM

To tackle the difficulties in defect detection caused by the large defect resolution range and a large proportion of small target defects, we combined SPDConv, 3 × 3 convolution (Conv) and ODConv to construct the SDM. The network structure of the SDM is shown in Fig. 11. The combined use of SPDConv and 3 × 3 convolution can reduce the loss of fine-grain feature information, and improve the small target detection ability of the network. The SDM can amplify \(4C\) channels of output features simultaneously, and transfers the result to the detection head ODConv. Compared with the original detection head RepConv of YOLOv7, ODConv has the advantage of using a multi-dimension attention mechanism and a parallel strategy, and can dynamically adjust the convolution kernel size to suit the detection targets, resulting in fewer extra network parameters, lower network complexity and stronger network expression ability. Replacing the original detection head of YOLOv7 with the SDM can optimize the performance of the network in downstream tasks, enhances the network expression ability, and improve the network's ability in detecting multi-scale targets. In this way, the difficulties in defect detection caused by a large defect resolution range and a large proportion of small target defects can be effectively dissolved.

Loss function

In the field of deep learning, the loss function is used to measure the discrepancy between the predicted value and the true value of the network. It is an important network performance indicator. The original loss function (Loss) of YOLOv7 consists of three components: confidence loss function \({L}_{\mathrm{conf}}\), location loss function \({L}_{\mathrm{box}}\), and classification loss function \({L}_{\mathrm{cls}}\). The definition of loss function is shown in Eq. (4):

where \({\lambda }_{\mathrm{conf}}\) represents the confidence loss weight, \({\lambda }_{\mathrm{box}}\) represents the location loss weight, and \({\lambda }_{\mathrm{cls}}\) represents the classification loss weight.

The definition of \(\mathrm{IoU}\) between real box \(A\) and predicted box \(B\) is shown in Eq. (5):

In this paper, CIoU is used as the loss function [13]. The definition of CIoU is shown in Eq. (6):

where \(d\) represents the distance between the center point of the target box and the center point of the predicted box, and \(e\) represents the diagonal distance between the two minimum bounding boxes, as shown in Fig. 12; \(b\) and \({b}^{gt}\) represent the center points of the target box and the predicted box respectively, and \(\rho \) represents the Euclidean distance between the two points; \(\alpha \) represents the equilibrium parameter defined as Eq. (9); \(v\) is used to indicate the consistency in measuring aspect ratio.

The definition of \(v\) is as follows:

In addition, \(b\), \({b}^{gt}\) and \(\rho \) satisfy the formula for calculating the distance d from the center point of the target box:

Experiment and analysis

LGP defect detection system

The LGP defect detection system is shown in Fig. 13. This system primarily encompasses three distinct subsystems, namely mechanical transmission, image acquisition, and image processing. The mechanical transmission subsystem assumes the crucial responsibility of conveying LGPs from the production line to the inner recesses of the LGP defect detection apparatus. On the other hand, both the image acquisition subsystem and the image processing subsystem are entrusted with the duty of discerning and characterizing the defects inherent in each individual LGP. For the purpose of capturing impeccably detailed LGP images, thereby ensuring optimal defect visibility, the LGP defect detection system incorporates a DELSA 16 k line scanning camera in conjunction with a multi-angle light source. Moreover, the image processing subsystem is instrumental not only in examining the authentic performance of the LGP but also in conducting practical application testing through the utilization of training weights specific to the model.

LGP defect dataset (LGP SDD)

Dataset plays a crucial role in the training process, and its quality directly affects the accuracy of defect detection. In the context of this study, the dataset comprises original images gathered by the LGP defect detection system that operates in tandem with LGP production lines. The original LGP images were cropped to obtain single-channel grayscale sample images with a resolution of 224 × 224.

The LGP surface defect dataset contains 6,160 images with 6267 annotations. The defect types include dark shadow, bright line, dark line, white dot, and black dot. Table 4 shows the number of LGP images in each defect type. During the experiment, the dataset was divided into the training set, validation set and testing set in a 7:2:1 ratio, consisting of 4312, 1232, and 616 images, respectively. Table 5 shows the distribution of the defects of each type in the training set, validation set and testing set.

Mosaic data enhancement

To improve the robustness and detection accuracy of the network, it is advisable to add a data augmentation function at the input section of the network. In this study, the mosaic data augmentation method was adopted. This method works in the following way: First, randomly select 4 images from the training set; Then, let the images undergo random scaling, random cropping and random arranging, and randomly select an image splicing point; Finally, splice the transformed images into a single window based on the splicing point [32]. The process of data augmentation is shown in Fig. 14. This method adds more small samples to the network through steps such as random scaling, thereby making the sample distribution more uniform. This data augmentation will improve the robustness of the network, and splicing 4 images in one window will accelerate the convergence speed of the network.

Experimental environment and parameter settings

The processor is Intel (R) Core (TM) i7-10750H CPU@2.60 GHz; The memory is 32 GB; the graphics card model is Nvidia GTX 3090Ti (single card), with a memory of 24 GB; and the capacity of the hard disk is PM9A1, with the hard disk space of 1 T. The operating system is Windows 11 (64 bit) for the OS; the version of Computational Unified Device Architecture (CUDA) is 11.7; the cuDNN version is 8.6.0, Anaconda for the package manager and environment manager, the deep learning framework uses Pytorch 1.13.1, and the compiler is Python 3.7.

In this study, the COCO dataset pre-training network of YOLOv7 is used for transfer learning, and the relevant hyperparameters are set as follows: The size of the input image is 576 × 576 pixels; The network uses 3 epochs for preheating training, with an initial learning rate of 0.1 and a minimum learning rate of 0.01; The total number of epochs in the training is 300; The momentum is set to 0.937, the optimizer uses SGD (stochastic gradient descent), weight_decay is set to 0.0005; Given the training speed and the graphics memory size, batch size is set to 32, and mosaic data enhancement is enabled. Adjustable control parameters of the experiment are summarized in Table 6.

Among the above, the size of the input image, total number of epochs, and batch size have a major impact on the performance of the network. Size of the input image must be a multiple of 32 to fully transform the feature map; size of the input image determines the resolution of each defect during the training. When the size of the input image is too small, it may cause small target defects such as dark line defects to be difficult to detect. When the size of the input image is too large, it may cause the network to occupy too much graphics card memory, resulting in too long training time. Total number of epochs affects the fitting degree and training time. When a total number of epochs is too small, the results may exhibit underfitting. When total number of epochs is too large, it can lead to a long training time and waste computility. The batch size determines the number of images in each group and the memory usage of the model during the validation. When the batch size is too small, the training results may exhibit underfitting. When the batch size is too large, there may be overfitting in the results, which may occupy a large amount of graphics card memory, resulting in a long training time. Both underfitting and overfitting can lead to a decrease in network performance. In addition, preheating training epoches determine the adjustment time of the preheating learning rate to reduce the oscillation of loss function. Initial learning rate can prevent skipping the loss function during parameter update. Minimum learning rate can prevent the result from converging too slowly. Momentum can prevent the occurrence of local optimal solutions and affect the output result. Weight_decay can reduce overfitting to a certain extent by constraining the norm of the parameter.

Network evaluation indicators

To ensure a comprehensive evaluation of the network, this paper proposes to use several objective evaluation indicators to evaluate the network. The definitions of the evaluation indicators used in this study are as follows:

TP represents the number of LGP defects correctly identified and classified by the network, FN represents the number of LGP defects erroneously classified by the network as non LGP defects, and FP is the number of dots/lines/areas erroneously classified by the network as LGP defects.

Precision means the proportion of correctly detected LGP defects in all detected targets, while recall means the proportion of correctly detected LGP defects in all true LGP defects. Recall is a constraint on accuracy. If the accuracy is too high but the recall is too low, it means that the network has classified too many LGP defects as non-LGP defects. If this phenomenon occurs, the network will give a punishment to the overly high accuracy.

However, neither precision or recall can fully reflect the performance of the network, so F1-score is used as a more balanced indicator [8]. The definition of F1-score is shown in Eq. (13):

In Eqs. (14) and (15), AP represents the area between the PR (precision-recall) curve and the coordinate axis, mAP represents the average AP value of different types of LGP defects, and N represents the number of classes of test samples. Since there are 5 types of LGP defects in the LGP defect dataset used in this study, N = 5.

Ablation experiment

To verify the rationality of the LGP-YOLO network structure and analyze the improvements in the network detection ability brought by the RFM and SDM developed in this study, we introduced ECA-Net, RepLKNet, SPDConv, ODConv, the RFM, and the SDM into the YOLOv7 network separately and tested the performance of the upgraded YOLOv7 network. The experimental results are shown in Table 7.

The experimental results show: adding ECA-Net to YOLOv7 and replacing the original detection head of YOLOv7 separately can reduce the parameter quality by 2.49 M and 0.59 M as well as improve mAP by 0.36% and 0.45% respectively, but cannot improve Fl-score significantly. After ECA-Net is added to the network, the detection speed of the upgraded YOLOv7 can be increased to 95.85 FPS, which is significantly higher than that of the original network (2.13 FPS). Incorporating SPDConv into YOLOv7 offers notable improvements in the network's mAP and precision, amounting to 97.63% and 96.18%, respectively. These values surpass those of the original YOLOv7 network by 1.00% and 3.19% respectively. The AP in detecting white point defects and dark line defects is also enhanced by 1.1% and 2.7%, respectively. Notably, the changes in recall are minimal, indicating that SPDConv effectively tackles false detections but does not significantly improve missed detections. Integrating RepLKNet into YOLOv7 to expand the effective receptive field yields slight improvements in AP across all five defect types. This underscores RepLKNet's ability to enhance the network's feature extraction capacity and overcome challenges posed by the intricate background texture of LGPs. However, for higher detection performance, RepLKNet must synergize more effectively with downstream modules. The addition of RFM to YOLOv7 enhances AP by 1.5%, 1.0%, 2.1%, and 0.6% in detecting white point defects, bright line defects, dark line defects, and regional defects, respectively. Likewise, incorporating SDM into YOLOv7 leads to AP improvements of 1.7%, 2.5%, and 4.1% in detecting white point defects, bright line defects, and dark line defects respectively, elevating mAP to 98.42%, an increase of 1.73%. Combining RFM and SDM in YOLOv7 results in AP enhancements across all five defect categories (4.0% for white point defects, 2.5% for bright line defects, and 4.9% for dark line defects), while boosting the F1-score by 2.9%.

In summary, RFM expands the network’s effective receptive field, augmenting detection accuracy with minimal inference speed loss. SDM proficiently mitigates the loss of fine-grain information, bolstering the network's ability to detect multi-scale targets, particularly smaller ones. However, SDM does lead to a significant reduction in inference speed. When RFM is added to the tail section of the backbone network and the original detection head is replaced with SDM, upstream and downstream tasks experience improvements, respectively. Consequently, the combined use of these two modules substantially enhances the network's detection capability.In summary, the RFM can expand the effective receptive field of the network and improve the detection accuracy with only a small loss of inference speed; The SDM can effectively reduce the loss of fine-grain information and improve the network's ability of detecting multi-scale targets (especially small targets), but it will reduce the inference speed of the network significantly; Adding the RFM to the tail section of the backbone network and replacing the original detection head with the SDM will bring improvements in the upstream tasks and downstream tasks respectively, so the combined use of the two modules can bring greater improvement on the detection capability of the network.

Comparison experiment

To verify the effectiveness and superiority of the LGP-YOLO LGP defect detection network, we conducted a comparison experiment to compare the performance level of LGP-YOLO with those of YOLOv5s [33], YOLOv5S-CA [34], YOLOv5l [35], YOLOv5-improve [36], YOLOv7 [37] and YOLOv7-X. Under the same hardware and software experimental environment, all models are trained under the optimal parameters to keep the fairness of experimental comparisons. The training results of the experimental networks are shown in Table 8, and the training curves and test results are shown in Figs. 15 and 16, respectively.

Table 8 show: when tested on the LGP dataset, YOLOv5s, YOLOv5s-CA, YOLOv5l and YOLOv5-improve can achieve higher detection accuracy (reaching 97.59%,98.01%, 97.84% and 97.71%, respectively), but their inference speeds are relatively slow (which are 51.83, 54.26, 44.48 and 47.85, respectively). YOLOv7 and YOLOv7x have faster inference speeds, which are 93.72 FPS and 75.91 FPS, respectively. However, the detection accuracy of YOLOv7 is only 96.63%. Although YOLOv7-X has a relatively high detection accuracy, its parameter quantity is too large. All the above four networks have a F1-Score level around 96%. Compared with YOLOv7, the LGP-YOLO network proposed in this paper achieves the following improvements: the overall detection accuracy is increased by 2.45%, reaching 99.08% (the detection accuracy improvement is 4.0% for white point defects and 2.8% for dark line defects); Noteworthy enhancements are observed in detecting small targets, multi-scale targets, and low contrast defects. The F1-score experiences a notable increase of 2.22%, reaching 97.77%. Additionally, the detection speed of LGP-YOLO reaches 81.15 FPS, surpassing YOLOv5s, YOLOv5s-CA, YOLOv5l, YOLOv5-improve, and YOLOv7-X, and only trailing behind YOLOv7. The parameter count of LGP-YOLO stands at a mere 39.18 M, approximately 44% lower than that of YOLOv7-X, albeit 0.98 M higher than YOLOv7. Moreover, the FLOP of LGP-YOLO is 9.5G lower than that of YOLOv7, totaling 97.4G with an 8.8% decline. Overall, LGP-YOLO exhibits lower complexity compared to the baseline network YOLOv7. It is apparent that among all networks, the LGP-YOLO network displays heightened confidence in detecting all defect types, establishing its superior detection performance.

Figure 15 shows: the training loss function curve converges rapidly in the first 25 epochs, and the verification loss function curve converges rapidly in the first 50 epochs, and both curves achieve complete convergence in about 100 epochs; The training losses of YOLOv7 and LGP-YOLO are roughly consistent, while LGP-YOLO performs better in validation loss (after 30 epochs, the validation loss of LGP-YOLO is significantly smaller than that of YOLOv7). It is known in Fig. 16, the mAP curve and F1 score curve of LGP-YOLO reach complete convergence around 75 epochs (which is earlier than YOLOv7), and LGP-YOLO surpass YOLOv7 significantly in terms of mAP and F1-score.

In summary, the proposed LGP-YOLO network can greatly improve the detection accuracy with relatively high inference speed and a small increase in parameter quantity, exhibiting salient advantages over other networks. In particular, LGP-YOLO is superior to YOLOv7-X in terms of parameter quantity, FLOP, detection accuracy, inference speed, accuracy, recall, and F1-score. It achieves better detection performance with only about 55% of the parameter quantity of YOLOv7-X, improving mAP by 1.1% and F1-score by 2.25%.

The Grad-CAM for the visual representation of YOLOv7 and LGP-YOLO is shown in Fig. 17. In Grad-The preprocessing process of LGPAM, we can see that LGP-YOLO gives a higher importance for all kinds of LGP defects than baseline network YOLOv7, especially black point defects and black line defects. This means that LGP-YOLO has higher accuracy and more accurate positioning of defects.

Detection process in practical and confusion matrix of LGP

To evaluate the performance of LGP-YOLO network in detecting LGP defects in practical applications, 60 sets of actual LGP images with defects are selected for experiments and divides regions of interest on the LGP images to improve detection efficiency. LGP image resolution is \(\mathrm{25,000} \times \mathrm{16,384}\), region of interest resolution is \(\mathrm{19,008} \times 9792\). The sliding window resolution is \(576\times 576\), moving from left to right and from top to bottom, with a sliding step length of 0.8 times the resolution of the sliding window. In this case, adjacent windows have an overlap of 0.2 times the sliding window resolution, which can eliminate missed LGP defects and improve detection accuracy. After the detection is completed, non-maximum suppression is performed on the overlapping parts of adjacent windows to eliminate duplicate detection. The preprocessing process of LGP images is shown in Fig. 18.

The confusion matrix of LGP defect detection is shown in Fig. 19, where the abscissa of the matrix represents the original category of the target, and the ordinate of the matrix represents the category of the target after classification. If the target is classified as Background_FN, it indicates that the network has made a mistake of missed detection; if the category of the target after classification is different from the correct one, it indicates that the network has made a mistake of false detection. Figure 20 shows some common cases of missed detection and false detection.

It can be known that the network achieves the highest detection accuracy in detecting white point defects, black point defects, and regional defects, without a single error of false detection or missed detection. The detection accuracy for bright line defects stands at 99%, with a negligible 1% false detection probability. Similarly, the accuracy for dark line defects is 98%, with a 1% probability of false detection and missed detection.

During the detection process, a fraction of bright line defects (1%) were mistakenly classified as dark line defects, while a small portion of dark line defects (1%) were erroneously classified as black point defects. Comparative analysis with YOLOv7 reveals that LGP-YOLO has exhibited improved detection accuracy. Specifically, the accuracy of detecting dark line defects, white point defects, and bright line defects has seen respective increases of 3%, 4%, and 4%. Notably, there has been a significant advancement in the detection accuracy of dark line defects misclassified as background, witnessing a noteworthy 4% increment. According to our analysis, the false detection and missed detection errors associated with bright line defects and dark line defects can be attributed to the following factors:

-

1.

Bright line defects and dark line defects are similar in shape and are small, making it difficult to extract features information;

-

2.

Dark line defects and black dot defects have similar resolution and similar contrast with the background, and some of them are small targets, giving rise to false detection;

-

3.

The images in the LGP defect dataset used in this study are manually labeled. As the number of images is quite large (6160), there may be some erroneously labeled images.

Although the LGP-YOLO network makes some missed detection and false detection mistakes in LGP defect detection, it can meet the requirements of LGP detect detection in production lines as long as its detection accuracy exceeds 98%. Therefore, the LGP defect detection network LGP-YOLO proposed in this paper can be applied in quality inspection in LGP manufacturing factories.

Network robustness

To verify the robustness of the LGP-YOLO network, we conducted an experiment on the DeepPCB dataset (from [38]) using the same experimental method as in the experiment on the LGP defect dataset. The images (640 × 640) in the DeepPCB dataset contains several types of defects such as scratches and short circuit on the newly produced PCBs. Compared with the LGP defect dataset, the DeepPCB dataset is characterized by simple background texture and high contrast between defects and the background, so it is relatively easy to extract defect features. However, the defect types of the DeepPCB dataset are more numerous, and the number of sample images (1350) is relatively small. Therefore, the detection network needs a faster convergence speed to prevent over-fitting which is likely to occur when the number of samples is small. The training results are shown in Table 9, the test results and confusion matrix are shown in Figs. 21 and 22, respectively.

It can be seen from Table 9, Figs. 21 and 22 that the proposed LGP-YOLO network excels on all indicators, which proves that LGP-YOLO has excellent robustness.

Real-world application of LGP-YOLO

To ensure the operability and performance of LGP-YOLO in practical applications, researchers have conducted practical operations at Highbroad Corporation and CHIN MING SHAN OPTRONICS CORPORATION. The actual operation is shown in Fig. 23. After actual testing, the accuracy of LGP-YOLO is above 99%, and the inference speeds is above 70, satisfying the actual detection requirements of LGP.

Conclusion

In this paper, we propose a new LGP surface defect detection method based on LGP-YOLO. First, an RFM is constructed, combining ECA-Net, RepLKNet and a 3 × 3 convolution, the RFM enhances the feature extraction ability of the network and expands the effective receptive field, solving the problem that feature extraction is made difficult by the complex background texture of LGPs and reducing the interference of invalid targets. Then, an SDM is constructed, consisting of SPDConv, ODConv, and a 3 × 3 convolution, the SDM can optimize the performance of the network in downstream tasks, enhance the expression ability of the network, and improve the ability to detecting multi-scale targets, thereby dissolving the difficulties in defect detection caused by large resolution range of LGP defects and a large proportion of small target defects. Finally, a large number of experiments were conducted on a self-built LGP defect dataset and the DeepPCB dataset. The experimental results demonstrate that the LGP-YOLO network proposed in this paper can achieve higher performance than the existing networks in defect detection. Notably, compared to the baseline network YOLOv7, LGP-YOLO achieves a remarkable reduction in network computing load, with a FLOP (floating point operations) of only 97.4G. Moreover, LGP-YOLO demonstrates outstanding performance on the LGP defect dataset, achieving an mAP (mean average precision) of 99.08% and an F1-Score of 97.45%, which are 2.45% and 2.15% higher than YOLOv7, respectively, while maintaining an impressive FPS (frames per second) of 81.15%. On the DeepPCB dataset, LGP-YOLO achieves an mAP of 98.49% and an F1-score of 96.73%, surpassing YOLOv7 by 3.42% and 4.39% respectively, with an FPS of 80.92. Notably, LGP-YOLO offers a relatively high inference speed and a relatively small network parameter quantity, enabling improved detection accuracy, reduced missed detection rate, and false detection rate, thereby effectively meeting the LGP quality inspection requirements of LGP manufacturing facilities and preventing economic losses and safety hazards associated with LGP defects.

Although LGP-YOLO achieves a good balance between inference speed and accuracy, its parameter-adjusting process is a little complex in practical applications. Therefore, our future works will focus on developing parameter adaptive algorithms to simplify the parameter tuning process, and improve the practicality of the model.

Data availability

The data generated during and analysed during the current study are shared at https://github.com/wanyan12345/LGP_YOLO.git.

References

Yao J, Li J (2022) AYOLOv3-Tiny: an improved convolutional neural network architecture for real-time defect detection of PAD light guide plates. Comput Ind 136:103588

Hong L, Wu X, Zhou D, Liu F (2021) Effective defect detection method based on bilinear texture features for LGPs. IEEE Access 9:147958–147966

Liu L, Zuo H, Qiu X (2021) Research on defect pattern recognition of light guide plate based on deep learning semantic segmentation. J Phys Conf Ser 1865(2):022033

Li Y, Li J (2021) An end-to-end defect detection method for mobile phone light guide plate via multitask learning. IEEE Trans Instrum Meas 70:1–13

Li J, Yang Y (2023) HM-YOLOv5: a fast and accurate network for defect detection of hot-pressed light guide plates. Eng Appl Artif Intell 117:105529

Li J, Wang H (2022) Surface defect detection of vehicle light guide plates based on an improved RetinaNet. Meas Sci Technol 33(4):045401

Girshick R, Donahue J et al (2014). Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the 2014 CVPR, pp 580–587

Purkait P, Zhao C et al (2017). SPP-Net: deep absolute pose regression with synthetic views. arXiv:1712.03452

Girshick R (2015) Fast R-CNN. In: Proceedings of the 2015 ICCV, pp 1440–1448

Ren S et al (2015) Faster R-CNN: towards real-time object detection with region proposal networks. In: Proceedings of the 2017 IEEE transactions on pattern analysis and machine intelligence, pp 1137–1149

Redmon J et al (2018) YOLOv3: an incremental improvement. arXiv:1804.02767

Bochkovskiy A et al (2020) YOLOv4: optimal speed and accuracy of object detection. arXiv:2004.10934

Wang J et al (2021) Improved YOLOv5 network for real-time multi-scale traffic sign detection. Neural Comput Appl 35:7853–7865

Li C, Li L et al (2022). YOLOv6: a single-stage object detection framework for industrial applications. arXiv:2209.02976

Wang C-Y, Bochkovskiy A et al (2022) YOLOv7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv:2207.02696

He D, Xu K et al (2019) Defect detection of hot rolled steels with a new object detection framework called classification priority network. Comput Ind Eng 128:290–297

He T, Liu Y, Xu C, Zhou X, Hu Z, Fan J (2019) A fully convolutional neural network for wood defect location and identification. IEEE Access 7:123453–123462

Ding R, Dai L, Li G, Liu H (2019) TDD-Net: a tiny defect detection network for printed circuit boards. CAAI Trans Intell Technol 4(2):110–116

Lin W-Y, Lin C-Y et al (2019) Steel surface defects detection based on deep learning. In: Advances in physical ergonomics and human factors, p 786

Zhao Z, Yang X et al (2021) Real-time detection of particleboard surface defects based on improved YOLOV5 target detection. Sci Rep 11(1):21777

Yang G (2022) Asymptotic tracking with novel integral robust schemes for mismatched uncertain nonlinear systems. Int J Robust Nonlinear Control 33(3):1988–2002

Xie Y, Hu W, Xie S, He L (2023) Surface defect detection algorithm based on feature-enhanced YOLO. Cogn Comput 15(2):565–579

Yang G, Yao J, Zhenle D (2022) Neuroadaptive learning algorithm for constrained nonlinear systems with disturbance rejection. Int J Robust Nonlinear Control 32(10):6127–6147

He X, Wang J, Chen C, Yang X (2021) Detection of the floating objects on the water surface based on improved YOLOv5. In: Proceedings of the 2021 ICIBA, pp 772–777

Zeqiang S, Bingcai C (2022). Improved Yolov5 algorithm for surface defect detection of strip steel. In: Proceedings of the artificial intelligence in China

Ming W, Shen F et al (2019) Defect detection of LGP based on combined classifier with dynamic weights. Measurement 143:211–225

Liu S, Wang Y, Yu Q, Liu H, Peng Z (2022) CEAM-YOLOv7: improved YOLOv7 based on channel expansion and attention mechanism for driver distraction behavior detection. IEEE Access 10:129116–129124

Wang Q, Wu B, Zhu P et al (2020) ECA-Net: efficient channel attention for deep convolutional neural networks. In: Proceedings of the 2020 CVPR, pp 11531–11539

Ding X, Zhang X, Han J, Ding G (2022) Scaling up your kernels to 31×31: revisiting large kernel design in CNNs. In: Proceedings of the 2022 CVPR, pp 11953–11965

Sunkara R, Luo T (2022) No more strided convolutions or pooling: a new CNN building block for low-resolution images and small objects. In: ECML/PKDD

Li C, Zhou A, Yao A (2022) Omni-dimensional dynamic convolution. arXiv:2209.07947

Sun X, Liu T, Yu X, Pang B (2021) Unmanned surface vessel visual object detection under all-weather conditions with optimized feature fusion network in YOLOv4. J Intell Robot Syst 103(3):55

Zhang Y, Yang Y, Sun J et al (2023) Surface defect detection of wind turbine based on lightweight YOLOv5s model. Measurement 220:113222

Wen G, Li S, Liu F, Luo X et al (2023) YOLOv5s-CA: a modified YOLOv5s network with coordinate attention for underwater target detection. Sensors 23(7):3367

Wang K, Teng Z, Zou T (2022) Metal defect detection based on Yolov5. J Phys Conf Ser 2218(1):012050

Xiong C, Hu S, Fang Z (2022) Application of improved YOLOV5 in plate defect detection. Int J Adv Manuf Technol

Dehaerne E, Dey B, Halder S, Gendt S D (2023) Optimizing YOLOv7 for semiconductor defect detection. In: Proceedings of the advanced lithography

DeepPCB (2020) https://github.com/Charmve/Surface-Defect-Detection/tree/master/DeepPCB

Acknowledgements

This work was supported by the Key R&D Program of Zhejiang (No. 2023C01062) and the Basic Public Welfare Research Program of Zhejiang Province (No. LGF22F030001, No. LGG19F03001).

Funding

This work was supported by the Key R&D Program of Zhejiang (No. 2023C01062) and the Basic Public Welfare Research Program of Zhejiang Province (No. LGF22F030001, No. LGG19F03001).

Author information

Authors and Affiliations

Contributions

All authors contributed to the study’s conception and design. Material preparation, data collection, and analysis were performed by JL. The first draft of the manuscript was written by YW. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

All authors have read and agreed to the published version of the manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wan, Y., Li, J. LGP-YOLO: an efficient convolutional neural network for surface defect detection of light guide plate. Complex Intell. Syst. 10, 2083–2105 (2024). https://doi.org/10.1007/s40747-023-01256-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-023-01256-4