Abstract

SAR ship detection models suffer from inconsistency between training and testing in the regression branch and insufficient training of the classification branch, which severely limit detection performance. We therefore propose a twin branch network and design two loss functions: a regression reverse convergence loss and a classification mutual learning loss. The twin branch network is a simple but effective method consisting of two components: a twin regression network and a twin classification network. To address the inconsistency between training and testing in the regression branch, we propose a regression reverse convergence loss (RRC Loss) based on the twin regression network. This loss makes the predictions of the two twin regression branches converge to the label from opposite directions, so that after the two outputs are combined, the test distribution is closer to the training distribution. To address inadequate training of the classification branch, and inspired by knowledge distillation, we construct self-knowledge distillation with a twin classification network. The proposed classification mutual learning loss (CML Loss) enables the twin classification network not only to learn from the label under supervision but also to learn from each other. Experiments on the SSDD and HRSID datasets show that, compared with the original method, the proposed method improves AP by 2.7–4.9% with different backbone networks and outperforms other advanced algorithms.

Introduction

Synthetic Aperture Radar (SAR) is a high-resolution imaging radar. On the one hand, as an active microwave imaging sensor, SAR can penetrate certain ground objects to some extent, so it is less affected by the environment and can effectively detect various concealed objects. On the other hand, its all-weather capability allows it to carry out observation missions under extreme conditions. Owing to these properties, SAR has been widely used in ship detection [1,2,3,4,5,6].

Traditional SAR ship detection methods rely on handcrafted features. They fall into three main categories: methods based on contrast information [7,8,9], methods based on geometric and texture features [10, 11], and methods based on statistical analysis [12, 13]. In addition, the authors of [14] considered both marine clutter and signal backscattering in SAR images and proposed a Generalized Likelihood Ratio Test (GLRT) detector. Lang et al. [15] proposed a Spatial Enhanced Pixel Descriptor (SEPD) to capture the spatial structure of ship targets and improve the separability between ships and ocean clutter. Leng et al. [16] defined an Area Ratio Invariant Feature Group (ARI-FG) to improve traditional detectors. Among these, the Constant False Alarm Rate (CFAR) detector [17,18,19] and its variants are the most widely studied.

In recent years, thanks to advances in deep learning and GPU computing, convolutional neural networks have thrived in SAR ship detection [20,21,22,23]. Compared with traditional methods based on handcrafted features, object detectors based on convolutional neural networks achieve significantly higher accuracy. Among them, dense detectors have gradually become the dominant trend in object detection. To analyze detectors systematically, a detection model is usually divided into three components [24]: the backbone network, the neck network, and the head network. The backbone is pre-trained on a large-scale image classification task and then transferred to object detection, where its parameters are fine-tuned. As a result, many mature backbone structures and pre-trained weights are available. The neck network generally performs multi-scale feature fusion, and the fused features better represent objects of various shapes and positions. The head network is a crucial structure that uses the features output by the neck network to classify and localize targets. Earlier object detection algorithms, such as Faster RCNN [25], YOLO [26], and SSD [27], share one head network for the classification and regression tasks. With the advent of RetinaNet [28], FCOS [29], FoveaBox [30], IoU-Net [31], and other algorithms [32,33,34], the classification and regression tasks were separated into parallel classification and regression branches. However, this structure still has problems that limit further improvement in detection performance.

Inconsistencies between training and testing in regression branches. The widely used regression losses (the L1, smooth L1, and L2 losses) implicitly treat the predicted box coordinates as a Dirac delta distribution. This distribution drives all coordinate predictions to collapse onto the label as closely as possible, as shown in Fig. 1a. Representative algorithms that adopt the Dirac delta assumption include SSD, FCOS, the YOLO series, and the RCNN series. However, the simulation experiment in the Motivation section shows that the distribution of predictions obtained at test time is often very different from a Dirac delta distribution. Treating coordinate prediction as a Dirac delta distribution during training therefore causes inconsistency between training and testing, which ultimately reduces ship detection performance.
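For reference, here is a minimal plain-Python sketch of the three regression losses named above. It uses per-coordinate means, and the smooth L1 threshold `beta=1.0` is an assumed default. Each loss reaches its minimum only when every predicted coordinate equals its target exactly. This is why these losses implicitly treat the target as a single point.

```python
def l1_loss(pred, target):
    """Mean absolute error over the box coordinates."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def l2_loss(pred, target):
    """Mean squared error over the box coordinates."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def smooth_l1_loss(pred, target, beta=1.0):
    """Quadratic when |error| < beta, linear otherwise."""
    total = 0.0
    for p, t in zip(pred, target):
        err = abs(p - t)
        total += 0.5 * err ** 2 / beta if err < beta else err - 0.5 * beta
    return total / len(pred)


# Hypothetical predicted deltas (dx, dy, dw, dh) against an all-zero target.
pred = [0.1, -0.2, 0.5, 2.0]
target = [0.0, 0.0, 0.0, 0.0]
for loss in (l1_loss, smooth_l1_loss, l2_loss):
    print(loss.__name__, loss(pred, target))
```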

Some recent works [35, 36] model the predicted coordinates as Gaussian distributions to bridge the gap between training and testing, as shown in Fig. 1b. To some extent, a Gaussian distribution can describe the coordinate distribution and relax the strict requirement of the Dirac delta distribution. In practice, however, the predicted coordinates can follow rich and flexible distributions at test time, including distributions that match no standard parametric form. Assuming a Gaussian distribution for coordinate prediction is therefore not the most appropriate choice and yields only suboptimal performance.
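To make the contrast concrete, the sketch below shows a generic Gaussian negative log-likelihood for one coordinate, in which the network predicts both a mean and a standard deviation. This is an illustrative assumption, not the exact loss used in [35] or [36].

```python
import math


def gaussian_nll(target, mu, sigma):
    """Negative log-likelihood of `target` under N(mu, sigma^2).

    A network using this loss predicts mu (the coordinate) and sigma
    (its uncertainty), so it fits a Gaussian rather than a single point.
    """
    var = sigma ** 2
    return 0.5 * math.log(2 * math.pi * var) + (target - mu) ** 2 / (2 * var)


# A prediction 0.5 away from the label is penalized less when the
# predicted sigma is larger.
print(gaussian_nll(0.0, 0.5, sigma=0.25))
print(gaussian_nll(0.0, 0.5, sigma=1.0))
```

A larger predicted σ reduces the penalty for an inaccurate mean, so the model learns a spread rather than a point. The shape of that spread, however, is fixed to be Gaussian.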

Inadequate training of classification branches. We observe that the conventional classification branch is usually trained with a single loss. This easily leads to low classification scores for objects in cluttered backgrounds. Ships with low scores are then removed as background during post-processing, which degrades detection performance. Current research on this issue mainly focuses on combining the classification and regression losses, so that the regression loss can guide the classification loss. This can improve the training of the classification branch to some extent. However, the backgrounds of SAR ship targets are usually cluttered, and background information interferes with both classification and regression features. Because of this upper limit on the quality of the classification and regression features, a fused classification-regression loss can hardly raise classification scores further. Consequently, current methods still cannot break through this limit, and the classification branch remains insufficiently trained.

To solve the above problems, this paper proposes a simple but effective detection network, named the twin branch network, and designs two loss functions: the regression reverse convergence loss (RRC Loss) and the classification mutual learning loss (CML Loss). First, the twin head network designed in this paper duplicates the classification and regression branches, forming a twin classification network and a twin regression network. During training, the two networks output two sets of predictions in parallel. On this basis, the RRC Loss constrains the two coordinate predictions of the twin regression network so that they approach the label from opposite sides. Combining the two predictions then yields a more accurate coordinate estimate. In addition, inspired by knowledge distillation, this paper proposes the CML Loss, which enables self-knowledge distillation within the twin classification network. During training, the two classification branches alternate between the roles of teacher and student network and continually learn from each other, which strengthens the training of the classification branch. Experiments on the SSDD dataset show that, compared with the conventional RetinaNet, our method improves AP by 2.7–4.9% with different backbone networks. Its detection performance is also better than that of other current advanced methods. Experiments on the HRSID dataset further show that the proposed method transfers well to other detectors. With different backbone networks, the proposed method improves the AP of FoveaBox by 1.5–2.0% and the AP of RetinaNet by 1.5–4.8%. With ResNet-50, it improves the AP of PISA by 1.5%.

The main contributions of our work can be summarized as follows:

- We conducted detailed experiments on and analyses of existing methods, and identified two common problems in current detectors: inconsistency between training and testing in the regression branch and inadequate training of the classification branch.
- This paper proposes a simple but effective detection network, named the twin branch network, and designs two loss functions: the RRC Loss and the CML Loss. The twin regression branches and the RRC Loss resolve the inconsistency between training and testing in the regression branch. The twin classification branches and the CML Loss address the inadequate training of the classification branch.
- We conducted extensive experiments on the benchmark SSDD and HRSID datasets, and the results confirm the effectiveness of the proposed method.

Motivation

In this section, we study the results of the mainstream dense detector RetinaNet. The goal is to identify the main problems of current detection models, which motivate the method proposed in this paper.

RetinaNet is built on a feature pyramid network, and its detection head is a general parallel structure. The classification branch scores each anchor box. The regression branch predicts each anchor's center offsets \((\varDelta x,\varDelta y)\) and width and height offsets \((\varDelta w,\varDelta h)\). The outputs of the classification and regression branches jointly determine the detection result. In the training phase, the regression output \((\varDelta x,\varDelta y, \varDelta w,\varDelta h)\) of each anchor box learns a Dirac delta distribution centered on the label, and the classification score of each anchor fits the one-hot encoding of the category label. In the test phase, non-maximum suppression (NMS), ranked by classification score, removes overlapping prediction boxes.
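The delta parameterization described above can be sketched as follows. The sketch assumes boxes in (cx, cy, w, h) form and the common Faster R-CNN-style encoding. MMDetection's `DeltaXYWHBBoxCoder` additionally normalizes the deltas by configurable means and standard deviations, which this sketch omits.

```python
import math


def encode(anchor, gt):
    """Encode a ground-truth box relative to an anchor.

    Boxes are (cx, cy, w, h). Returns (dx, dy, dw, dh): center offsets
    normalized by the anchor size, plus log-scale width/height ratios.
    """
    ax, ay, aw, ah = anchor
    gx, gy, gw, gh = gt
    return ((gx - ax) / aw, (gy - ay) / ah, math.log(gw / aw), math.log(gh / ah))


def decode(anchor, deltas):
    """Invert `encode`: apply predicted deltas to an anchor."""
    ax, ay, aw, ah = anchor
    dx, dy, dw, dh = deltas
    return (ax + dx * aw, ay + dy * ah, aw * math.exp(dw), ah * math.exp(dh))


anchor = (50.0, 50.0, 32.0, 16.0)  # hypothetical anchor
gt = (54.0, 48.0, 40.0, 12.0)      # hypothetical ship box
deltas = encode(anchor, gt)
print(deltas)
print(decode(anchor, deltas))  # recovers gt up to floating-point error
```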

We first study the regression branch of RetinaNet. We select two images, each containing a single ship, and detect the target with a trained RetinaNet model. We then collect all predictions without applying non-maximum suppression and perform a statistical analysis of the collected data. The results are shown in Fig. 2.

Fig. 2 shows that, for both images, the distribution of \((\varDelta x,\varDelta y, \varDelta w,\varDelta h)\) does not resemble a Dirac delta distribution. Nor does it form a Gaussian distribution. Instead, it is irregular, and the label value does not even lie within the highest-probability range. On the one hand, this phenomenon shows that mainstream detectors, represented by RetinaNet, suffer from inconsistency between training and testing. On the other hand, it shows that fitting a Gaussian distribution during training is unreliable.

To deal with the above problems, we consider the following two perspectives:

Increasing the number of branches. At test time, the predicted boxes follow an unknown distribution whose mean is assumed to be the accurate prediction. A simple way to obtain accurate box coordinates from this unknown distribution is to draw many random samples from it and compute their mean. To achieve this, multiple regression branches would be set up in the detector. During training, the output of each regression branch learns the label separately, so the outputs should be independent and identically distributed. The average of all regression outputs then gives an accurate result.

Although this idea is conceptually simple, it has little practical value. It requires many output values as samples, which means that the number of regression branches, and hence the number of model parameters, must increase significantly.
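A quick simulation illustrates this cost argument. It rests on the idealized assumption that each regression branch outputs the label plus independent Gaussian noise. Under that assumption, the spread of the averaged output falls roughly as \(1/\sqrt{N}\) for \(N\) branches, so halving the error takes about four times as many branches. The function name and noise model are hypothetical.

```python
import random
import statistics


def error_of_branch_average(num_branches, trials=2000, noise=1.0, seed=0):
    """Std of the averaged prediction when each branch adds independent noise.

    Each hypothetical regression branch outputs label + N(0, noise^2). The
    label is 0, so the mean of the branch outputs is the error itself.
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        outputs = [rng.gauss(0.0, noise) for _ in range(num_branches)]
        errors.append(sum(outputs) / num_branches)
    return statistics.pstdev(errors)


for n in (1, 4, 16):
    # The spread shrinks roughly as 1/sqrt(n).
    print(n, round(error_of_branch_average(n), 3))
```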

Adjusting the distribution of the results. Some studies assume that the test results follow a Gaussian distribution, and they fit the coordinates of the prediction box to a Gaussian distribution during training, as shown in Fig. 1b. Training samples should indeed be as close as possible to the distribution of test results, but Fig. 2 shows that this Gaussian assumption is not the most effective approach.

We instead consider the reverse: treat the Dirac delta distribution used during training as the target, and make the test distribution approach it. Specifically, the test distribution is adjusted heuristically while the original training pipeline is kept unchanged, as shown in Fig. 3. The test distribution is unknown. However, if its range and shape can be modified, it can be made as close to a Dirac delta distribution as possible.

Based on these two perspectives, this paper proposes the twin branch network and the RRC Loss. We also study the classification branch. To understand intuitively how well the RetinaNet model is trained, we collect all predicted classification scores. Proposal boxes with a classification score greater than 0.5 are regarded as detected targets, and those with a score below 0.5 are regarded as false detections. The statistical results are shown in Fig. 4.
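Below is a minimal sketch of this counting procedure. It assumes 0.05-wide bins, which are consistent with the ranges quoted for Fig. 4, and uses hypothetical score values.

```python
def score_histogram(scores, threshold=0.5, bin_width=0.05):
    """Bin classification scores at or above `threshold` into fixed-width bins.

    Scores below the threshold are skipped (treated as false detections).
    Returns the bin edges and the count per bin. A score of exactly 1.0
    falls into the last bin.
    """
    num_bins = round((1.0 - threshold) / bin_width)
    counts = [0] * num_bins
    for s in scores:
        if s < threshold:
            continue
        # A small epsilon guards against float error at bin edges (e.g. 0.6).
        idx = int((s - threshold) / bin_width + 1e-9)
        counts[min(idx, num_bins - 1)] += 1
    edges = [round(threshold + i * bin_width, 2) for i in range(num_bins + 1)]
    return edges, counts


edges, counts = score_histogram([0.3, 0.52, 0.6, 0.82, 0.83, 0.99, 1.0])
print(list(zip(edges[:-1], counts)))
```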

Fig. 4 shows that, among all detected ship targets, the score bin from 0.8 to 0.85 contains the most targets, while the number of detected targets drops sharply between 0.85 and 1.0. Most targets score between 0.5 and 0.8, so classification scores are generally not high.

Statistics of all classification scores on the test dataset. The bar chart on the left shows that the 0.8–0.85 bin contains the most ships, and that the number of detected ships decreases sharply in the range 0.85–1.0. Most ships score in the range 0.5–0.8. Ship images with different classification scores are shown on the right.

These statistics therefore indicate that detectors represented by RetinaNet suffer from inadequate training of the classification branch. To alleviate this problem, we design the CML Loss based on the twin branch network.

Method

In this section, we first explain the structure of the twin branch network based on the classical RetinaNet model. Then, we introduce RRC loss’s composition and working principle. Finally, the CML Loss will be introduced in detail.

Twin branch network

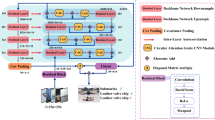

In a general detection model, the classification and regression branches do not interact. Each branch contains four convolutional layers with activation functions. On this basis, the twin branch network designed in this paper duplicates both the regression and classification branches. The network structure is shown in Fig. 5.

Each derived branch has the same structure as the original branch and is symmetric with it, but the two share no layers or parameters. This structure keeps the output distributions of the derived and original branches as close as possible. In addition, the design does not affect the original training pipeline, is easy to implement, and is conceptually simple. Finally, the RRC Loss and CML Loss are added to the network while the original loss functions are retained. The total loss function takes the following form:
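Assuming the six terms defined below are summed with unit weights (any balancing coefficients are not stated in this section), the total loss takes the form:

\[
\mathcal{L} = \mathcal{L}^1_\textrm{cls} + \mathcal{L}^2_\textrm{cls} + \mathcal{L}^1_\textrm{reg} + \mathcal{L}^2_\textrm{reg} + \mathcal{M}_\textrm{cls} + \mathcal{M}_\textrm{reg}
\]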

where \(\mathcal {L}^1_\textrm{cls}\) and \(\mathcal {L}^2_\textrm{cls}\) denote the original classification loss of RetinaNet (the focal loss) applied to the two classification branches. \(\mathcal {L}^1_\textrm{reg}\) and \(\mathcal {L}^2_\textrm{reg}\) denote the original regression loss applied to the two regression branches, for which this paper uses the smooth L1 loss. Finally, \(\mathcal {M}_\textrm{cls}\) and \(\mathcal {M}_\textrm{reg}\) denote the CML Loss and the RRC Loss, respectively.
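For completeness, here is a sketch of the binary focal loss that each classification branch would use, with the defaults from the RetinaNet paper (\(\alpha = 0.25\), \(\gamma = 2\)). Applying it to each branch independently is an assumption about how \(\mathcal{L}^1_\textrm{cls}\) and \(\mathcal{L}^2_\textrm{cls}\) are computed.

```python
import math


def sigmoid_focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss for one anchor.

    p: predicted ship probability (after sigmoid).
    y: 1 for a ship anchor, 0 for background.
    Well-classified anchors (p_t close to 1) are down-weighted by (1 - p_t)^gamma.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


print(sigmoid_focal_loss(0.9, 1))  # easy positive: tiny loss
print(sigmoid_focal_loss(0.3, 1))  # hard positive: much larger loss
```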

Regression reverse convergence loss

The twin regression network duplicates the regression branch into two branches, increasing the number of regression branches. However, estimating the mean of the test distribution from only two independent regression branches can hardly give an accurate prediction. This paper therefore uses the RRC Loss to guide the training of the two regression branches. The network structure is shown in Fig. 6.
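An idealized one-dimensional simulation makes this concrete. It hypothetically assumes that each branch's error is Gaussian around a label of 0. With independent errors, averaging two branches shrinks the spread only by a factor of about \(\sqrt{2}\). If instead the second branch lands on the opposite side of the label, which is the goal of the reverse convergence described next, the errors largely cancel. The sketch does not model the actual network or the RRC Loss itself.

```python
import random
import statistics


def averaged_error(opposite, trials=5000, spread=1.0, jitter=0.1, seed=0):
    """Std of the averaged two-branch prediction around a label of 0.

    If `opposite` is True, branch 2 lands on the other side of the label
    from branch 1 (idealized reverse convergence). Otherwise the two
    branches err independently.
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        e1 = rng.gauss(0.0, spread)
        if opposite:
            e2 = -e1 + rng.gauss(0.0, jitter)  # mirrored about the label
        else:
            e2 = rng.gauss(0.0, spread)
        errors.append((e1 + e2) / 2)
    return statistics.pstdev(errors)


print(averaged_error(opposite=False))  # roughly spread / sqrt(2)
print(averaged_error(opposite=True))   # roughly jitter / 2
```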

On the one hand, the distributions of the two regression branches should be as close as possible, so an L1 loss is used to constrain the distance between their predictions. On the other hand, the output distribution should be shaped so that it yields accurate predictions. To this end, the predictions of the two branches converge to the label from opposite directions, so that the outputs of the two regression branches are concentrated on either side of the label. The two distributions are then averaged to obtain a new distribution, which is sharper and closer to a Dirac delta distribution. Specifically, cosine similarity is used to reduce the similarity between the directions in which the two branches' predictions deviate from the label. The regression reverse convergence loss takes the following form:

where \(bbox^1_{i}\) and \(bbox^2_{i}\) denote the predictions for the ith positive sample on the two regression branches, \(\mathcal {T}_i\) denotes the regression target, and \(\cos \) computes the cosine similarity between the two branches' deviations from the target. Subtracting the label \((\varDelta x,\varDelta y)\) from a predicted regression value \((\varDelta x_i,\varDelta y_i)\) gives the deviation vector \((\varDelta x_i - \varDelta x, \varDelta y_i - \varDelta y)\). Minimizing the cosine similarity between the deviation vectors of the two branches steadily decreases their cosine, so the angle between them gradually approaches \(\pi \). The cosine similarity between the two branches is calculated as follows:
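The expression below uses the standard definition of cosine similarity. It introduces \(\mathbf{u}_i\) and \(\mathbf{v}_i\) as illustrative symbols (not from the original) for the two deviation vectors. Any stabilizing constant in the original formula is not shown.

\[
\cos\left(\mathbf{u}_i, \mathbf{v}_i\right) = \frac{\mathbf{u}_i \cdot \mathbf{v}_i}{\lVert \mathbf{u}_i \rVert_2 \, \lVert \mathbf{v}_i \rVert_2}, \qquad \mathbf{u}_i = bbox^1_i - \mathcal{T}_i, \quad \mathbf{v}_i = bbox^2_i - \mathcal{T}_i
\]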

In the latter part of Eq. (2), the L2 norm reduces the Euclidean distance between the regression predictions of the two branches, so that they eventually converge to the same result.
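The two terms can be combined into a rough sketch of an RRC-style loss. This is a hypothetical reconstruction, not Eq. (2) itself. The unit weighting of the two terms, the per-sample averaging, the use of 4-D delta vectors, and the L1 distance for the convergence term are all assumptions (the text mentions both L1 and L2, and Table 3 compares them).

```python
import math


def cosine(u, v, eps=1e-8):
    """Cosine similarity of two equal-length vectors (eps avoids division by 0)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)


def rrc_loss(preds1, preds2, targets):
    """Hypothetical RRC-style loss averaged over positive samples.

    preds1, preds2, targets: lists of 4-element delta vectors, one per
    positive anchor.
    Reverse term: cosine similarity between the two branches' deviations
    from the target (lowest when they point in opposite directions).
    Convergence term: L1 distance between the two branches' predictions.
    """
    total = 0.0
    for b1, b2, t in zip(preds1, preds2, targets):
        u = [p - g for p, g in zip(b1, t)]
        v = [p - g for p, g in zip(b2, t)]
        reverse = cosine(u, v)
        converge = sum(abs(p - q) for p, q in zip(b1, b2))
        total += reverse + converge
    return total / len(targets)


t = [0.0, 0.0, 0.0, 0.0]
opp = rrc_loss([[0.1, 0.1, 0.0, 0.0]], [[-0.1, -0.1, 0.0, 0.0]], [t])
same = rrc_loss([[0.1, 0.1, 0.0, 0.0]], [[0.1, 0.1, 0.0, 0.0]], [t])
print(opp, same)  # opposite-side predictions score lower
```

The two terms pull in different directions by design. The convergence term draws the branches together, while the cosine term rewards deviations on opposite sides of the target. Their balance point therefore places both predictions close to the label, one on either side of it.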

Classification mutual learning loss

Inspired by knowledge distillation, and to strengthen the training of the classification branch, we use the CML Loss to construct self-knowledge distillation within the twin classification network. During training, the two classification branches alternate iteratively between the roles of teacher and student network and continually learn from each other. The model structure is shown in Fig. 7.

When a classification branch is updated by backpropagation, it acts as the student network, and the other branch automatically acts as the teacher network. Whenever the classification score of the student network is lower than that of the teacher network, the teacher provides an additional loss that promotes the training of the student.

The CML Loss establishes mutual learning between the two branches to continuously strengthen the training of both classification branches. Unlike conventional knowledge distillation, it accounts for the case in which the student's classification score is higher than the teacher's. In that case, the teacher may provide a training direction contrary to the label, hindering the training of the student. Therefore, no penalty is applied to the student in this case. Specifically, the CML Loss is defined as follows:

where m is the margin, which is set to 0 in the experiments in this paper, and y is the classification label. Since SAR ship datasets usually contain a single category, \(y=1\) when the anchor box is a positive sample and \(y=0\) otherwise. \(\mathcal {C}_\textrm{s}\) denotes the classification score of the student network, and \(\mathcal {C}_\textrm{t}\) denotes that of the teacher network. The CML Loss is nonzero only if the student's loss is greater than the teacher's. This loss encourages the student network to match or surpass the teacher network in classification. However, once the student reaches the teacher's performance, the loss no longer pushes it further.
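One possible reading of this description can be written as a sketch. The gate compares each branch's L1 distance to the label. The penalty is the L1 distance to the teacher score (L1 gave the best result in Table 3). Each branch serves once as student and once as teacher. The function names and this exact form are assumptions, not the published equation.

```python
def cml_term(student, teacher, y, margin=0.0):
    """One direction of a hypothetical CML loss for a single anchor.

    student, teacher: predicted ship probabilities of the two branches.
    y: 1 for a positive anchor, 0 otherwise. The teacher score is treated
    as a constant (in an autograd framework it would be detached).
    """
    student_err = abs(y - student)
    teacher_err = abs(y - teacher)
    if student_err - teacher_err > margin:
        # Student is worse than the teacher: pull it toward the teacher (L1).
        return abs(student - teacher)
    return 0.0  # Student already matches or beats the teacher: no penalty.


def cml_loss(scores1, scores2, labels, margin=0.0):
    """Mutual learning: each branch acts as the student of the other in turn."""
    total = 0.0
    for c1, c2, y in zip(scores1, scores2, labels):
        total += cml_term(c1, c2, y, margin) + cml_term(c2, c1, y, margin)
    return total / len(labels)


print(cml_loss([0.6, 0.2], [0.9, 0.1], [1, 0]))  # hypothetical scores
```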

Experiment

In this section, we first introduce the datasets, experimental settings, and evaluation criteria. Ablation studies then examine in detail the effects of the twin network and the different loss functions on the results. Finally, we verify the detection performance and robustness of the proposed method on the SSDD and HRSID datasets.

Datasets

To evaluate the proposed method, we conducted extensive experiments on the SSDD [37] and HRSID [38] datasets.

SSDD, established in 2017, is the first SAR ship dataset. Since its publication it has been widely used by researchers and has become the benchmark dataset for SAR ship detection. SSDD contains a variety of scenes and ships and covers various sensors, resolutions, polarization modes, and imaging modes. In addition, its annotation files follow the format of the mainstream PASCAL VOC [39] dataset, which makes training algorithms on it convenient.

Researchers originally divided SSDD randomly into training, validation, and test sets. These inconsistent divisions left the field without a common evaluation basis. As researchers recognized this problem, they began to adopt uniform training and test sets. Currently, roughly 80% of the dataset is used for training and the remaining 20% for testing. Of the 1160 images in SSDD, 921 are in the training set and 239 are in the test set. Specifically, images whose file names end with the digit 1 or 9 form the test set. In this way, different detection algorithms can be evaluated on a common basis.
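The file-name rule can be expressed in a few lines. The file names below are hypothetical, and the sketch assumes that each file stem ends with the numeric image ID.

```python
import os


def split_by_last_digit(filenames, test_digits=("1", "9")):
    """Assign images to train/test by the last digit of the file stem."""
    train, test = [], []
    for name in filenames:
        stem, _ = os.path.splitext(os.path.basename(name))
        (test if stem[-1] in test_digits else train).append(name)
    return train, test


names = ["000001.jpg", "000002.jpg", "000009.jpg", "000010.jpg", "000019.jpg"]
train, test = split_by_last_digit(names)
print(test)  # ['000001.jpg', '000009.jpg', '000019.jpg']
```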

The HRSID dataset was released by UESTC in January 2020. HRSID is used for ship detection, semantic segmentation, and instance segmentation in high-resolution SAR images. The dataset contains 5604 high-resolution SAR images and 16,951 ship instances. Its annotation files follow the format of the mainstream Microsoft Common Objects in Context (MS COCO) [40] dataset.

Evaluation metrics

To evaluate the detection performance of the models, we adopt the MS COCO evaluation metrics AP, AP50, AP75, APS, APM, and APL. Average Precision (AP) is the area under the precision-recall curve, and mean Average Precision (mAP) is the average of the per-category AP values. Precision and recall are defined in formula 13 as \(P = \frac{TP}{TP+FP}\) and \(R = \frac{TP}{TP+FN}\). AP is averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05 (the primary challenge metric). AP50 is the AP at IoU = 0.5 (the PASCAL VOC metric), and AP75 is the AP at IoU = 0.75. APS, APM, and APL denote the AP of small, medium, and large targets, respectively. Small targets have an area of less than \(32^2\) pixels, medium targets have an area between \(32^2\) and \(96^2\) pixels, and large targets have an area greater than \(96^2\) pixels.

Here, TP (true positive) is the number of correctly detected ships, FP (false positive) is the number of false detections (non-ship regions incorrectly detected as ships), and FN (false negative) is the number of missed ships. AP is defined as

\(AP = \int_0^1 P(R)\,\mathrm{d}R\)

where P denotes precision and R denotes recall. AP therefore equals the area under the precision-recall curve.
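Here is a plain-Python sketch of these metrics, under the following assumptions:

- Boxes are given as (x1, y1, x2, y2).
- The size buckets are half-open; pycocotools' handling of exact boundary values may differ.
- AP uses all-point interpolation as in PASCAL VOC 2010 onward. The official COCO evaluation uses 101 recall points, so the numbers can differ slightly.
- Matching detections to ground truth at each IoU threshold is left out; the caller supplies the TP/FP flags.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def size_category(area):
    """COCO-style size bucket for a box area in pixels (half-open bins)."""
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


# IoU thresholds 0.50:0.05:0.95 averaged by the primary AP metric.
IOU_THRESHOLDS = [round(0.50 + 0.05 * k, 2) for k in range(10)]


def average_precision(tp_flags, num_gt):
    """All-point interpolated AP for one IoU threshold.

    tp_flags: detections sorted by descending score; True if the detection
    matched a previously unmatched ground-truth ship, False otherwise.
    num_gt: number of ground-truth ships (TP + FN).
    """
    tp = fp = 0
    precisions, recalls = [], []
    for is_tp in tp_flags:
        tp += int(is_tp)
        fp += int(not is_tp)
        precisions.append(tp / (tp + fp))  # P = TP / (TP + FP)
        recalls.append(tp / num_gt)        # R = TP / (TP + FN)
    # Sentinels: the curve starts at recall 0; unreached recall adds nothing.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make it non-increasing from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas where recall increases.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7
print(average_precision([True, False, True], num_gt=2))  # 5/6, i.e. about 0.833
```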

Experimental settings

All experiments were implemented with PyTorch 1.6.0, CUDA 11.2, and cuDNN 7.4.2 on an Intel(R) Xeon(R) Silver 4110 CPU and an NVIDIA GeForce TITAN RTX GPU, running Ubuntu 18.04. Table 1 presents the hardware and deep learning environment configuration for our experiments.

The models in this paper are implemented with the MMDetection [41] framework. The training schedule \(1\times \) denotes 12 training epochs, and \(2\times \) denotes 24 training epochs. The optimizer is stochastic gradient descent (SGD) with a learning rate of 0.01, 0.005, or 0.001, a momentum of 0.9, and a weight decay of 0.0001.
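As an illustration only, these settings might appear in an MMDetection 2.x-style config as shown below. The learning rate shown is one of the three listed values. Which rate goes with which experiment, and the learning-rate decay and warmup settings, are not stated here.

```python
# Hypothetical MMDetection 2.x-style schedule settings (1x schedule).
optimizer = dict(type="SGD", lr=0.01, momentum=0.9, weight_decay=0.0001)
runner = dict(type="EpochBasedRunner", max_epochs=12)  # 1x: 12 epochs

# 2x schedule: same optimizer, twice the epochs. In a real config file the
# key must be named `runner`; `runner_2x` only shows both values side by side.
runner_2x = dict(runner, max_epochs=24)
print(optimizer, runner, runner_2x)
```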

Ablation study

The influence of each model component

Our method is built on RetinaNet with a ResNet-50 backbone. We first study the influence of each component of our method on detection performance. The results are shown in Table 2.

We first train the twin branch network without the CML Loss and RRC Loss, retaining only the conventional RetinaNet classification and regression losses, and average the two sets of classification and regression outputs for prediction. As shown in row 2 of Table 2, the twin branch network achieves an AP of 50.2%, which is 1.4% higher than the conventional RetinaNet model.

Next, we add the CML Loss to the twin branch network. The results are shown in row 4 of Table 2. The AP reaches 51.6%, which is 2.8% higher than the original RetinaNet and 1.4% higher than the twin branch network without the CML Loss. This shows that the CML Loss enhances the training of the model.

To further verify the effectiveness of the RRC Loss, we add only the RRC Loss to the twin branch network. The results are shown in row 3 of Table 2. With the RRC Loss, the AP reaches 52.9%, which is 4.1% higher than the original RetinaNet.

Finally, the twin branch network, the CML Loss, and the RRC Loss are used together, and the results are shown in the last row of Table 2. The AP improves by 5.1%, indicating that the twin branch network and the two proposed loss functions significantly improve detection performance.

The influence of different measurement functions

We study how the choice of measurement function in the RRC Loss and the CML Loss affects the results. The results are shown in Table 3, where N/A indicates that the corresponding loss is not used.

The CML Loss measures the distance between the outputs of the two branches. Because this distance is usually small, the CML Loss is implemented with either the L1 or the L2 loss. The RRC Loss consists of a reverse term and a convergence term. Cosine similarity implements the reverse term, and the L1 or L2 loss implements the convergence term. Among the schemes in the table, the model performs best, with an AP of 53.9%, when the CML Loss uses the L1 loss, the reverse term uses cosine similarity, and the convergence term uses the L1 loss.

The influence of different aggregation methods

At inference, the twin branch network outputs two sets of classification scores and two sets of regression predictions. In this section, we study how different combinations of these four sets of outputs affect detection performance. The results are shown in Table 4.

In the table, branch output X means that the output of branch X is used for post-processing. In addition, \(1\backslash 2\) means that the outputs of the two branches are combined by averaging.

The table shows that AP drops sharply when only a single branch is used for detection. AP is best, with a significant improvement, when both the classification and the regression outputs are combined. These results show that the two proposed loss functions effectively constrain the training of the twin branch network. It is also worth noting that the AP values in the first four rows of the table are almost identical, suggesting that the two branches of the twin branch network are trained equally well on their own.
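The averaging used for the combined setting can be sketched per anchor as follows. The dict layout and function name are hypothetical.

```python
def aggregate(branch1, branch2):
    """Average the two branches' outputs element-wise for one anchor.

    Each branch output is a dict with a 'score' (ship probability) and
    'deltas' (dx, dy, dw, dh). The averaged result would then be decoded
    into a box and passed to NMS.
    """
    score = (branch1["score"] + branch2["score"]) / 2
    deltas = [(a + b) / 2 for a, b in zip(branch1["deltas"], branch2["deltas"])]
    return {"score": score, "deltas": deltas}


out = aggregate(
    {"score": 0.8, "deltas": [0.1, -0.1, 0.0, 0.0]},
    {"score": 0.6, "deltas": [-0.1, 0.1, 0.0, 0.0]},
)
print(out)
```

Averaging in delta space is linear for the center offsets. For the log-scale width and height offsets, however, it corresponds to a geometric mean of the decoded sizes. The text does not specify whether averaging happens before or after decoding.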

The results on the SSDD dataset

The visualization of reverse convergence

The RRC Loss uses cosine similarity to push the two regression branches toward opposite sides of the label. To verify intuitively that this mechanism takes effect during training, we randomly select images from the test dataset and visualize the distribution of predictions near the target center point. This lets us check whether the two regression branches approach the label from different directions, as shown in Fig. 8.

The red dot marks the target location. The red and green contour lines show the density levels of the outputs of the two regression branches, and a darker color indicates a higher density of predictions at that position. Fig. 8 shows that the two sets of contour lines lie on opposite sides of the red dot, which again confirms that reverse convergence takes effect.

The adequacy of classification training

To further show that the CML Loss promotes model training, we analyze the changes in classification loss in this section. The results are shown in Fig. 9.

Loss curves of the twin classification networks. (a) The loss of the proposed method is the lowest, indicating that the proposed method promotes the training of the model. (b) Classification scores of all objects in the test dataset. The red and blue areas show the statistics of the original method and our method, respectively. The proposed method substantially increases the number of predictions in the range 0.8–1. This indicates that its classification scores are generally higher than those of the original method, which further shows that the proposed method strengthens the training of the model.

As shown in the figure, the loss curves of RetinaNet and the proposed method both fluctuate during training. However, with both backbone networks, the loss of the proposed method is lower than that of the original method. The proposed method therefore facilitates the training of the twin classification networks.

In addition, we collected all classification scores before non-maximum suppression, as shown in Fig. 9b. The red bars correspond to the conventional RetinaNet model and the blue bars to the proposed method.

The figure shows that our classification scores peak in the 0.85–0.9 interval and are mainly concentrated between 0.75 and 0.95. Compared with the baseline method, the classification scores are markedly higher, which further indicates that the proposed method benefits classification training.
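The score statistics in Fig. 9b amount to a histogram over [0, 1]. The sketch below assumes 20 equal-width bins (0.05 wide, matching the 0.85–0.9 interval quoted above) and a list of pre-NMS scores; the function names are hypothetical and the scores are made up for illustration, not taken from the paper's data.

```python
from collections import Counter
from typing import Iterable, Tuple


def score_histogram(scores: Iterable[float], n_bins: int = 20) -> Counter:
    """Count scores in n_bins equal-width bins over [0, 1].

    Bin i covers [i / n_bins, (i + 1) / n_bins); a score of exactly 1.0 is
    clamped into the last bin.
    """
    counts: Counter = Counter()
    for s in scores:
        idx = min(int(s * n_bins), n_bins - 1)
        counts[idx] += 1
    return counts


def peak_bin(counts: Counter, n_bins: int = 20) -> Tuple[float, float]:
    """Return the (lower, upper) edges of the most populated bin."""
    idx, _ = counts.most_common(1)[0]
    return idx / n_bins, (idx + 1) / n_bins


def fraction_in_range(scores: Iterable[float], low: float, high: float) -> float:
    """Fraction of scores s with low <= s <= high."""
    scores = list(scores)
    return sum(low <= s <= high for s in scores) / len(scores)


# Hypothetical pre-NMS scores, made up for illustration.
scores = [0.12, 0.56, 0.78, 0.86, 0.87, 0.88, 0.91, 0.93, 0.97]
print(peak_bin(score_histogram(scores)))                # (0.85, 0.9)
print(round(fraction_in_range(scores, 0.75, 0.95), 3))  # 0.667
```

Binning by `int(s * n_bins)` keeps the bin index an integer, which avoids accumulating floating-point edges; comparing the baseline and the proposed method would mean running the same two functions on each model's score list.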

The comparison with other advanced methods

To demonstrate the effectiveness of the method, we conducted an extensive comparison on the SSDD dataset; the experimental results are shown in Table 5.

For RetinaNet models, our method improves AP by 2.7% after 24 epochs of training with ResNet-50. With the ResNet-101 backbone, AP improves by 4.6% and 2.8% over the baseline after 12 and 24 epochs, respectively. With the more powerful ResNeXt-101, the proposed method achieves a 4.9% AP improvement over the baseline. Among the other models tested in this paper, PAA performs best, with an AP of 52.4%. With the same backbone network, our method exceeds PAA by 1.2% AP and also outperforms it on the other metrics. These results show that our method substantially improves ship detection performance on the SSDD dataset across different backbone networks.

Figure 10 visualizes the detection results on the SSDD dataset. Duplicate detections and missed detections occur to varying degrees in the other seven models, whereas the proposed method detects SAR ships more accurately against complex backgrounds.

The results on the HRSID dataset

To verify the robustness of the proposed method, this section transfers the twin branch network to the FoveaBox and PISA detectors and performs an extensive comparison on the HRSID dataset. The experimental results are shown in Table 6.

Unlike RetinaNet, FoveaBox is a classic anchor-free object detector with strong performance. PISA builds on RetinaNet and reweights training samples to improve on the baseline. Because FoveaBox uses a training pipeline different from that of RetinaNet, while PISA is an improved version of RetinaNet, experiments on these two methods are representative.

As Table 6 shows, with FoveaBox our method yields almost no change on the ResNet-50 backbone but improves AP by 1.3–2% on the other backbones. With PISA, our method improves AP by 1.5% on the ResNet-50 backbone. For RetinaNet, AP improves by 1.5–4.8%.

These results show that the twin branch network can substantially improve detection performance on two benchmark SAR ship datasets and with three advanced detectors, indicating that the proposed method is robust.

Conclusion

This paper proposes a twin branch network and designs two loss functions, the CML Loss and the RRC Loss. Combined with these two losses, the twin branch network brings the test distribution closer to the training distribution and obtains more accurate detection results. To our knowledge, most existing work leaves the test distribution unchanged and instead makes the network fit it during training. We instead modify the test distribution heuristically so that it resembles the training distribution, which ultimately yields more accurate results. Our method substantially improves SAR ship detection accuracy while adding only a few training parameters, and its application to other computer vision tasks is worth exploring.

Data Availability

All data included in this study are available upon request from the corresponding author.

References

Du L et al (2019) Target discrimination based on weakly supervised learning for high-resolution SAR images in complex scenes. IEEE Trans Geosci Remote Sens 58(1):461–472

Shahzad M et al (2018) Buildings detection in VHR SAR images using fully convolution neural networks. IEEE Trans Geosci Remote Sens 57(2):1100–1116

Huang L et al (2017) OpenSARShip: a dataset dedicated to Sentinel-1 ship interpretation. IEEE J Sel Top Appl Earth Observ Remote Sens 11(1):195–208

Zhang Z et al (2017) Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans Geosci Remote Sens 55(12):7177–7188

Yang G et al (2018) Unsupervised change detection of SAR images based on variational multivariate Gaussian mixture model and Shannon entropy. IEEE Geosci Remote Sens Lett 16(5):826–830

Gierull CH (2018) Demystifying the capability of sublook correlation techniques for vessel detection in SAR imagery. IEEE Trans Geosci Remote Sens 57(4):2031–2042

Iervolino P, Guida R (2017) A novel ship detector based on the generalized-likelihood ratio test for SAR imagery. IEEE J Sel Top Appl Earth Observ Remote Sens 10(8):3616–3630

Schwegmann CP, Kleynhans W, Salmon BP (2015) Manifold adaptation for constant false alarm rate ship detection in South African oceans. IEEE J Sel Top Appl Earth Observ Remote Sens 8(7):3329–3337

Gierull CH, Sikaneta I (2017) A compound-plus-noise model for improved vessel detection in non-Gaussian SAR imagery. IEEE Trans Geosci Remote Sens 56(3):1444–1453

Kaplan LM (2001) Improved SAR target detection via extended fractal features. IEEE Trans Aerosp Electron Syst 37(2):436–451

Tello M, López-Martínez C, Mallorqui JJ (2005) A novel algorithm for ship detection in SAR imagery based on the wavelet transform. IEEE Geosci Remote Sens Lett 2(2):201–205

Song S et al (2016) Ship detection in SAR imagery via variational Bayesian inference. IEEE Geosci Remote Sens Lett 13(3):319–323

Ferrentino E et al (2019) Detection of wind turbines in intertidal areas using SAR polarimetry. IEEE Geosci Remote Sens Lett 16(10):1516–1520

Iervolino P, Guida R (2017) A novel ship detector based on the generalized-likelihood ratio test for SAR imagery. IEEE J Sel Top Appl Earth Observ Remote Sens 10(8):3616–3630

Lang H, Xi Y, Zhang X (2019) Ship detection in high-resolution SAR images by clustering spatially enhanced pixel descriptor. IEEE Trans Geosci Remote Sens 57(8):5407–5423

Leng X et al (2018) Area ratio invariant feature group for ship detection in SAR imagery. IEEE J Sel Top Appl Earth Observ Remote Sens 11(7):2376–2388

Liu T et al (2020) Robust CFAR detector based on truncated statistics for polarimetric synthetic aperture radar. IEEE Trans Geosci Remote Sens 58(9):6731–6747

Li T et al (2017) An improved superpixel-level CFAR detection method for ship targets in high-resolution SAR images. IEEE J Sel Top Appl Earth Observ Remote Sens 11(1):184–194

Ao W et al (2018) Detection and discrimination of ship targets in complex background from spaceborne ALOS-2 SAR images. IEEE J Sel Top Appl Earth Observ Remote Sens 11(2):536–550

Zhu M et al (2020) Rapid ship detection in SAR images based on YOLOv3. In: 2020 5th international conference on communication, image and signal processing (CCISP). IEEE

Chen Y, Yu J, Xu Y (2020) SAR ship target detection for SSDv2 under complex backgrounds. In: 2020 International conference on computer vision, image and deep learning (CVIDL). IEEE

Zhang T et al (2020) Balanced feature pyramid network for ship detection in synthetic aperture radar images. In: 2020 IEEE radar conference (RadarConf20). IEEE

Deng Z et al (2019) Learning deep ship detector in SAR images from scratch. IEEE Trans Geosci Remote Sens 57(6):4021–4039

Bochkovskiy A, Wang C-Y, Liao H-YM (2020) YOLOv4: optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934

Ren S et al (2015) Faster R-CNN: towards real-time object detection with region proposal networks. Adv Neural Inf Process Syst 28

Redmon J et al (2016) You only look once: unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition

Liu W et al (2016) SSD: single shot multibox detector. In: European conference on computer vision. Springer, Cham

Lin T-Y et al (2017) Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision

Tian Z et al (2019) FCOS: fully convolutional one-stage object detection. In: Proceedings of the IEEE/CVF international conference on computer vision

Kong T et al (2020) FoveaBox: beyond anchor-based object detection. IEEE Trans Image Process 29:7389–7398

Jiang B et al (2018) Acquisition of localization confidence for accurate object detection. In: Proceedings of the European conference on computer vision (ECCV)

Zhu B et al (2020) AutoAssign: differentiable label assignment for dense object detection. arXiv preprint arXiv:2007.03496

Qiu H et al (2020) BorderDet: border feature for dense object detection. In: European conference on computer vision. Springer, Cham

Zhang S et al (2020) Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition

Choi J et al (2019) Gaussian YOLOv3: an accurate and fast object detector using localization uncertainty for autonomous driving. In: Proceedings of the IEEE/CVF international conference on computer vision

He Y et al (2019) Bounding box regression with uncertainty for accurate object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition

Zhang T et al (2021) SAR ship detection dataset (SSDD): official release and comprehensive data analysis. Remote Sens 13(18):3690

Wei S et al (2020) HRSID: a high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 8:120234–120254

Everingham M et al (2010) The PASCAL visual object classes (VOC) challenge. Int J Comput Vision 88(2):303–338

Lin T-Y et al (2014) Microsoft COCO: common objects in context. In: European conference on computer vision. Springer, Cham

Chen K et al (2019) MMDetection: open mmlab detection toolbox and benchmark. arXiv preprint arXiv:1906.07155

Wang N et al (2020) NAS-FCOS: fast neural architecture search for object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition

Kim K, Lee HS (2020) Probabilistic anchor assignment with IoU prediction for object detection. In: European conference on computer vision. Springer, Cham

Li X et al (2020) Generalized focal loss: learning qualified and distributed bounding boxes for dense object detection. Adv Neural Inf Process Syst 33:21002–21012

Cao Y et al (2020) Prime sample attention in object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 11583–11591

Funding

Grant name: National Natural Science Foundation of China. Grant number: 62006240. Grant recipient: Yujie He.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest. The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lv, Y., Li, M. & He, Y. A novel twin branch network based on mutual training strategy for ship detection in SAR images. Complex Intell. Syst. 10, 2387–2400 (2024). https://doi.org/10.1007/s40747-023-01240-y

DOI: https://doi.org/10.1007/s40747-023-01240-y