Abstract

Thermal infrared image colorization is very difficult, and colorized images suffer from poor texture detail recovery and low color matching. To solve the above problems, this paper proposes an Efficient and Effective Generative Adversarial Network (E2GAN). This paper proposes multi-level dense module, feature fusion module, and color-aware attention module in the improved generator. Adding multi-level dense module can enhance the feature extraction capability and the improve detail recovery capability Using the feature fusion module in the middle of the encoder–decoder reduces the information loss caused by encoder down-sampling and improves the prediction of fine color of the image. Using the color-aware attention module during up-sampling allows for capturing more semantic details, focusing on more key objects, and generating high-quality colorized images. And the proposed discriminator is the PatchGAN with color-aware attention module, which enhances its ability to discriminate between true and false colorized images. Meanwhile, this paper proposes a novel composite loss function that can improve the quality of colorized images, generate fine local details, and recover semantic and texture information. Extensive experiments demonstrate that the proposed E2GAN has significantly improved SSIM, PSNR, LPIPS, and NIQE on the KAIST dataset and the FLIR dataset compared to existing methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

With decreasing price and increasing resolution, thermal infrared cameras have gradually moved from their initial military applications to civilian applications, such as autonomous driving [1,2,3], and security [4, 5]. However, thermal infrared images have limitations of low contrast and lack of color information because of the imaging principle and manufacturing process. These problems can reduce the performance of computer vision tasks [6,7,8,9] based on thermal infrared images. Therefore, thermal infrared image processing has become a hot research topic in academia and industry. In thermal infrared image processing, colorization is one of the important algorithms. On the one hand, people prefer color information to gray-scale information; on the other hand, compared with visible color images, thermal infrared images lose some information. It not only loses color information, but also loses semantic information.

As a result, the colorization of thermal infrared images involves the recovery of color information and semantic information. The colorization of single-channel gray thermal images into color visible images with sufficient details is valuable to improve the scene representation of thermal infrared images. However, thermal infrared image colorization is a highly ill-posed problem, which makes the design of network architecture for colorization extremely difficult. The current problem is how to accurately predict color information and recover semantic information from the contour details of thermal infrared images objects.

After several decades of development, a lot of colorization methods have been proposed, which can be broadly classified into three categories: (1) scribble-based methods [10,11,12], (2) reference image-based methods [13,14,15,16], and (3) fully automated methods [17,18,19,20,21,22,23,24,25,26]. The two former types of methods require manual interaction to distribute reasonable colors to various objects in the input image, which makes them labor-intensive and less robust to errors. [10,11,12] require pre-setting reference colors to be drawn on gray-scale images, and [13,14,15,16] depend heavily on manually selected reference color images. With the rapid development of deep learning, image colorization methods have gradually started to utilize generative adversarial networks (GANs) to automatically colorize images. TICC-GAN [18] used conditional generative adversarial networks to colorize infrared images, but the generated colorized images were blurred in detail and lacked texture information. Therefore, SCGAN [22] boosts the colorization quality by increasing the feature extraction capability, but the attention prediction network only works on a single resolution level, which leads to insufficient feature information. I2VGAN [23] enhances the colorization quality by segmenting infrared and visible video into images with the relationship between consecutive frames. MobileAR-GAN [24] improves the network and loss function, which achieves state-of-the-art colorization quality. However, due to insufficient consideration of gray-scale detail recovery, the colorized images look similar to the visible images, but the semantic information of the details is always not satisfactory.

To address the above problem, this paper proposes an Efficient and Effective Generative Adversarial Network (E2GAN). The generator proposes a hybrid structure of multi-level dense module, feature fusion module, and color-aware attention module, which enhances the feature extraction capability, captures more semantic details, and generates high-quality colorized images. And the discriminator is a PatchGAN with a color-aware attention module, which enhances the discriminator’s ability to recognize true and fake colorized images. Meanwhile, this paper proposes a novel composite loss function to achieve realistic image quality.

To be more specific, the main contributions of the work are as follows:

-

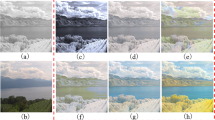

The proposed multi-level dense module extracts multi-level context information with fewer parameters and computational cost, which enhances the feature extraction capability and improves the detail recovery capability. As shown in Fig. 1, the proposed E2GAN can generate higher quality color thermal infrared images while maintaining high robustness compared to previous methods.

-

This paper adopts the proposed color-aware attention module to the decoder part, which effectively improves the colorization quality of the network by enhancing important features and suppressing unnecessary ones. It also enhances perceptual information, captures more semantic details, and focuses on more critical objects.

-

This paper proposes the feature fusion module, which processes the features down-sampled by the encoder and sends the fused features to the decoder. The feature fusion module can reduce the information loss caused by encoder down-sampling and improve the prediction of the image’s detailed color.

-

This paper proposes a composite loss function consisting of synthetic loss, adversarial loss, feature loss, total variation loss, and gradient similarity loss, which can improve the quality of colorized images and generate fine local details, meanwhile recovering semantic and texture information.

-

This paper evaluated the proposed network on the KAIST dataset and the FILR dataset. Extensive experimental results show that compared with existing methods, the proposed network generates colorized images with the state-of-the-art results in SSIM (Structural Similarity), PSNR (Peak Signal to Noise Ratio), LPIPS (Learned Perceptual Image Patch Similarity) and NIQE (Natural Image Quality Evaluator).

In the remainder of this paper, the next section summarizes the related work about IR image colorization and attention mechanisms. The subsequent section describes the architecture of the proposed E2GAN followed by which the experiments on the KAIST dataset and the FILR dataset are presented. The final section draws conclusions from the work.

Related work

Knowledge reserve

After decades of technological development, the field of artificial intelligence (AI) has gradually matured. Machine learning (ML) [27] is a branch of AI. It includes NAS [28], WANN [29], and hybrid approaches between meta-heuristics and machine learning [30,31,32], the latter being a novel research area that successfully combines machine learning and swarms intelligence approaches and has been shown to yield excellent results in different domains. Deep learning (DL) [33, 34] is a subset of ML, or deep learning is a technique that implements machine learning. In recent years, deep learning-based infrared techniques have emerged [35, 36], and these deep learning-based methods are relatively new and have achieved good performance in many scenarios.

Image colorization

Traditional colorization methods have required manual interaction to assign reasonable colors to different objects in the input image, including scribbles [10,11,12] and reference images [13,14,15,16], among others. After the fast development of deep learning, Convolutional Neural Networks (CNNs) have been successfully applied to colorization tasks in gray-scale [37,38,39,40,41], near-infrared [17, 24, 42], and thermal infrared [20, 25, 43,44,45].

Larsson et al. [37] predicted the histogram of each pixel to solve the problem of different colors. Deshpande et al. [38] used a variational auto-encoder for colorization. Limmer et al. [42] proposed a deep multi-scale CNN for near-infrared colorization. Berg et al. [43] proposed two methods for thermal infrared to colorize visible images from fully automatic colorization. Almasri et al. [44] proposed a deep learning method that maps thermal infrared features in the thermal image spectrum to their visible representations in the low-frequency domain. Then the predicted low-frequency representation is combined with the high-frequency representation, which is extracted from the thermal image using a panoramic sharpening method. Cheng et al. [20] proposed a coarse-to-fine colorization strategy to generate more realistic colorized images. Liu et al. [45] proposed a two-stage colorization method, which converted infrared images into gray-scale images and then converted gray-scale images into color images. This method could learn the intrinsic features. Wang et al. [25] improved the quality of color thermal infrared images using hierarchical architecture, attention mechanism, and proposing a composite loss function. All in all, previous methods have achieved impressive results, however, due to the insufficient constraint of the loss function of CNNs on the image colorization, the semantic encoding entanglement and geometric distortion in colorization tasks are still not well addressed.

Generating adversarial networks

Generative adversarial networks (GANs) [46] have shown excellent performance in tasks such as image-to-image translation [39,40,41, 47, 48], image style transfer [49,50,51], and image super-resolution [52,53,54]. For image-to-image translation, Isola et al. [39] used pairs of images to embed conditional vectors for the generated colorized images. To reduce the difficulty of collecting paired data, Zhu et al. [40] proposed an unpaired image-to-image translation method with cyclic consistency loss. Kniaz et al. [47] used thermal histograms and feature descriptors as thermal features. Vitoria et al. [41] proposed a colorization method that combines semantic information. The model learns colorization by combining the perception of color and category distributions with semantic understanding to learn colorization ability. Zhao et al. [22] improved the quality of colorization by enhancing the feature extraction capability. Li et al. [23] segmented infrared and visible video into images and enhanced the quality of colorization by the relationship between consecutive frames. Saxena et al. [48] proposed a new multi-constraint adversarial model, where several adversarial constraints are applied to the multi-scale output of the generator through a single discriminator. The gradients are simultaneously passed to all scales and help the generator to train to capture the difference in appearance between two domains. Jung et al. [55] proposed a novel semantic relation consistency (SRC) regularization along with decoupled contrastive learning, which utilized the diverse semantics by focusing on the heterogeneous semantics between the image patches of a single image. However, SRC generated images still have distortion. The generative adversarial network produces images that are diverse but suffer from incorrect mapping and lack of detail, as well as the risk of training instability and pattern collapse. Chen et al. [56] improved texture distortion and detail blurring through an improved generator and a new contrast loss function that ensures the consistency of image content and structure. Compared with CNN-based, the GAN-based colorization methods reduce artifacts and improve the quality of colorization. However, the feature information of the generated image is incomplete, resulting in the mismatch between the structure and content of objects in the image, which in turn leads to texture distortion and blurred details.

Attentional mechanisms

In recent years, attention mechanisms [57, 58] have been extensively used in computer vision fields, such as semantic segmentation [59, 60], image fusion [61, 62], and object detection [63, 64], which help models to focus their attention on important feature parts. To address the lack of texture information and semantic information, some methods employed attention mechanisms to aid image-to-image transformation. Kim et al. [65] introduced channel attention mechanisms in generators and discriminators to better achieve overall shape transformation. To produce more realistic output images, Emami et al. [66] proposed a new spatial attention that is used to help the generator to pay more attention to the most discriminative regions between the source and target domains. Lai et al. [67] introduced a residual attention mechanism that further facilitates the propagation of features through the encoder. Tang et al. [68] created attention masks based on CycleGAN with an embedded attention mechanism, which combines the generated results with an attention mask to obtain high-quality colorized images. Yadav et al. [24] utilized attention gates in the decoder part of the generator network, which helps to focus on more important features to learn. Luo et al. [26] designed a novel attention module, which not only progressively utilizes extensive contextual information to assist in the semantic perception of complex regions but also reduces the spatial entanglement of features. The results show that the proposed E2GAN generates visually more realistic RGB images compared to the above methods. The comparison in Figs. 7 and 9 clearly demonstrates the advantage.

Proposed E2GAN

The proposed E2GAN aims to learn the optimal mapping of thermal infrared image \(I_\textrm{ir}\) to Ground Truth \(I_\textrm{vis}\), and generate optimal colorized images \(I_\textrm{col}\). However, thermal infrared image colorization requires estimating texture information and semantic information simultaneously, which is very difficult to learn the optimal mapping. Inspired by GANs, as shown in Fig. 2, the proposed E2GAN consists of a designed generator and discriminator. The generator is used to generate the colorized images, while the discriminator tries to identify the input images as true or false.

Overall framework

Figure 3 shows the technical details of the proposed E2GAN, which uses an encoder–decoder structure. The generator consists of five parts: multi-level dense module, down-sampling module, up-sampling module, feature fusion module, and color-aware attention module.

The multi-level dense module is the basic module used to fully extract information from thermal infrared images. It extracts multi-level contextual information with fewer parameters and computational cost, which enhances feature extraction capability and improves detail recovery. The down-sampling module utilizes a convolutional block with a convolutional kernel of 3 and a stride size of 2, which can retain more information from thermal infrared images. The up-sampling module adopts a transpose convolution with a convolution kernel of 4 and a stride size of 2. A convolution block with a convolution kernel of 3 and a stride size of 1 is added to the transpose convolution to reduce the resulting checkerboard artifacts. The feature fusion module is applied between the encoder and the decoder. It reduces the information loss caused by encoder down-sampling and improves the prediction of the image’s fine colors. The color-aware attention module is used for up-sampling, and it effectively improves the colorization quality of the network by enhancing important features and suppressing unnecessary features. It also enhances the perceptual information to capture more semantic details and focus on more critical objects. The discriminator is a modified PatchGAN with a color-aware attention module in the middle convolutional layer. It can boost perceptual information and facilitate quick convergence. Moreover, the proposed E2GAN satisfies 1-Lipschitz continuity by attaching spectral normalization [69] to each discriminator layer.

Network modules

Multi-level dense module

Currently, the commonly used feature extraction module in colorization uses residual blocks [18, 24], although it effectively mitigates the problem of gradient conduction and is able to retain most of the low-frequency information. However, multi-level information is underutilized in this structure, and solving this problem has been shown to produce positive effects in many fields [70,71,72]. To sufficiently reuse the abundant multi-level information in the encoder–decoder, this paper proposes a multi-level dense module to extract multi-level context information with fewer parameters and computational cost, which enhances the feature extraction capability and improves the detail recovery.

Figure 4 shows the technical details of the proposed multi-level dense module. Specifically, in the main route, this paper employs a convolutional block with a convolutional kernel of 3 and a step size of 1. Given the channel numbers M for the input of the multi-level dense module and the channel numbers N for the output. the channel numbers of the convolutional layer in the ith convolutional block is \(N/2^i\), except for the channel numbers of the last convolutional block, which are the same as the channel numbers of the previous convolutional block. In each slave route in a multi-level dense block, this paper uses a convolutional block with a convolutional kernel of 3. The input channel numbers of the convolutional block are the same as the output channel numbers. This paper uses \(f_i\) and \(m_i\) to denote the ith convolutional block of the main route and the slave route, respectively. Therefore, the output of the ith convolutional block is calculated as follows:

where \(x_{i-1}\) and \(x_{i}\) are the input and output of the ith convolutional block, respectively. \(f_i\) and \(m_i\) denote a special convolution block, which includes a convolutional layer, an instance normalization layer, and the LeakyReLU activation layer. \(k_i\) is the kernel size of the convolutional layer.

This paper focuses on scalable receptive fields and multi-level information. The lower level needs enough channels to encode more fine-grained small receptive field information, while the higher level with large receptive fields focuses more on the generalization of higher-level information. However, setting the same channels as the lower levels may lead to information redundancy, so this paper provides different proportions of channel outputs in the multi-level dense module. To enrich the feature information, this paper uses the \(x_1\) to \(x_n\) feature mapping as the output of the multi-level dense module by concatenating fusion. In the settings, the final output of the multi-level dense module is:

where x denotes the multi-level dense module output. C denotes the concatenate fusion operation in the method, which maintains enriched multi-level information within the channel. And \(x_{1}, x_{2}, \ldots , x_{n}\) are the feature maps from all n convolutional blocks. x captures the multi-scale information of all blocks. Based on experimental experience, n is chosen to be 4.

Feature fusion module

Nowadays, the vast majority of encoder–decoder architectures perform up-sampling recovery directly after the end of down-sampling, where no additional operations are performed. However, in this structure, the down-sampling information is not fully utilized. For thermal infrared image colorization, the prediction of fine color information in the local image plays an important role in generating the color information of the three channels. Inspired by [73, 74], this paper designed feature fusion modules to be applied between the encoder and decoder, and the structure of the feature fusion module is shown in Fig. 5. The first convolution block consists of convolution kernels of 3 and stride size of 1. The channel numbers are reduced to half, but the feature map scale remains the same. The second convolution block has a convolution kernel of 3 and a stride size of 2. The channel numbers remain the same, but the feature map scale is reduced to half. The third convolution

block has a convolution kernel of 3, a stride size of 1, and the channel numbers are reduced to half. The three convolution blocks separately extract multi-scale information. After bilinear up-sampling interpolation to the initial image size. Then the global features and local features are concatenated by a concatenate and fusion operation, increasing the network recognition capability. Finally, the final feature map is obtained by the fourth convolution block and skip connection. The feature fusion module can reduce the information loss of encoder down-sampling and improve the prediction of the image’s fine color. The entire process of the feature fusion module can be described as follows:

where \(x_\textrm{output}\) and \(x_\textrm{input}\) are the input and output of the feature fusion module, \(f_{i}\) is the ith convolution block, Up is the bilinear up-sampling interpolation, and C is the concatenate fusion operation.

Color-aware attention module

The process of convolution causes the perceptual field to be limited to a certain range. This operation leads to a certain difference between pixel-to-pixel of the same category, which can result in lower-quality of colorization results. To solve the above problem, this paper proposes the color-aware attention module. As shown in Fig. 6, it mainly consists of position-aware attention and semantic-aware attention. In particular, the position-aware attention can capture the context information between arbitrary two positional information in the position, while the semantic-aware attention can capture the context information in the channel dimension.

Position-aware attention: The technical details of the location-aware attention are shown in the top region of Fig. 6. The input feature map of the position-aware attention is \(X \in R^{H \times W \times C}\), where H, W, C represent the height, width, and channel dimensions, respectively. The input X is convolved 1\(\times \)1 to obtain \(x^1\). And the output of this stage is processed with two parallel dilation convolution blocks and a standard convolution block to obtain the feature maps of \(x^2\), \(x^3\), and \(x^4\). This paper multiplies the two mappings \(x^2\) and \(x^3\), which is used softmax to obtain the attention matrix, as shown in Eqs. (4) and (5):

where f denotes the convolution and \(s_{i, j}\) evaluates the ith influence of the jth position on the position.

In the feature map \(x^3\) provides feature mappings at different scales, the fusion layer decides to fuse the various feature mappings carried by \(x^3\) with the \(s_{i, j}\) feature matrix to help and guide the model to better recover the color and structural features of the image, prompting the model to generate a more realistic colorized image. And summed by the convolutional block with \(x^1\). Finally, the final feature map is obtained by a standard convolutional block. Position-aware attention can be expressed as:

Semantic-aware attention: In high-level semantic features, each channel can be considered as a special response to a certain class. Enhancing the feature channels of this response can effectively improve the colorized image quality. Each channel is weighted by calculating a weighting factor. This highlights the important channels and enhances the feature representation. Semantic-aware attention can be expressed as

This paper applies the proposed color-aware attention module to the decoder part, which effectively improves the colorization quality of the network by enhancing the important features and suppressing the unnecessary ones. Meanwhile, it enhances perceptual information, captures more semantic details, and focuses on more critical objects.

Loss function

To penalize the distance between real color images and colorized images, this paper proposes a composite loss function consisting of synthetic loss, adversarial loss, feature loss, total variation loss, and gradient similarity loss. It can improve the quality of the colorized image and generate fine local details while recovering semantic and texture information. This paper now describes the technical description of each loss function in detail.

Synthetic loss. In fact, synthetic loss is L1 loss. By increasing the synthetic loss, the brightness and contrast differences between color images and Ground Truth can be effectively minimized. If the GAN focuses overly on the synthesis loss, the brightness and contrast in the thermal infrared image will be lost. To prevent the generator over representation of pixel-to-pixel relationships, this paper will add appropriate weights for synthetic loss. The synthetic loss can be expressed as

where W and H denote the height and width of the thermal infrared image, respectively. \(I_\textrm{ir}\) denotes the input thermal infrared image, \(I_\textrm{vis}\) denotes Ground Truth. \(G(\cdot )\) denotes the generator, and \(\Vert \cdot \Vert _{1}\) denotes the L1 norm.

Adversarial loss. Although the application of synthetic loss facilitates high PSNR, colorized images will lose some of their detailed content. To encourage the network colorization results with more realistic details, this paper utilizes adversarial loss. Adversarial loss is used to learn the implicit relationship between thermal infrared images and Ground Truth. Adversarial loss is defined as

where the input thermal infrared image \(I_\textrm{ir}\) is not only the input to the generator, but also to the discriminator as a conditional item.

Feature loss. \(L_\textrm{syn}\) and \(L_\textrm{adv}\) sometimes fail to ensure consistency between perceptual quality and objective metrics. To alleviate this problem, this paper uses feature loss [75], which compares the differences between feature maps extracted by dedicated pre-trained models. In this paper, this paper uses the VGG-16 CNN model [76] to extract the feature maps of the color results and Ground Truth, and then compute the Manhattan distance between the pair of feature maps. The feature loss is expressed as

where \(\varphi _{n}(\cdot )\) denotes the nth layer feature map in the VGG-16 network. \(C_n\), \(H_n\) and \(W_n\) denote the number of channels, height and width of the layer, respectively.

Total variation loss. In addition to using feature loss to recover high-level content, this paper also employs total variation loss [77] to enhance the spatial smoothness of color thermal images. The total variation loss is defined as:

where \(\nabla _{x}\) and \(\nabla _{y}\) denote the gradient of the image in the x and y directions. \(|\cdot |\) denotes the element-by-element absolute value of the given input.

Gradient similarity loss. This paper is the first to use gradient similarity loss to enhance the colorized image quality. Gradient similarity loss of visible images is used in the network to constrain the network, which is used to obtain texture information and luminance information of the images. The gradient similarity loss facilitates faster convergence of the network. The gradient similarity loss is defined as

where \(\nabla \) denotes the gradient.

Final loss function. The final loss function of the proposed E2GAN is presented as follows:

where \(\lambda _\textrm{adv}\), \(\lambda _\textrm{feat}\), \(\lambda _\textrm{syn }\), \(\lambda _\textrm{tv}\) and \(\lambda _\textrm{grad}\) denote the weights controlling the different loss shares in the complete loss function, respectively. The weights are set based on preliminary experiments on the training dataset.

Experiments

To verify the capability and quality of the proposed E2GAN, this paper performed a series of experiments on the processed KAIST (denoted as KAIST) dataset and the processed FLIR (denoted as FLIR) dataset. this paper compares the representative visible colorization networks and infrared colorization networks in recent years. This paper introduces the information on the datasets used in Sect. 4.1. Then this paper describes the experimental setting of the proposed E2GAN. Next, a comparison of the performance with other advanced networks is shown on the KAIST dataset and FLIR dataset. This is followed by ablation studies to verify the reasonableness and effectiveness of the proposed module and loss function. Subsequently, generalization experiments were conducted to verify that the proposed E2GAN has stronger robustness and generalization ability. Finally, the limitations of the proposed E2GAN are analyzed.

Dataset information

Although many visible-infrared datasets are available, most of them are unaligned. And when applying colorization tasks, colorization networks generate images with poor colorization quality and are prone to color confusion. Therefore, this paper selected two processed datasets for extensive experiments.

KAIST dataset: The KAIST multispectral pedestrian dataset [78] consists of a total of 95,328 images, each containing both RGB color and infrared versions of the image. The dataset captures a variety of regular traffic scenes including schoolyards, streets, and the countryside during daytime and nighttime, respectively. The image size is 640 \(\times \) 512. Although the KAIST dataset itself states that it is aligned, after careful observation, this paper found that some of the infrared images are still partially deviated from the visible images. And because the KAIST dataset is taken from the video continuous frame images, the neighboring images will not be very different from each other. After the washing operation, this paper selected 4755 daytime images and 2846 nighttime images in the training dataset. In the test dataset, 1455 daytime images and 797 nighttime images were selected. This paper uses only the daytime training dataset for training.

FLIR dataset: The FLIR datasetFootnote 1 has 8862 unaligned visible and infrared image pairs, which contain abundant scenes, such as roads, vehicles, pedestrians, and so on. These images are highly representative scenes in FLIR videos. In this dataset, this paper selects feature points by the control point selection algorithm to form a feature point matrix and use this matrix to manually align the FLIR dataset. After filtering the alignment, this paper left 4346 daytime images and 363 nighttime images in the training dataset. In the test dataset, 1455 daytime images and 104 nighttime images were selected. This paper uses only the daytime training dataset for training. Table 1 provides specific details about these datasets.

Implementation details

Each image is resized from an arbitrary size of the dataset to a fixed size of 256 \(\times \) 256 and then input to the training network. The proposed network is trained for 200 epochs, and the batchsize is set to 1. During training, this paper uses a “Lambda” learning rate decay strategy [40], where the learning rate is set to 0.0002. The number of channels in the first convolutional layer of the generator and discriminator is set to 64. This paper uses the Adam optimizer [79] with \(\beta _1\) = 0.5 and \(\beta _2\) = 0.999. The discriminator and generator are trained alternately until the proposed E2GAN converges. In all experiments, according to the experimental experience, this paper sets the weights of different losses in the final loss function to \(\lambda _\textrm{adv}\) = 1, \(\lambda _\textrm{feat}\) = 1, \(\lambda _\textrm{syn}\) = 15, \(\lambda _\textrm{tv}\) = 1 and \(\lambda _\textrm{grad}\) = 10, respectively. This paper implemented the network with the PyTorch framework [80] and performed it on an NVIDIA Titan Xp GPU for training.

where \(\textrm{lr}_\textrm{lambda}\) denotes the decaying learning rate factor, epoch denotes the current training round. \(n_{-}\textrm{epoch}\) denotes the number of training rounds with constant learning rate, and \(n_{-}\textrm{epoch}_{-}\textrm{decay}\) denotes the number of training rounds with decaying learning rate. \(\textrm{epoch}_\textrm{last}\) denotes the last training round. \(\textrm{lr}_\textrm{base}\) denotes the base learning rate, and \(\textrm{lr}_\textrm{new}\) denotes the updated learning rate.

In this paper, this paper uses the four representative image quality evaluation metrics for quantitative evaluation: SSIM [81], PSNR, LPIPS [82], and NIQE [83]. PSNR is used to measure the intensity difference between colorization results and Ground Truth, and higher PSNR indicates the intensity of two images is more similar. SSIM is used to measure the similarity between colorization results and Ground Truth, and a higher SSIM indicates a smaller difference. LPIPS is more consistent with human perception than PSNR and SSIM, and lower LPIPS indicates that the colorization results are more realistic and diverse. NIQE is a more effective indicator of non-reference colorization quality, and lower NIQE indicates better colorization quality. In addition, all quantitative experimental results are the average of all images in each test dataset. This paper also uses three evaluation metrics regarding computational complexity: generator parameters, Graphics Processing Unit (GPU) memory, and Multiply-Accumulate Operations (MACs). The lower the three parameters, the lower the computational complexity.

Comparison experiments

This paper selected the eight representative colorization methods and the proposed E2GAN on the KAIST dataset and FLIR dataset for comprehensive comparison. The comparison mainly includes recent approaches based on non-attention mechanisms for comparison, such as Pix2Pix [39], CycleGAN [40], ThermalGAN [47], TICC-GAN [18] and I2VGAN [23], and approaches based on attention mechanisms, such as U-GAT-IT [65] and CWT-GAN [67], and the latest contrast learning based method SRC [55]. Among them, TICC-GAN is the most similar to the approach, and this paper sets it as the baseline. Compared to the eight representative colorization methods, the proposed E2GAN accurately generates locally realistic textures and fine details. Regarding the comparison methods, this paper trained and tested them on the KAIST dataset and the FLIR dataset using the source code published by the authors and the hyperparameters provided.

Comparison more details by observational analysis using the KAIST dataset between proposed E2GAN and other colorization methods. From these results, it can be seen that the proposed E2GAN has a clear advantage in color realism and detail accuracy, especially for objects such as “vehicles” and “crosswalks”. It indicates that the method performs well in terms of both global quality and local perception

Comparison on the KAIST dataset

First of all, this paper performed comprehensive comparisons with the proposed E2GAN on the KAIST dataset using the comparison methods. As can be seen in Fig. 7, it can be seen that CycleGAN, ThermalGAN, and U-GAT-IT can only reconstruct most of the global structure, but the colorization results still look unrealistic while lacking in color information and local details. For example, critical objects such as “vehicles”, “crosswalks”, and “traffic signs” in visual perception are not accurately restored. Among all comparison methods, Pix2pix has the most model parameters. However, the network roughly recovered the overall chromaticity but failed to accurately estimate the luminance, and the colored images were severely blurred and lacked fine textures. While colorization is accomplished, there are still deficiencies in image structure and detail recovery. As the current state-of-the-art methods, TICC-GAN, I2VGAN, CWT-GAN, and SRC return quite reasonable and natural color images compared to the first four methods. However, it fails in terms of image details, especially in terms of tiny structures, and the contours of “crosswalks”, “buildings” and “roadblocks” are confusing. There are artifacts in the colored sky, and there is a semantic confusion effect. The proposed E2GAN has a clear advantage in color realism and detail accuracy, especially for objects such as “vehicles” and “crosswalks”. It indicates that the method performs well in terms of both global quality and local perception. More detailed information on the colorization details is shown in Fig. 8. The quantitative performance of each comparison method in the KAIST dataset is shown in Table 2, and the optimal values of each metric are marked in red to highlight. SRC achieves optimal results on NIQE, but its qualitative analysis shows serious distortion in its colorization results, which indicates that the unreferenced metrics are susceptible to noise, artifacts, and other conditions. The proposed E2GAN achieves competitive metrics and has low computational complexity. The good performance of the algorithm in PSNR and SSIM proves that the proposed E2GAN retains more structural information and texture features of the infrared images, and LPIPS proves that the algorithm is more relevant to the real image from the information theory point of view.

Comparison on the FLIR dataset

To fully reflect the advancedness of the proposed E2GAN, this paper is validated on the FLIR dataset with the same experimental settings and evaluation metrics as the KAIST dataset comparison experiments. As shown in Fig. 9, the colorization effect results of each comparison method for multiple scenes in the FLIR dataset are demonstrated. As can be seen from Fig. 10, the colorized images of the other comparison algorithms do not have obvious representation of the sky details in the infrared images and produce serious artifacts. In contrast, the colorized image of this paper not only retains the complete sky texture but also has no artifacts. Meanwhile, the colorized image of each comparison method does not have good colorization of the distant targets, but the colorized image of this paper has more complete and obvious distant targets, and the details in the image are also fully preserved, and the image as a whole is more consistent with human eye vision. This indicates that the method in this paper can still generate color images with higher perceptual quality and can learn the data distribution of different datasets with high robustness.

The quantitative performance of each comparison method on the FLIR dataset is shown in Table 3, and the optimal values of each metric are marked in red to highlight. The algorithms in this paper still have more obvious advantages in PSNR, SSIM, and LPIPS compared to other comparison methods, while the performance on NIQE also possesses a better performance. The analysis results of each method on the two data sets are the same, and the quantitative analysis results are consistent with the qualitative analysis results, which proves the effectiveness and advancement of the method in this paper. Meanwhile, it indicates that the proposed E2GAN is able to learn the data distribution of different datasets with extremely high robustness. The performance of each comparison method for a lot of random samples is shown in Fig. 11. It can be seen that compared with other comparison methods, the proposed E2GAN produces less performance degradation in complex scenarios and has stronger robustness.

Comparison of more details by observational analysis using the FLIR dataset between proposed E2GAN and other colorization methods. From these results, it can be seen that the proposed E2GAN has a clear advantage in color realism and detail accuracy, especially for objects such as “vehicles” and “trees”. It indicates that the method performs well in terms of both global quality and local perception

Ablation study

In addition to performing comparative experiments, this paper also performed ablation studies, which were used to demonstrate the reasonableness and validity of the proposed modules and composite loss functions in the proposed E2GAN. Specifically, this paper performed quantitative and qualitative analyses on the KAIST dataset and the FLIR dataset.

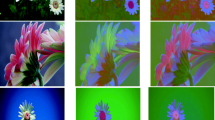

Importance of different modules

To further validate the reasonableness and effectiveness of several modules proposed in the proposed E2GAN, this paper performed the experimental analysis of different module structures on the KAIST dataset and FLIR dataset. Specifically, this paper designed four module structures. Table 4 shows the quantitative comparison of different module structures on the KAIST dataset and the FLIR dataset. Among them, Structure1 is a w/o multi-level dense module (replaced with one standard dense block [84]), Structure2 is a w/o feature fusion module (replaced with one standard residual block [85]), Structure3 is a w/o color-aware attention module (replaced with CBAM [86]), and Structure4 is the complete E2GAN structure. A comparison of the perceptual quality of the different module structures on the KAIST dataset and the FLIR dataset is shown in Fig. 12. It can be seen that by removing the multi-level dense module and the feature fusion module, the colorized images generated by the proposed E2GAN have reduced quality, and the edges are blurred, which fails to recover many important objects. The lack of the feature fusion module fails to prediction of the image’s detailed color, which leads Images color confusion. The absence of the color-aware attention module leads to semantic confusion and fails to emphasize the important parts of the image. In conclusion, each module of the proposed E2GAN is indispensable.

Importance of different loss functions

This paper further explored the effect of different loss functions on the composite loss function. This paper performed the experimental analysis of different loss function combinations on the KAIST dataset and the FLIR dataset. Specifically, this paper designed six combinations of loss functions. Table 5 shows the quantitative comparison of different loss function combinations on the KAIST dataset and the FLIR dataset. In particular, Loss1 is \(L_\textrm{adv}\), Loss2 is \(L_\textrm{adv}\)+\(L_\textrm{syn}\), Loss3 is \(L_\textrm{adv}\)+\(L_\textrm{syn}\)+\(L_\textrm{feat}\), Loss4 is \(L_\textrm{adv}\)+\(L_\textrm{syn}\)+\(L_\textrm{feat}\)+\(L_\textrm{tv}\), and Loss5 is \(L_\textrm{adv}\)+\(L_\textrm{syn}\)+\(L_\textrm{feat}\)+\(L_\textrm{tv}\)+\(L_\textrm{grad}\). Different loss function combinations are compared in terms of perceptual quality on the KAIST dataset and the FLIR dataset as shown in Fig. 13. It can be seen that \(L_\textrm{adv}\) can learn the implicit relationship between thermal infrared images and Ground Truth to generate accurate details, \(L_\textrm{syn}\) can improve the quality of colorized images, \(L_\textrm{feat}\) can increase the detail information, \(L_\textrm{tv}\) can reduce the impact of noise in the infrared dataset and generate smoother colorized images, and \(L_\textrm{grad}\) can effectively learn the gradient characteristics of real visible images from the training data, thus helping the generator to reconstruct more realistic and clear color images. Therefore, each of the loss functions is essential for generating high-quality colorized images.

Night to day colorization generalization

This paper also performed generalization experiments on thermal infrared images captured during the nighttime, with Pix2Pix, CycleGAN, ThermalGAN, U-GAT-IT, TICC-GAN, I2VGAN, CWT-GAN, and SRC. Figure 14 shows the colorization results of the proposed E2GAN and the comparison methods. All methods were trained on the daytime dataset only. Due to the lower contrast of nighttime infrared images, colorization is more difficult. Therefore, the contrast methods’ results of nighttime are more blurred compared to those of the daytime, and there is a problem of color confusion. There are serious distortions in the areas of people, cars, and trees in the picture, resulting in the inability to identify significant targets. In contrast, the proposed E2GAN still recovers most of the detailed textures, which are clearly visible in the colorization results, and significantly outperforms other comparison methods.

Limitations

The proposed E2GAN achieves excellent results on the KAIST dataset and the FLIR dataset, but there are still some problems. Figure 15 shows some failure cases. It can be seen that the proposed E2GAN can recover the global structure, but the local details are easily colored incorrectly or ignored directly. Examples include “shadows”, “buildings”, and “cars”. These failures contain repeating textures and less structural information, which makes it difficult for the network to colorize them. This may be due to problems in the dataset itself, the existing datasets are not diverse enough for the model to learn the color and detail information of the objects that do not appear in the training set. There are also such as low resolution, low contrast, and blurred visuals. Considering the difficulty of dataset acquisition, it may be necessary to pre-train the proposed network, which learns various semantic information of different scenes in other existing datasets. This paper will investigate it in the future work.

Conclusion

In this paper, this paper proposes an Efficient and Effective Generative Adversarial Network (E2GAN), which exhibits excellent visual quality in colorizing thermal infrared images. This paper demonstrates the advantages of the multi-level dense module, the color-aware attention module, and the feature fusion module over existing structures in feature extraction and detail recovery. Meanwhile, the proposed composite loss function is explored to facilitate the recovery of texture information and semantic information. Extensive experiments on thermal infrared image colorization are performed on the KAIST dataset and the FLIR dataset. The experimental results were compared with Pix2Pix, CycleGAN, ThermalGAN, U-GAT-IT, TICC-GAN, I2VGAN, CWT-GAN, and SRC. Quantitative results show that the performance of the method improves on all datasets in terms of different evaluation metrics such as SSIM, PSNR, LPIPS, and NIQE. Qualitative results also point out that the colorized images of the method improve in terms of detail recovery capability, semantic information, and artifact reduction. The unification of quantitative and qualitative results proves the superiority of the proposed E2GAN.

Qualitative analysis of night to day colorization generalization for the KAIST dataset and the FLIR dataset. Due to the lower contrast of nighttime infrared images, colorization is more difficult. In contrast, the proposed E2GAN still recovers most of the detailed textures, which are clearly visible in the colorization results, and significantly outperforms other comparison methods

Failure colorized cases by proposed E2GAN. It can be seen that the proposed E2GAN can recover the global structure, but the local details are easily colored incorrectly or ignored directly. Examples include “shadows”, “buildings”, and “cars”. These failures contain repeating textures and less structural information, which makes it difficult for the network to colorize them

There are three aspects in future work. First, to build a complete dataset, high-quality and diverse aligned thermal infrared-visible image pairs need to be acquired, which allows the network to learn more diverse color information. Secondly, more different generator architectures need to be explored to further improve the image quality, such as using a transformer encoder for the generator. Finally, the pre-training approach is attempted to improve the colorization quality by allowing the network to learn various semantic information of different scenes in other existing datasets.

Data Availability

Data related to the current study are available from the corresponding author on reasonable request.

References

Chen J, Liu Z, Jin D, Wang Y, Yang F, Bai X (2022) Light transport induced domain adaptation for semantic segmentation in thermal infrared urban scenes. IEEE Trans Intell Transp Syst 23(12):23194–23211. https://doi.org/10.1109/TITS.2022.3194931

Tang L, Yuan J, Zhang H, Jiang X, Ma J (2022) Piafusion: a progressive infrared and visible image fusion network based on illumination aware. Inf Fusion 83:79–92

Chen S, Xu X, Yang N, Chen X, Du F, Ding S, Gao W (2022) R-net: a novel fully convolutional network-based infrared image segmentation method for intelligent human behavior analysis. Infrared Phys Technol 123:104164

Tang L, Yuan J, Ma J (2022) Image fusion in the loop of high-level vision tasks: A semantic-aware real-time infrared and visible image fusion network. Inf Fusion 82:28–42

Chen Y, Li L, Liu X, Su X (2022) A multi-task framework for infrared small target detection and segmentation. IEEE Trans Geosci Remote Sens 60:1–9

Huang L, Dai S, Huang T, Huang X, Wang H (2021) Infrared small target segmentation with multiscale feature representation. Infrared Phys Technol 116:103755

Dai Y, Wu Y, Zhou F, Barnard K (2021) Asymmetric contextual modulation for infrared small target detection. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 950–959

Zuo Z, Tong X, Wei J, Su S, Wu P, Guo R, Sun B (2022) Affpn: attention fusion feature pyramid network for small infrared target detection. Remote Sens 14(14):3412

Ma J, Zhang H, Shao Z, Liang P, Xu H (2020) Ganmcc: A generative adversarial network with multiclassification constraints for infrared and visible image fusion. IEEE Trans Instrum Meas 70:1–14

Bao B, Fu H (2019) Scribble-based colorization for creating smooth-shaded vector graphics. Comput Gr 81:73–81

Chen C, Xu Y, Yang X (2019) User tailored colorization using automatic scribbles and hierarchical features. Digit Signal Process 87:155–165

Kim E, Lee S, Park J, Choi S, Seo C, Choo J (2021) Deep edge-aware interactive colorization against color-bleeding effects. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 14667–14676

Lu P, Yu J, Peng X, Zhao Z, Wang X (2020) Gray2colornet: transfer more colors from reference image. In: Proceedings of the 28th ACM international conference on multimedia, pp 3210–3218

Lee J, Kim E, Lee Y, Kim D, Chang J, Choo J (2020) Reference-based sketch image colorization using augmented-self reference and dense semantic correspondence. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5801–5810

Yin W, Lu P, Zhao Z, Peng X (2021) Yes, “attention is all you need”, for exemplar based colorization. In: Proceedings of the 29th ACM international conference on multimedia, pp 2243–2251

Shi M, Zhang J-Q, Chen S-Y, Gao L, Lai Y-K, Zhang F (2022) Deep line art video colorization with a few references. IEEE Trans Vis Comput Gr. https://doi.org/10.1109/TVCG.2022.3146000

Liang W, Ding D, Wei G (2021) An improved dualgan for near-infrared image colorization. Infrared Phys Technol 116:103764

Kuang X, Zhu J, Sui X, Liu Y, Liu C, Chen Q, Gu G (2020) Thermal infrared colorization via conditional generative adversarial network. Infrared Phys Technol 107:103338

Babu KK, Dubey SR (2020) Pcsgan: Perceptual cyclic-synthesized generative adversarial networks for thermal and nir to visible image transformation. Neurocomputing 413:41–50

Cheng F, Shi J, Yun L, Cao X, Zhang J (2020) From coarse to fine (fc2f): a new scheme of colorizing thermal infrared images. IEEE Access 8:111159–111171

Kim G, Kang K, Kim S, Lee H, Kim S, Kim J, Baek S.-H, Cho S (2022) Bigcolor: colorization using a generative color prior for natural images. In: European conference on computer vision. Springer, pp 350–366

Zhao Y, Po L-M, Cheung K-W, Yu W-Y, Rehman YAU (2020) Scgan: saliency map-guided colorization with generative adversarial network. IEEE Trans Circuits Syst Video Technol 31(8):3062–3077

Li S, Han B, Yu Z, Liu CH, Chen K, Wang S (2021) I2v-gan: unpaired infrared-to-visible video translation. In: Proceedings of the 29th ACM international conference on multimedia, pp 3061–3069

Yadav NK, Singh SK, Dubey SR (2022) Mobilear-gan: Mobilenet-based efficient attentive recurrent generative adversarial network for infrared-to-visual transformations. IEEE Trans Instrum Meas 71:1–9. https://doi.org/10.1109/TIM.2022.3166202

Wang H, Cheng C, Zhang X, Sun H (2022) Towards high-quality thermal infrared image colorization via attention-based hierarchical network. Neurocomputing 501:318–327. https://doi.org/10.1016/j.neucom.2022.06.021

Luo F, Li Y, Zeng G, Peng P, Wang G, Li Y (2022) Thermal infrared image colorization for nighttime driving scenes with top-down guided attention. IEEE Trans Intell Transp Syst 23(9):15808–15823. https://doi.org/10.1109/TITS.2022.3145476

Cheng P, Chen M, Stojanovic V, He S (2021) Asynchronous fault detection filtering for piecewise homogenous markov jump linear systems via a dual hidden markov model. Mech Syst Signal Process 151:107353. https://doi.org/10.1016/j.ymssp.2020.107353

Zoph B, Le QV (2016) Neural architecture search with reinforcement learning. arXiv preprint arXiv:1611.01578

Gaier A, Ha D (2019) Weight agnostic neural networks. Adv Neural Inf Process Syst 32

Bacanin N, Stoean R, Zivkovic M, Petrovic A, Rashid TA, Bezdan T (2021) Performance of a novel chaotic firefly algorithm with enhanced exploration for tackling global optimization problems: Application for dropout regularization. Mathematics 9(21):2705

Malakar S, Ghosh M, Bhowmik S, Sarkar R, Nasipuri M (2020) A ga based hierarchical feature selection approach for handwritten word recognition. Neural Comput Appl 32:2533–2552

Bacanin N, Zivkovic M, Al-Turjman F, Venkatachalam K, Trojovskỳ P, Strumberger I, Bezdan T (2022) Hybridized sine cosine algorithm with convolutional neural networks dropout regularization application. Sci Rep 12(1):1–20

Shen L, Tao H, Ni Y, Wang Y, Vladimir S (2023) Improved yolov3 model with feature map cropping for multi-scale road object detection. Meas Sci Technol 34(4):045406. https://doi.org/10.1088/1361-6501/acb075

Tao H, Cheng L, Qiu J, Stojanovic V (2022) Few shot cross equipment fault diagnosis method based on parameter optimization and feature mertic. Meas Sci Technol 33(11):115005. https://doi.org/10.1088/1361-6501/ac8368

Dai X, Yuan X, Wei X (2022) Data augmentation for thermal infrared object detection with cascade pyramid generative adversarial network. Appl Intell 52(1):967–981

Tang L, Xiang X, Zhang H, Gong M, Ma J (2023) Divfusion: darkness-free infrared and visible image fusion. Inf Fusion 91:477–493. https://doi.org/10.1016/j.inffus.2022.10.034

Larsson G, Maire M, Shakhnarovich G (2016) Learning representations for automatic colorization. In: European conference on computer vision. Springer, pp 577–593

Deshpande A, Lu J, Yeh M.-C, Jin Chong M, Forsyth D (2017) Learning diverse image colorization. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6837–6845

Isola P, Zhu J-Y, Zhou T, Efros AA (2017) Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1125–1134

Zhu J-Y, Park T, Isola P, Efros AA (2017) Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE international conference on computer vision, pp 2223–2232

Vitoria P, Raad L, Ballester C (2020) Chromagan: adversarial picture colorization with semantic class distribution. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 2445–2454

Limmer M, Lensch HP (2016) Infrared colorization using deep convolutional neural networks. In: 2016 15th IEEE international conference on machine learning and applications (ICMLA). IEEE, pp 61–68

Berg A, Ahlberg J, Felsberg M (2018) Generating visible spectrum images from thermal infrared. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp 1143–1152

Almasri F, Debeir O (2020) Robust perceptual night vision in thermal colorization. arXiv preprint arXiv:2003.02204

Liu S, Gao M, John V, Liu Z, Blasch E (2020) Deep learning thermal image translation for night vision perception. ACM Trans Intell Syst Technol (TIST) 12(1):1–18

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial networks. Commun ACM

Kniaz V.V, Knyaz V.A, Hladuvka J, Kropatsch W.G, Mizginov V (2018) Thermalgan: multimodal color-to-thermal image translation for person re-identification in multispectral dataset. In: Proceedings of the European conference on computer vision (ECCV) workshops, pp 0–0

Saxena D, Kulshrestha T, Cao J, Cheung S-C (2022) Multi-constraint adversarial networks for unsupervised image-to-image translation. IEEE Trans Image Process 31:1601–1612

Li B, Zhu Y, Wang Y, Lin C-W, Ghanem B, Shen L (2021) Anigan: style-guided generative adversarial networks for unsupervised anime face generation. IEEE Trans Multimedia 24:4077–4091. https://doi.org/10.1109/TMM.2021.3113786

Xu W, Long C, Wang R, Wang G (2021) Drb-gan: a dynamic resblock generative adversarial network for artistic style transfer. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 6383–6392

Peng X, Peng S, Hu Q, Peng J, Wang J, Liu X, Fan J (2022) Contour-enhanced cyclegan framework for style transfer from scenery photos to Chinese landscape paintings. Neural Comput Appl 34:1–22

Shi Y, Han L, Han L, Chang S, Hu T, Dancey D (2022) A latent encoder coupled generative adversarial network (le-gan) for efficient hyperspectral image super-resolution. IEEE Trans Geosci Remote Sens 60:1–19

Dharejo FA, Deeba F, Zhou Y, Das B, Jatoi MA, Zawish M, Du Y, Wang X (2021) Twist-gan: towards wavelet transform and transferred gan for spatio-temporal single image super resolution. ACM Trans Intell Syst Technol (TIST) 12(6):1–20

Gong Y, Liao P, Zhang X, Zhang L, Chen G, Zhu K, Tan X, Lv Z (2021) Enlighten-gan for super resolution reconstruction in mid-resolution remote sensing images. Remote Sens 13(6):1104

Jung C, Kwon G, Ye JC (2022) Exploring patch-wise semantic relation for contrastive learning in image-to-image translation tasks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 18260–18269

Chen L, Liu Y, He Y, Xie Z, Sui X (2023) Colorization of infrared images based on feature fusion and contrastive learning. Opt Lasers Eng 162:107395

Misra D, Nalamada T, Arasanipalai A.U, Hou Q (2021) Rotate to attend: convolutional triplet attention module. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 3139–3148

Hou Q, Zhou D, Feng J (2021) Coordinate attention for efficient mobile network design. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 13713–13722

Elhassan MA, Huang C, Yang C, Munea TL (2021) Dsanet: dilated spatial attention for real-time semantic segmentation in urban street scenes. Expert Syst Appl 183:115090

Liu S.-A, Xie H, Xu H, Zhang Y, Tian Q (2022) Partial class activation attention for semantic segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 16836–16845

Wang Z, Wu Y, Wang J, Xu J, Shao W (2022) Res2fusion: infrared and visible image fusion based on dense res2net and double nonlocal attention models. IEEE Trans Instrum Meas 71:1–12

Jiang L, Fan H, Li J (2022) A multi-focus image fusion method based on attention mechanism and supervised learning. Appl Intell 52(1):339–357

Obeso AM, Benois-Pineau J, Vázquez MSG, Acosta AÁR (2022) Visual vs internal attention mechanisms in deep neural networks for image classification and object detection. Pattern Recogn 123:108411

Miao S, Du S, Feng R, Zhang Y, Li H, Liu T, Zheng L, Fan W (2022) Balanced single-shot object detection using cross-context attention-guided network. Pattern Recogn 122:108258

Kim J, Kim M, Kang H, Lee K (2019) U-gat-it: unsupervised generative attentional networks with adaptive layer-instance normalization for image-to-image translation. arXiv preprint arXiv:1907.10830

Emami H, Aliabadi MM, Dong M, Chinnam RB (2020) Spa-gan Spatial attention gan for image-to-image translation. IEEE Trans Multimedia 23:391–401

Lai X, Bai X, Hao Y (2021) Unsupervised generative adversarial networks with cross-model weight transfer mechanism for image-to-image translation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 1814–1822

Tang H, Liu H, Xu D, Torr PH, Sebe N (2021) Attentiongan: unpaired image-to-image translation using attention-guided generative adversarial networks. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2021.3105725

Miyato T, Kataoka T, Koyama M, Yoshida Y (2018) Spectral normalization for generative adversarial networks. arXiv preprint arXiv:1802.05957

Li B, Xiao C, Wang L, Wang Y, Lin Z, Li M, An W, Guo Y (2022) Dense nested attention network for infrared small target detection. IEEE Trans Image Process. https://doi.org/10.1109/TIP.2022.3199107

Guo M, Zhang Z, Liu H, Huang Y (2022) Ndsrgan: a novel dense generative adversarial network for real aerial imagery super-resolution reconstruction. Remote Sens 14(7):1574

Fan M, Lai S, Huang J, Wei X, Chai Z, Luo J, Wei X (2021) Rethinking bisenet for real-time semantic segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9716–9725

Li G, Wang Y, Liu Z, Zhang X, Zeng D (2022) Rgb-t semantic segmentation with location, activation, and sharpening. IEEE Trans Circ Syst Video Technol. https://doi.org/10.1109/TCSVT.2022.3208833

Liu Y, Shen J, Yang L, Bian G, Yu H (2023) Resdo-unet: a deep residual network for accurate retinal vessel segmentation from fundus images. Biomed Signal Process Control 79:104087

Johnson J, Alahi A, Fei-Fei L (2016) Perceptual losses for real-time style transfer and super-resolution. In: European conference on computer vision. Springer, pp 694–711

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Aly HA, Dubois E (2005) Image up-sampling using total-variation regularization with a new observation model. IEEE Trans Image Process 14(10):1647–1659

Hwang S, Park J, Kim N, Choi Y, So Kweon I (2015) Multispectral pedestrian detection: Benchmark dataset and baseline. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1037–1045

Kingma DP, Ba J. Adam: a method for stochastic optimization (2014). arXiv preprint arXiv:1412.6980

Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L et al (2019) Pytorch: an imperative style, high-performance deep learning library. Adv Neural Inf Process Syst 32

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Zhang R, Isola P, Efros AA, Shechtman E, Wang O (2018) The unreasonable effectiveness of deep features as a perceptual metric. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 586–595

Mittal A, Soundararajan R, Bovik AC (2012) Making a “completely blind’’ image quality analyzer. IEEE Signal Process Lett 20(3):209–212

Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4700–4708

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Woo S, Park J, Lee J-Y, Kweon IS (2018) Cbam: Convolutional block attention module. In: Proceedings of the European conference on computer vision (ECCV), pp 3–19

Funding

This work was supported by Jilin Province Development and Reform Commission (Grant no. FG2021236JK).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

We declare that this paper has no financial or personal relationships that can influence the work.

Code Availability

The codes used during the study are available from the corresponding author by request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, Y., Zhan, W., Jiang, Y. et al. Exploring efficient and effective generative adversarial network for thermal infrared image colorization. Complex Intell. Syst. 9, 7015–7036 (2023). https://doi.org/10.1007/s40747-023-01079-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-023-01079-3