Abstract

Directional sensor networks (DSNs) are ad-hoc networks used in a variety of industrial applications, typically to monitor and continuously cover all specified targets in an observation field. Such networks comprise numerous configurable directional sensors, each of which can operate in one of its possible directions with one of its adjustable ranges. Although energy harvesting is applied in these battery-hungry applications, battery management and network lifetime maximization remain prominent challenges. In this paper, network lifetime extension is formulated as a discrete optimization problem, which is known to be non-deterministic polynomial-time hard (NP-Hard). To solve this combinatorial problem, a discrete cuckoo search algorithm (D-CSA) is designed and invoked over several rounds. A cover is a subset of configured sensors capable of monitoring all targets in the observation field. In each round, the most efficient cover is constructed along with its activation time. During the activation time, the sensors in the cover are kept in wakeup mode while the others are put in sleep mode to save energy. Unlike other meta-heuristic algorithms, the proposed algorithm uses newly defined discrete walking-around procedures that strike a good balance between exploration and exploitation in this complex search space. The proposed algorithm has been evaluated in different scenarios. The simulation results across these scenarios show that the proposed algorithm improves average lifespan by about 20.29%, 19.55%, 14.40%, 14.51%, 7.70% and 8.03% over the H-MNLAR, Hm-LifMax-BC, GA, ACOSC, H-GATS, and HDPSO algorithms, respectively. The results also indicate that the proposed algorithm scales well.

Introduction

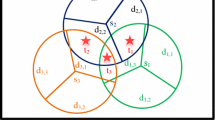

Directional sensor networks (DSNs) comprise a set of directional sensors with adjustable ranges, such as infrared, radar, ultrasonic, and video camera sensors, which are typically used for sensing, monitoring, control, and surveillance of particular environments [1]. These networks have attracted considerable attention in the research community because of their pervasive and diverse real-world applications, especially in the internet of things (IoT) context. DSNs are applied in industries such as gas, oil, military, national security, wildlife, and forestry, where the goal is to continuously maintain full coverage of a predefined set of targets [2,3,4,5]. In other words, some industries are so critical that permanent observation of all targets is vital, so that an appropriate reaction to a disaster or an event can be carried out in time [1]. An important feature of a typical directional sensor is that, at any given time, it is active in only one of its possible directions and with one sensing range chosen from a set of possible ranges. It therefore monitors a sector, unlike a traditional sensor, which monitors a disk. Figure 1 depicts a typical directional sensor \({S}_{i}\) that can work in one of its three possible directions (each direction covers only 120 degrees) and can be activated in one of its two possible ranges. In the example of Fig. 1, if the sensor \({S}_{i}\) is activated in the first direction with the first range, denoted \({S}_{i}\)(1,1), it can observe the target \({t}_{1}\). If it is activated in the second direction with that same range, denoted \({S}_{i}\)(2,1), it monitors no target; only when its range is adjusted to the second level, denoted \({S}_{i}\)(2,2), can it observe the target \({t}_{2}\). Note that, for the target \({t}_{1}\), \({S}_{i}\)(1,1) is the optimal configuration of the sensor \({S}_{i}\) because it monitors \({t}_{1}\) with the lowest possible power consumption.
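The sector-like sensing model of the Fig. 1 example can be illustrated with a minimal Python sketch. The geometry is an assumption made only for illustration: direction j is taken to span the angles from (j-1)·360/MaxDir up to j·360/MaxDir, measured counter-clockwise from the positive x-axis, and range k is taken to reach k times 30 m. The function names `covers` and `optimal_config` are hypothetical and do not come from the paper.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def covers(sensor: Point, target: Point, direction: int, rng: int,
           max_dir: int = 3, range_step: float = 30.0) -> bool:
    """True if the sensor, configured as S_i(direction, rng), senses the target.

    Assumed geometry (not specified in the text): direction j spans the angles
    [(j-1)*360/max_dir, j*360/max_dir), counter-clockwise from the positive
    x-axis, and range k reaches k * range_step metres.
    """
    dx, dy = target[0] - sensor[0], target[1] - sensor[1]
    if math.hypot(dx, dy) > rng * range_step:
        return False  # target lies beyond the adjusted sensing range
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    width = 360.0 / max_dir
    return (direction - 1) * width <= angle < direction * width


def optimal_config(sensor: Point, target: Point, max_dir: int = 3,
                   max_rng: int = 2,
                   range_step: float = 30.0) -> Optional[Tuple[int, int]]:
    """Smallest-range (direction, range) pair that senses the target, or None."""
    for rng in range(1, max_rng + 1):  # try the cheapest range first
        for direction in range(1, max_dir + 1):
            if covers(sensor, target, direction, rng, max_dir, range_step):
                return direction, rng
    return None
```

With a sensor at the origin, a target at (10, 10) would be served by the configuration (1, 1), while a target at (-40, 10) lies in the second sector but needs the second range, mirroring the roles of \({t}_{1}\) and \({t}_{2}\) in the example.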

One of the most important concerns in DSNs is battery management. Although energy harvesting technologies such as solar power aim to remove the need to replace or recharge depleted batteries, power management, and hence lifespan improvement, remains a prominent challenge in DSNs, because harvesting technology is either expensive or does not work properly at night or in foggy and cloudy conditions [6, 7]. Since the sensors are tied to limited battery power, network lifetime maximization is a major challenge in this type of network. The network lifetime is the time period from the moment the network starts working until the first target falls out of coverage because some sensors’ batteries are depleted. All targets must be continuously monitored during this period, so only an intelligent and dynamic scheduling algorithm can handle this complex problem. Maximizing the lifetime of a DSN is an NP-Hard problem [4, 20, 30]; in particular, the maximum network lifetime with adjustable sensor ranges (MNLAR) problem is a well-known NP-Hard problem [5, 8, 15]. The DSN comprises n directional sensors placed at n different locations to continuously observe m predefined targets. Because of view-angle and battery limitations, the number of sensor nodes is commonly several times the number of targets to ensure reliable field monitoring. Each sensor node has two states: active (wakeup) and passive (sleep). In sleep mode, the sensor’s power consumption is negligible and can be ignored. In addition, the overhead of switching between sensor modes is assumed to be zero. It is desirable to switch a sensor from wakeup to sleep mode whenever another sensor monitors the same targets at the same time, making the first sensor redundant.
In other words, it is desirable to reduce the coverage overlap among sensors. In wakeup mode, however, battery consumption depends on the adjusted sensing range: the larger the range, the greater the battery consumption. A heuristic that engages sensors to monitor all targets must take two points into account. First, it must adjust each sensor’s range optimally. The optimal sensing range for a target is the one that cannot be reduced without losing the target; in other words, the range of a sensor activated for a target must be set to the minimum possible radius. Second, it must check whether the engaged sensor, with this optimal range, also covers other targets. If so, no additional sensors are needed for those already covered targets, and the focus should shift to configuring other sensors for the targets that remain uncovered. In this way, sensors that are active but idle can be switched to sleep mode, and more battery is saved toward prolonging the lifetime. To this end, an intelligent scheduling algorithm should periodically wake up some sensors and put others to sleep. The dynamic scheduler must therefore build a cover set (CS) in each time interval that covers all of the predefined targets in the observation field. The CS is a set of active (wakeup) sensors, each with its optimally adjusted range and a suitable direction, that together cover all of the targets in the field; all other available sensors are put in sleep mode to save energy. For each CS, an upper time limit is used as a threshold. This threshold depends strongly on the sensor in the current CS with the least remaining battery power, because the depletion of that sensor’s battery leaves some targets out of coverage.
With this strategy, the lifetime is very likely to be extended. The proposed scheduler uses the sensors in the current CS for the threshold duration to cover all targets continuously; once that time has elapsed, a new CS is constructed, its sensors are set to wakeup mode, and the remaining sensors are put in sleep mode to save battery life. This is repeated until no CS can be built. To do this efficiently, the proposed scheduler prioritizes targets when constructing a new cover. Hereafter, the term cover is used for the cover set (CS). If the scheduler prioritizes targets by their criticality in the field and then configures sensors optimally according to target priority, energy is saved efficiently. This method is also used to generate the initial population. The critical target is the target for which the total remaining battery of all sensors able to monitor it is the smallest among all targets. The network lifetime is the sum of the activation times of all constructed CSs. If each CS and its activation time are selected optimally, then the resulting network lifetime is optimal by induction [9]; that is, the algorithm returns an optimal solution provided that all of its sub-problems return optimal sub-solutions. Consequently, if the best CS with the most suitable upper time limit is selected in each time interval, the final result will be optimal. As the stated problem is NP-Hard, reaching the optimal solution is very hard. Therefore, this paper aims to present an efficient solution. The main objective of the paper is to extend the network lifetime by exploiting different techniques.
Since the search space is very large and complex, this paper presents a meta-heuristic algorithm that finds an efficient cover along with its activation time in each round, and then switches between covers according to their activation times to prolong the network lifetime. Its objective function minimizes the cover’s battery consumption: it returns an efficient cover whose sensors consume the least total battery, and switches off the other, unnecessary sensors. In addition, to use all sensors’ batteries uniformly, a second term is added to the objective function, which steers the search toward covers with both minimum total battery usage and an even distribution of battery consumption. This fitness leaves more opportunity to build new covers in forthcoming rounds because it saves more energy. Several heuristic and meta-heuristic algorithms have been presented in the literature to solve the well-known MNLAR problem. Exact and heuristic methods were proposed by Cerulli et al. to maximize network lifetime in wireless sensor networks with adjustable sensing ranges [5]. In that work, the network lifetime challenge was modeled as an optimization problem and solved by different greedy algorithms. In [30], the authors improved on this approach by proposing ad-hoc sensing ranges between the minimum and maximum range of each sensor, considering the values that allow monitoring with the minimum energy consumption. A similar idea was proposed for directions by Rossi et al. in [15]. Other heuristic methods were presented by Mohamadi et al. for the DSN environment [8]. Although both proposed algorithms were promising in their final results, they need more operators to explore larger search spaces. A genetic-based meta-heuristic algorithm has been presented to solve the MNLAR problem by exploring the search space efficiently [4].
It works better than the mentioned heuristics, but it relies on simple crossover and mutation operators. A particle swarm optimization (PSO)-based meta-heuristic algorithm was presented to solve the target-barrier coverage problem in DSNs with rotatable sensors in specific contexts such as ecological reserve and contamination monitoring [10]. As the search space of the stated problem is discrete in nature, intrinsically continuous optimization algorithms such as those based on PSO, grey wolf optimization (GWO), and the whale optimization algorithm (WOA) cannot handle these kinds of problems efficiently. Genetic algorithms (GAs), on the other hand, are adaptable to both discrete and continuous search spaces, but they are susceptible to getting stuck in local optima [11]. One promising optimization algorithm that accepts even worse solutions in order to escape the local-optimum trap is the simulated annealing algorithm (SA) [12]. Although it returns good solutions, especially in discrete search spaces, it is inherently inclined toward local search and cannot handle large-scale search spaces. For these reasons, this paper presents a discrete version of the cuckoo search optimization algorithm (D-CSA), which does not have the aforesaid shortcomings, to solve the well-known MNLAR problem. It is worth mentioning that the proposed D-CSA differs fundamentally from the classic CSA in the Lévy flight part. The canonical CSA uses a continuous Lévy flight procedure, whose continuous values would then be converted to discrete values suited to this paper’s problem by either S-shaped or V-shaped transfer functions [43]; both, however, led to poor results experimentally. To overcome this, a novel discrete Lévy flight concept is incorporated, which leads to better results by directly and uniformly permuting the search space. To solve the MNLAR problem, the proposed D-CSA algorithm benefits from several advantages.
First, similar to SA, it accepts even worse solutions to escape the local-optimum trap. Second, it is a population-based algorithm, unlike SA, which is a single-point algorithm; it therefore has multiple agents to explore unexplored regions of the search space globally. Third, it uses the Lévy flight concept to explore the search space uniformly [13]. To this end, semi-random discrete walking-around procedures are applied, which adapt the Lévy flight concept to discrete space in order to permute solutions effectively toward the global best. In this phase, a variety of operators are introduced, each of which is called randomly and without bias, unlike other meta-heuristic algorithms, which apply a limited set of operators, sometimes in a biased way. In short, the proposed D-CSA is run in several rounds. In each round, it returns the most efficient cover along with its effective activation time. The scheduler keeps the sensors in this cover in wakeup mode and schedules the remaining sensors in sleep mode. After the activation time has elapsed, another cover is created, followed by sleep/wakeup scheduling of the available sensors. This procedure is repeated until the remaining battery of the available sensors no longer allows another cover to be built. The sum of the activation times is the network lifetime.
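The critical-target prioritization described in this introduction can be sketched in Python as follows. This is only a greedy stand-in written for illustration, not the D-CSA itself: the names `critical_target` and `greedy_cover`, the `(sensor, direction, range)` tuple layout, and the tie-breaking rule are all assumptions. Here `tot` maps each configuration to the set of targets it senses, `battery` maps sensor ids to residual energy, and `delta` maps a range level to its energy use per time unit.

```python
from typing import Dict, List, Optional, Set, Tuple

Config = Tuple[int, int, int]  # (sensor id, direction, range level)


def critical_target(uncovered: Set[int], tot: Dict[Config, Set[int]],
                    battery: Dict[int, float]) -> int:
    """Target whose potential observers hold the least total residual battery."""
    def supply(t: int) -> float:
        sensors = {c[0] for c, ts in tot.items()
                   if t in ts and battery[c[0]] > 0}
        return sum(battery[s] for s in sensors)
    return min(sorted(uncovered), key=supply)


def greedy_cover(targets: Set[int], tot: Dict[Config, Set[int]],
                 battery: Dict[int, float],
                 delta: Dict[int, float]) -> Optional[List[Config]]:
    """Build one cover by serving the most critical target first.

    Returns None when some target cannot be served by any unused,
    non-depleted sensor. Each sensor is used in at most one configuration.
    """
    chosen: Dict[int, Config] = {}
    uncovered = set(targets)
    while uncovered:
        t = critical_target(uncovered, tot, battery)
        candidates = [c for c, ts in tot.items()
                      if t in ts and c[0] not in chosen and battery[c[0]] > 0]
        if not candidates:
            return None
        # Assumed preference: cheapest range, then the best-charged sensor.
        best = min(candidates, key=lambda c: (delta[c[2]], -battery[c[0]], c))
        chosen[best[0]] = best
        uncovered -= tot[best]  # targets covered as a side effect need no sensor
    return list(chosen.values())
```

Because each pick is made greedily, such a builder can miss covers that a population-based search would find, which is one reason a construction of this kind is more plausible as a seed for an initial population than as a final scheduler.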

Therefore, the novelty of the current paper is as follows:

- It formulates the MNLAR issue as a discrete optimization problem, which is NP-Hard. The proposed formulation clarifies the problem and its constraints.
- It presents a discrete cuckoo search optimization algorithm (D-CSA) to solve this combinatorial problem.
- It presents a variety of discrete Lévy flight walking-around procedures for efficient and uniform permutation of the discrete search space toward the global best.
- It defines different scenarios and settings to validate the performance of the proposed D-CSA against existing state-of-the-art algorithms.

The rest of this paper is structured as follows. Sect. “Related works” briefly reviews related works. Sect. “Problem statement” is dedicated to the problem statement, with subsections that introduce the notations and the problem formulation. The block diagram and the proposed algorithm are presented in Sect. “Proposed discrete cuckoo search algorithm for lifetime maximization in DSN”. Sect. “Performance evaluation” presents the performance evaluation. Sect. “Conclusion and future work” concludes the paper and outlines future directions.

Related works

One of the most important applications of DSNs is target coverage in a specific observation field. Target coverage means that data from predetermined points in the environment are gathered continuously, so that the operator at the sink can react appropriately and in time, whether or not he or she is in the field. Apart from the DSN’s intrinsic constraints in terms of memory and processing capacity, maximizing the network lifespan is a major challenge owing to the sensors’ limited battery power. Different solutions have been proposed in the literature to improve the lifetime of these networks; they can be classified into three categories: exact, heuristic, and meta-heuristic approaches. Coverage algorithms that maximize network lifetime while observing all targets are either target-centric or area-centric. In the former, a fixed and limited number of targets are distributed in a particular environment and all of them must be continuously monitored; the goal is to use as few sensors as possible [14]. In the latter, no specific targets are considered, but a given area must be permanently monitored; the goal is to reduce the view overlap among sensors to extend network lifetime [15]. This paper concentrates on target coverage and network lifespan maximization. Several exact and heuristic methods were proposed by Cerulli et al. in 2012 to extend network lifetime in wireless sensor networks with adjustable ranges [5]. The authors modeled the issue as a constraint programming problem whose goal was to find different cover sets (CSs), each of which is activated for an appropriate activation time. To solve this problem, different heuristics and an exact algorithm based on column generation techniques were introduced [5].
In their application, sensors were adjustable only in range, and they dissipated much battery energy because they monitored targets in a disk-like rather than sector-like fashion. Some heuristic algorithms have been proposed by Mohamadi et al. to solve the MNLAR problem [8]. Each heuristic uses a different criterion for building a CS, such as the remaining battery power of the sensors or the criticality of the targets. These criteria are then weighted and combined in a more comprehensive heuristic. Although the proposed heuristics were promising in their final results, further improvement is possible because they use battery non-uniformly, especially in larger search spaces. Similar heuristic works have been proposed by Jinglan Jian et al. to maximize full-view target coverage in camera sensor networks [1]. Another heuristic algorithm was proposed to deploy sensors in a wireless network so as to maximize object coverage subject to sensor angle and battery limitations [16]. Along the same line, other heuristics were proposed by Tan and Jarvis to balance detection and coverage of targets [17]. A hierarchical target-oriented multi-agent coordination framework (HiT-MAC) was presented by Xu et al. in 2020 for the target coverage maximization problem [18]. The problem is formulated as an integer linear programming (ILP) problem, and a two-level heuristic was developed to solve this combinatorial problem. A heuristic-based, target-coverage-aware algorithm was presented in 2019 to address network lifetime maximization with respect to sensor angles and battery limitations [19].

As the exact and heuristic algorithms behave like greedy algorithms guided by predetermined criteria, they are not able to refine solutions toward the global optimum. For this reason, they cannot improve the rudimentary solutions obtained along the way. In addition, the majority of their solutions are sub-optimal, especially in large-scale search spaces. Several meta-heuristic solutions have therefore been presented in the literature to solve the MNLAR problem, with results that are relatively better than those of the heuristics. Some heuristics and a meta-heuristic algorithm based on SA have been proposed as an energy-efficient coverage-preserving protocol for wireless networks [21]. The main focus of these proposals was to switch between high-energy and low-energy modes at suitable times to manage network power consumption. Another meta-heuristic, a genetic-based algorithm, was devised to maximize the number of CSs in DSNs while covering all targets at the same time [22]. The proposed GA’s operators were designed to meet two objectives. First, the network lifetime is increased by building more CSs. Second, the remaining battery of the sensors is preserved so that the next CS can be constructed [23]. A multi-objective coverage optimization based on a memetic algorithm has been presented for directional sensor networks [24]. Its main objective was to extend the network lifespan; to this end, the algorithm’s operators are designed to use fewer sensors in each cover set. Another genetic-based algorithm was proposed by Alibeiki et al. in 2019, considering adjustable sensor ranges along with view-angle direction [4]. The fitness function of their algorithm considered the minimum power usage of each CS but paid no attention to uniform energy usage among sensors, so further improvement is possible.
A learning automata-based algorithm was presented by Razali et al. to solve the priority-based target coverage problem in DSNs with adjustable sensing ranges [25]. A distributed particle swarm optimization (D-PSO) algorithm was designed to reach near-optimal coverage of a closed virtual target-barrier in directional sensor networks with rotatable sensors [10]. A bat-inspired algorithm was applied to connected target coverage in WSNs [26]. It searches for appropriate sensors to activate for sensing targets; based on these first selected nodes, the other nodes between them and the sink are gradually selected. Fan and Liang [27] proposed a hybrid discrete meta-heuristic algorithm for solving the target coverage problem in visual directional sensor networks. They presented a discrete PSO algorithm to manage swarm members in the search space, but for exploration they used the crossover operator of the genetic algorithm. Their algorithm tries to monitor the maximum number of targets with the minimum number of sensors.

Mottaki et al. in [33] presented a genetic-based approach for solving well-known target Q-coverage problem in both over-provisioned and under-provisioned DSN environment. Since full target coverage and battery management in such battery-limited networks are of the most challenges, presenting an algorithm which pays on both goals at the same time is favorable. To this end, Mottaki et al. proposed two target-oriented GA-based algorithm in which the former was extended to cover the targets in over-provisioned conditions and the latter was designed for the same goal in the under-provisioned circumstance networks. An efficient hybrid algorithm was proposed in literature to apply cover sets scheduling in the wireless sensor networks (WSNs) to maximize network lifetime [34]. The proposed hybrid algorithm engages both GA and tabu search algorithms to maximize the network lifespan. First, it forms several cover sets; then, it schedules which cover must be utilized in the right sequence. The tabu search method that they utilized provides a list of prohibited movement of covers to prevent engaging the previously performed movement as many as possible. This limitation in utilizing movement tries to produce solutions with high rate of diversity. A heuristic algorithm was developed by Hong et al. in [35] to maximize network lifetime for strong barrier coverage of 3D camera sensor networks. The authors modeled the lifetime maximization barrier coverage to the LifMax-BC problem. Then, they proved the stated problem is an NP-Hard. To solve this complex problem in the limited time frame, the graph-theory-based heuristic algorithm was developed to figure out either homogeneous or heterogeneous networks in which in the first network the utilization of sensors is done with uniform working duration whereas in the second network the utilization of sensors is done with different working duration. A heuristic algorithm based on 3D-Voronoi and K-means approaches was extended by Gou et al. 
in [36] to present an energy-efficient coverage enhancement solutions in 3D heterogeneous WSN networks. To this end, their proposed algorithm works in three phases. At first, a random node deployment is performed; then, the twice node deployment is done using a new strategy to improve uniformity node deployment. At the second phase, the optimal perceptual radius is measured by incorporating K-means and 3D-Voronoi partitioning methods to ameliorate the quality of network coverage. At the third phase that is the final phase, a multi-hop communication path and feedback working are suggested to manage battery consumption and network lifetime expansion as a consequence. A continuous action-set learning automaton (CALA) is applied to cover all of the targets in the observing field and to prolong network age of WSN networks [37]. As the sensing angle is of the most important parameters of the stated problem, an automaton randomly selects one action from continuous actions set which is independent from other automata. This randomly selected action is utilized on the random environment; so, the environment returns its feedback signals from environment. That automaton updates sensing angle associated to that especial sensor by considering reward function which is directly dependent on the objective of the stated problem. Therefore, the most efficient angle of each sensor is adjusted. A hybrid meta-heuristic algorithm namely enhanced firefly sparrow search algorithm (EFSSA) was propounded by Wang et al. in [38] to figure out network lifetime maximization problem in WSN environment. The novelty of the proposed approach is to utilize numerous strategies chief among is an improvement strategy. This strategy follows in threefolds. In the Elite Reverse Strategy (ERS), the sparrow search algorithm (SSA) that was first introduced in 2020 [39] is called to generate an initial semi-random solutions. To improve the previously generated solutions, the ERS method is called. 
Since the SSA approach easily gets stuck in local optima, the firefly disturbance strategy is incorporated as the second strategy to shift solutions toward the global optimum. The previous strategies generate 2n solutions. A new fitness function is then applied to sort the solutions, and finally n of the sorted solutions, which are the sensors’ configurations, are returned. Table 1 summarizes the comparison of the reviewed studies.

This literature review reveals that several schemes have been presented to solve sensor network lifetime maximization along with the target coverage issue. However, they have shortcomings such as the lack of scheduling for an adjustable range in only the appropriate sector direction, non-uniform battery usage, the lack of balance between exploration and exploitation in the search space, and neglect of the discrete nature of the search space, which leads to sub-optimal solutions. A balance between the exploration and exploitation phases of a meta-heuristic algorithm can improve the final results because it helps escape local optima and, with high probability, traverses unexplored regions of the search space, preventing early convergence. To fill these gaps, the current paper proposes a discrete cuckoo search algorithm that takes the aforementioned points into account. Reaching an efficient scheduling solution requires permuting the search space by changing the configurations of the applied sensors, which is a discrete permutation; this is why the optimum must be sought in a discrete search space. Since the majority of meta-heuristic algorithms have evolutionary behavior, they seldom accept worse solutions when generating offspring, which increases the chance of getting stuck in local optima and leads to early convergence. The simulated annealing algorithm (SA) is a good example of a non-evolutionary algorithm: it always accepts better solutions and also accepts worse solutions with a probability that approaches zero round by round [40]. The proposed cuckoo search algorithm accepts worse solutions without diverging, thanks to its new operators, while still moving toward the global optimum. Different versions of the cuckoo search optimization algorithm have recently attracted the research community because of their effectiveness in optimization.
For instance, Asghari et al. in [41] proposed a bi-objective discrete version of the cuckoo search algorithm to solve scientific workflow scheduling problems in the cloud computing environment, optimizing total execution time and execution reliability. The results of extensive simulations support these claims.

Problem statement

This section presents the formal problem statement. First, some notations and definitions are introduced to make the paper easier to follow. Afterwards, the MNLAR issue is formulated as a discrete optimization problem.

Notations and definitions

In this subsection, the notations and terms used in the proposed model are listed in Table 2. The DSN is modeled as a network N, which is a tuple N = (T, S, D, R). T is a set of m targets T = {\({t}_{1},{t}_{2},\dots ,{t}_{m}\)} placed at predetermined locations of the observation field, which must be monitored by a set of n sensors S = {\({S}_{1},{S}_{2},\dots ,{S}_{n}\)}.

As mentioned earlier, the number of sensors is usually larger than the number of targets. Each sensor can work in only one of its angles, or directions, at a time. The possible directions are given by D = {\({d}_{1}\), \({d}_{2},\dots ,{d}_{MaxDir}\)} where \({d}_{i}\) = i. For instance, if the MaxDir parameter is 3, each sensor can observe only one 120-degree sector of the 360-degree circular region at a time. In addition, each sensor can be activated in only one of its possible ranges. All possible ranges form a set R = {\({r}_{1}\), \({r}_{2},\dots ,{r}_{MaxRng}\)} where \({r}_{i}\) = i. For instance, the MaxRng parameter of the sensors depicted in Fig. 1 is normalized to 2 (each range covers 30 m, cf. Table 6). Note that battery energy is consumed at a level corresponding to the range in use: the larger the range configured for a sensor, the farther and wider its coverage, and the more battery it consumes. The notation \({S}_{i}(j,k)\) indicates that the sensor \({S}_{i}\) is oriented in its \(j\)-th direction with its \(k\)-th adjustable range, where 1 \(\le j\le\) MaxDir and 1 \(\le k\) \(\le\) MaxRng. For the sake of simplicity, we assume that all sensors are homogeneous in architecture and have the same battery usage. In this study, the coordinates of the sensors and targets are known in advance. Moreover, the number and positions of the sensors and targets do not change during the scheduling process. The notation \(ToT[{S}_{i}\left(j,k\right)]\) denotes the set of targets that can be covered by the configured sensor \({S}_{i}\left(j,k\right)\), that is, the subset of all targets in the field that can be covered by the sensor \({S}_{i}\) when it is configured in the \(j\)-th working direction and activated with the \(k\)-th sensing range. The \(k\)-th sensing range corresponds to the \(k\)-th battery usage level, which is \({\Delta }^{k}\) per time unit.
Clearly, for each sensor \({S}_{i}\) configured in its \(j\)-th working direction, the inclusion \(ToT[{S}_{i}\left(j,{l}_{1}\right)]\) \(\subseteq\) \(ToT[{S}_{i}\left(j,{l}_{2}\right)]\) holds where 1 \(\le {l}_{1}\le {l}_{2}-1\). The configured sensor \({S}_{i}\left(j,{l}_{1}\right)\) is optimal for a target \({t}_{p}\) if \({t}_{p}\in\) \(ToT[{S}_{i}\left(j,{l}_{1}\right)]\) and either \({l}_{1}\)=1 or \({t}_{p}\notin\) \(ToT[{S}_{i}\left(j,{l}_{2}\right)]\) for all 1 \(\le {l}_{2}<{l}_{1}\). In other words, the sensor \({S}_{i}\) is optimal for a target \({t}_{p}\) if the target lies in its working direction and is sensed with the minimum possible range and hence the minimum battery usage level. The main focus of the current paper is to constitute cover sets (CSs); each cover set \({CS}_{q}\) contains tuples, each of which is a configured sensor specified by its utilized direction and its adjusted range. A cover set \({CS}_{q}\) is calculated by Eq. (1).
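The notion of an optimal range can be illustrated with a minimal Python sketch. It assumes that the coverage sets \(ToT[{S}_{i}(j,k)]\) have already been computed and stored in a lookup table; the table layout and the function name `optimal_range` are hypothetical and are not taken from the paper.

```python
from typing import Dict, Optional, Set, Tuple

# Hypothetical lookup table: (sensor, direction, range) -> set of covered target ids.
CoverageTable = Dict[Tuple[int, int, int], Set[int]]


def optimal_range(tot: CoverageTable, sensor: int, direction: int,
                  target: int, max_rng: int) -> Optional[int]:
    """Return the smallest range level at which the sensor, facing the
    given direction, covers the target, or None if no level covers it."""
    for k in range(1, max_rng + 1):
        if target in tot.get((sensor, direction, k), set()):
            return k  # coverage sets are nested, so the first hit is minimal
    return None


# Toy data (assumed): sensor 1 in direction 1 sees target 1 at range 1
# and targets 1 and 2 at range 2.
tot = {(1, 1, 1): {1}, (1, 1, 2): {1, 2}}
print(optimal_range(tot, 1, 1, 2, max_rng=2))
```

Because the coverage sets grow with the range level, scanning the levels in increasing order is sufficient to find the level with the lowest battery usage.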

As mentioned earlier, the notation \(ToT[{CS}_{q}]\) is used to indicate all of the targets which can be observed by cover set \({CS}_{q}\). The \(ToT[{CS}_{q}]\) value can be calculated by Eq. (2).

If a cover set \({CS}_{q}\) can observe and monitor all of the targets placed in the observation environment, it is called a cover. This condition can be checked with Eq. (2). Formally, if \(ToT\left[{CS}_{q}\right]=T\) (the set of all targets in the field), then the set \({CS}_{q}\) is a cover. The main objective of the current paper is to constitute different covers. Each cover is scheduled for some time to observe all targets in the field. An upper threshold limits how long a cover can be used. This threshold is bounded by the residual battery of the weakest sensor in the cover, because the depletion of that sensor leaves some targets uncovered. To utilize sensors efficiently, a regulator parameter (RP), which is less than that upper threshold, is also applied. Exploiting this parameter makes it possible to use the overall battery of the sensors uniformly. In each round, the current cover monitors all of the targets in the field for some time. After that time, the next cover must be built and its duration of use determined. This duration is named the coverage time or activation time (AT) of a cover. The network lifetime is the sum of the coverage times of all covers up to the point at which it is impossible to constitute another cover owing to the battery depletion of some or all sensors. The chief objective of the current paper is to extend the network lifetime. The decisions to be made are which sensors to utilize, in which direction, with which adjustable range, and for what activation time. This is the issue that the sleep-wakeup scheduling algorithm should solve. An inefficient choice in any of these parts takes the solution’s trajectory far from the optimal ones.
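The cover condition \(ToT\left[{CS}_{q}\right]=T\) stated above can be checked with a few lines of Python. This is only a sketch: the coverage table `tot` and the representation of a cover set as a collection of (sensor, direction, range) tuples are assumptions made for illustration.

```python
from typing import Dict, Iterable, Set, Tuple

Config = Tuple[int, int, int]  # (sensor, direction, range), assumed layout


def covered_targets(cover_set: Iterable[Config],
                    tot: Dict[Config, Set[int]]) -> Set[int]:
    """Union of the targets seen by every configured sensor in the set."""
    covered: Set[int] = set()
    for cfg in cover_set:
        covered |= tot.get(cfg, set())
    return covered


def is_cover(cover_set: Iterable[Config], tot: Dict[Config, Set[int]],
             targets: Iterable[int]) -> bool:
    """A cover set is a cover when it observes every target in the field."""
    return covered_targets(cover_set, tot) >= set(targets)


# Toy data (assumed): two configured sensors that jointly see targets 1..3.
tot = {(1, 1, 2): {1, 2}, (2, 3, 1): {3}}
print(is_cover([(1, 1, 2), (2, 3, 1)], tot, targets=[1, 2, 3]))
```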
For this reason, this paper presents an intelligent scheduling algorithm that selects the adjustable range and direction of each sensor in a cover, along with an appropriate coverage time, in every round. To normalize the power consumption of each sensor, the notation \({\Delta }^{l}\) is defined as the ratio between the power consumption when the sensor range is adjusted to level l and the power consumption at the lowest range level, which is 1. For instance, \({\Delta }^{l}\) = 3 means that the power consumption of the sensor configured in the l–th range is 3 times that of the first level (lowest possible range). When the sensor range is adjusted to the lowest possible radius (the first radius), the power consumption is \({\Delta }^{1}\)=1 for one unit of time (UT). The power consumption of sensors in the other ranges is thus normalized relative to \({\Delta }^{1}\), which is 1. For example, if \({\Delta }^{2}=3\) and a fully charged sensor \({S}_{i}\) is configured in the second range, it can observe continuously for only \(\frac{UT}{3}\), since \({\Delta }^{2}=3.\) Fig. 2 depicts an illustrative example in which five homogeneous sensors observe five different targets in the sensing field.

Each sensor has 3 possible directions \({d}_{1}\) = 1, \({d}_{2}\) = 2, and \({d}_{3}\) = 3 along with 2 possible adjustable ranges, namely,\({r}_{1}\) = 1, and \({r}_{2}\) = 2. Take \({\Delta }^{1}\) = 1 and \({\Delta }^{2}\) = 2 in this network.

In Fig. 2, the four sets \({CS}_{1}\), \({CS}_{2}\), \({CS}_{3}\), and \({CS}_{4}\) are possible covers since \(ToT\left[{CS}_{q}\right]=\left\{{t}_{1},{t}_{2},{t}_{3},{t}_{4},{t}_{5}\right\}=T\) for q = 1, 2, 3, 4. The four covers \({CS}_{1}\), \({CS}_{2}\), \({CS}_{3}\), and \({CS}_{4}\) are given by Eq. (3) through Eq. (6), respectively.

At the outset, the energy level of each sensor is normalized to 1, i.e., a full battery (\(\beta_{i}\) = 1 for all sensors, where i = 1, 2, …, n). Note that the remaining battery of a sensor \(S_{i}\) in a cover after its deployment for the time interval \(\tau_{j}\) (the activation time of the j-th round) is given by Eq. (7). The term \({\Delta }^{l}\) is the battery usage per unit of time when the sensor is adjusted to its l-th range.
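The battery bookkeeping described so far can be sketched as follows. The sketch assumes that a sensor working in its l-th range for \(\tau_{j}\) time units consumes \({\Delta }^{l}\times \tau_{j}\) of its battery, and that the longest time a cover can run is limited by its weakest sensor; the function names and data layout are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Config = Tuple[int, int, int]  # (sensor, direction, range), assumed layout


def max_activation_time(cover: Iterable[Config], battery: Dict[int, float],
                        delta: Dict[int, float]) -> float:
    """Upper threshold of the activation time: the first sensor of the
    cover to run out of battery ends the cover."""
    return min(battery[s] / delta[r] for s, _direction, r in cover)


def apply_round(cover: Iterable[Config], battery: Dict[int, float],
                delta: Dict[int, float], tau: float) -> Dict[int, float]:
    """Return the residual batteries after the cover was active for tau.
    Sensors outside the cover sleep and keep their energy."""
    updated = dict(battery)
    for s, _direction, r in cover:
        updated[s] = battery[s] - delta[r] * tau
    return updated


# Toy data (assumed): consumption rates 1 and 2 for range levels 1 and 2.
delta = {1: 1.0, 2: 2.0}
battery = {1: 1.0, 2: 1.0, 4: 1.0}
cover = [(1, 1, 2), (4, 2, 1)]
print(max_activation_time(cover, battery, delta))
print(apply_round(cover, battery, delta, tau=0.125))
```

A scheduler would typically activate the cover for a regulator value no larger than `max_activation_time`, so that no sensor is driven below zero.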

Regarding Fig. 2, if any one cover in the set {\({CS}_{1}\), \({CS}_{2}\), \({CS}_{3}\), \({CS}_{4}\)} is engaged continuously for 0.5 UT to observe all of the targets, the network lifetime is 0.5 UT, because after that time it is impossible to create another cover. For example, if the cover \({CS}_{2}\) is utilized continuously to monitor all of the targets in the field for 0.5 UT, the sensors \({S}_{1}\), \({S}_{4}\), and \({S}_{5}\) are depleted after this interval. It is then impossible to build another cover, even though the sensor \({S}_{2}\) was in sleep mode during the activation time to save energy. So, the network lifetime is 0.5 UT. The same happens for the other covers. We now show how intelligent scheduling and appropriate activation times can extend the network lifespan. Suppose the intelligent scheduler schedules five configurations \({Conf}_{1}\) through \({Conf}_{5}\) from the possible covers in {\({CS}_{1}\), \({CS}_{2}\), \({CS}_{3}\), \({CS}_{4}\)} in the sequence \({Conf}_{1}={CS}_{4}\), \({Conf}_{2}={CS}_{3}\), \({Conf}_{3}={CS}_{1}\), \({Conf}_{4}={CS}_{2}\), \({Conf}_{5}={CS}_{1}\), each of which is activated for 0.125 UT; then, the network lifetime is extended to 1.125 UT. Here, the RP parameter is 0.125 UT. After 5 switches between different covers, the three sensors \({S}_{1}\), \({S}_{4}\), and \({S}_{5}\) are depleted. In other words, the next cover cannot be constituted by the alive sensors \({S}_{2}\) and \({S}_{3}\) in the sixth round. In this case study, the intelligent scheduler thus reaches a network lifetime that is 225% of the one obtained by the naïve procedure (1.125 UT versus 0.5 UT).

Problem formulation

This section formulates network lifespan maximization with full target coverage as a discrete optimization problem subject to some constraints. Each sensor in a cover is configured in one of its possible directions (from [1..\(\mathrm{MaxDir}\)]) and adjusted to one of its possible covering ranges (each labeled with an integer from [1..\(\mathrm{MaxRng}\)]). Therefore, there are \(d\times g\) configuration possibilities for each directional sensor, where \(d\) denotes \(\mathrm{MaxDir}\) and \(g\) denotes \(\mathrm{MaxRng}\). As there are n sensors in the field for target surveillance and each of them can take any of its configurations independently, the number of joint configurations grows as \({(d\times g)}^{n}\). The problem is NP-Hard, and for large values of n, \(d\), and \(g\) an efficient algorithm is needed to solve the stated combinatorial problem. In this paper, the main objective function is modeled in Eq. (8), and the constraints are formulated in Eqs. (9–12).

In this optimization model, the term \(\tau_{j}\) is the activation time of the cover used in the j-th round to monitor all targets placed in the observing field. The main objective function, given in Eq. (8), maximizes the sum of all activation times. The model is subject to several constraints. The nested summation in Eq. (9) states that each sensor can observe the environment only until its battery is depleted; in effect, the energy of a sensor is shared among the different rounds. If the sensor \({\text{S}}_{\text{i}}\) is not utilized in the j-th round, as specified by the binary decision variable \({\text{X}}_{\text{ijkl}}\), it is set to sleep mode to save energy. The parameter \(\beta\) is the maximum amount of battery that a sensor can use up; in this normalized form, \(\beta\) is 1. The binary decision variable \({\text{X}}_{\text{ijkl}}\) is set to 1 if sensor \({S}_{i}\) is activated in its k-th direction with its l-th adjusting range in the j-th round (that is, sensor \({S}_{i}\) belongs to the j-th cover, which is utilized in the j-th round); otherwise it is 0. When the sensor’s range is adjusted to its l-th possible range, the battery consumption rate is \({\Delta }^{l}\) during its activation time. The term \(\Delta^{l} \times \tau_{j}\) is the amount of sensor battery consumed in the interval \(\tau_{j}\). Eq. (10) states that if the sensor \({S}_{i}\) is utilized in the j-th round, it is configured in only one direction with one predefined range. Eq. (11) states that in each round all of the targets must be covered by the engaged cover. To this end, the binary variable \({\text{X}}_{\text{ijkl}}\) is also used in Eq. (11) to determine which sensors take part in the observation. The variable \({\text{NCovers}}\) is the number of covers that can be built over the whole life cycle.
It is clearly a combinatorial NP-Hard problem which needs an efficient solution.

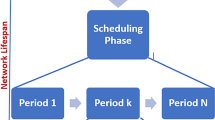

Proposed discrete cuckoo search algorithm for lifetime maximization in DSN

This section presents the proposed meta-heuristic algorithm, which solves the lifetime maximization problem in DSNs. Before going into detail, Fig. 3 schematically presents how the main algorithm works. In the block diagram of Fig. 3, the algorithm first receives the problem specifications along with the related parameters and finally returns an efficient scheduling solution as output. The scheduling solution contains different covers, each of which is activated for a specific time interval before the next cover is built. At the outset, as depicted in Fig. 3, the algorithm initializes the final solution, which must determine which cover (a set of configured sensors) is activated and for how long. The final solution is empty at the start and is updated while the algorithm runs. The algorithm iterates until the remaining battery of the sensors no longer permits building another cover. As long as a cover can still be built, the proposed discrete cuckoo search algorithm (D-CSA) is run to find the most efficient cover together with an efficient activation time; this solution is then added to the final solution. To this end, the proposed D-CSA repeats MaxGen times. At the inception, it initializes a population of eggs and calculates their fitness; it then assigns a rank to each egg, which is a candidate solution in the population. In each repetition, based on the ranking, it partitions the solutions into an inferior and a superior set containing fractions \(P_{\alpha }\) and (1 − \(P_{\alpha }\)) of the population, respectively. The inferior solutions are updated by calling one of the lévy flight procedures. The updated solutions are always accepted, whether they are better or not. The population is then split again into inferior and superior solutions with the same predefined fraction. This time, the superior solutions are updated by calling one of the lévy flight procedures. Then, a pair of random solutions from the whole population is selected.
The updated solutions in the superior set are accepted only if they are better than the pair of randomly selected solutions; this is elaborated in lines 21 through 32 of Algorithm 2. In other words, an update is made only when an improvement is observed. This approach is iterated MaxGen times; then, the efficient solution, which consists of the cover (configured sensors) along with its activation time, is added to the final solution. Control then returns to the outer while-loop to check whether the sensors that are still alive can build another cover. If they cannot, the final solution is returned.

Now that the main procedure has been outlined schematically, the main algorithm is elaborated in the form of pseudo code. Since the search space of the stated problem is intrinsically discrete, experiments with well-reputed continuous optimization algorithms such as PSO, GWO, and WOA do not yield efficient solutions in some cases, even with their discrete versions, because their discretized solutions are either inefficient or meaningless for the stated problem of this paper. On the other hand, the majority of meta-heuristic algorithms tend to get stuck in local optima. For these reasons, the cuckoo search algorithm is selected: it escapes the local optimum trap similarly to simulated annealing (SA), but, in contrast to SA, it is inclined toward global optimization. In other words, it examines even bad solutions to open up new regions, both to escape local optima and to find possible global optima. In addition, the CSA improves the solutions in its superior part, which provides the evolutionary behavior. The canonical Cuckoo Search Algorithm (CSA) is given in Algorithm 1 [13].

One of the most important features of the cuckoo search algorithm is the lévy flight concept, which helps steer the search trajectory toward a uniform exploration of the search space. Applying the continuous lévy flight and discretizing the obtained solution leads to solutions that are inefficient or meaningless for our stated problem. To carry this concept over to the discrete search space, several exploring procedures are defined, one of which is called at random each time, to reproduce the randomness of the lévy flight concept in a discrete version [31]. The next two subsections explain the proposed algorithm. The first subsection defines the problem encoding, fitness function, and termination criteria. The second subsection describes the proposed algorithm in detail.

Preliminaries: encoding, fitness, termination criteria

One of the most important parts of each meta-heuristic algorithm is how to encode the stated problem (phenotype) in the artificial intelligence domain (genotype). The encoding phase, and especially the length of the encoded problem, has a significant impact on the overall cost. For this reason, the problem is encoded as in Refs. [4, 27] to be as short as possible. In the cuckoo search context, each candidate solution is modeled as an egg, and each egg is encoded like a chromosome in GA. With regard to Fig. 2, the question is how to construct a candidate solution (egg). Here, each candidate solution is a candidate cover. To obtain an efficient encoding, the solution is indexed by targets rather than by the utilized sensors: the number of utilized sensors depends on their adjusted ranges and residual battery energy, so it is variable and may differ from cover to cover, whereas the number of targets is constant in every cover. To construct an egg, each target must be monitored by an adjusted sensor. A sensor that is already engaged may also monitor other targets, which is why the number of utilized sensors differs from one cover to another. For instance, the encoded solution of cover \({CS}_{3}\) in the DSN of Fig. 2 is depicted in Table 3.

The notation [1, 2, 4] in \({t}_{4}\)’s column means that the sensor \({S}_{4}\) (the third component of the tuple [1, 2, 4]) is activated in its first direction (the first component) with its second adjusted range (the second component) to monitor the target \({t}_{4}\). During the algorithm process, newly generated solutions may have an impaired encoding. For instance, the encoded solution may configure one sensor in more than one direction, or use a sensor in more than one range; both circumstances are impossible. Such an encoded solution is considered impaired and must be repaired. To this end, the heuristic Check&Correct(.) function (cf. Algorithm 5) is incorporated. The fitness function is a very important part of each meta-heuristic algorithm because it directly steers solutions toward the desired objectives, so a carefully designed fitness function is needed to obtain promising and efficient solutions. In the stated problem of this paper, the most effective cover must have the lowest sum of battery consumption. On the other hand, the sensors' batteries must be used uniformly, because unbalanced battery usage may soon lead to a situation in which the alive sensors, even with a high amount of remaining battery, cannot achieve full target coverage owing to the battery depletion of some other sensors. As mentioned earlier, the objective is to extend the network lifetime. This is done by favoring lower battery consumption in a cover. Equation (13) calculates the energy usage of each \({\text{Cover}}_{\text{q}}\).
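Returning to the encoding and the impairment cases described above, the following Python sketch decodes an egg into a set of configured sensors and detects sensors that appear in conflicting configurations. It assumes the gene order [direction, range, sensor], as read from the example in which [2, 2, 3] stands for sensor \({S}_{3}\) in its second direction and second range; the egg values other than the genes of \({t}_{4}\) and \({t}_{5}\) are invented for illustration, and the function names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Gene = Tuple[int, int, int]  # (direction, range, sensor), assumed order


def find_conflicts(egg: List[Gene]) -> Set[int]:
    """Sensors that occur in the egg with more than one (direction, range)."""
    first_seen: Dict[int, Tuple[int, int]] = {}
    conflicts: Set[int] = set()
    for direction, rng_level, sensor in egg:
        cfg = (direction, rng_level)
        if first_seen.setdefault(sensor, cfg) != cfg:
            conflicts.add(sensor)
    return conflicts


def decode(egg: List[Gene]) -> Set[Tuple[int, int, int]]:
    """Turn a feasible egg into configured sensors (sensor, direction, range).
    A sensor reused for several targets appears only once."""
    return {(sensor, direction, rng_level)
            for direction, rng_level, sensor in egg}


# One gene per target t1..t5; only the last two genes follow the text.
egg = [(1, 1, 1), (1, 1, 1), (2, 2, 3), (1, 2, 4), (2, 2, 3)]
print(find_conflicts(egg), decode(egg))
```

Because the egg has one gene per target, its length stays fixed at m while the decoded cover may contain fewer than m sensors.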

Eq. (13) indicates that, among several covers, the one with the lowest sum of battery usage is the most favorable. To distinguish covers in the egg population that have the same value of Eq. (13), a distribution factor (DF) is defined and applied in the fitness function. This parameter shows to what extent a cover uses up battery uniformly. The DF value of a cover \({\text{Cover}}_{\text{q}}\) is calculated by Eq. (14).

Minimizing Eq. (14) is favorable because it minimizes the overlap among sensors that observe the same target and consequently leads, with high probability, to uniform battery usage. In other words, the set of sensors with the minimum amortized energy, as indicated by Eq. (14), uses the available energy evenly. In Eq. (14), the term \({\text{LS}}_{\text{q}}({\text{t}}_{\text{p}})\) is the list of sensors of a candidate cover \({\text{Cover}}_{\text{q}}\) that can observe the target \({\text{t}}_{\text{p}}\); it is calculated by Eq. (15).

To use the lowest energy and, at the same time, use it uniformly, the final fitness function of each cover is calculated via Eq. (16), which incorporates both Eq. (13) and Eq. (14); the coefficients \(\omega_{1}\) and \(\omega_{2}\) indicate the importance of each determinant.

In this regard, both determinants are considered equally important, namely, \(\omega_{1}\) = \(\omega_{2}\) = 0.5. In addition, since the ranges and units of the DF(.) and E(.) functions differ, their values are normalized to the same range. Therefore, the final fitness function favors solutions with the lowest battery usage and, at the same time, uniform battery consumption, which in turn prolongs the network lifecycle.
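The weighted combination described above can be sketched as follows. Several points are assumptions rather than the paper's definitions: the energy of a cover is taken to be the sum of the \({\Delta }^{l}\) rates of its configured sensors, the DF values are supplied as precomputed numbers, and min-max scaling over the population is used as the normalization. Lower scores are better.

```python
from typing import Dict, Iterable, List, Tuple

Config = Tuple[int, int, int]  # (sensor, direction, range), assumed layout


def energy(cover: Iterable[Config], delta: Dict[int, float]) -> float:
    """Assumed energy usage: sum of consumption rates of the cover's sensors."""
    return sum(delta[r] for _sensor, _direction, r in cover)


def min_max(values: List[float]) -> List[float]:
    """Scale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def fitness_scores(energies: List[float], dfs: List[float],
                   w1: float = 0.5, w2: float = 0.5) -> List[float]:
    """Weighted sum of normalized energy and normalized distribution factor."""
    return [w1 * e + w2 * d
            for e, d in zip(min_max(energies), min_max(dfs))]


# Toy population of three covers (assumed values).
delta = {1: 1.0, 2: 2.0}
covers = [[(1, 1, 1), (2, 1, 1)], [(1, 1, 2), (3, 2, 2)], [(1, 1, 1), (3, 2, 2)]]
energies = [energy(c, delta) for c in covers]
print(fitness_scores(energies, dfs=[1.0, 1.0, 3.0]))
```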

The main algorithm terminates when it is no longer possible to construct a new cover because the remaining battery power of the sensors is insufficient. So, it iterates until it cannot make another cover. In each iteration, the meta-heuristic algorithm runs for MaxGen generations; then a cover along with its activation time is returned.

The main algorithm presentation and description

The main algorithm, Algorithm 2, is a discrete cuckoo search algorithm (D-CSA) for solving the MNLAR problem. Its inputs are the DSN information, in terms of target and sensor specifications, and the algorithm settings. It returns the efficient scheduling solution, which is a set of tuples (cover no., cover, activation time), one for each cover.

Algorithm 2 iterates until the remaining battery of the available sensors does not suffice to make another cover. This iteration spans lines 7 through 48. The number of rounds in which the algorithm can make a new cover is therefore not known in advance; it is recorded in the NCover variable. In the main loop, an efficient cover is found and added to the partial schedule held in the EfficientSchedulingSolution variable. This cover is produced by running lines 11 through 33, which form the main block of D-CSA. Before doing so, the semi-random initial population is created and the fitness value of each solution (each egg) is calculated. Algorithm 3 generates the initial population of size PopSize. In Algorithm 3, each solution \({\text{Sol}}_{i}\), stored in Population [i], has m fields, each of which holds a triple. For instance, if Population [i] is identical to the encoded solution in Table 3, then Population [i].\({t}_{5}\) = [2, 2, 3]. Algorithm 3 creates a list List[1..m] with one entry per target. For each target, it determines the configurations of all available sensors that enable them to observe that target. For instance, for the i-th solution of the population, the fifth gene, which represents the fifth target, is set to [2, 2, 3], one of the tuples in List [5]; this means that, to observe the target \({t}_{5}\), the sensor \({S}_{3}\) is configured in its second direction with its second observing range.

To generate the semi-random initial population, half of the solutions are created in such a way that the target in the critical situation is given high priority when a random solution is constructed. The critical target is the target for which the sum of the remaining battery of the sensors able to monitor it is the smallest. Therefore, the sensor that monitors the critical target must be chosen optimally to save more energy. This point is applied in the generation of the semi-random population. The remaining solutions are generated randomly: for each target \({t}_{j}\), one of the tuples in List[j] is randomly selected to construct an encoded solution. In this way, a set of random solutions is generated. The time complexity of Algorithm 3 is O(m \(\times PopSize\)).
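The population generation described above can be sketched in Python. This is one possible reading of the description, not the authors' Algorithm 3: the per-target candidate lists play the role of List[j], genes follow the assumed order (direction, range, sensor), and for the semi-random half the critical target is simply given its lowest-range candidate. All function names are hypothetical.

```python
import random
from typing import Dict, List, Set, Tuple

Gene = Tuple[int, int, int]    # (direction, range, sensor), assumed order
Config = Tuple[int, int, int]  # (sensor, direction, range)


def candidate_list(tot: Dict[Config, Set[int]],
                   targets: List[int]) -> Dict[int, List[Gene]]:
    """For each target, list every configured sensor able to observe it."""
    cands: Dict[int, List[Gene]] = {t: [] for t in targets}
    for (sensor, direction, rng_level), covered in tot.items():
        for t in covered:
            if t in cands:
                cands[t].append((direction, rng_level, sensor))
    return cands


def critical_target(cands: Dict[int, List[Gene]],
                    battery: Dict[int, float]) -> int:
    """Target whose observing sensors hold the least total residual battery."""
    def reachable_energy(t: int) -> float:
        return sum(battery[s] for s in {g[2] for g in cands[t]})
    return min(cands, key=reachable_energy)


def initial_population(cands: Dict[int, List[Gene]], battery: Dict[int, float],
                       pop_size: int, rng: random.Random) -> List[List[Gene]]:
    """Half semi-random eggs (critical target served at its lowest range),
    half purely random eggs. Every target needs at least one candidate."""
    targets = sorted(cands)
    crit_pos = targets.index(critical_target(cands, battery))
    population = []
    for i in range(pop_size):
        egg = [rng.choice(cands[t]) for t in targets]
        if i < pop_size // 2:
            egg[crit_pos] = min(cands[targets[crit_pos]], key=lambda g: g[1])
        population.append(egg)
    return population


# Toy data (assumed): two sensors, two targets.
tot = {(1, 1, 1): {1}, (1, 1, 2): {1, 2}, (2, 1, 1): {2}}
cands = candidate_list(tot, [1, 2])
print(initial_population(cands, {1: 0.2, 2: 1.0}, 4, random.Random(0)))
```

Each egg is built with one random draw per target, which matches the stated O(m × PopSize) cost once the candidate lists are available.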

After Algorithm 3 is called in line 8 of Algorithm 2, the fitness values of all eggs are calculated in line 9. Then, the eggs, which represent candidate solutions, are ranked based on these fitness values. At the outset of the For-loop (G = 1 To MaxGen), which creates an efficient cover, the solutions are partitioned into two nest categories, superior and inferior, in line 12. The term \(P_{\alpha }\) determines the fraction of the population belonging to the inferior category. The rest, a fraction of (1-\(P_{\alpha }\)), belongs to the superior category. The proposed algorithm revolves around two parts. In the first part, for each solution in the inferior nests, the DiscreteLévyFlight algorithm is called to explore the discrete search space uniformly. This procedure is elaborated in Algorithm 4. To do so, a handful of discrete walking around procedures are defined, one of which is called at random. Applying one of these procedures creates a new solution, which replaces the old one; this is done in lines 13 through 19 of Algorithm 2. This part works as the SA algorithm does. In fact, it even accepts bad solutions to avoid getting stuck in a local optimum, but there may be a concern that the newly generated solutions keep worsening the objective function. This concern is addressed in the second part, in which, for each solution in the superior nests, the DiscreteLévyFlight algorithm is called; then, the newly generated solution is compared with a random solution selected from the entire population. If the newly generated solution is better than the selected random solution, that random solution is removed from the population and the new solution takes its place. The second part is evolution-based and guides the solutions toward the global optimum; this is done in lines 21 through 32 of Algorithm 2.
In this way, a good balance is struck between exploration and exploitation in the search space. After the execution of all generations, the best-so-far solution, \({\text{Best}}_{\text{Cover}}\), is selected from the ranking of solutions based on their fitness values in the current population, as line 34 of Algorithm 2 shows. In line 35, the upper threshold for the activation time (AT) of the best-so-far cover, \({\text{Best}}_{\text{Cover}}\), is determined. This value depends on the weakest sensor, in terms of its remaining battery, in the obtained cover. This activation time can be obtained via Eq. (17).

In Eq. (17), the term \(\beta_{i}\) is the remaining battery of the sensor \({\text{S}}_{\text{i}}\) being used. The calculated activation time is added in line 36 to the lifespan variable, which holds the lifetime of the network. The variable NCover, which indicates the number of covers made so far, is incremented by one in line 37. Then, the tuple (NCover, \({\text{Best}}_{\text{Cover}}\), AT) is added to EfficientSchedulingSolution in line 38. One of the most prominent parts of CSA is how the lévy flight procedure is applied. To do so, the novel DiscreteLévyFlight algorithm is called for both inferior and superior nests. It contains a handful of walking around procedures to explore the discrete search space uniformly and efficiently. These procedures are named Crossover-one-point, Crossover-two-point, Shuffle-Odd–Even, and Mutation-two; one of them is called at random each time, because an unbiased selection yields more efficient results. The lévy flight procedure takes a pair of solutions as input and returns a new pair of solutions. All of the walking around procedures act by changing the input solutions. If the new solutions are infeasible after the changes, a minor modification converts them into feasible solutions. Infeasibility mainly arises when a sensor is utilized in more than one direction or with more than one adjusted radius. To this end, the Check&Correct(.) procedure, elaborated in Algorithm 5, is called after any change. The novel DiscreteLévyFlight procedure is elaborated in Algorithm 4. The time complexity of Algorithm 4 is dominated by that of Algorithm 5, which is O(\({m}^{2}\)): the linear traversal in each of the four parts of Algorithm 4 costs O(\(m\)), which is negligible compared with the cost of Check&Correct(.). Therefore, the time complexity of Algorithm 4 is O(\({m}^{2}\)).

The DiscreteLévyFlight procedure is called twice in Algorithm 2, in lines 14 and 22. When it is called, its first instruction draws a random integer from the interval [1..4], and the values 1 to 4 select the Crossover-one-point, Crossover-two-point, Shuffle-Odd–Even, and Mutation-two procedures, respectively. If the drawn integer in Algorithm 4 is one, the Crossover-one-point procedure is called, which is similar to the crossover of the genetic algorithm [32]. For the problem illustrated in Fig. 2, Fig. 4 depicts two input solutions \({\text{Sol}}_{i}\)[1..5] and \({\text{Sol}}_{j}\)[1..5] for the Crossover-one-point procedure, with the single crossover point at 3 (the third gene).

After the one-point crossover is performed, two solutions Temp1 and Temp2 are created, of which the first is invalid. Fig. 5 illustrates the two newly generated solutions. The solution Temp1 is infeasible because the sensor \({\text{S}}_{3}\) cannot be activated in two directions at the same time. Afterward, the Check&Correct(.) procedure (Algorithm 5) is called in lines 7 and 8 of Algorithm 4 to repair any impaired solutions. In the call in line 7 of Algorithm 4, a candidate sensor such as \({\text{S}}_{2}\) is randomly selected to monitor target \({\text{t}}_{5}\), which makes the solution feasible and valid. In the call in line 8 of Algorithm 4, no changes happen. The valid pair of solutions is shown in Fig. 6.

The Crossover-two-point procedure behaves in the same way. Figure 7 gives an illustrative example of this procedure, with the random numbers 1 and 3 as cross points.

Figure 8 shows the intermediate solutions, one of which is imperfect. The solution Temp2 is infeasible because the sensor \({\text{S}}_{3}\) cannot be activated in two directions at the same time (see targets \({\text{t}}_{3}\) and \({\text{t}}_{5}\)). Afterward, the Check&Correct(.) procedure repairs it by engaging the randomly chosen sensor \({\text{S}}_{2}\)(1, 2) instead of \({\text{S}}_{3}\)(2,1) to observe the target \({\text{t}}_{3}\). Fig. 9 shows the corrected pair of solutions.
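Both crossover procedures can be sketched in a few lines of Python. The sketch treats a solution as a list of genes and the cut points as Python slice indices, which is an assumption about how the cross points in the figures are counted; the repair step performed by Check&Correct(.) is not included, and the function names are hypothetical.

```python
from typing import List, Tuple, TypeVar

G = TypeVar("G")


def crossover_one_point(sol_i: List[G], sol_j: List[G],
                        point: int) -> Tuple[List[G], List[G]]:
    """Swap the tails of two solutions after the first `point` genes."""
    temp1 = sol_i[:point] + sol_j[point:]
    temp2 = sol_j[:point] + sol_i[point:]
    return temp1, temp2


def crossover_two_point(sol_i: List[G], sol_j: List[G],
                        a: int, b: int) -> Tuple[List[G], List[G]]:
    """Swap the middle segment [a, b) between two solutions."""
    temp1 = sol_i[:a] + sol_j[a:b] + sol_i[b:]
    temp2 = sol_j[:a] + sol_i[a:b] + sol_j[b:]
    return temp1, temp2


# Letters stand in for genes so that the origin of each gene is visible.
print(crossover_one_point(list("abcde"), list("vwxyz"), 3))
print(crossover_two_point(list("abcde"), list("vwxyz"), 1, 3))
```

The offspring keep one gene per target, so every target is still assigned a sensor; only the one-configuration-per-sensor rule may be violated and need repair.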

Similarly, Fig. 10 depicts two solutions \({\text{Sol}}_{i}\) and \({\text{Sol}}_{j}\) as inputs for the Shuffle-odd–even procedure, which is called when Algorithm 4 draws the integer 3 in its first line.

The Shuffle-odd–even procedure shuffles two solutions: each new solution takes the odd genes from one solution and the corresponding even genes from the other. Applying it to the two solutions of Fig. 10 creates two new solutions, both of which are invalid, as Fig. 11 illustrates.

However, minor changes produce new valid solutions. For instance, Temp1 is invalid because both sensor \({\text{S}}_{1}\) (for targets \({\text{t}}_{1}\) and \({\text{t}}_{2}\)) and sensor \({\text{S}}_{5}\) (for targets \({\text{t}}_{4}\) and \({\text{t}}_{5}\)) are utilized in two different configurations. On closer inspection, since \({\text{S}}_{1}\)(1, 2) is utilized for target \({\text{t}}_{2}\), simply changing \({\text{S}}_{1}\)(1,1) for target \({\text{t}}_{1}\) to \({\text{S}}_{1}\)(1, 2) covers both targets \({\text{t}}_{1}\) and \({\text{t}}_{2}\) at the same time, so no additional sensor is needed. In other words, when one sensor can observe multiple targets in the same direction, it is configured to the larger radius, which is the smallest one that covers all of them. For target \({\text{t}}_{5}\), the impossible \({\text{S}}_{5}\)(1, 2) is replaced by the adjusted \({\text{S}}_{2}\)(3, 2) from List[5]. The valid solutions \({\text{Sol}}_{p}\) and \({\text{Sol}}_{q}\), both obtained in this way, are depicted in Fig. 12.
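The gene exchange performed by the Shuffle-odd–even procedure, before any repair, can be sketched as follows. Gene positions are counted from 1, as in the text, and the function name is hypothetical.

```python
from typing import List, Tuple, TypeVar

G = TypeVar("G")


def shuffle_odd_even(sol_i: List[G], sol_j: List[G]) -> Tuple[List[G], List[G]]:
    """Each offspring keeps the odd-position genes of one parent and takes
    the even-position genes of the other (positions counted from 1)."""
    temp1, temp2 = [], []
    for pos, (gene_i, gene_j) in enumerate(zip(sol_i, sol_j), start=1):
        if pos % 2 == 1:       # odd position: keep own gene
            temp1.append(gene_i)
            temp2.append(gene_j)
        else:                  # even position: take the other parent's gene
            temp1.append(gene_j)
            temp2.append(gene_i)
    return temp1, temp2


# Letters stand in for genes so that the origin of each gene is visible.
print(shuffle_odd_even(list("abcde"), list("vwxyz")))
```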

The last walking around procedure of the lévy flight concept is Mutation-two; it is called when Algorithm 4 draws 4 as the random integer. Figure 13 depicts the input of the Mutation-two procedure.

In Fig. 13, a pair of solutions taken from either the inferior or the superior nests is given. Then, a random gene (a sensor that covers the target of that gene) is selected in each solution and is replaced by another possible gene (another adjusted sensor that can cover the same target). For instance, in solution \({\text{Sol}}_{i}\), the third gene, which monitors target \({\text{t}}_{3}\), is selected. This gene, \({\text{S}}_{3}\)(2, 1), is replaced by another possible adjusted sensor, \({\text{S}}_{2}\)(1, 2), which is randomly selected from List [3]. The same is done for \({\text{Sol}}_{j}\). If a change yields an invalid solution, the Check&Correct(.) procedure repairs it as in the previous examples. In this example, both newly generated solutions are valid; they are depicted in Fig. 14.
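A minimal Python sketch of the mutation step is given below. It assumes that the candidate genes of each target are available as a list indexed by gene position (the role played by List[p] in the text) and uses the assumed gene order (direction, range, sensor); the repair step is left out and the function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Gene = Tuple[int, int, int]  # (direction, range, sensor), assumed order


def mutate_gene(sol: Sequence[Gene], cands: Sequence[Sequence[Gene]],
                rng: random.Random) -> List[Gene]:
    """Replace one randomly chosen gene by a different candidate that can
    cover the same target; leave the solution as is if none exists."""
    new_sol = list(sol)
    pos = rng.randrange(len(sol))
    alternatives = [g for g in cands[pos] if g != sol[pos]]
    if alternatives:
        new_sol[pos] = rng.choice(alternatives)
    return new_sol


def mutation_two(sol_i: Sequence[Gene], sol_j: Sequence[Gene],
                 cands: Sequence[Sequence[Gene]],
                 rng: random.Random) -> Tuple[List[Gene], List[Gene]]:
    """Mutate one gene in each solution of the input pair."""
    return mutate_gene(sol_i, cands, rng), mutate_gene(sol_j, cands, rng)


# Single-target toy case echoing the text: S3(2, 1) is swapped for S2(1, 2).
cands = [[(2, 1, 3), (1, 2, 2)]]
print(mutation_two([(2, 1, 3)], [(1, 2, 2)], cands, random.Random(0)))
```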

One important point is that all of the walking around procedures in lévy flight are designed to decrease the exploration cost. To this end, they exploit the problem encoding, in which List[p] holds the set of sensors that can cover the corresponding target \({\text{t}}_{p}\); this is why the operators of the proposed walking around algorithms take the corresponding gene either from the other solution of the pair or from List[p]. The procedures work pairwise: a pair of solutions creates a new pair of solutions. After any of the walking around algorithms is applied, the newborn solutions may violate the rules; for this reason, the Check&Correct(.) procedure is called after each production to repair impaired solutions. A faulty gene in a solution can simply be replaced with a valid gene, as elaborated in Algorithm 5. Algorithm 5 has a time complexity of O(\({m}^{2}\)).
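A repair step of this kind could look like the following Python sketch. It is only an illustration of the two repair rules described in the text (raise the range when a sensor is reused in the same direction, substitute from List[p] when a sensor is reused in a different direction) and does not reproduce Algorithm 5. The name `check_and_correct`, the gene encoding `(sensor, direction, range)`, and the assumption that a larger range in the same direction still covers every target reached by a smaller one are all hypothetical.

```python
def check_and_correct(solution, candidates):
    """Repair a solution so that every sensor has one direction and one range.

    solution[p] is a hypothetical gene (sensor, direction, range) covering
    target p; candidates[p] plays the role of List[p].
    """
    chosen = {}    # sensor id -> [direction, largest range requested so far]
    repaired = []  # (sensor, direction) finally assigned to each target
    for p, (s, d, r) in enumerate(solution):
        if s in chosen and chosen[s][0] != d:
            # Sensor already points elsewhere: take a compatible gene from List[p].
            for s2, d2, r2 in candidates[p]:
                if s2 not in chosen or chosen[s2][0] == d2:
                    s, d, r = s2, d2, r2
                    break
            else:
                raise ValueError(f"no compatible sensor for target index {p}")
        if s in chosen:
            # Same sensor, same direction: keep the greater range.
            chosen[s][1] = max(chosen[s][1], r)
        else:
            chosen[s] = [d, r]
        repaired.append((s, d))
    # Every gene of a sensor reports the single range finally chosen for it.
    return [(s, d, chosen[s][1]) for s, d in repaired]
```

With illustrative data loosely modeled on the Temp1 example (0-based target indices), `check_and_correct([(1, 1, 1), (1, 1, 2), (3, 2, 1), (5, 2, 1), (5, 1, 2)], [[], [], [], [], [(5, 1, 2), (2, 3, 2)]])` raises the first gene to range 2 and replaces the last gene with `(2, 3, 2)`.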

Time complexity of the proposed D-CSA algorithm

To measure the time complexity of the main proposed algorithm, Algorithm 2, the effective statements must be taken into consideration. All of the walking around procedures of lévy flight in Algorithm 4 (Crossover-one-point, Crossover-two-point, Shuffle-Odd–Even, and Mutation-two) take at most O(m), where the parameter m is the length of the encoded solutions, that is, the number of targets in the observing field; the Check&Correct(.) procedure, however, consumes O(\({m}^{2}\)). Therefore, Algorithm 4 takes O(\({m}^{2}\)) time. Algorithm 2 iterates NCover times through its while-loop. In each iteration, the main cuckoo search is executed to generate an efficient cover, which is then added to the rudimentary scheduling. The cuckoo search itself is iterated MaxGen times, and the lévy flight walking around procedures are called in this loop; thus, MaxGen must be multiplied by O(\({m}^{2}\)). In addition, after each cover is constituted, the remaining battery of all sensors must be checked; therefore, O(n) is added to the complexity of each while-loop iteration, where the parameter n is the number of available sensors. Overall, the time complexity of the proposed Algorithm 2 is O(NCover \(\times\)(MaxGen \(\times {m}^{2}+n\))), which is a reasonable cost.
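The count above can be written out step by step. The subscripted names \(T_{\text{walk}}\), \(T_{\text{C\&C}}\), \(T_{\text{Alg.4}}\), and \(T_{\text{Alg.2}}\) are shorthand introduced here for illustration; the quantities themselves are those stated in the text.

```latex
\begin{align*}
T_{\text{walk}} &= O(m), \qquad T_{\text{C\&C}} = O(m^{2}) \\
T_{\text{Alg.4}} &= T_{\text{walk}} + T_{\text{C\&C}} = O(m) + O(m^{2}) = O(m^{2}) \\
T_{\text{Alg.2}} &= \text{NCover} \times \bigl(\text{MaxGen} \times T_{\text{Alg.4}} + O(n)\bigr) \\
                 &= O\bigl(\text{NCover} \times (\text{MaxGen} \times m^{2} + n)\bigr)
\end{align*}
```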

Performance evaluation

This section presents the performance assessment of the proposed discrete cuckoo search algorithm (D-CSA), which solves the DSN lifespan maximization problem while considering the battery limitation of the utilized homogeneous sensors. It is compared against other state-of-the-art algorithms in terms of network lifetime, which is the most prominent evaluation metric in this field.

Experimental settings

To obtain a better performance assessment, a number of scenarios are conducted along three directions. First, the number of targets is fixed and the number of sensors in the observing field is gradually increased; these are scenarios 1 through 3 in Table 4. Second, the number of sensors is fixed and the number of targets is gradually increased; these are scenarios 3 through 5 in Table 4. The third direction is scalability testing. To this end, scenarios 6 through 9 are conducted, which increase both the number of targets and the number of sensors significantly. Table 4 lists the considered scenarios.

As discussed in the related work section, leading papers in this domain were selected for comparison with the current proposal. The selected papers are based on two successful heuristics, two meta-heuristics, and two hybrid meta-heuristic approaches. After miscellaneous algorithms were examined in some scenarios, the most efficient approaches were selected for each category. In this regard, the first heuristic, which integrates some greedy algorithms into a weighted objective function, is heuristic MNLAR (H-MNLAR) [8]. The second successful heuristic, which works efficiently in the WSN domain, is the Homo-LifMax-BC (Hm-LifMax-BC) algorithm proposed by Hong et al. in 2022 [35]. It was originally developed to maximize network lifetime in 3D camera sensor networks (CSNs) that monitor intruders in the observing field. Since our stated problem concerns a two-dimensional homogeneous DSN (2D-DSN), their graph-theory-based heuristic was customized for the homogeneous 2D-DSN by treating intruders as targets in the field. The meta-heuristic-based comparative algorithms are the genetic algorithm (GA) and the ant colony optimization (ACO) algorithm. The efficient GA for the stated problem domain was selected from [4]. Since coverage is one of the most critical issues in the wireless sensor network domain, it has been formulated as an optimization problem and solved by an ant colony optimization for sweep coverage (ACOSC) [28]; this algorithm is customized to the circumstances of the current paper. Lastly, two hybrid algorithms were selected. The first is the hybrid discrete PSO (HDPSO) algorithm, which has been customized for the stated problem [29]. The reason is that the stated problem is discrete in nature, so a fast, discrete, and hybrid algorithm that balances exploration and exploitation in the search space is favorable. The second hybrid meta-heuristic-based algorithm is the hybrid of GA and Tabu search (H-GATS), which was utilized in 2023 for WSNs with the aim of target coverage and prolonging the network lifetime [34]. In this paper, H-GATS is also tailored to the conditions of the stated problem. Note that, to improve the quality of the traditional GA, H-GATS utilizes cyclic crossover instead of the canonical one-point or two-point crossover. Accordingly, our customized H-GATS incorporates cyclic crossover in the exploration phase of its GA, similar to the approach used in [42]. Table 5 shows the parameter settings of the comparative algorithms.

All comparative algorithms were executed in the different scenarios under the same conditions to ensure fair and efficient comparisons. In this regard, every comparative algorithm was independently run 20 times in each scenario. Except for the heuristic algorithms, which behave deterministically, statistical analysis is presented to observe the behavior of the stochastic comparative algorithms (meta-heuristics and hybrid meta-heuristics) in several circumstances. Therefore, the best, worst, average, and standard deviation (STD) values of each algorithm are reported for better assessment. To have fair comparisons, all of the simulations were performed on the same platform. The simulation programs were written in MATLAB 2018 and run on a laptop with an Intel® Core i5-3230M CPU @ 2.60 GHz, 8 GB of RAM, and 64-bit Windows 7. Table 6 shows the simulation parameters. The parameters are the monitoring district in square meters (\({m}^{2}\)), the number of targets in the observing field, the number of directional sensors deployed in the field, the maximum number of directions, the maximum adjustable ranges, and the battery usage level for each adjusted range.
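The per-algorithm summary over repeated runs can be sketched in Python as follows. This is an illustration only (the paper's simulations were written in MATLAB). The function name `summarize_runs` and the sample lifetimes in the usage line are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
import statistics

def summarize_runs(lifetimes):
    """Summarize the network lifetimes obtained from independent runs.

    Returns the worst (min), best (max), average, and standard deviation,
    plus the min-Max spread that is read off the range plots.
    """
    worst = min(lifetimes)
    best = max(lifetimes)
    return {
        "min": worst,
        "max": best,
        "avg": statistics.fmean(lifetimes),
        # Sample standard deviation (assumption); 0.0 for a single run.
        "std": statistics.stdev(lifetimes) if len(lifetimes) > 1 else 0.0,
        "spread": best - worst,
    }

# Hypothetical lifetimes from three runs, not taken from the paper.
summary = summarize_runs([10.0, 12.0, 14.0])
```

A deterministic heuristic would yield identical values on every run, so its spread and standard deviation would both be zero.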

Experimental results analysis

The proposed algorithm is designed to solve the DSN lifetime maximization problem while continuously monitoring all targets in the observing field. It is run in all of the defined scenarios along with all comparative algorithms under the same fair conditions. The experimental results are then presented as descriptive statistics, namely the min–Max range, average, and standard deviation (STD) values obtained over the different executions of each comparative algorithm, reported separately for each scenario. To this end, Table 7 shows the min–Max range of the lifetime obtained by the comparative algorithms in each scenario. Since each scenario has been run multiple times independently, the minimum (min), maximum (Max), average (Avg), and standard deviation (STD) values of the evaluation metric are reported.

As Table 7 reports, the range of values that D-CSA produces is better than that of the others; in addition, its average values outperform the other state-of-the-art algorithms in all scenarios (cf. Table 8). For a graphical view, Figs. 15, 16, 17, 18, 19, 20, 21, 22, and 23 are dedicated to the first through ninth scenarios, respectively.

Figure 15 depicts the performance of all comparative algorithms in the first scenario, where the number of targets in the field is 20 and the number of utilized sensors is 30. H-MNLAR and Hm-LifMax-BC clearly show constant behavior because they are heuristic or greedy algorithms that gradually complete the solution based on predetermined criteria. On the other hand, the proposed algorithm and the other comparative algorithms behave stochastically, which is why the output of each execution is different. As Fig. 15 shows, the distance between the min and Max values of GA is high, which indicates that its degree of convergence is low in comparison with the others. In contrast, this distance is low for the proposed D-CSA, which implicitly indicates a high level of convergence. Also, the Max value is attained in only a few cases, because the average value of D-CSA is a little far from its Max value. For GA and ACOSC, the average value is near the Max value, which means that most of the 20 executions yield results near the Max value. Finally, H-GATS competes closely with the HDPSO algorithm, but on average HDPSO beats H-GATS.

Figure 16 depicts the performance evaluation of all comparative approaches in the second scenario, where the number of targets in the field is 20 and the number of utilized sensors is 40. As mentioned earlier, H-MNLAR and Hm-LifMax-BC show constant behavior. On the other hand, the proposed algorithm and the other comparative algorithms behave stochastically. As Fig. 16 shows, the distance between the min and Max values is highest for the GA and ACOSC algorithms, which indicates that their degree of convergence is low in comparison with the others. In contrast, this distance is lowest for the proposed D-CSA and HDPSO, which implicitly indicates a high level of convergence. Also, the Max values are attained in only a few cases, because the average values of both D-CSA and HDPSO are a little far from their Max values. For GA the average value is near the Max value, but for ACOSC the average value is near the min value, which means that most of the 20 executions yield results near the Max and min values for GA and ACOSC, respectively. H-GATS again competes closely with the HDPSO algorithm, and on average HDPSO and H-GATS are the same, as Table 8 reports. Note that the average of H-GATS is near its min value.

Figure 17 is dedicated to the third scenario, where the number of targets in the field is 20 and the number of utilized sensors is 50. This figure depicts the performance evaluation of all comparative approaches in terms of network lifetime. As mentioned earlier, the heuristics H-MNLAR and Hm-LifMax-BC behave deterministically because these greedy algorithms work based on constant criteria in each stage, so each execution yields the same result. On the other hand, the proposed algorithm and the other comparative algorithms behave stochastically, or non-deterministically, which is why each execution yields a different result. As Fig. 17 shows, the distance between the min and Max values is lowest for the meta-heuristic-based algorithms other than HDPSO and H-GATS, which indicates that their degree of convergence is high in comparison with D-CSA. Except for HDPSO and H-GATS, whose average values are near the Max value, the average value of the other meta-heuristic-based algorithms lies approximately in the middle between the min and Max values. In the third scenario, the comparative HDPSO and H-GATS algorithms behave in the same way.

Figure 18 is dedicated to the fourth scenario, where the number of targets in the field is 30 and the number of utilized sensors is 50. This figure depicts the performance evaluation of all comparative approaches in terms of network lifetime. Apart from H-MNLAR and Hm-LifMax-BC, which naturally behave constantly, the other comparative algorithms and the proposed D-CSA behave stochastically; their min–Max ranges and average values are close to one another, but the proposed D-CSA marginally outperforms the others. On closer inspection, the reason is that, in the dataset distributed for the fourth scenario, the number of sensors able to monitor each critical target was low, which is why all algorithms converge sooner than in the other scenarios and with rather poor results. This scenario shows that when the number of critical targets is high in comparison with the number of active sensors, even a strong algorithm encounters challenges. In other words, although the number of alive sensors is rather high, they cannot cover the available targets because of the early battery depletion of the sensors that monitor the critical targets. In this scenario, HDPSO outperforms H-GATS.

Figure 19 is dedicated to the fifth scenario, where the number of targets in the field is 40 and the number of utilized sensors is 50. This figure depicts the performance evaluation of all comparative approaches in terms of network lifetime. The min–Max distance is rather low for GA, ACOSC, H-GATS, and HDPSO, whereas it is high for D-CSA. However, the average result obtained by the proposed D-CSA shows the superiority of this algorithm over its counterparts in terms of network lifetime extension. Note that the comparative heuristics yield inferior results in comparison with the other approaches as the input size of the scenario increases. In this scenario, as in the fourth scenario, the HDPSO algorithm outperforms the H-GATS algorithm.