Abstract

Information leakage has become an urgent problem in multiple Online Social Networks (OSNs). The interactive communication of users has raised several privacy concerns. However, current work on privacy measurement considers only the privacy disclosure of user profile settings and ignores the importance of profile attributes. To measure privacy efficiently, we consider the influence of attribute weight on privacy disclosure scores and propose a privacy measurement method that quantifies users’ privacy disclosure scores in social networks. By introducing the Technique for Order Preference by Similarity to an Ideal Solution (TOPSIS), we propose a Privacy Scores calculation model based on the Fuzzy TOPSIS decision method (PSFT), which calculates users’ privacy disclosure scores more accurately and can improve users’ privacy awareness in multiple OSNs. Users can reasonably configure their profile attributes based on privacy scores and attribute weight. We conduct extensive experiments on a synthetic data set and a real data set. The results demonstrate the effectiveness of our model.

Introduction

The Internet is overturning traditional social interaction at an alarming rate. It is estimated that billions of users have actively used OSNs so far [1]. With the growing popularity of online social application services, the scale of the online social networks built on WeChat, Weibo, Facebook, Twitter and many other applications has expanded, greatly enriching the diversity of human interactions and providing platforms for people to express their feelings [2]. These networks have also accumulated a vast amount of user information and interaction content, which can expose personal information and has driven further research on social networks. In OSNs, users can set up profiles that include structured and unstructured data, and the attribute settings of personal data on different social platforms are an indicator for measuring personal privacy. Moreover, the information exchanged between users across multiple social network platforms also poses a potential threat to users’ privacy. It should be emphasized that when users share information on OSNs, they may not consider access rights and sharing scope because they lack privacy awareness [3]. This can result in negative privacy-related experiences [4].

To prevent privacy disclosure, social platforms implement a series of privacy policies to protect the security of personal data [7], such as restricting access to users’ data and designing general-sum stochastic game models and access-control policies [5, 6]. However, users’ social information is real-time and complex, and the social information of different users is correlated. Although these policies are intended to protect users’ privacy to the greatest extent possible, social platforms still cannot provide absolute security for users [8,9,10]. Moreover, profile privacy settings on social platforms are subjective. Users differ in their levels of access, their privacy awareness when setting attributes, and their privacy requirements for individual attributes, which results in a range of privacy statuses. To measure the degree of privacy leakage more accurately from the perspective of users, the best solution is to quantify users’ privacy status and provide reasonable attribute configuration advice for each user.

In this study, a new privacy measurement model, PSFT, is proposed for solving the privacy measurement problem in multiple OSNs. The proposed approach is highly general and considers and measures the factors that influence privacy disclosure scores more comprehensively and accurately. Existing privacy measurement methods do not consider the importance of attributes on different social platforms. We take attribute weight fully into account based on fuzzy theory [11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44] and enable users to know their privacy status. At the same time, users can adjust their profile attribute settings according to the weight values. Compared with traditional methods, the significant contributions of this work are as follows.

- By evaluating the influence of attribute weight on privacy scores and combining it with the fuzzy TOPSIS decision method [12], we propose a computing model, PSFT, that ranks users’ final privacy disclosure scores and measures users’ privacy disclosure more accurately.

- According to the ranking, users can gain a clearer understanding of their privacy disclosure. Users with a large degree of disclosure can reduce the disclosure risk by changing their profile attribute settings.

- Users can reset the visibility of attributes according to attribute weight, thus increasing privacy awareness and reducing privacy concerns.

- Extensive experiments demonstrate the effectiveness of the PSFT model and its improvement in reducing the privacy risk of users.

The rest of this paper is organized as follows. Existing work on privacy measurement is reviewed in “Related work”. “Attributes privacy scores evaluation in OSNs” presents the evaluation of attribute privacy scores. “TOPSIS-based online social network privacy scores calculation model” provides the TOPSIS decision method and the overall privacy score calculation model PSFT. “Evaluation” describes the data sets and analyses the experiments. Finally, a summary and future work are given in “Conclusions”.

Related work

Privacy measurement is a challenging issue. Several papers have provided definitions of privacy measurement [13,14,15]. In privacy protection research, privacy measurement usually reveals the risk of private information leakage through metric indicators or methods. It reflects the strength of privacy protection methods from the perspective of disclosing private information.

In social networks, many works have measured the degree of privacy by calculating privacy disclosure scores. Michael et al. [16] first proposed a model for calculating privacy scores based on sensitivity and visibility in 2010. However, they did not verify the effectiveness of the model on a data set. Liu and Terzi [19] proposed a mathematical model based on sensitivity and visibility using Item Response Theory [17], and demonstrated the effectiveness and practical utility of the model on synthetic data and real data.

However, these models do not consider the relationships between attributes or whether they can be used to infer additional information about the users. Petkos et al. presented Pscore in 2015 [18]. The framework can use data-driven mechanisms to infer information that is not explicitly displayed. Inferred information is particularly useful for helping users understand what attackers may learn from analysing their OSN presence data. Pensa and Blasi proposed a further framework for data protection based on the model of Liu and Terzi [19, 20]. They calculated the users’ privacy scores and set a threshold for each user. When the threshold was exceeded, the framework notified the users and used a proactive learning approach to help exposed users customize their privacy settings. On this basis, Erfan applied a fuzzy inference system [29] and extended the calculation of users’ privacy scores to multiple social networks.

With regard to privacy setting configuration and privacy awareness in OSNs, providing indicators and mechanisms that promote personal privacy management has become an important issue [21, 22]. The related applications typically provide only a mechanism for configuring users’ privacy profiles. Most approaches focus on protecting profile information rather than on the visibility of other information posted by the users. Pensa offered some suggestions for solving these problems through the automation of privacy settings [20]. However, these methods often require some intervention from the users and do not solve the problem of raising privacy awareness. To quantify the amount of information leaked by users inadvertently, Becker proposed privacy awareness in 2009 [24] and provided solutions to reduce information loss. In addition to decreasing information loss, privacy awareness motivates users to take measures to lessen privacy risk. The Xue Feng model calculated the impact of attribute content and privacy awareness on privacy scores and was verified on multiple social platforms. Experiments show that the Xue Feng model can reduce information loss [27].

Other studies have assessed users’ privacy in ways other than sensitivity and visibility. Srivastava et al. described and calculated the Privacy Quotient [23], which measures the privacy of a user’s profile using a naive approach. They made full use of the Item Response Theory model to measure the privacy leakage of messages and text. Talukder et al. proposed Privometer [25], a tool that measures privacy leakage in social networks, including information obtained by malicious applications installed in the profiles of a user’s friends. Privometer ranks these friends according to their individual contributions to privacy leakage and offers self-sanitization recommendations to reduce the leakage. Moreover, many studies in recent years have transformed real-world problems into mathematical models [38,39,40]. Kumar applied fuzzy theory to transportation problems and transformed the fuzzy transportation problem into a crisp problem. Furthermore, he presented algorithms for solving optimization problems using fuzzy and intuitionistic fuzzy sets, and found the optimal objective value [41,42,43].

In our model, we assess the impact of attribute weight on privacy disclosure scores for multiple social platforms and introduce the fuzzy TOPSIS decision method to rank the privacy scores. According to the ranking results and the weight values, users with higher privacy scores are more likely to leak private information, and the attributes with higher weight can be reset.

Attributes privacy scores evaluation in OSNs

On social media networks, user attributes can be extracted from profiles, posts, photographs, and videos. We evaluate attribute privacy disclosure by calculating attribute privacy scores. The research focuses on two metrics that influence attribute privacy scores, namely sensitivity and visibility. In our model, we measure the sensitivity of the information and then calculate the visibility from four factors: accessibility of information, difficulty of data extraction, reliability of data, and privacy awareness, all of which have a direct impact on visibility. The four factors are aggregated through Euclidean distance to find the visibility cluster center of each user [27]. Next, by aggregating sensitivity and visibility, we obtain the attribute privacy scores. The calculation of users’ overall privacy scores and their ranking is introduced in the next section.

There is a set of users \(\Upsilon =\{u_{1},u_{2},\ldots , u_{m}\}\), and each user has n attributes on s OSN platforms. By calculating the sensitivity \(\theta \) and visibility v, the attribute privacy scores p are obtained. The overall privacy scores are denoted R. For ease of understanding, the main symbols used in this paper and their meanings are listed in Table 1.

Sensitivity

Sensitivity refers to the influence of various attributes on different social platforms, reflects the most intuitive impact of attribute settings on privacy scores, and represents the potential harm of attackers to target users. The higher the sensitivity of the attribute, the stronger the concern after the disclosure of the attribute content. Srivastava et al. [23] calculated the sensitivity scores of 11 attributes based on the quotient model to measure the privacy scores. The results showed that address, political views and contact number were highly sensitive, while birthdate and current town were less sensitive. We use the sensitivity values derived by Srivastava et al. [23], as shown in Table 2.

Visibility

Visibility represents how public the attribute information is. It depends on four factors. Accessibility is the degree of access to users’ information. Extraction difficulty covers the difficulty of extracting structured and unstructured data. Reliability concerns the reliability of attributes and other information, on which the accuracy of privacy measurement depends. Privacy awareness refers to the importance users attach to their privacy on social platforms. By evaluating accessibility, extraction difficulty, reliability and privacy awareness, we obtain the visibility of each attribute. The four factors have been quantified in [27], and the details are not repeated here. Euclidean distance is used to calculate users’ visibility [28]. Each user’s accessibility, extraction difficulty, reliability and privacy awareness form a four-dimensional vector. The vector is assigned to the classification center at the smallest Euclidean distance, and its visibility is the value represented by that center.

The formula for n-dimensional Euclidean distance is:

\[ d(x,y)=\sqrt{\sum _{k=1}^{n}(x_{k}-y_{k})^{2}} \qquad (1) \]

where x, y denote two n-dimensional vectors respectively.

After the classification centers are designated, the visibility of any sample is the value represented by its classification center [28]. We set the range of visibility values to [1, 6]. Finally, the visibility value of each attribute is obtained. The higher the visibility of an attribute, the greater the risk of information leakage.
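The nearest-center assignment described above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function names and the center coordinates in `CENTRES` are hypothetical, since the actual centers come from the quantification in [27, 28].

```python
import math


def euclidean(x, y):
    """Euclidean distance between two vectors of equal dimension."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def visibility(factors, centres):
    """Return the visibility value whose center is nearest to `factors`.

    `factors` is (accessibility, extraction difficulty, reliability,
    privacy awareness); `centres` maps a visibility value to a center.
    """
    return min(centres, key=lambda v: euclidean(factors, centres[v]))


# Hypothetical centers, one per visibility value in [1, 6]; NOT from the paper.
CENTRES = {v: (float(v),) * 4 for v in range(1, 7)}

user_factors = (3.2, 2.9, 3.4, 2.8)
print(visibility(user_factors, CENTRES))
```

With real data, `CENTRES` would be replaced by the classification centers designated for the data set.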

Calculation of attribute privacy scores

Formula (2) can be used to calculate users’ attribute privacy scores through visibility and sensitivity.

where \(v_{i}\) is visibility of attributes and \(\theta _{i}\) is sensitivity of attributes of user i. The higher the attribute privacy scores, the more serious the privacy leakage of users.

TOPSIS-based online social network privacy scores calculation model

Fuzzy logic

Fuzzy set theory is widely used in fuzzy modeling of human thinking, and it also addresses the uncertainty of decision information based on multiple criteria. In fuzzy sets, fuzzy terms are used to describe the mapping from linguistic variables to numerical variables [26]. The relevant definition of a fuzzy set is given as follows.

Definition 1 Let the set \(X\ne \emptyset \). The fuzzy set F is an object having the form [33]:

\[ F=\{\langle x,\mu _{F}(x)\rangle \mid x\in X\} \qquad (3) \]

where \(\mu _{F}\) is the membership function of fuzzy set F and \(\mu _{F}:X\rightarrow [0,1]\) represents the degree of membership of x in F.

In the fuzzy set F, the experts’ evaluation of attribute weight has no non-membership degree, which means that the experts only rate attributes by degree of importance. In addition, since it is difficult to quantify the importance of attributes on social platforms with precise values, and considering the requirements of the application field of the present work, triangular membership functions were adopted. The process of fuzzification and defuzzification comes next. A triangular fuzzy number is specified by the triple (a, b, c) together with its membership function. Defuzzification is the process of converting fuzzy numbers into single crisp numbers in fuzzy logic.
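To make the triangular fuzzy number concrete, the following Python sketch evaluates the standard triangular membership function for a triple (a, b, c) and defuzzifies the triple by the arithmetic mean of its three points. The function names are hypothetical, and the mean is only one common defuzzification choice, assumed here because the text does not spell out the formula.

```python
def triangular_membership(x, a, b, c):
    """Membership degree of x in the triangular fuzzy number (a, b, c)."""
    if not a <= b <= c:
        raise ValueError("expected a <= b <= c")
    if x == b:
        return 1.0  # peak of the triangle (also covers a == b or b == c)
    if x <= a or x >= c:
        return 0.0  # outside the support
    if x < b:
        return (x - a) / (b - a)  # rising edge
    return (c - x) / (c - b)  # falling edge


def defuzzify_mean(tfn):
    """Crisp value of a triangular fuzzy number (assumed mean-of-points rule)."""
    a, b, c = tfn
    return (a + b + c) / 3.0


print(triangular_membership(0.25, 0.0, 0.5, 1.0))
print(defuzzify_mean((0.0, 0.5, 1.0)))
```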

Treatment of attribute weight

Different social attributes have different effects on privacy disclosure scores. The configuration of attributes directly determines whether users’ privacy will be disclosed. Whether an attribute is important depends on the impact of its characteristics on privacy. Attributes that have a greater impact on privacy have a greater weight, while attributes with a lesser impact have a correspondingly lower weight. We therefore evaluate the weight values to generate more accurate privacy scores.

The evaluation of attribute weight is a difficult step in computing privacy scores, and the weight of different attributes has a great effect on the privacy scores. The definition of attribute importance depends on the experience and knowledge of each expert. The treatment of attribute weight constitutes the central contribution of this work.

Table 3 provides a detailed breakdown of how linguistic terms [33,34,35,36,37] supplied by experts were converted into fuzzy triangular numbers. To measure the information disclosure, we collect data on 11 attributes for each user in multiple OSNs.

Table 4 simulates the definition of the degree of importance for each attribute using the linguistic terms assigned by four experts. The purpose of this evaluation is to rate the importance of the users’ attributes to different degrees.

Table 5 shows the calculation process of the attribute weight values. First, the fuzzy average values of the attributes are computed, and then the fuzzy values are defuzzified using the average method. Finally, normalization is performed. The attribute weight values are applied in the calculation of the overall privacy scores.
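The weight pipeline described above (linguistic terms, fuzzy average, defuzzification, normalization) can be sketched in Python as follows. The linguistic scale `SCALE`, the example ratings and the function names are all hypothetical; the actual scale and expert ratings are those of Tables 3 and 4. The defuzzification step assumes that the "average method" means the arithmetic mean of the three points of the triangular number.

```python
# Hypothetical linguistic scale; the paper's Table 3 defines the real one.
SCALE = {
    "low": (0.0, 0.25, 0.5),
    "medium": (0.25, 0.5, 0.75),
    "high": (0.5, 0.75, 1.0),
}


def fuzzy_average(terms):
    """Component-wise mean of the triangular numbers of the experts' terms."""
    tfns = [SCALE[t] for t in terms]
    return tuple(sum(component) / len(tfns) for component in zip(*tfns))


def attribute_weights(ratings):
    """Map {attribute: [one term per expert]} to normalized crisp weights."""
    crisp = {}
    for attribute, terms in ratings.items():
        a, b, c = fuzzy_average(terms)
        crisp[attribute] = (a + b + c) / 3.0  # assumed "average method"
    total = sum(crisp.values())
    if total == 0:
        raise ValueError("all attributes were rated with zero importance")
    return {attribute: value / total for attribute, value in crisp.items()}


# Made-up ratings from four experts for two attributes.
ratings = {
    "address": ["high", "high", "medium", "high"],
    "birthdate": ["low", "medium", "low", "low"],
}
weights = attribute_weights(ratings)
print(weights)
```

The resulting weights sum to 1, which matches the constraint placed on the weights in Step 3 of the model.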

Overall privacy scores calculation model

Multiple criteria decision making (MCDM) is a sub-discipline of operations research [30, 31] that explicitly evaluates multiple conflicting criteria in decision-making. The difficulty of the problem stems from the existence of multiple criteria. MCDM focuses on structuring and solving decision-making and planning problems involving multiple criteria. Evaluating users’ privacy can be regarded as a decision-making problem. The TOPSIS method is a common comprehensive evaluation method and can accurately reflect the gaps between the evaluated alternatives.

TOPSIS, developed by Hwang and Yoon, is one of the typical decision-making methods [32]. It makes full use of the information in the data and can judge the advantages and disadvantages of each solution. It is based on two fundamental ideas, the positive ideal solution and the negative ideal solution. The positive ideal solution is the best alternative that can be considered: each of its criteria takes the best value among the alternatives. The negative ideal solution is the worst alternative: each of its criteria takes the worst value among the alternatives. Schemes are ranked by comparing each alternative with both the positive and the negative ideal solutions. A scheme is the best among the alternatives if it is both closest to the positive ideal solution and farthest from the negative ideal solution [30].

TOPSIS takes into account not only the shortest distance to the positive ideal solution but also the longest distance to the negative ideal solution, so as to determine the optimal solution. The TOPSIS procedure itself does not incorporate any subjective factors and yields a unique optimal solution. It is therefore well suited to the decision-making environment of social networks, which requires objectivity in assessing privacy leakage.

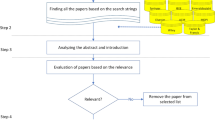

On the basis of the distance from each user’s extraction difficulty, accessibility, reliability and privacy awareness to the clustering center, we obtain the users’ visibility according to formula 1, and the attribute privacy scores are obtained by aggregating visibility with sensitivity according to formula 2. The general model of the proposed method, which combines attribute privacy scores with attribute weight, is presented in Fig. 1. First, the attribute privacy scores matrix is constructed from the user list and the users’ attribute privacy scores. The matrix is then normalized according to formula 4. The attribute weights are obtained through evaluation by decision experts. The attribute scores matrix and the weight matrix are then aggregated to obtain the attribute decision matrix in step 4. Next, the PIS (positive ideal solutions) and NIS (negative ideal solutions) of the evaluation matrix are calculated in step 5, and the distances from the attribute decision matrix to the PIS and NIS are calculated in step 6. The minimum overall privacy score of a user is the ideal scheme in the PSFT model. Finally, by calculating the relative closeness of a particular user’s privacy scores to the minimum privacy score, we obtain the overall privacy score for each user. The ranking of overall privacy disclosure scores is obtained according to the relative closeness. The steps of the TOPSIS-based online social network privacy scores calculation can be summarized as follows.

Step 1. Construct a matrix \([u_{ij}]_{m \times n}\) composed of m users (alternatives) and n attributes (criteria). \(u_{ij}\) represents the privacy score of the j-th attribute of the i-th user, i = 1, 2, \(\ldots \), m; j = 1, 2, \(\ldots \), n.

Step 2. The matrix \([u_{ij}]_{m\times n}\) is normalized to form the matrix \([NU_{ij}]_{m \times n}\). The values in this matrix range from 0 to 1, where 1 means that the user’s attribute privacy is completely leaked and 0 means no leakage of the user’s attribute privacy. The normalization method used is as follows:

where i = 1, 2, \(\ldots \), m; j = 1, 2, \(\ldots \), n.

Step 3. A set of attribute weights \(w_{j}(j=1, 2,\ldots , n)\), such that \(\sum \nolimits _{j=1}^{n} w_{j}=1\), has to be decided by experts.

Step 4. Calculate the weighted normalized decision matrix \(T=[t_{ij}]_{m \times n}\).

\(t_{ij}=w_{j}NU_{ij}\), i=1, 2, \(\ldots \), m; j=1, 2, \(\ldots \), n.

Step 5. Determine the ideal alternative \(t_{jI}\) and the non-ideal alternative \(t_{jN}\) for each attribute parameter.

\(t_{jI}=\min \limits _{i = 1}^m t_{ij} \), \(j=1,2,\ldots ,n\).

\(t_{jN}=\max \limits _{i = 1}^m t_{ij} \), \(j=1,2,\ldots ,n\).

Step 6. The distances between \(t_{ij}\) and the ideal and non-ideal alternatives are given by the Euclidean distance in the following equations:

\[ D_{i+}=\sqrt{\sum _{j=1}^{n}(t_{ij}-t_{jI})^{2}} \qquad (5) \]

\[ D_{i-}=\sqrt{\sum _{j=1}^{n}(t_{ij}-t_{jN})^{2}} \qquad (6) \]

where \(i=1,2,\ldots ,m\); j=1, 2, \(\ldots \), n.

Step 7. Calculate the relative closeness of each user’s privacy scores to the minimum privacy score and take it as the overall privacy score \(R_{i}\).

The higher the privacy score, the higher the user ranks, and the worse the user’s privacy status. From the ranking results, users can learn their degree of privacy protection. By combining the weight values with their overall privacy scores, users can narrow the sharing range of the attributes with higher weight values and set a higher privacy level for those attributes.
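The steps above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the PSFT implementation: the normalization in Step 2 is assumed to be column-wise vector normalization, the ideal and non-ideal alternatives are taken per attribute (minimum and maximum over users), and the overall score is assumed to be \(D_{i+}/(D_{i+}+D_{i-})\), chosen so that a higher score means a worse privacy status. The function name and the example data are hypothetical.

```python
import math


def topsis_privacy_scores(scores, weights):
    """TOPSIS-style overall privacy score per user (higher = more leakage).

    scores  -- m x n list of attribute privacy scores (rows are users)
    weights -- n attribute weights that sum to 1
    """
    n = len(weights)
    if any(len(row) != n for row in scores):
        raise ValueError("every user needs one score per attribute")

    # Step 2 (ASSUMED vector normalization; a zero column is left as zeros).
    norms = [math.sqrt(sum(row[j] ** 2 for row in scores)) or 1.0 for j in range(n)]
    # Step 4: weighted normalized decision matrix.
    weighted = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in scores]

    # Step 5: per-attribute ideal (least leakage) and non-ideal (most leakage).
    ideal = [min(row[j] for row in weighted) for j in range(n)]
    non_ideal = [max(row[j] for row in weighted) for j in range(n)]

    overall = []
    for row in weighted:
        d_plus = math.dist(row, ideal)  # Step 6: distance to the ideal
        d_minus = math.dist(row, non_ideal)  # Step 6: distance to the non-ideal
        total = d_plus + d_minus
        # Step 7 (ASSUMED closeness): 0 at the ideal, 1 at the non-ideal.
        overall.append(d_plus / total if total else 0.0)
    return overall


# Made-up data: three users, three attributes.
scores = [[1.0, 1.0, 1.0], [3.0, 2.0, 4.0], [6.0, 5.0, 6.0]]
weights = [0.5, 0.3, 0.2]
overall = topsis_privacy_scores(scores, weights)
ranking = sorted(range(len(overall)), key=lambda i: overall[i], reverse=True)
print(overall, ranking)
```

In this sketch the user with the smallest score on every attribute receives 0 and the user with the largest score on every attribute receives 1, so sorting in descending order lists the most exposed users first.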

Evaluation

This section shows the degree of privacy protection by ranking the privacy disclosure scores on multiple social platforms. We validate the PSFT model with a synthetic data set and a real data set on multiple platforms.

Case study I

In this experiment, we use the disclosed data of two users [29] together with randomly generated data for 13 other users. The data set contains information on 15 users with the 11 most representative profile attributes on four online social platforms, namely Facebook, ResearchGate, LinkedIn, and Google+, and it includes the accessibility values with attribute content and the data extraction difficulty of the two real users [27]. In this paper, the accessibility and data extraction difficulty values of the two users are used as the input values for users a and b. We then comprehensively quantify the visibility of user a and user b, find the classification center at the shortest Euclidean distance from each user, and obtain the classification result. The visibility of a sample is represented by this center, as shown in Table 6.

Following the calculation steps, we calculate the 11 attribute privacy scores of the 15 users, shown in Table 7. Combining them with the evaluation matrix for attribute weight \(\omega _{j}\) in Table 5, we obtain the attribute decision matrix T. We determine that the ideal alternatives PIS for the attributes are [2.4000, 0.7332, 4.2500, 0.6996, 0.9000, 0.6996, 1.2000, 2.4996, 1.8000, 3.3996, 4.0998] and the non-ideal alternatives NIS are [0.6000, 0.3666, 1.7000, 0.2332, 0.4500, 0.2332, 0.4000, 0.8332, 0.9000, 1.1332, 2.0499]. According to formulas 5–7, we obtain the values of \(D_{i+}\) and \(D_{i-}\) and finally the privacy score ranking of the 15 users, l > n > o > i > d > b > h > k > c > j > f > e > g > a > m, shown in Table 8. The higher the score, the more serious the privacy disclosure. Conversely, the lower the score, the greater the degree of privacy protection and the stronger the privacy security.

Fig. 2 compares the overall privacy disclosure scores of the 15 users as measured by the PSFT model, the Xue Feng model [27] and the Erfan model [29]. Overall, the privacy disclosure scores of the PSFT model are generally lower, because we consider the weight of attributes, namely the importance of the privacy disclosure of different attributes on different platforms. Most users show lower privacy disclosure scores under the PSFT model, except for ‘d’, ‘i’ and ‘l’. Users ‘d’ and ‘i’ allow a wider range of access to attributes with larger weight, which leads to higher overall privacy scores. User ‘l’ has the same content for the higher-weight attributes on different platforms, so this user has a higher privacy disclosure score and is more vulnerable to attack. The Erfan FIS model [29] considers neither the impact of attribute content on accessibility nor the impact of privacy awareness on visibility. Although the Xue Feng model [27] considers attribute content, its overall privacy scores are higher because users often enter the same content on different platforms. The PSFT model, in contrast, considers both the content and the weight value of the attributes. For attributes with greater weight, users can make reasonable adjustments to their profile settings. Therefore, the privacy of users in various social networks is more strongly protected, and a better social experience is obtained on different social platforms.

Case study II

We apply our model to multiple platforms that are widely used in real-world social networking. According to the survey, the most widely used social platforms in China are QQ, WeChat, Sina Weibo and Taobao. On these four platforms, users have registered attribute data. The sensitivity of the attributes is obtained through a questionnaire survey and analysis [27]. To ensure the authenticity and reliability of the experimental data, we investigate and collect the real social information of 100 college students and record their attribute information on the four social platforms. Owing to limited space, we select the information of 6 users whose use of social platforms is more diverse, namely ‘p’, ‘q’, ‘r’, ‘s’, ‘t’ and ‘u’, as the experimental data in Table 9. As in related studies, we use 1–4 to represent the accessibility of the attributes, where 1 means visible only to oneself, 2 means visible to oneself and family, 3 means visible to oneself, family and friends, and 4 means the attribute content is public [29]. A-Z represent the content of the attributes [27]. Similarly, after expert review, the weights of username, avatar, political belief and education are 0.53, 0.42, 0.65 and 0.71, and the weights of the other attributes are shown in Table 5.

The privacy scores of these six users under the PSFT model, the Xue Feng model [27] and the Erfan model [29] are shown in Fig. 3. Owing to insufficient privacy awareness, user ‘t’ allows a wider range of access to the more important attributes, whereas the other users have low privacy scores in the PSFT model. The Erfan model has the highest privacy scores, because the default attribute content filled in is the same on different platforms. The Xue Feng model does not consider that users’ attributes differ in importance. In our model, users can set a smaller sharing range for the attribute privacy level, so as to effectively prevent malicious attackers from prying into users’ privacy. To demonstrate the advantage of our model in multiple social networks, Fig. 4 compares the privacy scores of users ‘m’ and ‘n’ under the PSFT model, the Xue Feng model and the Erfan model on three and on four social platforms. As the number of platforms increases, attribute reliability and privacy awareness continue to improve. However, as shown in Fig. 4, the privacy scores of all three models increase with the number of platforms, which means that the more platforms are used, the greater the possibility of exposing privacy. In other words, attacks on users become easier, and the degree of privacy protection becomes worse. Overall, the privacy scores of the PSFT model are much lower than those of the Xue Feng model or the Erfan model, because users’ awareness of protecting the privacy of important attributes increases as they use more social platforms, and the sharing scope of attribute information with large weight shrinks, resulting in smaller privacy scores.

Change profile

To further verify the usability of our proposed model in practical social networking, we provide a confirmatory example. According to the evaluation of attribute weight, contact number, e-mail, address, religious views, and political views have higher weight. We set these attributes to more restrictive access settings. The experiment shows how to use the PSFT model to reduce privacy leakage. Taking user a and user b as examples, users can reasonably change their profile settings on the basis of the weight of attributes, as shown in Table 10. For example, the sharing range of \(a \)’s contact number on Facebook and ResearchGate is set to be accessible only to the user, the sharing range of \(a \)’s email on Facebook and LinkedIn is set to be accessible only to the user and family, the sharing range of \(b \)’s address on Facebook is set to be accessible only to the user, and so on.

As shown in Fig. 5, after the settings are modified, the privacy scores of both user a and user b are significantly reduced. Because the users set the accessible range of the profile attributes with larger weight to a higher privacy level, the overall privacy scores are lower, which meets the users’ demand for stronger privacy protection. In the real world, people have different requirements for privacy protection, so there is no general protection method. The fundamental purpose of calculating privacy scores is to help users understand their privacy status and reasonably set personal data attributes according to attribute weight, so that their privacy is protected from the outset.

We also verify the model on a single-platform data set. This data set is published by the Stanford Network Analysis Project (SNAP) [45], and we use its Facebook data set, which includes 4039 users and 10 user attributes. Owing to space limitations, 8 users were selected to validate the model on the Facebook data set. By calculating the overall privacy disclosure scores, users learn their privacy status and the attributes that are vulnerable to attackers. They then adjust the sharing range of the profile attributes according to the weight, so that the visible range of the attributes is narrower. The experimental results in Fig. 6 show that the privacy scores of users are significantly reduced and that the model is also suitable for a single-platform social network.

We propose a privacy measurement model combining TOPSIS to calculate privacy scores. In references [46,47,48], privacy measurement methods based on anonymity, information entropy and differential privacy are respectively proposed. In view of the existing privacy measurement methods and the privacy measurement model proposed in this paper, Table 11 explains the privacy measurement indicators, the relationship between measures and privacy intensity, and the advantages and disadvantages of different privacy measurement methods in detail.

Conclusions

In this paper, we propose a more accurate privacy score calculation model, PSFT, which reflects the strength of privacy protection through the disclosure of private information. To quantify the privacy leakage of users on social network platforms, we first combine sensitivity and visibility to calculate the privacy scores of attributes. Then, considering the importance of attribute weight, we propose a TOPSIS-based privacy score calculation model for online social networks that obtains the ranking of users’ overall privacy scores by applying the TOPSIS decision method. Finally, based on the ranking of the users’ privacy scores and the weight values of the attributes, users can reset their profiles, thus effectively reducing the risk of privacy leakage. We compare our method with the XueFeng model and the Erfan model on the same dataset and across multiple OSNs. The results show that the privacy disclosure scores under the PSFT model are generally lower, indicating that it better protects users’ privacy from disclosure.
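The ranking step summarized above can be sketched with classical (crisp) TOPSIS. This is a simplified stand-in: PSFT uses a fuzzy variant, whereas the sketch below works on plain numbers, treats every attribute score as a criterion where larger means more disclosure, and takes the ideal solution to be the column-wise maximum. The function name, the matrix, and the weights are hypothetical.

```python
import math


def topsis_closeness(matrix, weights):
    """Closeness coefficient of each row (user) to the ideal solution.

    matrix[i][j] is the privacy score of user i on attribute j (hypothetical data).
    A value near 1 means the user is close to the maximum-disclosure profile.
    """
    n_cols = len(weights)
    # Vector-normalize each column; fall back to 1.0 for an all-zero column.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) or 1.0 for j in range(n_cols)]
    # Apply the attribute weights to the normalized values.
    v = [[weights[j] * row[j] / norms[j] for j in range(n_cols)] for row in matrix]
    best = [max(row[j] for row in v) for j in range(n_cols)]
    worst = [min(row[j] for row in v) for j in range(n_cols)]
    scores = []
    for row in v:
        d_best = math.dist(row, best)    # Euclidean distance to the ideal solution
        d_worst = math.dist(row, worst)  # Euclidean distance to the anti-ideal solution
        total = d_best + d_worst
        scores.append(d_worst / total if total else 0.0)
    return scores


# Three hypothetical users scored on three attributes.
matrix = [
    [0.9, 0.8, 0.7],
    [0.1, 0.2, 0.1],
    [0.5, 0.5, 0.4],
]
weights = [0.5, 0.3, 0.2]

scores = topsis_closeness(matrix, weights)
# User indices ordered from highest to lowest disclosure.
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(scores, ranking)
```

In this toy input the first user has the highest score on every attribute and the second user the lowest, so they land at the two ends of the ranking, with the third user in between.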

The PSFT privacy measurement model is effective for solving the privacy measurement problem. However, the experts’ evaluation of attribute weight is not objective enough, and attacks that exploit background knowledge should also be considered. To defend against inference attacks, we can use (2,1)-fuzzy sets to measure the degree of privacy disclosure more comprehensively, through the membership evaluation of users’ background knowledge for attackers and the non-membership evaluation of attributes for users. Because social networks are large-scale and dynamic, existing decision-making methods cannot yet keep up with the dynamic changes of attribute settings in social networks. A decision model based on triangular fuzzy soft levels with dynamic intervals deserves further study for privacy disclosure scoring.

References

Statista (2019) Number of social media users worldwide from 2010 to 2021. https://www.statista.com/statistics/278414/number-of-worldwide-social-network-users/

Al-Asmari HA, Saleh MS (2019) A conceptual framework for measuring personal privacy risks in Facebook online social network. In: 2019 International conference on computer and information sciences (ICCIS), IEEE, pp 1–6

Joe MM, Ramakrishnan DB (2014) A survey of various security issues in online social networks. Int J Comput Netw Appl 1(1):11–14

Zhang S, Yao T, Arthur Sandor VK, Weng TH, Liang W, Su J (2021) A novel blockchain-based privacy-preserving framework for online social networks. Connect Sci 33(3):555–575

Wu Y, Pan L (2021) SG-PAC: a stochastic game approach to generate personal privacy paradox access-control policies in social networks. Comput Secur 102(102157):1–17

Mitchell D, El-Gayar OF (2020) The effect of privacy policies on information sharing behavior on social networks: a systematic literature review. In: Proceedings of the 53rd Hawaii international conference on system sciences, pp 4223–4230

Lin X, Liu H, Li Z, Xiong G, Gou G (2022) Privacy protection of China’s top websites: a multi-layer privacy measurement via network behaviours and privacy policies. Comput Secur 114(102606):1–20

Bui D, Shin KG, Choi JM, Shin J (2021) Automated extraction and presentation of data practices in privacy policies. Proc Priv Enhanc Technol 2:88–110

Amato F, Coppolino L, D’Antonio S, Mazzocca N, Moscato F, Sgaglione L (2020) An abstract reasoning architecture for privacy policies monitoring. Future Gener Comput Syst 106:393–400

Alemany J, Val ED, García-Fornes A (2022) A review of privacy decision-making mechanisms in online social networks. ACM Comput Surv 55(2):1–32

Feng F, Fujita H, Ali MI, Yager R, Liu X (2018) Another view on generalized intuitionistic fuzzy soft sets and related multiattribute decision making methods. IEEE Trans Fuzzy Syst 27(3):474–488

Kannan ASK, Alias Balamurugan SA, Sasikala S (2019) A novel software package selection method using teaching–learning based optimization and multiple criteria decision making. IEEE Trans Eng Manag 68(4):941–954

Chen B, Zhu N, He J, He P, Jin S, Pan S (2020) A semantic inference based method for privacy measurement. IEEE Access 8:200112–200128

Zhao Y, Wagner I (2022) Using metrics suites to improve the measurement of privacy in graphs. IEEE Trans Dependable Secur Comput 19(1):259–274

Hsu H, Martinez N, Bertran M, Sapiro G, Calmon F (2021) A survey on statistical, information, and estimation-theoretic views on privacy. IEEE BITS Inf Theory Mag 1(1):45–56

Michael E, Grandison T, Sun T, Richardson D, Guo S, Liu K (2010) Privacy-as-a-service: models, algorithms, and results on the Facebook platform. Proc Web 2:5–10

Baker FB, Kim SH (2014) Item response theory: parameter estimation techniques. Lan Test 2(2):117–126

Petkos G, Papadopoulos S, Kompatsiaris Y (2015) PScore: a framework for enhancing privacy awareness in online social networks. In: 2015 10th International conference on availability, reliability and security, pp 592–600

Liu K, Terzi E (2010) A framework for computing the privacy scores of users in online social networks. ACM Trans Knowl Discov Data 5(1):1–30

Pensa RG, Di Blasi G (2017) A privacy self-assessment framework for online social networks. Expert Syst Appl 86:18–31

Wagner I, Boiten E (2018) Privacy risk assessment: from art to science, by metrics. Data Privacy Management, Cry Bloc Tec 225–241

Bakopoulou E, Shuba A, Markopoulou A (2020) Exposures exposed: a measurement and user study to assess mobile data privacy in context. arXiv preprint arXiv:2008.08973. 1–18

Srivastava A, Geethakumari G (2013) Measuring privacy leaks in online social networks. In: 2013 International conference on advances in computing, communications and informatics (ICACCI), IEEE, pp 2095–2100

Becker JL (2009) Measuring privacy risk in online social networks. University of California, Davis

Talukder N, Ouzzani M, Elmagarmid AK, Elmeleegy H (2010) Privometer: privacy protection in social networks. In: 2010 IEEE 26th international conference on data engineering workshops, IEEE, pp 266–269

Zhu B, Xu Z (2016) Probability-hesitant fuzzy sets and the representation of preference relations. Technol Econ Dev Econ 24(3):1029–1040

Li X, Yang Y, Chen Y, Niu X (2018) A privacy measurement framework for multiple online social networks against social identity linkage. Appl Sci 8(10):1790

Li XF (2020) Research on privacy measurement method in social networks. Beijing University of Posts and Telecommunications

Aghasian E, Garg S, Gao L, Yu S, Montgomery J (2017) Scoring users privacy disclosure across multiple online social networks. IEEE Access 5:13118–13130

Rao RV (2007) Decision making in the manufacturing environment: using graph theory and fuzzy multiple attribute decision making methods. Springer, London

Figueira J, Greco S, Ehrgott M (2005) Multiple criteria decision analysis: state of the art surveys. Springer Science & Business Media, Berlin

Tzeng GH, Huang JJ (2011) Multiple attributes decision making—methods and applications. Lec Ecos Mathl Syst 404(4):287–288

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Lin MW, Chen ZY, Xu ZS, Gou XJ, Herrera F (2021) Score function based on concentration degree for probabilistic linguistic term sets: an application to TOPSIS and VIKOR. Inf Sci 551:270–290

Lin MW, Huang C, Xu ZS, Chen RQ (2020) Evaluating IoT platforms using integrated probabilistic linguistic MCDM method. IEEE Internet Things J 7(11):11195–11208

Lin MW, Chen ZY, Liao HC, Xu ZS (2019) ELECTRE II method to deal with probabilistic linguistic term sets and its application to edge computing. Nonlinear Dyn 96(3):2125–2143

Lin MW, Xu ZS, Zhai YL, Yao ZQ (2018) Multi-attribute group decision-making under probabilistic uncertain linguistic environment. J Oper Res Soc 69(2):157–170

Kumar PS (2016) A simple method for solving type-2 and type-4 fuzzy transportation problems. Int J Fuzzy Logic Intell 16(4):225–237

Kumar PS (2016) PSK method for solving type-1 and type-3 fuzzy transportation problems. Int J Fuzzy Syst Appl 5(4):121–146

Kumar PS, Hussain RJ (2016) Computationally simple approach for solving fully intuitionistic fuzzy real life transportation problems. Int J Syst Assur Eng 7(1):90–101

Kumar PS (2022) Computationally simple and efficient method for solving real-life mixed intuitionistic fuzzy 3D assignment problems. Int J Softw Sci Comput. https://doi.org/10.4018/ijssci.291715

Kumar PS (2020) Algorithms for solving the optimization problems using fuzzy and intuitionistic fuzzy set. Int J Syst Assur Eng 11(1):189–222

Kumar PS (2019) Intuitionistic fuzzy solid assignment problems: a software-based approach. Int J Syst Assur Eng 10(4):661–675

Al-shami TM (2022) (2,1)-Fuzzy sets: properties, weighted aggregated operators and their applications to multi-criteria decision-making methods. Complex Intell Syst. https://doi.org/10.1007/s40747-022-00878-4

Leskovec J, Krevl A (2014) SNAP datasets: Stanford large network dataset collection. http://snap.stanford.edu/data

Li NH, Li TC, Venkata S (2007) t-Closeness: privacy beyond k-anonymity and l-diversity. In: 2007 IEEE 23rd international conference on data engineering, IEEE, pp 106–115

Zhang HL, Shi YL, Zhang SD, Zhou ZM, Cui LZ (2016) A privacy protection mechanism for dynamic data based on partition-confusion. J Comput Rese Dev 53(11):2454–2464

Jorgensen Z, Yu T, Cormode G (2015) Conservative or liberal? Personalized differential privacy. In: 2015 IEEE 31st international conference on data engineering, IEEE, pp 1023–1034

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant Nos. 61872090, 62272103, 61872086, and 61972096, Natural Science Foundation of Fujian Province of China under Grant No. 2022J06020, Young Top Talent of Young Eagle Program of Fujian Province, China under Grant No. F21E0011202B01.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guo, L., Yao, Z., Lin, M. et al. Fuzzy TOPSIS-based privacy measurement in multiple online social networks. Complex Intell. Syst. 9, 6089–6101 (2023). https://doi.org/10.1007/s40747-023-00991-y

DOI: https://doi.org/10.1007/s40747-023-00991-y