Abstract

Document clustering has long been an important research direction in intelligent system. When being applied to process Chinese documents, new challenges were posted since it is infeasible to directly split the Chinese documents using the whitespace character. Moreover, many Chinese document clustering algorithms require prior knowledge of the cluster number, which is impractical to know in real-world applications. Considering these problems, we propose a general Chinese document clustering framework, where the main clustering task is fulfilled with an adaptive encoding-based evolutionary approach. Specifically, the adaptive encoding scheme is proposed to automatically learn the cluster number, and novel crossover and mutation operators are designed to fit this scheme. In addition, a single step of K-means is incorporated to conduct a joint global and local search, enhancing the overall exploitation ability. The experiments on benchmark datasets demonstrate the superiority of the proposed method in both the efficiency and the clustering precision.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Document clustering aims to group similar documents together and put the dissimilar ones into different clusters. Researches on document clustering play key roles in intelligent text processing and have been widely used in various advanced applications such as keywords extraction [1], collaborative filtering recommendation [2], text summarization [3], document retrieval [4] and other natural language processing tasks [5]. Generally, this task relies heavily on a commonly accepted clustering hypothesis, i.e., documents on the same topic show high similarity, while those belonging to different clusters distinguish a lot. That is, the guiding principle of document clustering is to minimize the intra-cluster similarity while maximizing the inter-cluster similarity.

Different types of algorithms have been developed for document clustering, such as the partitioning methods, the hierarchical methods, and the word and phrase-based methods. For the partitioning methods, various distance measures are adopted and documents are clustered accordingly [6, 7]. The hierarchical methods usually create a cluster hierarchy, in which the leaf nodes correspond to the input documents, the parent nodes are the merged clusters, and the topmost hierarchies are the final clusters [8, 9]. The word and phrase-based methods find the key sets of words so as to cluster the documents [10, 11]. In this work, we focus on the partitioning method since it is lightweight, simple to implement, and the number of model parameters is small [12]. Many techniques [13,14,15] in this category are based on or derived from K-means. However, the K-means algorithm gets easily trapped in a local optima and the clustering performance is, therefore, sensitive to the selection of initial cluster centroids. This unwelcome property restricts the usage of K-means based methods [16].

To solve the above limitation, the Evolutionary Co-mputation (EC) algorithms were adopted for global search. For example, a Particle Swarm Optimization (PSO)-based document clustering algorithm was proposed to perform a comprehensive search in the entire solution space [17]. Song et al. [18] put forward a hybrid evolutionary approach for document clustering. They exploited an evolutionary algorithm to improve the initialization of PSO and adopted the particles to find the optimal solution. Zhang et al. [19] developed a genetic algorithm-based aggregation method to construct enhanced clusters from several weak clustering results.

In addition to the common problems in document clustering, new challenges may arise from the language of the documents. That is, in contrast to English documents, it is impractical to directly split the Chinese documents using the whitespace character. The characters in the Chinese sentence do not have any space between them, and different ways of teasing apart the sentence may lead to different understandings. As such, it is urgently needed to design clustering algorithms that are specially developed for Chinese document clustering. Toward this end, the algorithm in [20] decomposes the task of Chinese document clustering into two phases. In the first stage, it preprocesses the Chinese documents using a hash-based method and constructs term vectors to represent the documents. Then, with the extracted features, a dynamical method based on the self-organizing maps was proposed for clustering. Geng et al. [21] put forward a three-layer hybrid algorithm to cluster Chinese microblogs. To start with, the K-means algorithm is adopted to group the microblog texts; then, an agglomerative algorithm is used to merge the small clusters with the same topic; afterward, the potential noise is eliminated in the third layer. Considering the fact that the new center of a cluster was easily affected by the isolated texts, Yao et al. [13] presented an improved clustering method to solve the isolated point problem.

Although plenty of works have been developed for Chinese document clustering, three major problems are still unsolved. First, there lacks a Chinese document clustering framework that provides global optimization capability. Second, many document clustering algorithms require the cluster number as the input. In practical working scenarios, nevertheless, manually specifying the number of document categories may be inaccurate, resulting in unsatisfactory results. Besides, most document clustering algorithms remain the problem of weak exploitation ability. They cannot fine-tune the cluster centroids to better locations and thus may trapped in a wrong cluster structure. To address the above-mentioned problems, we propose a general framework for Chinese documents clustering. The novelties and main contributions of this work are summarized as follows:

-

1.

We propose a general framework for Chinese document clustering, in which the preprocessing and feature extraction modules are used for effectively representing the dataset and the main clustering module is fulfilled with an evolutionary approach for global optimization.

-

2.

We propose an Adaptive Encoding-based Evolutionary Approach (AEEA) to adaptively estimate the cluster number, equipped with carefully designed crossover and mutation operators.

-

3.

To solve the problem of weak exploitation ability, we introduce a hybrid evolutionary approach with a single step of K-means. In the evolutionary process, the K-means module helps to fine-tune the cluster centroids and to enhance the exploitation ability of the proposed AEEA method.

-

4.

We compare our method with the state-of-the-arts on different Chinese document clustering datasets. Experimental results validate the effectiveness of our method.

The rest of this paper is organized as follows. Section “Related work” reviews the related document clustering algorithms. In Sect. “The proposed framework”, we introduce the proposed Chinese document clustering framework. Section “Experiments” shows the experimental results. Conclusions are drawn in Sect. “Conclusion”.

Related work

Generally, document clustering can be tackled by the phrase-based document indexing [22], concept factorization [23], predictive network [24], and the evolutionary algorithms [17, 19]. As the evolutionary algorithms are powerful for handling the NP-hard problems [25], we focus on this category and classify existing evolutionary algorithms into two classes considering whether the cluster number is pre-defined.

Evolutionary clustering with fixed cluster number

Algorithms in this category require the cluster number as the input. For example, Zhang et al. [19] put forward a genetic algorithm for document clustering, where the cluster centroids are fine-tuned in the evolutionary process. Yogesh et al. [26] combined the genetic algorithm and the discrete differential evolution algorithm for finding the best clustering centroids. A hybrid K-means algorithm-based on PSO was proposed by Kamel et al. [27], where the PSO module optimizes the cluster centroids, and the K-means module is used for generating and refining the solutions. In addition to the single-objective methods, multi-objective optimization methods can also be used for document clustering. A multi-objective genetic algorithm was proposed to solve the premature convergence problem [28]. This method takes two cluster validation indices as separate optimization goals. Laith et al. [29] proposed a multi-objective method that combined distance and similarity measures for improving the clustering performance.

Evolutionary clustering with variable number of clusters

Practically, it is hard to determine the underlying cluster numbers in advance. To handle this problem, methods in this category were designed to learn the underlying cluster number along the evolutionary process. For example, Cobos et al. [30] proposed a memetic algorithm for web document clustering, where the niching methods were used to promote the diversity in the population. Onan et al. [31] developed an improved ant algorithm with latent dirichlet allocation-based representation for document clustering. Furthermore, an improved two-phased genetic algorithm for document clustering was proposed by Akter and Chung [32]. By modifying the cluster centroids during crossover, this method allows the generation of new centroids, and therefore increases the searching space. In addition, several multi-objective optimization methods were proposed for dealing with document clustering. For instance, Abdul et al. [33] introduced a multi-objective genetic algorithm for clustering the web documents by considering the multi-view data representations.

The proposed framework

To handle the problem of document clustering, in this paper, we put forward a general clustering framework based on a novel global optimization strategy. Within this framework, various evolutionary approaches can be adapted for global search. As an illustration, we adopt an Adaptive Encoding-based Evolutionary Approach (AEEA) for Chinese document clustering.

As shown in Fig. 1, the general pipeline for our Chinese document clustering framework is introduced as follows. First, we conduct data preprocessing to analyze the document dataset. Then, the Vector Space Model (VSM) is constructed to represent the dataset. Afterward, the dimension of the initial feature representations is reduced for efficient computation, and the evolutionary approach is applied to get the final clustering results.

Data preprocessing

Given an unlabeled document dataset \(\{d_{i}\mid i=1,2,..., N\}\), we firstly remove the stop words for refining the data and split the sentences into meaningful words via word segmentation. The stop words denote some certain meaningless words in documents. For example, in English context, these words can be function words like ‘the’, ‘is’, ‘at’, etc. While in Chinese context, they can be “啊,哎,吧”, etc. Most stop words have no contribution in differentiating the topic of documents. On the contrary, they have negative effects such as misleading the clustering process and increasing the computational complexity.

After removing the stop words, we adopt the ‘Jieba’ software [34] to process the documents for word segmentation. Unlike the English sentence that directly adopts the whitespace character as the splitter, the Chinse sentence fuses all characters all together. Thus, to analyze Chinese documents, we require additional efforts to segment the words, and the ‘Jieba’ provides an automatic way. In addition, the segmentation can be performed at different granularities. For example, a university name “华南理工大学” can be split into a coarse granularity “华南理工\(\setminus \)大学” or a fine granularity “华南 \(\setminus \) 理工 \(\setminus \) 大学”. We adopt the full mode of ‘Jieba’, which will list all possible words in the sentence, providing the finest granularity. It helps us to fully address the semantics of Chinese sentences, but the side effect is that it brings relatively higher problem dimensionality. After preprocessing, the documents consist of different meaningful words, providing the convenience for the following feature extraction procedure.

Feature extraction using VSM

We construct the feature vectors via VSM [35] to extract the compact representation of initial documents. We first count the word frequencies and then construct the document weight vector as an efficient feature. For example, when the ith document \(d_{i}\) is traversed, the frequency \(z_{d_{i},j}\) is incremented by one each time the feature word j appears. Afterward, the Term Frequency (TF) and Inverse Document Frequency (IDF) values of each feature word j in the ith document \(d_{i}\) are calculated as

where N is the total number of documents in the whole dataset, and \(u_{j}\) is the number of documents that contain the feature word j. Physically, TF\((d_{i},j)\) can be interpreted as the regularized word frequency and IDF\((d_{i},j)\) represents the anti-document frequency. With these notations, the weight of the feature word j in the document \(d_{i}\) can be calculated as

All the weights of feature words in the document \(d_{i}\) work together to form the feature vector of the ith document, i.e., \({\varvec{{f}}}_{i}\).

The extracted feature vectors are concatenated as the representation of the dataset, i.e., the feature matrix \(M=[{\varvec{{f}}}_{1},{\varvec{{f}}}_{2},...,{\varvec{{f}}}_{N}]^{T}\). We also reduce the dimension of the feature vectors by applying Principal Component Analysis (PCA) [36] to preserve 90% of the original data variation. Here, we need to strike a balance between data quality and data dimensionality. According to researches in [37, 38], the 90% is a recommended setting that covers most of the data information while keeping a good dimension reduction rate. The reduction of the data dimensions brings at least two benefits: (1) the documents generally have tens of thousands of attributes. By performing dimension reduction, the computing time and resources can be significantly reduced; (2) the original feature vector contains many zero values. Through dimension reduction, these sparse features can be compressed and the potential redundancy and noise are reduced so as to accurately represent the documents.

AEEA for document clustering

With the extracted features, we propose a novel Adaptive Encoding-based Evolutionary Approach (AEEA) for Chinese document clustering. The flexible encoding scheme is able to adaptively adjust the cluster number. To fit this scheme, new crossover and mutation operators are proposed so as to adaptively adjust the cluster number. Besides, a single step of K-means is introduced in the selection step for enhancing the exploitation ability. The details of our AEEA algorithm are presented as follows.

Population initialization

Initialization of the population is to generate np individuals, where each individual encodes the centroids of randomly generated clusters. Specifically, for the ith (i = 1, 2, ..., np) individual, an integer \(K_{i}\) is randomly chosen within the range of [2, \(\sqrt{N}\)]. Afterwards, the \(K_{i}\) cluster centroids are randomly selected from columns of the feature matrix M, constituting the parameter vector of ith individual. The length of each parameter vector is \(D\times K_{i}\), where D represents the dimension of the feature, i.e., the number of feature words in current dataset. Here, by adopting an adaptive encoding scheme, different individuals can be used to model various cluster numbers. Finally, when the evolutionary process is terminated, the optimal cluster cluster number is learned from the individual with the best fitness. Figure 2 shows three examples of the encoding scheme in a 2-D dataset. For instance, the first individual has two clusters (\(K_{1}\) = 2) and hence its parameter vector contains four dimensions. After randomly selecting the cluster centroids, the first individual becomes (1.8, 2.2, 1.9, 2.3). Similarly, the parameter vectors of the last two individuals contain six and eight dimensions to encode three and four clusters, respectively.

Note that there are a few existing EAs that can adapt the cluster number, but most of them adopt the fixed length encoding scheme [39, 40]. The adjusting of cluster number is conducted through posing additional activation thresholds of preserving or removing some invalid clusters within the chromosome. Compared to our AEEA with adaptive encoding scheme, these methods endure encoding redundancy problems and require activation control strategies to determine the cluster number.

Illustrations of the cross-dimension learning error. a The individuals exchange information in the correct way according to the spatial information (marked with the solid line). b The individuals exchange information in the wrong way according to the index-based information (marked with the dotted line)

Crossover

The classic crossover operator, i.e., the indices-based crossover, remains the problem of cross-dimension learning error. As illustrated in Fig. 3, an individual may exchange wrong information using the indices-based cross-over operator. By taking the spatial information into consideration, we design a new crossover operator under the adaptive encoding scheme. The crossover operator can effectively exchange the information between the adaptive length individuals without incurring the cross-dimension learning error. As shown in Fig. 4, the new crossover operator adopts a subspace swapping strategy so as to exploit the spatial information. The detailed procedures are presented as follows.

Step (a): For the target vector \(X_{i}\) in the current population, we first generate a random number \(r_{1}\in [0,1]\). If \(r_{1}\) is smaller than the crossover rate CR and the target vector \(X_{i}\) contains at least two data points, then proceed to step (b). Otherwise, the trial vector \(U_{i}\) will be a reproduction of \(X_{i}\).

Step (b): A paired vector \(V_{i}\) is randomly selected from the current population for crossover. Next, we randomly select two cluster centers \({\textbf {m}}^\mathrm{{first}}\) and \({\textbf {m}}^\mathrm{{second}}\) from \(V_{i}\).

Step (c): We calculate the distance of \({\textbf {m}}^\mathrm{{first}}\) and \({\textbf {m}}^\mathrm{{second}}\) and set the radius \(rd_{j}\) of the swapping space to half of the distance. The midpoint of these two centers is taken as the center O. Specifically, the center O and the radius \(rd_{j}\) are calculated as

Then, a hypersphere, representing the exchanging subspace, is formed using O and \(rd_{j}\) as the center and the radius respectively. Through the swapping operator, the numbers of clusters for different individuals are varied and the corresponding cluster structures are also changed. On the other hand, as the proposed swapping operator is not based on the index, the genes in some positions of the individual have the opportunity to learn information from the genes in other positions of the individual. Since the subspace swapping strategy can increase the population diversity, it helps to enhance the exploration ability of our AEEA algorithm. Note that the subspaces determined by the two vectors are always consistent, because they indeed represent the same area in the spatial layout.

Step (d): For the target vector \(X_{i}\), we use the consistent subspace defined in Step (c) to retrieve the subset of the cluster centroids in \(X_{i}\). Then, we exchange the selected cluster centroids of \(V_{i}\) and \(X_{i}\) to generate the trial vector as

where \(\otimes \) is the exchange procedure. As shown in Fig. 4, the cluster numbers in the swapping subspace of \(X_{i}\) and \(V_{i}\) are distinct. This indicates that the cluster number decoded from the trail vector \(U_{i}\) could be different from those decoded from parent vectors. In this way, the new crossover operator supports the flexibility of our AEEA algorithm in adaptively adjusting the cluster number. Practically, if the cluster number in the trial vector \(U_{i}\) is less than the minimum cluster number \(K_\mathrm{{min}}\) = 2 or exceeds the maximum number \(K_\mathrm{{max}}=\sqrt{N}\), the cluster number of \(U_{i}\) will be set to that of \(X_{i}\), and the cluster centroid in the individual \(X_{i}\) will be replaced by the nearest cluster centroid in the paired vector \(V_{i}\).

To summarize, the proposed crossover operator not only enables the flexibility of adjusting the cluster number, but also tackles the problem of the cross-dimension learning error. The target and paired vectors exchange the information through the consistent subspace. In this way, the trail vector can learn the spatial information to reach a better clustering structure, which is different from the crossover operator used in traditional genetic algorithm that conducts index-based crossover. Thus, the new crossover operator is well suitable for our adaptive encoding scheme. By effectively exploiting the spatial information, the potential error from cross-dimension learning can be fixed.

Mutation

The traditional genetic algorithm prefers to use the uniform distribution as the mutation mediation mechanism. As such, cluster centroids will be perturbed in an uniform way. However, the real-world problems may be affected by the noise, which cannot be accurately modeled using the uniform distribution. We, instead, adopt the Gaussian distribution for fine-tuning the cluster centroids so as to enhance the exploitation ability. For each cluster centroid decoded from the mutant vector \(M_{i}\), we first generate a random number \(r_{2}\in [0,1]\). If \(r_{2}\) is smaller than the mutation probability \(M_{u}\), the cluster centroid in the mutant vector \(M_{i}\) is updated as

where \({\varvec{{m}}}_k\) represents the kth cluster centroid decoded from the mutant vector; G(0, 0.1) generates random numbers following the Gaussian distribution, and 0 and 0.1 are the mean and standard deviation, respectively; \({\varvec{{ub}}}\) and \({\varvec{{lb}}}\) are the upper and lower bounds of the range of feature vectors, respectively. The Gaussian mutation fine-tunes the cluster centroids in small neighborhoods, and thus the local and global searches can be carried out at the same time.

Objective evaluation

For document clustering, the distance between the cluster centroids should be maximized while the intra-cluster distance is expected to be minimized. Both the internal indices and external indices [41] can be adopted for validating the clustering results. In this work, we use the Davies-Bouldin (DB) [42] and I [43] indices owning to their popularity. The two indices are briefly reviewed as follows.

DB index To calculate the DB index, we first calculate an index value \(R_{i}\) that corresponds to each cluster centroid

where \(e_{i}=(1/N_{i})\sum _{{\varvec{{f}}}_{i}\in C_{i}} \mathrm{{dist}}({\varvec{{f}}}_{i},{\varvec{{m}}}_{i})\) is the average error within cluster i; \({\varvec{{m}}}_{i}\) represents the cluster centroid for concise. \(D_{ij}=\mathrm{{dist}}({\varvec{{m}}}_{i},{\varvec{{m }}}_{j})\) is the distance between the cluster centroids i and j; \(N_{i}\) denotes the number of documents in cluster \(C_{i}\); \( {K}\) is the number of clusters. Then, the DB index is formulated as

Since the clustering hypothesis is to maximize the inter-cluster distance and minimize the intra-cluster distance, a smaller DB index indicates better clustering results.

I index The I index includes three mutually competing factors are defined as

where \( {K}\) is the number of clusters; P is a dataset-dependent parameter, which is commonly set to the number of samples N; \(E_k\) and \(D_\mathrm{{max}}\) are calculated as

where \({\varvec{{m}}}_{k}\) denotes the kth cluster centroid, \({\varvec{{f}}}_{i}\) is the ith document and \(C_{k}\) represents the kth cluster. Besides, the parameter \(p>1\) controls the contrast of the I index for different clustering configurations. Generally, a larger I index indicates better clustering results.

To obtain the above indices, the distances between documents need to be calculated. It is generally accepted that the distance measure, or equivalently, the similarity measure is important for document clustering [44]. Previously, different types of distance measures have been adopted to fit various scenarios. In this work, we conduct experiments to evaluate the clustering performance using three commonly used distance measures, i.e., the cosine distance, the Euclidean distance and the Manhattan distance. The distance measures can be calculated as

where \({\varvec{{f}}}_{i}\) and \({\varvec{{f}}}_{j}\) denote the feature vectors of the cluster centroids i and j. The effects of the three distance measures will be examined in our experimental section.

Selection

First, we calculate the fitness of the mutant vector \(M_{i}\). After the cluster centroids are determined, samples will be assigned accordingly. For each data point, we calculate its distances to all the cluster centroids in \(M_{i}\) and assign it to the nearest cluster.

In the evolutionary process, there remain lots of zero values in the feature vectors. This is because, when conducting the words frequency, some words will not appear in a single document. The clustering results may lead to empty clusters, containing less than two data points. To relieve this side effect, we adopt a random reassignment method [40] to construct clusters. The clustering results can be evaluated through the validation indices, and we adopt the DB index and I index in this work. Then, the current individual \(X_{i}\) and the mutant vector \(M_{i}\) are compared and the one with better fitness is proceeded to next generation

Single step of K-means

In addition to the empty clusters, the weak local search ability may also lead to zero values in many dimensions. Considering the fact that the large number of zero values will increase the sparsity of the feature matrix and thus bring problems for optimization, we introduce a single step of K-means to tackle it. After selection, each data point has its own cluster membership. The single step of K-means is to update the cluster centroids through averaging all data points within current cluster and thus the cluster center gains more information by considering the whole cluster. In this way, the single step of K-means not only prevents the feature matrix from being too sparse, but also speeds up the local search. This strategy enables our algorithm to well exploit the clustering space as well as enhancing the computation efficiency.

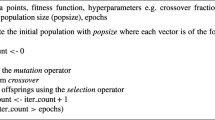

In summary,the overall procedure of AEEA is summarized in Algorithm 1. The algorithm takes advantage of our general framework and thus is highly effective for the Chinese document clustering task. Besides, note that the encoding scheme we adopt is more efficient than the traditional fixed length encoding scheme in representing solutions of uncertain lengths. The crossover and mutation operations are designed by adding spatial operators to the traditional genetic algorithm. These operations can also be extended to other evolutionary algorithms for similar problems.

Experiments

Experimental setup

To validate the accuracy and effectiveness of our framework, we conduct extensive experiments for performance evaluation. As summarized in Table 1, the experiments are based on five Chinese document datasets according to the Fudan corpus. Dataset D3-600 contains samples from three categories, i.e., the computer, economy and politics (“计算机,经济,政治” in Chinese), with 200 documents per category. To construct dataset D4-800, we select different topics, i.e., the environment, history, agriculture and sports (“环境,历史,农业,体育” in Chinese). To validate the scalability of our AEEA algorithm, we then merge D3-600 and D4-800 into a large dataset D7-1400. That is, D7-1400 contains seven categories, i.e., “计算机,经济,政治,环境,历史,农业,体育” in Chinese. Furthermore, we increase the volumes of documents in D7-3500 with 500 documents per category, resulting in 3500 samples in the whole dataset. In D10-2000, we increase the category number to ten, i.e., politics, environment, history, agriculture, sports, transportation, education, military, medicine and art (“政治,环境,历史,农业,体育,交通,教育,军事,医学,艺术” in Chinese), with 2000 samples in the whole dataset. In D12-4500, we further enrich the category number to 12, i.e., computer, economy, politics, environment, history, agriculture, sports, transportation, education, military, medicine and art (“计算机,经济,政治,环境,历史,农业,体育,交通,教育,军事,医学,艺术” in Chinese), with 4500 samples in the whole dataset.

We compare AEEA with a classical algorithm K-means [16], and four state-of-the-art algorithms, i.e., Density-K-means [14], KSI-H-K [21], Two-phased Genetic Algorithm (TGA) [32] and Spectral Clustering algorithm with PSO (SCPSO) [45]. K-means requires a pre-defined cluster number as input to partition the document dataset into K clusters. The Density-K-means takes the shape of clusters into consideration and improves the performance of traditional K-means. KSI-H-K constructs a model that contains three layers, each layer is set up to handle big clusters, noise and clustering result refinement issues, respectively. TGA is a two-phased genetic algorithm. The method allows the crossover point to be anywhere in the chromosome. SCPSO combines the spectral clustering with the PSO method. On the one hand, the spectral clustering helps to recognize the non-convex distribution. On the other hand, the PSO module enables the hybrid algorithm to better explore the global space. For all competitors, we adopt the parameter settings recommended in their original papers. The K-means, Density-K-means algorithms and the first and third layers of KSI-H-K are terminated when the cluster centroids are not updated. And the second layer of KSI-H-K ends up when the merge condition is reached. Besides, TGA, SCPSO and the proposed AEEA are assigned with a pre-defined maximum number of fitness evaluation times \(10^{6}\).

For the AEEA algorithm, the size of population np is set to 100. The minimum and maximum cluster numbers are set to 2 and \(\sqrt{N}\) respectively. The crossover rate CR is set to 0.4, while the mutation rate \(M_{u}\) varies with the iteration times G

where \(G_{\text {max}}\) denotes the maximum generations and is set to \(10^{6}/np\). All the simulation results were conducted on a DELL computer with an \(\mathrm Intel^{TM}\) \(\mathrm Core^{TM}\) CPU at 3.30 GHz. To avoid undesired random disturbance, we repeat each algorithm 30 times on all the four datasets and report the average values for comparison.

Following [46], we adopt the commonly used precision, recall and F-score to examine the performance of competing algorithms. The three measures are defined as

where TP, FP and FN represent the true positive, the false positive and the false negative respectively. As the F-score is a way of combining the precision and recall of the model, it is usually more convincing than the precision and recall for performance evaluation [47].

Experiment results

Validation by the DB Index

First, we use the DB index as the fitness evaluation, for guiding the optimization direction. Besides, following [46, 48], three external indices are adopted to evaluate the performance of competing algorithms. Table 2 shows the mean clustering results with the standard deviations, including the average DB index value, the precision, the recall and the F-score. In addition, at the bottom of Table 2, we provide the average F-score of different algorithms. For K-means and Density-K-means, the ground-truth cluster number is adopted as the prior information. In KSI-H-K, a cluster number need to be given for initial data clustering, and the second layer set up the parameter in AGNES to perform cluster merge, which can adaptively adjust the cluster number to some extent. The remainders, namely, TGA, SCPSO and the proposed AEEA, adaptively adapt the cluster number during the optimization. The explanation for the boldface in Table 2 is marked in blue fold.

According to the F-score, AEEA exhibits the best performance on all the datasets, with \(6\%\) improvements over the second best algorithms. K-means algorithm witnesses the lowest F-score. Although obvious improvements are observed for the Density-K-means, the performance is still far from satisfactory. What’s worse, the Density-K-means shows significantly worse performance when the number of documents is increased. The two competing evolutionary algorithms and AEEA can determine the cluster number adaptively and thus obtain relative better performance. The F-score of AEEA is better than those of the KSI-H-K, TGA and SCPSO algorithms. This may come from the innovative design of our evolutionary operators and the appropriate utilization of the single step of K-means. Besides, although the recall of TGA and SCPSO may be comparable to that of AEEA on some datasets, but these two algorithms witness much smaller F-scores than AEEA. This indicates that TGA and SCPSO would likely fail to correctly find all samples in the same group.

Compared to TGA and SCPSO, the AEEA algorithm tackles the problem of cross-dimension learning error, which enables the exchange individuals to gain the correct information. And thus, it is able to find a better fitness value and thus obtain a higher clustering precision. SCPSO shows better performance than TGA, because it adopts spectral clustering that helps to detect clusters of arbitrary shapes. This way, SCPSO is able to discover the correct cluster structure in an efficient and accurate way. Although the prior information on the underlying cluster number is used, the three K-means-based algorithms cannot identify clusters with non-convex shapes and thus may fail to find the complex underlying clustering structure.

Further, convergence curves of AEEA are compared with TGA and SCPSO. The K-means based algorithms are excluded, because they stop within 10–100 iterations. Taking the D4-800 and D7-3500 datasets as examples, Fig. 5 presents the convergence curves of different algorithms using the DB index values. As AEEA obtains lower DB index value, it is always able to reach a better solution compared with TGA and SCPSO. As shown in Fig. 5b, when the volume and category of documents increase, SCPSO may fall into local optimum at the initial stage, and thus fails to reach the global optimal solution in the later stages. Besides, as the performance of spectral clustering is quite sensitive to small changes in the similarity graph, the clustering results of SCPSO relies heavily on the model parameters.

Validation by the I Index

Next, we use the I index as the fitness evaluation, and the results are presented in Table 3, with the best results marked in boldface. It can be observed that our AEEA algorithm outperforms other methods on all datasets.

We also take the D4-800 and D7-3500 datasets as examples to examine the convergence of competing algorithms. From Fig. 6a, we can see that AEEA obtains larger I index value, showing the ability of reaching a better solution compared with TGA and SCPSO. In both examples presented in Fig. 6, the AEEA algorithm obtains better fitness than the other two evolutionary algorithms.

Significance tests

To examine the significance of the performance improvements in AEEA, we conduct statistical tests based on the fitness evaluation indices. Particularly, the Wilcoxon’s rank-sum test [49] is performed for one-on-one comparisons, while the Kruskal Wallis (KW) test [50] is conducted for multiple comparisons. In Tables 4 and 5, we presents the significance tests using DB and I indices. The symbol (+) indicates that the performance of AEEA is significantly better than the competing algorithm(s), whereas the symbol (-) indicates the opposite. In both examples, the differences between the results of AEEA and those of other algorithms are statistically significant, showing the significantly better performance of AEEA in all comparisons. To summarize, our AEEA algorithm can achieve statistically better results since the p-values are always smaller than 0.05.

Further study for AEEA

In this section, we conduct a detailed investigation to afford a comprehensive understanding of AEEA.

Clustering results under different distance measurements

In this part, we run AEEA by adopting under different distance measurements, i.e., the Manhattan distance, the Euclidean distance and the Cosine distance. The results are presented in Table 6, with the best results marked in boldface. As can be seen, AEEA always obtains the best clustering performance when the cosine distance is adopted. This indicates that the cosine distance is more suitable for measuring the similarities among documents. With the increase of the data volume and dimension, the clustering results of Euclidean and Manhattan distances become worse. Thus, we equip AEEA with the cosine distance measure for all experiments in this manuscript.

Parameter investigation

There are three controlling parameters in AEEA, i.e., the crossover rate CR, the mutation rate \(M_{u}\) and the size of population np. Different parameter settings have a direct influence on the clustering results. Following the classical work [51], we set the population size np to 100. Physically, the mutation rate represents the disturbance probability of the clustering center. We thus design an adaptive adjustment process to decrease the mutation probability with the increase of the iteration number. This way the disturbance of population is reduced in the late evolution stage. We conduct further investigations on the setting of CR. As examples, we test the performance of AEEA with CR adjusted from 0.1 to 0.9 and the F-score is regarded as the comparative indicator. Figure 7 shows the effects of setting different CR values on the final clustering performance. On all datasets, when CR is set to 0.4, AEEA reaches the best performance, and hence we empirically set CR to 0.4 to ensure that AEEA always works efficiently and accurately.

Time complexity analysis

This part further compares the time complexity of AEEA and its competitors. The evolutionary procedure is generally divided into two parts: 1) different genetic operators and 2) the fitness evaluation. In the first part, the crossover and mutation operators adopt comparable time complexity, i.e., O(K) for each individual. Besides, the single step of K-means takes an additional O(K) cost. For fitness evaluation, it takes O(\(N\times K\)) time. Thus, our AEEA algorithm requires a total cost of O(\(\text {MaxFes} \times N\times K\)), where \(\text {MaxFes}\) denotes the maximum of fitness evaluations. For the compared TGA and SCPSO, they have exactly the same time complexity with AEEA. We also present the practical running time of the three evolutionary algorithms in the Fig. 8, it can be seen that the time consumed by AEEA is slightly higher than the other two algorithms. But considering the significantly improved accuracy, this magnitude of increase in the running time is acceptable.

Conclusion

In this work, we propose a general evolutionary framework for Chinese document clustering. This novel framework includes the evolutionary approach as the main clustering techniques and thus provides opportunities for designing new global optimization models. Specifically, within this framework, we design an Adaptive Encoding-based Evolutionary Approach (AEEA) so as to adaptively learn the cluster numbers. The experimental results show that our AEEA algorithm outperforms all competitors on all datasets. To summarize, our framework is flexible and scalable for solving the practical document clustering tasks and will raise promising prospects for applications in many intelligent systems.

Future directions of this work may include the enhancement of the AEEA model. For example, advanced models that are less sensitive to distance measures can be considered to remove the noisy points and to discover clusters of arbitrary shapes.

References

Huang Z, Xie Z (2022) A patent keywords extraction method using textrank model with prior public knowledge. Complex Intell Syst 8(1):1–12

Chen J, Zhao C, Chen L et al (2020) Collaborative filtering recommendation algorithm based on user correlation and evolutionary clustering. Complex Intell Syst 6(1):147–156

Zhang Q, Lu J, Jin Y (2021) Artificial intelligence in recommender systems. Complex Intell Syst 7(1):439–457

Cong H, Chen W-N, Yu W-J (2021) A two-stage information retrieval system based on interactive multimodal genetic algorithm for query weight optimization. Complex Intell Syst 7(5):2765–2781

Yin F, Wang Y, Liu J, Tosato M (2021) Modeling multi-prototype Chinese word representation learning for word similarity. Complex Intell Syst 7(6):2977–2990

Jain AK, Dubes RC (1988) Algorithms for clustering data. Prentice-Hall Inc, USA

Muflikhah L, Baharudin B (2009) Document clustering using concept space and cosine similarity measurement. In: 2009 international conference on computer technology and development, vol 1, pp 58–62

Voorhees E (1986) Implementing agglomerative hierarchic clustering algorithms for use in document retrieval. Inf Process Manag 22:465–76

Gil-Garcia R, Pons-Porrata A (2010) Dynamic hierarchical algorithms for document clustering. Pattern Recognit Lett 31(6):469–477 (cIARP 2008: robust and efficient analysis of signals and images)

Dhillon IS (2001) Co-clustering documents and words using bipartite spectral graph partitioning. In: Proceedings of the seventh ACM SIGKDD international conference on knowledge discovery and data mining, ACM, pp 269–274

Dhillon IS, Mallela S, Modha DS (2003) Information-theoretic co-clustering. In: Proceedings of the ACM SIGKDD international conference on knowledge discovery and data mining, ACM, pp 89–98

Elavarasi SA, Akilandeswari J, Sathiyabhama B (2022) A survey on partition clustering algorithms. Int J Enterprise Comput Bus Syst 1(1)

Yao M, Pi D, Cong X (2012) Chinese text clustering algorithm based k-means. Phys Procedia 33:301–307

Xiong C, Hua Z, Lv K, Li X (2016) An improved k-means text clustering algorithm by optimizing initial cluster centers. In: International conference on cloud computing and big data, pp 265–268

Mehta V, Bawa S, Singh J (2021) Weclustering: word embeddings based text clustering technique for large datasets. Complex Intell Syst 7(6):3211–3224

Hartigan JA, Wong MA (1979) Algorithm as 136: a \(k\)-means clustering algorithm, Journal of the Royal Statistical Society. Ser C (Appl Stat) 28(1):100–108

Cui X, Potok TE, Palathingal P (2005) Document clustering using particle swarm optimization. In: Proceedings IEEE swarm intelligence symposium, pp 185–191

Song W, Qiao Y, Park SC, Qian X (2015) A hybrid evolutionary computation approach with its application for optimizing text document clustering. Expert Syst Appl 42(5):2517–2524

Zhang Z, Cheng H, Zhang S, Chen W, Fang Q (2008) Clustering aggregation based on genetic algorithm for documents clustering. In: IEEE congress on evolutionary computation, pp 3156–3161

Tseng C-M, Tsai K-H, Hsu C-C, Chang H-C (2005) On the Chinese document clustering based on dynamical term clustering. In: Information retrieval technology. Springer, Berlin, pp 534–539

Geng X, Zhang Y, Jiao Y, Mei Y (2019) A novel hybrid clustering algorithm for topic detection on Chinese microblogging. IEEE Trans Comput Soc Syst 6(2):289–300

Hammouda KM, Kamel MS (2004) Efficient phrase-based document indexing for web document clustering. IEEE Trans Knowl Data Eng 16(10):1279–1296

Pei X, Chen C, Gong W (2016) Concept factorization with adaptive neighbors for document clustering. IEEE Trans Neural Netw Learn Syst 29(2):343–352

Brockmeier AJ, Mu T, Ananiadou S, Goulermas JY (2018) Self-tuned descriptive document clustering using a predictive network. IEEE Trans Knowl Data Eng 30(10):1929–1942

Hochba DS (1997) Approximation algorithms for np-hard problems. ACM Sigact News 28(2):40–52

Meena YK, Shashank V, Singh P (2012) Article: text documents clustering using genetic algorithm and discrete differential evolution. Int J Comput Appl 43(1):16–19

Kamel N, Ouchen I, Baali K (2014) A sampling-pso-k-means algorithm for document clustering. In: Genetic and evolutionary computing. Springer International Publishing, pp 45–54

Lee JS, Park SC (2012) Document clustering using multi-objective genetic algorithms on matlab distributed computing. In: International conference on information science and applications, pp 1–6

Abualigah LM, Khader AT, Al-Betar MA (2016) Multi-objectives-based text clustering technique using k-mean algorithm. In: 7th international conference on computer science and information technology, pp 1–6

Cobos C, Montealegre C, Mejia M, Mendoza M, Leon E (2010) Web document clustering based on a new niching memetic algorithm, term-document matrix and Bayesian information criterion. In: IEEE congress on evolutionary computation, pp 1–8

Onan A, Bulut H, Korukoglu S (2017) An improved ant algorithm with lda-based representation for text document clustering. J Inf Sci 43(2):275–292

Akter R, Chung Y (2017) An improved evolutionary approach for document clustering. In: Proceedings of the international conference on research in adaptive and convergent systems, ACM, pp 40–43

Wahid A, Gao X, Andreae P(2014) Multi-view clustering of web documents using multi-objective genetic algorithm. In: IEEE congress on evolutionary computation, pp 2625–2632

(Sep. 29, 2012). Jieba Software. [Online]. https://github.com/fxsjy/jieba

Salton G, McGill MJ (1986) Introduction to modern information retrieval. McGraw-Hill, Inc

Jolliffe IT (2002) SpringerLink, principal component analysis, 2nd edn. Springer, Secaucus

Weng J, Zhang Y, Hwang W-S (2003) Candid covariance-free incremental principal component analysis. IEEE Trans Pattern Anal Mach Intell 25(8):1034–1040

Zhang M, Ge Z, Liu T, Wu X, Qu T (2020) Modeling of individual hrtfs based on spatial principal component analysis. IEEE/ACM Trans Audio Speech Lang Process 28:785–797

Krishna K, Murty MN (1999) Genetic \(k\)-means algorithm. IEEE Trans Syst Man Cybern Part B (Cybern) 29(3):433–439

Das S, Abraham A, Konar A (2008) Automatic clustering using an improved differential evolution algorithm. IEEE Trans Syst Man Cybern Part A Syst Hum 38(1):218–237

Liang S, Han D, Yang Y (2020) Cluster validity index for irregular clustering results. Appl Soft Comput 95:106583

Davies DL, Bouldin DW (1979) A cluster separation measure. IEEE Trans Pattern Anal Mach Intell 2:224–227

Bandyopadhyay S, Maulik U (2001) Nonparametric genetic clustering: comparison of validity indices. IEEE Trans Syst Man Cybern Part C (Appl Rev) 31(1):120–125

Kuppili V, Biswas M, Edla DR, Prasad KJR, Suri JS (2020) A mechanics-based similarity measure for text classification in machine learning paradigm. IEEE Trans Emerg Top Comput Intell 4(2):180–200

Janani R, Vijayarani S (2019) Text document clustering using spectral clustering algorithm with particle swarm optimization. Expert Syst Appl 134:192–200

Kent A, Berry MM, Luehrs FU Jr, Perry JW (1955) Machine literature searching viii. operational criteria for designing information retrieval systems. Am Document 6(2):93–101

Goutte C, Gaussier E (2005) A probabilistic interpretation of precision, recall and f-score, with implication for evaluation. In: European conference on information retrieval. Springer, pp 345–359

Meila M (2005) Comparing clusterings: an axiomatic view. In: Proceedings of the 22nd international conference on machine learning, pp 577–584

Mann HB, Whitney DR (1947) On a test of whether one of two random variables is stochastically larger than the other. Ann Math Stat 50–60

Kruskal WH, Wallis WA (1952) Use of ranks in one-criterion variance analysis. J Am Stat Assoc 47(260):583–621

Storn R, Price K (1996) Minimizing the real functions of the ICEC’96 contest by differential evolution. In: 1996 international conference on evolutionary computation, IEEE, pp 842–844

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grants 62006080 and 62276100, in part by the Guangdong Natural Science Funds for Distinguished Young Scholars under Grant 2022B1515020049, in part by the Fundamental Research Funds for the Central Universities, in part by the Guangdong Regional Joint Fund for Basic and Applied Research under Grant 2021B1515120078, and in part by the 2022 Guangdong-Hong Kong-Macao Greater Bay Area Exchange Programs of SCNU.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, JX., Gong, YJ., Chen, WN. et al. Adaptive encoding-based evolutionary approach for Chinese document clustering. Complex Intell. Syst. 9, 3385–3398 (2023). https://doi.org/10.1007/s40747-022-00934-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-022-00934-z