Abstract

Turnover prediction plays an important role in alleviating brain drain, helping organizations reduce costs and enhance competitiveness. Existing studies on turnover mainly analyze the correlation between various factors and turnover, or use different models to predict employee turnover in various scenarios. They predict only the turnover category and ignore both class imbalance and turnover probability. To this end, we propose a novel fine-grained adaptation-based turnover prediction neural network (FATPNN) model. Specifically, we first employ a GRU to learn profile-aware feature representations of the personnel samples. Then, to evaluate the contribution of various turnover factors, we exploit an attention mechanism to model the profile information. Finally, we design a weighted-based probability loss function suited to our turnover prediction tasks. Experimental results show the effectiveness and generality of the FATPNN model for turnover prediction.


Introduction

Talent turnover reduces the competitiveness of companies [1, 2]. When talented employees quit their jobs, companies need to spend a large amount of time, money, and energy to find suitable replacements [3]. If companies fail to attract and select the right talent for their jobs, their normal development may suffer. It is therefore critical to predict potential turnover proactively. Doing so helps decision-makers take preventive action to retain the talent predicted to be at risk of leaving and to minimize the company's losses [2]. Thus, accurate turnover prediction can play an important role in the healthy and stable development of companies.

Turnover analysis has attracted the interest of many researchers [1, 3, 4, 5, 6, 7, 8]. In the literature, previous methods can be divided into three categories: correlation analysis of diverse factors and turnover, traditional machine learning methods, and deep learning methods.

In the first category, previous work [9, 10, 11] has explored the correlations between diverse factors and turnover. However, these studies do not predict turnover intentions, which leaves decision-makers unable to act promptly and effectively to avoid losing key talent in an organization.

In recent years, several researchers have approached the turnover problem from a different perspective: predicting turnover with different classifiers. Turnover prediction using various machine learning methods [1, 2, 4, 12, 13] has attracted considerable interest. However, these studies do not consider data imbalance. In our work, the ratios of positive (turnover) to negative (non-turnover) samples in the two turnover datasets are 1:5.2 and 1:3.2, respectively, so we face a data imbalance problem. Because negative samples are the majority, the easily classified negative (non-turnover) samples account for most of the loss and control the direction of the gradient update. This makes the trained model prone to classifying all samples into the negative class. Consequently, we need a strategy to tackle the class imbalance problem.

Recently, researchers have increasingly used deep learning methods [14, 15] to predict turnover. One notable work is by Teng et al. [3], who adopted a deep learning framework to predict future personnel turnover and achieved success in turnover prediction tasks. However, existing work is not suited to addressing the class imbalance issue. Moreover, previous studies only provide the turnover category of each employee and do not consider turnover probability distribution information. To the best of our knowledge, no prior research has explored turnover probability, which is an original contribution of this work.

In view of these challenges, effective turnover prediction requires making full use of employee turnover profile factors while also considering class imbalance and turnover probability distribution information. The proposed model should therefore predict talent turnover behavior effectively and support decision-making for proactive talent management.

Besides achieving accurate turnover prediction, it is valuable to explore the contribution of various turnover factors. Identifying the important factors reveals the reasons behind employees' turnover. We therefore introduce an attention mechanism, which helps to evaluate the importance of employee profile features and to recognize the factors most influential in the final turnover decision.

To predict the turnover category accurately, it is critical to address the class imbalance problem. The imbalance causes one issue in our turnover prediction tasks: training is inefficient because it is dominated by the majority negative (non-turnover) samples. Consequently, we employ a weighted cross-entropy loss function to automatically balance the loss between positive (turnover) samples and negative (non-turnover) ones.

In addition to providing an accurate turnover category, i.e., positive (turnover) or negative (non-turnover), it is also valuable to explore the probability that an employee will quit. This probability may differ from one employee to another. For example, an employee may be 90% sure, 80% sure, or 60% sure that he or she will leave. The value of the fitting probability distribution can be denoted

To quantify this information, it is necessary to consider both possible turnover category and the related probabilistic information. In fact, this information is the probabilistic distribution, which helps decision-makers to accurately analyze and make correct decisions.

To this end, in this paper, we propose a novel fine-grained adaptation-based turnover prediction neural network (FATPNN) model. Specifically, for turnover modeling, inspired by practical talent management scenarios and deep learning techniques, we first design a GRU to automatically learn representations of the samples' profile features. To evaluate the importance of diverse turnover factors, we introduce an attention mechanism. Then, we design an improved loss function suited to our turnover prediction tasks, called weighted-based probability loss (WPL), to predict turnover. Finally, we evaluate the FATPNN model against several state-of-the-art baselines on two turnover datasets. Experimental results validate the effectiveness of the FATPNN model. We emphasize that FATPNN's performance stems not only from the innovations in the neural network but also from the novel loss we design.

In summary, the main contributions of this paper are as follows:

1) We propose a novel and effective FATPNN model for turnover prediction. The same model adapts to turnover prediction tasks in various scenarios, which gives it better generality.

2) To account for class imbalance and turnover probability information, we design a novel improved loss function (called WPL) that improves turnover prediction performance.

3) We conduct experiments on two different turnover datasets to evaluate the effectiveness of our proposed model.

The rest of the paper is organized as follows. “Related work” discusses related work on turnover analysis with different research methods. “Method” introduces the technical details of our proposed turnover prediction model. “Experiments” describes the data, experimental setup, baseline methods, and evaluation metrics, and then comprehensively evaluates the performance of the proposed model. Finally, “Conclusion” concludes this work.

Related work

In recent years, there has been a rising trend of applying advanced deep learning technologies to solve complex problems [16, 17]. Talent-related problems studied in this way include personnel performance prediction [18], personality trait recognition [19], two critical issues (i.e., talent turnover and job performance) of Person-Organization fit (P-O fit) in talent management [15], and turnover prediction [3, 6]. How to predict personnel turnover accurately has been a hot topic for years. Existing research can be grouped into three categories, i.e., correlation analysis of diverse factors and turnover, machine learning methods, and deep learning methods.

The first category of research explores the correlation between diverse factors and turnover. Existing research distinguishes voluntary turnover from involuntary turnover; in our study, we focus on voluntary turnover. Mobley et al. [9] quantitatively analyzed the correlations between job satisfaction and turnover, age-tenure and turnover, and intention to quit and turnover. The correlation between employee turnover and performance has also been studied. Glenn et al. [10] showed that turnover was lower for high performers, while Trevor et al. [11] indicated that turnover was higher for both poor and good performers. These studies mainly explored the factors behind turnover. However, they did not predict employee turnover intentions. Consequently, company policymakers could not act promptly and effectively to prevent the loss of key talent in an organization.

In recent years, several researchers have studied the turnover problem from a novel perspective, focusing mainly on the turnover prediction performance obtained by different classifiers. For instance, Hong et al. [12] employed support vector machines (SVM) to predict employee turnover. Fan et al. [1] used hybrid data mining and machine learning clustering analysis techniques to predict the turnover of technology professionals in well-known Taiwan companies, which helped organizations prevent talent turnover and enhance their competitiveness. Valle et al. [4] employed Random Forest (RF) and Naive Bayes (NB) algorithms to forecast the turnover of sales agents in a call center, and the two classifiers performed similarly. Yedida et al. [13] used the k-Nearest Neighbors (KNN) algorithm to predict whether an employee will leave. Although these studies have made some progress, this line of work is still in its infancy, and machine learning methods are limited in how accurately they predict turnover. Cai et al. [2] adopted DBGE to learn vector representations of employees and companies. The features learned by DBGE, together with basic features, were fed to machine learning methods, which significantly boosted turnover prediction performance. However, the biggest challenge of this work is to learn features effectively enough to achieve better prediction performance.

In contrast to traditional machine learning methods, neural networks have the advantage of capturing non-linear relationships and provide new insight for predicting employee turnover. Neural networks have been used to tackle voluntary employee turnover and have outperformed logistic regression in accurately predicting turnover in a company [20]. They have since become the mainstream approach to turnover prediction. Oliveira et al. [14] found that deep learning models can be suitable for employee turnover prediction. Sun et al. [15] developed an Organizational Structure-aware Convolutional Neural Network (called OSCN) to address two critical issues (i.e., talent turnover and job performance) in talent management. OSCN extracts organization-aware compatibility features for Person-Organization fit, and a Recurrent Neural Network with an attention mechanism then models the temporal information of talents and organizations. Teng et al. [3] presented a contagious effect heterogeneous neural network (called CEHNN) framework for employee turnover prediction. CEHNN integrates employee profiles, environmental factors, and the influence of peers' turnover behaviors, and then uses a global attention mechanism to identify the importance of different factors. However, this approach is not suited to imbalanced data. Our goal is to study whether the proposed model can surpass the performance of existing works.

Method

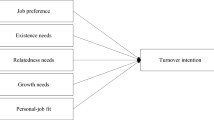

In this section, we describe the technical details of the fine-grained adaptation-based turnover prediction neural network (FATPNN). As depicted in Fig. 1, the FATPNN model contains three components, namely Profile-aware Neural Network, Attention Mechanism, and Personnel Turnover Prediction.

Specifically, given the turnover profile features of an employee, a GRU network is first used to learn these features. Then, to assess the contributions of various turnover factors to the final decision, we introduce the attention mechanism. Finally, to handle the imbalance of turnover samples, we employ an improved loss function to make accurate turnover predictions.

Fig. 1 The model architecture of our proposed turnover prediction, illustrating the procedure for predicting the category a sample belongs to. x is an input feature vector; h represents a series of hidden states of the GRU; y indicates the label of the turnover sample. A fully connected (FC) layer with sigmoid activation function is used to learn a two-dimensional vector for predicting the label y. We use a weighted-based probability loss to train our model.

Problem formulation

In this paper, the goal of our proposed model is to predict employee turnover. Specifically, for the ith personnel sample, the FATPNN model predicts the label y from the employee profile information \({T}_i\), which contains satisfaction_level, average_monthly_hours, time_spend_company, number_project, etc. The classification label y indicates the prediction result, i.e., turnover or non-turnover. Without loss of generality, we treat the turnover problem as a binary classification problem, i.e., \(y \in \{0,1\}\). Let \(y = 1\) if the ith employee will quit his/her job, and \(y = 0\) otherwise. Our task is to estimate \(P({\hat{y}}_{i} | {T}_i)\), the probability of the predicted turnover category given the profile, so we give a formal definition of personnel turnover prediction as follows:

Since we want to know about employees’ turnover intention, a softer fitting probability distribution strategy is proposed. \(L_{\text {probability}}\) denotes the formulation of the fitting probability distribution.

Profile-aware Neural Network

The input turnover features are interdependent, so a GRU [21] neural network is used to learn representations of the samples' profile features. As a variant of the recurrent neural network, the GRU is powerful at modeling sequence information. It can also learn the dependencies among personnel profile features while avoiding the exploding and vanishing gradient issues. The profile features of each turnover sample serve as the input vector of the FATPNN model. We denote all the turnover features in each sample as an initial vector sequence \(x=\{{x}_1^{\text {turnover}},{x}_2^{\text {turnover}},\ldots ,{x}_n^{\text {turnover}}\}\), where n refers to the number of profile features.

Our turnover prediction task is implemented as a GRU with two components: a reset gate \({r}_t\) with learned weight matrices \({W}_r\) and \({U}_r\), and an update gate \({z}_t\) with learned weight matrices \({W}_z\) and \({U}_z\). Together, these components generate the current hidden state \({h}_t\) from the input vector \({x}_t\) and the previous hidden state \(h_{t-1}\). GRU can be formulated as

where \(\sigma \) expresses the sigmoid function, \(\odot \) refers to an element-wise product, \({r}_t\) determines how to join the new input with the previous memory, \({z}_t\) determines how many previous memories are added to the current state, and \({\tilde{h}}_{t}\) indicates the candidate state of \({h}_{t}\).
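The GRU equations themselves do not appear in the text above. As a hedged sketch, the standard GRU update written with the symbols defined here would read as follows. The candidate-state matrices \(W_h\) and \(U_h\) are assumed names, bias terms are omitted, and the placement of \(z_t\) versus \(1 - z_t\) follows the description that \(z_t\) controls how much previous memory is carried into the current state; the original formulation may differ in these details.

\[
\begin{aligned}
r_t &= \sigma (W_r x_t + U_r h_{t-1}), \\
z_t &= \sigma (W_z x_t + U_z h_{t-1}), \\
\tilde{h}_t &= \tanh \big(W_h x_t + U_h (r_t \odot h_{t-1})\big), \\
h_t &= z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t .
\end{aligned}
\]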

Attention mechanism

As discussed in the section “Datasets”, we aim to identify the contributions of diverse turnover factors to the final decision. At this point, the attention mechanism is introduced to address this problem.

In this subsection, we describe the attention mechanism in detail. After obtaining the profile features of the input samples, attention [22] is employed to learn weight coefficients that evaluate the importance of the various factors in the samples. Suppose that, for each personnel turnover sample, we have N outputs from N GRU cells; attention is then computed based on Eqs. (6)–(8). The vector \(c_i\) is computed as a weighted sum. The calculation can be denoted as

The weight coefficient \(\alpha _{ij}\) of each \(v_j\) can be formulated as

where \(c_i\) refers to the representation of the sequence as the weighted sum of hidden representation, \(\alpha _{ij}\) indicates the normalized importance, and \(v_{ij}\) denotes the score about the degree of dependency between \(v_i\) and \(v_j\). Fscore is a function to calculate the score about \(v_i\) and \(v_j\), where u, \(W_1\), and \(W_2\) are learned attention parameters. \(v_{ij}\) computed by function Fscore is normalized by softmax via the formulation (7). The output of the attention is a weighted sum.
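The attention equations referenced as Eqs. (6)–(8) are not reproduced in the text above. A hedged reconstruction from the surrounding definitions is sketched below. The weighted sum and the softmax normalization follow directly from the description; the additive \(\tanh\) form of Fscore is an assumption based on the learned parameters u, \(W_1\), and \(W_2\), and the vectors \(v_j\) are assumed to be the N GRU outputs.

\[
\begin{aligned}
c_i &= \sum _{j=1}^{N} \alpha _{ij} v_j, \\
\alpha _{ij} &= \frac{\exp (v_{ij})}{\sum _{k=1}^{N} \exp (v_{ik})}, \\
v_{ij} &= \text {Fscore}(v_i, v_j) = u^{\top } \tanh (W_1 v_i + W_2 v_j).
\end{aligned}
\]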

Personnel turnover prediction

For the two turnover datasets, the ratios of positive (turnover) to negative (non-turnover) samples are 1:5.2 and 1:3.2, respectively, so both datasets in our experiments are class-imbalanced. The major issue in our turnover prediction tasks is that negative samples are the majority. Easily classified negative (non-turnover) samples account for most of the loss and control the direction of the gradient update, which makes the trained model more likely to classify all samples into the negative class. As a result, the plain binary cross-entropy loss function does not work well in our turnover classification tasks. To tackle the class imbalance problem, we therefore use a weighted cross-entropy loss function.

The weighted cross-entropy loss automatically balances the loss between the positive (turnover) class and the negative (non-turnover) class. In this loss function, we introduce class weights \(\alpha \) and \(\beta \) for the positive and negative classes, respectively. That is to say, we employ these weights to offset the imbalance between the turnover and non-turnover classes.

We introduce the weighted cross-entropy loss [23] starting from binary cross-entropy (CE) loss. Specifically, we define the CE loss in turnover prediction tasks as follows:

The weighted cross-entropy objective function is shown as follows:

where \(P_t\) is the conditional probability to be estimated, \(N_{\text {turnover}}\) is the number of positive samples, \(N_{\text {noneturnover}}\) denotes the number of negative samples, \(\alpha \) represents the weight of turnover samples, whose value is \(N_{\text {noneturnover}}/ (N_{\text {turnover}}+ N_{\text {noneturnover}})\), and \(\beta \) expresses the weight of non-turnover samples, whose value is \(N_{\text {turnover}}/(N_{\text {turnover}} + N_{\text {noneturnover}})\).
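The class weights defined above can be illustrated with a minimal Python sketch. The weight formulas come from the text; the function names, the per-sample averaging, the probability clipping constant `eps`, and the sample counts (chosen only to mimic a 1:5.2 ratio) are assumptions made for illustration, and the exact form of the loss in the original equations may differ.

```python
import math


def class_weights(n_turnover, n_nonturnover):
    """Return (alpha, beta) as defined in the text.

    alpha weights positive (turnover) samples and equals the share of negatives;
    beta weights negative (non-turnover) samples and equals the share of positives.
    """
    total = n_turnover + n_nonturnover
    alpha = n_nonturnover / total
    beta = n_turnover / total
    return alpha, beta


def weighted_cross_entropy(labels, probs, alpha, beta, eps=1e-12):
    """Average weighted binary cross-entropy (assumed form).

    labels: iterable of 0/1 ground-truth labels (1 = turnover).
    probs:  iterable of predicted turnover probabilities in [0, 1].
    """
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
        total += -(alpha * y * math.log(p) + beta * (1 - y) * math.log(1.0 - p))
    return total / len(labels)


# Hypothetical counts with a 1:5.2 positive-to-negative ratio.
alpha, beta = class_weights(n_turnover=100, n_nonturnover=520)
print(round(alpha, 4), round(beta, 4))  # 0.8387 0.1613

# A missed turnover case costs more than an equally wrong non-turnover case.
missed_positive = weighted_cross_entropy([1], [0.1], alpha, beta)
missed_negative = weighted_cross_entropy([0], [0.9], alpha, beta)
print(missed_positive > missed_negative)  # True
```

Because the minority class receives the larger weight, errors on turnover samples contribute more to the loss, which counteracts the dominance of the majority class in the gradient.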

This simply divides the turnover samples into positive and negative ones. In practice, decision-makers may want to know more about employees’ turnover intention. For instance, an employee may have a 90%, 80%, or 60% turnover intention. Encouragingly, the idea of a softer probability distribution over classes [24] suggests a way to deal with this issue. To obtain probability distribution predictions, we add a “fitting probability distribution” strategy to the weighted cross-entropy loss function during training. The improved formulation of the fitting probability distribution can be denoted by

where \({\hat{y}}_{i}=1\) if the ith employee will quit the job in the final prediction, \({\hat{y}}_{i}=0\) if the ith employee will continue to work in the prediction, and N is the number of categories from the turnover samples.

Finally, we design an improved loss function suitable for the turnover prediction tasks, which is called weighted-based probability loss (WPL). The final WPL function becomes

The objective function \(L_{\text {goal}}\) is used for training. We adopt the Adam optimizer to optimize the parameters with respect to \(L_{\text {goal}}\) in each training step. At the end of training, our FATPNN model can predict the personnel turnover category with competitive performance.

Experiments

In this section, we evaluate our FATPNN model with experiments on personnel turnover data. We also discuss the experimental results, comparing the performance with several state-of-the-art baselines. In addition, we analyze the probability distribution information learned by our model in a case study. To understand the proposed FATPNN model better, we conduct experiments to answer the following four questions. (1) Does our FATPNN model have an advantage over previous methods on various turnover prediction tasks? (2) Is the attention mechanism useful for identifying the importance of various turnover factors? (3) Is the proposed WPL improvement effective for turnover prediction tasks? (4) Do the two losses (i.e., the weighted cross-entropy loss and the fitting probability distribution loss) both contribute to turnover prediction?

Datasets

We conducted experiments on two public turnover datasets, from IBM [25] and Kaggle [26], referred to below as Ib_t and Ka_t, respectively. The IBM dataset includes plentiful information about employees, such as age, businessTravel, department, education, jobInvolvement, jobLevel, jobSatisfaction, monthlyIncome, numCompaniesWorked, overTime, stockOptionLevel, yearsAtCompany, yearsSinceLastPromotion, etc. The Kaggle dataset contains rich information about employees, such as satisfaction_level, number_project, time_spend_company, salary, etc. An employee who quits the job is viewed as a positive sample; otherwise, the employee is viewed as a negative sample. Both datasets are class-imbalanced. The details of the two datasets are shown in Table 1.

In the experiments, for convenience of representation, we rename the following fields of the Ka_t dataset: satisfaction_level as satisfaction, last_evaluation as evaluation, number_project as projectCount, average_monthly_hours as averageMonthlyHours, time_spend_company as yearsAtCompany, Work_accident as workAccident, promotion_last_5years as promotion, sales as department, and left as turnover.
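The renaming described above can be expressed as a simple lookup table. The sketch below is illustrative only: the mapping is copied from the text, while `KA_T_RENAMES` and `rename_record` are hypothetical names, and the column spellings in the raw CSV file should be checked against its actual header before use.

```python
# Mapping from the field names listed in the text to the names used in the experiments.
KA_T_RENAMES = {
    "satisfaction_level": "satisfaction",
    "last_evaluation": "evaluation",
    "number_project": "projectCount",
    "average_monthly_hours": "averageMonthlyHours",
    "time_spend_company": "yearsAtCompany",
    "Work_accident": "workAccident",
    "promotion_last_5years": "promotion",
    "sales": "department",
    "left": "turnover",
}


def rename_record(record, renames=KA_T_RENAMES):
    """Return a copy of one sample (a dict) with its keys renamed.

    Keys that are not in the mapping, such as salary, are kept unchanged.
    """
    return {renames.get(key, key): value for key, value in record.items()}


# Hypothetical sample, for illustration only.
sample = {"satisfaction_level": 0.38, "sales": "support", "salary": "low", "left": 1}
print(rename_record(sample))
```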

Datasets’ analysis

Working hours have an observable impact on employee turnover, as shown in Figs. 2 and 3. For the Ka_t dataset, employees with either low or high average monthly working hours tended to leave the company, as shown in Fig. 2. We believe that these employees may have left because they were underworked or overworked. For the Ib_t dataset, Fig. 3 shows that a larger share of the employees who left worked overtime than of those who stayed. We therefore think that overtime has an impact on whether employees leave.

Experimental setup

In this subsection, we introduce the detailed experimental settings. We employed the same architecture for the two datasets. In the GRU layer, we set the dimension of the hidden state to 128|512 to capture the long- and short-term dependencies of the employee’s turnover profile. The FATPNN model was trained for 200 epochs with a mini-batch size of 128 samples, and the dropout probability was set to 0.5 to avoid overfitting. We adopted the Adam algorithm to optimize our model. Table 2 lists our hyperparameter settings.

Baseline methods

To evaluate the effectiveness of the FATPNN model, we compared our implementation with several state-of-the-art baselines. As traditional machine learning baselines, we chose Logistic Regression, Decision Tree, Naive Bayes, Random Forest, Ada Boost, VC, VC feature-based, stacker-top5-RF, and stacker-top5-LDA. As deep learning baselines, we selected CHRNNA, ANN7, CEHNN, and GCFNN. The methods are described as follows:

- Logistic Regression: Logistic Regression is a popular classification model without the ability to process sequential data. We used the turnover features of each employee as input.

- Decision Tree: Decision Tree is a classic supervised learning method. The input features are the personnel turnover features.

- Naive Bayes (NB): NB is a classic supervised model for classification tasks. We used personnel turnover features as input [4].

- Random Forest: Random Forest is a representative ensemble learning approach that performs well in many classification tasks. We used employee turnover features as input [27].

- Ada Boost: Ada Boost is also a representative ensemble learning method that performs well in various classification tasks. We used employee turnover features as input.

- CHRNNA: CHRNNA is a deep learning framework that employs convolutional recurrent neural networks and an attention mechanism for the personnel performance prediction task. The inputs are the performance features of each employee [18].

- ANN7: The ANN7 model is used for the employee turnover prediction task. This artificial neural network consists of three layers: an input layer, a hidden layer, and an output layer. The ANN7 model takes nine features as input [28].

- CEHNN: CEHNN is an employee turnover prediction framework for talent management, which adopts a heterogeneous structure to process sequential and nonsequential features. A global attention mechanism is then introduced to assess the contributions of various turnover factors to the final decision [3].

- GCFNN: GCFNN is a classification method for personnel performance prediction. It first employs a-layer GRU to capture sequential information from personnel performance data. Then, a capsule network is used to better cluster the personnel performance features. Finally, an improved loss function is embedded into the capsule network to make precise predictions in the final decision [29].

- Voting Classifier (VC): VC is an ensemble learning model based on the hard vote strategy to predict the employee attrition label. A second employee attrition model applies the feature selection methods Recursive Feature Elimination (RFE) and SelectKBest, after which it uses only 11 features from the IBM dataset to predict employee attrition intention [30]. For convenience, we name this second method VC feature-based.

- Stacker-top5-RF: The stacker-top5-RF model is the ensemble learning model obtained by stacking the top five base classifiers with RF as the meta estimator. The model adopts two types of feature engineering techniques, feature encoding and feature interaction [31].

- Stacker-top5-LDA: The stacker-top5-LDA model is the ensemble learning model obtained by stacking the top five base classifiers with LDA as the meta estimator. The model applies one type of feature engineering technique, feature selection [31].

- ECDT-GRID: ECDT-GRID is a novel deep learning model that uses an extended convolutional decision tree (ECDT) with grid search optimization to predict employee turnover (churn) [32].

- FATPNN: FATPNN is our proposed model.

Evaluation metrics

The turnover prediction task in this paper can be regarded as a binary classification problem, so we evaluate the FATPNN model from a classification perspective. Both turnover datasets are imbalanced. We use four evaluation metrics, namely accuracy, precision, recall, and F1-score (F1), all measured on the test sets. Accuracy is the ratio of correctly predicted samples, both positive (turnover) and negative (non-turnover), to all samples. Precision is the proportion of true-positive (turnover) samples among all samples predicted as positive. Recall is the proportion of correctly predicted turnover samples among all real positive (turnover) samples. Precision and recall are each one-sided, whereas F1 combines the two into a comprehensive index. All four metrics are defined in terms of TP, FN, FP, and TN. The mathematical formulation of accuracy, precision, recall, and F1 is as follows:

where TP, FN, FP, and TN represent true positives, false negatives, false positives, and true negatives, respectively. Accuracy, precision, recall, and F1 all lie in the range \(\left[ 0,1\right] \). Generally, a value of 0.5 corresponds to the result of random guessing.

For classification tasks, accuracy is an important evaluation metric, but it is informative only when the classes are balanced. The turnover datasets used in this paper are imbalanced, so we mainly focus on the F1 indicator. The higher the value of F1, the better the prediction performance.
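The metric formulas are not reproduced in the text above. Their standard definitions in terms of TP, FN, FP, and TN can be sketched in Python as follows. The function name, the convention of returning 0.0 when a denominator is zero, and the confusion-matrix counts are assumptions chosen for illustration; the counts are not results from the paper.

```python
def classification_metrics(tp, fn, fp, tn):
    """Standard accuracy, precision, recall, and F1 from confusion-matrix counts.

    A metric whose denominator is zero is reported as 0.0 (an assumed convention).
    """
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical test set with a 1:5.2 ratio (100 turnover, 520 non-turnover samples).
# A degenerate classifier that labels every sample as non-turnover:
always_negative = classification_metrics(tp=0, fn=100, fp=0, tn=520)
print(round(always_negative["accuracy"], 3), always_negative["f1"])  # 0.839 0.0

# A classifier that finds 80 of the 100 turnover cases with 20 false alarms:
useful = classification_metrics(tp=80, fn=20, fp=20, tn=500)
print(round(useful["accuracy"], 3), round(useful["f1"], 3))  # 0.935 0.8
```

The first case shows why accuracy alone is misleading on imbalanced data: predicting the majority class for every sample still scores about 0.84 in accuracy while F1 is 0.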

Results’ analysis

To evaluate the effectiveness of our proposed FATPNN model on the turnover prediction, we compared FATPNN model with several baselines. This paper compared the methods of Logistic Regression, Decision Tree, Naive Bayes, Random Forest, Ada Boost, CHRNNA, ANN7, CEHNN, GCFNN, VC, VC feature-based, stacker-top5-RF, and stacker-top5-LDA. The previous works (e.g., Logistic Regression, Decision Tree, Naive Bayes, Random Forest, Ada Boost, VC, VC feature-based, stacker-top5-RF, and stacker-top5-LDA) were based on traditional machine learning methods, while CHRNNA, ANN7, CEHNN, GCFNN, and our FATPNN model employed deep learning methods. The experimental results of accuracy, precision, recall, and F1 metrics are listed in Table 3. The 13 baselines and our FATPNN model used in the comparison are displayed in the first column of the table. We adopt bold font to emphasize the top 1 result for precision, recall, and F1 evaluation metric.

Table 3 supports several observations. For the Ib_t dataset, the proposed FATPNN model outperforms all the baselines in terms of precision, recall, and F1. For the Ka_t dataset, FATPNN obtains the highest performance in three of the four evaluation metrics. Its precision (98.11%) is slightly lower than that of Random Forest (98.51%), but FATPNN performs considerably better in terms of F1: 98.22% on Ka_t versus 95.41% for Random Forest, a gain of 2.81 percentage points. Because the turnover datasets in this paper are imbalanced, we pay more attention to the F1 indicator. We also compare against the baseline ANN7 model. As Table 3 shows, compared with ANN7, the accuracy, precision, and recall of our FATPNN model on the Kaggle dataset increase by 1.22%, 2.60%, and 6.78%, respectively. We believe that a neural network fusion model with fine-grained adaptation learning helps to achieve this performance improvement.

Next, we compare against the recent VC and VC feature-based baselines. The ratios of turnover to non-turnover samples in the Ib_t and Ka_t datasets are 1:5.2 and 1:3.2, so both are imbalanced, and F1 is therefore the appropriate metric for these prediction tasks. As Table 3 shows, compared with the VC feature-based model, FATPNN improves F1 by 27.05% on the Ib_t dataset. Compared with the VC model, it improves F1 by 10.22% on the Ka_t dataset. These results show that our FATPNN model is superior to the VC and VC feature-based models in terms of F1 on the two imbalanced turnover datasets. We believe the reason is that the proposed WPL strategy in the FATPNN model automatically balances the loss between the turnover and non-turnover classes, mitigating the issue caused by data imbalance.

We now discuss the experimental results in Table 3 relating to the recent state-of-the-art baselines stacker-top5-RF and stacker-top5-LDA. In terms of F1, the proposed FATPNN model achieved 89.05% on the Ib_t dataset and 98.22% on the Ka_t dataset. For the Ib_t dataset, the F1 of FATPNN improves by 23.53% compared with stacker-top5-LDA. For the Ka_t dataset, the F1 of FATPNN is almost the same as that of stacker-top5-RF, while cumbersome and time-consuming feature engineering is avoided. The results in Table 3 also show that the Ib_t dataset is a more difficult task than the Ka_t dataset, with a lower F1 across most baseline methods. This is mainly because the Ib_t dataset is severely class-imbalanced (1 vs. 5.2 between the two classes).

We also compare against the state-of-the-art method ECDT-GRID. As Table 3 shows, compared with the ECDT-GRID model, the F1 of our proposed FATPNN improves by 18.4% and 3.98% on the Ib_t and Ka_t datasets, respectively. We believe the reason is that the proposed FATPNN model is well suited to the severely imbalanced employee turnover prediction task. These results further support the effectiveness of our proposed model.

We also observe that the proposed FATPNN model has several advantages on the employee turnover prediction task. First, FATPNN achieves a significant performance improvement on the severely imbalanced turnover prediction task. The results show that our FATPNN model is able to distinguish the positive (turnover) samples from the negative (non-turnover) samples on the severely imbalanced Ib_t dataset. Second, FATPNN uses the same model to predict turnover on two different datasets, whereas reference [31] used two different models on the two datasets (i.e., the Ib_t and Ka_t datasets). This demonstrates that our proposed FATPNN model is more flexible and effective than the stacker-top5-RF and stacker-top5-LDA models in reference [31]. It further shows the feasibility of the proposed model for various employee turnover prediction scenarios and its better universality. Finally, we note that our FATPNN model is an end-to-end model without feature engineering, while the stacker-top5-RF and stacker-top5-LDA models used different feature engineering techniques to solve the tasks on the Ka_t and Ib_t data.

For the main results, there are several observations. First, our model has an advantage over the other baselines. For example, in contrast to the recent baselines (e.g., VC, VC feature-based, stacker-top5-RF, and stacker-top5-LDA), which used different models for different turnover prediction scenarios, the proposed FATPNN model uses the same model on different employee turnover prediction tasks. This suggests that our FATPNN model is effective and universal in the final turnover prediction tasks.

Second, among the classic classification models, the linear model (e.g., Logistic Regression) has a relatively lower F1 score than the non-linear models (e.g., Random Forest and Ada Boost). This is because the linear model classifies most of the samples as non-turnover samples; it does not have sufficient ability to distinguish turnover samples from non-turnover samples using the profile features.

Third, the deep learning models (i.e., CHRNNA, CEHNN, GCFNN, and FATPNN) have higher F1 scores on the test data than the classic supervised models (i.e., Logistic Regression, Decision Tree, and Naive Bayes). This indicates that the deep models may learn more useful information from raw turnover data than the shallow models, which helps to boost the final performance in our turnover prediction tasks.

Fourth, among the deep learning models, FATPNN is superior to GCFNN, which indicates that weighing the importance of the various profile factors is essential for identifying employees who are likely to leave.

Last but not least, comparing FATPNN with CEHNN, we find that the FATPNN model identifies turnover better on the test data than the CEHNN model, which validates the effectiveness and applicability of the improved loss function (WPL) in the FATPNN model for the imbalanced turnover datasets. The reason is that we use WPL for the binary classification problem to reduce the negative impact of class imbalance in turnover tasks. Moreover, one may benefit from the rich information provided by the probability distribution of WPL.

In conclusion, all of the above evidence indicates that our FATPNN model has an excellent ability to predict turnover by taking full advantage of the profile features of employees while accounting for the class imbalance of the turnover data.

To assess which features learned by FATPNN contribute to the turnover prediction task, we calculate the importance of the turnover factors. For the Kaggle turnover dataset, the factors contribute differently to the final turnover decision. The features learned by FATPNN are, in order of contribution, satisfaction, yearsAtCompany, projectCount, evaluation, and averageMonthlyHours. The satisfaction feature contributes most to the personnel turnover prediction task, followed by yearsAtCompany, and then by projectCount, evaluation, and averageMonthlyHours. For the IBM turnover dataset, the factors also contribute differently to the turnover decision-making process. The features learned by FATPNN are, in order of contribution, monthlyIncome, monthlyRate, environmentSatisfaction, totalWorkingYears, overTime, stockOptionLevel, jobInvolvement, numCompaniesWorked, yearsSinceLastPromotion, and distanceFromHome. The monthlyIncome feature contributes most, followed by monthlyRate, environmentSatisfaction, totalWorkingYears, overTime, and stockOptionLevel, and then by jobInvolvement, numCompaniesWorked, yearsSinceLastPromotion, and distanceFromHome. From Table 3, we can see that the FATPNN model achieves better performance with these learned features, which shows the effectiveness of our proposed model for the turnover prediction task.
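
One simple way to obtain such a ranking is to average the attention weight assigned to each feature over a set of samples and sort by the mean. The sketch below assumes that per-sample attention weights are available as rows that sum to 1; this section does not specify how the importance scores are actually computed, so the procedure, the function name, and the numeric weights (made up for illustration, not values from this paper) are all hypothetical.

```python
def rank_features_by_attention(feature_names, attention_rows):
    """Average per-sample attention weights and sort features by the mean, highest first."""
    n_samples = len(attention_rows)
    means = [
        sum(row[j] for row in attention_rows) / n_samples
        for j in range(len(feature_names))
    ]
    return sorted(zip(feature_names, means), key=lambda item: item[1], reverse=True)


# Feature names from the Kaggle turnover data; the weights are placeholders.
features = ["evaluation", "satisfaction", "projectCount",
            "averageMonthlyHours", "yearsAtCompany"]
attention_rows = [
    [0.12, 0.40, 0.15, 0.08, 0.25],
    [0.10, 0.36, 0.16, 0.10, 0.28],
    [0.11, 0.44, 0.14, 0.09, 0.22],
]

ranking = rank_features_by_attention(features, attention_rows)
top_feature = ranking[0][0]
```

Averaging over samples gives a global view of the factors; the same function applied to a single row would give the per-employee view.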

Ablation study of various modules

In this subsection, to further demonstrate the effectiveness of the different strategies, we report an ablation study of our FATPNN model. Our main model performs better than the other variants, although the variants may still be beneficial when applied to other tasks. We applied our FATPNN model and four ablated variants, which remove the attention mechanism, WPL, loss_probability, and loss_weight, respectively, to the two turnover datasets. The experimental results of the ablation study are summarized in Table 4. The “FATPNN w/o attention mechanism” row reports the results without the attention mechanism. The “FATPNN w/o WPL” row reports the results without WPL. The “FATPNN w/o loss_probability” row reports the results without loss_probability (the improved fitting probability distribution loss function). The “FATPNN w/o loss_weight” row reports the results without loss_weight (the weighted cross-entropy loss function).

The experimental results listed in Table 4 indicate the following. (1) Our main model outperforms the four variants (i.e., FATPNN w/o attention mechanism, FATPNN w/o WPL, FATPNN w/o loss_probability, and FATPNN w/o loss_weight) under the same settings. (2) As expected, the results for the variants without the attention mechanism and without WPL drop considerably on both turnover datasets, which clearly shows the effectiveness of these two modules. For “FATPNN w/o attention mechanism”, the drop in F1 demonstrates that the attention mechanism is useful: it helps to recognize the contributions of diverse turnover factors and thus to identify the most critical factors in the final turnover decision. For “FATPNN w/o WPL”, the drop in F1 shows that the improved loss, WPL, is critical to achieving good performance. (3) The results for the variants without loss_probability and without loss_weight also drop, which demonstrates that the weighted cross-entropy loss and the improved fitting probability distribution loss are both useful for our turnover tasks. For “FATPNN w/o loss_weight”, the drop in F1 shows that the weighted cross-entropy loss is useful for the imbalanced turnover data, which indicates that the FATPNN model can mitigate the issue caused by data imbalance. For “FATPNN w/o loss_probability”, the drop in F1 validates that the improved fitting probability distribution loss is also helpful and further enhances the performance of turnover prediction.
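
The five configurations compared in the ablation study can be written down as a set of switches, which makes explicit what each row removes. The sketch below is only an organisational aid: the class and field names are hypothetical, and treating “FATPNN w/o WPL” as switching off both loss terms is an assumption based on the description of WPL as a combination of loss_weight and loss_probability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AblationConfig:
    """Switches for one row of the ablation table (names are hypothetical)."""
    name: str
    use_attention: bool = True
    use_loss_weight: bool = True        # weighted cross-entropy term
    use_loss_probability: bool = True   # probability distribution fitting term


VARIANTS = [
    AblationConfig("FATPNN"),
    AblationConfig("FATPNN w/o attention mechanism", use_attention=False),
    # Assumption: removing WPL means removing both of its loss terms.
    AblationConfig("FATPNN w/o WPL", use_loss_weight=False, use_loss_probability=False),
    AblationConfig("FATPNN w/o loss_probability", use_loss_probability=False),
    AblationConfig("FATPNN w/o loss_weight", use_loss_weight=False),
]


def removed_components(cfg):
    """List the components that a variant switches off."""
    removed = []
    if not cfg.use_attention:
        removed.append("attention")
    if not cfg.use_loss_weight:
        removed.append("loss_weight")
    if not cfg.use_loss_probability:
        removed.append("loss_probability")
    return removed


summary = {cfg.name: removed_components(cfg) for cfg in VARIANTS}
```

Keeping every other setting fixed and changing only these switches is what allows a drop in F1 to be attributed to the removed component.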

Case study

As mentioned in the section “Evaluation Metrics”, we compare different methods (i.e., Logistic Regression, Decision Tree, Naive Bayes, Random Forest, Ada Boost, CHRNNA, ANN7, CEHNN, GCFNN, VC, VC feature-based, stacker-top5-RF, and stacker-top5-LDA), and our proposed FATPNN model achieves the best performance in terms of F1 on the turnover prediction tasks. To illustrate this more intuitively, we next provide several case studies on the turnover test data, which can help the decision-makers in an organization pay more attention to talents with a high turnover probability.

For better illustration, we display only part of the test data. Table 5 lists the probabilistic results of our FATPNN model on this partial test data. The “Employees” column includes four test samples. The “Turnover probability” column gives the predicted turnover probability of each person. Talents who are predicted to leave with a high probability require more attention from decision-makers. For example, when key talents are predicted to have a turnover probability of 75% or higher, decision-makers cannot ignore these cases, because the turnover of key talents may reduce the competitiveness of the company. Our model finds the key talents with a high turnover probability as early as possible, which helps decision-makers take preventive measures to retain them.
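
The 75% rule of thumb mentioned above can be applied to the probabilistic output as a simple filter. In the sketch below, the employee identifiers and probabilities are hypothetical placeholders rather than the values in Table 5, and the function name is an assumption.

```python
def flag_high_risk(probabilities, threshold=0.75):
    """Return (employee, probability) pairs at or above the threshold, highest first."""
    flagged = [(name, p) for name, p in probabilities.items() if p >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical model outputs for four employees (not taken from Table 5).
predicted = {
    "employee_1": 0.91,
    "employee_2": 0.12,
    "employee_3": 0.75,
    "employee_4": 0.48,
}

high_risk = flag_high_risk(predicted)
```

The threshold is a policy choice rather than a property of the model: lowering it surfaces more employees for review at the cost of more false alarms.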

We note that, with the help of WPL, the FATPNN model outputs a turnover probability rather than only a turnover category. Decision-makers can therefore benefit from the richer information provided by the probabilistic output of FATPNN.

Conclusion

In competitive and fast-evolving business environments, the turnover of key talents reduces the competitiveness of modern organizations. Thus, it is very important to proactively predict potential turnover. In this paper, we propose a fine-grained adaptation-based turnover prediction neural network (FATPNN) model for turnover prediction. Specifically, we first adopt a GRU to learn profile-aware feature representations of each person. Then, to identify the importance of the various turnover factors, we use an attention mechanism to model the profile information. Finally, we design an improved weighted-based probability loss function suited to turnover prediction tasks. We conducted experiments to evaluate our model on turnover data, and the results validated its effectiveness and universality across various turnover prediction scenarios.

In future work, we will first cooperate with companies to build datasets that include turnover time, so that employee turnover behavior can be predicted at a specific time. Second, we plan to extend our solutions to other tasks in the talent computing field (e.g., resume analysis).

Data Availability

The data used to support the findings of this study are included within the article and are available from the first author upon request.

References

Fan C-Y, Fan P-S, Chan T-Y, Chang S-H (2012) Using hybrid data mining and machine learning clustering analysis to predict the turnover rate for technology professionals. Expert Syst Appl 39(10):8844–8851

Cai X, Shang J, Jin Z, Liu F, Qiang B, Wu X, Liang Z (2020) DBGE: employee turnover prediction based on dynamic bipartite graph embedding. IEEE Access 8:10390–10402

Mingfei T, Hengshu Z, Chuanren L, Chen Z, Hui X (2019) Exploiting the contagious effect for employee turnover prediction. In: The Thirty-Third AAAI conference on artificial intelligence, AAAI 2019, Honolulu, Hawaii, USA, January 27–February 1, 2019, pp 1166–1173

Valle MA, Ruz GA (2015) Turnover prediction in a call center: behavioral evidence of loss aversion using random forest and naïve bayes algorithms. Appl Artif Intell 29(9):923–942

Zhu X, Seaver W, Sawhney R, Ji S, Holt B, Sanil GB, Upreti G (2017) Employee turnover forecasting for human resource management based on time series analysis. J Appl Stat 44(8):1421–1440

Valle MA, Ruz GA, Masias VH (2017) Using self-organizing maps to model turnover of sales agents in a call center. Appl Soft Comput 60:763–774

Ali Alaeldeen Bader Wild (2021) Prediction of employee turn over using random forest classifier with intensive optimized pca algorithm. Wirel Personal Commun 119:1–18

Teng M, Zhu H, Liu C, Xiong H (2021) Exploiting network fusion for organizational turnover prediction. ACM Trans Manag Inf Syst (TMIS) 12(2):1–18

Mobley WH, Horner SO, Hollingsworth AT (1978) An evaluation of precursors of hospital employee turnover. J Appl Psychol 63(4):408–414

McEvoy GM, Cascio WF (1987) Do good or poor performers leave? A meta-analysis of the relationship between performance and turnover. Acad Manag J 30(4):744–762

Trevor CO, Gerhart B, Boudreau JW (1997) Voluntary turnover and job performance: curvilinearity and the moderating influences of salary growth and promotions. J Appl Psychol 82(1):44–61

Wei-Chiang H, Ping-Feng P, Yuying H, Shun-Lin Y (2005) Application of support vector machines in predicting employee turnover based on job performance. In: Advances in natural computation, first international conference, ICNC 2005, Changsha, China, August 27–29, 2005, Proceedings, Part I, pp 668–674

Rahul Y, Rahul R, Rakshit V, Rahul J, Abhilash GV, Deepti K (2018) Employee attrition prediction. CoRR, arXiv:1806.10480

Oliveira JMD, Zylka MP, Gloor PA, Joshi T (2019) Mirror, mirror on the wall, who is leaving of them all: predictions for employee turnover with gated recurrent neural networks. Collaborative innovation networks. Springer, Cham, pp 43–59

Ying S, Fuzhen Z, Hengshu Z, Xin S, Qing H, Hui X (2019) The impact of person-organization fit on talent management: a structure-aware convolutional neural network approach. In: Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, KDD 2019, Anchorage, AK, USA, August 4–8, 2019, pp 1625–1633

Tian L, Wang Z, Liu W, Cheng Y, Alsaadi FE, Liu X (2022) An improved generative adversarial network with modified loss function for crack detection in electromagnetic nondestructive testing. Complex Intell Syst 8(1):467–476

Ouma YO, Cheruyot R, Wachera AN (2022) Rainfall and runoff time-series trend analysis using lstm recurrent neural network and wavelet neural network with satellite-based meteorological data: case study of nzoia hydrologic basin. Complex Intell Syst 8(1):213–236

Xue X, Feng J, Gao Y, Liu M, Zhang W, Sun X, Zhao A, Guo SX (2019) Convolutional recurrent neural networks with a self-attention mechanism for personnel performance prediction. Entropy 21(12):1227

Xue X, Feng J, Sun X (2021) Semantic-enhanced sequential modeling for personality trait recognition from texts. Appl Intell 51(11):7705–7717

Somers JM (1999) Application of two neural network paradigms to the study of voluntary employee turnover. J Appl Psychol 84(2):177–185

Kyunghyun C, van Bart M, Çaglar G, Dzmitry B, Fethi B, Holger S, Yoshua B (2014) Learning phrase representations using RNN encoder-decoder for statistical machine translation. In: Proceedings of the 2014 conference on empirical methods in natural language processing, EMNLP 2014, October 25–29, 2014, Doha, Qatar, A meeting of SIGDAT, a Special Interest Group of the ACL, pp 1724–1734

Dzmitry B, Kyunghyun C, Yoshua B (2015) Neural machine translation by jointly learning to align and translate. In: 3rd international conference on learning representations, ICLR 2015, San Diego, CA, USA, May 7–9, 2015, Conference Track Proceedings

Saining X, Zhuowen T (2015) Holistically-nested edge detection. In: 2015 IEEE international conference on computer vision, ICCV 2015, Santiago, Chile, December 7–13, 2015, pp 1395–1403

Hinton G, Dean J, Vinyals O (2014) Distilling the knowledge in a neural network. In: Annual conference on neural information processing systems (NIPS) 2014 deep learning workshop, pp 1–9

Stacker IV McKinley (2015) IBM Watson Analytics. Sample data: HR employee attrition and performance [data file]. https://www.ibm.com/communities/analytics/watson-analytics-blog/hr-employee-attrition/

L. (2020) Hr analytics. https://www.kaggle.com. Accessed 30 Mar 2020

Yadav S, Jain A, Singh D (2018) Early prediction of employee attrition using data mining techniques. In: 2018 IEEE 8th international advance computing conference (IACC), pp 349–354

Sampe MZ, Ariawan E, Ariawan IW (2019) Predictive analysis of employee loyalty: a comparative study using logistic regression model and artificial neural network. J Indones Math Soc 25:325–335

Xue X, Gao Y, Liu M, Sun X, Zhang W, Feng J (2021) Gru-based capsule network with an improved loss for personnel performance prediction. Appl Intell 51(7):4730–4743

Yahia NB, Hlel J, Palacios RC (2021) From big data to deep data to support people analytics for employee attrition prediction. IEEE Access 9:60447–60458

Wang X, Zhi J (2021) A machine learning-based analytical framework for employee turnover prediction. J Manag Anal 8(3):351–370

Ozmen EP, Ozcan T (2022) A novel deep learning model based on convolutional neural networks for employee churn prediction. J Forecast 41(3):539–550

Acknowledgements

This work was supported by the Applied Basic Research Program of Shanxi Province (No. 202203021212174), the Scientific and Technological Innovation Programs of Higher Education Institutions in Shanxi (No. 2022L474), the Key Research and Development Program in Shaanxi Province of China (No. 2019ZDLGY03-10), the National Natural Science Foundation Projects of China (No. 61877050), and the Yuncheng University Doctoral Research Foundation Program (No. YQ-2022003).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xue, X., Sun, X., Wang, H. et al. Neural network fusion with fine-grained adaptation learning for turnover prediction. Complex Intell. Syst. 9, 3355–3366 (2023). https://doi.org/10.1007/s40747-022-00931-2

DOI: https://doi.org/10.1007/s40747-022-00931-2