Abstract

Currently, single-stage point-based 3D object detection network remains underexplored. Many approaches worked on point cloud space without optimization and failed to capture the relationships among neighboring point sets. In this paper, we propose DCGNN, a novel single-stage 3D object detection network based on density clustering and graph neural networks. DCGNN utilizes density clustering ball query to partition the point cloud space and exploits local and global relationships by graph neural networks. Density clustering ball query optimizes the point cloud space partitioned by the original ball query approach to ensure the key point sets containing more detailed features of objects. Graph neural networks are very suitable for exploiting relationships among points and point sets. Additionally, as a single-stage 3D object detection network, DCGNN achieved fast inference speed. We evaluate our DCGNN on the KITTI dataset. Compared with the state-of-the-art approaches, the proposed DCGNN achieved better balance between detection performance and inference time.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

3D object detection is holding great importance in computer vision with the rapid development of many real-life applications, including autonomous driving[3], augmented reality [12, 14], and etc. LiDAR can capture point cloud data, which is a mainstream form of 3D data representation [7] and widely used in 3D object detection. In contrast to RGB-D images, point clouds can provide accurate depth and adequate geometric information [28], which benefits feature extraction processes in deep-learning-based 3D object detection approaches. However, because of the unordered, irregular, and unstructured characteristics of point clouds [1], effectively leveraging point cloud data for 3D object detection still remains challenging.

To address the above issue, 3D object detection from point cloud is mainly divided into two branches: voxel-based [11, 19, 25, 31] and point-based [9, 16, 21, 26]. For voxel-based approaches, they first perform voxelization and then use convolutional neural networks to extract features. These approaches suffer from huge computational burdens when resolution increases, and they cannot learn detailed geometric information from point cloud. For point-based approaches, they directly process point clouds, mainly using PointNet [17] or PointNet + + [18] as their backbone network. However, existing point-based approaches have the following issues: (1) these networks often consist of two stages, which can be briefly described as follows: the first stage is to generate proposals, and the second stage is to classify and refine these proposals. Two-stage networks usually suffer from slow inference speed and they cannot meet the requirements of real-time applications. (2) Although PointNet and PointNet + + can capture the relationships in local point sets, they cannot capture the relationships among neighboring grouped point sets. (3) The point cloud space partitioned by ball query in the Set Abstraction (SA) layer is often not optimal. We utilized ball query in the SA layer of PointNet + + to perform grouping on a point cloud and visualize it in Fig. 2b, which shows that half of the point set (a sphere) is empty in geometry, indicating that those point sets are not optimal.

Graph neural networks (GNNs) [24] are very suitable for processing irregular and unstructured data; more specifically, they can extract relationships among points and point sets. GNNs represent data and their relationships in the form of graphs and utilize neural networks to extract features. Due to the irregular and unstructured nature of the point clouds, we believe that GNNs are more suitable for dealing with point clouds.

In this paper, we propose DCGNN: A Single-Stage 3D Object Detection Network based on Density Clustering and Graph Neural Network. We design a multi-scale hierarchical single-stage 3D object detection network with graph neural networks. Besides, we propose a unique point set optimization approach based on density clustering to better capture local features in grouped point sets.

Our key contributions are summarized as follows:

-

(1)

We propose a novel optimization approach in point cloud partition with density clustering. We utilize density clustering approaches to find the optimal centers of point sets and move the point sets towards the new centers. Performing such movements properly can better fill the point sets with point clouds, thus leading to better local feature extraction.

-

(2)

We design a backbone network with graph neural networks, which is able to capture both local relationships in point sets and global relationships between point sets. We design a local GNN layer and a global GNN layer to extract local and global features of point sets. Feature fusion is also performed between those layers.

-

(3)

We design a novel hierarchical single-stage 3D object detection network. Experiments on KITTI dataset show our proposed network achieves satisfactory results in inference speed and detection performance.

The rest of this paper is organized as follows: the following section discusses the related works. The subsequent section explains our proposed network. “Experiments” illustrates the experiments of our network. The summary and conclusion of the paper are given in “Conclusions”.

Related works

LiDAR-based 3D object detection approaches can be mainly divided into two streams: voxel-based approaches and point-based approaches [5]. There are also projection-based approaches [2, 13] and frustum-based approaches [16, 23], but because most of them cannot achieve the same performance as voxel-based and point-based approaches, their popularity has significantly decreased compared with state-of-the-art approaches. The voxel-based approaches usually perform voxelization and use 3D convolutional neural networks (CNNs) to extract features. Some of them also utilizes 2D CNNs. The point-based approaches process point clouds directly, and they do not convert point clouds into structural forms. Generally, the voxel-based approaches outperform point-based approaches both in performance and speed (in lower resolution). However, we believe that point-based approaches have greater potential if being well exploited, due to its advantage of processing point clouds straightforwardly.

Voxel-based approaches

Voxel-based approaches are the mainstream of the state-of-the-art approaches. VoxelNet [31] proposed the Voxel Feature Encoding (VFE) layer, and utilized a region proposal network (RPN) to generate bounding boxes. PointPillars [11] proposed pillar structures in 3D object detection utilizing 2DCNNs and achieved fast inference speed with high performance. SE-SSD [30] used a voxel-based backbone network and integrated 3D object detection with a teacher-student network, and it outperformed previous networks in terms of both speed and accuracy. CenterPoint [27] designs a two-stage network by using center-based representation, and this simplified both 3D object detection networks and tracking networks.

Although voxel-based approaches outperform all other approaches in both accuracy and speed, we consider the point-based approaches superior to voxel-based ones. Voxel-based approaches have more computational complexity when resolution increases compared to point-based approaches, and during the process of voxelization, some detailed and important features are often lost. As voxel-based approaches are different from point-based approaches from the beginning, it is unsuitable to compare the point-based approaches to the voxel-based ones in accuracy and speed.

Point-based approaches

Unlike the voxel-based 3D object detection approaches, point-based approaches process point clouds directly, and they do not convert them into any structural forms, e.g., voxels or pillars.

Among all point-based approaches, there are two-stage approaches and single-stage approaches. Two-stage approaches first generate region proposals using a backbone network, and then classify and refine the proposals to generate final classification scores and bounding boxes. PointRCNN [20] utilizes PointNet + + as the backbone network and designs an anchor-free mechanism to generate 3D proposals. PV-RCNN [19] combines both voxel-based and point-based approaches and makes intermediate features shared in the first stage and the second stage to improve performance. The two-stage approaches are generally slow in speed, and they usually cannot meet the demand of real-time applications.

In contrast to two-stage approaches, point-based single-stage approaches remain underexplored. 3DSSD [26] proposes F-FPS in point-based object detection network and removes FP layers of PointNet + + backbone, and thus it is the first network which makes real-time point-based 3D object detection possible. PointRGCN [29] is a pioneer utilizing graph neural networks in 3D object detection. Point-GNN [21] makes use of graph neural networks in 3D object detection and proposed a single-stage network which achieves high performance. SVGA-Net [9] performs spherical voxelization combined with local and global graph neural networks for feature extraction.

Although 3DSSD proposes F-FPS to adjust point positions queried by FPS with features, they are not performing F-FPS in the first layer due to lack of features; while our proposed point set optimization approach (DC-ball query) can optimize the initial point set. Point-GNN and SVGA-Net utilizes graph neural networks in 3D object detection, but we believe that organizing the networks in a hierarchical structure can further improve performance. Additionally, SVGA-Net also utilizes local and global GNN, but the global GNN of SVGA-Net acts as an attention parameter to the local GNN, which is considered inadequate feature extraction. Different from SVGA-Net, we perform global GNN based on the results of local GNN and add the results of global GNN back to the features. From our perspective of view, we consider it a balance of performance and speed.

The proposed method

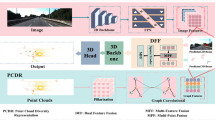

In this section, we first discuss the issues of ball query, which is a step in Set Abstraction layer. We then propose a new approach to solve them, and we term this approach density clustering (DC) ball query. Next, we introduce the detailed framework of DCGNN, as shown in Fig. 1.

DC-ball query

Issues We first discuss the issue of ball query by considering a scene from KITTI dataset as shown in Fig. 2. The point cloud space of the scene is visualized in Fig. 2a. In Fig. 2b, the point cloud space is grouped into point sets by ball query, and each point set is likely to contain only a part of a target object (i.e., a car object).

However, we found that there were point sets where points are merely occupying only a half or a corner of an object car in many scenarios. As shown in Fig. 2b, after performing ball query over the scene, the blue point set is almost empty, which grouped only a part of point cloud of the object car. The issue can lead to non-adequate local feature extraction, and performing optimization on grouping of point cloud space can further improve the detection results.

Motivations We believe that there are more features in an area containing more points. More features lead to better local feature extraction and thus can improve detection results. Additionally, we believe that it is beneficial to improving detection results when the points of an object in a point set are more complete. For a spherical point set with more than half space empty, it is feasible to move the center of the sphere to a position containing more points, leading to better points occupation with more features and likely more complete of objects.

Approaches We first perform the original ball query using the initial centers sampled from FPS. By obtaining each spherical point set and its center, we perform density clustering on the point set to get several clusters of points in the sphere. Then, we choose a cluster with the most points (if there are more than one cluster) and calculate its center of gravity. Finally, we move the center of the sphere to the center of gravity of the cluster and perform the original ball query again to obtain the new point sets. After performing the second ball query, we also check the points which moved out of the new point set during the process. If the number of those points exceeds a limit \({m}_{i}\), we use the original center and point set instead to prevent loss of important detail information in the original point set. The whole process is explained in Algorithm 1 and illustrated in Fig. 3.

In Algorithm 1, we take \(C\) and \(V\) as input, where \(C\) stands for the centers sampled from FPS, and \(V\) stands for the whole point cloud set. The algorithm outputs \({S}_{d}\) and \({C}_{d}\). \({S}_{d}\) stands for the new point sets grouped by DC-ball query, and \({C}_{d}\) stands for the new centers. \({m}_{i}\) is a conditional parameter, which stands for the point number limit of points moving out of the original point set, and it is used to prevent loss of important detail information in the original grouping.

Figure 3a illustrates a single point set by performing original ball query from Fig. 2b, and Fig. 3b illustrates the point set from Fig. 3a with moved center calculated by the proposed DC-ball query algorithm. The point set in Fig. 3b is a new grouping result of the point set in Fig. 3a. The grouping result contains more points by performing DC-ball query, which indicates more detailed features.

The DCGNN architecture

Overall, our proposed DCGNN network (Fig. 1) consists of three similar structures \({S}_{1}\), \({S}_{2}\), \({S}_{3}\) and a final layer \(F\). \({S}_{1}\), \({S}_{2}\) and \({S}_{3}\) are of identical architecture, but with different parameters (e.g. sizes of layers, number of points in FPS). Therefore, we only illustrate the detailed architecture of \({S}_{1}\), and use the dark blue and purple strip to represent \({S}_{2}\) and \({S}_{3}\). The parameters of \({S}_{1}\), \({S}_{2}\) and \({S}_{3}\) are documented in Sect. 4.1.

Grouping with Density Clustering Similar to many point-based networks, we first perform furthest point sampling and grouping to construct the initial point sets. Inspired by 3DSSD, we use F-FPS to ensure more points are sampled into an object by calculating distances with X–Y-Z coordinates and semantic features, as shown in Formula 1,

where \({L}_{d}\left(A,B\right)\) and \({L}_{f}\left(A,B\right)\) represent Euclidean X–Y-Z distance and feature distance, respectively.

In \({S}_{1}\), we perform DC-ball query to optimize the point sets. We also perform multi-scale grouping to capture features of objects in different scales.

After FPS and ball query, the grouped point sets (balls) are created for two scales \({R}_{1}\), \({R}_{2}\) and \({R}_{3}\), and MLP is used to derive local point-wise features.

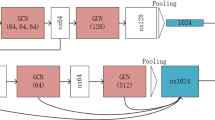

Local GNN Layer We design a graph neural network to extract local point-wise features in grouped point sets. A graph is a pair \(G=(V, E)\), where \(V\) is a set of vertices and \(E\) is a set of edges. In our case, we construct \(N\) complete graphs for \({R}_{1}\) and \({R}_{2}\) respectively. In jth (\(j\in 1\dots N\)) grouped point set of scale \({R}_{k} (k\in \mathrm{1,2},3)\), graph \({G}_{j}\) is constructed, where \({V}_{j}\) consists of all points in a grouped point set, and \({E}_{j}\) is generated according to Formula 2.

Then, GraphSAGE[8] is used to extract the relationship within the complete graphs, as shown in Formula 3,

where \(\mathcal{N}(i)\) represents the neighbor of point \(i\), \(W\) represents the weight of a linear layer, and \({h}_{i}^{l}\) represents the features of point \(i\) in the lth layer.

Global GNN Layer We design another graph neural network to extract the relationship of features between each grouped point sets. For each grouped point set, a max pooling operation is performed to obtain determinant as vertices in \({V}_{0}\), and we construct KNN graph \({G}_{0}\) in \({V}_{0}\), where \({E}_{0}\) is generated according to Formula 4,

where \({KNN}_{k}\left(u\right)\) represents \(k\) nearest neighbor(s) of \(u\) (when \(k=1\), the neighbor is \(u\) itself).

We perform the same GraphSAGE in Formula 3 to extract the relationship within the KNN graph.

Complete graphs cannot be used to extract global features due to computational complexity, as \(N\) is much bigger than vertex counts in \({V}_{1}\) to \({V}_{n}\).

Feature Fusion After the completeness of graphs to extract local features and the KNN graph to extract global features, each vertex in the KNN graph is divided equally and added into the features of each points in the corresponding grouped point set. Then, a max pooling is performed to obtain the determinant of each grouped point set.

Finally, we concatenate the features of all scales. An MLP is then used to reduce redundancy, and the output of the MLP is sent to the next structure.

Vote Layer After \({S}_{3}\), we add a vote layer to shift the center points queried by FPS to the real center of objects, as in 3DSSD and VoteNet [15]. F-FPS is also used in this layer.

Final Layer We design a layer \(F\) positioned after the vote layer to generate dense predictions for detection head. From parctice, we know that dense predictions are beneficial to detection results. This layer takes the shifted center in the vote layer as input, performs ball query with large radius, and then generates dense prediction using MLPs.

Followed by layer \(F\), the classification scores and bounding boxes are ouput by the detection head. We use a single stage detection head, which perform direct classification and regression, enabling us to train the network in an end-to-end manner.

Loss function

The total loss consists of classification loss, residual loss and shifting loss, as in Formula 5 below:

where \({N}_{c}\) and \({N}_{p}\) are the numbers of total center points and positive center points in objects. In the classification loss \({L}_{c}\), \({s}_{i}\) and \({u}_{i}\) represent the predicted classification score and the center-ness label introduced in 3DSSD, respectively, and we use cross entropy loss for \({L}_{c}\).

The regression loss \({L}_{r}\) consists of distance regression loss \({L}_{dist}\), size regression loss \({L}_{size}\), angle regression loss \({L}_{angle}\), and corner loss \({L}_{corner}\). We use smooth-L1 loss for \({L}_{dist}\) and \({L}_{size}\). \({L}_{angle}\) and \({L}_{corner}\) are calculated by Formula 6 and Formula 7, respectively:

In angle loss, \({d}_{c}^{a}\) and \({d}_{r}^{a}\) are predicted angle class and residual, while \({t}_{c}^{a}\) and \({t}_{r}^{a}\) are their ground truths. In corner loss, \({P}_{m}\) and \({G}_{m}\) are the predicted and ground-truth location of 8 corners of a bounding box.

Shifting loss is guided by the ground-truth centers of object in Vote layer. We use a smooth-L1 loss to calculate the distance between the predicted centers and the ground-truth centers of objects. \({N}_{p}^{*}\) is the number of positive centers from F-FPS.

Experiments

We evaluate the proposed DCGNN on KITTI Object Detection Benchmark [6], which includes 7481 training samples and 7518 testing samples with three levels of difficulties: easy, moderate and hard. The KITTI dataset contains three classes: car, pedestrian, and cyclist. We evaluate our DCGNN on cars because of complicated scene and large quantity of data. More importantly, most existing works evaluate their network on cars. and the proposed DCGNN can be objectively compared with these studies. We divide the training samples into a training set (3712 scenes) and a validation set (3769 scenes) at a ratio about 1:1, the same as other works. We perform ablation study on the 1:1-splitting train-val set. We also divide a 4:1-splitting train-val set to train DCGNN submitted to the KITTI official benchmark. Following PV-RCNN, we utilize the 20% local validation set to obtain an approximate trend to the performance of our trained model submitted to the KITTI official benchmark. We compare the results of DCGNN's KITTI official benchmark with state-of-the-art models. The average precision (AP) is used to compare performance of different models for evaluation and the IoU threshold for car category is 0.7.

Implementation details

Network architecture As shown in Fig. 1, there are three similar structures \({S}_{1}\), \({S}_{2}\) and \({S}_{3}\) in DCGNN.

In \({S}_{1}\), we sample 4,096 points by FPS, and perform our proposed DC-ball query for grouping with radii 0.2, 0.4, 0.8 for each scale. We choose DBSCAN [4] as clustering algorithm with parameter \(eps=0.1\), \(minPts=4\) and \({m}_{i}=4\). We use MLPs with [16, 32], [16, 32], [32, 64] for each scale to extract initial feature. We perform one local GNN layer and one global GNN layer (\(K=3\)) for each of three scales using GraphSAGE [8]. The output channels of the local GNNs in each scale are 32, 32, 32. The output channels of the global GNNs in each scale are identical to the local GNN. Two-layer MLPs with the same channel as input are performed after the GNNs of each scale. Finally, we concatenate the features of three scales, and aggregate them to 64 channels by a one-layer MLP to get the output.

In \({S}_{2}\) and \({S}_{3}\), the numbers of sampled points are 1,024 and 512. We perform F-FPS and D-FPS in the two layers. For \({S}_{2}\), we use 0.4, 0.8, 1.6 as radii. For \({S}_{3}\), we use 1.6, 3.2, 4.8 as radii. The MLPs for initial feature extraction is with [128], [128], [128] and [256], [256], [256] for \({S}_{2}\) and \({S}_{3}\) respectively. In addition, we perform GNN layers the same as \({S}_{1}\) in \({S}_{2}\) and \({S}_{3}\), but with different sizes. The output channel of the local GNNs in \({S}_{2}\) is 128, 128, 128 and 256, 256, 256 for \({S}_{3}\). We also perform two-layer MLPs for each scale of \({S}_{2}\) and \({S}_{3}\). Finally, the aggregation channel is 128 and 256 respectively.

In Vote layer, we sample 256 points using F-FPS and D-FPS. We use 128-channel feature to shift the center. We do not change the features of centers.

In \(F\), we take the shifted centers from Vote layer. There are only two scales in \(F\). The radii of ball query is 4.8, 6.4. The MLPs for feature extraction is with [256, 256, 512] and [256, 512, 1024]. There is no GNN layer in this layer. Finally, the features are aggregated into 512 channels.

Training The network is trained in an end-to-end manner on a Tesla T4 GPU. We use ADAM [10] as the optimizer to train DCGNN and use one-cycle train mechanism [22] to adjust learning rate dynamically. We set the maximum learning rate to 0.012, and train DCGNN for 160 epochs with the batch size of 16 on 4 GPUs. We also use mix-up [25], rotating and flipping as data augmentation methods to prevent overfitting.

Main results

We evaluate our model on the 3D detection benchmark of the KITTI test server. The name of the proposed network on the KITTI test server is DCGNN. As shown in Table 1, we compare our results with state-of-the-art single-stage point-based 3D object detection approaches. Note that the APs in Table 1 are of 40 recall points according to the KITTI online benchmark.

We selected state-of-the-art single-stage point-based models: 3DSSD [26], Point-GNN [21], and SVGA-Net [9] to compare with our proposed DCGNN. In addition, we selected a two-stage model PointRCNN [20], because PointRCNN is the first point-based 3D object detection network. Another two-stage model, PointRGCN [29], is a GNN based model. From Table 1, we can learn that our model achieved satisfactory performance compare to the state-of-the-art single-stage point-based models. DCGNN outperforms 3DSSD, Point-GNN, PointRCNN, and PointRGCN on the moderate split. On the easy split, DCGNN outperforms all comparison methods. On the hard split, DCGNN outperforms Point-GNN, PointRCNN, PointRGCN.

Qualitative results

Figure 4 illustrates four sample scenes from KITTI dataset and our detection results highlighted in green. We also illustrate the ground truths in yellow. There are many vehicles in each scene in Fig. 4, and there is occlusion between vehicles from the position of the coordinate axis. We can know from Fig. 4 that our model can detect cars successfully and accurately despite occlusions and large quantities of cars.

Ablation studies

We carried out ablation studies to verify the effectiveness of the DC-ball query algorithm, the local GNNs, and the global GNN.

We performed ablation study on DC-ball query algorithm and the results are shown in Table 2. We can see from Table 2 that DC-ball query can increase the performance of our DCGNN in all easy, moderate and hard splits.

We also performed experiments on different layers of local GNNs in our DCGNN, as shown in Table 3. We can see that although there are slight increase in performance in the easy and hard splits when using 2 layers, the performance of moderate split decreases compared to one layer. Additionally, the performance of all splits decreases when using 3 layers. Therefore, it is optimal to use 1 layer of local GNN.

We did ablation study on the existence of local GNN and global GNN to verify their effectiveness, and the results are shown in Table 4. We can know the performance decreases without global GNN and the performance further decreases without local and global GNNs. Note that the global GNN depends on the local GNNs in our network, so it is unfeasible to perform experiments with global GNN but without local GNN.

We did ablation study on utilizing different models of GNN in local and global GNN, and the results are shown in Table 5. We perform experiments on GAT and EdgeConv. Utilizing GAT led to a performance drop. Utilizing EdgeConv led to a significant inference speed drop, and even cannot be trained successfully, due to performing edge feature mapping first. Therefore, we cannot list the AP of EdgeConv. Note the original GAT also performs edge feature mapping first, but it can be optimized to calculate vertex features instead of edge features to achieve great inference speed improvement.

Inference time

3DSSD [26] is the fastest single-stage and point-based network so far to the best of our knowledge. We evaluate the TensorFlow version of 3DSSD provided by the authors and our DCGNN locally using NVIDIA RTX2080Ti GPU with single batch size and compared them with respect to inference time, and the results are shown in Table 6.

From Table 6, we can see that our DCGNN is slightly slower than 3DSSD (2fps). However, our DCGNN is much faster than PointGNN, which is the only open-source GNN-based network so far.

We also evaluate the inference time of DC-ball query and GNNs in a single batch to better justify the fast inference speed of our network, as shown in Table 7.

From Table 7, we can see that although DC-ball query and GNNs are considered time-consuming, our fine-optimized implementation can still achieve fast inference speed.

Conclusions

In this paper, we proposed a single-stage 3D object detection network based on density clustering and graph network (DCGNN). We proposed a novel optimization method of point set division (DC-ball query) and verified its effectiveness through systematic experiments. By constructing local and global graphs and making use of graph neural networks, we captured both the local and global relationships among point clouds. Compared with the state-of-the-art networks, the proposed DCGNN achieves satisfactory results in inference speed and detection performance.

Data availability

The dataset and performance data of this study are available in the KITTI website, http://www.cvlibs.net/datasets/kitti/index.php.

References

Bello SA, Yu S, Wang C, Adam JM, Li J (2020) Deep learning on 3D point clouds. Remote Sensing 12(11):1729

Chen X, Ma H, Wan J, Li B, Xia T. Multi-view 3d object detection network for autonomous driving. Proceedings of the IEEE conference on Computer Vision and Pattern Recognition2017. p. 1907–15.

Chen Y, Liu S, Shen X, Jia J. Fast point r-cnn. Proceedings of the IEEE/CVF International Conference on Computer Vision2019. p. 9775–84.

Ester M, Kriegel H-P, Sander J, Xu X. A density-based algorithm for discovering clusters in large spatial databases with noise. kdd1996. p. 226–31.

Fernandes D, Silva A, Névoa R, Simões C, Gonzalez D, Guevara M et al (2021) Point-cloud based 3D object detection and classification methods for self-driving applications: a survey and taxonomy. Information Fusion 68:161–191

Geiger A, Lenz P, Stiller C, Urtasun R (2013) Vision meets robotics: the KITTI dataset. Int J Robot Res 32(11):1231–1237

Guo Y, Wang H, Hu Q, Liu H, Liu L, Bennamoun M (2020) Deep learning for 3d point clouds: a survey. IEEE Trans Pattern Anal Mach Intell 43(12):4338–4364

Hamilton W, Ying Z, Leskovec J. Inductive representation learning on large graphs. Advances in neural information processing systems. 2017;30.

He Q, Wang Z, Zeng H, Zeng Y, Liu S, Zeng B. Svga-net: Sparse voxel-graph attention network for 3d object detection from point clouds. arXiv preprint arXiv:200604043. 2020.

Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv:14126980. 2014.

Lang AH, Vora S, Caesar H, Zhou L, Yang J, Beijbom O. Pointpillars: Fast encoders for object detection from point clouds. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition2019. p. 12697–705.

Li B, Zhu S, Lu Y (2022) A single stage and single view 3D point cloud reconstruction network based on DetNet. Sensors 22(21):8235

Liang M, Yang B, Chen Y, Hu R, Urtasun R. Multi-task multi-sensor fusion for 3d object detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition2019. p. 7345–53.

Park Y, Lepetit V, Woo W. Multiple 3d object tracking for augmented reality. 2008 7th IEEE/ACM International Symposium on Mixed and Augmented Reality: IEEE; 2008. p. 117–20.

Qi CR, Litany O, He K, Guibas LJ. Deep hough voting for 3d object detection in point clouds. Proceedings of the IEEE/CVF International Conference on Computer Vision2019. p. 9277–86.

Qi CR, Liu W, Wu C, Su H, Guibas LJ. Frustum pointnets for 3d object detection from rgb-d data. Proceedings of the IEEE conference on computer vision and pattern recognition 2018. p. 918–27.

Qi CR, Su H, Mo K, Guibas LJ. Pointnet: Deep learning on point sets for 3d classification and segmentation. Proceedings of the IEEE conference on computer vision and pattern recognition 2017. p. 652–60.

Qi CR, Yi L, Su H, Guibas LJ. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Advances in neural information processing systems. 2017;30.

Shi S, Guo C, Jiang L, Wang Z, Shi J, Wang X, et al. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020. p. 10529–38.

Shi S, Wang X, Li H. Pointrcnn: 3d object proposal generation and detection from point cloud. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 2019. p. 770–9.

Shi W, Rajkumar R. Point-gnn: Graph neural network for 3d object detection in a point cloud. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 2020. p. 1711–9.

Smith LN, Topin N (2019) Super-convergence: very fast training of neural networks using large learning rates. International Society for Optics and Photonics, Artificial intelligence and machine learning for multi-domain operations applications, p 1100612

Wang Z, Jia K. Frustum convnet: Sliding frustums to aggregate local point-wise features for amodal 3d object detection. 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS): IEEE; 2019. p. 1742–9.

Wu Z, Pan S, Chen F, Long G, Zhang C, Philip SY (2020) A comprehensive survey on graph neural networks. IEEE Trans Neural Netw Learning Syst 32(1):4–24

Yan Y, Mao Y, Li B (2018) Second: sparsely embedded convolutional detection. Sensors 18(10):3337

Yang Z, Sun Y, Liu S, Jia J. 3dssd: Point-based 3d single stage object detector. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition2020. p. 11040–8.

Yin T, Zhou X, Krahenbuhl P. Center-based 3d object detection and tracking. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition2021. p. 11784–93.

Yu Y, Huang Z, Li F, Zhang H, Le X (2020) Point Encoder GAN: a deep learning model for 3D point cloud inpainting. Neurocomputing 384:192–199

Zarzar J, Giancola S, Ghanem B. PointRGCN: Graph convolution networks for 3D vehicles detection refinement. arXiv preprint arXiv:191112236. 2019.

Zheng W, Tang W, Jiang L, Fu C-W. SE-SSD: Self-ensembling single-stage object detector from point cloud. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition2021. p. 14494–503.

Zhou Y, Tuzel O. Voxelnet: End-to-end learning for point cloud based 3d object detection. Proceedings of the IEEE conference on computer vision and pattern recognition 2018. p. 4490–9.

Acknowledgements

This research is partially supported by: Natural Science Foundation Project of Science and Technology Department of Jilin Province under Grant no. 20200201165JC.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no potential conflict (financial or non-financial) of interests regarding the publication of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xiong, S., Li, B. & Zhu, S. DCGNN: a single-stage 3D object detection network based on density clustering and graph neural network. Complex Intell. Syst. 9, 3399–3408 (2023). https://doi.org/10.1007/s40747-022-00926-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-022-00926-z