Abstract

Reinforcement learning (RL) techniques build solutions for sequential decision-making problems under uncertainty and ambiguity. In RL, an agent guided by a reward function interacts with a dynamic environment to find an optimal policy. RL has several drawbacks: the reward function must be specified in advance, it is difficult to design, and the approach struggles with large, complex problems. These drawbacks led to the development of inverse reinforcement learning (IRL). IRL has its own problems in practice, such as the lack of robust reward functions and ill-posedness, and different solutions have been proposed to address them, such as maximum entropy methods and support for multiple and non-linear reward functions. Eight major problems are associated with IRL, and eight solutions have been proposed to address them. This paper proposes a hybrid fuzzy AHP–TOPSIS approach to prioritize the solutions when implementing IRL. The fuzzy analytical hierarchical process (FAHP) is used to obtain the weights of the identified problems. The relative accuracy and root-mean-squared error using FAHP are 97.74 and 0.0349, respectively. The fuzzy Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) uses these FAHP weights to prioritize the solutions. The most significant problem in IRL implementation is the ‘lack of robust reward functions’, with a weight of 0.180, whereas the most significant solution is ‘Supports optimal policy and rewards functions along with stochastic transition models’, with a closeness coefficient (CofC) value of 0.967156846.


Introduction

RL is an influential approach to complex and uncertain decision-making problems. It uses a reward function that guides an agent as it interacts with a dynamic environment. The output is a policy for dealing with uncertain and complex problems; a policy gives the probability of taking each action in a given state. RL differs from supervised learning (SL): it does not require target labels, which helps in building generalization abilities. However, it is hard to specify a reward function in advance for unpredictable, large, and intricate problems. This led to the development of IRL, which tackles complex problems by learning the reward function from expert demonstrations [1]. IRL is a stream of Learning from Demonstration (LfD) [2], imitation learning [3, 4], or theory of mind [5]. In IRL, the policy is modified according to the demonstrated behavior. A policy is generally derived in one of two ways, function mapping or a reward function, and both have limitations. Function mapping is used in SL and requires target labels, which are expensive and complex to obtain, whereas a reward function is used in RL, and knowing the reward function in advance for large and complex problems is a tedious task. These limitations can be overcome using LfD and IRL. IRL also captures experts’ knowledge in a form that can be reused in other scenarios.

Russell [6] formulates IRL as follows:

1. Given: an estimate of the agent's behavior, the sensory inputs to the agent, and a model of the environment.

2. Output: the reward function.

Ng and Russell [7] formally define IRL for the machine learning community as:

To find the reward function R that justifies the agent's behavior by identifying the optimal policy for the tuple (S, A, T, D, P), where S is a finite state space, A is a set of actions, T is the transition probability, D is a discount factor, and P is the policy [7].
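The definition above can be made concrete with a small sketch. It assumes a reward that depends only on the state and a hypothetical two-state, two-action problem; the function name `evaluate_policy` and all numbers are illustrative and are not taken from [7]. The sketch evaluates two policies under a candidate reward R and checks that R "justifies" the demonstrated policy, in the sense that the demonstrated policy scores at least as well in every state.

```python
def evaluate_policy(T, D, P, R, sweeps=200):
    """Iterate V(s) = R(s) + D * sum_a P(a|s) * sum_s2 T(s2|s,a) * V(s2)."""
    n = len(R)
    V = [0.0] * n
    for _ in range(sweeps):
        V = [
            R[s] + D * sum(
                P[s][a] * sum(T[s][a][s2] * V[s2] for s2 in range(n))
                for a in range(len(P[s]))
            )
            for s in range(n)
        ]
    return V

# Hypothetical problem: action 0 = stay, action 1 = move to the other state.
T = [
    [[1.0, 0.0], [0.0, 1.0]],  # transitions from state 0
    [[0.0, 1.0], [1.0, 0.0]],  # transitions from state 1
]
D = 0.9                         # discount factor
R = [0.0, 1.0]                  # candidate reward: state 1 is desirable
expert = [[0.0, 1.0], [1.0, 0.0]]  # move to state 1, then stay there
other = [[1.0, 0.0], [1.0, 0.0]]   # always stay

V_expert = evaluate_policy(T, D, expert, R)
V_other = evaluate_policy(T, D, other, R)
# R is consistent with the expert if the expert is at least as good everywhere.
consistent = all(ve >= vo - 1e-9 for ve, vo in zip(V_expert, V_other))
```

Many different rewards pass such a consistency check for the same demonstrations, which is the ill-posedness discussed later in the literature review.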

The motivation of the current study

- Past and current research on successful IRL implementation typically targets one or two identified problems, which may or may not be the most important ones in present real-time scenarios [8,9,10,11]. The current study fills this research gap by prioritizing the IRL implementation problems using fuzzy AHP.

- The past literature does not comprehensively cover IRL’s theoretical background, including its problems and solutions.

- Different researchers have mentioned different solutions for the IRL problems, but these solutions have not been properly organized and analyzed [12,13,14,15,16]. The current study analyzes and ranks the solutions using the fuzzy TOPSIS method, helping decision-makers target the prioritized solutions for IRL problems.

Contributions of the paper

- This is the first study that uses the fuzzy AHP approach to rank the IRL implementation barriers/problems.

- This is the first study that uses a fuzzy TOPSIS approach to rank the solutions that overcome the IRL implementation problems.

- To the best of our knowledge, no other research reports the scope and analysis of the current study regarding IRL barriers and their solutions. As the only study proposing a hybrid fuzzy AHP–TOPSIS approach, it can act as a torchbearer for researchers seeking to understand the barriers and their solutions in the IRL field.

- The opinions of experts in IRL have been collected on linguistic scales for the fuzzy AHP and fuzzy TOPSIS implementation. The current study uses fuzzy MCDM methods because they can handle vagueness and uncertainty in decision-makers’ judgments.

- The results of the current study can benefit software companies, industries, and governments that use reinforcement learning in real-time scenarios.

- The results show that the most important solution is ‘Supports optimal policy and rewards functions along with stochastic transition models’ and that the most significant problem to address during IRL implementation is the ‘lack of robust reward functions’.

Hybrid fuzzy AHP–TOPSIS approach performance aspects in IRL

- Traditional IRL methods are unable to estimate the reward function when no state-action trajectories are available. The hybrid approach helps to identify solutions to this problem. Consider the following example: “Person A can go from position X to position Y with any route. There exists different scenery while going routing through different routes. Person A has some specific preferences for the scenery while routing from position X to position Y. Let’s suppose, the routing time is known, Can we predict person A preferences regarding scenery?” [17]. This is a classical IRL problem with a large problem size or large state space. The fuzzy AHP step of the current study weights and ranks this problem, scalability with large problem sizes or large state spaces, highly. The fuzzy TOPSIS step addresses such problems through the solution “Support multiple rewards and non-linear reward functions for large state spaces”. New algorithms that support non-linearity for large state spaces or large problem sizes can be used to estimate reward functions properly, and this result also motivates researchers and companies to develop new algorithms that solve such problems better.

- Feature expectation is another issue with IRL; it concerns the quality evaluation or assessment of the reward function. The fuzzy AHP approach ranks this issue, “Lack of robust reward functions”, first, and the fuzzy TOPSIS approach advocates the solution “Supports optimal policy and rewards functions along with stochastic transition models”, which is also ranked first among the solutions. This solution calls for building algorithms that handle robust reward functions.

- Using the results of the hybrid approach, the failure rate of IRL projects in software companies and manufacturing industries can be reduced.

Literature review

The comprehensive literature review of the current study is carried out in two phases: the first phase identifies the problems associated with the implementation of IRL, and the second phase identifies solutions to overcome the identified problems.

Problems in the implementation of IRL

IRL mainly targets learning from demonstration, or imitation learning [2]. Imitation learning is a way of learning and developing new skills by observing the actions executed by another agent. IRL suffers from various problems. IRL is ill-posed [18], which means that multiple reward functions are consistent with the same optimal policy and multiple policies exist for the same reward function [18, 19]. The reward function is typically assumed to be a linear combination of features [1], which is often erroneous. In addition, original IRL implementations assume that the demonstrations given by experts are optimal, but this usually does not hold in practice; implementations should therefore handle noisy and imperfect demonstrations [1, 20]. The policy imitated in apprenticeship IRL is stochastic, which may not be a good choice if the expert’s policy is deterministic [1]. When a new reward function is added to iteratively solve IRL problems, the overall computational overhead is hefty [1, 21, 22]. The demonstrations may not be representative enough, so the algorithms should generalize the demonstrations to uncovered areas [1]. Different solutions have been proposed for these problems. IRL fails to learn robust reward functions [23, 24] and suffers from ill-posed problems [1]. IRL algorithms lack scalability, meaning that existing techniques are unable to handle large systems because of poor performance and inefficiency [9], and they lack reliability, meaning that existing techniques fail to learn the reward function because of shortcomings in the learning process [9]. The algorithms also face obstacles to accurate inference [23], sensitivity to the correctness of prior knowledge [23], disproportionate growth in solution complexity with problem size [23], and direct learning of the reward function or policy matching [23]. Table 1 shows the problems associated with the implementation of IRL.

Eight problems have been identified in IRL implementation from the literature, as shown in Table 1. All of them arise from the basic assumptions of IRL and from its goals: to learn the reward function, to find the right policy, and to deal with complex and large state spaces. IRL is a machine learning framework developed recently to solve the inverse problem of reinforcement learning. IRL aims to recover the reward function by learning from the observed behavior of agents, and the underlying control model in IRL implementation is the Markov decision process (MDP). In other words, IRL portrays learning from humans. IRL rests on two assumptions: that the observed behavior of the agent is optimal (a very strong assumption when learning from human behavior) and that the agent's policies are optimal under an unknown reward function. These assumptions can result in inaccurate inferences and lead to incorrect reward function learning, which reduces the overall performance of IRL. IRL problems can arise from the agent's actions, the information available to the agent, and the agent's long-term plans [33]. Estimating the correct reward function becomes very difficult when the data are complex or inaccurate and the agent's actions on these data lead to large state spaces. For most observations of agent behavior, multiple reward functions fit, and selecting the best one is a challenge. The short-term actions of an agent can differ considerably from its long-term plan, which is a further hurdle to estimating the reward function properly. Solutions to the many problems in IRL implementation have also been proposed in the literature; they are described in Sect. Solutions to overcome the identified problems.

Solutions to overcome the identified problems

Different solutions have been proposed to solve the problems faced by the classical IRL algorithm. One is to modify the existing algorithm to improve imitation learning and reward function learning. Such algorithms include adversarial inverse reinforcement learning (AIRL) [24], cooperative inverse reinforcement learning (CIRL) [34], DeepIRL [16, 35], the gradient-based IRL approach [36], relative entropy IRL (REIRL) [37, 38], Bayesian IRL [1, 23], and score-based IRL [25]. Another solution is maximum margin optimization, which introduces loss functions that optimize the demonstrations over other available solutions by a margin [18]; it also addresses the ill-posed problem. Bayesian IRL uses a probabilistic model to deal with the uncertainty associated with the reward function in IRL. Moreover, when extended, this model helps in uncovering the posterior distribution of the expert’s preference [9, 12, 18, 28, 39]. IRL can also accommodate incorrect and partial policies along with noisy observations [8, 23]. Another solution is maximum entropy or its optimization [16, 18, 21, 23, 40], which mainly addresses the ill-posed problem. Other work extracts rewards in problems with large state spaces [29,30,31] and supports non-linear reward functions [41]. Further advances support stochastic transition models and optimize the transition models [22, 36]. Many researchers have worked on rewards and optimal policies [10, 13,14,15, 20, 25,26,27, 42,43,44,45] and on multiple reward functions [46]. Others have studied learning from failed and successful demonstrations [12, 13, 15, 32]. Some authors have addressed the risk factors involved in IRL, for example risk-aware active IRL [47] and risk-sensitive inverse reinforcement learning [12]. Table 2 shows the solutions that are implemented to overcome the identified problems of IRL.

Based on the literature, IRL algorithms for finding the optimal reward function can be divided into four categories. The first category comprises max-margin planning methods, which try to match feature expectations. In other words, these methods estimate reward functions that maximize the margin between the optimal policy or value function and other policies or value functions. The second category comprises maximum entropy methods, which estimate the reward function using the maximum entropy concept in the optimization routine. These methods can handle large state spaces as well as sub-optimal expert demonstrations, and they try to handle trajectory noise and imperfect agent behavior. The third category comprises improved IRL algorithms such as AIRL, CIRL, DeepIRL, gradient IRL, REIRL, score-based IRL, and Bayesian IRL for improving imitation learning. The fourth, miscellaneous category covers IRL algorithms that consider risk-awareness factors, learn from failed demonstrations, support multiple and non-linear reward functions, and estimate the posterior distribution over the agent’s preferences [33]. These algorithms solve different IRL problems, so selecting among them is an important step in IRL implementation.

IRL has been used in many domains, and its applications have been divided into three categories [33]. The first is the development of autonomous intelligent agents that mimic the expert. Examples include autonomous helicopters [48], autonomous robot systems [38, 49], path planning [50, 51], autonomous vehicles [16, 52], and game playing [12, 34]. The second category is the agent's interaction with other systems to improve the reward function estimation. Examples include pedestrian trajectories [53,54,55,56], haptic assistance, and dialogue systems [57,58,59]. The third category is learning about the system using the estimated reward function. Examples include cyber-physical systems [60], finance trading [61], and market estimation [62].

Fuzzy AHP

AHP is a quantitative technique introduced by Saaty [63]. It structures a multi-person, multi-criteria, multi-period problem hierarchically, so that solutions are simplified. AHP also has some limitations, listed below:

(a) It is unable to handle the ambiguity and vagueness of human judgments.

(b) Experts’ opinions and preferences influence the AHP method.

(c) The AHP ranking method is imprecise.

(d) It uses an unbalanced scale of judgment.

To overcome these limitations, fuzzy set theory is integrated with AHP. The resulting fuzzy AHP captures the vagueness, imprecision, and ambiguity of human judgments by handling linguistic variables better. This approach has been used extensively in many applications, such as risk assessment on construction sites [64], gas explosion risk assessment in coal mines [65], selection of strategic renewable resources [66], steel pipe supplier selection [67], the aviation industry [68], the banking industry [69], and supply chain management [70]. Fuzzy AHP was introduced by Chang [71]. The pairwise comparison scale mostly uses triangular fuzzy numbers (TFNs), and the extent analysis method is used to obtain the synthetic extent value of the pairwise comparisons. Fuzzy AHP has been preferred over other MCDM methods for the following reasons:

(1) Fuzzy AHP has lower computational complexity than other MCDM methods such as ANP, TOPSIS, ELECTRE, and multi-objective programming.

(2) It is the most widely used MCDM method [72].

(3) One of its main advantages is that it can simultaneously evaluate the effects of different factors in realistic situations.

(4) To deal with the imprecision and vagueness of judgments, fuzzy AHP uses pairwise comparison.

Definition 1: A fuzzy set S is represented as \(\{(x, \mu_{S}(x)) \mid x \in X\}\), where X = {x1, x2, x3, …} and \(\mu_{S}(x)\) is the membership function of S [73]. For a TFN (u, v, w), the membership function is defined in Eq. (1)

Equation (1) states that u ≤ v ≤ w, where u is the lower value and w is the upper value of the TFN, and v lies between u and w; in other words, v is the modal value. Different operations can be performed on the TFNs S1 = (u1, v1, w1) and S2 = (u2, v2, w2). These are mentioned below in Eqs. (2)–(6)
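As a minimal sketch of a TFN, the class below implements the standard piecewise-linear membership function and the usual TFN arithmetic (addition, approximate multiplication for positive TFNs, positive scalar multiplication, and inverse). The class and method names are illustrative assumptions, and the operations shown are the conventional ones rather than a verified transcription of Eqs. (2)–(6).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TFN:
    """Triangular fuzzy number (u, v, w) with u <= v <= w."""
    u: float
    v: float
    w: float

    def membership(self, x: float) -> float:
        """Degree to which x belongs to the TFN, in [0, 1]."""
        if x < self.u or x > self.w:
            return 0.0
        if x == self.v:
            return 1.0
        if x < self.v:
            return (x - self.u) / (self.v - self.u)
        return (self.w - x) / (self.w - self.v)

    def __add__(self, other: "TFN") -> "TFN":
        return TFN(self.u + other.u, self.v + other.v, self.w + other.w)

    def __mul__(self, other: "TFN") -> "TFN":
        # Component-wise product: the usual approximation for positive TFNs.
        return TFN(self.u * other.u, self.v * other.v, self.w * other.w)

    def scale(self, k: float) -> "TFN":
        # Multiplication by a positive crisp number k.
        return TFN(k * self.u, k * self.v, k * self.w)

    def inverse(self) -> "TFN":
        # Reciprocal of a positive TFN; the bounds swap places.
        return TFN(1.0 / self.w, 1.0 / self.v, 1.0 / self.u)
```

For example, `TFN(1, 2, 3).membership(1.5)` evaluates to 0.5, halfway up the left slope of the triangle.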

The linguistic variables and corresponding TFNs are shown in Table 3.

The steps of Chang’s method [71] are as follows:

Step 1: The pairwise fuzzy matrix (\(\tilde{S}\)) is created using Eq. (7). TFNs are used while creating the pairwise fuzzy matrix

where \(\tilde{s}_{gh}\) = (\(u_{gh}\), \(v_{gh}\), \(w_{gh}\)), g, h = 1, 2, 3, …, n index the criteria, and u, v, w are the parameters of a triangular fuzzy number. Here, \(\tilde{s}_{gh}\) expresses, as a fuzzy number, the decision makers' preference for the gth criterion over the hth criterion. Parameter u represents the minimum value, parameter v the middle (modal) value, and parameter w the maximum possible value.

The pairwise fuzzy matrix is an n × n matrix of fuzzy numbers \(\tilde{s}_{gh}\), as shown in Eq. (8)

The entries of the pairwise fuzzy matrix \(\tilde{S}\) are filled in using the linguistic scale given in Table 3.
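Step 1 can be sketched as follows. The linguistic scale `SCALE` below is a hypothetical stand-in for Table 3, and the function names are illustrative. The sketch assumes the usual reciprocal convention: if criterion g is preferred to h with TFN (u, v, w), the entry for h over g is (1/w, 1/v, 1/u).

```python
# Hypothetical linguistic scale; the study's actual scale is in Table 3.
SCALE = {
    "equal": (1.0, 1.0, 1.0),
    "moderate": (2.0, 3.0, 4.0),
    "strong": (4.0, 5.0, 6.0),
}


def reciprocal(tfn):
    """Reciprocal TFN used for the mirrored entry of the matrix."""
    u, v, w = tfn
    return (1.0 / w, 1.0 / v, 1.0 / u)


def pairwise_matrix(n, judgments):
    """Build an n x n fuzzy matrix from judgments {(g, h): term} with g < h."""
    matrix = [[SCALE["equal"]] * n for _ in range(n)]
    for (g, h), term in judgments.items():
        matrix[g][h] = SCALE[term]
        matrix[h][g] = reciprocal(SCALE[term])
    return matrix


# Three criteria compared by one hypothetical expert.
S = pairwise_matrix(3, {(0, 1): "moderate", (0, 2): "strong", (1, 2): "equal"})
```

Only the upper triangle needs to be elicited from an expert; the diagonal and the lower triangle follow from the convention.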

Step 2: The fuzzy synthetic extent values (CVs) are calculated using Eq. (9) for the gth object with respect to all criteria (C) as

Parameters u and w are the lower and upper limits, respectively, whereas v is the modal value. Indices g and h run over the criteria, and n denotes the number of criteria.
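A minimal sketch of Step 2, assuming the usual form of Chang's extent analysis: each row of the pairwise matrix is summed component-wise and multiplied by the inverse of the grand total, which swaps the lower and upper bounds. The function name and the sample matrix are illustrative.

```python
def synthetic_extents(matrix):
    """Fuzzy synthetic extent of each row of a pairwise fuzzy matrix."""
    # Component-wise row sums: (sum of u, sum of v, sum of w).
    row_sums = [tuple(sum(t[k] for t in row) for k in range(3)) for row in matrix]
    # Grand total over all rows.
    total = tuple(sum(r[k] for r in row_sums) for k in range(3))
    # Multiplying by the inverse of the total divides u by the total w,
    # v by the total v, and w by the total u.
    return [(r[0] / total[2], r[1] / total[1], r[2] / total[0]) for r in row_sums]


# Hypothetical 2 x 2 pairwise matrix.
example = [
    [(1.0, 1.0, 1.0), (2.0, 3.0, 4.0)],
    [(0.25, 1.0 / 3.0, 0.5), (1.0, 1.0, 1.0)],
]
extents = synthetic_extents(example)
```

The middle values of the extents always sum to one, which gives a quick sanity check on an implementation.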

Step 3: Suppose S1 = (u1, v1, w1) and S2 = (u2, v2, w2) are two triangular fuzzy numbers that denote values of the extent analysis. The degree of possibility of S1 ≥ S2 is defined in Eq. (10) as

Here, D denotes the degree of possibility. To compare S1 and S2, both D (S1 ≥ S2) and D (S2 ≥ S1) must be calculated. The degree of possibility for a convex fuzzy number to be greater than t convex fuzzy numbers Sg (g = 1, 2, 3, …, t) is given in Eq. (11)

In Eq. (11), g indexes the convex fuzzy numbers being compared, and t is the number of such convex fuzzy numbers.
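Step 3 can be sketched as below, assuming the standard definition from extent analysis: the degree of possibility is 1 when the modal value of S1 is at least that of S2, 0 when the two triangles do not overlap, and otherwise the height of their intersection point. Function names and sample values are illustrative.

```python
def possibility(s1, s2):
    """Degree of possibility D(S1 >= S2) for TFNs given as (u, v, w)."""
    u1, v1, w1 = s1
    u2, v2, w2 = s2
    if v1 >= v2:
        return 1.0
    if u2 >= w1:
        return 0.0
    # Height at which the right slope of S1 meets the left slope of S2.
    return (u2 - w1) / ((v1 - w1) - (v2 - u2))


def min_possibilities(extents):
    """For each extent, the minimum of D(S_g >= S_k) over all other k."""
    return [
        min(possibility(s, other) for k, other in enumerate(extents) if k != g)
        for g, s in enumerate(extents)
    ]


low, high = (0.1, 0.2, 0.4), (0.2, 0.3, 0.5)   # hypothetical extents
```

Here `possibility(high, low)` is 1.0, while `possibility(low, high)` is about 0.667 because the two triangles overlap only partially.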

Step 4: Calculate the fuzzy weight (FW`) and the non-fuzzy, or normalized, weight (FW) using Eqs. (12) and (13) for all criteria (i.e., problems). In Eq. (12), d` (Ag) denotes the minimum degree of possibility of criterion g over all other criteria, and in Eq. (13), d (An) denotes the normalized value of d` (An)
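A short sketch of Step 4, assuming that normalization means dividing each minimum degree of possibility by their sum so that the weights add up to one. The input values are hypothetical and do not come from the study's tables.

```python
def normalize_weights(min_degrees):
    """Turn minimum degrees of possibility d'(A_g) into weights summing to 1."""
    total = sum(min_degrees)
    return [d / total for d in min_degrees]


def rank_descending(weights):
    """Indices of the criteria ordered from highest to lowest weight."""
    return sorted(range(len(weights)), key=lambda g: weights[g], reverse=True)


weights = normalize_weights([1.0, 0.25, 0.75])   # hypothetical d'(A_g) values
order = rank_descending(weights)
```

With these inputs the weights are 0.5, 0.125, and 0.375, so the first criterion ranks highest and the second lowest.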

Fuzzy TOPSIS

Hwang and Yoon [74] introduced the classical multi-criteria decision-making method TOPSIS. The concept of TOPSIS is based on determining the ideal solution. It differentiates between cost and benefit criteria and selects the solution that is closest to the positive ideal solution (PIS) and farthest from the negative ideal solution (NIS). In classical TOPSIS, human judgments are expressed as crisp values, but this representation is not always suitable in real life because judgments carry some uncertainty and vagueness. A fuzzy approach is therefore well suited to handling the uncertainty and vagueness of human judgments; in other words, fuzzy linguistic values are preferred over crisp values. For this reason, fuzzy TOPSIS is used for real-life problems that are multifaceted and not well defined [75,76,77,78]. The current study uses TFNs to implement fuzzy TOPSIS because they are easy to understand, calculate, and analyze.

The steps used in this approach are mentioned below:

Step 1: Use the linguistic rating scale as mentioned in Table 3 for the computation of the fuzzy matrix. Here, linguistic values are apportioned to each solution (i.e., alternatives) corresponding to the identified problems (i.e., criteria).

Step 2: After the computation of the fuzzy matrix, compute the aggregate fuzzy evaluation matrix for the solutions.

Suppose there are several experts; the fuzzy rating given by the ith expert is Tghi = (aghi, bghi, cghi), where g = 1, 2, 3, …, m and h = 1, 2, 3, …, n. Tghi is an entry of the fuzzy evaluation matrix, expressed as a TFN with parameters a, b, and c, where a is the minimum value, b is the average value, and c is the maximum possible value. Index g denotes the alternative, h the criterion, and i the expert; m is the number of alternatives and n is the number of criteria. The aggregate fuzzy rating of the solutions for the 8 identified problems is computed as given in Eq. (14)

where g denotes alternatives, h denotes criteria, and i denotes the expert.
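A minimal sketch of the aggregation step, assuming the common rule that matches the description of a, b, and c above: the minimum of the lower values, the mean of the middle values, and the maximum of the upper values across experts. The function name and the sample ratings are illustrative.

```python
def aggregate(ratings):
    """Aggregate TFN ratings (a, b, c) from several experts for one cell."""
    a = min(r[0] for r in ratings)                   # smallest lower value
    b = sum(r[1] for r in ratings) / len(ratings)    # mean of middle values
    c = max(r[2] for r in ratings)                   # largest upper value
    return (a, b, c)


# Three hypothetical experts rating one solution against one problem.
cell = aggregate([(1.0, 3.0, 5.0), (3.0, 5.0, 7.0), (5.0, 7.0, 9.0)])
```

Under this rule the aggregated TFN spans the full range of the experts' opinions, so a single outlying expert widens the triangle without moving its middle value much.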

Step 3: Create the normalized fuzzy matrix. The raw data are normalized using linear scale transformations so that all solutions are comparable. The normalized fuzzy matrix is given in Eqs. (15), (16), and (17) as

where \(\tilde{Q}\) is the normalized fuzzy matrix, g indexes the alternatives, m is the number of alternatives, h indexes the criteria, and n is the number of criteria

\(\tilde{q}_{gh}\) denotes an entry of the normalized fuzzy matrix. For benefit criteria (Eq. 16), each component of the TFN is divided by \(c_{h}^{*}\), the maximum possible value for criterion h; for cost criteria (Eq. 17), \(a_{h}^{ - }\), the minimum value for criterion h, is divided by each component of the TFN.
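The normalization can be sketched as follows, assuming the usual linear scale transformation for positive TFNs. The function name and the `is_cost` flag list are illustrative assumptions.

```python
def normalize(matrix, is_cost):
    """Normalize an m x n matrix of positive TFNs column by column.

    matrix[g][h] is the aggregated TFN of alternative g on criterion h;
    is_cost[h] is True when criterion h is a cost criterion.
    """
    m, n = len(matrix), len(matrix[0])
    out = [[None] * n for _ in range(m)]
    for h in range(n):
        if is_cost[h]:
            a_min = min(matrix[g][h][0] for g in range(m))
            for g in range(m):
                a, b, c = matrix[g][h]
                # Dividing by c, b, a in that order keeps the TFN ordered.
                out[g][h] = (a_min / c, a_min / b, a_min / a)
        else:
            c_max = max(matrix[g][h][2] for g in range(m))
            for g in range(m):
                a, b, c = matrix[g][h]
                out[g][h] = (a / c_max, b / c_max, c / c_max)
    return out


ratings = [[(1.0, 3.0, 5.0)], [(3.0, 5.0, 7.0)]]   # two alternatives, one criterion
as_cost = normalize(ratings, [True])
as_benefit = normalize(ratings, [False])
```

Either way, every normalized component falls in the interval (0, 1], which is what makes different criteria comparable.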

Step 4: Calculate the weighted normalized fuzzy matrix.

It is obtained by multiplying each normalized entry \(\tilde{q}_{gh}\) by the weight \(w_{h}\) of its criterion. The resulting matrix is denoted \(\tilde{Z}\) in Eq. (18)
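A short sketch of the weighting step, assuming crisp (normalized) fuzzy AHP weights, one per criterion. The function name and the numbers are illustrative.

```python
def apply_weights(normalized, weights):
    """Multiply every TFN in column h by the crisp weight of criterion h."""
    return [
        [tuple(x * w for x in tfn) for tfn, w in zip(row, weights)]
        for row in normalized
    ]


# One alternative, two criteria, hypothetical weights 0.5 and 0.25.
Z = apply_weights([[(0.2, 0.5, 1.0), (0.5, 0.5, 1.0)]], [0.5, 0.25])
```

Because the weights are crisp and positive, each weighted entry remains a valid TFN with its components in the same order.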

Step 5: Compute fuzzy PIS (FPIS) and fuzzy NIS (FNIS) using Eqs. (19) and (20)

where \(A^{*}\) is fuzzy positive ideal solution (FPIS), \(\tilde{z}_{n}^{*}\) denotes TFNs having FPIS, and \(c_{h}^{*}\) denotes the maximum possible value of benefit criteria

where \(A^{ - }\) is fuzzy negative ideal solution (FNIS), \(\tilde{z}_{n}^{ - }\) denotes TFNs having FNIS, and \(\tilde{a}_{h}^{ - }\) denotes the minimum possible value of cost criteria.
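One common convention for Step 5, assumed here, takes for each criterion the largest upper value in the weighted matrix as the FPIS and the smallest lower value as the FNIS, each represented as a degenerate TFN. The function name and sample matrix are illustrative, and the convention may differ in detail from Eqs. (19) and (20).

```python
def ideal_solutions(weighted):
    """Return (FPIS, FNIS) as lists of degenerate TFNs, one per criterion."""
    n = len(weighted[0])
    fpis, fnis = [], []
    for h in range(n):
        best = max(row[h][2] for row in weighted)    # largest upper value
        worst = min(row[h][0] for row in weighted)   # smallest lower value
        fpis.append((best, best, best))
        fnis.append((worst, worst, worst))
    return fpis, fnis


sample = [
    [(0.1, 0.2, 0.3), (0.2, 0.3, 0.4)],
    [(0.05, 0.1, 0.5), (0.3, 0.4, 0.6)],
]
fpis, fnis = ideal_solutions(sample)
```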

Step 6: Compute the distance (\(d_{g}^{ + }\), \(d_{g}^{ - } )\) of each solution from \(A^{*}\) and \(A^{ - }\) with the help of Eqs. (21) and (22)

Step 7: The last step is to compute CofC using Eq. (23) and rank the solutions based on CofC

The ranking is done for all the solutions by looking at the values of \(CofC_{g}\). The highest value is ranked highest and the lowest value is ranked lowest.
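Steps 6 and 7 can be sketched together. The sketch assumes the vertex method for the distance between two TFNs and the usual closeness coefficient, the distance to the FNIS divided by the sum of both distances. Function names and inputs are illustrative, and the case where both distances are zero is not handled.

```python
import math


def vertex_distance(x, y):
    """Vertex-method distance between two TFNs given as (a, b, c)."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(x, y)) / 3.0)


def closeness_coefficients(weighted, fpis, fnis):
    """CofC of each alternative: d_minus / (d_plus + d_minus)."""
    coefficients = []
    for row in weighted:
        d_plus = sum(vertex_distance(z, best) for z, best in zip(row, fpis))
        d_minus = sum(vertex_distance(z, worst) for z, worst in zip(row, fnis))
        coefficients.append(d_minus / (d_plus + d_minus))
    return coefficients


def rank_by_closeness(coefficients):
    """Alternative indices ordered from highest to lowest CofC."""
    return sorted(range(len(coefficients)), key=lambda g: coefficients[g], reverse=True)


# Two hypothetical alternatives on one criterion, sitting exactly on the ideals.
demo = [[(0.2, 0.2, 0.2)], [(0.8, 0.8, 0.8)]]
cofc = closeness_coefficients(demo, [(0.8, 0.8, 0.8)], [(0.2, 0.2, 0.2)])
ranking = rank_by_closeness(cofc)
```

An alternative that coincides with the FPIS gets a coefficient of 1 and one that coincides with the FNIS gets 0, so values near 1 indicate the preferred solutions.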

Proposed method

The current study is based on a hybrid fuzzy AHP–TOPSIS approach. It consists of three phases. Phase 1 is about identifying and finalizing the barriers and solutions of IRL (explained in Sect. Literature review). Phase 2 is about the fuzzy AHP that is used to calculate the weights for each barrier/problem that is associated with IRL (results are mentioned in Sect. Fuzzy analytical hierarchical process experimental results). Phase 3 targets the fuzzy TOPSIS approach that is used to rank the solutions/alternatives for the identified problems (results are mentioned in Sect. Fuzzy TOPSIS experimental results). In the proposed method, some key assumptions have been taken into consideration regarding the experts’ evaluation:

- TFNs are used to formalize the experts’ evaluations, because a pairwise comparison matrix and a fuzzy evaluation matrix are involved in the phases of the proposed method.

- Any subjective evaluation process is inherently imprecise and vague. All experts’ evaluations are therefore affected by uncertainty and ambiguity, since experts have different levels of cognitive vagueness (based on their experience and knowledge). This is the reason for using the fuzzy approach with TFNs, so that uncertainty and ambiguity can be handled better.

- No external conditions affect the uncertainty, because the experts are confident about their evaluations in the phases of the proposed method; there is no need for more complex fuzzy tools such as type-2 fuzzy sets or neutrosophic sets.

There are other methods for multi-criteria decision-making (MCDM), such as interpretive structural modeling (ISM), elimination and choice expressing reality (ELECTRE), and the analytic network process (ANP), but these methods take considerable computation time and do not capture experts' judgments as precisely as fuzzy AHP. Therefore, for better decision-making, fuzzy AHP has been used and integrated with TOPSIS [79]. The fuzzy-based approach is more suitable for handling the uncertainty, ambiguity, and imprecision of the experts' linguistic inputs. For these reasons, the hybrid fuzzy AHP–TOPSIS approach has been preferred and implemented in the current study to rank the solutions identified for IRL problems.

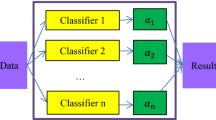

Figure 1 shows the architectural schematization of the proposed method. In the first phase, the literature and the decision group play a key role in finalizing the problems and solutions of IRL. The decision group consists of experts from the software industry, academia, and startups. At the end of the first phase, eight problems of IRL and eight solutions that can overcome them have been finalized. Most importantly, the decision hierarchy is structured in this phase, as shown in Fig. 2. In the second phase, the fuzzy AHP approach is implemented. The fundamental step in this approach is to create a pairwise matrix from experts’ opinions.

Linguistic terms are used to define the relative importance of the identified criteria (IRL problems) with respect to one another, and these terms are then mapped to fuzzy sets so that the experts' opinions are represented faithfully. TFNs are one of the most popular ways of representing experts' opinions. In the proposed method, they are represented by three letters, u, v, and w, which stand for the minimum possible value, the middle value, and the maximum possible value, respectively. After this fundamental step, the fuzzy synthetic criteria values, the degrees of possibility, and the normalized weights are computed. These normalized weights are the input to the third and final phase. The identified IRL problems can also be ranked according to the computed normalized weights: the problem with the highest weight has the highest priority and the problem with the lowest weight has the lowest priority. In the third phase, the fuzzy TOPSIS approach is implemented. The fundamental step in this approach is to create a fuzzy evaluation matrix from experts’ opinions; an aggregated fuzzy evaluation matrix is then created, followed by a normalized fuzzy evaluation matrix and a weighted normalized fuzzy evaluation matrix. Next, the FPIS and FNIS are computed, along with the distance of each solution from them. Finally, the closeness coefficient is calculated and used to rank the solutions based on the identified weights of the IRL problems. The top priority is given to the solution ranked 1, followed by 2, and so on. The pseudocode schematization of the proposed approach is mentioned below.

The decision hierarchy for overcoming barriers/problems of IRL implementation is mentioned in Fig. 2. It consists of three levels where level 1 states the overall goal (overcoming barriers/problems of IRL implementation). Level 2 (Barrier criteria) focuses on the identified barriers/problems of IRL implementation and Level 3 (Solution Alternatives) focuses on the identified solutions that can be used for achieving the overall goal of the successful implementation of IRL.

Experimental results

Fuzzy analytical hierarchical process experimental results

To implement fuzzy AHP for rating the IRL problems, first, the linguistic scale should be finalized which is shown in Table 3. After the finalization of the linguistic scale, the TFNs’ decision matrix for IRL problems is made using experts’ opinions. Table 4 shows the decision matrix that has been made using one expert opinion. Table 5 shows the aggregated decision matrix that has been made using 15 experts’ opinions. Out of these, six are software developers of industries, two are project managers who have used IRL in their projects, two are software engineers, three are academic professors, and two are directors of startups.

After computing aggregated decision matrix, fuzzy synthetic extent values (CVs) for all IRL problems are calculated for all fuzzy synthetic criteria (Cs), as shown in Table 6. After performing this, compute the degree of possibility and a minimum degree of possibility for convex fuzzy numbers (see Table 7).

The last step is to compute the fuzzy weight and normalized weight using Eqs. (12) and (13). After figuring out the normalized weights, the IRL problems are prioritized. The highest weight is ranked highest and the lowest weight is ranked the lowest among IRL problems as can be seen in Table 8.

The results show that the most heavily weighted IRL problem is ‘Lack of robust reward functions’ and the least weighted IRL problem is ‘inaccurate inferences’. To tackle the different problems, different solutions have been identified, and they are ranked using the fuzzy TOPSIS approach.

Performance analysis metrics

Many performance metrics are used to evaluate the fuzzy AHP model. These are mean, mean absolute error (MAE), root-mean-squared error (RMSE), mean-squared error (MSE), and relative error. The lower the value of MSE, RMSE, and MAE, the better the model fits and the higher the relative accuracy, the better the model fits. All the performance analysis metrics of FAHP are calculated using the normalized weights of Table 8. The performance metrics calculations for the current study are mentioned below using Eqs. (24) to (29)

(a) Mean

In the corresponding equation, \(\overline{x}\) is the arithmetic mean, n is the number of weight vectors, and \(x_{i}\) is the result of the ith measurement.

(b) Mean absolute error (MAE)

It is the average magnitude of the difference between the observations and their mean. A low value of this metric indicates a good model fit.

In the corresponding equation, \(\overline{x}\) is the arithmetic mean, n is the number of weight vectors, and \(x_{i}\) is the magnitude of the ith observation.

(c) Mean-squared error (MSE)

It is the average squared difference between the estimated values and the actual value. A lower value indicates a better model fit.

In the corresponding equation, \(\overline{x}\) is the arithmetic mean, n is the number of weight vectors, and \(x_{i}\) is the magnitude of the ith observation.

(d) Root-mean-squared error (RMSE)

It is the square root of the MSE and is a widely used measure of model error for quantitative data.

In the corresponding equation, \(\overline{x}\) is the arithmetic mean, n is the number of weight vectors, and \(x_{i}\) is the magnitude of the ith observation.

(e) Relative accuracy

It measures the relative accuracy of the model. A higher value indicates a better model fit.

In the corresponding equation, \(\overline{x}\) is the arithmetic mean, n is the number of weight vectors, and \(x_{i}\) is the magnitude of the ith observation.
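The first four metrics can be sketched as follows. This is a hedged illustration: it assumes the usual definitions taken about the arithmetic mean, with division by n, which matches the verbal descriptions above but may differ from the exact form of the paper's Eqs. (24) to (29). Relative accuracy is left out because its formula cannot be recovered from the text. The function name and the sample weights are hypothetical and are not the values of Table 8.

```python
import math
from typing import Dict, List


def weight_metrics(weights: List[float]) -> Dict[str, float]:
    """Mean, MAE, MSE and RMSE of a weight vector, measured about its mean."""
    n = len(weights)
    mean = sum(weights) / n
    mae = sum(abs(w - mean) for w in weights) / n    # average absolute deviation
    mse = sum((w - mean) ** 2 for w in weights) / n  # average squared deviation
    rmse = math.sqrt(mse)                            # square root of the MSE
    return {"mean": mean, "mae": mae, "mse": mse, "rmse": rmse}


# Hypothetical normalized weights that sum to 1 (illustration only).
sample_weights = [0.1, 0.2, 0.3, 0.4]
metrics = weight_metrics(sample_weights)
print(metrics)
```

Because normalized weights sum to 1, their mean is always 1/n, so the three error metrics describe how unevenly the weight is spread across the problems.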

Fuzzy TOPSIS experimental results

The experts’ inputs are used to build the fuzzy evaluation matrix from the linguistic variables listed in Table 3, which are then transformed into the corresponding TFNs given in the same table. The current study rated eight solutions against eight problems with the help of 15 experts. Table 9 shows the fuzzy evaluation matrix created by expert 1 only, whereas Table 10 shows the aggregated fuzzy evaluation matrix of all 15 experts. The aggregation rule is given in Eq. (14).

The current study aims to reduce the problems, so the problems are treated as cost criteria. A normalized fuzzy matrix (see Table 11) is built using Eqs. (15) to (17), and a weighted normalized matrix (see Table 12) is built using Eq. (18).

Because all the problems are treated as cost criteria in the current study, FPIS (\(A^{*}\)) and FNIS (\(A^{-}\)) are set to \(z^{*}\) = (0, 0, 0) and \(z^{-}\) = (1, 1, 1), respectively, for each problem. The distances (\(d_{g}^{+}\), \(d_{g}^{-}\)) of each solution from FPIS and FNIS are computed with the help of Eqs. (21) and (22). For example, the distances d (A1, \(A^{*}\)) and d (A1, \(A^{-}\)) of S-IRL1 from FPIS and FNIS are calculated as follows:

Using these distances, the CofC of each solution is calculated using Eq. (23).

Table 13 shows d (A1, \(A^{*}\)), d (A1, \(A^{-}\)), and \(CofC_{g}\) for all solutions, which are ranked in descending order of CofC.
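The distance and closeness-coefficient steps can be illustrated with a short Python sketch. It assumes the vertex method for the distance between two TFNs and the usual closeness coefficient \(d^{-}/(d^{+} + d^{-})\); whether these match Eqs. (21) to (23) exactly is an assumption, since the equations are not reproduced here. The ideal points (0, 0, 0) and (1, 1, 1) follow the text above. The function names and the weighted normalized values are hypothetical and are not taken from Table 12.

```python
import math
from typing import List, Tuple

TFN = Tuple[float, float, float]  # triangular fuzzy number (l, m, u)


def vertex_distance(a: TFN, b: TFN) -> float:
    """Vertex-method distance between two triangular fuzzy numbers."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3)


def closeness(
    weighted: List[List[TFN]],
    fpis: TFN = (0.0, 0.0, 0.0),  # ideal point for cost criteria, as in the text
    fnis: TFN = (1.0, 1.0, 1.0),  # anti-ideal point for cost criteria
) -> List[Tuple[float, float, float]]:
    """Return (d_plus, d_minus, CofC) for every alternative (one row each)."""
    results = []
    for row in weighted:
        d_plus = sum(vertex_distance(cell, fpis) for cell in row)
        d_minus = sum(vertex_distance(cell, fnis) for cell in row)
        results.append((d_plus, d_minus, d_minus / (d_plus + d_minus)))
    return results


# Hypothetical weighted normalized matrix: two solutions, two cost criteria.
weighted_matrix = [
    [(0.1, 0.2, 0.3), (0.0, 0.1, 0.2)],
    [(0.4, 0.5, 0.6), (0.3, 0.4, 0.5)],
]
scores = closeness(weighted_matrix)
order = sorted(range(len(scores)), key=lambda g: scores[g][2], reverse=True)
print(scores, order)
```

A solution whose weighted ratings lie closer to (0, 0, 0) obtains a larger CofC and therefore a higher rank, which is the ordering principle behind Table 13.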

Results and discussion

Hybrid fuzzy systems have been used in the past for understanding customer behavior [80], heart disease diagnosis [81], and diabetes prediction [81]. Fuzzy logic classifiers combined with reinforcement learning have been used for the development of an intelligent classifier for power transformer faults [82], for handling continuous inputs and learning continuous actions [83], for small lung nodule detection [84], for finding appropriate pedagogical content [85], for robotic soccer games [44, 45], for a water-blasting system for ship hull corrosion cleaning [86], and for classification of diabetes [73]. All of the above studies used fuzzy systems to identify issues and solutions in their respective domains, but none of them prioritized the problems or solutions identified. The same holds for IRL. Therefore, the current study uses a hybrid fuzzy AHP–TOPSIS approach for the first time, as a basic approach for identifying the important problems and solutions for successful implementation of the IRL technique in real life by ranking all the solutions. Eight problems and eight solutions are identified from the literature. Fuzzy AHP is used to obtain the weights of the problems, and fuzzy TOPSIS uses these weights to rank the solutions.

The weights computed by fuzzy AHP are compared to rank the IRL problems. The ranking is P-IRL1 > P-IRL7 > P-IRL6 > P-IRL4 > P-IRL3 > P-IRL2 > P-IRL8 > P-IRL5, as shown in Table 8. The major concern identified for the accurate implementation of IRL is ‘Lack of robust reward functions’. The next ranked problem is ‘Lack of scalability with large problem size’. It tells IRL users to put more emphasis on scalability with large problem sizes instead of concentrating only on solving problems. ‘Sensitivity to correctness of prior knowledge’ is ranked third; it tells experts to be aware of the feature functions and transition functions of the Markov decision process, so that human judgment is not too subjective when taking decisions. ‘Ill-posed problems’ is ranked fourth; it states that uncertainty is involved in obtaining the reward functions, or in other words, that loss functions are not introduced extensively in classical IRL algorithms. ‘Stochastic policy’ is ranked fifth: if the experts’ behavior is deterministic in nature, then a mixed or dynamic policy can be inaccurate due to its stochastic nature. ‘Imperfect and noisy inputs’ is ranked sixth: if the inputs in a real-life implementation of IRL are incorrect and noisy, the implementation fails. ‘Lack of reliability’ is ranked seventh; it means that the classical IRL algorithm learns inappropriately from reward functions for failed and successful demonstrations. The last ranked category is ‘Inaccurate inferences’; it states that generalizing the learned information about states and actions to other initial states is difficult, or in other words, that approximation errors in the reward functions are greater.

For the effective implementation of IRL, the fuzzy TOPSIS approach has been used to rank the solutions based on the closeness coefficient, as shown in Table 13. The ranking of the solutions is S-IRL2 > S-IRL8 > S-IRL3 > S-IRL7 > S-IRL4 > S-IRL1 > S-IRL6 > S-IRL5. The results show that the least important solution is ‘Inculcate risk-awareness factors in IRL algorithms’ and the most important solution is ‘Supports optimal policy and rewards functions along with stochastic transition models’. The current study reveals that the use of an optimal policy and the learning of reward functions are necessary for successful IRL implementation.

Conclusion and future scope

At present, the demand for autonomous agents is high, as they can do mundane and complex tasks without the help of other sources. IRL is used in autonomous agents, for example driverless cars. IRL is mostly used in the automotive industry, the textile industry, automatic transport systems, supply chain management, etc. IRL also suffers from many problems. Eight major problems have been identified from the literature, and different solutions have been proposed for mitigating them. It is very difficult to implement all the solutions together, so the solutions need to be prioritized during decision-making. The current study has used a hybrid fuzzy AHP–TOPSIS approach for ranking the solutions. The fuzzy AHP method is used to obtain the weights of the IRL problems, whereas the fuzzy TOPSIS method ranks the solutions for the implementation of IRL in a real-life scenario. The important thing to note is that the computed weights are used in determining the rank of the solutions. The opinions of fifteen experts are used to compute the weights and rank the solutions. Results show that the most significant problem in IRL is ‘Lack of robust reward functions’ with a weight of 0.180. The least significant problem in real-life IRL implementation is ‘Inaccurate inferences’ with a weight of 0.063; it mainly indicates that human judgments fail to generalize outputs. The most significant solution is ‘Supports optimal policy and rewards functions along with stochastic transition models’ and the least significant solution is ‘Inculcate risk-awareness factors in IRL algorithms’, with CofC values of 0.967156846 and 0.94958721, respectively. These solutions help industries in their decision-making, so that projects succeed. The findings of the current study provide the following insights for future research:

- The fuzzy TOPSIS results can be influenced by the distance measures; approaches such as weighted correlation coefficients [87] and picture fuzzy information measures [88] should be used to improve the reliability of the results.

- In the future, other multi-criteria and multi-facet decision-making methods, such as fuzzy VIKOR, fuzzy PROMETHEE, or fuzzy ELECTRE, can be used, and the results can be compared with the results of the current study.

- The experts' experience and knowledge play an important role in the results of the current study, so there is a chance of bias in the results. This can be minimized or eliminated in the future by adding more experts to the study.

- In the future, case studies can be done in industries that use IRL in their processes.

- As technology is upgraded in the future, other barriers, as well as solutions, may be identified [89] and can be included in future studies.

- IRL methods should be studied in the future in the context of multi-view learning and transfer learning approaches [56].

References

Zhifei S, Joo EM (2012) A survey of inverse reinforcement learning techniques. Int J Intell Comput Cybern 5(3):293–311. https://doi.org/10.1108/17563781211255862

Argall BD, Chernova S, Veloso M, Browning B (2009) A survey of robot learning from demonstration. Robot Auton Syst 57(5):469–483. https://doi.org/10.1016/j.robot.2008.10.024

Datta P, Sharma B (2017) A survey on IoT architectures, protocols, security and smart city based applications. In: 8th IEEE International Conference on Computing, Communications and Networking Technologies, ICCCNT 2017, 1–5. https://doi.org/10.1109/ICCCNT.2017.8203943

Schaal S (1999) Is imitation learning the route to humanoid robots? Trends Cogn Sci 3(6):97–114. https://doi.org/10.1007/978-3-319-15425-1_6

Jara-Ettinger J (2019) Theory of mind as inverse reinforcement learning. Curr Opin Behav Sci 29:105–110. https://doi.org/10.1016/j.cobeha.2019.04.010

Russell S (1998) Learning agents for uncertain environments. In: Proceedings of the Annual ACM Conference on Computational Learning Theory, 101–103.

Ng AY, Russell S (2000) Algorithms for inverse reinforcement learning. ICML 1:2–9

Dimitrakakis C, Rothkopf CA (2012) Bayesian multitask inverse reinforcement learning. Eur Worksh Reinforce Learn. https://doi.org/10.1007/978-3-642-29946-9_27

Imani M, Ghoreishi SF (2021) Scalable inverse reinforcement learning through multifidelity bayesian optimization. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2021.3051012

Ni T, Sikchi H, Wang Y, Gupta T, Lee L, Eysenbach B (2020) f-IRL: inverse reinforcement learning via state marginal matching. ArXiv: 1–25

Rhinehart N, Kitani KM (2020) First-person activity forecasting from video with online inverse reinforcement learning. IEEE Trans Pattern Anal Mach Intell 42(2):304–317. https://doi.org/10.1109/TPAMI.2018.2873794

Majumdar A, Singh S, Mandlekar A, Pavone M (2017) Risk-sensitive inverse reinforcement learning via coherent risk models. Robot Sci Syst 16: 117–126. https://doi.org/10.15607/rss.2017.xiii.069

Pirotta M, Restelli M (2016) Inverse reinforcement learning through policy gradient minimization. In: 30th AAAI Conference on Artificial Intelligence, AAAI 2016, 1993–1999.

Qureshi AH, Boots B, Yip MC (2018) Adversarial imitation via variational inverse reinforcement learning, 1–14. arXiv:1809.06404

Shiarlis K, Messias J, Whiteson S (2016) Inverse reinforcement learning from Failure. In: Proceedings of the International Conference on Autonomous Agents and Multiagent Systems, 1060–1068

You C, Lu J, Filev D, Tsiotras P (2019) Advanced planning for autonomous vehicles using reinforcement learning and deep inverse reinforcement learning. Robot Auton Syst 114:1–18. https://doi.org/10.1016/j.robot.2019.01.003

Kangasrääsiö A, Kaski S (2018) Inverse reinforcement learning from summary data. Mach Learn 107: 1517–1535. https://doi.org/10.1007/s10994-018-5730-4

Shao Z, Er MJ (2012) A review of inverse reinforcement learning theory and recent advances. IEEE Cong Evolut Comput CEC 2012:10–15. https://doi.org/10.1109/CEC.2012.6256507

Lopes M, Melo F, Montesano L (2009) Active learning for reward estimation in inverse reinforcement learning. Jt Eur Conf Mach Learn Knowl Discov Datab. https://doi.org/10.1007/978-3-642-04174-7_3

Brown DS, Goo W, Nagarajan P, Niekum S (2019) Extrapolating beyond suboptimal demonstrations via inverse reinforcement learning from observations. In: International Conference on Machine Learning, 783–792.

Ziebart BD, Maas A, Bagnell JA, Dey AK (2008) Maximum entropy inverse reinforcement learning. In: Proceedings of the Twenty-Third AAAI Conference on Artificial Intelligence, 1433–1438. https://doi.org/10.1007/978-3-662-49390-8_64

Ziebart BD, Bagnell JA, Dey AK (2010) Modeling interaction via the principle of maximum causal entropy. In: Proceedings, 27th International Conference on Machine Learning, 1255–1262.

Arora S, Doshi P (2021) A survey of inverse reinforcement learning: challenges, methods and progress. Artif Intell 103500:1–48. https://doi.org/10.1016/j.artint.2021.103500

Fu J, Luo K, Levine S (2017) Learning robust rewards with adversarial inverse reinforcement learning. Arxiv 1710:1–15

Asri LE, Piot B, Geist M, Laroche R, Pietquin O (2016) Score-based inverse reinforcement learning. In: International Conference on Autonomous Agents and Multiagent Systems, 1–9.

Lee SJ, Popović Z (2010) Learning behavior styles with inverse reinforcement learning. ACM Trans Graph (TOG) 29(4):1–7. https://doi.org/10.1145/1778765.1778859

Klein E, Piot B, Geist M, Pietquin O (2013) A cascaded supervised learning approach to inverse reinforcement learning. Jt Eur Conf Mach Learn Knowl Discov Datab. https://doi.org/10.1007/978-3-642-40988-2_1

Rothkopf CA, Dimitrakakis C (2011) Preference elicitation and inverse reinforcement learning. Jt Eur Conf Mach Learn Knowl Discov Datab. https://doi.org/10.1007/978-3-642-23808-6_3

Sharifzadeh S, Chiotellis I, Triebel R, Cremers D (2016) Learning to drive using inverse reinforcement learning and deep Q-networks. arXiv preprint http://arxiv.org/abs/1612.03653

Šošic A, KhudaBukhsh WR, Zoubir AM, Koeppl H (2017) Inverse reinforcement learning in swarm systems. In: Proceedings of the International Joint Conference on Autonomous Agents and Multiagent Systems, AAMAS, 1413–1420.

Lin JL, Hwang KS, Shi H, Pan W (2020) An ensemble method for inverse reinforcement learning. Inf Sci 512:518–532. https://doi.org/10.1016/j.ins.2019.09.066

Piot B, Geist M, Pietquin O (2017) Bridging the gap between imitation learning and inverse reinforcement learning. IEEE Trans Neural Netw Learn Syst 28(8):1814–1826. https://doi.org/10.1109/TNNLS.2016.2543000

Adams S, Cody T, Beling PA (2022) A survey of inverse reinforcement learning. In: Artificial Intelligence Review. Springer, Netherlands. https://doi.org/10.1007/s10462-021-10108-x

Hadfield-Menell D, Dragan A, Abbeel P, Russell S (2016) Cooperative inverse reinforcement learning. In: 30th Conference on Neural Information Processing Systems (NIPS), 3916–3924

Wulfmeier M, Ondruska P, Posner I (2015) Deep inverse reinforcement learning, 1–9. arXiv preprint arXiv:1507.04888

Herman M, Gindele T, Wagner J, Schmitt F, Burgard W (2016) Inverse reinforcement learning with simultaneous estimation of rewards and dynamics. Artif Intell Stat 51:102–110

Boularias A, Kober J, Peters J (2011) Relative entropy inverse reinforcement learning. In: JMLR Workshop and Conference Proceedings, 182–189.

Vasquez D, Okal B, Arras KO (2014) Inverse Reinforcement Learning algorithms and features for robot navigation in crowds: an experimental comparison. IEEE Int Conf Intell Robot Syst. https://doi.org/10.1109/IROS.2014.6942731

Castro PS, Li S, Zhang D (2019) Inverse reinforcement learning with multiple ranked experts. arXiv:1907.13411

Bloem M, Bambos N (2014) Infinite time horizon maximum causal entropy inverse reinforcement learning. IEEE Conf Decis Control. https://doi.org/10.1109/TAC.2017.2775960

Self R, Abudia M, Kamalapurkar R (2020) Online inverse reinforcement learning for systems with disturbances. ArXiv. https://doi.org/10.23919/ACC45564.2020.9147344

Memarian F, Xu Z, Wu B, Wen M, Topcu U (2020) Active task-inference-guided deep inverse reinforcement learning. Proc IEEE Conf Decis Control. https://doi.org/10.1109/CDC42340.2020.9304190

Nguyen QP, Low KH, Jaillet P (2015) Inverse reinforcement learning with locally consistent reward functions. Adv Neural Inform Process Syst: 1747–1755

Shi H, Lin Z, Hwang KS, Yang S, Chen J (2018) An adaptive strategy selection method with reinforcement learning for robotic soccer games. IEEE Access 6:8376–8386. https://doi.org/10.1109/ACCESS.2018.2808266

Shi Z, Chen X, Qiu X, Huang X (2018) Toward diverse text generation with inverse reinforcement learning. IJCAI Int Joint Conf Artif Intell. https://doi.org/10.24963/ijcai.2018/606

Choi J, Kim KE (2015) Nonparametric bayesian inverse reinforcement learning for multiple reward functions. IEEE Trans Cybern 45(4):793–805. https://doi.org/10.1109/TCYB.2014.2336867

Brown DS, Cui Y, Niekum S (2018) Risk-aware active inverse reinforcement learning. In: Conference on Robot Learning, 362–372.

Abbeel P, Coates A, Quigley M, Ng A (2006) An application of reinforcement learning to aerobatic helicopter flight. Advances in Neural Information Processing Systems, 1–8.

Inga J, Köpf F, Flad M, H S (2017) Individual human behavior identification using an inverse reinforcement learning method. IEEE Int Conf Syst Man Cybern (SMC): 99–104.

Kim B, Pineau J (2016) Socially adaptive path planning in human environments using inverse reinforcement. Int J Soc Robot 8(1):51–66

Pflueger M, Agha A, Gaurav S (2019) Rover-IRL: inverse reinforcement learning with soft value. IEEE Robot Autom Lett 4(2):1387–1394

Kuderer M, Gulati SBW (2015) Learning driving styles for autonomous vehicles from demonstration. In: IEEE International Conference on Robotics and Automation (ICRA), 2641–2646.

Kuderer M, Kretzschmar HBW (2013) Teaching mobile robots to cooperatively navigate in populated environments. In: IEEE/RSJ International Conference on Intelligent Robots and Systems, 3138–3143.

Pfeiffer M, Schwesinger U, Sommer H, Galceran ESR (2016) Predicting actions to act predictably: cooperative partial motion planning with maximum entropy models. In: IEEE/RJS International Conference on Intelligent Robots and Systems (IROS), 2096–2101.

Ziebart BD, Ratliff N, Gallagher G, Mertz C, Peterson K, Bagnell JA, Hebert M, Dey AK, Srinivasa S (2009) Planning-based prediction for pedestrians. In: IEEE/RSJ International IEEE Conference Intelligent Robots and Systems, 3931–3936

Chinaei HR, Chaib-Draa B (2014) Dialogue POMDP components (part II): learning the reward function. Int J Speech Technol 17(4):325–340

Scobee DR, Royo VR, Tomlin CJ, S S. (2018) Haptic assistance via inverse reinforcement learning. IEEE Int Conf Syst Man Cybern (SMC): 1510–1517

Chandramohan S, Geist M, Lefevre FPO (2011) User simulation in dialogue systems using inverse reinforcement learning. Interspeech, 1025–1028.

Chinaei HR, Chaib-Draa B (2014) Dialogue POMDP components (part I): learning states and observations. Int J Speech Technol 17(4):309–323

Elnaggar MBN (2018) An IRL approach for cyber-physical attack intention prediction and recovery. In: IEEE Annual American Control Conference (ACC), 222–227.

Yang SY, Qiao Q, Beling PA, Scherer WT, Kirilenko A (2015) Gaussian process-based algorithmic trading strategy identification. Quant Finan 15(10):1683–1703

Yang SY, Yu YAS (2018) An investor sentiment reward-based trading system using Gaussian inverse reinforcement learning algorithm. Expert Syst Appl 114:388–401

Saaty TL (1980) The analytic hierarchy process. McGraw-Hill, New York. https://doi.org/10.1016/0305-0483(87)90016-8

Lyu H-M, Sun W-J, Shen S-L, Zhou AN (2020) Risk assessment using a new consulting process in fuzzy AHP. J Constr Eng Manag 146(3):04019112. https://doi.org/10.1061/(asce)co.1943-7862.0001757

Li M, Wang H, Wang D, Shao Z, He S (2020) Risk assessment of gas explosion in coal mines based on fuzzy AHP and bayesian network. Process Saf Environ Prot 135:207–218. https://doi.org/10.1016/j.psep.2020.01.003

Wang Y, Xu L, Solangi YA (2020) Strategic renewable energy resources selection for Pakistan: based on SWOT-fuzzy AHP approach. Sustain Cities Soc 52:1–14. https://doi.org/10.1016/j.scs.2019.101861

Zavadskas EK, Turskis Z, Stević Ž, Mardani A (2020) Modelling procedure for the selection of steel pipe supplier by applying the fuzzy AHP method. Oper Res Eng Sci Theory Appl 3(2):39–53. https://doi.org/10.31181/oresta2003034z

Büyüközkan G, Havle CA, Feyzioğlu O (2020) A new digital service quality model and its strategic analysis in aviation industry using interval-valued intuitionistic fuzzy AHP. J Air Transp Manag. https://doi.org/10.1016/j.jairtraman.2020.101817

Raut R, Cheikhrouhou N, Kharat M (2017) Sustainability in the banking industry: a strategic multi-criterion analysis. Bus Strateg Environ 26(4):550–568. https://doi.org/10.1002/bse.1946

Somsuk N, Laosirihongthong T (2014) A fuzzy AHP to prioritize enabling factors for strategic management of university business incubators: resource-based view. Technol Forecast Soc Chang 85:198–210. https://doi.org/10.1016/j.techfore.2013.08.007

Chang DY (1996) Applications of the extent analysis method on fuzzy AHP. Eur J Oper Res 95(3):649–655. https://doi.org/10.1016/0377-2217(95)00300-2

Kaya İ, Çolak M, Terzi F (2019) A comprehensive review of fuzzy multi criteria decision making methodologies for energy policy making. Energy Strategy Rev 24 (May 2017): 207–228. https://doi.org/10.1016/j.esr.2019.03.003

Aamir KM, Sarfraz L, Ramzan M, Bilal M, Shafi J, Attique M (2021) A fuzzy rule-based system for classification of diabetes. Sensors 21(23):8095. https://doi.org/10.3390/s21238095

Hwang CL, Yoon K (1981) Multiple attribute decision making. Springer

Baykasoǧlu A, Kaplanoglu V, Durmuşoglu ZDU, Şahin C (2013) Integrating fuzzy DEMATEL and fuzzy hierarchical TOPSIS methods for truck selection. Expert Syst Appl 40(3):899–907. https://doi.org/10.1016/j.eswa.2012.05.046

Patil SK, Kant R (2014) A fuzzy AHP–TOPSIS framework for ranking the solutions of knowledge management adoption in supply chain to overcome its barriers. Expert Syst Appl 41(2):679–693. https://doi.org/10.1016/j.eswa.2013.07.093

Rampasso IS, Siqueira RG, Anholon R, Silva D, Quelhas OLG, Leal Filho W, Brandli LL (2019) Some of the challenges in implementing education for sustainable development: perspectives from Brazilian engineering students. Int J Sust Dev World 26(4):367–376. https://doi.org/10.1080/13504509.2019.1570981

Senthil S, Srirangacharyulu B, Ramesh A (2014) A robust hybrid multi-criteria decision making methodology for contractor evaluation and selection in third-party reverse logistics. Expert Syst Appl 41(1):50–58. https://doi.org/10.1016/j.eswa.2013.07.010

Prakash C, Barua MK (2015) Integration of AHP–TOPSIS method for prioritizing the solutions of reverse logistics adoption to overcome its barriers under fuzzy environment. J Manuf Syst 37:599–615. https://doi.org/10.1016/j.jmsy.2015.03.001

Asghar MZ, Subhan F, Ahmad H, Khan WZ, Hakak S, Gadekallu TR, Alazab M (2021) Senti-eSystem: a sentiment-based eSystem-using hybridized fuzzy and deep neural network for measuring customer satisfaction. Softw Pract Exp 51(3):571–594. https://doi.org/10.1002/spe.2853

Reddy GT, Reddy MPK, Lakshmanna K, Rajput DS, Kaluri R, Srivastava G (2020) Hybrid genetic algorithm and a fuzzy logic classifier for heart disease diagnosis. Evol Intel 13(2):185–196. https://doi.org/10.1007/s12065-019-00327-1

Malik H, Sharma R, Mishra S (2020) Fuzzy reinforcement learning based intelligent classifier for power transformer faults. ISA Trans 101:390–398. https://doi.org/10.1016/j.isatra.2020.01.016

Chen G, Douch CIJ, Zhang M (2016) Accuracy-based learning classifier systems for multistep reinforcement learning: a fuzzy logic approach to handling continuous inputs and learning continuous actions. IEEE Trans Evol Comput 20(6):953–971. https://doi.org/10.1109/TEVC.2016.2560139

Capizzi G, Sciuto GL, Napoli C, Polap D, Wozniak M (2020) Small lung nodules detection based on fuzzy-logic and probabilistic neural network with bioinspired reinforcement learning. IEEE Trans Fuzzy Syst 28(6):1178–1189. https://doi.org/10.1109/TFUZZ.2019.2952831

Madani Y, Ezzikouri H, Erritali M, Hssina B (2020) Finding optimal pedagogical content in an adaptive e-learning platform using a new recommendation approach and reinforcement learning. J Ambient Intell Humaniz Comput 11(10):3921–3936. https://doi.org/10.1007/s12652-019-01627-1

Le AV, Kyaw PT, Veerajagadheswar P, Muthugala MAVJ, Elara MR, Kumar M, Khanh Nhan NH (2021) Reinforcement learning-based optimal complete water-blasting for autonomous ship hull corrosion cleaning system. Ocean Eng 220(December):108477. https://doi.org/10.1016/j.oceaneng.2020.108477

Joshi R, Kumar S (2022) A novel VIKOR approach based on weighted correlation coefficients and picture fuzzy information for multicriteria decision making. Granul Comput 7:323–336. https://doi.org/10.1007/s41066-021-00267-1

Joshi R (2022) A new picture fuzzy information measure based on Tsallis–Havrda–Charvat concept with applications in presaging poll outcome. Comput Appl Math 39(2):1–24

Diabat A, Khreishah A, Kannan G, Panikar V, Gunasekaran A (2013) Benchmarking the interactions among barriers in third-party logistics implementation: an ISM approach. Benchmark Int J 20(6):805–824

Ethics declarations

Conflict of interest

The authors report no potential conflict of interest, financial or non-financial. On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kukreja, V. Hybrid fuzzy AHP–TOPSIS approach to prioritizing solutions for inverse reinforcement learning. Complex Intell. Syst. 9, 493–513 (2023). https://doi.org/10.1007/s40747-022-00807-5

DOI: https://doi.org/10.1007/s40747-022-00807-5