Abstract

This paper presents a novel random vector functional link (RVFL) formulation, called the 1-norm RVFL (1N RVFL) network, for solving binary classification problems. The solution to the optimization problem of 1N RVFL is obtained by solving its exterior dual penalty problem using a Newton technique. The 1-norm makes the model robust and yields sparse outputs, which is the fundamental advantage of this model. A sparse output means that most of the elements in the output matrix are zero. Hence, the decision function can be obtained with fewer hidden nodes than the conventional RVFL model requires. 1N RVFL produces a classifier that is based on a smaller number of input features; in other words, the method suppresses neurons in the hidden layer. Statistical analyses have been carried out on several real-world benchmark datasets. The proposed 1N RVFL is used with two activation functions, viz., ReLU and sine. The classification accuracies of 1N RVFL are compared with those of the extreme learning machine (ELM), kernel ridge regression (KRR), RVFL, kernel RVFL (K-RVFL) and generalized Lagrangian twin RVFL (GLTRVFL) networks. The comparable or better accuracy obtained in the experiments indicates the effectiveness and usability of 1N RVFL for binary classification problems.


Introduction

Neural networks are powerful models for data mining and information engineering; they learn from data to construct feature-based classification models and nonlinear prediction models. Training neural networks (NNs) requires optimizing a highly non-convex landscape with many local minima and saddle points. Kernel-based methods such as the support vector machine (SVM) [7, 11, 17], on the other hand, lead to well-posed convex problems, which is one of the main reasons for their success over the last few decades. Kernel methods, however, fail to scale effectively to large datasets, because they must compute pairwise kernel values over the complete dataset. The main benefit of a simple NN architecture is that it can reach an acceptable solution in one-hundredth (or even one-millionth) of the time taken by larger, more complex models while maintaining high optimality [37]. A single hidden layer feed-forward NN (SLFN) can approximate a nonlinear function with arbitrary precision [26], and SLFNs have been widely applied to classification problems [19, 22, 24, 33]. One of the most popular categories of NNs is feed-forward NNs with random weights, popularized by Pao et al. [32], who introduced the random vector functional link (RVFL) network [5, 8, 30]. In RVFL, the inputs are connected directly to the outputs, which leads to excellent generalization performance, and the weights between the input and hidden layers are generated randomly [16, 45]. Zhang and Suganthan [45] extended this line of work with a comprehensive evaluation of RVFL networks. They tested RVFL with several popular activation functions and found that the hardlim and sign activation functions significantly degrade its performance.
They also suggested that the bias used in RVFL can be tuned as a problem-specific configuration. Li et al. [25] proposed fast algorithms for SVM+, which follows the learning using privileged information (LUPI) [40] paradigm, together with a kernelized version for nonlinear data. They also used the QP solver of MATLAB R2014b as a starting point to solve the QP problem and proposed MAT-SVM+. Inspired by the work of Li et al. [25], Zhang and Yang [48] embedded LUPI in RVFL and proposed the RVFL+ model; they further applied the kernel trick to obtain kernel RVFL+ (KRVFL+). Both RVFL+ and KRVFL+ are analogous to teacher–student interaction [39] in the human learning process. Xu et al. [43] proposed a kernel-based RVFL model (K-RVFL) for learning spatiotemporal dynamic processes; because the kernel captures complex nonlinear structure, K-RVFL handles such complex processes better. Kernel ridge regression (KRR) [36] has attracted the attention of researchers over the last few decades because of its non-iterative learning approach. It has been widely used to solve a variety of classification [23, 35] and regression [29, 42] problems. KRR is computationally fast because it adopts equality constraints rather than inequality constraints and therefore only solves a system of linear equations. Several variants of KRR have been proposed to improve its classification performance, for example by Zhang et al. [46], Chang et al. [6] and Zhang and Suganthan [47]. More recently, the extreme learning machine (ELM) [21, 27] has become increasingly popular because of its high generalization performance at low computational cost [2, 14, 15, 18, 20, 38]. Peng et al. [34] proposed a discriminative graph regularized extreme learning machine (GELM) to improve the classification ability of ELM. Because GELM has a closed-form solution, its outputs can be obtained efficiently.
In theory, conventional ELM does not guarantee convergence; correct convergence results have been established by Igelnik and Pao [22] and Wang and Wan [41]. RVFL has proved to be a very efficient and powerful model for classification and regression tasks. Despite its high computational efficiency and generalization ability, RVFL has been observed to require many hidden nodes to achieve satisfactory performance, because its weights and hidden-layer biases are selected randomly. Recently, 1-norm regularization has gained tremendous popularity among researchers [13, 44] because it results in sparse outputs. A sparse output means that most of the elements in the output matrix are zero; hence, the decision surface can be obtained with fewer hidden nodes than conventional models require. Such sparse models are also easy to implement [1]. An influential contribution in this direction is the 1-norm SVM developed by Mangasarian [28], whose solution is computed by solving its exterior penalty problem as an unconstrained convex minimization problem with the Newton–Armijo algorithm. Inspired by the work of Mangasarian [28] and the recent literature on 1-norm regularization, this paper proposes a novel 1-norm random vector functional link (1N RVFL) network for binary classification. The key contributions of this work are:

1. A novel 1N RVFL classifier is proposed with two different activation functions.

2. Owing to the L1 norm in the proposed model, sparse solution vectors are generated, which is a very useful property for classification problems.

3. 1N RVFL produces a classifier that is based on a smaller number of input features; in other words, the method reduces the number of neurons required in the hidden layer.

4. Experiments on real-world datasets demonstrate the classification ability of 1N RVFL compared with other models.

The advantages and limitations of a few related classifiers are tabulated in Table 1.

The remainder of the paper is structured as follows. Section “Mathematical background” gives a brief mathematical description of a few related models, viz., ELM, KRR, RVFL and GLTRVFL. Section “Proposed 1-norm random vector functional link (1N RVFL)” presents the formulation of the proposed 1N RVFL model. Section “Simulation and analysis of results” reports the numerical experiments and the comparative analysis with ELM, KRR, RVFL, K-RVFL and GLTRVFL. Section “Conclusion” concludes the paper.

Mathematical background

Consider \(x_{i} \in R^{n}\), for \(i = 1,2, \ldots ,m\), to be an \(n\)-dimensional input vector, and let \(D\) be the \(m \times n\) matrix of training examples. Let \(y_{i} \in \{ + 1, - 1\}\), for \(i = 1,2, \ldots ,m\), be the class labels of the rows of \(D\); then \(y = (y_{1} , \ldots ,y_{m} )^{t}\) is the vector of class labels and the corresponding diagonal matrix is \(Y = {\text{diag}}(y)\). Let the matrices \(A\) and \(B\), of dimensions \(m_{1} \times n\) and \(m_{2} \times n\) respectively, contain the data samples with class labels \(+ 1\) and \(- 1\).

The ELM model

Let \(\beta = (\beta_{1} , \ldots ,\beta_{T} )^{t}\), where \(T = L + n\), be the weight vector (WV) to the output neuron, with \(L\) the number of hidden-layer nodes. Let \(h_{l} (x_{i} ) = G(a_{l} ,b,x_{i} )\), for \(l = 1, \ldots ,L\) and \(i = 1,2, \ldots ,m\), be the output of the activation function \(G( \cdot , \cdot , \cdot )\) of the \(l{\text{th}}\) hidden-layer neuron for the \(i{\text{th}}\) training sample, where \(a_{l} = (a_{l1} , \ldots ,a_{ln} )^{t}\) is the WV and \(b\) is the bias of the hidden-layer nodes.

The output equation for ELM [21] can be expressed as:

The hidden-layer output matrix can be formulated as \(H = \left[ {h_{1} (D)\; \ldots \;h_{L} (D)} \right]\), i.e.,

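\(\beta\) represents the solution in the primal space and can be computed as \(\beta = H^{\dag } y\),

where \( H^{\dag }\) is the Moore–Penrose inverse of \(H\). The final classifier of ELM may then be expressed as \(f(x) = {\text{sign}}\left( {\sum\nolimits_{l = 1}^{L} {\beta_{l} h_{l} (x)} } \right)\).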
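The ELM steps described in this subsection can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: it assumes ReLU hidden nodes, input weights and per-node biases drawn uniformly from \([-1, 1]\), and labels in \(\{+1, -1\}\); the function names `elm_fit` and `elm_predict` are hypothetical.

```python
import numpy as np


def relu(z):
    # ReLU activation, applied element-wise
    return np.maximum(0.0, z)


def elm_fit(D, y, L=100, seed=0):
    """Basic ELM: random hidden layer, output weights via the Moore-Penrose pseudoinverse."""
    rng = np.random.default_rng(seed)
    n = D.shape[1]
    a = rng.uniform(-1.0, 1.0, size=(n, L))  # input weights a_l, one column per hidden node
    b = rng.uniform(-1.0, 1.0, size=L)       # hidden biases (one per node, an assumption)
    H = relu(D @ a + b)                      # hidden-layer output matrix, m x L
    beta = np.linalg.pinv(H) @ y             # beta = H^dagger y
    return a, b, beta


def elm_predict(X, a, b, beta):
    # f(x) = sign(h(x) beta); a score of exactly 0 is mapped to +1
    return np.where(relu(X @ a + b) @ beta >= 0, 1, -1)
```

Nothing in the hidden layer is trained; the only learned quantity is \(\beta\), obtained in one linear-algebra step.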

The KRR model

The primal problem of KRR [36] may be defined as:

where \(w\) is the unknown weight vector, \(e\) is the vector of ones, \(\psi\) is the slack variable vector, \(y\) is the output vector and \(\varphi (x)\) is the feature mapping of the input \(x\).

The Lagrangian of (4) may be formulated as:

where \(\ell\) is the vector of Lagrange multipliers.

Setting the partial derivatives of (5) to zero and applying the KKT conditions, the dual form is obtained as:

where \(I\) is the identity matrix with the appropriate dimension.

For a new input example \(x \in R^{n}\), the KRR classifier may be generated as:
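A minimal NumPy sketch of Gaussian-kernel KRR for classification is given below. It uses the standard dual solution \(\ell = (K + I/C)^{-1} y\) of ridge regression in dual variables, without a bias term; the exact scaling in the paper's own dual form may differ. The kernel parametrization \(\exp(-\|x - z\|^2 / (2\mu^2))\) and the function names are assumptions made for illustration.

```python
import numpy as np


def gaussian_kernel(X1, X2, mu):
    # K_ij = exp(-||x_i - z_j||^2 / (2 mu^2)); this use of mu is an assumption
    sq = (np.sum(X1 ** 2, axis=1)[:, None] + np.sum(X2 ** 2, axis=1)[None, :]
          - 2.0 * X1 @ X2.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * mu ** 2))


def krr_fit(D, y, C=1.0, mu=1.0):
    # Dual solution ell = (K + I / C)^{-1} y (standard KRR dual, no bias term)
    K = gaussian_kernel(D, D, mu)
    return np.linalg.solve(K + np.eye(len(y)) / C, y)


def krr_predict(X, D, ell, mu=1.0):
    # f(x) = sign(sum_i ell_i k(x, x_i))
    return np.where(gaussian_kernel(X, D, mu) @ ell >= 0, 1, -1)
```

The single call to `np.linalg.solve` is the "system of linear equations" that makes KRR non-iterative; its \(m \times m\) kernel matrix is also why kernel methods scale poorly with the number of samples.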

The RVFL model

RVFL [31] is a type of SLFN that randomly generates the weights to the hidden layer nodes and fixes them without tuning them iteratively.

The regularized version of RVFL can be expressed as

where \(\Omega = [H\quad D]\). Differentiating (7) with respect to \(\beta\) and equating the result to zero, we obtain:

For any new instance \(x\), the classification function of RVFL can be generated as:

where, \(h(x) = \left[ {h_{1} (x) \ldots h_{L} (x)} \right]\).
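The RVFL training step can be sketched as follows, assuming that (7) is the usual ridge-regularized least-squares problem \(\min_{\beta} \frac{C}{2}\|\Omega\beta - y\|^2 + \frac{1}{2}\|\beta\|^2\), whose closed form is \(\beta = (\Omega^{t}\Omega + I/C)^{-1}\Omega^{t}y\). The sketch also assumes ReLU hidden nodes with uniformly drawn weights and biases, and it appends the raw input \(x\) to \(h(x)\) at prediction time, since \(\beta\) has \(L + n\) entries. All names are hypothetical.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def rvfl_fit(D, y, L=100, C=1.0, seed=0):
    """Regularized RVFL: random hidden layer plus direct input-output links."""
    rng = np.random.default_rng(seed)
    n = D.shape[1]
    a = rng.uniform(-1.0, 1.0, size=(n, L))  # random, untuned input weights
    b = rng.uniform(-1.0, 1.0, size=L)       # random, untuned hidden biases
    Omega = np.hstack([relu(D @ a + b), D])  # Omega = [H D], m x (L + n)
    T = Omega.shape[1]
    # Ridge solution: beta = (Omega^t Omega + I / C)^{-1} Omega^t y
    beta = np.linalg.solve(Omega.T @ Omega + np.eye(T) / C, Omega.T @ y)
    return a, b, beta


def rvfl_predict(X, a, b, beta):
    # f(x) = sign([h(x) x] beta): hidden features and raw inputs together
    return np.where(np.hstack([relu(X @ a + b), X]) @ beta >= 0, 1, -1)
```

The direct links are simply the extra columns \(D\) in \(\Omega\). They are what distinguish this sketch from the ELM one.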

The GLTRVFL model

Recently, Borah and Gupta [3] proposed a generalized Lagrangian twin RVFL model called GLTRVFL. The primal problems of GLTRVFL are:

and

The dual formulation of (10) and (11) may be expressed in generalized form as:

After forming the duals of (10) and (11), the Newton iterative technique is applied, which can be expressed as:

and

Proposed 1-norm random vector functional link (1N RVFL)

The 1-norm RVFL with absolute loss is proposed in this section as a classification model that yields a robust representation. Motivated by the study of Mangasarian [28], the proposed 1N RVFL model is solved by treating its dual exterior penalty problem as an unconstrained convex minimization problem and applying the Newton–Armijo algorithm to it. The resulting iterative solution for the binary classification problem is simple and converges rapidly.

Consider the regularized formulation of RVFL as:

where \(C > 0\) is the trade-off parameter. Following the same procedure as Mangasarian [28] and Balasundaram and Gupta [1], Eq. (14) can be rewritten in linear programming form as:

Substituting (15) into (14), the linear programming RVFL in primal form is obtained as:

where \(d_{l}\) and \(d_{m}\) are vectors of ones of dimensions \(l\) and \(m\), respectively. Problem (16) can be solved directly with the optimization toolbox in MATLAB.

However, this reformulation increases the number of unknowns and constraints, and thus the problem size. It is therefore recommended to solve instead its dual exterior penalty problem, an unconstrained minimization problem in \(m\) variables, whose solution can be obtained by the Newton–Armijo technique.
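To illustrate how the problem reduces to \(m\) unconstrained variables, the following worked derivation rests on an assumption that is not taken from the paper: that (14) has the absolute-loss form \(\min_{\beta} \|\beta\|_1 + C\|\Omega\beta - y\|_1\) with \(\Omega = [H\quad D]\), and that \(\beta = r - s\) and the residual \(\Omega\beta - y = \xi_1 - \xi_2\) are split into non-negative parts. The paper's own (15) and (16) may use a different but equivalent splitting. Applying Mangasarian's exterior penalty construction to the dual of the resulting equality-constrained linear program gives:

```latex
\begin{aligned}
&\min_{r,s,\xi_1,\xi_2 \ge 0}\; e^{t}(r + s) + C\,e^{t}(\xi_1 + \xi_2)
  \quad\text{s.t.}\quad \Omega(r - s) - \xi_1 + \xi_2 = y,\\[4pt]
&\min_{w \in R^{m}}\; L(w) = -\phi\, y^{t}w
  + \tfrac{1}{2}\big\|\big(|\Omega^{t}w| - e\big)_{+}\big\|^{2}
  + \tfrac{1}{2}\big\|\big(|w| - Ce\big)_{+}\big\|^{2},\\[4pt]
&\beta = r - s = \tfrac{1}{\phi}\,\operatorname{sign}(\Omega^{t}w)\odot\big(|\Omega^{t}w| - e\big)_{+},\\[4pt]
&\nabla L(w) = -\phi\, y + \Omega\big[\operatorname{sign}(\Omega^{t}w)\odot(|\Omega^{t}w| - e)_{+}\big]
  + \operatorname{sign}(w)\odot(|w| - Ce)_{+},\\[4pt]
&\partial^{2}L(w) = \Omega\,\operatorname{diag}\big((|\Omega^{t}w| - e)_{*}\big)\,\Omega^{t}
  + \operatorname{diag}\big((|w| - Ce)_{*}\big).
\end{aligned}
```

Here \(|\cdot|\), \((\cdot)_{+} = \max(\cdot, 0)\), \(\operatorname{sign}\) and the step function \((\cdot)_{*}\) (1 for positive entries, 0 otherwise) act componentwise, \(e\) is a vector of ones of the appropriate size and \(\odot\) is the componentwise product. The two penalty terms for \(\pm\Omega^{t}w - e\), and likewise for \(\pm w - Ce\), merge into single absolute-value terms because at most one of each pair is positive in any component. For sufficiently small \(\phi\) the recovered \(\beta\) solves the linear program exactly, in the spirit of Proposition 1. The expression for \(\beta\) also shows where the sparsity comes from: \(\beta_j = 0\) whenever \(|\Omega_j^{t}w| \le 1\).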

Proposition 1 ([1, 28]): Suppose that the primal linear programming problem

is solvable, where \(e \in R^{n} ,\) \(f \in R^{l} ,\) \(P \in R^{m \times n} ,Q \in R^{m \times l} ,b \in R^{m} ,S \in R^{k \times n} ,{\rm N} \in R^{k \times l}\) and \(h \in R^{k}\).

Then the dual exterior penalty problem may be defined as:

Problem (18) is solvable for all \(\phi > 0\). Furthermore, there exists \(\overline{\phi } > 0\) such that, for every \(\phi \in (0,\overline{\phi }]\), any solution \((w,v)\) of (18) leads to:

Following Proposition 1, the dual penalty optimization problem [1] of (19) may be obtained as:

where \(\phi\) is the penalty parameter; (20) is solvable for all \(\phi > 0\). Additionally, there exists \(\overline{\phi } > 0\) such that, for any \(\phi \in (0,\overline{\phi }]\):

The unconstrained minimization problem (20) can be solved by the Newton–Armijo iterative technique.

Here \(\nabla L(w)\), the gradient of \(L( \cdot )\), is given by:

This gradient is not differentiable, so the ordinary Hessian of \(L( \cdot )\) does not exist. However, a generalized Hessian can be defined for \(w \in R^{m}\) as:

Equation (23) can equivalently be written as:

where \({\text{diag}}\) denotes a diagonal matrix. The generalized Hessian is useful for solving unconstrained optimization problems whose objective is once differentiable but not twice differentiable, and it leads to a unique solution.

However, \(\nabla^{2} L(w)\) is only positive semi-definite and may become ill-conditioned.

Remark 1

To avoid ill-conditioning of (25), a very small positive number \(\tau > 0\) multiplied by an identity matrix \(I\) of appropriate dimension is added to (25); that is, \(\nabla^{2} L + \tau I\) is used.

In this work, the optimization problem (24) is solved, for simplicity, using the Newton method without the Armijo step. That is, the iterate \(w^{i + 1}\) at the \((i + 1){\text{th}}\) iteration is obtained by solving

where \(i = 0,\;1\;, \ldots\)

The value of \(w\) is determined by repeating the iterative scheme (27) until convergence.
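The Newton iteration on the dual penalty function can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' MATLAB implementation, and it rests on an explicit assumption: that (14) is the absolute-loss problem \(\min_{\beta}\|\beta\|_1 + C\|\Omega\beta - y\|_1\) with \(\Omega = [H\quad D]\). Its dual exterior penalty function in \(w \in R^{m}\) is then taken to be \(L(w) = -\phi\, y^{t}w + \frac{1}{2}\|(|\Omega^{t}w| - e)_{+}\|^{2} + \frac{1}{2}\|(|w| - Ce)_{+}\|^{2}\), and the weights are recovered as \(\beta = \frac{1}{\phi}\operatorname{sign}(\Omega^{t}w)\odot(|\Omega^{t}w| - e)_{+}\). The generalized Hessian is regularized with \(\tau I\) as in Remark 1. Unlike the paper, which omits the Armijo step, the sketch keeps a simple Armijo backtracking line search for robustness. The function names and the default values of `C`, `phi`, `tau`, `tol` and `max_iter` are illustrative choices.

```python
import numpy as np


def _plus(z):
    # (z)_+ = max(z, 0), componentwise
    return np.maximum(z, 0.0)


def _step(z):
    # (z)_*: 1.0 where z > 0, else 0.0 (indicator used in the generalized Hessian)
    return (z > 0).astype(float)


def penalty_objective(w, Omega, y, C, phi):
    # L(w) = -phi y^t w + 1/2 ||(|Omega^t w| - e)_+||^2 + 1/2 ||(|w| - C e)_+||^2
    u = Omega.T @ w
    return (-phi * (y @ w)
            + 0.5 * np.sum(_plus(np.abs(u) - 1.0) ** 2)
            + 0.5 * np.sum(_plus(np.abs(w) - C) ** 2))


def one_norm_rvfl_newton(Omega, y, C=10.0, phi=1e-2, tau=1e-6,
                         tol=1e-8, max_iter=200):
    """Generalized Newton method with Armijo backtracking on the dual penalty L(w).

    Omega: m x T matrix [H D]; y: labels in {+1, -1}. Returns beta of length T.
    """
    m = Omega.shape[0]
    w = np.zeros(m)
    for _ in range(max_iter):
        u = Omega.T @ w
        grad = (-phi * y
                + Omega @ (np.sign(u) * _plus(np.abs(u) - 1.0))
                + np.sign(w) * _plus(np.abs(w) - C))
        if np.linalg.norm(grad) < tol:
            break
        # Generalized Hessian Omega diag((|u| - e)_*) Omega^t + diag((|w| - Ce)_*)
        hess = ((Omega * _step(np.abs(u) - 1.0)) @ Omega.T
                + np.diag(_step(np.abs(w) - C)))
        # Newton direction with the tau * I regularization of Remark 1
        d = -np.linalg.solve(hess + tau * np.eye(m), grad)
        # Armijo backtracking: halve the step until sufficient decrease
        f0 = penalty_objective(w, Omega, y, C, phi)
        slope = grad @ d
        lam = 1.0
        while (penalty_objective(w + lam * d, Omega, y, C, phi)
               > f0 + 1e-4 * lam * slope) and lam > 1e-12:
            lam *= 0.5
        w = w + lam * d
    u = Omega.T @ w
    # Primal recovery: beta_j is exactly 0 whenever |Omega_j^t w| <= 1
    return np.sign(u) * _plus(np.abs(u) - 1.0) / phi
```

Because \((\cdot)_{+}\) returns exact zeros, every column of \(\Omega\) with \(|\Omega_j^{t}w| \le 1\) receives a zero weight. Counting the non-zero entries of the returned vector therefore gives the number of contributing nodes directly.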

Simulation and analysis of results

This section investigates the performance of the 1N RVFL model in comparison with ELM, KRR, RVFL, K-RVFL and GLTRVFL on classification problems from several real-world benchmark datasets. All simulations are performed in the MATLAB 2008b environment on a desktop computer with 4 GB of RAM, 64-bit Windows 7 OS and an Intel i5 processor running at 3.20 GHz. No external optimization toolbox was required to solve the optimization problems of the reported models.

Zhang and Suganthan [45] suggested that the hardlim and sign activation functions generally degrade the overall performance of the RVFL algorithm. To select the best activation function, we performed experiments on a few real-world datasets with different activation functions for the proposed 1N RVFL, viz., hardlim, multiquadric, radial basis function (RBF), triangular basis (Tribas), sigmoid, sine and ReLU. The average ranks are shown in Table 2. Based on them, the best and second-best activation functions, ReLU and sine, were selected and used in the experiments for ELM, RVFL, GLTRVFL and the proposed 1N RVFL. For an input vector \(x\), the two activation functions are defined as:

(a) ReLU: \(\phi (x) = \max (0,x)\)

(b) Sine: \(\phi (x) = \sin (x)\)

where \(\phi (x)\) represents the output function for the input sample \(x\).
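The two activation functions, and the way a hidden-node output \(h_l(x) = G(a_l, b, x)\) is formed from them, can be sketched as follows. The affine argument \(a_l^{t}x + b_l\) is the usual RVFL/ELM convention and is assumed here. The helper `hidden_layer` is hypothetical.

```python
import numpy as np


def relu(x):
    # ReLU: phi(x) = max(0, x), element-wise
    return np.maximum(0.0, x)


def sine(x):
    # Sine: phi(x) = sin(x), element-wise
    return np.sin(x)


def hidden_layer(X, a, b, activation):
    # h_l(x) = activation(a_l^t x + b_l) for every sample (row) and hidden node (column)
    return activation(X @ a + b)
```

Swapping `relu` for `sine` changes nothing else in the model, which is why both variants share the same training procedure.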

The tests were performed using the tenfold cross-validation technique: the sample is split into 10 equal subsamples, each subsample is used once for testing while the remaining nine are used for training, and the procedure is repeated 10 times so that every subsample serves as test data exactly once [4]. For computational convenience, the input data are split into two parts: 30% of the data are used for training and the remaining 70% for testing. To validate the efficacy of the proposed 1N RVFL model, its performance was compared with that of the ELM, KRR, RVFL, K-RVFL and GLTRVFL models on several real-world benchmark datasets.
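Both splitting procedures described in this paragraph can be sketched in NumPy. The text does not specify how the folds are drawn or how the 30/70 split is randomized, so the random permutation, the seed and the function names below are assumptions.

```python
import numpy as np


def kfold_indices(m, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation over m samples."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(m), k)  # k (nearly) equal subsamples
    for i in range(k):
        # fold i is the test set once; the other k - 1 folds form the training set
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]


def holdout_split(m, train_frac=0.3, seed=0):
    """Random split with train_frac of the samples for training and the rest for testing."""
    perm = np.random.default_rng(seed).permutation(m)
    n_train = int(round(train_frac * m))
    return perm[:n_train], perm[n_train:]
```

Over the 10 folds every index appears in exactly one test set, which is the defining property of k-fold cross-validation.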

Since a large number of hidden nodes increases the computational time of ELM [1], the optimum value of the parameter \(L\) for ELM, KRR, RVFL and GLTRVFL is selected from the set {20, 50, 100, 200, 500, 1000}. For KRR, RVFL, K-RVFL and GLTRVFL, the optimum value of \(C\) is selected from \(\{ 10^{ - 5} , \ldots ,10^{5} \}\). The Gaussian kernel is used to implement KRR, with the kernel parameter \(\mu\) chosen from \(\{ 2^{ - 5} , \ldots ,2^{5} \}\). In the proposed 1N RVFL, the optimum values of the two parameters \(C\) and \(L\) are chosen from \(\{ 10^{ - 5} , \ldots ,10^{5} \}\) and \(\{ 20,50,100,200,500,1000,2000\}\), respectively. The statistics of the datasets used in the experiments are tabulated in Table 3, where \(S\) indicates the number of samples and \(N\) the number of attributes.
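The parameter grids in this paragraph can be written down explicitly. The sketch assumes that the ranges for \(C\) and \(\mu\) step through integer exponents from \(-5\) to \(5\), which the text does not state. The `evaluate` callback of the hypothetical `grid_search` helper stands for whatever score is maximized, for example the mean tenfold cross-validation accuracy.

```python
import itertools

# Assumed grids: integer exponents from -5 to 5 (11 values each)
C_GRID = [10.0 ** p for p in range(-5, 6)]
MU_GRID = [2.0 ** p for p in range(-5, 6)]           # Gaussian kernel parameter for KRR
L_GRID_1N_RVFL = [20, 50, 100, 200, 500, 1000, 2000]  # hidden nodes for 1N RVFL


def grid_search(evaluate, c_grid, l_grid):
    """Return the (C, L) pair that maximizes evaluate(C, L).

    `evaluate` is a hypothetical callback, e.g. the mean tenfold CV accuracy.
    """
    return max(itertools.product(c_grid, l_grid), key=lambda p: evaluate(*p))
```

Under this assumption the 1N RVFL search visits \(11 \times 7 = 77\) \((C, L)\) pairs per dataset, each requiring a full cross-validation run.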

All the experimental datasets are collected from the UCI machine learning repository [12]. The data are normalized before the numerical experiments using \(\overline{r}_{ij} = \frac{{r_{ij} - r_{j}^{\min } }}{{r_{j}^{\max } - r_{j}^{\min } }}\), where \(r_{j}^{\max } = \mathop {\max }\nolimits_{i = 1, \ldots ,m} (r_{ij} )\) and \(r_{j}^{\min } = \mathop {\min }\nolimits_{i = 1, \ldots ,m} (r_{ij} )\) denote the maximum and minimum values, respectively, of the \(j{\text{th}}\) attribute over all input data \(r_{i}\), and \(\overline{r}_{ij}\) is the normalized value of \(r_{ij}\).
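The min-max normalization formula can be sketched as a column-wise NumPy operation. The guard for constant attributes, where \(r_{j}^{\max} = r_{j}^{\min}\) and the formula would divide by zero, is an added safeguard that is not part of the original formula. The function name is hypothetical.

```python
import numpy as np


def min_max_normalize(R):
    """Scale each attribute (column) of R to [0, 1]: (r_ij - r_j^min) / (r_j^max - r_j^min)."""
    R = np.asarray(R, dtype=float)
    r_min = R.min(axis=0)  # r_j^min for every attribute j
    r_max = R.max(axis=0)  # r_j^max for every attribute j
    # Added safeguard: constant attributes are mapped to 0 instead of dividing by zero
    span = np.where(r_max > r_min, r_max - r_min, 1.0)
    return (R - r_min) / span
```

For example, the column \((1, 3, 2)^{t}\) becomes \((0, 1, 0.5)^{t}\).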

For all datasets, the optimum parameters are obtained using the tenfold cross-validation method. The numbers of training and testing samples, the optimum parameter values and the classification accuracies of the models are shown in Table 4. The comparable or better performance of 1N RVFL indicates the efficacy and applicability of the proposed model. Additionally, Table 5 lists, for each dataset, the rank of each reported classifier based on classification accuracy.

Friedman test with Nemenyi statistics for classifier comparison

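To compare the performance of the reported algorithms with the proposed algorithm, we perform the non-parametric Friedman test [9] using the average ranks of the models tabulated in Table 5. The lowest average rank, obtained by the proposed 1N RVFL sine, reflects the efficiency of the model. Under the null hypothesis that all models perform equally, the Friedman statistic and its \(F\)-distributed variant are obtained as:

\[\chi_{F}^{2} = \frac{12N}{k(k + 1)}\left[ {\sum\limits_{j = 1}^{k} {R_{j}^{2} } - \frac{{k(k + 1)^{2} }}{4}} \right],\qquad F_{F} = \frac{{(N - 1)\chi_{F}^{2} }}{{N(k - 1) - \chi_{F}^{2} }},\]

where \(R_{j}\) is the average rank of the \(j\)th model, \(k = 10\) is the number of models and \(N = 23\) is the number of datasets.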

\(F_{F}\) follows the \(F\)-distribution with \(((10 - 1),(10 - 1) \times (23 - 1)) = (9,198)\) degrees of freedom. The critical value (CV) of \(F(9,198)\) is 1.927 at the 5% level (and smaller at the stated level \(\alpha = 0.10\)), which is less than \(F_{F}\). Hence, the null hypothesis can be rejected. Next, we perform the Nemenyi test to compare the methods pairwise. Here, the critical difference (CD) is:

\[CD = q_{\alpha } \sqrt {\frac{k(k + 1)}{6N}} ,\]

where \(q_{\alpha }\) is the critical value of the Studentized range statistic divided by \(\sqrt 2\) [9].

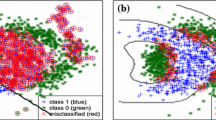

Figure 1 shows the statistical comparison of classifiers against each other based on the Nemenyi test. Groups of classifiers that are not significantly different (at \(\alpha = 0.10\)) are connected. One can notice from the figure that the 1N RVFL sine model is significantly better than ELM ReLU, ELM sine, KRR, RVFL ReLU, GLTRVFL ReLU, GLTRVFL sine and 1N RVFL ReLU models. However, despite showing a better average rank than K-RVFL, 1N RVFL sine is not significantly different from K-RVFL.
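The Friedman and Nemenyi computations of Demšar [9] used in this subsection can be sketched in NumPy as follows. The per-dataset accuracies are not reproduced here, so the sketch only shows the procedure. The helper names are hypothetical, and the tabulated value \(q_{0.10} = 2.920\) for \(k = 10\) is taken from Demšar's table. With \(k = 10\) and \(N = 23\) this value gives \(CD = 2.920\sqrt{110/138} \approx 2.61\). Two classifiers are judged significantly different when their average ranks differ by more than the CD.

```python
import numpy as np


def ranks_from_accuracies(acc):
    """acc: N x k accuracies (datasets x classifiers); rank 1 = best, ties get average ranks."""
    acc = np.asarray(acc, dtype=float)
    # better[n, i]: number of classifiers j with acc[n, j] > acc[n, i]
    better = (acc[:, None, :] > acc[:, :, None]).sum(axis=2)
    # equal[n, i]: number of classifiers j (including i itself) tied with i
    equal = (acc[:, None, :] == acc[:, :, None]).sum(axis=2)
    return 1.0 + better + (equal - 1) / 2.0


def friedman_ff(ranks):
    """Friedman chi^2_F and its F-distributed variant F_F from an N x k rank matrix."""
    N, k = ranks.shape
    R = ranks.mean(axis=0)  # average rank R_j of each classifier
    chi2 = 12.0 * N / (k * (k + 1)) * (np.sum(R ** 2) - k * (k + 1) ** 2 / 4.0)
    ff = (N - 1) * chi2 / (N * (k - 1) - chi2)  # ~ F(k - 1, (k - 1)(N - 1))
    return chi2, ff


def nemenyi_cd(k, N, q_alpha):
    """Nemenyi critical difference for k classifiers compared over N datasets."""
    return q_alpha * np.sqrt(k * (k + 1) / (6.0 * N))


def significantly_different(avg_ranks, cd):
    """Boolean matrix: True where two average ranks differ by more than cd."""
    R = np.asarray(avg_ranks, dtype=float)
    return np.abs(R[:, None] - R[None, :]) > cd
```

Note that \(F_F\) is undefined when every dataset ranks the classifiers identically, because the denominator \(N(k - 1) - \chi_F^2\) then becomes zero.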

The training and testing times (in seconds) of the reported models are shown in Tables 6 and 7, respectively. It can be observed that 1N RVFL is computationally less efficient than RVFL, despite showing better generalization performance.

Win/Tie/Loss test

The win/tie/loss statistical analysis [10] is applied to our best proposed model, 1N RVFL sine, to further validate its efficacy. The outcomes are shown in the last row of Table 4. For example, the second column compares 1N RVFL sine with ELM ReLU: 1N RVFL sine wins in 20 cases, ties in none and loses in 3. Similarly, the third column compares 1N RVFL sine with ELM sine: 1N RVFL sine wins in 18 cases, ties in none and loses in 5. Similar conclusions can be drawn from the other columns. As the last row of Table 4 shows, the proposed method has the best classification accuracy in most cases, indicating that 1N RVFL sine outperforms the other algorithms.
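The win/tie/loss counts can be computed from two columns of per-dataset accuracies with a sketch like the one below. The tie tolerance `tol` and both helper names are illustrative assumptions. `sign_test_p` computes an exact two-sided sign-test p-value [10] that simply ignores ties, which is one common convention.

```python
from math import comb

import numpy as np


def win_tie_loss(acc_ref, acc_other, tol=0.0):
    """Count datasets where acc_ref beats, ties with, or loses to acc_other."""
    diff = np.asarray(acc_ref, dtype=float) - np.asarray(acc_other, dtype=float)
    wins = int(np.sum(diff > tol))
    losses = int(np.sum(diff < -tol))
    return wins, diff.size - wins - losses, losses


def sign_test_p(wins, losses):
    """Exact two-sided sign-test p-value under H0: wins ~ Binomial(wins + losses, 1/2)."""
    n = wins + losses
    k = max(wins, losses)
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)
```

For example, 20 wins and 3 losses over 23 datasets give `sign_test_p(20, 3)` \(= 2^{-11} \approx 0.00049\).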

The parameter sensitivity plots of the proposed models are presented in Figs. 2, 3 and 4 for the Haberman, Vehicle 1 and Yeast3 datasets. Figs. 2, 3 and 4 show that the proposed models are not very sensitive to the user-defined parameters \(C\) and \(L\).

Moreover, to reveal the sparseness of the proposed model, the average number of actually contributing nodes is shown in Fig. 5; a lower number of non-zero components indicates a sparser model. The Ecoli2, New thyroid1 and Haberman datasets are used to check whether the proposed 1N RVFL solution method uses the least number of hidden nodes when determining the decision function, and the degree of sparseness is computed for each pair \((C,L)\). Fig. 5 shows that 1N RVFL leads to sparse solutions in all cases considered.
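The two sparsity measures discussed here can be computed from a learned output weight vector as sketched below. The sketch assumes that the weights are ordered as in \(\Omega = [H\quad D]\), so that the first \(L\) entries belong to hidden nodes. The threshold `tol` for treating a weight as zero and the function names are illustrative choices. Averaging over repeated runs or over the \((C, L)\) grid, as done for Fig. 5, is left out.

```python
import numpy as np


def contributing_hidden_nodes(beta, L, tol=1e-8):
    """Number of hidden nodes whose output weight is non-zero.

    Assumes beta is ordered as Omega = [H D], so its first L entries belong to hidden nodes.
    """
    return int(np.sum(np.abs(np.asarray(beta, dtype=float)[:L]) > tol))


def sparsity_degree(beta, tol=1e-8):
    """Fraction of output weights that are (numerically) zero."""
    beta = np.asarray(beta, dtype=float)
    return 1.0 - np.sum(np.abs(beta) > tol) / beta.size
```

For instance, a weight vector of length 7 with 3 non-zero entries has a sparsity degree of \(4/7\).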

Conclusion

In this work, a novel 1N RVFL has been proposed for solving binary classification problems. The solutions are obtained by solving the dual exterior penalty problem as an unconstrained minimization problem using a Newton technique. The basic advantage of the proposed 1N RVFL is that it produces many zero-valued coefficients, which results in a sparse output. The 1-norm RVFL is a robust model because the absolute loss grows only linearly with the residual, so extreme values do not dominate the objective; under the squared loss, by contrast, the cost of outliers grows quadratically. Extensive experiments on several classification datasets show that the proposed 1-norm RVFL performs better than ELM, KRR, RVFL, K-RVFL and GLTRVFL. The good generalization ability of the proposed model demonstrates its usability and efficiency. 1N RVFL is useful for classification problems with very high-dimensional input spaces. However, the major limitation of 1N RVFL is that it is computationally less efficient than RVFL. Future work will extend this model to multiclass classification problems using the one-versus-rest or one-versus-one procedure, which can be fruitful for real-life classification problems such as face classification, character recognition and plant species recognition.

References

Balasundaram S, Gupta D (2014) 1-Norm extreme learning machine for regression and multiclass classification using Newton method. Neurocomputing 128:4–14

Balasundaram S, Gupta D (2016) On optimization based extreme learning machine in primal for regression and classification by functional iterative method. Int J Mach Learn Cybern 7(5):707–728

Borah P, Gupta D (2019) Unconstrained convex minimization based implicit Lagrangian twin random vector Functional-link networks for binary classification (ULTRVFLC). Appl Soft Comput 81:105534

Brownlee J (2018) A gentle introduction to k-fold cross-validation. https://machinelearningmastery.com/k-fold-cross-validation/. Accessed 22 June 2021

Cao F, Ye H, Wang D (2015) A probabilistic learning algorithm for robust modeling using neural networks with random weights. Inf Sci 313:62–78

Chang X, Lin SB, Zhou DX (2017) Distributed semi-supervised learning with kernel ridge regression. J Mach Learn Res 18(1):1493–1514

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20(3):273–297

Dai W, Chen Q, Chu F, Ma X, Chai T (2017) Robust regularized random vector functional link network and its industrial application. IEEE Access 5:16162–16172

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Dixon WJ, Mood AM (1946) The statistical sign test. J Am Stat Assoc 41(236):557–566

Drucker H, Wu D, Vapnik VN (1999) Support vector machines for spam categorization. IEEE Trans Neural Netw 10(5):1048–1054

Dua D, Graff C (2019) UCI machine learning repository. http://archive.ics.uci.edu/ml. Accessed 12 July 2019

Floyd S, Warmuth M (1995) Sample compression, learnability, and the Vapnik-Chervonenkis dimension. Mach Learn 21(3):269–304

Gupta D, Hazarika BB, Berlin M (2020) Robust regularized extreme learning machine with asymmetric Huber loss function. Neural Comput Appl. https://doi.org/10.1007/s00521-020-04741-w

Gupta U, Gupta D (2021) Regularized based implicit Lagrangian twin extreme learning machine in primal for pattern classification. Int J Mach Learn Cybern 12(5):1311–1342

Hazarika BB, Gupta D (2020) Modelling and forecasting of COVID-19 spread using wavelet-coupled random vector functional link networks. Appl Soft Comput 96:106626

Hazarika BB, Gupta D (2021) Density-weighted support vector machines for binary class imbalance learning. Neural Comput Appl 33(9):4243–4261

Hazarika BB, Gupta D, Berlin M (2020) Modeling suspended sediment load in a river using extreme learning machine and twin support vector regression with wavelet conjunction. Environ Earth Sci 79:234

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2(5):359–366

Huang GB, Chen L, Siew CK (2006) Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans Neural Netw 17(4):879–892

Huang GB, Zhu QY, Siew CK (2005) Extreme learning machine: a new learning scheme of feedforward neural networks. Neural Netw 2:985–990

Igelnik B, Pao YH (1995) Stochastic choice of basis functions in adaptive function approximation and the functional-link net. IEEE Trans Neural Netw 6(6):1320–1329

Katuwal R, Suganthan PN (2019) Stacked autoencoder based deep random vector functional link neural network for classification. Appl Soft Comput 85:105854

Leshno M, Lin VY, Pinkus A, Schocken S (1993) Multilayer feedforward networks with a nonpolynomial activation function can approximate any function. Neural Netw 6(6):861–867

Li W, Dai D, Tan M, Xu D, Van Gool L (2016) Fast algorithms for linear and kernel svm+. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 2258–2266

Li R, Wang X, Lei L, Song Y (2018) L21-norm based loss function and regularization extreme learning machine. IEEE Access 7:6575–6586

Liu Q, He Q, Shi Z (2008) Extreme support vector machine classifier. In: Pacific-Asia conference on knowledge discovery and data mining, vol 1. Springer, Berlin, Heidelberg, pp 222–233

Mangasarian OL (2006) Exact 1-norm support vector machines via unconstrained convex differentiable minimization. J Mach Learn Res 7:1517–1530

Naik J, Satapathy P, Dash PK (2018) Short-term wind speed and wind power prediction using hybrid empirical mode decomposition and kernel ridge regression. Appl Soft Comput 70:1167–1188

Pao YH, Takefuji Y (1992) Functional-link net computing: theory, system architecture, and functionalities. Computer 25(5):76–79

Pao YH, Park GH, Sobajic DJ (1994) Learning and generalization characteristics of the random vector functional-link net. Neurocomputing 6(2):163–180

Pao YH, Phillips SM, Sobajic DJ (1992) Neural-net computing and the intelligent control of systems. Int J Control 56(2):263–289

Park J, Sandberg IW (1991) Universal approximation using radial-basis-function networks. Neural Comput 3(2):246–257

Peng Y, Wang S, Long X, Lu BL (2015) Discriminative graph regularized extreme learning machine and its application to face recognition. Neurocomputing 149:340–353

Rakesh K, Suganthan PN (2017) An ensemble of kernel ridge regression for multi-class classification. Proc Comput Sci 108:375–383

Saunders C, Gammerman A, Vovk V (1998) Ridge regression learning algorithm in dual variables. In: Proceedings of the 15th international conference on machine learning

Scardapane S, Wang D (2017) Randomness in neural networks: an overview. Wiley Interdiscipl Rev Data Min Knowl Discov 7(2):e1200

Sun Y, Li B, Yuan Y, Bi X, Zhao X, Wang G (2019) Big graph classification frameworks based on extreme learning machine. Neurocomputing 330:317–327

Vapnik V, Izmailov R (2015) Learning using privileged information: similarity control and knowledge transfer. J Mach Learn Res 16(1):2023–2049

Vapnik V, Vashist A (2009) A new learning paradigm: learning using privileged information. Neural Netw 22(5–6):544–557

Wang LP, Wan CR (2008) Comments on “The extreme learning machine”. IEEE Trans Neural Netw 19(8):1494–1495

Wu XH, Zhao PW (2020) Predicting nuclear masses with the kernel ridge regression. Phys Rev C 101(5):051301

Xu KK, Li HX, Yang HD (2017) Kernel-based random vector functional-link network for fast learning of spatiotemporal dynamic processes. IEEE Trans Syst Man Cybern Syst 49(5):1016–1026

Zhang L, Zhou W (2010) On the sparseness of 1-norm support vector machines. Neural Netw 23(3):373–385

Zhang L, Suganthan PN (2016) A comprehensive evaluation of random vector functional link networks. Inf Sci 367:1094–1105

Zhang Y, Duchi J, Wainwright M (2015) Divide and conquer kernel ridge regression: a distributed algorithm with minimax optimal rates. J Mach Learn Res 16(1):3299–3340

Zhang L, Suganthan PN (2017) Benchmarking ensemble classifiers with novel co-trained kernel ridge regression and random vector functional link ensembles [research frontier]. IEEE Comput Intell Mag 12(4):61–72

Zhang PB, Yang ZX (2020) A new learning paradigm for random vector functional-link network: RVFL+. Neural Netw 122:94–105


Ethics declarations

Conflicts of interest

The authors declare that they have no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hazarika, B.B., Gupta, D. 1-Norm random vector functional link networks for classification problems. Complex Intell. Syst. 8, 3505–3521 (2022). https://doi.org/10.1007/s40747-022-00668-y


DOI: https://doi.org/10.1007/s40747-022-00668-y