Abstract

Alongside the particular need to explain the behavior of black box artificial intelligence (AI) systems, there is a general need to explain the behavior of any type of AI-based system (the explainable AI, XAI) or complex system that integrates this type of technology, due to the importance of its economic, political or industrial rights impact. The unstoppable development of AI-based applications in sensitive areas has led to what could be seen, from a formal and philosophical point of view, as some sort of crisis in the foundations, for which it is necessary both to provide models of the fundamentals of explainability as well as to discuss the advantages and disadvantages of different proposals. The need for foundations is also linked to the permanent challenge that the notion of explainability represents in Philosophy of Science. The paper aims to elaborate a general theoretical framework to discuss foundational characteristics of explaining, as well as how solutions (events) would be justified (explained). The approach, epistemological in nature, is based on the phenomenological-based approach to complex systems reconstruction (which encompasses complex AI-based systems). The formalized perspective is close to ideas from argumentation and induction (as learning). The soundness and limitations of the approach are addressed from Knowledge representation and reasoning paradigm and, in particular, from Computational Logic point of view. With regard to the latter, the proposal is intertwined with several related notions of explanation coming from the Philosophy of Science.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Alongside the particular need to explain the behavior of black box Artificial Intelligence (AI) systems, there is a general need to explain the behavior of any type of AI-based system (the explainable AI, XAI) or complex system, that integrate this type of technology, due to the importance of its economic, political or industrial rights impact. In either case, the AI component might not be isolated, but possibly part of a broader treatment of information, or integrated into a broader Complex System (CS). The integration complicates the task of explaining systems behavior, which can be so complex that traditional systems theory thinking becomes insufficient. In areas where it is important (or even critical) to monitor the behavior of a system that includes AI-based modules, such as systems for Big Data (BD), Internet of Things, or Cloud Computing, some kind of specification of the behavior of such module or at least an explanation of the decisions taken will be needed. Please note that the need is not generalized, not every automatic or even AI-based system should be controlled. Still, there exist cases (for example when people’s rights are affected) in which the system must be subject to some form of certification, traceability, and assessment of both applicability and performance. Also, the XAI itself aims for the achievement of many goals different in nature (cf. [1]).

It is clear that not every system that makes an automated decision is AI, nor every AI is Machine Learning (ML), nor everything that is announced as AI is actually AI-based there is an evident hype on the subject and the term is often used as a marketing resource or for justifying business strategies. This hype around certain techniques does not refute the above-mentioned fact of the current ubiquity and power of AI-based systems, which makes it very common for them to be used to improve other systems. The latter inherits potential explainability problems from the former, in any of the three notions (levels) of Explainable AI (XAI) that actually exist, according Doran et al. [2]: opaque systems that offer no insight into its algorithmic mechanisms; interpretable systems where users can mathematically analyze its algorithmic mechanisms; and comprehensible systems that emit symbols enabling user-driven explanations of how a conclusion is reached.

The challenges that comes with Explainability (and its impact or relationship on diagnosis or debugging—verification—for example) are similar (of course, bridging the gap) to a crisis of foundations proper to the dizzying advance of a branch of knowledge (as occurred with the emergence of Set Theory in the last century, for example). A crisis that affects the validity of the results is usually confronted by going back to the analysis of the capacity of fundamental models of formalization and their properties. However, even sharing similarities with older challenges in Mathematics, there are some characteristics of XAI that make the problem somewhat transversal in nature. For example, its (social, psychological) inter-agent nature and its relationship with system correction.

Two natural strategies used to address the problem of XAI namely, using interpretable models or, if it is not possible, with post hoc explaining, by argumenting/explaining the result once it is obtained—may be insufficient. As argued by Miller [3], the creation of explainable intelligent systems requires addressing major issues. Firstly, Explainability can be a question interactive in nature between humans and the (automated or semi-automated) AI system; and secondly, it is peremptory the design of representations that support the articulation of the explanations is required. Tuning up more, Weld and Bansal [4] require a good explanation to be simple, easy to understand, and faithful (accurate), conveying the true cause of the event. Therefore a balancing problem between two demands (Miller’s versus Weld and Bansal’s ones) is faced.

The Knowledge Representation and Reasoning paradigm faces other issues that increase the complexity of the challenge. Mainly that one comes from the difficulty of translating some sort of logical explanation into a language that is acceptable and intelligible by the non-expert. In fact, we could consider that two elements need to be translated frequently, for example, when it is necessary to justify the decision taken (i.e. a complete argument). Of course the conclusion, but also the part of the Knowledge Base (KB) that has been used to entail it, that is, the initial hypotheses beside the inference links. As for the justification process itself, it should also be translated or adapted when it is not legible for the explainee (cf. [5]).

Working in a massive data framework can exacerbate the problem of Explaining. It implies dealing with problems with thousands of features (among other issues [6]), thus performance requirements are likely to force the adoption of methods that are difficult or impossible to explain in particular scenarios such as deep neural networks or enhanced decision forests [7]. It is often the case that post hoc explanations of events may be the only way to facilitate human understanding; such kind of explanations could be more easily accepted if some common human reasoning patterns, which are not direct variants of purely logical reasoning, are selected.

The use of surrogate modelsFootnote 1 could simplify certain aspects to make possible to explain the event/result to a wider audience as well as to discuss the reasons why concrete results are predicted or occur. The explanation could even go as far as using economic arguments such as the cost-effectiveness of the decision, to estimate the economic cost of each possibility (true/false or positive/negative outputs).

Naturally, it is not the only option. For example, other approach to research is based on treating AI instruments as biological entities, studying them from that point of view. For example, considering neural networks as experimental objects in biology, rather than as analytical and purely mathematical objects (see e.g. Bornstein’s [8]). The approach would involve, for example, analyzing the individual components, disturbing some inputs or sectioning parts in order to check their role and the elasticity of the model according to the ideas from Neuroscience.

The construction of models to support explanation—especially for ML-based intelligent systems—is a challenge that embraces several techniques ranging from logical causal models (as the already mentioned tradition of classical Expert Systems) to those specialized in deep learning (cf. [9]). To approach the issue from Knowledge Level (KL), the need to reconcile two levels of (representation and) reasoning (for the explainer and the explainee) through some kind of accepted, consensual models, becomes more pressing. What can Newell’s KL [10] paradigm offers to meet the challenge of explainability? Mainly explanation, interpretation, and justification, which are activities deeply rooted in AI research, as they provide reliability in systems with autonomy in the decision-making process. At KL, a first source of explanation comes from KL’s paradigm itself.

The idea of surrogation can bridge several levels of sophistication between predictions and experiments. By helping to understand the event or system, the surrogate model helps to interpret or justify decision making by drawing from data source. In the case at hand, the gap would be epistemological in nature. A KL-based surrogate model should bridge the response offered by the AI-based system and those that are acceptable to humans. For example, expert systems can be considered as surrogate models for human expertise. As well as trying to reproduce the expert’s results, they also map and make explicit both the knowledge used and acquired.

In KL paradigm, agents/systems work mainly with logical formulas or symbolic expressions that seek to represent information from the world, to obtain conclusions about it, by mechanized symbolic manipulations without any intended meaning. All that is needed is to specify what the agent knows or believes and what its goals are. Therefore, by considering the idea of KL-based surrogate models, the separation between the logical abstraction and the implementation details (including the implementation of the inference/decision process itself) has to be assumed. Newell’s proposal for KL was intended to clarify the elements that should be considered in order to formalize the idea of rational agent by separating the two modules for the purpose of studying without ambiguity two problems: Knowledge Representation and Reasoning (KRR) problems. Davis et al. [11] explicit as one of the roles of the Knowledge Representation that of surrogate, which serves as a substitute for the original, to reason about the world and infer the decision to be made. Of course it is not the only one. It is also useful to represent ontological commitments (including background knowledge) and serves as theories for reasoning. And the one that will interest us for the paper: an environment in which information can be organized and where agents can think. More elaborate theories on knowledge taxonomy such as Addis’ [12] (p. 46) further break down these roles.

Classic surrogate models are useful for the expert. However, their adaptation to face the problem of explainability can raise questions with differentiating nuances. On the one hand, the qualitative nature of human reasoning, its cognitive and functional limitations, make rational conceptual and qualitative explanations easier to follow and accept by non-specialists (clients/users, legislators, planners, product validation managers, etc.). Therefore, any numerical solution should be adapted to one of those if possible. On the other hand, this type of explanation is approximate, and tends to sacrifice rigor for the benefit of its understanding. It might be difficult to find a balance between the robustness of quantitative models and the local, qualitative, approximate, or even example-based ones that can be used in the KRR paradigm.

Focusing on the model that supports our explanation, similar questions to other situations where other kinds of surrogate models are considered arise [13]. Among those of interest for the paper, and thinking for explaining: What data and sample are used for the explanation provided by the surrogate model? What approximation method should be used? How is the surrogate model that produces the explanation? What if there’s a discrepancy between two different explanations? The soundness of a surrogate model designed to bring the theoretical model closer to an explainable one will, therefore, depend on an adequate response to each of the questions.

The considerations set out so far are intended to outline the challenge of formalization that XAI represents. From a broad perspective, factors of very different nature influence the treatment of explainability and make clear the need for foundational (and also interdisciplinary) analyses. A desirable objective would be to accommodate formal and philosophical discussions and proposals in the same model. Although it is utopian in its generality, any model that serves as a partial solution would be welcomed by the scientific community.

Motivation and aim of the paper

As it can be guessed from the introduction above, the paper discusses some foundational ideas from Knowledge Representation in AI for explainability, as well as how these could be studied from a formal point of view, despite their various edges and implications. The paper is devoted to the design of an epistemological model to support some of the notions discussed in the first part. The model is the basis for the different activities that epistemology applied to CS states: from the representation of systems from a qualitative point of view to the simulation and subsequent prediction about their future. The aim is to show that the model can be an useful tool for representing both the theoretical concepts involved in the process of explainability and for addressing associated problems. Ideas toward a formal epistemological model for foundations of explaining are discussed, as well as how related issues are represented in such a model, and philosophical considerations. We believe that it is necessary, to soundly outline a general vision of the document, to briefly review this model, which will be addressed in detail in the second part of the document.

Towards an abstract epistemological model

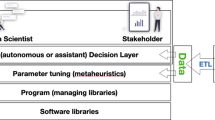

As stated above, the main motivation of the paper is to establish a common formal framework to serve as theoretical model that can be used to discuss foundational (formal, philosophical) issues in Explaining. Starting from a purely perceptual scenario, a model for specifying several notions is built. Due to the heterogeneity of the different types of CS (which include some of the more sophisticated AI-based systems), there are no general mechanisms for addressing issues related to systems that may be essentially different in nature and purpose. However, if an abstraction with the language of events and observations is made, it is possible to provide a mathematical model that allows illustrating, in a logical-mathematical language, some foundational properties. Partial versions of the framework have been presented by authors and applied in several case studies of a different nature [14,15,16,17,18]. However, until now we had not presented a unified vision of the model, as well as its contextualization within the problem of explanation. Its theoretical character aims to clarify some of the ideas and issues discussed in the paper, thus providing a framework and/or a set of guidelines to be followed for the modeling and qualitative analysis of CS and related problems. In Fig. 1, the proposal (which comes from the philosophical and computational study of CS) is depicted. For now, it is enough to say that the idea is to mathematize the universe of data that comes from perception and the need to contextualize the part of that information that will be transformed into ’knowledge’ to reason about the system.

Step 1: Extracting and fusing data

The model starts from raw data (right arrow in the top, Fig. 1), understanding this as everything that is perceived and analyzed, as raw information (at the bit level, if desired). In other words, information is accumulated with the maximum possible granularity and then fused into knowledge. This way we do not presuppose a conceptual representation and reasoning language beyond the one that comes from direct perception since, as argued, prefixing it would limit the vision of the events. Some questions arise immediately, as Why avoiding any high-level specification in the first instance? Why resorting to bit-level event observation (including input–output data) to indicate their basic properties? The answer is that it is not desired to limit the model by the selection of a language, beyond one denoting the very basic attributes of perceived signals. Also, we intend to offer a universal model for justification of notions and thus it is not to presuppose features inherited from a previous choice of language. The idea is that conceptual language emerges from the analysis and selection of characteristics, and its description (and further explanation) should be easy to accept by the observer.

Step 2: Contextual selection

Selecting the right context in which to find the explanation (fusing the associated information) is the basis that would serve to build perspectives using an algorithmic combination of the raw information. With this aim, Formal Concept Analysis (FCA) [19] has been chosen as a building tool (box, downright in Fig. 1). It will be used, for example, as an interface between the perceptions and the system that generates the response (explanation in our case), building what one might call event/observational concepts. Some concepts are spontaneously activated or made available for use solely based on a subject’s being in a certain perceptual state [20]. To avoid the so-called content inflation [20], the analysis should be delimited, thus a sub-context is selected from the global context of all perceptions (right down arrow, Fig. 1). In this way, the explaining synthesis is confined to what the agent believes or decides that plays some role in the explanation.

Step 3: Explaining: Machine Learning tools ideas are applied on the contextual selection and using FCA-based semantics.

Please note that the paper should not be considered a research work on FCA. Such theory is used as tool to formalize some of the notions through an abstraction of the epistemological reconstruction of CS from available observations. This type of approach allows the simulation, validation and prediction tasks to be carried out based on the model. Furthermore, justifications based on logical mechanisms with relatively less complex representations, enabling the use of traditional KRR techniques such as Inductive Logic Programming (ILP), will be presented. In short, a theoretical model is proposed, with a foundational vision, to design a surrogate meta-model based on the KL paradigm that allows formalizing and discussing some of the (philosophical and formal) notions about observability, justification, argumentation and explanation that we will be dealing with in the paper. It could be considered general methods that allow us evaluating the quality of the models (both their structure and the information they contain).

Structure of the paper

After the preceding introduction, the paper starts noting some observations about the role Bounded Rationality (BR) can play in solving the explanation problem. Thus, the factors that influence what the explanation under BR would be and how the explanation techniques could be applied in that case are analyzed, including the perspectivist nature of explanation inherited from BR’s role in the process, and their relationship with the so-called data curation (see “Perspectivism as explaining strategy”). “Towards a theoretical general model for explaining phenomena: background” is dedicated to motivating the choice of FCA as a tool free of specific semantic ties to analyze the problem, and to make the paper largely self-contained—the basic elements of FCA are introduced. “A toolbox for specifying explanations in complex systems” represents the formalization of the model and the introduction of a basic explanation format inspired by ideas from the Argumentation. From this notion, and combining it with others of Inductive Logic Programming, the different elements are represented. The foundational nature of the paper is highlighted through the analysis of the model’s behavior in the face of the extension of available information. The paper ends with some considerations about the proposal and future direction of the extension of the work.

Some notes on bounded rationality and explaining

Contextual selection (and possibly subsequent reasoning) will be guided by techniques that reduce the search space and decrease the complexity of reasoning. For example, those based on bounded rationality [21] may be useful. Although it is not the aim of this paper to break down such techniques (nor to specialize them to our specific model), we do believe it is interesting to comment on certain issues in this respect.

Following the analysis carried out in Lewis et al.’s paper [22], three types of explanations that could be produced by an explainer agent can be distinguished: Optimality explanations (no machine bounds), Ecological-optimality explanations (the environment where actions are decided upon responding to a given distribution but there are no limitations to the processing of the information), Bounded-optimality explanations (limitation to information processing, which reduces the repertoire of accessible solutions and the associated explanation, the policies), and lastly the Ecological-bounded-optimality explanations (in which both policy space and information processing are constrained). Therefore, to the extent that the expected behavior or the structure of the policy resulting from the analysis corresponds to the observed behavior, then the behavior has been explained in each context of explainability.

Formal epistemology, complex systems and BR

Data collection and processing are key daily tasks in CS with the aim of to obtain a reasonably accurate and concise approximation of the system and its behavior (that could lead us to a surrogate model), so that we can understand it to some extent [23]. If one wishes to explain the events that perceives, it is natural to consider an approach similar to formal epistemology. Moreover, if one wants to extract actionable knowledge, the natural approach would be the Applied Epistemology that KL would represent.

It has already justified that the use of techniques and ideas from BR provide interesting advantages, since they aim to obtain results similar to those humans conclude. An adequate choice of key features and their specification is a first step in order to reconstruct the (complex) phenomena. In Fig. 2, a schema of the main activities aimed at the study of CS is shown, with emphasis on the phenomenological reconstruction phase based on the data available, since this is a fundamental tool for the construction of explanations. The tasks can be grouped into three levels or phases: Reconstruction of the system (modeling), Simulation of the system from the dynamics reconstructed in the first phase, and finally, experimental Validation of the simulated behavior with the real behavior of the system. After the last phase, it is possible to reconsider the reconstruction initially obtained in the first phase to bring another more similar with reality, more accurate model. The theoretical reconstruction of CS could cover only those relevant aspects that are related to the explanation of the phenomenon of interest. It is, therefore, a valuable tool for event explanation.

A phenomenological-based methodology suggested by the application of ideas from BR has been applied to study and simulate CS in previous papers (see, e.g. [14, 24]) roughly subsumed in Fig. 1. The first step of the methodology is the selection of relevant attributes from all the available attributes to obtain good predictions, classifications, or explanations on CS. The second step is the use of a sound reasoning method on the selected elements.

Perspectivism as explaining strategy

The application of BR techniques that inherently limit the search for solutions (explanations), outlines a framework where both the search space and, ultimately, the way of seeing the system or event to be explained is implicitly limited by the elaboration of aggregate information from the data. We could say then that a particular perspective is created. This point of view is not free of problems.

If AI engineer assists stakeholders with AI-based systems, what is the plausible explaining acceptable by them? Explanations that focus on a (necessarily) partial view do not necessarily provide the best answer, or even a right answer. Therefore, it is reasonable to think that systems do not offer general solutions to the problem of explanation (or justification of the proposed decision). It is necessary to measure the question from the perspective we are led to by the selective access to (massive) data as well as the inevitable biased selection of dimensions, features and datasets. In our case, the perspective might be strongly based on data for its curation and exploration. This basis compromises the desired independence of the observer’s information.

Tasks in Formal and Applied Epistemology involved in the study of complex systems (from [23])

Introduced by Leibniz (and further developed by Nietzsche) Perspectivism starts from the premise that all perception and ideation takes place from a particular perspective (a particular cognitive point of view). Therefore the existence of possible conceptual schemes (perspectives) influencing how the phenomenon is understood and the judgment of its veracity, is assumed. It is important to note that, although it is assumed that there is no single true perspective to explain the world, it is not necessarily proposed that all perspectives are equally valid.

In a perspectivist view, Science is primarily observer-dependent. Moreover, we see a growing acknowledgment in science studies that all scientific knowledge is perspectival [25]; i.e., that the context established by a scientific discipline is decisive for the kind of observations that can be made. The same phenomenon occurs intra-theory, that is, between different contextual observations sharing the same theory. Hence, it can be concluded that explanations will be in many cases inherently perspectivist artifacts. The perspectivist point of view proposes the existence of many different scientific perspectives with which to analyze a complex problem, all of which can bring value to the study, similar to the fact that a single scientific discipline cannot provide adequate solutions to complex problems. The perspectivist view represents a powerful tool supporting mutual respect and relationship between even very different scientific perspectives [25].

Perspectivism and data curation

The taking of perspective and the use of BR techniques entails in most cases the intended selection of the data for the task of explanation, data curation. In document [26] DARPA agency motivates the focus on Data Science in Explanability Challenge because decisions assisted by BD analytics need from such a selection of which resources will be the study objective to support evidence in their analysis. Such selection could lead to failures or errors that must be analyzed to refine both the procedure and the curation of the content. It is clear that the provision of effective explanations would greatly help with all these tasks [26]. Actually, what might hide Data Curation (particularly selection and interpretation) is the practice of data hermeneutics [27, 28]; the entire process is accompanied by information that could become explanations of both, the result and the extraction and curation policies. There is a clear need for this to be documented.

Another risk that comes from data curation is that data bias may lead to the inability to replicate studies, compromising their ability to be reused or generalized as well as the acceptability of the explanation itself [29]. Still, the level of abstraction of the explaining can determine its generalizability, because to consider a too abstract level can compromise both its real understanding and its practical value. Abstractions can simplify explanations, but automating the discovery of abstractions is very challenging (also both sharing your understanding and sharing them) [30]. Such difficulties could lead to a greater gap between scientific rigor and practical relevance. The generalization of explanations, understood as their reusability for several case studies would be strengthened by the availability of more data from multiple sources. This generalization can influence the issue of the preservation of data curation criteria (which could accompany the explanation, since they provide insight). This would also allow the development of richer models and greater understanding. Each model is a reduction of reality and the modeler needs to make choices in light of limited resources for data collection and modeling. However, when more data are available, models often become more complex and too detailed to interpret, unless they possess certain semantic features. For example, instead of trying to confirm the theory through purely deductive approaches, resources such as Linked Data can facilitate the search and analysis of counterfactuals [29], instead of just gathering a representative data sample to confirm our theory.

Perspectivism versus veracity

The adoption of perspectives could affect the veracity (of the explanation given or the model itself). It is adopted here the notion of Veracity as how precise or true a dataset can be. It is referred to as the fidelity of the data concerning the reality that they represent. In the context of Data Science, it takes additional meaning, namely how reliable the data source is, and the confidence in the type and processing of the data. Such aspects need to be studied since they are essential for issues such as avoiding biases, abnormalities, inconsistencies, and others associated with processing such as duplication and volatility. It is a critical issue to be studied in new systems [31], and mandatory if one wants to abandon the idea that ML is data alchemy.

It is particularly interesting the distinction between Veracity in general on one side and the concepts associated with the correctness and validity of the results on the other side. Since databases can be understood at a certain level as models of the definition schemes that govern them, and these are in turn formal theories that represent the universe from which the data are extracted, veracity is very much related to classic problems in Knowledge Engineering (or, if we demand a certain discipline in the database definition schemes, with the Semantic Web [32]). Therefore, veracity also depends on the quality, safety, accuracy, completeness of the information, etc.

Towards a theoretical general model for explaining phenomena: background

The issue to be addressed now is whether the elements and ideas formerly discussed can be formalized (from a logical, foundational point of view in nature). It should be noted that the aim is to provide a proof of concept, a common framework on which—at least theoretically—one could compare different approaches for explaining. The proposed model is data driven and intended for information fusion from input modules, to meet the conditions outlined in the previous sections. It will be a sort of universal KRR-based (phenomenological) surrogate model. The idea is that any other surrogate model coming from data could be considered immersed within this. Bridging the considerable gap, it is a modest proposal towards a model for XAI similar to what was done in other areas such as ZFC (actually, its so-called Inner Models) that allowed to establish basic elements of Set Theory by means providing a common formal framework.

Our aim is to address the question whether it is possible to consider a universal surrogate model that encompasses any surrogate model and enable the production of acceptable explanations, including those under BR (which limits both options/choices and inferences). It is important to note that both the question (a meta-epistemological question) and the answer (a mathematical approach) are epistemological in nature and based on phenomenological reconstruction philosophy sketched in “Formal epistemology, complex systems and BR”. It is not intended here to demonstrate the benefits in practice of this approach, beyond its role as a facilitator of formalizations of some of the issues we have discussed so far (although some of its most important mathematical properties will be demonstrated).

The idea of a universal model for the phenomenological reconstruction of CS is not completely original from the paper (in principle, it can be considered a case of the general strategy of addressing the CS study). The first time it was sketched was in a case study in the specific field of sports betting [14] that clearly illustrated some of the characteristics that the model should have.

The method offers a description of the relationships between the observed data (the basic attributes that can be considered as raw data) and system of logic implications (which can be seen as Horn clauses, which will make it easier to formalize the explanation). The model composed by the elements obtained (a network of concepts and a system of rules) can be considered a surrogate model for explaining qualitative grounded relationships of the System. Mathematical results justify the soundness and completeness of the model concerning the raw data coming from the CS, always from the foundational vision of the problem. The shift to the use of more complex properties should be formalized by what is called formal perspectives (see “The monster context”).

In order to present the ideas within a common framework, the notions that have been discussed so far, Formal Concept Analysis (FCA) [19] has been selected. FCA is a mathematical theory for the analysis of qualitative data, hence it is an ideal tool for our purposes Since its origins, with the pioneering works by Rudolph Wille from the 1980s, FCA has experienced an outstanding development in both its theoretical [33] and applied sides [34]. For the reader’s convenience, in Table 1 a summary of frequently used notations is presented.

The choice of FCA as basis theory to describe the model is due to, among other reasons, the fact that FCA does not prefix the language of concepts. So, one could start from variables denoting the basic attributes (considering the latter as data coming from perception and output, for example). FCA allows the extraction of concepts, in the following sense: it is mathematized the philosophical understanding of a concept as a unit of thought, comprising its extent and its intent. The extent covers all objects belonging to the concept, and the intent comprises all common attributes valid for all the objects under consideration.

FCA provides algorithms to extract, from data, all units of knowledge with meaning in the sense of the above-mentioned concept notion, as well as it also allows the computation of concept hierarchies from data tables. In short, FCA theory and techniques represents a method for both Data Analysis (organization, exploration, visualization) and Knowledge Retrieval, among other applications. At the computational-logic level, it also provides tools to extract patterns (rules) of behavior from the data and reason with them. One could summarize the reasons that lead us to choose FCA in that two of our objectives resemble two FCA main goals: conceptual clusters extraction (formal concepts endowed with semantic network structure) and data dependencies (implications between attributes) analysis.

Next subsection the basic elements of FCA will be briefly described, as well as the notation that will be used in the rest of the paper, in an effort to make the paper self-contained. The reader is referred to [19] to get both more technical details and a more comprehensive view of FCA.

Formal contexts

The information format used in FCA is organized in an object–attribute table specifying whether an object have an attribute. This table is called Formal Context. It is a three elements set \({\mathbb {K}} = (G,M,I)\), where G is a non-empty set of objects (events), M is a non-empty set of attributes, and \(I \subseteq G \times M\) is a (object–attribute) relation. In the table representation of the formal context, objects and attributes correspond to table rows and columns, respectively, and \((g,m)\in I\) denotes that object g has attribute m. Figure 3 (left) shows a formal context describing fishes (objects) living on different aquatic ecosystems (attributes) is shown. Please note that attributes can be considered as Boolean functions on the set of objects. Any attribute \(a\in M \) defines a function \(f_a:X \rightarrow {\mathbb {B}}\) where \({\mathbb {B}}=\{ \mathbf{0}, \mathbf{1}\}\) as \(f_a(o)=\mathbf{1}\) if and only if \((o,a)\in I\).

A formal context \({\mathbb {K}} = (G,M,I)\) induces a pair of operators, which we will call here derivation operators. Given \(A \subseteq G\) and \(B \subseteq M\), they are defined by

(that is, the set of attributes shared by all the objects in A) and reciprocally

(the objects that have all the attributes of B).

The mathematical instantiation in FCA of the philosophical definition of concept is called formal concept. A formal concept is defined by means of the derivation operators: it is a pair (A, B) of object and attribute sets (called the extent and the intent of the concept, respectively) such that \(A'=B\) and \(B'=A\). That is, the definition by intention characterizes all the elements that satisfy that definition, and vice versa: the definition by intention contains all the attributes common to those objects. Sometimes concepts are referred to by their intent, which are the so called closed sets. An attribute set B is closed if \(B''=B\) (or equivalently, is the intent of a concept).

Concept lattice

The set of concepts of a context given \({\mathbb {K}}\) can be endowed with the mathematical structure of lattice, by means of the subconcept relationship. For example, the concept lattice associated with the formal context on fishes of Fig. 3 left is shown in Fig. 3, rightFootnote 2.

In this representation, each node is a concept, and its intent (extent resp.) is formed by the set of attributes (objects resp.) included along the path to the top (bottom resp.) concept. For example, the bottom concept \((\{eel\} , \{ Coast, Sea, River\})\) is a concept that could be interpreted as euryhaline-fish (this is not a term of the language represented by attribute set, is something new). This is an example of how FCA does not limit the concepts considered by the chosen language of attributes, and how it induces the discovery of new ones (concepts that do not fit with the extension of any attribute). A more complex example, where the authors analyzed concept lattices about sentiments in social networks [35], in particular on Twitter. The aim was to show that the conceptual structure associated with a large set of aggregated opinions on the same topic can provide an interesting overview of the collective opinion on that subject. From the retrieved conceptual structure it can analyze, at the language level, the evolution of the opinion lexicon in social networks. The work shows how concepts about feelings are not adequately represented with most of the sentiment lexicon used in Social Media [36] arise.

An important feature is that basic FCA algorithms extract all concepts from the formal context, which can lead to very complex concept lattices. If a selection of these is desired, more refined algorithms, that focus on the most general or important concepts according to some measure, can be used (see e.g. [37]). The refinement would allow focusing the analysis on an easy-to-handle attribute set, but without losing (as far as possible) the original relations among these. For instance, it is possible to simplify the concept structure but keeping important properties (see, e.g. [38] or [39]). Along with the concept lattice, it is also possible to obtain a KB extracted from the formal context that uses the attributes as representation language using an implication logic.

Implication basis

In FCA, the format of the logical expressions denoting relations among properties (the attributes) is very similar to Horn’s clauses. An attribute implication L (over a set M of attributes) is an expression \(A \rightarrow B\), where \(A,B \subseteq M\). The set of implications on M is denoted by Imp(M).

The semantics of implications is inherited from the natural interpretation of implications in propositional logic but relativized to consider the formal context as the universe of all objects (understanding each set of attributes \(\{g\}'\) associated with an object g as an interpretation, that is, the set of true attributes). Formally, it is said that \(A \rightarrow B\) is valid for a set T of attributes (or T is a model of the implication), written \(T \models A \rightarrow B\), if the following condition is satisfied: \( \text{ If } A \subseteq T \text{ then } B \subseteq T\). The implication \(A \rightarrow B\) is valid in the context \({\mathbb {K}} = (G,M,I)\), denoted by \({\mathbb {K}} \models A \rightarrow B\), if \(\{g\}' \models A \rightarrow B\) for any object \(g\in G\) (that is to say, the set of attributes of any object in the context formal models the implication). For instance, the implication

(any fish that lives in both rivers and the sea also live in the coast) is valid within the context of Fig. 3, whilst the implication \(River \rightarrow Coast\) does not.

Once semantic truth has been defined, it is possible to formalize the derived notion of entailment.

Definition 1

Let \({\mathbb {K}} = (G,M,I)\) be a formal context, \({\mathcal {L}}\) be an implication set and L be an implication. It is said that

-

1.

L follows from \({{\mathcal {L}}}\) (or L is consequence of \({\mathcal {L}}\), denoted by \({{\mathcal {L}}} \models L\)) if each model (subsets of attributes) of \({{\mathcal {L}}}\) also models L. Similarly, it will be written \({\mathcal {L}} \models {\mathcal {L}}'\) every implication from \({\mathcal {L}}'\) is consequence of \({\mathcal {L}}\).

-

2.

It is said that \({\mathcal {L}}\) and \({\mathcal {L}}'\) are equivalent, \({\mathcal {L}}' \equiv {\mathcal {L}}\), if \({\mathcal {L}}\models {\mathcal {L}}'\) and \({\mathcal {L}}'\models {\mathcal {L}}\).

-

3.

\({{\mathcal {L}}}\) is complete for \({\mathbb {K}}\) if for every implication L

$$\begin{aligned} \text{ If } {\mathbb {K}} \models L \text{ then } {{\mathcal {L}}} \models L \end{aligned}$$ -

4.

\({{\mathcal {L}}}\) is non-redundant if for each \(L\in {{\mathcal {L}}}\), \({{\mathcal {L}}}\setminus \{L\} \not \models L\).

-

5.

\({{\mathcal {L}}}\) is an implication basis for \({\mathbb {K}}\) if \({{\mathcal {L}}}\) is both complete for \({\mathbb {K}}\) and non-redundant.

The computation of implication bases can be studied from a more general setting, within the field of Lattice theory [40]. A particular basis is the so called Duquenne–Guigues Basis, also called Stem Basis (SB) [41], which is extracted from a type of attribute sets (pseudo-intents) [19]. Figure 4 show a context and its associated Stem basis. Actually, working with complete implication sets would be enough. Regarding implication bases, there exist relatively few solutions to compute them from the formal context. One of the most popular algorithms is Ganter’s construction of canonical basis that is a modification of his Next-Closure method for computing concept sets (see e.g. [42] for a discussion on the topic). Stem basis computation, based on pseudo-closed sets computation, also suffers of theoretical complexity barriers. For instance, deciding pseudo-closedness of attribute sets is coNP-complete.

As for any logical implication, forward reasoning is defined in the natural way. The entailment relationship based on the classic production system style will be denoted by \(\vdash _p\). In formal terms, without specifying any particular algorithm, the definition that captures the usual rule-firing closure:

Definition 2

Let \({\mathbb {K}} = (G,M,I)\) and \({{\mathcal {L}}} \subseteq Imp(M)\) and \(H\subseteq M\). The implicational closure of H, with respect to \({{\mathcal {L}}}\), \({{\mathcal {L}}}[H]\), is the smallest set \(B \subseteq M\) such that:

-

\(H \subseteq B\)

-

If there exists \(Y_1 \rightarrow Y_2 \in {{\mathcal {L}}}\) such that \(Y_1 \subseteq B\), then \(Y_2 \subseteq B\)

Given C a subset of attributes, it will be denoted by \({\mathcal {L}} \cup H \vdash _p C\) if \(C\subseteq {{\mathcal {L}}}[H]\).

The logical soundness and completeness, with respect to the entailment, is based on the following result:

Theorem 1

Let \({\mathcal {L}}\) be an implication basis for \({\mathbb {K}}\) and let \(\{ a_1,\ldots ,a_n \} \cup Y\) be a set of attributes of \({\mathbb {K}}\). The following statements are equivalent:

-

1.

\({\mathcal {L}} \cup \{a_1,\ldots a_n \} \vdash _p Y \).

-

2.

\({{\mathcal {L}}} \models \{ a_1,\ldots a_n \} \rightarrow Y\)

-

3.

\({\mathbb {K}} \models \{a_1,\ldots a_n\} \rightarrow Y\).

The construction of our model for formalizing explaining is based on a series of assumptions that will be introduced when needed or when they can be described. The first one is the following:

Assumption 1

The use of a system of rules (technically, they are definite Horn clauses) enables the construction of explanatory systems.

Note that it is not claimed that every event can be explained, only that we will consider for our model explanations based on information represented by such kind of formulas. Of course, it can be extended.

Semantics for propositional formulas and association rules

Recall that it has already been mentioned that one might consider \(\{g\}'\) (being \(g\in G\)) as an interpretation of propositional logic on language formed by the attributes of M (being \(\{g\}'\) the true attributes of such interpretation). Therefore, the validity of any propositional formula can be considered.

Implication basis are sound KBs to be used within a rule-based system in order to reason and also learn. For example, theory and tools from Inductive Logic Programming (ILP) [43] can be applied (as in fact will be done in “Inductive logic programming versus explaining in the model” bellow). Please note, however, that implication basis are designed for entailing only true implications, without any exceptions within the object set nor implications with a low number of counterexamples in the context. Consequently Theorem 1 applies only to valid implications. They should, therefore, also be considered rules with confidence. That is, implications that, while not necessarily logically true, are validated by a significant set of objects. For this purpose, the initial production system must be revised in order to work with confidence [44] as any rule-based system [45], following a relaxed version of Pollock’s notion of statistical syllogism [46] (see also [47]). Due to paper length issues, association rules in FCA will not be discussed here.

A toolbox for specifying explanations in complex systems

After introducing the basic elements that will be used in FCA, we return to the reasoning process represented in Fig. 1. It is started with a formal context which contains all the information about the system to study, which comes from all the perceptions/observations that will be objects of a formal context (please note that this would be the closest possible approximation to the event, this digital shadow is the most faithful to the perceptive capacity of the system). The (Boolean) attributes represent everything perceived. Each attribute represents any available data, for example, the ith bit of the temperature representation, the color of the object is red? or the ith bit of the time representation, that is from bit to bit information to indexes that the agent elaborates from information provided by the sensors. Thus a very large set of attributes is available (which could be assumed to be numerable, although if we talk about representing real information that the system receives it is not, it actually is finite). From this set, the values of more descriptive variables (i.e. more complex attributes) can be obtained through computable functions from the available ones. The formal context built from these data will be called Monster Context, and will be denoted by \({\mathbb {M}}\).Footnote 3

Once \({\mathbb {M}}\) is considered, the observer (who probably will be the explainer agent) has to select a set of attributes and observations that she/he has considered relevant to study the event (surely, the product of a selection phase following some BR strategy of those described in previous sections). The reasoning is focused then on the formal context induced by that selection (contextual selection) using original attributes, or with new computed attributes (in the latter case the context will be called formal perspective). It is expected reasoning with the induced formal context (represented in Fig. 1 by the box at the bottom right) to explain the event. As discussed, the ideas of the process come from authors’ former works on similar strategies [14, 49].

The synthesis of simpler formal contexts but with more elaborated (computable) attributes allows the observer to work with aggregated data but of a reasonable size. From the new context (which we will call formal perspective), the observer can focus the study on specific aspects such as the past evolution of that system and/or create hypotheses about its future evolution as well as to explain a specific perception with more elaborated concepts and attributes. In [44, 49] some technical aspects were detailed. It will be briefly summarized here so that the rest of the article is self-contained and respects a common notation.

Likewise, although the model will be presented using Implication Logic for the formalization of explaining, association rules or more sophisticated probability tools (such as [50,51,52]) can be used. As stated by Gigerenzer and Goldstein in [53], Probabilistic Mental Models assumes that inferences about unknown states of the world are based on probability cues (see also [54]). It can be said that association rules’ confidence extracted from the subcontexts can serve to establish probability cues.

The monster context

Since \({\mathbb {M}}\) covers all perceivable attributes from events, used or not by the engineer [55], this can be considered as a universal memory from which any other contexts are extracted (corresponding to partial observations, perspectives, or approximations due to perception or information limitations).

Assumption 2

The Monster Context contains all the information on CS available from the observations/perceptions.

Once a specific smaller context is computed from \({\mathbb {M}}\), it is possible to work with the elements extracted from that, namely concept lattices, implication basis or association rules [55]. Subcontexts of \({\mathbb {M}}\) can be selected according to BR techniques (the selection of the elements that make up that sub-context and which is in fact a limitation of the solutions search space, see “Some notes on bounded rationality and explaining”) to obtain a reasoning system in which it is feasible to predict, analyze or explain events [49, 55] (with the obvious limitations from BR). That is to say, concepts of a qualitative nature are drawn from partial data that consider only partial characteristics of the CS, i.e. a partial understanding.

The basic subcontext is one for which it is not necessary to compute new attributes, that is, those of the form

Given \({\mathbb {K}}_i=(G_i,M_i,I_i), \ i=1,2\) two subcontexts of \({\mathbb {M}}\), the intersection of \({\mathbb {K}}_1\) and \(K_2\) is the context

Note that this context takes advantage of the values of the attributes of both contexts on the common objects.

In general terms, a way to select a sub-context of \({\mathbb {M}}\) when we want to study a particular event \(o\in {\mathbb {O}}\) is through what we call contextual selection, formally defined as follows.

Definition 3

Let \({\mathbb {M}}=({\mathbb {O}},{\mathbb {A}},{\mathbb {I}})\) be the monster context, and let \(O \subseteq {\mathbb {O}}\).

-

1.

A contextual selection on \(O\subseteq {\mathbb {O}}\) and M is a map

$$\begin{aligned}&s: O \rightarrow {{\mathcal {P}}}(O_1)\times {{\mathcal {P}}}(M) \\&s(o)=(s_1(o),s_2(o)) \end{aligned}$$such that \(o\in s_1(o)\)

-

2.

A contextual KB for an object o w.r.t. a selection s is an implication basis of \(M_{s(o)}:= \displaystyle (s_1(o),s_2(o),{\mathbb {I}}\cap (s_1(o)\times s_2(o)))\)

That is, s maps to each o object a sub-context containing o.

This way the reasoning will be focused on a subcontext using a selection function on objects and attributes around the event o.

Formal perspectives are contexts built with more elaborated attributes. We will now assume that it has a computability model that outlines the class of computable functions. More precisely, what interests us is the representation of the functions computed by programs as functions on objects (on their attribute values) belonging to sub-contexts. We will not detail this issue (which does not affect the development of the AI part of the model construction).

Definition 4

A computable attribute b on \({\mathbb {O}}\) is an attribute defined by means of a computable function \(f: {\mathbb {B}}^n \rightarrow {\mathbb {B}}\) and \(\{a_1,\ldots a_n\}\subseteq {\mathbb {A}}\) as

A formal perspective is a context built from M that uses attributes computed from \({\mathbb {M}}\):

Definition 5

Let \({\mathbb {M}}= ({\mathbb {O}},{\mathbb {A}},{\mathbb {I}})\) a monster context. A formal perspective is a formal context \({\mathbb {P}}= (G,M,I)\) built from the monster context with a set M of computable attributes.

According to the definition, subcontexts are formal perspectives.

KBs extracted from contextual selections or formal perspectives would be our theoretical model of KL-based surrogate model. Despite its simple data structure, formal contexts are useful structures for Knowledge extraction and reasoning (cf. [19, 33, 34]).

By considering the interpretation made of the explanation from the monster context as an ILP process (to be considered in “Inductive logic programming versus explaining in the model”), the approach is aligned with the idea of addressing the explaining of (not necessarily emerging) events and concepts from raw data for reasoning in CS.

Argument-based reasoning as a BR-based activity

To make the model explanation more general, available background knowledge B shall be deemed (e.g. in form of propositional logic formulas). This background knowledge would help obtaining or supporting the explanation offered. For example, it can be used to refine the selection of events to those who satisfy B. Also, one could consider B as knowledge shared by both explainer and explainee; information (about the events of the subcontext) known to the explainer or known by explainee (for example, in medical diagnosis [56]). Background knowledge B would be combined with the knowledge extracted from the formal context (implication basis or association rules).

Note that background knowledge B may not be true in \({\mathbb {M}}\) (for example, due to erroneous or deficient data from sensors, or because \({\mathbb {M}}\) contains events that are not relevant to the particular problem being studied and therefore do not necessarily have to satisfy B). Also, bear in mind that by its phenomenological nature, this situation is plausible (one does not work with the System but with its digital perception). There exist two options for solving the inconsistency problem. The one chosen here is similar in nature to what would be called existential argumentation (inspired here by Hunter’s paper [57]) but by considering sub-contexts rather than subsets of formulas in a knowledge base. In our case that explanation is supported by a contextual selection that models both B and the explanation obtained. Such formal context, the contextual selection/perspective, is what really supports the explanation and thus inconsistency of the implication basis with B is avoided. Therefore, data and sample used for explanation comes from \({\mathbb {M}}\) (thus answering one of the questions from Paragraph 45). Other option which will not be considered here would be using conservative retraction by means of variable forgetting [58,59,60].

The idea is that the arguments that will explain the properties of an event will consist of a set of implications plus a subset of the available perceptions, being the set of implications valid in the contextual selection where we work. Therefore, it is interesting to know how the contexts that allow us to extract an explanation behave. The explanation process consists in finding an explanation that implies the attributes of the event under existential argumentation, \(\vdash ^{B}_{\exists }\) which involves three steps [49]:

-

1.

A question on why an event has a property (attribute a) is raised. On the event (object) some known properties that comes from perceptions o evidences (attribute values) \(P=\{a_1,\ldots a_n\}\).

-

2.

A contextual selection outputs a sub-context of \({\mathbb {M}}\) satisfying—if exists—the available background knowledge B. A contextual KB, \({{\mathcal {L}}}\) (in the case of working with association rules, a Luxenburger basis for for some confidence threshold) is computed for the subcontext. Here BR techniques can be very helpful.

-

3.

Learning tools are applied (for example ILP techniques).

-

4.

The result, the explanation, will be the format \(H= \langle Y, {\mathcal {L}}_0\rangle \) where \(Y\subseteq P\), \({{\mathcal {L}}}\models {{\mathcal {L}}}_0\) and

$$\begin{aligned} {\mathcal {L}}_0 \vdash _{p} Y \rightarrow \{a_0 \}. \end{aligned}$$

When the process is successful, it will be denoted by

The procedure can be extended to explain other types of information, for example implications (actually \(\{a\}\) is in FCA the implication \(\emptyset \rightarrow \{a\}\)). Taking into account the completeness properties of the bases for its associated context, it will be denoted as follows:

Definition 6

Let L be an implication and B background knowledge.

-

1.

It is said that L is a possible consequence of \({\mathbb {M}}\) under the background knowledge B, denoted by \({\mathbb {M}}\models ^{B}_{\exists } L\), if there exists \({\mathbb {K}}\), a nonempty subcontext of \({\mathbb {M}}\) such that \({\mathbb {K}}\models B \cup \{ L \}\) (so called supporting context).

-

2.

It is said that L is a possible consequence of \({\mathbb {M}}\) under the background knowledge B for an event \(o\in {\mathbb {O}}\) if the supporting context is induced by a contextual selection for the event o.

Note that, by Theorem 1, when the background knowledge B is a implication set, \(\models ^B_{\exists }\) would be equivalent to \(\vdash ^B_{\exists }\), which is defined by: \({\mathbb {M}}\vdash _{\exists } L\) if there exists \({\mathbb {S}}\models B\), a subcontext of \({\mathbb {M}}\) such that \({\mathcal {L}}_{{\mathbb {S}}} \vdash _p L\).

Implication logics do not suffer inconsistency issues. However, the monster context could have incompatible attributes, for example, a pair \(a_1,a_2\) of incompatible attributes verifies that \(\lnot (a_1\wedge a_2)\) is true in the environment. When such a formula is included in the background knowledge B it is possible to deal with incompatibility issues, because \(\vdash _p\) is an argumentative entailment which works on subcontexts (see classic \(\vdash _{\exists }\) in [57]).

To study \(\vdash _{\exists }\) under background knowledge, it may be necessary to study the relationship among arguments based on distinct contexts, checking the compatibility of the knowledge implicit in them. A caveat is that compatibility is not assured under background knowledge in any case. For example, let us look at the two compatibility notions associated with the pull back and the push out ones:

Definition 7

Let \(M_i=(O_i,A_i,I_i), \ i=1,2\) be two subcontexts of \({\mathbb {M}}\), and let B be background propositional knowledge on the language of \(A_1 \cap A_2\).

-

It is said that \(M_1\) and \(M_2\) are upward compatible w.r.t B if there exists a supercontext M of \(M_1\) and \(M_2\) such that \(M\models B\).

-

It is said that \(M_1\) and \(M_2\) are downward compatible w.r.t B if \(M_1 \cap M_2 \models B\).

If two contexts are upward compatible, then they are downward compatible (therefore, event information can be combined through different contextual selections without compromising consistency with background knowledge) but unfortunately, the reciprocal is not true [49] (thus the union of the information of both contextual selections can lead to inconsistencies). It will be seen below how knowledge behaves under continuous extensions of contextual selection.

Inductive logic programming versus explaining in the model

The consideration of explanations as local in nature are a common practice in AI systems, especially those based on deduction. For example, rule-based systems allow, by following the execution trace, extracting an explanation that has two differentiated parts: the rules triggered in the deduction of the particular attribute associated with the event, and the facts of the initial KB that triggered the rules. Therefore, different explanations can be obtained from different executions for the same result. Something similar occurs with recommendation systems, a special case in which the base of facts is the history of previous customers’ choices, product valuations, etc. and the rules are those extracted in the data mining process [4].

In the case of FCA, given some observations about a set of attributes, other values can be inferred by executing the production system—implicational closure—associated with an implication basis the format of an explanation for an attribute m will be a pair \(H=\langle Y, {\mathcal {L}}_0\rangle \) where Y is a set of attributes (that will be observed or assumed by both explainer and explainee, possibly perceptions shared by both) and \({\mathcal {L}}_0\) is a set of valid implications verifying that \({\mathcal {L}}_0 \cup Y \models m\) (that is, \({\mathcal {L}}_0 \vdash _p Y \rightarrow m\)). To simplify notation, this fact will be rewritten by \(H\models m\). The search for the explanation will be limited to the formal perspective chosen (recall that contextual selections are also formal perspectives). It is, therefore, a sound way to address the complexity of the explanation offered (introduced in Paragraph 5), and would help isolating the beliefs in the hypotheses that conflict with the beliefs of the involved agents. In this way it has also been decided to simplify the notion of explanation so that it is easier to avoid Heuristic Fallacy ?‘HACE FALTA? (Paragraph reffallacy) .

A plausible objection to this type of explanation is that even a local explanation may be too complex to be understood without some sort of approximation [4] (in the case of FCA, the complexity of \({\mathcal {L}}_0\) itself). In this case, the key challenge is to decide what details to leave out in order to create an explanation based on a simple, explanatory model.

Our model shares many characteristics with the version of classic ILP [43] for Propositional Logics. Therefore, core algorithms from ILP can be applied; the general setting for ILP is used here [43, 61]. In ILP one starts with some examples (a set of evidences, E), the background theory B, and the hypothesis H. The problem of inductive inference consists, in our case, in ensuring that H behaves as a sufficient knowledge in order to justify the evidence and observing the validity of B.

A set of implications can be rewritten as a logic program, and therefore, Herbrand interpretations can be considered. With that in mind, \(\{o\}'\) can be such interpretation for the implication basis. In the case of explaining, it starts with E a subset of the potential explanandum set \({\mathcal {E}}\), containing all the attributes of \({\mathbb {M}}\) for which explanations may be requested, plus a set P of attributes that we consider the perceptions from which the explanation process starts, and which are given to the agents (that is, basic perceptions that will not require explanation and thus outside from \({\mathcal {E}}\)). In this case, \(E^+\subseteq {\mathcal {E}}\) and \(E^- \subseteq {\mathcal {E}}{\setminus }E^+\), where \(E^+, E^-\) are, respectively, the attributes the event has and does not have.

Notions are described and compared with ILP in Table 2. The aim is to find a hypothesis H such that the following conditions, shown in the second column, hold (normal semantics), in the case of ILP, while for the candidate explanation these are shown in the third column. Let us make this idea a little more concrete

Given \(H=\langle Y, {\mathcal {L}}_0\rangle \), the set \(Y+ {\mathcal {L}}_0\) can be considered as a set of defined clauses, there exist an unique minimal Herbrand model \({\mathcal {M}}(H)\) of \(Y +{\mathcal {L}}_0\). In our case, knowing that every set \(\{o\}'\) of the contextual selection actually is a Herbrand model, a classic result of Logic Programming guarantees that there is a unique minimal Herbrand model contained in all, namely the intersection of these. However, in our case, only those induced by objects from the contextual selection \({\mathbb {K}}\) (each object being one such interpretation) would be used at the intersection. That is, it is the smallest model relativized to \({\mathbb {K}}\). This model will be denoted by \(M_{{\mathbb {K}}}(H+B)\). In Table 3, ILP under definite semantics and the corresponding FCA-based explaining are compared.

Some aspects of the ILP approach

Please note that the in the description of the general definition framework, any restriction of minimalism or other restrictions on the explanation \(H=\langle Y ,{\mathcal {L}}_0\rangle \) have been excluded in the above definitions. However, this might be desirable in order to produce simpler explanations and, therefore, objective of further extensions. The algorithmic part is not tackled either. However it is interesting to mention that, in our case, variants of classic backward reasoning algorithms for clausal KB can be applied to an implication basis of \({\mathbb {K}}\) (based on the evidence we wish to explain) to extract explanations. For example, by modifying diagnostic or other techniques to detect anomalies (cf. [62] Chap. 5 for a general overview) to get the explanation in the required format. Of course, also with ILP techniques

Nevertheless, there exist other approaches to achieve the best explanations, as [63], where authors employ a logical calculus and starting from conditions of a similar nature to those of ILP. Earlier work on this subject was [64], where Josephson and Josephson propose a way of inferring the best explanation as a kind of argument scheme

Simplifying explanations by means of formal perspectives

It should be noted at this point that to simplify the presentation, no formal perspectives have been considered in comparison with ILP. However, the choice of formal perspectives plays a very important role in the intelligibility of the explanation—which is provided by the contextual selection. The reason is that an explanation based on attributes coming from perceptions can be large or cumbersome. Perspectives allow aggregating information in form of attributes understandable by the explainee that can significantly simplify the explanation offered. Let us see an illustrative example, taken from [65], which shows the importance, of the formal perspective selection, in obtaining explanations acceptable to the explainee.

The example is based on the well-known Conway’s game of life (GoL). Suppose that in the attribute set M we have the attributes that we will call geometric, that is, those that represent whether each cell in Moore’s neighborhood of a given one is alive or dead, \(\{Top-Left-Alive, Top-Left-Dead, \ldots , Bottom-Right-Alive, Bottom-Right-Dead\}\) (a total of 18 attributes), plus the attribute that represents whether the cell is alive (\(Is-Alive\)) or not, \(Will-Be-Alive\) (see two examples in Fig. 5). The world from we consider the contextual selection is the monster model corresponding to a grid of 10,000 cells (so 10,000 objects in the associated formal context), which is taken as contextual selection [65]. Using the previous method, it is possible to obtain an explanation of the Conway’s game by means of those attributes. This can be done simply by extracting a base of implications and selecting those having in the head of the implication, the attribute relative to the state of the cell studied, which in this case contains more than 700 implications and that are all necessary since they represent essentially different combinations of the environment. Although the shot of rules will always be one of them, if we want to use it to predict the live/dead state in the transition of a cell-object, which is not a readable explanation.

The formal perspective on the same set of objects is now considered, but using the computable attributes from the previous ones, which determine the number of live adjacent cells describing the neighborhood: \(\{0-Live-Neighbors, \ldots , 8-Live-Neighbors\}\) (note that they are representable by, for example, DNF formulas using the geometric attributes). These are essentially what Conway would use in the original definition, 9 Attributes that are understandable by the explainee, both their definition and the method of their calculation, (see two examples of representation in Fig. 5). The size of the implication base is considerably smaller for the computable attributes (its size is 6). As the attributes are intelligible and the implication set is small, such a base would be considered an acceptable explanation, as opposed to the one built with the raw data from the monster model. In conclusion, note that in that acceptability two issues play a key role: that the formal perspective significantly reduces the number of implications and, most importantly, the attributes used in the perspective are easily computable and intelligible, possessing a simple definition accepted by the explainee (see Fig. 6, where the last implication of the figure is depicted in the concept lattice).

Approximating the monster context and its information

A question to be solved is whether the model allows us to evaluate (theoretically) the security of the explanation, or to study the convergence to a common explanation if we add experience (we extend the contextual selection). Another question is whether there exists any equivalence between contextual selections with full counterfactual information, in the sense that if there is a counterexample for some implication or explanation, the contextual selection contains one. Having the difficulties of compatibility of different contextual selections (Paragraph 74), the study will focus on the continuous extension of a given context. It is being assumed that one works with approximations to knowledge on the CS that could be extracted from \({\mathbb {M}}\) (either from sub-contexts or perspectives). Therefore, it is necessary to study what happens when more (empirical) pieces of evidence are available, that is to say, when the induced context is increased. The problem will be restricted to the case of subcontexts, and to the following question: If the explanation depends on the contextual selection and this is extended by the experience (i.e. collection of information of events), to what extent we can approach one stable explanation? That is, one would work with formal contexts that are related by the order \({\mathbb {K}}_1 \subseteq {\mathbb {K}}_2\) on subcontexts of \({\mathbb {M}}\), that has to be understood as that \(G_{{\mathbb {K}}_1}\subseteq G_{{\mathbb {K}}_2}\) and \(I_{{\mathbb {K}}_1}\subseteq I_{{\mathbb {K}}_2}\) holds.

The formal scenario will consist of a sequence (Fig. 8) of sub-contexts (contextual selections) \(\{{\mathbb {K}}_i\}_{\{i\in I\}}\) where \(\langle I,< \rangle \) is a (partial) order and all formal contexts satisfy B, \({\mathbb {K}}_i\models B\). The knowledge depends on the one hand, on the implication basis of each \({\mathbb {K}}_i\), \({\mathcal {L}}_{{\mathbb {K}}_i}\). On the other hand, it also depends on the behavior of the sequence towards the limit (thinking that this should be a sound approach to the knowledge on the system to study). As mentioned, one is interested in the specific case of incremental observations (each observation adds new items to the subcontext and I the natural numbers), that is, \({\mathbb {K}}_i \subseteq {\mathbb {K}}_{i+1}\). The challenge would be to characterize the knowledge from the formal context \(\bigcup _i {\mathbb {K}}_{i}\), in the expectation of obtaining richer information on the system under observation. If the information on the limit context is not useful, a reconsideration of features will be necessary [15].

There exists a logical characterization of \({{\mathcal {L}}}_{\bigcup {\mathbb {K}}_i}\) that allows focusing the study in desirable features for \(\bigcup _i {\mathbb {K}}_{i}\). By taking into account that the attribute set can be increased, it is possible to define the limit of bases \(\{{\mathcal {L}}_{{\mathbb {K}}_i}\}_i\) by means the set of implications defined by

The idea is that the value \(i_0\) is related to the point in which there is available information about all attributes from implication L.

Theorem 2

\(\displaystyle {{\mathcal {L}}}_{\bigcup {\mathbb {K}}_i} \equiv \lim {{\mathcal {L}}}_{{\mathbb {K}}_i}\).

Proof

Let att(.) be the set of attributes that occur in an implication or set of implications, and \({\mathbb {K}}_i = (G_i,M_i,I_i)\).

Context extending that of Fig. 4

\(\underline{\lim {{\mathcal {L}}}_i \models \displaystyle {{\mathcal {L}}}_{\bigcup {\mathbb {K}}_i}}\):

Let \(L \in \displaystyle {{\mathcal {L}}}_{\bigcup {\mathbb {K}}_i}\). Then \(\bigcup {\mathbb {K}}_i \models L\). Consider \(i_0\) such that \(att(L)\subseteq M_i\). Since \({\mathbb {K}}_{i_0}\subseteq \bigcup {\mathbb {K}}_i\), it has \({\mathbb {K}}_{i_0}\models L\) and the same applies to any \({\mathbb {K}}_j\) with \(j\ge i_0\).

\(\underline{\displaystyle {{\mathcal {L}}}_{\bigcup {\mathbb {K}}_i} \models \lim {{\mathcal {L}}}_i}\):

Let \(L\in lim {{\mathcal {L}}}_i\). Let \(i_0\) be such that for any \(j\ge i_0\) \({\mathbb {K}}_j\models L\) (thus \({\mathcal {L}}_j \models L\)).

Let \(i_1 \ge i_0\) such that \(att(L)\subseteq G_j\). For being a growing succession, for everything \(j \ge i_1\) is also true the condition. Using the characterization of Theorem 1, for everything \(j\ge i_1\) it has \({\mathbb {K}}_j\models L\). Since all \(o\in \bigcup _i {\mathbb {K}}_i\) belongs to some \({\mathbb {K}}_j\) with \(j\ge i_1\), then \(\{o\}'\models L\). That is, \(\bigcup {\mathbb {K}}_i \models L\), and by the Theorem 1 again \(\mathcal {{\mathcal {L}}}_{\bigcup {\mathbb {K}}_i}\models L\). \(\square \)

The question now is whether the limit reaches full counterfactual information, in the following sense. It aims that, for any important event of \({\mathbb {M}}\), that invalidates the conjectured explanation, there is an event in that context with the same properties (concerning the attributes). Therefore, the ideal case of an (incremental) sequence of observation sets should occur when the model \({\mathbb {K}}=\bigcup {\mathbb {K}}_{i}\) satisfies that every relevant type of observation on the system would be represented by an exemplary object. Working with background knowledge and contextual selections has the risk of considering sub-contexts that do not necessarily have maximum information. For example, this could happen when the selection chosen to construct the explanation does not contain relevant events to extract explanations consistent with reality.

In our model, we can formalize the idea of formal context with complete relevant information. Theoretically, it is desirable to work with saturated sub-contexts defined as follows. In the next definition, the following notation will be used. Given a set of attributes Y, the propositional formula formed by the conjunction (resp. disjunction) of attributes from Y, will be denoted by \(\bigwedge Y\) (resp. \(\bigvee Y\)). The notion of B-saturation aims to capture the idea that the sub-context contains at least an exemplary event for each possible event that is consistent with B and it is also possible in \({\mathbb {M}}\). Although the notion would be circumscribed to the language used for explanations, no such restriction will be imposed here in order to avoid complicating the formalization. In addition, it also should be restricted to the events that are of interest for the explanation, and therefore, relative to the sub-language that serves to represent the event. In order to keep the formalization simple, it is supposed to be the whole language, although in each specific problem a much smaller language would be used.

Definition 8

Let \({\mathbb {M}}=({\mathbb {O}},{\mathbb {A}},{\mathbb {I}})\) be the monster context, B background (propositional) knowledge and \({\mathbb {K}}=(G,M,I)\) a subcontext. It is said that \({\mathbb {K}}\) is B- saturated in \({\mathbb {M}}\) if for every \(Y\subseteq M\), if there exists \(o\in {\mathbb {O}}\) such that

then there exists \(o_1\in G\) such that \(\{ o_1\}'\) also models it.

Please note that it will be assumed, for simplicity, that the attribute language (the context attributes \({\mathbb {K}}\)) will be \({\mathbb {A}}\).

Proposition 1

Saturated model exists (although it could be empty)

Proof

Let us consider

Then the context \(( \bigcup \{ Y' \ : \ Y \in {{\mathcal {A}}}\}, {\mathcal {A}}, I)\), where I is the corresponding restriction of \({\mathbb {I}}\), is B-saturated \(\square \)