Abstract

Entropy is a standard measure of the uncertainty, randomness, or chaos of experimental outcomes and is widely used in statistical distribution theory. Entropy methods available in the literature quantify the information in a random variable using exact numbers and cannot handle interval-valued data. An indeterminate state of an experiment generally produces data in interval form, and this indeterminacy makes interval-valued data neutrosophic. This research proposes modified entropy measures for an important lifetime distribution, the Weibull distribution, that account for the neutrosophic form of the data. The performance of the proposed methods is assessed through a simulation study and three real-life data applications. The simulation and real-data examples suggest that the proposed entropy methods for the Weibull distribution are more suitable when the random variable is interval-valued and contains indeterminacy or vagueness.


Introduction

The field of information theory quantifies the information in an event through the degree of surprise it carries. Low-probability events generally carry more surprise, and hence more information, than high-probability events, which are common. The quantity that measures how much information a random variable carries was named Shannon information [1] and later came to be called entropy [2]. Clausius introduced entropy in the nineteenth century while studying the disorder of gases, naming the quantity that measures this disorder entropy [3]. The first statistical entropy measure, proposed by Shannon [4], is defined as

\[ H(x) = -\int_{-\infty}^{\infty} f(x)\,\ln f(x)\,dx, \]

where \(f(x)\) is the pdf of a continuous random variable \(X\). Other forms of entropy have since been proposed, including Shannon entropy [5], maximum entropy [6], Tsallis entropy [7], topological entropy [8], graph entropy [9], minimum entropy [10], approximate entropy [11], spectral entropy [12], sample entropy [13], fuzzy entropy [14], and cross-entropy measures [15]. The Rényi entropy of order \(\alpha\) (\(\alpha \ge 0\), \(\alpha \ne 1\)), proposed by [16], is defined as

\[ H_{\alpha}(X) = \frac{1}{1-\alpha}\log_{2}\left(\sum_{i=1}^{n} P_{i}^{\alpha}\right), \]

where \(P_i\ (i = 1, 2, \dots, n)\) are the probabilities of the \(n\) possible outcomes of the random variable \(X\), and \(\log_2\) is the base-2 logarithm. As \(\alpha \to 1\), the Rényi entropy reduces to the discrete Shannon entropy.
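To make the two discrete definitions concrete, the following minimal Python sketch computes Shannon and Rényi entropies in bits for a finite probability vector. The function names are ours, and returning the Shannon value at order 1 follows the standard limiting convention rather than anything stated in the text.

```python
import math
from typing import Sequence


def shannon_bits(p: Sequence[float]) -> float:
    """Shannon entropy in bits; outcomes with zero probability contribute nothing."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)


def renyi_bits(p: Sequence[float], order: float) -> float:
    """Rényi entropy of a given order (>= 0) in bits.

    The closed form is undefined at order 1, so its limit (Shannon entropy) is returned.
    """
    if order < 0:
        raise ValueError("order must be non-negative")
    if math.isclose(order, 1.0):
        return shannon_bits(p)
    power_sum = sum(pi ** order for pi in p if pi > 0)
    return math.log2(power_sum) / (1.0 - order)


# A skewed three-outcome distribution: the Rényi entropy is non-increasing in its order.
probs = [0.5, 0.25, 0.25]
for order in (0.0, 0.5, 1.0, 2.0):
    print(f"order={order}: {renyi_bits(probs, order):.4f} bits")
```

For a uniform distribution every order gives the same value (\(\log_2 n\)); the spread across orders only appears for non-uniform probabilities, as in the example.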

The entropy measures cited above consider only deterministic outcomes of an experiment, i.e., exact values, and cannot handle interval-valued data. Interval-valued data arise from experiments whose exact outcomes are uncertain; in such situations, the results are recorded in interval form with some indeterminacy or vagueness. Smarandache [17] introduced neutrosophic logic and neutrosophic statistics, which incorporate vague, uncertain, unclear, or indeterminate states of data into mathematics and statistics.

Determining the exact sample size of a study becomes difficult when survey responses are incomplete and it is unclear whether they should be included. In real life it is also hard to record data precisely as crisp numbers. For example, the temperature of a particular area is nearly impossible to measure exactly because it fluctuates rapidly, and the water level of a river is hard to obtain for the same reason. Fuzzy techniques are well suited to such situations: they allow a statistician to say that a particular room temperature is “around 29 °C”, where 29 is treated as a fuzzy number [18]. According to [19], in fuzzy logic the truth or falsity of an observation is a matter of degree, e.g., for predicates such as tall, expensive, cold, or low. Zadeh introduced the theory of fuzzy sets in 1965 [19].

where \(V\) is a variable taking values \(v\) in a universal set, and \(F\) is a fuzzy set on that universal set that represents a vague predicate. If \(v\) is a particular value of \(V\), it belongs to the fuzzy set with membership grade \(F(v)\), which serves as the degree of truth \(T(p)\) of the proposition used in (1).

Fuzzy sets have played a central role in the study of non-deterministic techniques; in other words, fuzzy logic helps in dealing with imprecise environments. [14] introduced fuzzy logic into entropy measurement, combining the two to characterize EMG (electromyography) signals; that measure is the negative logarithm of a conditional probability. [20] introduced the concepts of neutrosophy and the neutrosophic environment. Atanassov [21] proposed interval-valued intuitionistic fuzzy sets (IVIFS), in which membership is expressed by interval numbers in a fuzzy environment. Wei et al. [22] presented an entropy measure for IVIFS in pattern recognition, and [23, 24] generalized entropy measures to the neutrosophic set environment. Deli [25] developed a linear optimization method for single-valued neutrosophic sets and discussed its sensitivity. [26] compared attribute values and attribute weights expressed as single-valued trapezoidal neutrosophic numbers in multiple-attribute decision-making problems. Further details on the neutrosophic set environment and entropy measures can be found in [27,28,29,30,31,32,33,34,35]. However, instead of choosing a single fuzzy number, we adopt a more specific technique in which both numbers bounding the uncertain value are used. This approach is called neutrosophic statistics.

Neutrosophic statistics generalizes classical statistics, whose methods are limited to exact numbers and cannot handle indeterminate numbers or parameters [36]; [37] noted that fuzzy logic is a particular case of neutrosophic logic. Neutrosophic statistics can deal with indeterminate values, i.e., values about which there is confusion, instead of crisp real numbers: indeterminate (uncertain, unsure) parameters and values are replaced by sets. This is usually indicated by a capital “N” in the subscript of the parameter or statistic, as in \(x_N\). Any number \(x\) may thus be replaced by a set, denoted \(x_N\) (neutrosophic \(x\), or uncertain \(x\)); \(x_N\) is usually an interval containing \(x\) and, more generally, a set that approximates \(x\) [38]. More information on the neutrosophic Weibull distribution can be found in [39] and [40].

Inaccurate data are common, particularly in lifetime problems, and classical statistics cannot provide accurate estimates from them. This motivates modeling such vague situations more precisely and developing methods that can estimate imprecise parameters. These situations can be handled with neutrosophic sets, in which a value is known only to lie between a lower and an upper bound. In this study, neutrosophic logic and entropy are combined to provide a novel measure, named neutrosophic entropy, for an important lifetime distribution, the Weibull distribution, since applications of neutrosophic theory combined with entropy measures have received little attention in statistical distribution theory. The developed methods should help in understanding entropy measures for interval-valued data, particularly for the Weibull distribution.

Neutrosophic entropy of Weibull distribution

A neutrosophic set is a generalization of the classical fuzzy set. It captures degrees of truth, falsity, and indeterminacy, and can represent imperfect information. Zadeh [41] used the term entropy to measure the fuzziness of a set. Details about entropy measures for fuzzy sets can be found in [2, 31, 42,43,44,45]. In this section, the neutrosophic entropy of the two-parameter Weibull distribution is derived. A random variable \(X\) follows the Weibull distribution with shape parameter \(\alpha\) and scale parameter \(\beta\) if its cumulative distribution function is [46]:

and probability density function (pdf) as

A neutrosophic form of the Weibull distribution with parameters \([\alpha_L, \alpha_U]\) and \([\beta_L, \beta_U]\) is
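For reference, under the roles stated above (shape \(\alpha\), scale \(\beta\)), the standard two-parameter Weibull CDF and pdf, and a neutrosophic version in which each parameter is known only to lie in an interval, can be written as below. This is our rendering under that assumption; [46] may arrange the same distribution differently.

```latex
F(x) = 1 - \exp\left[-\left(\frac{x}{\beta}\right)^{\alpha}\right],
  \qquad x > 0,\ \alpha > 0,\ \beta > 0,

f(x) = \frac{\alpha}{\beta}\left(\frac{x}{\beta}\right)^{\alpha - 1}
       \exp\left[-\left(\frac{x}{\beta}\right)^{\alpha}\right],

F_N(x) = 1 - \exp\left[-\left(\frac{x}{\beta_N}\right)^{\alpha_N}\right],
  \qquad \alpha_N \in [\alpha_L, \alpha_U],\ \beta_N \in [\beta_L, \beta_U].
```

Evaluating \(F_N\) at \((\alpha_L, \beta_L)\) and at \((\alpha_U, \beta_U)\) gives the two crisp distributions from which lower and upper entropy values can be computed.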

Shannon entropy of Weibull distribution

In this section, the Shannon entropy of the Weibull distribution is extended under the logic of neutrosophy. The classical Shannon entropy of the Weibull distribution is obtained by substituting (6) into (1):

where \(\gamma\) is Euler’s constant, \(\gamma = -\int_{0}^{\infty} e^{-x}\ln x\,dx \approx 0.5772\).
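The closed form can be checked directly. The derivation below assumes the pdf \(f(x) = (\alpha/\beta)(x/\beta)^{\alpha-1}e^{-(x/\beta)^{\alpha}}\) (shape \(\alpha\), scale \(\beta\)) and uses the fact that \(Y = (X/\beta)^{\alpha}\) is standard exponential, so that \(E[\ln Y] = \int_0^\infty e^{-y}\ln y\,dy = -\gamma\). Treat it as a worked derivation under that parametrization rather than a transcription of Eq. (8).

```latex
-\ln f(X) = -\ln\frac{\alpha}{\beta} - (\alpha - 1)\ln\frac{X}{\beta}
            + \left(\frac{X}{\beta}\right)^{\alpha},
  \qquad Y = \left(\frac{X}{\beta}\right)^{\alpha} \sim \operatorname{Exp}(1),

E[Y] = 1, \qquad
E\left[\ln\frac{X}{\beta}\right] = \frac{E[\ln Y]}{\alpha} = -\frac{\gamma}{\alpha},

H(x) = E[-\ln f(X)] = \ln\frac{\beta}{\alpha} + \frac{(\alpha - 1)\gamma}{\alpha} + 1
     = \gamma\left(1 - \frac{1}{\alpha}\right) + \ln\frac{\beta}{\alpha} + 1.
```

For \(\alpha = 1\) this reduces to \(1 + \ln\beta\), the familiar entropy of an exponential distribution with mean \(\beta\).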

Equation (8) gives the Shannon entropy of the Weibull distribution. It is appropriate when the data and parameter values are crisp numbers. In real life, however, crisp values are rare: there is often some uncertainty, and one cannot decide between a pair of values. For example, the temperature does not have a single exact value but lies between the highest and the lowest recorded temperatures at that time. The same holds for stock exchange records and many other real-world cases. Neutrosophy, as an extension of classical statistics, is helpful in such situations of doubt between two values. To deal with such problems, we propose a new entropy measure that combines entropy with neutrosophic statistics and call it neutrosophic entropy.

Using Eq. (8), the neutrosophic Shannon entropy of the Weibull distribution is

Here, \(\alpha_L\) is the estimate of the parameter \(\alpha\) obtained from the lower bounds of the interval-valued sample, and \(\alpha_U\) is the estimate of \(\alpha\) obtained from the upper bounds. Similarly, \(\beta_L\) is the estimate of the parameter \(\beta\) obtained from the lower bounds, and \(\beta_U\) is the estimate of \(\beta\) obtained from the upper bounds. Thus \(\alpha_L, \beta_L\) are the lower-level and \(\alpha_U, \beta_U\) the upper-level values of the parameters. Where there is doubt among more than one value (two values here), the smaller is labeled “L” and the larger “U”.

Using Eq. (5), we can obtain two results, one for the lower and the other for the upper bound, and we can state that the entropy in the particular case lies between these results.
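The lower/upper evaluation described above can be sketched in Python as follows, assuming the shape-\(\alpha\), scale-\(\beta\) closed form \(H = \gamma(1 - 1/\alpha) + \ln(\beta/\alpha) + 1\) in nats. The function names and the parameter intervals in the usage lines are hypothetical, and the numerical-integration helper exists only to cross-check the closed form. Because the parametrization is our assumption, values from this sketch are not guaranteed to match the reported tables.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler's constant, gamma


def weibull_shannon(shape: float, scale: float) -> float:
    """Closed-form differential Shannon entropy (nats) of Weibull(shape, scale)."""
    if shape <= 0 or scale <= 0:
        raise ValueError("shape and scale must be positive")
    return EULER_GAMMA * (1.0 - 1.0 / shape) + math.log(scale / shape) + 1.0


def weibull_shannon_numeric(shape: float, scale: float, steps: int = 100_000) -> float:
    """Midpoint-rule estimate of -integral f ln f, used only to cross-check the closed form.

    Reasonably accurate for shape >= 1, where the pdf is bounded near zero.
    """
    upper = scale * 50.0 ** (1.0 / shape)  # P(X > upper) = exp(-50), negligible
    h = upper / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        fx = (shape / scale) * (x / scale) ** (shape - 1.0) * math.exp(-((x / scale) ** shape))
        if fx > 0:
            total -= fx * math.log(fx) * h
    return total


def neutrosophic_shannon(shape_lu, scale_lu):
    """Return (H at (alpha_L, beta_L), H at (alpha_U, beta_U)); the pair is not sorted."""
    (a_lo, a_up), (b_lo, b_up) = shape_lu, scale_lu
    return weibull_shannon(a_lo, b_lo), weibull_shannon(a_up, b_up)


# Hypothetical interval-valued parameters, for illustration only.
h_lo, h_up = neutrosophic_shannon((1.8, 2.2), (0.9, 1.1))
print(f"H_N(x) = ({h_lo:.4f}, {h_up:.4f})")
print(f"closed form {weibull_shannon(2.0, 1.0):.6f} vs numeric {weibull_shannon_numeric(2.0, 1.0):.6f}")
```

The pair is returned unsorted, as in the text: in Example 1 the value from the lower parameters (0.3897) exceeds the value from the upper parameters (0.3451).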

Rényi entropy of Weibull distribution

The Rényi entropy of the Weibull distribution is obtained by substituting (6) into (3):

Equation (11) gives the Rényi entropy of the Weibull distribution. The entropy from Eq. (11) is appropriate when exact estimates of the Weibull parameters are available. As already discussed, precise measurements and parameter estimates are rare in real life: there is confusion or doubt, or the value cannot be measured or calculated precisely. A more general measure of entropy is therefore needed, and it is proposed next.
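A closed form for the continuous Rényi entropy of the Weibull distribution follows from a gamma integral. Because \(\alpha\) already denotes the shape, the order is written \(\rho\) here (\(\rho > 0\), \(\rho \ne 1\)), and the natural logarithm is used. This is a derivation under the shape-\(\alpha\), scale-\(\beta\) pdf \(f(x) = (\alpha/\beta)(x/\beta)^{\alpha-1}e^{-(x/\beta)^{\alpha}}\), not a transcription of Eq. (11).

```latex
\int_{0}^{\infty} f(x)^{\rho}\,dx
  = \alpha^{\rho - 1}\beta^{1 - \rho}\,\rho^{-z}\,\Gamma(z),
  \qquad z = \frac{\rho(\alpha - 1) + 1}{\alpha} > 0,

H_{\rho}(x) = \frac{1}{1 - \rho}\ln\int_{0}^{\infty} f(x)^{\rho}\,dx
  = \ln\frac{\beta}{\alpha} + \frac{\ln\Gamma(z) - z\ln\rho}{1 - \rho}.
```

As a check, \(\alpha = 1\) and \(\rho = 2\) give \(z = 1\) and \(H_2(x) = \ln(2\beta)\), matching the direct calculation \(\int_0^\infty f^2\,dx = 1/(2\beta)\) for an exponential distribution; as \(\rho \to 1\) the expression tends to the Shannon form \(\gamma(1 - 1/\alpha) + \ln(\beta/\alpha) + 1\).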

Neutrosophic Rényi entropy of Weibull distribution

The neutrosophic Rényi entropy of the Weibull distribution, obtained from Eq. (11), is

where \(\alpha_L, \beta_L\) are the lower-level and \(\alpha_U, \beta_U\) the upper-level estimates of the parameters. Where there is doubt among more than one value (two values here), the smaller is labeled “L” and the larger “U”.

Using Eq. (5), we can obtain two results, one for the lower and the other for the upper bound, and we can state that the entropy in the particular case lies between these results.
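A matching Python sketch for the neutrosophic Rényi entropy, again assuming the shape-\(\alpha\), scale-\(\beta\) Weibull and natural logarithms (the discrete definition in the introduction uses \(\log_2\)). Reading \(H_{2N}(x)\) as the order-2 case is our assumption, so the order is left as a parameter; the function names and the intervals in the usage lines are hypothetical.

```python
import math


def weibull_renyi(order: float, shape: float, scale: float) -> float:
    """Closed-form Rényi entropy (nats) of Weibull(shape, scale); order > 0, order != 1."""
    if order <= 0 or math.isclose(order, 1.0):
        raise ValueError("order must be positive and different from 1")
    if shape <= 0 or scale <= 0:
        raise ValueError("shape and scale must be positive")
    z = (order * (shape - 1.0) + 1.0) / shape
    if z <= 0:
        raise ValueError("the integral of f**order diverges for these parameters")
    return math.log(scale / shape) + (math.lgamma(z) - z * math.log(order)) / (1.0 - order)


def neutrosophic_renyi(order: float, shape_lu, scale_lu):
    """Return (H at (alpha_L, beta_L), H at (alpha_U, beta_U)) for the given order, unsorted."""
    (a_lo, a_up), (b_lo, b_up) = shape_lu, scale_lu
    return weibull_renyi(order, a_lo, b_lo), weibull_renyi(order, a_up, b_up)


# Hypothetical interval-valued parameters, order 2.
r_lo, r_up = neutrosophic_renyi(2.0, (1.8, 2.2), (0.9, 1.1))
print(f"H_2N(x) = ({r_lo:.4f}, {r_up:.4f})")
```

Using `math.lgamma` rather than taking the logarithm of `math.gamma` keeps the computation stable when \(z\) is large, e.g. for the large parameter values seen in the temperature example.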

Simulation study

In this section, a simulation study is performed to assess the effectiveness and efficiency of the proposed measures. The steps of the simulation study are as follows:

1. The theoretical neutrosophic Shannon and Rényi entropies were computed for chosen intervals of the shape parameter \(\alpha\) and the scale parameter \(\beta\).
2. Random samples \(x_1\) and \(x_2\) of size 10,000 were generated from the Weibull distribution.
3. The estimates of the shape parameter \(\alpha\) and the scale parameter \(\beta\) for the lower and upper bounds were computed from the generated samples.
4. \(H_N(x)\) was then computed using Eq. (9), and \(H_{2N}(x)\) using Eq. (12).
5. The above steps were repeated for 1000 random samples and for different parameter values to check the efficacy of the proposed measures.
6. The empirical neutrosophic Shannon and Rényi entropies of the Weibull distribution were computed by averaging the resulting matrix of interval-valued entropies.
7. The theoretical and empirical neutrosophic entropies were compared, and the results were plotted.
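The steps above can be sketched in Python as follows. Several details are assumptions rather than statements from the study: \(x_1\) is drawn with the lower parameters and \(x_2\) with the upper ones, parameters are re-estimated by maximum likelihood using a bisection solver on the shape likelihood equation (not the software used in the study), entropies use the shape-\(\alpha\), scale-\(\beta\) closed forms in nats, and \(H_{2N}\) is read as the order-2 Rényi entropy. All function names are hypothetical. Pure Python is slow at the study's settings (10,000 × 1000), so the usage line uses smaller, hypothetical values.

```python
import math
import random
import statistics

EULER_GAMMA = 0.5772156649015329


def weibull_shannon(shape, scale):
    """Closed-form Shannon entropy (nats) of Weibull(shape, scale)."""
    return EULER_GAMMA * (1.0 - 1.0 / shape) + math.log(scale / shape) + 1.0


def weibull_renyi2(shape, scale):
    """Closed-form order-2 Rényi entropy (nats); requires shape > 0.5."""
    z = (2.0 * (shape - 1.0) + 1.0) / shape
    return math.log(scale / shape) - math.lgamma(z) + z * math.log(2.0)


def fit_weibull_mle(xs, rel_tol=1e-10):
    """Maximum likelihood (shape, scale) via bisection on the shape score equation."""
    x_max = max(xs)
    if min(xs) <= 0 or min(xs) == x_max:
        raise ValueError("need positive, non-constant data")
    logs = [math.log(x / x_max) for x in xs]  # rescaled logs keep exp() from overflowing
    mean_log = statistics.fmean(logs)

    def score(k):
        # sum(x^k ln x) / sum(x^k) - 1/k - mean(ln x): increasing in k, root = MLE shape
        w = [math.exp(k * lg) for lg in logs]
        return sum(wi * lg for wi, lg in zip(w, logs)) / sum(w) - 1.0 / k - mean_log

    lo, hi = 1e-3, 1.0
    while score(hi) < 0:  # widen the bracket until the score changes sign
        lo, hi = hi, 2.0 * hi
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        if score(mid) < 0:
            lo = mid
        else:
            hi = mid
    k = 0.5 * (lo + hi)
    scale = x_max * statistics.fmean(math.exp(k * lg) for lg in logs) ** (1.0 / k)
    return k, scale


def simulate(shape_lu, scale_lu, n=10_000, reps=1_000, seed=1):
    """Return (theoretical, empirical) lists ordered as [H_L, H_U, R2_L, R2_U]."""
    rng = random.Random(seed)
    pairs = list(zip(shape_lu, scale_lu))  # [(alpha_L, beta_L), (alpha_U, beta_U)]
    theoretical = [weibull_shannon(a, b) for a, b in pairs] + [weibull_renyi2(a, b) for a, b in pairs]
    totals = [0.0] * 4
    for _ in range(reps):
        for j, (a, b) in enumerate(pairs):
            # random.weibullvariate takes (scale, shape) in that order
            xs = [rng.weibullvariate(b, a) for _ in range(n)]
            k, lam = fit_weibull_mle(xs)
            totals[j] += weibull_shannon(k, lam)
            totals[2 + j] += weibull_renyi2(k, lam)
    empirical = [t / reps for t in totals]
    return theoretical, empirical


# Hypothetical parameter intervals and a reduced design, for illustration only.
theo, emp = simulate((1.8, 2.2), (0.9, 1.1), n=2_000, reps=10)
for name, t, e in zip(("H_L", "H_U", "R2_L", "R2_U"), theo, emp):
    print(f"{name}: theoretical {t:.4f}, empirical {e:.4f}")
```

Averaging the four entries over replicates corresponds to step 6; comparing `theo` with `emp` corresponds to step 7.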

The empirical results from the simulations for the neutrosophic Shannon entropy of the Weibull distribution are shown in Table 1 for different values of \(\alpha\) and \(\beta\). First, \(\alpha\) was fixed and the variation due to \(\beta\) was observed. Table 1 shows some patterns relating the parameter values to the proposed entropy measure: as the parameter values increase, the neutrosophic Shannon entropy increases slightly. Second, \(\beta\) was fixed and the variation due to \(\alpha\) was observed: as the parameter values increase, the lower bound of the neutrosophic Shannon entropy increases slightly, whereas the upper bound remains the same.

Similarly, Table 2 shows the empirical simulation results for the neutrosophic Rényi entropy of the Weibull distribution for different values of \(\alpha\) and \(\beta\). Table 2 shows that as \(\beta\) increases, the neutrosophic entropy increases and approaches zero. It also shows that as \(\alpha\) increases, the upper bound of the neutrosophic entropy increases very slightly while the lower bound remains constant.

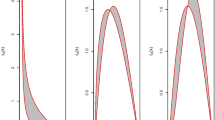

Figure 1 shows the neutrosophic Shannon and Rényi entropies of the Weibull distribution for \(\alpha\) = (76.25, 97.2) and \(\beta\) = (9.95, 12.05). The red line represents the lower bound and the blue line the upper bound of the obtained interval of neutrosophic entropy of the Weibull distribution. Because the parameter values are indeterminate within these intervals, the neutrosophic entropy is suitable here. Figure 1 shows that the true value of entropy lies in the obtained interval.

Applications on real-life data sets

Example 1:

We used the average low and average high monthly temperatures of Lahore, Punjab, Pakistan, for the past five years, 2016–2020; the values for the remaining months of 2020 (September–December) are forecasts. The data were collected from World Weather Online [47]. In Fig. 2, the orange line shows the highest recorded temperature in Lahore, the blue line the average temperature, and the black line the lowest recorded temperature.

Using these five years of observations (2016–2020), the parameters of the neutrosophic Weibull distribution (Eq. 7) were estimated in Minitab (ver. 18). These estimates were then used to compute the neutrosophic Shannon entropy of the Weibull distribution (Eq. 9). Graph (1) shows these estimates.

From Table 3 in the Appendix and Fig. 3, the lower-level estimates \(\alpha_L, \beta_L\) and the upper-level estimates \(\alpha_U, \beta_U\) of the interval parameters \(\alpha\) and \(\beta\) are \((\alpha_L, \alpha_U)\) = (76.35, 97.22) and \((\beta_L, \beta_U)\) = (5.770, 8.544), with N = 60. The neutrosophic Shannon entropy of the Weibull distribution from Eq. 9 is \(H_N(x) = (0.3897, 0.3451)\), and the neutrosophic Rényi entropy from Eq. 12 is \(H_{2N}(x) = (-0.005, -0.0039)\).

The results show that the entropy values are not large. Entropy values are commonly read on a scale where zero means no uncertainty or error and one means maximum uncertainty and unpredictability, i.e., a very high level of disorder or a low level of purity; note, however, that the differential entropy of a continuous distribution is not confined to [0, 1] and can be negative, as the Rényi values here are. On this reading, the neutrosophic Shannon entropy (0.3897, 0.3451) and the neutrosophic Rényi entropy (−0.005, −0.0039), both close to zero, indicate little uncertainty in the data and suggest that the system used to estimate the average monthly temperatures of a particular area (Lahore, Punjab, Pakistan, here) collects this kind of data accurately and with little disorder. They also suggest that the weather of Lahore is not highly uncertain.

Example 2:

Lieblein and Zelen [48] give the results of endurance tests of nearly 5000 deep-groove ball bearings. The graphical estimates covering all lots tested have an average value of about 1.6. The following sample gives the test results, in millions of revolutions, for 23 ball bearings: 17.88, 28.92, 33.00, 41.52, 42.12, 45.60, 48.48, 51.84, 51.96, 54.12, 55.56, 67.80, 68.64, 68.64, 68.88, 84.12, 93.12, 98.64, 105.12, 105.84, 127.92, 128.04, 173.40. The neutrosophic form of the data is provided in the Appendix.

The maximum likelihood estimate of \(\beta\) is 2.102, and the corresponding estimate of \(\alpha\) is 81.99. The upper-level estimates were obtained by random generation from a Weibull distribution with parameters \(\alpha\) = 85.99 and \(\beta\) = 2.259, keeping n = 23.

From Table 4 in the Appendix, the lower-level estimates \(\alpha_L, \beta_L\) and the upper-level estimates \(\alpha_U, \beta_U\) of the parameters \(\alpha\) and \(\beta\) are \((\alpha_L, \alpha_U)\) = (81.99, 85.99) and \((\beta_L, \beta_U)\) = (2.102, 2.259), with n = 23. The resulting neutrosophic Shannon and Rényi entropies of the Weibull distribution are \(H_N(x) = (-0.2505884, 1.3711825)\) and \(H_{2N}(x) = (3.991186, 17.589184)\).
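The lower-level estimates in this example are maximum likelihood estimates. The sketch below refits a two-parameter Weibull to the 23 endurance values listed above, solving the shape likelihood equation by bisection; the solver and the function name are our choices, since the study does not say which routine produced its estimates. The result should be close to the reported shape 2.102 and scale 81.99, with any small difference in the scale attributable to rounding or the estimation routine.

```python
import math
import statistics

# Endurance of 23 ball bearings, in millions of revolutions (Lieblein and Zelen [48]).
BEARINGS = [17.88, 28.92, 33.00, 41.52, 42.12, 45.60, 48.48, 51.84, 51.96, 54.12,
            55.56, 67.80, 68.64, 68.64, 68.88, 84.12, 93.12, 98.64, 105.12, 105.84,
            127.92, 128.04, 173.40]


def fit_weibull_mle(xs, rel_tol=1e-12):
    """Maximum likelihood (shape, scale) via bisection on the shape score equation."""
    x_max = max(xs)
    if min(xs) <= 0 or min(xs) == x_max:
        raise ValueError("need positive, non-constant data")
    logs = [math.log(x / x_max) for x in xs]  # rescaling leaves the score unchanged
    mean_log = statistics.fmean(logs)

    def score(k):
        # sum(x^k ln x) / sum(x^k) - 1/k - mean(ln x); increasing in k
        w = [math.exp(k * lg) for lg in logs]
        return sum(wi * lg for wi, lg in zip(w, logs)) / sum(w) - 1.0 / k - mean_log

    lo, hi = 1e-3, 1.0
    while score(hi) < 0:  # bracket the root
        lo, hi = hi, 2.0 * hi
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        if score(mid) < 0:
            lo = mid
        else:
            hi = mid
    k = 0.5 * (lo + hi)
    # Scale MLE given the shape: (mean of x^k)^(1/k)
    scale = x_max * statistics.fmean(math.exp(k * lg) for lg in logs) ** (1.0 / k)
    return k, scale


shape_hat, scale_hat = fit_weibull_mle(BEARINGS)
print(f"shape = {shape_hat:.3f}, scale = {scale_hat:.2f}")
```

In this example \(\beta\) plays the role of the shape (2.102) and \(\alpha\) the role of the scale (81.99), so the printed shape corresponds to \(\beta_L\) and the printed scale to \(\alpha_L\).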

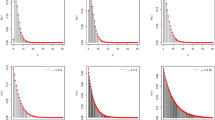

Figure 4 shows the neutrosophic Shannon and Rényi entropies of the Weibull distribution for \(\alpha\) = (81.99, 85.99) and \(\beta\) = (2.102, 2.259). The red line represents the lower bound and the blue line the upper bound of the obtained interval of neutrosophic entropy of the Weibull distribution. Because the parameter values are indeterminate within these intervals, the neutrosophic entropy is suitable here. Fig. 3 shows that the true value of entropy lies in the obtained interval. The Shannon and Rényi entropies under classical statistics for the parameters \(\alpha\) = 83 and \(\beta\) = 2.15 are \(H(x) = -2.5\) and \(H_2(x) = 4.07\), respectively, which are quite far from the estimates under the proposed forms of the Shannon and Rényi entropies. This indicates that the proposed methodologies are better than the classical methods under a neutrosophic environment.

Example 3:

The data in Table 5 are the times to failure of 25 lamp bulbs in an accelerated test, taken from Wadsworth [49] (shape parameter = 1.985, scale parameter = 0.976). The upper-level estimates are shape parameter = 2.220 and scale parameter = 0.999. The resulting intervals are \(H_N(x) = (0.312, 1.023)\) and \(H_{2N}(x) = (0.965, 1.989)\).

Figure 5 shows the neutrosophic Shannon and Rényi entropies of the Weibull distribution for \(\alpha\) = (1.985, 2.220) and \(\beta\) = (0.976, 0.999). The red line represents the lower bound and the blue line the upper bound of the obtained interval of neutrosophic entropy of the Weibull distribution. Here the parameter values are indeterminate within the intervals \(\alpha\) = (1.985, 2.220) and \(\beta\) = (0.976, 0.999). The Shannon and Rényi entropies under classical statistics for the parameters \(\alpha\) = 1.996 and \(\beta\) = 0.986 are \(H(x) = 1.01\) and \(H_2(x) = 0.98\), respectively, which are far from the estimates under the proposed forms of the Shannon and Rényi entropies. This indicates that the proposed methodologies are better than the classical methods under a neutrosophic environment. Figure 3 shows that the true value of entropy lies in the obtained interval.

Concluding remarks

Entropy is an essential measure from information theory for quantifying the uncertainty of data recorded in exact form, and it is also important in distribution theory as a measure of uncertainty. Previous studies of entropy in distribution theory were based on exact-valued data and cannot handle problems with interval-valued data. Although some entropy measures in the literature do handle interval-valued data, they apply to data in set form and do not address probability distributions with interval-valued data. This research fills that gap by formulating entropy measures in distribution theory in the context of neutrosophy and interval-valued data. Two important entropy measures, Shannon and Rényi, were modified for the Weibull distribution under neutrosophic interval-valued logic. The proposed entropy measures generalize the existing entropy measures of classical statistics. Both the simulation study and the real-data applications showed that the proposed modified entropy measures for the Weibull distribution produce efficient estimates in the presence of interval-valued data. Because the Weibull distribution is a vital lifetime distribution with wide applications in engineering, quality control, the medical sciences, and other fields, the proposed entropy measures should also have broad application to problems involving interval-valued data. The concept and applications of the proposed entropy measures can be extended to other neutrosophic probability distributions. Moreover, other entropy measures in the literature can be generalized to the neutrosophic environment for a more comprehensive application of entropy theory.

References

Cincotta PM et al (2021) The Shannon entropy: an efficient indicator of dynamical stability. Phys D: Nonlinear Phenom 417:132816

Sethna J (2021) Statistical mechanics: entropy, order parameters, and complexity, vol 14. Oxford University Press, USA

Gillispie CC (2016) The edge of objectivity: an essay in the history of scientific ideas, reprint edn. Princeton University Press

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27(3):379–423

Shannon CE, Weaver W (1949) The mathematical theory of communication. The University of Illinois Press, United States

Jaynes ET (1957) Information theory and statistical mechanics. Phys Rev 106(4):620–630

Thilagaraj M, Pallikonda Rajasekaran M, Arun Kumar N (2019) Tsallis entropy: as a new single feature with the least computation time for classification of epileptic seizures. Cluster Comput 22(6):15213–15221

Adler RL, Konheim AG, McAndrew MH (1965) Topological entropy. Trans Am Math Soc 114(2):309–319

Mowshowitz A (1968) Entropy and the complexity of graphs: II. The information content of digraphs and infinite graphs. Bull math biophys 30(2):225–240

Posner E (1975) Random coding strategies for minimum entropy. IEEE Trans Inf Theory 21(4):388–391

Pincus SM (1991) Approximate entropy as a measure of system complexity. Proc Natl Acad Sci 88(6):2297–2301

Kapur JN, Kesavan HK (1992) Entropy optimization principles and their applications. Entropy and energy dissipation in water resources. Springer, Heidelberg, pp 3–20

Richman JS, Moorman JR (2000) Physiological time-series analysis using approximate entropy and sample entropy. Am J Physiol-Heart Circulat Physiol 278(6):H2039–H2049

Chen W et al (2007) Characterization of surface EMG signal based on fuzzy entropy. IEEE Trans Neural Syst Rehabil Eng 15(2):266–272

Song Y et al (2019) Divergence-based cross entropy and uncertainty measures of Atanassov’s intuitionistic fuzzy sets with their application in decision making. Appl Soft Comput 84:105703

Rényi A (1961) On measures of entropy and information. Proceedings of the fourth berkeley symposium on mathematical statistics and probability, volume 1: contributions to the theory of statistics. The Regents of the University of California, California

Smarandache F (2016) Neutrosophic overset, neutrosophic underset, and neutrosophic offset. Similarly for neutrosophic over-/under-/off-logic, probability, and statistics. Infinite Study, Bruxelles

Kruse R (1983) On the entropy of fuzzy events. Kybernetes 12(1):53–57. https://doi.org/10.1108/eb005641

Yager RR, Zadeh LA (2012) An introduction to fuzzy logic applications in intelligent systems, vol 165. Springer Science & Business Media, Heidelberg

Smarandache F, Broumi S, Singh PK, Liu CF, Rao VV, Yang HL, Elhassouny A (2019) Introduction to neutrosophy and neutrosophic environment. In: Neutrosophic set in medical image analysis, pp 3–29

Atanassov KT (2020) On interval valued intuitionistic fuzzy sets. Interval-valued intuitionistic fuzzy sets. Springer, Heidelberg, pp 9–25

Wei C-P, Wang P, Zhang Y-Z (2011) Entropy, similarity measure of interval-valued intuitionistic fuzzy sets and their applications. Inf Sci 181(19):4273–4286

Che R, Suo C, Li Y (2021) An approach to construct entropies on interval-valued intuitionistic fuzzy sets by their distance functions. Soft Comput 25(10):6879–6889

Tiwari P (2021) Generalized entropy and similarity measure for interval-valued intuitionistic fuzzy sets with application in decision making. Int J Fuzzy Syst Appl (IJFSA) 10(1):64–93

Deli I (2020) Linear optimization method on single valued neutrosophic set and its sensitivity analysis. TWMS J Appl Eng Math 10(1):128–137

Deli İ (2019) A novel defuzzification method of SV-trapezoidal neutrosophic numbers and multi-attribute decision making: a comparative analysis. Soft Comput 23(23):12529–12545

Dalapati S et al (2017) IN-cross entropy based MAGDM strategy under interval neutrosophic set environment. Neutrosophic Sets Syst 18:43–57

Deli I (2018) Operators on single valued trapezoidal neutrosophic numbers and SVTN-group decision making. Neutrosophic Sets Syst 22:131–150

Deli İ, Keleş MA (2021) Distance measures on trapezoidal fuzzy multi-numbers and application to multi-criteria decision-making problems. Soft Comput 25(8):5979–5992

Mondal K, Pramanik S, Giri BC (2020) Some similarity measures for MADM under a complex neutrosophic set environment. Optimization theory based on neutrosophic and plithogenic sets. Elsevier, Amsterdam, pp 87–116

Pramanik S et al (2018) NS-cross entropy-based MAGDM under single-valued neutrosophic set environment. Information 9(2):37

Qin K, Wang L (2020) New similarity and entropy measures of single-valued neutrosophic sets with applications in multi-attribute decision making. Soft Comput 24(21):16165–16176

Rashid T, Faizi S, Zafar S (2018) Distance based entropy measure of interval-valued intuitionistic fuzzy sets and its application in multicriteria decision making. Adv Fuzzy Syst 2018:1

Tan R-P (2020) Decision-making method based on new entropy and refined single-valued neutrosophic sets and its application in typhoon disaster assessment. Appl Intell 51:283–307

Zhang H, Mu Z, Zeng S (2020) Multiple attribute group decision making based on simplified neutrosophic integrated weighted distance measure and entropy method. Math Probl Eng 2020:1

Smarandache F (1985) Paradoxist mathematics. Infinite Study

Smarandache F (2003) Definitions derived from neutrosophics. Infinite Study

Smarandache F (2010) Neutrosophic logic—a generalization of the intuitionistic fuzzy logic. Multispace Multistruct. Neutrosophic Transdiscipl (100 Collect Papers Sci) 4:396

Hamza Alhasan KF, Smarandache F (2019) Neutrosophic Weibull distribution and neutrosophic family Weibull distribution. Neutrosophic Sets Syst 28(1):15

Arif OH, Aslam M (2021) A new sudden death chart for the Weibull distribution under complexity. Complex Intell Syst 7:2093–2101. https://doi.org/10.1007/s40747-021-00316-x

Zadeh LA (2015) Fuzzy logic—a personal perspective. Fuzzy Sets Syst 281:4–20

Biswas P, Pramanik S, Giri BC (2014) Entropy based grey relational analysis method for multi-attribute decision making under single valued neutrosophic assessments. Neutrosophic Sets Syst 2:102–110

Majumdar P, Samanta SK (2014) On similarity and entropy of neutrosophic sets. J Intell Fuzzy Syst 26(3):1245–1252

Smarandache F (2015) Neutrosophic precalculus and neutrosophic calculus: neutrosophic applications. Infinite Study

Ye J et al (2016) Neutrosophic functions of the joint roughness coefficient and the shear strength: a case study from the pyroclastic rock mass in Shaoxing City, China. Math Probl Eng 2016:1

Cho Y, Sun H, Lee K (2015) Estimating the entropy of a Weibull distribution under generalized progressive hybrid censoring. Entropy 17(1):102–122

Zoomash (2020) Lahore monthly climate averages. World Weather Online. https://www.worldweatheronline.com/lahore-weather-averages/punjab/pk.aspx#. Accessed 2020

Lieblein J, Zelen M (1956) Statistical investigation of the fatigue life of deep-groove ball bearings. J Res Natl Bur Stand 57(5):273–316

Kanji G, Arif OH (2001) Median rankit control chart for Weibull distribution. Total Qual Manag 12(5):629–642

Acknowledgements

The authors are deeply thankful to the editor and reviewers for their valuable suggestions to improve the quality of the paper.


Ethics declarations

Conflict of interest

The authors have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sherwani, R.A.K., Arshad, T., Albassam, M. et al. Neutrosophic entropy measures for the Weibull distribution: theory and applications. Complex Intell. Syst. 7, 3067–3076 (2021). https://doi.org/10.1007/s40747-021-00501-y

DOI: https://doi.org/10.1007/s40747-021-00501-y