Abstract

Knowledge graph question answering is an important technology in intelligent human–robot interaction. It aims to automatically answer natural language questions using a given knowledge graph. In multi-relation questions, which are more varied and complex, the tokens of the question carry different priority for triple selection at each reasoning step. Most existing models treat the question as a whole and ignore this priority information. To solve this problem, we propose a question-aware memory network for multi-hop question answering, named QA2MN, which dynamically updates the attention on the question during the reasoning process. In addition, we incorporate graph context information into the knowledge graph embedding model to strengthen the representation of entities and relations. We use the resulting embeddings to initialize the QA2MN model and fine-tune them during training. We evaluate QA2MN on PathQuestion and WorldCup2014, two representative datasets for complex multi-hop question answering. The results show that QA2MN achieves state-of-the-art Hits@1 accuracy on both datasets, which validates the effectiveness of our model.

Introduction

Intelligent human–robot interaction provides a convenient way for humans and robots to communicate. Question answering over knowledge bases (KBQA) is one of its important technologies. It aims to answer users’ natural language questions from a given knowledge base by cognitive computing [6]. The development of the semantic web and improvements in information acquisition technology have promoted the construction and application of large-scale knowledge graphs (KGs), e.g., Freebase [2] and DBpedia [13]. The massive information contained in knowledge graphs further promotes the research and application of KBQA. As a result, recent years have witnessed an increasing demand for conversational question answering agents that allow users to query a large-scale knowledge base (KB) in natural language [1].

Answering a user’s natural language question with a structured knowledge base is a long-standing problem. A typical KB can be viewed as a knowledge graph consisting of entities, properties, and relations between them [18]. Historically, KBQA methods fall into two mainstream branches [7]. The first branch, the semantic parsing method (SP-based method), parses the natural language question into a logical form that can be used to query the knowledge base, e.g., SPARQL, \(\lambda \)-DCS [14], and \(\lambda \)-calculus. However, SP-based methods depend heavily on data annotation and hand-crafted templates. The second branch, the information retrieval method (IR-based method), treats KBQA as an information retrieval problem. This approach encodes the question and each candidate answer as high-dimensional vectors in a continuous semantic space, and a ranking model is used to predict the correct answers. Recently, deep learning has also driven progress in IR-based methods. These approaches range from simple neural embedding-based models [4], to attention-based recurrent models [9], and to memory-augmented neural controller architectures [5, 7, 11].

Fig. 1 An example of a multi-relation question over a knowledge graph from WorldCup2014 [31]. The rounded rectangles represent the entities in the KG and the solid arrows represent the relations between entities. The dotted arrows represent the attention flow in the reasoning process: the entity “L_MESSI” is the first part to focus on, the phrase “play professional in” comes next, and “country” comes last.

More recent work [25, 32, 33] focuses on enhancing the reasoning capability for multi-hop questions. Specifically, a multi-hop question involves multiple relations and requires several inference steps to reach the final answer. For example, the question in Fig. 1, “which country does L_MESSI play professional in ?”, involves more than one relation (i.e., “plays_in_club” and “is_in_country”). Owing to the variety and complexity of the semantic information and the large scale of knowledge graphs, multi-hop question answering over knowledge bases is still a challenging task, and how to improve the knowledge representation remains an open question. In general, two challenges need to be addressed.

First, the triplets are implicitly related, as some of them share entities or relations. Humans often find associated information through related notions. In the knowledge base in Fig. 1, for example, “FC_Barcelona” and “Real_Madrid_CF” share the same relation “is_in_country” and the same tail entity “Spain”, which reinforces the fact that the two clubs are located in the same country. Therefore, the graph context between triplets needs to be modeled to improve the representation of entities and relations [17]. However, previous work only considers individual triplets and local information, and the explicit graph context of the knowledge base has not been fully explored.

Second, a multi-hop question carries more complicated semantic information. The tokens of the question have different influence on triple selection in each reasoning step. In the question in Fig. 1, for example, the entity “L_MESSI” is the first part that should be focused on, the phrase “play professional in” comes next, and “country” comes last. Accordingly, the model should dynamically pay attention to different parts of the question during reasoning. However, current models often take the question as a whole and ignore the priority information in it.

To address these challenges, we enhance the key-value memory neural network with KG embedding and question-aware attention. The resulting model, named QA2MN (Question-Aware Memory Network for Question Answering), improves the representation of the tokens in the question and of the entities and relations in the knowledge base. Specifically, to address the first challenge, we use a KG embedding model to pre-train the embeddings of entities and relations. Because triplets are modeled and scored independently in general KG embedding models, we integrate graph context into the scoring function to enrich the semantic representation of entities and relations. To address the second challenge, we use question-aware attention to update the focus on the question during the reasoning process, so that the model can dynamically shift its attention to different parts of the question in each reasoning step.

To summarize, our contributions are threefold: (i) we incorporate graph context information into the KG embedding model to enhance the representation of entities and relations; (ii) we propose a question-aware attention in the reasoning process to enhance the query update mechanism of the key-value memory neural network; (iii) we achieve state-of-the-art Hits@1 accuracy on two representative datasets, and the ablation study demonstrates the interpretability of QA2MN.

The rest of the paper is structured as follows. We first review related work in “Related work”. The background is then presented in “Background”, followed by the detailed model in “Proposed model”. The experimental setup and results are reported in “Experiments”. Finally, we conclude the paper and discuss future work in “Conclusion”.

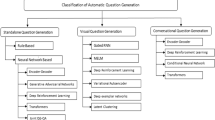

Related work

Traditional SP-based models depend heavily on predefined templates instead of exploring the inherent information in the knowledge graph [1, 24]. Yih et al. [30] proposed a query graph method that effectively leverages the graph information by pruning the semantic parsing space. For multi-hop questions, Xu et al. [29] used a key-value memory neural network to store the graph information and proposed a new query update mechanism that removes the keys and values already located by the query when updating. The model could therefore better attend to the content that needs reasoning in the next step. SP-based methods produce a logical form of the natural language question, which is then executed as a query to get the final answer. However, SP-based methods rely to varying degrees on feature engineering and data annotation. In addition, they require researchers to master the syntax and logical structures of the data, which poses additional difficulties for non-expert researchers.

The IR-based methods treat KBQA as an information retrieval problem by modeling questions and candidate answers with a ranking algorithm. Bordes et al. [4] first employed embedding vectors to encode the question and the knowledge graph into a high-dimensional semantic space. Hao et al. [9] presented a cross-attention-based neural network model that considers the mutual influence between the representation of questions and the corresponding answer aspects, where an attention mechanism learns the dynamic relevance between the answer and the words in the question to improve the matching performance. Chen et al. [7] proposed a bidirectional attentive memory network to capture the pairwise correlation between the question and the knowledge graph information, and simultaneously improved the query representation through the attention mechanism. However, those models cannot adequately handle multi-relation questions because they lack multi-hop reasoning ability. Zhou et al. [33] proposed an interpretable, hop-by-hop reasoning process for multi-hop question answering, in which the model predicts the complete reasoning path up to the final answer. However, given the cost of collecting such path annotations, the approach is difficult to generalize to other domains. Therefore, weak supervision, in which only the final answer is labeled (see “Notes”), is better suited to current needs. The IR-based method converts the graph query operation into a data-driven, learnable matching problem and can directly obtain the final answer by end-to-end training. Its advantage is that it reduces the dependence on hand-crafted templates and feature engineering, although it is criticized for poor interpretability.

Recent work [19, 32] also formulated multi-hop question answering as a sequential decision problem. Zhang et al. [32] treated the topic entity as a latent variable and handled multi-hop reasoning with variational inference. Qiu et al. [19] performed path search with weak-supervision to retrieve the final answer. The model proposed a potential-based reward shaping strategy to alleviate the delayed and sparse reward problem.

Aforementioned work mainly focused on the reasoning ability. Some works like [10, 20, 28] take advantage of the structure and relation information preserved in the KG embedding representation to advance the KBQA task. [10, 28] used knowledge graph embedding to handle simple one-hop questions. Saxena et al. [20] leveraged knowledge graph embedding to perform multi-hop KBQA. However, the learned embeddings were only required to be compatible within each individual fact, without considering the graph context information. To bridge the gap, we pre-train the knowledge graph embedding with graph context and use it to initialize the QA2MN model and allow it to be fine-tuned in the training process.

Background

Task description

For a given structured knowledge graph \({\mathscr {G}}\) with entity set \({\mathscr {E}}\) and relation set \({\mathscr {R}}\), each triplet \(T = (h, r, t) \in {\mathscr {G}}\) represents an atomic fact, where \(h\in {\mathscr {E}}\), \(t\in {\mathscr {E}}\), and \(r\in {\mathscr {R}}\) denote the head entity, the tail entity, and the relation between them, respectively. Given a natural language question X, the task is to reason over \({\mathscr {G}}\) and predict Y to answer X. Generally, the possible answers include (i) an entity from the entity set \({\mathscr {E}}\), (ii) the numerical result of an arithmetic operation, such as SUM or COUNT, and (iii) one of the possible Boolean values, such as True or False [6]. In this paper, we mainly focus on the first type, i.e., entity-centric natural language questions. To facilitate understanding, we summarize the important symbols used in the paper in Table 1.

Preliminary

KG embedding

KG embedding converts symbolic representation of knowledge triples into continuous semantic spaces by embedding entities and relations into high-dimension vectors [26]. It can effectively improve the downstream tasks such as KG completion [3], relation extraction [27], and KBQA [20].

For a triple (h, r, t), KG embedding first maps it into a continuous hidden representation \((E_h,E_r,E_t)\). Then, a scoring function \(\psi (\cdot )\) assigns a score to the candidate triple to measure its plausibility, as illustrated in Fig. 2. Triplets that exist in \({\mathscr {G}}\) tend to have higher scores than those that do not. To learn the entity and relation representations, an optimization method is used to maximize the total plausibility of the observed triplets.

Memory neural network

The memory neural network [22] is well known for its multiple hop reasoning ability and has been successfully applied in many natural language processing applications, such as question answering [7] and reading comprehension [22]. A memory neural network is often stacked with multi-layers; each layer has two independent embedding matrices to transform the supporting facts into input memory representation and output memory representation. As shown in Fig. 3a, given the query vector, it first finds the supporting memories from the input memory representation and then produces output features by a weighted sum over the output memory representation.

Key-value memory neural network generalizes the standard memory network by dividing the memory arrays into two parts, i.e., the key slot and the value slot, as shown in Fig. 3b. The model learns to use the query to address relevant memories with the keys, whose values are subsequently returned for output computation. Compared to the flat representation in standard memory network, the key-value architecture gives more flexibility to encode prior knowledge via functionality separation and is more applicable to complex structured knowledge sources [16, 29].

Fig. 4 The architecture of QA2MN, which consists of three components: (i) KG embedding, (ii) Question Encoder, and (iii) KG reasoning; see “Proposed model” for details. The markers in the figure denote the head and tail entity of the concerned triplet, the head-related context for “L_MESSI”, and the tail-related context for “FC_Barcelona”

Proposed model

The proposed QA2MN has three main components, i.e., KG Embedding, Question Encoder and KG Reasoning, and Fig. 4 illustrates the architecture. First, we exploit the graph context information in knowledge base by pre-training KG embedding model. Then, we use Bi-directional Gated Recurrent Unit (\(\text {BiGRU}\)) to encode the question into continuous hidden representation. Finally, we use a question-aware key-value memory network to reason over the knowledge graph.

KG embedding with graph context

We adopt translational distance model [15] to train the embedding of entities and relations. For each fact \(T_i = (h_i, r_i, t_i) \in {\mathscr {G}}\), we apply translational distance constraint for the entities and the relations by the following equation:

where \(E_{h_i}\in \mathbf {R}^{d_{ent}}\), \(E_{r_i}\in \mathbf {R}^{d_{rel}}\), and \(E_{t_i}\in \mathbf {R}^{d_{ent}}\) are the embeddings of head entity, relation, and tail entity, respectively, \(W_{e2r}\in \mathbf {R}^{d_{rel}\times d_{ent}}\) is a projection matrix from the entity space to the relation space. In our implementation, \(d_{ent}\) is equal to \(d_{rel}\). Then, we obtain the translational distance score by

where \(\Vert \cdot \Vert \) denotes the \(l_2\) norm of variable.

To explore the implicit context information of the knowledge graph, we integrate graph context into the distance scoring to improve the representation of entities and relations. For the triplet \(T_i\), we consider two kinds of context information: (i) head-related context: all the triples that share the same head with \(T_i\), i.e., \(C_h(T_i) = \{T_j|T_j=(h_j, r_j, t_j) \in {\mathscr {G}}, h_j=h_i\}\); (ii) tail-related context: all the triples that share the same tail with \(T_i\), i.e., \(C_t(T_i) = \{T_j|T_j=(h_j, r_j, t_j) \in {\mathscr {G}}, t_j=t_i\}\).
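The two context sets can be built with a single pass over the triples. The following is a minimal sketch, assuming triples are stored as plain `(head, relation, tail)` tuples; the function names `build_contexts`, `head_context`, and `tail_context` are hypothetical and chosen only for illustration. Note that, by the definitions above, each context set contains the concerned triplet itself.

```python
from collections import defaultdict


def build_contexts(triples):
    """Index triples by head and by tail so that context sets are cheap to look up."""
    by_head = defaultdict(list)
    by_tail = defaultdict(list)
    for h, r, t in triples:
        by_head[h].append((h, r, t))
        by_tail[t].append((h, r, t))
    return by_head, by_tail


def head_context(triple, by_head):
    """C_h(T_i): all triples whose head equals the head of the given triple."""
    return by_head[triple[0]]


def tail_context(triple, by_tail):
    """C_t(T_i): all triples whose tail equals the tail of the given triple."""
    return by_tail[triple[2]]


# Toy graph using the entities and relations named in the Fig. 1 discussion.
triples = [
    ("L_MESSI", "plays_in_club", "FC_Barcelona"),
    ("FC_Barcelona", "is_in_country", "Spain"),
    ("Real_Madrid_CF", "is_in_country", "Spain"),
]
by_head, by_tail = build_contexts(triples)
```

In this toy graph, the tail-related context of (FC_Barcelona, is_in_country, Spain) also contains the Real_Madrid_CF triple, which is the shared-tail signal described in the introduction.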

First, we integrate the head-related context with \(E_{h_i}\) by taking average over the triplets from \(C_h(T_i)\)

where \(|C_h(T_i)|\) is the number of head-related context triplets, and \(-1\) is the inverse operator. Then, we compute the distance of the head-related context representation and \(E_{h_i}\) by

In the same way, we compute the tail-related context representation \(\tilde{E}_{t_i}\) as the average from triplets in \(C_t(T_i)\)

where \(|C_t(T_i)|\) is the number of tail-related context triplets. Correspondingly, the distance of the tail-related context representation and \(E_{t_i}\) is computed by
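As a hedged sketch of the quantities described in this subsection, one form consistent with the stated dimensions is given below. It assumes a translational constraint in which entities are projected into the relation space by \(W_{e2r}\), and it assumes that each context triple contributes an estimate of the shared entity obtained by solving that constraint (which requires \(W_{e2r}\) to be invertible, hence \(d_{ent} = d_{rel}\)). The score names \(f_{tri}\), \(f_{head}\), and \(f_{tail}\) are hypothetical labels introduced only for illustration.

```latex
\begin{align}
W_{e2r}E_{h_i} + E_{r_i} &\approx W_{e2r}E_{t_i} \\
f_{tri}(T_i) &= \Vert W_{e2r}E_{h_i} + E_{r_i} - W_{e2r}E_{t_i} \Vert \\
\tilde{E}_{h_i} &= \frac{1}{|C_h(T_i)|} \sum_{T_j \in C_h(T_i)} \left( E_{t_j} - W_{e2r}^{-1}E_{r_j} \right) \\
f_{head}(T_i) &= \Vert \tilde{E}_{h_i} - E_{h_i} \Vert \\
\tilde{E}_{t_i} &= \frac{1}{|C_t(T_i)|} \sum_{T_j \in C_t(T_i)} \left( E_{h_j} + W_{e2r}^{-1}E_{r_j} \right) \\
f_{tail}(T_i) &= \Vert \tilde{E}_{t_i} - E_{t_i} \Vert
\end{align}
```

Under this reading, a triple is scored both on its own translational error and on how well its head and tail agree with the estimates implied by their neighboring triples.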

Question encoder

We use \(\text {BiGRU}\) [8] to encode the question so as to keep both the token-level and the sequence-level information. Given a question \(X = [x_{1},x_{2},...,x_M]\), where M is the total number of tokens in X, we feed X into the \(\text {BiGRU}\) encoder, which is computed as follows:

where \(\text {GRU}\) is the standard Gated Recurrent Unit, \(E_{x_i} \in \mathbf {R}^{d_{emb}}\) is the embedding of the token \(x_i\), \(\overrightarrow{h}_{x_i}\in \mathbf {R}^{d_{hid}/2}, \overleftarrow{h}_{x_i}\in \mathbf {R}^{d_{hid}/2}\) are the forward and backward hidden representations, \(d_{emb}\) is the token embedding size, and \(d_{hid}\) is the hidden size. Then, we obtain the hidden representation for each token, \(H_{X} = [h_{x_1},h_{x_2},...,h_{x_M}]\), where \(h_{x_i}\) is the concatenation of \(\overrightarrow{h}_{x_i}\) and \(\overleftarrow{h}_{x_i}\), i.e., \(h_{x_i}=[\overrightarrow{h}_{x_i}, \overleftarrow{h}_{x_i}]\).
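For reference, the standard bidirectional recurrence that matches these definitions can be written as below. This is the usual textbook form of a BiGRU and is given as a sketch; the exact argument order is an assumption.

```latex
\begin{align}
\overrightarrow{h}_{x_i} &= \text{GRU}\left(E_{x_i}, \overrightarrow{h}_{x_{i-1}}\right) \\
\overleftarrow{h}_{x_i} &= \text{GRU}\left(E_{x_i}, \overleftarrow{h}_{x_{i+1}}\right)
\end{align}
```

The forward pass reads the tokens from \(x_1\) to \(x_M\) and the backward pass from \(x_M\) to \(x_1\), so each \(h_{x_i}\) summarizes both the left and the right context of token \(x_i\).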

KG reasoning

A vanilla key-value memory neural network focuses on understanding the knowledge triplets in the memory slots. It often encodes the question as a single vector and ignores the priority information. This is largely sufficient for single-relation questions but insufficient for complex multi-hop questions. To improve the reasoning ability of the key-value memory neural network, we introduce QA2MN to dynamically pay attention to different parts of the question in each reasoning step. In our implementation, QA2MN consists of five parts, i.e., key hashing, key addressing, value reading, query updating, and answer prediction.

Key hashing

Key hashing uses the question to select a list of candidate triplets to fill the memory slots. In our implementation, we first detect the core entity as the entity mentioned in the question and find its neighboring entities within K relation hops. Then, we extract all triplets in \({\mathscr {G}}\) that contain any one of those entities as the candidate triplets, denoted as \(T_C =\{T_1,T_2, ...,T_N\}\), where N is the number of candidate triplets. All the entities in \(T_C\) are extracted as candidate answers, denoted as \(A_C = \{A_1,A_2,...,A_L\}\), where L is the number of candidate answers. For each candidate triplet \(T_i = (h_i, r_i, t_i) \in T_C\), we store the head and relation in the i-th key slot, which is denoted as

Correspondingly, the tail is stored in the ith value slot, denoted as

where \(W_k \in \mathbf {R}^{d_{hid}\times d_{rel}}\) and \(W_v \in \mathbf {R}^{d_{hid}\times d_{ent}}\) are trainable parameters.
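The candidate selection step of key hashing can be sketched as a breadth-first search from the core entity. This is a minimal sketch under stated assumptions: relations are traversed in both directions, the core entity has already been detected, and the function name `candidate_triples` is hypothetical.

```python
from collections import deque


def candidate_triples(triples, core_entity, k_hops):
    """Return (candidate triples, candidate answers) around a core entity.

    Assumption: edges are followed in both directions when collecting
    neighbors within k_hops of the core entity.
    """
    neighbors = {}
    for h, _, t in triples:
        neighbors.setdefault(h, set()).add(t)
        neighbors.setdefault(t, set()).add(h)

    # Breadth-first search bounded by k_hops.
    reached = {core_entity}
    frontier = deque([(core_entity, 0)])
    while frontier:
        entity, depth = frontier.popleft()
        if depth == k_hops:
            continue
        for nxt in neighbors.get(entity, ()):
            if nxt not in reached:
                reached.add(nxt)
                frontier.append((nxt, depth + 1))

    # Keep every triple that touches a reached entity; its entities are the candidates.
    cands = [(h, r, t) for h, r, t in triples if h in reached or t in reached]
    answers = sorted({e for h, _, t in cands for e in (h, t)})
    return cands, answers


triples = [
    ("L_MESSI", "plays_in_club", "FC_Barcelona"),
    ("FC_Barcelona", "is_in_country", "Spain"),
    ("Real_Madrid_CF", "is_in_country", "Spain"),
]
cands, answers = candidate_triples(triples, "L_MESSI", k_hops=1)
```

With `k_hops=1` the Real_Madrid_CF triple is left out of the memory, because neither of its entities is within one hop of the core entity.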

At the zth reasoning hop, QA2MN makes multiple hop reasoning over the memory slot by (i) computing relevance probability between query vector \(q_z \in \mathbf {R}^{d_{hid}}\) and the key slots, (ii) reading from the value slots, and (iii) updating the query representation based on the value reading output and the question hidden representation.

Key addressing

Key addressing computes the relevance probability distribution between \(q_z\) and \(\varPhi _K(k_i)\) in the key slots

Value reading

Value reading component reads out the value of each value slot by taking the weighted sum over them with \(p_i^{qk}\)

Query updating

The value reading output is used to update the query representation to change the query focus for next hop reasoning. First, we compute the attention distribution between the value reading output \(o_z\) and the hidden representation of each token in the question

Then, we update the query vector by summing the value reading output \(o_z\) and the weighted sum over tokens in question with \(p_i^{vq}\)
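The three per-hop operations (key addressing, value reading, query updating) can be sketched in a few lines. This is a minimal sketch, assuming dot-product relevance followed by a softmax for both attention distributions; the actual scoring functions may include additional trainable projections. The function names and the toy vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def weighted_sum(weights, vectors):
    dim = len(vectors[0])
    return [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]


def reasoning_hop(query, keys, values, token_states):
    """One hop: address keys, read values, then re-attend over question tokens."""
    p_qk = softmax([dot(query, k) for k in keys])          # key addressing
    o_z = weighted_sum(p_qk, values)                       # value reading
    p_vq = softmax([dot(o_z, h) for h in token_states])    # attention over tokens
    question_focus = weighted_sum(p_vq, token_states)
    next_query = [o + f for o, f in zip(o_z, question_focus)]  # query updating
    return next_query, o_z, p_qk, p_vq


# Toy example: two memory slots and two question tokens in a 2-d space.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0]]
q_next, o_z, p_qk, p_vq = reasoning_hop([5.0, 0.0], keys, values, tokens)
```

Because the updated query mixes the value reading output with a fresh weighted sum over the token states, the part of the question that receives attention can change from one hop to the next.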

Answer prediction

We initialize the query \(q_1\) with the self-attention of the question representation \(H_{X}\)

where \(h_{x_M}=[\overrightarrow{h}_{x_M},\overleftarrow{h}_{x_1}]\) is the integrated representation of question, and \(\top \) is the transposition operator. After Z hops of reasoning over the memories, the final value representation \(o_Z\) is used to perform the final prediction over all candidate answers. Finally, we compute the matching score between final value representation \(o_Z\) and candidate answers and normalize it into the range of (0,1)

where \(W_p \in {\mathbb {R}}^{L\times d_{hid}}\) is a trainable parameter. The candidate answers are then ranked by their scores.
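One form of the query initialization and the answer scoring that is consistent with the stated dimensions is sketched below. It assumes dot-product self-attention for \(q_1\) and a softmax normalization of the scores; a sigmoid would also map the scores into (0,1). The symbols \(h_X\) (the integrated question representation \([\overrightarrow{h}_{x_M},\overleftarrow{h}_{x_1}]\)) and \(\alpha_i\) are hypothetical names introduced only for illustration.

```latex
\begin{align}
\alpha_i &= \frac{\exp\left(h_X^{\top} h_{x_i}\right)}{\sum_{j=1}^{M} \exp\left(h_X^{\top} h_{x_j}\right)}, \qquad
q_1 = \sum_{i=1}^{M} \alpha_i\, h_{x_i} \\
P &= \text{softmax}\left(W_p\, o_Z\right)
\end{align}
```

Here \(W_p\, o_Z \in \mathbf {R}^{L}\) holds one score per candidate answer in \(A_C\).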

Training

The training process can be divided into two stages. We first pre-train the KG embedding for several epochs, and then optimize the parameters of QA2MN and the KG embedding iteratively. We combine the three distance scores stated in Eqs. (2, 4, 6) as the loss function for KG embedding training

As for QA2MN optimization, we use the cross-entropy to define the loss function. Given an input question X, we denote y as the gold answer and P(y) as the predicted answer distribution. We compute the cross-entropy loss between y and P(y) by
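The cross-entropy objective for a single question can be sketched as follows. This is a minimal sketch, assuming the predicted distribution is a softmax over the candidate answer scores and that the gold answer is given as an index into the candidate list; the function names are hypothetical.

```python
import math


def answer_distribution(scores):
    """Softmax over candidate answer scores (assumed normalization)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def cross_entropy(probs, gold_index):
    """Negative log-probability assigned to the gold answer."""
    return -math.log(probs[gold_index])


probs = answer_distribution([2.0, 0.0, 0.0])
loss_if_gold_is_first = cross_entropy(probs, 0)
loss_if_gold_is_second = cross_entropy(probs, 1)
```

The loss is small when the gold answer receives most of the probability mass and grows as the mass moves to other candidates.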

Experiments

Dataset

We evaluate QA2MN and the baselines on PathQuestion [33] and WorldCup2014 [31], two representative datasets for complex multi-hop question answering.

- PathQuestion (PQ): It is a manually generated dataset built with predefined templates, and its knowledge base is adopted from a subset of FB13 [21]. PathQuestion-Large (PQL) is more challenging, with fewer training instances and a larger knowledge base adopted from Freebase [2]. Both contain two-hop relation questions (2H) and three-hop relation questions (3H).

- WorldCup2014 (WC): The dataset is based on a knowledge base about the soccer players who participated in the FIFA World Cup 2014. It contains single-relation questions (1H), two-hop relation questions (2H), and conjunctive questions (C); M denotes the mixture of 1H and 2H.

The statistics of PathQuestion and WorldCup2014 are listed in Table 2.

The complete KG setting in original dataset is too ideal, since the model has sufficient supportive information to answer the questions. However, there are often missing links in practical application. The model should also be able to work on an incomplete KG setting. Following [33], we simulate an incomplete KG setting, named PQ-50, by randomly removing half of the triples from the PQ-2H dataset.
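Simulating the incomplete setting amounts to sampling a subset of the triples. The following is a minimal sketch, assuming uniform sampling without replacement and a fixed seed for reproducibility; the function name `drop_triples` and its parameters are hypothetical.

```python
import random


def drop_triples(triples, keep_ratio=0.5, seed=0):
    """Keep a random fraction of the triples, preserving their original order."""
    rng = random.Random(seed)
    n_keep = int(len(triples) * keep_ratio)
    kept_idx = set(rng.sample(range(len(triples)), n_keep))
    return [t for i, t in enumerate(triples) if i in kept_idx]


# Toy chain graph with ten triples; half of them are removed.
toy_triples = [(f"e{i}", "r", f"e{i + 1}") for i in range(10)]
kept = drop_triples(toy_triples)
```

After the removal, some questions no longer have a complete path from the core entity to the answer, which is the situation the incomplete setting is meant to test.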

Evaluation metric

Following [19], we measure model performance by Hits@1, the percentage of examples for which the predicted answer exactly matches the gold one. When a question has multiple possible answers, the prediction is counted as correct if it matches any one of them.
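The metric can be computed as in the sketch below, assuming each example provides one top-ranked prediction and a set of acceptable gold answers; the function name `hits_at_1` is hypothetical.

```python
def hits_at_1(predictions, gold_answers):
    """Fraction of examples whose top prediction is among the gold answers."""
    correct = sum(1 for pred, gold in zip(predictions, gold_answers) if pred in gold)
    return correct / len(predictions)


score = hits_at_1(
    ["Spain", "Italy"],
    [{"Spain"}, {"France", "Germany"}],
)
```

In this toy case the first prediction matches its gold set and the second does not, so the score is 0.5.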

Implementation detail

We use ADAM [12] to optimize the trainable parameters. Gradients are clipped when their norm is bigger than 10. We partition the datasets in the proportion of 8:1:1 for training, validating and testing. The batch size is set to 48. The relation hop K is set to 3 and the reasoning hop Z is set to 3. The learning rate is initialized to \(10^{-3}\) and exponentially annealed in the range of [\(10^{-3}\), \(10^{-5}\)] with a decay rate of 0.96. The entity embedding dimension and the relation embedding dimension are set to 100. The token embedding dimension and hidden size are also set to 100. To increase model generalization, dropout mechanism is adopted by randomly masking 10% of the memory slots.
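The learning-rate schedule and the gradient clipping described above can be sketched as follows. This is a minimal sketch: the decay interval `decay_steps` is not stated in the text and is an assumption, clipping is assumed to rescale the whole gradient vector by its \(l_2\) norm, and both function names are hypothetical.

```python
import math


def learning_rate(step, base_lr=1e-3, min_lr=1e-5, decay_rate=0.96, decay_steps=1):
    """Exponentially decayed learning rate, floored at min_lr.

    decay_steps is an assumed hyperparameter (one decay per step by default).
    """
    lr = base_lr * decay_rate ** (step / decay_steps)
    return max(lr, min_lr)


def clip_by_norm(grads, max_norm=10.0):
    """Rescale a flat gradient vector so that its l2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


clipped = clip_by_norm([30.0, 40.0])  # norm 50 is rescaled to norm 10
```

The floor at \(10^{-5}\) keeps the rate inside the stated range once the exponential term has decayed below it.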

For the pre-training of KG embedding, we set the same optimizer and embedding dimension as above. We set the batch size to 64 and pre-train KG embeddings for 20 epochs.

Baseline

We compare QA2MN with six baselines, including the current state-of-the-art model:

- Seq2Seq [23]. It is an encoder–decoder model that adopts an LSTM to encode the input question sequence and another LSTM to decode the answer path.

- MemNN [22]. It is an end-to-end memory network that stores the KG triplets in memory arrays using a bag-of-words representation.

- KV-MemNN [16]. It uses a key-value memory neural network to generalize the original memory network by dividing the memory arrays into two parts. For each triplet, the head and the relation are stored in the key slot, and the tail is stored in the value slot.

- IRN [33]. It proposes an interpretable, hop-by-hop reasoning process to predict the complete intermediate relation path. The answer module chooses the corresponding entity from the KB at each hop, and the last selected entity is chosen as the answer.

- IRN-weak [33]. IRN needs the complete paths from topic entities to gold answers to be labeled, which requires extra annotation of the dataset. IRN-weak is a variant of IRN that only uses supervision from the final answer.

- SRN [19]. SRN formulates multi-relation question answering as a sequential decision problem. The model performs path search over the knowledge graph to obtain the answer and proposes a potential-based reward shaping strategy to alleviate the delayed and sparse reward problem caused by weak supervision.

Experimental result

The results are shown in Table 3. QA2MN outperforms or shows comparable performance to all the baselines on the two datasets, which demonstrates that QA2MN is effective and robust across different datasets and question types. Seq2Seq shows the worst performance on the two datasets, indicating that multi-hop question answering is a challenging problem and that the vanilla Seq2Seq model is not good at the complex reasoning process. KV-MemNN always outperforms MemNN, confirming that the key-value architecture of KV-MemNN gives more flexibility to encode the triplets in the KG and is more applicable to the multi-hop reasoning problem. After further observation, we draw the following conclusions:

(1) QA2MN shows robustness on both simple and complex questions.

We regard the datasets with fewer hops and larger data scale, namely PQ-2H and WC-1H, as simple questions. Correspondingly, complex questions have more hops and smaller data scale, namely PQ-3H, PQL-3H, WC-2H, and WC-C.

As can be seen from Table 3, QA2MN performs similarly to the prior state-of-the-art model on simple questions, where predicting the correct answer is less challenging because the answer is directly connected to the core entity. For complex questions, IRN and SRN lag significantly behind QA2MN, showing that multi-hop reasoning is a challenging task even for the prior state-of-the-art models. IRN initializes the question representation by summing the token embeddings into a single vector, which loses the priority information in the question. The action space of SRN grows exponentially as the number of reasoning hops increases. Therefore, a performance drop is inevitable for IRN and SRN as the question becomes more complex. On the other hand, the highest scores on PQ-3H, PQL-3H, WC-2H, and WC-C reveal that QA2MN is able to focus precisely on the proper position of the question and infer the correct entity from the candidate triplets. The results therefore suggest that QA2MN is more robust when facing complex multi-hop questions.

(2) QA2MN is effective in the incomplete KG setting.

In the incomplete KG setting, only half of the original triples are retained. Current models such as IRN require a path between the core entity and the answer entity. In contrast, QA2MN uses a dropout mechanism to randomly mask triplets in the memory slots to prevent over-fitting. QA2MN can implicitly explore the observed and unobserved paths around the core entity, which greatly improves the robustness of the model in the incomplete setting. Therefore, even if there is no path between the core entity and the answer entity, QA2MN can still predict the answer.

(3) QA2MN meets current demand with weak-supervision learning.

IRN outperforms IRN-weak because IRN uses full supervision along the whole intermediate relation and entity path. However, fully supervised methods need a large amount of data annotation, which is costly and impractical in most cases [19]. That is to say, weakly supervised or unsupervised methods are better suited to current demands.

QA2MN and SRN achieved the best and second-best performance on the two datasets, which confirms that weak-supervised method has great potential to explore the inherent semantic information in knowledge graph.

Ablation study

To further verify the significance of the graph context-based knowledge graph embedding and the question-aware query update mechanism, we do model ablation to explore the following two questions: (i) is KG embedding necessary for model training? (ii) is the question-aware query update mechanism helpful for reasoning over the knowledge graph? We use two ablation models to answer them.

- QA2MN\(\backslash \)KE. It removes the pre-training of KG embedding.

- QA2MN\(\backslash \)QA. It removes the question-aware query update mechanism and replaces it with standard key-value memory neural network.

We evaluate the ablation models on the PQ and PQL datasets and take KV-MemNN for comparison. As shown in Table 4, compared with QA2MN, the performance drops noticeably after removing either of the two components, which confirms that both the knowledge graph embedding and the question-aware query update mechanism are effective for improving the model performance.

QA2MN\(\backslash \)QA always outperforms KV-MemNN, which confirms that KG embedding adds context information from the knowledge base to improve the representation of entities and relations. QA2MN\(\backslash \)KE outperforms KV-MemNN as well, which confirms that the question-aware query update mechanism helps the model deal with more complex questions. To account for the performance improvement, we visualize the weight distributions on the question during the reasoning process in the next subsection.

Visualization analysis

To illustrate how QA2MN allocates the attention hop-by-hop in the reasoning process, we choose a testing example from PathQuestion and visualize the attention distributions on the question in each reasoning step.

Figure 5 shows the attention heat-map of the question “what is the archduke_johann_of_austria -s mother -s father -s religious belief ?”. The question contains one core entity (i.e., “archduke_johann_of_austria”) and three relations (i.e., “mother”, “father”, and “religious belief”). To answer the question, reasoning over three triplets is needed, i.e., (archduke_johann_of_austria, parents, maria_louisa_of_spain), (maria_louisa_of_spain, parents, charles_iii_of_spain), and (charles_iii_of_spain, religion, catholicism). From Fig. 5, we find that QA2MN can focus on the correct position during the reasoning process, as humans do. The question-aware attention first detects the relation “mother”. Then, the attention turns to “father” and finally focuses on “religious belief”.

Previous work often uses a bag-of-words representation or an RNN/LSTM/GRU to encode the question into a single integrated vector, resulting in the loss of the inherent priority information in the sentence. In the reasoning process, the integrated vector is used to retrieve and rank the candidate triplets. Complex reasoning is difficult with such a coarse-grained semantic representation. Figure 5 intuitively illustrates the fine-grained information brought by question-aware attention, which is also the main reason for the performance improvement. That is to say, question-aware attention can effectively explore the priority of the question and use the fine-grained information for precise reasoning.

Conclusion

Multi-hop question answering over knowledge bases is a challenging task, and two main aspects need to be addressed. First, multi-hop questions carry more varied and complicated semantic information. Second, the triplets are implicitly related, as some of them share head or tail entities. We propose QA2MN to dynamically focus on different parts of the question during the reasoning steps. In addition, KG embedding is incorporated to learn the representation of entities and relations and to extract the context information in the knowledge graph. Extensive experiments demonstrate that QA2MN achieves state-of-the-art performance on two representative datasets.

In real applications, there are more complex questions that require arithmetic functions or Boolean logical operations. Furthermore, users may ask a sequence of related questions, which leads to the co-reference resolution problem. We will explore these problems in future work.

Notes

Full supervision means annotating the complete answer path up to the final answer. Weak supervision means that only the final answer is labeled. No supervision means that no label is needed. For example, considering the question “which country does L_MESSI play professional in ?”, full supervision would annotate the complete answer path as (L_MESSI, plays_position, FC_Barcelona), (FC_Barcelona, is_in_country, Spain) and “Spain”, while weak supervision only resorts to the final answer “Spain”.
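The difference between the supervision levels can be made concrete as a data-structure sketch. The class `TrainingExample` and its field names are hypothetical and chosen only for illustration; the question, triplets and answer are copied from the note above.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Triplet = Tuple[str, str, str]


@dataclass
class TrainingExample:
    """One training question with whatever labels are available."""

    question: str
    answer: Optional[str] = None           # final answer, if labeled
    path: Optional[List[Triplet]] = None   # full answer path, if labeled

    def supervision(self) -> str:
        if self.answer is None:
            return "none"
        return "full" if self.path else "weak"


QUESTION = "which country does L_MESSI play professional in ?"

full = TrainingExample(
    question=QUESTION,
    answer="Spain",
    path=[
        ("L_MESSI", "plays_position", "FC_Barcelona"),
        ("FC_Barcelona", "is_in_country", "Spain"),
    ],
)
weak = TrainingExample(question=QUESTION, answer="Spain")
unlabeled = TrainingExample(question=QUESTION)

print(full.supervision(), weak.supervision(), unlabeled.supervision())
```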

References

Berant J, Chou A, Frostig R, Liang P (2013) Semantic parsing on Freebase from question-answer pairs. In: Proceedings of the 2013 conference on empirical methods in natural language processing, association for computational linguistics, Seattle, Washington, USA, pp 1533–1544

Bollacker K, Evans C, Paritosh P, Sturge T, Taylor J (2008) Freebase: a collaboratively created graph database for structuring human knowledge. In: Proceedings of the 2008 ACM SIGMOD international conference on management of data, association for computing machinery, New York, NY, USA, SIGMOD’08, pp 1247–1250

Bordes A, Usunier N, Garcia-Duran A, Weston J, Yakhnenko O (2013) Translating embeddings for modeling multi-relational data. In: Neural information processing systems (NIPS), South Lake Tahoe, United States, pp 1–9

Bordes A, Chopra S, Weston J (2014) Question answering with subgraph embeddings. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), association for computational linguistics, Doha, Qatar, pp 615–620

Bordes A, Usunier N, Chopra S, Weston J (2015) Large-scale simple question answering with memory networks. arXiv:1506.02075

Chakraborty N, Lukovnikov D, Maheshwari G, Trivedi P, Lehmann J, Fischer A (2019) Introduction to neural network based approaches for question answering over knowledge graphs. arXiv:1907.09361

Chen Y, Wu L, Zaki MJ (2019) Bidirectional attentive memory networks for question answering over knowledge bases. In: Proceedings of the 2019 conference of the north american chapter of the association for computational linguistics: human language technologies, volume 1 (long and short papers), association for computational linguistics, Minneapolis, Minnesota, pp 2913–2923

Chung J, Gulcehre C, Cho K, Bengio Y (2014) Empirical evaluation of gated recurrent neural networks on sequence modeling. In: NIPS 2014 workshop on deep learning, December 2014

Hao Y, Zhang Y, Liu K, He S, Liu Z, Wu H, Zhao J (2017) An end-to-end model for question answering over knowledge base with cross-attention combining global knowledge. In: Proceedings of the 55th annual meeting of the association for computational linguistics (volume 1: long papers), association for computational linguistics, Vancouver, Canada, pp 221–231

Huang X, Zhang J, Li D, Li P (2019) Knowledge graph embedding based question answering. In: Culpepper JS, Moffat A, Bennett PN, Lerman K (eds) Proceedings of the twelfth ACM international conference on web search and data mining, WSDM 2019, Melbourne, VIC, Australia, February 11–15, 2019, ACM, pp 105–11. https://doi.org/10.1145/3289600.3290956

Jain S (2016) Question answering over knowledge base using factual memory networks. In: Proceedings of the NAACL student research workshop, association for computational linguistics, San Diego, California, pp 109–115

Kingma DP, Ba J (2015) Adam: A method for stochastic optimization. In: The 3rd International Conference on Learning Representations

Lehmann J, Isele R, Jakob M, Jentzsch A, Kontokostas D, Mendes PN, Hellmann S, Morsey M, Van Kleef P, Auer S et al (2015) DBpedia - a large-scale, multilingual knowledge base extracted from Wikipedia. Semant Web 6(2):167–195

Liang P (2013) Lambda dependency-based compositional semantics. arXiv:1309.4408

Lin Y, Liu Z, Sun M, Liu Y, Zhu X (2015) Learning entity and relation embeddings for knowledge graph completion. In: Proceedings of the twenty-ninth AAAI conference on artificial intelligence, AAAI Press, AAAI’15, pp 2181–2187

Miller A, Fisch A, Dodge J, Karimi AH, Bordes A, Weston J (2016) Key-value memory networks for directly reading documents. In: Proceedings of the 2016 conference on empirical methods in natural language processing, association for computational linguistics, Austin, Texas, pp 1400–1409

Nathani D, Chauhan J, Sharma C, Kaul M (2019) Learning attention-based embeddings for relation prediction in knowledge graphs. In: Proceedings of the 57th annual meeting of the association for computational linguistics, association for computational linguistics, Florence, Italy, pp 4710–4723. https://doi.org/10.18653/v1/P19-1466

Oguz B, Chen X, Karpukhin V, Peshterliev S, Okhonko D, Schlichtkrull M, Gupta S, Mehdad Y, Yih S (2020) Unified open-domain question answering with structured and unstructured knowledge. arXiv:2012.14610

Qiu Y, Wang Y, Jin X, Zhang K (2020) Stepwise reasoning for multi-relation question answering over knowledge graph with weak supervision. In: Proceedings of 13th ACM international WSDM conference, pp 474–482

Saxena A, Tripathi A, Talukdar P (2020) Improving multi-hop question answering over knowledge graphs using knowledge base embeddings. In: Proceedings of the 58th annual meeting of the association for computational linguistics, association for computational linguistics, Online, pp 4498–4507. https://doi.org/10.18653/v1/2020.acl-main.412

Socher R, Chen D, Manning CD, Ng AY (2013) Reasoning with neural tensor networks for knowledge base completion. In: Burges CJC, Bottou L, Ghahramani Z, Weinberger KQ (eds) Advances in neural information processing systems 26: 27th annual conference on neural information processing systems 2013. Proceedings of a meeting held December 5-8, 2013, Lake Tahoe, pp 926–934

Sukhbaatar S, Szlam A, Weston J, Fergus R (2015) End-to-end memory networks. In: Cortes C, Lawrence ND, Lee DD, Sugiyama M, Garnett R (eds) Advances in neural information processing systems 28: annual conference on neural information processing systems 2015, December 7–12, 2015, Montreal, Quebec, Canada, pp 2440–2448

Sutskever I, Vinyals O, Le QV (2014) Sequence to sequence learning with neural networks. arXiv:1409.3215

Unger C, Bühmann L, Lehmann J, Ngonga Ngomo AC, Gerber D, Cimiano P (2012) Template-based question answering over rdf data. In: Proceedings of the 21st international conference on world wide web, association for computing machinery, New York, NY, USA, WWW’12, pp 639–648

Vakulenko S, Garcia JDF, Polleres A, de Rijke M, Cochez M (2019) Message passing for complex question answering over knowledge graphs. arXiv:1908.06917

Wang Q, Mao Z, Wang B, Guo L (2017) Knowledge graph embedding: a survey of approaches and applications. IEEE Trans Knowl Data Eng 29(12):2724–2743. https://doi.org/10.1109/TKDE.2017.2754499

Weston J, Bordes A, Yakhnenko O, Usunier N (2013) Connecting language and knowledge bases with embedding models for relation extraction. In: Conference on empirical methods in natural language processing. Seattle, United States, pp 1366–1371

Wu Z, Kao B, Wu T, Yin P, Liu Q (2020) PERQ: predicting, explaining, and rectifying failed questions in KB-QA systems. In: Caverlee J, Hu XB, Lalmas M, Wang W (eds) WSDM ’20: the thirteenth ACM international conference on web search and data mining, Houston, TX, USA, February 3-7, 2020, ACM, pp 663–671. https://doi.org/10.1145/3336191.3371782

Xu K, Lai Y, Feng Y, Wang Z (2019) Enhancing key-value memory neural networks for knowledge based question answering. In: Proceedings of the 2019 Conference of the North American chapter of the association for computational linguistics: human language technologies, volume 1 (long and short papers), association for computational linguistics, Minneapolis, Minnesota, pp 2937–2947

Yih W, Chang MW, He X, Gao J (2015) Semantic parsing via staged query graph generation: Question answering with knowledge base. In: Proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing (volume 1: long papers), association for computational linguistics, Beijing, pp 1321–1331

Zhang L, Winn JM, Tomioka R (2016) Gaussian attention model and its application to knowledge base embedding and question answering. arXiv:1611.02266

Zhang Y, Dai H, Kozareva Z, Smola AJ, Song L (2018) Variational reasoning for question answering with knowledge graph. In: McIlraith SA, Weinberger KQ (eds) Proceedings of the thirty-second AAAI conference on artificial intelligence, (AAAI-18), the 30th innovative applications of artificial intelligence (IAAI-18), and the 8th AAAI symposium on educational advances in artificial intelligence (EAAI-18), New Orleans, Louisiana, USA, February 2–7, 2018, AAAI Press, pp 6069–6076

Zhou M, Huang M, Zhu X (2018) An interpretable reasoning network for multi-relation question answering. In: Proceedings of the 27th international conference on computational linguistics, association for computational linguistics, Santa Fe, New Mexico, USA, pp 2010–2022

Funding

This work was partially funded by the National Natural Science Foundation of China (Grant number 61473300).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, X., Alazab, M., Li, Q. et al. Question-aware memory network for multi-hop question answering in human–robot interaction. Complex Intell. Syst. 8, 851–861 (2022). https://doi.org/10.1007/s40747-021-00448-0

DOI: https://doi.org/10.1007/s40747-021-00448-0