Abstract

The increasing reliability and availability requirements of power electronic systems have drawn great concern in many industrial applications. This paper addresses the difficulty of extracting fault characteristics and classifying fault modes of the three-phase full-bridge inverter (TFI) used as the drive module of a brushless DC motor (BLDCM). A hybrid convolutional neural network (HCNN) model consisting of a one-dimensional CNN (1D-CNN) and a two-dimensional CNN (2D-CNN) is proposed, which can extract more effective spatial features for TFI fault diagnosis. The frequency spectra obtained by preprocessing the three-phase current signals are used as the inputs of the 1D-CNN and the 2D-CNN, which each perform feature extraction. The feature information of the two networks is then combined in the fully connected layer of the HCNN. Finally, the performance status of the TFI is identified by a softmax classifier trained with the Adam optimizer. Several groups of experiments were carried out with the BLDCM under different operating conditions. The results show that the fused features are more discriminative, so the presented network model achieves better classification accuracy, which verifies its feasibility and its superiority over the other networks.

Introduction

Power electronic converters (PECs) are widely used in electric vehicles (EVs), smart grids, renewable energy generation, aerospace and other fields. Many manufacturers are increasingly aware of the protection efficiency and maintenance costs of PECs. Reliability and availability are essential to these industrial applications [11, 13, 25, 28], as a fault may not only cause an unscheduled shutdown but also lead to a disastrous accident. Thus, prognostics and health management (PHM), a development of condition-based maintenance (CBM) that can forecast the moment at which faults occur and greatly improve operation and maintenance efficiency, has received great attention in numerous fields [8, 10, 23, 35].

With the development of new energy electric vehicles, the brushless DC motor (BLDCM) system is receiving more and more attention, because its reliability affects the performance and safety of the whole vehicle. The BLDCM drive system is a typical PEC, and its faults mainly come from vulnerable components such as the power MOSFETs, capacitors and connectors. As the core part of the drive system, the power MOSFET is more prone to failure because of its frequent on–off switching and the influence of thermal and electrical overstress. If a power MOSFET failure cannot be detected effectively, it will adversely affect the motor drive system and result in immeasurable economic losses [4, 16, 20].

The faults of a power MOSFET can be divided into open-circuit faults (OCFs) and short-circuit faults (SCFs). An SCF usually develops rapidly, so the hardware circuit is equipped with corresponding protection measures, such as a fast fuse connected in series in the drive circuit, which convert the SCF into an OCF. However, the OCF of the inverter is not easy to detect, because the circuit can still operate for a period of time. If the OCF is not fixed in time, it will cause a secondary fault in the other power MOSFETs or even in the whole power electronic circuit, because the current through the other power MOSFETs and components may greatly increase their electrical stress. At the same time, harmonic interference will also damage the devices while they operate under fault [34, 36]. Therefore, fault diagnosis technology for power electronic systems helps guarantee their reliability and availability and makes them more intelligent and safer [33].

Related work

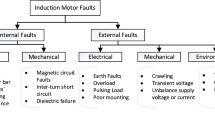

The fault diagnosis methods for the inverter are shown in Fig. 1 and are mainly divided into model-based methods and data-driven methods. A method based on an analytical model usually requires an understanding of the dynamic properties and operating mechanism of the system in order to establish an accurate mathematical model. A residual signal is formed from the measured input and output signals and is then analyzed to realize fault diagnosis. Hu et al. [17] used four observers, corresponding to four types of switch open-circuit faults, to detect and locate faults. Their open-circuit fault diagnosis method for the switches of a single-phase PWM rectifier is based on switched system theory and needs no additional sensors. For open-circuit fault diagnosis of the voltage source inverter (VSI), Chen et al. [9] proposed an online method based on a current observer: by analyzing the state-space model of the VSI when a fault occurs, a state observer is constructed to estimate the current. However, methods based on analytical models rely too heavily on precise mathematical models. Once the structure of the object under study changes, it must be remodeled.

Data-driven methods do not need an exact analytical model of the system; they directly analyze and process the measured data, and they have been widely used in fault diagnosis. Considering that the topology of the inverter is highly symmetrical and that a failure destroys the symmetry of the three-phase current, Hang et al. [6] proposed using the Manhattan distance to detect and locate open-circuit faults, based on a similarity measurement algorithm applied to the order residual of the current. For open-circuit faults of multiple IGBTs in the inverter, Bae et al. [7] studied a fault diagnosis technique based on Park-vector online monitoring and identification. The open-circuit fault of a switch is detected from the dwell time of the three-phase current vector. The open branch is then determined from the average value of the normalized current, and fault diagnosis is performed. However, this kind of data-driven method requires kernel principal component analysis of a specific fault signal, which leads to an excessive workload.

With the continuous development of artificial intelligence, more and more machine learning methods are being applied to fault diagnosis, as shown in Table 1. CNN is widely used in speech recognition, image processing and image recognition because of its powerful feature extraction ability. CNN does not need to know the specific features of the input; it encapsulates feature extraction through learning. This advantage can solve the problem of low fault diagnosis accuracy caused by the lack of obvious features. Watanabe et al. [2] used a real-time monitoring scanning acoustic microscope to capture images during a failed power cycle test. They designed a CNN-based image classifier and added pre-training and overlapping pooling to improve the accuracy of fault diagnosis. Poma et al. used the fuzzy gravitational search algorithm to optimize a convolutional neural network. It can optimize not only the size of the convolution kernel [26] but also the number of images per block that enter the training phase [27]. The optimized CNN is applied to recognition and image classification. Li et al. [31] proposed an infinite convolutional neural network (FAC-CNN) for adaptive fusion of multi-source data. Multi-source data are captured by an adaptive convolution kernel that matches the number of data channels, and an irregular convolution kernel is introduced to expand the field of view of FAC-CNN. Zare et al. [32] considered changes in each measurement variable and identified subsystem failures using a multi-channel convolutional neural network with multiple parallel local heads; images composed of the time-domain signals obtained from the wind turbine are input into the network. However, most machine learning networks are evaluated in an ideal experimental environment. Under changing working conditions, a single neural network suffers from problems such as low fault diagnosis accuracy and poor generalization ability.

To solve these problems, a convolutional neural network based on fault feature fusion is proposed, which focuses on mining the deep information of the original fault data and avoids complex feature engineering. After preprocessing, the one-dimensional structural features of the fault samples are extracted by the 1D-CNN and the two-dimensional spatial features are extracted by the 2D-CNN. Deeper feature information is then generated by fusing the two in the fully connected layer. This both compensates for the smaller receptive field of the one-dimensional network and prevents the two-dimensional network from destroying the spatial structure of the original data. The main contributions of this paper can be summarized as follows:

1. A hybrid convolutional neural network structure is proposed, which can adaptively learn features.

2. PCA visual analysis shows that the feature extraction effect of HCNN is significantly better than that of the other networks.

3. A new index is applied to evaluate the effect of feature extraction.

4. HCNN is capable of fault diagnosis under different working conditions.

The rest of this paper is organized as follows: "Theory of HCNN" introduces the theory of CNN; "Fault diagnosis model based on HCNN" introduces the process of HCNN fault diagnosis. In "Experiment study and analysis", the effectiveness of HCNN is verified by experiments. Finally, conclusions are drawn in "Conclusion".

Theory of HCNN

Convolutional neural network

The traditional convolutional neural network is a typical feedforward neural network whose powerful feature extraction ability makes it widely used in image recognition, speech detection and other fields. Since LeCun et al. proposed LeNet-5 [38], shown in Fig. 2, the basic structure of the convolutional neural network has been largely settled. A typical convolutional neural network is composed of an input layer, convolutional layers, pooling layers and a fully connected layer.

The function of the convolutional layer is to adaptively extract the fault characteristics of the input data while eliminating noise. The appropriate convolution kernel is selected to process the input data in turn. Different convolution kernels represent different features. Usually, the convolutional neural network increases the feature extraction ability of the model by adding different convolution kernels, then obtains multi-layer data with fault features. The convolution formula [19] is:

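$$ x_{j}^{l} = f\left( {\sum\limits_{{i \in M_{j} }} {x_{i}^{l - 1} * w_{ij}^{l} + b_{j}^{l} } } \right) $$(1)

where \(M_{j}\) is the dimension of the input data, l is the number of layers of the network, \(x_{i}^{l - 1}\) is the part of the input data to be convolved, \(w_{ij}^{l}\) is the weight matrix, \(b_{j}^{l}\) is the bias matrix, and f(·) is the activation function [15]. The activation function selected for this model is the ReLU function, whose mathematical expression is:

$$ f(x) = \max (0,x) $$(2)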

The pooling layer is also called the downsampling layer. The maximum pooling function and the mean pooling function are commonly used: the pooling window slides over the target data in turn and extracts the maximum or average value of the corresponding area. The role of the pooling layer is therefore to extract the main features of the data output by the convolutional layer while reducing its dimensions. This model uses the maximum pooling function. Its expression is:

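$$ x_{j}^{l} = f\left( {\beta_{j}^{l} \,{\text{down}}(x_{j}^{l - 1} ) + b_{j}^{l} } \right) $$(3)

where \(\beta_{j}^{l}\) is the weight matrix, \(b_{j}^{l}\) is the bias matrix, and down(·) is the downsampling function.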

The fully connected layer combines the fault features extracted by the convolutional and pooling layers. It is connected to the last pooling layer, and the prediction result is finally obtained by the softmax classifier. The expression of the fully connected layer is:

$$ y^{k} = f\left( {w^{k} x^{k - 1} + b^{k} } \right) $$(4)

where k is the network layer number, \(y^{k}\) is the output of the fully connected layer, \(w^{k}\) is the weight coefficient, \(x^{k - 1}\) is a one-dimensional feature vector, \(b^{k}\) is the bias coefficient, and f(·) is the softmax activation function [14], whose expression is:

$$ P(y_{i} = j \mid x_{i} ;w) = \frac{{\exp (x_{i}^{{\rm T}} w_{j} )}}{{\sum\nolimits_{c = 1}^{k} {\exp (x_{i}^{{\rm T}} w_{c} )} }} $$(5)

where the right-hand side represents the probability that the ith sample belongs to the jth category.
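The layer operations described in this subsection (convolution, ReLU activation, max pooling and softmax) can be illustrated with a minimal NumPy sketch. It is not the implementation used in the paper: the toy signal, the kernel values and the function names are hypothetical, and the convolution is written as the unflipped sliding dot product that CNN libraries normally compute.

```python
import numpy as np

def conv1d_valid(x, w, b):
    """Slide kernel w over the 1-D signal x (no padding, stride 1) and add bias b."""
    n = len(x) - len(w) + 1
    return np.array([np.dot(x[i:i + len(w)], w) for i in range(n)]) + b

def relu(z):
    """ReLU activation: keep positive values, set negative values to zero."""
    return np.maximum(0.0, z)

def max_pool1d(z, size=2, stride=2):
    """Take the maximum of each window of `size` points, moving by `stride`."""
    n = (len(z) - size) // stride + 1
    return np.array([z[i * stride:i * stride + size].max() for i in range(n)])

def softmax(scores):
    """Turn class scores into probabilities (shifted for numerical stability)."""
    e = np.exp(scores - scores.max())
    return e / e.sum()

# Hypothetical toy input and kernel, chosen only to make the steps easy to follow.
x = np.array([0.0, 1.0, 3.0, 2.0, 0.0, -1.0, 1.0, 0.0])
w = np.array([1.0, 0.0, -1.0])

feature = max_pool1d(relu(conv1d_valid(x, w, b=0.0)))
probs = softmax(np.array([2.0, 1.0, 0.1]))
```

Here `feature` is the pooled feature map of one convolution kernel, and `probs` is a probability vector over three hypothetical classes.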

HCNN model

Traditional CNN has been widely used in image recognition, natural language processing and other fields [5, 22, 30]. Because of its powerful feature extraction capability, an improved CNN is applied to TFI fault diagnosis in this paper. Since the fault signal is a one-dimensional spectrum signal, a 1D-CNN is a natural choice of network structure. However, with the same convolution kernel size, a 1D-CNN has a smaller receptive field, so each output sees less of the data, and it is prone to overfitting during training. The fault signal can also be converted into matrix form as the input of a 2D-CNN. However, this may destroy the spatial correlation in the original sequence, which loses fault information and prevents deep learning from making full use of its ability to learn the characteristics of the original signal automatically [35]. Therefore, HCNN is proposed in this paper; its network structure is shown in Fig. 3.

As can be seen from Fig. 3, the data processed by FFT are used as the inputs of the 1D-CNN and the 2D-CNN in one-dimensional and two-dimensional form, respectively. Each network consists of two convolutional layers alternating with two pooling layers to extract features from the fault sample data. The fault feature vectors extracted by the two networks are fused in the fully connected layer to generate the fault feature vector of HCNN. Finally, the softmax classifier completes the fault mode recognition. In the learning process of HCNN, the cross-entropy loss function is selected as the training objective for the fused fault features; its mathematical expression is:

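$$ J(w) = - \frac{1}{m}\left[ {\sum\limits_{i = 1}^{m} {\sum\limits_{j = 1}^{n} {I(y_{i} = j)\log \frac{{\exp (x_{i}^{{\rm T}} w_{j} )}}{{\sum\nolimits_{c = 1}^{n} {\exp (x_{i}^{{\rm T}} w_{c} )} }}} } } \right] $$(6)

where \(I(\cdot)\) is the indicator function, (xi, yi) is a training sample of the model, m is the number of training samples, and n is the total number of fault categories. By calculating the partial derivatives of formula (6), the weight parameters w and b of the CNN can be updated layer by layer:

$$ w^{\prime} = w - \eta \frac{\partial J(w)}{\partial w} $$(7)

$$ b^{\prime} = b - \eta \frac{\partial J(w)}{\partial b} $$(8)

where \(w^{\prime}\) and \(b^{\prime}\) are the updated weight and bias, w and b are the current weight and bias, and η ∈ (0, 1) is the learning rate. The goal of training is to minimize J(w).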
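The training objective and the parameter update described above can be sketched for a single softmax layer as follows. This is a hedged illustration rather than the authors' code: the random "fused features", the feature width of 8, the learning rate of 0.1 and the function names are assumptions, and only the last layer is updated instead of the whole network.

```python
import numpy as np

def softmax_rows(scores):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    e = np.exp(scores - scores.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(X, y, W, b):
    """Mean cross-entropy loss J and its gradients with respect to W and b."""
    m = X.shape[0]
    P = softmax_rows(X @ W + b)            # predicted class probabilities, shape (m, n)
    onehot = np.eye(W.shape[1])[y]         # indicator I(y_i = j)
    J = -np.sum(onehot * np.log(P + 1e-12)) / m
    d_scores = (P - onehot) / m            # gradient of J with respect to the scores
    return J, X.T @ d_scores, d_scores.sum(axis=0)

rng = np.random.default_rng(0)
X = rng.normal(size=(70, 8))               # hypothetical fused feature vectors
y = rng.integers(0, 7, size=70)            # labels for the seven state modes
W, b = np.zeros((8, 7)), np.zeros(7)
eta = 0.1                                  # learning rate, assumed value in (0, 1)

losses = []
for _ in range(50):
    J, dW, db = cross_entropy(X, y, W, b)
    losses.append(J)
    W -= eta * dW                          # w' = w - eta * dJ/dw
    b -= eta * db                          # b' = b - eta * dJ/db
```

With all-zero initial weights every class is equally likely, so the first loss value equals log 7; the later values in `losses` decrease as the update is repeated.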

Fault diagnosis model based on HCNN

Fault mode of TFI

The schematic diagram of the three-phase full-bridge brushless DC motor drive circuit is shown in Fig. 4, where T1–T6 are power MOSFETs, D1–D6 are flyback diodes, and Vin is the 48 V DC bus voltage. The fault data are obtained from the three-phase winding currents ia, ib, ic. This paper considers single open-circuit faults of the six switches, which together with the normal working state give seven state modes in total. The failure modes are listed in Table 2.

Fault diagnosis process

The flowchart of fault diagnosis based on HCNN is shown in Fig. 5. The specific steps are as follows:

1. Collect the three-phase current signals ia, ib, ic of phases A, B and C of the DC–AC drive circuit as the fault signals.

2. Use FFT to convert the measured current signals into the frequency domain. An appropriate part of the frequency-domain signal is intercepted as the fault feature vector, which is normalized to obtain the fault data samples. The samples are divided into a training set and a testing set.

3. HCNN training stage: first, manually assign the same training parameters (such as the number of hidden layer nodes and the activation function) to the 1D-CNN and the 2D-CNN. The training set is then input into HCNN, the network weights and biases are adjusted continuously through layer-by-layer training and backpropagation, and the softmax classifier in the last layer performs fault diagnosis.

4. HCNN testing stage: the testing set is input into the trained HCNN, the fault diagnosis accuracy is calculated in different situations, and the results are compared with SDAE, 1D-CNN and 2D-CNN to verify the effectiveness of the model.
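The preprocessing in steps 1 and 2 can be sketched as follows. The sketch makes several assumptions that the text does not state: min-max scaling is used for the normalization, the amplitude spectrum is taken with a real FFT over the whole record, and the synthetic 50 Hz three-phase currents merely stand in for measured data. The 432 retained points per phase, the 200 kHz sampling frequency and the 100 k-point record length follow the experimental section of the paper.

```python
import numpy as np

N_BINS = 432  # frequency points kept per phase

def fault_feature_vector(ia, ib, ic, n_bins=N_BINS):
    """Concatenate the first n_bins amplitude-spectrum points of each phase current."""
    spectra = [np.abs(np.fft.rfft(sig))[:n_bins] for sig in (ia, ib, ic)]
    v = np.concatenate(spectra)
    # Assumption: min-max scaling to [0, 1]; the paper only says "normalized".
    span = v.max() - v.min()
    return (v - v.min()) / span if span > 0 else np.zeros_like(v)

# Synthetic stand-in for one measured record (not real fault data).
fs, n = 200_000, 100_000
t = np.arange(n) / fs
ia = np.sin(2 * np.pi * 50 * t)
ib = np.sin(2 * np.pi * 50 * t - 2 * np.pi / 3)
ic = np.sin(2 * np.pi * 50 * t + 2 * np.pi / 3)

v = fault_feature_vector(ia, ib, ic)
x_1d = v.reshape(1, -1)    # 1 x 1296 input for the 1D-CNN
x_2d = v.reshape(36, 36)   # 36 x 36 input for the 2D-CNN
```

The same vector is fed to both branches; only its shape differs.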

Experiment study and analysis

Experimental platform

The experimental platform is shown in Fig. 6. The fan load is fixed on the shaft of the BLDCM, whose rated speed is 800 rad/min. In the experiment, the drive signals are controlled by software to simulate open-circuit faults of the different power MOSFETs. Similarly, the fan speed is controlled by software so that the BLDCM works under different load conditions. Three load situations are used, with speeds of 550 rad/min, 650 rad/min and 750 rad/min, which are recorded as Cload1, Cload2 and Cload3. Under each load condition, the phase currents ia, ib, ic are sampled 100 times for each state mode (including the normal working state). The current is converted into a voltage signal by the ACS712 chip, the sampling frequency of the data collector is set to 200 kHz, and each record contains 100 k sampling points.

When the BLDCM is in the FC2 failure mode, the waveforms of the phase currents ia, ib, ic are as shown in Fig. 7a. FFT is used to analyze them in the frequency domain, and the resulting amplitude spectrum is shown in Fig. 7b. The first 432 frequency points of the amplitude spectra of ia, ib and ic are taken as the fault feature vector, so its dimension is 1296, and there are 700 fault samples in each load situation. The samples are randomly divided into three groups. The ratio of the testing set to the training set in each group is shown in Table 3.
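As a rough check on these numbers, and assuming that the FFT is taken over each full 100 k-point record without zero padding (the text does not say so explicitly), the frequency resolution \(\Delta f\) and the highest retained frequency \(f_{431}\) work out as:

$$ \Delta f = \frac{f_{s}}{N} = \frac{200\,000\;{\text{Hz}}}{100\,000} = 2\;{\text{Hz}},\qquad f_{431} = 431 \times 2\;{\text{Hz}} = 862\;{\text{Hz}} $$

$$ 3 \times 432 = 1296 = 36^{2} ,\qquad 7 \times 100 = 700 $$

Under this assumption the 432 points retained for each phase cover 0 Hz to 862 Hz. The second line gives the feature dimension, which can be reshaped into a 36 × 36 matrix, and the number of samples per load situation (seven state modes with 100 records each).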

Model creation

The main parameters involved in HCNN are structural parameters and training parameters. The structural parameters directly affect the adaptive feature extraction capabilities of HCNN, including the size and number of convolution kernels, etc. The structure parameters of HCNN are shown in Table 4. Training parameters affect the quality of the network model and directly affect the accuracy of fault diagnosis. Parameters usually include optimizer, optimizer learning rate, batch number, number of iterations, etc. The training parameters of HCNN are shown in Table 5.

The sample dimension of the original data is 1296, so the input of the 1D-CNN is 1 × 1296 and that of the 2D-CNN is 36 × 36. Both the 1D-CNN and the 2D-CNN are composed of two convolutional layers, two pooling layers and a fully connected layer, with the convolutional and pooling layers alternating. The convolutional layers use the common 3 × 1 and 3 × 3 convolution kernels with a step size of 1. The pooling layers use smaller 2 × 1 and 2 × 2 pooling windows with a step size of 2. This choice of structural parameters not only expands the receptive field of the model input and increases the amount of input information, but also deepens the network structure and enhances its feature extraction capability.
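The effect of these structural parameters on the feature map sizes can be traced with a short calculation. The sketch assumes unpadded ("valid") convolutions and an illustrative 16 kernels in the last convolutional layer of each branch; neither is stated in the text, and the actual values are those of Table 4.

```python
def conv_out(n, kernel=3, stride=1):
    """Output length of an unpadded convolution along one axis."""
    return (n - kernel) // stride + 1

def pool_out(n, window=2, stride=2):
    """Output length of a pooling layer along one axis."""
    return (n - window) // stride + 1

def branch_size(n):
    """Apply two convolution + pooling stages to an axis of length n."""
    for _ in range(2):
        n = pool_out(conv_out(n))
    return n

len_1d = branch_size(1296)   # 1296 -> 1294 -> 647 -> 645 -> 322
side_2d = branch_size(36)    # 36 -> 34 -> 17 -> 15 -> 7

N_FILTERS = 16               # hypothetical kernel count, see Table 4 for the real one
fused = len_1d * N_FILTERS + side_2d ** 2 * N_FILTERS
```

Under these assumptions the flattened 1D branch contributes 322 values per kernel and the 2D branch 7 × 7 = 49 values per kernel, and `fused` is the length of the concatenated vector that enters the fully connected layer.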

The fault diagnosis algorithms used in this experiment are all executed in the PyCharm environment on a 64-bit Windows 10 system with an Intel Core i7-9750 central processing unit and 16 GB of memory. The software environment is Python 3.6 with tensorflow-gpu 1.13.2.

The Adam optimizer is selected to train the network model. According to the experiment in [3], the best classification result is achieved when the learning rate is 0.001, which is verified in Fig. 8a. The effect of the number of iterations is shown in Fig. 8b: after 400 iterations the curve is basically stable. To avoid minor fluctuations, the number of epochs is set to 500 in this article.
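For reference, one Adam update step can be sketched in NumPy as below. The learning rate of 0.001 is the value given above; the decay rates 0.9 and 0.999 and the constant 1e-8 are the commonly used defaults and are assumed here, since the text does not list them. The quadratic objective is a toy stand-in for the network loss.

```python
import numpy as np

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step counter."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of the gradient
    v = beta2 * v + (1 - beta2) * grad ** 2     # running mean of the squared gradient
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v

w = np.array([1.0, -2.0])
m, v = np.zeros_like(w), np.zeros_like(w)
for t in range(1, 501):                         # 500 steps on a toy objective
    grad = 2 * w                                # gradient of ||w||^2
    w, m, v = adam_step(w, grad, m, v, t)
```

Because the step is normalized by the running gradient magnitude, each parameter moves by roughly the learning rate per step at the start, regardless of the scale of its gradient.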

Evaluation and analysis of fault characteristics

PCA analysis of fault feature

To analyze the effect of HCNN fault feature extraction, principal component analysis (PCA) was performed on the original data set and on the features extracted by the three networks. The first three principal components are visualized in a three-dimensional plot. The fault data samples with load Cload1 are used as the test data set, and the result is shown in Fig. 9.
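The projection onto the first three principal components can be sketched with a singular value decomposition. The random feature matrix below is a hypothetical stand-in for the extracted features, and the function name is an assumption; any standard PCA routine would serve the same purpose.

```python
import numpy as np

def pca_project(features, n_components=3):
    """Project the rows of `features` onto their first principal components."""
    centered = features - features.mean(axis=0)
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ vt[:n_components].T             # coordinates to plot
    explained = (s ** 2)[:n_components] / np.sum(s ** 2)  # variance ratio per component
    return scores, explained

rng = np.random.default_rng(0)
features = rng.normal(size=(70, 32))   # hypothetical: 70 samples, 32 features
scores, explained = pca_project(features)
```

Each row of `scores` is one sample's position in the three-dimensional scatter plot, and `explained` indicates how much of the total variance each plotted axis retains.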

It can be seen from Fig. 9a that, in the original data samples, the principal components of each failure mode are not clustered together and the scattered points of the different failure modes overlap, which makes them messy and difficult to distinguish. This shows that the original fault samples cannot clearly reflect the fault characteristics of the TFI.

Figure 9b, c are the PCA scatter plots of the features extracted by 1D-CNN and 2D-CNN, respectively. Samples of the same failure mode are clustered together well, but among the different failure modes only FT1 is clearly separated from the others, indicating that the two methods are sensitive only to obvious failure modes and insensitive to minor failures. There is still overlap between different failure modes, so the feature extraction effect is not ideal.

Figure 9d is the PCA scatter plot of the features extracted by HCNN. Samples of the same failure mode of the TFI are tightly clustered, and the scatter points of different failure modes are effectively separated, showing a regular clustering pattern. Compared with 1D-CNN and 2D-CNN, HCNN clearly has the best feature extraction ability.

Distinguishing evaluation of fault characteristics

To evaluate the feature extraction ability of the deep neural networks, the discrete points of each state mode on the PCA map are assumed to be enclosed by a minimum characteristic sphere, whose center coordinates and radius are determined by the randomized incremental minimum enclosing sphere algorithm. Let \(P_{j} = (a_{j} ,b_{j} ,c_{j} )\) be the center coordinate of each state mode and \(P_{ji} = (a_{ji} ,b_{ji} ,c_{ji} )\) be the coordinates of its sample points; rj is the radius of the sphere and j indexes the state mode. The following indicators are used to quantitatively analyze the features extracted by the above three methods [39]:

1. The radius of the characteristic sphere in each mode (CSR):

$$ CSR_{j} = r_{j} \quad j = 1,2, \ldots ,5 $$(9)

2. The average distance between the center of the characteristic sphere of each state mode and the centers of the others (DCC):

$$ DCC_{j} = \frac{1}{n - 1}\sum\limits_{i = 1}^{n} {\sqrt {(a_{i} - a_{j} )^{2} + (b_{i} - b_{j} )^{2} + (c_{i} - c_{j} )^{2} } } $$(10)

3. The ratio between CSR and DCC (CSR/DCC).

The first indicator represents the degree of aggregation of each state mode, and the other two indicators describe the degree of separation between state modes. All distances are Euclidean distances.
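The three indicators can be computed from the PCA coordinates as in the following sketch. Note one deliberate simplification: instead of the minimum enclosing sphere algorithm named above, each sphere is centred at the centroid of its points and its radius is the largest distance to that centroid, which gives an upper estimate of the minimal radius. The mode labels and coordinates are hypothetical.

```python
import numpy as np

def sphere_indicators(points_by_mode):
    """Return {mode: (CSR, DCC, CSR/DCC)} for a dict of (n_samples, 3) arrays."""
    modes = list(points_by_mode)
    centers = np.array([points_by_mode[k].mean(axis=0) for k in modes])
    # Simplification: centroid-centred sphere, not the true minimum enclosing sphere.
    csr = np.array([np.linalg.norm(points_by_mode[k] - c, axis=1).max()
                    for k, c in zip(modes, centers)])
    # Pairwise Euclidean distances between centers; the i = j term is zero.
    dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=2)
    dcc = dist.sum(axis=1) / (len(modes) - 1)
    return {k: (csr[i], dcc[i], csr[i] / dcc[i]) for i, k in enumerate(modes)}

# Hypothetical PCA coordinates for three state modes.
points = {
    "A": np.array([[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]]),
    "B": np.array([[12.0, 0.0, 0.0], [8.0, 0.0, 0.0]]),
    "C": np.array([[0.0, 11.0, 0.0], [0.0, 9.0, 0.0]]),
}
indicators = sphere_indicators(points)
```

In this toy case mode A has radius 1 and lies 10 units from each of the other two centers, so its CSR/DCC ratio is 0.1; a small ratio means a compact cluster that is far from the others.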

It can be seen from Fig. 10a that HCNN has the largest DCC in the seven failure modes, indicating that the distinction between different failure modes is particularly obvious. The failure mode discrimination ability of 2D-CNN is better than 1D-CNN.

It can be seen from Table 6 that the CSR of HCNN is larger than that of the other networks, but CSR must be considered together with the DCC of each state mode. Therefore, CSR/DCC is introduced to relate the spread of the points within a state mode to its distance from the other state modes. The smaller this index, the better the fault feature extraction. Figure 10b shows that the CSR/DCC of HCNN is the smallest in almost all of the seven failure modes. Only in the FT4 failure mode is it slightly higher than that of the other two networks, indicating that the other two networks are sensitive only to the FT4 failure mode and that their generalization ability is weak.

In summary, the different fault features extracted by HCNN are widely separated, and its fault feature extraction is significantly better than that of 1D-CNN and 2D-CNN.

The performance of fault diagnosis methods

The performance of HCNN under different load

HCNN is tested with different sample proportions under the three load conditions. The confusion matrix of HCNN fault diagnosis in the case of Cload2 is shown in Fig. 11, and the fault diagnosis accuracy in the other cases is shown in Fig. 12.

It can be seen from Fig. 12 that the fault diagnosis accuracy of HCNN is above 95.86% under all conditions. Under mixed load conditions, the fault diagnosis accuracy of HCNN is also satisfactory, indicating that HCNN has strong generalization ability.

When the ratio of the training set to the test set changes, the fault diagnosis accuracy follows the same trend in all situations. In case 1, the network parameters are not optimally trained because there are fewer training samples, which results in lower accuracy. In case 3, the number of training samples is larger and the test set is smaller, and the accuracy of fault diagnosis is significantly improved.
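The confusion matrix and accuracy values discussed in this subsection are computed from the true and predicted labels of the test set. A minimal sketch follows; the labels are made up for illustration (seven state modes, ten test samples each, two deliberate misclassifications) and are not the experimental results.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)   # count every (true, predicted) pair
    return cm

y_true = np.repeat(np.arange(7), 10)     # hypothetical test labels
y_pred = y_true.copy()
y_pred[0] = 1                            # a class-0 sample predicted as class 1
y_pred[15] = 2                           # a class-1 sample predicted as class 2

cm = confusion_matrix(y_true, y_pred, 7)
accuracy = np.trace(cm) / cm.sum()           # overall diagnosis accuracy
per_class = np.diag(cm) / cm.sum(axis=1)     # accuracy for each state mode
```

The diagonal of `cm` counts correctly diagnosed samples, so the overall accuracy is the trace divided by the number of test samples.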

Fault diagnosis result in different state modes

SDAE, 1D-CNN, 2D-CNN and HCNN are used to classify the failure modes of the fault samples under the various load conditions, and the result is shown in Fig. 14. The experiment in "The performance of HCNN under different load" shows that the diagnostic accuracy is highest under case 3, so this experiment is carried out under case 3. The fault diagnosis result based on HCNN is shown in Fig. 13.

According to Figs. 13 and 14, the fault classification accuracy is about 90% for SDAE, about 93% for 1D-CNN and about 95% for 2D-CNN. The classification accuracy of SDAE is lower overall than that of the CNNs, which shows that the ordinary convolutional neural network itself has better fault feature extraction ability. The fault diagnosis accuracy of HCNN is above 98.57%, clearly better than the comparison networks. This indicates that HCNN can extract deeper fault feature information from the original fault data samples and can diagnose open-circuit faults of each TFI switch efficiently and accurately.

In Fig. 14, the fault diagnosis accuracy for Cload3 is slightly lower than that for Cload1 and Cload2, which suggests that the fault sample data for Cload3 contain more noise that affects the classification accuracy of the network. In the mixed-load case, however, the accuracy of SDAE is 84.29% while the accuracy of HCNN is 98.57%, which indicates that HCNN has stronger generalization ability.

Conclusion

For open-circuit fault diagnosis of TFI switches, this paper uses the HCNN method, which extracts fault features with 1D-CNN and 2D-CNN at the same time, merges them in the fully connected layer to generate the HCNN fault features, and classifies them with softmax. Comparison with the 1D-CNN and 2D-CNN algorithms shows the following:

1. The adaptive fault feature extraction of the HCNN algorithm is more effective, and deeper fault feature information can be extracted from the same fault data, which improves the accuracy of the fault diagnosis results.

2. Under different loads and different sample division ratios, the fault diagnosis accuracy of HCNN remains above 95.86%. When the sample ratio is case 3, the diagnostic accuracy of HCNN under the various load conditions stays above 98.57%, which is significantly better than the other networks and reflects the strong generalization ability of HCNN.

Experiments show that HCNN can complete TFI fault diagnosis under different working conditions and can accurately locate the faulty components. HCNN can also be applied to other fault diagnosis problems in which the original signal is one-dimensional, such as the voltage signal in transformer fault diagnosis. It is not necessary to know the characteristics of the fault in the original signal: with its powerful learning ability, HCNN can adaptively identify different fault modes. The fault diagnosis ability of the HCNN model lays a good foundation for fault tolerance and is also beneficial to the PHM of power electronic systems.

References

Aljemely AH, Xuan J, Jawad FKJ et al (2020) A novel unsupervised learning method for intelligent fault diagnosis of rolling element bearings based on deep functional auto-encoder. J Mech Sci Technol 34:4367–4381

Watanabe A, Hirose N, Kim H et al (2019) Convolutional neural network (CNNs) based image diagnosis for failure analysis of power devices. Microelectron Reliab 100–101:113399

Bera S (2020) Analysis of various optimizers on deep convolutional neural network model in the application of hyperspectral remote sensing image classification. Int J Remote Sens 41(7):2664–2683

Li B, Delpha C, Diallo D et al (2021) Application of artificial neural networks to photovoltaic fault detection and diagnosis: A review. Renew Sustain Energy Rev 138:110512

Wang B, Lei Y, Yan T et al (2020) Recurrent convolutional neural network: a new framework for remaining useful life prediction of machinery. Neurocomputing 379:117–129

Hang CC, Ying LM, Shu NQ (2018) Transistor open-circuit fault diagnosis in two-level three-phase inverter based on similarity measurement. Microelectron Reliab 91:291–297

Bae CJ, Lee DC, Nguyen TH (2019) Detection and identification of multiple IGBT open-circuit faults in PWM inverters for AC machine drives. IET Power Electron 12(4):923–931

Michele C, Luca B, Enrico Z (2019) Optimal allocation of prognostics and health management capabilities to improve the reliability of a power transmission network. Reliab Eng Syst Saf 184:164–180

Yong C, Zhang JJ, Chen ZY (2020) Current observer-based online open-switch fault diagnosis for voltage-source inverter. ISA Trans 99:445–453

Duan CQ, Deng C (2020) Prognostics of health measures for machines with aging and dynamic cumulative damage. IEEE/ASME Trans Mechatron 25(5):2264–2275

Massimiliano DB, Fabio L, Fabrizio M et al (2018) Anomaly detection and predictive maintenance for photovoltaic systems. Neurocomputing 310:59–68

Guo J, Liu X, Li S et al (2020) Bearing intelligent fault diagnosis based on wavelet transform and convolutional neural network. Shock Vib 2020(19):1–14

Stefan H, Johann J (2020) Advanced classification of converter control concepts for integration in electrical power systems. Int J Electr Power Energy Syst 123:106210

Hu J, Kuang Y, Liao B et al (2019) A multichannel 2D Convolutional neural network model for task-evoked fMRI data classification. Comput Intell Neurosci 2019:1–9

Jiao J, Zhao M, Lin J et al (2020) A comprehensive review on convolutional neural network in machine fault diagnosis. Neurocomputing 417:36–63

Zhang JJ, Chen Y, Chen ZY, Zhou AJ (2019) Open-switch fault diagnosis method in voltage-source inverters based on phase currents. IEEE Access 7:63619–63625

Hu KT, Liu ZG, Iannuzzo F et al (2018) Simple and effective open switch fault diagnosis of single-phase PWM rectifier. Microelectron Reliab 88–90:423–427

Liang Y, Li B, Jiao B (2021) A deep learning method for motor fault diagnosis based on a capsule network with gate-structure dilated convolutions. Neural Comput Appl 33:1401–1418

Wan L, Chen Y, Li H et al (2020) Rolling-element bearing fault diagnosis using improved LeNet-5 network. Sensors 20(6):1693

Xu L, Cao MY, Song BY et al (2018) Open-circuit fault diagnosis of power rectifier using sparse autoencoder based deep neural network. Neurocomputing 311:1–10

Fei M, Ning L, Huiyu M, Yi P, Haoyuan S, Jianyong Z (2018) On-line fault diagnosis model for locomotive traction inverter based on wavelet transform and support vector machine. Microelectron Reliab 88–90:1274–1280

Aslam N, Ramay WY, Xia K, Sarwar N (2020) Convolutional neural network based classification of app reviews. IEEE Access 8:185619–185628

Adebena O, Jae CJ (2020) The application of machine learning for the prognostics and health management of control element drive system. Nucl Eng Technol 52(10):2262–2273

Pan H, Tang W, Xu JJ et al (2020) Rolling bearing fault diagnosis based on stacked autoencoder network with dynamic learning rate. Adv Mater Sci Eng 2020:1–12

Majid P, Praveen KJ (2020) Soft-switching power electronics technology for electric vehicles: a technology review. IEEE J Emerg Sel Top Ind Electron 1(1):80–90

Poma Y, Melin P, González CI, Martinez GE (2020) Optimal recognition model based on convolutional neural networks and fuzzy graphical search algorithm method. In: Castillo O, Melin P (eds) Hybrid intelligent systems in control, pattern recognition and medicine. Studies in computational intelligence, vol 827. Springer, Cham. pp 71–81

Poma Y, Melin P, González C, Martinez G (2020) Filter size optimization on a convolutional neural network using FGSA. In: Castillo O, Melin P, Kacprzyk J (eds) Intuitionistic and type-2 fuzzy logic enhancements in neural and optimization algorithms: Theory and applications. pp 391–403

Saee P, Wang ZX, Frede B (2020) A guideline for reliability prediction in power electronic converters. IEEE Trans Power Electron 35(10):10958–10968

Rajpathak D, De S (2016) A data- and ontology-driven text mining-based construction of reliability model to analyze and predict component failures. Knowl Inf Syst 46(1):87–113

Zahedinasab R, Mohseni H (2020) Neuroevolutionary based convolutional neural network with adaptive activation functions. Neurocomputing 381:306–313

Li S, Wang H, Song L et al (2020) An adaptive data fusion strategy for fault diagnosis based on the convolutional neural network. Measurement 165:108122

Zare S, Ayati M (2021) Simultaneous fault diagnosis of wind turbine using multichannel convolutional neural networks. ISA Trans 108:230–239

Zhao S, Frede B, Wang H (2021) An overview of artificial intelligence applications for power electronics. IEEE Trans Power Electron 36(4):4633–4658

Enes U, Xu C, Yang F, Pu S, Bilal A (2021) A new complete condition monitoring method for SiC power MOSFETs. IEEE Trans Ind Electron 68(2):1654–1664

Gong W, Chen H, Zhang Z et al (2019) A novel deep learning method for intelligent fault diagnosis of rotating machinery based on improved CNN-SVM and multichannel data fusion. Sensors 19(7):1693

Chen WQ, Zhang LY, Krishna P et al (2020) Data-driven approach for fault prognosis of SiC MOSFETs. IEEE Trans Power Electron 35(4):4048–4062

Xu L, Cao M, Song B et al (2018) Open-circuit fault diagnosis of power rectifier using sparse autoencoder based deep neural network. Neurocomputing 311:1–10

Lecun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Wang Y, Jin Q, Sun G et al (2019) Planetary gearbox fault feature learning using conditional variational neural networks under noise environment. Knowl-Based Syst 163:438–449

Zhong Z, Xu T, Wang F et al (2018) Text case-based reasoning framework for fault diagnosis and predication by cloud computing. Math Probl Eng 2018:9464971

Zhou S, Chen B, Zhang Y et al (2020) A feature extraction method based on feature fusion and its application in the text-driven failure diagnosis field. Int J Interact Multimed Artif Intell 6(4):121

Funding

This work was supported by the National Natural Science Foundation of China (61901212), the Natural Science Foundation of the Jiangsu Higher Education Institutions of China (19KJB510032, 20KJA510007), the Open Research Fund of Jiangsu Collaborative Innovation Center for Smart Distribution Network (No. XTCX201909), and the Scientific Research Foundation for the High-Level Personnel of NJIT (YKJ201916).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, Q., Yu, X., Li, H. et al. Adaptive feature extraction and fault diagnosis for three-phase inverter based on hybrid-CNN models under variable operating conditions. Complex Intell. Syst. 8, 29–42 (2022). https://doi.org/10.1007/s40747-021-00337-6

DOI: https://doi.org/10.1007/s40747-021-00337-6