Abstract

Surface electromyography (sEMG) is a valuable bioelectric signal with great potential in human–machine interaction. Ideal interaction requires sEMG-based pattern recognition that is both accurate and fast. However, heavy real-time feature computation greatly slows down the interaction, especially for multichannel sEMG. To decrease the feature-related time consumption, this paper formulates feature reduction as an optimization problem and develops a double-phase particle swarm optimization (PSO) with hybrid coding to solve it. An initial feature data set with 31 kinds of features is first built from the 16-channel forearm sEMG signals of eight subjects; PSO is then used to reduce the 31 × 16 feature dimensions through feature optimization and channel optimization in two phases. During the optimization, two improved k-nearest neighbor (KNN) methods, weighted representation-based KNN (WRKNN) and weighted local mean representation-based KNN (WLMRKNN), are used to classify the gestures, and the classification accuracy is used to evaluate the particles of PSO. Experimental results and comparative analysis show that PSO-based feature reduction outperforms feature reduction based on genetic algorithm (GA), ant colony optimization (ACO) and principal component analysis (PCA). With the feature data subset optimized by PSO, WRKNN and WLMRKNN are clearly superior to KNN, quadratic discriminant analysis (QDA) and naive Bayes (NB). The proposed method can be applied to the pattern recognition of high-dimensional sEMG with multiple or high-density channels, improving speed in real-time control without a decline in accuracy. Further, it could reduce the cost of personalized equipment by identifying the optimal channels for each subject.

Introduction

Surface electromyography (sEMG) is a weak bioelectric signal that carries rich information about human neuromuscular activity and movement intention. sEMG signals can be acquired simply and non-invasively by placing electrodes on the skin surface of the subject, which is low-cost and harmless to the human body [1]. sEMG signals are therefore increasingly popular in fields such as clinical diagnosis, rehabilitation and human–computer interaction. They are also widely applied in commercial wearable devices such as prosthetic hands or limbs [2], providing users with a natural means of human–machine interaction. However, the control accuracy, robustness and speed of sEMG-based pattern recognition directly influence user comfort and satisfaction in real-time interaction. To improve the real-time control performance of sEMG-based human–machine interaction, the feature extraction and classification methods used in sEMG pattern recognition must be designed in detail [3].

Feature extraction can effectively extract hidden information from sEMG signals and reduce the impact of noise, so researchers have studied sEMG features for decades and numerous features have been proposed. Graupe and Cline introduced the autoregressive (AR) model to classify EMG signals [4], and Meek and Fetherston used the signal-to-noise ratio to measure the quality of EMG signals [5]. Zardoshti et al. evaluated a variety of EMG features including the integral of average value, the variance, the number of zero crossings, Willison amplitude, v-order, log detector, histogram and autoregressive model parameters [6]. The approximate entropy (ApEn), introduced by Pincus, has proved to be an efficient measure of the regularity of a time series, especially in physiology and medicine [7, 8]. Later, Richman and Moorman developed ApEn into sample entropy (SampEn) to reduce the bias induced by self-matching [9]. SampEn and ApEn can be used for sEMG pattern recognition, but they usually require a large amount of computation, which significantly reduces the speed of recognition in real-time control. Recently, several fractal analysis methods have been proposed as sEMG features. For example, Arjunan introduced a novel feature, maximum fractal length (MFL), to measure the strength of contraction of the associated muscle, and later presented another fractal method, Higuchi’s fractal dimension (HFD), which showed better performance than other fractal methods [10–12].

To ensure the performance of sEMG-based pattern recognition, especially in real-time control, feature reduction or feature selection becomes an important problem in the feature extraction process. Principal component analysis (PCA) is commonly used for feature reduction [13, 14], but it performs poorly on high-dimensional feature reduction problems. Some researchers have tried to find better feature combinations to describe the sEMG signal. Phinyomark et al. summarized fifty features, including time-domain, frequency-domain and fractal features, and compared the possible combinations of 2, 3 and 4 out of the 50 features to find the best feature combination [15, 16]. Bai manually compared several time-domain feature combinations and finally chose MAV, ZC and RMS as the best combination [17]. However, such fixed feature combinations are less robust and cannot simultaneously suit subjects with different physiological characteristics.

Classifiers also have a significant impact on pattern recognition accuracy. Numerous studies have explored appropriate classifiers for sEMG pattern recognition. Popular classification algorithms, including k-nearest neighbor (KNN), artificial neural network (ANN), linear discriminant analysis (LDA), quadratic discriminant analysis (QDA) and support vector machine (SVM), have been used as classifiers for sEMG recognition [18, 19]. Phinyomark adopted LDA to classify sEMG based on carefully chosen combinations of 2, 3 and 4 out of 50 features [16]. Kim compared the classification accuracy of KNN, QDA and LDA based on thirty features of two-channel sEMG and found that KNN performs better than QDA and LDA [20]. Pan [21] introduced two improved KNN methods, the weighted representation-based k-nearest neighbor (WRKNN) and the weighted local mean representation-based KNN (WLMRKNN) [22], to classify sEMG, and the results showed that WRKNN and WLMRKNN are superior to KNN and ANN. In fact, it is difficult to judge which feature or feature combination is superior for a given classifier because of the physical differences between people.

Particle swarm optimization (PSO) is a random search algorithm based on group collaboration, first proposed by Kennedy and Eberhart [23]. As a robust swarm intelligence technique, PSO has been widely used to solve complex optimization problems in real engineering applications [24–28], and it has also been introduced into machine learning to solve pattern recognition problems. Huang [29] proposed a PSO-SVM model to improve the performance of the support vector machine (SVM) classifier and to select discriminating input features in data mining. To find an optimal feature set, Khushaba [30] proposed a feature selection method based on a modified binary PSO and mutual information (BPSOMI). These studies show that PSO-based methods can be applied to feature reduction.

To decrease the feature-related time consumption, this paper formulates feature reduction as an optimization problem and develops a double-phase PSO with hybrid coding to solve it. PSO is introduced into the sEMG pattern recognition procedure to conduct feature reduction through feature optimization and channel optimization in two phases. During the optimization, WRKNN or WLMRKNN is used to classify the gestures, and the classification accuracy is used to evaluate the particles of PSO.

The remainder of the paper is organized as follows. Section 2 describes the process of sEMG pattern recognition in detail, including signal collection, signal preprocessing, feature extraction and classifiers. Section 3 describes the formulation of the optimization problem and the methodology of double-phase PSO-based feature optimization and channel optimization. Section 4 presents the experimental results and analysis. Finally, Sect. 5 provides the conclusion and outlook.

Signal acquisition and processing

This section introduces the process of sEMG pattern recognition which includes the signal collection, signal preprocessing, features used for feature extraction, and common classifiers.

Signal collection

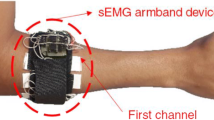

In this research, an sEMG acquisition device with an electrode sleeve, the ELONXI EMG 100-Ch-Y-RA, is used to collect the raw sEMG signals. The 18 dry electrodes on the electrode sleeve are arranged in a novel bipolar montage [1]. Each electrode is modified from a standard disposable Ag/AgCl ECG electrode. Sixteen of the 18 dry electrodes are used for data acquisition, and the other two serve as the electrical reference electrode and the bias electrode. The distribution of the myoelectric electrodes and the way the sleeve is worn are shown in Fig. 1.

Eight healthy subjects (seven males and one female, aged 21–29 years) volunteered for the study. sEMG data were collected from each subject in three trials. In every trial, each subject was asked to make five gestures: OK, Victory, Eight, Orchid Fingers and Thumb Up, as shown in Fig. 2.

The data collection for each gesture lasted 20 s. The first 8 s were rest time, during which the volunteer's forearm and fingers were kept naturally relaxed. The next 12 s were used for gesture signal collection, during which the subject made the corresponding gesture and held it until the end of the collection. To ensure the stability of the gesture data, the middle 10 s of every 12 s gesture recording were selected as samples and labeled.

Signal preprocessing

After the sEMG signal is collected, it is filtered to reduce the noise in the raw signal. Since the most useful information in sEMG lies in the frequency range of 20–500 Hz, the sampling frequency is set to 1000 Hz according to the Nyquist–Shannon sampling theorem, so that signal components below 500 Hz can be captured without aliasing. The raw sEMG signals are filtered with a 20 Hz Butterworth high-pass filter and a 50 Hz notch filter. This filtering ensures that the acquired signal retains most of the muscle information while the noise is effectively reduced, as shown in Figs. 3 and 4. The filtering clearly reduces the low-frequency noise present throughout the signal collection process.
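As a rough illustration of the filtering stage described above, the sketch below applies a 20 Hz high-pass followed by a 50 Hz notch to one channel sampled at 1000 Hz. The filter order (second-order Butterworth), the notch quality factor `q=30`, the single-pass causal filtering, the bilinear-transform biquad design formulas and all function names are assumptions made for illustration; the paper does not state them, and the original processing was done in MATLAB.

```python
import math

FS = 1000.0  # sampling frequency in Hz (from the text)

def highpass_biquad(fc, fs=FS):
    """Second-order Butterworth high-pass coefficients (b, a); the order is an assumption."""
    w0 = 2.0 * math.pi * fc / fs
    alpha = math.sin(w0) / math.sqrt(2.0)  # sin(w0) / (2Q) with Q = 1/sqrt(2)
    cosw = math.cos(w0)
    a0 = 1.0 + alpha
    b = [(1.0 + cosw) / 2.0 / a0, -(1.0 + cosw) / a0, (1.0 + cosw) / 2.0 / a0]
    a = [1.0, -2.0 * cosw / a0, (1.0 - alpha) / a0]
    return b, a

def notch_biquad(f0, q=30.0, fs=FS):
    """Second-order notch coefficients (b, a); q is an assumed quality factor."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cosw = math.cos(w0)
    a0 = 1.0 + alpha
    b = [1.0 / a0, -2.0 * cosw / a0, 1.0 / a0]
    a = [1.0, -2.0 * cosw / a0, (1.0 - alpha) / a0]
    return b, a

def apply_biquad(b, a, x):
    """Run one biquad section over a sequence (direct form I, zero initial state)."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y

def preprocess(x):
    """20 Hz high-pass followed by 50 Hz notch on one raw sEMG channel."""
    hb, ha = highpass_biquad(20.0)
    nb, na = notch_biquad(50.0)
    return apply_biquad(nb, na, apply_biquad(hb, ha, x))
```

In this sketch a constant offset and a pure 50 Hz component both decay towards zero after the start-up transient, while components well inside the 20–500 Hz band pass almost unchanged.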

Feature extraction

Feature extraction is an important step in sEMG pattern recognition. Following Fang [31], the sEMG signals were segmented with a 300 ms window and a 100 ms window shift for feature extraction. A total of 31 features are extracted to describe the gestures, including 27 time-domain features and 4 frequency-domain features. The features are listed in Table 1 and described below.

Integrated EMG. Integrated EMG (IEMG) was presented by Merletti [32]. It is the integral of the absolute value of the sEMG signal and can be used to detect muscle activity. It is widely used in non-pattern-recognition applications and clinical medicine, usually together with the sliding window method.

Mean Absolute Value. The mean absolute value (MAV) is one of the most popular EMG features. Like the IEMG feature, it is often used as an indicator of the disease, especially in the detection of the surface EMG signal for prosthetic limb control [6, 33]. The MAV feature is the mean of the absolute amplitude of the signal within a time window.

Modified MAV1 and MAV2. Modified mean absolute value type 1 (MAV1) is an extension of the MAV feature in which a weight coefficient \({w}_{i}\) is added to improve robustness [6]. Modified mean absolute value type 2 (MAV2) is a similar extension, except that the weight \({w}_{i}\) of the window is assigned by a continuous function, so MAV2 is smoother than MAV1 [34].

Simple Square Integral. The simple square integral (SSI), or integral square, represents the energy of the EMG signal. It is the sum of the squares of the amplitudes of the EMG signal and is generally used as an energy index of the EMG signal [35].

Variance of EMG. The variance of EMG (VAR) reflects the dispersion of the signal values. It is also an index of signal energy [6] and is generally defined as the mean of the squared values of the EMG signal.

Absolute Value of the 3rd, 4th, and 5th Temporal Moment. The 3rd, 4th, and 5th temporal moment (TM3, TM4, and TM5) features were proposed by Saridis and Gootee in 1982 for the control of prostheses [36].

Root Mean Square. Root mean square (RMS) is a widely used EMG feature [20], which is related to the contraction force of the muscles and the state of muscle fatigue.

V-Order. V-Order is also related to muscle contraction force. According to the study in [6], the optimal value of the variable v was set to 2, so that the v-order feature is the same as the RMS feature.

Log Detector. The log detector (LOG) feature is generally used to estimate the contraction force of the muscle.

Waveform Length. The waveform length (WL) feature, also known as the waveform feature, describes the complexity of EMG signals [33]. It is the cumulative length of the entire waveform and is usually used together with the sliding window method.

Difference Absolute Standard Deviation Value. The difference absolute standard deviation value (DASDV) resembles a combination of the WL and RMS features and can be seen as the standard deviation of the wavelength [20].
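To make the windowing and the amplitude-type features above concrete, the following sketch segments one channel with a 300 ms window and a 100 ms shift at 1000 Hz and computes IEMG, MAV, SSI, VAR, RMS, WL and DASDV per window. The formulas are the definitions commonly used in the sEMG literature and are assumed here, since the equations are not reproduced in this text; in particular VAR assumes a zero-mean signal and divides by N − 1. All function names are illustrative.

```python
import math

def sliding_windows(signal, fs=1000, win_ms=300, shift_ms=100):
    """Split one channel into overlapping analysis windows."""
    win = fs * win_ms // 1000
    step = fs * shift_ms // 1000
    return [signal[s:s + win] for s in range(0, len(signal) - win + 1, step)]

def iemg(x):
    """Integrated EMG: sum of absolute amplitudes."""
    return sum(abs(v) for v in x)

def mav(x):
    """Mean absolute value."""
    return iemg(x) / len(x)

def ssi(x):
    """Simple square integral: sum of squared amplitudes."""
    return sum(v * v for v in x)

def var(x):
    """Variance of EMG, assuming a zero-mean signal (assumption)."""
    return ssi(x) / (len(x) - 1)

def rms(x):
    """Root mean square."""
    return math.sqrt(ssi(x) / len(x))

def wl(x):
    """Waveform length: cumulative absolute difference of adjacent samples."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))

def dasdv(x):
    """Difference absolute standard deviation value."""
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(x, x[1:])) / (len(x) - 1))

# Usage: a synthetic 10 s record stands in for the middle 10 s of one gesture.
record = [math.sin(2 * math.pi * 80 * n / 1000) for n in range(10_000)]
windows = sliding_windows(record)
features = [[f(w) for f in (iemg, mav, ssi, var, rms, wl, dasdv)] for w in windows]
print(len(windows), len(features[0]))  # 98 7
```

With these settings a 10 s sample yields 98 windows per channel, so every feature is evaluated 98 × 16 times per gesture sample, which is the cost that feature and channel reduction aims to cut.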

Zero Crossing. Zero crossing (ZC) is defined as the number of times the signal passes through zero. It reflects the degree of fluctuation of the data and is an important feature for signal recognition. ZC is also a way of obtaining frequency information of myoelectric signals from the time domain [37]. By setting a threshold, crossings caused by low-voltage fluctuations and noise can be excluded.

Myopulse Percentage Rate. Myopulse percentage rate (MYOP) is the average of a series of myopulse outputs, where the myopulse output is 1 if the myoelectric signal is greater than a pre-defined threshold and 0 otherwise [37].

Willison Amplitude. Willison amplitude (WAMP), like the ZC feature, can be used to obtain frequency information of the myoelectric signal from the time domain [37]. It counts the number of times the difference between the amplitudes of adjacent EMG samples exceeds a predetermined threshold. It is related to the firing of motor unit action potentials (MUAP) and to muscle contraction force [35].

Slope Sign Change. Slope sign change (SSC) is similar to the ZC, MYOP and WAMP features and can also be used to obtain frequency-domain information from the time domain of the signal. The threshold is preferably 50–100 mV [34]. The threshold value needs to be determined based on the actual equipment and noise.
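The four threshold-based counting features above can be sketched as follows. The exact inequalities (strict `>` here; some references use `>=`), the use of the absolute value in MYOP, and the function names are assumptions, because the defining equations are not reproduced in this text.

```python
def zero_crossings(x, thr=0.0):
    """Count sign changes between adjacent samples whose amplitude step exceeds thr."""
    return sum(1 for a, b in zip(x, x[1:]) if a * b < 0 and abs(a - b) > thr)

def myop(x, thr):
    """Fraction of samples whose absolute amplitude exceeds thr."""
    return sum(1 for v in x if abs(v) > thr) / len(x)

def wamp(x, thr):
    """Count adjacent-sample amplitude differences that exceed thr."""
    return sum(1 for a, b in zip(x, x[1:]) if abs(a - b) > thr)

def ssc(x, thr=0.0):
    """Count slope sign changes whose slope product exceeds thr."""
    return sum(1 for p, c, n in zip(x, x[1:], x[2:]) if (c - p) * (c - n) > thr)

window = [1, -1, 2, -2, 0.5]
print(zero_crossings(window), wamp(window, 2.5), myop(window, 0.9), ssc(window))
# 4 2 0.8 3
```

The threshold is passed in the same units as the samples; as the text notes, a suitable value depends on the equipment and noise level.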

Auto-Regressive Coefficients. Previous studies have shown that changes in muscle state cause changes in the AR coefficients [38]. The AR model describes each sample of the signal as a linear combination of the previous P samples plus a noise term [39]. In its equation, ap is the pth AR coefficient and P is the order of the AR model. Several studies have shown that a model order of 4 is adequate for AR time series modeling of the sEMG signal.
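For reference, the AR model mentioned above is commonly written in the form below. The sample index \(i\), the sample value \(x_i\) and the white-noise term \(w_i\) are notational assumptions; \(a_p\) and \(P\) are as defined in the text, and some references write the sum with the opposite sign.

```latex
x_i = \sum_{p=1}^{P} a_p \, x_{i-p} + w_i
```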

Cepstral Coefficients. Cepstral coefficients (CC) are defined as the inverse Fourier transform of the logarithm of the magnitude of the signal power spectrum [40].

Mean Frequency. The mean frequency (MNF) of a spectrum is calculated as the sum of the products of the spectrogram intensity (in dB) and the frequency, divided by the total sum of the spectrogram intensity [41]. In its equation, M is the number of frequency bins in the spectrum, \({f}_{j}\) is the frequency of the spectrum at bin j of M, and \({P}_{j}\) is the intensity (dB scale) of the spectrum at bin j of M.
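Written out with the symbols just defined, the verbal definition of MNF corresponds to the following expression (a direct transcription of the sentence above, not a copy of the original typeset equation):

```latex
\mathrm{MNF} = \frac{\sum_{j=1}^{M} f_j \, P_j}{\sum_{j=1}^{M} P_j}
```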

Peak Frequency. Peak frequency (PKF) is the frequency at which the maximum power occurs within a window of the EMG signal [42].

Mean Power. Mean power (MNP) is the average power of the sEMG power spectrum [39].

Total Power. The total power (TTP) is the sum of the power over all frequencies in the power spectrum.

Maximum Fractal Length. Maximum fractal length (MFL) is related to the strength of contraction of the associated muscle [10].

Classifiers

In general, the most popular classifiers for sEMG pattern recognition are LDA, QDA, KNN, SVM, ANN and NB. LDA, QDA and KNN are fast to compute, but their classification accuracy is not high enough for practical applications; SVM, ANN and NB can achieve higher classification accuracy, but they require large computational costs. To be applied in clinical or industrial environments, classification algorithms for sEMG need high classification accuracy, strong robustness and high speed. WRKNN and WLMRKNN were first proposed by Gou [22] for pattern recognition on UCI and UCR data sets as well as face databases. Compared with KNN, these two algorithms increase the weight of the nearest neighbors. In 2019, we introduced WRKNN and WLMRKNN for sEMG pattern recognition, and the results showed their superiority. These two modified KNN methods are therefore used in the following experiments.

Weighted representation-based K-nearest neighbor algorithm

WRKNN is a weighted extension of KNN. Its main process is as follows. First, choose the k nearest neighbors of the test sample y from each class j based on Euclidean distance:

where \({x}_{i}^{j}\) indicates the ith training sample from class j, and the k nearest neighbors are denoted as \({X}_{kN}^{j}=[{x}_{1N}^{j},{x}_{2N}^{j},\cdots ,{x}_{kN}^{j}]\). Second, represent the test sample y as a linear combination of the k nearest neighbors of each class, defined as:

where \({\eta }_{i}^{j}\) is the coefficient of the ith nearest neighbor from class j. Third, solve for the optimal representation coefficient \({\eta }^{j*}\), defined as:

where \(\lambda \) is the regularization coefficient, \({T}^{j}\) is the distance matrix between the test sample and each nearest neighbor, and \({W}^{j}\) is defined as:

Then calculate the categorical representation-based distance between the test sample and the k nearest neighbors in class j as:

Finally, classify the test sample y into the class with the minimum categorical representation-based distance among all classes:
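The display equations of this subsection are not reproduced in this text. As a hedged sketch that is consistent with the symbols defined above and with the usual locality-constrained representation formulation attributed to [22], the steps can be written as follows. The diagonal form of \(T^{j}\), the closed-form solution, the distance symbol \(r^{j}(y)\) and the predicted label \(c_y\) are assumptions introduced here for illustration.

```latex
\begin{aligned}
y &\approx \sum_{i=1}^{k} \eta_i^{j} \, x_{iN}^{j} = X_{kN}^{j} \eta^{j}, \\
\eta^{j*} &= \arg\min_{\eta^{j}} \; \left\| y - X_{kN}^{j} \eta^{j} \right\|_2^2 + \lambda \left\| T^{j} \eta^{j} \right\|_2^2, \\
T^{j} &= \operatorname{diag}\!\left( \| y - x_{1N}^{j} \|_2, \dots, \| y - x_{kN}^{j} \|_2 \right), \\
\eta^{j*} &= \left( (X_{kN}^{j})^{\top} X_{kN}^{j} + \lambda \, (T^{j})^{\top} T^{j} \right)^{-1} (X_{kN}^{j})^{\top} y, \\
r^{j}(y) &= \left\| y - X_{kN}^{j} \eta^{j*} \right\|_2^2, \qquad
c_y = \arg\min_{j} \; r^{j}(y).
\end{aligned}
```

Under this reading, neighbors that lie far from the test sample are penalized more strongly, so they receive smaller coefficients in the representation.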

Weighted local mean representation-based K-nearest neighbor

WLMRKNN can be regarded as an improved variant of WRKNN. Unlike WRKNN, WLMRKNN uses k local mean vectors to represent the test sample y. Suppose the k nearest neighbors of the test sample y, chosen from class j based on Euclidean distance, are as follows:

Here \({x}_{i}^{j}\) indicates the \(i\mathrm{th}\) training sample from class j chosen as a nearest neighbor. The mean vectors of the k nearest neighbors from each class can then be expressed as:

where \({\stackrel{-}{x}}_{iN}^{j}\) indicates the ith local mean vector from class j. The test sample y is represented as a linear combination of the k local mean vectors of each class, defined as:

where \({s}_{i}^{j}\) is the coefficient of the ith local mean vector from class j. The optimal coefficient \({s}^{j*}\) can be computed as follows:

Here \(\gamma \) is the regularization coefficient and \({W}^{j}\) is the distance matrix between the test sample and each local mean vector, which is defined as:

The categorical representation-based distance between the test sample and the k local mean vectors in class j is then computed as:

Finally, classify the test sample y into the class with the minimum categorical representation-based distance among all classes:
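The step that distinguishes WLMRKNN from WRKNN, building k local mean vectors from the k nearest neighbors of one class, can be sketched as below. The construction used here (the ith local mean vector is the mean of the i nearest neighbors) is the usual local-mean KNN convention and is an assumption, since the defining equation is not reproduced in this text; the function names are illustrative.

```python
import math

def k_nearest(y, class_samples, k):
    """Return the k training samples of one class closest to y (Euclidean distance)."""
    return sorted(class_samples, key=lambda x: math.dist(x, y))[:k]

def local_mean_vectors(neighbors):
    """i-th local mean vector = mean of the first i nearest neighbors, i = 1..k."""
    means = []
    running = [0.0] * len(neighbors[0])
    for i, x in enumerate(neighbors, start=1):
        running = [r + v for r, v in zip(running, x)]
        means.append([r / i for r in running])
    return means

y = [0.0, 0.0]
class_j = [[3.0, 0.0], [1.0, 0.0], [2.0, 0.0], [10.0, 0.0]]
neighbors = k_nearest(y, class_j, k=3)
print(local_mean_vectors(neighbors))  # [[1.0, 0.0], [1.5, 0.0], [2.0, 0.0]]
```

The local mean vectors then take the place of the raw neighbors in the weighted representation step.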

Feature data reduction based on double-phase PSO

Theoretically, the more feature information there is, the higher the classification accuracy will be. However, a high-dimensional sEMG feature set requires a large amount of computation, especially for sEMG with high-density channels, and this cost directly limits the application of sEMG in real-time man–machine interaction. It is therefore necessary to conduct feature reduction before pattern classification for high-dimensional, multichannel feature sets, as shown in the sEMG pattern recognition flowchart in Fig. 5. To achieve better results, we introduce PSO to reduce the feature dimensions in two phases.

Double-phase PSO is divided into two parts. In the first phase, PSO is used to obtain an optimal feature subset over the data of all channels. In the second phase, PSO is used to optimize the channels based on the optimal feature subset. The methods are described in detail below, including the problem description, PSO, and feature and channel optimization.

Problem description

Suppose M hand gestures need to be recognized; the data set Q for those gestures can be written as:

Here, Dm stands for the feature data of the mth hand gesture, while ym stands for the true label of Dm. If the sEMG signal of each hand gesture is acquired over time by a device with C channels, and F features are adopted to describe each gesture, Dm can be written as:

where \(d_{cf}^{m}\) means the fth feature data of the cth channel for the mth hand gesture.

Suppose that classifier Z is used to recognize the M gestures of one subject, and that K samples of each gesture have been collected over time in data set Q. The classification accuracy of classifier Z can then be computed as:

Clearly, there are C × F dimensions of feature data for each gesture in data set Q. The larger C and F are, the higher the dimension of the feature data and the higher the computational cost of gesture recognition. To reduce the computing cost in real-time tasks, we use PSO to find the feature subset of Q that gives the best gesture recognition accuracy.

Suppose the optimal feature data subset is denoted Qos:

Here, Dom stands for the optimal feature data of the mth gesture in Qos, chosen from data set Q by PSO. According to Eq. (16), the feature optimization problem can be described as follows:
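The display equations of this subsection are not reproduced in this text. One formalization that is consistent with the definitions given above is sketched below; the accuracy symbol \(\mathrm{Acc}_Z\), the sample index \(k\), the indicator function \(\mathbb{1}[\cdot]\) and the exact layout are assumptions and may differ from the original equations.

```latex
\begin{aligned}
Q &= \{ (D_m, y_m) \mid m = 1, 2, \dots, M \}, \\
D_m &= \begin{bmatrix}
d_{11}^{m} & \cdots & d_{1F}^{m} \\
\vdots & \ddots & \vdots \\
d_{C1}^{m} & \cdots & d_{CF}^{m}
\end{bmatrix}, \\
\mathrm{Acc}_Z(Q) &= \frac{1}{MK} \sum_{m=1}^{M} \sum_{k=1}^{K}
\mathbb{1}\!\left[ Z\!\left( D_m^{(k)} \right) = y_m \right], \\
Q_{os} &= \{ (D_{om}, y_m) \mid m = 1, 2, \dots, M \}, \qquad
\max_{Q_{os}} \; \mathrm{Acc}_Z(Q_{os}).
\end{aligned}
```

Here \(D_m^{(k)}\) denotes the kth sample of gesture m, and each \(D_{om}\) keeps only a subset of the rows (channels) and columns (features) of \(D_m\).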

Particle swarm optimization

PSO originated from the study of the foraging behavior of birds. Its basic idea is that the whole flock tends to follow the bird that has found the best path to food. To search for an optimum, PSO defines a swarm of particles that represent potential solutions to an optimization problem. Each particle starts from a random initial position and flies through the D-dimensional solution space. In standard particle swarm optimization (SPSO) [43], the flying behavior of each particle is described by its velocity and position as follows.

where Vi = (vi1, vi2,…, vid,…,viD) is the velocity vector of the ith particle; Xi = (xi1, xi2,…, xid,…, xiD) is the position vector of the ith particle; Pi = ( pi1, pi2,…, pid,…, piD) is the best position found by the ith particle; Pg = ( pg1, pg2,…, pgd,…, pgD) is the global best position found by the whole swarm; c1, c2 are two learning factors, usually c1 = c2 = 2; r1, r2 are random numbers between (0, 1); w is the inertia weight to control the velocity.
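For reference, the standard PSO update that these symbols describe is usually written as follows. The iteration superscript \(t\) is a notational assumption added here; the remaining symbols are those defined above.

```latex
\begin{aligned}
v_{id}^{t+1} &= w \, v_{id}^{t} + c_1 r_1 \left( p_{id} - x_{id}^{t} \right) + c_2 r_2 \left( p_{gd} - x_{id}^{t} \right), \\
x_{id}^{t+1} &= x_{id}^{t} + v_{id}^{t+1}.
\end{aligned}
```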

After comparative analysis, Shi and Eberhart found that the inertia weight has a great influence on the optimization performance [43]. To obtain a suitable search step, they developed a modified PSO in which a linearly decreasing inertia weight (LDIW) strategy is introduced to balance exploration and exploitation. The inertia weight is updated as follows:

where wI and wT stand for the initial and the terminal inertial weights respectively, while Tmax is the terminal iteration.
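A minimal sketch of the LDIW schedule and of one particle update is given below, assuming the usual linear form in which the weight falls from wI at iteration 0 to wT at iteration Tmax. The default values 0.7, 0.2 and c1 = c2 = 2.0 are the settings reported in the experiments; the function names and the list-based particle representation are illustrative assumptions.

```python
import random

def ldiw(t, t_max, w_init=0.7, w_term=0.2):
    """Linearly decreasing inertia weight: w_init at t = 0, w_term at t = t_max."""
    return w_init - (w_init - w_term) * t / t_max

def pso_step(x, v, p_best, g_best, w, c1=2.0, c2=2.0, rng=random):
    """One velocity and position update for a single particle (lists of floats)."""
    new_x, new_v = [], []
    for xd, vd, pd, gd in zip(x, v, p_best, g_best):
        # Inertia term plus cognitive and social attraction with fresh random factors.
        vd = w * vd + c1 * rng.random() * (pd - xd) + c2 * rng.random() * (gd - xd)
        new_v.append(vd)
        new_x.append(xd + vd)
    return new_x, new_v

w = ldiw(t=50, t_max=100)  # halfway through the run, about 0.45
x, v = pso_step([0.2, 0.8], [0.0, 0.0], [0.5, 0.5], [0.9, 0.1], w)
```

A large weight early in the run favors exploration, and the smaller weight near the end favors local refinement around the best positions found.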

Double-phase PSO for feature and channel optimization

In our work, we choose 31 features to describe the sEMG of 16 channels; that is, each gesture in data set Q has 31 × 16 = 496 dimensions of feature data. Such a data set is clearly a very high-dimensional problem. To reduce the computation cost and to clarify how the features and the channels influence the recognition accuracy for each subject, we develop a double-phase PSO for feature data optimization.

Feature optimization

In the first phase of optimization, PSO is used to find the best feature combination among the 31 features in order to reduce the feature data. Considering the problem and the optimization algorithm as a whole, a hybrid coding method with real and binary coding is adopted to describe the particle information in the search space and the decision space. For example, the position vector of an arbitrary particle i in the search space is encoded as a real-valued string:

Its velocity is also encoded with real values in the search space:

where f stands for the feature index, \(f \in [{1},F]\), and F = 31; \(x_{if}^{R} \in [{0},1]\), \(v_{if}^{R} \in \left[ { - V_{\max } ,V_{\max } } \right]\), and Vmax is set to 1.0.

In the decision space of the feature optimization problem, the position of the particle can switch to a binary code:

where \(x_{if}^{B} \in \{ 0,1\}\). If the value of \(x_{if}^{R}\) is greater than 0.5, \(x_{if}^{B} = 1\), which indicates that feature f is selected into the feature subset during classification; otherwise \(x_{if}^{B} = 0\), which means that feature f is not selected. The binary coding of particle i makes it easy to construct feature subsets.

To clarify the hybrid coding method, a coding example for particle i is presented in Table 2.

According to the decision vector XiB in Table 2, the 1st, 3rd and 7th elements are equal to 1, which means that the 1st, 3rd and 7th features are chosen to construct the feature subset {IEMG, MAV1, TM3}. Based on the feature subset, the corresponding data subset for particle i can be obtained:
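The decoding from the real-coded search space to the binary decision space can be sketched as follows. The real position values are invented for illustration (Table 2 is not reproduced in this text), and the feature order and the names `AR1`–`AR4` and `CC1`–`CC4` are assumptions based on the order in which the 31 features are described above; they are chosen so that the example reproduces the subset {IEMG, MAV1, TM3}.

```python
# Assumed feature order, following the order of the descriptions in the text.
FEATURES = [
    "IEMG", "MAV", "MAV1", "MAV2", "SSI", "VAR", "TM3", "TM4", "TM5", "RMS",
    "V", "LOG", "WL", "DASDV", "ZC", "MYOP", "WAMP", "SSC",
    "AR1", "AR2", "AR3", "AR4", "CC1", "CC2", "CC3", "CC4",
    "MNF", "PKF", "MNP", "TTP", "MFL",
]

def decode(position, threshold=0.5):
    """Map a real-coded position in [0, 1]^n to a binary selection mask."""
    return [1 if x > threshold else 0 for x in position]

def selected(mask, names):
    """Names whose mask bit is 1."""
    return [name for name, bit in zip(names, mask) if bit]

# Hypothetical real-coded position of one particle (illustrative values only).
x_real = [0.83, 0.12, 0.67, 0.40, 0.05, 0.31, 0.92] + [0.10] * 24
mask = decode(x_real)
print(selected(mask, FEATURES))  # ['IEMG', 'MAV1', 'TM3']
```

Keeping the search in real values lets the standard velocity and position updates be used unchanged, while the binary mask is only produced when a particle has to be evaluated.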

Clearly, the dimension of the feature data is greatly reduced through feature optimization. The reduced feature data subsets are used directly for pattern recognition, and the recognition accuracy is taken as the fitness of particle i. After the sEMG signal is preprocessed, the above hybrid coding method can be used for feature optimization by PSO. The main procedure is as follows:

Channel optimization

Each gesture is determined by the movement of multiple muscle blocks, and each involved muscle block contributes differently to the sEMG signal. It is therefore necessary to identify the most significant muscle blocks involved in each gesture, which mainly determine the corresponding EMG signal strength. During sEMG acquisition, each channel index simply indicates the muscle block motion signal at the corresponding acquisition point. Therefore, after the feature optimization in the first phase, PSO is used for channel optimization in the second phase to further reduce the dimension of the data set.

As in the feature optimization, the hybrid coding method with real and binary coding is adopted to describe the particle information in the search space and the decision space during channel optimization.

For an arbitrary particle j, the position and velocity vectors in the search space are coded as real-valued strings:

where c stands for the channel index, \(c \in [{1},C]\), and C = 16; \(x_{jc}^{R} \in [{0},1]\), \(v_{jc}^{R} \in \left[ { - V_{\max } ,V_{\max } } \right]\), and Vmax is set to 1.0.

In the decision space of the channel optimization problem, the position of the particle can be switched to a binary code:

where \(x_{jc}^{{\text{B}}} \in \{ 0,1\}\). If the value of \(x_{jc}^{R}\) is greater than 0.5, \(x_{jc}^{B} = 1\), which indicates that channel c is selected into the channel subset during classification; otherwise \(x_{jc}^{B} = \, 0\), which means that channel c is not selected. A channel subset is thus easily determined from the binary coding of particle j.

Suppose the 1st, 2nd, 5th and 8th elements of XjB are equal to 1, which means that the 1st, 2nd, 5th and 8th channels contribute significantly to the sEMG signal of the gestures and can be chosen to construct the channel subset. The data subset in Eq. (25) is then further reduced as follows:
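The effect of the two masks on the size of the feature data can be illustrated with a small sketch. The matrix contents are placeholders, and the function name and the row-per-channel layout are assumptions; the masks are the ones used as examples in the text (features 1, 3 and 7; channels 1, 2, 5 and 8).

```python
def reduce_data(d_m, feature_mask, channel_mask):
    """Keep only selected channels (rows) and selected features (columns) of a C x F matrix."""
    return [
        [value for value, f_bit in zip(row, feature_mask) if f_bit]
        for row, c_bit in zip(d_m, channel_mask) if c_bit
    ]

C, F = 16, 31
# Placeholder feature data for one gesture sample: one row per channel.
d_m = [[c * 100 + f for f in range(F)] for c in range(C)]

feature_mask = [1 if f in (0, 2, 6) else 0 for f in range(F)]     # 1st, 3rd, 7th features
channel_mask = [1 if c in (0, 1, 4, 7) else 0 for c in range(C)]  # 1st, 2nd, 5th, 8th channels

phase1 = reduce_data(d_m, feature_mask, [1] * C)      # feature optimization only
phase2 = reduce_data(d_m, feature_mask, channel_mask) # feature and channel optimization

print(C * F, len(phase1) * len(phase1[0]), len(phase2) * len(phase2[0]))  # 496 48 12
```

In this example the 496 dimensions shrink to 48 after the first phase and to 12 after the second.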

Based on the reduced data subset, the gesture patterns are recognized and the recognition accuracy is computed as the fitness of particle j. The main procedure of channel optimization by PSO is the same as that of feature optimization in the previous section.

Algorithm procedure

The algorithm procedure is described below.
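The procedure listing itself is not reproduced in this text. The following is a minimal sketch of how the two phases could be chained, assuming a user-supplied `accuracy(feature_mask, channel_mask)` function that trains and tests a classifier on the reduced data. The function names, the iteration count `t_max`, the clamping of positions to [0, 1], the immediate update of the global best within an iteration and the toy fitness are all assumptions; the swarm size, learning factors, inertia weights and Vmax follow the values stated in the text.

```python
import random

def decode(position, threshold=0.5):
    """Real-coded position -> binary selection mask."""
    return [1 if x > threshold else 0 for x in position]

def pso_select(n_dims, fitness, n_particles=20, t_max=30, c1=2.0, c2=2.0,
               w_init=0.7, w_term=0.2, v_max=1.0, seed=0):
    """Maximize fitness(mask) over binary masks using real-coded PSO positions."""
    rng = random.Random(seed)
    xs = [[rng.random() for _ in range(n_dims)] for _ in range(n_particles)]
    vs = [[rng.uniform(-v_max, v_max) for _ in range(n_dims)] for _ in range(n_particles)]
    p_best = [x[:] for x in xs]
    p_fit = [fitness(decode(x)) for x in xs]
    g = max(range(n_particles), key=p_fit.__getitem__)
    g_best, g_fit = p_best[g][:], p_fit[g]
    for t in range(t_max):
        w = w_init - (w_init - w_term) * t / t_max  # linearly decreasing inertia weight
        for i in range(n_particles):
            for d in range(n_dims):
                v = (w * vs[i][d]
                     + c1 * rng.random() * (p_best[i][d] - xs[i][d])
                     + c2 * rng.random() * (g_best[d] - xs[i][d]))
                vs[i][d] = max(-v_max, min(v_max, v))               # clamp velocity
                xs[i][d] = max(0.0, min(1.0, xs[i][d] + vs[i][d]))  # stay in [0, 1]
            fit = fitness(decode(xs[i]))
            if fit > p_fit[i]:
                p_best[i], p_fit[i] = xs[i][:], fit
                if fit > g_fit:
                    g_best, g_fit = xs[i][:], fit
    return decode(g_best), g_fit

def double_phase(n_features, n_channels, accuracy, **kwargs):
    """Phase 1 selects features on all channels; phase 2 selects channels for them."""
    all_channels = [1] * n_channels
    f_mask, _ = pso_select(n_features, lambda fm: accuracy(fm, all_channels), **kwargs)
    c_mask, acc = pso_select(n_channels, lambda cm: accuracy(f_mask, cm), **kwargs)
    return f_mask, c_mask, acc

def toy_accuracy(f_mask, c_mask):
    """Hypothetical stand-in for classifier accuracy: rewards a few useful items."""
    good_f, good_c = {0, 2, 6}, {0, 1, 4, 7}
    score = sum(1.0 if i in good_f else -0.2 for i, b in enumerate(f_mask) if b)
    score += sum(1.0 if i in good_c else -0.2 for i, b in enumerate(c_mask) if b)
    return score

f_mask, c_mask, acc = double_phase(31, 16, toy_accuracy, t_max=10)
```

In a real run, `accuracy` would build the reduced data set from the two masks, classify the test trial with WRKNN or WLMRKNN, and return the recognition rate; it would also need to handle an empty mask, for example by returning zero.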

Experiments and discussions

In the experiments, three trials of sEMG signal data are first acquired from eight subjects with the sleeve worn in the same position. The first trial is then selected as the training data set, and one of the remaining trials is used as the test data set for each subject. The parameters of PSO are set as c1 = c2 = 2.0, wI = 0.7, wT = 0.2, and swarm size Ns = 20. In GA, the crossover probability is 0.8 and the mutation probability is 0.1; in ACO, the pheromone evaporation rate is 0.8 and P0 is 0.2. All the programs, including filtering, feature extraction, classification and optimization, are implemented on the MATLAB R2018b platform.

Experimental comparison analysis of feature optimization

In the first experiment, PSO, GA, ACO and PCA were combined with five classifiers (KNN, QDA, NB, WRKNN and WLMRKNN) respectively to perform feature reduction and classification of sEMG signal, thus 20 different combinations were obtained. The classification accuracy of the combined methods for each subject and the average accuracy for all subjects are shown in Tables 3, 4, 5, 6 and 7 below.

According to the average accuracy of each classifier over all subjects in the above five tables, most classifiers combined with PCA-based feature reduction achieve less than 60%, and only the PCA-QDA combination is slightly higher (62.18%). The reason is that PCA inevitably weakens and loses information when reducing a large number of dimensions to very few. The average results of ACO are a little better than those of PCA, but ACO is unstable and is sometimes trapped in a local optimum. GA-based feature optimization performs well but is still worse than PSO, except with the KNN classifier in Table 3. Across the five tables, whichever classifier it is combined with, PSO-based feature reduction significantly improves the average classification accuracy. This indicates that PSO-based feature reduction is effective for high-dimensional feature reduction in gesture recognition and can select a feature combination with less redundant information from the large feature set.

In addition, the results in Tables 3, 4, 5, 6 and 7 show that different classifiers combined with different feature reduction methods perform differently. As can be seen from Tables 6 and 7, PSO-WRKNN and PSO-WLMRKNN are clearly superior to the other methods. Compared with PSO-KNN, the average accuracy of these two methods is improved by about 4%. This is because WRKNN and WLMRKNN increase the weights of the nearest neighbors and reduce the influence of data from remote time windows. These two improved KNN methods can therefore be regarded as a satisfactory choice of classifier for use with PSO-based feature optimization.

To further clarify the influence of different features, the distribution statistics of the optimal features in each trial are presented in Figs. 6, 7 and 8.

Figures 6, 7 and 8 show the rate at which each feature is selected in the optimal feature set. MNF and MFL are chosen more often than the other features, indicating that these two features are suitable for describing the forearm sEMG signal of more subjects in gesture recognition. It is worth mentioning that, across the multiple optimal feature combinations of the eight subjects, each subject's optimal feature subset tends to select specific features; that is, each person has a set of optimal features that best suits his or her physical condition and dressing condition.
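The selection rate described above is simply the fraction of trials whose optimal subset contains a given feature. A minimal sketch follows; the trial data are invented, and apart from MNF and MFL the feature names (`RMS`, `WL`) are placeholders rather than results from this study.

```python
from collections import Counter

def selection_rates(optimal_subsets):
    """Fraction of trials in which each feature appears in the optimal subset."""
    counts = Counter(f for subset in optimal_subsets for f in set(subset))
    n_trials = len(optimal_subsets)
    return {feature: count / n_trials for feature, count in counts.items()}

# Hypothetical optimal subsets from four trials.
trials = [
    {"MNF", "MFL", "RMS"},
    {"MNF", "MFL"},
    {"MNF", "WL"},
    {"MFL", "RMS"},
]
rates = selection_rates(trials)
for feature, rate in sorted(rates.items(), key=lambda item: -item[1]):
    print(f"{feature}: {rate:.2f}")
```

Computing the same rates per subject, rather than pooled over all subjects, would expose the subject-specific preferences noted in the text.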

Experimental comparison analysis of channel optimization

The above analysis shows that PSO is very effective for reducing the dimension of high-dimensional features. On this basis, PSO is also used for channel optimization. In this experiment, each of the five classifiers was combined with PSO for double-phases optimization, and the statistical data are shown in Table 8.

Table 8 shows the gesture classification accuracy of the five classifiers after feature and channel optimization. The accuracy of PSO-KNN, PSO-QDA and PSO-NB increased by 4.16%, 3.78% and 3.53%, respectively, after channel optimization, while the accuracy of PSO-WRKNN and PSO-WLMRKNN improved slightly, by 0.70% and 0.13%, respectively. Channel optimization therefore does not degrade accuracy; it improves it to a greater or lesser degree. In most situations, the sEMG acquisition device picks up noise caused by damaged electrodes or poor contact, and channel optimization can solve this problem to a certain extent. In other words, channel optimization removes the unimportant channels that contribute little to the sEMG of a gesture, so discarding the signal data of those channels both speeds up operation and preserves classification accuracy in real-time control.
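The size of the saving can be made concrete with a short sketch that applies a feature mask and a channel mask to a 16 × 31 feature matrix. It assumes that one feature subset is shared by all retained channels, which may differ from the coding used in this paper; the function name `reduce_features`, the particular masks (5 features, 9 channels) and the dummy values are illustrative only.

```python
N_CHANNELS, N_FEATURES = 16, 31

def reduce_features(feature_matrix, feature_mask, channel_mask):
    """Keep only selected features (columns) on selected channels (rows)."""
    return [
        [value for value, keep_f in zip(row, feature_mask) if keep_f]
        for row, keep_c in zip(feature_matrix, channel_mask)
        if keep_c
    ]

# Dummy matrix: entry for channel c, feature f is 100 * c + f.
feature_matrix = [[100 * c + f for f in range(N_FEATURES)] for c in range(N_CHANNELS)]
feature_mask = [1 if f in (0, 3, 7, 12, 30) else 0 for f in range(N_FEATURES)]
channel_mask = [1 if c < 9 else 0 for c in range(N_CHANNELS)]

reduced = reduce_features(feature_matrix, feature_mask, channel_mask)
full_dim = N_CHANNELS * N_FEATURES
reduced_dim = sum(len(row) for row in reduced)
print(full_dim, reduced_dim)  # 496 45
```

Only the retained features on the retained channels need to be extracted at run time, which is where the reduction in real-time computation comes from.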

To further clarify the influence of the channels, the number of optimal channels in each trial was recorded, and its distribution is illustrated in Fig. 9.

As Fig. 9 shows, the optimal number of channels in this experiment mostly lies between 6 and 10. Nine channels is the most common outcome, accounting for 35.24% of the experimental results. It should be noted that the maximum number of channels in this experiment is limited to 10, because the more channels there are, the more difficult it is to reduce the channel data. All the results further reveal that there are individual differences among subjects for the same gesture. Therefore, PSO-based channel optimization not only helps to reduce the time consumption of pattern recognition but also provides some valuable ideas for reducing the economic cost of sEMG-based human–computer interaction devices.

Conclusions

Improving the rapidity of real-time control while ensuring accuracy is a key problem for sEMG-based human–computer interaction. Suitable feature extraction and classification play a vital role in pattern recognition. To obtain high classification accuracy with low time consumption, the paper presents a double-phases PSO model combined with WRKNN and WLMRKNN classifiers for multichannel sEMG signals with high dimensional features. After the sEMG is preprocessed, PSO is first used to select the optimal feature subset from the full set of 31 features, and then to select the optimal channels. During the optimization, the WRKNN and WLMRKNN classifiers are used to classify the gestures based on the optimal channels and the optimal features. The experimental results show that the PSO-based feature reduction method outperforms the GA-, ACO- and PCA-based feature reduction methods in the high dimensional feature space of sEMG. Combining PSO with the WRKNN or WLMRKNN classifier further improves the classification accuracy. The proposed method requires only a small amount of feature extraction computation and can still reach high accuracy for multichannel sEMG. In the future, we will further study sEMG-based applications in human–machine interaction, such as the control of real robots or sEMG-based rehabilitation treatments, and seek better pattern recognition methods [44, 45].

Data availability

All data generated or analyzed during this study are included in this published article.

Code availability

The code is available on request by email at 111003@zust.edu.cn.

References

Fang Y, Hettiarachchi N, Zhou D et al (2016) Multi-modal sensing techniques for interfacing hand prostheses: a review. IEEE Sens J 15(11):6065–6076

Deluca C (1979) Physiology and mathematics of myoelectric signals. IEEE Trans Biomed Eng 26(3):313–325

Phinyomark A, Phukpattaranont P, Limsakul C (2012a) Feature reduction and selection for emg signal classification. Expert Syst Appl 39(8):7420–7431

Graupe J, Cine K (1975) Functional separation of EMG signals via ARMA identification method for prosthesis control purpose. IEEE Trans Syst Man Cybern 5:252–259

Meek SG, Fetherston SJ (1992) Comparison of signal-to-noise ratio of myoelectric filters for prosthesis control. J Rehab R&D 29(4):9–20

Zardoshti-Kermani M, Wheeler BC, Badie K, Hashemi RM (1995) EMG feature evaluation for movement control of upper extremity prostheses. IEEE Trans Rehabil Eng 3(4):324–333

Pincus SM (1991) Approximate entropy as a measure of system complexity. Proc Natl Acad Sci USA 88:2297–2301

Pincus SM (1995) Approximate entropy (ApEn) as a complexity measure. Chaos 5:110–117

Richman JS, Moorman JR (2000) Physiological time-series analysis using approximate entropy and sample entropy. Am J Physiol Heart Circ Physiol 278(6):H2039–H2049

Arjunan SP (2008) Fractal features of surface electromyogram: a new measure for low level muscle activation. RMIT University, Melbourne

Arjunan SP, Kumar DK (2010) Decoding subtle forearm flexions using fractal features of surface electromyogram from single and multiple sensors. J Neuro Eng Rehabil 7:53

Esteller R, Vachtsevanos G, Echauz J, Litt B (2001) A comparison of waveform fractal dimension algorithms. IEEE Trans Circ Syst I Fundam Theory Appl 48(2):177–183

Xu YB (2018) Teleoperation control of robot based on EMG signal. South China University of Technology, Guangzhou (in Chinese)

Zhou L, Zheng J, Ge ZQ et al (2018) Multimode process monitoring based on switching autoregressive dynamic latent variable model. IEEE Trans Industr Electron 65(10):8184–8194

Phinyomark A, Phukpattaranont P, Limsakul C (2012b) Fractal analysis features for weak and single-channel upper-limb EMG signals. Expert Syst Appl 39(12):11156–11163

Phinyomark A, Quaine F, Charbonnier S, Serviere C, Tarpin-Bernard F, Laurillau Y (2013) EMG feature evaluation for improving myoelectric pattern recognition robustness. Expert Syst Appl 40(12):4832–4840

Bai Y (2018) Research on robot control method based on EMG signal. Shenyang Ligong University, Shenyang (in Chinese)

Sang HP, Seok PL (1998) EMG pattern recognition based on artificial intelligence techniques. IEEE Trans Rehabil Eng 6:400–405

Reza B, Mohammad HM (2003) Evaluation of the forearm EMG signal features for the control of a prosthetic hand. Physiol Meas 24:309–319

Kim KS, Choi HH, Moon CS et al (2011) Comparison of k-nearest neighbor, quadratic discriminant and linear discriminant analysis in classification of electromyogram signals based on the wrist-motion directions. Curr Appl Phys 11(3):740–745

Pan S, Jie J, Liu K et al (2019) Classification methods of sEMG through weighted representation-based k-nearest neighbor. In: International conference on intelligent robotics and applications. Springer, Cham, pp 456–466

Gou JP, Qiu WM, Zhang Yi et al (2019) Locality constrained representation-based K-nearest neighbor classification. Knowl Based Syst 167(3):38–52

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN'95-international conference on neural networks. IEEE, vol 4, pp 1942–1948

Sun C, Jin Y, Cheng R et al (2017) Surrogate-assisted cooperative swarm optimization of high-dimensional expensive problems. IEEE Trans Evol Comput 21(4):644–660

Qin S, Sun C, Zhang G et al (2020) A modified particle swarm optimization based on decomposition with different ideal points for many-objective optimization problems. Complex Intell Syst 6:263–274

Guo Y, Zhang X, Gong D et al (2020) Novel interactive preference-based multi-objective evolutionary optimization for bolt supporting networks. IEEE Trans Evol Comput 24(4):750–764

Guo Y, Yang H, Chen M et al (2019) Ensemble prediction-based dynamic robust multi-objective optimization methods. Swarm Evol Comput 48:156–171

Jie J, Zhang J, Zheng H et al (2016) Formalized model and analysis of mixed swarm based cooperative particle swarm optimization. Neurocomputing 174:542–552

Huang CL, Dun JF (2008) A distributed PSO-SVM hybrid system with feature selection and parameter optimization. Appl Soft Comput 8(4):1381–1391

Khushaba RN, AlSukker A, Al-Ani A et al (2009) A novel swarm based feature selection algorithm in multifunction myoelectric control. J Intell Fuzzy Syst 20(4):175–185

Fang Y (2015) Interacting with prosthetic hands via electromyography signals. University of Portsmouth, London, pp 62–65

Merletti R (1996) Standards for reporting EMG data. J Electromyogr Kinesiol 6(1):3–4

Hudgins B, Parker P, Scott R (1993) A new strategy for multifunction myoelectric control. IEEE Trans Biomed Eng 40(1):82–94

Phinyomark A, Limsakul C, Phukpattaranont P (2009) A novel feature extraction for robust EMG pattern recognition. J Comput 1:71–80

Du S, Vuskovic M (2004) Temporal vs. spectral approach to feature extraction from prehensile EMG signals. In: Proceedings of IEEE international conference on information reuse and integration, pp 344–350

Saridis GN, Gootee TP (1982) EMG pattern analysis and classification for a prosthetic arm. IEEE Trans Biomed Eng 29(6):403–412

Philipson L (1987) The electromyographic signal used for control of upper extremity prostheses and for quantification of motor blockade during epidural anaesthesia. Linköping University, Linköping

Graupe D, Salahi J, Zhang D (1985) Stochastic analysis of myoelectric temporal signatures for multifunctional single-site activation of prostheses and orthoses. J Biomed Eng 7(1):18–28

Zecca M, Micera S, Carrozza MC, Dario P (2002) Control of multifunctional prosthetic hands by processing the electromyographic signal. Crit Rev Biomed Eng 30(4–6):459–485

Park SH, Lee SP (1998) EMG pattern recognition based on artificial intelligence techniques. IEEE Trans Rehabil Eng 6(4):400–405

Öberg T, Sandsjö L, Kadefors R (1994) EMG mean power frequency: obtaining a reference value. Clin Biomech 9(4):253–257

Winter DA (2005) Biomechanics and motor control of human movement, 3rd edn. Wiley, Hoboken

Shi Y, Eberhart RC (1999) Empirical study of particle swarm optimization. In: Proceedings of IEEE congress on evolutionary computation, Washington, pp 1945–1950

Sharma D, Willy C, Bischoff J (2020) Optimal subset selection for causal inference using machine learning ensembles and particle swarm optimization. Complex Intell Syst 466:1–19

Zhang H, Zhou A, Lin X (2020) Interpretable policy derivation for reinforcement learning based on evolutionary feature synthesis. Complex Intell Syst 6(3):741–753

Funding

This work was supported in part by National Natural Science Foundation of China (Grant No. 62003306) and Natural Science Foundation of Zhejiang Province (LY19F030003, LQ21F030009).

Author information

Contributions

Conceptualization, JJ; methodology, JJ and HZ; validation, KL, BW, RD; data curation, KL; writing—original draft preparation, KL and JJ; writing, review and editing, JJ and HZ; supervision, JJ and HZ.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jie, J., Liu, K., Zheng, H. et al. High dimensional feature data reduction of multichannel sEMG for gesture recognition based on double phases PSO. Complex Intell. Syst. 7, 1877–1893 (2021). https://doi.org/10.1007/s40747-020-00232-6

DOI: https://doi.org/10.1007/s40747-020-00232-6