Abstract

This paper presents a hybrid algorithm, called SA-MFO, that combines the moth-flame optimization (MFO) algorithm with simulated annealing (SA). The proposed SA-MFO algorithm takes advantage of both algorithms: the ability of SA to escape from local optima, and the fast searching and learning mechanism of MFO for guiding the generation of candidate solutions. SA-MFO is applied to 23 unconstrained benchmark functions and four well-known constrained engineering problems, and its performance is compared with that of well-known and recent meta-heuristic algorithms. The experimental results show that SA-MFO is competitive with, and in most cases superior to, MFO and the other meta-heuristic algorithms.

Introduction

Optimization is the process of finding global or near-global optimum solutions for a given problem. Many real-world problems can be viewed as optimization problems, and over the past several decades many optimization algorithms have been proposed to solve them. The simulated annealing (SA) algorithm is one of the most popular iterative meta-heuristic optimization algorithms. It is used to solve both continuous and discrete problems [16]. The key advantage of SA is its ability to escape from local optima through the so-called metropolis process. However, its major shortcoming is that its efficiency in finding the global optimum is unsatisfactory, because SA does not learn intelligently from its search history when it generates a new candidate solution [37]. Several studies have tried to enhance the performance of SA, some of them by changing the acceptance or generation mechanisms. However, such modifications of the SA scheme do not usually preserve the ability of SA to escape from local optima [11]. Population-based meta-heuristic algorithms, on the other hand, can guide their search through intelligent learning mechanisms and have therefore shown better efficiency. Examples of these algorithms are particle swarm optimization (PSO) [15], ant colony optimization (ACO) [4], ant lion optimizer (ALO) [22], differential evolution (DE) [35], social group optimization (SGO) [30], and moth-flame optimization (MFO) [24].

In this paper, MFO is selected to be studied and analyzed. MFO is a recent meta-heuristic optimization algorithm proposed in 2015. Its main inspiration is the navigation method of moths in nature, called transverse orientation. The inventor of the algorithm, Mirjalili, showed that MFO obtains very competitive results compared with other meta-heuristic optimization algorithms. MFO has also shown competitive results for automatic mitosis detection in breast cancer histology images [32]. However, like other meta-heuristic algorithms, MFO suffers from entrapment in local optima and a low convergence rate, and several studies have been proposed to address these problems. Li et al. [17] proposed the Lévy-flight moth-flame optimization (LMFO) algorithm, which uses a Lévy-flight strategy in the searching mechanism of MFO. The experimental results on 19 unconstrained benchmark functions and two constrained problems showed the superior performance of LMFO. Emary et al. [5] employed a chaos parameter in the spiral equation that updates the position of the moths and applied their approach to feature selection.

Recently, many studies have hybridized two or more algorithms to obtain optimal solutions for optimization problems [31]. Examples include hybridizing differential evolution with biogeography-based optimization for global optimization [8], particle swarm optimization with differential evolution for constrained numerical and engineering optimization problems [19], chaos with meta-heuristic algorithms for feature selection [33], and a genetic algorithm with particle swarm optimization for multimodal problems [13]. Other researchers have introduced new methods to improve meta-heuristic algorithms. The author of [25] introduced a new mutation rule that is combined with the basic mutation strategy of the differential evolution algorithm and named the resulting algorithm enhanced adaptive differential evolution (EADE). The experimental results show that EADE is a very competitive algorithm for solving large-scale global optimization problems. The authors of [28] combined the binary bat algorithm (BBA) with a local search scheme (LSS) to improve the diversity of BBA and enhance its convergence rate. Their results show that the hybrid algorithm is promising for solving large-scale 0–1 knapsack problems. The authors of [36] introduced a mutation operator guided by preferred regions to enhance the performance of an existing set-based evolutionary many-objective optimization algorithm. The results obtained on 21 instances of seven benchmarks show that their algorithm is superior. The main drivers for this kind of hybridization are to avoid local optima and to improve the search results in global optimization, which becomes essential in multidimensional and multiobjective problems.

Therefore, in this paper, we try another kind of hybridization to solve the main problems of MFO. To the best of our knowledge, MFO has not been hybridized with SA before. The salient features of SA and MFO are combined to create a new approach, namely the simulated annealing moth-flame algorithm (SA-MFO). Simulated annealing is applied while the positions of the flames are updated in the standard version of MFO to further enhance its performance. The proposed SA-MFO is applied to both constrained and unconstrained benchmark problems. The proposed memetic algorithm is mainly based on MFO, whereas the metropolis mechanism of SA is used to slow down the convergence rate of MFO and to increase the probability that the moths reach the global optimum. The metropolis mechanism is also used to increase the diversity of the population. SA-MFO thus has the advantages of both algorithms: the ability of SA to escape from local optima, and the fast searching ability and learning mechanism of MFO for guiding the generation of candidate solutions.

The proposed memetic algorithm is applied to 23 benchmark functions and four well-known engineering problems. These problems were previously used to evaluate the original MFO. We can therefore make an almost fair comparison between SA-MFO and MFO in terms of evaluation criteria, number of iterations, number of independent runs, population size, and number of dimensions. The simulation results demonstrate that SA-MFO can significantly improve the performance of MFO and that it is superior to the other compared algorithms.

The rest of this paper is organized as follows: Sect. 2 provides a brief overview of the standard moth flame optimization algorithm, followed by a brief introduction to simulated annealing. Sect. 3 describes the proposed hybrid algorithm in detail, and Sect. 4 discusses the main results and evaluates the performance of the proposed algorithm. Finally, Sect. 5 concludes the paper.

Basics and background

Moth flame optimization (MFO)

Inspiration

This section describes moth flame optimization (MFO) [24], one of the recent stochastic population-based algorithms. The main inspiration of MFO is the navigation method of moths in nature. Moths are fancy insects that are very similar to the butterfly family. In nature, there are more than 160,000 species of this insect. Larva and adult are the two main milestones of their lifetime, and the larva is converted into a moth inside a cocoon. The most interesting fact about moths is their special method of navigating at night, a mechanism called transverse orientation. A moth flies by maintaining a fixed angle with respect to the moon, which is a very efficient mechanism for traveling long distances in a straight line.

Mathematical model of MFO

Let the candidate solutions be moths and the problem’s variables be the positions of the moths in the search space. P is the spiral function along which moths move around the search space. Each moth updates its position with respect to a flame using the following equation:

$$M_i = P(M_i, F_j), \tag{1}$$

where \(M_i\) indicates the ith moth and \(F_j\) is the jth flame.

Other types of spiral functions can be utilized, subject to the following rules:

1. The initial point of the spiral should start from the moth.

2. The final point of the spiral should be the position of the flame.

3. The fluctuation range of the spiral should not exceed the search space.

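Taking these rules into account, the logarithmic spiral used in MFO is defined as follows:

$$P(M_i, F_j) = D_i \cdot e^{bt} \cdot \cos (2\pi t) + F_j, \tag{2}$$

where \(D_i\) is the distance of the ith moth from the jth flame, t is a random number in \([-1,1]\), and b is a constant that defines the shape of the spiral P. \(D_i\) is calculated using the following equation.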

$$D_i = |F_j - M_i|, \tag{3}$$

where \(M_i\) is the ith moth, \(F_j\) indicates the jth flame, and \(D_i\) indicates the distance of the ith moth to the jth flame.
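The spiral update described above can be illustrated with a minimal Python sketch that moves one moth around one flame. It assumes the logarithmic spiral form of the original MFO description [24], applied independently in each dimension. The function name `spiral_update`, the default `b = 1.0`, and the per-dimension sampling of `t` are illustrative assumptions and not details given in this paper.

```python
import math
import random


def spiral_update(moth, flame, b=1.0, rng=random):
    """Return a new position for `moth` spiralling around `flame`.

    Assumed form (per dimension): D * exp(b * t) * cos(2 * pi * t) + flame,
    where D = |flame - moth| and t is drawn uniformly from [-1, 1].
    """
    new_position = []
    for m, f in zip(moth, flame):
        distance = abs(f - m)        # D_i: distance of the moth to the flame
        t = rng.uniform(-1.0, 1.0)   # random number in [-1, 1]
        offset = distance * math.exp(b * t) * math.cos(2.0 * math.pi * t)
        new_position.append(offset + f)
    return new_position
```

Under these assumptions, a moth that already sits on its flame stays there (the distance is zero), and the new position never lies farther than \(D_i e^{b}\) from the flame in any dimension.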

Another concern is that the moths update their positions with respect to n different locations in the search space, which can degrade the exploitation of the most promising solutions. Therefore, the number of flames is adaptively decreased over the course of the iterations using the following formula:

$$\text{flame no} = \text{round}\left( FN - I \times \frac{FN - 1}{IN}\right), \tag{4}$$

where I is the current iteration number, IN is the maximum number of iterations, and FN is the maximum number of flames.
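The adaptive decrease of the number of flames can be sketched as follows. The sketch assumes the linear schedule round(FN − I × (FN − 1) / IN) of the original MFO paper [24]; the function and argument names are hypothetical.

```python
def flame_count(iteration, max_iterations, max_flames):
    """Number of flames kept at `iteration`.

    Assumes the linear decrease round(FN - I * (FN - 1) / IN), so the count
    goes from `max_flames` at iteration 0 down to 1 at the last iteration.
    """
    return round(max_flames - iteration * (max_flames - 1) / max_iterations)
```

With 50 flames and 1000 iterations, for example, `flame_count(0, 1000, 50)` gives 50 and `flame_count(1000, 1000, 50)` gives 1, so all moths eventually exploit the single best flame.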

MFO utilizes the Quicksort method, whose computational complexity is \(O(n\log n)\) in the best case and \(O(n^{2})\) in the worst case, where n denotes the number of moths.

Simulated annealing (SA)

Simulated annealing (SA) was proposed by Metropolis et al. [21] in 1953. Its main inspiration is the simulation of molecular movement in a material during annealing (physical annealing principles). Annealing is the process of heating a material and then cooling it so that it recrystallizes. At high temperature, the particles move in disorder; as the temperature decreases gradually, the particles converge to the lowest energy state. SA uses a probabilistic acceptance mechanism when deciding on the current optimal solution. It was successfully applied to optimization problems by Kirkpatrick et al. [16] in 1983. By using the acceptance probability of the metropolis process, the annealing mechanism can be simulated to find the lowest energy state, which corresponds to the optimum solution. The main components of SA are the initial condition, the metropolis acceptance mechanism, and the cooling schedule. They are described as follows:

- Initial condition: The search process of SA starts by setting the initial temperature \(T_{0}\), which has a significant impact on performance. A higher value of \(T_{0}\) increases the computational time of the optimization process, whereas a value of \(T_{0}\) that is too low prevents SA from exploring the search space effectively. Thus, \(T_{0}\) must be selected carefully to obtain the optimal solution.

- Metropolis acceptance mechanism: This is one of the key factors in obtaining a near-optimal solution quickly. The metropolis rule decides whether a neighboring solution with a worse fitness value is accepted as the current solution, and it therefore determines the capability of SA to escape from local optima. Let \(F_\mathrm{{C}}=F(Y_{0})\) represent the fitness value of the current solution \(Y_{0}\), and let \(F_\mathrm{{N}}=F(Y)\) denote the fitness value of the neighboring solution Y. When \(F(Y_{0})\) is better than F(Y), SA uses the acceptance probability to decide whether to take the neighboring solution as the current solution. The acceptance probability is defined as follows:

$$\begin{aligned} {\text {Pr}}=\mathrm{{e}}^{\left( \frac{-(F(Y)-F(Y_{0}))}{T_{k}}\right) }, \end{aligned} \tag{5}$$

where Pr is the probability of acceptance and \(T_{k}\) is the temperature value at time k.

- Cooling schedule: During the physical annealing process, the cooling rate decreases as the temperature decreases so that the system reaches a stable ground state. The system therefore needs to be cooled faster at the beginning and more slowly as the temperature gradually decreases. The cooling schedule is defined as follows:

$$\begin{aligned} T_{k+1}=\alpha \times T_{k}, \end{aligned} \tag{6}$$

where \(\alpha \) denotes the temperature coefficient, \(T_k\) is the temperature value at time k, and \(T_{k+1}\) is the temperature at time \(k+1\).
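The acceptance rule of Eq. (5) and the cooling schedule of Eq. (6) can be sketched in a few lines of Python. The sketch assumes a minimization problem, so a neighbor with a lower fitness value counts as better. The function names `metropolis_accept` and `cool` are hypothetical.

```python
import math
import random


def metropolis_accept(f_current, f_neighbor, temperature, rng=random):
    """Decide whether to accept the neighbor (minimization assumed).

    A better or equal neighbor is always accepted; a worse one is accepted
    with probability exp(-(f_neighbor - f_current) / temperature), Eq. (5).
    """
    if f_neighbor <= f_current:
        return True
    probability = math.exp(-(f_neighbor - f_current) / temperature)
    return rng.random() < probability


def cool(temperature, alpha):
    """Geometric cooling step of Eq. (6): T_{k+1} = alpha * T_k."""
    return alpha * temperature
```

At a high temperature the exponent is close to zero, so worse neighbors are accepted almost always. As the temperature is cooled, the acceptance probability of a worse neighbor shrinks toward zero and the search becomes greedy.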

The proposed SA-MFO algorithm

In the MFO algorithm, the moths’ positions are randomly initialized. The moth swarm then updates its positions using Eq. (2), which determines the next position of each moth. The standard version of MFO automatically accepts the newly computed position as the new moth position. SA-MFO, in contrast, uses the metropolis acceptance mechanism of SA to decide whether to accept the new moth position. If the new position is not accepted, another candidate position is calculated. The selection is based on the fitness value. With this mechanism, a moth is able to escape from local optima, and the quality of the solution is enhanced with a faster convergence rate [34]. Based on the temperature, the cooling schedule parameters, and the fitness difference used by the metropolis mechanism, the optimization process explores the search space effectively to find an optimal solution. This process is repeated until a new position is accepted by the metropolis acceptance rule or the termination criterion is reached.

Parameters initialization

SA-MFO starts by setting the MFO parameters and randomly initializing the moths’ positions within the search space. Each position represents a solution in the search space. The initial parameter settings are presented in Table 1.

Fitness function

At each iteration, each moth position is evaluated using a predefined fitness function \(f(\mathbf {z})\). Based on this value, the metropolis rule is applied to determine whether to accept the position or not.

Updating positions

If the current moth is accepted as the new moth, then the new moth changes its position using Eq. (2).

Termination criteria

The optimization process terminates when it reaches the maximum number of iterations or when the best solution is found [7]. In our case, the maximum number of iterations is set to 1000 (see Table 1).

The overall SA-MFO algorithm is given in Algorithm (1). Moreover, a summary flowchart of the proposed simulated annealing moth-flame algorithm is given in Fig. 1.
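To make the interplay of the steps above concrete, the following Python sketch combines an MFO-style spiral update with a metropolis acceptance test. It is not a transcription of Algorithm (1): the function name `sa_mfo`, the retry limit `max_tries`, the clipping to the bounds, the merging of flames, and the default values of `t0` and `alpha` (the tuned percentages read as fractions) are all assumptions made for illustration, and a minimization problem is assumed.

```python
import math
import random


def sa_mfo(objective, bounds, n_moths=20, max_iter=200, t0=0.9, alpha=0.1,
           b=1.0, max_tries=3, seed=0):
    """Minimize `objective` over the box `bounds` (illustrative sketch only)."""
    rng = random.Random(seed)
    moths = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_moths)]
    fitness = [objective(m) for m in moths]
    flames = sorted(zip(fitness, moths), key=lambda pair: pair[0])  # best first
    temperature = t0

    for iteration in range(1, max_iter + 1):
        # Assumed linear decrease of the number of flames, as in the original MFO.
        n_flames = round(n_moths - iteration * (n_moths - 1) / max_iter)
        for i in range(n_moths):
            flame = flames[min(i, n_flames - 1)][1]
            for _ in range(max_tries):  # hypothetical cap on re-sampling
                candidate = []
                for (lo, hi), m, f in zip(bounds, moths[i], flame):
                    t = rng.uniform(-1.0, 1.0)
                    x = abs(f - m) * math.exp(b * t) * math.cos(2 * math.pi * t) + f
                    candidate.append(min(max(x, lo), hi))  # stay inside the bounds
                cand_fit = objective(candidate)
                delta = cand_fit - fitness[i]
                # Metropolis rule: always accept improvements, sometimes worse moves.
                if delta <= 0 or rng.random() < math.exp(-delta / temperature):
                    moths[i], fitness[i] = candidate, cand_fit
                    break
        # Flames keep the best positions seen so far (old flames plus current moths).
        merged = flames + list(zip(fitness, moths))
        flames = sorted(merged, key=lambda pair: pair[0])[:n_moths]
        # Geometric cooling, floored so the division above stays defined.
        temperature = max(alpha * temperature, 1e-12)
    return flames[0][1], flames[0][0]
```

A call such as `sa_mfo(lambda x: sum(v * v for v in x), [(-5.0, 5.0)] * 2)` returns the best position found and its fitness. Because the flames retain the best positions seen so far, accepting a worse moth position never loses the best solution.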

Results and discussion

In this paper, 23 optimization benchmark problems with different characteristics and four engineering problems are employed to evaluate the performance of the proposed SA-MFO algorithm. The 23 benchmark problems are divided into two families: unimodal and multimodal. Tables 11 and 12 in Appendices 1 and 2 describe the mathematical formulation and the properties of the benchmark functions used in this paper. In these tables, lb and ub represent the lower and upper bounds of the search space, respectively, dim indicates the number of dimensions, and opt indicates the optimum solution of the function. More information about the adopted benchmark functions can be found in [18]. The functions of the first family, the unimodal test functions, have only one global optimum and no local optima. They are used to evaluate the exploitation ability and convergence rate of the adopted algorithms. The functions of the second family, the multimodal test functions, have multiple global optima and multiple local optima. They are used to evaluate the capability of an algorithm to avoid local optima and its explorative ability.
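The difference between the two families can be illustrated with two widely used test functions, the sphere function (unimodal) and the Rastrigin function (multimodal). They are chosen here only as familiar examples; whether they coincide with particular entries of Tables 11 and 12 is not assumed.

```python
import math


def sphere(x):
    """Unimodal: a single minimum f(0, ..., 0) = 0 and no other local minima."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: global minimum f(0, ..., 0) = 0 surrounded by many local minima."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)
```

On the sphere function any downhill move leads toward the optimum, so it mainly tests exploitation and convergence speed. On the Rastrigin function a purely greedy search can stall in one of the many local minima, which is the situation the metropolis mechanism is meant to help with.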

In this section, three main experiments are conducted to evaluate the performance of the SA-MFO algorithm. The first experiment aims to determine the optimal values of the parameters \(T_{0}\) and \(\alpha \). The second experiment evaluates SA-MFO and compares it with five well-known optimization algorithms, namely particle swarm optimization (PSO) [15], artificial bee colony (ABC) [14], moth flame optimization (MFO) [24], multi verse optimization (MVO) [23], and ant lion optimizer (ALO) [22], in solving 23 numerical optimization problems using different statistical measurements. The parameter settings of all meta-heuristic optimization algorithms are shown in Table 2. For the remaining settings, the maximum number of iterations is set to 1000 for all functions, the number of search agents is set to 50 for all comparisons, and the initialization of the search agents is the same for all adopted algorithms. These settings are chosen to make the comparison between the algorithms as fair as possible. The third and last experiment evaluates the performance of SA-MFO on engineering problems and compares the best results obtained with those of other meta-heuristic algorithms. All results for both the benchmark and the engineering problems are averaged over 30 independent runs. Moreover, all experiments are performed on the same PC with a Core i3 processor and 2 GB of RAM running Windows 7.

Parameters optimization experiment

Due to the stochastic behavior of optimization algorithms, certain parameters need to be set carefully, because they can have a significant influence on the performance of the algorithm. The main parameters of SA are \(T_{0}\) and \(\alpha \), which affect the efficiency of the search for the optimal solution and therefore need to be tuned. In this paper, the optimal values of \(T_{0}\) and \(\alpha \) are identified based on the highest success rate over 50 runs of \(F_1,\ F_{10}\) and \(F_{18}\). This paper uses the mechanism proposed in [34], which optimizes one parameter per simulation: once the optimal value of one parameter is determined, the process is repeated to find the optimal value of the next parameter. Every result that reaches \(1\times 10^{-3}\) is counted as a success.
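The success-rate criterion can be sketched as follows. The sketch assumes that "reaching" the threshold means that the final error of a run is at or below \(1\times 10^{-3}\); the function names and the dictionary layout are hypothetical.

```python
def success_rate(final_errors, threshold=1e-3):
    """Fraction of runs whose final error is at or below `threshold`."""
    return sum(1 for err in final_errors if err <= threshold) / len(final_errors)


def best_setting(errors_by_value, threshold=1e-3):
    """Pick the parameter value with the highest success rate.

    `errors_by_value` maps each candidate parameter value to the list of
    final errors obtained over the independent runs with that value.
    """
    return max(errors_by_value,
               key=lambda value: success_rate(errors_by_value[value], threshold))
```

In a one-parameter-at-a-time scheme, `best_setting` would first be applied to the runs obtained for the candidate values of one parameter, and then, with that parameter fixed, to the runs obtained for the candidate values of the next one.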

Table 3 shows the simulation results obtained for selecting the optimal value of \(T_{0}\). Changing the value of \(T_{0}\) strongly affects the initial acceptance rate of SA and SA-MFO. If \(T_{0}\) is too high, the algorithm spends much time exploiting the solution space, and if \(\alpha \) is too low, the search time increases. In this paper, \(T_{0}\) is initially set to 10% and increased by 10% each time until it reaches 90%, as shown in Table 3. As can be seen, a \(T_{0}\) value of 90% improves the success rate.

\(\alpha \) strongly affects the acceptance of poor solutions, which may lead to exploring the search space without finding an optimal solution. If \(\alpha \) is too low, the search may get stuck in local optima. In this paper, \(\alpha \) is initially set to 10% and increased by 10% each time until it reaches 90%, as shown in Table 4. As can be seen, an \(\alpha \) value of 10% improves the success rate.

Comparison using numerical benchmark functions experiment

In this subsection, the performance of SA-MFO is analyzed and compared with other meta-heuristic algorithms in terms of the mean and standard deviation (std.) over 30 independent runs. The p value of the Wilcoxon rank sum test is used as well. Table 5 shows the statistical results obtained for the 23 benchmark functions using SA-MFO, PSO, ALO, ABC, MFO, and MVO. As can be observed from Table 5, SA-MFO outperforms the other algorithms on most of the unimodal and multimodal test functions. The second best results belong to the MFO and PSO algorithms. Considering the characteristics of the multimodal and unimodal test functions and the obtained results, it may be concluded that SA-MFO has a high capability for exploring the promising areas of the search space and for avoiding local optima.

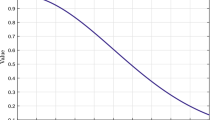

To further analyze the performance of the proposed SA-MFO algorithm, convergence curves for all the benchmark functions are shown in Fig. 2. In this figure, the proposed memetic algorithm is compared with MFO, ABC, MVO, PSO, and ALO. As can be seen, SA-MFO overtakes the other algorithms in most cases in terms of both stability and performance. Moreover, ABC provides the lowest score in most cases, while PSO and MVO are in second place. Figure 3 shows the mathematical representation of some benchmark functions, the average fitness value of all moths in each iteration, and the trajectory of the first moth in the first dimension during optimization. As can be observed, the fluctuations of the first moth gradually decrease over the course of the iterations. This behavior guarantees the transition between exploration and exploitation of the search space. Figure 3 also compares the convergence curves of the proposed algorithm with those of the original MFO. From all these figures, it can be observed that using the metropolis mechanism can significantly enhance the performance of the original MFO.

From the obtained results, it can also be observed that SA-MFO in some cases obtains results similar to those of the original MFO. The explanation is that the metropolis mechanism is only applied when the current solution is worse than the best solution obtained so far. Thus, in some cases, the current solution is not accepted based on the probability of acceptance, and SA-MFO obtains results similar to those of the original MFO.

A non-parametric statistical test, namely the Wilcoxon rank sum test, is conducted in this paper. This test is used to verify whether two sets of solutions are statistically different or not [40]. Here, it is used to compare and analyze the difference between the solutions (population) of the proposed algorithm and those of the other algorithms. A p value below 0.05 is considered sufficient evidence against the null hypothesis. The p values produced by Wilcoxon’s test for the 23 benchmark functions, based on a pair-wise comparison of the best scores obtained over all 1000 iterations at a 5% significance level, are reported in Table 6. The pair-wise comparisons are SA-MFO vs. MFO, SA-MFO vs. ALO, SA-MFO vs. PSO, SA-MFO vs. ABC, and SA-MFO vs. MVO. In this table, N/A denotes "not applicable," which means that the proposed algorithm could not be compared with the other algorithm in the rank-sum test. As can be observed from this table, SA-MFO outperforms the other algorithms on both unimodal and multimodal test functions, since it provides a p value of less than 0.05 for most of the functions. It can also be seen that SA-MFO rejects the null hypothesis for \(F_{16}\) and \(F_{17}\) against most of the competing algorithms. From all the obtained results, it can be concluded that SA-MFO has high exploitation and exploration abilities compared with the original MFO and the other meta-heuristic algorithms. Regarding the p values, it can be concluded that the improvement obtained by SA-MFO is statistically significant.
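For readers who want to reproduce this kind of comparison, the following Python sketch computes a two-sided rank-sum p value with the normal approximation. It is a simplified stand-in for the test used in the paper: ties receive average ranks, but no tie or continuity correction is applied, so the value is approximate for small samples. Library routines such as `scipy.stats.ranksums` offer a ready-made alternative. The function name `rank_sum_p_value` is hypothetical.

```python
import math


def rank_sum_p_value(sample_a, sample_b):
    """Two-sided Wilcoxon rank-sum p value via the normal approximation."""
    n_a, n_b = len(sample_a), len(sample_b)
    values = list(sample_a) + list(sample_b)
    pooled = sorted((value, idx) for idx, value in enumerate(values))
    ranks = [0.0] * (n_a + n_b)
    start = 0
    while start < len(pooled):
        end = start
        # Extend the block over all entries tied with pooled[start].
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[start][0]:
            end += 1
        average_rank = (start + end) / 2.0 + 1.0  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[pooled[k][1]] = average_rank
        start = end + 1
    w = sum(ranks[:n_a])  # rank sum of the first sample
    mean_w = n_a * (n_a + n_b + 1) / 2.0
    std_w = math.sqrt(n_a * n_b * (n_a + n_b + 1) / 12.0)
    z = (w - mean_w) / std_w
    return math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
```

Applied to the best scores of two algorithms over 30 independent runs each, a returned value below 0.05 would be read as evidence that the two sets of results differ.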

Comparison using engineering design problems experiment

In this section, the performance of the proposed SA-MFO is evaluated using four well-known engineering problems, namely the three-bar truss, welded beam, pressure vessel, and tension/compression spring design problems. A detailed description of the adopted engineering problems is presented in Appendix 2. Table 7 presents the optimal solutions for the three-bar truss design problem obtained by particle swarm optimization with differential evolution (PSODE) [20], dynamic stochastic selection (DEDS) [42], the mine blast algorithm (MBA) [29], the water cycle algorithm (WCA) [6], MFO, and SA-MFO. As can be seen from this table, SA-MFO obtained better results than the standard MFO. Table 8 compares the optimal solution obtained for the pressure vessel problem by SA-MFO with those of hybrid particle swarm optimization (HPSO) [10], co-evolutionary particle swarm optimization using Gaussian distribution (CPSOGD) [27], GA with dominance-based tournament selection (GA-TTS) [3], co-evolutionary differential evolution (CDE) [12], PSO [15], hybrid Nelder-Mead simplex search and particle swarm optimization (NMPSO) [41], Gaussian quantum-behaved particle swarm optimization (GQPSO) [1], and MFO. As it can be observed, SA-PSO and ACO obtained the optimal solutions compared with the other algorithms.

Table 9 compares the best results obtained for the tension/compression spring design problem by SA-MFO with those of CPSO, DEDS, NM-PSO, GA-TTS, the hybrid evolutionary algorithm with adaptive constraint handling technique (HEAA) [39], the mine blast algorithm (MBA) [29], WCA, differential evolution with level comparison (DELC) [38], and MFO. In this table, NA indicates a value that is "not available". As can be seen, NM-PSO obtained the optimal solution. However, SA-MFO obtained better results than the original MFO. The best solutions for the welded beam problem obtained by WCA, GA-TTS, cultural algorithms with evolutionary programming (CAEP) [2], MFO, HGA, MBA, CPSO, NM-PSO, the gravitational search algorithm (GSA) [26], HPSO, and SA-MFO are shown in Table 10. From this table, it can be seen that SA-MFO overtakes the other algorithms.

Computational complexity of the proposed SA-MFO algorithm

In this section, the computational complexity of the proposed hybrid algorithm is computed. The computational complexity of a meta-heuristic algorithm mainly depends on the number of variables, the number of moths, the flames’ sorting mechanism in each iteration, and the maximum number of iterations [24]. Since the original MFO uses the Quicksort mechanism, the computational complexity of sorting is \(O(n^2)\) in the worst case. In addition, the temperature in simulated annealing goes through \(O(\log (m))\) [9]. The overall computational complexity is \(O(T\times \log (m)(m^2+m\times v))\), where T is the maximum number of iterations, v is the number of variables, and m is the number of moths (denoted n above).

Conclusions

This paper introduced a new hybrid algorithm based on MFO and SA. The metropolis acceptance mechanism of SA is embedded into the standard MFO to enhance its performance. The simulation results on 23 benchmark functions show that the proposed SA-MFO is superior to the original MFO and to other recent meta-heuristic algorithms, namely ABC, ALO, PSO, and MVO. Moreover, four well-known engineering design problems were solved using SA-MFO. The experimental results show that SA-MFO obtained competitive results compared with other algorithms proposed in the literature for solving engineering problems. Future work could concentrate on applying SA-MFO to more complex optimization problems.

References

Coelho L (2010) Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Syst Appl 37:1676–1683

Coello C, Becerra R (2004) Efficient evolutionary optimization through the use of a cultural algorithm. Eng Optim 36:219–236

Coello C, Mezura E (2002) Constraint-handling in genetic algorithms through the use of dominance-based tournament selection. Adv Eng Inform 16:193–203

Colorni A, Dorigo M, Maniezzo V (1992) Distributed optimization by ant colonies. In: Proceedings of the first European conference on artificial life. Paris, France, pp 134–142

Emary E, Zawbaa H (2016) Impact of chaos functions on modern swarm optimizers. PLoS One 11(7):1–26

Eskandar H, Sadollah A, Bahreininejad A, Hamdi M (2012) Water cycle algorithm a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct 110:151–166

Gaber T, Sayed G, Anter A, Soliman M, Ali M, Semary N et al (2015) Thermogram breast cancer prediction approach based on neutrosophic sets and fuzzy c-means algorithm. In: The 37th annual international conference of the IEEE engineering in medicine and biology society (EMBC). Milan, Italy, pp 4254–4257

Gong W, Cai Z, Ling C (2010) DE/BBO: a hybrid differential evolution with biogeography-based optimization for global numerical optimization. Soft Comput 15(4):645–665

Hansen PB (1992) Simulated annealing. Electr Eng Comput Sci Tech Rep 170:1–14

He Q, Wang L (2007) A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl Math Comput 186:1407–1422

Henderson D, Jacobson S, Johnson A (2003) The theory and practice of simulated annealing. Handb Metaheuristics 57:287–319

Huang F, Wang L, He Q (2007) An effective co-evolutionary differential evolution for constrained optimization. Appl Math Comput 186(1):340–356

Kao T, Zahara E (2008) A hybrid genetic algorithm and particle swarm optimization for multimodal functions. Appl Soft Comput 8(2):849–857

Karaboga D, Basturk B (2007) Artificial bee colony (ABC) optimization algorithm for solving constrained optimization problems. In: 12th international fuzzy systems association world congress, vol 4529. Mexico, pp 789–798

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: IEEE international conference on neural networks. Perth, WA, pp 1942–1948

Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Science 220(4598):671–680

Li Z, Zhou Y, Zhang S, Song J (2016) Lévy-flight moth-flame algorithm for function optimization and engineering design problems. Math Probl Eng 2016:1–22

Liang J, Suganthan P, Deb K (2005) Novel composition test functions for numerical global optimization. In: Proceedings 2005 IEEE swarm intelligence symposium. pp 68–75

Liu H, Cai Z, Wang Y (2010) Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl Soft Comput 10(2):629–640

Liu H, Cai Z, Wang Y (2010) Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl Soft Comput 10:629–640

Metropolis N, Rosenbluth AW, Rosenbluth MN, Teller AH (1953) Equation of state calculations by fast computing machines. J Chem Phys 21(6):1087–1092

Mirjalili S (2015) The ant lion optimizer. Adv Eng Softw 83:80–98

Mirjalili S, Mirjalili SM, Hatamlou A (2016) Multi-verse optimizer: a nature-inspired algorithm for global optimization. Neural Comput Appl 27(2):495–513

Mirjalili SM (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl Based Syst (Elsevier) 89:228–249

Mohamed A (2017) Solving large-scale global optimization problems using enhanced adaptive differential evolution algorithm. Complex Intell Syst 3(4):205–231

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179:2232–2248

Renato A, Leandro D, Santos C (2006) Coevolutionary particle swarm optimization using Gaussian distribution for solving constrained optimization problems. IEEE Trans Syst Man Cybern Part C (Applications and Reviews) 36:1407–1416

Rizk-Allah R, Hassanien A (2017) New binary bat algorithm for solving 0–1 knapsack problem. Complex Intell Syst. https://doi.org/10.1007/s40747-017-0050-z

Sadollah A, Bahreininejad A, Eskandar H, Hamdi M (2013) Mine blast algorithm: a new population based algorithm for solving constrained engineering optimization problems. Appl Soft Comput 13(5):2592–2612

Satapathy S, Naik A (2016) Social group optimization (SGO): a new population evolutionary optimization technique. Complex Intell Syst 2(3):173–203

Sayed G, Darwish A, Hassanien A (2017) Quantum multiverse optimization algorithm for optimization problems. Neural Comput Appl. https://doi.org/10.1007/s00521-017-3228-9

Sayed G, Hassanien A (2017) Moth-flame swarm optimization with neutrosophic sets for automatic mitosis detection in breast cancer histology images. Appl Intell 47(2):397–408

Sayed G, Hassanien A, Azar A (2017) Feature selection via a novel chaotic crow search algorithm. Neural Comput Appl. https://doi.org/10.1007/s00521-017-2988-6

Shieh H, Kuo C, Chiang C (2011) Modified particle swarm optimization algorithm with simulated annealing behavior and its numerical verification. Appl Math Comput 218(8):4365–4383

Storn R, Price K (1997) Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Sun J, Sun F, Gong D, Zeng X (2017) A mutation operator guided by preferred regions for set-based many-objective evolutionary optimization. Complex Intell Syst 3(4):265–278

Wang CY, Lin M, Zhong YW, Zhang H (2016) Swarm simulated annealing algorithm with knowledge-based sampling for travelling salesman problem. Int J Intell Syst Technol Appl 15(1):74–94

Wang L, Li L (2010) An effective differential evolution with level comparison for constrained engineering design. Struct Multidiscip Optim 41:947–963

Wang Z, Cai Y, Zhou Y, Fan Z (2009) Constrained optimization based on hybrid evolutionary algorithm and adaptive constraint handling technique. Struct Multidiscip Optim 37:395–413

Wilcoxon F (1945) Individual comparisons by ranking methods. Biom Bull 1:80–83

Zahara E, Kao Y (2009) Hybrid Nelder-Mead simplex search and particle swarm optimization for constrained engineering design problems. Expert Syst Appl 36:3880–3886

Zhang M, Luo W, Wang X (2008) Differential evolution with dynamic stochastic selection for constrained optimization. Inf Sci 178:3043–3074

Appendices

Appendix 1: List of 23 benchmark optimization functions

Appendix 2: Details of the four constrained design benchmark optimization problems

Compression spring design problem

This is a minimization problem with three main variables: coil diameter (CD), wire diameter (WD), and the number of active coils (NAC). It is subject to restrictions on minimum deflection, surge frequency, and shear stress. This problem can be mathematically formulated as follows:
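The formulation itself is missing from this copy of the appendix. As a stand-in, the sketch below encodes the version of this problem that is widely used in the constrained-optimization literature. It is an assumption that the authors used the same coefficients and bounds. The function names, the argument names `d` (wire diameter, WD), `D` (coil diameter, CD), and `N` (number of active coils, NAC), and the `SPRING_BOUNDS` table are illustrative and do not come from the paper.

```python
# Sketch of the commonly used compression spring benchmark (assumed form,
# not confirmed by the paper). Constraints follow the g(x) <= 0 convention.

# Assumed variable bounds: wire diameter d, coil diameter D, active coils N.
SPRING_BOUNDS = {"d": (0.05, 2.0), "D": (0.25, 1.3), "N": (2.0, 15.0)}


def spring_objective(d, D, N):
    """Quantity proportional to the spring weight: (N + 2) * D * d^2."""
    return (N + 2.0) * D * d ** 2


def spring_constraints(d, D, N):
    """Return [g1, g2, g3, g4]; the design is feasible when all are <= 0."""
    # g1: minimum deflection
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)
    # g2: shear stress
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)
    # g3: surge frequency
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)
    # g4: limit on the outside diameter
    g4 = (d + D) / 1.5 - 1.0
    return [g1, g2, g3, g4]


def spring_is_feasible(d, D, N, tol=0.0):
    """True when every constraint value is at most tol."""
    return all(g <= tol for g in spring_constraints(d, D, N))
```

Under this assumed form, a design near (d, D, N) = (0.0517, 0.3567, 11.29) is often quoted in the literature as close to optimal, with an objective value of about 0.01267. The deflection and shear stress constraints are nearly active at that point, so a small tolerance is needed when checking feasibility with rounded values.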

Welded beam design problem

Welded beam design is a cost minimization problem with four variables: the length of the bar attached to the weld (l), the weld thickness (h), the height of the bar (t), and the thickness of the bar (b). The constraints involve the bending stress in the beam \((\alpha )\), the beam deflection \((\beta )\), the buckling load on the bar (BL), the end deflection of the beam \((\delta )\), and side constraints. This design problem can then be formulated as follows:
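The equations for this problem are not present in this copy. The sketch below follows one widely used statement of the welded beam benchmark. Several variants circulate in the literature, so treating this one as the authors' formulation is an assumption. The constant values, the function names, and the intermediate names (`tau`, `sigma`, `delta`, `p_c`) are conventional choices rather than the paper's notation.

```python
import math

# Assumed problem constants (imperial units, as in the common benchmark).
P = 6000.0           # applied load, lb
L = 14.0             # overhang length of the bar, in
E = 30e6             # Young's modulus, psi
G = 12e6             # shear modulus, psi
TAU_MAX = 13600.0    # allowable shear stress in the weld, psi
SIGMA_MAX = 30000.0  # allowable bending stress in the beam, psi
DELTA_MAX = 0.25     # allowable end deflection, in


def welded_beam_objective(h, l, t, b):
    """Fabrication cost: weld material plus bar material."""
    return 1.10471 * h ** 2 * l + 0.04811 * t * b * (14.0 + l)


def welded_beam_constraints(h, l, t, b):
    """Return [g1..g7]; the design is feasible when all are <= 0."""
    tau_p = P / (math.sqrt(2.0) * h * l)            # primary shear stress
    M = P * (L + l / 2.0)                           # moment on the weld
    R = math.sqrt(l ** 2 / 4.0 + ((h + t) / 2.0) ** 2)
    J = 2.0 * math.sqrt(2.0) * h * l * (l ** 2 / 12.0 + ((h + t) / 2.0) ** 2)
    tau_pp = M * R / J                              # torsional shear stress
    tau = math.sqrt(tau_p ** 2 + tau_p * tau_pp * l / R + tau_pp ** 2)
    sigma = 6.0 * P * L / (b * t ** 2)              # bending stress
    delta = 4.0 * P * L ** 3 / (E * t ** 3 * b)     # end deflection
    p_c = (4.013 * E * (t * b ** 3 / 6.0) / L ** 2
           * (1.0 - t / (2.0 * L) * math.sqrt(E / (4.0 * G))))  # buckling load
    return [
        tau - TAU_MAX,                                               # g1
        sigma - SIGMA_MAX,                                           # g2
        h - b,                                                       # g3
        0.10471 * h ** 2 + 0.04811 * t * b * (14.0 + l) - 5.0,       # g4
        0.125 - h,                                                   # g5
        delta - DELTA_MAX,                                           # g6
        P - p_c,                                                     # g7
    ]
```

The side constraints are assumed to be simple bounds on h, l, t, and b, which an optimizer would normally enforce by clipping candidate solutions rather than through the list above.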

Pressure vessel design problem

The main objective of the pressure vessel design problem is to minimize the total cost of welding and forming the pressure vessel. The problem has four variables: the thickness of the head \((T_\mathrm{{h}})\), the thickness of the shell \((T_\mathrm{{s}})\), the inner radius (R), and the length of the cylindrical section of the vessel (L).
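No equations accompany this description in the present copy. The sketch below shows the cost function and constraints as they usually appear in the literature for this benchmark, on the assumption that the authors used the same form. The function and argument names (`ts`, `th`, `r`, `length`) are illustrative. In the usual statement the two thicknesses are restricted to multiples of 0.0625 in, which this sketch does not enforce.

```python
import math


def pressure_vessel_objective(ts, th, r, length):
    """Total cost for shell thickness ts, head thickness th, radius r, length."""
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


def pressure_vessel_constraints(ts, th, r, length):
    """Return [g1, g2, g3, g4]; the design is feasible when all are <= 0."""
    g1 = -ts + 0.0193 * r                    # minimum shell thickness
    g2 = -th + 0.00954 * r                   # minimum head thickness
    g3 = (-math.pi * r ** 2 * length         # minimum enclosed volume
          - (4.0 / 3.0) * math.pi * r ** 3
          + 1296000.0)
    g4 = length - 240.0                      # maximum cylinder length
    return [g1, g2, g3, g4]
```

For example, the deliberately non-optimal design `(ts, th, r, length) = (1.0, 1.0, 50.0, 200.0)` satisfies all four constraints and has a cost of about 12294.47 under this formulation.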

The three-bar truss design problem

This is a minimization problem that can be described mathematically as follows:
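The mathematical description did not survive in this copy. The sketch below gives the form of the three-bar truss benchmark that is standard in the literature: the structural volume is minimized over two cross-sectional areas, subject to three stress constraints. That the authors used exactly these constants is an assumption, and the names `a1`, `a2`, `BAR_LENGTH`, `LOAD`, and `STRESS_LIMIT` are introduced here for illustration.

```python
import math

# Assumed constants of the standard benchmark.
BAR_LENGTH = 100.0   # cm
LOAD = 2.0           # kN/cm^2
STRESS_LIMIT = 2.0   # kN/cm^2
SQRT2 = math.sqrt(2.0)


def truss_objective(a1, a2):
    """Volume of the truss for cross-sectional areas a1 and a2 (0 < a <= 1)."""
    return (2.0 * SQRT2 * a1 + a2) * BAR_LENGTH


def truss_constraints(a1, a2):
    """Return [g1, g2, g3]; the design is feasible when all are <= 0."""
    denom = SQRT2 * a1 ** 2 + 2.0 * a1 * a2
    g1 = (SQRT2 * a1 + a2) / denom * LOAD - STRESS_LIMIT
    g2 = a2 / denom * LOAD - STRESS_LIMIT
    g3 = 1.0 / (SQRT2 * a2 + a1) * LOAD - STRESS_LIMIT
    return [g1, g2, g3]
```

At the upper bounds `a1 = a2 = 1.0` the design is feasible with a volume of about 382.84. An optimizer would aim to reduce this value while keeping all three constraint values non-positive.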

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sayed, G.I., Hassanien, A.E. A hybrid SA-MFO algorithm for function optimization and engineering design problems. Complex Intell. Syst. 4, 195–212 (2018). https://doi.org/10.1007/s40747-018-0066-z

DOI: https://doi.org/10.1007/s40747-018-0066-z