Abstract

The signaling perspective offers an alternative to the Skinnerian view of behavior. The signaling effects of reinforcers have predominantly been explored in the laboratory with nonhuman subjects. To test the implications of this view for applied behavior analysis, we contrasted the effects of discriminative stimulus versus reinforcer control in children with autism spectrum disorder (ASD). We aimed to determine whether the duration of their transitions from one reinforcer context to another is controlled by their most recent past or by the likely future as extrapolated from more extended past experience. Reinforcer context (rich, moderate, or lean) was signaled in the first condition, and transition times to the leaner reinforcer were longer than those to the richer one. Reinforcer context was unsignaled in the second condition, and the differences between transition times disappeared. The difference in durations of transitions to signaled and unsignaled reinforcer densities suggests that behavior is primarily controlled by signals of likely future reinforcers as extrapolated from extended past experience rather than strengthened by the most recent event.



The main concern of all behavior analysts is how the behavior and the environment of organisms interact. Behavior analysts working in experimental laboratories and those working in the applied field alike try to partition the stream of environmental events and behavior into measurable units to understand their interaction. Those trained in Skinner’s tradition (Skinner, 1938) usually partition these interactions into discriminative stimuli, responses, and reinforcers. Discriminative stimuli are understood as antecedents that signal when specific response types (members of response classes) will produce reinforcers, which in turn strengthen the response class in the sense of making future responses of this kind more likely (Skinner, 1938, 1953). For strengthening to occur, reinforcers need to be delivered contingent on responses. For Skinner (1948, 1953), a contingency was primarily defined by temporal contiguity between responses and reinforcers. In “‘Superstition’ in the pigeon,” he explains that “[t]o say that a reinforcement is contingent upon a response may mean nothing more than that it follows the response . . . conditioning takes place presumably because of the temporal relation only, expressed in terms of the order and proximity of response and reinforcement” (Skinner, 1948, p. 168).

However, over the past decades, evidence has been accumulating in favor of a more parsimonious partitioning of the behavior–environment interaction, calling into question whether contiguity is the primary defining characteristic of a contingency. Moreover, many experimental results suggest that the signaling properties of environmental events are sufficient to explain the effects of reinforcers. Accounts of behavior based on a contingency between behavior and environmental events with signaling properties render the concept of response strength superfluous, which is desirable given the many theoretical problems with that concept (see, e.g., Baum, 2002, 2012; Cowie et al., 2011; Cowie & Davison, 2016; Simon et al., 2020, for elaboration). Several terms have been suggested for environmental events that guide behavior: Cowie et al. (2017) called them “signals,” Shahan (2010) “signposts,” Baum “phylogenetically important events” (Baum, 2012) and “inducers” (Baum, 2018), and Borgstede and Eggert (2021) “statistical fitness predictors.” To facilitate readability for a behavior-analytically trained audience, we will continue to speak of the signaling effects of “reinforcers”; in this translational study, which aims to be informative for both applied and basic scientific audiences, we prioritize comprehensibility. However, we wish to move beyond the “re-in-forcing” or “strengthening” connotation that these words carry in the materials sciences (see Shahan, 2017, for a more detailed discussion of this terminology¹). Although we write of the signaling effects of “reinforcers,” we reserve the term “reinforcement” for the process of strengthening by reinforcement in Skinner’s (1938) sense.

Besides seeking a better understanding of the dynamics of transitions in children with ASD, our interest in the role of signaling effects has been catalyzed by the following findings, which do not fit smoothly into Skinner’s (1938) contiguity-based paradigm of response strength modulation by reinforcement:

The signaling properties of reinforcers appear to account smoothly for response patterns on fixed-interval (FI) and fixed-ratio (FR) schedules. The absence or reduction of responding after food delivery on FI and FR schedules may occur because each food pellet obtained signals the beginning of a period in which no food will be delivered, as long as the schedule is arranged so that the last food pellet predicts when the next one can be obtained (Cowie et al., 2011; Cowie & Davison, 2016). Ferster and Skinner (1957) first observed this postreinforcer pause. They concluded that the absence of a reinforcer after several responses have been made or after a fixed time has elapsed serves as a discriminative stimulus.
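The arithmetic behind this signaling account can be sketched as follows. This is a minimal, idealized illustration (no limited hold, no variability in responding), and the function names are hypothetical rather than taken from the cited studies: on both schedules a food delivery resets the requirement, so the delivery itself reliably predicts that more time or more responses must pass before food is available again.

```python
def seconds_until_food(time_since_food: float, fi_interval: float) -> float:
    """Idealized fixed-interval (FI) schedule: the first response made at least
    `fi_interval` seconds after the last food delivery produces food.
    Returns how many more seconds must pass before a response can pay off."""
    return max(0.0, fi_interval - time_since_food)


def responses_until_food(responses_since_food: int, fr_requirement: int) -> int:
    """Idealized fixed-ratio (FR) schedule: every `fr_requirement`-th response
    produces food. Returns how many more responses are needed."""
    return max(0, fr_requirement - responses_since_food)


# Immediately after a food delivery the whole interval (or ratio) lies ahead,
# so the delivery predicts a period without food.
for t in (0, 10, 20, 30):
    print(f"FI 30 s, {t:>2} s after food: wait {seconds_until_food(t, 30):.0f} s")
```

On this reading, pausing right after food is what one would expect if the pellet functions as a signal of the food-free period rather than as a strengthener of the preceding response.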

The discriminative function of reinforcers also accounts for Davison and Baum's (2006) results on conditioned reinforcer effects. They used a frequently changing concurrent-schedule procedure in which the relative rates of primary reinforcers varied across unsignaled components, with seven different food-delivery ratios arranged within each session. In the first experiment, some food deliveries were replaced with presentation of the food-magazine light alone. Because the magazine light was paired with food, they assumed it would constitute a conditioned reinforcer. Both food and magazine-light presentations produced preference pulses at the option that produced them, but the magazine-light pulses tended to be smaller. They concluded that stimulus presentation served as a signal of where food was likely to be obtained. In their second experiment, they arranged a similar procedure but used a brief color change of the key light that was never paired with food. If the stimulus predicted more food on the same option, the preference pulse occurred on that option; if it predicted food on the other option, the pulse occurred on the other option. A process of strengthening by reinforcement, in contrast, would predict a pulse on the most recently reinforced option, which did not happen. In other words, because the correlation of the stimulus with the location of food mattered and pairing the stimulus with food did not, conditioned reinforcer effects seem best understood as signaling effects.
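Preference pulses of this kind are commonly summarized as a log response ratio in successive time bins after an event. A minimal sketch, using hypothetical counts and an assumed 0.5 correction for empty bins (neither taken from Davison & Baum, 2006): positive values indicate preference for the option that produced the event, and values near zero indicate indifference. Under the signaling account, the sign of the pulse tracks where the stimulus predicts food, not simply which option produced it.

```python
import math
from typing import List


def preference_pulse(same: List[int], other: List[int], correction: float = 0.5) -> List[float]:
    """Log10 response ratio (option that produced the event / other option)
    in successive time bins after an event such as a food delivery or a
    key-light change. `correction` is added to every count so that empty bins
    do not produce log(0); its value here is an assumption."""
    if len(same) != len(other):
        raise ValueError("both options need the same number of bins")
    return [math.log10((s + correction) / (o + correction)) for s, o in zip(same, other)]


# Hypothetical response counts in four consecutive bins after an event.
pulse = preference_pulse([12, 9, 6, 5], [2, 3, 5, 5])
print([round(x, 2) for x in pulse])
```

A pulse that starts positive and decays toward zero, as in this invented example, is the typical shape; a negative initial value would indicate a pulse toward the other option.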

Krägeloh et al.’s (2005) data also suggest that the signaling rather than the strengthening properties of environmental events account for behavior. They presented pigeons with two keys producing food pellets. Food was available contingent on pecking the key not most recently pecked; that is, it was contingent on switching pecking location. The pigeons readily learned to alternate between the keys. If the most recent behavior had been strengthened, they would have pecked the same location again. Instead, the extended pattern of food availability (contingent on not having pecked the same location at the last food delivery) signaled where food would be available next. Learning this switching pattern quickly makes sense from a phylogenetic perspective because organisms often consume resources that are depleted at a specific location after consumption; having consumed the resource signals that moving to another location will yield more of it.
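The contrast between the two accounts can be made concrete with a toy simulation. This is a deliberately idealized version of the contingency as described here (every switch pays off; the actual schedule parameters of Krägeloh et al., 2005, are not modeled), and the strategy names are hypothetical: an agent that always repeats its last response never earns food, whereas an agent that treats food on one key as a signal for the other key earns food on every peck.

```python
import random

KEYS = ("left", "right")


def food_delivered(chosen: str, last_pecked: str) -> bool:
    # Idealized switching contingency: food follows a peck on the key
    # that was NOT pecked most recently.
    return chosen != last_pecked


def food_earned(strategy: str, n_pecks: int = 100, seed: int = 0) -> int:
    rng = random.Random(seed)
    last = rng.choice(KEYS)  # arbitrary starting key
    total = 0
    for _ in range(n_pecks):
        if strategy == "repeat":    # repeat the last response
            choice = last
        elif strategy == "switch":  # move to the other key
            choice = KEYS[1 - KEYS.index(last)]
        else:
            raise ValueError(f"unknown strategy: {strategy}")
        total += food_delivered(choice, last)
        last = choice
    return total


print(food_earned("repeat"), food_earned("switch"))
```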

In a similar setup with children as participants, Cowie et al. (2021) had participants play a game in which some responses could produce a reinforcer. If a participant chose the same response a second time in a row, the likelihood of the same reinforcer being available again in the same spot was lowest. By and large, children switched from the just-successful response to the alternative one. This is difficult to explain with the concept of response strength, which would predict repetition of the last response before the reinforcer. Rather than reinforcing the last response, the reinforcers presumably signaled that switching was required to obtain the next reinforcer.

In Science and Human Behavior (1953), Skinner clarified once more that, in his view, contiguity between responses and reinforcers was the central characteristic of effective reinforcers: “So far as the organism is concerned, the only important property of the contingency is temporal. The reinforcer simply follows the response. . . . We must assume that the presentation of a reinforcer always reinforces something since it necessarily coincides with some behaviour” (p. 85). To contrast strengthening and (mere) signaling effects, Simon and Baum (2017) tested how contiguous and noncontiguous reinforcers affected human speech. In their systematic replication of Conger and Killeen’s (1974) experiment on matching in conversations, Simon and Baum investigated speech and gaze allocation in conversations with two different interlocutors. In one condition, confederates delivered approval (a putative reinforcer) contingent on the participant’s gaze and speech, creating a contiguity between talking to a specific confederate and her approval. In the other condition, approval was delivered independently of whom the participant looked at when talking. If the participant’s gaze directed at a specific confederate had been strengthened by approval, the contiguity condition would have produced different relative gaze rates from those in the noncontiguity condition. Results showed no such difference between conditions, suggesting that the confederates’ speech induced participants’ gaze and speech.

Motivated by these experimental findings, which suggest that signaling effects are most central to explaining behavior, we designed a study contrasting strengthening effects with mere signaling effects in a setting close to home for applied behavior analysts. To build a bridge to applied behavior analysis, we extended a procedure first used by Jessel et al. (2016). Although Jessel et al. originally designed their study to evaluate transition characteristics of human and nonhuman subjects, we found their procedure suitable for testing whether the signaling properties of environmental events can fully account for transition times or whether strengthening by reinforcement explains additional variance in transition times.

We chose to apply our interest in signaling effects to transitions in children with ASD because transitions between activities are an integral part of everyday life, taking up as much as 25% of the day (Sterling-Turner & Jordan, 2007), and often occasion challenges such as stereotypy, physical aggression, dawdling, noncompliance with instructions, and tantrums (Brewer et al., 2014; Castillo et al., 2018). These difficulties usually arise during the shift from one situation to another. In this context, the term “transition” implies that the period between the end of one situation and the beginning of the next presents challenges (Luczynski & Rodriguez, 2015). This transitional period can occur when previously used materials must be put away and new ones distributed (e.g., in a classroom) or when the physical location changes (e.g., moving from the floor to the table; Luczynski & Rodriguez, 2015). In other words, the structural features of a transition include “(a) termination of the pre-change context, (b) initiation of the post-change context, and (c) the period between the two contexts” (Luczynski & Rodriguez, 2015, p. 153).

Transitions between activities have been studied in both basic and applied experiments. The focus is often on transitions to the less favorable context because that is when challenges occur. Advance notice is one procedure that can reduce challenging behavior because it signals the end of the current activity and announces the upcoming transition to another one ahead of time (Brewer et al., 2014; Toegel & Perone, 2022). However, both basic and applied studies have also produced the opposite result, suggesting that signaling a transition to a leaner context can also slow it down (Castillo et al., 2018; Jessel et al., 2016; Langford et al., 2019). In operant laboratories, transitions between activities are usually studied with multiple FR schedules, as in Perone and Courtney (1992). In their study, the multiple FR schedule consisted of components that produced access to different reinforcer magnitudes. For instance, 1-s access to grain was considered a "lean" reinforcer, whereas 7-s access was considered a "rich" reinforcer. During the experimental session, the components were presented in a quasirandom order that ensured an equal number of each type of transition between reinforcer magnitudes: lean-to-lean, lean-to-rich, rich-to-lean, and rich-to-rich. Different discriminative stimuli (key colors) signaled the forthcoming reinforcer magnitude. Perone and Courtney (1992) found that pauses were up to nine times longer during transitions from a rich to a lean reinforcer than during all other types of transitions. When the same transitions were arranged in a mixed schedule (two or more component schedules alternate, all accompanied by the same stimulus) rather than a multiple schedule (two or more component schedules alternate, each correlated with a distinctive stimulus), longer pauses tended to occur after components with rich reinforcers, although these pauses were generally much shorter than those observed during rich-to-lean transitions in multiple schedules.

Perone and Courtney (1992) concluded that one of the functions of pausing was to signal the upcoming context. Pausing was reduced when transitions to leaner contexts were unsignaled. Similar results were observed in applied studies, such as those by Brewer et al. (2014) and Jessel et al. (2016), in which dawdling was observed during signaled rich-to-lean transitions.

In the study whose procedure inspired our design, Jessel et al. (2016) examined transitions between different reinforcers in children with ASD. Children walked from rich to rich, lean to lean, rich to lean, and lean to rich reinforcers. The transition to a lean reinforcer (a less preferred toy) took the children significantly longer than the transition to a rich one (a more preferred toy). This phenomenon was observed in the first condition of the study, in which the color of the playmat the children were transitioning to matched the reinforcer richness: green and yellow mats signaled rich reinforcers, and blue and red mats signaled lean reinforcers. For example, when asked to transition to the green mat, children always had access to their preferred toy, whereas only the less preferred toy was available when they were asked to transition to the blue mat. In the second condition, the upcoming reinforcer was unsignaled: the colors of the mats no longer corresponded to the availability of particular toys, so the likely future was no longer predictable from extended past experience of mat colors matching the availability of preferred or less preferred toys. This removed the differences in transition time. These findings suggest that the discriminative stimuli signaling the reinforcer richness awaiting after the transition were responsible for the duration of the transition and for the problem behavior that accompanied transitions to lean reinforcers. It was the likely future (the toy they were going to, signaled by mat color) and not the immediate past (the toy they were coming from) that controlled their behavior (degree of dawdling) when signals (mat colors with 100% correspondence to reinforcer richness) were available.

In addition to using Jessel et al.’s (2016) design to address a different question, we extended it by adding a moderate level of reinforcer richness, because the range of contexts that organisms experience extends beyond a simple rich-lean dichotomy. We also used continuous instead of discrete reinforcers: most behavior-analytic studies have been conducted with discrete reinforcers (food pellets), whereas many real-life reinforcers are continuous because they consist of (access to) activities. Furthermore, we applied measures (described in detail in the Method section) to ensure procedural fidelity during data collection, which we found missing in Jessel et al.’s study.

Our experiment aims to contrast the effects of stimulus versus reinforcer control in children with ASD during transitions among three reinforcer contexts in two conditions. We assessed signal versus reinforcer control by comparing transition times between a condition in which the upcoming reinforcer richness was signaled and a condition in which it was unsignaled.

Method

Participants

Four children diagnosed with ASD participated in the experiment; their names have been changed to protect confidentiality. All participants were 5-year-old males who had at least some verbal repertoire and good listener and motor skills and who could follow the instructions required to participate in the study.

Video watching was a highly preferred activity for all participants. None of the participants engaged in problem behavior that could have interfered with performance during the experiment; had problem behavior occurred, we would have terminated the trial. All children received early intensive behavioral intervention services at their (typical) kindergartens.

Settings and Materials

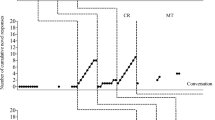

All sessions took place at the children’s kindergartens in a small treatment room (5 m × 4 m) containing three playmats, a Samsung tablet, a timer, and a chair for the observer. Each trial lasted approximately 10–12 min and was scored by an independent observer to assess interobserver agreement (IOA) and procedural integrity. We used three playmats of different colors (green, blue, and yellow), arranged on the floor in a triangle within 1.2 m of each other (see Fig. 1 for details). To measure transition times, play the videos, and present visual prompts, we used the TapTimer app on the Samsung tablet. TapTimer is an Android application developed specifically for this research; it measures transition time, displays a visual prompt in the color of the destination mat, and plays videos at the press of a button. Combining these functions allowed the experimenter to control the testing environment and reduced the number of devices and materials needed to conduct the study (e.g., additional timers, pens, and paper forms). The app also allowed the experimenter to transfer transition-duration data to software for further analysis.

Fig. 1 A Diagram of the Setting Used in the Predictable and the Unpredictable Condition. Note. The arrows represent the distances between the mats; each mat represents a specific reinforcer context (rich, moderate, or lean). The mats were always placed in the shape of a triangle. The position of each mat varied between trials.

Procedure

All children participated in experimental sessions three times a week. Each session consisted of three to four trials, and each trial comprised a set of 24 transitions among three playmats. A video preference assessment was conducted before the experimental sessions began. In the Predictable Condition, the upcoming reinforcer context (rich, moderate, or lean) was signaled: on the green mat, a rich reinforcer was available (30-s video); on the yellow mat, a moderate reinforcer (10-s video); and on the blue mat, a lean reinforcer (5-s video). In the Unpredictable Condition, the upcoming reinforcer context was unsignaled; it could be rich, moderate, or lean, independent of the mat’s color.

Preference Assessment

The video used for each participant was selected on the basis of a multiple-stimulus-without-replacement (MSWO) preference assessment (DeLeon & Iwata, 1996). The assessment included different geometric illusion videos (see Table 1 for details) and was performed before each experimental session. (The results of the MSWO are available on request.) Watching geometric illusion videos has previously been found to function as a reinforcer for the behavior of children with ASD (Eldevik et al., 2019). For each participant, watching videos received the highest average rank among the items in the array, which also included objects such as small toys and bricks, and was therefore included in the procedure. The video chosen by each participant was loaded into the TapTimer app before each experimental session, and videos were shown to the children only when they were on the playmats.
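In an MSWO assessment, the child selects one item from the array, that item is removed, and selection continues until the array is empty, so the selection order yields a rank for every item. The sketch below shows one way to summarize several administrations by mean rank, consistent with the statement that videos ranked highest on average. The selection orders are invented for illustration and are not the study's data, and the function name is hypothetical.

```python
from statistics import mean
from typing import Dict, List


def mswo_mean_ranks(administrations: List[List[str]]) -> Dict[str, float]:
    """Each administration lists the items in the order the child selected them
    (the first item selected gets rank 1). Returns each item's mean rank;
    a lower mean rank indicates a higher preference."""
    ranks: Dict[str, List[int]] = {}
    for order in administrations:
        for rank, item in enumerate(order, start=1):
            ranks.setdefault(item, []).append(rank)
    return {item: float(mean(r)) for item, r in ranks.items()}


# Hypothetical selection orders from three administrations.
sessions = [
    ["video", "bricks", "small toy"],
    ["video", "small toy", "bricks"],
    ["bricks", "video", "small toy"],
]
ranks = mswo_mean_ranks(sessions)
most_preferred = min(ranks, key=ranks.get)
print(ranks, "->", most_preferred)
```

DeLeon and Iwata (1996) also describe summarizing MSWO results as selection percentages; mean rank is used here only because it matches the wording of the text.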

Experimental Sessions

Each trial within an experimental session lasted 10–12 min. At the beginning of each trial, the experimenter opened the TapTimer app and said, “Go to green/yellow/blue mat,” using the color specified by the app according to the experimental design. Transition duration was defined as the time spent traveling between two mats, starting after delivery of the instruction and ending when the child made physical contact with the destination mat. When the transition was completed, the experimenter stopped the timer, and the video started playing automatically. The participant had continuous access to the video while in physical contact with the mat, up to the time limit set by the reinforcer context. The experimenter held the tablet used to play the video approximately 30 cm from the child. No child attempted to touch the tablet or to interact with the experimenter. When the video stopped playing, the experimenter prompted the child by saying, “Go to [color of the mat],” while presenting the tablet displaying the next mat’s color; the color of the square on the tablet exactly matched the color of the destination mat. When the child initiated the transition, the experimenter started the timer and returned to the middle of the triangle, where she remained until the child arrived on the prompted playmat. No child transitioned to the wrong area or refused to transition. Had either occurred, the verbal prompt would have been repeated once, and if the repetition had not produced the correct transition, the trial would have been terminated. Each trial consisted of 24 transitions, yielding eight rich, eight moderate, and eight lean contexts, with the initial context also serving as the final context (see Fig. 1 for an illustration). Regardless of the starting context, the child always experienced 24 transitions, four of each type (rich-moderate, rich-lean, moderate-rich, moderate-lean, lean-rich, lean-moderate). Reinforcer context was determined by the length of the geometric illusion video available on the tablet. The order of transitions was randomized across trials.
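The constraints on each trial (24 transitions, four of each of the six types, eight arrivals in each context, and a return to the starting context) can be satisfied by treating the transition types as arcs of a small directed multigraph and walking an Eulerian circuit through it. The text does not state how the transition order was randomized, so the function below and its use of Hierholzer's algorithm are assumptions, offered as one possible way to generate such an order.

```python
import random
from collections import Counter
from itertools import permutations
from typing import List, Optional

CONTEXTS = ("rich", "moderate", "lean")


def random_trial(start: str, reps_per_type: int = 4, seed: Optional[int] = None) -> List[str]:
    """Sequence of contexts that starts and ends at `start` and contains each of
    the six between-context transitions exactly `reps_per_type` times.

    Every context has equal in- and out-degree in this multigraph, so an
    Eulerian circuit (a walk using every arc once) exists. Hierholzer's
    algorithm over shuffled arcs returns a randomized, though not uniformly
    sampled, circuit."""
    rng = random.Random(seed)
    arcs = {c: [] for c in CONTEXTS}
    for a, b in permutations(CONTEXTS, 2):
        arcs[a].extend([b] * reps_per_type)
    for targets in arcs.values():
        rng.shuffle(targets)

    stack, circuit = [start], []
    while stack:
        node = stack[-1]
        if arcs[node]:
            stack.append(arcs[node].pop())  # follow an unused arc
        else:
            circuit.append(stack.pop())     # dead end: add node to circuit
    return circuit[::-1]


trial = random_trial("rich", seed=1)
transitions = Counter(zip(trial, trial[1:]))
print(len(trial) - 1, "transitions;", dict(transitions))
print("contexts experienced:", Counter(trial[1:]))
```

Counting only the destinations of the 24 transitions gives eight visits per context, which matches the description of each trial.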

The Predictable Condition: Signaled Reinforcer Context

In this condition, the color presented on the tablet signaled the upcoming reinforcer context. A green square on the screen signaled an upcoming rich context (30-s video), a yellow square a moderate context (10-s video), and a blue square a lean context (5-s video).

The Unpredictable Condition: Unsignaled Reinforcer Context

In this condition, the color presented on the tablet did not signal the upcoming reinforcer context. On each mat, a participant could experience a rich, moderate, or lean reinforcer context, each with a 33.3% chance.
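The difference between the two conditions can be expressed as two ways of choosing the video length once the child reaches a mat. A minimal sketch, assuming that in the Unpredictable Condition each context is drawn independently with probability 1/3 on every visit; the text gives the 33.3% figure but not the sampling scheme, and independent draws would not by themselves guarantee equal numbers of each context per trial. The function name is hypothetical.

```python
import random

# Video durations (s) per reinforcer context, as given in the Procedure.
VIDEO_SECONDS = {"rich": 30, "moderate": 10, "lean": 5}
# Color-to-context mapping used only in the Predictable Condition.
COLOR_TO_CONTEXT = {"green": "rich", "yellow": "moderate", "blue": "lean"}


def video_seconds(mat_color: str, condition: str, rng: random.Random) -> int:
    if mat_color not in COLOR_TO_CONTEXT:
        raise ValueError(f"unknown mat color: {mat_color}")
    if condition == "predictable":
        context = COLOR_TO_CONTEXT[mat_color]      # color signals the context
    elif condition == "unpredictable":
        context = rng.choice(list(VIDEO_SECONDS))  # color carries no information
    else:
        raise ValueError(f"unknown condition: {condition}")
    return VIDEO_SECONDS[context]


rng = random.Random(0)
print([video_seconds("blue", "predictable", rng) for _ in range(3)])
print([video_seconds("blue", "unpredictable", rng) for _ in range(6)])
```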

Interobserver Agreement and Procedural Integrity

Throughout the study, the first author collected data using the TapTimer app, and trained observers attended and independently scored 100% of the sessions for each participant. The trained observers measured transition durations with a timer application on their phones and then transferred the scores into a table. IOA for transition duration was calculated by dividing the shorter duration by the longer duration, converting the quotient to a percentage, and averaging across trials within the session. Mean IOA was 97% for John (range, 95%–100%), 95% for Joe (range, 91%–100%), 98% for Tom (range, 94%–100%), and 97% for Ben (range, 89%–100%).

We calculated procedural integrity with a procedure similar to that of Shvarts et al. (2020). A checklist divided each session into four sections: (1) the MSWO was conducted before the session; (2) the playmats were in the correct locations; (3) instructions were delivered; and (4) the correct video was loaded into the TapTimer app. Any section containing an error received zero points. Procedural integrity for each session was calculated by dividing the number of sections executed correctly by the total number of sections (errorless and delivered with errors) and multiplying by 100. Procedural integrity averaged 92% across conditions and participants; individual scores were 90% for John, 92.5% for Joe, 95% for Tom, and 92.5% for Ben. (Detailed data are available on request.)
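Both measures are simple ratios. A minimal sketch of the calculations as described above follows; the function names are hypothetical, the example values are invented for illustration, and the sketch assumes one paired duration per trial because the text averages across trials within a session. The guard that treats two zero-length measurements as perfect agreement is an added assumption.

```python
from statistics import mean
from typing import Sequence


def duration_ioa(observer_a: Sequence[float], observer_b: Sequence[float]) -> float:
    """Mean duration IOA (%): for each paired measurement, shorter / longer * 100,
    then averaged across the pairs."""
    if len(observer_a) != len(observer_b) or not observer_a:
        raise ValueError("need equal-length, non-empty measurement lists")
    scores = []
    for a, b in zip(observer_a, observer_b):
        longer = max(a, b)
        # Two zero-length measurements are treated as perfect agreement.
        scores.append(100.0 if longer == 0 else 100.0 * min(a, b) / longer)
    return mean(scores)


def procedural_integrity(sections_correct: Sequence[bool]) -> float:
    """Percentage of checklist sections executed without error."""
    if not sections_correct:
        raise ValueError("checklist is empty")
    return 100.0 * sum(sections_correct) / len(sections_correct)


# Hypothetical values, not data from this study.
print(duration_ioa([8.0, 10.0], [10.0, 10.0]))
print(procedural_integrity([True, True, True, False]))
```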

Results

Transition times between reinforcer contexts varied according to the upcoming context in the Predictable Condition but not in the Unpredictable Condition. Figure 2 shows transition times across sessions in both conditions. In the Predictable Condition, all children took longer to walk toward the leaner context. These results are in line with previous research (Jessel et al., 2016; Langford et al., 2019; Perone & Courtney, 1992). However, John differed from the other three boys: his transition times were longer than theirs (see Fig. 3). For example, in Lean-Rich transitions his mean transition time was considerably longer (M = 6.4 s, SD = 1.03) than Joe’s (M = 4.55 s, SD = 0.07), Tom’s (M = 4.11 s, SD = 0.09), and Ben’s (M = 4.77 s, SD = 0.16). In the Predictable Condition, we observed only a slight difference between Rich-Moderate transitions (John: M = 9.16 s, SD = 0.04; Joe: M = 5.36 s, SD = 0.06; Tom: M = 9.9 s, SD = 0.08; Ben: M = 9.46 s, SD = 0.14) and Moderate-Lean transitions (John: M = 8.81 s, SD = 0.06; Joe: M = 6.2 s, SD = 0.07; Tom: M = 10.25 s, SD = 0.9; Ben: M = 8.21 s, SD = 0.13). Nevertheless, our procedure showed that introducing a moderate context can reduce transition time when the upcoming context is signaled, as shown in Table 1 and Fig. 2. The largest difference, however, was observed for all participants when transitioning from the Rich to the Lean context (see Fig. 2; John: M = 11.26 s, SD = 0.07; Joe: M = 7.5 s, SD = 0.05; Tom: M = 12.47 s, SD = 0.07; Ben: M = 11.5 s, SD = 0.15).

Average Response Times for All Participants in Both Conditions when Transitioning from One Context to the Other. Panels: Rich-Lean transitions; Rich-Moderate transitions; Moderate-Lean transitions; Lean-Moderate transitions; Lean-Moderate transitions, excluding John; Moderate-Rich transitions; Lean-Rich transitions; Lean-Rich transitions, excluding John.

In the Predictable Condition, transitions from leaner to richer contexts were shorter for all participants. The fastest transitions were Lean-Rich (values above), Moderate-Rich (John: M = 7.28 s, SD = 0.71; Joe: M = 4.93 s, SD = 0.08; Tom: M = 5.33 s, SD = 0.06; Ben: M = 5.2 s, SD = 0.15), and Lean-Moderate (John: M = 10.74 s, SD = 0.03; Joe: M = 6.12 s, SD = 0.06; Tom: M = 5.42 s, SD = 0.08; Ben: M = 5.34 s, SD = 0.15; see Fig. 2). These results suggest that the signaled upcoming reinforcers served as “signals,” informing the children where and how more could be obtained.

We did not observe these differences in the Unpredictable Condition: each child’s average transition times were similar regardless of the upcoming reinforcer context. In this condition, transitions from the historically leaner to the historically richer context (i.e., between mats whose colors had signaled those contexts in the Predictable Condition) took longer than in the Predictable Condition. For example, Lean-Rich transition times were M = 7.34 s (SD = 0.04) for John, M = 8.96 s (SD = 0.04) for Joe, M = 8.91 s (SD = 0.04) for Tom, and M = 8.97 s (SD = 0.04) for Ben. Transition times for historically Rich-Lean transitions were similar: M = 7.4 s (SD = 0.05), M = 8.49 s (SD = 0.05), M = 8.65 s (SD = 0.04), and M = 9 s (SD = 0.06) for John, Joe, Tom, and Ben, respectively.
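A simple way to compare the two conditions using only the means reported in this section is the ratio of Rich-Lean to Lean-Rich transition time for each participant. This is an illustrative descriptive summary, not an analysis the authors report, and it ignores the session-level variability shown in the figures. With the reported values, the ratio ranges from roughly 1.6 to 3.0 in the Predictable Condition but stays within about 5% of 1 in the Unpredictable Condition.

```python
# Mean transition times (s) as reported in the Results, order: John, Joe, Tom, Ben.
PARTICIPANTS = ("John", "Joe", "Tom", "Ben")
MEANS = {
    "Predictable": {"rich_to_lean": (11.26, 7.5, 12.47, 11.5),
                    "lean_to_rich": (6.4, 4.55, 4.11, 4.77)},
    # In this condition, "rich" and "lean" refer to the historical mat colors.
    "Unpredictable": {"rich_to_lean": (7.4, 8.49, 8.65, 9.0),
                      "lean_to_rich": (7.34, 8.96, 8.91, 8.97)},
}


def rich_lean_ratio(condition: str) -> dict:
    """Ratio of mean Rich-Lean to mean Lean-Rich transition time per participant.
    Values above 1 mean slower transitions toward the leaner context."""
    m = MEANS[condition]
    return {p: round(rl / lr, 2)
            for p, rl, lr in zip(PARTICIPANTS, m["rich_to_lean"], m["lean_to_rich"])}


for condition in MEANS:
    print(condition, rich_lean_ratio(condition))
```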

However, transition times from the richer to the leaner context in the Unpredictable Condition did not exceed those between the same contexts in the Predictable Condition. Moreover, despite the longer transitions from leaner to richer contexts, overall transition times were shorter than in the Predictable Condition.

Figure 3 summarizes the overall effects across sessions for each participant: for all participants, average transition times among the different reinforcer contexts were more similar during the Unpredictable Condition than during the Predictable Condition. The difference in durations of transitions to signaled and unsignaled reinforcer contexts suggests that behavior is primarily controlled by signals of likely future reinforcers as extrapolated from extended past experience rather than strengthened by the most recent event (the most recently experienced reinforcer context).

Discussion

Our discipline's primary concern is understanding how behavior and the environment interact. Our findings add to the existing and growing body of research (Baum, 2018; Baum & Rachlin, 1969; Cowie et al., 2021; Cowie & Davison, 2016; Davison & Baum, 2006; Simon & Baum, 2017) suggesting that we do not need to rely on the hypothetical construct of response strength (Skinner, 1938) to explain this interaction. Reinforcers might not strengthen the responses they follow but rather guide behavior to where and how more of them can be obtained. By replicating and extending Jessel et al.’s (2016) finding that transition times between different reinforcer contexts are controlled by the upcoming context and not by the previous one, our results provide further evidence for the importance of the signaling properties of reinforcers.

Of interest to applied behavior analysts, we also replicated the finding that transition time to a leaner reinforcer context can be shortened by including an unsignaled reinforcer context (Jessel et al., 2016; Toegel & Perone, 2022). Across participants, differences in transition times were smaller in the Unpredictable Condition than in the Predictable Condition. This finding has important implications and could form part of an intervention plan to reduce problem behavior associated with transitions. For example, introducing an unsignaled reinforcer context can help shorten transition time when problem behavior during transitions is maintained not by the unpredictability of the upcoming activity but by a worsening of conditions (Matson, 2023). Similarly, when the termination of a preferred activity and the initiation of a signaled aversive activity are accompanied by problem behavior, removing the signal can be useful; however, completely removing all stimuli that signal the upcoming activity may be challenging (e.g., walking toward a table where nonpreferred activities typically take place; Brewer et al., 2014; Matson, 2023).

When a functional analysis reveals that unpredictability, rather than the pleasant or unpleasant aspects of a situation, influences problem behavior, providing signals can help. Flannery and Horner (1994) observed that problem behavior occurred less frequently when the order and duration of activities were random but signaled than when they were random and unsignaled. This suggested that predictability was functionally related to problem behavior. Their assessment included a treatment condition in which signals, functioning as discriminative stimuli, provided information about upcoming events, thus preventing problem behavior by avoiding the triggering of the establishing operation (Matson, 2023).

Thus, the decision whether to signal upcoming events should be based not only on the functional analysis but also on practical considerations. In emergencies, signals cannot be provided ahead of time, and unpredictability cannot be avoided. Hence, a reasonable goal would be to gradually and systematically increase the percentage of unsignaled transitions while maintaining low levels of problem behavior (Luczynski & Rodriguez, 2015; Matson, 2023).

One potential limitation of the present study is that the signaled condition was run only once, before the unsignaled condition. Repeating the signaled condition in a reversal design could have further validated the signaling effects of the upcoming context. Another potential limitation is the homogeneity of the participants: because of several recruitment challenges, we were not able to include children with additional diagnoses or from different age groups. A more diverse sample would have allowed the results to be generalized further.

Our experiment shows that time spent transitioning changes according to the upcoming reinforcer context. Our results are in line with previous work on the signaling properties of reinforcers in humans and nonhumans (Baum, 1974; Cowie, 2018; Cowie et al., 2021; Cowie & Davison, 2020; Simon & Baum, 2017).

A three-term contingency could not explain our results, because there is no evidence that the videos strengthened traveling (i.e., shortened its duration); such strengthening would have been implied, for example, by shorter travel times following longer videos. If “what had happened most recently” (that is, the reinforcer richness participants had just experienced) had explained any variance in travel times, strengthening by reinforcement would have provided a reasonable explanation. However, the most recent video length did not account for any variance; only the signaled upcoming video length did, which aligns with the literature emphasizing signaling effects (Cowie et al., 2011; Cowie & Davison, 2016; Davison & Baum, 2006; Shahan, 2010; Simon & Baum, 2017).

In addition, our study extends previous investigations of transitions between reinforcer contexts by adding a moderate context. Transitions to or from moderate reinforcer contexts had not previously been investigated in either basic or applied experiments. Our results show that a moderate context can substantially reduce transition time. A moderate context can be useful when teachers or caregivers want to avoid problem behavior associated with a lean context but still require a transition out of a rich one. For example, instead of transitioning from playing outside (rich context) straight to completing math worksheets (lean context), a child may be asked to practice reading (moderate context). Such a modification can help reduce the occurrence of schedule-induced problem behavior.

The increasing number of studies reporting that advance notice procedures (Brewer et al., 2014; Toegel & Perone, 2022) are ineffective in reducing rich-lean transition times motivates further investigations that include unsignaled moderate reinforcer contexts. These should include studies testing whether our results can be replicated with adults, neurotypical children, and nonhuman subjects.

In conclusion, our finding that transitions to leaner reinforcer contexts take longer in predictable conditions shows the power of the discriminative properties of reinforcers. This finding could help make these properties better known to applied behavior analysts, who are frequently unaware of the “signalling versus strengthening debate” (Wood & Simon, 2021). Translating this debate from basic research to the applied field could improve interventions that target socially significant behavior.

Data Availability

Data can be made available upon request from the corresponding author.

Notes

For readers interested in a discussion of terminology beyond the scope of this article, we note that the verbs “reinforcing” and “strengthening” cannot be distinguished in German or in some Slavic languages (Polish and Russian), because both English words correspond to the same word in each of these languages.

References

Baum, W. M. (1974). On two types of deviation from the matching law: Bias and undermatching. Journal of the Experimental Analysis of Behavior, 22(1), 231–242. https://doi.org/10.1901/jeab.1974.22-231

Baum, W. M. (2002). From molecular to molar: A paradigm shift in behavior analysis. Journal of the Experimental Analysis of Behavior, 78(1), 95–116. https://doi.org/10.1901/jeab.2002.78-95

Baum, W. M. (2012). Rethinking reinforcement: Allocation, induction, and contingency. Journal of the Experimental Analysis of Behavior, 97(1), 101–124. https://doi.org/10.1901/jeab.2012.97-101

Baum, W. M. (2018). Multiscale behavior analysis and molar behaviorism: An overview. Journal of the Experimental Analysis of Behavior, 110(3), 302–322. https://doi.org/10.1002/jeab.476

Baum, W. M., & Rachlin, H. C. (1969). Choice as time allocation. Journal of the Experimental Analysis of Behavior, 12(6), 861–874. https://doi.org/10.1901/jeab.1969.12-861

Borgstede, M., & Eggert, F. (2021). The formal foundation of an evolutionary theory of reinforcement. Behavioural Processes, 186, 104370. https://doi.org/10.1016/j.beproc.2021.104370

Brewer, A. T., Strickland-Cohen, K., Dotson, W., & Williams, D. C. (2014). Advance notice for transition-related problem behavior: Practice guidelines. Behavior Analysis in Practice, 7(2), 117–125. https://doi.org/10.1007/s40617-014-0014-3

Castillo, M. I., Clark, D. R., Schaller, E. A., Donaldson, J. M., DeLeon, I. G., & Kahng, S. W. (2018). Descriptive assessment of problem behavior during transitions of children with intellectual and developmental disabilities. Journal of Applied Behavior Analysis, 51(1), 99–117. https://doi.org/10.1002/jaba.430

Conger, R., & Killeen, P. (1974). Use of concurrent operants in small group research: A demonstration. Pacific Sociological Review, 17(4), 399–416. https://doi.org/10.2307/1388548

Cowie, S. (2018). Behavioral time travel: Control by past, present, and potential events. Behavior Analysis: Research and Practice, 18(2), 174–183. https://doi.org/10.1037/bar0000122

Cowie, S., & Davison, M. (2016). Control by reinforcers across time and space: A review of recent choice research. Journal of the Experimental Analysis of Behavior, 105(2), 246–269. https://doi.org/10.1002/jeab.200

Cowie, S., & Davison, M. (2020). Generalizing from the past, choosing the future. Perspectives on Behavior Science, 43(2), 245–258. https://doi.org/10.1007/s40614-020-00257-9

Cowie, S., Davison, M., & Elliffe, D. (2011). Reinforcement: Food signals the time and location of future food. Journal of the Experimental Analysis of Behavior, 96(1), 63–86. https://doi.org/10.1901/jeab.2011.96-63

Cowie, S., Davison, M., & Elliffe, D. (2017). Control by past and present stimuli depends on the discriminated reinforcer differential. Journal of the Experimental Analysis of Behavior, 108(2), 184–203. https://doi.org/10.1002/jeab.268

Cowie, S., Virués-Ortega, J., McCormack, J., Hogg, P., & Podlesnik, C. A. (2021). Extending a misallocation model to children’s choice behavior. Journal of Experimental Psychology: Animal Learning and Cognition, 47(3), 317–325. https://doi.org/10.1037/xan0000299

Davison, M., & Baum, W. M. (2006). Do conditional reinforcers count? Journal of the Experimental Analysis of Behavior, 86(3), 269–283. https://doi.org/10.1901/jeab.2006.56-05

DeLeon, I. G., & Iwata, B. A. (1996). Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis, 29(4), 519–533. https://doi.org/10.1901/jaba.1996.29-519

Eldevik, S., Arnesen, L., Sakseide, K., & Gale, C. (2019). The effects of delayed reinforcement in children with autism spectrum disorder. Norsk Tidsskrift for Atferdsanalyse, 46, 45–54.

Ferster, C. B., & Skinner, B. F. (1957). Schedules of reinforcement. Appleton-Century-Crofts. https://doi.org/10.1037/10627-000

Flannery, K. B., & Horner, R. H. (1994). The relationship between predictability and problem behavior for students with severe disabilities. Journal of Behavioral Education, 4(2), 157–176. https://doi.org/10.1007/BF01544110

Jessel, J., Hanley, G. P., & Ghaemmaghami, M. (2016). A translational evaluation of transitions. Journal of Applied Behavior Analysis, 49(2), 359–376. https://doi.org/10.1002/jaba.283

Krägeloh, C. U., Davison, M., & Elliffe, D. M. (2005). Local preference in concurrent schedules: The effects of reinforcer sequences. Journal of the Experimental Analysis of Behavior, 84(1), 37–64. https://doi.org/10.1901/jeab.2005.114-04

Langford, J. S., Pitts, R. C., & Hughes, C. E. (2019). Assessing functions of stimuli associated with rich-to-lean transitions using a choice procedure. Journal of the Experimental Analysis of Behavior, 112(1), 97–110. https://doi.org/10.1002/jeab.540

Luczynski, K. C., & Rodriguez, N. M. (2015). Assessment and treatment of problem behavior associated with transitions. In F. DiGennaro Reed & D. Reed (Eds.), Autism service delivery (pp. 151–173). Springer. https://doi.org/10.1007/978-1-4939-2656-5_5

Matson, J. L. (Ed.). (2023). Handbook of applied behavior analysis for children with autism. Springer. https://doi.org/10.1007/978-3-031-27587-6

Perone, M., & Courtney, K. (1992). Fixed-ratio pausing: Joint effects of past reinforcer magnitude and stimuli correlated with upcoming magnitude. Journal of the Experimental Analysis of Behavior, 57(1), 33–46. https://doi.org/10.1901/jeab.1992.57-33

Shahan, T. A. (2010). Conditioned reinforcement and response strength. Journal of the Experimental Analysis of Behavior, 93(2), 269–289. https://doi.org/10.1901/jeab.2010.93-269

Shahan, T. A. (2017). Moving beyond reinforcement and response strength. The Behavior Analyst, 40(1), 107–121. https://doi.org/10.1007/s40614-017-0092-y

Shvarts, S., Jimenez-Gomez, C., Bai, J. Y. H., Thomas, R. R., Oskam, J. J., & Podlesnik, C. A. (2020). Examining stimuli paired with alternative reinforcement to mitigate resurgence in children diagnosed with autism spectrum disorder and pigeons. Journal of the Experimental Analysis of Behavior, 113(1), 214–231. https://doi.org/10.1002/jeab.575

Simon, C., & Baum, W. M. (2017). Allocation of speech in conversation. Journal of the Experimental Analysis of Behavior, 107(2), 258–278. https://doi.org/10.1002/jeab.249

Simon, C., Bernardy, J. L., & Cowie, S. (2020). On the “strength” of behavior. Perspectives on Behavior Science, 43(4), 677–696. https://doi.org/10.1007/s40614-020-00269-5

Skinner, B. F. (1938). The behavior of organisms: An experimental analysis. Appleton-Century.

Skinner, B. F. (1948). “Superstition” in the pigeon. Journal of Experimental Psychology, 38(2), 168–172. https://doi.org/10.1037/h0055873

Skinner, B. F. (1953). Science and human behavior. Macmillan.

Sterling-Turner, H. E., & Jordan, S. S. (2007). Interventions addressing transition difficulties for individuals with autism. Psychology in the Schools, 44(7), 681–690. https://doi.org/10.1002/pits.20257

Toegel, F., & Perone, M. (2022). Effects of advance notice on transition-related pausing in pigeons. Journal of the Experimental Analysis of Behavior, 117(1), 3–19. https://doi.org/10.1002/jeab.730

Wood, A., & Simon, C. (2021). Stimulus control in applied work with children with autism spectrum disorder from the signalling and the strengthening perspective. Norsk Tidsskrift for Atferdsanalyse, 48(2), 279–293.

Acknowledgments

We thank William Baum for his helpful comments on earlier versions of the article.

Funding

Open access funding provided by University of Agder


Contributions

The first author collected and analysed all the data and wrote all the sections of the article. The second author provided multiple revisions of the first author’s writing and approved the final version of the manuscript. The study was conducted under Approval 282790 granted by the Regional Committee for Medical and Health Research Ethics, Approval 931299 granted by the Norwegian Center for Research Data and Approval RITM0140084 granted by the University of Agder Faculty Ethics Committee. The study was conducted in partial fulfillment of the first author’s PhD degree.


Ethics declarations

Ethical Approval

All procedures performed in this study involving human participants were in accordance with the ethical standards of the institutional and national research committee and with the 1964 Helsinki Declaration and its later amendments. The study was conducted under Approval 282790 granted by the Regional Committee for Medical and Health Research Ethics, Approval 931299 granted by the National Center for Research Data and Approval RITM0140084 granted by the University Faculty Ethics Committee. The study was conducted in partial fulfilment of the first author’s PhD degree. Written informed consent was obtained from the parents. Parents signed informed consent regarding publishing their children’s data.

Conflict of Interest

We have no known conflicts of interest to disclose.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wood, A., Simon, C. Control of Transition Time by the Likely Future as Signalled from the Past in Children with ASD. Psychol Rec 73, 443–453 (2023). https://doi.org/10.1007/s40732-023-00553-1
