Abstract

Emotion plays an important role in human interaction. People can explain their emotions in terms of word, voice intonation, facial expression, and body language. However, brain–computer interface (BCI) systems have not reached the desired level to interpret emotions. Automatic emotion recognition based on BCI systems has been a topic of great research in the last few decades. Electroencephalogram (EEG) signals are one of the most crucial resources for these systems. The main advantage of using EEG signals is that it reflects real emotion and can easily be processed by computer systems. In this study, EEG signals related to positive and negative emotions have been classified with preprocessing of channel selection. Self-Assessment Manikins was used to determine emotional states. We have employed discrete wavelet transform and machine learning techniques such as multilayer perceptron neural network (MLPNN) and k-nearest neighborhood (kNN) algorithm to classify EEG signals. The classifier algorithms were initially used for channel selection. EEG channels for each participant were evaluated separately, and five EEG channels that offered the best classification performance were determined. Thus, final feature vectors were obtained by combining the features of EEG segments belonging to these channels. The final feature vectors with related positive and negative emotions were classified separately using MLPNN and kNN algorithms. The classification performance obtained with both the algorithms are computed and compared. The average overall accuracies were obtained as 77.14 and 72.92% by using MLPNN and kNN, respectively.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Emotion is a human consciousness and plays a critical role in rational decision-making, perception, human interaction, and human intelligence. While emotions can be reflected through non-physiological signals such as words, voice intonation, facial expression, and body language, many studies on emotion recognition based on these non-physiological signals have been reported in recent decades [1, 2].

Signals obtained by recording voltage changes occurring on skull surface as a result of electrical activity of active neurons in the brain are called EEG [3]. From the clinical point of view, EEG is the mostly used brain-activity-measuring technique for emotion recognition. Furthermore EEG-based BCI systems provide a new communication channel by detecting the variation in the underlying pattern of brain activities while performing different tasks [4]. However, BCI systems have not reached the desired level to interpret people’s emotions.

The interpretation of people’s different emotional states via BCI systems and automatic identification of the emotions may enable robotic systems to emotionally react to humans in the future. They will be more useful especially in fields such as medicine, entertainment, education and in many other areas [5]. BCI systems need variable resources that can be taken from humans and processed to understand emotions. EEG signal is one of the most important resources to achieve this target. Emotion recognition is combined with different areas of knowledge such as psychology, neurology and engineering. SAM questionnaires are usually used for classified affective responses of subjects in the design of emotion recognition systems [6]. However, affective responses are not easily classified into distinctive emotion responses due to the overlapping of emotions.

Emotions can be discriminated with either discrete classification spaces or dimensional spaces. A discrete space allows the assessment of a few basic emotions such as happiness and sadness and is more suitable for unimodal systems [7]. A dimensional space (valence–arousal plane) allows a continuous representation of emotions on two axes. Valence dimension is ranging from unpleasant to pleasant, and arousal dimension is ranging from calm to excited state [8]. Higher dimension is better in the understanding of different states, but the classification accuracies of application can be lower as obtained in Ref [7]. Thus, in this study, EEG signals that are related to positive and negative emotions have been classified with channel selection for only valence dimension.

In the literature, there are studies in which various signals obtained/measured from people are used in order to determine emotions automatically. We can gather these studies under three areas [9]. The first approach includes studies intended to predict emotions using face expressions and/or speech signals [10]. However, the main disadvantage of this approach is that permanently catching the spontaneous face expressions that do not reflect real emotions is quite difficult. Speech and facial expressions vary across cultures and nations as well. The second main approach is based on emotion prediction by tracking the changes in central automatic nervous system [11, 12]. Various signals such as electrocardiogram (ECG), skin conductance response (SCR), breath rate, and pulse are recorded; hence, emotion recognition is applied by processing them. The third approach includes studies intended for EEG-based emotion recognition.

In order to recognize emotions, a large variety of studies were specifically conducted within the scope of EEG signals. These studies can simply be gathered under three main areas; health, game, and advertisement. Studies in health are generally conducted by physicians for purposes of helping in disease diagnosis [13–15]. Game sector involve studies in which people use EEG recordings instead of joysticks and keyboards [16, 17]. As per this study, advertisement sector generally involves studies which aim at recognizing emotions from EEG signals.

There are several studies in which different algorithms related to EEG-based classification of emotional states were used. Some main studies related with emotions are given below.

-

Murugappan et al. [18] classified five emotions based on EEG signals. They used EEG signals that recorded from 64, 24, and 8 EEG channels, respectively. They achieved maximum classification accuracy of 83.26 and 75.21% using kNN and linear discriminant analysis (LDA) algorithm, respectively. Researchers employed DWT method for decomposing the EEG signal into alpha, beta, and gamma bands. These frequency bands were analyzed for feature extraction.

-

Channel et al. [19] classified two emotions. They employed SAM to determine participant emotions. They used Naive Bayes (NB) and Fisher discriminant analysis (FDA) as the classification algorithms. Classification accuracy was obtained as 72 and 70% for NB and FDA, respectively.

-

Zhang et al. [20] applied PCA method for feature extraction. The features were extracted from two channels (F3 and F4). The classification accuracy was obtained as 73% by the researchers.

-

Bhardwaj et al. [21] recognized seven emotions using support vector machine (SVM) and LDA. Three EEG channels (Fp1, P3 and O1) were used in their experiment. Researchers investigated sub-bands (theta, alpha and beta) of EEG signal. The overall average accuracies obtained were 74.13% using SVM and 66.50% using LDA.

-

Lee et al. [22] classified positive and negative emotions. Classification accuracy was obtained as 78.45% by using adaptive neuro-fuzzy inference system (ANFIS).

In reference to the literature, it is seen that a limited number of EEG channels (e.g., two or three) have been used to detect different emotional states with different classification algorithms such as SVM, MLPNN, and kNN.

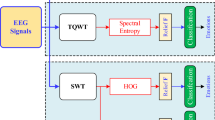

The aim of this study was to classify EEG signals related to different emotions based on audiovisual stimuli with the preprocessing of channel selection. SAM was used to determine participants’ emotional states. Participants rated each audiovisual stimulus in terms of the level of valence, arousal, like/dislike, dominance and familiarity. EEG signals that related to positive and negative emotions have been classified according to participants’ valence ratings. DWT method was used for feature extraction from EEG signals. Wavelet coefficients of EEG signals were assumed as feature vectors and statistical features were used to reduce the dimension of those feature vectors. EEG signals related to positive and negative emotions groups have been classified by MLPNN and kNN algorithm. After the preprocessing and feature extraction stages, classifiers were used for channel selection (Fig. 1). EEG channels that offer the best classification performance were determined. Thus, final feature vectors were obtained by combining the features of those EEG channel. The final feature vectors were classified and their performances were compared. The steps followed in the classification process are depicted in Fig. 1.

As a remainder, this paper is organized as follows Sects. 2 and 3 describe the Materials and Methods employed in the proposed EEG-based emotion recognition system. Section 4 presents the experimental results. Section 5 presents the results and discussion. Finally, Sect. 6 provides the conclusion of this paper.

2 Materials

2.1 Database and participants questionnaire

In this study, the publicly available DEAP database related to emotional states was used. The DEAP database contains EEG and peripheral physiological signals of 32 healthy subject [23]. Thirty-two healthy participant (15 female and 17 male), aged between 23 and 37, participated in the experiment. The experiments were performed in Twente and Geneva university laboratories. Participants 1–22 were analyzed in Twente and the remaining ones 23–32 in Geneva. Due to some minor differences between the two universities, participants taken from Twente University were examined in this study.

2.2 Stimulus material

The DEAP database was recorded by using music clips to stimuli emotions in the participants. The music clips used in the experiment were selected in several steps. Initially, the authors selected 120 music clips. Half of these stimuli were selected by semi automatically and another half was selected manually [24]. From the initial collection of 120 music clips, the final 40 test music clips were determined to present in the paradigm. These music clips were selected to elicit emotion prominently. A 1-min segment related to the maximum emotional content was extracted from each music clips, and these segments were presented in final experiment.

2.3 Task

The EEG signals were recorded from each participant in a particular paradigm framework based on audiovisual stimuli. The paradigm consists of two sections and each section contains 20 trials, each consisting of the following steps:

-

1.

Trial number: A 2-s screen displaying the current trial.

-

2.

Fixation: A 5-s fixation crosses.

-

3.

Music Clip: The 60-s display of the music clips to stimuli different emotions.

-

4.

Assessment: SAM employed for valence, arousal, dominance, likes, and familiarity.

The paradigm applied to participant in order to record EEG and peripheral physiological signals is shown in Fig. 2.

2.4 Participants ratings

At the end of each music clip, participants assessed their emotional states in terms of valence, arousal, dominance, likes, and familiarity. SAM [25] were used to visualize for valence, arousal and dominance scales. For the likes scale, thumbs-down/thumbs-up icons were used (Fig. 3). Participants assessed each music clip for familiarity effects as well. Participants rated valence, arousal, dominance and likes on a continuous 9-point scale, but the familiarity was rated on a 5-point integer scale. In this study, to recognize the EEG signals related to positive and negative emotions, participants’ evaluations from SAM visuals according to valence panel were taken into consideration. The valence scale is ranging from unhappy or sad to happy or joyful (Fig. 3). The valence ratings of participants were smaller than 5, and this was accepted as negative emotion; else ratings were accepted as positive emotion.

In Fig. 4, sample EEG signals related to positive and negative emotions state measured from a randomly selected channel are shown.

2.5 EEG signal recordings

EEG signals related to audiovisual stimuli were recorded using a Biosemi Active Two system. For each participant, EEG signals were recorded from 32 channels. Thirty-two active AgCl electrodes placed according to international 10–20 electrode placement system were used in EEG recordings. EEG signals were digitized by 24-bit analog–digital convertor with 512 Hz sample rate [24].Then, the digitized EEG signals were down sampled to 128 Hz. A band-pass frequency filter from 4.0–45.0 Hz was applied to EEG recordings and electrooculography (EOG) artifacts were removed. Sections that were present before and after the audiovisual stimulus were removed from the recorded EEG signals. Therefore, EEG segment for each music clip were obtained. At the end of these procedures, 40 EEG segments related to 40 music clips for every participant were obtained.

3 Methods

In this study, EEG signals related to different emotional states were classified. DWT was used for feature extraction from EEG signals. Wavelet coefficients of EEG signals were assumed as feature vectors and statistical features were used to reduce dimension of those feature vectors.

3.1 Discrete wavelet transform

DWT is widely used for analyzing non-stationary signals [3, 9]. DWT have been famously preferred for analyzing EEG signals. The desired frequency range can be obtained by using DWT method. DWT method decomposes the signal into sub-bands by filtering with consecutive high pass filter g[n] and low pass filter h[n] in the time period [10].As a result, first-level D1 detail and A1 approximation sub-bands of signal are obtained. First-level approximation and detail sub-bands are defined as

In order to reach the desired band range, A1 called approximation band is re-separated, and procedures are continued consecutively until the intended frequency range is reached. In Fig. 5, decomposition of a EEG signal (x[n]) into its multi-level frequency ranges via DWT method is shown. Frequency ranges related to detail wavelet coefficients are listed in Table 1 as well.

In literature, EEG theta band dynamics including important information about emotions can be seen [24, 26, 27]. Therefore, theta band dynamics were used in order to obtain feature vectors. Selection of suitable mother wavelet and the number of decomposition levels is very important to analyze non-stationary signals [10]. In this study, the number of decomposition levels was chosen as 4 because theta band dynamics of EEG signals were considered to recognize different emotions based on audiovisual stimulus. Dabuechies wavelets have provided useful results in analyzing EEG signals [10], and hence Daubechies wavelet of order 2 (db2) was chosen in this study.

3.2 Feature extraction

In this study, feature vectors for EEG signals were obtained by using DWT method. The decomposition of the signal creates a set of wavelet coefficients in each sub-band. Theta band wavelet coefficients (cD4) which are composed of 506 samples were assumed as feature vectors. The wavelet coefficients provide a compact representation of EEG features [10]. In order to decrease the dimensionality of feature vector, five statistical parameters were used. The following statistical features were used to reduce dimension of the feature vectors based on theta band;

-

1.

Maximum of the absolute values of the wavelet coefficients.

-

2.

Mean of the absolute values of the wavelet coefficients.

-

3.

Standart deviation of the coefficients.

-

4.

Average power of the coefficients.

-

5.

Average energy of the coefficients.

In this study, above statistical parameters in feature extraction from EEG segments for different emotional states were selected. In addition to these features, different attributes were used. Different combinations have been tried for obtaining the highest success rate. After the calculations, the optimal results were observed by above features.

At the end of DWT method and statistical procedures used for feature extraction, five-dimensional feature vectors belonging to EEG segments related to every emotional state were obtained.

3.3 Classification algorithms

The feature vectors of EEG signals related to positive and negative emotions were classified by MLPNN and kNN algorithm. The performances of these algorithms applied for pattern recognition were evaluated and also compared.

3.3.1 Artificial neural network

Artificial neural network (ANN) is widely used in engineering area after the development of computer technology [28]. ANN is a computing system inspired from biological neural networks. It consists of an input layer, one or more hidden layer(s), and a output layer. A neural network can be generated by a series of neurons that are contained in these layers. Each neuron in a layer connects with another neuron in the next layer via weights. The weights w ij represent the connection between the ith neuron in a layer and the jth neuron in the next layer [29]. Feature vectors obtained after the feature extraction are applied to input layer. All the input components are distributed to the hidden layer. The function of hidden layer is to intervene between the external input and the neurons in the network output. The hidden layer helps to detect nonlinearity in the feature vectors. The set of output signals of the neurons in output layer constitutes the overall response of the network [30, 31]. The neurons of hidden layer process external input. External input is defined as x i . The first, weighted sum is calculated, and a bias term \(\theta_{j}\) is added in order to determine net j that is defined as [29]

where m and n are the number of neurons in the input layer and the number neurons of hidden layer, respectively.

In order to transform the net j to neurons and in the next layer and generated desired output, a suitable transfer function should be chosen. There are various transfer functions, but the most widely used is the sigmoid one that is defined as [29]

In this study, a feed-forward back-propagation multilayer neural network was used. The back-propagation training algorithm technique adjusts the weights to obtain network that is closed to the desired output [32].

3.3.2 k-nearest neighborhood

kNN is a classification algorithm based on distance. kNN allows an object encountered in (n) dimensional feature space to be assigned to a predetermined class by examining the attributes of a new object [33].In kNN algorithm, classification process starts with the calculation of the distance of a new object with unknown class to each object in the training set. Classification process is completed by assigning the new object to the class most common among its k-nearest neighbors. Known classes of objects in the training set of k-nearest neighbors were taken into account for classification.

Different methods used to calculate the distance in kNN algorithm. For these methods, Minkowski, Manhattan, Euclidean, and Hamming distance measures can be given as examples. In this study, the Euclidean distance was used to determine nearest neighbors. The Euclidean distance is defined as

where x and y are the position of points in a Euclidean n-space.

The choice of k is optimized by calculating the classification algorithm ability with different k values. In order to acquire the best classification accuracy, appropriate value of k was determined as different for each participant.

3.4 Performance criteria

Accuracy, specificity, and sensitivity can be used to test the performance of the trained network. These criteria are among the success evaluation criteria which are frequently used in the literature. In this study, specificity represents the ability to correctly classify the samples belonging to a positive emotion; sensitivity represents the ability to correctly classify the samples belonging to a negative emotion. Accuracy, specificity, and sensitivity are computed by Eqs. (6), (7), and (8), respectively.

True positive (TP), the number of true decision related to positive emotion by automated system. False positive (FP), the number of false decision related to positive emotion by automated system. True negative (TN), the number of true decision related to negative emotion, and False negative (FN), the number of false decision related to negative emotion.

4 Experimental results

4.1 Channel selection

In this study, MLPNN was firstly used for channel selection. EEG recordings measured from 32 channels for every participant were evaluated separately and five EEG channels having the highest performance were dynamically determined. As we evaluate the results related to all participants, same channels provided the highest performances. The classification of EEG signals were achieved by a dynamic model in which the channels were selected for each participant. The dynamic selection process is given below.

For every participant, feature vectors related to EEG segments consisting of positive and negative emotions were classified by a MLPNN. The feature vectors obtained by DWT with statistical calculation were used as input sets for MLPNN. The number of neurons in the input layer of MLPNN was five due to the size of feature vector. MLPNN output vectors were defined as [1 0] for positive emotion and [0 1] for negative emotion. Thus, the number of neurons in the output layer of network was two. Hence, the structure used in this study was (5 × n × 2), which is shown in Fig. 6, where n represents the number of neurons in the hidden layer. The number of neurons used in the hidden layer is separately determined for each participant. Each participant had 40 EEG segments (training and testing patterns) totally. Thirty EEG segments were randomly selected for network training stage, and the remaining 10 EEG segments selected for testing stage. In order to increase reliability of the classification results, the training and testing data were randomly changed four times.

Single hidden layer with 5 × n × 2 architecture was used in MLPNN architecture for determining five EEG channels having the best classification performances. Accuracy (Eq. 6) was taken as model success criteria for determining the channels. In training stage of MLPNN, the network parameters are learning coefficient 0.7 and momentum coefficient 0.9.

In this study, EEG signals recorded from 32 channels were examined, and five EEG channels having highest performance in emotion recognition were dynamically determined. It was generally observed that same channels except a few of them provided the highest performances. The main intention of determining the EEG channels is the simultaneous processing of EEG signals recorded from different regions of the brain. EEG signals recorded from different regions provide a more comprehensive and dynamic solution to the description of emotional state. At the end of the process, the classification results revealed that the channels having high performances were P3, FC2, AF3, O1 and Fp1 (Fig. 7). From this point of the study, those five channels were used instead of 32.

4.2 Classification of emotions by using MLPNN

Final feature vectors were obtained by combining the features of EEG segments belonging to the selected channels (P3, FC2, AF3, O1 and Fp1). Thus, new feature vectors composed of 25 samples for every EEG segment related to positive and negative emotions were obtained. The formation procedure of final feature vectors with the selected five EEG channels is shown in Fig. 8. All procedures were applied separately for each participant.

The same procedure that was applied for channel selection was employed for the classification of emotions as well. MLPNN output vectors were defined as [1 0] for positive emotion and [0 1] for negative emotion. While training the network, 30 EEG segments are used and 10 EEG segments are used for testing. Single hidden layer with 25 × n × 2 architecture was used to classify EEG related with emotional states. The number of neurons used in the hidden layer is separately determined for each participant. In training stage, learning and momentum coefficients were 0.7 and 0.9, respectively.

The classification process was applied for each participant and results are shown in Table 2. According to SAM valence, two participants out of 22 lacked health assessment and for that reason classification process was not applied on them.

As shown in Table 2, the percentages of accuracy, specificity, and sensitivity are in the range of [60 90], [62.5 100] and [58.3 89.4], respectively. To estimate the overall performance of the MLPNN model, statistical measures (accuracy, specificity, sensitivity) were averaged.

4.3 Classification of emotion by using kNN algorithm

In this section, final feature vectors obtained from EEG segments were classified by using kNN algorithm. Since Euclidean distance measure method is frequently used in the literature [33], Euclidean distance measure was selected. Thirty EEG segments are used for the algorithm training and 10 EEG segment are used for testing. In order to increase reliability of the classification results, the training and testing data were randomly changed four times (fourfold cross-validation). Classification accuracies showed that k value offering the lowest error value varied for participants. From that point, k parameter is determined as 1 or 3 for each participant. The same procedure was applied for each participant and the classification performance in terms of statistical parameters is show in Table 3. As shown in the table, the percentages of accuracy, specificity, and sensitivity are in the range of [55 85], [53.5 100], and [63.1 85], respectively. To estimate the overall performance of the kNN model, statistical measures (accuracy, specificity, sensitivity) were averaged.

The highest classification accuracy was obtained as 85%, and it was determined for participants 12 and 20, as seen in Table 3. For 20 participants evaluated, the average classification accuracy was determined as 72.92% using kNN algorithm.

In order to compare the performance of two classifier algorithms, classification performances in terms of accuracy, sensitivity, and specificity are shown in Fig. 9.

5 Results and discussion

The aim of this section of the paper is to examine, discuss, and compare the results obtained from the proposed methods with channel selection. The discussion of findings obtained from this study is presented below.

-

(a)

Wavelet coefficients related to different emotions were obtained using DWT method. These wavelet coefficients were evaluated as feature vectors. The size of feature vectors was reduced by using five statistical parameters to get rid of the computing load.

-

(b)

Thirty-two channels for every participant were evaluated separately, and five channels were determined. MLPNN (5 × 10 × 2) structure was used for channel selection. The results revealed that similar channels (P3, FC2, AF3, O1 and Fp1) had the highest performance for every participant. The channels having the highest performance were selected for classification of emotions. From this point of the study, five channels were used instead of 32. The results revealed that the channels accepted as brain regions had relation with emotions. The selected channels determined in this study were also compatible with the channels selected in other papers [21].

-

(c)

Combined feature vectors of selected five channels were classified by using MLPNN and kNN methods. In reference to the literature, MLPNN is one of the most popular tools for EEG analysis. In this study, MLPNN was employed for determining the emotional state from the EEG signals. In this study, kNN algorithm was also used as a classifier for increasing the reliability of the results obtained by MLPNN. kNN is one of the most fundamental and simple classification methods. In many EEG applications, kNN algorithm is frequently used. The fact that the results produced by the two algorithms were close to each other. This matching support the process reliability.

-

(d)

As shown in Table 2, the percentages of accuracy, specificity, and sensitivity of MLPNN are in the ranges of [60 90], [62.5 100], and [58.3 89.4], respectively. These values indicate that the proposed MLPNN model is successful, and the test results also show that the generalization ability of MLPNN is well. On the other hand, the percentages of accuracy, specificity, and sensitivity of kNN are in the ranges of [55 85], [53.5 100], and [63.1 85], respectively (Table 3). It was observed that both classifiers showed parallel performance for each participant. The best classification accuracy of MLPNN was obtained as 90% (specificity: 100% and sensitivity: 83.3%) for participant 7. On the other part, the best classification accuracy of kNN was obtained as 85% for participant 12 and 20.

-

(e)

To estimate the overall performance of MLPNN and kNN classifiers, statistical measures (accuracy, specificity, sensitivity) were averaged. The comparison of averaged values for classification of emotions is shown in Fig. 10. As shown in the figure, the performance of MLPNN was higher than that of kNN. However, both methods can be accepted as successful.

-

(f)

It was concluded that MLPNN and kNN used in this study give good accuracy results for classification of emotions.

The channel selection has opened the door to improve the performance of automatic detection for emotion recognition. Recently, Zhang et al [20] used two EEG channels (F3 and F4) for feature extraction. Bhardwaj et al. [21] used three EEG channels (Fp1, P3 and O1) in order to detect emotion. Murugappan et al. [18] extracted features from 64, 24 and 8 EEG channels, respectively. When these studies were reviewed, it can be observed that the main EEG channels and region of brain was considered for detection of emotions. However, in this study, all EEG channels were evaluated and considered separately and five EEG channels that offer the best classification performance were determined. Final feature vectors were obtained by combining the features of those EEG channels, and the classification performance was improved. In literature, same database was used by Atkinson and Campos [34] for emotion recognition. They obtained the classification accuracy as 73.14% in valence dimension. In this study, the better accuracy was obtained by applying channel selection. Further, the channel selection has been used to improve the other areas of EEG classification.

6 Conclusion

The aim of this study was to classify EEG signals related to different emotions based on audiovisual stimuli with the preprocessing of channel selection.

In this study, the publicly available DEAP database related to emotional states were used. EEG signals recorded from 20 healthy subjects in Twente University were examined.

For classification of emotions, DWT was used as feature extraction, while MLPNN and kNN methods were used as classifiers.

In this study, dynamic channel selection was used for determining the channels having more relation with emotions. Five channels (P3, FC2, AF3, O1, and Fp1) having the highest performances selected successfully among the 32 channels with MLPNN.

The performance ranges of classification for emotions are compatible to both MLPNN and kNN. As shown from the results, the key points were feature and channel selections. It is considered that, with correct channels and features, the performance can be increased.

In literature, it can be seen that useful features can be obtained from the alpha, beta, and gamma bands as well as the tetra band in the detection of the emotional state from EEG signals. In future studies, the proposed model can be used for all sub-bands separately to identify the effectiveness of the bands in emotional activity. Furthermore, in order to increase the classification success, other physiological signals such as blood pressure, respiratory rate, body temperature, and GSR (galvanic skin response) can be used with EEG signals.

References

Petrrushin V (1999) Emotion in speech: recognition and application to call centers. In: Processing of the artificial networks in engineering conference, pp 7–10

Anderson K, McOwan P (2006) A real-time automated system for the recognition of human facial expression. IEEE Trans Syst Man Cybern B Cybern 36:96–105

Adeli H, Zhou Z, Dadmehr N (2003) Analysis of EEG records in an epileptic patient using wavelet transform. J Neurosci Methods 123(1):69–87

Atyabi A, Luerssen MH, Powers DMW (2013) PSO-based dimension reduction of EEG recordings: implications for subject transfer in BCI. Neurocomputing 119(7):319–331

Petrantonokis PC, Hadjileontiadis LJ (2010) Emotion recognition from EEG using higher order crossing. IEEE Trans Inf Technol Biomed 14(2):186–197

Khosrowbadi R, Quek HC, Wahab A, Ang KK (2010) EEG based emotion recognition using self-organizing map for boundary detection. In: International conference on pattern recognition, pp 4242–4245

Torres-Valencia C, Garcia-Arias HF, Alvarez Lopez M, Orozco-Gutierrez A (2014) Comparative analysis of physiological signals and electroencephalogram (EEG) for multimodal emotion recognition using generative models. In: 19th symposium on image, signal processing and artificial vision, Armenia-Quindio

Russell JA (1980) A circumplex model of affect. J Personal Soc Psychol 39:1161–1178

Wang XW, Nie D, Lu BL (2014) Emotional state classification from EEG data using machine learning approach. Neurocomputing 129:94–106

Kim J, Andre E (2006) Emotion recognition using physiological and speech signal in short-term observation. In: proceedings of the perception and interactive technologies, 4021:53–64

Brosschot J, Thayer J (2006) Heart rate response is longer after negative emotions than after positive emotions. Int J Psychophysiol 50:181–187

Kim K, Bang S, Kom S (2004) Emotion recognition system using short-term monitoring of physiological signals. Med Biol Eng Comput 42:419–427

Subaşı A, Erçelebi E (2005) Classification of EEG signals using neural network and logistic regression. Comput Methods Progr Biomed 78:87–99

Subası A (2007) Signal classification using wavelet feature extraction and a mixture of expert model. Expert Syst Appl 32:1084–1093

Fu K, Qu J, Chai YDY (2014) Classification of seizure based on the time-frequency image of EEG signals using HHT and SVM. Biomed Signal Process Control 13:15–22

Lopetegui E, Zapirain BG, Mendez A (2011) Tennis computer game with brain control using EEG signals. In: The 16th international conference on computer games, pp 228–234

Leeb R, Lancelle M, Kaiser V, Fellner DW, Pfurtscheller G (2013) Thinking Penguin: multimodal brain computer interface control of a VR game. IEEE Trans Comput Intell AI in Games 5(2):117–128

Murugappan M, Ramachandran N, Sazali Y (2010) Classification of human emotion from EEG using discrete wavelet transform. J. Biomed Sci Eng 3:390–396

Cahnel G, Kroneeg J, Grandjean D, Pun T (2005) Emotion assesstment: arousal evaluation using EEG’s and peripheral physiological signals, 24 rue du genaral dufour, Geneva

Zhang Q, Lee M (2009) Analysis of positive and negative emotions in natural scene using brain activity and GIST. Neurocomputing 72:1302–1306

Bahrdwaj A, Gupta A, Jain P, Rani A, Yadav J (2015) Classification of human emotions from EEG signals using SVM and LDA classifiers. In: 2nd international conference on signal processing and integrated networks (SPIN), pp 180–185

Lee G, Kwon M, Sri SK, Lee M (2014) Emotion recognition based on 3D fuzzy visual and EEG features in movie clips. Neurocomputing 144:560–568

DEAP: a dataset for emotion analysis EEG physiological and video signals (2012) http://www.eecs.qmul.ac.uk/mmv/datasets/deap/index.html. Accessed 01 May 2015

Koelstra S, Mühl C, Soleymani M, Lee J, Yazdani A, Ebrahimi T, Pun T, Nijholt A, Patras I (2012) DEAP: a database for emotion analysis using physiological signals. IEEE Trans Affect Comput 3(1):18–31

Bradley MM, Lang PJ (1994) Measuring emotions: the self-assessment manikin and the sematic differential. J Behav Ther Exp Psychiatry 25(1):49–59

Uusberg A, Thiruchselvam R, Gross J (2014) Using distraction to regulate emotion: insights from EEG theta dynamics. Int J Psychophysiol 91:254–260

Polat H, Ozerdem MS (2015) Reflection emotions based on different stories onto EEG signal. In: 23th conference on signal processing and communications applications, Malatya, pp 2618–2618

Kıymık MK, Akın M, Subaşı A (2004) Automatic recognition of alertness level by using wavelet transform and artificial neural network. J Neurosci Methods 139:231–240

Amato F, Lopez A, Mendez EMP, Vanhara P, Hampl A (2013) Artificial neural networks in medical diagnosis. J Appl Biomed 11:47–58

Haykin S (2009) Neural networks and learning machines, 3rd edn. Prentice Hall, New Jersey, p 906

Basheer IA, Hajmeer M (2000) Artificial neural networks: fundamentals computing design and application. J Microbiol Methods 43:3–31

Patnaik LM, Manyam OK (2008) Epileptic EEG detection using neural networks and post-classification. Comput Methods Progr Biomed 91:100–109

Berrueta LA, Alonso RM, Heberger K (2007) Supervised pattern recognition in food analysis. J Chromatogr A 1158:196–214

Atkinson J, Campos D (2016) Improving BCI–based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Syst Appl 47:35–41

Acknowledgements

The authors would like to thank Julius Bamwenda for his support in editing the English language in the paper.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Özerdem, M.S., Polat, H. Emotion recognition based on EEG features in movie clips with channel selection. Brain Inf. 4, 241–252 (2017). https://doi.org/10.1007/s40708-017-0069-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40708-017-0069-3