Abstract

Partial differential equation models and their associated variational energy formulations are often rotationally invariant by design. This ensures that a rotation of the input results in a corresponding rotation of the output, which is desirable in applications such as image analysis. Convolutional neural networks (CNNs) do not share this property, and existing remedies are often complex. The goal of our paper is to investigate how diffusion and variational models achieve rotation invariance and transfer these ideas to neural networks. As a core novelty, we propose activation functions which couple network channels by combining information from several oriented filters. This guarantees rotation invariance within the basic building blocks of the networks while still allowing for directional filtering. The resulting neural architectures are inherently rotationally invariant. With only a few small filters, they can achieve the same invariance as existing techniques which require a fine-grained sampling of orientations. Our findings help to translate diffusion and variational models into mathematically well-founded network architectures and provide novel concepts for model-based CNN design.

1 Introduction

Partial differential equations (PDEs) and variational methods are core parts of various successful model-based image processing approaches; see, e.g., [6, 11, 75] and the references therein. Such models often achieve invariance under transformations such as translations and rotations by design. These invariances reflect the physical motivation of the models: Transforming the input should lead to an equally transformed output.

Convolutional neural networks (CNNs) and deep learning [31, 42, 43, 66] have revolutionised the field of image processing in recent years. The flexibility of CNN models allows them to be applied to various tasks in a plug-and-play fashion with remarkable performance. Due to their convolution structure, CNNs are shift invariant by design. However, they lack inherent rotation invariance. Proposed adaptations often inflate the network structure and rely on complex filter design with large stencils; see, e.g., [80].

In the present paper, we tackle these problems by translating rotationally invariant PDEs and their corresponding variational formulations into neural networks. This alternative view on rotation invariance within neural architectures yields novel design concepts which have not yet been explored in CNNs.

Since multiple notions of rotation invariance exist in the literature, we define our terminology in the following. We call an operation rotationally invariant if rotating its input yields an equally rotated output. Thus, rotation and operation are interchangeable. This notion follows the classical definition of rotation invariance for differential operators. Note that some recent CNN literature refers to this concept as equivariance.

1.1 Our contributions

We translate PDE and variational models into their corresponding neural architectures and identify how they achieve rotation invariance. We start with simple two-dimensional diffusion models for greyscale images. Extending the connection [2, 63, 86] between explicit schemes for these models and residual networks (ResNets) [36] leads to neural activation functions which couple network channels. Their output is based on a rotationally invariant measure which combines specific channels representing differential operators.

By exploring multi-channel and multiscale diffusion models, we generalise the concept of coupling to ResNeXt [84] architectures as an extension of the ResNet. Activations which couple all network channels preserve rotation invariance, but still allow the design of anisotropic models with directional filtering.

We derive three central principles for rotationally invariant neural network design, discuss their effects on practical CNNs, and evaluate their effectiveness experimentally. Our findings transfer inherent PDE concepts to CNNs and thus help to pave the way to more model-based and mathematically well-founded learning.

1.2 Related work

Several works connect numerical solution strategies for PDEs to CNN architectures [2, 44, 46, 55, 87] to obtain novel architectures with better performance or provable mathematical guarantees. Others are concerned with using neural networks to solve [16, 34, 59] or learn PDEs from data [45, 62, 64]. Moreover, the approximation capabilities [17, 32, 40, 71] and stability aspects [2, 10, 33, 61, 63, 70, 86] of CNNs are often analysed from a PDE viewpoint.

The connections between neural networks and variational methods have become a topic of intensive research. The idea of learning the regulariser in a variational framework has gained considerable traction and brought the performance of variational models to a new level [23, 47, 52, 58, 60]. The closely related idea of unrolling [50, 69] the steps of a minimising algorithm for a variational energy and learning its parameters has been equally prominent and successful [1, 5, 8, 13, 35, 38, 39].

We exploit and extend connections between variational models and diffusion processes [65], and their relations to residual networks [2, 63]. In contrast with our previous works [2, 4], which focussed on the one-dimensional setting and corresponding numerical algorithms, we now concentrate on two-dimensional diffusion models that incorporate different strategies to achieve rotation invariance. This allows us to transfer concepts of rotation invariance from PDEs to CNNs, which yields hitherto unexplored CNN design strategies.

A simple option to learn a rotationally invariant model is to perform data augmentation [68], where the network is trained on randomly rotated input data. This strategy, however, only approximates rotation invariance and is heavily dependent on the data at hand.

An alternative is to design the filters themselves in a rotationally invariant way, e.g. by weight restriction [12]. However, the resulting rotation invariance is too fine-grained: The filters as the smallest network component are not oriented. Thus, the model is not able to perform a directional filtering.

Other works [24, 41] create a set of rotated input images and apply filters with weight sharing to this set. Depending on the amount of sampled orientations, this can lead to large computational overhead.

An elegant solution for inherent rotation invariance is based on symmetry groups. Gens and Domingos [28] as well as Dieleman et al. [21] propose to consider sets of feature maps which are rotated versions of each other. This comes at a high memory cost as four times as many feature maps need to be processed. Marcos et al. [49] propose to rotate the filters instead of the features, with an additional pooling of orientations. However, the pooling reduces the directional information too quickly. A crucial downside of all these approaches is that they only use four orientations. This only yields a coarse approximation of rotation invariance.

This idea has been generalised to arbitrary symmetry groups by Cohen and Welling [15] through the use of group convolution layers. Group convolutions lift the standard convolution to other symmetry groups which can also include rotations, thus leading to rotation invariance by design. However, only four rotations are considered there as well. This is remedied by Weiler et al. [78, 80], who make use of steerable filters [27] to design a larger set of oriented filters. Duits et al. [22] go one step further by formulating all layers as solvers to parametrised PDEs. Similar ideas have been implemented with wavelets [67] and circular harmonics [83], and the group invariance concept has also been extended to higher dimensional data [14, 57, 79]. However, processing multiple orientations in dedicated network channels inflates the network architecture, and discretising the large set of oriented filters requires the use of large stencils.

We provide an alternative by means of a more sophisticated activation function design. By coupling specific network channels, we can achieve inherent rotation invariance without using large stencils or group theory, while still allowing models to perform directional filtering. In a similar manner, Mrázek and Weickert proposed to design rotationally invariant wavelet shrinkage [51] by using a coupling wavelet shrinkage function. However, to the best of our knowledge, coupling activation functions have not been considered in CNNs so far.

1.3 Organisation of the paper

We motivate our view on rotationally invariant design with a tutorial example in Sect. 2. Afterward, we review variational models and residual networks as the two other basic concepts in Sect. 3. In Sect. 4, we connect various diffusion models and their associated energies to their neural counterparts and identify central concepts for rotation invariance. We summarise our findings and discuss their practical implementation in Sect. 5 and conduct experiments on rotation invariance in Sect. 6. We finish the paper with our conclusions in Sect. 7.

2 Two views on rotational invariance

To motivate our viewpoint on rotationally invariant model design, we review a nonlinear diffusion filter of Weickert [73] for image denoising and enhancement. It achieves anisotropy by integrating one-dimensional diffusion processes over all directions. This integration model creates a family of greyscale images \(u(\varvec{x}, t): \varOmega \times [0, \infty ) \rightarrow \mathbb {R}\) on an image domain \(\varOmega \subset \mathbb {R}^2\) according to the integrodifferential equation

\[ \partial_t u = \int_{0}^{\pi} \partial_{e_\theta} \left( g\left( \left| \partial_{e_\theta} u_\sigma \right|^2 \right) \partial_{e_\theta} u \right) \mathrm{d}\theta, \tag{1} \]

where \(\partial _{e_\theta }\) is a directional derivative along the orientation of an angle \(\theta \). The evolution is initialised as \(u(\cdot ,0) = f\) with the original image f, and reflecting boundary conditions are imposed. The model integrates one-dimensional nonlinear diffusion processes with different orientations \(\theta \). All of them share a nonlinear decreasing diffusivity function g which steers the diffusion in dependence of the local directional image structure \(\left| \partial _{e_\theta } u_\sigma \right| ^2\). Here, \(u_\sigma \) is a smoothed version of u which has been convolved with a Gaussian of standard deviation \(\sigma \).

As this model diffuses more along low contrast directions than along high contrast ones, it is anisotropic. It is still rotationally invariant, since it combines all orientations of the one-dimensional processes with equal importance. However, this concept comes at the cost of an elaborate discretisation. First, one requires a large number of discrete rotation angles for a reasonable approximation of the integration. Discretising the directional derivatives in all these directions with a sufficient order of consistency requires the use of large filter stencils; cf. also [9]. The design of rotationally invariant networks such as [80] faces similar difficulties: Processing the input by applying several rotated versions of an oriented filter requires large stencils and many orientations.
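The need for many orientations can be illustrated at a single pixel. The following NumPy sketch is our own illustration and not part of the original model. It assumes the Perona–Malik-type diffusivity \(g(s^2) = 1/(1+s^2/\lambda^2)\) with \(\lambda = 1\) and a fixed gradient magnitude. It replaces the integral over \(\theta \in [0, \pi)\) by an equispaced quadrature with n orientations, and it examines only the integrated diffusivity, not the full discretised PDE. Rotating the gradient should leave the result unchanged. With few orientations it does change, and the deviation only disappears once many orientations are used.

```python
import numpy as np


def g(s2, lam=1.0):
    """Assumed Perona-Malik-type diffusivity g(s^2) = 1 / (1 + s^2 / lam^2)."""
    return 1.0 / (1.0 + s2 / lam**2)


def integrated_response(grad, n_angles, lam=1.0):
    """Quadrature (pi / n) * sum_k g((e_k . grad)^2) with orientations
    theta_k = k * pi / n, approximating the integral over [0, pi)."""
    thetas = np.arange(n_angles) * np.pi / n_angles
    dirs = np.stack([np.cos(thetas), np.sin(thetas)], axis=1)  # unit vectors e_k
    return np.pi / n_angles * np.sum(g((dirs @ grad) ** 2, lam))


def rotation_spread(n_angles, magnitude=3.0, n_rot=97):
    """Max minus min of the quadrature result over rotated copies of one gradient."""
    phis = np.linspace(0.0, np.pi, n_rot)
    vals = [integrated_response(magnitude * np.array([np.cos(p), np.sin(p)]), n_angles)
            for p in phis]
    return max(vals) - min(vals)


for n in (4, 8, 16, 64):
    print(f"{n:3d} orientations: spread under rotation = {rotation_spread(n):.2e}")
```

For this particular g, the exact integral equals \(\pi/\sqrt{1 + |\varvec{\nabla} u_\sigma|^2/\lambda^2}\) for every gradient direction. Any spread is therefore purely a discretisation artefact.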

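A much simpler option arises when considering the closely related edge-enhancing diffusion (EED) model [74]

\[ \partial_t u = \varvec{\nabla}^\top \left( \varvec{D}\left( \varvec{\nabla} u_\sigma \right) \varvec{\nabla} u \right), \tag{2} \]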

where \(\varvec{\nabla }= \left( \partial _x, \partial _y\right) ^\top \) denotes the gradient operator and \(\varvec{\nabla }^\top \) is the divergence. Instead of an integration, the right-hand side is given in divergence form. Thus, the process is now steered by a diffusion tensor \(\varvec{D} \left( \varvec{\nabla }u_\sigma \right) \). It is a \(2\times 2\) positive semi-definite matrix which is designed to adapt the diffusion process to local directional information by smoothing along, but not across, dominant image structures. This is achieved by constructing \(\varvec{D}\) from its normalised eigenvectors \(\varvec{v}_1 \parallel \varvec{\nabla }u_\sigma \) and \(\varvec{v}_2 \bot \varvec{\nabla }u_\sigma \), which point across and along local structures, respectively. The corresponding eigenvalues \(\lambda _1 = g\left( \left| \varvec{\nabla }u_\sigma \right| ^2\right) \) and \(\lambda _2=1\) inhibit diffusion across dominant structures and allow smoothing along them. Thus, the diffusion tensor can be written as

\[ \varvec{D}\left( \varvec{\nabla} u_\sigma \right) = g\left( \left| \varvec{\nabla} u_\sigma \right|^2 \right) \varvec{v}_1 \varvec{v}_1^\top + \varvec{v}_2 \varvec{v}_2^\top. \tag{3} \]
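To check this construction, the NumPy sketch below builds the EED tensor at a single pixel from a given presmoothed gradient. The diffusivity g and its contrast parameter \(\lambda\) are our assumptions, a Perona–Malik-type choice; the text leaves them open at this point. The checks confirm the eigenstructure and show that rotating the gradient rotates the tensor, \(\varvec{D}(\varvec{R}\varvec{w}) = \varvec{R}\varvec{D}(\varvec{w})\varvec{R}^\top\).

```python
import numpy as np


def g(s2, lam=1.0):
    """Assumed decreasing diffusivity g(s^2) = 1 / (1 + s^2 / lam^2)."""
    return 1.0 / (1.0 + s2 / lam**2)


def eed_tensor(grad_sigma, lam=1.0, eps=1e-12):
    """EED diffusion tensor D = g(|grad|^2) v1 v1^T + v2 v2^T at one pixel."""
    norm = np.linalg.norm(grad_sigma)
    if norm < eps:                      # flat region: g(0) = 1, so D is the identity
        return np.eye(2)
    v1 = grad_sigma / norm              # points across the local structure
    v2 = np.array([-v1[1], v1[0]])      # points along the local structure
    return g(norm**2, lam) * np.outer(v1, v1) + np.outer(v2, v2)


def rotation(alpha):
    c, s = np.cos(alpha), np.sin(alpha)
    return np.array([[c, -s], [s, c]])


w = np.array([2.0, 1.0])                # presmoothed gradient at one pixel
D = eed_tensor(w)
print(np.linalg.eigvalsh(D))            # eigenvalues g(|w|^2) = 1/6 and 1 for lam = 1
```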

Discretising the EED model (2) is much more convenient. For example, a discretisation of the divergence term with good rotation invariance can be performed on a \(3\times 3\) stencil, which is the minimal size for a consistent discretisation of a second order model [77].

This illustrates a central insight: One can replace a complex discretisation by a sophisticated design of the nonlinearity. This motivates us to investigate how rotationally invariant design principles of diffusion models translate into novel activation function designs.

3 Review: variational methods and residual networks

We now briefly review variational methods and residual networks as the other two central concepts in our work.

3.1 Variational regularisation

Variational regularisation [72, 82] obtains a function \(u(\varvec{x})\) on a domain \(\varOmega \) as the minimiser of an energy functional. A general form of such a functional reads

\[ E(u) = \int_{\varOmega} \left( D(u, f) + \alpha\, R(u) \right) \mathrm{d}\varvec{x}. \tag{4} \]

Therein, a data term D(u, f) drives the solution u to be close to an input image f, and a regularisation term R(u) enforces smoothness conditions on the solution. The balance between the terms is controlled by a positive smoothness parameter \(\alpha \).

We restrict ourselves to energy functionals with only a regularisation term and interpret the gradient descent on the energy as a parabolic diffusion PDE. This connection serves as one foundation for our findings. The variational framework is the simplest setting for analysing invariance properties, as these are automatically transferred to the corresponding diffusion process.

3.2 Residual networks

Residual networks (ResNets) [36] belong to the most popular neural network architectures to date. Their specific structure facilitates the training of very deep networks and shares a close connection to PDE models.

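ResNets consist of chained residual blocks. A single residual block computes a discrete output \(\varvec{u}\) from an input \(\varvec{f}\) by means of

\[ \varvec{u} = \varphi_2\left( \varvec{f} + \varvec{W}_2\, \varphi_1\left( \varvec{W}_1 \varvec{f} + \varvec{b}_1 \right) + \varvec{b}_2 \right). \tag{5} \]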

First, one applies an inner convolution to \(\varvec{f}\), which is modelled by a convolution matrix \(\varvec{W}_1\). In addition, one adds a bias vector \(\varvec{b}_1\). The result of this inner convolution is fed into an inner activation function \(\varphi _1\). Often, these activations are fixed to simple functions such as the rectified linear unit (ReLU) [53], which is a truncated linear function:

\[ \varphi(x) = \max(0, x). \tag{6} \]

The activated result is convolved with an outer convolution \(\varvec{W}_2\) with a bias vector \(\varvec{b}_2\). Crucially, the result of this convolution is added back to the original input signal \(\varvec{f}\). This skip connection is the key to the success of ResNets, as it avoids the vanishing gradient phenomenon found in deep feed-forward networks [7, 36]. Lastly, one applies an outer activation function \(\varphi _2\) to obtain the output \(\varvec{u}\) of the residual block.

In contrast with diffusion processes and variational methods, these networks are not committed to a specific input dimensionality. In standard networks, the input is quickly deconstructed into multiple channels, each one concerned with different, specific image features. Each channel is activated independently, and information is exchanged through trainable convolutions. While this makes networks flexible, it does not take into account concepts such as rotation invariance. By translating rotationally invariant diffusion models into ResNets and extensions thereof, we will see that shifting the focus from the convolutions towards activations can serve as an alternative way to guarantee built-in rotation invariance within a network.

4 From diffusion PDEs and variational models to rotationally invariant networks

With the concepts from Sects. 2 and 3, we are now in a position to derive diffusion-inspired principles of rotationally invariant network design.

4.1 Isotropic diffusion on greyscale images

We first consider the simplest setting of isotropic diffusion models for images with a single channel. By reviewing three popular models, we identify the common concepts for rotation invariance, and find a unifying neural network interpretation.

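We start with the second order diffusion model of Perona and Malik [56], which is given by the PDE

\[ \partial_t u = \varvec{\nabla}^\top \left( g\left( |\varvec{\nabla} u|^2 \right) \varvec{\nabla} u \right) \tag{7} \]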

with reflecting boundary conditions. This model creates a family of gradually simplified images \(u(\varvec{x}, t)\) according to the diffusivity \(g(s^2)\). It attenuates the diffusion at locations where the gradient magnitude of the evolving image is large. In contrast with the model of Weickert (1), the Perona–Malik model is isotropic, i.e. it does not have a preferred direction.

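The variational counterpart of this model helps us to identify the cause of its rotation invariance. An energy for the Perona–Malik model can be written in the following way, which allows different generalisations:

\[ E(u) = \frac{1}{2} \int_{\varOmega} \varPsi\left( \operatorname{tr}\left( \varvec{\nabla} u \, \varvec{\nabla} u^\top \right) \right) \mathrm{d}\varvec{x}, \tag{8} \]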

with an increasing regulariser function \(\varPsi \) which can be connected to the diffusivity g by \(g=\varPsi ^\prime \) [65]. Comparing the functional (8) to the one in (4), we have now specified the form of the regulariser to be \(R(u) =\varPsi \left( \text {tr}\left( \varvec{\nabla }u \varvec{\nabla }u^\top \right) \right) \).
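The link \(g = \varPsi^\prime\) can also be checked at the discrete level. The following NumPy sketch makes several assumptions. It discretises \(\varvec{\nabla}\) by forward differences with reflecting boundaries. It uses the Perona–Malik regulariser \(\varPsi(s^2) = \lambda^2 \log(1 + s^2/\lambda^2)\), whose derivative is \(g(s^2) = 1/(1 + s^2/\lambda^2)\). Finally, it replaces the integral of the energy by a pixel sum. Under these assumptions, the analytic gradient \(\varvec{K}^\top(g(|\varvec{K}\varvec{u}|^2)\,\varvec{K}\varvec{u})\) matches a finite-difference gradient of the energy. A gradient descent step is therefore an explicit Perona–Malik step, and for a small enough step size it lowers the energy.

```python
import numpy as np

LAM = 1.0  # assumed contrast parameter


def psi(s2):
    """Perona-Malik regulariser Psi(s^2) = lam^2 log(1 + s^2 / lam^2)."""
    return LAM**2 * np.log1p(s2 / LAM**2)


def g(s2):
    """Its derivative Psi'(s^2) = 1 / (1 + s^2 / lam^2), the diffusivity."""
    return 1.0 / (1.0 + s2 / LAM**2)


def grad(u):
    """Forward differences K u; zero in the last column/row (reflecting boundaries)."""
    ux = np.zeros_like(u)
    uy = np.zeros_like(u)
    ux[:, :-1] = u[:, 1:] - u[:, :-1]
    uy[:-1, :] = u[1:, :] - u[:-1, :]
    return ux, uy


def grad_adjoint(vx, vy):
    """Adjoint K^T of grad (a negative discrete divergence): <K u, v> = <u, K^T v>."""
    out = np.zeros_like(vx)
    out[:, 1:] += vx[:, :-1]
    out[:, :-1] -= vx[:, :-1]
    out[1:, :] += vy[:-1, :]
    out[:-1, :] -= vy[:-1, :]
    return out


def energy(u):
    ux, uy = grad(u)
    return 0.5 * np.sum(psi(ux**2 + uy**2))


def energy_gradient(u):
    ux, uy = grad(u)
    w = g(ux**2 + uy**2)  # one diffusivity per pixel from both derivative channels
    return grad_adjoint(w * ux, w * uy)


def numerical_gradient(f, u, h=1e-6):
    """Central finite differences of a scalar function f, entry by entry."""
    out = np.zeros_like(u)
    for idx in np.ndindex(u.shape):
        e = np.zeros_like(u)
        e[idx] = h
        out[idx] = (f(u + e) - f(u - e)) / (2 * h)
    return out


u = np.random.default_rng(0).random((6, 5))
print(np.max(np.abs(energy_gradient(u) - numerical_gradient(energy, u))))
tau = 0.1
print(energy(u), energy(u - tau * energy_gradient(u)))  # the explicit step lowers E
```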

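The argument of the regulariser is the trace of the so-called structure tensor [26], here without Gaussian regularisation, which reads

\[ \varvec{\nabla} u \, \varvec{\nabla} u^\top = \begin{pmatrix} (\partial_x u)^2 & \partial_x u \, \partial_y u \\ \partial_x u \, \partial_y u & (\partial_y u)^2 \end{pmatrix}. \tag{9} \]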

This structure tensor is a \(2 \times 2\) matrix with eigenvectors \(\varvec{v}_1 \parallel \varvec{\nabla }u\) and \(\varvec{v}_2 \bot \varvec{\nabla }u\) parallel and orthogonal to the image gradient. The corresponding eigenvalues are given by \(\nu _1 = \left| \varvec{\nabla }u\right| ^2\) and \(\nu _2 = 0\), respectively. Thus, the eigenvectors span a local coordinate system where the axes point across and along dominant structures of the image, and the larger eigenvalue describes the magnitude of image structures.

The use of the structure tensor is the key to rotation invariance. A rotation of the image induces a corresponding rotation of the structure tensor and the structural information that it encodes: Its eigenvectors rotate along, and its eigenvalues remain unchanged. Consequently, the trace as the sum of the eigenvalues is rotationally invariant.
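The following NumPy sketch checks this argument numerically at a single pixel. If the image is rotated by a rotation matrix \(\varvec{R}\), the gradient at the corresponding point becomes \(\varvec{R}\varvec{\nabla} u\). The structure tensor therefore becomes \(\varvec{R}\,\varvec{\nabla} u \varvec{\nabla} u^\top \varvec{R}^\top\). The gradient vector and the angle are arbitrary example values.

```python
import numpy as np


def structure_tensor(grad):
    """Unsmoothed structure tensor J = grad grad^T at one pixel."""
    return np.outer(grad, grad)


def rotation(alpha):
    c, s = np.cos(alpha), np.sin(alpha)
    return np.array([[c, -s], [s, c]])


grad = np.array([1.5, -0.5])            # |grad|^2 = 2.5
R = rotation(0.7)
J = structure_tensor(grad)
J_rot = structure_tensor(R @ grad)

print(np.allclose(J_rot, R @ J @ R.T))                    # the tensor rotates along
print(np.linalg.eigvalsh(J), np.linalg.eigvalsh(J_rot))  # both close to [0, 2.5]
print(np.trace(J), np.trace(J_rot))                       # the trace |grad|^2 is invariant
```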

In the following, we explore other ways to design the energy functional based on rotationally invariant quantities and investigate how the resulting diffusion model changes.

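The fourth-order model of You and Kaveh [85] relies on the Hessian matrix. The corresponding energy functional reads

\[ E(u) = \frac{1}{2} \int_{\varOmega} \varPsi\left( \left( \operatorname{tr} \varvec{H}(u) \right)^2 \right) \mathrm{d}\varvec{x}. \tag{10} \]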

Here, the regulariser takes the squared trace of the Hessian matrix \(\varvec{H}(u)\) as an argument. Since the trace of the Hessian equals the Laplacian \(\varDelta u\), the gradient flow of (10) can be written as

\[ \partial_t u = -\varDelta \left( g\left( (\varDelta u)^2 \right) \varDelta u \right). \tag{11} \]

This is a fourth-order counterpart to the Perona–Malik model. Instead of the gradient operator, one considers the Laplacian \(\varDelta \). This change was motivated as one remedy to the staircasing effect of the Perona–Malik model [85].
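An explicit step of this fourth-order model can be sketched in the same way. In this NumPy sketch we assume the standard five-point Laplacian with reflecting boundaries, built as \(-\varvec{K}^\top\varvec{K}\) from forward differences, together with the same assumed diffusivity as before. This discrete Laplacian is symmetric, so it is its own adjoint, mirroring \(\varDelta^* = \varDelta\) in the continuous setting. One consequence, which the checks confirm, is that the explicit step preserves the average grey value.

```python
import numpy as np


def g(s2, lam=1.0):
    """Assumed diffusivity g(s^2) = 1 / (1 + s^2 / lam^2)."""
    return 1.0 / (1.0 + s2 / lam**2)


def laplacian(u):
    """Five-point Laplacian with reflecting boundaries, computed as -K^T K u."""
    ux = np.zeros_like(u)
    uy = np.zeros_like(u)
    ux[:, :-1] = u[:, 1:] - u[:, :-1]   # forward differences K u
    uy[:-1, :] = u[1:, :] - u[:-1, :]
    out = np.zeros_like(u)              # apply -K^T, a discrete divergence
    out[:, :-1] += ux[:, :-1]
    out[:, 1:] -= ux[:, :-1]
    out[:-1, :] += uy[:-1, :]
    out[1:, :] -= uy[:-1, :]
    return out


def you_kaveh_step(u, tau=0.01, lam=1.0):
    """Explicit step u - tau * Lap( g((Lap u)^2) Lap u ) of the fourth-order model."""
    lap = laplacian(u)
    return u - tau * laplacian(g(lap**2, lam) * lap)


u = np.random.default_rng(2).random((8, 7))
print(u.mean(), you_kaveh_step(u).mean())   # the mean grey value is preserved
```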

The rotationally invariant matrix at hand is the Hessian \(\varvec{H}(u)\). In a similar manner as the structure tensor, the Hessian describes local structure and thus follows a rotation of this structure. Also in this case, the trace operation reduces the Hessian to a scalar that does not change under rotations.

To avoid speckle artefacts of the model of You and Kaveh, Lysaker et al. [48] propose to combine all entries of the Hessian in the regulariser. They choose the Frobenius norm of the Hessian \(||\varvec{H}(u)||^2_F\) together with a total variation regulariser. For more general regularisers, this model reads [20]

\[ E(u) = \frac{1}{2} \int_{\varOmega} \varPsi\left( \| \varvec{H}(u) \|_F^2 \right) \mathrm{d}\varvec{x}, \tag{12} \]

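which yields a diffusion process of the form

\[ \partial_t u = -{\mathcal{D}}^* \left( g\left( \| \varvec{H}(u) \|_F^2 \right) {\mathcal{D}} u \right), \tag{13} \]

where \({\mathcal {D}}^*\) denotes the adjoint of the differential operator \({\mathcal {D}}\) induced by the Frobenius norm, which reads

\[ {\mathcal{D}} = \left( \partial_{xx}, \partial_{xy}, \partial_{yx}, \partial_{yy} \right)^\top. \tag{14} \]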

This shows another way of using the rotationally invariant information of the Hessian matrix. While the choice of a Frobenius norm instead of the trace operator changes the associated differential operators in the diffusion model, it does not destroy the rotation invariance property: The squared Frobenius norm is the sum of the squared eigenvalues of the Hessian, which in turn are rotationally invariant.
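Both choices can be checked at a single pixel. Under a rotation \(\varvec{R}\) of the image, the Hessian at the corresponding point transforms as \(\varvec{R}\varvec{H}\varvec{R}^\top\). The NumPy sketch below uses an arbitrary symmetric example matrix. For contrast, it also evaluates a quantity that is not rotationally invariant: the squared mixed derivative alone.

```python
import numpy as np


def rotation(alpha):
    c, s = np.cos(alpha), np.sin(alpha)
    return np.array([[c, -s], [s, c]])


H = np.array([[2.0, 0.5],
              [0.5, -1.0]])             # example Hessian (u_xx, u_xy; u_yx, u_yy)
R = rotation(np.pi / 4)
H_rot = R @ H @ R.T                     # Hessian of the rotated image

print(np.trace(H), np.trace(H_rot))          # Laplacian (You and Kaveh): 1 in both cases
print(np.sum(H**2), np.sum(H_rot**2))        # squared Frobenius norm (Lysaker et al.): 5.5
print(np.sum(np.linalg.eigvalsh(H) ** 2))    # equals the sum of squared eigenvalues
print(H[0, 1] ** 2, H_rot[0, 1] ** 2)        # mixed derivative alone: 0.25 versus 2.25
```

Each printed pair agrees up to rounding, except for the last one.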

4.2 Coupled activations for operator channels

In the following, we extend the connections between residual networks and explicit schemes from [2, 63, 86] in order to transfer rotation invariance concepts to neural networks. To this end, we consider the generalised diffusion PDE

\[ \partial_t u = -{\mathcal{D}}^* \left( g\left( |{\mathcal{D}} u|^2 \right) {\mathcal{D}} u \right). \tag{15} \]

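Here, we use a generalised differential operator \({\mathcal {D}}\) and its adjoint \({\mathcal {D}}^*\). This PDE subsumes the diffusion models (7), (11), and (13): For instance, the Perona–Malik model (7) is obtained with \({\mathcal {D}} = \varvec{\nabla }\) and \({\mathcal {D}}^* = -\varvec{\nabla }^\top \), and the model of You and Kaveh (11) with \({\mathcal {D}} = {\mathcal {D}}^* = \varDelta \). Since the diffusivities take a scalar argument, we can express the diffusivity as \(g(|{\mathcal {D}} u|^2)\). The differential operator \(\mathcal D\) is induced by the associated energy functional.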

To connect the generalised model (15) to a ResNet architecture, we first rewrite (15) by means of the vector-valued flux function \(\varvec{\varPhi }(\varvec{s}) = g(|\varvec{s}|^2)\, \varvec{s}\) as

\[ \partial_t u = -{\mathcal{D}}^* \, \varvec{\varPhi}\left( {\mathcal{D}} u \right). \tag{16} \]

Let us now consider an explicit discretisation for this diffusion PDE. The temporal derivative is discretised by a forward difference with time step size \(\tau \), and the spatial derivative operator \(\mathcal {D}\) is discretised by a convolution matrix \(\varvec{K}\). Consequently, the adjoint \(\mathcal {D}^*\) is discretised by \(\varvec{K}^\top \). Depending on the number of components of \(\mathcal {D}\), the matrix \(\varvec{K}\) implements a set of convolutions. This yields an explicit scheme for (16)

\[ \varvec{u}^{k+1} = \varvec{u}^k - \tau \, \varvec{K}^\top \varvec{\varPhi}\left( \varvec{K} \varvec{u}^k \right), \tag{17} \]

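where a superscript k denotes the discrete time level. One can connect this explicit step (17) to a residual block (5) by identifying

\[ \varvec{W}_1 = \varvec{K}, \quad \varvec{W}_2 = -\varvec{K}^\top, \quad \varphi_1 = \tau \varvec{\varPhi}, \quad \varphi_2 = \mathrm{Id}, \tag{18} \]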

and setting the bias vectors to \(\varvec{0}\) [2, 63, 86].
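The identification can be verified numerically on a tiny image. The NumPy sketch below is our own illustration and rests on several assumptions. \(\varvec{K}\) is taken to be the stacked forward-difference matrices \((\varvec{K}_x; \varvec{K}_y)\) with reflecting boundaries, so that \(-\varvec{K}^\top\) is a discrete divergence and the block performs a Perona–Malik step. The diffusivity is \(g(s^2) = 1/(1+s^2/\lambda^2)\). The hypothetical helper `residual_block` evaluates a residual block with dense matrices. All function names and parameter values are hypothetical.

```python
import numpy as np


def forward_diff(n):
    """1D forward differences; the last row is zero (reflecting boundary)."""
    D = np.zeros((n, n))
    idx = np.arange(n - 1)
    D[idx, idx] = -1.0
    D[idx, idx + 1] = 1.0
    return D


def gradient_matrix(ny, nx):
    """K = (K_x; K_y) for row-major flattened ny x nx images, shape (2N, N)."""
    Kx = np.kron(np.eye(ny), forward_diff(nx))
    Ky = np.kron(forward_diff(ny), np.eye(nx))
    return np.vstack([Kx, Ky])


def coupled_flux(s, lam=1.0):
    """Phi(s) = g(|s|^2) s, where |s|^2 sums both channels at each pixel."""
    sx, sy = np.split(s, 2)
    w = 1.0 / (1.0 + (sx**2 + sy**2) / lam**2)
    return np.concatenate([w * sx, w * sy])


def residual_block(f, W1, b1, phi1, W2, b2, phi2):
    """Residual block u = phi2(f + W2 phi1(W1 f + b1) + b2) with dense matrices."""
    return phi2(f + W2 @ phi1(W1 @ f + b1) + b2)


def diffusion_step(u, K, tau):
    """Explicit scheme u^{k+1} = u^k - tau K^T Phi(K u^k)."""
    return u - tau * K.T @ coupled_flux(K @ u)


ny, nx, tau = 4, 5, 0.2
K = gradient_matrix(ny, nx)
u0 = np.random.default_rng(1).random(ny * nx)
u_res = residual_block(
    u0,
    W1=K, b1=np.zeros(2 * ny * nx), phi1=lambda s: tau * coupled_flux(s),
    W2=-K.T, b2=np.zeros(ny * nx), phi2=lambda x: x,
)
print(np.allclose(u_res, diffusion_step(u0, K, tau)))  # True
```

Because every row of \(\varvec{K}\) sums to zero, the block also preserves the average grey value, as diffusion should.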

In contrast with the one-dimensional considerations in [2], the connection between flux function and activation in the two-dimensional setting yields additional, novel design concepts for activation functions. This yields the first design principle for neural networks.

Design Principle 1

Activation functions which couple network channels can be used to design rotationally invariant networks. At each position of the image, the channels of the inner convolution result are combined within a rotationally invariant quantity which determines the nonlinear response.

The coupling effect of the diffusivity and the regulariser directly transfers to the activation function. This is apparent when the differential operator \({\mathcal {D}}\) contains multiple components. For example, consider an operator \({\mathcal {D}} = \left( {\mathcal {D}}_1, {\mathcal {D}}_2\right) ^\top \) with two components and its discrete variant \(\varvec{K} = \left( \varvec{K}_1, \varvec{K}_2\right) ^\top \).

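The application of the operator \(\varvec{K}\) transforms the single-channel signal \(\varvec{u}^k\) into a signal with two channels. Then the activation function couples the information from both channels within the diffusivity g. For each pixel position i, j, we have

\[ \varvec{\varPhi}\left( \varvec{K} \varvec{u}^k \right)_{i,j} = g\left( \left( \varvec{K}_1 \varvec{u}^k \right)_{i,j}^2 + \left( \varvec{K}_2 \varvec{u}^k \right)_{i,j}^2 \right) \begin{pmatrix} \left( \varvec{K}_1 \varvec{u}^k \right)_{i,j} \\ \left( \varvec{K}_2 \varvec{u}^k \right)_{i,j} \end{pmatrix}. \tag{19} \]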

Afterwards, the application of \(\varvec{K}^\top \) reduces the resulting two-channel signal to a single channel again.
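The effect of the coupling is visible at the level of a single pixel. If \(\varvec{K}\) is a gradient-type operator, rotating the image rotates the two channels \((\varvec{K}_1\varvec{u})_{i,j}, (\varvec{K}_2\varvec{u})_{i,j}\) like a vector. A coupled activation \(\varvec{\varPhi}(\varvec{s}) = g(|\varvec{s}|^2)\,\varvec{s}\) commutes with this rotation. Applying the same scalar nonlinearity to each channel separately, as in a standard CNN, does not. The diffusivity and the test values in this NumPy sketch are assumptions for illustration.

```python
import numpy as np


def g(s2, lam=1.0):
    """Assumed diffusivity g(s^2) = 1 / (1 + s^2 / lam^2)."""
    return 1.0 / (1.0 + s2 / lam**2)


def coupled(s):
    """Coupled activation: rows of s are pixels, columns the two operator channels."""
    return g(np.sum(s**2, axis=1, keepdims=True)) * s


def channelwise(s):
    """Uncoupled activation: the same 1D flux applied to each channel on its own."""
    return g(s**2) * s


def rotation(alpha):
    c, sn = np.cos(alpha), np.sin(alpha)
    return np.array([[c, -sn], [sn, c]])


s = np.array([[2.0, 0.1],
              [0.3, -1.0]])             # two pixels, two derivative channels each
R = rotation(0.6)
s_rot = s @ R.T                         # rotate every pixel's channel vector by R

print(np.allclose(coupled(s_rot), coupled(s) @ R.T))          # True: rotation commutes
print(np.allclose(channelwise(s_rot), channelwise(s) @ R.T))  # False: invariance is lost
```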

In the general case, the underlying differential operator \({\mathcal {D}}\) determines how many channels are coupled. The choice \({\mathcal {D}} = \left( \partial _{xx}, \partial _{xy}, \partial _{yx}, \partial _{yy}\right) ^\top \) of Lysaker et al. [48] induces a coupling of four channels containing second order derivatives. This shows that a central condition for rotation invariance is that the convolution \(\varvec{K}\) implements a rotationally invariant differential operator. We discuss the effects of this condition on the practical filter design in Sect. 5.

Fig. 1 Diffusion block for an explicit diffusion step (17) with activation function \(\tau \varvec{\varPhi }\), time step size \(\tau \), and convolution filters \(\varvec{K}\). The activation function couples the channels of the operator \(\varvec{K}\).

We call a block of the form (17) a diffusion block. It is visualised in Fig. 1 in graph form. Nodes contain the state of the signal, while edges describe the operations to move from one state to another. We denote the channel coupling by a shaded connection to the activation function.

The coupling effect is natural in the diffusion case. However, to the best of our knowledge, this concept has not been proposed for CNNs in the context of rotation invariance.

4.3 Diffusion on multi-channel images

So far, the presented models have been isotropic. They only consider the magnitude of local image structures, but not their direction. However, we will see that anisotropic models inspire another form of activation function which combines directional filtering with rotation invariance.

To this end, we move to diffusion on multi-channel images. While there are anisotropic models for single-channel images [75], they require a presmoothing as shown in the EED model (2). However, such models do not have a conventional energy formulation [81]. The multi-channel setting allows one to design anisotropic models that do not require a presmoothing and arise from a variational energy.

In the following, we consider multi-channel images \(\varvec{u} = \left( u_1, u_2, \dots , u_M\right) ^\top \) with M channels. To distinguish them from the previously considered channels of the differential operator, we refer to image channels and operator channels in the following.

A naive extension of the Perona–Malik model (7) to multi-channel images would treat each image channel separately. Consequently, the energy would consider a regularisation of the trace of the structure tensor for each individual channel. This in turn does not respect the fact that structural information is correlated in the channels.

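To incorporate this correlation, Gerig et al. [29] proposed to sum up structural information from all channels. An energy functional for this model reads

\[ E(\varvec{u}) = \frac{1}{2} \int_{\varOmega} \varPsi\left( \operatorname{tr}\left( \sum_{m=1}^{M} \varvec{\nabla} u_m \varvec{\nabla} u_m^\top \right) \right) \mathrm{d}\varvec{x}. \tag{20} \]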

Here, we again use the trace formulation. It shows that this model makes use of a colour structure tensor, which goes back to Di Zenzo [18]. It is the sum of the structure tensors of the individual channels. In contrast with the single-channel structure tensor without Gaussian regularisation, its eigenvalues and eigenvectors can no longer be read off directly from a single image gradient. Still, the sum of structure tensors stays rotationally invariant, since each summand rotates along with the image.

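The corresponding diffusion process is described by the coupled PDE set

\[ \partial_t u_m = \varvec{\nabla}^\top \left( g\left( \sum_{n=1}^{M} |\varvec{\nabla} u_n|^2 \right) \varvec{\nabla} u_m \right), \quad m = 1, \dots, M, \tag{21} \]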

with reflecting boundary conditions. As trace and summation are interchangeable, the argument of the regulariser corresponds to a sum of channel-wise squared gradient magnitudes. Thus, the diffusivity considers information from all channels. It steers the diffusion process in all channels depending on a joint structure measure.

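Interestingly, a simple change in the energy model (20) incorporates directional information [76] such that the model becomes anisotropic. Switching the trace operator and the regulariser yields the energy

\[ E(\varvec{u}) = \frac{1}{2} \int_{\varOmega} \operatorname{tr}\, \varPsi\left( \sum_{m=1}^{M} \varvec{\nabla} u_m \varvec{\nabla} u_m^\top \right) \mathrm{d}\varvec{x}. \tag{22} \]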

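Now the regulariser acts on the colour structure tensor in the sense of a power series. Thus, the regulariser modifies the eigenvalues \(\nu _1, \nu _2\) to \(\varPsi \left( \nu _1\right) , \varPsi \left( \nu _2\right) \) and leaves the eigenvectors unchanged. For the \(2\times 2\) colour structure tensor with orthonormal eigenvectors \(\varvec{v}_1, \varvec{v}_2\), we have

\[ \varPsi\left( \sum_{m=1}^{M} \varvec{\nabla} u_m \varvec{\nabla} u_m^\top \right) = \varPsi(\nu_1)\, \varvec{v}_1 \varvec{v}_1^\top + \varPsi(\nu_2)\, \varvec{v}_2 \varvec{v}_2^\top. \tag{23} \]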

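The eigenvalues are treated individually. This allows for an anisotropic model, as each eigenvalue determines the local image contrast along its corresponding eigenvector. Still, the model is rotationally invariant, as the colour structure tensor rotates accordingly. Since the trace of a symmetric matrix is the sum of its eigenvalues, the trace of this regulariser equals the sum of the regularised eigenvalues:

\[ \operatorname{tr}\, \varPsi\left( \sum_{m=1}^{M} \varvec{\nabla} u_m \varvec{\nabla} u_m^\top \right) = \varPsi(\nu_1) + \varPsi(\nu_2). \tag{24} \]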

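This illustrates the crucial difference to the isotropic case, where we have

\[ \varPsi\left( \operatorname{tr} \sum_{m=1}^{M} \varvec{\nabla} u_m \varvec{\nabla} u_m^\top \right) = \varPsi(\nu_1 + \nu_2). \tag{25} \]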

Both eigenvalues of the structure tensor are regularised jointly and the result is a scalar, which shows that no directional information can be involved. At this point, the motivation for using the structure tensor notation in the previous models becomes apparent: Switching the trace operator and the regulariser changes an isotropic model into an anisotropic one.

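The gradient descent of the energy (22) is an anisotropic nonlinear diffusion model for multi-channel images [76]:

\[ \partial_t u_m = \varvec{\nabla}^\top \left( g\left( \sum_{n=1}^{M} \varvec{\nabla} u_n \varvec{\nabla} u_n^\top \right) \varvec{\nabla} u_m \right), \quad m = 1, \dots, M. \tag{26} \]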

The diffusivity inherits the matrix-valued argument of the regulariser. Thus, it is applied in the same way and yields a \(2\times 2\) diffusion tensor. In contrast to single-channel diffusion, this creates anisotropy, as its eigenvectors do not necessarily coincide with the directions of the channel gradients \(\varvec{\nabla }u_m\). Hence, the multi-channel case does not require Gaussian presmoothing.
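The difference between the two orderings of trace and regulariser, and the resulting anisotropy, can be illustrated at one pixel of a three-channel image. This NumPy sketch assumes the concave regulariser \(\varPsi(s) = \lambda^2\log(1 + s/\lambda^2)\), applied to eigenvalues of the colour structure tensor, and its derivative \(g(s) = 1/(1+s/\lambda^2)\). A matrix function is evaluated by applying the scalar function to the eigenvalues while keeping the eigenvectors. The channel gradients are arbitrary example values.

```python
import numpy as np

LAM = 1.0  # assumed contrast parameter


def psi(s):
    """Assumed concave regulariser Psi(s) = lam^2 log(1 + s / lam^2), Psi(0) = 0."""
    return LAM**2 * np.log1p(s / LAM**2)


def g(s):
    """Its derivative g = Psi'."""
    return 1.0 / (1.0 + s / LAM**2)


def colour_structure_tensor(grads):
    """Sum over image channels of grad_m grad_m^T; grads has shape (M, 2)."""
    return np.einsum('mi,mj->ij', grads, grads)


def matrix_function(J, f):
    """Apply f to the eigenvalues of the symmetric matrix J, keep its eigenvectors."""
    nu, V = np.linalg.eigh(J)
    return (V * f(nu)) @ V.T


grads = np.array([[1.0, 0.2],
                  [0.1, -0.8],
                  [0.5, 0.5]])           # gradients of M = 3 channels at one pixel
J = colour_structure_tensor(grads)
nu = np.linalg.eigvalsh(J)

aniso = np.trace(matrix_function(J, psi))   # tr Psi(J) = Psi(nu1) + Psi(nu2)
iso = psi(np.trace(J))                      # Psi(tr J) = Psi(nu1 + nu2)
print(aniso, psi(nu).sum(), iso)

# Diffusion tensor g(J) applied to the first channel's gradient: the flux is in
# general not parallel to that gradient, which is the source of anisotropy.
flux = matrix_function(J, g) @ grads[0]
print(flux[0] * grads[0, 1] - flux[1] * grads[0, 0])   # nonzero 2D cross product
```

Since this \(\varPsi\) is strictly concave with \(\varPsi(0) = 0\), the anisotropic value exceeds the isotropic one whenever both eigenvalues are positive.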

We have seen that the coupling effect within the diffusivity goes beyond the channels of the differential operator. It combines both the operator channels as well as the image channels within a joint measure. Whether the model is isotropic or anisotropic is determined by the shape of the diffusivity result: Isotropic models use scalar diffusivities, while anisotropic models require matrix-valued diffusion tensors. In the following, we generalise this concept and analyse its influence on the ResNet architecture.

4.4 Coupled activations for image channels

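A generalised formulation of the multi-channel diffusion models (21) and (26) is given by

\[ \partial_t u_m = -{\mathcal{D}}^* \, \varvec{\varPhi}\left( \varvec{u}, {\mathcal{D}} u_m \right), \quad m = 1, \dots, M. \tag{27} \]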

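As the flux function uses more information than only \(\mathcal {D} u_m\), we switch to the notation \(\varvec{\varPhi }\left( \varvec{u}, \mathcal {D} u_m\right) \). An explicit scheme for this model is derived in a similar way as before, yielding

\[ \varvec{u}_m^{k+1} = \varvec{u}_m^k - \tau \, \varvec{K}^\top \varvec{\varPhi}\left( \varvec{u}^k, \varvec{K} \varvec{u}_m^k \right), \quad m = 1, \dots, M. \tag{28} \]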

The activation function now couples more than just the operator channels: It couples all its input channels. In contrast to Design Principle 1, this coupling is more general and provides a second design principle.

Design Principle 2

(Fully Coupled Activations for Image Channels) Activations which couple both operator channels and image channels can be used to create anisotropic, rotationally invariant models. At each position of the image, all operator channels for all image channels are combined within a rotationally invariant quantity which determines the nonlinear response.

Different coupling effects serve different purposes: Coupling the image channels accounts for structural correlations and can be used to create anisotropy. Coupling the channels of the differential operators guarantees rotation invariance.

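This design principle becomes apparent when explicitly formulating the activation function. Isotropic models use a scalar diffusivity within the flux function

\[ \varvec{\varPhi}\left( \varvec{u}, \varvec{K} \varvec{u}_m \right)_{i,j} = g\left( \sum_{n=1}^{M} \left| \left( \varvec{K} \varvec{u}_n \right)_{i,j} \right|^2 \right) \left( \varvec{K} \varvec{u}_m \right)_{i,j}, \tag{29} \]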

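which couples all channels of \(\varvec{u}\) at the position i, j, as well as all components of the discrete operator \(\varvec{K}\). Anisotropic models require a matrix-valued diffusion tensor in the flux function

\[ \varvec{\varPhi}\left( \varvec{u}, \varvec{K} \varvec{u}_m \right)_{i,j} = g\left( \sum_{n=1}^{M} \left( \varvec{K} \varvec{u}_n \right)_{i,j} \left( \varvec{K} \varvec{u}_n \right)_{i,j}^\top \right) \left( \varvec{K} \varvec{u}_m \right)_{i,j}. \tag{30} \]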

This concept is visualised in Fig. 2 in the form of a fully coupled multi-channel diffusion block. To clarify the distinction between image and operator channels, we explicitly split the image into its channels. We see that all information of the inner filter passes through a single activation function and influences all outgoing results in the same manner.
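Both the isotropic and the anisotropic fully coupled flux can be sketched for a whole image at once. In the NumPy sketch below, the array `S` is assumed to hold the output of a two-component operator \(\varvec{K}\), for instance derivatives in x and y, for all M image channels, with shape (M, 2, height, width). The diffusivity is again an assumed Perona–Malik-type choice. The checks confirm that rotating the operator channel vectors at every pixel rotates the fluxes. They also confirm that for M = 1 the anisotropic flux reduces to the isotropic one, in line with the remark that single-channel models need presmoothing to become anisotropic.

```python
import numpy as np

LAM = 1.0  # assumed contrast parameter


def g(s):
    """Assumed diffusivity g(s) = 1 / (1 + s / lam^2), applied elementwise."""
    return 1.0 / (1.0 + s / LAM**2)


def isotropic_flux(S):
    """Scalar diffusivity per pixel coupling all image and operator channels.
    S has shape (M, 2, H, W)."""
    w = g(np.sum(S**2, axis=(0, 1)))             # (H, W)
    return w[None, None] * S


def anisotropic_flux(S):
    """Per pixel: diffusion tensor g(J), J = sum_n s_n s_n^T, applied to every s_m."""
    J = np.einsum('mihw,mjhw->hwij', S, S)       # (H, W, 2, 2) colour structure tensor
    nu, V = np.linalg.eigh(J)                    # batched eigendecomposition
    G = np.einsum('hwik,hwk,hwjk->hwij', V, g(nu), V)
    return np.einsum('hwij,mjhw->mihw', G, S)


def rotate_channels(S, alpha):
    """Rotate the 2-vector of operator channels at every pixel and image channel."""
    c, s = np.cos(alpha), np.sin(alpha)
    R = np.array([[c, -s], [s, c]])
    return np.einsum('ij,mjhw->mihw', R, S)


S = np.random.default_rng(3).normal(size=(3, 2, 4, 5))   # M = 3 image channels
for flux in (isotropic_flux, anisotropic_flux):
    same = np.allclose(flux(rotate_channels(S, 0.9)), rotate_channels(flux(S), 0.9))
    print(flux.__name__, same)
```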

Fig. 2 Fully coupled multi-channel diffusion block for an explicit step (28) with a fully coupled activation function \(\tau \varvec{\varPhi }\), time step size \(\tau \), and convolution filters \(\varvec{K}\). The activation function couples all operator and image channels of its input jointly. Depending on the design of the activation, the resulting model can be isotropic or anisotropic.

Design Principle 2 shows that coupling can be used not only for rotationally invariant design, but also for implementing modelling aspects such as anisotropy. This is desirable, as anisotropic models often exhibit higher performance through better adaptivity to data.

4.5 Integrodifferential diffusion

The previous models act on the finest scale of the image. However, image structures live on different scales: Large structures appear on coarser scales than fine ones. A structural measure which incorporates information from multiple scales can therefore be beneficial.

To this end, we consider integrodifferential extensions of single-scale diffusion, which have proven advantageous in practical applications such as denoising [3]. In analogy to the multi-channel diffusion setting, these models motivate a full coupling of scale information in a variant of residual networks.

We start with the energy functional

We denote the scale parameter by \(\sigma \) and assume that the differential operators \({\mathcal {D}}^{(\sigma )}\) depend on the scale. This can be realised, for example, by an adaptive presmoothing of an underlying differential operator; see, e.g., [3, 19].

Instead of summing structure tensors over image channels, this model integrates generalised structure tensors \(\left( {\mathcal {D}}^{(\sigma )} u\right) \left( {\mathcal {D}}^{(\sigma )} u\right) ^\top \) over multiple scales. This results in a multiscale structure tensor [3] which contains a semi-local measure for image structure. If \({\mathcal {D}}^{(\sigma )}\) are rotationally invariant operators, then the multiscale structure tensor is also invariant.
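A discrete version of the multiscale structure tensor can be sketched as follows. This NumPy sketch assumes periodic boundaries, forward differences for the gradient, and plain (non-adaptive) Gaussian presmoothing \({\mathcal {D}}^{(\sigma )} u = G_\sigma * \varvec{\nabla } u\) computed via the FFT; the scale integral is replaced by a weighted sum with step sizes \(\omega _\ell \). The function names are hypothetical.

```python
import numpy as np


def gauss_smooth(f, sigma):
    """Periodic Gaussian convolution with standard deviation sigma, via the FFT."""
    ky = np.fft.fftfreq(f.shape[0])[:, None]
    kx = np.fft.fftfreq(f.shape[1])[None, :]
    transfer = np.exp(-2.0 * np.pi**2 * sigma**2 * (kx**2 + ky**2))
    return np.real(np.fft.ifft2(np.fft.fft2(f) * transfer))


def grad(u):
    """Forward differences with periodic boundaries; returns shape (2, H, W)."""
    return np.stack([np.roll(u, -1, axis=1) - u, np.roll(u, -1, axis=0) - u])


def multiscale_structure_tensor(u, sigmas, omegas):
    """Discrete sum_l omega_l (D_l u)(D_l u)^T with D_l u = G_{sigma_l} * grad u.

    Returns an array of shape (H, W, 2, 2): one symmetric 2x2 matrix per pixel.
    """
    J = np.zeros(u.shape + (2, 2))
    for sigma, omega in zip(sigmas, omegas):
        d = np.stack([gauss_smooth(c, sigma) for c in grad(u)])   # (2, H, W)
        J += omega * np.einsum('ihw,jhw->hwij', d, d)
    return J
```

Each summand is a positive semidefinite outer product, so the result is symmetric and positive semidefinite for non-negative weights. Since Gaussian presmoothing is rotationally invariant, a rotation of the image acts on \(J\) as \(RJR^\top\) in the continuous setting, leaving its eigenvalues unchanged; on a pixel grid this holds only approximately.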

The corresponding diffusion model reads

where \(g = \varPsi ^\prime \). Due to the chain rule, one obtains two integrations over the scales: The outer integration combines diffusion processes on each scale. The inner integration, where the scale variable has been renamed to \(\gamma \), accumulates multiscale information within the diffusivity argument.

This model is a variant of the integrodifferential isotropic diffusion model of Alt and Weickert [3]. Therein, however, the diffusivity uses a scale-adaptive contrast parameter. Thus, it does not arise from an energy functional.

As in the multi-channel diffusion models, interchanging the trace and the regulariser yields an anisotropic model, which is described by the energy

In analogy to the multi-channel model, the regulariser is applied directly to the structure tensor, which creates anisotropy. Consequently, the resulting diffusion process is a variant of the integrodifferential anisotropic diffusion [3]:

The anisotropic regularisation is inherited by the diffusivity and results in a flux function that implements a matrix-vector multiplication.

4.6 Coupled activations for image scales

Both the isotropic and the anisotropic multiscale models can be summarised by the flux formulation

To discretise this model, we now require a discretisation of the scale integral. To this end, we select a set of L discrete scales \(\sigma _1, \sigma _2, \dots , \sigma _L\). On each scale \(\sigma _\ell \), we employ discrete differential operators \(\varvec{K}_\ell \). This yields an explicit scheme for the continuous model (35) which reads

Here, \(\omega _\ell \) is a step size over the scales which discretises the infinitesimal quantity \(\mathrm{d}\sigma \). It depends on \(\ell \) to allow a non-uniform sampling of the scales \(\sigma _\ell \). A simple choice is \(\omega _\ell = \sigma _{\ell +1} - \sigma _{\ell }\).
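With the choice \(\omega _\ell = \sigma _{\ell +1} - \sigma _{\ell }\), the discrete sum over scales is a left Riemann sum of the scale integral. The following minimal sketch illustrates this on a non-uniform, exponentially spaced grid; the helper names and the test integrand \(e^{-\sigma }\) are hypothetical choices for illustration only.

```python
import numpy as np


def scale_weights(grid):
    """Step sizes omega_l = sigma_{l+1} - sigma_l on a possibly non-uniform grid.

    `grid` holds L + 1 increasing values; the L weights belong to the scales
    sigma_1, ..., sigma_L = grid[:-1].
    """
    return np.diff(np.asarray(grid, dtype=float))


def scale_sum(f, grid):
    """Left Riemann sum sum_l omega_l f(sigma_l) approximating the scale integral."""
    grid = np.asarray(grid, dtype=float)
    return float(np.sum(scale_weights(grid) * f(grid[:-1])))


def decay(sigma):
    # Hypothetical smooth integrand on the scale axis.
    return np.exp(-sigma)


exact = np.exp(-0.1) - np.exp(-10.0)          # integral of exp(-sigma) over [0.1, 10]
err_coarse = abs(scale_sum(decay, np.geomspace(0.1, 10.0, 9)) - exact)
err_fine = abs(scale_sum(decay, np.geomspace(0.1, 10.0, 1025)) - exact)
```

Since the weights telescope, they always sum to the length of the sampled scale range, and the quadrature error shrinks as the scale sampling is refined.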

Interestingly, an extension of residual networks called ResNeXt [84] provides the neural architecture that corresponds to this model. Therein, the authors consider a sum of transformations of the input signal together with a skip connection. We restrict ourselves to the following formulation:

This ResNeXt block modifies the input image \(\varvec{f}\) within L independent paths and sums up the results before the skip connection. Each path may apply multiple, differently shaped convolutions. Choosing a single path with \(L=1\) yields the ResNet model.

We can identify an explicit multiscale diffusion step (36) with a ResNeXt block (37) by

and all bias vectors \(\varvec{b}_{1,\ell }, \varvec{b}_{2,\ell }\) are set to \(\varvec{0}\), for all \(\ell =1,\dots ,L\).

In contrast with the previous ResNet relation (18), we apply different filters \(\varvec{K}_\ell \) in each path. Their individual results are summed up before the skip connection, which approximates the scale integration. While the ResNeXt block allows for individual activation functions in each path, we use a common activation with a full coupling for all of them. This constitutes a variant of Design Principle 2, where one now couples image scales.
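To make the identification with a ResNeXt block concrete, here is a minimal NumPy sketch of one explicit step of the isotropic multiscale model. It is an illustration under stated assumptions, not the authors' code: periodic boundaries, forward differences for the gradient, FFT-based Gaussian presmoothing, path operators \(\varvec{K}_\ell = \beta _\ell \, G_{\sigma _\ell } * \varvec{\nabla }\) as in the experiments of Sect. 6, the diffusivity \(\exp(-s^2/(2\lambda^2))\), and zero biases. All function names are hypothetical. Each of the L paths applies \(\varvec{K}_\ell \) and later \(\varvec{K}_\ell ^\top \), while a single activation shared by all paths couples every scale and both derivative channels.

```python
import numpy as np


def gauss_smooth(f, sigma):
    """Periodic Gaussian convolution with standard deviation sigma, via the FFT."""
    ky = np.fft.fftfreq(f.shape[0])[:, None]
    kx = np.fft.fftfreq(f.shape[1])[None, :]
    transfer = np.exp(-2.0 * np.pi**2 * sigma**2 * (kx**2 + ky**2))
    return np.real(np.fft.ifft2(np.fft.fft2(f) * transfer))


def grad(u):
    """Forward differences, periodic boundaries; shape (2, H, W)."""
    return np.stack([np.roll(u, -1, axis=1) - u, np.roll(u, -1, axis=0) - u])


def grad_adjoint(p):
    """Adjoint of grad, i.e. a negative backward-difference divergence."""
    return (np.roll(p[0], 1, axis=1) - p[0]) + (np.roll(p[1], 1, axis=0) - p[1])


def K(u, sigma, beta):
    """Path operator K_l u = beta_l * G_sigma * grad u."""
    return beta * np.stack([gauss_smooth(c, sigma) for c in grad(u)])


def K_adjoint(p, sigma, beta):
    """K_l^T p = beta_l * grad^T (G_sigma * p); the periodic Gaussian is self-adjoint."""
    return beta * grad_adjoint(np.stack([gauss_smooth(c, sigma) for c in p]))


def multiscale_block(u, sigmas, omegas, betas, tau, lam):
    """One explicit isotropic multiscale diffusion step as a ResNeXt-style block."""
    paths = [K(u, s, b) for s, b in zip(sigmas, betas)]      # L parallel paths
    # Inner scale integral: ONE scalar per pixel couples all scales and both
    # derivative channels; this is the shared, fully coupled activation.
    s2 = sum(w * np.sum(k**2, axis=0) for w, k in zip(omegas, paths))
    diffusivity = np.exp(-s2 / (2.0 * lam**2))                # assumed g
    # Outer scale integral: sum the L path outputs, then the skip connection.
    update = sum(w * K_adjoint(diffusivity * k, s, b)
                 for w, k, s, b in zip(omegas, paths, sigmas, betas))
    return u - tau * update
```

Because every path ends with the adjoint of a difference operator, the block preserves the average grey value under periodic boundaries. Repeating the block with shared parameters mimics the explicit steps with shared parameter sets used in the experiments.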

Design Principle 3

(Fully Coupled Activations for Image Scales) Activations which couple both operator channels and image scales can be used to create anisotropic, rotationally invariant multiscale models. At each position of the image, all operator channels for all image scales are combined within a rotationally invariant quantity which determines the nonlinear response.

Fig. 3 Fully coupled multiscale diffusion block for an explicit multiscale diffusion step (36) with a single activation function \(\tau \omega _\ell \varvec{\varPhi }\), time step size \(\tau \), and convolution filters \(\varvec{K}_\ell \) on each scale. The activation function couples all inputs jointly. Depending on the design of the activation, the resulting model can be isotropic or anisotropic.

Also in this case, the combined coupling serves different purposes: Coupling the operator channels yields rotation invariance, and coupling the scales yields a more global representation of the image structure. Isotropic models employ a coupling with a scalar diffusivity in the flux function

and a matrix-valued diffusion tensor in the flux function

can be used to create anisotropic models.

We call a block of the form (36) a fully coupled multiscale diffusion block. This block is visualised in Fig. 3. Comparing it to the multi-channel diffusion block in Fig. 2, one can see that both architectures use the same activation function design, albeit with different motivations.

5 Discussion

We have seen that shifting the design focus from convolutions to activation functions can yield new insights into CNN design. We summarise all models that we have considered in Table 1 as a convenient overview.

All variational models that we have considered are rotationally invariant, as they rely on a structural measure which accounts for rotations. This property directly transfers to the diffusion model, its explicit scheme, and thus also its network counterpart, resulting in Design Principle 1. Moreover, the different coupling options for models with multiple scales and multiple channels show how a sophisticated activation design can steer the model capacity. This has led to the additional Design Principles 2 and 3.

The coupling effects are naturally motivated for diffusion, but are hitherto unexplored in the CNN world. While activation functions such as maxout [30] and softmax introduce a coupling of their input arguments, they only serve the purpose of reducing channel information. Even though some works focus on using trainable and more advanced activations [13, 25, 54], the coupling aspect has not been considered so far.

The rotation invariance of the proposed architectures can be approximated efficiently in the discrete setting. For second-order models, for example, Weickert et al. [77] present \(L^2\)-stable discretisations with good practical rotation invariance that only require a \(3\times 3\) stencil. This is the smallest possible stencil which still yields a consistent discretisation of a second-order model.

In a practical setting with trainable filters, one is not restricted to the differential operators that we have encountered so far. To guarantee that a learned filter corresponds to a rotationally invariant differential operator, one has several options. For example, one can build the filters from a dictionary of operators which fulfil the rotation invariance property and combine them into more complex operators through trainable weights. Similarly, one can employ different versions of a base operator which arise from a rotationally invariant operation such as Gaussian smoothing. We pursue this strategy in our experiments in the following section, in analogy to [3].

Apart from the coupling aspect, the underlying network architecture is not modified. This stands in stark contrast to the CNN literature, where a set of orientations is discretised, which requires much larger stencils for a good approximation of rotation invariance. We require neither involved discretisations nor a complicated lifting to groups. Thus, we regard the proposed activation function design as a promising alternative to the directional splitting idea.

6 Experiments

In the following, we present an experimental evaluation to support our theoretical considerations. To this end, we design trainable multiscale diffusion models for denoising. We compare models with and without coupling activations and evaluate their performance on differently rotated datasets. This shows that Design Principle 1 is indeed necessary for rotation invariance.

6.1 Experimental setup

We train the isotropic and anisotropic multiscale diffusion models (32) and (34). Both perform a full coupling of all scales, i.e. they implement Design Principles 1 and 3. As a counterpart, we train the same multiscale diffusion model with the diffusivity applied to each channel of the discrete derivative operator separately. Thus, the activation is applied independently in each direction, which violates Design Principle 1. Hence, this model should yield worse rotation invariance than the coupled models.
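The difference between the coupled and the uncoupled activation can already be seen for a single gradient vector. In this minimal sketch (hypothetical helper names; the diffusivity \(\exp(-s^2/(2\lambda^2))\) is an assumption), the coupled activation evaluates one diffusivity of the joint magnitude, whereas the uncoupled one evaluates a diffusivity per derivative channel. We rotate the input by \(45^\circ \) and compare against the rotated output.

```python
import numpy as np


def g(s2, lam=1.0):
    # Assumed exponential diffusivity g(s^2) = exp(-s^2 / (2 lam^2)).
    return np.exp(-s2 / (2.0 * lam**2))


def coupled(v, lam=1.0):
    """One diffusivity of the joint magnitude |v|^2 scales the whole vector."""
    return g(np.sum(v**2), lam) * v


def uncoupled(v, lam=1.0):
    """Diffusivity applied to each derivative channel separately."""
    return g(v**2, lam) * v


def rotation(theta):
    return np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta), np.cos(theta)]])


v = np.array([1.5, 0.0])                 # a gradient pointing along the x-axis
R = rotation(np.pi / 4)                  # rotate the input by 45 degrees
err_coupled = np.linalg.norm(coupled(R @ v) - R @ coupled(v))
err_uncoupled = np.linalg.norm(uncoupled(R @ v) - R @ uncoupled(v))
```

`err_coupled` vanishes up to rounding, whereas `err_uncoupled` does not: after the rotation, the energy of the gradient is split between both channels, so each channel sees a smaller argument and receives a larger diffusivity.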

Still, all models implement Design Principle 3 by integrating multiscale information. For an evaluation of the importance of this design principle we refer to [3], where multiscale models outperform their single scale counterparts.

The corresponding explicit scheme for the considered models is given by

We set \(\omega _\ell = \sigma _{\ell +1}-\sigma _{\ell }\).

As differential operators \(\varvec{K}_\ell \), we choose weighted, Gaussian-smoothed gradients \(\beta _\ell \varvec{\nabla }_{\sigma _\ell }\) on each scale \(\sigma _\ell \). Applying a smoothed gradient \(\varvec{\nabla }_{\sigma } u = G_{\sigma } *\varvec{\nabla }u\) to an image amounts to convolving the image gradient with a Gaussian of standard deviation \(\sigma \). Moreover, we weight the differential operators on each scale by a scale-adaptive, trainable parameter \(\beta _\ell \).

A discrete set of \(L=8\) scales is determined by an exponential sampling between a minimum scale of \(\sigma _{\text {min}}=0.1\) and a maximum one of \(\sigma _{\text {max}}=10\). This yields the discrete scales [0.1, 0.18, 0.32, 0.56, 1.0, 1.78, 3.16, 5.62].
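The listed scales are consistent with nine geometrically spaced values from 0.1 to 10, of which the first eight serve as scales. The sketch below reproduces them up to rounding; the assumption (not stated explicitly in the text) is that the ninth value \(\sigma _9 = \sigma _{\text {max}} = 10\) only supplies the step size \(\omega _8 = \sigma _9 - \sigma _8\).

```python
import numpy as np

L = 8
# L + 1 geometrically spaced values from sigma_min = 0.1 to sigma_max = 10.
grid = np.geomspace(0.1, 10.0, L + 1)
sigmas = grid[:-1]            # the L scales sigma_1, ..., sigma_8
omegas = np.diff(grid)        # omega_l = sigma_{l+1} - sigma_l (assumes sigma_9 = 10)
print(np.round(sigmas, 2))
```

Consecutive scales differ by the constant factor \(10^{1/4} \approx 1.78\), so the step sizes \(\omega _\ell \) grow with the scale, and they telescope to \(\sigma _{\text {max}} - \sigma _{\text {min}} = 9.9\).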

To perform edge-preserving denoising, we choose the exponential Perona–Malik [56] diffusivity

It attenuates the diffusion at locations where the argument exceeds a contrast parameter \(\lambda \). This parameter is trained in addition to the scale-adaptive weights.
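The role of the contrast parameter can be illustrated with the scalar flux \(\varPhi (s) = g(s^2)\,s\). The exact formula of the diffusivity is not reproduced here, so this sketch assumes the common exponential form \(g(s^2) = \exp(-s^2/(2\lambda^2))\); the value of \(\lambda \) and the helper names are hypothetical.

```python
import numpy as np


def g_exp(s2, lam):
    """Exponential Perona-Malik-type diffusivity, assumed as exp(-s^2 / (2 lam^2))."""
    return np.exp(-s2 / (2.0 * lam**2))


def flux(s, lam):
    """Scalar flux Phi(s) = g(s^2) * s for a gradient magnitude s >= 0."""
    return g_exp(s**2, lam) * s


lam = 5.0                                   # hypothetical contrast parameter
s = np.linspace(0.0, 50.0, 50001)           # gradient magnitudes, step 0.001
s_peak = s[np.argmax(flux(s, lam))]         # location of the maximal flux
```

For this form, \(\varPhi ^\prime (s) = g(s^2)\,(1 - s^2/\lambda ^2)\), so the flux increases for \(s < \lambda \) and decreases beyond it: low-contrast structures such as noise are smoothed, while the flux across edges with contrast above \(\lambda \) is attenuated.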

Moreover, we train the time step size \(\tau \), and we use 10 explicit steps with shared parameter sets. This amounts to a total of 10 trainable parameters: \(\tau \), \(\lambda \), and \(\beta _1\) to \(\beta _8\).

In the practical setting, a discretisation with good rotation invariance is crucial. We use the nonstandard finite difference discretisation of Weickert et al. [77]. It implements the discrete divergence term

on a stencil of size \(3\times 3\). For isotropic models, it has a free parameter \(\alpha \in \left[ 0, \frac{1}{2}\right] \) which can be tuned for rotation invariance, with an additional parameter \(\gamma \in \left[ 0, 1\right] \) for anisotropic ones. We found that in the denoising case, the particular choice of these two parameters constitutes a trade-off between performance and rotation invariance.

We train all models on a synthetic dataset which consists of greyscale images of size \(256\times 256\) with values in the range \(\left[ 0, 255\right] \). Each image contains 20 randomly placed white rectangles of size \(140\times 70\) on a black background. The rectangles are all oriented along a common direction, which creates a directional bias within the dataset. The training set contains 100 images in which the rectangles are oriented at an angle of \(30^\circ \) to the x-axis. As test datasets, we consider rotated versions of a similar set of 50 images, with rotation angles sampled between \(0^\circ \) and \(90^\circ \) in steps of \(5^\circ \). To avoid an influence of the image sampling on the evaluation, we exclude the axis-aligned datasets.
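A dataset of this kind could be generated as sketched below. This is a hypothetical generator, not the authors' script: the text does not fix how centres are drawn, whether rectangles may overlap or be clipped, which side is aligned with the orientation, or how pixels are rasterised, so these choices are assumptions stated in the docstring.

```python
import numpy as np


def rectangle_image(rng, angle_deg, size=256, n_rect=20, length=140, width=70):
    """Hypothetical generator: n_rect white rectangles on black, all sharing angle_deg.

    Assumptions not fixed by the text: centres are uniform over the image,
    rectangles may overlap or be clipped at the border, the long side points
    along angle_deg (measured from the x-axis in array coordinates, where rows
    grow downwards), and a pixel is white if its centre lies inside a rectangle.
    """
    img = np.zeros((size, size))
    y, x = np.mgrid[0:size, 0:size] + 0.5          # pixel centres
    c, s = np.cos(np.deg2rad(angle_deg)), np.sin(np.deg2rad(angle_deg))
    for cx, cy in rng.uniform(0, size, size=(n_rect, 2)):
        a = (x - cx) * c + (y - cy) * s            # coordinate along the long side
        b = -(x - cx) * s + (y - cy) * c           # coordinate along the short side
        img[(np.abs(a) <= length / 2) & (np.abs(b) <= width / 2)] = 255.0
    return img


rng = np.random.default_rng(0)
example = rectangle_image(rng, 30.0)               # one training-style image
# Test angles 0, 5, ..., 90 degrees without the axis-aligned 0 and 90: 17 sets.
test_angles = [a for a in range(0, 91, 5) if a not in (0, 90)]
```

Calling `rectangle_image` 100 times with \(30^\circ \) and 50 times per test angle would give sets of the sizes described above.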

To train the models for the denoising task, we add noise of standard deviation 60 to the clean training images and minimise the Euclidean distance to the ground truth images. We measure the denoising quality in terms of peak signal-to-noise ratio (PSNR). All models are trained for 250 epochs with the Adam optimiser [37] with standard settings and a learning rate of 0.001. One training epoch requires 50 seconds on an NVIDIA GeForce GTX 1060 6GB, and the evaluation on one of the test sets requires 7 seconds.
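The degradation and the quality measure can be written down in a few lines. In this sketch, the noise is assumed to be additive Gaussian without clipping to \(\left[ 0, 255\right] \), and the loss is taken as the squared Euclidean distance, which has the same minimiser as the distance itself; the helper names are hypothetical.

```python
import numpy as np


def add_noise(rng, f, sigma=60.0):
    """Additive Gaussian noise of standard deviation sigma (assumed: no clipping)."""
    return f + rng.normal(0.0, sigma, size=f.shape)


def psnr(u, ref, peak=255.0):
    """Peak signal-to-noise ratio in dB for images with range [0, peak]."""
    mse = np.mean((u - ref) ** 2)
    return 10.0 * np.log10(peak**2 / mse)


def training_loss(u, ref):
    """Squared Euclidean distance to the ground truth."""
    return np.sum((u - ref) ** 2)
```

Without clipping, the noisy input itself has a PSNR of about \(20 \log _{10}(255/60) \approx 12.6\) dB, which is the baseline that any denoiser has to improve upon.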

6.2 Evaluation

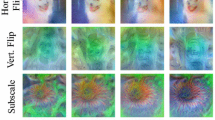

A rotationally invariant model should produce the same PSNR on all rotations of the test dataset. Thus, in Fig. 4 we plot the PSNR on the test datasets against their respective rotation angle.

We see that the fluctuations within both anisotropic and isotropic coupled models are much smaller than those within the uncoupled model. A choice of \(\alpha =0.41\) and \(\gamma =0\) yields a good balance between performance and rotation invariance. However, rotation invariance can also be driven to the extreme: A choice of \(\alpha =0.5\), which renders the choice of \(\gamma \) irrelevant, eliminates rotational fluctuations almost completely, but also drastically reduces the quality. The reason for this is given by Weickert et al. [77]: A value of \(\alpha =0.5\) splits the image grid into two checkerboard subgrids which do not communicate except at the boundaries.
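The checkerboard effect can be reproduced with a much simpler stencil family. The sketch below is NOT the discretisation of Weickert et al. [77]; it is a hypothetical constant-coefficient \(3\times 3\) Laplacian that blends the axial five-point stencil with the diagonal one, with a parameter \(\alpha \) chosen so that \(\alpha =0.5\) leaves only the diagonal stencil. It serves only to show the mechanism: diagonal neighbours share the parity of \(i+j\), so the two checkerboard subgrids no longer exchange information.

```python
import numpy as np


def blended_laplacian(u, alpha):
    """Hypothetical 3x3 Laplacian family (NOT the scheme of [77]).

    (1 - 2 alpha) * axial 5-point stencil + 2 alpha * diagonal 5-point stencil,
    grid size h = 1, periodic boundaries. Diagonal neighbours are sqrt(2)
    apart, hence the factor 1/2. Both stencils approximate the Laplacian.
    """
    def shift(dy, dx):
        return np.roll(u, (dy, dx), axis=(0, 1))

    axial = shift(1, 0) + shift(-1, 0) + shift(0, 1) + shift(0, -1) - 4.0 * u
    diagonal = 0.5 * (shift(1, 1) + shift(1, -1) + shift(-1, 1) + shift(-1, -1) - 4.0 * u)
    return (1.0 - 2.0 * alpha) * axial + 2.0 * alpha * diagonal


n = 8
u = np.zeros((n, n))
u[3, 3] = 1.0                                         # a single "even" pixel (3 + 3)
odd = np.add.outer(np.arange(n), np.arange(n)) % 2 == 1
leak_balanced = np.abs(blended_laplacian(u, 0.41)[odd]).sum()
leak_extreme = np.abs(blended_laplacian(u, 0.5)[odd]).sum()
checkerboard = (-1.0) ** np.add.outer(np.arange(n), np.arange(n))
```

For \(\alpha =0.5\) in this toy family, no information leaks to the other subgrid, and the checkerboard pattern lies in the kernel of the operator, so diffusion cannot remove such oscillations at all.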

For the balanced choice of \(\alpha =0.41\), the anisotropic model consistently outperforms the isotropic one, as it can smooth along oriented structures. Since the uncoupled model can only do this for structures which are aligned with the x- and y-axes, it performs better the closer the rotation angle is to \(0^\circ \) or \(90^\circ \), and worst for a rotation angle of \(45^\circ \). Thus, it does not achieve rotation invariance.

We measure the rotation invariance in terms of the variance of the test errors over the rotation angles. While the isotropic and anisotropic coupled models with \(\alpha =0.41\) achieve variances of 0.035 dB² and 0.014 dB², respectively, the uncoupled model suffers from a variance of 1.25 dB². The extreme choice of \(\alpha =0.5\) even reduces the variances of the coupled models to 0.013 dB² and \(8.7\cdot 10^{-4}\) dB², respectively.
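The invariance measure itself is simple; the sketch below makes the assumptions explicit. The text does not state whether the population or the sample variance is used, so the population variance (`ddof=0`) is an assumption, and the dictionary values are placeholders, not results from this paper.

```python
import numpy as np


def rotation_variance(psnr_by_angle):
    """Variance (in dB^2) of the PSNR values over all test rotation angles.

    Assumption: the population variance (ddof=0) is used; the text does not
    specify the estimator. A perfectly rotationally invariant model scores 0.
    """
    values = np.array(list(psnr_by_angle.values()), dtype=float)
    return float(np.var(values))


# Placeholder PSNR values, NOT results from the paper.
flat = {a: 25.0 for a in range(5, 90, 5)}
print(rotation_variance(flat))   # prints 0.0
```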

A visual inspection of the results in Fig. 5 supports this trend. Therein, we present the denoised results on an example from the test dataset with \(45^\circ \) orientation. The uncoupled model suffers from ragged edges, as the training on the differently rotated dataset has introduced a directional bias. The coupled isotropic model preserves the edges far better, and the coupled anisotropic model can even smooth along them to obtain the best reconstruction quality.

These findings show that the coupling effect leads to significantly better rotation invariance properties.

7 Conclusions

We have seen that the connection between diffusion and neural networks allows us to bring novel concepts for rotation invariance to the world of CNNs. The models which we considered inspire different activation function designs, which we summarise in Table 1.

The central design principle for rotation invariance is a coupling of operator channels. Diffusion models and their associated variational energies apply their respective nonlinear design functions to rotationally invariant quantities based on a coupling of multi-channel differential operators. Thus, the activation function as their neural counterpart should employ this coupling, too. Moreover, additionally coupling image channels or scales allows us to create anisotropic models with better measures for structural information.

This strategy provides an elegant and minimally invasive modification of standard architectures. Thus, coupling activation functions constitute a promising alternative to the popular network designs of splitting orientations and group methods in orientation space. Evaluating these concepts in practice and transferring them to more general neural network models are part of our ongoing work.

References

Adler, J., Öktem, O.: Solving ill-posed inverse problems using iterative deep neural networks. Inverse Probl. 33, Article no. 124007 (2017)

Alt, T., Peter, P., Weickert, J., Schrader, K.: Translating numerical concepts for PDEs into neural architectures. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds.) Scale Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, vol. 12679, pp. 294–306. Springer, Cham (2021)

Alt, T., Weickert, J.: Learning integrodifferential models for denoising. In: Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 2045–2049. IEEE Computer Society Press, Toronto, Canada (2021)

Alt, T., Weickert, J., Peter, P.: Translating diffusion, wavelets, and regularisation into residual networks. arXiv:2002.02753v3 [cs.LG] (2020)

Arridge, S., Maass, P., Öktem, O., Schönlieb, C.: Solving inverse problems using data-driven models. Acta Numer. 28, 1–174 (2019)

Aubert, G., Kornprobst, P.: Mathematical Problems in Image Processing: Partial Differential Equations and the Calculus of Variations, Applied Mathematical Sciences, vol. 147, 2nd edn. Springer, New York (2006)

Bengio, Y., Simard, P., Frasconi, P.: Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 5(2), 157–166 (1994)

Bibi, A., Ghanem, B., Koltun, V., Ranftl, R.: Deep layers as stochastic solvers. In: Proceedings of the 7th International Conference on Learning Representations. New Orleans, LA (2019)

Bodduna, K., Weickert, J., Cárdenas, M.: Multi-frame super-resolution from noisy data. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds.) Scale Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, vol. 12679, pp. 565–576. Springer, Cham (2021)

Bungert, L., Raab, R., Roith, T., Schwinn, L., Tenbrinck, D.: CLIP: Cheap Lipschitz training of neural networks. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds.) Scale Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, vol. 12679, pp. 307–319. Springer, Cham (2021)

Chan, T.F., Shen, J.: Image Processing and Analysis: Variational, PDE, Wavelet, and Stochastic Methods. SIAM, Philadelphia (2005)

Chen, Y., Lyu, Z.X., Kang, X., Wang, Z.J.: A rotation-invariant convolutional neural network for image enhancement forensics. In: Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 2111–2115. IEEE Computer Society Press, Calgary, Canada (2018)

Chen, Y., Pock, T.: Trainable nonlinear reaction diffusion: a flexible framework for fast and effective image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1256–1272 (2016)

Cohen, T., Geiger, M., Koehler, J., Welling, M.: Spherical CNNs. In: Proceedings of the 6th International Conference on Learning Representations. Vancouver, Canada (2018)

Cohen, T., Welling, M.: Group equivariant convolutional networks. In: Balcan, M.F., Weinberger, K.Q. (eds.) Proceedings of the 33rd International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 48, pp. 2990–2999. New York City, NY (2016)

Combettes, P.L., Pesquet, J.: Deep neural network structures solving variational inequalities. Set-Valued Var. Anal. 28(3), 491–518 (2020)

Daubechies, I., DeVore, R., Foucart, S., Hanin, B., Petrova, G.: Nonlinear approximation and (deep) ReLU networks. Constr. Approx. (2021). Online first

Di Zenzo, S.: A note on the gradient of a multi-image. Comput. Vis. Graph. Image Process. 33, 116–125 (1986)

Didas, S., Weickert, J.: Integrodifferential equations for continuous multiscale wavelet shrinkage. Inverse Probl. Imaging 1(1), 47–62 (2007)

Didas, S., Weickert, J., Burgeth, B.: Properties of higher order nonlinear diffusion filtering. J. Math. Imaging Vis. 35, 208–226 (2009)

Dieleman, S., De Fauw, J., Kavukcuoglu, K.: Exploiting cyclic symmetry in convolutional neural networks. In: Proceedings of the 33rd International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 48, pp. 1889–1898. New York, NY (2016)

Duits, R., Smets, B., Bekkers, E., Portegies, J.: Equivariant deep learning via morphological and linear scale space PDEs on the space of positions and orientations. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds.) Scale Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, vol. 12679, pp. 27–39. Springer, Cham (2021)

Fablet, R., Drumetz, L., Rousseau, F.: End-to-end learning of variational models and solvers for the resolution of interpolation problems. In: Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 2360–2364. IEEE Computer Society Press, Toronto, Canada (2021)

Fasel, B., Gatica-Perez, D.: Rotation-invariant neoperceptron. In: Proceedings of the 18th International Conference on Pattern Recognition, vol. 3, pp. 336–339. IEEE Computer Society Press, Hong Kong (2006)

Feng, W., Qiao, P., Xi, X., Chen, Y.: Image denoising via multiscale nonlinear diffusion models. SIAM J. Imaging Sci. 10(3), 1234–1257 (2017)

Förstner, W., Gülch, E.: A fast operator for detection and precise location of distinct points, corners and centres of circular features. In: Proceedings of the ISPRS Intercommission Conference on Fast Processing of Photogrammetric Data, pp. 281–305. Interlaken, Switzerland (1987)

Freeman, W.T., Adelson, E.H.: The design and use of steerable filters. IEEE Trans. Pattern Anal. Mach. Intell. 13(9), 891–906 (1991)

Gens, R., Domingos, P.: Deep symmetry networks. In: Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q. (eds.) Proceedings of the 28th International Conference on Neural Information Processing Systems, Advances in Neural Information Processing Systems, vol. 27, pp. 2537–2545. Montréal, Canada (2014)

Gerig, G., Kübler, O., Kikinis, R., Jolesz, F.A.: Nonlinear anisotropic filtering of MRI data. IEEE Trans. Med. Imaging 11, 221–232 (1992)

Goodfellow, I., Warde-Farley, D., Mirza, M., Courville, A., Bengio, Y.: Maxout networks. In: Dasgupta, S., McAllester, D. (eds.) Proceedings of the 30th International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 28, pp. 1319–1327. Atlanta, GA (2013)

Goodfellow, I.J., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge (2016)

Gribonval, R., Kutyniok, G., Nielsen, M., Voigtlaender, F.: Approximation spaces of deep neural networks. Constr. Approx. (2021). Online first

Haber, E., Ruthotto, L.: Stable architectures for deep neural networks. Inverse Probl. 34(1), Article no. 014004 (2017)

Han, J., Jentzen, A., E, W.: Solving high-dimensional partial differential equations using deep learning. Proc. Natl. Acad. Sci. 115(34), 8505–8510 (2018)

Hasannasab, M., Hertrich, J., Neumayer, S., Plonka, G., Setzer, S., Steidl, G.: Parseval proximal neural networks. J. Fourier Anal. Appl. 26, 59 (2020)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778. IEEE Computer Society Press, Las Vegas, NV (2016)

Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv:1412.6980v1 [cs.LG] (2014)

Kobler, E., Effland, A., Kunisch, K., Pock, T.: Total deep variation for linear inverse problems. In: Proceedings of the 2020 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 7549–7558. IEEE Computer Society Press, Seattle, WA (2020)

Kobler, E., Klatzer, T., Hammernik, K., Pock, T.: Variational networks: connecting variational methods and deep learning. In: Roth, V., Vetter, T. (eds.) Pattern Recognition. Lecture Notes in Computer Science, vol. 10496, pp. 281–293. Springer, Cham (2017)

Kutyniok, G., Petersen, P., Raslan, M., Schneider, R.: A theoretical analysis of deep neural networks and parametric PDEs. Constr. Approx. (2021). Online first

Laptev, D., Savinov, N., Buhmann, J.M., Pollefeys, M.: TI-POOLING: Transformation-invariant pooling for feature learning in convolutional neural networks. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 289–297. IEEE Computer Society Press, Las Vegas, NV (2016)

LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521, 436–444 (2015)

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

Li, M., He, L., Lin, Z.: Implicit Euler skip connections: enhancing adversarial robustness via numerical stability. In: Daumé III, H., Singh, A. (eds.) Proceedings of the 37th International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 119, pp. 5874–5883. Vienna, Austria (2020)

Long, Z., Lu, Y., Dong, B.: PDE-net 2.0: learning PDEs from data with a numeric-symbolic hybrid deep network. J. Comput. Phys. 399, Article no. 108925 (2019)

Lu, Y., Zhong, A., Li, Q., Dong, B.: Beyond finite layer neural networks: bridging deep architectures and numerical differential equations. In: Dy, J., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 80, pp. 3276–3285. Stockholm, Sweden (2018)

Lunz, S., Schönlieb, C., Öktem, O.: Adversarial regularizers in inverse problems. In: Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Proceedings of the 32nd International Conference on Neural Information Processing Systems, Advances in Neural Information Processing Systems, vol. 31, pp. 8516–8525. Montréal, Canada (2018)

Lysaker, M., Lundervold, A., Tai, X.C.: Noise removal using fourth-order partial differential equations with applications to medical magnetic resonance images in space and time. IEEE Trans. Image Process. 12(12), 1579–1590 (2003)

Marcos, D., Volpi, M., Tuia, D.: Learning rotation invariant convolutional filters for texture classification. In: Proceedings of the 23rd International Conference on Pattern Recognition, vol. 2, pp. 2012–2017. Cancun, Mexico (2016)

Monga, V., Li, Y., Eldar, Y.C.: Algorithm unrolling: interpretable, efficient deep learning for signal and image processing. IEEE Signal Process. Mag. 38(2), 18–44 (2021)

Mrázek, P., Weickert, J.: Rotationally invariant wavelet shrinkage. In: Michaelis, B., Krell, G. (eds.) Pattern Recognition. Lecture Notes in Computer Science, vol. 2781, pp. 156–163. Springer, Berlin (2003)

Mukherjee, S., Dittmer, S., Shumaylov, Z., Lunz, S., Öktem, O., Schönlieb, C.: Learned convex regularizers for inverse problems. arXiv:2008.02839v2 [cs.LG] (2021)

Nair, V., Hinton, G.E.: Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning, pp. 807–814. Haifa, Israel (2010)

Ochs, P., Meinhardt, T., Leal-Taixe, L., Möller, M.: Lifting layers: Analysis and applications. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision–ECCV 2018. Lecture Notes in Computer Science, vol. 11205, pp. 53–68. Springer, Cham (2018)

Ouala, S., Pascual, A., Fablet, R.: Residual integration neural network. In: Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 3622–3626. IEEE Computer Society Press, Brighton, UK (2019)

Perona, P., Malik, J.: Scale space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 12, 629–639 (1990)

Poulenard, A., Rakotosaona, M., Ponty, Y., Ovsjanikov, M.: Effective rotation-invariant point CNN with spherical harmonics kernels. In: Proceedings of the 2019 International Conference on 3D Vision, pp. 47–56. IEEE Computer Society Press, Quebec City, Canada (2019)

Prost, J., Houdard, A., Almansa, A., Papadakis, N.: Learning local regularization for variational image restoration. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds.) Scale Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, vol. 12679, pp. 358–370. Springer, Cham (2021)

Raissi, M., Perdikaris, P., Karniadakis, G.E.: Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707 (2019)

Romano, Y., Elad, M., Milanfar, P.: The little engine that could: regularization by denoising (RED). SIAM J. Imaging Sci. 10(4), 1804–1844 (2017)

Rousseau, F., Drumetz, L., Fablet, R.: Residual networks as flows of diffeomorphisms. J. Math. Imaging Vis. 62, 365–375 (2020)

Rudy, S.H., Brunton, S.L., Proctor, J.L., Kutz, J.N.: Data-driven discovery of partial differential equations. Sci. Adv. 3(4), Article no. e1602614 (2017)

Ruthotto, L., Haber, E.: Deep neural networks motivated by partial differential equations. J. Math. Imaging Vis. 62, 352–364 (2020)

Schaeffer, H.: Learning partial differential equations via data discovery and sparse optimization. Proc. R. Soc. Lond. Ser. A 473(2197), Article no. 20160446 (2017)

Scherzer, O., Weickert, J.: Relations between regularization and diffusion filtering. J. Math. Imaging Vis. 12(1), 43–63 (2000)

Schmidhuber, J.: Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015)

Sifre, L., Mallat, S.: Rotation, scaling and deformation invariant scattering for texture discrimination. In: Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1233–1240. IEEE Computer Society Press, Portland, OR (2013)

Simard, P.Y., Steinkraus, D., Platt, J.C.: Best practices for convolutional neural networks applied to visual document analysis. In: Proceedings of the 7th International Conference on Document Analysis and Recognition, pp. 958–963. Edinburgh (2003)

Sulam, J., Aberdam, A., Beck, A., Elad, M.: On multi-layer basis pursuit, efficient algorithms and convolutional neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 42(8), 1968–1980 (2019)

Terris, M., Repetti, A., Pesquet, J., Wiaux, Y.: Building firmly nonexpansive convolutional neural networks. In: Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 8658–8662. Barcelona, Spain (2020)

Thorpe, M., van Gennip, Y.: Deep limits of residual neural networks. arXiv:1810.11741v2 [math.CA] (2019)

Tikhonov, A.N.: Solution of incorrectly formulated problems and the regularization method. Sov. Math. Doklady 4, 1035–1038 (1963)

Weickert, J.: Anisotropic diffusion filters for image processing based quality control. In: Fasano, A., Primicerio, M. (eds.) Proceedings of the Seventh European Conference on Mathematics in Industry, pp. 355–362. Teubner, Stuttgart (1994)

Weickert, J.: Theoretical foundations of anisotropic diffusion in image processing. Comput. Suppl. 11, 221–236 (1996)

Weickert, J.: Anisotropic Diffusion in Image Processing. Teubner, Stuttgart (1998)

Weickert, J., Brox, T.: Diffusion and regularization of vector- and matrix-valued images. In: Nashed, M.Z., Scherzer, O. (eds.) Inverse Problems, Image Analysis, and Medical Imaging, Contemporary Mathematics, vol. 313, pp. 251–268. AMS, Providence (2002)

Weickert, J., Welk, M., Wickert, M.: L²-stable nonstandard finite differences for anisotropic diffusion. In: Kuijper, A., Bredies, K., Pock, T., Bischof, H. (eds.) Scale Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, vol. 7893, pp. 380–391. Springer, Berlin (2013)

Weiler, M., Cesa, G.: General E(2)-equivariant steerable CNNs. In: Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R. (eds.) Proceedings of the 33rd International Conference on Neural Information Processing Systems, Advances in Neural Information Processing Systems, vol. 32, pp. 14334–14345. Vancouver, Canada (2019)

Weiler, M., Geiger, M., Welling, M., Boomsma, W., Cohen, T.: 3D steerable CNNs: Learning rotationally equivariant features in volumetric data. In: Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Proceedings of the 32nd International Conference on Neural Information Processing Systems, Advances in Neural Information Processing Systems, vol. 31, pp. 10402–10413. Montréal, Canada (2018)

Weiler, M., Hamprecht, F.A., Storath, M.: Learning steerable filters for rotation equivariant CNNs. In: Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, pp. 849–858. IEEE Computer Society Press, Salt Lake City, UT (2018)

Welk, M.: Diffusion, pre-smoothing and gradient descent. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds.) Scale Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, vol. 12679, pp. 78–90. Springer, Cham (2021)

Whittaker, E.T.: A new method of graduation. Proc. Edinb. Math. Soc. 41, 65–75 (1923)

Worrall, D.E., Garbin, S.J., Turmukhambetov, D., Brostow, G.J.: Harmonic networks: deep translation and rotation equivariance. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, pp. 5028–5037. IEEE Computer Society Press, Honolulu, HI (2017)

Xie, S., Girshick, R., Dollár, P., Tu, Z., He, K.: Aggregated residual transformations for deep neural networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1492–1500. IEEE Computer Society Press, Honolulu, HI (2017)

You, Y.L., Kaveh, M.: Fourth-order partial differential equations for noise removal. IEEE Trans. Image Process. 9(10), 1723–1730 (2000)

Zhang, L., Schaeffer, H.: Forward stability of ResNet and its variants. J. Math. Imaging Vis. 62, 328–351 (2020)

Zhu, M., Chang, B., Fu, C.: Convolutional neural networks combined with Runge–Kutta methods. In: Proceedings of the 7th International Conference on Learning Representations. New Orleans, LA (2019)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Grant Agreement No. 741215, ERC Advanced Grant INCOVID).

Missing Open Access funding information has been added in the Funding Note.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alt, T., Schrader, K., Weickert, J. et al. Designing rotationally invariant neural networks from PDEs and variational methods. Res Math Sci 9, 52 (2022). https://doi.org/10.1007/s40687-022-00339-x

DOI: https://doi.org/10.1007/s40687-022-00339-x