Abstract

Quantized or low-bit neural networks are attractive due to their inference efficiency. However, training deep neural networks with quantized activations involves minimizing a discontinuous and piecewise constant loss function. Such a loss function has zero gradient almost everywhere (a.e.), which makes conventional gradient-based algorithms inapplicable. To address this, we study a novel class of biased first-order oracle, termed coarse gradient, for overcoming the vanished gradient issue. A coarse gradient is generated by replacing the a.e. zero derivative of the quantized (i.e., staircase) ReLU activation in the chain rule with a heuristic proxy derivative called the straight-through estimator (STE). Although the STE has been widely used empirically in training quantized networks, fundamental questions such as when and why the ad hoc STE trick works still lack theoretical understanding. In this paper, we propose a class of STEs with certain monotonicity and consider their application to the training of a two-linear-layer network with quantized activation functions for nonlinear multi-category classification. We establish performance guarantees for the proposed STEs by showing that the corresponding coarse gradient methods converge to the global minimum, which leads to a perfect classification. Lastly, we present experimental results on synthetic data as well as the MNIST dataset to verify our theoretical findings and demonstrate the effectiveness of our proposed STEs.


1 Introduction

Deep neural networks (DNNs) have been the main driving force for the recent wave in artificial intelligence (AI). They have achieved remarkable success in a number of domains including computer vision [14, 19], reinforcement learning [18, 23] and natural language processing [4], to name a few. However, due to the huge number of model parameters, the deployment of DNNs can be computationally and memory intensive. As such, it remains a great challenge to deploy DNNs on mobile electronics with low computational budget and limited memory storage.

Recent efforts have been devoted to quantizing the weights and activations of DNNs while maintaining accuracy. More specifically, quantization techniques constrain the weights and/or activation values to low-precision arithmetic (e.g., 4-bit) instead of using the conventional floating-point (32-bit) representation [2, 12, 17, 31, 32, 33]. In this way, the inference of quantized DNNs translates to hardware-friendly low-bit computations rather than floating-point operations. Quantization thus brings three critical benefits for AI systems: energy efficiency, memory savings and inference acceleration.

The approximation power of weight quantized DNNs was investigated in [6, 8], while the recent paper [22] studies the approximation power of DNNs with discretized activations. On the computational side, training quantized DNNs typically calls for solving a large-scale optimization problem, yet with extra computational and mathematical challenges. Although people often quantize both the weights and activations of DNNs, they can be viewed as two relatively independent subproblems. Weight quantization basically introduces an additional set constraint that characterizes the quantized model parameters, which can be efficiently carried out by projected gradient-type methods [5, 10, 15, 16, 28, 30]. Activation quantization (i.e., quantizing ReLU), on the other hand, involves a staircase activation function with zero derivative almost everywhere (a.e.) in place of the subdifferentiable ReLU. Therefore, the resulting composite loss function is piecewise constant and cannot be minimized via the (stochastic) gradient method due to the vanished gradient.

To overcome this issue, a simple and hardware-friendly approach is to use a straight-through estimator (STE) [1, 9, 26]. More precisely, one can replace the a.e. zero derivative of quantized ReLU with an ad hoc surrogate in the backward pass, while keeping the original quantized function during the forward pass. Mathematically, STE gives rise to a biased first-order oracle computed by an unusual chain rule. This first-order oracle is not the gradient of the original loss function because there is a mismatch between the forward and backward passes. Throughout this paper, this STE-induced type of “gradient” is called coarse gradient. While coarse gradient is not the true gradient, in practice it works because it points toward a descent direction (see [26] for a thorough study in the regression setting). Moreover, coarse gradient has the same computational complexity as standard gradient. Just like standard gradient descent, training activation quantized networks proceeds by repeatedly taking a step from the current point in the direction opposite to the coarse gradient with some step size. The performance of the resulting coarse gradient method, e.g., its convergence property, naturally relies on the choice of STE. How to choose a proper STE so that the resulting training algorithm is provably convergent is still poorly understood, especially in the nonlinear classification setting.
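The mismatch between the forward and backward passes can be illustrated on a scalar toy problem. The sketch below is not the setting analyzed in this paper: it assumes a squared loss, a single weight, and a staircase activation of the hypothetical form `min(ceil(h), levels)` for positive inputs. It only shows that the finite-difference derivative of the piecewise constant loss is zero away from the jumps, while the coarse derivative built from the ReLU STE still drives the weight to a zero-loss point.

```python
import math

def quantized_relu(h, levels=3):
    """Assumed staircase: 0 for h <= 0, then ceil(h) capped at `levels`."""
    if h <= 0.0:
        return 0.0
    return float(min(math.ceil(h), levels))

def relu_ste(h):
    """Derivative of ReLU, used only in the backward pass."""
    return 1.0 if h > 0.0 else 0.0

def loss(w, x, target):
    """Toy squared loss evaluated with the quantized activation (forward pass)."""
    return 0.5 * (quantized_relu(w * x) - target) ** 2

def true_derivative(w, x, target, eps=1e-6):
    """Central finite difference of the piecewise constant loss."""
    return (loss(w + eps, x, target) - loss(w - eps, x, target)) / (2.0 * eps)

def coarse_derivative(w, x, target):
    """Chain rule with the a.e. zero derivative of sigma replaced by the STE."""
    h = w * x
    return (quantized_relu(h) - target) * relu_ste(h) * x

def descend(w, x, target, eta, steps):
    """Plain descent on the coarse derivative."""
    for _ in range(steps):
        w -= eta * coarse_derivative(w, x, target)
    return w

if __name__ == "__main__":
    print(true_derivative(0.4, 1.0, 3.0))    # zero away from the jump points
    print(coarse_derivative(0.4, 1.0, 3.0))  # nonzero, so a descent step moves w
    print(loss(descend(0.4, 1.0, 3.0, 0.5, 5), 1.0, 3.0))
```

The forward pass always uses `quantized_relu`; only `coarse_derivative` sees the surrogate, which is exactly the asymmetry described above.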

1.1 Related works

The idea of STE dates back to the classical perceptron algorithm [20, 21] for binary classification. Specifically, the perceptron algorithm attempts to solve the empirical risk minimization problem:

where \(({{\varvec{x}}}_i, y_i)\) is the \(i^{\mathrm {th}}\) training sample with \(y_i\in \{\pm 1\}\) being a binary label; for a given input \({{\varvec{x}}}_i\), the single-layer perceptron model with weights \({{\varvec{w}}}\) outputs the class prediction \(\text{ sign }({{\varvec{x}}}_i^{\top }{{\varvec{w}}})\). To train perceptrons, Rosenblatt [20] proposed the following iteration for solving (1.1) with the step size \(\eta >0\):

We note that the above perceptron algorithm is not the same as gradient descent algorithm. Assuming the differentiability, the standard chain rule computes the gradient of the \(i^{\mathrm {th}}\) sample loss function by

Comparing (1.3) with (1.2), we observe that the perceptron algorithm essentially uses a coarse (and fake) gradient, as if \((\text{ sign})^\prime \) in the chain rule were the derivative of the identity function, i.e., the constant 1.
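The observation above can be made concrete with a short sketch. Since the displayed formulas (1.1)–(1.3) are not reproduced in this text, the code assumes a squared-error sample loss \(\frac{1}{2}(y_i-\text{sign}({\varvec{x}}_i^{\top }{\varvec{w}}))^2\), chosen so that the chain rule with \((\text{ sign})^\prime \) replaced by 1 yields the classical perceptron update; the function names and the toy data are hypothetical.

```python
def sign(z):
    """Sign with the convention sign(0) = +1."""
    return 1.0 if z >= 0.0 else -1.0

def identity_ste_gradient(w, x, y):
    """Chain rule for 0.5 * (y - sign(x.w))**2 with sign' replaced by 1."""
    residual = y - sign(sum(wi * xi for wi, xi in zip(w, x)))
    return [-residual * xi for xi in x]

def train_perceptron(samples, dim, eta, epochs):
    """Full-batch descent along the summed coarse gradients."""
    w = [0.0] * dim
    for _ in range(epochs):
        total = [0.0] * dim
        for x, y in samples:
            g = identity_ste_gradient(w, x, y)
            total = [t + gi for t, gi in zip(total, g)]
        w = [wi - eta * ti for wi, ti in zip(w, total)]
    return w

if __name__ == "__main__":
    # Linearly separable toy data with labels in {+1, -1}.
    data = [([1.0, 2.0], 1.0), ([2.0, 1.0], 1.0),
            ([-1.0, -1.5], -1.0), ([-2.0, -0.5], -1.0)]
    print(train_perceptron(data, dim=2, eta=0.1, epochs=10))
```

A sample that is already classified correctly has zero residual and contributes nothing, so the iteration stops moving once all samples are classified correctly.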

The idea of STE was extended to train deep networks with binary activations [9]. Successful experimental results have demonstrated the effectiveness of the empirical STE approach. For example, [1] proposed an STE variant which uses the derivative of the sigmoid function instead of the identity function. [11] used the derivative of the hard tanh function, i.e., \(1_{\{|x|\le 1\}}\), as an STE in training binarized neural networks. To achieve less accuracy degradation, STE was later employed to train DNNs with quantized activations at higher bit-widths [2, 3, 12, 29, 32], where some other STEs were proposed including the derivatives of standard ReLU (\(\max \{x, 0\}\)) and clipped ReLU (\(\min \{\max \{x, 0\}, 1\}\)).

Regarding the theoretical justification, it has been established that the perceptron algorithm in (1.2) with identity STE converges and perfectly classifies linearly separable data; see, for example, [7, 25] and references therein. Apart from that, to our knowledge, there had been almost no theoretical justification of STE until recently: [26] considered a two-linear-layer network with binary activation for regression problems. There, the training data are instead assumed to be linearly non-separable and generated by some underlying model with true parameters. In this setting, [26] proved that the working STE is actually non-unique and that the coarse gradient algorithm is a descent method that converges to a valid critical point if the STE is chosen to be the proxy derivative of either ReLU (i.e., \(\max \{x, 0\}\)) or clipped ReLU function (i.e., \(\min \{\max \{x, 0\}, 1\}\)). Moreover, they proved that the identity STE fails to give a convergent algorithm for learning two-layer networks, although it works for the single-layer perceptron.

Fig. 1 Quantized activation functions. \(\tau \) is a value determined in the network training; see Sect. 8.2

1.2 Main contributions

Figure 1 shows examples of 1-bit (binary) and 2-bit (ternary) activations. We see that a quantized activation function zeros out any negative input, while being increasing on the positive half. Intuitively, a working surrogate of the quantized function used in backward pass should also enjoy this monotonicity, as conjectured by [26] which proved the effectiveness of coarse gradient for two specific STEs: derivatives of ReLU and clipped ReLU, and for binarized activation. In this work, we take a further step toward understanding the convergence of coarse gradient methods for training networks with general quantized activations and for classification of linearly non-separable data. A major analytical challenge we face here is that the network loss function is not in closed analytical form, in sharp contrast to [26]. We present more general results to provide meaningful guidance on how to choose STE in activation quantization. Specifically, we study multi-category classification of linearly non-separable data by a two-linear-layer network with multi-bit activations and hinge loss function. We establish the convergence of coarse gradient methods for a broad class of surrogate functions. More precisely, if a function \(g:{\mathbb {R}}\rightarrow {\mathbb {R}}\) satisfies the following properties:

- \(g(x) = 0\) for all \(x\le 0\),

- \(g'(x) \ge \delta >0\) for all \(x>0\) with some constant \(\delta \),

then with a proper learning rate, the corresponding coarse gradient method converges and perfectly classifies the nonlinear data when \(g^\prime \) serves as the STE during the backward pass. This affirms a conjecture in [26] regarding good choices of STE for a classification (rather than regression) task under weaker data assumptions, e.g., allowing non-Gaussian distributions.

1.3 Notations

Table 1 lists the notation used in this paper.

2 Problem setup

2.1 Data assumptions

In this section, we consider the n-ary classification problem in the d-dimensional space \({\mathcal {X}}={\mathbb {R}}^{d}\). Let \({\mathcal {Y}}=[n]\) be the set of labels, and for \(i\in [n]\) let \({\mathcal {D}}_i\) be probability distributions over \({\mathcal {X}}\times {\mathcal {Y}}\). Throughout this paper, we make the following assumptions on the data:

1. (Separability) There are n orthogonal subspaces \({\mathcal {V}}_i \subseteq {\mathcal {X}}\), \(i\in [n]\) where \(\dim {\mathcal {V}}_i=d_i\), such that

$$\begin{aligned} \mathop {{\mathbb {P}}}_{\{{\varvec{x}},y\}\sim {\mathcal {D}}_i}\left[ {\varvec{x}}\in {\mathcal {V}}_i\text { and }y=i\right] =1, \; \text{ for } \text{ all } i \in [n]. \end{aligned}$$

2. (Boundedness of data) There exist positive constants m and M, such that

$$\begin{aligned} \mathop {{\mathbb {P}}}_{\{{\varvec{x}},y\}\sim {\mathcal {D}}_i}\left[ m<\left| {\varvec{x}}\right| <M\right] =1, \; \text{ for } \text{ all } i \in [n]. \end{aligned}$$

3. (Boundedness of p.d.f.) For \(i\in [n]\), let \(p_i\) be the marginal probability distribution function of \({\mathcal {D}}_i\) on \({\mathcal {V}}_i\). For any \({{\varvec{x}}}\in {\mathcal {V}}_i\) with \(m<\left| {\varvec{x}}\right| <M\), it holds that

$$\begin{aligned} 0< p_i( {{\varvec{x}}})<p_{\text {max}}<\infty . \end{aligned}$$

Later on, we denote \({\mathcal {D}}\) to be the evenly mixed distribution of \({\mathcal {D}}_i\) for \(i\in [n]\).
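To make the data assumptions concrete, the following sketch draws samples from one distribution that satisfies them. It is only an illustration under stated assumptions: the subspaces are taken to be coordinate blocks of \({\mathbb {R}}^{d}\) (hence orthogonal), the direction is uniform on the unit sphere of the block, and the radius is uniform between m and M, which gives a positive and bounded density on the annulus. The function names are hypothetical and labels are 0-indexed.

```python
import math
import random

def sample_class(i, dims, m, M, rng):
    """Draw (x, i) with x supported on the i-th coordinate block and m <= |x| <= M."""
    d = sum(dims)
    start = sum(dims[:i])
    # A Gaussian vector normalized to unit length is uniform on the sphere.
    direction = [rng.gauss(0.0, 1.0) for _ in range(dims[i])]
    norm = math.sqrt(sum(c * c for c in direction))
    # The endpoints m and M are hit with probability zero in the continuous idealization.
    radius = rng.uniform(m, M)
    x = [0.0] * d
    for offset, c in enumerate(direction):
        x[start + offset] = radius * c / norm
    return x, i

def sample_mixture(dims, m, M, rng):
    """Draw from the evenly mixed distribution: pick a class uniformly, then sample it."""
    i = rng.randrange(len(dims))
    return sample_class(i, dims, m, M, rng)

if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(sample_mixture([2, 3, 1], 0.5, 2.0, rng))
```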

Remark 1

The orthogonality of subspaces \({\mathcal {V}}_i\)’s in the data assumption (1) above is technically needed for our proof here. However, the convergence in Theorem 3.1 to a perfect classification with random initialization is observed in more general settings when \({\mathcal {V}}_i\)’s form acute angles and contain a certain level of noise. We refer to Sect. 8.1 for supporting experimental results.

Remark 2

Assumption (3) can be relaxed to the following, while the proof remains basically the same.

\({\mathcal {D}}_i\) is a mixture of \(n_i\) distributions, namely \({\mathcal {D}}_{i,j}\) for \(j\in [n_i]\). There exists a linear decomposition \({\mathcal {V}}_i=\bigoplus _{j=1}^{n_i}{\mathcal {V}}_{i,j}\) such that each \({\mathcal {D}}_{i,j}\) has a marginal probability distribution function \(p_{i,j}\) on \({\mathcal {V}}_{i,j}\). For any \({{\varvec{x}}}\in {\mathcal {V}}_{i,j}\) with \(m<|{{\varvec{x}}}|<M\), it holds that

2.2 Network architecture

We consider a two-layer neural architecture with k hidden neurons. Denote by \({{\varvec{W}}}=\left[ {\varvec{w}}_1,\ldots ,{\varvec{w}}_{k}\right] \in {\mathbb {R}}^{d\times k}\) the weight matrix in the hidden layer. Let

$$\begin{aligned} h_j=\langle {\varvec{w}}_j,{{\varvec{x}}}\rangle , \quad j\in [k], \end{aligned}$$

be the input to the activation function, or the so-called pre-activation. Throughout this paper, we make the following assumptions:

Assumption 1

The weight matrix in the second layer \({\varvec{V}}=[{\varvec{v}}_1,\ldots ,{\varvec{v}}_n]\) is fixed and known in the training process and satisfies:

1. For any \(i\in [n]\), there exists some \(j\in [k]\) such that \(v_{i,j}>0\).

2. If \(v_{i,j}>0\), then for any \(r\in [n]\) and \(r\not =i\), we have \(v_{r,j}=0\).

3. For any \(i\in [n]\) and \(j\in [k]\), we have \(v_{i,j}<1\).

One can easily show that as long as \(k\ge n\), such a matrix \({\varvec{V}}=(v_{i,j})\) is ubiquitous.
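One simple way to obtain such a matrix when \(k\ge n\) is to assign hidden neuron j to class \(j \bmod n\) with a common value in (0, 1). The sketch below builds this matrix and checks the three conditions of Assumption 1; the round-robin assignment, the default value 0.5 and the function names are illustrative choices rather than part of the analysis. The matrix is stored so that `V[i][j]` holds \(v_{i,j}\), with 0-indexed classes and neurons.

```python
def build_second_layer(n, k, value=0.5):
    """Return V with V[i][j] = v_{i,j}; neuron j serves class j mod n."""
    if k < n:
        raise ValueError("need k >= n so that every class owns a neuron")
    if not 0.0 < value < 1.0:
        raise ValueError("value must lie strictly between 0 and 1")
    V = [[0.0] * k for _ in range(n)]
    for j in range(k):
        V[j % n][j] = value
    return V

def satisfies_assumption_1(V):
    """Check conditions 1-3 of Assumption 1 entry by entry."""
    n, k = len(V), len(V[0])
    # 1. Every class has at least one neuron with positive weight.
    cond1 = all(any(V[i][j] > 0.0 for j in range(k)) for i in range(n))
    # 2. A neuron with positive weight for class i has zero weight elsewhere.
    cond2 = all(V[r][j] == 0.0
                for i in range(n) for j in range(k) if V[i][j] > 0.0
                for r in range(n) if r != i)
    # 3. All entries are strictly below 1.
    cond3 = all(V[i][j] < 1.0 for i in range(n) for j in range(k))
    return cond1 and cond2 and cond3

if __name__ == "__main__":
    print(satisfies_assumption_1(build_second_layer(3, 5)))
```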

For any input data \({\varvec{x}}\in {\mathcal {X}}={\mathbb {R}}^{d}\), the neural net output is

where

Here \(\sigma (\cdot )\) is the quantized ReLU function acting element-wise; see Fig. 1 for examples of binary and ternary activation functions. A more general quantized ReLU function of bit-width b can be defined as follows:

The prediction is given by the network output label

ideally \({\hat{y}}({{\varvec{x}}})=i\) for all \({{\varvec{x}}}\in {\mathcal {V}}_i\). The classification accuracy in percentage is the frequency that this event occurs (when network output label \({\hat{y}}\) matches the true label) on a validation data set.

Given the data sample \(\{{{\varvec{x}}}, y\}\), the associated hinge loss function reads

To train the network with quantized activation \(\sigma \), we consider the following population loss minimization problem

where the sample loss \(l\left( {{\varvec{W}}}; \{{{\varvec{x}}}, y\}\right) \) is defined in (2.2). Let \(l_i\) be the population loss function of class i with the label \(y=i\), \(i \in [n]\). More precisely,

Thus, we can rewrite the loss function as

Note that the population loss

does not admit a simple closed form even if the \(p_i\) are constant functions on their supports. Without a closed-form formula at hand to analyze the learning process, our analysis becomes challenging.
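The forward pass, prediction rule and hinge loss of this subsection can be sketched in a few lines. The displayed formulas are not reproduced in this text, so the code rests on assumptions: the output is taken to be \(o_i=\sum _j v_{i,j}\,\sigma (h_j)\) with \(h_j=\langle {\varvec{w}}_j,{{\varvec{x}}}\rangle \), the hinge loss is taken to be \(\max \{0, 1-(o_y-\max _{i\not =y}o_i)\}\), and the staircase is a hypothetical `ceil` clipped at \(2^b-1\) without the scaling factor \(\tau \). Classes are 0-indexed.

```python
import math

def quantized_relu(h, bits=2):
    """Assumed staircase: 0 for h <= 0, ceil(h) capped at 2**bits - 1 otherwise."""
    if h <= 0.0:
        return 0.0
    return float(min(math.ceil(h), 2 ** bits - 1))

def forward(W, V, x, bits=2):
    """W is a list of k weight vectors w_j; V[i][j] = v_{i,j}. Returns the outputs o."""
    h = [sum(wd * xd for wd, xd in zip(w, x)) for w in W]
    a = [quantized_relu(hj, bits) for hj in h]
    return [sum(vij * aj for vij, aj in zip(row, a)) for row in V]

def predict(o):
    """Predicted label: index of the largest output."""
    return max(range(len(o)), key=lambda i: o[i])

def hinge_loss(o, y):
    """Multi-class hinge loss against the strongest rival class."""
    rival = max(o[i] for i in range(len(o)) if i != y)
    return max(0.0, 1.0 - (o[y] - rival))

if __name__ == "__main__":
    W = [[1.5, 0.0], [0.0, 2.5]]
    V = [[0.5, 0.0], [0.0, 0.5]]
    o = forward(W, V, [1.0, 0.0])
    print(o, predict(o), hinge_loss(o, 0))
```

Because the activation is piecewise constant, the loss computed this way does not change under small perturbations of `W` away from the jumps, which is why the true gradient carries no information.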

For notational convenience, we define:

and

2.3 Coarse gradient methods

We see that the derivative of the quantized ReLU function \(\sigma \) is a.e. zero, which gives a trivial gradient of the sample loss function with respect to (w.r.t.) \({\varvec{w}}_j\). Indeed, differentiating the sample loss function with respect to \({\varvec{w}}_j\), we have

where \(\xi =\mathop {\text {argmax}}_{i\not =y}o_i\).

The partial coarse gradient w.r.t. \({{\varvec{w}}}_j\) associated with the sample \(\{{{\varvec{x}}}, y\}\) is given by replacing \(\sigma '\) with a straight-through estimator (STE) which is the derivative of function g, namely

The sample coarse gradient \({\tilde{\nabla }}l({{\varvec{W}}};\{{{\varvec{x}}},y\})\) is just the concatenation of \({\tilde{\nabla }}_{{\varvec{w}}_j}l({{\varvec{W}}};\{{{\varvec{x}}},y\})\)’s. It is worth noting that coarse gradient is not an actual gradient, but some biased first-order oracle which depends on the choice of g.
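A sample coarse gradient can be computed as sketched below. Formula (2.4) is not reproduced in this text, so the expression used here is an assumption obtained by differentiating the assumed hinge loss \(\max \{0,1-(o_y-o_\xi )\}\) and replacing \(\sigma '\) with \(g'\): the partial coarse gradient w.r.t. \({\varvec{w}}_j\) is taken to be \(-(v_{y,j}-v_{\xi ,j})\,g'(h_j)\,{{\varvec{x}}}\) when the hinge is active and zero otherwise. The staircase `ceil` clipped at `q` is also a hypothetical choice.

```python
import math

def quantized_relu(h, q=3):
    """Assumed staircase: 0 for h <= 0, ceil(h) capped at q otherwise."""
    return 0.0 if h <= 0.0 else float(min(math.ceil(h), q))

def relu_ste(h):
    """g'(h) for g = ReLU."""
    return 1.0 if h > 0.0 else 0.0

def coarse_gradient(W, V, x, y, ste=relu_ste, q=3):
    """Return one coarse-gradient vector per hidden neuron for the sample (x, y)."""
    k, n = len(W), len(V)
    h = [sum(wd * xd for wd, xd in zip(w, x)) for w in W]
    o = [sum(V[i][j] * quantized_relu(h[j], q) for j in range(k)) for i in range(n)]
    # xi is the strongest rival class, as in the definition following the true gradient.
    xi = max((i for i in range(n) if i != y), key=lambda i: o[i])
    if 1.0 - (o[y] - o[xi]) <= 0.0:
        # The hinge is inactive, so the sample contributes nothing.
        return [[0.0] * len(x) for _ in range(k)]
    return [[-(V[y][j] - V[xi][j]) * ste(h[j]) * xd for xd in x] for j in range(k)]

if __name__ == "__main__":
    V = [[0.5, 0.0], [0.0, 0.5]]
    print(coarse_gradient([[0.5, 0.0], [0.0, 0.5]], V, [1.0, 0.0], 0))
```

The forward quantities `h` and `o` use the quantized activation, while `ste` enters only in the last line; swapping in a different surrogate derivative changes the oracle without touching the loss.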

Throughout this paper, we consider a class of surrogate functions during the backward pass with the following properties:

Assumption 2

\(g:{\mathbb {R}}\rightarrow {\mathbb {R}}\) satisfies

1. \(g(x) = 0\) for all \(x\le 0\).

2. \(g'(x)\in [\delta ,{{\tilde{\delta }}}]\) for all \(x>0\) with some constants \(0<\delta<{{\tilde{\delta }}}<\infty \).

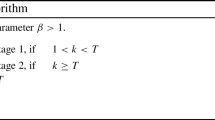

Such a g is ubiquitous in quantized deep network training; see Fig. 2 for examples of g(x) satisfying Assumption 2. Typical examples include the classical ReLU \(g(x) = \max (x, 0)\) and log-tailed ReLU [2]:

where \(q_b := 2^b-1\) is the maximum quantization level. In addition, if the input of the activation function is bounded by a constant, one also can use \(g(x)=\max \{0,q_b (1- e^{-x/q_b})\}\), which we call reverse exponential STE.
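The role of the boundedness condition for the reverse exponential STE can be checked numerically. For \(x>0\) its derivative is \(e^{-x/q_b}\), which tends to 0 as x grows, so a positive lower bound \(\delta \) exists only on a bounded input range. The sketch below evaluates \(g'\) on a grid over \((0, B]\) for ReLU and the reverse exponential; the grid check, the bound B and the function names are illustrative assumptions, and the log-tailed ReLU is omitted because its formula is not reproduced in this text.

```python
import math

def relu(x):
    return max(x, 0.0)

def relu_prime(x):
    return 1.0 if x > 0.0 else 0.0

def reverse_exp(x, q):
    """g(x) = max{0, q (1 - exp(-x / q))}."""
    return max(0.0, q * (1.0 - math.exp(-x / q)))

def reverse_exp_prime(x, q):
    """Derivative of the reverse exponential for x > 0, and 0 otherwise."""
    return math.exp(-x / q) if x > 0.0 else 0.0

def derivative_range(g_prime, upper, num=1000):
    """Smallest and largest value of g' on a uniform grid over (0, upper]."""
    values = [g_prime(upper * (i + 1) / num) for i in range(num)]
    return min(values), max(values)

if __name__ == "__main__":
    q_b = 2 ** 4 - 1  # maximum quantization level for 4 bits
    print(derivative_range(relu_prime, 10.0))
    print(derivative_range(lambda x: reverse_exp_prime(x, q_b), 10.0))
```

On \((0, B]\) the reverse exponential derivative is bounded below by \(e^{-B/q_b}\), which can serve as \(\delta \) in Assumption 2 on that range.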

To train the network with quantized activation \(\sigma \), we use the expectation of coarse gradient over training samples:

where \({\tilde{\nabla }}l({{\varvec{W}}};\{{{\varvec{x}}},y\})\) is given by (2.4). In this paper, we study the convergence of coarse gradient algorithm for solving the minimization problem (2.3), which takes the following iteration with some learning rate \(\eta >0\):

3 Main result and outline of proof

We show that if the iterates \(\{{{\varvec{W}}}^t\}\) are uniformly bounded in t, coarse gradient descent with the proxy function g under Assumption 2 converges to a global minimizer of the population loss, resulting in a perfect classification.

Theorem 3.1

Suppose data assumptions (1)–(3) and STE Assumptions 1–2 hold. If the network initialization satisfies \({{\varvec{w}}}_{j,i}^0\not =0\) for all \(j\in [k]\) and \(i\in [n]\) and \({{\varvec{W}}}^t\) is uniformly bounded by R in t, then for all \(v_{i,j}>0\) we have

Furthermore, if \({{\varvec{W}}}^\infty \) is an accumulation point of \(\{{{\varvec{W}}}^t\}\) and all nonzero unit vectors \(\tilde{{\varvec{w}}}_{j,i}^\infty \)’s are distinct for all \(j\in [k]\) and \(i\in [n]\), then

We outline the major steps in the proof below.

Step 1: Decompose the population loss into n components. Recall the definition of \(l_i\), which is the population loss function for \(\{{{\varvec{x}}},y\}\sim {\mathcal {D}}_i\). In Sect. 4, we show that under a certain decomposition of \({{\varvec{W}}}\), the coarse gradient descent of each one of them only affects a corresponding component of \({{\varvec{W}}}\).

Step 2: Bound the total increment of weight norm from above. Show that for all \(v_{i,j}>0\), \(|{\varvec{w}}_{j,i}|\)’s are monotonically increasing under coarse gradient descent. Based on boundedness on \({{\varvec{W}}}\), we further give an upper bound on the total increment of all \(|{\varvec{w}}_j|\)’s, from which the convergence of coarse gradient descent follows.

Step 3: Show that when the coarse gradient vanishes, so does the population loss. In Sect. 6, we show that when the coarse gradient vanishes toward the end of training, the population loss is zero which implies a perfect classification.

4 Space decomposition

With \({\mathcal {V}}=\bigoplus _{i=1}^n {\mathcal {V}}_i\), we have the orthogonal complement of \({\mathcal {V}}\) in \({\mathcal {X}}={\mathbb {R}}^d\), namely \({\mathcal {V}}_{n+1}\). Now, we can decompose \({\mathcal {X}}={\mathbb {R}}^d\) into \(n+1\) linearly independent parts:

and for any vector \({\varvec{w}}_j\in {\mathbb {R}}^d\), we have a unique decomposition of \({\varvec{w}}_j\):

where \({{\varvec{w}}}_{j,i}\in {\mathcal {V}}_i\) for \(i\in [n+1]\). To simplify notation, we let

Lemma 4.1

For any \({{\varvec{W}}}\in {\mathbb {R}}^{d\times k}\) and \(i\in [n]\), we have

Proof

Note that for any \({{\varvec{x}}}\in {\mathcal {V}}_i\) and \(j\in [k]\), we have \({{\varvec{x}}}\in {\mathcal {V}}\), so

and

Hence,

for all \({{\varvec{W}}}\in {\mathbb {R}}^{d\times k}\), \({{\varvec{x}}}\in {\mathcal {V}}_i\). The desired result follows. \(\square \)
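The key fact behind this argument is that for \({{\varvec{x}}}\in {\mathcal {V}}_i\), the inner product \(\langle {\varvec{w}}_j,{{\varvec{x}}}\rangle \) only sees the component \({\varvec{w}}_{j,i}\), because the other components are orthogonal to \({{\varvec{x}}}\). The sketch below verifies this numerically on a small example in \({\mathbb {R}}^4\); the particular subspaces, the test vector and the function names are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def project(w, basis):
    """Orthogonal projection of w onto span(basis); basis must be orthonormal."""
    out = [0.0] * len(w)
    for b in basis:
        c = dot(w, b)
        out = [o + c * bd for o, bd in zip(out, b)]
    return out

S = 1.0 / math.sqrt(2.0)
SUBSPACES = [
    [[S, S, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]],  # orthonormal basis of V_1
    [[S, -S, 0.0, 0.0]],                        # orthonormal basis of V_2
    [[0.0, 0.0, 0.0, 1.0]],                     # orthogonal complement V_3
]

def decompose(w):
    """Return the components of w in V_1, V_2 and the complement, in that order."""
    return [project(w, basis) for basis in SUBSPACES]

if __name__ == "__main__":
    w = [0.3, -1.2, 2.0, 0.7]
    parts = decompose(w)
    x = [2.0 * S, 2.0 * S, -1.0, 0.0]  # a point of V_1
    print(dot(w, x), dot(parts[0], x))
```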

Lemma 4.2

Running the algorithm (2.5) on \(l_i\) only does not change the value of \({{\varvec{W}}}_r\) for all \(r\not =i\). More precisely, for any \({{\varvec{W}}}\in {\mathbb {R}}^{d\times k}\), let

then for any \(r\in [n]\) and \(r\not =i\)

Proof of Lemma 4.2

Assume \(i,r\in [n]\) and \(i\not =r\). Note that

and

Since \({\mathcal {V}}_i\)’s are linearly independent, we have

and

\(\square \)

By the above result, we know (2.5) is equivalent to

5 Learning dynamics

In this section, we show that some components of the weight iterates have strictly increasing magnitude whenever the coarse gradient does not vanish, and we quantify the increment during each iteration.

Lemma 5.1

Assume

we have the following estimate:

Proof of Lemma 5.1

\(\square \)

Lemma 5.2

For any \(j\in [k]\), if

we have

where

Proof of Lemma 5.2

First, we prove an inequality which will be used later. Recall that \(|{{\varvec{x}}}|\le M\), and that \({\tilde{\nabla }}_{{\varvec{w}}_j}l({{\varvec{W}}},\{{{\varvec{x}}},y\})\not =0\) only when \({\varvec{x}}\in \Omega _{{{\varvec{W}}}}^j\). Hence, we have \(\left\langle \tilde{{\varvec{w}}}_{j,i},{{\varvec{x}}}\right\rangle >0\). We have

Now, we use Fubini’s theorem to simplify the inner product:

Now, using the inequality just proved above, we have

Combining the above two inequalities, we have

\(\square \)

Lemma 5.3

If \({\tilde{v}}_{i,j}>0\) in Lemma 5.2, then \(\{|{\varvec{w}}_{j,i}^{t}|\}\) in Eq. (2.1) is non-decreasing with coarse gradient descent (2.5). Moreover, under the same assumption, we have

where \(C_p\) is defined as in Lemma 5.2 and \({\hat{v}}_j\) as in Lemma 5.1.

Proof of Lemma 5.3

Since \({\varvec{w}}_{j,i}^{t+1}={\varvec{w}}_{j,i}^{t}-\frac{\eta }{n}{\tilde{\nabla }}_{{\varvec{w}}_j}l_i({{\varvec{W}}}^t)\), we have

Hence, it follows from Lemmas 5.1 and 5.2 that

which is the desired result. \(\square \)

Note that one component of \({\varvec{w}}_j\) is increasing in norm while the weights are bounded by assumption; hence, the sum of the increments over all steps must also be bounded. This gives the following proposition:

Proposition 1

Assume \(\{|{\varvec{w}}_j^t|\}\) is bounded by R. If \({\tilde{v}}_{i,j}>0\) in Lemma 5.2, then

where \(C_p\) is as defined in Lemma 5.2 and \({\hat{v}}_j\) defined in Lemma 5.1. This implies that

as long as \({\tilde{v}}_{i,j}>0\).

Remark 3

Lemmas 5.1, 5.2, 5.3 and Proposition 1 were proved without Assumption 1. Under Assumption 1, we have \({\hat{v}}_j=\max _{i\in [n]}v_{i,j}\) in Lemma 5.1 and \({\tilde{v}}_{i,j}={\hat{v}}_j\) if \(v_{i,j}>0\) and \({\tilde{v}}_{i,j}=-{\hat{v}}_j\) if \(v_{i,j}=0\) in Lemma 5.2.

6 Landscape properties

We have shown that under boundedness assumptions, the algorithm will converge to some point where the coarse gradient vanishes. However, this does not immediately indicate the convergence to a valid point because coarse gradient is a fake gradient. We will need the following lemma to prove Proposition 2, which confirms that the points with zero coarse gradient are indeed global minima.

Lemma 6.1

Let \(\Omega =\left\{ {{\varvec{x}}}\in {\mathbb {R}}^l:m<|{{\varvec{x}}}|<M\right\} \), where \(0<m<M<\infty \). For \(j\in [k]\), let \(\Omega _j=\left\{ {{\varvec{x}}}:\langle {{\varvec{w}}}_j,{{\varvec{x}}}\rangle >a\right\} \), where \(a\ge 0\) and \(\Omega _i\not =\Omega _j\) for all \(i\not =j\). If for \(i\in [k]\) and \({{\varvec{x}}}\in \Omega _i\cap \Omega \), there exists some \(j\not =i\) such that \({{\varvec{x}}}\in \Omega _j\), then

Proof of Lemma 6.1

Define \({\tilde{\Omega }}=\bigcup _{j=1}^k\Omega _j\). By De Morgan’s law, we have

Since k is finite and \({\varvec{0}}\in \Omega _j^c\) for all \(j\in [k]\), we know that \({\tilde{\Omega }}^c\) is a generalized polyhedron, and hence either

or

The first case is trivial. We show that the second case contradicts our assumption. Note that

we know there exists some \(j^\star \in [k]\) such that \({\mathcal {H}}^{l-1}\left( \partial \Omega _{j^\star }\cap \Omega \right) >0.\) It follows from our assumption that \({\tilde{\Omega }}=\mathop {\cup }_{j=1}^k\Omega _j=\mathop {\cup }_{j\not =j^\star }\Omega _j\), and hence,

Note that \(\partial \Omega _j\)’s are hyperplanes. Therefore, \(\Omega _j=\Omega _{j^\star }\) for some \(j\not =j^\star \), contradicting our assumption that all \(\Omega _j\)’s are distinct. \(\square \)

The following result shows that the coarse gradient vanishes only at a global minimizer with zero loss, except for some degenerate cases.

Proposition 2

Under Assumption 1, if \({\tilde{\nabla }}_{{\varvec{w}}_j} l_i({{\varvec{W}}})={\varvec{0}}\) for all \({\tilde{v}}_{i,j}>0\) and \(\tilde{{\varvec{w}}}_{j,i}\)’s are distinct, then \(l_i({{\varvec{W}}})=0\).

Proof of Proposition 2

For quantized ReLU function, let \(q_b :=\max \limits _{x\in {\mathbb {R}}}\sigma (x)\) be the maximum quantization level, so that

Note that

By assumption, \({\tilde{\nabla }}_{{\varvec{w}}_j} l_i({{\varvec{W}}})={\varvec{0}}\) for all \({\tilde{v}}_{i,j}>0\), which implies \(\mathbb {1}_{\Omega _{{{\varvec{W}}}}}({{\varvec{x}}})\mathbb {1}_{\Omega _{{\varvec{w}}_j}^a}({{\varvec{x}}})=0\) for all \({\tilde{v}}_{i,j}>0\) and \(a\in [n]\) almost surely. Now, for any \({{\varvec{x}}}\in \Omega _{{\varvec{w}}_j}^a\) we have \({{\varvec{x}}}\not \in \Omega _{{{\varvec{W}}}}\). Note that \({{\varvec{x}}}\not \in \Omega _{{{\varvec{W}}}}\) if and only if \(o_i-o_\xi \ge 1\). Hence, for any \({\varvec{x}}\in \Omega _{{\varvec{w}}_j}^a\), since \(v_{i,j}-v_{\xi ,j}<1\), there exist \(j'\not =j\) and \(a'\in [n]\) such that \(v_{i,j'}>0\) and \({{\varvec{x}}}\in \Omega _{{\varvec{w}}_{j'}}^{a'}\). By Lemma 6.1, \(\mathop {{\mathbb {P}}}_{\{{{\varvec{x}}},y\}\sim {\mathcal {D}}_i}\left[ \Omega _{{{\varvec{W}}}}\right] =0\), and thus, \(l_i({{\varvec{W}}})=0\). \(\square \)

The following lemma shows that the expected coarse gradient is continuous except at \({\varvec{w}}_{j,i}={\varvec{0}}\) for some \(j\in [k]\).

Lemma 6.2

Consider the network in (2.1). \({\tilde{\nabla }}_{{\varvec{w}}_j}l_i({{\varvec{W}}})\) is continuous on

Proof of Lemma 6.2

It suffices to prove the result for \(j\in [k]\). Note that

For any \({{\varvec{W}}}^0\) satisfying our assumption, we know

The desired result follows from the dominated convergence theorem. \(\square \)

7 Proof of main results

Equipped with the technical lemmas, we present:

Proof of Theorem 3.1

It is easily noticed from Assumption 1 that \(v_{i,j}>0\) if and only if \({\tilde{v}}_{i,j}>0\). By Lemma 5.3, if \(v_{i,j}>0\) and \(|{\varvec{w}}_{j,i}^0|>0\), then \(|{\varvec{w}}_{j,i}^t|>0\) for all t. Since \({{\varvec{W}}}\) is randomly initialized, we can ignore the possibility that \({\varvec{w}}_{j,i}^0={\varvec{0}}\) for some \(j\in [k]\) and \(i\in [n]\). Moreover, Proposition 1 and Eq. (2.5) imply for all \(v_{i,j}>0\)

Suppose \({{\varvec{W}}}^\infty \) is an accumulation point and \({\varvec{w}}_{j,r}^{\infty }\not ={\varvec{0}}\) for all \(j\in [k]\) and \(r\in [n]\). Then for all \(v_{i,j}>0\)

Next, we consider the case when \({\varvec{w}}_{j,r}={\varvec{0}}\) for some \(j\in [k]\) and \(r\in [n]\). Lemma 5.2 implies \(v_{r,j}=0\). We construct a new sequence

and

With

we know \({\hat{o}}_r=o_r\) for all \(r\in [n]\). Hence, we have

This implies that \(\Omega _{{\hat{{{\varvec{W}}}}}^t}=\Omega _{{{\varvec{W}}}^t}\), so we have for all \(j\in [k]\),

Letting t go to infinity on both sides, we get

By Lemmas 5.1 and 5.2, we know

so \({\tilde{\nabla }}_{{{\varvec{W}}}}l_i({{\varvec{W}}}^\infty )=0.\) By Proposition 2, \(l_i({{\varvec{W}}}^\infty )=0\), which completes the proof. \(\square \)

8 Experiments

In this section, we conduct experiments on both synthetic and MNIST data to verify and complement our theoretical findings. Experiments on larger networks and data sets are left for future work.

8.1 Synthetic data

Let \(\left\{ {\varvec{e}}_1,{\varvec{e}}_2,{\varvec{e}}_3,{\varvec{e}}_4\right\} \) be an orthonormal basis of \({\mathbb {R}}^4\), \(\theta \) be an acute angle and \({\varvec{v}}_1={\varvec{e}}_1\), \({\varvec{v}}_2=\sin \theta \,{\varvec{e}}_2+\cos \theta \,{\varvec{e}}_3\), \({\varvec{v}}_3={\varvec{e}}_3\), \({\varvec{v}}_4={\varvec{e}}_4\). Now, we have two linearly independent subspaces of \({\mathbb {R}}^4\), namely \({\mathcal {V}}_1=\text {Span}\left( \left\{ {\varvec{v}}_1,{\varvec{v}}_2\right\} \right) \) and \({\mathcal {V}}_2=\text {Span}\left( \left\{ {\varvec{v}}_3,{\varvec{v}}_4\right\} \right) \). We can easily calculate that the angle between \({\mathcal {V}}_1\) and \({\mathcal {V}}_2\) is \(\theta \). Next, with

we define

and

Let \(\hat{{\mathcal {D}}}_i\) be uniformly distributed on \(\hat{{\mathcal {X}}}_i\times \{i\}\) and \(\hat{{\mathcal {D}}}\) be a mixture of \(\hat{{\mathcal {D}}}_1\) and \(\hat{{\mathcal {D}}}_2\). Let \(\hat{{\mathcal {X}}}=\hat{{\mathcal {X}}}_1\cup \hat{{\mathcal {X}}}_2\). The activation function \(\sigma \) is 4-bit quantized ReLU:

For simplicity, we take \(k=24\) and \(v_{i,j}=\frac{1}{2}\) if \(j-12(i-1)\in [12]\) for \(i\in [2]\) and \(j\in [24]\) and 0 otherwise. Now, our neural network becomes

where \(h_j=\langle {\varvec{w}}_j,{{\varvec{x}}}\rangle \) and \({{\varvec{x}}}\in {\mathbb {R}}^4\). The population loss is given by

We choose the ReLU STE (i.e., \(g(x) = \max \{0,x\}\)) and use the coarse gradient

Taking learning rate \(\eta =1\), Eq. (2.5) becomes

We find that the coarse gradient method converges to a global minimum with zero loss. As shown in the box plots of Fig. 3, the convergence still holds when the subspaces \({\mathcal {V}}_1\) and \({\mathcal {V}}_2\) form an acute angle, and even when the data come from two levels of Gaussian noise perturbations of \({\mathcal {V}}_1\) and \({\mathcal {V}}_2\). The convergence is faster and with a smaller weight norm when \(\theta \) increases toward \(\frac{\pi }{2}\), i.e., when \({\mathcal {V}}_1\) and \({\mathcal {V}}_2\) become orthogonal to each other. This observation supports the robustness of Theorem 3.1 beyond the regime of orthogonal classes.
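A scaled-down version of this experiment can be sketched in pure Python. The code below is not the script used for Fig. 3 and makes several assumptions that should be read as such: it covers only the orthogonal case \(\theta =\frac{\pi }{2}\), samples each class uniformly in angle with radius between 1 and 2 in its own coordinate plane, replaces the population expectation by a mini-batch average, and uses a hypothetical staircase `ceil` clipped at 15 for the 4-bit activation. The settings \(k=24\), \(v_{i,j}=\frac{1}{2}\), the ReLU STE and \(\eta =1\) follow the text; classes are 0-indexed.

```python
import math
import random

Q_MAX = 15  # 4-bit activation: q_b = 2**4 - 1

def quantized_relu(h):
    """Assumed staircase: 0 for h <= 0, ceil(h) capped at Q_MAX otherwise."""
    return 0.0 if h <= 0.0 else float(min(math.ceil(h), Q_MAX))

def sample(rng):
    """Class y lives in the coordinate plane (2y, 2y+1) with radius in [1, 2]."""
    y = rng.randrange(2)
    angle = rng.uniform(0.0, 2.0 * math.pi)
    r = rng.uniform(1.0, 2.0)
    x = [0.0] * 4
    x[2 * y] = r * math.cos(angle)
    x[2 * y + 1] = r * math.sin(angle)
    return x, y

def outputs(W, V, x):
    """Pre-activations h and network outputs o for one input."""
    h = [sum(a * b for a, b in zip(w, x)) for w in W]
    o = [sum(V[i][j] * quantized_relu(h[j]) for j in range(len(W))) for i in range(2)]
    return h, o

def mean_loss(W, V, batch):
    """Average hinge loss; with two classes the rival of y is 1 - y."""
    total = 0.0
    for x, y in batch:
        _, o = outputs(W, V, x)
        total += max(0.0, 1.0 - (o[y] - o[1 - y]))
    return total / len(batch)

def coarse_step(W, V, batch, eta):
    """One coarse gradient step with the ReLU STE, averaged over the batch."""
    grad = [[0.0] * 4 for _ in W]
    for x, y in batch:
        h, o = outputs(W, V, x)
        if 1.0 - (o[y] - o[1 - y]) <= 0.0:
            continue  # hinge inactive: no contribution
        for j in range(len(W)):
            if h[j] > 0.0:  # ReLU STE: g'(h) = 1 for h > 0
                coeff = -(V[y][j] - V[1 - y][j])
                for d in range(4):
                    grad[j][d] += coeff * x[d]
    scale = eta / len(batch)
    return [[w[d] - scale * g[d] for d in range(4)] for w, g in zip(W, grad)]

def train(seed=0, k=24, steps=100, batch_size=64, eta=1.0):
    """Return the held-out hinge loss before and after training."""
    rng = random.Random(seed)
    V = [[0.5 if j // (k // 2) == i else 0.0 for j in range(k)] for i in range(2)]
    W = [[rng.gauss(0.0, 0.1) for _ in range(4)] for _ in range(k)]
    held_out = [sample(rng) for _ in range(500)]
    initial = mean_loss(W, V, held_out)
    for _ in range(steps):
        W = coarse_step(W, V, [sample(rng) for _ in range(batch_size)], eta)
    return initial, mean_loss(W, V, held_out)

if __name__ == "__main__":
    print(train())
```

Only samples with an active hinge move the weights, so the iteration slows down on its own as the loss approaches zero.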

Fig. 5 2D projections of MNIST features from a trained convolutional neural network [24] with quantized activation function. The 10 classes are color coded, and the feature points cluster near linearly independent subspaces

8.2 MNIST experiments

Our theory works for a broad range of STEs, while their empirical performances on deeper networks may differ. In this subsection, we compare the performances of the three types of STEs in Fig. 2.

As in [2], we resort to a modified batch normalization layer [13] and add it before each activation layer. As such, the inputs to quantized activation layers always follow the unit Gaussian distribution. Then, the scaling factor \(\tau \) applied to the output of quantized activation layers can be pre-computed via a k-means approach and kept fixed during the whole training process. The optimizer we use to train quantized LeNet-5 is the (stochastic) coarse gradient method with momentum = 0.9. The batch size is 64, and the learning rate is initialized to be 0.1 and then decays by a factor of 10 after every 20 epochs. The three backward pass substitutions g for the straight-through estimator are (1) ReLU \(g(x) = \max \{x,0\}\), (2) reverse exponential \(g(x)=\max \{0,q_b(1- e^{-x/q_b})\}\) and (3) log-tailed ReLU. The validation accuracy for each epoch is shown in Fig. 4. The validation accuracies at bit-widths 2 and 4 are listed in Table 2. Our results show that these STEs all perform very well and give satisfactory accuracy. Specifically, reverse exponential and log-tailed STEs are comparable, both of which are slightly better than ReLU STE. In Fig. 5, we show 2D projections of MNIST features at the end of 100 epochs of training a 7-layer convolutional neural network [24] with quantized activation. The features are extracted from the input to the last fully connected layer. The data points cluster near linearly independent subspaces. Together with Sect. 8.1, we have numerical evidence that the linearly independent subspace data structure (working as an extension of subspace orthogonality) occurs for high-level features in a deep network for a nearly perfect classification, lending support to the realism of our theoretical study. Enlarging angles between linear subspaces can improve classification accuracy; see [27] for such an effort on MNIST and CIFAR-10 data sets via linear feature transform.
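The optimizer settings above (initial learning rate 0.1, division by 10 after every 20 epochs, momentum 0.9) can be written down as a small sketch. The step-decay rule follows the text; the momentum update, in which a velocity accumulates the coarse gradient and the weights move along it, is one common formulation and is an assumption here, since the text does not spell it out. The function names are hypothetical.

```python
def learning_rate(epoch, base=0.1, factor=0.1, period=20):
    """Step decay: multiply the base rate by `factor` after every `period` epochs."""
    return base * factor ** (epoch // period)

def momentum_step(w, velocity, coarse_grad, lr, mu=0.9):
    """Assumed heavy-ball form: v <- mu * v + g, then w <- w - lr * v."""
    new_velocity = [mu * v + g for v, g in zip(velocity, coarse_grad)]
    new_w = [wi - lr * v for wi, v in zip(w, new_velocity)]
    return new_w, new_velocity

if __name__ == "__main__":
    for epoch in (0, 19, 20, 45):
        print(epoch, learning_rate(epoch))
    print(momentum_step([1.0], [0.0], [2.0], learning_rate(0)))
```

In a training loop, `coarse_grad` would be the mini-batch average of the sample coarse gradients produced with the chosen STE.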

8.3 CIFAR-10 experiments

In this experiment, we train VGG-11 and ResNet-20 with 4-bit activation functions on the CIFAR-10 data set to numerically validate the boundedness assumption on the \(\ell _2\)-norm of the weights. The optimizer is momentum SGD with no weight decay. We use an initial learning rate of 0.1, with a decay factor of 0.1 at the 80-th and 140-th epochs.
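The step schedule described above can be sketched as a small helper. This is only an illustration under stated assumptions: the function name `lr_at_epoch` is hypothetical, epochs are counted from 0, and the decay is assumed to take effect from the milestone epoch onward, which the text does not specify.

```python
def lr_at_epoch(epoch, base_lr=0.1, milestones=(80, 140), gamma=0.1):
    """Piecewise-constant learning rate.

    The rate starts at base_lr and is multiplied by gamma once for every
    milestone that the (0-based, assumed) epoch counter has reached.
    """
    num_decays = sum(1 for m in milestones if epoch >= m)
    return base_lr * (gamma ** num_decays)


# Learning rates at a few representative epochs.
for e in (0, 79, 80, 139, 140):
    print(e, lr_at_epoch(e))
```

With the default arguments this gives 0.1 before epoch 80, roughly 0.01 from epoch 80 to 139, and roughly 0.001 afterwards, up to floating-point rounding.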

We see from Fig. 6 that the \(\ell _2\)-norm of the weights remains bounded during the training process. The figure also shows that the norm of the weights generally increases with the number of epochs, which coincides with our theoretical finding in Lemma 5.3.

9 Summary

We studied a novel and important biased first-order oracle, called coarse gradient, in training quantized neural networks. The effectiveness of the coarse gradient relies on the choice of STE, which is used in the backward pass only. We proved the convergence of coarse gradient methods for a class of STEs bearing certain monotonicity in nonlinear classification using one-hidden-layer networks. In experiments on the MNIST data set, we considered three different proxy functions satisfying the monotonicity condition for the backward pass (ReLU, the reverse exponential function and log-tailed ReLU) for training LeNet-5 with quantized activations. All of them exhibited good performance, which verified our theoretical findings. In future work, we plan to expand the theoretical understanding of coarse gradient descent for deep activation-quantized networks.

References

1. Bengio, Y., Léonard, N., Courville, A.: Estimating or propagating gradients through stochastic neurons for conditional computation. arXiv preprint arXiv:1308.3432 (2013)

2. Cai, Z., He, X., Sun, J., Vasconcelos, N.: Deep learning with low precision by half-wave gaussian quantization. In: IEEE Conference on Computer Vision and Pattern Recognition (2017)

3. Choi, J., Wang, Z., Venkataramani, S., Chuang, P.I.-J., Srinivasan, V., Gopalakrishnan, K.: Pact: parameterized clipping activation for quantized neural networks. arXiv preprint arXiv:1805.06085 (2018)

4. Collobert, R., Weston, J.: A unified architecture for natural language processing: deep neural networks with multitask learning. In: International Conference on Machine Learning. ACM, pp. 160–167 (2008)

5. Courbariaux, M., Bengio, Y., David, J.-P.: Binaryconnect: training deep neural networks with binary weights during propagations. In: Advances in Neural Information Processing Systems, pp. 3123–3131 (2015)

6. Ding, Y., Liu, J., Xiong, J., Shi, Y.: On the universal approximability and complexity bounds of quantized relu neural networks. arXiv preprint arXiv:1802.03646 (2018)

7. Freund, Y., Schapire, R.E.: Large margin classification using the perceptron algorithm. Mach. Learn. 37, 277–296 (1999)

8. He, J., Li, L., Xu, J., Zheng, C.: ReLU deep neural networks and linear finite elements. J. Comput. Math. 38, 502–527 (2020)

9. Hinton, G.: Neural Networks for Machine Learning, Coursera. Coursera, Video Lectures (2012)

10. Hou, L., Kwok, J.T.: Loss-aware weight quantization of deep networks. In: International Conference on Learning Representations (2018)

11. Hubara, I., Courbariaux, M., Soudry, D., El-Yaniv, R., Bengio, Y.: Binarized neural networks. In: Advances in Neural Information Processing Systems (2016)

12. Hubara, I., Courbariaux, M., Soudry, D., El-Yaniv, R., Bengio, Y.: Quantized neural networks: training neural networks with low precision weights and activations. J. Mach. Learn. Res. 18, 1–30 (2018)

13. Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International Conference on Machine Learning (2015)

14. Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

15. Li, F., Zhang, B., Liu, B.: Ternary weight networks. arXiv preprint arXiv:1605.04711 (2016)

16. Li, H., De, S., Xu, Z., Studer, C., Samet, H., Goldstein, T.: Training quantized nets: a deeper understanding. In: Advances in Neural Information Processing Systems, pp. 5811–5821 (2017)

17. Louizos, C., Reisser, M., Blankevoort, T., Gavves, E., Welling, M.: Relaxed quantization for discretized neural networks. In: International Conference on Learning Representations (2019)

18. Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A.A., Veness, J., Bellemare, M.G., Graves, A., Riedmiller, M., Fidjeland, A.K., Ostrovski, G., et al.: Human-level control through deep reinforcement learning. Nature 518, 529 (2015)

19. Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: Advances in Neural Information Processing Systems, pp. 91–99 (2015)

20. Rosenblatt, F.: The Perceptron, a Perceiving and Recognizing Automaton Project Para. Cornell Aeronautical Laboratory, Buffalo (1957)

21. Rosenblatt, F.: Principles of neurodynamics. In: Spartan Book (1962)

22. Shen, Z., Yang, H., Zhang, S.: Deep network approximation with discrepancy being reciprocal of width to power of depth. arXiv preprint arXiv:2006.12231 (2020)

23. Silver, D., Huang, A., Maddison, C.J., Guez, A., Sifre, L., Van Den Driessche, G., Schrittwieser, J., Antonoglou, I., Panneershelvam, V., Lanctot, M., et al.: Mastering the game of go with deep neural networks and tree search. Nature 529, 484 (2016)

24. Wang, H., Wang, Y., Zhou, Z., Ji, X., Li, Z., Gong, D., Zhou, J., Liu, W.: Cosface: large margin cosine loss for deep face recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (2008)

25. Widrow, B., Lehr, M.A.: 30 years of adaptive neural networks: perceptron, madaline, and backpropagation. Proc. IEEE 78, 1415–1442 (1990)

26. Yin, P., Lyu, J., Zhang, S., Osher, S.J., Qi, Y., Xin, J.: Understanding straight-through estimator in training activation quantized neural nets. In: International Conference on Learning Representations (2019)

27. Yin, P., Xin, J., Qi, Y.: Linear feature transform and enhancement of classification on deep neural network. J. Sci. Comput. 76, 1396–1406 (2018)

28. Yin, P., Zhang, S., Lyu, J., Osher, S., Qi, Y., Xin, J.: Binaryrelax: a relaxation approach for training deep neural networks with quantized weights. SIAM J. Imag. Sci. 11, 2205–2223 (2018)

29. Yin, P., Zhang, S., Lyu, J., Osher, S., Qi, Y., Xin, J.: Blended coarse gradient descent for full quantization of deep neural networks. Res. Math. Sci. 6, 14 (2019)

30. Yin, P., Zhang, S., Qi, Y., Xin, J.: Quantization and training of low bit-width convolutional neural networks for object detection. J. Comput. Math. 37, 349–359 (2019)

31. Zhou, A., Yao, A., Guo, Y., Xu, L., Chen, Y.: Incremental network quantization: towards lossless CNNs with low-precision weights. In: International Conference on Learning Representations (2017)

32. Zhou, S., Wu, Y., Ni, Z., Zhou, X., Wen, H., Zou, Y.: Dorefa-net: training low bitwidth convolutional neural networks with low bitwidth gradients. arXiv preprint arXiv:1606.06160 (2016)

33. Zhu, C., Han, S., Mao, H., Dally, W.J.: Trained ternary quantization. In: International Conference on Learning Representations (2017)

Acknowledgements

This work was partially supported by NSF Grants IIS-1632935, DMS-1854434, DMS-1924548, DMS-1924935 and DMS-1952644. On behalf of all authors, the corresponding author states that there is no conflict of interest.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was funded by NSF Grants IIS-1632935, DMS-1854434, DMS-1924548, and DMS-1924935.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Long, Z., Yin, P. & Xin, J. Learning quantized neural nets by coarse gradient method for nonlinear classification. Res Math Sci 8, 48 (2021). https://doi.org/10.1007/s40687-021-00281-4

DOI: https://doi.org/10.1007/s40687-021-00281-4