Abstract

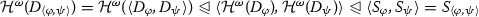

The present paper introduces a novel notion of ‘(effective) computability,’ called viability, of strategies in game semantics in an intrinsic (i.e., without recourse to the standard Church–Turing computability), non-inductive, non-axiomatic manner and shows, as a main technical achievement, that viable strategies are Turing complete. Consequently, we have given a mathematical foundation of computation in the same sense as Turing machines but beyond computation on natural numbers, e.g., higher-order computation, in a more abstract fashion. As immediate corollaries, some of the well-known theorems in computability theory such as the smn theorem and the first recursion theorem are generalized. Notably, our game-semantic framework distinguishes high-level computational processes that operate directly on mathematical objects such as natural numbers (not on their symbolic representations) and their symbolic implementations that define their ‘computability,’ which sheds new light on the very concept of computation. This work is intended to be a stepping stone toward a new mathematical foundation of computation, intuitionistic logic and constructive mathematics.


1 Introduction

The present work introduces an intrinsic, non-inductive, non-axiomatic formulation of ‘(effectively) computable’ strategies in game semantics and proves as a main theorem that they are Turing complete. This result leads to a novel mathematical foundation of computation beyond classical computation, e.g., higher-order computation, that distinguishes high-level and low-level computational processes, where the latter defines ‘effective computability’ of the former.

Convention

We shall informally use computational processes and algorithms almost as synonyms of computation, but they put more emphasis on ‘processes.’

1.1 Search for Turing machines beyond classical computation

Turing machines (TMs), introduced in the classic work [67] by Alan Turing, have been widely accepted as giving a reasonable, highly convincing and mathematically rigorous definition of ‘effectivity’ or ‘(effective) computability’ of (partial) functions on (finite sequences of) natural numbers, which we call recursiveness, classical computability or Church–Turing computability in this paper. This is because ‘computability’ of a function intuitively means the very existence of an algorithm that implements the function’s input/output behavior, and TMs are none other than a mathematical formulation of this informal concept.

In mathematics, however, there are various kinds of non-classical computation, where by classical computation we mean what merely implements a function on natural numbers, since there are a variety of mathematical objects other than natural numbers, for which TMs have certain limitations.

As an example of non-classical computation, consider higher-order computation [50], i.e., computation that takes (as an input) or produces (as an output) another computation, which abounds in mathematics, e.g., quantification in mathematical logic, differentiation in analysis or simply an application \((f, a) \mapsto f(a)\) of a function \(f : A \rightarrow B\) to an argument \(a \in A\). However, TMs cannot capture higher-order computation in a natural or systematic fashion. In fact, although TMs may compute on symbols that encode other TMs, e.g., consider universal TMs [35, 48, 64], they cannot compute on ‘external behavior’ of an input computation, which implies that the input is limited to a recursive one (to be encoded); however, it makes perfect sense to consider computation on non-recursive objects such as non-recursive real numbers. For this point, one may argue that oracle TMs [47, 64] may treat an input computation as an oracle, a black-box-like computation that does not have to be recursive; however, it is like a function (rather than a computational process) that computes just in a single step, which appears conceptually mysterious and technically ad hoc. (Another approach is to give an input computation as a potentially infinite sequence of symbols on the input tape [69], but it may be criticized in a similar manner.)

On the other hand, most of the other models of higher-order computation are, unlike TMs, either syntactic (such as \(\lambda \)-calculi and programming languages [9, 50]), inductive and/or axiomatic (such as Kleene’s schemata S1 - S9 [40, 41]) or extrinsic (i.e., reducing to classical computation by encoding whose ‘effectivity’ is usually left imprecise [16, 50]), thus lacking the semantic, direct, intrinsic nature of TMs. Also, unlike classical computability, a confluence between different notions of higher-order computability has been rarely established [50]. For this problem, it would be a key step to establish a TMs-like model of higher-order computation since it may tell us which notion of higher-order computability is a ‘correct’ one.

1.2 Search for mathematics of high-level computational processes

Perhaps more crucially than the limitation for non-classical computation mentioned above, one may argue that TMs are not appropriate as mathematics of computational processes since computational steps of TMs are often too low-level to see what they are supposed to compute. In other words, we need mathematics of high-level computational processes that gives a ‘bird’s-eye view’ of low-level computational processes (Footnote 1). Also, what TMs formulate is essentially symbol manipulation; however, the content of computation on mathematical, semantic, non-symbolic objects seems completely independent of its symbolic representation, e.g., consider a process (not a function) to add numbers or to take the union of sets.

Therefore, it would be rather appropriate, at least from the conceptual and the mathematical points of view, to formulate such high-level computational processes in a more abstract, in particular syntax-independent, manner, in order to explain low-level computational processes, and then regard the latter as executable symbolic implementations of the former.

1.3 Our research problem: mathematics of computational processes

To summarize, it would be reasonable and meaningful from both the conceptual and the mathematical viewpoints to develop mathematics of abstract (in particular syntax-independent), high-level computational processes as well as executable, low-level ones beyond classical computation such that the former is defined to be ‘effectively computable’ if it is implementable or representable by the latter.

In fact, this (or similar) perspective is nothing new and shared with various prominent researchers; for instance, Robin Milner stated:

... we should have achieved a mathematical model of computation, perhaps highly abstract in contrast with the concrete nature of paper and register machines, but such that programming languages are merely executable fragment of the theory ...[52]

We address this problem in the present paper. However, since there are so many kinds of computation, e.g., parallel, concurrent, probabilistic, non-deterministic and quantum, as the first step, this paper focuses on a certain kind of higher-order, sequential (i.e., at most one computational step may be performed at a time) computation, which is based on (sequential) game semantics Footnote 2 introduced below.

1.4 Game semantics

Game semantics (of computation) [3, 6, 37] is a particular kind of denotational semantics of programming languages [8, 32, 70], in which types and terms are modeled as games and strategies (whose definitions are given in Sect. 2), respectively. Historically, having its roots in ‘games-based’ approaches in mathematical logic to capture validity [19, 59], higher-order computability [21, 42,43,44,45,46] and proofs in linear logic [5, 11, 36], combined with ideas from sequential algorithms [10], process calculi [34, 53] and geometry of interaction [24,25,26, 28,29,30] in theoretical computer science, several variants of game semantics in its modern form were developed in the early 1990s to give the first syntax-independent characterization of the higher-order programming language PCF [7, 38, 55]; since then, a variety of games and strategies have been proposed to model various programming features [6].

An advantage of game semantics is this flexibility: It models a wide range of programming languages by simply varying constraints on strategies [6], which enables one to systematically compare and relate different languages ignoring syntactic details. Also, as full completeness and full abstraction results [15] in the literature have demonstrated, game semantics in general has an appropriate degree of abstraction (and thus it has a good potential to be mathematics of high-level computational processes). Finally, yet another strong point of game semantics is its conceptual naturality: It interprets syntax as ‘dynamic interactions’ between the participants of games, providing a computational, intensional explanation of syntax in a natural, intuitive (yet mathematically precise) manner. Informally, one can imagine that games provide a high-level description of interactive computation between a TM and an oracle, and therefore, they seem appropriate as an approach to the research problem defined in Sect. 1.3. Note that such an intensional nature stands in sharp contrast to the traditional domain-theoretic denotational semantics [8] which, e.g., cannot capture sequentiality of PCF (but the game models [7, 38, 55] can).

In the following, let us give a brief, informal introduction to games and strategies (as defined in [6]) in order to sketch the main idea of the present paper.

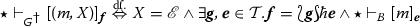

A game, roughly, is a certain kind of a rooted forest whose branches represent possible ‘developments’ or (valid) positions of a ‘game in the usual sense’ (such as chess, poker, etc.). Moves of a game are nodes of the game, where some moves are distinguished and called initial; only initial moves can be the first element (or occurrence) of a position of the game. Plays of a game are increasing sequences \(\varvec{\epsilon }, m_1, m_1 m_2, \ldots \) of positions of the game, where \(\varvec{\epsilon }\) is the empty sequence. For our purpose, it suffices to focus on rather standard sequential (as opposed to concurrent [2]) and unpolarized (as opposed to polarized [49]) games played by two participants, Player (P), who represents a ‘computational agent,’ and Opponent (O), who represents a ‘computational environment,’ in each of which O always starts a play (i.e., unpolarized), and then they alternately and separately (i.e., sequential) perform moves allowed by the rules of the game. Strictly speaking, a position of each game is not just a sequence of moves: Each occurrence m of O’s or O- (resp. P’s or P-) non-initial move in a position points to a previous occurrence \(m'\) of P- (resp. O-) move in the position, representing that m is performed specifically as a response to \(m'\). A strategy on a game, on the other hand, is what tells P which move (together with a pointer) she should make at each of her turns in the game. 
Hence, a game semantics \(\llbracket \_ \rrbracket _{\mathcal {G}}\) of a programming language \(\mathcal {L}\) interprets a type \(\mathsf {A}\) of \(\mathcal {L}\) as a game \(\llbracket \mathsf {A} \rrbracket _{\mathcal {G}}\) that specifies possible plays between P and O, and a term \(\mathsf {M : A}\) Footnote 3 of \(\mathcal {L}\) as a strategy \(\llbracket \mathsf {M} \rrbracket _{\mathcal {G}}\) that describes for P how to play on \(\llbracket \mathsf {A} \rrbracket _{\mathcal {G}}\); an execution of the term \(\mathsf {M}\) is then modeled as a play of \(\llbracket \mathsf {A} \rrbracket _{\mathcal {G}}\) in which P follows \(\llbracket \mathsf {M} \rrbracket _{\mathcal {G}}\).

Let us consider a simple example. The game N of natural numbers is the following rooted tree (which is infinite in width):

in which a play starts with O’s question q (‘What is your number?’) and ends with P’s answer \(n \in \mathbb {N}\) (‘My number is n!’), where \(\mathbb {N}\) is the set of all natural numbers, and n points to q (though this pointer is omitted in the diagram). A strategy \(\underline{10}\) on N, for instance, that corresponds to \(10 \in \mathbb {N}\) can be represented by the map \(q \mapsto 10\) equipped with a pointer from 10 to q (though it is the only choice). In the following, the pointers of most strategies are obvious, and thus, we often omit them.
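As a minimal illustration (a sketch only, not part of the formal development), the strategy \(\underline{10}\) can be written as a finite map from O-ending positions to P-moves. The names `Position`, `ten`, `is_position_of_N` and `play` are hypothetical, numerals are modeled as digit strings, and pointers are omitted because they are uniquely determined in N.

```python
from typing import Dict, Tuple

Position = Tuple[str, ...]  # a position is a finite sequence of moves

# The strategy for 10 on N: after O's question "q", P answers "10".
ten: Dict[Position, str] = {("q",): "10"}


def is_position_of_N(s: Position) -> bool:
    """Positions of N: the empty sequence, q, or q followed by a numeral."""
    if len(s) == 0:
        return True
    if s[0] != "q" or len(s) > 2:
        return False
    return len(s) == 1 or s[1].isdigit()


def play(strategy: Dict[Position, str], position: Position) -> Position:
    """Extend a position by P's reply, if the strategy defines one."""
    reply = strategy.get(position)
    return position if reply is None else position + (reply,)
```

Under these assumptions, `play(ten, ("q",))` extends O's question by P's answer, while positions on which the map is undefined are returned unchanged.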

There is a construction \(\otimes \) on games, called tensor (product). Conceptually, a position \(\varvec{s}\) of the tensor \(A \otimes B\) of games A and B is an interleaving mixture of a position \(\varvec{t}\) of A and a position \(\varvec{u}\) of B developed ‘in parallel without communication’; more specifically, \(\varvec{t}\) (resp. \(\varvec{u}\)) is the subsequence of \(\varvec{s}\) consisting of moves of A (resp. B), and any change of AB-parity (i.e., any switch between \(\varvec{t}\) and \(\varvec{u}\)) in \(\varvec{s}\) must be made by O. The pointers in \(\varvec{s}\) are inherited from those in \(\varvec{t}\) and \(\varvec{u}\) in the obvious manner; this point holds also for other constructions on games and strategies in the rest of the introduction, and thus, we shall not mention it again. For instance, a maximal position of the tensor \(N \otimes N\) has one of the following forms (Footnote 4):

where \(n, m \in \mathbb {N}\), and \((\_)^{[i]}\) (\(i = 0, 1\)) are (arbitrary, unspecified) ‘tags’ to distinguish the two copies of N (but we often omit them if it does not bring confusion), and the arrows represent pointers (n.b., they are distinct from edges of the game).

Next, a fundamental construction ! on games, called exponential, is basically the countably infinite iteration of \(\otimes \), i.e., \(!A {\mathop {=}\limits ^{\mathrm {df. }}} A \otimes A \otimes \dots \) for each game A, where the ‘tag’ for each copy of A is typically given as \((\_, i)\), where \(i \in \mathbb {N}\).

Another central construction \(\multimap \), called linear implication, captures the notion of linear functions, i.e., functions that consume exactly one input to produce an output. A position of the linear implication \(A \multimap B\) from A to B is almost like a position of the tensor \(A \otimes B\) except the following three points:

1. The first occurrence of the position must be a move of B;

2. A change of AB-parity in the position must be made by P;

3. Each occurrence of an initial move (called an initial occurrence) of A points to an initial occurrence of B.

Thus, a typical position of the game \(N \multimap N\) is the following:

where \(n, m \in \mathbb {N}\), which can be read as follows:

1. O’s question \(q^{[1]}\) for an output (‘What is your output?’);

2. P’s question \(q^{[0]}\) for an input (‘Wait, what is your input?’);

3. O’s answer, say, \(n^{[0]}\), to \(q^{[0]}\) (‘OK, here is an input n.’);

4. P’s answer, say, \(m^{[1]}\), to \(q^{[1]}\) (‘Alright, the output is then m.’).

This play corresponds to any linear function that maps \(n \mapsto m\). The strategy \( succ \) (resp. \( double \)) on \(N \multimap N\) for the successor (resp. doubling) function is represented by the map \(q^{[1]} \mapsto q^{[0]}, q^{[1]}q^{[0]}n^{[0]} \mapsto (n+1)^{[1]}\) (resp. \(q^{[1]} \mapsto q^{[0]}, q^{[1]}q^{[0]}n^{[0]} \mapsto (2 \cdot n)^{[1]}\)).
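The two maps can be made concrete as follows. This is only a sketch under simplifying assumptions: a move is modeled as a pair `(label, tag)` with the tag 0 for the domain and 1 for the codomain, pointers are omitted, and the helper names `succ`, `double` and `run` are hypothetical.

```python
def _reply(position, output):
    """Shared shape of succ and double on N -o N (pointers omitted)."""
    if position == [("q", 1)]:
        return ("q", 0)  # P asks O for the input
    if len(position) == 3 and position[:2] == [("q", 1), ("q", 0)]:
        n, tag = position[2]
        if tag == 0 and isinstance(n, int):
            return (output(n), 1)  # P answers the initial question
    return None  # the strategy is undefined on any other position


def succ(position):
    return _reply(position, lambda n: n + 1)


def double(position):
    return _reply(position, lambda n: 2 * n)


def run(strategy, n):
    """Play against an Opponent who asks for the output and supplies input n."""
    position = [("q", 1)]
    position.append(strategy(position))
    position.append((n, 0))
    position.append(strategy(position))
    return position
```

For example, `run(succ, 4)` yields the four-move play whose last move is `(5, 1)`, matching the reading of the typical position given above.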

Let us remark here that the following play, which corresponds to a constant linear function that maps \(x \mapsto m\) for all \(x \in \mathbb {N}\), is also possible: \(\varvec{\epsilon }, q^{[1]}, q^{[1]}m^{[1]}\). Thus, strictly speaking, \(A \multimap B\) is the game of affine functions from A to B, but we follow the standard convention to call \(\multimap \) linear implication.

Another construction & on games, called product, is similar to yet simpler than tensor: A position \(\varvec{s}\) of the product \( A \& B\) of A and B is either a position \(\varvec{t}^{[0]}\) of \(A^{[0]}\) or a position \(\varvec{u}^{[1]}\) of \(B^{[1]}\). It is the product in the category \(\mathcal {G}\) of games and strategies, e.g., there is the pairing \( \langle \sigma , \tau \rangle : \ !C \multimap A \& B\) of given strategies \(\sigma : \ !C \multimap A\) and \(\tau : \ !C \multimap B\) that plays as \(\sigma \) (resp. \(\tau \)) if O initiates a play by a move of A (resp. B). Clearly, we may generalize product and pairing to n-ary ones for any \(n \in \mathbb {N}\).

These four constructions \(\otimes \), !, \(\multimap \) and & come from the corresponding ones in linear logic [5, 27]. Thus, in particular, the usual implication (or the function space) \(\Rightarrow \) is recovered by Girard translation [27]: \(A \Rightarrow B {\mathop {=}\limits ^{\mathrm {df. }}} \ !A \multimap B\).

Girard translation makes explicit the point that some functions need to refer to an input more than once to produce an output, i.e., there are nonlinear functions. For instance, consider the game \((N \Rightarrow N) \Rightarrow N\) of higher-order functions, in which the following position is possible:

where \(n, n', m, m', l, i, i', j, j' \in \mathbb {N}\) and \(j \ne j'\), which can be read as follows:

1. O’s question q for an output (‘What is your output?’);

2. P’s question (q, j) for an input function (‘Wait, your first output please!’);

3. O’s question ((q, i), j) for an input (‘What is your first input then?’);

4. P’s answer, say, ((n, i), j), to ((q, i), j) (‘Here is my first input n.’);

5. O’s answer, say, (m, j), to (q, j) (‘OK, then here is my first output m.’);

6. P’s question \((q, j')\) for an input function (‘Your second output please!’);

7. O’s question \(((q, i'), j')\) for an input (‘What is your second input then?’);

8. P’s answer, say, \(((n', i'), j')\), to \(((q, i'), j')\) (‘Here is my second input \(n'\).’);

9. O’s answer, say, \((m', j')\), to \((q, j')\) (‘OK, then here is my second output \(m'\).’);

10. P’s answer, say, l, to q (‘Alright, my output is then l.’).

In this play, P asks O twice about an input strategy \(N \Rightarrow N\). Clearly, such a play is not possible on the linear implication \((N \multimap N) \multimap N\) or \((N \Rightarrow N) \multimap N\). The strategy \( pazo : (N \Rightarrow N) \Rightarrow N\) that computes the sum \(f(0)+f(1)\) for a given function \(f : \mathbb {N} \Rightarrow \mathbb {N}\), for instance, plays as follows:

where \(j = 0\) and \(j' = 1\) are arbitrarily chosen, i.e., any \(j, j' \in \mathbb {N}\) with \(j \ne j'\) work.
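The ten-step reading above can be instantiated for \( pazo \) as a short sketch. It assumes that O behaves like a total function `f` on the inputs 0 and 1; the function name `pazo_play` and the keyword parameters `j`, `j2`, `i`, `i2` (standing for \(j, j', i, i'\)) are hypothetical, and pointers are again omitted.

```python
def pazo_play(f, j=0, j2=1, i=0, i2=0):
    """Position reached when P follows pazo and O answers according to f."""
    if j == j2:
        raise ValueError("the two threads of the input need distinct tags")
    m, m2 = f(0), f(1)
    return [
        "q",                # 1.  O asks for the output
        ("q", j),           # 2.  P asks for a first output of the input
        (("q", i), j),      # 3.  O asks for the first input
        ((0, i), j),        # 4.  P supplies the input 0
        (m, j),             # 5.  O answers with f(0)
        ("q", j2),          # 6.  P asks for a second output
        (("q", i2), j2),    # 7.  O asks for the second input
        ((1, i2), j2),      # 8.  P supplies the input 1
        (m2, j2),           # 9.  O answers with f(1)
        m + m2,             # 10. P answers with f(0) + f(1)
    ]
```

The sketch makes visible that P consults the input twice, under the distinct tags `j` and `j2`, which is exactly what the exponential in \(N \Rightarrow N\) permits.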

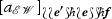

Finally, let us point out that any strategy \(\phi \) on the implication \(!A \multimap B\) induces its promotion \(\phi ^{\dagger } : \ !A \multimap \ !B\) such that if \(\phi \) plays, for instance, as

then \(\phi ^{\dagger }\) plays as

where \(\langle \_, \_ \rangle : \mathbb {N} \times \mathbb {N} {\mathop {\rightarrow }\limits ^{\sim }} \mathbb {N}\) is an arbitrarily fixed bijection, i.e., \(\phi ^{\dagger }\) plays as \(\phi \) for each thread in a position of \(!A \multimap \ !B\) that corresponds to a position of \(!A \multimap B\).
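To make the retagging concrete, the following sketch fixes the Cantor pairing as one possible choice of the bijection \(\langle \_, \_ \rangle\) and retags a single thread of \(\phi\). The order of the two arguments of `pair` (thread tag first) is an assumption made here for illustration, as are the names `pair`, `unpair` and `promote_thread` and the representation of moves as triples `(component, label, tag)`.

```python
from math import isqrt


def pair(i, j):
    """Cantor pairing: one concrete bijection from N x N to N."""
    return (i + j) * (i + j + 1) // 2 + j


def unpair(k):
    """Inverse of the Cantor pairing."""
    w = (isqrt(8 * k + 1) - 1) // 2
    j = k - w * (w + 1) // 2
    return w - j, j


def promote_thread(play, j):
    """Retag one play of phi : !A -o B as thread j of its promotion.

    Moves of B receive the tag j; a move of !A with tag i receives the
    tag pair(j, i) (assumed argument order).
    """
    out = []
    for component, label, tag in play:
        if component == "B":
            out.append(("B", label, j))
        else:
            out.append(("A", label, pair(j, tag)))
    return out
```

Because `pair` is injective, moves of distinct threads never share a tag in \(!A\), so each thread of \(\phi^{\dagger}\) can be followed independently.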

1.5 Toward a game-semantic model of computation

As seen in the examples given above, games and strategies capture higher-order computation in an abstract, conceptually natural fashion, where O plays the role of an oracle as part of the formalization. Note also that P computes on ‘external behavior’ of O, and thus O’s computation does not have to be recursive at all. Thus, one may expect that games and strategies would be appropriate as mathematics of high-level computational processes, solving the research problem of Sect. 1.3.

However, conventional games and strategies have never been formulated as a mathematical model of computation (in the sense of TMs); rather, the primary focus of the field has been full abstraction [8, 15], i.e., to characterize observational equivalences in syntax. In other words, game semantics has not been concerned that much with step-by-step processes in computation or their ‘effective computability,’ and it has been identifying programs with the same value [32, 70].

For instance, strategies on the game \(N \Rightarrow N\) typically play by \(q . (q, i) . (n, i) . m\), where \(n, m, i \in \mathbb {N}\), as described above, and so they are essentially functions that map \(n \mapsto m\); in particular, it is not formulated at all how they calculate the fourth move m from the third one (n, i) (Footnote 5). As a consequence, ‘effective computability’ in game semantics has been extrinsic: A strategy has been defined to be ‘effective’ or recursive if it is representable by a partial recursive function [7, 20, 38].

This situation is in a sense frustrating since games and strategies seem to have a good potential to give a semantic, intrinsic (i.e., without recourse to an established model of computation), non-axiomatic, non-inductive formulation of higher-order computation, but they have not taken advantage of this potential.

For the potential, we have decided to employ games and strategies as our basic mathematical framework and extend them to give mathematics of computational processes in the sense described in Sect. 1.3. For this aim, we shall first refine the category \(\mathcal {G}\) of games and strategies in such a way that accommodates step-by-step processes in computation, and then define their ‘effectivity’ in terms of their atomic computational steps. Fortunately, there is already the bicategory \(\mathcal {DG}\) of dynamic games and strategies [71], which addresses the first point.

1.6 Dynamic games and strategies

In the literature, there are several game models [13, 18, 31, 56] that exhibit step-by-step processes in computation to serve as a tool for program verification and analysis (the work [17, 23] may be called ‘intensional game semantics,’ but they rather keep track of costs in computation, not computational steps themselves). However, these variants of games and strategies are just conventional ones, and consequently, such step-by-step processes have no official status in their categories.

The problem lies in the point that in conventional game semantics composition of strategies is executed as parallel composition plus hiding [3], where hiding is the matter. Let us illustrate this point by a simple, informal example as follows. Consider again strategies \( succ \) and \( double \), but this time they are adjusted to the game \(N \Rightarrow N\). Their computations can be described by the following diagrams:

where the ‘tag’ \((\_, 0)\) on moves of the domain !N has been arbitrarily chosen (i.e., any \(i \in \mathbb {N}\) instead of 0 works). The composition \( double \bullet succ {\mathop {=}\limits ^{\mathrm {df. }}} double \circ succ ^{\dagger } = succ ^{\dagger } ; double : N \Rightarrow N\) is calculated as follows. First, by internal communication, we mean that \( succ ^{\dagger }\) and \( double \) are ‘synchronized’ via the codomain \(!N^{[1]}\) of \( succ ^{\dagger }\) and the domain \(!N^{[2]}\) of \( double \) (n.b., we take the promotion of \( succ \) to match its codomain with the domain of \( double \)), for which P also plays the role of O in \(!N^{[1]}\) and \(!N^{[2]}\) by copying her last P-moves (Footnote 6), resulting in the following play:

where moves for internal communication are marked by square boxes just for clarity, and a pointer from \((q, 0)^{[1]}\) to \((q, 0)^{[2]}\) is added because the move \((q, 0)^{[1]}\) is no longer initial. Importantly, it is assumed that O plays on the ‘external game’ \(!N^{[0]} \multimap N^{[3]}\), ‘seeing’ only moves of \(!N^{[0]}\) or \(N^{[3]}\). The resulting play is to be read as follows:

1. O’s question \(q^{[3]}\) for an output in \(!N^{[0]} \multimap N^{[3]}\) (‘What is your output?’);

2. P’s question \(\boxed{(q, 0)^{[2]}}\) by \( double \) for an input in \(!N^{[2]} \multimap N^{[3]}\) (‘Wait, what is your input?’);

3. \(\boxed{(q, 0)^{[2]}}\) in turn triggers the question \(\boxed{(q, 0)^{[1]}}\) for an output in \(!N^{[0]} \multimap \ !N^{[1]}\) (‘What is your output?’);

4. P’s question \((q, \langle 0, 0 \rangle )^{[0]}\) by \( succ ^{\dagger }\) for an input in \(!N^{[0]} \multimap \ !N^{[1]}\) (‘Wait, what is your input?’);

5. O’s answer, say, \((n, \langle 0, 0 \rangle )^{[0]}\), to the question \((q, \langle 0, 0 \rangle )^{[0]}\) in \(!N^{[0]} \multimap N^{[3]}\) (‘Here is an input n.’);

6. P’s answer \(\boxed{(n+1, 0)^{[1]}}\) to the question \(\boxed{(q, 0)^{[1]}}\) by \( succ ^{\dagger }\) in \(!N^{[0]} \multimap \ !N^{[1]}\) (‘The output is then \(n+1\).’);

7. \(\boxed{(n+1, 0)^{[1]}}\) in turn triggers the answer \(\boxed{(n+1, 0)^{[2]}}\) to the question \(\boxed{(q, 0)^{[2]}}\) in \(!N^{[2]} \multimap N^{[3]}\) (‘Here is the input \(n+1\).’);

8. P’s answer \(2 \cdot (n+1)^{[3]}\) to the initial question \(q^{[3]}\) by \( double \) in \(!N^{[0]} \multimap N^{[3]}\) (‘The output is then \(2 \cdot (n+1)\)!’).

Next, hiding means to hide or delete all moves with the square boxes from the play, resulting in the strategy for the function \(n \mapsto 2 \cdot (n + 1)\) as expected:

By the hiding operation, the resulting play is a legal one of the game \(N \Rightarrow N\), but let us point out that the intermediate occurrences of moves (with the square boxes), representing step-by-step processes in computation, are deleted by the operation.
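The two stages, parallel composition and hiding, can be sketched as follows. The representation is an assumption made for illustration: a move is a triple `(label, tag, component)` with components 0 to 3 as in the games \(!N^{[0]}\), \(!N^{[1]}\), \(!N^{[2]}\) and \(N^{[3]}\), the tag \(\langle 0, 0 \rangle\) is written as the tuple `(0, 0)`, pointers are omitted, and the names `interaction` and `hide` are hypothetical.

```python
def interaction(n):
    """Eight-step interaction of succ-dagger and double on the input n."""
    return [
        ("q", None, 3),            # 1. O asks for the output
        ("q", 0, 2),               # 2. double asks for its input (internal)
        ("q", 0, 1),               # 3. copied as a question to succ-dagger (internal)
        ("q", (0, 0), 0),          # 4. succ-dagger asks O for the input
        (n, (0, 0), 0),            # 5. O supplies n
        (n + 1, 0, 1),             # 6. succ-dagger answers n + 1 (internal)
        (n + 1, 0, 2),             # 7. copied as the input of double (internal)
        (2 * (n + 1), None, 3),    # 8. double answers the initial question
    ]


def hide(play, internal=(1, 2)):
    """Delete every move that belongs to an internal component."""
    return [move for move in play if move[2] not in internal]
```

Applying `hide` to `interaction(n)` leaves only the four external moves, so the intermediate steps 2, 3, 6 and 7 are no longer observable in the composite.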

Nevertheless, the present author and Samson Abramsky have introduced a novel, dynamic variant of games and strategies that systematically model dynamics and intensionality of computation, and also studied their algebraic structures [71]. In contrast to the previous work mentioned above, dynamic strategies themselves embody step-by-step processes in computation by retaining intermediate occurrences of moves, and composition of them is parallel composition without hiding. In addition, the categorical structure of existing game semantics is not lost but rather refined by the cartesian closed bicategory [57] \(\mathcal {DG}\) of dynamic games and strategies, forming a categorical ‘universe’ of high-level computational processes.

1.7 Viable strategies

Now, the remaining problem is to define ‘effective’ dynamic strategies in an intrinsic (i.e., solely in terms of games and strategies), non-inductive, non-axiomatic manner. Of course, we need to provide a convincing argument that justifies their ‘effectivity’ (though such an argument can never be mathematically precise) as in the case of TMs. Moreover, to obtain a powerful model of computation, they should be at least Turing complete, i.e., they ought to subsume all classically computable partial functions. This sets up, in addition to the conceptual quest so far, an intriguing mathematical question in its own right:

Is there any intrinsic, non-inductive, non-axiomatic notion of ‘effectivity’ of dynamic strategies that is Turing complete?

Not surprisingly, perhaps, this problem has turned out to be challenging, and the main technical achievement of the present paper is to give a positive answer to it.

As already mentioned, our solution is to give low-level computational processes (which are clearly ‘executable’) in order to define ‘effectivity’ of dynamic strategies (or high-level computational processes). This is achieved roughly as follows.

Remark

The concepts introduced below make sense for conventional (i.e., non-dynamic) games and strategies too, but they do not give rise to a Turing complete model of computation because composition of conventional strategies does not preserve our notion of ‘effectivity’ or viability, as we shall see.

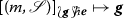

First, we give, by a fixed alphabet, a concrete formalization of ‘tags’ for disjoint union of sets of moves for constructions on games in order to rigorously formulate ‘effectivity’ of strategies. As seen in Sect. 1.4, a finite number of ‘tags’ suffice for most constructions on games, but it is not the case for exponential !. Then, we formalize ‘tags’ for exponential by a unary representation \(\ell ^i {\mathop {=}\limits ^{\mathrm {df. }}} \underbrace{\ell \ell \dots \ell }_i\) of natural numbers \(i \in \mathbb {N}\) (extended by a symbolic implementation of a recursive bijection \(\mathbb {N}^*{\mathop {\rightarrow }\limits ^{\sim }} \mathbb {N}\) [16], but here we omit the extension for simplicity) and employ, instead of the game N, the lazy variant \(\mathcal {N}\) of natural number game whose maximal positions are either of the following forms:

where the number n of \( yes \) in the position ranges over all natural numbers, which represents the number intended by P. In this way, \(\mathcal {N}\) gives a unary representation (Footnote 7) of natural numbers. Note that the initial question \(\hat{q}\) must be distinguished from the non-initial one q for a technical reason, which will be clarified in Sect. 2. This sets up a finitary representation of game-semantic computation on natural numbers.
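A small sketch of this unary representation follows. It assumes, based on the description above, that a maximal position of \(\mathcal {N}\) for the number n consists of the initial question, n answers \( yes \) each followed by a non-initial question, and a final answer \( no \); the string `"q^"` stands for \(\hat{q}\), and the function names are hypothetical.

```python
def lazy_position(n):
    """Maximal position of the lazy natural number game for n (assumed shape)."""
    moves = ["q^"]                # initial question by O
    for _ in range(n):
        moves += ["yes", "q"]     # P says 'yes', O asks for the next digit
    moves.append("no")            # P ends the number
    return moves


def decode(moves):
    """Recover the number as the count of 'yes' answers."""
    return moves.count("yes")
```

Every move is drawn from the four-element set consisting of `"q^"`, `"q"`, `"yes"` and `"no"`, which is the sense in which the representation is finitary even though the positions have unbounded length.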

Next, as we shall see, dynamic strategies modeling PCF only need to refer to at most three moves in the history of previous moves which may be ‘effectively’ identified by pointers (specifically the last three moves in the P-view [6, 38]; see Definition 16). Thus, it may seem at first glance that finitary dynamic strategies in the following sense suffice: A strategy is finitary if its representation by a partial function [38, 51] that assigns the next P-move to previous moves, called its table, is finite. However, it is not the case: Finitary strategies cannot handle unboundedly many manipulations of ‘tags’ for exponential (more precisely, manipulations such that the length of input or output ‘tags’ is unbounded), but such manipulations seem to be necessary for Turing completeness, e.g., a strategy that models primitive recursion or minimization has to interact with input strategies unboundedly many times, and thus, it must handle unboundedly many ‘tags.’

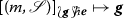

Then, the main idea of our solution is to define a strategy to be viable if its table is ‘describable’ by a finitary strategy. To state it more precisely, let us note that there is the terminal game T which has only the empty sequence \(\varvec{\epsilon }\) as a position, and each game G is identical up to ‘tags’ to the implication \(T \Rightarrow G\). Hence, we may regard strategies \(\sigma : G\) as the one on the implication \(T \Rightarrow G\) up to ‘tags,’ and vice versa; we shall not take the trouble of distinguishing the two. Also, we define for each move \(m = [m']_{\varvec{e}}\) of a game G, where \(\varvec{e} = e_1 . e_2 \dots e_{k}\) is a unary representation of the ‘tag’ for exponential on m, the strategy \(\underline{m}\) on a suitable game \(\mathcal {G}(M_G)\) that plays as \(\hat{q} . \mathsf {m'} . q . \mathsf {e_1} . q . \mathsf {e_2} \dots q . \mathsf {e_{k}} . q . \checkmark \), where each non-initial element points to the last element, and the font difference between the moves is just for clarity. In this manner, the strategy \(\underline{m} : \mathcal {G}(M_G)\) encodes the move m. 
Then, viability of strategies is given more precisely as follows: A strategy \(\sigma : G\) is defined to be viable if its partial function representation \((m_3, m_2, m_1) \mapsto m\), where \(m_1\), \(m_2\) and \(m_3\) are the last, the second last and the third last moves of the current P-view, respectively, is ‘implementable’ by a finitary strategy \( \mathcal {A}(\sigma )^\circledS : \mathcal {G}(M_{G}) \& \mathcal {G}(M_{G}) \& \mathcal {G}(M_{G}) \Rightarrow \mathcal {G}(M_{G})\), called an instruction strategy for \(\sigma \), in the sense that the composition \(\mathcal {A}(\sigma )^\circledS \circ \langle \underline{m_3}, \underline{m_2}, \underline{m_1} \rangle ^{\dagger } : \mathcal {G}(M_G)\) coincides with \(\underline{m} : \mathcal {G}(M_{G})\) for all quadruples \((m_3, m_2, m_1) \mapsto m\) in the table of \(\sigma \), where \( \langle \underline{m_3}, \underline{m_2}, \underline{m_1} \rangle : T \Rightarrow \mathcal {G}(M_G) \& \mathcal {G}(M_G) \& \mathcal {G}(M_G)\) is the ternary pairing of the strategies \(\underline{m_i} : T \Rightarrow \mathcal {G}(M_G)\) (i = 1, 2, 3).
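The encoding of a move by the strategy \(\underline{m}\) can be sketched as below. The sketch only produces the sequence of moves \(\hat{q} . \mathsf {m'} . q . \mathsf {e_1} \dots q . \mathsf {e_{k}} . q . \checkmark \) stated above and omits pointers; the strings `"q^"` and `"CHECK"` stand for \(\hat{q}\) and \(\checkmark \), the letter `"l"` stands for \(\ell \), and the function names are hypothetical.

```python
def encode_move(inner, tag):
    """Play that spells out the move [inner]_tag symbol by symbol."""
    play = ["q^", inner]          # initial question, then the inner element
    for symbol in tag:
        play += ["q", symbol]     # one question per symbol of the tag
    play += ["q", "CHECK"]        # the final answer marks the end of the tag
    return play


def decode_move(play):
    """Read back (inner, tag) from P's answers, which sit at odd indices."""
    answers = play[1::2]
    if not answers or answers[-1] != "CHECK":
        raise ValueError("the play does not end with the end marker")
    return answers[0], "".join(answers[1:-1])
```

For instance, the move \([ yes ]_{\ell \ell }\) is spelled out by `encode_move("yes", "ll")`, a play of length eight over a fixed finite set of symbols.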

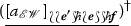

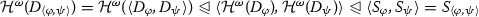

For instance, consider the successor and the doubling strategies modified for the lazy natural number game \(\mathcal {N}\), whose plays (on a nonzero input) are as in Fig. 1. Roughly, \( succ \) copies a given input on \(!\mathcal {N}^{[0]}\) and repeats it as an output on \(\mathcal {N}^{[1]}\), but it adds one more \([ yes ^{[1]}]\) to \(\mathcal {N}^{[1]}\) before \([ no ^{[1]}]\); similarly, \( double \) copies an input and repeats it as an output, but it doubles the number of \([ yes ^{[1]}]\)’s in the output. It is easy to see that for computing the next P-move (with a pointer) at an odd-length position \(\varvec{s} = m_1 m_2 \ldots m_{2i+1}\) the strategies only need to refer to at most the last O-move \(m_{2i+1}\), the P-move \(m_{2j}\) pointed to by \(m_{2i+1}\) and the O-move \(m_{2j-1}\) (they are the last three moves in the P-view of \(\varvec{s}\)), e.g., \( succ : (\square , \square , [\hat{q}^{[1]}]) \mapsto [\hat{q}^{[0]}]\), \(([\hat{q}^{[1]}], [\hat{q}^{[0]}], [ yes ^{[0]}]) \mapsto [ yes ^{[1]}]\), and so on, where \(\square \) denotes ‘no move.’ Note that these strategies do not need unboundedly many manipulations of ‘tags’; they are in fact finitary (it is easy to construct finite tables for them, each of which consists of a finite number of quadruples of moves of the form \((m_3, m_2, m_1) \mapsto m\)).
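The idea of a finite table of quadruples \((m_3, m_2, m_1) \mapsto m\) can be sketched as a dictionary lookup. The sketch below records only the two rows of \( succ \) quoted above; the remaining rows are not given in the text and are therefore left out. The string names of the moves and the helper `next_p_move` are hypothetical, the input is assumed to be the P-view already, and pointers are ignored.

```python
from typing import Dict, List, Optional, Tuple

Move = Optional[str]             # None plays the role of the 'no move' box
View = Tuple[Move, Move, Move]   # (m3, m2, m1): third last, second last, last

# Only the two rows of the table of succ that the text spells out.
SUCC_TABLE: Dict[View, str] = {
    (None, None, "q^[1]"): "q^[0]",
    ("q^[1]", "q^[0]", "yes[0]"): "yes[1]",
}


def next_p_move(table: Dict[View, str], p_view: List[str]) -> Optional[str]:
    """Look up the next P-move from the last three moves of a P-view.

    Shorter P-views are padded on the left with None ('no move').
    Returns None when the table has no row for the given triple.
    """
    padded: List[Move] = [None, None, None] + list(p_view)
    m3, m2, m1 = padded[-3:]
    return table.get((m3, m2, m1))
```

A strategy is finitary in this informal sense when such a dictionary with finitely many rows suffices; the point of \( min \) discussed next is that its table cannot be made finite in this way.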

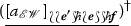

On the other hand, consider the strategy \( min : \mathcal {N} \Rightarrow \mathcal {N}\) that implements the minimization \((\mathbb {N} \rightharpoonup \mathbb {N}) \rightharpoonup \mathbb {N}\) that maps a given partial function \(f : \mathbb {N} \rightharpoonup \mathbb {N}\) to the least \(n \in \mathbb {N}\) such that \(f(n) = 0\) if it exists. For simplicity, the domain of \( min \) is \(!\mathcal {N}\), not \(\mathcal {N} \Rightarrow \mathcal {N}\); \( min \) informs an input computation of an input number \(n \in \mathbb {N}\) by the ‘tag’ \([\_]_{\ell ^n}\) for exponential. As described in Fig. 2, \( min \) simply investigates if the input computation gives back zero just by checking the first digit (\([ yes ^{[0]}]\) or \([ no ^{[0]}]\)) and adds \([ yes ^{[1]}]\) to the output if the input computation gives back nonzero (i.e., if the first digit is \([ yes ^{[0]}]\)). Note that \( min \) only needs to refer to at most the last three moves of each odd-length position (n.b., in this case, they are the last three moves of the P-view of the position as well). The point here is that the number of ‘tags’ for exponential that \( min \) has to manipulate is unbounded (though the manipulation is very simple), and therefore, the strategy is not finitary; however, it is easy to show that the strategy is viable as follows. First, its partial function representation can be given by the following infinitary table (n.b., n for \([\_]_{\ell ^n}\) given below ranges over all natural numbers):

This high-level computational process is ‘implementable’ by a strategy \( \mathcal {A}( min )^\circledS : \mathcal {G}(M_{\mathcal {N} \Rightarrow \mathcal {N}}) \& \mathcal {G}(M_{\mathcal {N} \Rightarrow \mathcal {N}}) \& \mathcal {G}(M_{\mathcal {N} \Rightarrow \mathcal {N}}) \Rightarrow \mathcal {G}(M_{\mathcal {N} \Rightarrow \mathcal {N}})\), which computes as in Fig. 3, where the rather trivial pointers and the ‘tags’ \((\_)^{[i]}\) in the underlying game are omitted for brevity. Then clearly, there is a finite table for \(\mathcal {A}( min )^\circledS \) that maps the last k moves in the P-view of each odd-length position to the next P-move for some fixed \(k \in \mathbb {N}\) (though it is a bit too tedious to write down the table here), proving viability of \( min \). Observe in particular how the infinitary manipulation of ‘tags’ by \( min \) is reduced to a finitary computation by \(\mathcal {A}( min )^\circledS \).

This example illustrates why we need viable (not only finitary) dynamic strategies for Turing completeness, where recall that minimization (in the general form) or an equivalent construction is vital to construct all partial recursive functions [16]. Also, it should be intuitively clear now why we have to employ composition of strategies without hiding: An instruction strategy for the composition of strategies \(\phi : A \Rightarrow B\) and \(\psi : B \Rightarrow C\) without hiding can be obtained simply as the disjoint union of instruction strategies for \(\phi ^{\dagger }\) and \(\psi \) (see the proof of Theorem 76 for the details), but it is not possible for composition with hiding. (In fact, there is no obvious way to construct an instruction strategy for the composition of \(\phi \) and \(\psi \) with hiding.)

We advocate that viability of strategies gives a reasonable notion of ‘effective computability’ as finitary strategies are clearly ‘effective,’ and so their descriptions or instruction strategies can be ‘effectively read off’ by P. Note also that viability is defined solely in terms of games and strategies without any axiom or induction. Moreover, viability is at least as strong as Church–Turing computability: As the main results of the present work, we show that dynamic strategies definable by PCF are all viable (Theorem 81), and therefore, they are Turing complete in particular (Corollary 82).

Also, viable dynamic strategies solve the problem defined in Sect. 1.3 in the following sense. First, as we have seen via examples, games and strategies give an abstract, syntax-independent formulation of high-level computational processes, e.g., the lazy natural number game \(\mathcal {N}\) defines natural numbers (not their symbolic representation) as ‘counting processes’ in an abstract, syntax-independent fashion, beyond classical computation, e.g., higher-order computation. Moreover, an instruction strategy for a viable dynamic strategy describes a low-level computational process that implements the dynamic strategy. In this manner, we have obtained a single mathematical framework for both high-level and low-level computational processes as well as ‘effective computability’ of the former in terms of the latter.

1.8 Our contribution and related work

Our main technical achievement is to define an intrinsic, non-inductive, non-axiomatic notion of ‘effectivity’ of strategies in game semantics, namely viable dynamic strategies, and show that they are Turing complete (Corollary 82). We have also shown the converse (though it is not that surprising): The input/output behavior of each viable dynamic strategy computing on natural numbers coincides with a partial recursive function (Theorem 85). This result immediately implies a universality result [7, 15, 58] as well: Every viable dynamic strategy on a dynamic game interpreting a type of PCF is (up to intrinsic equivalence) the denotation of a term of PCF (Corollary 89). In addition, some of the well-known theorems in computability theory [16, 60] such as the smn theorem and the first recursion theorem are generalized to non-classical computation (Corollaries 83 and 84). We hope that these technical results would convince the reader that viability of dynamic strategies is a natural, reasonable generalization of Church–Turing computability.

Another, more conceptual contribution of the present work is to establish a single mathematical framework for both high-level and low-level computational processes, where the former defines what computation does, while the latter describes how to execute the former. In comparison with existing mathematical models of computation, our game-semantic approach has some novel features. First, in comparison with computation by TMs or programming languages, plays of games are a more abstract concept; in particular they are not necessarily symbol manipulations, which is why they are suitable for abstract, high-level computational processes. Next, computation in a game proceeds as an interaction between P and O, which may be seen as a generalization of computation by TMs in which just one interaction occurs (i.e., O gives an input on the infinite tape, and then P returns an output on the tape); this in particular means that O’s computation does not have to be recursive, and it is part of the formalization, which is why game semantics in general captures higher-order computation in a natural, systematic manner. The present work inherits this interactive nature of game semantics. Last but not least, games are a semantic counterpart of types, where note that types do not a priori exist in TMs, and types in programming languages are syntactic entities. Hence, our approach provides a deeper clarification of types in the context of theory of computation.

Moreover, by exploiting the flexibility of game semantics, our approach would be applicable to a wide range of computation though it is left as future work. Also, game semantics has interpreted various logics as well [1, 5, 37, 72], and so it would be possible to employ our framework for a realizability interpretation of constructive logic [65, 68], for which viable dynamic strategies would be more suitable as realizers than existing strategies such as [12] since the former contains more ‘computational contents’ and makes more sense as a model of computation than the latter. Furthermore, the game models [1, 72] interpret Martin-Löf type theory, one of the most prominent foundations of constructive mathematics, and thus our framework would provide a mathematical, syntax-independent formalization of constructive mathematics too.Footnote 8 Of course, we need to work out details for these developments, which is out of the scope of the present paper, but it is in principle clear how to apply our framework to existing game semantics. In this sense, the present work would serve as a stepping stone toward these extensions.

In the literature, there have been several attempts to provide a mathematical foundation of computation beyond classical or symbolic ones. We do not claim at all that our game-semantic approach is best or canonical in comparison with the previous work; however, our approach certainly has some advantages. For instance, Robin Gandy proposed in the famous paper [22] a notion of ‘mechanical devices,’ now known as Gandy machines (GMs), which appear more general than TMs, but showed that TMs are actually as powerful as GMs. However, since GMs are an axiomatic approach to define a general class of ‘mechanical devices’ that are ‘effectively executable,’ they do not give a distinction between high-level and low-level computational processes, where GMs formulate the latter. More recent abstract state machines (ASMs) [33] introduced by Yuri Gurevich employ a similar idea to that of GMs for ‘effectivity,’ namely to require an upper bound of elements that may change in a single step of computation, utilizing structures in the sense of mathematical logic [63]. Notably, ASMs define a very general notion of computation, namely computation as structure transition. However, it seems that this framework is in some sense too general; for instance, it is possible that an ASM computes a real number in a single step, but then its ‘effectivity’ is questionable. In general, an appropriate notion of ‘effective computability’ of ASMs has been missing. Also, the way of computing a function by an ASM is to update input/output pairs of the function in the element-wise fashion, but it does not seem to be a common or natural process in practice. In addition, Yiannis Moschovakis considered a mathematical foundation of algorithms [54] in which, similarly to us, he proposed that algorithms and their ‘implementations’ should be distinguished, where by algorithms he refers to what we call high-level computational processes. However, his framework, called recursors, is also based on structures, and his notion of algorithms is relative to atomic operations given in each structure; thus, it does not give a foundational analysis on the notion of ‘effective computability.’ Therefore, although the previous work captures broader notions of computation than the present work, our approach has the advantage of achieving both the distinction between high-level and low-level computational processes and the primitive, intrinsic notion of ‘effective computability.’ Also, the interactive, typed nature of game semantics stands in sharp contrast to the previous work as well.

At this point, we need to mention computability logic [39] developed by Giorgi Japaridze since his idea is similar to ours; he defines ‘effective computability’ via computing machines playing in games. Nevertheless, there are notable differences between computability logic and the present work. First, computing machines in computability logic are a variant of TMs, and thus they are less novel as a model of computation than our approach; in fact, the definition of ‘effective computability’ in computability logic can be seen more or less as a consequence of just spelling out the standard notion of recursive strategies [7, 20, 38]. Next, our framework inherits the categorical structure of existing game semantics (see [71] for this point), providing a compositional formulation of logic and computation, i.e., a compound proof or program is constructed from its components, while there has been no known categorical structure of computability logic. Nevertheless, it would be interesting to adopt his TMs-based approach in our framework and compare the resulting computational power with that of the present work.

Finally, let us mention some of the precursors of game semantics. To clarify the notion of higher-order computability, Stephen Cole Kleene considered a model of higher-order computation based on dialogues between computational oracles in a series of papers [42,43,44], which can be seen as the first attempt to define a mathematical notion of algorithms in a higher-order setting [50]. Moreover, Gandy and his student Giovanni Pani refined these works by Kleene to obtain a model of PCF that satisfies universality though this work was not published. These previous papers are direct ancestors of game semantics (in particular the so-called HO-games [38] by Martin Hyland and Luke Ong). As another line of research (motivated by the full abstraction problem for PCF [58]), Pierre-Louis Curien and Gerard Berry conceived of sequential algorithms [10] which was the first attempt to go beyond (extensional) functions to capture sequentiality of PCF. Sequential algorithms preceded and became highly influential to the development of game semantics; in fact, sequential algorithms are presented in the style of game semantics in [50], and it is shown in [14] that the oracle computation developed by Kleene can be represented by sequential algorithms (though the converse does not hold). Nevertheless, a point we would like to emphasize here is that neither of the previous attempts defines ‘effective computability’ in a similar manner to the present work; our approach has an advantage in its intrinsic, non-inductive, non-axiomatic nature.

1.9 Structure of the paper

The rest of the paper proceeds roughly as follows. This introduction ends with fixing some notation. Then, recalling dynamic games and strategies in Sect. 2, we define viability of strategies and establish, as the main theorem, the fact that viable dynamic strategies may interpret all terms of PCF in Sect. 3, proving their Turing completeness as a corollary. Finally, we draw a conclusion and propose future work in Sect. 4.

Notation

We use the following notation throughout the paper:

- We use bold letters \(\varvec{s}, \varvec{t}, \varvec{u}, \varvec{v}\), etc. for sequences, in particular \(\varvec{\epsilon }\) for the empty sequence, and letters a, b, c, d, m, n, x, y, z, etc. for elements of sequences;

- We often abbreviate a finite sequence \(\varvec{s} = (x_1, x_2, \dots , x_{|\varvec{s}|})\) as \(x_1 x_2 \dots x_{|\varvec{s}|}\), where \(|\varvec{s}|\) denotes the length (i.e., the number of elements) of \(\varvec{s}\), and write \(\varvec{s}(i)\), where \(i \in \{ 1, 2, \dots , |\varvec{s}| \}\), as another notation for \(x_i\);

- A concatenation of sequences is represented by the juxtaposition of them, but we often write \(a \varvec{s}\), \(\varvec{t} b\), \(\varvec{u} c \varvec{v}\) for \((a) \varvec{s}\), \(\varvec{t} (b)\), \(\varvec{u} (c) \varvec{v}\), etc., and also write \(\varvec{s} . \varvec{t}\) for \(\varvec{s t}\);

- We define \(\varvec{s}^n {\mathop {=}\limits ^{\mathrm {df. }}} \underbrace{\varvec{s} \varvec{s} \cdots \varvec{s}}_n\) for a sequence \(\varvec{s}\) and a natural number \(n \in \mathbb {N}\);

- We write \(\mathsf {Even}(\varvec{s})\) (resp. \(\mathsf {Odd}(\varvec{s})\)) iff \(\varvec{s}\) is of even-length (resp. odd-length);

- We define \(S^\mathsf {P} {\mathop {=}\limits ^{\mathrm {df. }}} \{ \varvec{s} \in S \mid \mathsf {P}(\varvec{s}) \}\) for a set S of sequences and \(\mathsf {P} \in \{ \mathsf {Even}, \mathsf {Odd} \}\);

- \(\varvec{s} \preceq \varvec{t}\) means \(\varvec{s}\) is a prefix of \(\varvec{t}\), i.e., \(\varvec{t} = \varvec{s} . \varvec{u}\) for some sequence \(\varvec{u}\), and given a set S of sequences, we define \(\mathsf {Pref}(S) {\mathop {=}\limits ^{\mathrm {df. }}} \{ \varvec{s} \mid \exists \varvec{t} \in S . \ \! \varvec{s} \preceq \varvec{t} \ \! \}\);

- For a poset P and a subset \(S \subseteq P\), \(\mathsf {Sup}(S)\) denotes the supremum of S;

- \(X^* {\mathop {=}\limits ^{\mathrm {df. }}} \{ x_1 x_2 \ldots x_n \mid n \in \mathbb {N}, \forall i \in \{ 1, 2, \ldots , n \} . \ \! x_i \in X \ \! \}\) for each set X;

- For a function \(f : A \rightarrow B\) and a subset \(S \subseteq A\), we define \(f \upharpoonright S : S \rightarrow B\) to be the restriction of f to S, and \(f^*: A^*\rightarrow B^*\) by \(f^*(a_1 a_2 \dots a_n) {\mathop {=}\limits ^{\mathrm {df. }}} f(a_1) f(a_2) \dots f(a_n) \in B^*\) for all \(a_1 a_2 \dots a_n \in A^*\);

- Given sets \(X_1, X_2, \dots , X_n\), and an index \(i \in \{ 1, 2, \dots , n \}\), we write \(\pi _i\) (or \(\pi _i^{(n)}\)) for the ith projection function \(X_1 \times X_2 \times \dots \times X_n \rightarrow X_i\) that maps \((x_1, x_2, \dots , x_n) \mapsto x_i\) for all \(x_j \in X_j\) (\(j = 1, 2, \ldots , n\));

- \(\simeq \) denotes the Kleene equality, i.e., \(x \simeq y {\mathop {\Leftrightarrow }\limits ^{\mathrm {df. }}} (x \downarrow \wedge \ y \downarrow \wedge \ x = y) \vee (x \uparrow \wedge \ y \uparrow )\), where we write \(x \downarrow \) if an element x is defined, and \(x \uparrow \) otherwise.
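As a small illustration of three of these notations on finite data, the sketch below computes \(\mathsf {Pref}(S)\), the lifted map \(f^*\) and the Kleene equality. Sequences are represented as Python tuples and an undefined value as `None`; these representations and the function names are assumptions of the sketch, not part of the paper.

```python
from typing import Callable, Iterable, Optional, Set, Tuple

Seq = Tuple[str, ...]


def pref(S: Iterable[Seq]) -> Set[Seq]:
    """Pref(S): all prefixes (including the empty one) of sequences in S."""
    return {s[:i] for s in S for i in range(len(s) + 1)}


def star(f: Callable[[str], str]) -> Callable[[Seq], Seq]:
    """f*: apply f to each element of a finite sequence."""
    return lambda seq: tuple(f(a) for a in seq)


def kleene_eq(x: Optional[int], y: Optional[int]) -> bool:
    """Kleene equality, with None standing for 'undefined'."""
    if x is None or y is None:
        return x is None and y is None   # both undefined, or exactly one
    return x == y                        # both defined: compare values
```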

2 Preliminary: games and strategies

Our games and strategies are essentially the ‘dynamic refinement’ of McCusker’s variant [6, 51],Footnote 9 which has been proposed under the name of dynamic games and strategies by the present author and Abramsky in [71] to capture dynamics (or rewriting) and intensionality (or algorithms) of computation by mathematical, particularly syntax-independent, concepts. As already explained, we have chosen this variant since, in contrast to conventional games and strategies, dynamic games and strategies capture step-by-step processes in computation, which is essential for a TMs-like model of computation.

However, we need some modifications of dynamic games and strategies. First, although disjoint union of sets of moves (for constructions on games) is usually treated informally for brevity, we need to adopt a particular formalization of ‘tags’ for the disjoint union because we are concerned with ‘effective computability’ of strategies, and thus, we must show that manipulations of ‘tags’ are all ‘effectively executable’ by strategies. In particular, we have to employ exponential ! in which different ‘rounds’ or threads are distinguished by such ‘effective tags.’

In addition, we slightly refine the original definition of dynamic games by requiring that an intermediate occurrence of an O-move in a position of a dynamic game must be a mere copy of the last occurrence of a P-move, which reflects the example of composition without hiding in the introduction. This modification is due to our computability-theoretic motivation: Intermediate occurrences of moves are ‘invisible’ to O (as in the example of composition without hiding), and therefore, P has to ‘effectively’ compute intermediate occurrences of O-moves too (though this point does not matter in [71]); note that it is clearly ‘effective’ to just copy and repeat a move. Also, it conceptually makes sense as well: Intermediate occurrences of O-moves are just copies or dummies of those of P-moves, and thus what happens in the intermediate part of each play is essentially P’s calculation only. Technically, this is achieved by introducing dummy internal O-moves (Definition 8) and strengthening the axiom DP2 (Definition 18). Let us remark, however, that this refinement is technically trivial, and it is not our main contribution.

This section presents the resulting variant of games and strategies. Fixing an implementation of ‘tags’ in Sect. 2.1 as a preparation, we recall (the slightly modified) dynamic games and strategies in Sects. 2.2 and 2.3, respectively. To make this paper essentially self-contained, we shall explain motivations and intuitions behind the definitions.

2.1 On ‘tags’ for disjoint union of sets

Let us begin with fixing ‘tags’ for disjoint union of sets that can be ‘effectively’ manipulated. We first define outer tags (Definition 3) for exponential (Definition 33), and then inner tags (Definition 5) for other constructions on games.

Definition 1

(Effective tags) An effective tag is a finite sequence over the two-element set \(\Sigma = \{ \ell , \hbar \}\), where \(\ell \) and \(\hbar \) are arbitrarily fixed elements such that \(\ell \ne \hbar \).

Definition 2

(Decoding and encoding) The decoding function \( de : \Sigma ^*\rightarrow \mathbb {N}^*\) and the encoding function \( en : \mathbb {N}^*\rightarrow \Sigma ^*\) are defined, respectively, by:

\[ de (\varvec{\gamma }) {\mathop {=}\limits ^{\mathrm {df. }}} (i_1, i_2, \dots , i_k) \qquad en (j_1, j_2, \dots , j_l) {\mathop {=}\limits ^{\mathrm {df. }}} \ell ^{j_1} \hbar \ \! \ell ^{j_2} \hbar \dots \ell ^{j_{l-1}} \hbar \ \! \ell ^{j_l} \]

for all \(\varvec{\gamma } \in \Sigma ^*\) and \((j_1, j_2, \dots , j_l) \in \mathbb {N}^*\), where \(\varvec{\gamma } = \ell ^{i_1} \hbar \ \! \ell ^{i_2} \hbar \dots \ell ^{i_{k-1}} \hbar \ \! \ell ^{i_k}\).

Clearly, the functions \( de : \Sigma ^*\leftrightarrows \mathbb {N}^*: en \) are mutually inverse (n.b., they both map the empty sequence \(\varvec{\epsilon }\) to itself). In fact, each effective tag \(\varvec{\gamma } \in \Sigma ^*\) is intended to be a binary representation of the finite sequence \( de (\varvec{\gamma }) \in \mathbb {N}^*\) of natural numbers.
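A minimal sketch of decoding and encoding, assuming (as the ‘where’ clause of Definition 2 suggests) that \( de \) reads off the lengths of the blocks of \(\ell \) separated by \(\hbar \), and that \( en \) writes them back. The ASCII characters `l` and `h` are hypothetical stand-ins for \(\ell \) and \(\hbar \).

```python
from typing import Tuple

L, H = "l", "h"   # hypothetical ASCII stand-ins for the two elements of Sigma


def de(tag: str) -> Tuple[int, ...]:
    """Decode an effective tag into the lengths of its l-blocks."""
    assert set(tag) <= {L, H}, "an effective tag uses only the two symbols"
    if tag == "":
        return ()                 # the text sends the empty sequence to itself
    return tuple(len(block) for block in tag.split(H))


def en(numbers: Tuple[int, ...]) -> str:
    """Encode a finite sequence of naturals as l-blocks separated by h."""
    return H.join(L * j for j in numbers)

# Note: in this naive reading en((0,)) is also the empty string, so the
# one-element sequence (0) is a corner case of the round trip; the sketch
# simply follows the convention stated in the text for the empty sequence.
```

For instance, under these assumptions the tag `llhhl` decodes to the sequence (2, 0, 1), and encoding (2, 0, 1) gives back `llhhl`.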

However, effective tags are not sufficient for our purpose: For nested exponentials occurring in promotion (Definition 57) and fixed-point strategies (Example 75), we need to ‘effectively’ associate a natural number to each pair of natural numbers in an ‘effectively’ invertible manner. Of course it is possible as there is a recursive bijection \(\mathbb {N} \times \mathbb {N} {\mathop {\rightarrow }\limits ^{\sim }} \mathbb {N}\) whose inverse is recursive too, which is an elementary fact in computability theory [16, 60], but we cannot rely on it for we are aiming at developing an autonomous foundation of ‘effective computability.’
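The elementary fact mentioned above can be illustrated by the Cantor pairing function, one standard recursive bijection \(\mathbb {N} \times \mathbb {N} \rightarrow \mathbb {N}\) with a recursive inverse. The paper does not say which bijection it has in mind, and it deliberately avoids relying on any; the sketch below only illustrates the classical fact, with hypothetical function names.

```python
from math import isqrt
from typing import Tuple


def pair(x: int, y: int) -> int:
    """Cantor pairing: enumerate pairs along the diagonals x + y = const."""
    return (x + y) * (x + y + 1) // 2 + y


def unpair(z: int) -> Tuple[int, int]:
    """Inverse of pair: recover the diagonal index, then the offset on it."""
    w = (isqrt(8 * z + 1) - 1) // 2   # largest w with w(w+1)/2 <= z
    y = z - w * (w + 1) // 2          # position on the w-th diagonal
    return w - y, y
```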

On the other hand, such a bijection is necessary only for manipulating effective tags, and so we would like to avoid an involved mechanism to achieve it. Then, our solution for this problem is to simply introduce elements to denote the bijection:

Definition 3

(Outer tags) An outer tag or an extended effective tag is an expression  , where  and  are arbitrarily fixed elements such that  and  , generated by the grammar \(\varvec{e} {\mathop {\equiv }\limits ^{\mathrm {df. }}} \varvec{\gamma } \mid \varvec{e}_1 \hbar \ \! \varvec{e}_2 \mid \) , where \(\varvec{\gamma }\) ranges over effective tags.

Notation

Let \(\mathcal {T}\) denote the set of all outer tags.

Definition 4

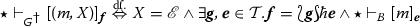

(Extended decoding) The extended decoding function \( ede : \mathcal {T} \rightarrow \mathbb {N}^*\) is recursively defined by:

where \(\wp : \mathbb {N}^*{\mathop {\rightarrow }\limits ^{\sim }} \mathbb {N}\) is any recursive bijection fixed throughout the present paper such that \(\wp (i_1, i_2, \dots , i_k) \ne \wp ( j_1, j_2, \dots , j_l)\) whenever \(k \ne l\) (see, e.g., [16]).

Of course, we lose the bijectivity between \(\Sigma ^*\) and \(\mathbb {N}^*\) for outer tags (e.g., if  , then \( ede (\ell ^{i}) = (i)\), but  ), but in return, we may ‘effectively execute’ the bijection \(\wp : \mathbb {N}^*{\mathop {\rightarrow }\limits ^{\sim }} \mathbb {N}\) by just inserting the elements  and  .Footnote 10 We shall utilize outer tags for exponential !; see Definition 33.

On the other hand, for ‘tags’ on moves for other constructions on games, i.e., \((\_)^{[i]}\) in the introduction, let us employ just four distinguished elements:

Definition 5

(Inner tags) Let \(\mathscr {W}\), \(\mathscr {E}\), \(\mathscr {N}\) and \(\mathscr {S}\) be arbitrarily fixed, pairwise distinct elements. A finite sequence \(\varvec{s} \in \{ \mathscr {W}, \mathscr {E}, \mathscr {N}, \mathscr {S} \}^*\) is called an inner tag.

We shall focus on games whose moves are all tagged elements:

Definition 6

(Inner elements) An inner element is a finitely nested pair \(( \dots ((m, t_1), t_2), \dots , t_k)\), usually written \(m_{t_1 t_2 \dots t_k}\), such that m is a distinguished element, called the substance of \(m_{t_1 t_2 \dots t_k}\), and \(t_1 t_2 \dots t_k\) is an inner tag.

Definition 7

(Tagged elements) A tagged element is any pair \((m_{t_1 t_2 \dots t_k}, \varvec{e})\), usually written \([m_{t_1 t_2 \dots t_k}]_{\varvec{e}}\), of an inner element \(m_{t_1 t_2 \dots t_k}\) and an outer tag \(\varvec{e} \in \mathcal {T}\).

Convention

We often abbreviate an inner element \(m_{t_1 t_2 \dots t_k}\) as m if the inner tag \(t_1 t_2 \dots t_k\) is not very important.

2.2 Games

As already stated, our games are (slightly modified) dynamic games introduced in [71]. The main idea of dynamic games is to introduce, in McCusker’s games [6, 51], a distinction between internal and external moves, where internal moves constitute internal communication between strategies (i.e., moves with square boxes in the introduction), and they are to be a posteriori hidden by the hiding operation, in order to capture intensionality and dynamics of computation by internal moves and the hiding operation, respectively. Conceptually, internal moves are ‘invisible’ to O as they represent how P ‘internally’ calculates the next external P-move (i.e., step-by-step processes in computation). In addition, unlike [71], we restrict internal O-moves to dummies of internal P-moves (Definition 8) for the computability-theoretic motivation already mentioned at the beginning of Sect. 2.

We first review (the slightly modified) dynamic games in the present section; see [71] for the details, and [3, 6, 37] for a general introduction to game semantics.

Convention

To distinguish our ‘dynamic concepts’ from conventional ones [6, 51], we add the word static in front of the latter, e.g., static arenas, static games, etc.

2.2.1 Arenas and legal positions

Similarly to McCusker’s games, dynamic games are based on two preliminary concepts: (dynamic) arenas and legal positions. An arena defines the basic components of a game, which in turn induces its legal positions that specify the basic rules of the game. Let us begin with recalling these two concepts.

Definition 8

(Dynamic arenas [71]) A dynamic arena is a quadruple \(G = (M_G, \lambda _G, \vdash _G, \Delta _G)\), where:

- \(M_G\) is a set of tagged elements, called moves, such that: (M) the set \(\pi _1(M_G)\) of all inner elements of G is finite;

- \(\lambda _G\) is a function \(M_G \rightarrow \{ \mathsf {O}, \mathsf {P} \} \times \{ \mathsf {Q}, \mathsf {A} \} \times \mathbb {N}\), called the labeling function, where \(\mathsf {O}\), \(\mathsf {P}\), \(\mathsf {Q}\) and \(\mathsf {A}\) are arbitrarily fixed, pairwise distinct symbols, called the labels, that satisfies: (L) \(\mu (G) {\mathop {=}\limits ^{\mathrm {df. }}} \mathsf {Sup}(\{ \lambda _G^{\mathbb {N}}(m) \mid m \in M_G \ \! \}) \in \mathbb {N}\);

- \(\vdash _G\) is a subset of \((\{ \star \} \cup M_G) \times M_G\), where \(\star \) is an arbitrarily fixed symbol such that \(\star \not \in M_G\), called the enabling relation, that satisfies:

  - (E1) If \(\star \vdash _G m\), then \(\lambda _G(m) = (\mathsf {O}, \mathsf {Q}, 0)\), and \(n = \star \) whenever \(n \vdash _G m\);

  - (E2) If \(m \vdash _G n\) and \(\lambda _G^{\mathsf {QA}}(n) = \mathsf {A}\), then \(\lambda _G^{\mathsf {QA}}(m) = \mathsf {Q}\) and \(\lambda _G^{\mathbb {N}}(m) = \lambda _G^{\mathbb {N}}(n)\);

  - (E3) If \(m \vdash _G n\) and \(m \ne \star \), then \(\lambda _G^{\mathsf {OP}}(m) \ne \lambda _G^{\mathsf {OP}}(n)\);

  - (E4) If \(m \vdash _G n\), \(m \ne \star \) and \(\lambda _G^{\mathbb {N}}(m) \ne \lambda _G^{\mathbb {N}}(n)\), then \(\lambda _G^{\mathsf {OP}}(m) = \mathsf {O}\);

- \(\Delta _G\) is a bijection \(M_G^{\mathsf {PInt}} {\mathop {\rightarrow }\limits ^{\sim }} M_G^{\mathsf {OInt}}\), called the dummy function, that satisfies: (D) there exists some finite partial function \(\delta _G\) on inner tags such that if \([m_{\varvec{t}}]_{\varvec{e}} \in M_G^{\mathsf {PInt}}\), \([n_{\varvec{u}}]_{\varvec{f}} \in M_G^{\mathsf {OInt}}\) and \(\Delta _G([m_{\varvec{t}}]_{\varvec{e}}) = [n_{\varvec{u}}]_{\varvec{f}}\), then \(m = n\), \(\varvec{e} = \varvec{f}\), \(\lambda _G^{\mathsf {QA}}([m_{\varvec{t}}]_{\varvec{e}}) = \lambda _G^{\mathsf {QA}}([n_{\varvec{u}}]_{\varvec{f}})\), \(\lambda _G^{\mathbb {N}}([m_{\varvec{t}}]_{\varvec{e}}) = \lambda _G^{\mathbb {N}}([n_{\varvec{u}}]_{\varvec{f}})\) and \(\varvec{u} = \delta _G(\varvec{t})\)

in which \(\lambda _G^{\mathsf {OP}} {\mathop {=}\limits ^{\mathrm {df. }}} \pi _1 \circ \lambda _G : M_G \rightarrow \{ \mathsf {O}, \mathsf {P} \}\), \(\lambda _G^{\mathsf {QA}} {\mathop {=}\limits ^{\mathrm {df. }}} \pi _2 \circ \lambda _G : M_G \rightarrow \{ \mathsf {Q}, \mathsf {A} \}\), \(\lambda _G^{\mathbb {N}} {\mathop {=}\limits ^{\mathrm {df. }}} \pi _3 \circ \lambda _G : M_G \rightarrow \mathbb {N}\), \(M_G^{\mathsf {PInt}} {\mathop {=}\limits ^{\mathrm {df. }}} \langle \lambda _G^{\mathsf {OP}}, \lambda _G^{\mathbb {N}} \rangle ^{-1}(\{ (\mathsf {P}, d) \mid d \geqslant 1 \ \! \})\) and \(M_G^{\mathsf {OInt}} {\mathop {=}\limits ^{\mathrm {df. }}} \langle \lambda _G^{\mathsf {OP}}, \lambda _G^{\mathbb {N}} \rangle ^{-1}(\{ (\mathsf {O}, d) \mid d \geqslant 1 \ \! \})\). A move \(m \in M_G\) is initial if \(\star \vdash _G m\), an O-move (resp. a P-move) if \(\lambda _G^{\mathsf {OP}}(m) = \mathsf {O}\) (resp. if \(\lambda _G^{\mathsf {OP}}(m) = \mathsf {P}\)), a question (resp. an answer) if \(\lambda _G^{\mathsf {QA}}(m) = \mathsf {Q}\) (resp. if \(\lambda _G^{\mathsf {QA}}(m) = \mathsf {A}\)), and internal or \(\varvec{\lambda _{{\varvec{G}}}^{\mathbb {N}}({{\varvec{m}}})}\)-internal (resp. external) if \(\lambda _G^{\mathbb {N}}(m) > 0\) (resp. if \(\lambda _G^{\mathbb {N}}(m) = 0\)). A finite sequence \(\varvec{s} \in M_G^*\) of moves is \({{{\varvec{d}}}}\)-complete if it ends with a move m such that \(\lambda _G^{\mathbb {N}}(m) = 0 \vee \lambda _G^{\mathbb {N}}(m) > d\), where \(d \in \mathbb {N} \cup \{ \omega \}\), and \(\omega \) is the least transfinite ordinal. For each \(m \in M_G^{\mathsf {PInt}}\), \(\Delta _G(m) \in M_G^{\mathsf {OInt}}\) is the dummy of m.
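The axioms E1 to E4 can be checked mechanically on a finite arena. The following is a minimal sketch under simplifying assumptions: moves are plain strings rather than tagged elements, `"*"` stands for \(\star \), and the axioms M, L and D are not checked. The function name `violated_axioms` and the toy arena in the usage note are hypothetical.

```python
from typing import Dict, List, Set, Tuple

STAR = "*"                      # stands for the symbol star, assumed not a move
Label = Tuple[str, str, int]    # (O or P, Q or A, priority order)


def violated_axioms(labels: Dict[str, Label],
                    enabling: Set[Tuple[str, str]]) -> List[str]:
    """Return the sorted names of the axioms among E1-E4 that fail."""
    bad = set()
    for m, n in enabling:
        op_n, qa_n, d_n = labels[n]
        if m == STAR:
            # E1: an initial move is an external O-question ...
            if labels[n] != ("O", "Q", 0):
                bad.add("E1")
            # ... and nothing other than star enables it.
            if any(k != STAR for k, target in enabling if target == n):
                bad.add("E1")
            continue
        op_m, qa_m, d_m = labels[m]
        # E2: an answer is enabled by a question of the same priority order.
        if qa_n == "A" and (qa_m != "Q" or d_m != d_n):
            bad.add("E2")
        # E3: enabling alternates between O and P.
        if op_m == op_n:
            bad.add("E3")
        # E4: the priority order may change only across an O-move.
        if d_m != d_n and op_m != "O":
            bad.add("E4")
    return sorted(bad)
```

As a usage example, a toy arena with one external O-question `q` enabled by `*` and one external P-answer `a` enabled by `q` passes all four checks, whereas relabeling `a` as an O-move makes E3 fail.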

Notation

We write \(M_G^{\mathsf {Init}}\) (resp. \(M_G^{\mathsf {Int}}\), \(M_G^{\mathsf {Ext}}\)) for the set of all initial (resp. internal, external) moves of a dynamic arena G.

A dynamic arena is a static arena defined in [6], equipped with another labeling \(\lambda _G^{\mathbb {N}}\) on moves and dummies of internal P-moves, satisfying additional axioms about them. From the opposite angle, dynamic arenas are a generalization of static arenas: A static arena is equivalent to a dynamic arena whose moves are all external.

Recall that a static arena A determines possible moves of a game, each of which is O’s/P’s question/answer, and specifies which move n can be performed for each move m by the relation \(m \vdash _A n\) (and \(\star \vdash _A m\) means that m can initiate a play). Its axioms are E1, E2 and E3 (excluding the conditions on \(\lambda _A^{\mathbb {N}}\)):

- E1 sets the convention that an initial move must be O’s question, and an initial move cannot be performed for a previous move;

- E2 states that an answer must be performed for a question;

- E3 mentions that an O-move must be performed for a P-move, and vice versa.

Then, as an additional structure for dynamic arenas G, the work [71] employs all natural numbers for \(\lambda _G^{\mathbb {N}}\), not only the internal/external (I/E)-parity, to define a step-by-step execution of the hiding operation \(\mathcal {H}\): The operation \(\mathcal {H}\) deletes all internal moves m such that \(\lambda _G^{\mathbb {N}}(m)\), called the priority order of m (since it indicates the priority order of m with respect to the execution of \(\mathcal {H}\)), is 1 and decreases the priority orders of the remaining internal moves by 1 (see Footnote 11).

In addition, unlike [71], we have introduced the additional structure of dummy functions for the computability-theoretic motivation mentioned at the beginning of Sect. 2. The idea is that each internal O-move \(m \in M_G^{\mathsf {OInt}}\) of a dynamic game G must be the dummy of a unique internal P-move \(m' \in M_G^{\mathsf {PInt}}\), i.e., \(m = \Delta _G(m')\), and m may occur in a position only right after an occurrence of \(m'\), which axiomatizes the phenomenon of intermediate occurrences of moves in the composition of \( succ \) and \( double \) without hiding described in the introduction. We shall formalize this restriction on occurrences of internal O-moves by the axiom DP2 in Definition 18.

Note that the additional axioms for dynamic arenas are intuitively natural:

- M requires the set \(\pi _1 (M_G)\) to be finite so that each move is distinguishable, which is not required in [71] yet necessary to define ‘effectivity’ in the present work;

- L requires the least upper bound \(\mu (G)\) to be finite as it is conceptually natural and technically necessary for concatenation \(\ddagger \) of games (Definition 36);

- E1 adds \(\lambda _G^{\mathbb {N}}(m) = 0\) for all \(m \in M_G^{\mathsf {Init}}\) as O cannot ‘see’ internal moves, and thus, he cannot initiate a play with an internal move;

- E2 additionally requires the priority orders of the two moves in a ‘QA pair’ to be the same since otherwise an output of the hiding operation may not be well defined;

- E4 states that only P can perform a move for a previous move if they have different priority orders because internal moves are ‘invisible’ to O (as we shall see, if \(\lambda _G^{\mathbb {N}}(m_1) = k_1 < k_2 = \lambda _G^{\mathbb {N}}(m_2)\), then after \(k_1\) iterations of the hiding operation, \(m_1\) and \(m_2\) become external and internal, respectively, i.e., the I/E-parity of moves is relative, which is why E4 is concerned not only with I/E-parity but also with the more fine-grained priority orders);

- D requires that each internal P-move \(p \in M_G^{\mathsf {PInt}}\) and its dummy \(\Delta _G(p) \in M_G^{\mathsf {OInt}}\) may differ only in their inner tags since the latter is the dummy of the former (n.b., it reflects the informal example in the introduction), and \(\Delta _G(p)\) is ‘effectively’ obtainable from p by a finitary calculation \(\delta _G\) on inner tags.

Convention

From now on, arenas refer to dynamic arenas by default.

As explained previously, an interaction between P and O in a game is represented by a finite sequence of moves that satisfies certain axioms (under the name of (valid) positions; see Definition 18). Strictly speaking, however, we equip such sequences with an additional structure, called justifiers or pointers, to distinguish similar yet different computational processes (see, e.g., [6] for this point):

Definition 9

(Occurrences of moves) Given a finite sequence \(\varvec{s} \in M_G^*\) of moves of an arena G, an occurrence (of a move) in \(\varvec{s}\) is a pair \((\varvec{s}(i), i)\) such that \(i \in \{ 1, 2, \dots , |\varvec{s}| \}\). More specifically, we call the pair \((\varvec{s}(i), i)\) an initial occurrence (resp. a non-initial occurrence) in \(\varvec{s}\) if \(\star \vdash _G \varvec{s}(i)\) (resp. otherwise).

Remark

We have been so far casual about the distinction between moves and occurrences, but we shall be more precise from now on.

Definition 10

(J-sequences [6, 38]) A justified (j-) sequence of an arena G is a pair \(\varvec{s} = (\varvec{s}, \mathcal {J}_{\varvec{s}})\) of a finite sequence \(\varvec{s} \in M_G^*\) and a map \(\mathcal {J}_{\varvec{s}} : \{ 1, 2, \dots , |\varvec{s}| \} \rightarrow \{ 0, 1, 2, \dots , |\varvec{s}| \}\) such that, for all \(i \in \{ 1, 2, \dots , |\varvec{s}| \}\), we have \(\mathcal {J}_{\varvec{s}}(i) = 0\) if \(\star \vdash _G \varvec{s}(i)\), and \(0< \mathcal {J}_{\varvec{s}}(i) < i \wedge \varvec{s}({\mathcal {J}_{\varvec{s}}(i)}) \vdash _G \varvec{s}(i)\) otherwise. The justifier of each non-initial occurrence \((\varvec{s}(i), i)\) in \(\varvec{s}\) is the occurrence \((\varvec{s}({\mathcal {J}_{\varvec{s}}(i)}), \mathcal {J}_{\varvec{s}}(i))\) in \(\varvec{s}\). We say that \((\varvec{s}(i), i)\) is justified by \((\varvec{s}({\mathcal {J}_{\varvec{s}}(i)}), \mathcal {J}_{\varvec{s}}(i))\), or there is a (necessarily unique) pointer from the former to the latter.
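The two conditions of Definition 10 can be checked mechanically. The following is a minimal sketch, assuming a hypothetical encoding that is not part of the paper: moves are strings, the enabling relation is a set of pairs, the symbol \(\star \) is encoded by the string `STAR`, and the toy arena `N_ENABLING` is chosen only for illustration.

```python
from typing import List, Set, Tuple

STAR = "*"  # hypothetical stand-in for the symbol ⋆ of the enabling relation


def is_j_sequence(moves: List[str], pointers: List[int],
                  enabling: Set[Tuple[str, str]]) -> bool:
    """Check the two conditions of Definition 10.

    moves[i - 1] plays the role of s(i) and pointers[i - 1] that of J_s(i);
    (m, n) in enabling encodes m |- n, and (STAR, m) encodes that m is initial.
    """
    if len(moves) != len(pointers):
        return False
    for i, (move, j) in enumerate(zip(moves, pointers), start=1):
        if (STAR, move) in enabling:
            # An initial occurrence has no justifier.
            if j != 0:
                return False
        elif not (0 < j < i and (moves[j - 1], move) in enabling):
            # A non-initial occurrence must point to an earlier enabler.
            return False
    return True


# Toy arena (an assumption for illustration): a question q answered by 0 or 1.
N_ENABLING = {(STAR, "q"), ("q", "0"), ("q", "1")}
```

For instance, under this encoding `is_j_sequence(["q", "1"], [0, 1], N_ENABLING)` holds, whereas replacing the pointers by `[0, 0]` fails because the answer would lack a justifier.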

Notation

We write \(\mathscr {J}_G\) for the set of all j-sequences of an arena G.

Convention

By abuse of notation, we usually keep the pointer structure \(\mathcal {J}_{\varvec{s}}\) of each j-sequence \(\varvec{s} = (\varvec{s}, \mathcal {J}_{\varvec{s}})\) implicit and often abbreviate occurrences \((\varvec{s}(i), i)\) in \(\varvec{s}\) as \(\varvec{s}(i)\). Thus, \(\varvec{s} = \varvec{t} \in \mathscr {J}_G\) means \(\varvec{s} = \varvec{t}\) and \(\mathcal {J}_{\varvec{s}} = \mathcal {J}_{\varvec{t}}\). Moreover, we usually write \(\mathcal {J}_{\varvec{s}}(\varvec{s}(i)) = \varvec{s}(j)\) for \(\mathcal {J}_{\varvec{s}}(i) = j\). This convention is mathematically imprecise, but it is very convenient in practice, and it does not bring any serious confusion (in fact, it has been standard in the literature of game semantics).

The idea is that each non-initial occurrence in a j-sequence must be performed for a specific previous occurrence, viz. its justifier. Since the present paper is not concerned with a faithful interpretation of programs, one may wonder if justifiers would play any important role in the rest of the paper; however, they do in a novel manner: They allow P to ‘effectively’ collect, from the history of previous occurrences, a bounded number of necessary ones, as we shall see in Sect. 3.1.

Note that the first element m of each non-empty j-sequence \(m \varvec{s} \in \mathscr {J}_G\) must be initial; we particularly call m the opening occurrence of \(m \varvec{s}\). Clearly, an opening occurrence must be an initial occurrence, but not necessarily vice versa.

Let us now consider justifiers, j-sequences and arenas from the ‘external viewpoint’ (Definitions 12, 13 and 14):

Definition 11

(J-subsequences [71]) Given an arena G and a j-sequence \(\varvec{s} \in \mathscr {J}_G\), a j-subsequence of \(\varvec{s}\) is a j-sequence \(\varvec{t} \in \mathscr {J}_G\) such that \(\varvec{t}\) is a subsequence of \(\varvec{s}\), and \(\mathcal {J}_{\varvec{t}}(n) = m\) iff there are occurrences \(m_1, m_2, \dots , m_k\) (\(k \in \mathbb {N}\)) in \(\varvec{s}\) eliminated in \(\varvec{t}\) such that \(\mathcal {J}_{\varvec{s}}(n) = m_1 \wedge \mathcal {J}_{\varvec{s}}(m_1) = m_2 \dots \wedge \mathcal {J}_{\varvec{s}}(m_{k-1}) = m_k \wedge \mathcal {J}_{\varvec{s}}(m_{k}) = m\).

Definition 12

(External justifiers [71]) Let G be an arena, \(\varvec{s} \in \mathscr {J}_G\) and \(d \in \mathbb {N} \cup \{ \omega \}\). Each non-initial occurrence n in \(\varvec{s}\) has a unique sequence of justifiers \(m m_k \dots m_2 m_1 n\) \((k \in \mathbb {N})\) such that \(\mathcal {J}_{\varvec{s}}(n) = m_1\), \(\mathcal {J}_{\varvec{s}}(m_1) = m_{2}\), ..., \(\mathcal {J}_{\varvec{s}}(m_{k-1}) = m_k\), \(\mathcal {J}_{\varvec{s}}(m_k) = m\), \(\lambda _G^{\mathbb {N}}(m) = 0 \vee \lambda _G^{\mathbb {N}}(m) > d\) and \(0 < \lambda _G^{\mathbb {N}}(m_i) \leqslant d\) for \(i = 1, 2, \dots , k\). The occurrence m is called the \({{{\varvec{d}}}}\)-external justifier of n in \(\varvec{s}\), and written \(\mathcal {J}_{\varvec{s}}^{\circleddash d}(n)\).

Note that d-external justifiers are a simple generalization of justifiers: 0-external justifiers coincide with justifiers. They are intended to be the justifiers that remain after d iterations of the hiding operation \(\mathcal {H}\), as we shall see shortly.
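As an illustration of Definition 12, the d-external justifier can be computed by following pointers until a move of priority order 0 or greater than d is reached. This is only a sketch under assumed conventions that are not from the paper: positions are 1-based, `pointers[i - 1]` encodes \(\mathcal {J}_{\varvec{s}}(i)\), `priorities[i - 1]` encodes \(\lambda _G^{\mathbb {N}}(\varvec{s}(i))\), and `math.inf` stands in for \(\omega \). The sample data are invented and not derived from a particular arena.

```python
import math
from typing import List

OMEGA = math.inf  # hypothetical stand-in for the ordinal ω


def is_d_external(priority: float, d: float) -> bool:
    """A move survives d-hiding iff its priority order is 0 or exceeds d."""
    return priority == 0 or priority > d


def external_justifier(pointers: List[int], priorities: List[int],
                       n: int, d: float) -> int:
    """Return the 1-based position of the d-external justifier of occurrence n."""
    m = pointers[n - 1]
    # Skip every justifier m_i with 0 < priority <= d.
    while m != 0 and not is_d_external(priorities[m - 1], d):
        m = pointers[m - 1]
    if m == 0:
        raise ValueError("occurrence is initial or the input violates axiom E1")
    return m


# Invented sample: five occurrences, each justified by its predecessor.
POINTERS = [0, 1, 2, 3, 4]
PRIORITIES = [0, 2, 1, 1, 0]
```

With these data, the 0-external justifier of the fifth occurrence is its ordinary justifier at position 4, the 1-external justifier is at position 2, and the 2-external and \(\omega \)-external justifiers are both at position 1.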

Definition 13

(External j-subsequences [71]) Given an arena G, \(\varvec{s} \in \mathscr {J}_G\) and \(d \in \mathbb {N} \cup \{ \omega \}\), the \({{{\varvec{d}}}}\)-external justified (j-) subsequence \(\mathcal {H}^d_G(\varvec{s})\) of \(\varvec{s}\) is obtained from \(\varvec{s}\) by deleting occurrences of internal moves m such that \(0 < \lambda _G^{\mathbb {N}}(m) \leqslant d\) and equipping the resulting subsequence of \(\varvec{s}\) with pointers \(\mathcal {J}_{\mathcal {H}_G^d(\varvec{s})} : n \mapsto \mathcal {J}_{\varvec{s}}^{\circleddash d}(n)\).
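Definition 13 can be sketched in code as follows. The encoding is an assumption made for illustration only: a j-sequence is given by three parallel lists (moves, 1-based pointers with 0 for initial occurrences, and priority orders), `math.inf` stands in for \(\omega \), and the returned priority orders are those that the surviving moves would carry in the d-external arena, which is added here for convenience. The sample sequence is invented.

```python
import math
from typing import List, Tuple


def is_d_external(priority: float, d: float) -> bool:
    """A move survives d-hiding iff its priority order is 0 or exceeds d."""
    return priority == 0 or priority > d


def hide(moves: List[str], pointers: List[int], priorities: List[int],
         d: float) -> Tuple[List[str], List[int], List[int]]:
    """Delete occurrences with 0 < priority <= d and re-target the pointers."""
    keep = [i for i in range(1, len(moves) + 1)
            if is_d_external(priorities[i - 1], d)]
    new_index = {old: new for new, old in enumerate(keep, start=1)}
    new_pointers = []
    for i in keep:
        m = pointers[i - 1]
        # Follow pointers through deleted occurrences (d-external justifier).
        while m != 0 and not is_d_external(priorities[m - 1], d):
            m = pointers[m - 1]
        new_pointers.append(new_index[m] if m != 0 else 0)
    new_moves = [moves[i - 1] for i in keep]
    # Surviving priorities are 0 or exceed d, so they decrease by d or stay 0.
    new_priorities = [p - d if p > d else 0 for p in
                      (priorities[i - 1] for i in keep)]
    return new_moves, new_pointers, new_priorities


# Invented sample: five occurrences, each justified by its predecessor.
MOVES = ["q", "a", "b", "c", "r"]
POINTERS = [0, 1, 2, 3, 4]
PRIORITIES = [0, 2, 1, 1, 0]
```

On this sample, applying `hide` with `d = 1` twice gives the same result as applying it once with `d = 2`, namely the two occurrences `q` and `r` with `r` pointing to `q`.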

Remark

It should be clear how to reformulate Definitions 11, 12 and 13 more formally, following Definitions 9 and 10.

Definition 14

(External arenas [71]) Let G be an arena, and assume \(d \in \mathbb {N} \cup \{ \omega \}\). The \({{{\varvec{d}}}}\)-external arena \(\mathcal {H}^d(G)\) of G is defined by:

- \(M_{\mathcal {H}^d(G)} {\mathop {=}\limits ^{\mathrm {df. }}} \{ m \in M_G \mid \lambda _G^{\mathbb {N}}(m) = 0 \vee \lambda _G^{\mathbb {N}}(m) > d \ \! \}\);

- \(\lambda _{\mathcal {H}^d(G)} {\mathop {=}\limits ^{\mathrm {df. }}} \lambda _G^{\circleddash d} \upharpoonright M_{\mathcal {H}^d(G)}\), where \(\lambda _G^{\circleddash d} {\mathop {=}\limits ^{\mathrm {df. }}} \langle \lambda _G^{\mathsf {OP}}, \lambda _G^{\mathsf {QA}}, m \mapsto \lambda _G^{\mathbb {N}} (m) \circleddash d \rangle \) and \(n \circleddash d {\mathop {=}\limits ^{\mathrm {df. }}} {\left\{ \begin{array}{ll} n - d &{}\text {if }n \geqslant d; \\ 0 &{}\text {otherwise} \end{array}\right. }\) for all \(n \in \mathbb {N}\);

- \(m \vdash _{\mathcal {H}^d(G)} \! n {\mathop {\Leftrightarrow }\limits ^{\mathrm {df. }}} \exists k \in \mathbb {N}, m_1, m_2, \dots , m_{2k-1}, m_{2k} \in M_G {\setminus } M_{\mathcal {H}^d(G)} . \ \! m \vdash _G m_1 \wedge m_1 \vdash _G m_2 \dots \wedge m_{2k-1} \vdash _G m_{2k} \wedge m_{2k} \vdash _G n\) (\(\Leftrightarrow m \vdash _G n\) if \(k = 0\));

- \(\Delta _{\mathcal {H}^d(G)} {\mathop {=}\limits ^{\mathrm {df. }}} \Delta _G \upharpoonright M_{\mathcal {H}^d(G)}\).

That is, \(\mathcal {H}^d(G)\) is obtained from G by deleting internal moves m such that \(0 < \lambda _G^{\mathbb {N}}(m) \leqslant d\), decreasing by d the priority orders of the remaining internal moves, ‘concatenating’ the enabling relation to form the ‘d-external’ one and taking the obvious restrictions of the labeling and the dummy functions.
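The four clauses of Definition 14 can be sketched as one function. Everything about the encoding is an assumption made for illustration: labels are a dictionary from move names to triples (O/P, Q/A, priority order), the enabling relation is a set of pairs with the hypothetical string `STAR` encoding \(\star \), the dummy function is a dictionary, and `math.inf` may be passed for \(\omega \). The toy arena is invented and has not been checked against every arena axiom.

```python
import math
from typing import Dict, Set, Tuple

STAR = "*"  # hypothetical stand-in for the symbol ⋆
Label = Tuple[str, str, int]


def monus(n: float, d: float) -> float:
    """The operation n ⊖ d: n - d if n >= d, and 0 otherwise."""
    return n - d if n >= d else 0


def hide_arena(labels: Dict[str, Label], enabling: Set[Tuple[str, str]],
               dummy: Dict[str, str], d: float):
    """Return the labels, enabling relation and dummy function of H^d(G)."""
    kept = {m for m, (_, _, p) in labels.items() if p == 0 or p > d}
    new_labels = {m: (op, qa, monus(p, d))
                  for m, (op, qa, p) in labels.items() if m in kept}
    succ: Dict[str, Set[str]] = {}
    for a, b in enabling:
        succ.setdefault(a, set()).add(b)
    new_enabling = set()
    for source in kept | {STAR}:
        # Search chains of deleted moves; parity counts them modulo 2.
        stack, seen = [(source, 0)], {(source, 0)}
        while stack:
            current, parity = stack.pop()
            for nxt in succ.get(current, ()):
                if nxt in kept:
                    if parity == 0:  # an even number 2k of deleted moves
                        new_enabling.add((source, nxt))
                elif (nxt, 1 - parity) not in seen:
                    seen.add((nxt, 1 - parity))
                    stack.append((nxt, 1 - parity))
    new_dummy = {p: o for p, o in dummy.items() if p in kept}
    return new_labels, new_enabling, new_dummy


# Invented toy arena: a chain q, u, v, x, y, w, r with two dummy pairs.
G_LABELS = {"q": ("O", "Q", 0), "u": ("P", "Q", 2), "v": ("O", "Q", 2),
            "x": ("P", "Q", 1), "y": ("O", "Q", 1), "w": ("P", "Q", 0),
            "r": ("O", "A", 0)}
G_ENABLING = {(STAR, "q"), ("q", "u"), ("u", "v"), ("v", "x"),
              ("x", "y"), ("y", "w"), ("w", "r")}
G_DUMMY = {"u": "v", "x": "y"}
```

On this toy arena, 1-hiding removes `x` and `y`, lowers the priority orders of `u` and `v` to 1 and makes `v` enable `w`; applying 1-hiding once more yields the same data as 2-hiding, in line with the iteration property stated for arenas in Lemma 15.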

Convention

Given \(d \in \mathbb {N} \cup \{ \omega \}\), we regard \(\mathcal {H}^d\) as an operation on arenas G, called the \({{{\varvec{d}}}}\)-hiding operation (on arenas), and \(\mathcal {H}_G^d\) as an operation on j-sequences of G, called the \({{{\varvec{d}}}}\)-hiding operation (on j-sequences).

Lemma 15

(Closure of arenas and j-sequences under hiding [71]) If G is an arena, then, for all \(d \in \mathbb {N} \cup \{ \omega \}\), so is \(\mathcal {H}^d(G)\), and \(\mathcal {H}_G^d(\varvec{s}) \in \mathscr {J}_{\mathcal {H}^d(G)}\) for all \(\varvec{s} \in \mathscr {J}_G\). Also, \(\underbrace{\mathcal {H}^1 \circ \mathcal {H}^1 \cdots \circ \mathcal {H}^1}_i(G) = \mathcal {H}^i (G)\) and \(\mathcal {H}^1_{\mathcal {H}^{i-1}(G)} \circ \mathcal {H}^1_{\mathcal {H}^{i-2}(G)} \cdots \circ \mathcal {H}^1_{\mathcal {H}^{1}(G)} \circ \mathcal {H}^1_{G}(\varvec{s}) = \mathcal {H}_G^i(\varvec{s})\) for any \(i \in \mathbb {N}\) (it means \(G = G\) and \(\varvec{s} = \varvec{s}\) if \(i = 0\)), arena G and \(\varvec{s} \in \mathscr {J}_G\).

Proof

We need to consider the additional structure of dummy functions; everything else has been proved in [71]. Let G be an arena, and \(d \in \mathbb {N} \cup \{ \omega \}\). For each \(p \in M_G^{\mathsf {PInt}}\), we clearly have \(p \in M_{\mathcal {H}^d(G)} \Leftrightarrow \Delta _G(p) \in M_{\mathcal {H}^d(G)}\) by the axiom D on \(\Delta _G\); thus, \(\Delta _{\mathcal {H}^d(G)}\) is a well-defined bijection \(M_{\mathcal {H}^d(G)}^{\mathsf {PInt}} {\mathop {\rightarrow }\limits ^{\sim }} M_{\mathcal {H}^d(G)}^{\mathsf {OInt}}\). Finally, the axiom D on \(\Delta _{\mathcal {H}^d(G)}\) clearly follows from that on \(\Delta _G\), completing the proof. \(\square \)

Convention