Abstract

Motors, which are among the most widely used machines in manufacturing, play a key role in precision machining. It is therefore important to accurately estimate the health state of a motor, because it affects product quality. The research outlined in this paper aims to improve motor fault severity estimation by proposing a novel deep learning method, the feature inherited hierarchical convolutional neural network (FI-HCNN). FI-HCNN consists of a fault diagnosis part and a severity estimation part, arranged hierarchically. The main novelty of the proposed FI-HCNN is the inherited structure between the levels of the hierarchy: the severity estimation part takes as input the latent features learned in the fault diagnosis task, and thereby exploits their fault-related representations. FI-HCNN can improve the accuracy of fault severity estimation because the level-specific abstraction is supported by these latent features. FI-HCNN is also easy to apply in practice because it is based on stator current signals, which are usually already acquired for control purposes. Experimental studies of mechanical motor faults, including eccentricity, broken rotor bars, and unbalance, are used to corroborate the high performance of FI-HCNN compared to both conventional methods and other hierarchical deep learning methods.

1 Introduction

Motors are widely used in manufacturing applications that require a rotating force, owing to their low cost and high reliability. In spite of this reliability, motors are subject to mechanical and electrical faults because they are exposed to unexpected stresses, such as in-use damage and environmental conditions. The degradation of motors can lead to deterioration in product quality; it is therefore crucial to diagnose the motor state and evaluate the fault severity [1].

To cope with these problems, motor current signature analysis (MCSA) has been studied for fault diagnosis (FD) and severity estimation (SE), due to its ease of implementation [2]. In particular, SE is crucial to enable proper maintenance decisions before a failure of the system. For condition-based maintenance, SE can be easily extended to fault prediction by estimating the growth of the severity [3]. Fault severity is usually defined by the size of the fault; thus, the degradation behavior of the feature is analyzed for SE [4, 5]. Most MCSA techniques for FD and SE have been developed based on the domain knowledge in general; they can be categorized into physics-based and data-driven approaches. Physics-based MCSA mainly derives spectral features that identify the particular fault using motor-specific parameters. For example, mathematical models have been formulated to investigate inter-turn shorts in stator windings [6]; further, the stator current spectrum was analyzed for SE of unbalance, eccentricity, and bearing faults [7]. These methods can be applied to generic motor systems; however, real-world applications are limited because specific motor expertise—which is not easily known—is necessary. Studies on data-driven MCSA, on the other hand, make an effort to extract fault-sensitive features and apply the proper learning method. Many signal processing methods, including wavelet decomposition [8], discrete wavelet transform [9], and empirical mode decomposition [10] have been used to design significant features. Several artificial intelligence methods have been applied to learn the manual features, for example, genetic algorithm [11], support vector machine (SVM) [12], and artificial neural network [13]. These methods do not require motor-specific expertise; however, they do require complicated signal processing techniques (which are labor-intensive) to create meaningful, handcrafted features. 
Therefore, the lack of domain knowledge hinders both MCSA approaches and results in suboptimal features that have difficulty discriminating fault modes and evaluating the fault severity. The analysis becomes even more complicated in cases with multiple fault modes with various severity levels. Thus, new FD and SE research is needed to further study and improve their performance in situations with minimal domain knowledge.

With this in mind, deep learning (DL), a family of data-driven approaches, can help to ease the problem of limited domain knowledge. In particular, the automatic feature extraction of DL has produced strong results in many machinery health monitoring situations [14–19]. The convolutional neural network (CNN), which is known as one of the most effective DL models, has demonstrated powerful performance with vibration signals for rotating systems, such as bearings [20, 21] and gearboxes [22, 23]. In the case of motors, previous studies have mainly focused on devising efficient input data for training CNN models using vibration signals [24–26]. For stator current signals, however, only a relatively small number of studies exist. For example, Ince et al. [27] used a 1-D CNN architecture to detect a motor bearing cage fault. In [28], SincNet was adopted to classify multiple faults, including broken rotor bars and bearing faults.

In this paper, a DL-based SE method for mechanical motor faults is proposed using stator current signals. We call the new method feature inherited hierarchical CNN (FI-HCNN). The proposed model uses a hierarchical CNN (HCNN) that follows the flow of performing SE after FD; further, it enhances the performance of SE through a novel connecting architecture. Each SE module of FI-HCNN learns the level characteristics of a particular fault mode from the latent features, which are representations formed in the FD module. This is possible because, in the proposed FI-HCNN, the latent features of the FD module are used as inputs to the corresponding SE modules. Moreover, the proposed FI-HCNN accounts for the continuity of fault severity by training the SE modules with regression. Severity could instead be computed from a classifier by interpolating over the predicted probabilities of the discrete severity levels, but that approach presumes that all fault severities are linearly related. Two main contributions are made in this research: (1) To the best of our knowledge, this is the first time a stator current signal has been used with a hierarchical DL structure to diagnose and evaluate motor faults. While existing studies on DL-based motor FD mostly use vibration signals, we propose a new DL method for FD and SE of induction motors based on the stator current signal. (2) A special connecting structure is suggested and its benefits for SE are explored. Through this connection, the latent feature spaces of the prior module are transferred to the subsequent modules, where they support the SE module as it captures the sophisticated level-specific features of a particular fault. FI-HCNN is evaluated by comparing its performance with that of both conventional MCSA methods and other HCNN methods.

The remainder of this paper is organized as follows. The related work that is necessary to understand the proposed FI-HCNN method is detailed in Sect. 2. Section 3 describes the developed FI-HCNN method. The effectiveness of the developed method is discussed in Sect. 4, including comparisons with several existing methods and experimental validation. The conclusions are presented in Sect. 5.

2 Related Works

2.1 Convolutional Neural Networks (CNN)

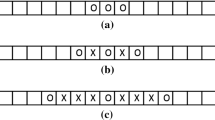

A CNN consists of three types of layers: the convolution layer, the pooling layer, and the fully connected (FC) layer. These layers operate differently from those of a multilayer perceptron (MLP), which is built solely from FC layers. A CNN has two distinctive properties that improve its memory and statistical efficiency: (1) sparse connectivity and (2) parameter sharing [29]. Sparse connectivity refers to the fact that each node in a layer is connected to only a limited number of nodes in the previous layer through a filter (also called a kernel) that is smaller than the input. This architecture, in which each filter is connected only to the nodes in its receptive field rather than to all inputs, encourages the filters in the early layers to concentrate on low-level features, which are combined into high-level features as layers are stacked. Parameter sharing means that the same filter weights are applied at every position of the input within a layer. These two properties are illustrated in Fig. 1. Through these two properties, a CNN learns the filters best suited to the objective of the model and builds the corresponding sets of feature maps.
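The two properties can be made concrete with a small numerical sketch. The following is a minimal, illustrative 1-D convolution written with NumPy; the function name `conv1d_valid`, the toy signal, and the three-weight filter are assumptions chosen for illustration and are not taken from the paper. As in most DL libraries, the operation is implemented as a sliding dot product without flipping the filter.

```python
import numpy as np

def conv1d_valid(signal, kernel):
    """Slide one shared kernel over the signal (no padding, stride 1)."""
    k = len(kernel)
    n_out = len(signal) - k + 1
    out = np.empty(n_out)
    for i in range(n_out):
        # Sparse connectivity: output i only sees signal[i : i + k].
        # Parameter sharing: the same kernel weights are reused for every i.
        out[i] = np.dot(signal[i:i + k], kernel)
    return out

signal = np.arange(10, dtype=float)   # toy input of length 10
kernel = np.array([1.0, 0.0, -1.0])   # one filter with three weights

feature_map = conv1d_valid(signal, kernel)

# A fully connected layer mapping 10 inputs to 8 outputs would need 10 * 8
# weights; the convolution produces its 8 outputs from 3 shared weights.
n_fc_weights = len(signal) * len(feature_map)
n_conv_weights = len(kernel)
```

In this toy case the feature map has eight entries, each computed from only three neighboring inputs, which is the sense in which the layer is sparsely connected.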

2.2 Hierarchical Networks

A hierarchical network consists of a parent and two or more child modules. In image classification, several hierarchical models have been proven effective by categorizing the superclass in the parent module and classifying the fine classes in the child modules. Figure 2 depicts the schematic of a hierarchical network, where the total number of classes is N1 + N2 + … + Nk; these can be categorized into k superclasses. For example, when the superclasses are set to “animal” and “building” the possible fine classes could include “cat” and “dog” for the former, and “schools” and “hospitals” for the latter. In the field of computer vision, several studies have developed algorithms to construct appropriate superclasses and classify images. In [30], the tree-based priors encouraged transfer of the input to the related classes. The hierarchical exclusive graphs in [31] classified the large-scale objects with theoretical interpretations. In [32], the algorithm was able to pretrain the fine classes independently by using a combination of shared low-level features and additional input.

3 The Proposed Feature Inherited Hierarchical CNN (FI-HCNN) Method Using Stator Current Signals

This section details the proposed feature inherited hierarchical CNN (FI-HCNN) method. First, the feature inheritance architecture of the hierarchical learning model is explained; then the overall hierarchical structure, which consists of an FD module and several SE modules, is described.

3.1 Feature Inheritance Architecture

When the hierarchical network explained in Sect. 2.2 is applied to machinery health monitoring, the parent module corresponds to FD and the child modules correspond to SE for each fault mode. Deployed in this hierarchy, the FD module and the SE modules serve two different objectives in turn: first classifying the particular fault mode, and then estimating its severity. In contrast to the ordinary hierarchical architecture, the proposed FI-HCNN delivers the latent features (\(\widehat{\mathbf{t}}\)) from the FD module to the SE module. This concept is called feature inheritance. As shown in Fig. 3, the input data \(\mathbf{x}\) evolves in the FD module into learned representations that contain rich characteristics of the particular fault mode (\({C}_{k}\)). These representations are denoted \(\widehat{\mathbf{t}}\). \(\widehat{\mathbf{t}}\) are used as the input to the SE module of \({C}_{k}\), which is trained to regress them to the severity of \({C}_{k}\) (\({S}_{{C}_{k}}\)).

Specifically, \(\widehat{\mathbf{t}}\) are the values calculated from the last pooling layer in the FD module. Once the filters of the FD module have been trained to highlight the characteristics of the fault in the input data, \(\widehat{\mathbf{t}}\), which are obtained by passing the input through these filters, are expected to contain significant and concentrated abstractions of the particular fault mode. Because \(\widehat{\mathbf{t}}\) are passed on and extended rather than discarded, the learning of the SE modules can focus on capturing higher-level features that support the regression of fault severity. Therefore, the transmission of \(\widehat{\mathbf{t}}\) helps the model learn the degree of a specific fault and leads to enhanced SE performance.

3.2 A Hierarchical Structure for Fault Diagnosis (FD) and Severity Estimation (SE)

Using feature inheritance, which is the key idea of FI-HCNN, the overall hierarchical structure is configured as shown in Fig. 4. The proposed FI-HCNN method consists of three parts: (1) preprocessing, (2) FD, and (3) SE. Each fault mode has its own SE module, while the normal state does not go through any additional module. \(\mathbf{x}\) denotes the preprocessed current data, \(\widehat{\mathbf{t}}\) denotes the latent features, \({\mathbf{W}}_{\mathbf{F}\mathbf{D}}\) and \({\mathbf{W}}_{\mathbf{S}\mathbf{E}}\) are the weight matrices of the FD and SE modules, respectively, \(C\) is the fault mode, and \(S\) is the severity of each fault mode. The severity ranges from 0 to 1.

3.2.1 Part 1. Preprocessing

Before the hierarchical network starts learning, four preprocessing steps (resampling, augmentation, normalization, and scaling) are applied to the raw current signals. First, resampling interpolates all data so that they carry the same amount of information under the same operating conditions; each data unit then has the same number of points per revolution. Second, data augmentation is conducted by overlapping consecutive samples by one revolution of data. This augmentation, which preserves the periodic characteristic of the current signal, not only has a positive effect on performance but also helps the filters in the model learn the relevant features. Third, normalization, which subtracts the self-mean and divides by the total standard deviation, is used to homogenize the data of each experiment. Finally, the amplitudes of the current signals are scaled to the range from −1 to 1. Scaling the current signals allows the method to be extended to signals from motors of different sizes and reduces the uncertain effects of the load torque condition.
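The four steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the values of 120 points per revolution, two revolutions per sample, and one revolution of overlap are those reported in Sect. 4.2, while the helper names, the linear interpolation, the reading of "self-mean" as the per-sample mean and "total standard deviation" as the standard deviation of the whole recording, the data-set-wide maximum used for scaling, and the synthetic sinusoidal recordings are all assumptions.

```python
import numpy as np

POINTS_PER_REV = 120  # value reported in Sect. 4.2

def resample(signal, n_revolutions, points_per_rev=POINTS_PER_REV):
    """Step 1: linear interpolation to a fixed number of points per revolution."""
    n_out = n_revolutions * points_per_rev
    old_pos = np.arange(len(signal)) / len(signal)
    new_pos = np.arange(n_out) / n_out
    return np.interp(new_pos, old_pos, signal)

def segment(signal, points_per_rev=POINTS_PER_REV, revs_per_sample=2, overlap_revs=1):
    """Step 2: cut overlapping samples (augmentation by overlap)."""
    length = revs_per_sample * points_per_rev
    step = (revs_per_sample - overlap_revs) * points_per_rev
    n_samples = (len(signal) - length) // step + 1
    return np.stack([signal[i * step:i * step + length] for i in range(n_samples)])

def normalize(samples):
    """Step 3: subtract each sample's mean, divide by the recording-wide std."""
    centered = samples - samples.mean(axis=1, keepdims=True)
    return centered / centered.std()

def scale(dataset):
    """Step 4: divide by the largest absolute value so all values lie in [-1, 1]."""
    return dataset / np.abs(dataset).max()

def make_recording(amplitude, n_revolutions=10, raw_points_per_rev=500):
    """Synthetic stand-in for a raw current recording (one cycle per revolution)."""
    position = np.arange(n_revolutions * raw_points_per_rev) / raw_points_per_rev
    return amplitude * np.sin(2.0 * np.pi * position)

# Two hypothetical experiments whose current amplitudes differ by a factor of ten.
recordings = [make_recording(5.0), make_recording(50.0)]
processed = [normalize(segment(resample(r, n_revolutions=10))) for r in recordings]
dataset = scale(np.concatenate(processed))
```

Each 10-revolution recording yields nine samples of length 240. After normalization and scaling, the two recordings become practically identical despite the tenfold amplitude difference, which illustrates the stated goal of extending the method to motors of different sizes.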

3.2.2 Part 2. Fault diagnosis (FD)

After preprocessing, the refined current data enters the FD module. The FD module consists of three convolution layers with max-pooling layers, and one FC layer. Through the three convolution and max-pooling layers, the input data is transformed into features that reveal the fault characteristics. The FC layer is trained to classify these features into the fault modes. The task of the FD module can be expressed as \(p\left(\widehat{C}|\mathbf{x},{\mathbf{W}}_{\mathbf{F}\mathbf{D}}\right)\sim p(C|\mathbf{x})\). The optimum can be achieved by minimizing the loss of the FD module (\({L}_{FD}\)), given as

where \({\beta }_{1}\) is the coefficient of the L2 regularization term and the loss is computed via cross-entropy, because the FD module tackles a discrete classification problem. While the dimensions of the features decrease as they pass through the pooling layers, the number of features increases because the number of filters grows as the layers become deeper. In addition, ELU activation is used in all convolutional layers so that information carried by negative values is preserved; this is defined as

Both the ELU activation function and the increase in the number of filters with layer depth can compensate for information that may be lost as layers are stacked. As the weights of the filters are updated in the direction that minimizes (1), the input data passing through the updated filters forms features that distinguish the fault modes. The features just before flattening, which are denoted as \(\widehat{\mathbf{t}}\) in Fig. 4, are then transferred to the subsequent SE module.
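For reference, the FD loss referred to as (1) and the ELU activation can be written in the following form, which is consistent with the description above (cross-entropy plus an L2 penalty weighted by \({\beta }_{1}\)). This is a sketch under assumptions rather than a reproduction of the original equations: the averaging over \(N\) training samples, the one-hot label \(C_{i,c}\), the name \(f_{1,c}\) for the predicted probability of class \(c\) (chosen by analogy with \(f_2\) in the SE module), and the ELU parameter \(\alpha\) are not specified in the text.

```latex
L_{FD} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c} C_{i,c}\,
         \log f_{1,c}\!\left(\mathbf{x}_i;\mathbf{W}_{\mathbf{FD}}\right)
         + \beta_1 \left\lVert \mathbf{W}_{\mathbf{FD}} \right\rVert_2^2

\mathrm{ELU}(z) =
\begin{cases}
  z, & z > 0 \\
  \alpha\left(e^{z} - 1\right), & z \le 0
\end{cases}
```

For negative inputs, ELU returns a nonzero value bounded below by \(-\alpha\) instead of zero, which is how information below 0 is retained.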

3.2.3 Part 3. Severity Estimation (SE)

The SE module of each fault mode learns the severity of its corresponding fault mode. Each SE module consists of two convolution layers, followed by max-pooling layers and one FC layer. The two convolutional layers of the SE module, which have a larger number of filters than the preceding FD module, extract the more sophisticated features associated with the fault severity. These elaborate features are flattened, passed through the FC layer, and regressed to the fault severity. The task of an SE module can be expressed as \(p\left(\widehat{S}|\widehat{\mathbf{t}},{\mathbf{W}}_{\mathbf{S}\mathbf{E}}\right)\sim p(S|\mathbf{x}).\) The latent features \(\widehat{\mathbf{t}}\) provide significant information about the corresponding fault mode to the SE module once \({L}_{FD}\) has decreased sufficiently. Then, \(\widehat{\mathbf{t}}\) are transferred to the SE module of the corresponding fault mode and used to learn its weights \({\mathbf{W}}_{\mathbf{S}\mathbf{E}}\). The delivery of \(\widehat{\mathbf{t}}\) is expected to let the SE module concentrate on learning the specific characteristics needed to assess the severity of each fault by minimizing the loss of the SE module (\({L}_{SE}\)), described as

where \({\beta }_{2}\) denotes the coefficient of the L2 regularization term and \({f}_{2}\) is the estimated severity, calculated from the latent features \(\widehat{\mathbf{t}}\) and \({\mathbf{W}}_{\mathbf{S}\mathbf{E}}\) of an SE module. Since fault severity is a continuous variable, the loss is computed by the mean squared error (MSE).
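A form of the SE loss consistent with this description (MSE plus an L2 penalty weighted by \({\beta }_{2}\)) is sketched below. The averaging over \(N\) training samples of the fault mode and the sample index \(i\) are assumptions; only \({\beta }_{2}\), \({f}_{2}\), \(\widehat{\mathbf{t}}\), and \({\mathbf{W}}_{\mathbf{S}\mathbf{E}}\) are named in the text.

```latex
L_{SE} = \frac{1}{N}\sum_{i=1}^{N}
         \left( S_i - f_2\!\left(\widehat{\mathbf{t}}_i;\mathbf{W}_{\mathbf{SE}}\right) \right)^{2}
         + \beta_2 \left\lVert \mathbf{W}_{\mathbf{SE}} \right\rVert_2^2
```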

The hierarchical structure of the proposed method is illustrated in detail in Fig. 5. The FD module used to identify the fault mode and three SE modules for assessing the fault severity are hierarchically associated. The numbers in square brackets indicate the dimensions of the data passed through the layer. The numbers in parentheses refer to the number of filters, and the size of the filters is set to nine for all convolution layers. The pooling size is set to four for all pooling layers. The structure of the proposed FI-HCNN is designed based on the motor current signals described in this study, but it can be generally applied with minor adjustments depending on the amount and the type of data. In Fig. 5, the blue arrow represents an example flow of a test data sample. When the test data is classified as Fault 1, the latent features of the test data transfer to the SE module of Fault 1 and develop into features that indicate the severity of Fault 1.
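The test-time flow described above can be sketched as a routing rule: the FD module returns both a predicted health state and the latent features, and only the SE module of the predicted fault receives those features. The code below is a schematic illustration only. The function names, the dense layers used as stand-ins for the convolution and pooling stacks, the random weights, the latent dimension of 16, the ELU parameter, and the clipping of the output to [0, 1] are all assumptions and do not reproduce the architecture in Fig. 5.

```python
import numpy as np

HEALTH_STATES = ["NOR", "ECC", "ROTOR", "UNB"]  # abbreviations used in Sect. 4

def elu(z, alpha=1.0):
    """ELU activation; alpha = 1.0 is an assumed value."""
    return np.where(z > 0, z, alpha * (np.exp(np.minimum(z, 0.0)) - 1.0))

def fd_module(x, w_feat, w_cls):
    """Hypothetical FD module: returns class probabilities and latent features."""
    latent = elu(w_feat @ x)             # dense stand-in for the conv/pool stack
    logits = w_cls @ latent
    exp = np.exp(logits - logits.max())  # numerically stable softmax
    return exp / exp.sum(), latent

def se_module(latent, w_se):
    """Hypothetical SE module: regresses a severity from the inherited features."""
    return float(np.clip(w_se @ latent, 0.0, 1.0))  # clipping is an assumption

def fi_hcnn_predict(x, params):
    probs, latent = fd_module(x, params["w_feat"], params["w_cls"])
    state = HEALTH_STATES[int(np.argmax(probs))]
    if state == "NOR":
        return state, None               # the normal state skips every SE module
    # Feature inheritance: the SE module receives latent, not the raw input x.
    return state, se_module(latent, params["w_se"][state])

rng = np.random.default_rng(0)
n_latent = 16
params = {
    "w_feat": rng.standard_normal((n_latent, 240)) * 0.1,
    "w_cls": rng.standard_normal((len(HEALTH_STATES), n_latent)),
    "w_se": {s: rng.standard_normal(n_latent) * 0.1 for s in HEALTH_STATES[1:]},
}
x = rng.uniform(-1.0, 1.0, size=240)     # one preprocessed sample of length 240
state, severity = fi_hcnn_predict(x, params)
```

The point of the sketch is the data flow rather than the numbers: a repetitive hierarchical model would call its child module on `x` again, whereas here the child module only ever sees `latent`.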

4 Experimental Study

In this section, the proposed FI-HCNN method is validated using experimental data. After an explanation of the data, the results of the proposed FI-HCNN are discussed. Then, the performance of FI-HCNN is compared to that of traditional MCSA methods and other DL methods, of which the structures are related to the proposed FI-HCNN.

4.1 Description of the Experimental Data

A dataset from a 160 kW, 2-pole induction motor was used to analyze the performance of the proposed method. In the experiment, one phase of the stator current signal was acquired at 3600 revolutions per minute (RPM) with no load. There were a total of three mechanical faults with multiple severity levels, respectively: eccentricity, unbalance, and a broken rotor bar. These faults and severity levels are illustrated in Fig. 6. The severity was defined based on the degree of experimental settings that caused the severe conditions of the motor. A higher severity level means that the health state of a motor is more deteriorated. Eccentricity, which indicates an uneven air gap between the rotor and stator, was introduced at three different levels by moving the rotor 10%, 30%, and 50% of the original air-gap length from the center. The severity of eccentricity was denoted as 10%, 30%, and 50%. For example, 30% eccentricity is described in Fig. 6a. The broken rotor bar, which was emulated by drilling rotor slots to create a half and a whole break, had the severity of 50% and 100%, respectively. Unbalance was created by attaching weights to the rotor. According to ISO21940-11, the health state is balanced at a vibration of 2.5 mm/s, marked as G2.5; G40 is treated as a failure. The severity of unbalance was set to the ratio of the unbalance level, 16% and 40%; these values indicate G6.3 and G16, respectively. All specific conditions, such as the severity level and abbreviations used, are summarized in Table 1. Figure 7 shows an example of raw stator current signals from each health state. The magnitudes at 60 Hz and its harmonics were large in the frequency domain (see Fig. 7b) because the supply frequency and the rotating frequency were the same. 
Although the current signals of the ROTOR stood out, as the broken rotor bar itself highly affected the motor compared to other fault modes, the current signals of each health state (except those of the broken rotor bar) were not readily distinguishable in either the time- or frequency-domain.

4.2 Result and Discussion of the FI-HCNN Method

Since FI-HCNN solves a classification problem in the FD module and a regression problem in the SE modules, the accuracy of FI-HCNN is defined separately for each module. For the FD module, the error is calculated as the number of misclassified samples divided by the total number of samples, and the accuracy is obtained by subtracting this error from one. The accuracy of each SE module is evaluated by the root mean squared error (RMSE) between the predicted and actual fault severity. For example, an RMSE of 2% means that the estimated fault severity deviates from the true severity by 2% in the root-mean-square sense. All of the methods examined in Sect. 4 are evaluated with these metrics.
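The two metrics can be written compactly as follows. The function names and the toy labels and severities are illustrative assumptions; severities are expressed as fractions, so 0.30 stands for 30%.

```python
import numpy as np

def fd_accuracy(true_labels, predicted_labels):
    """Accuracy = 1 - (misclassified samples / total samples)."""
    true_labels = np.asarray(true_labels)
    predicted_labels = np.asarray(predicted_labels)
    error = np.sum(true_labels != predicted_labels) / len(true_labels)
    return float(1.0 - error)

def se_rmse(true_severity, predicted_severity):
    """Root mean squared error between predicted and actual severity."""
    diff = np.asarray(predicted_severity) - np.asarray(true_severity)
    return float(np.sqrt(np.mean(diff ** 2)))

# Toy example: one ECC sample is missed and classified as normal.
accuracy = fd_accuracy(["NOR", "ECC", "UNB", "ROTOR"],
                       ["NOR", "NOR", "UNB", "ROTOR"])

# Toy example: estimates are off by +2, -2, and 0 percentage points.
rmse = se_rmse([0.10, 0.30, 0.50], [0.12, 0.28, 0.50])
```

Here `accuracy` is 0.75 and `rmse` is about 0.0163, that is, roughly 1.6% when expressed in the same units as the severity.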

For preprocessing, all raw stator current signals were resampled to 120 points per revolution. Each sample was defined to span two revolutions, with consecutive samples overlapping by one revolution for augmentation, so the length of each sample was 240. Normalization and scaling were then conducted in sequence. The total number of samples in the data set was 3776, as each class has 472 samples. The network was trained using 75% of the data set and tested with the remaining 25%; fourfold cross-validation was conducted. The entire training and test procedure was run 10 times with randomly selected data sets to assess repeatability through a 95% confidence interval. The hyper-parameters used to train the modules on the given data sets are detailed in Table 2. The hyper-parameter values of the SE modules were set smaller because the subsequent SE modules conduct elaborate training with the down-scaled data set.
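The sample counts and the 75%/25% split can be checked with a short sketch. The count of eight classes is inferred from 3776 / 472 and from the health states listed in Sect. 4.1 (normal, three eccentricity levels, two broken rotor bar levels, and two unbalance levels). The random, non-stratified assignment of samples to folds and the seed are assumptions, since the text does not state how the folds were formed.

```python
import numpy as np

n_classes = 8            # inferred: NOR + 3 ECC + 2 ROTOR + 2 UNB levels
samples_per_class = 472  # reported in the text
n_total = n_classes * samples_per_class

rng = np.random.default_rng(0)           # seed chosen only for reproducibility
indices = rng.permutation(n_total)
folds = np.array_split(indices, 4)       # fourfold cross-validation

split_sizes = []
for k in range(4):
    test_idx = folds[k]                  # 25% held out for testing
    train_idx = np.concatenate([folds[j] for j in range(4) if j != k])
    split_sizes.append((len(train_idx), len(test_idx)))
```

Each of the four folds therefore trains on 2832 samples and tests on 944 samples.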

The test accuracy of the FD module was 99.70 ± 0.11% and the three faults were distinguishable, as shown in Fig. 8a. The false negative error of ECC was understandable because, compared to other fault modes such as a broken rotor bar, the influence of an ECC-related fault on the current signal can be weak at first [33]. Thus, an ECC fault might be classified as normal at the incipient stage, because its effect on the current signal is still small. The FD performance can be confirmed by investigating the latent feature space, as shown in Fig. 9. The test data set was used to visualize the latent feature spaces of each pooling layer in the FD module. The latent space of POOL3 (Fig. 9c), which is \(\widehat{\mathbf{t}}\), had more condensed clusters than those of POOL1 and POOL2 (Fig. 9a and b, respectively). In Fig. 9c, NOR formed a single cluster, and the other health states appeared more distinguishable. The performance of each SE module was evaluated using RMSE: 0.61 ± 0.05% for ECC, 0.54 ± 0.05% for ROTOR, and 0.65 ± 0.04% for UNB (Table 3). The learning feasibility of the SE module was confirmed by analyzing how the estimation result changed with the loss. For example, the trend of the loss and the corresponding SE results are shown for UNB in Fig. 10. While the bias and variance errors of the FI-HCNN method improved in the final output, these errors remained in the common hierarchical model, in which the input was used repetitively. Moreover, the RMSE of FI-HCNN in the early stage of SE training was smaller than that of the comparative HCNN, which reuses the raw data; this shows the effect of the latent features on SE. These RMSE results are discussed in more detail in the following subsection by comparing them with the results of other methods.

4.3 Comparison with Conventional MCSA and Other DL Methods

4.3.1 Comparison with Conventional MCSA Methods

This section investigates the performance of FI-HCNN compared to existing methods. Two approaches were implemented to represent conventional MCSA methods: one was based on physics-based spectral features, and the other was based on data-driven features computed by applying principal component analysis (PCA) to the magnitudes of the fast Fourier transform (FFT). The spectral features, which were developed separately for each fault mode through theoretical analysis, are summarized in Table 4; they are based on [34, 35]. nb is the number of rotor bars, s is the slip, p is the number of pole pairs, fs is the supply frequency, fr is the rotating frequency, k and λ are positive integers, and μ is an arbitrary odd number. For the data-driven features, the principal components (PCs) of the FFT magnitudes were calculated. Instead of selecting specific FFT magnitudes based on domain knowledge, PCA reduced the original FFT magnitude set (consisting of 120 data points) to a set of 22 PCs with 99% explained variance. After extraction by either the physics-based or the data-driven method, the features were fed into an SVM for FD and into support vector regression (SVR) for SE. The SVM and SVR methods both use quadratic polynomial kernels. The results of these two methods are summarized in Table 3. FI-HCNN shows about 2% better FD accuracy than the other methods. As shown in Fig. 8, both conventional methods produced more false alarms, in which a normal state is classified as faulty. In addition, the RMSEs of SE using the conventional methods were about 10 times worse than those of FI-HCNN, as shown in Fig. 11.
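The data-driven baseline features can be sketched as follows: FFT magnitudes are computed for each sample, and PCA retains the smallest number of components that reaches 99% explained variance. This is a NumPy-only illustration under assumptions. Dropping the DC bin so that a 240-point sample yields 120 magnitudes is one plausible reading of the "120 data points", the synthetic samples are invented for the example, and the number of retained components on such data will generally differ from the 22 reported in the paper.

```python
import numpy as np

def fft_magnitudes(samples):
    """Magnitude spectrum of each row, without the DC bin."""
    spectrum = np.abs(np.fft.rfft(samples, axis=1))
    return spectrum[:, 1:]               # 240-point sample -> 120 magnitudes

def pca_by_explained_variance(features, target=0.99):
    """Project onto the fewest principal components reaching the target variance."""
    centered = features - features.mean(axis=0)
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    variance = singular_values ** 2
    cumulative = np.cumsum(variance) / variance.sum()
    n_components = int(np.searchsorted(cumulative, target)) + 1
    n_components = min(n_components, len(variance))
    return centered @ vt[:n_components].T, n_components

# Synthetic stand-in data: three harmonics with random amplitudes plus noise.
rng = np.random.default_rng(0)
t = np.arange(240)
harmonics = np.stack([np.sin(2.0 * np.pi * h * t / 120.0) for h in (1, 2, 3)])
amplitudes = rng.uniform(0.5, 1.5, size=(200, 3))
samples = amplitudes @ harmonics + 0.01 * rng.standard_normal((200, 240))

magnitudes = fft_magnitudes(samples)
scores, n_components = pca_by_explained_variance(magnitudes)
```

The resulting `scores` would then be passed to a classifier or regressor (an SVM and SVR in the paper); that step is omitted here to keep the sketch free of additional dependencies.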

Specifically, the low performance of both conventional methods can be explained in terms of the extracted features: these features do not show clear trends of fault deterioration. In fact, the spectral features overlapped, depending on the parameters, even though they are defined separately. For example, similarities between the signatures of eccentricity and those of other mechanical faults, such as a bearing inner race fault and a broken rotor bar, have been reported [36, 37]. Therefore, it is hard to claim that one spectral feature reflects only the effect of a particular fault mode. This is because the three fault modes (ECC, ROTOR, and UNB) share related characteristics that belong to mechanical failure and affect each other. Figure 12 shows the fault characteristic frequencies under a 3600 RPM constant-speed condition. Most frequencies overlapped because the supply frequency was the same as the rotating frequency and there was no slip at the constant-speed condition. Figure 13 shows some spectral features labeled by fault mode; the number next to each fault mode refers to the fault severity. It is difficult to discriminate the fault modes and their severity because a significant portion of the feature values overlapped. In addition, the two main PCs of the FFT magnitudes are plotted in Fig. 14. The PCs of the faults (except UNB) are hard to distinguish from NOR, and the overlap of PCs between severities hinders the distinction within each fault case. The weak performance of the conventional features thus yields inadequate results. Compared to conventional features, FI-HCNN is capable of learning enhanced features that adapt to and estimate the fault severity, thereby arriving at improved results, as shown in Fig. 15.
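The overlap at zero slip can be illustrated numerically. The expressions below are commonly cited textbook forms (broken rotor bar sidebands at (1 ± 2ks)·fs and rotation-related sidebands at |fs ± k·fr|, with fr = (1 − s)·fs / p); they are used here as assumptions for illustration and are not necessarily the expressions of Table 4, which are not reproduced in this text. The function names and the choice of k up to 3 are likewise illustrative.

```python
def rotating_frequency(f_s, slip, pole_pairs):
    """Mechanical rotating frequency of an induction motor in Hz."""
    return (1.0 - slip) * f_s / pole_pairs

def broken_bar_sidebands(f_s, slip, k_max=3):
    """Sidebands at (1 +/- 2ks) * f_s, a commonly cited broken-bar signature."""
    freqs = set()
    for k in range(1, k_max + 1):
        freqs.add(round((1.0 - 2.0 * k * slip) * f_s, 6))
        freqs.add(round((1.0 + 2.0 * k * slip) * f_s, 6))
    return sorted(freqs)

def rotation_sidebands(f_s, f_r, k_max=3):
    """Sidebands at |f_s +/- k * f_r|, commonly linked to eccentricity and unbalance."""
    freqs = set()
    for k in range(1, k_max + 1):
        freqs.add(round(abs(f_s - k * f_r), 6))
        freqs.add(round(abs(f_s + k * f_r), 6))
    return sorted(freqs)

f_s = 60.0                                    # supply frequency in Hz
f_r = rotating_frequency(f_s, slip=0.0, pole_pairs=1)   # 2-pole motor, no slip

bars_no_slip = broken_bar_sidebands(f_s, slip=0.0)
rotation_no_slip = rotation_sidebands(f_s, f_r)
bars_with_slip = broken_bar_sidebands(f_s, slip=0.02)
```

With zero slip, all broken-bar sidebands collapse onto the 60 Hz supply component and the rotation-related sidebands fall on multiples of 60 Hz, so both sets coincide with the supply frequency and its harmonics. With a nonzero slip (0.02 in the sketch) the broken-bar sidebands separate from 60 Hz.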

4.3.2 Comparison with Other Hierarchical CNN Methods

This section aims to confirm the performance of the feature inherited structure in HCNN. Two comparison models were constructed as HCNNs with a repetitive hierarchical structure, in which the input data is re-used in the child modules, based on previous research [38–40]. The structures of all of the comparative HCNN models are described in Fig. 16. Figure 16a is the proposed FI-HCNN. Figure 16b is one of the repetitive HCNN models (Rep-HCNN1), described in [38], where the child modules are modified from the parent module. The child modules of Rep-HCNN1 have the same structure as those of FI-HCNN. Figure 16c is the other repetitive HCNN (Rep-HCNN2), where the structures of the parent module and the child modules are identical, as outlined in [39, 40]. The notations in Fig. 16 are the same as those in Fig. 4. The hyper-parameters (e.g., learning rate, batch size, drop-out rate, and L2-norm coefficient) were set equal to the values used in FI-HCNN; however, the number of epochs was adjusted to a value at which each model could be trained sufficiently.

The SE results of all of the comparative methods using the above models are summarized in Table 5; FI-HCNN showed the best performance among all results. The RMSEs of all of the fault conditions using FI-HCNN were about half of those observed for the other HCNN methods, as shown in Fig. 17. We can also confirm that FI-HCNN has a lower variance error compared to both Rep-HCNN1 and 2, as shown in Fig. 18.

To be specific, the superior results of FI-HCNN compared to Rep-HCNN1 support the idea that propagating the latent features is effective in enhancing SE. Rep-HCNN1 and Rep-HCNN2, which both receive the raw input data, have different filter designs: Rep-HCNN1 extracts many features at the beginning, while Rep-HCNN2 extracts an increasing number of features through stacked layers. A possible reason for the slight improvement of Rep-HCNN2 over Rep-HCNN1 is that gradual learning through the stacked layers is more effective for training on the raw input data. Through these comparative studies, we can confirm that the pre-trained latent features, which have learned the characteristics of the fault mode, have a positive effect on the SE modules. There is also abundant room for further progress by examining additional data in various fault conditions.

5 Conclusion

In this study, a new method, FI-HCNN, was proposed to identify the faults of induction motors and to estimate the fault severity. FI-HCNN is composed hierarchically of an FD module that learns the types of faults and SE modules that estimate their severity. Fault severity was estimated more accurately by the proposed method than by conventional methods, because the latent features, which contain the representations of the fault modes, are propagated from the FD module to the SE module to support the learning of severity. First, the performance of FI-HCNN was confirmed by comparison with conventional MCSA methods. Specifically, spectral features and PCs of FFT magnitudes from stator current signals were used with SVM for FD and with SVR for SE. In addition, two conventional HCNN models whose structures are similar to that of FI-HCNN were examined to confirm the superiority of the feature inherited structure of the proposed method. Through the experimental studies, FI-HCNN was shown to provide enhanced features that are more suitable for accurate estimation of fault severity, without the need for significant domain knowledge. FI-HCNN has the potential to learn more robust features through extended training, starting from the pretrained weights, when additional fault mode data is included. The latent features generated by the more sophisticated FD module can then be applied to improve the SE performance. In future work, the training step can be enhanced by improving the loss function of FI-HCNN. Moreover, further study of FI-HCNN can be conducted in the presence of unknown faults.

Acknowledgements

This work was supported by the R&D project ‘Intelligent Digital Power Plant (IDPP)’ of Korea Electric Power Corporation (KEPCO), the National Research Foundation of Korea (NRF) grant funded by the Korea Government (MSIT) (No. 2020R1A2C3003644), and the Korea Institute for Advancement of Technology (KIAT) grant funded by the Korea Government (MOTIE) (P0008691, HRD Program for Industrial Innovation).

Ethics declarations

Conflict of interest

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Park, C.H., Kim, H., Lee, J. et al. A Feature Inherited Hierarchical Convolutional Neural Network (FI-HCNN) for Motor Fault Severity Estimation Using Stator Current Signals. Int. J. of Precis. Eng. and Manuf.-Green Tech. 8, 1253–1266 (2021). https://doi.org/10.1007/s40684-020-00279-3

DOI: https://doi.org/10.1007/s40684-020-00279-3