Abstract

Based on the current projection of the general population and the combined increase in end-stage kidney disease with age, the number of elderly donors and recipients is increasing, raising crucial questions about how to minimize the discard rate of organs from elderly donors and improve graft and patient outcomes. In 2002, extended criteria donors were the focus of a meeting in Crystal City (VA, USA), with a goal of maximizing the use of organs from deceased donors. Since then, extended criteria donors have progressively contributed to a large number of transplanted grafts worldwide, posing specific issues for allocation systems, recipient management, and therapeutic approaches. This review analyzes what we have learned in the last 20 years about extended criteria donor utilization, the promising innovations in immunosuppressive management, and the molecular pathways involved in the aging process, which constitute potential targets for novel therapies.


Introduction

Kidney transplantation is the best kidney replacement strategy compared with all dialysis options, in terms of both clinical outcomes (morbidity/mortality) and socioeconomic profile (quality of life, economic costs) [1]. Unfortunately, in the last decade, the number of patients with end-stage kidney disease has increased in parallel with life expectancy, widening the gap between potential transplant candidates and available organs [2].

Health systems worldwide struggle to increase the number of donors and use different approaches to deal with this problem. In particular, elderly donors now contribute to many transplanted grafts worldwide, posing specific issues for allocation systems, patient management, and therapeutic strategies [3, 4]. The exact definition and the consequent allocation policy of elderly donors are still debated. The Crystal City criteria provided the first consensus: all donors > 60 years old without comorbidities or > 50 years old with at least two conditions among high blood pressure, death by cerebrovascular accident, or serum creatinine levels > 1.5 mg/dL were classified as extended criteria donors [5]. Recently, policymakers in the United States adopted a different score based on 14 donor and transplant factors (the Kidney Donor Risk Index) to allocate grafts for single or dual kidney transplantation [6]. Despite all these strategies and increasing utilization (e.g., the number of donors ≥ 60 years old increased from 21% in 2000–2001 to 42% in 2016–2017 in the Eurotransplant senior program [7]), the balance between supply and demand is far from satisfactory, and many organs are still discarded [8].
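The Crystal City definition quoted above can be written as a simple decision rule. The following is a minimal sketch, not an allocation tool: the `Donor` class, its field names, and the function name are hypothetical, and the age and creatinine cut-offs are taken exactly as worded in this paragraph.

```python
from dataclasses import dataclass


@dataclass
class Donor:
    """Hypothetical container for the donor variables named in the text."""
    age_years: float
    hypertension: bool = False
    cerebrovascular_death: bool = False
    serum_creatinine_mg_dl: float = 1.0


def is_extended_criteria(donor: Donor) -> bool:
    """Apply the extended criteria donor rule as worded in this review."""
    # Donors older than 60 years qualify regardless of comorbidities.
    if donor.age_years > 60:
        return True
    # Donors older than 50 years qualify with at least two of three conditions.
    conditions = sum([
        donor.hypertension,
        donor.cerebrovascular_death,
        donor.serum_creatinine_mg_dl > 1.5,
    ])
    return donor.age_years > 50 and conditions >= 2


if __name__ == "__main__":
    print(is_extended_criteria(Donor(age_years=55, hypertension=True,
                                     serum_creatinine_mg_dl=1.8)))
```

A rule of this kind is binary by construction, which is one reason continuous scores such as the Kidney Donor Risk Index were later introduced.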

This review discusses the pros and cons of using extended criteria donor organs, focusing on optimal utilization, potential innovations in immunosuppressive management, and the molecular pathways involved in the aging process and associated with graft dysfunction.

Outcomes in recipients from extended criteria donors: good, bad, or (only) needing better allocation?

The increased use of marginal organs from elderly donors poses questions about their functional and clinical outcomes. Aubert et al. reported increased graft loss in patients who received organs from extended criteria donors (Hazard Ratio [HR] = 1.87 [1.50–2.32], p < 0.001 in multivariate analysis) compared to recipients of organs from standard criteria donors [9]. A meta-analysis by Querard et al. showed that both patient and death-censored graft survival were significantly better for recipients of standard criteria vs. extended criteria donor organs [10]. Additionally, Van Ittersum et al. highlighted higher death-censored graft failure and lower patient survival in recipients of organs from extended criteria donors vs. standard criteria/living donors [11].

Other authors documented similar rejection and death-censored graft survival rates at five years [12]. We recently revised our internal cohort of extended criteria donor recipients classified by decades of donor age, documenting similar patient (50–59 years old 87.8%; 60–69 years old 88.1%; 70–79 years 88%; > 80 years old 90.1%, p = 0.77) and graft (74.0%, 74.2%, 75.2%, 65.9%, p = 0.62) survival at five years. Considering that organs were allocated to single- or dual-kidney transplantation after a multistep evaluation including clinical and histological criteria, we investigated differences in the transplant outcomes and discard rate between groups, noting a better survival rate for dual-kidney transplantation from extended criteria donors > 80 years old (p = 0.04) and an increased number of kidneys discarded in this group (48.2%, Odds ratio [OR] 5.1 vs. 15.4%, 17.7% and 20.1% in other decades) [13]. On the basis of this experience, it would appear that appropriate selection provides comparable long-term outcomes in recipients of extended criteria donor organs, even considering the adoption of dual-kidney transplantation from very old donors (i.e., > 80 years old).
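As a rough plausibility check on the discard figures above, an unadjusted odds ratio can be recomputed from the reported percentages alone. This is only a sketch: the function names are hypothetical, and the published odds ratio may come from a model that the crude calculation does not reproduce.

```python
def odds(proportion: float) -> float:
    """Convert a proportion (0 < p < 1) into odds p / (1 - p)."""
    if not 0.0 < proportion < 1.0:
        raise ValueError("proportion must lie strictly between 0 and 1")
    return proportion / (1.0 - proportion)


def odds_ratio(p_group: float, p_reference: float) -> float:
    """Crude (unadjusted) odds ratio between two discard proportions."""
    return odds(p_group) / odds(p_reference)


if __name__ == "__main__":
    discard_over_80 = 0.482                  # donors > 80 years old
    other_decades = [0.154, 0.177, 0.201]    # rates reported for the other groups
    for reference in other_decades:
        ratio = odds_ratio(discard_over_80, reference)
        print(f"{reference:.1%} reference: crude OR = {ratio:.2f}")
```

Against the 15.4% group, the crude ratio is close to the reported value of 5.1; against the other two groups it is lower.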

Although the long-term efficacy may be questioned, receiving a kidney from an extended criteria donor confers a survival benefit compared with remaining on the waiting list [14, 15]. This difference is particularly notable for recipients > 60 years old, for whom the survival advantage was approximately 16 percentage points (83.6% vs. 67.4%) [16], consistent with data from the United States [17].

More recently, Perez-Saez et al. confirmed this survival benefit (adjusted risk of death after transplantation, 0.44 [Confidence Interval (CI) 0.32–0.61; p < 0.001]) also in recipients of kidneys from deceased donors aged ≥ 75 years, with acceptable death-censored graft survival (68.3% at ten years) [18]. It is worth mentioning that death with a functioning graft was the leading cause of allograft loss in extended criteria donor recipients [13, 19], stressing the influence of recipient factors in the outcomes and the risk of non-extended criteria donor utilization in lengthening the time on the waiting list for patients not highly suited for these organs (e.g., retransplant) [20].

These results highlight the need for flexible allocation policies that, taking into account the longevity of the transplanted kidneys, primarily offer organs from nonstandard criteria donors, extended criteria donors, or high Kidney Donor Risk Index donors to eligible elderly recipients and balance the pros and cons in an aging population, the increasing number of subjects with comorbid conditions, the expected benefit of extended criteria donors compared to standard criteria/living donors, and the risk of patient persistence on the waiting list. A proper allocation system could maximize the pros and reduce the cons (see Table 1), even by considering specific allocation programs according to geographical area (e.g., opt-in/opt-out policy for kidneys donated after brain death/circulatory death) and highlighting some comorbidities or parameters that may be helpful for appropriate post-transplant monitoring [21]. For example, in our experience, recipients with type 2 diabetes mellitus may have a worse outcome with extended criteria donor organs compared to non-type 2 diabetes mellitus patients, especially when receiving grafts from > 70-year-old donors [22], and recipients with pre-existing hypotension (mean blood pressure < 80 mmHg) had worse death-censored graft survival when receiving a transplant from donors > 50 years old [22]. Additionally, post-transplant proteinuria represents a crucial determinant of adverse outcomes in recipients of organs from donors > 50 years old [23].

Proper evaluation of extended criteria donors: discard rate and the role of pre-implantation biopsy

Any improvement in the use of extended criteria donor organs depends on correct evaluation, that is, on determining how a kidney could be used and when it should be discarded.

As mentioned above, kidney discard is a significant worldwide problem: as summarized in Fig. 1, literature data reflect a prevailing discard rate in older donors who are Black, female, diabetic, or hypertensive and those with undesirable social behavior and higher terminal creatinine level before donation [8, 27–31].

Mohan et al. [27] also raise the issue that pre-implantation biopsy findings are still the most commonly reported reason for discard, as documented by other reports [28, 29]. To date, pretransplant histological evaluations have been widely proposed and performed [32–34]. Nevertheless, concerns remain about the methods adopted to obtain and process material, the scoring system, and even the need for the biopsy itself.

Regarding methodology, wedge biopsies, despite having a theoretically increased risk of complications compared with a needle biopsy, tend to overestimate the glomerulosclerosis rate (higher in the subcapsular cortex with correlation to discard if ≥ 20% [35]) with limited evaluation of arteries [36]. Needle biopsies or punch biopsies are more commonly used, with differences in the risk of bleeding or the sample size/accuracy [33].

A crucial point that is often not considered is the degree of experience of the nephropathologist: some studies have documented that on-call pathologists with limited experience with kidney biopsies assigned higher scores for chronic changes, leading to an increased number of discarded kidneys [36, 37].

From the initial grading system, which considered only glomerulosclerosis, and subsequent data showing the need for more extensive evaluation, the most widely adopted approaches now combine glomerular sclerosis, arteriosclerosis, hyaline arteriolosclerosis, and interstitial fibrosis in a grading system such as Karpinski’s score [38]. Each parameter receives a semiquantitative score of 0–3, and only kidneys with a cumulative score of ≥ 7 are discarded [38, 39].
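The scoring logic described above (four components, each graded 0–3, with a cumulative threshold) can be sketched as follows. The component keys and function names are hypothetical labels for the four parameters listed in this paragraph, and the threshold of 7 is the one stated here; centers may apply different cut-offs for single versus dual transplantation.

```python
from typing import Mapping

# Hypothetical keys for the four parameters named in the text.
COMPONENTS = (
    "glomerulosclerosis",
    "arteriosclerosis",
    "hyaline_arteriolosclerosis",
    "interstitial_fibrosis",
)
DISCARD_THRESHOLD = 7  # cumulative score at or above which the text reports discard


def cumulative_score(scores: Mapping[str, int]) -> int:
    """Sum the four semiquantitative grades after validating each one."""
    total = 0
    for name in COMPONENTS:
        if name not in scores:
            raise ValueError(f"missing component: {name}")
        grade = scores[name]
        if grade not in (0, 1, 2, 3):
            raise ValueError(f"{name} must be an integer from 0 to 3")
        total += grade
    return total


def meets_discard_threshold(scores: Mapping[str, int]) -> bool:
    """Return True when the cumulative score reaches the discard threshold."""
    return cumulative_score(scores) >= DISCARD_THRESHOLD


if __name__ == "__main__":
    biopsy = dict(zip(COMPONENTS, (2, 2, 1, 1)))
    print(cumulative_score(biopsy), meets_discard_threshold(biopsy))
```

With four components graded 0–3, the cumulative score ranges from 0 to 12, so the threshold of 7 sits just above the midpoint of the scale.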

The adoption of histological scoring systems combined with clinical and surgical parameters allows transplant teams to safely allocate extended criteria donor kidneys in single or dual transplantation with favorable outcomes [32, 33]. However, the logistical setting of the procurement area or the lack of centralized pathological evaluation may limit this approach, expanding the risk of prolonged cold ischemia time, which is the primary determinant, along with de novo donor-specific antibodies, of allograft function in extended criteria donor recipients [40].

This issue emphasized the desire to understand if there are specific clinical parameters that alone would best evaluate extended criteria donors and mitigate their discard rate [35, 41, 42]. The identification of reliable clinical parameters is a priority, especially since recent studies have already reported positive results with donors of very advanced age (> 70 or > 75 years) [18, 19].

The Eurotransplant consensus found that kidneys from 65- to 74-year-old donors can also be allocated to 55- to 64-year-old recipients without pre-implantation biopsies. This allocation was particularly recommended if kidneys were derived from donors without hypertension, increased creatinine, cerebrovascular death, or other reasons for definition as a marginal donor, such as diabetes or cancer [7].

The Kidney Donor Risk Index (adopted before the Kidney Donor Profile Index) considers donor age, height, weight, ethnicity, history of hypertension or diabetes, cause of death, serum creatinine, history of hepatitis C, and donation after cardio-circulatory death as clinical parameters for donor evaluation before allocation, stressing their importance in post-transplant graft survival [6]. However, some studies suggest that the application of the Kidney Donor Risk Index and Kidney Donor Profile Index may have resulted in an overestimation of high-risk organs, leading to excessive discard, and pose some questions about a decision based solely on clinical criteria (especially for some variables such as serum creatinine at the time of donation) [43].

In our opinion, biopsy findings in a favorable setting, such as that of our center, (i.e., limited kidney processing time, expert pathologists available 24/7) maintain their role in assessing kidney graft prospects and baseline pre-transplant damage, implementing clinical information without constituting the only parameter for discarding kidneys, as suggested by other authors [19]. These data could also be further implemented by artificial intelligence/computer-assisted evaluation of histological sections and acute kidney injury biomarkers such as neutrophil gelatinase-associated lipocalin to improve their significance and reliability [44,45,46,47]. Defining a clear profile to reject/retain organs is challenging, but a feasible approach with in-depth analysis of available histological/clinical profiles may also limit the discard rate for donors aged > 80 years, possibly considering dual kidney transplant in cases with suboptimal kidney function/comorbidities (i.e., hypertension/diabetes).

Strategies to improve outcomes in recipients of extended criteria donor organs: machine perfusion

Organs from extended criteria donors are more prone to ischemia–reperfusion injury, with a consequent increased risk of delayed graft function [48].

In this context, adopting machine perfusion techniques may be an exciting strategy for reducing damage caused by ischemia–reperfusion injury, analyzing potential biomarkers of acute injury during perfusion, and applying reconditioning protocols [49]. Machine perfusion may also integrate clinical/histological information, as is already the case, with promising results in mitigating extended criteria donor discard [19].

Presently, two approaches are available: normothermic and hypothermic perfusion.

Normothermic machine perfusion has attracted increasing interest in recent years because it offers the advantage of a normal biochemical situation for evaluating graft function during perfusion and administering drugs to recondition organs. Some reports suggest that normothermic machine perfusion is beneficial in reducing ischemia–reperfusion injury and delayed graft function [50, 51]. Regarding the disadvantages of its utilization, current normothermic machine perfusion protocols require constant monitoring, continuous oxygenation with blood or other O2 carriers, and administration of nutritional supplements, with the additional risk of graft discard in case of pump failure.

The second option, hypothermic machine perfusion, is, to date, simple, cost-effective, and applicable on a large scale without risk of graft loss in case of pump failure [52]. A preclinical transplantation study in pigs showed that hypothermic machine perfusion improved survival and markers of chronic inflammation, epithelial-to-mesenchymal transition, and fibrosis [53]. Many real-life reports, including a recent meta-analysis, confirm the positive results of this technique compared to standard perfusion, which include reduced delayed graft function or primary nonfunction occurrence and increased allograft survival [54]. In Tingle et al., hypothermic machine perfusion resulted in better outcomes than standard cold storage in donation after both brain death and cardiac death; interestingly, in donation after cardiac death, which is per se associated with an increased risk of delayed graft function, fewer perfusions were required to prevent one episode of delayed graft function [55].

Strategies to improve outcomes in recipients of extended criteria donor organs: tailored immunosuppression

The goal of immunosuppression in recipients of extended criteria donor grafts is to optimize the outcome and reduce the risk of clinical complications (i.e., infections, cancer), considering their higher immunogenicity (as discussed below), which exposes them to acute rejection episodes. This is even more critical in older recipients, in whom acute rejection incidence is generally lower but can lead to graft loss more frequently [56].

With regard to induction protocols, recent observations and a Cochrane meta-analysis reported the role of rabbit anti-thymocyte globulin vs. IL-2 receptor antagonists (e.g., basiliximab, daclizumab) in preventing acute rejection [57]. This effect is also demonstrated in elderly donors, in whom rabbit anti-thymocyte globulin has shown a lower risk of acute rejection than IL-2 receptor antagonists without an increased risk of death in older recipients and high-risk kidneys [58]. Gill et al. found that the adjusted odds of acute rejection at one year and mortality in kidney transplant recipients ≥ 60 years old were significantly higher among basiliximab recipients than rabbit anti-thymocyte globulin recipients [59]. Recently, Ahn et al. confirmed that rabbit anti-thymocyte globulin was associated with a decreased risk of acute rejection compared to basiliximab in both younger and older recipients; in younger recipients, rabbit anti-thymocyte globulin was also associated with a shorter time-to-discharge and reduced mortality risk compared with basiliximab [60].

Regarding maintenance therapy, kidney-transplanted patients, even elderly ones, most commonly receive triple therapy composed of tacrolimus/cyclosporine A, an antimetabolite (usually mycophenolate mofetil), and steroids [61, 62]. Interestingly, based on OPTN/UNOS data, Lentine et al. noted low adoption of depletion agents (rabbit anti-thymocyte globulin/alemtuzumab) in different combinations vs. basiliximab and more pronounced use of cyclosporine A-based immunosuppression induction in the older group (recipients > 65 years), with increased death-censored graft survival in patients without antimetabolite- or cyclosporine A-based regimens vs. standard treatment (induction with rabbit anti-thymocyte globulin/alemtuzumab followed by triple therapy) [61]. In a large European cohort of patients ≥ 60 years old, Echterdiek et al. showed similar 3-year death-censored graft loss and patient mortality between tacrolimus- and cyclosporine A-treated patients (in both cases with antimetabolite ± steroids) with a similar risk of hospitalization for global and bacterial infection but a lower incidence of rejection in tacrolimus-treated patients. Only BK virus infection and post-transplant diabetes were more prevalent in the tacrolimus group [62].

However, many studies suggest minimizing calcineurin inhibitor use based on the supposed increased susceptibility of older organs to higher levels of these drugs [63]. On the other hand, low/very low doses of calcineurin inhibitors or avoiding their use altogether may expose these increased immunogenic organs to a non-tolerogenic milieu, with a higher risk of acute rejection and donor-specific antibody production, clearly documented as a prevalent risk factor for graft failure in recipients of extended criteria donor organs [40, 64].

Mammalian target of rapamycin inhibitors (mTORi) have been proposed primarily in this context to avoid calcineurin inhibitor nephrotoxicity. However, large randomized clinical trials are not available [65], and despite some studies reporting positive results [58], mTORi utilization in patients receiving extended criteria donor organs remains a matter of debate [66], considering the documented risk of acute rejection in patients receiving tacrolimus + everolimus vs. the standard of care (tacrolimus + mycophenolate mofetil) [67].

Belatacept, a blocker of the costimulatory CD28/CD80 pathway [68], demonstrated a positive effect in increasing kidney function after conversion from calcineurin inhibitors in extended criteria donor organ recipients in the BENEFIT-EXT trial, with a 15 ml/min/1.73 m2 gain in belatacept-treated groups at seven years [69].

At the same time, some reports suggest positive results in extended criteria donor patients who switched from calcineurin inhibitors to belatacept within the first six months post-transplant [70].

Some authors also noted an increased rejection rate in patients who switched from calcineurin inhibitors to belatacept [71], but as also documented by our group, hybrid approaches with calcineurin inhibitor minimization (rather than avoidance) may reduce acute rejection risk, maintaining the positive belatacept effect on estimated glomerular filtration rate [72].

As shown in Table 2, each type of therapy may have a rationale and documented pros/cons based on literature data. In our opinion, a tailored approach should be applied for every patient based on their specific pre-transplant characteristics (i.e., age, immunological profile, years of dialysis) and the available information on the extended criteria donor kidneys. Considering the need to lower the discard rate and maximize post-transplant outcomes, excessive dependence on HLA matching to reduce the risk of de novo donor-specific antibodies may be adequately replaced by rabbit anti-thymocyte globulin induction and the adoption of triple therapy in standard patients, considering mTORi or the reduction of immunosuppressive load in a specific context according to the recipient’s history (e.g., history of cancer, cardiovascular/infectious risk), and belatacept + low tacrolimus in patients with delayed graft function or insufficient graft recovery.

Future perspectives: cellular therapies

With regard to future approaches, cellular therapies have received increasing attention in the last ten years and may theoretically allow, in older donor settings, the achievement of immunological tolerance (thereby abolishing the need for nephrotoxic immunosuppressive drugs) and/or the reconditioning/recellularization of organs from donors with suboptimal kidney function.

Several approaches have thus far not demonstrated a significant benefit. In the TAIC-I trial, donor-derived transplant acceptance-inducing cells, a type of immunoregulatory macrophage, were administered as an adjunct immune conditioning therapy; eight out of ten kidney transplant recipients in whom immunosuppression was tapered tolerated steroid discontinuation, with an additional reduction of sirolimus/tacrolimus monotherapy in some cases. Nevertheless, the trial could not provide evidence that postoperative transplant acceptance-inducing cell administration has a documented ability to dampen allogeneic rejection [86].

Promising examples are derived from some T regulatory cell (Treg) trials, showing good patient and graft survival and, apparently, low infection/rejection rates [78, 79]. However, adopted protocols and donor/patient characteristics vary greatly among studies. Sample sizes were small, and some intrinsic properties of these cells (difficult isolation, uncertain homing to target sites) may limit their utilization. Notably, Treg infusion alone was insufficient to achieve tolerance, and combined immunosuppressive regimens are still under investigation [84, 85].

One interesting way to adapt the immune system involves synthetic chimeric antigen receptor cells that could be targeted toward donor HLA mismatches to redirect Treg specificity. In mouse allograft models, donor-specific chimeric antigen receptor Tregs effectively reduced allograft rejection [82]. More recently, they showed a striking ability to diminish de novo donor-specific antibodies and frequencies of de novo donor-specific antibody-secreting B cells but had no effect in sensitized mice, suggesting limited efficacy on memory alloresponse [83].

Bone marrow-derived mesenchymal stromal cells have also emerged in this field for their regenerative and tolerance-inducing potential. Many transplantation models and seminal trials, with a wide range of adopted cells and combined immunosuppression, have shown mixed results with the potential induction of a favorable and protolerogenic microenvironment after mesenchymal stromal cell infusion [80, 81]. Although their exact mechanism of action is not clearly understood, most of their protective and regenerative role could be mediated by indirect modulation of immune system components (e.g., macrophages, monocytes), as documented by similar results obtained through mesenchymal stromal cell-derived extracellular vesicles or conditioned medium infusion [87]. Although mesenchymal stromal cells appear to have a very short lifespan in recipients, which lowers the risk of malignant transformation, using mesenchymal stromal cell-derived extracellular vesicles could avoid this risk altogether. Nevertheless, available protocols must be refined to fulfill the quality and quantity requirements for practical application [88].

Complete chimerism with cessation of immunosuppressive drugs and tolerance of transplanted organs has been tested and obtained, but safety issues that presented after required immune system reconditioning limit this strategy [89].

Aging at the cellular level: senescence in the kidney

Despite being partially questioned, donor age remains a critical factor in the long-term outcome of kidney transplantations, and extended criteria donor organs also carry an increased risk of acute rejection [56].

From a pathophysiological viewpoint, these conditions reflect clinical and metabolic processes related to organ and immunological aging (Fig. 2).

Aging is defined as the decline of physiological integrity due to an accumulation of damage and deterioration of proteins and organelle functions [90]; its cellular counterpart, as described by Hayflick and Moorhead, is senescence [91].

Senescence is determined by a permanent decline in cell proliferation due to different stimuli (i.e., the accumulation of DNA damage, telomere shortening, high levels of reactive oxygen species, genetic mutations, chromatin remodeling, and mitochondrial dysfunction). Senescent cells also acquire a proinflammatory profile with the secretion of cytokines/chemokines, such as interleukin-6 (IL-6), matrix metalloproteinases, and growth factors [92].

Autophagy is also profoundly involved in kidney aging: this process, which is strictly mTOR-dependent, ensures adequate protein and organelle degradation but declines with age, causing age-related waste accumulation in cells [93]; this accumulation results in increasing numbers of misfolded proteins and the formation of inclusion bodies and deformed organelles, with crucial impact on terminally differentiated cells (i.e., podocytes) [94]. In this context, genetic or pharmacological modulation of the mTOR and AMPK-ULK1 pathways may represent a potential way to increase autophagy and reduce organ aging [95].

Modification of protein folding also depends on heat shock proteins, a subgroup of chaperones. Barna et al. demonstrated that with aging, the master regulator of heat shock protein transcription (HSF1) becomes less able to bind to heat shock protein genes upon stress [96]. Additionally, low-grade constitutive heat shock protein expression differs between standard and pathological allografts, suggesting a possible connection between aging, transplant outcome, and heat shock protein activity [97].

From a genetic point of view, some authors have investigated the role of age-related modifications in the kidney compartment. Rodwell et al. identified a pool of kidney-specific signatures that change expression in the cortex and the medulla with age; forty-nine age-regulated genes encode protein components of the extracellular matrix, all but four of which increase expression in old age. Considering the crucial role of the extracellular matrix in the filtration process via the basement membrane and its well-known decline with age, this study highlights the potential role of these age-related modifications in causing nonspecific injuries that may induce a proinflammatory niche that, in turn, activates innate and adaptive immune responses [98].

In this context, Franceschi et al. encompass organ and organism aging in “inflammaging,” defining this condition as the chronic, low-grade inflammation that occurs during aging and contributes to the pathogenesis of age-related diseases. According to this definition, cellular senescence, mitochondrial dysfunction, defective autophagy and mitophagy, and activation of the inflammasome are linked, with the additional contribution of metabolic inflammation driven by nutrient excess or overnutrition (the so-called “metaflammation”) [99]. The use of specific biomarkers (i.e., DNA methylation, glycomics, and metabolomics) may open the door to individual evaluation of the metabolic and inflammatory profile of donors and recipients, rewriting the parameters of “old” and “age” for grafts and patients, respectively.

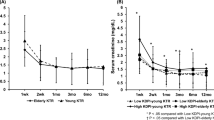

Some examples of specific approaches are already available: Kimmel et al. applied single-cell sequencing to identify the upregulation of inflammatory pathways in old vs. young mice [100]; Elyahu et al. documented, through single-cell RNA sequencing and multidimensional protein analyses, a modification of the CD4 T-cell profile in aged mice, with alterations of regulatory, exhausted, and cytotoxic patterns and different expression of inflammatory cytokines (IL-27, IFNb, IL-6) [101].

All these data pave the way for a future evaluation of specific age-related organ modifications, allowing us to accurately characterize the detailed footprint of “aged” organs according to the objective biological age rather than the chronological one.

At the same time, these new molecular insights pose new questions, for example, in the case of donors with unfavorable biological age (based on the molecular analyses themselves) but with still adequately preserved renal function.

As mentioned above, we strongly recommend adopting a pragmatic approach, encompassing functional, histological, perfusion, and, in the near future, molecular information, to support the decision-making process without constituting a barrier to organ acceptance, possibly considering dual kidney transplantation in high-risk settings (e.g., donor > 80 years old) to minimize discard.

Conclusions

Based on the confirmed results of kidney transplantation in improving the quality of life and survival of patients with end-stage kidney disease, the use of extended criteria donor organs appears to be a crucial issue in the transplantation field for expanding the donor pool. In the (not-so-distant) future, extended criteria donors may be treated with the previously mentioned techniques to obtain “young” and low tolerogenic tissues. To date, a combination of available therapeutic strategies and allocation policies, with a tailored evaluation for each patient on the waiting list according to their specific clinical characteristics, may improve results and expand and optimize extended criteria donor utilization.

Data availability

All data and datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Rose C, Gill J, Gill JS (2017) Association of kidney transplantation with survival in patients with long dialysis exposure. Clin J Am Soc Nephrol 12:2024–2031. https://doi.org/10.2215/CJN.06100617

Carriazo S, Villalvazo P, Ortiz A (2022) More on the invisibility of chronic kidney disease… and counting. Clin Kidney J 15:388–392. https://doi.org/10.1093/ckj/sfab240

Kling CE, Perkins JD, Johnson CK et al (2018) Utilization of standard criteria donor and expanded criteria donor kidneys after kidney allocation system implementation. Ann Transplant 23:691–703. https://doi.org/10.12659/AOT.910504

Metzger R, Delmonico FL, Feng S et al (2003) Expanded criteria donors for kidney transplantation. Am J Transplant 3(Suppl 4):114–125. https://doi.org/10.1034/j.1600-6143.3.s4.11.x

Rosengard BR, Feng S, Alfrey EJ et al (2002) Report of the Crystal City meeting to maximize the use of organs recovered from the cadaver donor. Am J Transplant 2:701–711. https://doi.org/10.1034/j.1600-6143.2002.20804.x

Rao PS, Schaubel DE, Guidinger MK et al (2009) A comprehensive risk quantification score for deceased donor kidneys: the kidney donor risk index. Transplantation 88:231–236. https://doi.org/10.1097/TP.0b013e3181ac620b

Süsal C, Kumru G, Döhler B et al (2020) Should kidney allografts from old donors be allocated only to old recipients? Transpl Int 33:849–857. https://doi.org/10.1111/tri.13628

Price MB, Yan G, Joshi M et al (2021) Prediction of kidney allograft discard before procurement: the kidney discard risk index. Exp Clin Transplant 19:204–211. https://doi.org/10.6002/ect.2020.0340

Aubert O, Reese PP, Audry B et al (2019) Disparities in acceptance of deceased donor kidneys between the United States and France and estimated effects of increased US acceptance. JAMA Intern Med 179:1365–1374. https://doi.org/10.1001/jamainternmed.2019.2322

Querard AH, Foucher Y, Combescure C et al (2016) Comparison of survival outcomes between expanded criteria donor and standard criteria donor kidney transplant recipients: a systematic review and meta-analysis. Transpl Int 29:403–415. https://doi.org/10.1111/tri.12736

van Ittersum FJ, Hemke AC, Dekker FW et al (2017) Increased risk of graft failure and mortality in Dutch recipients receiving an expanded criteria donor kidney transplant. Transpl Int 30:14–28. https://doi.org/10.1111/tri.12863

Kim M-G, Kim YJ, Kwon HY et al (2013) Outcomes of combination therapy for chronic antibody-mediated rejection in renal transplantation. Nephrology (Carlton) 18:820–826. https://doi.org/10.1111/nep.12157

Messina M, Diena D, Dellepiane S et al (2017) Long-term outcomes and discard rate of kidneys by decade of extended criteria donor age. Clin J Am Soc Nephrol 12:323–331. https://doi.org/10.2215/CJN.06550616

Merion RM, Ashby VB, Wolfe RA et al (2005) Deceased-donor characteristics and the survival benefit of kidney transplantation. JAMA 294:2726–2733. https://doi.org/10.1001/jama.294.21.2726

Gill JS, Schaeffner E, Chadban S et al (2013) Quantification of the early risk of death in elderly kidney transplant recipients. Am J Transplant 13:427–432. https://doi.org/10.1111/j.1600-6143.2012.04323.x

Savoye E, Tamarelle D, Chalem Y et al (2007) Survival benefits of kidney transplantation with expanded criteria deceased donors in patients aged 60 years and over. Transplantation 84:1618–1624. https://doi.org/10.1097/01.tp.0000295988.28127.dd

Ojo AO, Meier-Kriesche HU, Hanson JA et al (2001) The impact of simultaneous pancreas-kidney transplantation on long-term patient survival. Transplantation 71:82–90. https://doi.org/10.1097/00007890-200101150-00014

Pérez-Sáez MJ, Arcos E, Comas J et al (2016) Survival benefit from kidney transplantation using kidneys from deceased donors aged ≥75 years: a time-dependent analysis. Am J Transplant 16:2724–2733. https://doi.org/10.1111/ajt.13800

Pérez-Sáez MJ, Montero N, Redondo-Pachón D et al (2017) Strategies for an expanded use of kidneys from elderly donors. Transplantation 101:727–745. https://doi.org/10.1097/TP.0000000000001635

Miles CD, Schaubel DE, Jia X et al (2007) Mortality experience in recipients undergoing repeat transplantation with expanded criteria donor and non-ECD deceased-donor kidneys. Am J Transplant 7:1140–1147. https://doi.org/10.1111/j.1600-6143.2007.01742.x

Etheredge HR (2021) Assessing global organ donation policies: Opt-in vs opt-out. Risk Manag Healthc Policy 14:1985–1998. https://doi.org/10.2147/RMHP.S270234

Dolla C, Mella A, Vigilante G et al (2021) Recipient pre-existing chronic hypotension is associated with delayed graft function and inferior graft survival in kidney transplantation from elderly donors. PLoS ONE 16:1–15. https://doi.org/10.1371/journal.pone.0249552

Diena D, Messina M, De Biase C et al (2019) Relationship between early proteinuria and long term outcome of kidney transplanted patients from different decades of donor age. BMC Nephrol 20:1–15. https://doi.org/10.1186/s12882-019-1635-0

Ortiz J, Parsikia A, Nan Chang P et al (2013) Satisfactory outcomes with usage of extended criteria donor (ECD) kidneys in retransplant recipients. Ann Transplant 18:285–292. https://doi.org/10.12659/AOT.883951

Dunn C, Emeasoba EU, Hung M et al (2018) A retrospective cohort study on rehospitalization following expanded criteria donor kidney transplantation. Surg Res Pract 2018:1–8. https://doi.org/10.1155/2018/4879850

Rose C, Schaeffner E, Frei U et al (2015) A lifetime of allograft function with kidneys from older donors. J Am Soc Nephrol 26:2483–2493. https://doi.org/10.1681/ASN.2014080771

Mohan S, Chiles MC, Patzer RE et al (2018) Factors leading to the discard of deceased donor kidneys in the United States. Kidney Int 94:187–198. https://doi.org/10.1016/j.kint.2018.02.016

Stewart DE, Garcia VC, Rosendale JD et al (2017) Diagnosing the decades-long rise in the deceased donor kidney discard rate in the United States. Transplantation 101:575–587. https://doi.org/10.1097/TP.0000000000001539

Sung RS, Christensen LL, Leichtman AB et al (2008) Determinants of discard of expanded criteria donor kidneys: Impact of biopsy and machine perfusion. Am J Transplant 8:783–792. https://doi.org/10.1111/j.1600-6143.2008.02157.x

Narvaez JRF, Nie J, Noyes K et al (2018) Hard-to-place kidney offers: Donor- and system-level predictors of discard. Am J Transplant 18:2708–2718. https://doi.org/10.1111/ajt.14712

Marrero WJ, Naik AS, Friedewald JJ et al (2017) Predictors of deceased donor kidney discard in the United States. Transplantation 101:1690–1697. https://doi.org/10.1097/TP.0000000000001238

Gandolfini I, Buzio C, Zanelli P et al (2014) The kidney donor profile index (KDPI) of marginal donors allocated by standardized pretransplant donor biopsy assessment: Distribution and association with graft outcomes. Am J Transplant 14:2515–2525. https://doi.org/10.1111/ajt.12928

Hofer J, Regele H, Böhmig GA et al (2014) Pre-implant biopsy predicts outcome of single-kidney transplantation independent of clinical donor variables. Transplantation 97:426–432. https://doi.org/10.1097/01.tp.0000437428.12356.4a

Stewart DE, Foutz J, Kamal L et al (2022) The independent effects of procurement biopsy findings on 10-year outcomes of extended criteria donor kidney transplants. Kidney Int Reports 7:1850–1865. https://doi.org/10.1016/j.ekir.2022.05.027

Kasiske BL, Stewart DE, Bista BR et al (2014) The role of procurement biopsies in acceptance decisions for kidneys retrieved for transplant. Clin J Am Soc Nephrol 9:562–571. https://doi.org/10.2215/CJN.07610713

Haas M (2014) Donor kidney biopsies: pathology matters, and so does the pathologist. Kidney Int 85:1016–1019. https://doi.org/10.1038/ki.2013.439

Girolami I, Gambaro G, Ghimenton C et al (2020) Pre-implantation kidney biopsy: value of the expertise in determining histological score and comparison with the whole organ on a series of discarded kidneys. J Nephrol 33:167–176. https://doi.org/10.1007/s40620-019-00638-7

Karpinski J, Lajoie G, Cattran D et al (1999) Outcome of kidney transplantation from high-risk donors is determined by both structure and function. Transplantation 67:1162–1167

Remuzzi G, Cravedi P, Perna A et al (2006) Long-term outcome of renal transplantation from older donors. N Engl J Med 354:343–352. https://doi.org/10.1097/00007890-200607152-01373

Aubert O, Kamar N, Vernerey D et al (2015) Long term outcomes of transplantation using kidneys from expanded criteria donors: Prospective, population based cohort study. BMJ. https://doi.org/10.1136/bmj.h3557

Tanriover B, Mohan S, Cohen DJ et al (2014) Kidneys at higher risk of discard: Expanding the role of dual kidney transplantation. Am J Transplant 14:404–415. https://doi.org/10.1111/ajt.12553

Cecka JM, Cohen B, Rosendale J, Smith M (2006) Could more effective use of kidneys recovered from older deceased donors result in more kidney transplants for older patients? Transplantation 81:966–970. https://doi.org/10.1097/01.tp.0000216284.81604.d4

Saha-Chaudhuri P, Rabin C, Tchervenkov J et al (2020) Predicting clinical outcome in expanded criteria donor kidney transplantation: a retrospective cohort study. Can J Kidney Heal Dis. https://doi.org/10.1177/2054358120924305

Girolami I, Pantanowitz L, Marletta S et al (2022) Artificial intelligence applications for pre-implantation kidney biopsy pathology practice: a systematic review. J Nephrol 35:1801–1808. https://doi.org/10.1007/s40620-022-01327-8

Pavlovic M, Oszwald A, Kikić Ž et al (2022) Computer-assisted evaluation enhances the quantification of interstitial fibrosis in renal implantation biopsies, measures differences between frozen and paraffin sections, and predicts delayed graft function. J Nephrol 35:1819–1829. https://doi.org/10.1007/s40620-022-01315-y

Threlkeld R, Ashiku L, Canfield C et al (2021) Reducing kidney discard with artificial intelligence decision support: the need for a transdisciplinary systems approach. Curr Transplant Reports 8:263–271. https://doi.org/10.1007/s40472-021-00351-0

Ronco C, Legrand M, Goldstein SL et al (2014) Neutrophil gelatinase-associated lipocalin: Ready for routine clinical use? An international perspective. Blood Purif 37:271–285. https://doi.org/10.1159/000360689

Pascual J, Zamora J, Pirsch JD (2008) A systematic review of kidney transplantation from expanded criteria donors. Am J Kidney Dis 52:553–586. https://doi.org/10.1053/j.ajkd.2008.06.005

Resch T, Cardini B, Oberhuber R et al (2020) Transplanting marginal organs in the era of modern machine perfusion and advanced organ monitoring. Front Immunol. https://doi.org/10.3389/fimmu.2020.00631

Elliott TR, Nicholson ML, Hosgood SA (2021) Normothermic kidney perfusion: an overview of protocols and strategies. Am J Transplant 21:1382–1390. https://doi.org/10.1111/ajt.16307

Padilla M, Coll E, Fernández-Pérez C et al (2021) Improved short-term outcomes of kidney transplants in controlled donation after the circulatory determination of death with the use of normothermic regional perfusion. Am J Transplant 21:3618–3628. https://doi.org/10.1111/ajt.16622

Darius T, Nath J, Mourad M (2021) Simply adding oxygen during hypothermic machine perfusion to combat the negative effects of ischemia-reperfusion injury: Fundamentals and current evidence for kidneys. Biomedicines. https://doi.org/10.3390/biomedicines9080993

Vaziri N, Thuillier R, Favreau FD et al (2011) Analysis of machine perfusion benefits in kidney grafts: a preclinical study. J Transl Med 9:1–13. https://doi.org/10.1186/1479-5876-9-15

Bellini MI, Yiu J, Nozdrin M, Papalois V (2019) The effect of preservation temperature on liver, kidney, and pancreas tissue ATP in animal and preclinical human models. J Clin Med. https://doi.org/10.3390/jcm8091421

Tingle SJ, Figueiredo RS, Moir JAG et al (2019) Machine perfusion preservation versus static cold storage for deceased donor kidney transplantation. Cochrane Database Syst Rev. https://doi.org/10.1002/14651858.CD011671.pub2

Peeters LEJ, Andrews LM, Hesselink DA et al (2018) Personalized immunosuppression in elderly renal transplant recipients. Pharmacol Res 130:303–307. https://doi.org/10.1016/j.phrs.2018.02.031

Webster AC, Wu S, Tallapragada K et al (2017) Polyclonal and monoclonal antibodies for treating acute rejection episodes in kidney transplant recipients. Cochrane Database Syst Rev. https://doi.org/10.1002/14651858.CD004756.pub4

Filiopoulos V, Boletis JN (2016) Renal transplantation with expanded criteria donors: Which is the optimal immunosuppression? World J Transplant 6:103. https://doi.org/10.5500/wjt.v6.i1.103

Gill J, Sampaio M, Gill JS et al (2011) Induction immunosuppressive therapy in the elderly kidney transplant recipient in the United States. Clin J Am Soc Nephrol 6:1168–1178. https://doi.org/10.2215/CJN.07540810

Ahn JB, Bae S, Chu NM et al (2021) The risk of post kidney transplant outcomes by induction choice differs by recipient age. Transplant Direct 7:e715. https://doi.org/10.1097/TXD.0000000000001105

Lentine KL, Cheungpasitporn W, Xiao H et al (2021) Immunosuppression regimen use and outcomes in older and younger adult kidney transplant recipients: a national registry analysis. Transplantation 105:1840–1849. https://doi.org/10.1097/TP.0000000000003547

Echterdiek F, Döhler B, Latus J et al (2022) Influence of calcineurin inhibitor choice on outcomes in kidney transplant recipients aged ≥60 Y: a collaborative transplant study report. Transplantation 106:E212–E218. https://doi.org/10.1097/TP.0000000000004060

Luke PPW, Nguan CY, Horovitz D et al (2009) Immunosuppression without calcineurin inhibition: Optimization of renal function in expanded criteria donor renal transplantation. Clin Transplant 23:9–15. https://doi.org/10.1111/j.1399-0012.2008.00880.x

Gatault P, Kamar N, Büchler M et al (2017) Reduction of extended-release tacrolimus dose in low-immunological-risk kidney transplant recipients increases risk of rejection and appearance of donor-specific antibodies: a randomized study. Am J Transplant 17:1370–1379. https://doi.org/10.1111/ajt.14109

Laham G, Scuteri R, Cornicelli P et al (2016) Surveillance registry of sirolimus use in recipients of kidney allografts from expanded criteria donors. Transplant Proc 48:2650–2655. https://doi.org/10.1016/j.transproceed.2016.08.008

Durrbach A, Rostaing L, Tricot L et al (2008) Prospective comparison of the use of sirolimus and cyclosporine in recipients of a kidney from an expanded criteria donor. Transplantation 85:486–490. https://doi.org/10.1097/TP.0b013e318160d3c9

Ferreira AN, Felipe CR, Cristelli M et al (2019) Prospective randomized study comparing everolimus and mycophenolate sodium in de novo kidney transplant recipients from expanded criteria deceased donor. Transpl Int 32:1127–1143. https://doi.org/10.1111/tri.13478

Malvezzi P, Jouve T, Rostaing L (2016) Costimulation blockade in kidney transplantation: an update. Transplantation 100:2315–2323. https://doi.org/10.1097/TP.0000000000001344

Durrbach A, Pestana JM, Florman S et al (2016) Long-term outcomes in belatacept- versus cyclosporine-treated recipients of extended criteria donor kidneys: final results from BENEFIT-EXT, a phase III randomized study. Am J Transplant 16:3192–3201. https://doi.org/10.1111/ajt.13830

Le Meur Y, Aulagnon F, Bertrand D et al (2016) Effect of an early switch to belatacept among calcineurin inhibitor-intolerant graft recipients of kidneys from extended-criteria donors. Am J Transplant 16:2181–2186. https://doi.org/10.1111/ajt.13698

De Graav GN, Baan CC, Clahsen-Van Groningen MC et al (2017) A randomized controlled clinical trial comparing belatacept with tacrolimus after de novo kidney transplantation. Transplantation 101:2571–2581. https://doi.org/10.1097/TP.0000000000001755

Gallo E, Abbasciano I, Mingozzi S et al (2020) Prevention of acute rejection after rescue with Belatacept by association of low-dose Tacrolimus maintenance in medically complex kidney transplant recipients with early or late graft dysfunction. PLoS ONE 15:1–16. https://doi.org/10.1371/journal.pone.0240335

Patel SJ, Knight RJ, Suki WN et al (2011) Rabbit antithymocyte induction and dosing in deceased donor renal transplant recipients over 60yr of age. Clin Transplant 25:250–256. https://doi.org/10.1111/j.1399-0012.2010.01393.x

Andrés A, Marcén R, Valdés F et al (2009) A randomized trial of basiliximab with three different patterns of cyclosporin A initiation in renal transplant from expanded criteria donors and at high risk of delayed graft function. Clin Transplant 23:23–32. https://doi.org/10.1111/j.1399-0012.2008.00891.x

Andrés A, Budde K, Clavien PA et al (2009) A randomized trial comparing renal function in older kidney transplant patients following delayed versus immediate tacrolimus administration. Transplantation 88:1101–1108. https://doi.org/10.1097/TP.0b013e3181ba06ee

Durrbach A, Pestana JM, Pearson T et al (2010) A phase III study of Belatacept versus cyclosporine in kidney transplants from extended criteria donors (BENEFIT-EXT Study). Am J Transplant 10:547–557. https://doi.org/10.1111/j.1600-6143.2010.03016.x

Pestana JOM, Grinyo JM, Vanrenterghem Y et al (2012) Three-year outcomes from BENEFIT-EXT: A phase III study of Belatacept versus cyclosporine in recipients of extended criteria donor kidneys. Am J Transplant 12:630–639. https://doi.org/10.1111/j.1600-6143.2011.03914.x

Mathew JM, H-Voss J, Lefever A et al (2018) A phase I clinical trial with ex vivo expanded recipient regulatory T cells in living donor kidney transplants. Sci Rep 8:1–12. https://doi.org/10.1038/s41598-018-25574-7

Harden PN, Game DS, Sawitzki B et al (2021) Feasibility, long-term safety, and immune monitoring of regulatory T cell therapy in living donor kidney transplant recipients. Am J Transplant 21:1603–1611. https://doi.org/10.1111/ajt.16395

Perico N, Casiraghi F, Introna M et al (2011) Autologous mesenchymal stromal cells and kidney transplantation: A pilot study of safety and clinical feasibility. Clin J Am Soc Nephrol 6:412–422. https://doi.org/10.2215/CJN.04950610

Kaundal U, Ramachandran R, Arora A et al (2022) Mesenchymal stromal cells mediate clinically unpromising but favourable immune responses in kidney transplant patients. Stem Cells Int 2022:1–17. https://doi.org/10.1155/2022/2154544

Dawson NAJ, Lamarche C, Hoeppli RE et al (2019) Systematic testing and specificity mapping of alloantigen-specific chimeric antigen receptors in regulatory T cells. JCI Insight 4:1–19. https://doi.org/10.1172/jci.insight.123672

Sicard A, Lamarche C, Speck M et al (2020) Donor-specific chimeric antigen receptor Tregs limit rejection in naive but not sensitized allograft recipients. Am J Transplant 20:1562–1573. https://doi.org/10.1111/ajt.15787

Koyama I, Bashuda H, Uchida K et al (2020) A clinical trial with adoptive transfer of ex vivo-induced, donor-specific immune-regulatory cells in kidney transplantation - a second report. Transplantation 104:2415–2423. https://doi.org/10.1097/TP.0000000000003149

Juneja T, Kazmi M, Mellace M, Saidi RF (2022) Utilization of Treg cells in solid organ transplantation. Front Immunol 13:1–12. https://doi.org/10.3389/fimmu.2022.746889

Hutchinson JA, Riquelme P, Brem-Exner BG et al (2008) Transplant acceptance-inducing cells as an immune-conditioning therapy in renal transplantation. Transpl Int 21:728–741. https://doi.org/10.1111/j.1432-2277.2008.00680.x

Massa M, Croce S, Campanelli R et al (2020) Clinical applications of mesenchymal stem/stromal cell derived extracellular vesicles: Therapeutic potential of an acellular product. Diagnostics 10:1–17. https://doi.org/10.3390/diagnostics10120999

Bruno S, Kholia S, Deregibus MC, Camussi G (2019) The role of extracellular vesicles as paracrine effectors in stem cell-based therapies. In: Ratajczak M (ed) Stem cells: therapeutic applications. Springer International Publishing, Cham, pp 175–193

Lowsky R, Strober S (2022) Establishment of chimerism and organ transplant tolerance in laboratory animals: safety and efficacy of adaptation to humans. Front Immunol 13:1–22. https://doi.org/10.3389/fimmu.2022.805177

López-Otín C, Blasco MA, Partridge L et al (2013) The hallmarks of aging. Cell 153:1194. https://doi.org/10.1016/j.cell.2013.05.039

Hayflick L (1965) The limited in vitro lifetime of human diploid cell strains. Exp Cell Res 37:614–636. https://doi.org/10.1016/0014-4827(65)90211-9

van Willigenburg H, de Keizer PLJ, de Bruin RWF (2018) Cellular senescence as a therapeutic target to improve renal transplantation outcome. Pharmacol Res 130:322–330. https://doi.org/10.1016/j.phrs.2018.02.015

Mizushima N, Levine B, Cuervo AM, Klionsky DJ (2008) Autophagy fights disease through cellular self-digestion. Nature 451:1069–1075. https://doi.org/10.1038/nature06639

Huber TB, Edelstein CL, Hartleben B et al (2012) Emerging role of autophagy in kidney function, diseases and aging. Autophagy 8:1009–1031. https://doi.org/10.4161/auto.19821

Bork T, Liang W, Yamahara K et al (2020) Podocytes maintain high basal levels of autophagy independent of mTOR signaling. Autophagy 16:1932–1948. https://doi.org/10.1080/15548627.2019.1705007

Barna J, Csermely P, Vellai T (2018) Roles of heat shock factor 1 beyond the heat shock response. Cell Mol Life Sci 75:2897–2916. https://doi.org/10.1007/s00018-018-2836-6

O’Neill S, Ingman TG, Wigmore SJ et al (2013) Differential expression of heat shock proteins in healthy and diseased human renal allografts. Ann Transplant 18:550–557. https://doi.org/10.12659/AOT.889599

Rodwell GEJ, Sonu R, Zahn JM et al (2004) A transcriptional profile of aging in the human kidney. PLoS Biol. https://doi.org/10.1371/journal.pbio.0020427

Franceschi C, Campisi J (2014) Chronic inflammation (inflammaging) and its potential contribution to age-associated diseases. Journals Gerontol - Ser A Biol Sci Med Sci 69:S4–S9. https://doi.org/10.1093/gerona/glu057

Kimmel JC, Penland L, Rubinstein ND et al (2019) Murine single-cell RNA-seq reveals cell-identity- and tissue-specific trajectories of aging. Genome Res 29:2088–2103. https://doi.org/10.1101/gr.253880.119

Elyahu Y, Hekselman I, Eizenberg-Magar I et al (2019) Aging promotes reorganization of the CD4 T cell landscape toward extreme regulatory and effector phenotypes. Sci Adv. https://doi.org/10.1126/sciadv.aaw8330

Funding

Open access funding provided by Università degli Studi di Torino within the CRUI-CARE Agreement.

Author information

Contributions

AM, RC, and LB revised literature data and wrote the main manuscript text; AB and GC contributed to the conception, design, and critical revision. All the authors approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

All procedures performed were in accordance with the ethical standards of the institute and regional research committee and with the 1964 Helsinki declaration and its later amendments of comparable ethical standards.

Human and animal rights

There are no human and animal rights issues to declare.

Informed consent

For this study formal consent is not required.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mella, A., Calvetti, R., Barreca, A. et al. Kidney transplants from elderly donors: what we have learned 20 years after the Crystal City consensus criteria meeting. J Nephrol (2024). https://doi.org/10.1007/s40620-024-01888-w

DOI: https://doi.org/10.1007/s40620-024-01888-w