Abstract

Approximation models have recently been introduced to differential evolution (DE) to reduce expensive fitness evaluation in function optimization. These models basically require additional control parameters and/or external storage for the learning process. Depending on the choice of the additional parameters, the strategies may have different levels of efficiency. The present paper introduces an alternative way for reducing function evaluations in differential evolution, which does not require additional control parameter and external archive. The algorithm uses a nearest neighbor in the search population to judge whether a new point is worth evaluating, so that unnecessary evaluations can be avoided. The performance of this new scheme of differential evolution, known as differential evolution with nearest neighbor comparison (DE-NNC), is demonstrated and compared with that of standard DE as well as approximation models including differential evolution using k-nearest neighbor predictor (DE-kNN), differential evolution using speeded-up k-nearest neighbor estimator (DE-EkNN) and DE with estimated comparison method through some test functions. The results show that DE-NNC can produce considerable reduction of actual function calls compared to DE and is competitive to DE-kNN, DE-EkNN and DE with estimated comparison.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Differential evolution (DE), which was introduced by Storn and Price [1] is a population-based optimizer. DE creates a trial individual using differences within the search population. The population is then restructured by survival individuals evolutionally. The algorithm is simple, easy to use and has shown better global convergence and robustness than most other genetic algorithms, suitable for various optimization problems [2]. However, like other population-based algorithms such as genetic algorithms (GA) and particle swarm optimization (PSO), one of the main issues in applying DE is its expensive computation requirement. This is due to the fact that evolutionary algorithm (EA) often needs to evaluate objective function thousand times to get optimal solutions. It becomes more pronounced when the cost of function evaluation becomes higher.

Research in reducing the computational burden in EA has been focusing on using function approximations, so-called meta-model or surrogate model [3–6]. Some of the popular approximation models in evolutionary computation are quadratic models [7], kriging models [7, 8], neural network models [9] and radial basis function (RBF) network models [10–13]. In these approximation strategies, objective function is estimated by approximation model and the optimization problem is solved utilizing the approximated values. The effectiveness of this strategy depends largely on the accuracy of the approximation model. A time-consuming learning process is often invoked to obtain a high accuracy model. Thus, time-efficient approximate model is particularly important for expensive function evaluation.

Methods using fitness approximation based on meta-model have been recently introduced to differential evolution, including DE-kNN [14] and DE-EkNN [15]. Both methods use k-nearest neighbor (kNN) predictor constructed through a dynamic learning to reduce the exact evaluation calls during DE search. In the early predefined iterations, the algorithm processes as usual, i.e., as standard DE procedure with exact objective function evaluations. All fitness values calculated are stored in an archive to be reused as training population. In later iterations, prediction function value computed from k-nearest samples in the training population is assigned to each solution. This new population is sorted (in the order of optimization) based on prediction function values. A chosen number of best solution values in the new population will be replaced by the exact function values and then stored in the training population. In DE-kNN, all re-evaluated samples are stored and the training population gradually increases. Two major differences between DE-EkNN and DE-kNN are that a weighted average kNN is used in DE-EkNN to estimate the fitness value for effective prediction and the archive is updated by selectively storing training samples for efficiency [15]. Thus, DE-EkNN has more compact archive than DE-kNN. Both DE-kNN and DE-EkNN have been shown through benchmark test functions to be able to converge towards the global optima with less actual evaluations. As pointed out by Park and Lee in their paper [15], two of the major drawbacks of the kNN predictor are the requirement of time to find the nearest neighbors and the need of memory storage to keep samples in the archive. This tends to become more pronounced as the dimension of the problem and the size of the archive increase. So the algorithms are not applicable to the problem whose dimension is extremely large, the real-time application which needs rapidness or an embedded device which lacks memory storage [15].

A new strategy for reducing the number of function evaluations is the estimated comparison method introduced by Takahama and Sakai [16, 17]. In their method, they utilize a rough approximation model, which is an approximation model with low accuracy and without learning process to approximate the function values. The method is different from the surrogate models in that the rough approximated values are only used to estimate the order relation of two points. Function evaluations will be omitted if a point is judged as worse than the target point. The method of estimated comparison was first proposed with a potential model for function approximation [16] and shown to be efficient with much less evaluations compared to DE. The method also works well with other rough approximation models, including kernel average smoother and nearest neighbor smoother [17]. The efficiency of the estimated comparison is influenced by a parameter for error margin: lower value of error margin parameter can reject more trial individuals and omit a larger number of function evaluations, but can also increase the possibility of rejecting a good child; larger value reduces the possibility of rejecting a good child. However, the estimated comparison can reject fewer children and omit a small number of function evaluations. An improved estimated comparison is given by the same authors in [18], in which adaptive control was proposed to produce proper parameters to give more efficiency and stability. The estimated comparison was shown to be also effective for constraint optimization problem [19]. The advantage of DE with rough approximation-based comparison is that rough approximation is not too expensive and does not require the learning process and archive. The rough approximation is constructed on the current search population and only used for judgment, and the optimal solution is searched using the exact function values.

Introducing additional control parameters is required in both DE using kNN predictor and DE with estimated comparison method. DE-kNN and DE-EkNN need five and seven more parameters, respectively, whilst DE with estimated comparison adds one or two parameters. Proper parameters are often sought to ensure efficiency and stability. Good values of parameters are obtained by hand-tuning [14, 17] or in an automatically adaptive way [15, 18]. Obviously, more parameters bring more complexity to the algorithms.

The present paper proposes an alternative way to reduce function evaluation without additional control parameter. The proposed method applies comparison to judge whether or not a new child is worth evaluating, so that the evaluation of the objective function can be skipped. However, the method is different from the estimated comparison method. It uses the readily exact function value of a nearest neighbor in the population of the new child to compare with that of the parent, thus the approximation process is not necessary. The method is named as DE with the nearest neighbor comparison (DE-NNC) and can be viewed as another way of rough approximation-based comparison. The performance of DE-NNC is demonstrated through optimization of some test functions. The simulation results suggest that the proposed scheme is able to achieve good solutions and provide competitive reduction of the function evaluations compared to DE-kNN, DE-EkNN and DE with the estimated comparison method.

The organization of the rest of this paper is as follows. In Sect. 2, a brief introduction of DE is given. In Sect. 3, the main idea of the proposed DE-NNC is described in detail with a concept of possibly useless trial (PUT) and a nearest neighbor comparison method. Experiment results on some test functions are presented in Sect. 4. Finally, the conclusion is given in Sect. 5.

2 Basic of differential evolution

We search for the global optima of the objective function\(f\)(x) over a continuous space \({\mathbf{x}}=\left\{ {x_i }\right\} ,x_i \in \left[ {x_{i,\min } ,x_{i,\max } } \right] , i=1,2,\ldots ,n.\) Classical differential evolution (DE) algorithm invented by Storn and Price [1] for this optimization problem is described in the following.

For each generation \(G\), a population of NP parameter vectors \(\mathrm{\mathbf{x}}_k ( G),k=1,2,\ldots , NP,\) is utilized. The initial population is generated as

where rand[0,1] is a uniformly distributed random real value in the range [0,1]. For each target vector in the population \(\mathbf{x}_{k}(G)\), \(k=1,2,\)..., NP, a perturbed vector y is generated according to

with \(r_{1}\), \(r_{2}\) and \(r_{3}\) being randomly chosen integers and \(1\le r_1 \ne r_2 \ne r_3 \ne k\le NP; \quad F\) a real and constant factor usually chosen in the interval [0, 1], which controls the amplification of the differential variation \(( {\mathrm{\mathbf{x}}_{r_2 } (G)-\mathrm{\mathbf{x}}_{r_3 } (G)}).\)

Crossover is introduced to increase the diversity of the parameter vectors, creating a trial vector z with its elements determined by:

Here, \(r\) is a randomly chosen integer from the interval [1, \(n\)]; Cr is a user-defined crossover constant from [0, 1]. The new vector z is then compared to \(\mathbf{x}_{k}(G)\). If z yields a better objective function value, then z becomes a member of the next generation (\(G+1\)); otherwise, the old value \(\mathbf{x}_{k}(G)\) is retained.

Basically, DE calls for objective function evaluation for every trial vector. It is desirable that trial vectors which might produce no better fitness should not be evaluated. In the following parts, we introduce the concept of possibly useless trial and employ a nearest neighbor comparison method to reduce useless computation.

3 Differential evolution with nearest neighbor comparison (DE-NNC)

The nearest neighbor comparison has the same strategy as the estimated comparison method by Takahama and Sakai [16], [17]. In the estimated comparison, a rough approximation model is used to estimate the order relation of two points. A child point z is judged better than the parent point x \(_{k}\) if the following condition is guaranteed:

where \(\hat{f}\) is the estimated function of\(f\), \(\sigma \) is the error estimation of the approximation model and \(\delta \) is a margin parameter for the approximation error. The parameter \(\delta \ge 0\) controls the margin value for the approximation error. A lower value of \(\delta \) can reject more trial individuals and omit a larger number of function evaluations, but can also increase the possibility of rejecting a good child; a larger value of \(\delta \) reduces the possibility of rejecting good child. However, the estimated comparison can reject fewer children and omit a small number of function evaluations. Thus, the efficiency of the estimated comparison largely depends on the choice of the margin parameter. Different approximation models can be applied for estimated comparison, including the potential model and kernel smoother [17].

The nearest neighbor comparison, on the other hand, does not use function approximation and no additional control parameter is introduced. The details of the method are described in the following.

3.1 Concept of possibly useless trial

-

A trial parameter vector with high possibility of having fitness worse than that of the current target vector is called a possibly useless trial vector (PUT vector).

-

To judge whether a trial vector is a PUT vector, its nearest neighbor vector in the population is utilized to compare with the target vector. This method is named as the nearest neighbor comparison (NNC).

3.2 Nearest neighbor comparison method (NNC)

The step of NNC is give below:

-

In the current population, a vector x \(_{c}(G)\) nearest to the considered trial vector is searched using the distance measure. For this task, Euclidean distance measured as in Eq. (5) is adopted. Other form of distance measurements such as Minkowsky metric can be used:

$$\begin{aligned} \mathrm{d}(\mathrm{\mathbf{x}},\mathrm{\mathbf{y}})=\sqrt{\left( {\sum \limits _{i=1}^n {( {x_i -y_i })^2} }\right) }, \end{aligned}$$(5)where \(\mathrm{d}(\mathbf{x},\mathbf{y})\) is the distance between two \(n\)-dimension vectors x and y.

-

The ready fitness of \(\mathbf{x}_{c}(G)\) is then compared with that of the target vector \(\mathbf{x}_{k}(G)\). If \(f(\mathbf{x}_{c}(G))\) is worse than \(f(\mathbf{x}_{k}(G))\), the trial vector possibly yields no better fitness than \(\mathbf{x}_{k}(G)\), and it is judged as a PUT vector.

-

Function evaluation will not be carried out for for the PUT vector.

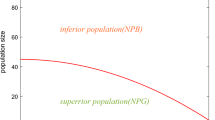

The judgment by NNC is based on the fact that in the vicinity of a point in the search space, the objective function is often observed to behave monotonically, except points which are close to the local (global) optima. This judgment is illustrated in Fig. 1 for a simple minimization problem of a single variable function \(y(x)=f(x)\). Assume an increasing monotonic behavior of the function in the vicinity of the parent point, we observe that:

-

Case 1 (Fig. 1a): trial point is on the right of \(x_{k}\), \(x_{c}\) is worse than \(x_{k}\), i.e., \(y_{c} > y_{k}\). The trial point will be also worse than\(x_{k}\). So it is PUT point.

-

Case 2 (Fig. 1b): trial point is on the left of \(x_{k}\), \(x_{c}\) is better than \(x_{k}\), i.e., \(y_{c} < y_{k}\). The trial point will be better than \(x_{k}\). So it is a good trial point.

Thus, in these cases, the trial point is completely judged by its nearest neighbor. For maximization problems, the comparison is reversed. The extension of the judgment to multi-dimension problems can be guaranteed as long as the monotonic property of the objective function is reserved. Therefore, wrong judgment might be made when a trial point is far away from the target point or the two points are in the vicinity of the local/global optima.

3.3 Algorithm description

The procedure of the proposed algorithm is basically similar to the conventional DE algorithm [1]. The nearest neighbor comparison is introduced to the survivor selection phase. The algorithm is outlined in Fig. 2.

This approach requires no additional control parameters. Moreover, storage space for the archive together with the computation cost for updating and learning is not necessary. The additional computational time for searching the nearest neighbor in the population is normally negligible compared to the overall computational time taken to solve the optimization problem. This is because it is assumed here that the computational time for function evaluations is so large, and the size of the population so small, that the time taken for the detection of the PUT vector will be comparatively small.

4 Experiments

4.1 Test functions

To demonstrate the performance of the proposed DE-NNC, we employed nine well-known benchmark functions, which were also studied in different researches [15–20]. Details of the functions and their search space, where \(n\) is the dimension of the decision vector, are given in Table 1.

4.2 Experimental conditions

In these experiments, the algorithm DE/rand/1/bin (binary crossover and random mutation with one pair of individuals) was adopted as the base algorithm. The parameter settings for optimization are given in Table 2. For tests with the functions \(f_{1}\), \(f_{2}\), \(f_{3}\), \(f_{4}\) and \(f_{5}\) parameter values are adopted from [18]. The terminate condition for the optimization process of these functions is when the objective function value \(<\)10e\(-\)03, or when the number of fitness evaluations, NFE, exceeds 100,000 (assume that we have a computational budget of 100,000 fitness evaluations). For comparison purpose, the experimental conditions for \(f_{6}\), \(f_{7}\), \(f_{8}\) and \(f_{9}\) are exactly the same as those in the study by Park and Lee [15]. The dimension of the search space is 10 and 50 for the first five test functions and 2 for the other four functions which have fixed dimension. For each function and each algorithm, 25 random runs were executed.

4.3 Results and discussion

4.3.1 Test functions \(f_{1}\), \(f_{2}\), \(f_{3}\), \(f_{4}\) and \(f_{5}\)

For these functions, the proposed DE-NCC was tested and compared with the DE using estimated comparison (denoted as DE-EC) and conventional DE. Here, the DE-EC was based on the potential model of approximation with the reference value given by the standard deviation of approximation values [17]. Two values of error margin parameter were considered for DE-EC, which are 0.01 and 0.1.

It was found that with \(n=\)10, all tests stopped before the number of fitness evaluations reached N \(_\mathrm{max}\). Thus, we assessed the performance of the tested algorithms based on two measurement criteria: number of actual function evaluations (NFE) until the fitness value \(<\)10e\(-\)03 and the number of functions’ skip.

Table 3 shows the average results of 25 random runs for each algorithm and each function with \(n=\)10. The column “Func.” shows the optimized function name and “Method” shows the algorithm, where “NCC” means DE with nearest neighbor comparison, “DE” means original DE/rand/1/bin and others mean DE with estimated comparison using fixed error margin parameter values. The column “eval” and “skip” show the total number of fitness evaluations and the total number of function skips, respectively. The column “success-eval” shows the number of successful evaluations where the child is better than the parent, while the column “success-skip” shows the number of success function skips where the skipped child is worse than the parent. Thus, the success rate of evaluation and success rate of skip are given in the corresponding columns “rate”. The column “reduction” shows the percentage of reduction of function evaluations compared with DE.

It was shown that DE-NCC achieved the reduction of function evaluations of 44.51 % for \(f_{1}\), 37.51 % for \(f_{2}\), 21.57 % for \(f_{3}\), 44.31 % for \(f_{4}\) and 21.17 % for \(f_{5}\), compared to DE. These results were better than the results by DE-EC using the margin parameter of 0.1. With the margin parameter of 0.01, DE-EC was superior to DE-NCC in optimization of \(f_{1}\), \(f_{3}\), \(f_{4}\) and \(f_{5}\). However, for \(f_{2}\), DE-EC was not better than DE-NCC because it was sometimes trapped by the local minimum. The success rate of skip by DE-NCC was more than 95 % for all five test functions, which was as good as that obtained by DE-EC. It means that the nearest neighbor comparison can efficiently reduce the number of function evaluations and skip only a relatively small number of good trial points in the search.

In the case of \(n=\) 50, the tests were terminated when the number of fitness evaluations exceeded 100,000. Table 4 shows the optimal results obtained. The columns labeled “average”, “best”, “worst” and “std” show the average value, the best value, the worst value and the standard deviation of the optimal value in 25 runs, respectively.

For the best average value, DE-NCC found better value than DE, except for \(f_{4}\). On the other hand, DE-NCC could attain as good results as DE-EC in most cases. Thus, the nearest neighbor comparison method can find better solution and reduce the number of function evaluations effectively.

Figures 3, 4, 5, 6 and 7 show the logarithmic plots of the best function values over the number of function evaluations for functions \(f_{1}\), \(f_{2}\), \(f_{3}\), \(f_{4}\) and \(f_{5}\), respectively. Note that the ends of graphs in case \(n=\)10 are violated because some runs stopped earlier than other runs when the termination condition was satisfied. In the graphs, thick solid lines and thin solid lines show the optimization process by DE-NNC and DE, respectively. The dashed lines and dotted lines show the optimization process by DE-EC. It can be seen in the figures that the DE-NNC is faster than DE in most cases. Figure 4 clearly shows that for \(f_{2} (n=\) 10) the DE-EC was trapped by the local minimum with the graphs going horizontally. For functions \(f_{4}\) with \(n=\) 50, both DE-NNC and DE-EC were trapped by the local minimum as seen in Fig. 6. This explains why the results of DE-NNC and DE-EC were not better than that of DE.

4.3.2 Test function \(f_{6}\), \(f_{7}\), \(f_{8}\) and \(f_{9}\)

These functions were used to examine the performance of DE-NNC and compare with that of DE-EC, DE-kNN and DE-EkNN. For each function, 30 random runs are executed. For each run, the optimization is terminated when the number of generation exceeds 350.

First, the DE-EC was examined with different values of the margin parameter, \(\delta =\) 0.15, 0.10, 0.05, 0.01. The algorithm DE/rand/1/bin was adopted. The reference value was given by the standard deviation of approximation values. The results are given in Table 5, including the number of function evaluations (NFE) and the function values (FV) optimized. The column “Average” shows the average values of 100 runs. The “Best” and “Worst” columns show the smallest and largest values, respectively. The standard deviations of NFE and FV for 100 runs are given in the column “SD”. It is shown that when the value of the margin parameter decreases, the average number of actual function evaluations also decreases. The largest average number of function calls is 9,631 with \(\delta = 0.15\) and the smallest one is 6,233 corresponding to \(\delta = 0.01\). However, with smaller value of \(\delta \), the optimized function value is worse and its standard deviation is higher, i.e., less accuracy and less stability. On the other hand, higher margin parameters can give better solution and more stability (lower function values and its standard deviations as shown in Table 5). In this study, DE-EC using \(\delta =\) 0.1 is taken for comparison.

Table 6 shows the results of optimization obtained by DE-NCC and DE-EC, including the optimal function value attained and the number of fitness evaluations after 350 iterations. The results for DE-kNN and DE-EkNN taken from the study by Park and Lee [15] are also listed in Table 6.

Considering the function values optimized, DE-NCC was as good as other algorithms, even better for \(f_{9}\). Considering the reduction of function evaluations, DE-NNC and DE-EC were not much different. Nevertheless, both methods required less function calls than that by DE-kNN and DE-EkNN. With smaller standard deviation of evaluations for all functions except \(f_{7}\), DE-NCC was shown to be more stable than DE-EC.

In addition, more computational cost was required by DE-EC, DE-kNN and DE-EkNN, because they do need time for approximation or learning from and updating the archive. This implies that we can get a considerable advantage with DE-NCC.

4.4 Efficiency of DE-NNC

4.4.1 Effect of crossover rate

First, the influence of the crossover rate on the efficiency of the nearest neighbor comparison was examined. Without loss of generality, we tested using function \(f_{1}\) with dimension of decision vector of 10. All parameter settings were the same as in the previous experiment, except that the crossover rate was set with different values, CR \(=\) 0.95, 0.5 and 0.1. For each case, 25 runs were executed. Each run stopped when the fitness value \(<\)10e\(-\)03.

Table 7 shows the optimization results of DE-NCC, DE-EC and DE. The columns labeled “eval”, “skip” and “rate” show the total number of evaluations, the number of evaluation skip and the ratio of evaluation skip, respectively. The column “reduce” shows the ratio of the number of times fitness evaluations is reduced compared with DE.

It is seen that when the crossover rate decreases, the reduction ratio is lower and the skip rate also decreases. It means that the nearest neighbor comparison is less efficient with smaller crossover rate. This is also true for DE with estimated comparison as can be seen in Table 7. The explanation for this is that, with small crossover rate, the parent point is close to the trial point and becomes the nearest neighbor of the trial point. Thus, the algorithm judges the trial point as a good trial.

4.4.2 Effect of additional computation time

The DE-NCC can reduce the number of function evaluations in the tested problems compared with standard DE. However, it requires additional time to search for the nearest neighbor point of the trial point in the population. For each trial point, DE-NCC had to compute NP distance measure given by Eq. 5. Moreover, in the optimization process, DE-NCC sometimes rejected good trial points (\(<\)5 % of the skipped points in our experiment, see Table 3) and took more iterations, thus increasing more time for distance measure. In this study, all test functions were not expensive to evaluate, so that DE-NCC took more time than DE. Table 8 shows the average time consumed as well as the number of iterations required for optimization of functions \(f_{1}\), \(f_{2}\), \(f_{3}\), \(f_{4}\) and \(f_{5}\) with \(n=\) 10.

It is obvious that this method may be less time consuming and more effective than standard DE only when the cost of function evaluation is much higher than the cost of distance measure.

5 Conclusions

A simple method for reducing the number of function evaluations in differential evolution was proposed. In this method, the function evaluation of a solution is omitted when the fitness of its nearest point in the search population is worse than that of the compared point. The method is named as nearest neighbor comparison (DE-NNC). With the same parameters, the proposed DE-NNC was shown to be able to reduce considerable function evaluations compared with standard DE. It was shown for the test problems that the DE-NNC is competitive to the other DE algorithms using the approximation model, which are DE with estimated comparison, DE-kNN and DE-EkNN. The advantage of DE-NNC is that it requires no additional control parameter as well as external archive and maintains a simple structure as standard DE.

It was shown that the crossover rate has large influence on the performance of DE-NCC. Higher values of crossover rates will be more efficient for function evaluation reduction. Moreover, the nearest neighbor comparison is beneficial only for expensive optimization problems, where the cost of the distance measure between two points is much smaller than the cost of function evaluation.

References

Storn, R., Price, K.: Differential Evolution–A Simple and Efficient Adaptive Scheme for Global Optimization Over Continuous Spaces. International Computer Science Institute, Berkeley (1995)

Das, S., Suganthan, P.N.: Differential evolution: a survey of the state-of-the-art. IEEE Trans. Evol. Comput. 15(1), 4–31 (2011)

Jin, Y.: A comprehensive survey of fitness approximation in evolutionary computation. Soft Comput. 9(1), 3–12 (2005)

Khu, S.T., Liu, Y., Savic, D.A.: A fast calibration technique using a hybrid genetic algorithm—neural network approach: application to rainfall-runoff models. In The sixth international conference of hydroinformatics (HIC2004), Singapore (2004)

Liu, Y., Khu, S. T.: Automatic calibration of numerical models using fast optimization by fitness approximation. In 2007 International joint conference on neural networks (IJCNN), Orlando, Florida, pp 1073–1078 (2007)

Yan, S., Minsker, B.S.: A dynamic meta-model approach to genetic algorithm solution of a risk-based groundwater remediation design model. In American Society of Civil Engineers (ASCE) Environmental and Water Resources Institute (EWRI) world water and environmental resources congress 2003 and related symposia, Philadelphia, PA (2003)

Giunta, A.A., Watson, L.T., Koehler, J.: A comparison of approximation modeling techniques: polynomial versus interpolating models. In Proceedings of the 7th IAA/USAF/NASA/ISSMO symposium on multidisciplinary analysis and design, pp 392–404 (1998)

Simpson, T.W., Mauery, T.M., Korte, J.J., Mistree, F.: Comparison of response surface and kriging models for multidisciplinary design optimization. Am. Inst. Aeronaut. Astronaut. 98(7), 1–16 (1998)

Shyy, W., Tucker, P.K., Vaidyanathan, R.: Response surface and neural network techniques for rocket engine injector optimization. J. Propuls. Power 17(2), 391–401 (2001)

Guimaraes, F.G., Wanner, E.F., Campelo, F., Takahashi, R.H., Igarashi, H., Lowther, D.A., Ramirez, J.A.: Local learning and search in memetic algorithms. In: Proceedings of the 2006 IEEE Congress on Evolutionary Computation, Vancouver, BC, Canada, pp. 9841–9848 (2006)

Jin, Y., Sendhoff, B.. Reducing fitness evaluations using clustering techniques and neural network ensembles. In Genetic and Evolutionary Computation-GECCO 2004, Springer, Berlin Heidelberg, pp 688–699 (2004)

Jin, Y., Olhofer, M., Sendhoff, B. (2000). On evolutionary optimization with approximate fitness functions. In GECCO, pp 786–793

Jin, Y., Olhofer, M., Sendhoff, B.: A framework for evolutionary optimization with approximate fitness functions. IEEE Trans Evolution Comput 6(5), 481–494 (2002)

Liu, Y., Sun, F.: A fast differential evolution algorithm using k-Nearest Neighbour predictor. Expert Syst Appl 38(4), 4254–4258 (2011)

Park, S.Y., Lee, J.J.: An efficient differential evolution using speeded-up k-nearest neighbor estimator. Soft Comput. 18(1), 35–49 (2014)

Takahama, T., Sakai, S.: Reducing function evaluations in differential evolution using rough approximation-based comparison. In IEEE congress on evolutionary computation (CEC) 2008, pp 2307–2314 (2008)

Takahama, T., Sakai, S.: A comparative study on kernel smoothers in differential evolution with estimated comparison method for reducing function evaluations. In IEEE congress on evolutionary computation (CEC) 2009, pp 1367–1374 (2009)

Takahama, T., Sakai, S.: Reducing function evaluations using adaptively controlled differential evolution with rough approximation model. In computational intelligence in expensive optimization problems, pp 111–129, Springer, Berlin Heidelberg (2010)

Takahama, T., Sakai, S.: Efficient constrained optimization by the \(\varepsilon \) constrained differential evolution with rough approximation using kernel regression. In IEEE congress on evolutionary computation (CEC) 2013, pp 1334–1341 (2013)

Neri, F., Tirronen, V.: Recent advances in differential evolution: a survey and experimental analysis. Artif. Intel. Rev. 33(1–2), 61–106 (2010)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

This article is published under license to BioMed Central Ltd. Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Pham, H.A. Reduction of function evaluation in differential evolution using nearest neighbor comparison. Vietnam J Comput Sci 2, 121–131 (2015). https://doi.org/10.1007/s40595-014-0037-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40595-014-0037-2