Abstract

Reflective writing is an important educational practice to train reflective thinking. Currently, researchers must manually analyze these writings, limiting practice and research because the analysis is time and resource consuming. This study evaluates whether machine learning can be used to automate this manual analysis. The study investigates eight categories that are often used in models to assess reflective writing, and the evaluation is based on 76 student essays (5080 sentences) that are largely from third- and second-year health, business, and engineering students. To test the automated analysis of reflection in writings, machine learning models were built based on a random sample of 80% of the sentences. These models were then tested on the remaining 20% of the sentences. Overall, the standardized evaluation shows that five out of eight categories can be detected automatically with substantial or almost perfect reliability, while the other three categories can be detected with moderate reliability (Cohen’s κ ranges between .53 and .85). The accuracies of the automated analysis were on average 10% lower than the accuracies of the manual analysis. These findings enable reflection analytics that is immediate and scalable.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Fostering reflective thinking (Boud et al. 1985; Dewey 1933; Mezirow 1991; Schön 1983) is an important educational practice recognized on national and international levels. The worldwide operating Organisation for Economic Co-operation and Development (OECD) has endorsed reflective thinking as important for a “successful life and well-functioning society” (Rychen and Salganik 2005). Students worldwide are assessed regarding their reflection and evaluation skills via the Programme for International Student Assessment (PISA) test on reading literacy (OECD 2013). Examples for the importance of reflective thinking can be found on a national level, such as the quality code of the UK Quality Assurance Agency for Higher Education (QAA) that recommends all higher education providers establish a structured and supported process for learners to reflect upon their personal development (QAA 2012) or the U.S. Department of Education that puts reflective thinking on the national agenda as it recommends the creation of learning opportunities to promote reflection (Means et al. 2010). Students are frequently encouraged to maintain reflective journals or to engage in writing activities which aid their reflection on experiences.

Despite the importance of reflection for education, there is little research on artificial intelligence techniques to automate the analysis of writings regarding reflective thinking. Currently, the common educational practice to analyze and assess reflective writings is a manual process. Very often, they are analyzed by following the principles of the content analysis method (Krippendorff 2012), which is a manual, labor-intensive process (Birney 2012; Ip et al. 2012; Mena-Marcos et al. 2013; Poldner et al. 2014; Poom-Valickis and Mathews 2013; Prilla and Renner 2014; Wald et al. 2012). The typical content coding task of reflective writings entails the manual labeling of text units according to categories of a reflective writing model. The labeled text units are used to calculate scores that indicate the quality of reflection found in the writing (for example, see Clarkeburn and Kettula 2011; Mena-Marcos et al. 2013; Plack and Greenberg 2005; Poldner et al. 2014). This analysis is currently manual, as there is a lack of knowledge about automated methods specific to reflective writing.

There has been great success with the extensive research into automated text analysis methods for educational contexts (Dringus and Ellis 2005; Lin et al. 2009; Rosé et al. 2008). The areas of automatic essay assessment (Attali and Burstein 2006; Dikli 2006; Hearst 2000; Jordan 2014; Landauer 2003; Page 1968; Page and Paulus 1968; Rivers et al. 2014; Shermis 2014; Shermis and Burstein 2003; Wild et al. 2005; Wilson and Czik 2016), discourse analysis (Dascalu 2014; Dessus et al. 2009; Ferguson and Buckingham Shum 2011), and general natural language processing techniques that are prominently applied to the context of education (McCarthy and Boonthum-Denecke 2012).

Despite these research successes showing the potential of automated text analysis for education, there has been not much research regarding the automated text analytics of reflection. Furthermore, there has been some research using dictionary and rule-based approaches (see the following review section), but in general, not much is known about the extent to which automated methods can be used to reliably analyze texts regarding categories of reflective writing models.

This research replicates the aspect of the manual content analysis that assigns labels to text segments with automated means. This study is the first one to be based on a comprehensive model of reflective writing with the following eight categories that has been derived from 24 models of reflective writing: reflection (depth of reflection), experience, feelings, personal belief, awareness of difficulties, perspective, lessons learned, and future intention (breadth of reflection). The aim is to show that automated methods can be used to reliably draw inferences about the presence (and absence) of reflection in texts. This research further investigates the potential of supervised machine learning algorithms to reliably annotate text segments of writings according to categories of a reflective writing model.

Researching automated methods to analyze reflection is important because the manual analysis poses constraints to teaching and research and may hamper deeply personal reflections. The manual analysis of reflective writing is a time-consuming task, adding a cost that constrains the frequency and intensity of its pedagogical use. This cost also limits large-scale research explorations and research designs investigating immediacy of feedback. Automated methods do not have these constraints. Beyond saving time, automated methods may have benefits especially for learning to write reflectively because of the often sensitive nature of reflective writings. Students may feel a barrier to share their writings with a tutor. They may also feel more comfortable with an automated reflective writing aid to self-disclose those private thoughts relevant for their reflection. This assumption is backed by research that indicates self-disclosure is easier by using a computer (Richman et al. 1999).

The major highlights of this study are as follows:

-

a)

The theoretical model of this study is based on categories that are common to many models that have been used to manually analyze reflective writing. Compared to previous research, this research tested machine learning on a comprehensive model and not on a particular model of reflection.

-

b)

The empirical tests of the theoretical model show that the model categories can be annotated in a reliable way and the model components showed empirical validity. In other words, compared to previous research, this research shows that the model is reliable and valid.

-

c)

The paper provides a comprehensive literature review of automated methods to analyze reflection in texts.

-

d)

This research focuses on machine-learning-based approaches.

-

e)

The evaluation was conducted in a standardized way for all data sets.

-

f)

The evaluation shows that the theoretically derived model categories can be automatically analyzed in a reliable way using machine learning.

-

g)

The evaluation suggests that there is a relationship between the manual and machine coding performance.

-

h)

The inspection of the most important features of the machine learning algorithms confirmed several important features but also surfaced new features.

Model to Analyze Reflection in Writings

There is a debate about what exactly constitutes reflection [for example, see the definitions of Dewey 1933, Schön 1987, Boyd and Fales 1983, Boud et al. 1985, Mezirow 1991, and Moon 1999, 2006], but as of now, there is yet not a widely agreed definition of reflection. However, as discussed later in this work, some commonalities exist between the various theories of reflection. For the purpose of this paper, we define reflection as follows: Reflective thinking is a conscious, goal-oriented cognitive process that seeks to learn solutions to personally important, often weakly defined, ambiguous problems of past and present experience and anticipated future situations that often involve thinking about the important elements of the experience, a critical analysis of the problem including the analysis of the thinkers’ own personal perspective and feelings as well as the perspective of others in order to determine lessons-learned or future plans.

All people do reflect on experience, but their reflective thinking skills may not be fully developed (Mann et al. 2007). Research indicates that reflective thinking can be taught and learned (Mann et al. 2007). An important educational practice to foster reflective thinking is the practice of reflective writing (Moon 2006; Thorpe 2004). Many higher education disciplines make use of reflective writing as part of their educational programs, such as teachers’ pre-service training, early childhood education, business, physical therapy, literature, psychology (Dyment and O’Connell 2010), health profession (Mann et al. 2007), pharmacy (Wallman et al. 2008), language learning (Chang and Lin 2014; Lamy and Goodfellow 1999), and writing literacy (Yang 2010). Through writing, students capture their reflective thoughts to better understand their own experience.

Reflective writings are different from the types of writings students normally perform, such as essays, literature surveys, or reports. Although reflective thinking may have gone into these writings, the reflective thought process is usually not expressed or developed in these writings as this is not their primary purpose. These writings are different as they are centered on the personal thought process and how to learn from individual experiences. The writings are often very personal and can contain references to feelings. Also, authors are often self-critical. They may consider the perspectives of people who are important in their context or draw conclusions from contexts that are valued by the author. If the writing includes lessons learned or plans for the future, they are often very specific to the author’s context. It is this constant challenging of routines to improve practice, making reflective thinking such an important asset. Thus, reflective writing is different from other types of academic writing. Students may not be familiar with it and therefore may find it difficult to engage with it, as the “rules” are different from what they have previously experienced. This lack of experience results in students not fully developing their reflective writing. For example, students often have problems writing in a descriptive/non-reflective way as they are used to describe events without deeply engaging their own thought process (Mann et al. 2007). An analysis of the writing is the first step toward improving reflective writing and thinking.

Common to all manual methods used to analyze writings regarding reflection is that they are based on a model (sometimes called a framework, assessment rubric, or coding scheme). This section outlines a comprehensive model of reflective writing used in this work as a realistically complex test case to automatically detect reflection.

For several decades, researchers have developed models to analyze reflective writings (early examples are Gore and Zeichner 1991; Hatton and Smith 1995; Richardson and Maltby 1995; Ross 1989; Sparks-Langer et al. 1990; Sparks-Langer and Colto 1991; Tsangaridou and O’Sullivan 1994). Reflective writing models often exhibit a quality of depth, of breadth, or both (Moon 2004). Many models that analyze the depth of reflection in writings define a scale with several levels usually with the lowest level characterized as showing no sign of reflection, i.e., a descriptive writing, while a writing on the highest level shows evidence of a deeply reflective writing. The quality of depth implies that reflection is hierarchical, with the highest level being the most desired outcome. Examples of depth models are the model of Wong et al. (1995) with the non-reflector, reflector, and critical reflector levels or the model of Lai and Calandra (2010) having the routine (non-reflective) level at the lowest level and the transformative (highly reflective) level at the highest level.

Breadth models are descriptive because they do not value one category over another one, as the depth models imply. For example, Wong et al. (1995) described their model to analyze reflective writings with categories, such as attending to feelings, validation, and outcome of reflection. Prilla and Renner (2014) analyzed reflection according to the categories such as describing an experience, mentioning and describing emotions, and challenging or supporting assumptions. Often, the unit of analysis (Weber 1990) of a depth model is the whole text, while the unit of analysis of breadth models are smaller parts of the texts. Several models describe a mapping from the breadth categories to the levels of reflection connecting both qualities (see Ullmann 2015a).

The breadth and depth dimensions for the model of reflection detection that are used for this research have been derived from the model descriptions of the following 24 models (Ullmann 2015a): the models of Sparks-Langer et al. (1990), Wong et al. (1995), McCollum (1997), Kember et al. (1999), Fund et al. (2002), Hamann (2002), Pee et al. (2002), Williams et al. (2002), Boenink et al. (2004), O’Connell and Dyment (2004), Plack and Greenberg (2005), Ballard (2006), Mansvelder-Longayroux (2006, 2007), Plack et al. (2007), Kember et al. (2008), Wallman et al. (2008), Chamoso and Cáceres (2009), Lai and Calandra (2010), Fischer et al. (2011), Birney (2012), Wald et al. (2012), Mena-Marcos et al. (2013), Poldner et al. (2014), and Prilla and Renner (2014). The reflective writing models described in those papers have been evaluated with the method of the manual content analysis, and they all reported inter-rater reliability scores, which gauge the degree to which coders can classify text units according to reflective writing model components. The reason for this selection is that a) content analysis is a common and principled approach to analyze and assess reflective writings and b) the information about reliability allows researchers to understand how well human raters can differentiate between the categories of the model, and it increases the confidence that the research can be replicated. All 24 models satisfy these criteria.

The synthesis of these models led to a model of common constituents, containing both qualities. We will refer to this model as the model for reflection detection. In total, the model consists of eight categories:

-

1.

The depth dimension reflection was modeled as the binary category reflective vs. non-reflective. This is the common denominator of the depth models (Ullmann 2015a).

A synthesis of the breadth categories of the models derived seven categories (Ullmann 2015a). These are categories that are common components in many reflective writing models, and these seven breadth dimensions can be summarized as follows (see, Ullmann 2015a):

-

2.

Description of an experience: Reflective writing models often contain a category that captured an experience of a writer. Often, this experience is the reason for the writer to start a reflective writing. The description of the experience provides the context for the reflection (Ullmann 2015a).

-

3.

Feelings: Many models contain a component that looked for expressions of emotions expressed in the writing. Feelings can be a key element of a reflective writing, as feelings can be the reason to start thinking reflectively (e.g., a feeling of puzzlement, uncertainty, surprise), and they can be the subject of the writing, reflecting the influence of feelings on our thought process (Ullmann 2015a).

-

4.

Personal belief: Many models have searched for evidence of expressing personal beliefs as a component of reflective writing. A reflective thought is often of a personal nature, and a reflection is often about one’s own perspectives and assumptions (Ullmann 2015a).

-

5.

Awareness of difficulties – critical stance: All models contain a category that can be summarized as having an awareness of difficulties and problems and more generally a critical stance towards an experience or situation (Ullmann 2015a).

-

6.

Perspective: Many models have described the importance of considering other perspectives. In the reflective writing, these models look for evidence of the description of the perspective of someone else, consideration of theory as an external perspective, or the social, historical, or ethical context (Ullmann 2015a).

Outcomes – Lessons learned and future intentions: Many of the models include a component that captures the outcomes of reflective writings. The models have exhibited two outcome dimensions:

-

7.

Lessons learned – retrospective outcomes: These are outcomes that look back on what was learned. This could be a better understanding of the experience, new knowledge, behavior changes, changes to perception, and better self-awareness (Ullmann 2015a).

-

8.

Future intentions – prospective outcomes: These are future potential outcomes that are yet not realized. Examples are intentions to do something or plans for the future (Ullmann 2015a).

We will refer to these categories throughout the text as to Reflection, Experience, Feeling, Belief, Difficulty, Perspective, Learning, and Intention. Overall, a synthesis of the categories of existing models derived common categories which formed the categories of the model for reflection detection. Each category of this model for reflection detection serves as a test case for the automated detection of reflection. The approach taken here is to evaluate the automated detection of reflection on a set of categories that are common to many models used in the context of the manual content analysis of reflective writing. An alternative approach is to test the automatability with an existing model. This, however, would have narrowed the scope under investigation to the characteristics of this specific model. The current approach has the benefit that the evaluation is about a model that represents cases that are commonly analyzed.

Automated Methods to Analyze Reflection

The review of the literature shows that the landscape of methods that have been used to automatically analyze reflective writing can be largely classified according to three approaches, namely the dictionary-based, the rule-based, and the machine-learning-based approaches. The main focus of this literature review is about the machine-learning-based approach. The other two approaches differ from the machine-learning-based approach insofar as they are expert-driven, meaning that experts explicitly define patterns to detect reflection. In the machine-learning-based approach, the algorithms define these patterns. The following literature review of methods to analyze text regarding the evidence of reflective thinking and related constructs extends the review of Ullmann (2015a).

Machine-Learning-Based Approach

There is a relatively small body of research that is concerned with machine-learning-based approaches to detect reflection in writings. These are approaches that use machine learning algorithms, especially supervised machine-learning algorithms for classification (Gupta and Lehal 2009; Sebastiani 2002) to classify text according to categories of reflective writing models. Compared to the rule-based approach that relies on the manual construction of rules and patterns, the machine-learning-based approach learns these patterns from example data automatically.

Research in this area is still fragmented. Currently, researchers investigate specific theoretical models that cover only some of the aspects of reflection. Furthermore, these models have not been tested regarding their validity and also often not regarding their reliability. These criteria should not be neglected as they are important to understand the quality of the model. To understand whether we can use machine learning for the analysis of writings regarding of reflection, we need to test a model that is valid and reliable and covers the most important characteristics of reflection.

Yusuff (2011) trained several machine learning models on a variety of text sources for his bachelor thesis. The texts of these sources were declared by the author as either reflective or not. Other dimensions of reflection have not been considered. The validity of this distinction was not determined, making it difficult to evaluate whether the texts have been reflective, and the inter-rater reliability of the coding was not reported, making it difficult to assess whether this research can be replicated by other researchers. Ullmann (2015a) reported the successful application of machine learning for the detection of reflection. As this paper is a substantial extension of that work, it is not further discussed. Cheng (2017) developed machine learning models to classify posts of an e-portfolio system according to categories of their self-developed A-S-E-R model in the context of L2 learning. The model proposes four elements of reflective writing, namely “experience”, “external influence”, “strategy application - analyze the effectiveness of language learning strategy”, and “analysis, reformulation and future application”. Each element consists of four levels capturing proficiency. Their model covers four out of the seven breadth dimensions of the model used in here, namely Experience (which is related to their category “experience”), Perspective (“external influence”), Difficulties (as part of the category “strategy application”) and future Intentions (part of the higher levels of the category “analysis, reformulation and future application”). They did not cover the categories Beliefs and Feelings and they did not explicitly specify the depth dimension of reflection. Their model has been derived from theory and adapted to the context of L2 language learning, but an empirical evaluation of the validity of the model is missing. Furthermore, the papers did not report inter-rater reliability. The model of Liu et al. (2018) has been inductively derived from an initial content analysis by experts. The model consists of two foci called “technical” and “personalistic beliefs” with each having three levels, namely “description”, “analysis”, and “critique”. Their model covers three out of the seven breadth model categories of this paper, namely the categories Experience (which is related to their category “description”), Belief (which is part of the “personalistic” dimension), and Difficulties (which is part of the categories “analysis” and “critique”). Their model does not explicitly contain the depth of reflection dimension, and the model has not been empirically validated, but the authors report high inter-rater reliability between two coders. The model used in the research of Kovanović et al. (2018) covers two of the seven breadth dimensions of this paper’s model, namely Experience, which in their model consists of the two categories “observation of own behavior” and “motive or effect of own behavior”, and the category Intention, which is similar to the their category “indicating a goal of own behavior”. Their model does not consider the depth dimension of reflection. They do not report any empirical evidence of the validity of their model, but they achieved high inter-rater reliability between two coders.

All models outlined have explicit links to the theory of reflective thinking. While not directly connected with reflection, there has been research about related concepts that use machine learning, such as cognitive presence (Corich 2011; Kovanovic et al. 2016; McKlin 2004) and argumentative knowledge construction (Dönmez et al. 2005; Rosé et al. 2008). As this research is only related, it is less relevant and is not further discussed.

The literature review shows that existing research is constrained to specific models of reflection. These theoretical models are less comprehensive compared to the theoretical model used in this paper and they miss important facets of reflection. Therefore, they do not allow us to assess whether all important dimensions of reflection can be assessed automatically with machine learning methods. Furthermore, the review shows that none of the models show any evidence for empirical validity. While many of the mentioned categories can be traced back to the theory or reflective thinking and thus have face validity, evidence of empirical validity would have strengthened the case for these models to actually measure reflective thinking in writings and not something else. Apart from two papers, the papers did not report inter-rater reliability values, making it difficult to assess whether their research can be replicated.

The research in this paper goes beyond the current state-of-the-art. Compared to previous research, this research is a) based on a general model derived from empirical research and b) includes empirical evidence about the reliability and validity of the model in the current context. Regarding the first point, the research model of this research has been generalized by many individual models. The model categories therefore stand for a set of categories that are commonly used to analyze reflective writing. The evaluation of the machine learning algorithms on these model categories therefore provides more generalized evidence about how well reflection can be analyzed with automated means. This approach is different from research that uses a particular model, such as the self-developed models by Liu et al. (2017) or the context-specific model of Cheng (2017; Cheng and Chau 2013) or the model of Kovanović et al. (2018). With regard to the second point, this research outlines both the performance of the machine learning algorithms as well as the performance of the manual coders, providing insights into the difficulty of the content analysis task, and provides empirical evidence about the validity of the model. Empirical evidence of validity for the model has not been reported in any of the previous works. Consequently, the test of the machine learning algorithms is based on a model that is a realistic test case of reflective writing models and is based on a model that is reliable and valid.

Besides model differences, current research into this area uses various machine learning algorithms ranging from latent semantic analysis (Cheng 2017) to Naïve Bayes and Support Vector Machines (Liu et al. 2018) and Random Forests (Kovanović et al. 2018). This variety suggests that all these algorithms are good candidate algorithms for the detection of reflection. Liu et al. observed that Naïve Bayes outperformed the SVM algorithms, suggesting that certain algorithms perform better on the same data set. We do not know which of the algorithms will perform best until we have tested several of them on the same data set.

Researchers also used different measures and measurement techniques to gauge the performance of the algorithms. They used the Cohen’s κ (Cheng 2017; Kovanović et al. 2018) and the F1-score (Liu et al. 2018) measures. Cohen’s κ is often used in the educational area, as it is also a frequent measure of the inter-rater reliability between human coders. Using the same statistic for both, the human performance and the machine performance allows for a better comparison. The F1-score is the harmonic mean of the statistics Precision and Recall. This is a measure often used in the area of Information Retrieval. However, both measures are not compatible, making their comparison difficult. Research differs with regard to the used performance statistic and in the methods to measure it, such as by using different splits of the test and training data set, various forms of cross-validation, and different numbers of class labels. All these factors make comparing research challenging.

We try to summarize the performance of the machine learning algorithms based on a mapping of the individual model categories to the categories of this paper’s model for reflection detection. However, due to the outlined caveats, the outcome of the comparison may not be very informative. The performance of the category Experience has a reported Cohen’s κ of 0.7 (Cheng 2017) and F1-scores of 0.82 and 0.85 (Liu et al. 2018). The category “personalistic” dimension of Liu et al. (2018), which is similar to the category Belief, had F1-scores ranging from 0.78 to 0.84. The categories “application of strategies” with a κ of 0.73 in the work of Cheng (2017) and “analysis” with an F1-score of 0.80 to 0.88 and “critique” with a score of 0.79 to 0.84 in the work of Liu et al. (2018) are similar to the category Difficulties. The category Intention can be found in the category “analysis, reformulation and future application” (κ of 0.6) of Cheng (2017). Kovanović et al. (2018) reported a Cohen’s κ of 0.51 over all categories. The category with the highest error rate was the “goal” category (which has been mapped to the category Intention), followed by the categories “observation” and “motive” (Experience). Summarizing all this information, the categories Experience and Difficulties achieved the highest performance, followed by Beliefs and with some distance comes Intention at the last position. However, as the methods and measures varied between each paper and because of the limited amount of available research, the value of this ranking is limited.

Dictionary-Based Approach

In the context of automated methods, a dictionary often means a collection of words that are associated with a category. Usually, one or several experts define a set of words that represents a concept. A computer program can make use of these dictionaries to find occurrences of dictionary words in texts. The aim of dictionaries to analyze text is to convert text into numbers by counting the frequencies of dictionary words. This allows to quantify text and to use statistical methods to test the data. The focus of dictionary-based research is less about creating high-performing classifiers and most researchers do not consider this aspect, although there are exceptions (Ullmann 2017). Therefore, we cannot report about the performance for most of the paper cited in the following paragraphs. However, as this approach is relevant for the automated detection of reflection, this section provides an overview to show how widespread this approach has become. Benefits are that dictionaries can be set up quicker than rule-based or machine-learning-based approaches to test ideas of automatization. They can be important for rule-based approaches, which often use dictionaries in combination with rules, and they may even inform the creation of feature for machine learning.

One of the first examples of this approach was the General Inquirer (Stone and Hunt 1963). Other examples of this approach were the research in the scope of the Textbank System (Mergenthaler and Kächele 1991) and the Linguistic Inquiry and Word Count (LIWC) tool (Chung and Pennebaker 2012; Pennebaker and Francis 1996).

The following examples highlight the application of this approach in the context of the detection of reflection in texts. The dictionary-based approach has been researched in the context of multiple disciplines, such as in education (Bruno et al. 2011; Chang et al. 2012; Chang and Chou 2011; Gašević et al. 2014; Houston 2016; Kann and Högfeldt 2016; Lin et al. 2016; Ullmann 2011, 2015b, 2017; Ullmann et al. 2012, 2013), psychology (Fonagy et al. 1998; Mergenthaler 1996), and linguisticsFootnote 1 (Birney 2012; Forbes 2011; Luk 2008; Olshtain and Kupferberg 1998; Reidsema and Mort 2009; Ryan 2011, 2012, 2014; Wharton 2012). Most of the research is based on English writings, but there is also research about Chinese (Chang et al. 2012; Chang and Chou 2011; Lin et al. 2016) and Swedish dictionaries (Kann and Högfeldt 2016). Consequently, there is an interdisciplinary interest in the analysis of reflection with dictionaries spanning several languages. As mentioned, there is a lack of research into the classification performance of these dictionaries. Ullmann (2017), however, devised a data-driven method based on large data sets to generate keywords that showed promising performance above the baseline accuracy for seven out of eight categories of the reflection detection model. The average Cohen’s κ over all eight categories was 0.45. The highest value was 0.65 for Experience and the lowest value was 0.28 for Perspective. The Cohen’s κ for Reflection was 0.59. These performances were achieved with a small number of words for each category. These words seemed to be a useful start to populate reflection specific dictionaries.

As with the rule-based approach, experts mainly drive the creation of the dictionaries (expert-driven approach). Often, a single researcher or a group of researchers determines which dictionaries are relevant for reflection and which words should belong to each dictionary based on the study of text examples. The machine-learning-based approach on the other side is data-driven. The algorithms learn from data which words are important and how these words must be connected to classify texts.

Rule-Based Approach

While dictionary-based approaches rely mostly on pattern matching the dictionary entries with the text, rule-based systems provide mechanisms that extend the capability of making inferences from texts. The core of a rule-based system is a set of rules to express knowledge about the domain. The logic expressed in these rules allows for formal reasoning over the knowledge base of rules. Thus, with the inference machine of a knowledge-based system, rules can be chained to deduce facts based on multiple conditions. This technique extends the expressiveness of the automated detector compared to the dictionary-based approach.

Compared to the dictionary-based approach, the rule-based approach is more recent in this domain. Research using this approach often combines natural language processing, dictionaries, and rules to create a text analysis pipeline that captures patterns of reflective writing, as defined by the expert modeler. Buckingham Shum et al. (2017; 2016) customized the Xerox Incremental Parser (Ait-Mokhtar et al. 2002), a general natural language parser, with custom generated dictionaries and rules in order to detect several categories of reflection. The categories of the model have been co-designed together with a practitioner (Buckingham Shum et al. 2017). Although the model described in the paper had several facets, the evaluation only tested whether the rule-based system can distinguish between reflective and unreflective sentences. This distinction may be similar to the depth category Reflection of the reflection detection model in this paper. Their best test result (second test) had a Cohen’s κ of 0.43 (based on own calculation of the values presented in the confusion matrix of table 3 in Buckingham Shum et al. 2017), which was achieved after rule alterations based on the experiences with the first experiment and a rerun of the experiment on the same data set. The paper did not provide evidence for the validity of their concept nor did it report inter-rater reliability. Gibson et al. (2016) showed a rule-based system to analyze writings according to metacognition, which is a related concept of reflection. Their model defined four overarching categories with sub-categories. For each category, they created rules to find evidence of the categories in text using a combination of part-of-speech and dictionary words. In their evaluation, they combined all categories and tested whether the metacognitive activity was strong or weak. Their best test result (for strong authors) achieved a Cohen’s κ of 0.48 (based on own calculation of the values presented in table 9 in Gibson et al. 2016). The paper did not report indicators of validity and reliability.

In the context of the analysis of writings according to facets of reflection, Ullmann et al. (2012) combined the dictionary-based approach with the rule-based approach. An inference machine reasoned over a set of rules that chained low-level rules with higher-level rules to derive facts that indicate reflection in writing. The descriptive results indicated a positive association between the predictions of the rule-based algorithm and the manual ratings of blog posts according to reflective categories, such as “description of an experience”, “personal experience”, “critical analysis”, “taking into account other perspectives”, “outcome”, “what next”, and “reflection”. The paper did not report any performance measures. The paper also did not report any evidence of the empirical validity of the theoretical model, but instead outlined the theoretical roots of each category, supporting the face validity of the model. The paper did not report the inter-rater reliability of the blog post coders.

Summary

Most research regarding automated methods to analyze writing about reflective thinking use the dictionary-based approach. There are other studies that use the rule-based systems and machine learning approaches. These three approaches have different capabilities in modeling text. The dictionary-based approach models dictionaries as word lists. Each word of this list belongs to the category expressed by the dictionary. Using this method of modeling text may result in lower accuracy, as, for example, it does not consider the polysemy of words. Words can have multiple meanings and therefore might express another concept than foreseen by the dictionary. In contrast, a rule-based approach has more capabilities to model text because it can use rules to disambiguate the meaning of words based on context information and provide better results. Machine learning has been highly successful to classify text (Hotho et al. 2005). It therefore appears as a promising approach to automatically analyze reflection in writing.

Research Questions

This study investigates whether machine learning algorithms can be used to reliably detect reflection in texts. The literature review showed that models that have been used for the manual analysis of writings according to reflection have two types of qualities, quality of depth and quality of breadth. The following two research questions consider both qualities:

-

1.

Can machine learning reliably distinguish between reflective and descriptive (non-reflective) sentences?

-

2.

Can machine learning reliably distinguish sentences per the presence or absence of categories that are common in reflective writings? The categories are the following: description of an experience, feelings, personal beliefs, awareness of difficulties, perspective, lessons learned and future intentions.

The following experiments have been constructed to answer these research questions. The experiments use a standardized process to evaluate the potential of machine learning to detect reflection in texts. This process ensures that all categories are assessed in the same way and that the results of the experiment are comparable.

Material and Methods

To generate the data set for each category of the reflection detection model, the researcher devised a standardized processFootnote 2 that was equally applied to all data sets. Based on a text collection of student writings, the texts were unitized, annotated, and split into eight times three data sets (for each of the eight categories of the reflection detection model exist three data set versions representing the three majority vote conditions outlined in the result section). Then, each data set was pre-processed and split into training and test data sets. These data sets served as inputs for the machine learning algorithms.

Text Collection

The text collection consisted of 77 student writings. Among them, 67 student writings came from the British Academic Written English (BAWE)Footnote 3 corpus (Gardner and Nesi 2013; Nesi and Gardner 2012). As most of the research about reflective writings was conducted in the context of academic writing, the BAWE corpus with its similar background seemed to be an appropriate choice. The BAWE corpus contains student essays, and some of them are responses to several reflective writing tasks (Nesi 2007). Relevance sampling (Krippendorff 2012) was used to retrieve 67 texts from the BAWE text collection and ten writings came from examples cited in the research literature. Relevance sampling was chosen over random sampling, as reflective texts are relatively rare (Ullmann et al. 2013); therefore, many texts without relevance would have entered the text selection if random sampling would have been applied. In total, 46 students wrote the 67 texts. Most of the essays were written by students of the health (20 students), business (9), engineering (9), tourism management (6), and linguistic (6) disciplines. They were mostly written by third- (23) and second-year students (21), followed by first-year (12) and postgraduate students (10), and for one text, the student level was unknown. Among the texts, 40 texts were awarded merit, 24 texts were awarded distinction, and for three texts, the grade was not known. In addition to these 67 texts, the text collection was extended by ten student writings that were cited in the literature of reflective writing (Korthagen and Vasalos 2005; Moon 2004, 2006; Wald et al. 2012) to add additional examples of reflective writings to the text collection.

Unitizing Text Collection

The related literature on the manual content analysis of reflective writings suggested that smaller units opposed to whole texts are more suitable to research the breadth quality of reflective writings (Bell et al. 2011; Fund et al. 2002; Hamann 2002; Plack and Greenberg 2005; Poldner et al. 2014; Wong et al. 1995). Therefore, the decision was made to choose single sentences as the unit of analysis. An added benefit of using sentences as the unit of analysis is that software can be used to automatically split texts into sentence units. The unit of analysis of sentences was also chosen for the depth category, although the levels of reflection are often assessed while considering the whole text (Fischer et al. 2011; Ip et al. 2012; Kember et al. 2008; Lai and Calandra 2010; O’Connell and Dyment 2004; Sumsion and Fleet 1996; Wald et al. 2012; Williams et al. 2002; Wong et al. 1995). The reason for this decision lies in the standardization of the experiment. Using the same unit of analysis for all categories simplifies their comparisons. The use of a smaller unit has the additional benefit that they can be aggregated on the level of the whole text. However, one of the drawbacks of using a sentence-based unit is that some of the meanings that stem from the wider context in which the sentence is embedded are not captured. Another drawback is that a sentence can consist of several meaningful parts and thus a smaller unit may be more useful.

A sentence splitter divided all texts of the collection (approximately 130,000 words) into sentences, and duplicated sentences and very short character strings were removed. Lastly, some of the sentences were used as qualifier questions for the coders, leaving a total of 5080 sentences (116,633 words).

Annotation

In the annotation step, all sentences were annotated with the categories of the reflection detection model. For this research, a crowd-worker platformFootnote 4 distributed the annotation task to thousands of workers, who received payment for their work. Their task was to rate sentences per eight questions. Each question represents an operationalization of one of the categories of the reflection detection model (see model section above). In other words, the operationalizations are indicators of the categories of the reflection detection model. For example, the operationalization “The writer describes an experience he or she had in the past” is indicative for the category description of an experience (see Table 1). Another example is the outcome category, which was covered with the two indicators “The writer has learned something”, and “The writer intends to do something”. The first indicator captures past outcomes by looking retrospectively back on outcomes, while the second indicator considers any future intent described by the writer. Table 1 contains the mapping between the categories of the model for reflection detection and the indicator questions that were used to capture the category. The words in parentheses are used as references to these indicators in the following text.

Table 2 shows several sentences from the text collection and their category label. These examples have been chosen from sentences of the data sets that have been agreed by all coders to represent the presence of a category. We chose two examples for each category. A sentence can have several labels.

Pre-Processing

The same setup for pre-processing the data and to train and test the machine learning algorithms was applied to all data sets. The pre-processing step transformed the labeled data sets into data sets suitable for the machine learning algorithms. Important are the steps of feature construction and feature selection.Footnote 5 The choice made about features was to only use textual features in form of unigrams represented as a set of binary values (Sebastiani 2002). Although other representations are possible (Blake 2011; Brank et al. 2011), this research used a simple unigram set representation to estimate performance without using more complex features. The rationale was that if the machine learning models with simple features already show enough signal, then we can expect better results with more sophisticated features. The performance shown in the evaluation therefore represents a lower baseline that can be extended with feature engineering.

The extraction of the features from the texts produced many features. Feature selection aims at reducing the number of features to remove less informative features or features that introduce noise (Manning et al. 2008). There are many feature selection methods (Forman 2003; Mladenic 2011). In this study, we removed features such as punctuation, numbers, and spurious white space and all features that occurred fewer than ten times in the whole of the data set. The R text mining package tm (Feinerer and Hornik 2014; Feinerer et al. 2008) pre-processed the texts. After pre-processing, all data sets had the form of labeled feature vectors.

Training and Test Set

After the pre-processing of the data sets, they had the format required by the machine learning algorithms. We randomly divided each data set into a larger training data set (80%) that was used to train the machine learning algorithms and a smaller test data set (20%), which was used to assess the performance of the machine learning models derived from the training set. The test data set consists of novel/unseen instances, and this is a common best practice setup (Sebastiani 2002).

Class imbalance can be a problem for machine learning algorithms (Chawla et al. 2004). Several techniques exist to counterbalance this problem (Chawla 2005; Chawla et al. 2004; Menardi and Torelli 2012). Here, we use random oversampling on the training data as it has shown positive effects for data sets with class imbalance (Batista et al. 2004; Japkowicz and Stephen 2002). Random oversampling is a technique with which the minority class is randomly repeated until it matches the number of instances of the majority class. The test data set remained with the original class distribution to retrieve a realistic test performance.

We determined the best candidate model from the training data set with k-fold cross validation (k = 10) as the resampling technique (Kim 2009; Molinaro et al. 2005). Resampling was also used to determine the tuning parameters of the machine learning algorithms.

Machine Learning Algorithms

Aggarwal and Zhai (2012) identified Support Vector Machines (SVM), Neural Networks, and Naïve Bayes classifiers as key text classification methods. Fernández-Delgado et al. (2014) found that Random Forests showed good performance on several data sets. They are therefore good-candidate machine learning algorithms to be evaluated on the problem of the automated detection of reflection in texts.

The R (R Core Team 2014) caret package developed by Kuhn et al. (2014) provided all machine learning algorithms used in this paper such as the implementations of the SVM (Hornik et al. 2006; Joachims 1998; Karatzoglou et al. 2004), Neural Networks (Venables and Ripley 2002), Random Forests (Breiman 2001; Liaw and Wiener 2002), and Naïve Bayes (Meyer et al. 2014; Weihs et al. 2005) algorithms.

Benchmarks

The performance of the machine learning algorithms was determined by comparing the prediction of the machine learning algorithm with the annotations generated by the coders. There exist several proposals about how to benchmark inter-rater reliability (Fleiss et al. 2004; Krippendorff 2012; Landis and Koch 1977; Stemler and Tsai 2008). A benchmark is a recommendation about acceptable levels of inter-rater reliability. For example, Landis and Koch (1977) defined Cohen’s κ values below 0 as poor, between 0 and 0.20 as slight, between 0.21 and 0.4 as fair, between 0.41 and 0.6 as moderate, between 0.61 and 0.80 as substantial, and above 0.81 as almost perfect inter-rater reliability. Stemler and Tsai (2008) recommended for exploratory research a threshold of a Cohen’s κ of 0.5. These thresholds provide guidance, but it is up to the research community to define acceptable levels for the context/practice in question. A high-stakes context requires stricter guidelines, while for a low-stakes context, a more lenient standard may suffice.

Results

Reliability and validity are important quality criteria of research (American Educational Research Association, American Psychological Association, and National Council on Measurement in Education 2014). Therefore, the results section first shows that a) the annotation process of the data sets is reliable and b) that the model of reflection detection shows validity. Therefore, the machine learning algorithms are based on a theoretical model of common constituents of reflective writing models that has face validity and empirical validity and can be reliably annotated. The section afterward shows the performance estimates of the machine learning algorithms for each category of the reflection detection model. The last section inspects the most important features that the machine learning algorithms used to predict the class labels.

Reliability of the Manual Annotation

In the context of the manual content analysis, inter-rater reliability is usually calculated on an individual level and are usually calculated between two or three coders. In the context of crowdsourcing,Footnote 6 the inter-rater reliability is often calculated based on aggregated results. The underlying idea is that many (redundant) annotations can help compensate for noise, leading to high-quality annotations. A common aggregation strategy is using majority voting, which is a simple although not always optimal strategy (Li et al. 2013). This research uses several types of majority voting to aggregate votes. Also, the researcher must decide which type of majority voting best suits the research. Using a form of supermajority means applying a strict standard to the data compared to the simple majority, as only instances enter the data set where a supermajority of coders agreed. Simple majority voting needs less agreement than a supermajority voting; therefore, represents a less strict criterion. The benefit of a strict criterion is that it ensures to only include instances that have high agreement by many coders that represent the category. The downside of choosing a strict standard is that fewer instances meet the criterion, resulting in fewer instances to train the machine learning algorithms. This study considers simple majority and two-thirds and four-fifths supermajorities.

The process is as follows: To estimate the inter-rater reliability of the crowdsourced annotations, we randomly split the annotations for each unit of analysis into two groups. A form of majority voting (simple majority voting or a supermajority) determines the final annotation of the group. The inter-rater reliability is then calculated based on the aggregated ratings of both groups over all units. The random assignment to groups was repeated 50 times to compensate for grouping related effects. The reported inter-rater reliability of manually annotated data sets is the mean inter-rater reliability of these 50 random repetitions, and each group must consist of a minimum of four ratings. This criterion ensures that the voting is based on a group of coders and not only on the ratings of a single coder or only a few coders; consequently, the sample size is lower than the actual size of the training data set, as not all randomly sampled groups fulfill this criterion.

This approach allows to the various levels of inter-coder reliability to be compared with the corresponding performance of the machine learning algorithms to assess the order of magnitude of the performance difference and to estimate the machine learning performance from the manual performance.

Table 3 shows the estimates of the aggregated inter-rater reliability of the manual annotation of the sentences for each category of the reflection detection model. The table shows the accuracy and the Cohen’s κ for three majorities, the two supermajorities (four-fifths and two-thirds majority), and the simple majority that were used to determine the class label of each sentence.

Cohen’s κ, estimated from the aggregated ratings agreed by four-fifths of the ratings, is almost perfect for all but one category according to the benchmark of Landis and Koch (1977). The exception is the Perspective category, which is substantial. The estimates based on the two-thirds majority are all at least substantial. The same applies to the reliability estimates derived with the simple majority except for the Perspective and Learning categories, which are moderate. Overall, the measures show that the annotation process produced labels that the coding groups consistently agreed.

Validity of the Manual Annotated Data Set

The model for reflection detection postulates that the breadth dimensions of reflection are associated with the depth dimension. The analysis of validity uses the Fischer’s exact test to investigate whether the breadth dimensions of reflection are independent of the depth dimension of reflection. The assumption is that each individual breadth category is associated with the category Reflection. In contrast, no relation between the depth and each breadth category would be a counterfactual against the model validity.

Table 4 shows the results of Fisher’s exact test (R Core Team 2014) between the category Reflection and each breadth category of the model for reflection detection for three variations of the voting technique (four-fifths majority, two-thirds majority, and simple majority).

Table 4 shows that all breadth dimensions (from Experience to Intention) are likely not independent from Reflection (p < 0.05). Consequently, there is a high likelihood that there is a relation between the depth category and all breadth categories of reflection. These results corroborate the validity of the model for reflection detection. Reflection has the highest odds ratio to co-occur with the Feeling, Belief, Learning, Experience, and Difficulty categories and the lowest odds ratio with Perspective and Intention categories.

Machine Learning Training and Test Data Sets

Table 5 shows the amount (N) of training and test instances of the data sets for each category and each majority. The table shows the split of training and test instances by the two classes, present and absent. An instance (sentence) was either rated by the coders as being an example of the presence of a characteristic (e.g., is reflective) or absence (e.g., is descriptive/non-reflective). Also, 80% of the data were used for training and 20% were used for testing. The training and test data show the original class distribution. Also, the models were tested on the test data with the original class distribution.

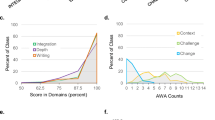

Performance of the Machine Learning Models

Table 6 shows the performance of the machine learning models assessed on the unseen test data for each category of the reflection detection model and for each majority condition. The category Experience had the highest performance over all three conditions. The Cohen’s κ values were 0.85 (for the four-fifths condition), 0.78 (two-thirds), and 0.75 (simple majority). In all these three conditions, the best performing machine learning algorithm on the training data was the Random Forests algorithm trained either on the oversampled data set (overs.) or on the data set with the original class distribution (orig.). The category Feeling had the second highest Cohen’s κ values, followed by Reflection, Intention, Belief, Difficulty, and Learning. The category with the lowest performance is Perspective, with Cohen’s κ of 0.53 (four-fifths majority), 0.38 (two-thirds majority), and 0.30 (simple majority). The category with the highest accuracy over all three conditions is Intention, followed by Experience, Feeling, Reflection, Belief, Difficulty, Learning, and Perspective.

We can compare the accuracy with a baseline. The baseline accuracy is the accuracy of an algorithm that always predicts the class with the most instances as correct. The machine prediction should be better than this very simple algorithm that always predicts the majority class as true. The baseline accuracy can be calculated from the class distribution of the test data and is not shown here. All accuracies achieved by the machine learning algorithms are above the baseline accuracy except for the Perspective category for the simple and two-thirds majority.

We can compare the values with a baseline and with established benchmarks. For the four-fifths majority condition, the Cohen’s κ values of all categories are above the 0.5 exploratory research threshold of Stemler and Tsai (2008). All categories have a Cohen’s κ that is fair or better according to the benchmark of Landis and Koch (1977). Also, almost perfect is the Experience category in the four-fifths majority condition, followed by the categories with substantial reliability Feeling, Reflection, Intention, and Belief. Difficulty, Learning, and Perspective can be benchmarked as moderate.

Overall, we expect that the machine learning algorithms’ performance to be below the performance of the manual coders. By averaging the performance values of all categories by each of the three conditions, the average machine performance accuracy was 8 % below the average manual annotation accuracy for the simple and two-thirds majority condition and 10 % below the average manual annotation accuracy for the four-fifths majority vote conditions. Cohen’s κ was 18 points lower in the simple and two-thirds majority condition and 25 points lower for the four-fifths majority condition. In most cases, the Cohen’s κ values of the machine learning models is one benchmark level lower than the level reported for the manual inter-rater reliability.

A simple linear regression was used to investigate the relation between machine learning performance and the rater performance. The inspection of the scatterplots (not shown here) between the machine learning and coder accuracy as well as machine learning Cohen’s κ values and coder Cohen’s κ values showed a strong positive correlation, which was confirmed with a significant Pearson’s correlation (for accuracy: r(22) = .84, p < .001 and for Cohen’s κ: r(22) = .88, p < .001). The linear regression indicated that the manual performance explained 71% of variance for accuracy (F(1,22) = 54.07, p < .001) and 77% of variance for Cohen’s κ (F(1,22) = 71.92, p < .001). The results show a significant relationship between the performance of the machine learning algorithms and rater performance. The coefficient for the coder accuracy was 0.97 (p < 0.001) and for the coder Cohen’s κ was 0.86 (p < .001).

Another interesting finding illustrated in Table 6 is that there is not a single best performing machine learning algorithm. The Random Forests algorithm achieved the highest performance for several categories but not in all categories. The highest performing models came also from the algorithms Naïve Bayes, Support Vector Machine variants (linear, radial and polynomial), and Neural Networks.

Features of the Machine Learning Models

The pre-processing section described how the texts were converted to features, in this case unigrams. The machine learning algorithms use these features for their classification. Many of the machine learning models do not use all the features as predictors. Based on the input data, they learn which of the input variables are important. We can inspect these variables to understand which features are more important and which are less important for the classification, providing insights about the inner workings of the otherwise difficult-to-examine machine learning algorithms. The inspection of these features can help to corroborate the validity of the machine learning models as often they intuitively make sense to an expert. Sometimes, the inspection reveals odd features, which may indicate errors when preparing the data set, for example, when the class information is accidentally included in the feature set. Other times, however, these features do not conform to human intuition but still achieve high performance.

The evaluative function to calculate the contribution of features to a model is different for each algorithm. Here, we use the functions provided by the R caret package developed by Kuhn et al. (2014). The variable importance for each unigram can be ranked and scaled with values ranging from 0 (no contribution) to 100 (contributes the most). While the variable importance can tell us about the features that contribute most to the classification, it cannot tell us about the direction of that contribution (e.g., is a feature used as a case in favor of a classification class or against it) as these features can be used in many ways to determine the response. Also, a highly important feature is not necessarily the sole differentiator between classes. The existence of a highly important feature in text only indicates that it is more likely that the instance belongs to the class or another class. Often, it is the combination of features that guarantees high performance, and Table 7 shows the most important features of the machine learning models.

To explain the feature presented in Table 7 in the context of reflective writing, they are interpreted based on the theory of reflection as well as the existing empirical studies. This interpretative context is also used to gauge the direction of the features, as we cannot derive this information from the variable importance measure. Due to space limitations and the large overlap of the features for the other two data set conditions, the discussion is only about the features of the four-fifths majority data set condition in Table 7.

Six of the features had a scaled feature importance of 10 and above in the Random Forests model of the reflective data set. This was the data set in which each sentence had a four-fifths majority of either being reflective or non-reflective/descriptive. The singular first-person pronouns “I”, “me”, and “my” have high levels of feature importance. Several other studies highlighted the importance of self-referential messages for reflection (Birney 2012; Ullmann 2015a, b; 2017; Ullmann et al. 2012), which is congruent with this finding. In the reflective writing literature, there is a debate whether a reflective account needs to be written from a first-person perspective (Moon 2006). Although a reflective account can be written without any self-references, they tend to be personal in nature. The word “have” can be indicative of the present perfect tense, which is used to express that something in the past still has importance for the present. Considering past experiences for current learning is an important facet of reflective thinking (see definition previously presented). The subordinate conjunction “that” is often used to provide additional information to information given by the main clause of the sentence. A reflection is also often a very detailed account of experience as the topic of the reflection is often complex. The conjunction “that” would be such a device to provide additional information. The word “that” can be part of a that-clause, for example, “I thought/feel/believe that”, which are that-clauses using verbs that refer to thinking processes. It can be used by the author to explicitly refer to mental processes, which is a sign of self-awareness and important for reflection. The verb “feel” is a thinking and sensing word. According to Birney (2012), verbs of that group highly correlate with reflection. She suggests that the combination of the first-person perspective together with thinking and sensing verbs (e.g., I feel) is a linguistic device that can be used to express self-awareness. Self-awareness is an important characteristic of reflection as it is much about expressing one’s own perspective, believes, and feelings.

The most important features of the category Experience are words indicating various verb tenses. The words “was”, “had”, and “were” indicate the past tense, the word “have” indicates the present perfect tense, the words “is”, and “are” indicate the present tense, and “will” indicates the future tense. A reflective writing is often a recount of a past experience. The author uses the past tense to express their experience. The description of an experience in a reflective writing is less about the presence or the future. The latter tenses, however, can indicate the absence of an experience. The other top features of Experience are the singular first-person pronouns “I” and “me”, as well as the third-person pronoun “we”. These pronouns can be useful in describing the agent of the experience, which can be the person writing the account, and an experience with several actors of which the writer is one.

The words with the highest variable importance for the Feeling category consists of the first-person pronouns “I”, “me”, and “my” and the sensing word “feel” in its present tense form and past tense form. The phrases “I feel” or “I felt” are expressions that are often used to describe something that is not entirely in the known, something about which someone is not sure about, or something for which we do not hold a firm belief yet. Such intuitions that are often encapsulated in an expression related to emotions can be the reason to reflect about something to reach greater clarity. Supporting evidence for these features also comes from Birney (2012), who found a high relationship between these thinking and sensing words and reflection.

Many of the most important features of the category Beliefs are thinking and sensing words, such as “believe”, “feel”, “felt”, and “think”, and the noun “feelings”. The word “believe” directly addresses the category, and the other words in this category can be used to express beliefs and personal views. A writer can explicitly use these sensing words to express that something is a personal perception and not a fact. These words can also indicate that the writer is less definitive about something and does not yet accept something as a fact. This is related to the group of words expressing tentativeness, such as “seemed”, “should”, “could”, “can”, or “would”. Another class of words that has a high variable importance include the self-referential words “me”, and “own”. Reflective writings are often about one’s own personal beliefs. The transition adverb “therefore” can be used to express an addition such as a suggestion or conclusion. Birney (2012) examined causal reasoning and explanation resources, of which “therefore” is one, and found that they are important for reflective writings. In the context of writing about own beliefs, the word “better” can be used to indicate that something could have been better (is believed to be better). Currently, it less clear how the words “people”, “asked”, and “used”, which also have high levels of feature importance, fit into the context of Beliefs.

The top features of the category Difficulties use nouns indicating problems or discrepancies, such as the words “difficult”, or “lack”. Ullmann et al. (2012) noted a discrepancy annotator for reflection that among other words uses the word “lack”. The word “but” can be used to express a contrast, which can indicate a difficulty. Birney (2012) also found contrastive transition devices, such as “but”, important. The negation “not” is often used to describe that something did not happen or that there is a lack of something, also indicating a difficulty. The words “if”, “because”, and “that” (as in “given that”) can signal the premise part of an argument. These words comprise part of the premise annotator of Ullmann et al. (2012). As outlined, the subordinate conjunction “that” can be used to add extra information to the main clause of a sentence, which can be used to specify the exact nature or context of the problem or difficulty. The verb “be” is often used in the combinations of “to be”, “should be”, “need to be”, and “would be”. This verb is related to reality and existence and can be used to emphasise that something really happened and that something really exists, such as a problem. The verb “was” is the past tense form of “be” and thus focuses more on past aspects. The first-person pronouns “I” reflects that a reflection is often about personal problems or difficulties. The third-person pronoun “it” is frequently used to refer to something previously introduced, such as the problem that has been discussed. Similarly, the word “this” can be used to refer to something that has been previously mentioned. The verb “have” can be used to form the present perfect tense to indicate the importance of past events, such as a past problem, for the current situation. The verb “have” can be used in the form of “have to”, representing that something needs to be done. This feeling may come from the perception that something must be done to overcome a problem. Often “have” is used in combination with “could” to express that something “could have” been done differently, implying that something was not done in the best way possible because of a problem. The word “could” alone can signal uncertainty or a possibility, possibly signaling a difficulty. The word “would” can express something wished for or an imagined situation. In the context of expressing difficulties or problems, the expressed wish can indicate a target state that has not yet been reached and therefore implicitly indicate a problem. The verb “made” can be used to express that someone or something caused something, which in this context can describe a problem that has been caused. The function of the word “did” can be similar to “made”. Lastly, a link between the category and the words “to”, “the”, and “and” could not be established.

Regarding Perspective, the feature with the highest importance is the subordinate conjunction “that”. A writer can use “that” to add extra information to the main clause of the sentence. In the context of considering the perspectives of others, this extra information can be either details about that perspective or the source of the perspective. The third-person pronouns “they” and “it” can indicate the source of the perspective, which is in the first case a group of people and for the second case a single person. Conversely, the singular first-person pronoun “I” refers to the perspective of the writer and therefore can be a negative indicator for the presence of this category, i.e., indicating its absence. The word “but” can express a contrast, which can indicate a contrasting perspective. Similarly, Birney (2012) found that contrastive devices, such as “but”, can indicate “multiple perspectives”. The negation “not” can signal that something did not happen, which in the context of perspective can mean that something was different from one’s own perspective. The verb “felt” is a thinking and sensing word. In the context of Perspective, this verb can either express a feeling that the writer had about the perspective of someone else or that someone else had a feeling about the writer’s perspective. In contrast to Birney (2012), feeling and sensing words such as “felt” do not play a major role for the category “multiple perspectives”, a category that is closely associated with Perspective. The modal verb “would” can be used to express an imagined situation or something that does not necessarily need to be actual. In this context, the writer imagines something that adds another perspective to the train of thought. However, there was no clear connection between the category and the words “and”, “with”, “to”, “as”, “of”, “a”, “the”, “be”, “was”, “is”, and “have”.

The features with the highest importance for the data set Learning include the personal pronouns “I”, “my”, and “me”. This is congruent with the research of Birney (2012) that found a link between the personal voice and the evidence of learning. The personal pronoun “it” has been highlighted as important as well, but the importance currently cannot be explained. In the context of Learning, the word “experience” can specify a learning experience or the degree of experience of a person. The words “this” can be used to refer to something specific such as a specific learning experience or to refer back to something that has been previously mentioned. The subordinate conjunction “that” can signal additional information. In this context, this can be a clarification of the learning experience or other context information. The word “how” can be used to specify the way or manner of things. In this context, “how” can possibly signal a certain know-how gained by the writer. The word “more” can signal that something is now greater or better and can be used to describe an increase in learning. The words “have” and “had” have a high feature importance in the context of Learning. The word “have” can be part of phrases such as “I have learned a lot”, “I have a lot to learn”, “should have tried harder”, “could have done more”, expressing a statement about learning or lessons learned. The word “had” can refer to things that the writer had done, such as a recount of a learning experience. Currently, the connection between the words “of”, “about”, “in”, “on”, “from”, “to”, “and”, “the”, and “a” with Learning is unclear.

In the context of Intention, the personal pronouns “I” and “my” are important features to express future plans of the writer. The future tense indicator word “will” can indicate what the writer “will” try or do in future. Birney (2012) and Ullmann et al. also noted the importance of future tense words. The word “was” is the past tense form of “be”. “Was” refers to past events and therefore may be an indicator for the absence of an Intention. The temporal word “next” can indicate the “next” opportunity to do something. The verb “improve” signals areas that the writer wants to improve or areas that need improvement. The link between the word “to” and Intention cannot be explicitly established for now.

Discussion and Conclusions

The aim of this research was to determine whether machine learning algorithms can be used to reliably detect reflection in texts. This was tested on a comprehensive model of reflection that has been derived from theory, and its reliability and validity were confirmed with empirical evidence. The evaluation shows compelling evidence that machine learning can be used to analyze reflection in texts.

Reliability and Validity of the Model for Reflection Detection

The reflective writing literature showed that reflection is a multi-faceted construct that often describes two qualities, depth and breadth. Our model captured both dimensions with a total of eight categories that are the common categories of 24 reflective writing models. Previous research is based on models that are specific to the researchers’ context and covered fewer categories, such as the model of Cheng (2017) with four categories, Liu et al. (2018) with three categories, and Kovanović et al. (2018) with two categories of the reflection detection model. The research using a rule-based approach suggested many model categories but empirically evaluated so far only one category. Compared to previous research, this paper tested the automatability of the analysis of reflection with a comprehensive reflective writing model.