Abstract

In this article, we propose a new iterative algorithm for solving split common fixed point problems for finite families of single-valued demicontractive mappings in the setting of real Hilbert spaces. To solve this problem, we introduce a Halpern method with an Armijo linesearch for approximating a solution of the aforementioned problem. We establish a strong convergence result for the proposed method and present some numerical examples to illustrate the performance of our main result. Our result complements and generalizes some related results in the literature.


1 Introduction

Let \(C_j,~j=1,2, \ldots ,m\) be a family of nonempty, closed and convex subsets of a real Hilbert space \(\mathcal { H}\). The Convex Feasibility Problem (in short, CFP) is to find

$$\begin{aligned} x^* \in \bigcap \nolimits _{j=1}^{m} C_j, \end{aligned}\tag{1.1}$$

where \(m \ge 1\) is an integer. The CFP (1.1) has received a lot of attention due to its numerous applications in many applied disciplines such as image recovery and signal processing, approximation theory, control theory, biomedical engineering, geophysics and communication (see [4, 11, 22, 28] and the references therein). A special case of CFP (1.1) is the Split Feasibility Problem (in short, SFP), which is to find

$$\begin{aligned} x^* \in C \quad \text {such that} \quad Ax^* \in Q, \end{aligned}\tag{1.2}$$

where C and Q are nonempty, closed and convex subsets of real Hilbert spaces \(\mathcal { H}_1\) and \(\mathcal { H}_2\), respectively, and \(A: \mathcal { H}_1 \rightarrow \mathcal { H}_2\) is a bounded linear operator. The SFP (1.2) was introduced in 1994 by Censor and Elfving [7] for modeling certain inverse problems. It plays a vital role in medical image reconstruction and in signal processing (see [5, 6, 20, 33] and the references therein). In 2002, Byrne [5] proposed a popular algorithm (the CQ algorithm) that generates a sequence \(\{x^k\}\) for solving the SFP (1.2) as follows:

$$\begin{aligned} x^{k+1}=P_C\big (x^{k}-\gamma A^*(I-P_Q)Ax^{k}\big ), \end{aligned}$$

for each \(k \ge 0,\) where \(P_{C}\) and \(P_{Q}\) are the metric projections onto C and Q, respectively, \(A^*\) denotes the adjoint of \(A: \mathcal { H}_1 \rightarrow \mathcal { H}_2,\) and \(\gamma \in (0, \frac{2}{\lambda })\) with \(\lambda \) being the spectral radius of \(A^*A.\) Since then, several iterative algorithms for solving the SFP (1.2) have been proposed (see [1, 2, 5,6,7, 16, 19, 23]). Reich et al. [24] studied the following SFP with multiple output sets in real Hilbert spaces: Let \(\mathcal { H}, \mathcal { H}_j, j=1,2, \ldots ,N\) be real Hilbert spaces and let \(A_j: \mathcal { H} \rightarrow \mathcal { H}_j, j=1,2, \ldots ,N\) be bounded linear operators. Let C and \(Q_j\) be nonempty, closed and convex subsets of \(\mathcal { H}\) and \(\mathcal { H}_j, j=1,2,\ldots ,N\), respectively. Suppose that \(\bigtriangleup :=C \cap (\bigcap \nolimits _{j=1}^{N}A_j^{-1}(Q_j)) \ne \emptyset .\) In order to find a point of \(\bigtriangleup \), Reich et al. [24] proposed the following two iterative methods: For any \(x^{0}, y^{0} \in C,\) let \(\{x^{k}\}\) and \(\{y^{k}\}\) be two sequences generated by

and

where \(g: C \rightarrow C\) is a strict contraction with contraction coefficient \(a \in [0,1),~ \{\gamma ^{k}\} \subset (0, \infty )\) and \(\{\alpha ^{k}\} \subset (0,1).\) They established weak and strong convergence of the sequences generated by (1.3) and (1.4) using the following assumption on \(\{\gamma ^{k}\}\):

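Now, if \(C=F(T)\) and \(Q=F(S)\) in (1.2), then we obtain the following Split Common Fixed Point Problem (SCFPP), which is to find a point

$$\begin{aligned} x^* \in F(T) \quad \text {such that} \quad Ax^* \in F(S), \end{aligned}\tag{1.5}$$
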
where \(F(T)=\{x \in \mathcal { H}_1: x=Tx\}\) and \(F(S)=\{y \in \mathcal { H}_2: y=Sy\}\) denote the sets of fixed points of T and S, respectively, and \(A: \mathcal { H}_1 \rightarrow \mathcal { H}_2\) is a bounded linear operator. The SCFPP (1.5) was first studied by Censor and Segal [9]. Note that \(x^*\) is a solution of SCFPP (1.5) if the following fixed point equation holds:

To solve SCFPP (1.5), Censor and Segal [9] introduced the following iterative method: For an arbitrary point \(x^{1} \in \mathcal { H}_{1}\), define the sequence \(\{x^{k}\}\) by

$$\begin{aligned} x^{k+1}=U\big (x^{k}+\gamma A^*(T-I)Ax^{k}\big ), \quad k \ge 1, \end{aligned}\tag{1.6}$$

where \(U: \mathcal { H}_1 \rightarrow \mathcal { H}_1\) and \(T: \mathcal { H}_2 \rightarrow \mathcal { H}_2\) are directed operators and \(\gamma \in (0, \frac{2}{\Vert A\Vert ^2})\). They established that the sequence \(\{x^{k}\}\) generated by (1.6) converges weakly to a solution of (1.5). Subsequently, the result of [9] was extended to the classes of quasi-nonexpansive mappings [18] and demicontractive mappings [17], but only weak convergence of \(\{x^{k}\}\) to a solution of (1.5) was obtained (see [2, 3, 8, 27] and the references therein for more results on the SCFPP).
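The iteration (1.6) takes only a few lines to implement. The sketch below is a minimal illustration under stated assumptions: \(\mathcal H_1=\mathcal H_2=\mathbb R^2\), a hypothetical matrix A, and U = P_C, T = P_Q for two boxes C and Q. Metric projections are directed, so they satisfy the hypotheses of [9], and with these choices (1.6) coincides with Byrne's CQ algorithm. The helper names (`censor_segal`, `box_projection`, `matvec`) are ours, not from [9].

```python
from typing import Callable, List

Vec = List[float]
Mat = List[List[float]]


def matvec(M: Mat, x: Vec) -> Vec:
    """Matrix-vector product M x."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


def transpose(M: Mat) -> Mat:
    """Transpose of M (the adjoint A^* in the Euclidean setting)."""
    return [list(col) for col in zip(*M)]


def box_projection(lo: Vec, hi: Vec) -> Callable[[Vec], Vec]:
    """Metric projection onto the box {z : lo <= z <= hi} (componentwise clip)."""
    return lambda z: [min(max(zi, l), h) for zi, l, h in zip(z, lo, hi)]


def censor_segal(U: Callable[[Vec], Vec], T: Callable[[Vec], Vec],
                 A: Mat, x: Vec, gamma: float, iters: int = 200) -> Vec:
    """Run x^{k+1} = U(x^k + gamma * A^*(T - I) A x^k) for a fixed number of steps."""
    At = transpose(A)
    for _ in range(iters):
        Ax = matvec(A, x)
        residual = [t - a for t, a in zip(T(Ax), Ax)]   # (T - I) A x^k
        step = matvec(At, residual)                      # A^*(T - I) A x^k
        x = U([xi + gamma * si for xi, si in zip(x, step)])
    return x


# Hypothetical data: ||A||^2 = (3 + sqrt(5)) / 2 ~ 2.618, so gamma = 0.5 < 2/||A||^2.
A = [[1.0, 1.0], [0.0, 1.0]]
P_C = box_projection([0.0, 0.0], [1.0, 1.0])   # C = [0, 1]^2
P_Q = box_projection([1.0, 0.0], [2.0, 1.0])   # Q = [1, 2] x [0, 1]
x_star = censor_segal(P_C, P_Q, A, [0.0, 0.0], gamma=0.5)
print(x_star)  # [0.5, 0.5]
```

In this toy instance a single step already lands in \(\{x \in C: Ax \in Q\}\), after which the residual \((T-I)Ax^k\) vanishes and the iterates stay put. In infinite-dimensional spaces, only weak convergence is guaranteed in general.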

Remark 1.1

A difficulty in implementing (1.6) is that its step size requires the operator norm \(\Vert A\Vert \), which may be hard to compute in practice. Alternative ways of avoiding this requirement have been considered by several authors (see [21, 29, 31] and the references therein).

In this paper, we consider the following split common fixed point problem with multiple output sets in real Hilbert spaces: Let \(\mathcal { H},~ \mathcal { H}_j, j=1,2, \ldots ,N\) be real Hilbert spaces and let \(A_j: \mathcal { H} \rightarrow \mathcal { H}_j\) be bounded linear operators. Let \(T_j: \mathcal { H} \rightarrow \mathcal { H}\) and \(S_j:\mathcal { H}_j \rightarrow \mathcal { H}_j, j=1,2, \ldots , N\) be \(\lambda _j\)-demicontractive and \(k_j\)-demicontractive mappings, respectively. The SCFPP with multiple output sets is to find an element \(x^* \in \mathcal { H} \) such that

$$\begin{aligned} x^* \in \bigcap \nolimits _{j=1}^{N}F(T_j) \quad \text {and} \quad A_jx^* \in F(S_j), \quad j=1,2,\ldots ,N. \end{aligned}\tag{1.7}$$

Remark: If \(T_j=P_C\) and \(S_j=P_{Q_j}\) for \(j=1,2,\ldots ,N\), so that \(\bigcap \nolimits _{j=1}^{N}F(T_j)=C\) and \(F(S_j)=Q_j\), then problem (1.7) reduces to the split feasibility problem with multiple output sets considered in [24].
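To make problem (1.7) concrete, one can measure how far a candidate point is from satisfying all of its constraints. The sketch below assumes the real-line case \(\mathcal H=\mathcal H_j=\mathbb R\). It uses a hypothetical helper `scfpp_residual` and hypothetical data: T_j is the projection onto \([0,\infty )\), S_j is the projection onto \([-1,1]\) (projections onto intervals are directed, hence demicontractive), and \(A_jx=x/j\).

```python
from typing import Callable, Sequence

RealMap = Callable[[float], float]


def scfpp_residual(x: float, T: Sequence[RealMap], S: Sequence[RealMap],
                   A: Sequence[RealMap]) -> float:
    """Largest violation of the constraints of (1.7) at x, for H = H_j = R.

    Returns max_j max(|x - T_j x|, |A_j x - S_j A_j x|); it is 0 exactly when
    x is a fixed point of every T_j and every A_j x is a fixed point of S_j.
    """
    worst = 0.0
    for T_j, S_j, A_j in zip(T, S, A):
        y = A_j(x)
        worst = max(worst, abs(x - T_j(x)), abs(y - S_j(y)))
    return worst


# Hypothetical instance with N = 2.
T = [lambda x: max(x, 0.0)] * 2                 # projection onto [0, inf)
S = [lambda y: min(max(y, -1.0), 1.0)] * 2      # projection onto [-1, 1]
A = [lambda x, j=j: x / j for j in (1, 2)]      # A_j x = x / j

print(scfpp_residual(0.5, T, S, A))   # 0.0  (x = 0.5 solves (1.7))
print(scfpp_residual(3.0, T, S, A))   # 2.0  (A_1 x = 3 lies outside F(S_1))
```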

Remark 1.2

The class of demicontractive mappings is known to be of central importance in optimization theory, since it contains many common types of operators that are useful in solving optimization problems (see [24, 27] and the references therein).

Remark 1.3

We would like to emphasize that approximating a common solution of the SCFPP for finite families of certain nonlinear mappings has possible real-life applications to mathematical models whose constraints can be expressed as fixed points of nonlinear mappings. In fact, this happens in practical problems such as signal processing, network resource allocation and image recovery (see [13]).

Inspired by the results of Reich et al. [24], Eslamian, Padcharoen et al. [21], Reich et al. [25] and many other related results in the literature, we introduce a Halpern method for approximating a solution of the split common fixed point problem with multiple output sets for demicontractive mappings in real Hilbert spaces. We prove a strong convergence result for the proposed method with an Armijo linesearch. Finally, we provide some applications and numerical examples to illustrate the performance of our main result. Our results extend and complement many related results in the literature. We highlight our contributions as follows:

(i) We establish a strong convergence result, which is preferable to the weak convergence results obtained in [4, 9, 17, 18].

(ii) We introduce a linesearch that frees our iterative method from dependence on the operator norm (see [17, 24]).

(iii) The problems considered in [9, 17, 25] are special cases of problem (1.7).

(iv) Our method of proof is short and elegant.

2 Preliminaries

We state some known and useful results which will be needed in the proof of our main theorem. In the sequel, we denote strong and weak convergence by "\(\rightarrow \)" and "\(\rightharpoonup \)", respectively.

Let C be a nonempty, closed and convex subset of a real Hilbert space \(\mathcal {H}\). Let \(T: C \rightarrow C\) be a single-valued mapping, then a point \(x \in C\) is called a fixed point of T if \(Tx=x\). We denote by F(T), the set of all fixed points of T. The mapping \(T: \mathcal {H} \rightarrow \mathcal {H}\) is said to be

(i) nonexpansive if

$$\begin{aligned} \Vert Tx-Ty\Vert \le \Vert x-y\Vert ,\quad ~\forall ~x,y \in \mathcal { H}; \end{aligned}$$

(ii) quasi-nonexpansive if

$$\begin{aligned} \Vert Tx-p\Vert \le \Vert x-p\Vert ,\quad ~\forall ~x \in \mathcal { H}~\text {and}~p \in F(T); \end{aligned}$$

(iii) directed (firmly quasi-nonexpansive) if

$$\begin{aligned} \Vert Tx-p\Vert ^2 \le \Vert x-p\Vert ^2-\Vert x-Tx\Vert ^2,\quad ~\forall ~x\in \mathcal { H}~\text {and}~p \in F(T); \end{aligned}$$

(iv) strictly pseudocontractive if there exists \(k \in [0,1)\) such that

$$\begin{aligned} \Vert Tx-Ty\Vert ^2 \le \Vert x-y\Vert ^2 + k \Vert (x-y)-(Tx-Ty)\Vert ^2,\quad ~\forall ~x,y \in \mathcal { H}; \end{aligned}$$

(v) pseudocontractive if

$$\begin{aligned} \Vert Tx-Ty\Vert ^2 \le \Vert x-y\Vert ^2 + \Vert (x-y)-(Tx-Ty)\Vert ^2,\quad ~\forall ~x,y \in \mathcal { H}; \end{aligned}$$

(vi) demicontractive (or k-demicontractive) if there exists \(k < 1\) such that

$$\begin{aligned} \Vert Tx-p\Vert ^2 \le \Vert x-p\Vert ^2 + k\Vert x-Tx\Vert ^2,\quad ~\forall ~x \in \mathcal { H}~\text {and}~p \in F(T), \end{aligned}$$

which is equivalent to

$$\begin{aligned} \langle x-p, x-Tx\rangle \ge \frac{1-k}{2}\Vert x-Tx\Vert ^2,\quad ~\forall ~x \in \mathcal { H}~\text {and}~p \in F(T). \end{aligned}$$
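The equivalence of the two forms of the demicontractive condition in (vi) follows from expanding a square. A short derivation that uses only \(Tp=p\) is:

```latex
\begin{aligned}
\Vert Tx-p\Vert ^2 &= \Vert (x-p)-(x-Tx)\Vert ^2
 = \Vert x-p\Vert ^2-2\langle x-p, x-Tx\rangle +\Vert x-Tx\Vert ^2,\\
\Vert Tx-p\Vert ^2 \le \Vert x-p\Vert ^2+k\Vert x-Tx\Vert ^2
 &\iff -2\langle x-p, x-Tx\rangle +(1-k)\Vert x-Tx\Vert ^2 \le 0\\
 &\iff \langle x-p, x-Tx\rangle \ge \tfrac{1-k}{2}\Vert x-Tx\Vert ^2 .
\end{aligned}
```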

Remark 2.1

It can be observed from the definitions above that if k=0 in (iv), we get (i), and if k=0 in (vi), we get (ii). Also, the class of demicontractive mappings contains the classes of quasi-nonexpansive and directed mappings: every k-demicontractive mapping with \(k \le 0\) is quasi-nonexpansive, and every directed mapping is \((-1)\)-demicontractive.

Example 2.2

[30] Let \(\mathcal { H}=\ell _2\) and \(T: \ell _2 \rightarrow \ell _2, Tx=-kx, x \in \ell _2, k > 1.\) Then \(F(T)=\{0\}\), and T is demicontractive but not quasi-nonexpansive.
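Example 2.2 can be checked by hand. Since \(\Vert x-Tx\Vert ^2=(1+k)^2\Vert x\Vert ^2\), one has \(\Vert Tx\Vert ^2=k^2\Vert x\Vert ^2=\Vert x\Vert ^2+\frac{k-1}{k+1}\Vert x-Tx\Vert ^2\). So T is \(\frac{k-1}{k+1}\)-demicontractive (with equality), while \(\Vert Tx\Vert =k\Vert x\Vert >\Vert x\Vert \) for \(x\ne 0\) when \(k>1\). For \(k=1\), T is an isometry and hence quasi-nonexpansive. The sketch below is a numerical sanity check on random vectors in \(\mathbb R^5\), used as a finite-dimensional stand-in for \(\ell _2\). The function name `check_example` is ours.

```python
import random
from typing import Sequence, Tuple


def norm_sq(v: Sequence[float]) -> float:
    return sum(t * t for t in v)


def check_example(k: float, trials: int = 1000, dim: int = 5,
                  seed: int = 0) -> Tuple[bool, bool]:
    """Test T x = -k x (F(T) = {0}) on random x in R^dim.

    Returns (demicontractive with constant (k-1)/(k+1), quasi-nonexpansive).
    """
    kappa = (k - 1.0) / (k + 1.0)
    rng = random.Random(seed)
    demi_ok = qne_ok = True
    for _ in range(trials):
        x = [rng.uniform(-10.0, 10.0) for _ in range(dim)]
        Tx = [-k * t for t in x]
        lhs = norm_sq(Tx)                               # ||Tx - p||^2 with p = 0
        x_minus_Tx = [a - b for a, b in zip(x, Tx)]
        rhs = norm_sq(x) + kappa * norm_sq(x_minus_Tx)
        # small relative tolerance absorbs floating-point rounding
        demi_ok = demi_ok and lhs <= rhs * (1.0 + 1e-12)
        qne_ok = qne_ok and lhs <= norm_sq(x) * (1.0 + 1e-12)
    return demi_ok, qne_ok


print(check_example(2.0))   # (True, False)
print(check_example(1.0))   # (True, True): k = 1 gives an isometry
```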

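Let C be a nonempty, closed and convex subset of a real Hilbert space H. For every point \(x \in H,\) there exists a unique nearest point in C, denoted by \(P_C x\), such that

$$\begin{aligned} \Vert x-P_Cx\Vert \le \Vert x-y\Vert ,\quad ~\forall ~y \in C. \end{aligned}$$

\(P_C\) is called the metric projection of H onto C, and it is well known that \(P_C\) is a nonexpansive mapping of H onto C that satisfies the inequality

$$\begin{aligned} \Vert P_Cx-P_Cy\Vert ^2 \le \langle x-y, P_Cx-P_Cy\rangle ,\quad ~\forall ~x,y \in H. \end{aligned}$$

Moreover, \(P_C x\) is characterized by the following properties:

$$\begin{aligned} \langle x-P_Cx, y-P_Cx\rangle \le 0,\quad ~\forall ~y \in C, \end{aligned}$$

and

$$\begin{aligned} \Vert x-y\Vert ^2 \ge \Vert x-P_Cx\Vert ^2+\Vert y-P_Cx\Vert ^2,\quad ~\forall ~y \in C. \end{aligned}$$
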
More information on metric projection can be found in [12, 15].
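The variational characterization \(\langle x-P_Cx, y-P_Cx\rangle \le 0\) for all \(y \in C\) can be sanity-checked numerically for a set whose projection has a closed form. The sketch below assumes C is a closed Euclidean ball in \(\mathbb R^3\); the center, radius and helper names are illustrative choices, not taken from the text.

```python
import math
import random
from typing import List

Vec = List[float]


def project_ball(x: Vec, center: Vec, radius: float) -> Vec:
    """Metric projection of x onto the closed ball B(center, radius)."""
    d = [xi - ci for xi, ci in zip(x, center)]
    dist = math.sqrt(sum(t * t for t in d))
    if dist <= radius:
        return list(x)                 # x already lies in C
    scale = radius / dist              # pull x back radially onto the sphere
    return [ci + scale * di for ci, di in zip(center, d)]


def inner(u: Vec, v: Vec) -> float:
    return sum(a * b for a, b in zip(u, v))


def check_characterization(trials: int = 500, seed: int = 1) -> bool:
    """Check <x - P_C x, y - P_C x> <= 0 for random x in R^3 and y in C."""
    rng = random.Random(seed)
    center, radius = [1.0, -2.0, 0.5], 2.0
    for _ in range(trials):
        x = [rng.uniform(-10.0, 10.0) for _ in range(3)]
        px = project_ball(x, center, radius)
        # a random point of C, obtained by projecting another random point
        y = project_ball([rng.uniform(-10.0, 10.0) for _ in range(3)], center, radius)
        lhs = inner([a - b for a, b in zip(x, px)], [a - b for a, b in zip(y, px)])
        if lhs > 1e-9:                 # tolerance for floating-point rounding
            return False
    return True


print(check_characterization())  # True
```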

Lemma 2.3

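[10] Let \(\mathcal {H}\) be a real Hilbert space. Then for all \(x, y \in \mathcal {H}\) and \(\alpha \in (0,1)\), the following hold:

$$\begin{aligned} \Vert x+y\Vert ^2&\le \Vert x\Vert ^2+2\langle y, x+y\rangle ,\\ \Vert \alpha x+(1-\alpha )y\Vert ^2&=\alpha \Vert x\Vert ^2+(1-\alpha )\Vert y\Vert ^2-\alpha (1-\alpha )\Vert x-y\Vert ^2. \end{aligned}$$
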
Definition 2.4

Let \(T: \mathcal { H}\rightarrow \mathcal { H}\) be a mapping, then \(I-T\) is said to be demiclosed at zero if for any sequence \(\{x^{k}\}\) in \(\mathcal { H}\), the conditions \(x^{k}\rightharpoonup x\) and \(\lim \nolimits _{k \rightarrow \infty }\Vert x^{k}-Tx^{k}\Vert =0\) imply \(x=Tx.\)

Lemma 2.5

[32] Let C be a nonempty, closed and convex subset of a real Hilbert space \(\mathcal {H}\) and let \( T: C \rightarrow C\) be a nonexpansive mapping. Then \(I-T\) is demiclosed at 0 (i.e., if \(\{x_n\}\) converges weakly to \(x\in C\) and \(\{x_n-Tx_n\}\) converges strongly to 0, then \(x=Tx\)).

Lemma 2.6

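[26] Let \(\{a_n\}\) be a sequence of nonnegative real numbers, let \(\{\alpha _n\}\) be a sequence of real numbers in (0, 1) such that \(\sum \nolimits _{n=1}^{\infty }\alpha _n=\infty \), and let \(\{b_n\}\) be a sequence of real numbers. Assume that

$$\begin{aligned} a_{n+1}\le (1-\alpha _n)a_n+\alpha _nb_n,\quad ~\forall ~n \ge 1. \end{aligned}$$

If \(\limsup \nolimits _{k \rightarrow \infty }b_{n_k}\le 0\) for every subsequence \(\{a_{n_k}\}\) of \(\{a_n\}\) satisfying the condition

$$\begin{aligned} \liminf \limits _{k \rightarrow \infty }(a_{n_k+1}-a_{n_k})\ge 0, \end{aligned}$$

then \(\lim \nolimits _{n \rightarrow \infty }a_n=0\).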
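The following is a toy illustration of the mechanism in Lemma 2.6. It assumes the equality case \(a_{n+1}=(1-\alpha _n)a_n+\alpha _nb_n\) with the hypothetical choices \(\alpha _n=1/(n+1)\) and \(b_n=n^{-1/2}\). Because \(b_n\rightarrow 0\), the subsequence condition holds trivially. This hypothesis is much stronger than the lemma requires, so the sketch only illustrates the conclusion \(a_n\rightarrow 0\) and does not prove it. In this case \(Na_N=a_1+\sum _{n=1}^{N-1}n^{-1/2}\), so \(a_N\) decays roughly like \(2/\sqrt{N}\).

```python
def lemma_2_6_demo(a1: float, n_steps: int) -> float:
    """Return a_{n_steps} for a_{n+1} = (1 - alpha_n) a_n + alpha_n b_n.

    Hypothetical choices: alpha_n = 1/(n+1) (in (0,1), divergent sum) and
    b_n = n**-0.5 (so the limsup of b along any subsequence is 0).
    """
    a = a1
    for n in range(1, n_steps):
        alpha = 1.0 / (n + 1)
        b = n ** -0.5
        a = (1.0 - alpha) * a + alpha * b
    return a


for N in (10, 1_000, 100_000):
    print(N, lemma_2_6_demo(5.0, N))
```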

3 Main result

In this section, we present our algorithm and its convergence analysis.

Assumption 3.1

(A1) Let \(\mathcal {H}\), \(\mathcal {H}_j, j=1,2,\ldots , N\) be real Hilbert spaces and let \(A_j: \mathcal {H} \rightarrow \mathcal {H}_j, j=1,2,\ldots ,N\) be bounded linear operators.

(A2) Let \(S_j:\mathcal {H}_j \rightarrow \mathcal {H}_j, j=1,2,\ldots ,N\) be a finite family of \(k_j\)-demicontractive mappings such that each \(S_j-I\) is demiclosed at 0, and let C be a nonempty, closed and convex subset of \(\mathcal { H}.\)

(A3) For \(j=1,2,\ldots ,N,\) let \(T_j:\mathcal { H} \rightarrow \mathcal { H}\) be a finite family of \(\lambda _j\)-demicontractive mappings such that each \(T_j-I\) is demiclosed at 0.

(A4) Assume that \(\bigtriangleup :=\bigcap \nolimits _{j=1}^{N}F(T_j) \bigcap (\bigcap \nolimits _{j=1}^{N} A_j^{-1}(F(S_j)))\) is nonempty.

Assumption 3.2

(B1) \(\{\beta ^{k}\} \subset (0,1)\) is such that \(\lim \nolimits _{k \rightarrow \infty }\beta ^{k}=0\) and \(\sum \nolimits _{k=1}^{\infty }\beta ^{k}=\infty .\)

(B2) \(\sum \nolimits _{j=1}^{N}\theta ^{k,j}=1\) and \(\liminf \nolimits _{k \rightarrow \infty } \theta ^{k,j}> 0.\)

Remark 3.4

We employ an Armijo linesearch in Step 1 of Algorithm 3.3 so that our iterative method does not depend on the operator norms \(\Vert A_j\Vert \). This linesearch is easy to compute, which makes the method more practical.
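Algorithm 3.3 itself is not reproduced here, so the following is only a generic sketch of an Armijo-type backtracking rule in the single-output case. It is not the authors' Step 1. It assumes the smooth residual \(f(x)=\frac{1}{2}\Vert (I-P_Q)Ax\Vert ^2\), whose gradient is \(A^*(I-P_Q)Ax\). The rule picks \(\gamma =\rho \mu ^m\) for the smallest \(m \ge 0\) that passes a sufficient-decrease test with a hypothetical constant \(\sigma \), so no knowledge of \(\Vert A\Vert \) is needed. The helper names, the value of \(\sigma \) and the data are illustrative assumptions.

```python
from typing import Callable, List

Vec = List[float]
Mat = List[List[float]]


def matvec(M: Mat, x: Vec) -> Vec:
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


def cq_residual(A: Mat, P_Q: Callable[[Vec], Vec]):
    """Return f(x) = 0.5*||(I - P_Q)Ax||^2 and its gradient A^T (I - P_Q) A x."""
    At = [list(col) for col in zip(*A)]

    def f(x: Vec) -> float:
        Ax = matvec(A, x)
        return 0.5 * sum((a - q) ** 2 for a, q in zip(Ax, P_Q(Ax)))

    def grad(x: Vec) -> Vec:
        Ax = matvec(A, x)
        return matvec(At, [a - q for a, q in zip(Ax, P_Q(Ax))])

    return f, grad


def armijo_step(f: Callable[[Vec], float], g: Vec, x: Vec, rho: float = 1.0,
                mu: float = 0.5, sigma: float = 1e-4, max_backtracks: int = 50) -> float:
    """gamma = rho*mu**m, smallest m >= 0 with f(x - gamma g) <= f(x) - sigma*gamma*||g||^2."""
    fx, g2 = f(x), sum(t * t for t in g)
    gamma = rho
    for _ in range(max_backtracks):
        if f([xi - gamma * gi for xi, gi in zip(x, g)]) <= fx - sigma * gamma * g2:
            return gamma
        gamma *= mu
    return gamma   # fall back to the last trial value


def p_q(z: Vec) -> Vec:
    """Projection onto the hypothetical box Q = [1, 2] x [0, 1]."""
    return [min(max(z[0], 1.0), 2.0), min(max(z[1], 0.0), 1.0)]


A = [[1.0, 1.0], [0.0, 1.0]]
f, grad = cq_residual(A, p_q)
x0 = [0.0, 0.0]
print(armijo_step(f, grad(x0), x0, rho=4.0))  # 1.0
```

Starting from \(\rho =4\), the trial steps 4 and 2 fail the decrease test, and \(\gamma =1\) is accepted because it reaches a point where the residual vanishes.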

Lemma 3.5

Let \(\{x^{k}\},~ \{u^{k}\}\) and \(\{w^{k}\}\) be sequences generated by Algorithm 3.3, then the following inequality holds:

Proof

Let \(x^* \in \bigtriangleup ,\) then we obtain from Lemma 2.3, Algorithm 3.3 and the equality \(S_jA_jx^*=A_jx^*, j=1,2,\ldots ,N\) that

Using the fact that \(S_j, j=1,2, \ldots ,N\) are \(k_j\)-demicontractive and \(A_jx^* \in F(S_j),\) we obtain that

Thus, we obtain from (3.6) that

From the last inequality in (3.5), it follows that

Substituting (3.7) and (3.8) into (3.5) yields

This completes the proof. \(\square \)

Lemma 3.6

Suppose \(\{x^{k}\}, ~\{u^{k}\}\) and \(\{w^{k}\}\) are sequences generated by Algorithm 3.3, and let \(T_j: \mathcal { H} \rightarrow \mathcal { H}\) be a finite family of \(\lambda _j\)-demicontractive mappings. Then the following inequality holds:

Proof

Let \(x^* \in \bigtriangleup ,\) then we obtain from Algorithm 3.3 and (3.6) that

This completes the proof. \(\square \)

Lemma 3.7

Suppose \(\{x^{k}\},~ \{u^{k}\}\) and \(\{w^{k}\}\) are sequences generated by Algorithm 3.3. Then the aforementioned sequences are all bounded.

Proof

Let \(x^* \in \bigtriangleup ,\) then we obtain from Lemma 3.5 and Lemma 3.6 that

From Algorithm 3.3 and (3.12), we have

Thus, by induction, we have

Hence, \(\{x^{k}\}\) is bounded. Consequently, \(\{u^{k}\}\) and \(\{w^{k}\}\) are bounded. \(\square \)
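For orientation only, here is the induction in its usual form. It assumes that Step 4 of Algorithm 3.3 has the standard Halpern form \(x^{k+1}=\beta ^{k}u+(1-\beta ^{k})w^{k}\) and that the preceding lemmas yield \(\Vert w^{k}-x^*\Vert \le \Vert x^{k}-x^*\Vert \). Both are assumptions here, since the algorithm is not reproduced in this text. Under them, the induction reads:

```latex
\begin{aligned}
\Vert x^{k+1}-x^*\Vert &\le \beta ^{k}\Vert u-x^*\Vert +(1-\beta ^{k})\Vert w^{k}-x^*\Vert
 \le \beta ^{k}\Vert u-x^*\Vert +(1-\beta ^{k})\Vert x^{k}-x^*\Vert \\
&\le \max \{\Vert u-x^*\Vert ,\Vert x^{k}-x^*\Vert \}
 \le \cdots \le \max \{\Vert u-x^*\Vert ,\Vert x^{1}-x^*\Vert \}.
\end{aligned}
```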

Theorem 3.8

Assume that Assumptions (A1)–(A4) and (B1)–(B2) hold. Then the sequence \(\{x^{k}\}\) generated by Algorithm 3.3 converges strongly to \(z=P_{\bigtriangleup }u \in \bigtriangleup ,\) where \(P_{\bigtriangleup }\) denotes the metric projection of \(\mathcal { H}\) onto \(\bigtriangleup \).

Proof

Let \(x^* \in \bigtriangleup ,\) then from Lemma 2.3, Algorithm 3.3 and (3.11), we get

This implies that

Put \(a^{k}=\Vert x^{k}-z\Vert ^2\) and \(d^{k}:=2 \langle u-z, x^{k+1}-z\rangle .\) Then the inequality (3.16) becomes

We now establish that \(a^{k} \rightarrow 0.\) In view of Lemma 2.6, it suffices to show that \(\limsup \nolimits _{n \rightarrow \infty }d^{k_n} \le 0\) for every subsequence \(\{a^{k_n}\}\) of \(\{a^{k}\}\) satisfying

Now, from (3.14), we obtain that

By condition (B1), we obtain that

Also, using (3.14), we obtain that

Thus, we obtain

From step 3 of Algorithm 3.3 and (3.19), we obtain that

Using step 4 of Algorithm 3.3, we get

From (3.17), (3.20) and (3.21), we have

Since \(\{x^{k_n}\}\) is bounded, there exists a subsequence \(\{x^{k_{n_j}}\}\) of \(\{x^{k_n}\}\) such that \(x^{k_{n_j}} \rightharpoonup p \in \mathcal { H}.\) From (3.17) and (3.22), the corresponding subsequences \(\{u^{k_{n_j}}\}\) of \(\{u^{k_n}\}\) and \(\{w^{k_{n_j}}\}\) of \(\{w^{k_n}\}\) also converge weakly to p. By the demiclosedness of \(T_j-I, j=1,2, \ldots ,N\) at 0 and (3.19), we have that \(p \in F(T_j)\) for \(j=1,2,\ldots ,N\). Also, since \(A_j, j=1,2,\ldots ,N\) are bounded linear operators, we have that \(A_jx^{k_{n_j}}\rightharpoonup A_jp.\) Thus, by the demiclosedness of \(S_j-I\) at 0 and (3.17), we obtain that \(A_jp \in F(S_j), j=1,2,\ldots ,N.\) Hence, we conclude that \(p \in \bigtriangleup .\)

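Next, we show that \(\limsup \nolimits _{n \rightarrow \infty }d^{k_n}\le 0.\) Indeed, let \(\{x^{k_{n_j}}\}\) be a subsequence of \(\{x^{k_n}\}\) that realizes the limit superior of \(\langle u-z, x^{k_n}-z\rangle \) and converges weakly to some \(p \in \bigtriangleup \) (such a subsequence exists by the argument above). Then, since \(z=P_{\bigtriangleup }u\), applying (2.1) we deduce that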

that is, \(\limsup \nolimits _{n \rightarrow \infty }d^{k_n} \le 0\). Therefore, all the hypotheses of Lemma 2.6 are satisfied, and hence \(a^{k} \rightarrow 0,\) that is, \(x^{k} \rightarrow z=P_{\bigtriangleup }u\), as asserted. \(\square \)
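To see why a Halpern-type scheme selects the particular solution \(z=P_{\bigtriangleup }u\) in the strong convergence theorem above, consider the classical Halpern iteration \(x^{k+1}=\beta ^{k}u+(1-\beta ^{k})Tx^{k}\) applied to the nonexpansive CQ operator \(T=P_C(I-\gamma A^*(I-P_Q)A)\). The sketch below does exactly this. It is a swapped-in textbook scheme, not Algorithm 3.3. The data are hypothetical: \(A=\begin{pmatrix}1&1\\0&1\end{pmatrix}\), \(C=[0,1]^2\), \(Q=[1,2]\times [0,1]\) and \(\gamma =0.5<2/\Vert A\Vert ^2\). With these choices \(\bigtriangleup =\{x \in C: Ax \in Q\}=\{x \in [0,1]^2: x_1+x_2 \ge 1\}\), so for \(u=(0,0)\) we have \(P_{\bigtriangleup }u=(0.5,0.5)\).

```python
from typing import List

Vec = List[float]


def clip(z: Vec, lo: Vec, hi: Vec) -> Vec:
    """Projection onto the box [lo, hi]."""
    return [min(max(t, l), h) for t, l, h in zip(z, lo, hi)]


def cq_operator(x: Vec, gamma: float = 0.5) -> Vec:
    """T x = P_C(x - gamma A^T (I - P_Q) A x) for the hypothetical data.

    A = [[1, 1], [0, 1]], C = [0, 1]^2, Q = [1, 2] x [0, 1];
    gamma = 0.5 < 2/||A||^2, so T is nonexpansive with F(T) = {x in C : Ax in Q}.
    """
    Ax = [x[0] + x[1], x[1]]
    PQ = clip(Ax, [1.0, 0.0], [2.0, 1.0])
    r = [Ax[0] - PQ[0], Ax[1] - PQ[1]]          # (I - P_Q) A x
    grad = [r[0], r[0] + r[1]]                  # A^T r
    return clip([x[0] - gamma * grad[0], x[1] - gamma * grad[1]],
                [0.0, 0.0], [1.0, 1.0])


def halpern(u: Vec, x: Vec, iters: int) -> Vec:
    """x^{k+1} = beta_k u + (1 - beta_k) T x^k with beta_k = 1/(k+2)."""
    for k in range(iters):
        beta = 1.0 / (k + 2)
        Tx = cq_operator(x)
        x = [beta * ui + (1.0 - beta) * ti for ui, ti in zip(u, Tx)]
    return x


x = halpern(u=[0.0, 0.0], x=[0.0, 0.0], iters=10_000)
print(x)   # close to P_Delta(u) = [0.5, 0.5]
```

Plain iteration of T from \(x^1=(1,1)\) would stay at \((1,1)\in \bigtriangleup \). The anchor term \(\beta ^{k}u\) is what drives the iterates toward the point of \(\bigtriangleup \) nearest to u.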

Corollary 3.9

Suppose that \(T_j\) and \(S_j,\) \(j=1,2,\ldots ,N\) are finite families of quasi-nonexpansive mappings (that is, \(\lambda _j=k_j=0\)). Then we obtain the following iterative algorithm.

4 Numerical example

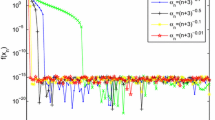

In this section, we report some numerical examples to illustrate the convergence of Algorithm 3.3.

Example 4.1

For all \(j=1,\ldots , N\), let \(\mathcal {H}=\mathcal {H}_j=\mathbb {R}\) and let \(C=[0,+\infty )\) be a subset of \(\mathcal {H}.\) We define the mappings \(T_j: \mathcal {H} \rightarrow \mathcal {H}\) by

and \(S_j: \mathcal {H}_j\rightarrow \mathcal {H}_j\) by

Then \(T_j\) and \(S_j\) are demicontractive mappings. Now let \(A_j: \mathcal {H} \rightarrow \mathcal {H}_j\) be defined by \(A_jx=\frac{x}{j}\) for all \(x \in \mathcal {H}\) and \(j=1,\ldots , N.\) Let \(\beta ^k=\frac{1}{15k+1},\) \(l=0.0012,\) \(\rho =0.05,\) \(\mu =0.5\) and \(\theta ^{k,j}=\frac{3}{4^j}+\frac{1}{N4^N}.\) We set \(u=0.1\) and \(N=2\), and use \(E_n=\Vert x_{n+1}-x_n\Vert ^2<10^{-4}\) as the stopping criterion. The process is conducted for the following initial values of \(x_1\):

(Case 1) \(x_1=1.6;\)

(Case 2) \(x_1=3.1.\)

We present the report of this experiment in Fig. 1.

Numerical report for Example 4.1
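Assumption (B2) requires the weights to sum to one for every k. The sketch below performs a quick exact check using rational arithmetic. It assumes that the weights in Example 4.1 read \(\theta ^{k,j}=\frac{3}{4^j}+\frac{1}{N4^N}\), which is independent of k. Indeed, \(\sum _{j=1}^{N}3/4^j=1-4^{-N}\), and the N copies of \(1/(N4^N)\) contribute the remaining \(4^{-N}\).

```python
from fractions import Fraction


def theta(j: int, N: int) -> Fraction:
    """Weight theta^{k,j} = 3/4**j + 1/(N * 4**N) (constant in k)."""
    return Fraction(3, 4 ** j) + Fraction(1, N * 4 ** N)


def weight_sum(N: int) -> Fraction:
    """Exact value of sum_{j=1}^{N} theta^{k,j}."""
    return sum((theta(j, N) for j in range(1, N + 1)), Fraction(0))


for N in range(1, 6):
    print(N, weight_sum(N))   # the sum equals 1 for every N
```

Each weight is at least \(1/(N4^N)>0\), so the \(\liminf \) condition in (B2) also holds. By contrast, reading the weights as \(\frac{3}{4j}+\frac{1}{N4^N}\) would give a sum of 19/16 for N = 2, which violates (B2).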

Example 4.2

Let \(\mathcal {H}=\mathcal {H}_1=(\mathbb {R}^3,\Vert \cdot \Vert _2)\). Let \(S,T:\mathbb {R}^3\rightarrow \mathbb {R}^3\) be two mappings defined by

It is clear that both T and S are 0-demicontractive mappings. We assume that

We consider the following SCFPP: Find a point \(x^*\in F(T)\) such that \(Ax^*\in F(S)\).

Let

and \(Ax^*\in F(S)\), we have

From the definition of S, we obtain

That is,

Hence

Next, we show that \(x^*\in F(T)\). By definition of T, we obtain

Therefore,

In this example, we choose \(N=1,\) \(u=1,\) \(\rho =2.5,\) \(\mu =0.5,\) \(\theta ^{k,1}=\theta ^k=\frac{k}{2k+17}\) and \(\beta ^k=\frac{1}{k+2}.\) The stopping criterion for this experiment is \(E_k=\Vert x_{k+1}-x_k\Vert ^2<10^{-4}.\) The report is given in Fig. 2 for the following initial values of \(x_1\):

(Case a) \(x_1=[-2.3, -2.5,-2.1];\)

(Case b) \(x_1=[3.1,2.7,3.5].\)

5 Conclusion

In this paper, we studied a Halpern method with an Armijo linesearch rule, designed to solve split common fixed point problems with multiple output sets for finite families of demicontractive mappings in real Hilbert spaces. We also established, in an elegant and novel way, that the sequence generated by our algorithm converges strongly to a solution of the SCFPP. Finally, we presented some numerical examples to illustrate the performance of our method.

Data availability

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

References

Abass, H.A., Jolaoso, L.O.: An inertial generalized viscosity approximation method for solving multiple-sets split feasibility problem and common fixed point of strictly pseudo-nonspreading mappings. Axioms 10, 1 (2021)

Abass, H.A., Mebawondu, A.A., Izuchukwu, C., Narain, O.K.: On split common fixed point and monotone inclusion problems in reflexive Banach spaces. Fixed Point Theory 23(1), 3–20 (2022)

Abass, H.A., Oyewole, O.K., Jolaoso, L.O., Aremu, K.O.: Modified inertial Tseng for solving variational inclusion and fixed point problems on Hadamard manifolds. Appl. Anal. (2023). https://doi.org/10.1080/00036811.2023.2256357

Bauschke, H.H., Borwein, J.M.: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38, 367–426 (1996)

Byrne, C.: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 18, 441–453 (2002)

Byrne, C.: A unified treatment for some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 20, 103–120 (2004)

Censor, Y., Elfving, T.: A multiprojection algorithm using Bregman projections in product space. Numer. Algorithms 8, 221–239 (1994)

Censor, Y., Segal, A.: The split common fixed point problem for directed operators. J. Convex Anal. 16(2), 587–600 (2009)

Censor, Y., Segal, A.: The split common fixed point problem for directed operators. J. Convex Anal. 16, 587–600 (2009)

Chidume, C.E.: Geometric Properties of Banach Spaces and Nonlinear Iterations. Lecture Notes in Mathematics, vol. 1965. Springer, London (2009). ISBN 978-1-84882-189-7

Combettes, P.L.: The convex feasibility problem in image recovery. In: Hawkes, P. (ed.) Advances in Imaging and Electron Physics, vol. 95, pp. 155–270. Academic Press, New York (1996)

Goebel, K., Reich, S.: Uniform Convexity, Hyperbolic Geometry and Nonexpansive Mappings. Marcel Dekker, New York (1984)

Iiduka, H.: Acceleration method for convex optimization over the fixed point set of a nonexpansive mapping. Math. Program. Ser. A 149, 131–165 (2015)

Kim, J.K., Cho, S.Y., Qin, X.: Some results on generalized equilibrium problems involving strictly pseudocontractive mappings. Acta Math. Sci. 31, 2041–2057 (2011)

Kopecká, E., Reich, S.: A note on alternating projections in Hilbert spaces. J. Fixed Point Theory Appl. 12, 41–47 (2012)

Masad, E., Reich, S.: A note on the multiple-set split convex feasibility problem in Hilbert spaces. J. Nonlinear Convex Anal. 8, 367–371 (2007)

Moudafi, A.: The split common fixed-point problem for demicontractive mappings. Inverse Probl. 26, 055007 (2010)

Moudafi, A.: A note on the split common fixed-point problem for quasi-nonexpansive operators. Nonlinear Anal. 74, 4083–4087 (2011)

Okeke, C.C., Izuchukwu, C.: Strong convergence theorem for split feasibility problems and variational inclusion problems in real Banach spaces. Rend. Circ. Mat. Palermo (2) 70(1), 457–480 (2021)

Oyewole, O.K., Abass, H.A., Mewomo, O.T.: A strong convergence algorithm for a fixed point constrained split null point problem. Rendiconti Circ. Mat. Palermo 70(1), 389–408 (2021)

Padcharoen, A., Kumam, P., Cho, Y.J.: Split common fixed point problems for demicontractive operators. Numer. Algorithms 82, 297–320 (2019)

Plubtieng, S., Ungchittrakool, K.: Hybrid iterative methods for convex feasibility problems and fixed point problems of relatively nonexpansive mappings in Banach spaces. Fixed Point Theory Appl. 2008, 19 (2008)

Reich, S., Tuyen, T.M.: Regularization methods for solving the split feasibility problem with multiple output sets in Hilbert spaces. Topol. Methods Nonlinear Anal. 60, 547–563 (2022)

Reich, S., Truong, M.T., Mai, T.N.: The split feasibility problem with multiple output sets in Hilbert spaces. Optim. Lett. 14, 2335–2353 (2020)

Reich, S., Tuyen, T.M., Ha, M.T.: An optimization approach to solving the split feasibility problem in Hilbert spaces. J. Glob. Optim. 79, 837–852 (2021)

Saejung, S., Yotkaew, P.: Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. 75, 742–750 (2012)

Shehu, Y., Cholamjiak, P.: Another look at the split common fixed point problem for demicontractive operators. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. 110, 201–218 (2016)

Stark, H.: Image Recovery: Theory and Application. Academic, Orlando (1987)

Wang, F.: A new iterative method for the split common fixed point problem in Hilbert spaces. Optimization 66(3), 407–415 (2017)

Wang, Y.Q., Kim, T.H.: Simultaneous iterative algorithm for the split equality fixed-point problem of demicontractive mappings. J. Nonlinear Sci. Appl. 10, 154–165 (2017)

Wang, Y., Fang, X., Kim, T.H.: Viscosity methods and split common fixed point problems for demicontractive mappings. Mathematics 7(9), 844 (2019)

Xu, H.K.: Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 298, 279–291 (2004)

Xu, H.K.: Iterative methods for split feasibility problem in infinite-dimensional Hilbert space. Inverse Probl. 26, 105018 (2010)

Funding

Open access funding provided by Sefako Makgatho Health Sciences University.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abass, H.A., Aphane, M. An algorithmic approach to solving split common fixed point problems for families of demicontractive operators in Hilbert spaces. Boll Unione Mat Ital 17, 119–134 (2024). https://doi.org/10.1007/s40574-023-00391-7

DOI: https://doi.org/10.1007/s40574-023-00391-7