Abstract

For many years, coal-fired power plant generation comprised the largest share of electricity in the U.S. power sector. While natural gas plants now constitute a greater portion of the total, coal is projected to remain a shrinking but significant component of U.S. electricity production. Natural gas-fired technologies are dispatchable and versatile generation sources, but the recent and anticipated growth of wind and solar technologies will add non-dispatchable, intermittent power generation sources to U.S. electricity grids. Numerous emissions-related benefits arise from the deployment of these technologies, but they must coexist with coal plants, many of which run most efficiently under baseload operating procedures. Historical monthly emissions data were analyzed for a sample of coal plants to show how modified coal operations have affected plant emission rates, as measured by carbon dioxide emitted per unit of electricity output. Statistically significant correlations between plant capacity factors and emission rate intensity were observed in the majority of the sample, showing that emission rates worsen under more sporadic operations. Since nearly all of the coal plants in the sample are generating less electricity, determining the emissions impact of operational decisions will assist policymakers as they seek to minimize total system emissions without severe disruptions to electricity cost and service reliability.

1 Introduction

1.1 Projected electricity trends

The recent transformation of the U.S. electricity sector away from coal and towards intermittent generation technologies has been swift. In 2008, as natural gas prices spiked to levels above $10 (all monetary units are 2015 U.S. dollars unless otherwise specified) per MMBTU (million British thermal units) [1], baseload electricity generation dominated U.S. supply. Excluding end-use generation, nuclear and coal facilities provided 70 percent of U.S. electricity supply. Seven years later, these same technologies comprised 56 percent of generation capacity as natural gas and renewable generation gained significant share [2]. Since 2008, natural gas prices have remained low, with two exceptions occurring during the winters of 2010 and 2014, when prices rose above $6 per MMBTU. Low natural gas prices have been a fundamental driver of the movement away from baseload generating sources.

Meanwhile, technological advancements, environmental concerns, and policies have spurred the rapid growth of renewable energy within the last decade, and this growth is expected to accelerate in the coming years. The U.S. Energy Information Administration (EIA) projects that the electric power sector in 2040 will feature large amounts of renewable and natural gas capacity with less coal [3]. The projected growth is noteworthy, especially when compared to earlier outlooks that showed renewable generation technologies were largely uncompetitive without subsidies [3]. Some of the expected renewable capacity additions were spurred by the Clean Power Plan (CPP) of the U.S. Environmental Protection Agency (EPA). The rule was repealed by EPA Administrator E. Scott Pruitt in October 2017, a decision that will spur litigation [4], yet strong growth is projected even when the CPP is not considered. Solar photovoltaic (PV) capacity was projected to grow from the current 8.4 GW (this figure excludes end-use generation; when all installations are considered, 18.9 GW of solar capacity and 65.0 GW of wind capacity had been installed by 2014, and 2040 renewable projections are considerably higher with the inclusion of end-use capacity) to 155.6 GW by 2040 with the CPP in place. Without the CPP, 115 GW of capacity is projected in that same year. Wind power, which has historically been reliant on the renewable production tax credit (PTC), is also anticipated to experience rapid growth. Current installed capacity is 64.1 GW, already an impressive number given the state of the technology at the turn of the century, and it would have risen to 146 GW in 2040 under EIA’s projections that included the CPP impacts. Even without the CPP, capacity will nearly double to 124 GW under EIA’s business-as-usual reference case. Non-fossil steam generation, from biomass and geothermal resources, grows much more modestly.

The unprecedented growth of the renewable technologies is anticipated to occur during a period of sustained low natural gas prices. EIA predicts that the Henry Hub spot price will hover around $5 per MMBTU ($2015) for the next twenty-five years. Coal-generating capacity, which currently sits slightly below 300 GW, is expected to decrease regardless of the CPP. EIA projects capacity of 209 GW by 2040 without the CPP, and projected 170 GW had the power plan not been repealed [2]. Ignoring the CPP, coal is projected to generate at levels above 1000 billion kWh throughout the outlook period, double the projected combined output of wind and solar facilities. Even if the CPP had been enforced, the 2040 projected output from coal generation, slightly under 900 billion kWh, would still have exceeded the sum of wind and solar output [2]. Due to these trends, the term “baseload facility”, which classifies a plant by its duty cycle, can no longer effectively describe coal plants; the term “steam plant”, which reflects a technological rather than operational state, is more accurate.

1.2 Scope of analysis

This paper seeks to understand an issue that is neither widely discussed in the literature nor well represented in large-scale energy sector optimization models, such as EIA’s National Energy Modeling System (NEMS): the emissions impact on coal plants from intermittent capacity additions. The unpredictability and large output fluctuations associated with renewable technologies will change coal plant operations. Without long-term and large-scale energy storage systems to complement renewable capacity installations, which are not currently economically feasible [5], optimal generation management decisions are subject to disruption.

Moreover, minimizing costs or emissions for an entire service area may force suboptimal decisions at individual plants. That is, a coal plant operator may run the plant under stressful conditions, leading to elevated facility-level emission rates. The added uncertainty raises operating reserve requirements, which encourages cycling and partial loading of thermal plants. The operations of gas turbines are also likely to be directly impacted by the renewable capacity additions. These plants are expected to ramp up and shut down more frequently in order to stabilize weather-dependent generation, meet demand, and prevent curtailment on the operating system. The emissions penalties associated with the change in natural gas operations are outside the scope of this analysis, but should not be considered negligible. Modifications to coal plant operations will also affect the emission rates of other pollutants, especially since selective catalytic reduction systems must be operated at a minimum load [6], but this is not discussed in the paper.

2 Background and literature review

2.1 Electricity market supply

Most modern large-scale coal plants were built to run at high capacity factors, providing baseload electricity supply [7]. Baseload plants are designed to operate at stable output levels, in contrast to peaking units that only generate electricity during periods of high demand or when baseload plants are offline for maintenance. Before the current period of low natural gas prices and non-hydro renewable growth, coal and nuclear technologies supplied most of the electricity, running the vast majority of the time with relatively small output variations.

Peak electricity demand usually occurs during the late afternoon and early evening hours on weekdays, as large numbers of people arrive home from work, turn on appliances, and cool or heat their homes [8]. Peaking technologies, which have historically been fueled by natural gas, can ramp up quickly on a daily basis and provide the necessary energy to achieve supply and demand equilibrium. In order to ensure that supply is adequate and no blackouts occur, electricity system operators require a certain amount of reserve capacity that is usually represented as a percentage overshoot of that day’s anticipated peak demand. For example, if the peak expected hourly demand is 50 MW, an additional 8 MW (a 16 percent margin) are needed as a cushion to ensure sufficient supply during periods of unanticipated stress [9]. Operating reserves consist of spinning and non-spinning reserve capacity. Spinning reserves are plants that are already connected to the grid system and are capable of ramping up their power output within minutes. Non-spinning reserves are offline facilities that can be turned on to provide power after a short delay.

Renewable energy generation technologies reduce greenhouse gas emissions, but require numerous operational changes for electricity market systems. The technologies also highlight many existing infrastructural deficiencies affecting the electricity grid [10]. Investment in new transmission lines is needed for two primary reasons: to connect areas of rich resource potential to demand centers and to mitigate the risk of relying on weather-dependent technologies [11]. Perhaps the greatest challenge associated with renewable energy is the non-dispatchable nature of the resource. Electricity market optimization has traditionally focused on meeting demand constraints while minimizing generation costs. The levelized cost of electricity (LCOE), a calculation familiar to those in the energy industry, was used as a reliable metric by which to judge the cost competitiveness of technologies. LCOE calculations incorporated capital costs, operational costs, and fuel costs to provide comparisons of generators within an operating market [12].

A convenient dollar-per-megawatthour estimate allowed operators to choose the least-expensive options. While these calculations work well with dispatchable technologies, the introduction of renewables increases the amount of energy produced that does not correlate with consumer demand [13]. Since LCOE calculations do not account for different values of energy generated by daypart, system operators can no longer choose technologies solely by LCOE comparison. A well-known example of the challenges associated with non-dispatchable energy is the California ISO “duck curve” [14]. The duck curve shows that the midday surplus of solar generation ends before the early evening peak period, requiring other generation sources to meet load requirements. For certain days during the spring of 2016, CAISO [8] reported that the majority of electricity generation was from renewable resources, and utilities asked customers to reduce demand during the late afternoon and early evening hours [15].
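As a rough illustration of the LCOE metric discussed above, the following Python sketch uses the commonly cited form of the calculation: discounted lifetime costs divided by discounted lifetime output. The function name, parameters, and all input values are hypothetical and are not drawn from the cited sources. The sketch treats every megawatt-hour as equally valuable, which is the limitation noted above for non-dispatchable generation.

```python
def lcoe(capital_cost, annual_om, annual_fuel, annual_mwh, years, discount_rate):
    """Levelized cost in $/MWh: discounted lifetime costs / discounted lifetime output.

    Assumes the capital cost is paid up front and that annual costs and
    output are constant over the plant life (simplifying assumptions).
    """
    costs = float(capital_cost)
    energy = 0.0
    for t in range(1, years + 1):
        discount = (1 + discount_rate) ** t
        costs += (annual_om + annual_fuel) / discount
        energy += annual_mwh / discount
    return costs / energy


# Hypothetical plants with identical output; only the cost structure differs.
fueled = lcoe(capital_cost=1.0e9, annual_om=2.0e7, annual_fuel=6.0e7,
              annual_mwh=3.5e6, years=30, discount_rate=0.07)
fuel_free = lcoe(capital_cost=1.6e9, annual_om=1.0e7, annual_fuel=0.0,
                 annual_mwh=3.5e6, years=30, discount_rate=0.07)
print(f"fueled: {fueled:.2f} $/MWh, fuel-free: {fuel_free:.2f} $/MWh")
```

Nothing in the function records when the energy is produced, so two plants with the same LCOE can differ greatly in how well their output matches demand.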

Renewable capacity cannot be dispatched, which introduces weather-related uncertainty and requires higher amounts of reserve capacity. Some estimates state that for every 10 MW of incremental renewable capacity, 8 MW of reserve capacity need to be readily available [10]. This added capital cost expenditure is counteracted by the negative marginal cost of renewable generation operations. Since wind and solar facilities do not burn fuel and have extremely low operation and maintenance costs [16], the negative cost of electricity production is driven largely by the 2.3-cent per kWh renewable PTC. The credit applies to the first ten years of plant operations, and current law gradually phases down the credit amount through 2019 [17]. Therefore, renewable power producers are actually willing to pay a small amount to supply their generation, outbidding steam plants with low operating costs [18]. Once a renewable plant has been constructed, at relatively high capital cost, it is the lowest-cost generation option during the periods in which it is able to produce electricity.

2.2 Competition among electricity generators for the supply market

The day-ahead dispatch of coal provides a certain degree of immunity from unexpected intraday volatility, but plants are still run very differently from the baseload operations of the past. They are now subject to more frequent cycling, startups, and shutdowns, and projections show that these events will become even more frequent. During a calm, cloudy, hot day, a coal facility will likely run at a much higher capacity factor than during a windy, sunny, spring day when demand is lower. In markets with high solar penetrations, coal facilities may ramp down production around noon and increase output during the early evening as the sun sets. Daily and seasonal operations will impact the number of cold, warm, and hot starts. A study deploying the U.S. Regional Economy, Greenhouse Gas, and Energy (REGEN) Model examined projected optimal operations in the north central region and Texas region at an hourly resolution, showing how deeply renewable generation cuts into baseload coal operations, especially during the low-demand periods of spring and fall [19]. The REGEN output data support the hypothesis that running coal plants at high capacity factors with little variation is not compatible with a highly intermittent, non-dispatchable grid.

2.3 Costs and damages associated with coal plant cycling

Equipment performance is plant specific, and hourly forecasts by region several years out can be highly inaccurate, so quantifying the economic costs of startups is beyond the scope of the analysis. Estimates for large coal units have been reported to be $54 per MW, $64 per MW, and $104 per MW for hot, warm, and cold starts, respectively [20]. Under a scenario where 33 percent of total generation in western states originated from wind and solar sources, cycling costs have been estimated to be between $35 million and $157 million for the region. This translates to costs of $0.14 to $0.67 per MWh over the system, representing 2 percent to 7 percent of total production costs [21]. Other sources note that when examining overall wholesale electricity prices, startup costs were inconsequential [22]. It has been projected that under a 30-percent renewable penetration scenario in western states, a 500 MW coal plant would experience a dramatic increase in cycles below a 50 percent capacity factor, moving from fewer than five annual occurrences to more than 50 [11]. If coal plants simply run at stable operations, as in the past, high rates of renewable curtailment will become inevitable and system production costs will no longer be minimized.

Another critical issue is the long-term equipment impacts from more frequent cycling and ramping. These occurrences increase stress on the equipment, causing damage that requires shutdowns for repair and modification [21]. The costs, reliability impacts, and indirect emissions resulting from shutdowns, including the contribution from other plants dispatched, are not quantified in this analysis, but should not be assumed to be negligible [6].

Comprehensive reports are available in the literature on equipment damage from cycling, mostly attributable to an increase in rapid thermal gradients experienced during variable operations [20, 23]. The reports note that the plant-specific nature of damages cannot be readily summarized across broad categories, especially since detailed hourly observations for each plant in the sample would be needed to determine the amount, frequency, and duration of load shifts. Rather than modeling damages from variable operations, this analysis focuses on annual emission rate trends, providing context for policy-related discussions that work in tandem with individual plant operational decisions. Operational impacts of reduced capacity also extend beyond equipment stress. As a coal plant generates with less consistency and more uncertainty, the risk for fuel supply delivery disruptions is elevated. Coal silo fires may become more frequent as fuel sits for longer periods of time [24].

2.4 Existing resources on coal plant cycling and carbon dioxide emissions

All of the noted issues provide context for the scope of analysis. A study examining the wind potential in China stated that changes in thermal plant operations under a 30-percent wind penetration scenario caused net carbon dioxide emission reductions to be 20 percent less than projections that ignored such changes [22]. China, which currently has more than double the installed coal capacity of the U.S., has a required uniform coal plant utilization rate of 47.7 percent, which is the lowest observed rate since the 1970s [25]. When comparing the 100 most efficient coal plants in the U.S. and China, Chinese plants, on average, generate the same amount of electricity with 8.2 percent lower carbon dioxide emissions, largely due to the presence of new supercritical and ultra-supercritical technology deployment [25]. It is unclear how falling utilization rates, which have experienced a 22 percent decline since 2011, have affected Chinese coal greenhouse gas emission intensities.

Other reports have shown that additional wind capacity will actually raise overall system greenhouse gas emissions, as wind-induced cycling impacts from coal facilities outweigh the carbon benefits [26]. Individual coal plants use different sources of energy during start-up procedures: oil and natural gas are two commonly used fuels. Since the start-up fuel is different from the operating fuel, emissions associated with starts cannot easily be generalized and may create discrepancies if data from a specific plant is applied to other units [21].

A comprehensive examination of the energy sector in the western states by the National Renewable Energy Laboratory (NREL) details emissions impacts on the region’s coal plants under a variety of wind and solar penetration scenarios. Studies were completed on individual facilities as well as overall system operations to quantify the emissions associated with the required operational changes needed to accommodate renewable capacity [21, 27]. The investigated area contains 122 coal units totaling 36 GW of capacity. By comparison, in 2013 there were 518 active coal plants with an estimated 306 GW of capacity across the continental U.S. [28]. The NREL report finds that, when viewed across the operating system, coal plant greenhouse gas emission rate changes associated with renewable energy capacity additions are negligible.

In fact, three of the four renewable penetration scenarios actually show a very slight decrease in the overall carbon dioxide emission rates (lb/MWh) of a few tenths of one percent. While an overall system average is not indicative of changes at individual plants, no trend of worsening emissions with changed operations and lower capacity factors was reported. Similar to many renewable feasibility projections, the NREL analysis assumed that 40 percent of projected installed solar capacity will consist of central station plants having up to six hours of storage capability. This significantly reduces the early evening spike in demand and subsequent need for ramping. The study also asserts that natural gas prices impact coal plant operations more than the amount of online renewable capacity. The impact of low natural gas prices in the most recent eight-year period is discussed in the results section of this analysis.

3 Methodology

3.1 Coal power plant emissions data

The EPA offers comprehensive online information for emitting facilities collected from its emissions trading programs in the Air Markets Program Data (AMPD) [29]. Each program requires facilities emitting a certain threshold of pollutants to report their emissions. There are several prepackaged data sets available in AMPD, and the list of the 50 largest carbon dioxide emitting coal-fired units in 2008 was chosen as the representative sample for emissions trends. One unit was excluded due to data issues. The remaining 49 units emitted 345 million short tons of carbon dioxide in 2008, falling to 249 million short tons in 2015. This represented 13.3 percent of electric power carbon dioxide emissions in 2008 and 12.0 percent of 2015 emissions. In 2008, the units generated 331 billion kWh of electricity which fell to 232 billion kWh in 2015. This equates to 8.0 percent and 5.7 percent of U.S. electricity generation in 2008 and 2015, respectively. Figure 1 shows the locations of the units, with a single marker representing multiple units housed at the same power plant. Most of the 518 coal plants are located in the eastern U.S., which is also observed in the sample.

Monthly AMPD data were obtained for each of the 49 coal units. The two variables selected were total electricity output in MWh and short tons of carbon dioxide emitted. Using EIA Form 860 data, the average capacity factor at which each unit was run over the course of the month was calculated. The emission rate (lbCO2/MWh) is the quotient of the two AMPD variables, with carbon dioxide converted from short tons to pounds. Since ambient air and water temperature affect coal power plant efficiency and likely impact emission rates, temperature data were incorporated into the analysis [30]. One potential option, applying a monthly seasonal adjustment factor as a proxy for temperature, added complications to processing time series data, so temperature was instead added as an independent variable. It does not appear that potential collinearity between capacity factors and temperatures affected the integrity of the results. Monthly average temperatures were collected from the weather stations located closest to each generation facility with a continuous record over the 2008-2015 period. All average temperature data originated from the National Centers for Environmental Information (NCEI) [31], formerly the National Climatic Data Center (NCDC).
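The two derived quantities can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' processing code: the function name and the input values are hypothetical, and the nameplate capacity is assumed to come from EIA Form 860. The only fixed constant is the conversion of 2,000 lb per short ton.

```python
import calendar

LB_PER_SHORT_TON = 2000  # 1 short ton = 2,000 lb


def monthly_metrics(year, month, output_mwh, co2_short_tons, nameplate_mw):
    """Return (capacity factor, emission rate in lbCO2/MWh) for one unit-month."""
    hours_in_month = calendar.monthrange(year, month)[1] * 24
    # Capacity factor: actual output divided by the maximum possible output.
    capacity_factor = output_mwh / (nameplate_mw * hours_in_month)
    # Emission rate: pounds of CO2 per MWh of electricity generated.
    emission_rate = co2_short_tons * LB_PER_SHORT_TON / output_mwh
    return capacity_factor, emission_rate


# Hypothetical 800 MW unit in April 2015 (values are illustrative only).
cf, rate = monthly_metrics(2015, 4, output_mwh=403_200,
                           co2_short_tons=412_000, nameplate_mw=800)
print(f"capacity factor: {cf:.1%}, emission rate: {rate:.0f} lbCO2/MWh")
```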

3.2 Renewable generation data

Each unit was mapped into its NEMS region. EIA uses 22 NEMS regions to provide regional electricity projections. The regions operate under a coordinated system of electricity dispatch. The electricity in some regions is supplied by vertically integrated, monopolistic utilities while other areas are deregulated and have competitive suppliers [32]. Annual wind and solar generation for the eight-year period of 2008–2015 was calculated from EIA Annual Energy Outlook historical data.

The region representing the northern plains, Midwest Reliability Organization, had the highest level of 2015 combined wind and solar generation and the most post-2008 growth: 38.0 billion kWh of wind and solar energy was generated in 2015, representing a 27.4 billion kWh increase since 2008. Utility-scale and end-use distributed wind and solar facilities are included in each of these totals.

The Texas Reliability Entity and the Western Electricity Coordinating Council had the second and third-highest amount of 2015 wind and solar generation, respectively. Texas Reliability Entity generated 35.5 billion kWh in that year, with a 20.7 billion kWh increase over 2008; Western Electricity Coordinating Council 2015 generation of 31.5 billion kWh was a 24.8 billion kWh increase from 2008.

The combined 2015 total of wind and solar generation from the top five NEMS regions was 154.0 billion kWh of electricity. This is a 108.6 billion kWh increase from 2008 generation in these same regions. Nearly one-third of the regions, including those comprising the southeastern U.S., generated less than 1 billion kWh from wind and solar combined in 2015. Renewable generation by NEMS regions will be considered when examining how the additional weather-dependent capacity has impacted coal generation patterns. A correlation exists if coal plants in regions with higher renewable generation are run differently from similar plants where renewables do not have a presence.

3.3 Data analysis procedure

Temperature, calculated capacity factor, and calculated emission rates were uploaded into the statistical analysis program R in order to perform linear regressions. The first set of linear models assumed that temperature and capacity factors were independent (explanatory) variables that predicted changes in emission rates. The statistical significance and effect on emission rate of each explanatory variable was evaluated for the 49 units. Another regression examined how capacity factors have changed over time, using the number of months from January 2008 as the explanatory variable. For the second regression, an alternative period of 2009–2015 was also used to represent how coal plant capacity factors have changed during a period of prolonged low, stable natural gas prices. Since 2008 experienced higher gas prices than other years, it is likely that coal plants were run at higher capacity factors during the first year of the analysis period, potentially leading to trend lines showing a bias towards decreasing coal generation. Both of these time series analyses used seasonally-adjusted data in order to minimize variability associated with lower electricity demands in the spring and fall and higher usage in winter and summer.
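The first set of models can be sketched as an ordinary least-squares fit of emission rate on capacity factor and temperature. The authors ran their regressions in R. The dependency-free Python version below is an illustrative stand-in that solves the normal equations directly. The data are synthetic and built from known coefficients so that the fit can be checked. Unlike R's regression summary, this sketch returns only the coefficients and no p-values.

```python
def ols(rows, y):
    """Least-squares fit of y on the columns of rows, with an intercept.

    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination
    and returns [intercept, b1, b2, ...].
    """
    X = [[1.0] + list(r) for r in rows]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(x[i] * x[j] for x in X) for j in range(k)]
         + [sum(x[i] * yi for x, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic unit-months (hypothetical values, not AMPD data), generated from
# rate = 2200 - 2.5 * capacity factor (percent) + 1.0 * temperature (deg F).
cf_pct = [85.0, 70.0, 60.0, 75.0, 90.0, 55.0]
temp_f = [30.0, 45.0, 60.0, 75.0, 50.0, 65.0]
rate = [2200.0 - 2.5 * c + 1.0 * t for c, t in zip(cf_pct, temp_f)]

intercept, b_cf, b_temp = ols(list(zip(cf_pct, temp_f)), rate)
print(round(b_cf, 3), round(b_temp, 3))
```

A negative capacity-factor coefficient corresponds to the relationship examined in this paper: the emission rate rises as the capacity factor falls.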

Once the monthly regressions were completed, hourly data sets were obtained from EPA’s AMPD for eight coal-fired units. These units were chosen from NEMS regions that reflected diversity in the amount of online renewable generating capacity available in order to discern if there were observable effects from that generation. Hourly intervals in April were chosen due to the lower heating and cooling demands and relatively high solar and wind potential in that month. In periods of lower net demand and high renewable potential, impacts of the weather-dependent technologies on coal unit operations would likely be more evident than during other seasons. Generation from the eight units in April 2008 was compared with April 2015 output. Even though the eight units were chosen by NEMS region location, the observed trends between capacity factor and emission rate change were not a factor in the selection process. A unit was a candidate for hourly analysis as long as it generated electricity for the majority of days during the month of April in both years and was in a region with high or low 2015 renewable generation.

4 Results and discussion

4.1 Emission intensity trends

Of the 49 coal-fired units, 34 units (69.4 percent of the sample) showed a statistically significant negative correlation between lbCO2/MWh and capacity factor. Statistical significance was assigned if the p-value of the regression coefficient was less than 0.10, meaning that if capacity factor had no true effect, a least-squares coefficient at least this large would arise from sample randomness less than 10 percent of the time. One unit showed a very slight statistically significant positive correlation, and the remaining units showed negative correlations that were not significant at the 90 percent confidence level. The median impact of capacity factor on the emission rates for the statistically significant subsample was a 2.68 lbCO2/MWh increase for every one-percent decrease in unit capacity factor. The mean increase was 3.28 lbCO2/MWh; the mean exceeded the median because of an outlier unit that showed a 24.31 lbCO2/MWh worsening for each one-percent drop in capacity factor. The other independent variable, temperature, showed an average 1.26 lbCO2/MWh increase for every one-degree Fahrenheit increase for units that showed statistically significant trends. Since specific plants use different cooling systems, a general sample-wide impact does not accurately represent the relationship between temperature and efficiency [30], even though that relationship is well known for specific technologies.

4.2 Capacity factor trends

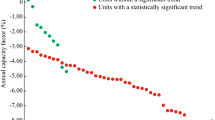

Analysis of the seasonally adjusted trends in capacity factor over the eight-year period showed a statistically significant decrease over time in 44 of the 49 units (89.8 percent of the sample). No units showed positive trends. The annual median and mean decreases were 3.0 percent and 3.2 percent, respectively. Since 2008 had higher natural gas prices than other years, the 2009-2015 period was also considered. During that period of low natural gas prices, 30 of the 49 units showed a statistically significant decrease in capacity factors over time. While the number of units showing significant trends fell, the magnitude of the decrease actually grew for that subsample: the median decrease was 3.5 percent per year while the mean annual decrease was 3.7 percent.
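The time-trend regression can be illustrated with the short Python sketch below. The paper does not specify its seasonal adjustment method, so the month-of-year mean removal used here is an assumption, and the capacity factor series is synthetic. The monthly slope is multiplied by 12 to express the trend as an annual change in percentage points.

```python
from statistics import mean


def seasonally_adjust(series):
    """Subtract each calendar month's average deviation from the overall mean.

    Assumes series[0] is a January and the series covers whole years.
    """
    overall = mean(series)
    month_mean = [mean(series[m::12]) for m in range(12)]
    return [v - (month_mean[i % 12] - overall) for i, v in enumerate(series)]


def trend_slope(series):
    """Slope of the least-squares line through (month index, value)."""
    n = len(series)
    t_bar, y_bar = (n - 1) / 2, mean(series)
    num = sum((t - t_bar) * (y - y_bar) for t, y in enumerate(series))
    den = sum((t - t_bar) ** 2 for t in range(n))
    return num / den


# Synthetic capacity factors (percent) for 96 months from January 2008:
# a decline of 0.25 points per month plus a repeating seasonal pattern.
seasonal = [6, 4, 0, -6, -5, 2, 7, 6, -2, -6, -4, -2]
cf = [80 - 0.25 * t + seasonal[t % 12] for t in range(96)]

adjusted = seasonally_adjust(cf)
annual_change = 12 * trend_slope(adjusted)
print(f"estimated change: {annual_change:.2f} percentage points per year")
```

Because the month-of-year means absorb a small part of the within-year decline, this simple adjustment slightly understates the true slope of 3 points per year. A regression that includes month indicators alongside the time index would avoid that attenuation.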

4.3 Combined trends

In all, 28 of the 49 units showed statistically significant trends both for emission rates relating to capacity factors and for capacity factors relating to time over the 2008 to 2015 period. Figures 2 and 3 present the calculated change in emissions from these units. Using 2008 as the baseline year, the figures compare the difference between the baseline and model-predicted 2015 emissions by applying the regression coefficient values. For example, if a plant emits, on average, 2000 lbCO2/MWh when generating at a 90 percent capacity factor in 2008, has an emission worsening of 2 lbCO2/MWh for every percentage-point decline in capacity factor, and was predicted to run at a 70 percent capacity factor in 2015, its calculated change in emission rate would be an increase of 40 lbCO2/MWh.

Examining historical data shows that the 28-unit subsample generated 191.8 billion kWh of electricity in 2008 at an average emission rate of 2067 lbCO2/MWh. In 2015, these same units generated 127.9 billion kWh of electricity at a less efficient rate of 2184 lbCO2/MWh. Decreased generation therefore resulted in a 58.5 million-short-ton reduction of carbon dioxide emissions, excluding potential emissions from substitute facilities. If the 2015 emission rate per unit of electricity produced had remained at 2008 intensity levels, an additional 7.5 million tons of carbon dioxide emissions would have been avoided, resulting in a 66.0 million-ton abatement. Using the calculated regression coefficients of the first linear model, a 4.0 million-ton decrease in avoided carbon dioxide emissions was projected in 2015.
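The arithmetic behind these totals can be checked with a short Python sketch. It uses only the rounded generation and emission rate figures quoted above, so the results match the reported values to within rounding. The function name is illustrative.

```python
LB_PER_SHORT_TON = 2000  # 1 short ton = 2,000 lb


def million_short_tons(generation_billion_kwh, rate_lb_per_mwh):
    """CO2 emitted, in million short tons.

    1 billion kWh equals 1 million MWh, so billion kWh times lb/MWh gives
    million lb, and dividing by 2,000 gives million short tons.
    """
    return generation_billion_kwh * rate_lb_per_mwh / LB_PER_SHORT_TON


emitted_2008 = million_short_tons(191.8, 2067)
emitted_2015 = million_short_tons(127.9, 2184)
# 2015 generation at the 2008 emission rate (the counterfactual case).
counterfactual_2015 = million_short_tons(127.9, 2067)

observed_reduction = emitted_2008 - emitted_2015          # about 58.5
intensity_penalty = emitted_2015 - counterfactual_2015    # about 7.5
potential_abatement = emitted_2008 - counterfactual_2015  # about 66.0

print(f"{observed_reduction:.1f} {intensity_penalty:.1f} {potential_abatement:.1f}")
```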

The divergence in values shows that the trend models, which treat temperature and capacity factor as the determinants of emission rates, omit other considerations that may have an impact. While 2015 coal production is down in nearly all regions relative to 2008 totals [2], Appalachian coal has experienced the steepest declines. As subbituminous western coal comprises a greater share of the market, emission rates at facilities switching fuel types will, on average, increase [16]. The calculated trend line from the time series analysis also overestimates 2015 capacity factors since later years experienced a more precipitous decline relative to earlier years. In cases of an accelerated decline, a trend line that encompasses eight years of data will underestimate earlier values while overestimating more recent data points.

4.4 Hourly data trends at selected units

The hourly data from April 2008 and April 2015 showed a clear trend of decreasing output from the eight selected units. In 2008, they produced 4.3 billion kWh of electricity compared to 3.4 billion kWh in 2015. The calculated average emission rates for these plants changed very little despite the 21.2 percent drop in aggregate electricity production, showing slight improvement from 2019 lbCO2/MWh in 2008 to 1992 lbCO2/MWh in 2015.

Figure 4 shows the hourly generation output at four facilities during the two months. Two of the units (Unit 1 and Unit 2) are located in NEMS regions that had both the highest 2015 renewable generation and the largest amount of wind and solar growth from 2008 to 2015. The other two units (Unit 3 and Unit 4) are located within a NEMS region that had very little installed wind and solar capacity throughout the analysis period. There are no discernible contrasts between regions that have installed larger amounts of wind and solar capacity and those that have not.

Temperatures were not a factor in potential comparative discrepancies, as 2015 average April temperatures at each site were roughly 2 degrees Fahrenheit warmer than in 2008. Further investigation is needed on hourly emission rates to consider whether the amount of renewable capacity in a region directly impacts plant emission rates in ways that are distinct from operational changes attributable to natural gas. Since each of the eight coal-fired units experienced precipitous output declines in 2015 relative to 2008 regardless of regional renewable growth, such trends are likely dominated by changes associated with increased natural gas generation and demand curtailment programs.

5 Conclusion

Electricity from coal is expected to comprise a smaller share of U.S. electricity supply in the coming decades as technological advances enhance the economic competitiveness of wind and solar generators while natural gas, a complementary fuel for weather-dependent intermittent generation, remains inexpensive. Although the growth rate of renewable technologies is robust, they comprise a relatively small share of current annual electricity generation, even in the highest growth regions.

On average, generation from plants showing a statistically significant relationship between capacity factor and time declined more than 20 percent over the eight-year period. It is evident that many plant operators are no longer running their units at the baseload capacity factors for which many were designed. The analysis demonstrates the existence of quantifiable trends relating decreases in coal unit capacity factors to increases in emission rates, something that has not been addressed in the existing literature. When using average monthly data, a practical method for a multiyear time period over which 49 plants are examined, the nature of the specific operational changes resulting in lower capacity factors is not evident and should be investigated in future research.

The high number of statistically significant emissions trends does show that additional investigation is needed before it can be assumed that high renewable penetration scenarios in which coal plants operate differently do not impact the emissions profile of the coal fleet, an assumption found several times in the existing body of literature that is contradicted by these findings. The goal of this study is to provide a comprehensive literature review on issues affecting coal-unit operations and to quantify emissions trends. Future work will develop hourly output/emission rate models that will show how the increases in emission rates occur. Lower average annual capacity factors can result from intraday, load-following output fluctuations, seasonal generation patterns, stable operations at a consistently lower output, or a combination of these factors. This paper, while showing that the majority of units examined produce more carbon dioxide per MWh of generation, neither develops hourly output models nor identifies the source of the emission rate increases. Rather, it shows that such efforts will be worthwhile and can be a valuable tool for policymakers.
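The point that a lower capacity factor does not by itself reveal its operational cause can be illustrated with synthetic data. The Python sketch below is not drawn from the study: the 500 MW unit, the three hourly profiles, and all function names are hypothetical assumptions chosen so that each profile yields the same annual capacity factor.

```python
CAPACITY_MW = 500  # hypothetical nameplate capacity
HOURS = 8760       # one non-leap year of hourly observations


def capacity_factor(output_mw):
    """Energy produced divided by energy at continuous full output."""
    return sum(output_mw) / (CAPACITY_MW * len(output_mw))


def output_changes(output_mw):
    """Count hour-to-hour changes in output level."""
    return sum(1 for prev, cur in zip(output_mw, output_mw[1:]) if prev != cur)


# Intraday load following: full output 08:00-20:00, minimum load otherwise
load_following = [500 if 8 <= h % 24 < 20 else 100 for h in range(HOURS)]
# Seasonal operation: full output for 60 percent of the year, then offline
seasonal = [500 if h < 5256 else 0 for h in range(HOURS)]
# Stable operation at a consistently lower output
derated = [300] * HOURS

profiles = {
    "load following": load_following,
    "seasonal": seasonal,
    "stable derated": derated,
}

for name, profile in profiles.items():
    cf = capacity_factor(profile)
    changes = output_changes(profile)
    print(f"{name:15s} capacity factor = {cf:.2f}, output changes = {changes}")
```

All three profiles produce the same annual energy, yet they differ sharply in how often output changes, which is the kind of distinction that monthly averages cannot resolve and that hourly models would need to capture.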

It is well known that departing from baseload operating procedures can adversely impact equipment at coal-fired power plants, but the type and severity of the damage depend on operational decisions. As fossil steam units move further away from baseload operations for extended time periods, negative cumulative equipment impacts build and are evident in the number of unplanned outages. An examination of hourly data is needed to determine if a unit has experienced this type of outage, and calculating outage trends is left to future work. System reliability is also not discussed in this paper, something that may be of increasing concern if the number of outages has indeed grown. In the case that certain operations are found to more adversely impact emission rates or equipment than others, a coordinated system-wide coal plant operational schedule could be beneficial.

If coal plants are run at lower capacity factors to accommodate renewable and natural gas units, their emission rates will likely worsen without corrective action inside the power plant gate. EPA’s CPP gave individual states wide-ranging flexibility to meet emissions reduction targets under their Best System of Emission Reduction (BSER) [33]. One of the BSER building blocks, improving efficiency at existing coal-fired units, coexisted with two other building blocks: shifting generation from coal to natural gas power plants and growing generation from zero-emitting renewable technologies. Yet the movement away from coal towards increased natural gas and renewable generation has, with at least 90 percent certainty, raised coal plant greenhouse gas emission rates in 34 of the 49 units analyzed.

Under future emission-reduction plans, coal plant operations must be reviewed and evaluated with increasing penetrations of intermittent technologies. Cost-effective power plant retrofits that minimize damage from future operational realities, once they are better understood, may become important means for emissions reductions and system reliability preservation. In an emissions-constrained market, if a coal plant operates to minimize overall system emissions while producing electric power at suboptimal plant efficiency levels, a discussion over the assignment of emissions penalties may provide incentives to generate differently in order to accommodate greater system-wide emissions mitigation milestones. As coal-fueled power stations are projected to generate less electricity in the U.S. but remain an integral part of most system operations, coordinated policies that address emerging issues facing these plants are practical necessities.

References

U.S. Energy Information Administration (2016) Henry Hub natural gas spot price. https://www.eia.gov/dnav/ng/hist/rngwhhdm.htm. Accessed 23 July 2016

U.S. Energy Information Administration (2016) 2016 annual energy outlook. U.S. Department of Energy, Washington, USA

U.S. Energy Information Administration (2008) 2008 annual energy outlook. U.S. Department of Energy, Washington, USA

Eilperin (2017) EPA’s Pruitt signs proposed rule to unravel clean power plan. https://www.washingtonpost.com/politics/epas-pruitt-signs-proposed-rule-to-unravel-clean-power-plan/2017/10/10. Accessed 8 October 2017

Sisternes FJD, Jenkins JD, Botterud A (2016) The value of energy storage in decarbonizing the electricity sector. Appl Energy 175:368–379

Cochran J, Lew D, Kumar N (2013) Flexible coal: evolution from baseload to peaking plant. https://digital.library.unt.edu/ark:/67531/metadc869995/m2/1/high_res_d/1110465.pdf. Accessed 10 December 2016

Stoft S (2002) Power system economics: designing markets for electricity. IEEE Press, Hoboken

California Independent System Operator (2016) Today’s outlook. http://www.caiso.com/outlook/outlook.html. Accessed 13 September 2016

U.S. Energy Information Administration (2012) Reserve electric generating capacity helps keep the lights on. http://www.eia.gov/todayinenergy/detail.cfm?id=6510. Accessed 14 August 2016

Verdolini E, Vona F, Popp D (2016) Bridging the gap: do fast reacting fossil technologies facilitate renewable energy diffusion? http://www.nber.org/papers/w22454. Accessed 20 October 2016

GE Energy (2010) The western wind and solar integration study: phase 1. GE Energy, Schenectady

U.S. Energy Information Administration (2016) Levelized cost and levelized avoided cost of new generation resources in the annual energy outlook 2016. U.S. Department of Energy, Washington

Joskow PL (2011) Comparing the costs of intermittent and dispatchable electricity generating technologies. Am Econ Rev: Pap Proc 101(3):238–241

Fowlie (2016) The duck has landed. https://energyathaas.wordpress.com/2016/05/02/the-duck-has-landed. Accessed 20 June 2016

Baker DR (2016) As solar floods California grid, challenges loom. http://www.sfgate.com/business/article/As-heatwave-bakes-CA-solar-sets-a-big-record-8379331.php. Accessed 7 July 2016

U.S. Energy Information Administration (2016) How much carbon dioxide is produced when different fuels are burned? https://www.eia.gov/tools/faqs/faq.cfm. Accessed 10 September 2016

U.S. Department of Energy (2016) Renewable electricity production tax credit (PTC). http://energy.gov/savings/renewable-electricity-production-tax-credit-ptc. Accessed 10 September 2016

Levin T, Botterud A (2015) Electricity market design for generator revenue sufficiency with increased variable generation. Energy Policy 87:392–406

Electric Power Research Institute (2015) Program on technology innovation: fossil fleet transition with fuel changes and large scale variable renewable integration. EPRI, Palo Alto

Kumar N, Besuner P, Agan LD et al (2012) Power plant cycling costs. Intertek APTECH, Sunnyvale

Lew D, Brinkman G, Ibanez E et al (2013) The western wind and solar integration study: phase 2. National Renewable Energy Laboratory, Golden

Davidson MR, Zhang D, Xiong WM et al (2016) Modelling the potential for wind energy integration on China’s coal heavy electricity grid. Nat Energy. https://doi.org/10.1038/nenergy.2016.86

Electric Power Research Institute (2001) Damage to power plants due to cycling. EPRI, Palo Alto

Electric Power Research Institute (2013) Flexible operation of current and next-generation coal plants, with and without carbon capture. EPRI, Palo Alto

Hart M, Bassett L, Johnson B (2017) Everything you think you know about coal in China is wrong. https://www.americanprogress.org/issues/green/reports/2017/05/15/432141/everything-think-know-coal-china-wrong. Accessed 15 June 2017

Valentino L, Valenzuela V, Botterud A et al (2012) System-wide emissions implications of increased wind power penetration. Environ Sci Technol 46(7):4200–4206

Miller NW, Shao M, Pajic S et al (2014) The western wind and solar integration study phase 3—frequency response and transient stability. GE Energy, Schenectady

Jean J, Borrelli DC (2016) Mapping the economics of U.S. coal power and the rise of renewables. Massachusetts Institute of Technology, Cambridge

U.S. Environmental Protection Agency (2017) Air markets program data. https://ampd.epa.gov/ampd. Accessed 15 July 2016

Colman J (2013) The effect of ambient air and water temperature on power plant efficiency. Dissertation, Duke University

National Centers for Environmental Information (2017) National oceanic and atmospheric administration. http://www.ncdc.noaa.gov. Accessed 18 July 2016

U.S. Energy Information Administration (2010) Status of electricity restructuring by state. http://www.eia.gov/electricity/policies/restructuring/restructure_elect.html. Accessed 19 July 2016

U.S. Environmental Protection Agency (2016) Fact sheet: clean power plan key changes and improvements. https://www.epa.gov/cleanpowerplan/fact-sheet-clean-power-plan-key-changes-and-improvements. Accessed 12 September 2016

Additional information

Disclaimer The contents of this article represent the author’s views and do not necessarily represent the official views of the U.S. Department of Energy.

CrossCheck date: 28 March 2018

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

SMITH, R.K. Evolving coal-fired power plant carbon dioxide emission rate intensities on U.S. electricity operating systems. J. Mod. Power Syst. Clean Energy 6, 1103–1112 (2018). https://doi.org/10.1007/s40565-018-0414-4

DOI: https://doi.org/10.1007/s40565-018-0414-4