Abstract

As the reliability of electrical equipment improves, people’s unsafe behaviors and human errors have become one of the main sources of risk in power systems. However, there is no comprehensive study on human factors and human reliability analysis in power systems. To address this gap, this paper analyzes the impact of human factors on power system reliability. First, this paper introduces the current situation of human factors in power systems and the latest research progress in this field. Several analysis methods are proposed for specific situations, and these methods are verified with practical power system cases. On this basis, this paper illustrates how human factors affect power system operation reliability from 2 typical aspects: imperfect maintenance caused by human errors, and the impact of human factors on emergency dispatch operation and power system cascading failure. Finally, based on information decision and action in crew (IDAC), a novel dispatcher training evaluation simulation system (DTESS) is established, which can incorporate all influencing factors. Once fully developed, DTESS can be used to simulate dispatchers’ responses to an initial event and to improve power system dispatching reliability.


1 Introduction

Electrical energy is a basic resource for the national economy and people’s lives. Power systems play a key role in power generation and transmission. In the past few decades, power systems have expanded enormously in scale and become more complex in structure. As a result, reliability is becoming an important issue. With the development of the smart grid, electrical equipment reliability and automation technology have improved on a large scale. However, power systems cannot yet operate without humans, and people’s unsafe behaviors and human errors can have a great impact on power systems [1]. To further improve power system reliability, it is necessary to take human factors into consideration.

An analysis of major power system incidents in recent decades shows that human factors contributed significantly to these failures [2]. Human errors are identified as one of the main causes of the blackout in North America in August 2003 [3] and the Italian blackout in September 2003 [4]. Human errors can occur in any situation involving people, such as power system operation, electrical equipment maintenance and power system dispatching [5]. Although the importance of human factors in power systems is gradually being recognized, there is little research in this area.

For better analysis of human factors’ impact on power system reliability, we should figure out human error mechanism and recognize how human errors occur. Proper analysis methods are necessary, especially for quantitative assessment. Furthermore we need to demonstrate the influence of human factors on power system from several main aspects. After this, we could obtain some measures to improve human operational reliability.

In this paper we give a comprehensive introduction to human errors and some common accidents resulting from human factors. According to specific operation scenarios, we establish several models of human factors and propose corresponding methods for human reliability analysis (HRA). These methods are verified with practical power system cases. On this basis, we establish a modified maintenance model considering imperfect maintenance caused by human errors. Furthermore, the influence of human factors on dispatching operation and power system cascading failure is analyzed using the IEEE 24-bus test system. Finally, a novel Dispatcher Training Evaluation Simulation System based on information decision and action in crew (IDAC) is established, which can consider all the influencing factors. Once fully developed, it can be used for dynamic assessment of dispatchers in order to identify operators’ shortcomings and improve power system dispatching reliability.

2 Human errors and human factors in power systems

Human errors can be defined as any human actions, both cognitive and physical, that potentially or actually result in negative effects on system’s normal functions [6]. As power systems become more complex, human operators are supposed to work in various situations, and they may encounter all kinds of emergencies. If human behaviors exceed an acceptable limit, it could lead to a disaster.

The final report on the August 14, 2003 blackout in the United States and Canada shows that dispatchers’ inadequate monitoring of the grid state was an important cause of the cascading failure [3]. In the Moscow blackout of May 25, 2005, dispatchers failed to take measures after a large number of trips, which caused the accident to expand [7]. On May 7, 2004, the Golmud power grid split from the main grid due to the maloperation of a protection device by substation personnel. On April 1, 2005, a misoperation by operating personnel resulted in a power outage at the 220 kV Lingyuan substation.

Some of these accidents are attributed to human errors, but the causes of the human errors themselves are seldom investigated. Human error is not a cause but a consequence, which is shaped and provoked by upstream factors [8]. Operators’ actions in power systems can be affected by various factors, such as the external environment, the complexity of the operation task, and the operators’ knowledge and experience. We refer to all factors that may cause human errors as human factors. In some studies, performance influencing factors (PIFs) and performance shaping factors (PSFs) [9] are used to describe human factors. PIFs and PSFs are usually classified according to various standards and purposes. Reference [10] proposed a data-informed PIF hierarchy for human reliability analysis, which consists of five categories: organization related, time related, person related, situation related and machine related factors. Our investigation shows that human operation in power systems can be affected by many factors, such as task complexity, operation period, experience and physical state. In different situations, the dominant factors that have the greatest influence on human reliability may differ. Therefore, it is important to determine the exact PIFs according to the actual situation.

3 Human factors modeling and HRA methods in power systems

It is widely recognized that human errors cannot be avoided completely. However, we can take measures to reduce human error probability. Human reliability is the complement of human error. As an essential part of probabilistic safety assessment (PSA), HRA has been widely researched in fields with high reliability requirements, such as nuclear power plants and aerospace [11]. Qualitative and quantitative HRA can be used in system design, operation and optimization in order to improve human reliability. Nevertheless, there are very few studies on HRA in power systems.

With the development of HRA, many methodologies have been established to analyze human errors, such as the technique for human error rate prediction (THERP) [12], the cognitive reliability and error analysis method (CREAM) [13], the human error assessment and reduction technique (HEART) [14] and a technique for human error analysis (ATHEANA) [15]. Moreover, in some references human reliability is assessed using a Markov model with a constant transition rate for human error [16, 17]. In order to capture personnel’s cognitive process when dealing with system failures, several dynamic HRA methods have been proposed, of which IDAC is a typical example [18]. Reference [19] identified requirements for a human reliability model to be integrated into system dynamic probabilistic risk analysis. Reference [20] described the existing dynamic HRA simulations and outlined future work to increase the fidelity of simulated accident scenarios. The lack of appropriate and sufficient performance data has been identified as a key factor affecting HRA quality, especially in the estimation of human error probability. Therefore, the U.S. Nuclear Regulatory Commission (NRC) has been developing an HRA database (SACADA) to satisfy this data need [21].

Note that most of these methods originated in other industries and are not tailored to power systems. It is therefore necessary to propose HRA methods suited to the specific situations of power systems. The primary cause of human errors differs considerably between operation scenarios, so a proper classification of power system operation scenarios is important for human reliability analysis. According to our investigation, power system operation scenarios are classified into 3 categories: time-centered scenarios, process-centered scenarios and emergency-centered scenarios. Three HRA methods, one for each of these scenarios, are then proposed.

3.1 Time-centered HRA (TCHRA)

A time-centered scenario refers to a situation where operators must work for a long time without interruption, such as system state monitoring and new equipment debugging. Operators become fatigued, and the probability of human error increases accordingly. Statistics show that many accidents are caused by fatigue [22]. Continuous working time (CWT) is therefore the primary factor that affects human reliability in this scenario. In addition, other human factors may also influence this process, such as task complexity, environment factors, and human knowledge and experience.

The proportional hazard model (PHM) [23] can be used for quantitative analysis of time-centered scenarios. PHM has been widely used in the fields of engineering, biology and mechanics. The hazard function in PHM consists of two parts: a baseline function and a link function. The hazard function can be expressed as

\[ h(t \mid \boldsymbol{Z}) = h_0(t)\,\psi(\boldsymbol{Z}) \tag{1} \]

where \(h_0(t)\) is the baseline function, which indicates the change of human reliability with CWT; \(\psi(\boldsymbol{Z})\) is the link function, which indicates the influence of the covariates \(\boldsymbol{Z}\) on human reliability. In TCHRA, five main covariates are considered: task complexity \(z_1\), environment factors \(z_2\), human knowledge and experience \(z_3\), human psychology \(z_4\) and physical state \(z_5\). Therefore, it could be defined as

We suppose the influence coefficient of each covariate can be 0, 1 or 2. The larger the influence coefficient, the more effect the factor has on human reliability and the more likely human errors are to occur. γ is the weight value of each covariate. Since available data is limited, the weight values cannot yet be obtained through a fitting process. In this paper, the weight values of the covariates are obtained via the analytic hierarchy process (AHP) [24]. Through expert assessment, the five covariates are compared in pairs with respect to their relative importance to human error probability. Their weights can then be calculated.
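The AHP weighting step can be illustrated with a short sketch. It is not the authors’ implementation: it uses the row geometric-mean approximation of the AHP priority vector rather than an exact eigenvector computation, and the pairwise comparison matrix is hypothetical, since the expert judgments are not given in the text. The random index values are Saaty’s standard ones.

```python
import math

def ahp_weights(pairwise):
    """Approximate AHP priority weights with the row geometric-mean method."""
    n = len(pairwise)
    geo_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geo_means)
    return [g / total for g in geo_means]

def consistency_ratio(pairwise, weights):
    """Saaty consistency ratio; values below about 0.1 are usually accepted."""
    n = len(pairwise)
    # Estimate the principal eigenvalue as the mean of (A w)_i / w_i.
    aw = [sum(pairwise[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    random_index = {3: 0.58, 4: 0.90, 5: 1.12}[n]  # Saaty's random indices
    return ci / random_index

# Hypothetical expert judgments for the five covariates z1..z5
# (entry [i][j] says how much more important covariate i is than j).
pairwise = [
    [1,   2,   2,   4,   4],
    [1/2, 1,   1,   2,   2],
    [1/2, 1,   1,   2,   2],
    [1/4, 1/2, 1/2, 1,   1],
    [1/4, 1/2, 1/2, 1,   1],
]

weights = ahp_weights(pairwise)
print([round(w, 3) for w in weights])
print(round(consistency_ratio(pairwise, weights), 6))
```

For a reciprocal matrix that is close to consistent, the geometric-mean weights are close to the eigenvector weights, which is why this simpler method is often acceptable for a first assessment.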

Assuming the operation begins at time t = 0, then human reliability function could be expressed as

where \(R_{\mathrm{hp}}(t)\) is the probability that no human error has occurred before time t. According to [25], the Weibull distribution can be adopted as the baseline function, as shown in (5).

\[ h_0(t) = \frac{\beta}{\alpha}\left(\frac{t}{\alpha}\right)^{\beta - 1} \tag{5} \]

The parameters can be estimated through careful statistical analysis. According to [25], β = 3, α = 200 hours.

In order to illustrate the relation between human reliability and continuous working hours, we suppose there are three independent scenes. Through expert assessment, the influence coefficients and weight values in the different scenes are obtained, as shown in Table 1.

Then human reliability function could be expressed as

The probability of human error could be expressed as

With the increase of CWT, human error probability changes as shown in Fig. 1.

In Fig. 1, when CWT is less than 10 hours, the human error probability is extremely low (less than \(7 \times 10^{-4}\)). As CWT increases, human error probability increases accordingly. Although staff work less than 10 hours a day under normal conditions, long continuous work still occurs, such as the annual inspection of a main transformer while the whole substation is out of power, and the debugging of new equipment before operation. Our investigation shows that human errors are more likely to happen in these situations.
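The TCHRA calculation can be sketched as follows, under stated assumptions. The sketch assumes a Weibull baseline hazard with shape β = 3 and scale α = 200 hours (the values quoted from [25]) and the usual exponential PHM link \(\psi(\boldsymbol{Z}) = \exp(\sum_i \gamma_i z_i)\), which is an assumption here. The weights and influence coefficients are hypothetical, because Table 1 is not reproduced in the text.

```python
import math

def link(weights, coeffs):
    """Assumed exponential link: psi(Z) = exp(sum_i gamma_i * z_i)."""
    return math.exp(sum(g * z for g, z in zip(weights, coeffs)))

def human_reliability(t_hours, weights, coeffs, alpha=200.0, beta=3.0):
    """R(t) = exp(-H0(t) * psi(Z)), with Weibull cumulative hazard H0(t) = (t/alpha)**beta."""
    cumulative_baseline = (t_hours / alpha) ** beta
    return math.exp(-cumulative_baseline * link(weights, coeffs))

def human_error_probability(t_hours, weights, coeffs, alpha=200.0, beta=3.0):
    """Probability that at least one human error occurs before t_hours."""
    return 1.0 - human_reliability(t_hours, weights, coeffs, alpha, beta)

# Hypothetical weights (summing to 1) and influence coefficients in {0, 1, 2}.
weights = [0.4, 0.2, 0.2, 0.1, 0.1]
favourable = [0, 0, 0, 0, 0]
adverse = [2, 2, 1, 1, 1]

for cwt in (10, 24, 48, 72):
    print(cwt,
          human_error_probability(cwt, weights, favourable),
          human_error_probability(cwt, weights, adverse))
```

Because the covariates only scale the cumulative hazard, an adverse context shifts the whole error-probability curve upward without changing its shape in CWT.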

Thus, in order to ensure operational reliability, we should limit continuous work within reasonable time. Besides, we could take some measures to improve human reliability, such as improving operator’s skill and experience by training, improving operator’s mental and physical state, and making work condition more suitable.

3.2 Process-centered HRA (PCHRA)

A process-centered scenario refers to a situation where the operation task consists of many steps, and operators must follow certain procedures to finish the work. Attention must be paid to the process to avoid human errors. A modified CREAM can be used to analyze this kind of scenario. CREAM [13], proposed by Hollnagel, holds that each cognitive function has several generic failure types. CREAM provides a basic probability value for each generic failure type, which is called the cognitive failure probability (CFP). The nominal values of cognitive function failures are shown in Table 2.

In CREAM, all human factors are divided into 9 categories, called common performance conditions (CPC). The expected influence of CPCs on human reliability could be generalized as three levels: reduced, not significant and improved, shown in Table 3.

The CREAM standard method divides the control mode into four classes: Strategic, Tactical, Opportunistic and Scrambled. Each control mode has a corresponding error probability interval [13]. Although CREAM has been widely accepted and used in many fields, some aspects require improvement. Since the CPCs were not specifically introduced for power systems, we should concretize the CPCs according to the regulations and actual conditions in power systems [26]. For example, working conditions can be divided into three sub-CPCs: personal security requirement, equipment security requirement and environment requirement.

We first assess each sub-CPC, and then obtain the score of each CPC with the analytic hierarchy process. The score of a CPC varies from 0 to 100 according to the concrete conditions, except for Time of day, which varies from 0 to 24. Human reliability analysis is still at an early stage in power systems, and related statistical data are still very scarce. The use of expert systems, such as fuzzy expert systems, can help improve assessments when only limited data are available [27]. In this paper, a triangular fuzzy model is used to reduce the subjectivity of judgment [28].
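As an illustration of the fuzzification step, the sketch below maps a CPC score in [0, 100] to membership degrees in the three CREAM effect levels using triangular membership functions. The breakpoints (0, 50, 100) and the convention that a higher score means a more favourable condition are assumptions made for this example; the paper does not state the actual membership functions.

```python
def triangular(x, a, b, c):
    """Membership of x in a triangular fuzzy set with feet a, c and peak b."""
    if x == b:
        return 1.0
    if a < x < b:
        return (x - a) / (b - a)
    if b < x < c:
        return (c - x) / (c - b)
    return 0.0

# Hypothetical fuzzy sets (a, b, c) for the three expected effects of a CPC.
LEVELS = {
    "reduced": (0.0, 0.0, 50.0),
    "not significant": (0.0, 50.0, 100.0),
    "improved": (50.0, 100.0, 100.0),
}

def cpc_memberships(score):
    """Return the membership degree of a CPC score in each effect level."""
    return {name: triangular(score, *abc) for name, abc in LEVELS.items()}

print(cpc_memberships(25.0))
print(cpc_memberships(80.0))
```

With these overlapping sets the memberships of any score sum to one, so a score near a boundary contributes to two levels instead of being forced into a single one, which is how the fuzzy model softens the subjectivity of a crisp classification.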

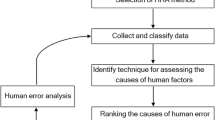

The process of quantifying human error in a process-centered scenario is shown in Fig. 2.

In PCHRA, we should first determine the cognitive function and operation scenario according to concrete operation task. Then we could calculate the basic value of human error probability (HEP) after analyzing the generic failure types. For example, when executing one action, we find the generic failure types are: action of wrong time (E2), action out of sequence (E4) and missed action (E5), then basic HEP value of executing this action could be calculated using the following equation.

After obtaining basic value of HEP, we should analyze the level of CPCs and obtain correction coefficient using (9). Then we can obtain the final result of HEP with (10).

where \(P_{\mathrm{HEP0}}\) is the total basic HEP value of the whole operation task; \(P_{\mathrm{HEP}}\) is the final value of HEP; \(\beta\) is the HEP correction coefficient; \(\rho_i\) is the influence coefficient of CPC\(_i\).

We take Xuyue station as an example for analysis [29]. Switching the main transformer from cold standby to operation requires ten steps, and the operation in Step 2 is shown in Table 4. The cognitive functions and generic failure types in Step 2 are shown in Table 5. With (8), the basic value of HEP in Step 2 is calculated as 0.011, and the HEP of the whole process is 0.0753.

We suppose the operation is conducted in 3 different contexts, where Context 3 represents the worst situation. In Context 3, the organization is inadequate and lacks a sound management system; the working conditions are unpleasant; there is not enough operational support; there are some deficiencies in arrangement; the work is complex and the time load is heavy for the current operators; moreover, the task is conducted at 4 a.m. Context 1 represents the best case of the three contexts, and Context 2 lies somewhere between Context 1 and Context 3. According to the CREAM basic method, Contexts 1, 2 and 3 belong to the Tactical, Opportunistic and Scrambled control modes, respectively.

Through scenario analysis, we could obtain the scores of CPCs in different contexts. Then we could calculate the membership of each level with triangular fuzzy model. With (9) and (10), we could obtain correction coefficient and the final value of HEP, shown in Table 6.

The simulation shows, on the one hand, that the results for the three contexts fall within the probability interval of the corresponding control mode, which supports the validity of the proposed method. On the other hand, Context 1 is more suitable for human operation than Context 2 and Context 3, which demonstrates the impact of CPCs on human operation quantitatively. We could also calculate the change in human reliability when a CPC differs. Furthermore, we could take targeted measures according to the simulation results. For example, if time of day (4 a.m. in Context 3) is a main influencing factor, the work could be rescheduled to the daytime if possible in order to improve human reliability.

3.3 Emergency-centered HRA (ECHRA)

An emergency-centered scenario refers to a situation where a power system failure has occurred and operators need to react in a short time, including diagnosing the fault and taking proper measures. In this scenario, human reliability has a significant effect on clearing faults and restoring system reliability. The human cognitive reliability (HCR) [30] method can be used to quantify HEP in emergency-centered scenarios.

According to different ways of response, human behavior is usually divided into 3 categories. This classification is commonly known as skill-based, rule-based and knowledge-based (SRK) framework [31]. Skill-based behavior is assumed to be highly integrated patterns of behavior. Since the operator is so familiar with the situations, human behavior takes place without conscious attention. Rule-based behavior refers to executing routine tasks according to regulations strictly. This type of behavior is typically controlled by a stored procedure. During unfamiliar situations, no procedures are available, and human behavior is considered as knowledge-based. The operator has to rely upon their knowledge to make decisions and deal with the operation task.

Human errors are more likely to occur in knowledge-based situations and less likely to occur in skill-based situations. According to survey results [30], once the operation task, scenario and operators are determined, human error probability depends only on the ratio of the operation allowable time (\(t\)) to the operation execution time (\(T_{1/2}\)). The relationship can be expressed with a three-parameter Weibull distribution function, which is shown in (11).

\[ P(t) = \exp\left\{-\left[\frac{t/T_{1/2} - \gamma}{\alpha}\right]^{\beta}\right\} \tag{11} \]

where \(P(t)\) is the probability of human error; \(\alpha\), \(\beta\), \(\gamma\) are the scale, shape and location parameters, whose values are determined by the operation category [32], as shown in Table 7; \(t\) is the operation allowable time, which is determined by the power system characteristics; \(T_{1/2}\) is the operation execution time, which can be obtained by (12).

\[ T_{1/2} = T_{1/2,n}\,(1 + K_1)(1 + K_2)(1 + K_3) \tag{12} \]

where \(T_{1/2,n}\) is the average execution time in a regular situation, which can be obtained from statistics; \(K_1\), \(K_2\), \(K_3\) are the adjustment coefficients of the execution time for training, operator mental state and operation support, respectively.

For example, when a line protection channel fault occurs, the main protection should be taken out of operation manually. This process is supposed to be finished within 8 minutes. Our investigation shows that the average time to finish the job is about 5 minutes, depending on the operator. Operator A has experience in dealing with such situations, while Operator B is inexperienced and must follow the regulations to finish the job. The parameters are defined in Table 8.

From Table 8, we can see that Operator A might fail to finish the operation with a probability of 0.005, while the probability is 0.341 for Operator B. We can conclude that in emergency situations, experience, operation time and psychological state strongly affect human operation. Well-trained, high-quality operators are therefore essential to enhancing human reliability in emergency conditions.
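The ECHRA calculation can be sketched in a few lines. The sketch assumes the commonly used HCR form \(P(t) = \exp\{-[(t/T_{1/2} - \gamma)/\alpha]^{\beta}\}\) with \(T_{1/2} = T_{1/2,n}(1+K_1)(1+K_2)(1+K_3)\), and the (α, β, γ) values commonly quoted for the HCR model in the literature; these are not taken from Table 7, which is not reproduced here. The K coefficients in the usage lines are illustrative, so the printed values are not expected to match Table 8.

```python
import math

# Commonly quoted HCR coefficients (alpha, beta, gamma) per behavior type.
# Assumption: these stand in for Table 7, which is not reproduced in the text.
HCR_PARAMS = {
    "skill": (0.407, 1.2, 0.7),
    "rule": (0.601, 0.9, 0.6),
    "knowledge": (0.791, 0.8, 0.5),
}

def execution_time(t_half_nominal, k1, k2, k3):
    """Adjust the nominal median execution time by the three K coefficients."""
    return t_half_nominal * (1.0 + k1) * (1.0 + k2) * (1.0 + k3)

def hcr_error_probability(t_allow, t_half, behavior):
    """Probability that the operator has not responded within t_allow."""
    alpha, beta, gamma = HCR_PARAMS[behavior]
    x = t_allow / t_half - gamma
    if x <= 0.0:
        return 1.0  # allowable time is below the location threshold
    return math.exp(-((x / alpha) ** beta))

# Illustrative case: 8 min allowed, 5 min nominal execution time.
t_half_a = execution_time(5.0, -0.22, 0.0, 0.0)  # experienced operator (hypothetical K)
t_half_b = execution_time(5.0, 0.44, 0.0, 0.0)   # inexperienced operator (hypothetical K)
print(hcr_error_probability(8.0, t_half_a, "skill"))
print(hcr_error_probability(8.0, t_half_b, "rule"))
```

The model is very sensitive to the ratio of allowable to execution time, which is why training (a smaller \(K_1\)) reduces the error probability so strongly in time-critical tasks.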

4 Impact of human factors on maintenance

Electrical equipment maintenance is important for keeping power systems stable, prolonging the service life of equipment and reducing system power loss. According to statistics on grid accidents, mistakes by maintenance personnel account for a large proportion [33]. In this part, we first establish a periodic maintenance (PM) model considering imperfect maintenance caused by human factors, and then demonstrate the impact of human factors on maintenance availability with a simple case.

4.1 Electrical equipment maintenance model considering imperfect maintenance

In most cases, analysts assume that maintenance is totally perfect, which is unrealistic. Effect of maintenance could be weakened by human factors, and more than that, the system occasionally becomes even worse due to human errors [34]. Several common human errors and their external forms in maintenance are listed below.

1) Latent failures which are not detected during maintenance due to operators' insufficient awareness.

2) Wrong adjustments, incorrect estimations of system states and inappropriate decisions.

3) Replacement with faulty parts and damage introduced during maintenance, which could be attributed to human action errors.

The results of maintenance will be quite different due to different levels of human reliability, as shown in Fig. 3 [35]. According to maintenance quality, the results can differ from perfect maintenance to maintenance failure. Since we aim to demonstrate the impact of human errors on equipment maintenance in this paper, we make two assumptions: ① other factors are completely reliable except human factors; ② results of maintenance consist of three categories considering human factors.

Category 1: perfect maintenance, denoted as PM, namely the system becomes as good as new after maintenance.

Category 2: as bad as old, denoted as ABAO, namely the system state does not change after maintenance.

Category 3: failure after maintenance, denoted as FAM, namely maintenance failure occurs, and the system needs repair after maintenance.

When human errors occur, the maintenance is considered imperfect. The probability of human error (hep) can be obtained with the PCHRA method proposed in Section 3. The fraction of human errors that cause maintenance failure is defined as ξ. The probabilities of PM, ABAO and FAM can be expressed as follows.

\[ P_{\mathrm{PM}} = 1 - hep, \qquad P_{\mathrm{ABAO}} = (1 - \xi)\,hep, \qquad P_{\mathrm{FAM}} = \xi\,hep \]

It is assumed that the system begins as new and its age is set to t = 0. The maintenance period is ΔT and the duration of each maintenance is Δt. If the system fails during operation, it is repaired with mean time \(\mu_2\). If the system fails after maintenance, the mean repair time is \(\mu_1\). Under normal circumstances, \(\mu_1\) is smaller than \(\mu_2\), since a failure during operation is an emergency and the repair is not prepared in advance. If the system state does not change after maintenance, the system continues operating. If the maintenance is perfect, or the system is repaired after a failure, the system is renewed and the system age returns to 0. The equipment periodic maintenance model is shown in Fig. 4.

Given the above description, the relation can be derived as follows.

where \(R(t)\) is the reliability function; \(F(t)\) is the cumulative distribution function of system failure; \(P_{\mathrm{pm}}\), \(P_{\mathrm{af}}\), \(P_{\mathrm{mf}}\) are the probabilities that the system is renewed by perfect maintenance, by repair after actual failure and by repair after maintenance failure, respectively. The mean time to renewal (MTTR) is

The availability of system could be expressed as (20) when maintenance time is neglected.

Through a simple case, we will analyze the impact of human errors on maintenance availability.
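The renewal logic described above can also be explored with a small Monte Carlo sketch instead of the analytical expressions. It rests on assumptions that are not from the paper: the outcome probabilities are taken as 1 − hep for PM, (1 − ξ)·hep for ABAO and ξ·hep for FAM; maintenance time is neglected; repair times are replaced by their means; and the Weibull lifetime in the usage lines is a hypothetical stand-in for the system reliability function.

```python
import random

def outcome_probabilities(hep, xi):
    """Return (P_PM, P_ABAO, P_FAM) for one maintenance action."""
    return 1.0 - hep, (1.0 - xi) * hep, xi * hep

def simulate_availability(hep, xi, period, mu1, mu2, sample_lifetime,
                          cycles=20000, seed=0):
    """Estimate availability as total uptime / (uptime + repair time)."""
    rng = random.Random(seed)
    p_pm, _p_abao, p_fam = outcome_probabilities(hep, xi)
    uptime = downtime = 0.0
    for _ in range(cycles):
        lifetime = sample_lifetime(rng)  # time to failure from age 0
        age = 0.0
        while True:
            if lifetime <= age + period:
                # Failure during operation: emergency repair, then renewal.
                uptime += lifetime
                downtime += mu2
                break
            age += period  # the system survives until the next maintenance
            u = rng.random()
            if u < p_pm:
                uptime += age  # perfect maintenance renews the system
                break
            if u < p_pm + p_fam:
                uptime += age  # maintenance failure: prepared repair, renewal
                downtime += mu1
                break
            # Otherwise ABAO: the system keeps operating at the same age.
    return uptime / (uptime + downtime)

if __name__ == "__main__":
    # Hypothetical lifetime: Weibull with scale 1.0 and shape 2.0 (normalized time).
    weibull = lambda rng: rng.weibullvariate(1.0, 2.0)
    for hep in (0.05, 0.5, 0.9):
        a = simulate_availability(hep, 0.7, 0.3, 0.05, 0.1, weibull)
        print(f"hep={hep:.2f} availability={a:.4f}")
```

Sweeping `period` for several values of `hep` gives a simulation counterpart of an availability-versus-maintenance-period curve, which can be used to sanity-check an analytical result under the same assumptions.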

4.2 Results of case study

The proposed methodology is illustrated using a system of 3 units [36] and the reliability function of this system is

In this case, we assume hep increases from 0.05 to 0.9, ξ is 0.7, and the ratio \(\mu_1/\mu_2\) is 0.5. As the maintenance period varies, maintenance availability changes as shown in Fig. 5. ΔT* is normalized by \(10^4\) hours. For example, if ΔT* = 0.02, then ΔT = 0.02 × \(10^4\) = 200 hours.

It can be seen that when hep increases from 0.05 to 0.9, the maintenance availability decreases if ΔT* is less than 1, where ΔT* = 1 corresponds to the mean time to failure (MTTF) of the system. When hep is smaller than 0.5, there exists an optimal maintenance period that maximizes the availability. When hep is larger than 0.5, maintenance can no longer improve the system availability because of the negative effect of human errors.

From the results of case study, we can see that human errors affect maintenance availability a lot, and we should take human factors into consideration when determining the optimal maintenance period.

5 Impact of human factors on dispatching operation

Reasonable dispatching is a key part of keeping power systems reliable and secure. However, dispatching operation faces risks due to uncertainties, such as adverse weather, equipment state and human errors. Common human errors in power system dispatching fall mainly into three categories.

1) Insufficient situation awareness: dispatchers fail to acquire comprehensive system information in time, or fail to understand the system state correctly.

2) Dispatch decision errors: dispatchers might make wrong dispatch decisions due to insufficient experience or time pressure.

3) Dispatch action errors: physical action mistakes that occur during operation, including action of wrong type, action on wrong object or missed action.

An analysis of recent grid accidents shows that human errors have a great influence on power system reliability, especially in emergency situations. Human error probability in an emergency situation can be calculated with the ECHRA method proposed in Section 3. Since the allowable time is short, dispatchers might make mistakes under great pressure. In this part, we analyze the impact of human errors on emergency dispatch and on the development of power system cascading failures.

5.1 Impact of human factors on cascading failures

Cascading failure is one of the main causes of power system blackouts. Under normal conditions, transmission lines operate with a certain initial power load. However, a single outage may result in thermal overload of other lines. If the overload cannot be removed within the permitted time, more components will be tripped one by one, which increases the probability of cascading outage and blackout. The allowable time to relieve a line overload is shown in Table 9 [37].

In Table 9, \(P_{\mathrm{CON}}\), \(P_{\mathrm{LTE}}\) and \(P_{\mathrm{STE}}\) are the continuous, long-time and short-time emergency ratings. We define the third condition as critical overload, because the overloaded lines should be tripped immediately. In the initial stage of a cascading failure, also called the pre-cascading stage, dispatchers have enough time to take measures and prevent the failure from spreading. If dispatchers fail to restore the power system to a normal state at this stage, it enters the fast-cascading stage, which can result in cascading outage and load disconnection.

In this part, we only consider critical overload. Due to human errors, dispatchers may fail to finish the work in the pre-cascading stage. We evaluate the impact of human errors on dispatch operation in emergency conditions. During the evaluation, shown in Fig. 6, we conduct an “N − 1” test of the system, in which each line in turn is tripped as the initial event. A DC power flow is used for this analysis, and any critically overloaded line is tripped. The dispatch operation is executed correctly with a probability equal to the human reliability.

5.2 Case study of IEEE RTS 79 system

In this part, the IEEE RTS 79 system [38] is used for analysis. We suppose generators #10 and #18 are out of service for maintenance, and line capacity is adjusted. If Line 4 is tripped because of a failure and operators fail to take any measures, the system state will develop as follows.

From Table 10, we can see that Line 28 will be overloaded after the outage of Line 4. Line 28 will then be tripped once the allowable time is exceeded, and as a result Lines 24, 25 and 26 will be overloaded. In the next stage, Lines 24-26, 30-33 and 38 will be tripped by automatic devices. At this point Buses 17, 18, 21 and 22 are isolated. As the stages progress, the outage keeps extending. In Stage 5, more than half of the buses are isolated and the system is split.

While the failure is spreading, if operators take proper measures, such as generation re-dispatching, it is possible to avoid system splitting. The amount of load shedding depends on the stage at which the system is stabilized.

From Table 11, we can see that if the system is stabilized at Stage 1 or 2, there will be no load shedding. The loss of load will increase to 657 MW and 1401 MW if the emergency dispatching operation succeeds at Stages 3 and 4, respectively. If all operations fail, the system will lose the whole load.

Emergency dispatch operation may fail with a certain probability p in every stage. When the system is stabilized after one successful operation, the amount of lost load (\(P_{\mathrm{LOSS}}\)) could be expressed as

where \(P_{\mathrm{LOSS}(1)}\), \(P_{\mathrm{LOSS}(2)}\), \(P_{\mathrm{LOSS}(3)}\), \(P_{\mathrm{LOSS}(4)}\) are the lost loads at each stage. Note that we only consider the first 4 stages, because the system will probably split if it cannot be stabilized within the first 4 stages.
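One plausible reading of this expectation is sketched below, assuming that the dispatch operation fails independently with the same probability p at every stage and that the first success stabilizes the system. The stage losses 0, 0, 657 and 1401 MW come from the Table 11 discussion above. The optional `loss_if_all_fail` term is an addition for illustration, since the text only considers the first four stages.

```python
def expected_loss(p_fail, stage_losses, loss_if_all_fail=0.0):
    """Expected lost load when the first successful dispatch stabilizes the system.

    Stage k contributes p_fail**(k-1) * (1 - p_fail) * stage_losses[k-1].
    """
    expected = 0.0
    reach = 1.0  # probability that every earlier operation failed
    for loss in stage_losses:
        expected += reach * (1.0 - p_fail) * loss
        reach *= p_fail
    # Optional tail term for the case where every operation fails (assumption).
    return expected + reach * loss_if_all_fail

stage_losses_mw = [0.0, 0.0, 657.0, 1401.0]
for p in (0.01, 0.1, 0.3):
    print(p, expected_loss(p, stage_losses_mw))
```

Because the losses are zero in the first two stages, the expected loss grows roughly with p squared for small p, which explains why a modest reduction in human error probability yields a large reduction in load shedding.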

We suppose there are 3 scenarios, and the human operation error probability p could be calculated with proposed ECHRA method. Results of p are shown in Table 12. Line transmission capacity is set as 75% of the rated capacity. After the evaluation shown in Fig. 6, we get the following results.

From Table 12, we can see that the lost load is minimal in Scenario 1 and maximal in Scenario 3. In Scenario 1, human operation reliability is the highest, and operators can carry out emergency dispatching to stabilize the system early. In Scenario 3, human error probability is the largest, so failures can spread due to human factors. As a result, more load is shed by the time the system is stabilized.

It should be noticed that, in this section we aim to analyze the impact of human errors on emergency dispatch and the development of power system cascading failures. To simplify the discussion, we neglect the dependency among operators. Further research will be conducted to establish a comprehensive analysis model for dispatching operation, which focuses on the whole crew instead of single operator.

6 Dispatcher training evaluation simulation system

From the analysis in Section 5, we can conclude that human factors have a great impact on dispatch operation and power system reliability. Human error theory and human reliability analysis should therefore be applied in practice. In this part, we propose a framework for a dispatcher training evaluation simulation system (DTESS), which can be used as a tool for dispatcher training simulation. Unlike a conventional dispatcher training simulator (DTS) [39], DTESS is completely based on simulation, and the operators’ cognition process is modeled with the IDAC method. Once fully developed, it can simulate dispatchers’ responses to various conditions in detail. The framework of DTESS is shown in Fig. 7.

As shown in Fig. 7, DTESS consists of 4 modules: main program module, operator module, scheduler module and power system simulator module.

1) Operator module. The operator module aims to model dispatchers’ responses to the system in different situations. Based on IDAC, this module mainly consists of three parts: the I-D-A cognition model [40], performance influencing factors [41] and rules of behavior [42]. The operators’ state is initialized at the very beginning. During the evaluation, some static PIFs stay unchanged over a period of time, while dynamic PIFs should be assessed at every time step. According to the rules of behavior, this module generates operator actions.

Dispatchers are required to undergo various training courses and tests, such as skill training, security testing, psychological testing and qualification grading. All these results could be taken into account to model the dispatcher more precisely.

2) Scheduler module. DTESS uses a dynamic event tree (DET) [43] to represent scenario development following an initiating event. This module controls the evolution of event sequences, and branches are generated whenever the system state changes or operators take actions at a time point. Termination criteria should be determined before the simulation, for example the branch probability falling below a specified value or the power system splitting into disconnected parts. A sequence is terminated when a termination criterion is met.

Another function of this module is to save information at each point, such as the state of the power system, the dispatchers’ actions and the branch probability. Details of the dispatchers’ operation can be obtained by retrieving this information.

3) Dispatcher simulator training module. This module includes most parts of a conventional DTS. It simulates the static or dynamic processes of power systems, including the behavior of relays and automatic equipment. Another function of the control center model is to provide interaction between the power system and the dispatcher. On the one hand, it reveals the present state of the power system to the dispatcher model through data acquisition, data processing, event and alarm processing, remote adjustment and control, man-machine interface, etc. On the other hand, actions from dispatchers are implemented with this model.

4) Main program module. The main program module is the controlling part of the framework, managing the calls to the other modules. The general flow of DTESS is shown in Fig. 8. At the beginning of the evaluation, the state of the power system and the levels of PIFs are initialized. According to the dispatcher model and the power system model, the scheduler model decides whether DET branches are generated. If there is more than one branch, the scheduler model saves the branch information and proceeds with simulating the first branch until it meets the termination criteria. Then the end state information is stored and the next branch information is loaded to continue the simulation. When all the sequences have been simulated, the simulation terminates.
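The flow described above can be sketched in Python as follows. This is a minimal sketch under strong assumptions, not the DTESS implementation: the `Branch` record, the `expand` rule and the parameters `p_error`, `max_stages` and `min_probability` are hypothetical. The operator and power system modules are replaced by a toy rule in which the dispatcher either acts correctly (assumed here to stabilize the system) or commits an error (the failure moves to the next stage, and the system is assumed to split after `max_stages` consecutive errors).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Branch:
    stage: int            # number of stages reached so far
    probability: float    # joint probability of the path leading here
    stable: bool = False  # assumption: a correct action stabilizes the system
    split: bool = False   # power system has split into disconnected parts
    path: tuple = ()      # recorded dispatcher outcomes along the sequence


def terminated(branch, min_probability):
    """Termination criteria: stabilized, split, or negligible probability."""
    return branch.stable or branch.split or branch.probability < min_probability


def expand(branch, p_error, max_stages):
    """Toy branching rule standing in for the operator and power system modules."""
    stage = branch.stage + 1
    correct = Branch(stage, branch.probability * (1.0 - p_error),
                     stable=True, path=branch.path + ("ok",))
    error = Branch(stage, branch.probability * p_error,
                   split=stage >= max_stages, path=branch.path + ("error",))
    return [correct, error]


def run_det(p_error, max_stages=4, min_probability=1e-6):
    """Simulate the first branch to termination, then load the saved ones."""
    pending = [Branch(stage=0, probability=1.0)]  # saved branch information
    end_states = []
    while pending:
        current = pending.pop()                   # load the next saved branch
        while not terminated(current, min_probability):
            first, *others = expand(current, p_error, max_stages)
            pending.extend(others)                # save the remaining branches
            current = first                       # proceed with the first branch
        end_states.append(current)                # store the end state
    return end_states
```

Calling `run_det(p_error=0.1)` returns one `Branch` per terminated sequence. Keeping the saved branches on a stack gives a depth-first traversal of the event tree, which matches the "simulate the first branch, then load the next one" order in the text; a queue would work equally well if breadth-first order were preferred.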

Compared with DTS, DTESS has several advantages. First, DTESS could record dispatchers’ actions, both cognitive and physical, in response to an initial event in detail. Furthermore, since all the probabilities are stored, we can assess a dispatcher more objectively and accurately. Through analysis of the simulation results, we can identify operators’ shortcomings and improve dispatch reliability. In addition, DTESS could be used to assess the quality of other training, such as security training and skill training, by adjusting the operator module.
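As an illustration of how stored branch probabilities could support such an assessment, the following sketch aggregates end-state records into two probability-weighted indicators. The record fields (`probability`, `errors`, `split`), the function `summarize` and the numbers in `records` are hypothetical and serve only as an illustration; they are not results from this paper.

```python
def summarize(records):
    """Aggregate stored end states into probability-weighted indicators."""
    total = sum(r["probability"] for r in records)
    if total <= 0:
        raise ValueError("records carry no probability mass")
    p_split = sum(r["probability"] for r in records if r["split"])
    expected_errors = sum(r["probability"] * r["errors"] for r in records)
    # Normalize by the stored mass in case low-probability branches were truncated.
    return {
        "p_split": p_split / total,
        "expected_errors": expected_errors / total,
    }


# Hypothetical end-state records for one simulated dispatcher.
records = [
    {"probability": 0.90, "errors": 0, "split": False},
    {"probability": 0.09, "errors": 1, "split": False},
    {"probability": 0.01, "errors": 2, "split": True},
]

summary = summarize(records)
```

Comparing such indicators before and after a training course, with the operator module adjusted accordingly, is one way the stored sequences might be used to judge the effect of the training.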

7 Conclusion

Human factors have a great impact on power system reliability; however, there has been little research in this field. In view of this situation, we attempt to analyze the impact of human factors on power system reliability comprehensively. The main contributions of this paper are as follows.

1) Through analyzing human errors and operation scenarios in power systems, we established human factor models and proposed 3 human reliability analysis methods. Since these methods are based on the practical characteristics of power system operation scenarios, they are suitable for power systems, and they have been verified with practical power system cases.

2) We analyzed the impact of human factors on maintenance. Because of human errors, electrical equipment maintenance cannot always be perfect, and maintenance availability can be significantly affected. It is therefore necessary to take human factors into consideration when determining maintenance policy.

3) We analyzed the impact of human errors on emergency dispatch. The analysis and evaluation results demonstrate that improving human operation reliability could avoid cascading failures and reduce power loss.

4) Based on IDAC, we proposed a framework for a dispatcher training evaluation simulation system (DTESS), which could be used as a tool for dispatcher training simulation. It could take all the influencing factors into account and make a comprehensive assessment of dispatchers. With DTESS, we can identify operators’ shortcomings and improve dispatch reliability.

Human reliability analysis in power systems is a noteworthy issue that deserves more attention. Further research is needed on how to quantify human error probability, on the influence of human factors on power systems, and on measures to reduce human errors and enhance power system reliability.

References

Fang YJ, Bao YH, Xu TS et al (2012) Coordinated prevention based on manual and automatic emergency control. Autom Electr Power Syst 36(22):6–11

Kirschen D, Bouffard F (2009) Keeping the lights on and the information flowing. IEEE Power Energy Mag 7(1):50–60

US-Canada Power System Outage Task Force (2004) Blackout 2003: final report on the August 14, 2003 blackout in the United States and Canada: causes and recommendations. Office of Electricity Delivery & Energy Reliability, Washington, DC

Interim report of the Investigation Committee on the 28 September 2003 blackout in Italy. Union for the Co-ordination of Electricity Transmission (UCTE), Brussels, Belgium, 2003

Guo CX, Lu HB, Yu B et al (2013) A survey of research on security risk assessment of secondary system. Power Syst Technol 37(1):112–118

Koval DO, Floyd HL (1998) Human element factors affecting reliability and safety. IEEE Trans Ind Appl 34(2):406–414

Lu S, Gao LQ, Wang K et al (2006) Analysis and inspiration on blackout of Moscow. Relay 34(16):27–31

Reason J (1990) Human error. Cambridge University Press, New York

Lee SW, Kim AR, Ha JS et al (2011) Development of a qualitative evaluation framework for performance shaping factors (PSFs) in advanced MCR HRA. Ann Nucl Energy 38(8):1751–1759

Groth KM, Mosleh A (2012) A data-informed PIF hierarchy for model-based human reliability analysis. Reliab Eng Syst Safe 108:154–174

Pyy P (2000) An approach for assessing human decision reliability. Reliab Eng Syst Safe 68(1):17–28

Swain AD, Guttmann HE (1985) Handbook of human reliability analysis with emphasis on nuclear power plant applications. NUREG/CR-1278, US Nuclear Regulatory Commission, Washington, DC, USA

Hollnagel E (1998) Cognitive reliability and error analysis method (CREAM). Elsevier, Amsterdam

Williams JC (1986) HEART: a proposed method for assessing and reducing human error. In: Proceedings of the 9th advances in reliability technology symposium (ARTS’86), Bradford, UK, Jun, 1986, B3.R/1-13

Cooper SE, Ramey-Smith AM, Wreathall J et al (1996) A technique for human error analysis (ATHEANA). NUREG/CR-6350, US Nuclear Regulatory Commission, Washington, DC, USA

Dhillon BS (1982) Stochastic models for predicting human reliability. Microelectron Reliab 22(3):491–496

Liboa JJ (1988) Quantification of human error and common mode failures in man-machine systems. IEEE Trans Energy Convers 3(2):292–299

Chang YHJ, Mosleh A (2007) Cognitive modeling and dynamic probabilistic simulation of operating crew response to complex system accident—Part 1: overview of the IDAC model. Reliab Eng Syst Safe 92(8):997–1013

Sundaramurthi R, Smidts C (2013) Human reliability modeling for the next generation system code. Ann Nucl Energy 52(2):137–156

Joe JC, Shirley RB, Mandelli D et al (2015) The development of dynamic human reliability analysis simulations for inclusion in risk informed safety margin characterization frameworks. In: Proceedings of the 6th international conference on applied human factors and ergonomics (AHFE’15) and the affiliated conferences, Las Vegas, NV, USA, 26–30 Jul 2015, pp 1305–1311

Chang Y, Bely D, Criscione L et al (2014) The SACADA database for human reliability and human performance. Reliab Eng Syst Safe 125:117–133

Guo XY (2007) Research on integrated control operation staff’s fatigue problem of electrical power enterprise. Master Thesis, Beijing Jiaotong University, Beijing, China

Cox DR (1992) Regression models and life-tables. In: Breakthroughs in statistics. Springer, New York, pp 527–541

Saaty TL (1980) The analytic hierarchy process: planning, priority setting, resource allocation. McGraw-Hill, New York

Dhillon BS (2007) Human reliability and error in transportation systems. Springer, New York, pp 43–54

Lu HB, Wang M, Guo CX et al (2013) A quantitative method for human reliability in power system based on CREAM. Power Syst Prot Control 41(5):37–42

Baraldi P, Podofillini L, Mkrtchyan L et al (2015) Comparing the treatment of uncertainty in Bayesian networks and fuzzy expert systems used for a human reliability analysis application. Reliab Eng Syst Safe 138:176–193

Wang AS, Luo Y, Tu GY et al (2011) Quantitative evaluation of human-reliability based on fuzzy-clonal selection. IEEE Trans Reliab 60(3):517–527

Li GQ (2009) Analysis and simulation of power grid and substation operation. China Electric Power Press, Beijing, pp 193–244

Hannaman G, Spurgin A, Lukic Y (1984) Human cognitive reliability model for PRA analysis. NUS-4531, NUS Corporation, San Diego, CA, USA, pp 125–130

Rasmussen J (1983) Skills, rules, and knowledge; signals, signs and symbols, and other distinctions in human performance models. IEEE Trans Syst Man Cybern 13(3):257–266

Wang HD, Gao W (2006) Study on erroneous operation due to human factor based on human cognitive (HCR) model. China Safe Sci J 16(7):51–56

Etemadi AH, Fotuhi-Firuzabad M (2010) New considerations in modern protection system quantitative reliability assessment. IEEE Trans Power Deliv 25(4):2213–2222

Flage R (2014) A delay time model with imperfect and failure inducing inspections. Reliab Eng Syst Safe 124:1–12

Bao YK, Wang YF, Huang G et al (2015) Impact of human error on electrical equipment maintenance policy. In: Proceedings of the 2015 IEEE PES general meeting, Denver, CO, USA, 26–30 Jul 2015, 5 pp

Nakagawa T, Yasui K (1987) Optimum policies for a system with imperfect maintenance. IEEE Trans Reliab 36(5):631–633

Grigg C, Wong P, Albrecht P et al (1999) The IEEE reliability test system—1996: a report prepared by the reliability test system task force of the application of probability methods subcommittee. IEEE Trans Power Syst 14(3):1010–1020

Reliability Test System Task Force (1979) IEEE reliability test system. IEEE Trans Power Appar Syst 96(6):2047–2054

Miller GL, Storey AB, Vadari SV et al (1993) Experiences using the dispatcher training simulator as a training tool. IEEE Trans Power Syst 8(3):1126–1132

Shen SH, Smidts C, Mosleh A (1997) A methodology for collection and analysis of human error data based on a cognitive model: IDA. Nucl Eng Des 172(1/2):157–186

Chang YHJ, Mosleh A (2007) Cognitive modeling and dynamic probabilistic simulation of operating crew response to complex system accidents—Part 2: IDAC performance influencing factors model. Reliab Eng Syst Safe 92(8):1014–1040

Chang YHJ, Mosleh A (2007) Cognitive modeling and dynamic probabilistic simulation of operating crew response to complex system accidents—Part 3: IDAC operator response model. Reliab Eng Syst Safe 92(8):1041–1060

Mercurio D, Podofillini L, Zio E et al (2009) Identification and classification of dynamic event tree scenarios via possibilistic clustering: Application to a steam generator tube rupture event. Accid Anal Prev 41(6):1180–1191

Acknowledgements

This research work was supported by the State Key Program of National Natural Science Foundation of China (No. 51537010) and Zhejiang Provincial Natural Science Foundation (No. LZ14E070001).

Additional information

CrossCheck date: 5 July 2016

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

BAO, Y., GUO, C., ZHANG, J. et al. Impact analysis of human factors on power system operation reliability. J. Mod. Power Syst. Clean Energy 6, 27–39 (2018). https://doi.org/10.1007/s40565-016-0231-6

DOI: https://doi.org/10.1007/s40565-016-0231-6