Abstract

Efficiency analysis is commonly used to assess and compare the productivity of similar decision-making units (DMUs). In this research, we broaden the applicability of efficiency analysis to situations where each DMU comprises interconnected sub-DMUs (e.g., departments). Extant research holds that embracing the connectedness between sub-DMUs (e.g., marketing–sales interface, marketing–R&D interface) helps maximize sub-DMU and DMU performance. For example, the marketing sub-DMU generates sales leads through advertising, while the sales force sub-DMU converts leads into sales. The sales force sub-DMU partially relies on the marketing sub-DMU to generate sales, and the DMU benefits from the efficiency gained by the sales force sub-DMU in following through on the leads generated by the marketing sub-DMU. To conduct efficiency analysis of DMUs with interconnected sub-DMUs, we need a method that produces sub-DMU level efficiency scores, accounts for the interconnectedness among sub-DMU inputs and outputs, and provides statistical inference on the impact of efficiency on overall DMU outputs; no extant method provides all three. Accordingly, we propose a new method that combines the intuition of the existing methods to address the problem. In addition, based on syndicated data from over 300 newspapers with interconnected sub-DMUs, we present empirical evidence that the proposed method outperforms traditional approaches that do not consider sub-DMU interconnectedness. Finally, the proposed method yields substantive insights. For example, the newsroom department was the most efficient; yet newsrooms appear to have been the major focus of cutbacks during the last decade.


1 Introduction

Efficiency analysis has been a core issue of marketing since the discipline’s origins [e.g., 2, 35, 36]. In general, efficiency analysis assesses the relative productivity of comparable decision-making units (DMUs), e.g., competing firms in a market, stores of a retailer, or geographic branches of a sales organization. According to such analyses, a 100 % efficient DMU is one that produces the most output(s) given the same input(s) as other DMUs or, equivalently, uses the least input(s) to produce the same level of output(s) as other DMUs being compared. To understand how to better convert scarce marketing resources into improved performance outcomes, marketing scholars have conducted both internal and external efficiency benchmarking analyses in a variety of contexts, e.g., assessing relative efficiencies of stores in a retail chain [17], sales districts of a sales organization [20], and large banks in the banking industry [25] (Footnote 1).

However, to date, efficiency analyses in marketing have ignored the fact that in many contexts, the DMUs in question are composed of “sub-DMUs,” e.g., organizational departments, that are linked in that some or all of the outputs of one department’s productive activity may serve as inputs for another’s activity. For example, customer leads generated by the marketing department of an organization are critical inputs for the sales department’s activity of converting prospects into customers, while orders booked by sales are critical inputs for production and so on. Moreover, in many instances, organizational departments are interlinked in that some or all of the outputs of one department serve as the inputs for the other and vice versa. For example, revenues from the customer orders successfully booked by sales may drive marketing efforts that yield leads utilized by sales to produce orders. Intuitively, the overall efficiency of a DMU is an aggregation of the efficiency of each sub-DMU or department after accounting for the portion of its inputs (outputs) supplied (utilized) by other sub-DMUs with which it interfaces (Footnote 2). Marketing scholars argue that organizational performance is impacted by coordination (or lack thereof) at various marketing-related interfaces such as the marketing–sales interface [e.g., 22, 33], marketing–production interface [e.g., 12, 13, 19], and marketing–R&D–sales interfaces [e.g., 11, 14, 18]. However, so far, no study has performed overall marketing efficiency benchmarking of DMUs of interest while accounting for the respective efficiencies of the interfacing sub-DMUs or departments that comprise them. Such an analysis promises to provide deeper insight into specific sub-DMU interfaces that need greater attention in order to boost overall DMU productivity. Our research in this paper aims to fill this gap in the extant organization-level marketing efficiency analysis literature.

More formally, we identify three criteria that must be met by a method for assessing relative efficiencies of a set of DMUs that accounts for sub-DMU level efficiencies:

1. Produce efficiency scores at the sub-DMU level: When relative efficiencies of a set of DMUs are expressed in terms of sub-DMU level relative efficiency scores, the sub-DMUs that contribute more or less to overall efficiency can be identified. This analysis is likely to be of more diagnostic value than the typical aggregate analysis for managers considering where and how to intervene to boost overall productivity of a DMU.

2. Model interconnectedness among sub-DMUs when producing efficiency scores: Modeling the interconnectedness among sub-DMUs will help produce efficiency scores that explicitly adjust for the portion of a sub-DMU’s inputs (outputs) supplied (utilized) by other sub-DMUs with which it interfaces and also correct for any upward/downward bias that might arise when the interfaces between sub-DMUs are ignored.

3. Provide statistical inference about the impact of sub-DMU efficiency: When sub-DMUs within a DMU are interconnected, the increase in each sub-DMU’s efficiency should contribute not only to its own outputs but also to the outputs of all other sub-DMUs with which it interfaces. It is meaningful to provide metrics that document the impact of an increase in sub-DMU efficiency on sub-DMU and overall DMU output. Since numerous random factors (e.g., luck, weather) could alter the impact of each sub-DMU’s efficiency on overall DMU efficiency, it is useful to provide an uncertainty range around the impact metric. Thus, we should control for the role of random error and need a means to estimate (rather than simply calculate) and statistically test (rather than assume) the impact of an increase in sub-DMU efficiency on each DMU output.

A review of the literature on efficiency analysis techniques, e.g., data envelopment analysis (DEA) [8] and stochastic frontier analysis [1], reveals that no available method meets all three criteria noted above. More specifically, the DEA approach, developed in operations research [8], is a nonparametric way to relate output(s) to input(s) without assuming any restrictive form for the production function [e.g., 44, 45]. Network DEA (NDEA), developed in [15], conceptualizes a DMU as a set of smaller interconnected sub-DMUs (departments) and calculates the efficiency scores for the DMU and each sub-DMU. However, neither DEA nor NDEA provides statistical inference on the impact of efficiency. In contrast, the stochastic frontier analysis (SFA) approach, developed in economics [1], parametrically estimates the relationship between an output and various inputs, providing formal statistical inference about the impact of increase in efficiency on outputs [42]. However, SFA does not model the interconnectedness among sub-DMUs.

In this paper, our goal is to develop and demonstrate a suitable approach to benchmarking DMUs that meets all three criteria simultaneously. This new two-stage approach not only employs advanced forms of both DEA and SFA that preserve their basic advantages (discussed in the next section) but also permits efficiency analysis of DMUs with interconnected sub-DMUs. Specifically, the approach combines NDEA with multivariate sliced inverse regression (MSIR) [24]. While NDEA allows us to effectively characterize the interfaces among various sub-DMUs, MSIR provides nonparametric statistical inference about the impact of an increase in sub-DMU efficiency on each DMU level output of interest.

We demonstrate the benefits of the proposed approach in a practical context, currently of great interest to marketers and media economists. Specifically, our empirical application involves external benchmarking of print newspaper firms, where output–input links between key sub-DMUs (newsroom or editorial, distribution, and advertising space sales departments) exist. Facing tough economic times in the USA today, newspaper firms are struggling to improve their efficiency and profitability [6]. They would benefit from a benchmarking methodology that identifies those firms that are most efficient as well as reveals the relative efficiencies of individual sub-DMUs taking into account their direct and indirect impacts on outputs of interest to the firms. For example, the advertising sales outputs of the sales department are of value in themselves and also an input impacting a newspaper’s circulation, the main output of the newsroom department.

Using syndicated data from over 300 newspapers, we first obtain the efficiencies of the newsroom, distribution, and sales force sub-DMUs for each newspaper and then assess the impact of efficiency on the performance of newspapers. We also replicate the analyses using another sample of over 200 newspapers. We find that the proposed method outperforms the current aggregate approach and offers substantive guidance to the newspaper industry. For example, the newsroom department is the most efficient, yet newsroom efficiency does not statistically increase the outputs of newspapers; whereas the efficiencies of sales force and distribution departments increase both the subscriptions and ad-space revenues.

The rest of the paper is organized as follows. Section 2 reviews the available procedures for efficiency and their limitations with respect to modeling sub-DMUs and their interfaces. Section 3 develops the proposed method, Section 4 presents the empirical analysis, and Section 5 concludes by summarizing the key takeaways.

2 Review of Existing Efficiency Analysis Techniques

In this section, we describe the commonly used approaches for efficiency analysis and highlight their limitations when analyzing the efficiency of interconnected sub-DMUs in conjunction with the overall efficiency of a DMU. This review motivates the approach developed in Section 3, which meets the three desired criteria.

2.1 Data Envelopment Analysis

Consider the comparison of \( n \) DMUs that each use \( m \) inputs \( \boldsymbol{x}_0 \) to produce \( s \) outputs \( \boldsymbol{y}_0 \). DEA uses the data \( (\boldsymbol{x}_0, \boldsymbol{y}_0) \) to calculate the scalar efficiency of a DMU as the ratio of the DMU’s weighted outputs to its weighted inputs. DEA calculates the DMU’s weighting scheme and scalar efficiency simultaneously [8]. Specifically, DEA sets up a linear program to optimally determine the weights that enable each DMU to maximize its weighted ratio of outputs to inputs (efficiency), subject to the constraint that no DMU’s efficiency exceeds unity under those weights. Thus, DEA calculates every DMU’s weights at the point where the DMU is most efficient. Appendix 1 presents the details of calculating the efficiency scores. A DMU is 100 % efficient if its score is unity.
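To make the ratio idea concrete, the following minimal sketch covers only the special case of one input and one output, where no linear program is needed: the weights cancel, and each DMU's score is its output/input ratio divided by the best ratio in the sample. The function name and the data are hypothetical illustrations, not taken from the paper; the general multi-input, multi-output case requires the linear program in Appendix 1.

```python
def ratio_dea_scores(inputs, outputs):
    """Efficiency scores when every DMU has a single input and a single output.

    In this special case the weighted output/input ratio reduces to y / x,
    and requiring that no DMU scores above one rescales every ratio by the
    best ratio observed in the sample.
    """
    ratios = [y / x for x, y in zip(inputs, outputs)]
    best = max(ratios)
    return [r / best for r in ratios]


# Hypothetical data: four DMUs, one input (employees) and one output (sales).
employees = [10.0, 20.0, 30.0, 40.0]
sales = [50.0, 80.0, 150.0, 120.0]
scores = ratio_dea_scores(employees, sales)
print(scores)
```

In this toy sample, the first and third DMUs share the best ratio and therefore define the frontier; the other two are scored relative to them.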

Column 2 in Table 1 below evaluates the use of DEA for efficiency analysis of DMUs with interconnected sub-DMUs. To apply DEA to our setting, we could simply combine all the outputs that come out of a DMU and all the inputs that go into it, regardless of which sub-DMU they belong to, and then obtain the DMU’s efficiency score with respect to the conversion of all inputs to outputs. However, this would treat a DMU as a “black box,” and the sub-DMUs and their interfaces would not be modeled. Conversely, one could perform as many DEA analyses as there are sub-DMUs by treating each sub-DMU as an entire DMU that uses inputs to produce outputs. While this would overcome the challenge of providing sub-DMU level scores, it would ignore the interfaces shared by the sub-DMUs. Finally, since DEA is purely deterministic, it cannot provide statistical inference about the impact of efficiency [26]. Therefore, it is not a comprehensive tool for our purpose (see Table 1).

2.2 Stochastic Frontier Analysis

SFA explicitly accounts for the role of random errors in computing efficiency (e.g., [1]). Consider the comparison of \( n \) DMUs using \( m \) inputs in vector \( \boldsymbol{x}_0 \) to produce the scalar output \( y_0 \). SFA assumes that a DMU uses a production technology \( f(\boldsymbol{x}_0, \beta) \) to produce \( y_0 \), where \( \beta \) represents the slope coefficient vector corresponding to \( \boldsymbol{x}_0 \).

SFA challenges the assumption that DMUs actually produce as much as their technology function would imply. Specifically, while each of the \( n \) DMUs may try to produce outputs according to its technology, it may fall short by an amount \( v_k \) (\( k = 1, \dots, n \)) due to inefficiency. Efficiency in SFA is the ratio of observed output produced by a DMU to the maximum feasible output producible by the DMU. Additionally, SFA incorporates error (\( u \)) in our knowledge of a DMU’s production function to account for myriad random factors not explicitly included in the model. In sum, we represent a DMU’s output as:

\[ y_0 = f(\boldsymbol{x}_0, \beta) + \varepsilon_{\mathrm{SFA}}, \]

where \( \varepsilon_{\mathrm{SFA}} \) is the difference of the normal error term (\( u \)) and the one-sided error term (\( v > 0 \)). Thus,

\[ y_0 = f(\boldsymbol{x}_0, \beta) + u - v. \]

Column 3 in Table 1 evaluates the use of SFA for efficiency analysis of DMUs with interconnected sub-DMUs. Similar to DEA, one could perform as many SFA analyses as there are sub-DMUs by treating each sub-DMU as an entire DMU that uses inputs to produce outputs. This would provide sub-DMU efficiency scores but ignore the interfaces shared by the sub-DMUs. On the positive side, maximum likelihood estimation provides the estimates and inference of \( \beta \) and the DMU-specific estimates of inefficiency (\( v_k \)). However, SFA requires pre-specification of a functional form \( f(\cdot) \). In light of these limitations, SFA also falls short of meeting the first two of our three desired criteria.
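As an illustration of the composed error described in this subsection, the sketch below simulates outputs as a frontier plus two-sided noise minus a one-sided inefficiency term and reports efficiency as the ratio of observed to maximum feasible output. The linear frontier, the half-normal inefficiency distribution, and all parameter values are assumptions made for illustration only; the sketch simulates data and does not perform the maximum likelihood estimation.

```python
import random


def simulate_sfa(x_values, intercept, slope, sd_noise, sd_ineff, seed=0):
    """Simulate (x, y, efficiency) triples from a composed-error model."""
    rng = random.Random(seed)
    rows = []
    for x in x_values:
        frontier = intercept + slope * x       # f(x, beta), assumed linear here
        u = rng.gauss(0.0, sd_noise)           # two-sided random error
        v = abs(rng.gauss(0.0, sd_ineff))      # one-sided inefficiency (half-normal, assumed)
        y = frontier + u - v                   # observed output
        efficiency = y / (frontier + u)        # observed / maximum feasible output
        rows.append((x, y, efficiency))
    return rows


rows = simulate_sfa([1.0, 2.0, 3.0, 4.0, 5.0],
                    intercept=10.0, slope=2.0, sd_noise=0.3, sd_ineff=1.0)
for x, y, eff in rows:
    print(f"x={x:.1f}  y={y:.2f}  efficiency={eff:.3f}")
```

Because the inefficiency term is never negative, every simulated efficiency is at most one.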

2.3 NDEA

NDEA falls in the class of DEA models in that it also uses the mathematical programming idea to calculate the weights and efficiency of a DMU. The basic innovation of NDEA, developed in [15], is that it breaks a DMU into a set of smaller interconnected sub-DMUs (departments) and calculates the overall efficiency score of a DMU as well as each sub-DMU’s efficiency score. While doing so, it also recognizes that sub-DMUs may be structured so that one sub-DMU’s output may serve as another’s input. Performing benchmarking through NDEA involves the specification of the interfaces within a DMU and then the solution of a linear program similar to that of standard DEA. Appendix 2 provides the details for calculating the weights and efficiency scores via mathematical programming (see [15]).

Column 4 in Table 1 evaluates the use of NDEA for efficiency analysis of DMUs with interconnected sub-DMUs. We would identify the inputs, outputs, and the interfaces across sub-DMUs. By obtaining sub-DMU and DMU scores while accounting for interconnectedness due to the sub-DMU interfaces, NDEA addresses the first two issues in Table 1. However, NDEA is purely deterministic and does not provide statistical inference about the impact of increasing a sub-DMU’s efficiency on all DMU outputs. Therefore, NDEA also falls short of meeting all three criteria: it satisfies the first two but not the third.

2.4 Two-Stage (DEA + Regression) Approach

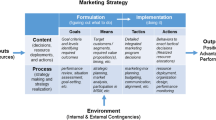

Arnold et al. [3] introduce a two-stage efficiency approach to combine the nonparametric advantages of DEA and the inference capabilities of SFA. They propose the joint use of DEA and ordinary least squares (OLS), i.e., they include the results from a DEA analysis in the linear regression of outputs on inputs.

Specifically, for a set of \( n \) DMUs, they perform a standard DEA (stage 1) to obtain the efficiency score of each DMU. Their second stage consists of two parts in the case of a DMU producing multiple outputs. In part 1, they condense multiple outputs into one composite output via canonical correlation of outputs on inputs. Specifically, if a DMU produces \( s \) outputs, each denoted by \( y_j \) (\( j = 1, \dots, s \)), then they define composite output \( CY \) by

\[ CY = \sum_{j=1}^{s} \gamma_j\, y_j, \]

where \( \gamma_j \) represents the canonical coefficients obtained between the outputs and inputs (see, e.g., [40]). In part 2, they regress the composite output \( CY \) on the multiple inputs and efficiency score of the DMU. Denoting the efficiency score by \( \rho \), their approach assumes the Cobb-Douglas (double log) functional form as follows:

where \( {\tilde{\boldsymbol{\mathsf{x}}}}_{\boldsymbol{0}} \) is an m × 1 vector that equals \( \ln {\boldsymbol{\mathsf{x}}}_{\boldsymbol{0}} \), τ 1 represents the m × 1 slope coefficient vector corresponding to \( {\boldsymbol{\mathsf{x}}}_{\boldsymbol{0}} \), and τ e captures the impact of efficiency on the composite output CY.

Column 5 in Table 1 evaluates the use of the two-stage (DEA + Regression) technique for efficiency analysis of DMUs with interconnected sub-DMUs. The two-stage approach allows for statistical inference since it calculates \( \rho \) from a nonparametric DEA and carries out inference about \( \tau_e \) via OLS. In a simulation study, Bardhan et al. [5] show that this two-stage method performs better in terms of retrieving the true parameters of known (simulated) production functions than DEA and SFA taken individually. However, because its first stage is a standard DEA, the Arnold et al. [3] method cannot take into account the relatedness among the various sub-DMU interfaces; neither sub-DMU efficiency scores nor their interconnectedness are modeled.

3 Method Development

As the previous section shows, extant methods do not simultaneously satisfy all three methodological criteria needed for efficiency analysis of DMUs with interconnected sub-DMUs (see Table 1). Therefore, in this section, we propose a method built on a dimension reduction technique introduced in the statistics literature (multivariate sliced inverse regression) which, when combined with NDEA, provides the solution that we are seeking. Similar to Arnold et al. [3], the proposed method employs a two-stage approach that combines the usefulness of DEA-based techniques and statistical techniques but at each stage employs an augmented procedure that preserves their respective advantages throughout the analysis.

3.1 Modeling the Interconnectedness Among Sub-DMUs

The first stage of the Arnold et al. method to compute efficiency ignores the interconnectedness among sub-DMUs within a DMU. To embed these interfaces, we use NDEA to calculate efficiency scores for both the DMU and sub-DMUs. That is, we allow for some sub-DMUs’ output to serve as other sub-DMUs’ input.

3.2 Incorporating the Nonparametric Structure

To avoid pre-specifying a functional form such as Cobb-Douglas in the second stage of Arnold et al., we apply link-free regression via sliced inverse regression (SIR) [10]. The general representation of a SIR model is as follows:

\[ Y = g(\boldsymbol{X}\alpha_1, \boldsymbol{X}\alpha_2, \dots, \boldsymbol{X}\alpha_r, \varepsilon_{\mathrm{SIR}}), \]

where \( Y \) is an \( n \times 1 \) vector of observations on a single output, \( g(\cdot) \) is an unknown function, \( \boldsymbol{X} \) is an \( n \times m \) matrix of \( m \) inputs, \( \alpha_j \) (\( j = 1, \dots, r \)) are \( m \times 1 \) vectors of coefficients (\( r < m \)), and \( \varepsilon_{\mathrm{SIR}} \) is the error term, which need not be normally distributed (i.e., it belongs to a broad class of elliptically contoured distributions). Although a generalized eigenvalue decomposition yields all the \( r \) alpha vectors (see [23]), for our purpose, we extract only the principal eigenvector to estimate the model \( Y = g(\boldsymbol{X}\alpha_1, \varepsilon_{\mathrm{SIR}}) \) without pre-specifying the link function \( g(\cdot) \). Appendix 3 provides the details of estimation and inference of the vector \( \alpha_1 \).
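The slicing idea behind SIR can be sketched in a few lines. The code below is a minimal, standard-library illustration for a single output and a single extracted direction: it slices the observations by the sorted output, averages the centered inputs within each slice, and takes the principal eigenvector of the resulting generalized eigenproblem by power iteration. The function names, the number of slices, and the simulated data are assumptions for illustration; the authors' estimator and inference procedure in Appendix 3 may differ in detail.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def sir_direction(x_rows, y, n_slices=10, n_iter=200):
    """Estimate the leading SIR direction (unit length, identified up to sign)."""
    n, m = len(x_rows), len(x_rows[0])
    means = [sum(row[j] for row in x_rows) / n for j in range(m)]
    centered = [[row[j] - means[j] for j in range(m)] for row in x_rows]
    # Sample covariance matrix of the inputs.
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(m)] for i in range(m)]
    # Slice by sorted y and accumulate the weighted covariance of the slice means.
    order = sorted(range(n), key=lambda i: y[i])
    between = [[0.0] * m for _ in range(m)]
    for h in range(n_slices):
        idx = order[h * n // n_slices:(h + 1) * n // n_slices]
        slice_mean = [sum(centered[i][j] for i in idx) / len(idx) for j in range(m)]
        weight = len(idx) / n
        for i in range(m):
            for j in range(m):
                between[i][j] += weight * slice_mean[i] * slice_mean[j]
    # Power iteration on cov^{-1} * between yields the principal eigenvector.
    b = [1.0] * m
    for _ in range(n_iter):
        mb = [sum(between[i][j] * b[j] for j in range(m)) for i in range(m)]
        b = solve(cov, mb)
        norm = math.sqrt(sum(v * v for v in b))
        b = [v / norm for v in b]
    return b


# Simulated data: the output depends on the inputs only through x1 + 2*x2,
# via a nonlinear link that the estimator is never told about.
rng = random.Random(1)
x_rows = [[rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(1000)]
y = [math.exp(0.5 * (r[0] + 2.0 * r[1])) + 0.1 * rng.gauss(0, 1) for r in x_rows]
alpha_1 = sir_direction(x_rows, y)
cosine = abs(alpha_1[0] + 2.0 * alpha_1[1]) / math.sqrt(5.0)
print(alpha_1, cosine)
```

The cosine between the estimated direction and the true direction (1, 2, 0) should be close to one, even though the exponential link was not specified in advance.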

To accommodate multiple outputs, we apply MSIR, where \( \boldsymbol{Y} \) is an \( n \times s \) matrix of \( n \) observations on \( s \) outputs. We create a composite dependent variable \( \boldsymbol{Y}\theta_1 \), where \( \theta_1 \) is the \( s \times 1 \) vector of weights in the model:

\[ \boldsymbol{Y}\theta_1 = g(\boldsymbol{X}\alpha_1, \varepsilon). \]

Appendix 3 describes how to estimate \( (\theta_1, \alpha_1) \) via the inverse regression theory (see [9, 23]) without pre-specifying the link function \( g(\cdot) \). We denote the resulting composite vectors as \( {\mathrm{COMP}}_Y=\boldsymbol{Y}{\widehat{\theta}}_1 \) and \( {\mathrm{COMP}}_X=\boldsymbol{X}{\widehat{\alpha}}_1 \).

MSIR generalizes the second stage of Arnold et al. as follows. First, we estimate \( \mathrm{COMP}_X \) and \( \mathrm{COMP}_Y \) without pre-specifying the functional form \( g(\cdot) \). The canonical correlation in part 1 of the Arnold et al. approach is a special case of MSIR. Specifically, the canonical correlation assumes the link function \( g(\cdot) \) to be linear, whereas MSIR does not impose the linear restriction. Second, once we extract \( \mathrm{COMP}_X \) and \( \mathrm{COMP}_Y \), we could either create a scatter plot to visually assess the shape of the link function \( g(\cdot) \) (e.g., [16]) or choose an appropriate function, Cobb-Douglas or other, post hoc. In part 2 of the Arnold et al. approach, by comparison, the link function \( g(\cdot) \) is pre-specified to be of the log–log type. MSIR instead discovers the shape nonparametrically, and the discovered shape can be used to post-specify the relevant parametric link function.

3.3 Algorithm for the Proposed Method

Stage 1: Sub-DMU Efficiency Computation

Identify the sub-DMUs, inputs, outputs, and linked inputs of each sub-DMU of the DMU. Compute the efficiency scores of each sub-DMU. Appendix 2 provides the computational details.

Stage 2: Nonparametric Estimation and Inference

Part 1: Perform an MSIR estimation where \( \boldsymbol{Y} \) includes all the outputs produced by the DMU and \( \boldsymbol{X} \) includes all the inputs used by the DMU and the efficiency scores of each sub-DMU. Extract the composites, \( \mathrm{COMP}_Y \) and \( \mathrm{COMP}_X \), without pre-specifying the link function \( g(\cdot) \). Appendix 3 provides the estimation and inference details.

Part 2: Plot a graph of the extracted composites, \( \mathrm{COMP}_Y \) versus \( \mathrm{COMP}_X \), to determine an appropriate functional form \( \mathrm{COMP}_Y = g(\mathrm{COMP}_X) \). Test whether a sub-DMU’s efficiency significantly increases the outputs.

We next apply this algorithm to US print newspaper firms. We first describe the data set and the interfaces among the sub-DMUs within a newspaper firm and then discuss the empirical results and substantive insights.

4 Empirical Application

We focus on the newspaper industry for our empirical application for the following reasons. First, the newspaper industry is characterized by the presence of a few large companies that have each acquired several hundred independently operating DMUs [30]. For example, the leading media giant Gannett Incorporated owns 82 daily newspapers that function as independent DMUs (Footnote 3). Efficiency analysis could provide timely and accurate guidance to DMUs performing below the mark. Second, recent years have seen a wave of debatable consolidation and deconsolidation decisions by media firms. For example, Clear Communication Inc. grew to dominate the US radio market by acquiring DMUs from nearly 70 companies. Such acquisitions led to criticism since analysts did not consider all DMUs to be strategic assets. Efficiency analysis helps in acquisition situations when consolidating firms seek to replace or discard some poorly performing DMUs [34]. Third, daily newspapers sorely need guidance on efficient allocation of resources given that they are going through tough economic times due to dwindling circulation (e.g., [6]). After several interviews with newspaper owners and managers of syndicated data collection agencies, we find that no formal efficiency analysis has been performed to benefit the industry at large. Moreover, no academic application exists on benchmarking to gauge the productivity of media firms. Hence, we acquired data from a syndicated press association to perform efficiency analysis, which we next describe.

4.1 Data

The Inland Press Association (IPA) provided the data. The IPA venture began as a service to small and medium newspapers (with daily circulation of less than 85,000) that would otherwise lack reference to the industry norms. Since 1916, the IPA has kept annual records of data on financial aspects of hundreds of US print newspapers. The IPA database includes data on costs incurred by various departments of a newspaper (number of employees, expenses on equipment), revenues obtained, and profits generated. For our analysis, we obtained data from 310 newspapers in 1999. We also perform a replication analysis with data on 225 newspapers from 2002. To protect newspaper confidentiality, the IPA does not reveal information about newspapers’ identities and locations; hence, we cannot match newspapers across years and treat the datasets from 1999 and 2002 as separate cross-sectional samples.

Each newspaper is a stand-alone DMU comprised of three main sub-DMUs: the newsroom for creating news content, the distribution department for circulation, and the sales force for selling advertising space. The inputs utilized by each of these sub-DMUs are employees and equipment. Their outputs are measured by the pages of news content produced (in inches), the number of subscriptions sold, the amount of ad space sold (in inches), and the amount of local, national, and classified advertising revenue.

Table 2 presents the descriptive statistics of the outputs and inputs for 1999 and 2002. The newsroom is the most highly staffed sub-DMU with 40 % of all the employees, whereas the distribution sub-DMU contributes the most to equipment expenses.

4.2 Sub-DMU Efficiency Computation

4.2.1 Interfaces of the Newspaper Organizational Structure

Newsroom

The role of the employees in the newsroom is to produce and market a product of high quality and integrity that positively impacts circulation [27]. Specifically, the employees and equipment in the newsroom (inputs) combine to generate news content and subscribers (outputs). Local newspapers’ own staff writes most of their news; external wire services contribute much less. As shown in past research (e.g., [38]), the type (retail, national, classified) and amount of ad space also impact newsroom outputs. Therefore, we treat outputs of the sales force department as linked inputs to the newsroom department.

Distribution

The distribution department’s responsibility is to effectively deliver the newspaper to subscribers. A newspaper’s delivery systems are strategic assets [28]. A newspaper’s distribution elasticity is large (0.23) and significant [27], suggesting that a 10 % increase in distribution intensity results in a 2.3 % increase in sales. Because the delivery of a print newspaper on time and in good condition retains subscribers’ interest in the service, the main output of the distribution department is also the number of subscribers.

Sales Force

The sales force’s role is to sell ad space to advertisers. The total space sold and the revenues from retail, national, and classified ads are the outputs of sales force efforts [43]. Over 85 % of ads in newspapers promote a product or service; the remaining ads promote events such as public meetings and personal messages [29]. The outputs produced by the newsroom (pages of content, subscriptions) and distribution (subscriptions) departments influence advertisers in buying ad space in a newspaper [37]. Therefore, we treat the outputs from the newsroom and distribution departments as linked inputs to the sales force [32].

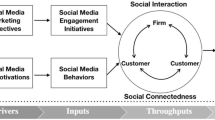

Figure 1 summarizes the newspaper organizational structure. The gray boxes show the newsroom, distribution, and sales force sub-DMUs. These input boxes point towards the departments with block arrows. We depict subscriptions and pages of content (newsroom output) to the right of the newsroom department box, with block arrows pointing from the newsroom towards the outputs. The distribution department is also responsible for producing subscriptions, and hence, a block arrow points from the distribution department towards subscriptions. We show ad space and ad revenue (sales force output) to the right of the sales force department box, with block arrows pointing from the sales force department towards the outputs.

Turning to the linked inputs, since ad revenue and ad space (sales force outputs) serve as inputs to the newsroom, we draw a dashed arrow pointing from ad space and ad revenue to the newsroom department. Similarly, since the sales force uses subscriptions and content pages as linked inputs from the newsroom, we draw a dashed arrow pointing from subscriptions and pages of content to the sales force department. This description shows us how the newsroom, distribution, and sales force sub-DMUs are intricately linked.
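The structure just described can be written down as a small data structure, which is a convenient first step before setting up any network model. The sketch below encodes the three sub-DMUs with their own inputs, outputs, and linked inputs as stated in this subsection, and checks that every linked input is in fact an output of the sub-DMU it is said to come from. The dictionary layout, variable names, and the `check_links` helper are hypothetical and are not part of the authors' NDEA formulation.

```python
# Sub-DMUs of a newspaper, following the description in Section 4.2.1.
NEWSPAPER_NETWORK = {
    "newsroom": {
        "inputs": ["employees", "equipment"],
        "outputs": ["pages_of_content", "subscriptions"],
        "linked_inputs_from": {"sales_force": ["ad_space", "ad_revenue"]},
    },
    "distribution": {
        "inputs": ["employees", "equipment"],
        "outputs": ["subscriptions"],
        "linked_inputs_from": {},
    },
    "sales_force": {
        "inputs": ["employees", "equipment"],
        "outputs": ["ad_space", "ad_revenue"],
        "linked_inputs_from": {
            "newsroom": ["pages_of_content", "subscriptions"],
            "distribution": ["subscriptions"],
        },
    },
}


def check_links(network):
    """Return (source, target, variable) triples where the variable is not an output of the source."""
    problems = []
    for target, spec in network.items():
        for source, variables in spec["linked_inputs_from"].items():
            for var in variables:
                if var not in network[source]["outputs"]:
                    problems.append((source, target, var))
    return problems


broken_links = check_links(NEWSPAPER_NETWORK)
print(broken_links)
```

An empty list means that each dashed arrow in the description starts at a variable the source department actually produces.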

4.2.2 Empirical Results

As in Tone and Tsutsui [41], we apply linear programming to obtain the efficiency scores and compare them to those from the traditional DEA model. Table 3 provides the mean efficiency scores of the newspapers in 1999 and 2002. The standard DEA-based mean overall efficiency is 0.77 for 1999 and 0.86 for 2002. In contrast, the proposed method which captures the interconnectedness estimates the mean overall efficiency as 0.53 for 1999 and 0.62 for 2002. The smaller score reflects the more conservative nature of the proposed method as it identifies more linked variables to penalize DMUs for their inefficiency.

Furthermore, the proposed method provides the sub-DMU efficiencies as well as the overall DMU efficiency for each firm. This information is unavailable from the standard DEA analysis. In Table 3 below, we see that the newsroom’s efficiency is higher than the efficiency of the distribution and advertising sales departments in 1999 and 2002. This finding is interesting because newsrooms have been the major focus of cutbacks during 2002–2010 [39] even when they seem to operate at high efficiency relative to other departments.

Table 4 further reveals the benefits of the proposed method relative to the standard DEA. Specifically, we identify and discriminate a few outstanding performers from the rest. Table 4 shows that, in 1999 and 2002, respectively, the standard DEA performs poorly because it classifies 197 firms (about 63 %) and 163 firms (about 72 %) as being 100 % efficient. Such a prevalence of high-performing firms is hard to reconcile given the downturn soon thereafter [27]. On the other hand, the proposed method identifies only 30 (22) firms in 1999 (2002) as fully efficient, which suggests an average of 10 % or fewer outstanding firms in the sample. Indeed, this ability of the proposed method to identify a selective sample of outstanding firms empowers the managers to learn from the finest performers rather than from the average performers.
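The percentages quoted above follow directly from the reported counts and sample sizes (310 newspapers in 1999 and 225 in 2002). The short check below recomputes them; the dictionary names are illustrative.

```python
SAMPLE_SIZES = {1999: 310, 2002: 225}
FULLY_EFFICIENT = {
    "standard DEA": {1999: 197, 2002: 163},
    "proposed method": {1999: 30, 2002: 22},
}

# Share of newspapers classified as 100 % efficient, by method and year.
shares = {
    method: {year: count / SAMPLE_SIZES[year] for year, count in counts.items()}
    for method, counts in FULLY_EFFICIENT.items()
}
for method, by_year in shares.items():
    for year, share in by_year.items():
        print(f"{method}, {year}: {share:.1%}")
```

The standard DEA shares come out at roughly 63.5 % and 72.4 %, while the proposed method's shares stay just under 10 % in both years.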

Finally, we expect the DMUs with higher efficiency scores to earn higher profits. The correlations between efficiency scores (based on the proposed method) and profits are 0.34 and 0.32 in 1999 and 2002, respectively, and both are statistically significant. In contrast, the correlations between the efficiency scores based on the standard DEA and profits are much lower (0.19 in 1999, 0.20 in 2002).

To summarize, the proposed method captures the interconnectedness of sub-DMUs and outperforms the standard DEA. It provides discriminating information as well as disaggregated (sub-DMU level) information. It assists less efficient firms by identifying the finest firms to benchmark and emulate. It offers diagnostic information with respect to the efficiency improvements at the departmental (sub-DMU) level. We next demonstrate how MSIR performs in the second stage relative to a pre-specified functional form.

4.3 Nonparametric Estimation and Inference

Stage 2 of the proposed method discovers how the inputs in each department together with the departmental efficiencies impact the outputs produced by the firm. In part 1 of stage 2, we extract the composite variables \( \mathrm{COMP}_Y \) and \( \mathrm{COMP}_X \) using MSIR. Six outputs comprise the dependent variables: pages of content, pages of ad space, subscriptions, and retail, local, and classified ad revenues. The independent variables comprise three inputs in each of the three sub-DMUs (full-time employees, part-time employees, and investments in equipment) together with the three sub-DMU efficiency scores. Therefore, the vector of independent variables has (3 × 3) + 3 = 12 elements. Table 5 shows the MSIR estimates for 1999 and 2002. It shows the estimates of the \( \alpha_1 \) vector, which reduces the dimensionality of the 12 inputs, and the estimates of the \( \theta_1 \) vector, which reduces the dimensionality of the 6 outputs.

In part 2 of stage 2, we discover the relationship between \( {\mathrm{COMP}}_Y \) and \( {\mathrm{COMP}}_X \) without imposing a functional form a priori. Rather, we let the data inform us of the most appropriate shape. Because we log-transform the output and input variables, we expect a linear relationship between \( {\mathrm{COMP}}_Y \) and \( {\mathrm{COMP}}_X \) if the Cobb-Douglas assumptions were to hold. To formally ascertain the presence of nonlinearity, we estimate a series of nonlinear models given by \( {\mathrm{COMP}}_Y = \pi \, {\left({\mathrm{COMP}}_X\right)}^{\delta}/\delta \), where δ ranges from 0 to 1 in increments of 0.1. When δ = 1, the relationship between \( {\mathrm{COMP}}_Y \) and \( {\mathrm{COMP}}_X \) is linear, thereby nesting the Arnold et al. [5] Cobb-Douglas form. We compute the corrected Akaike information criterion (AICC) for each of the models; the best model is the one that attains the smallest AICC [21].
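The grid search over δ described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`power_term`, `aicc_for_delta`, `select_delta`) are hypothetical, δ = 0 is treated as the logarithmic limit, π is fitted by least squares without an intercept, the composite input is assumed to be strictly positive, and the AICC uses the usual Gaussian least-squares form with two estimated parameters (π and the error variance).

```python
import numpy as np

def power_term(comp_x, delta):
    """Regressor COMP_X**delta / delta; delta = 0 is taken as log(COMP_X) (assumed limit)."""
    comp_x = np.asarray(comp_x, dtype=float)
    return np.log(comp_x) if delta == 0 else comp_x ** delta / delta

def aicc_for_delta(comp_x, comp_y, delta):
    """Fit COMP_Y = pi * term by least squares (no intercept); return (AICC, pi_hat)."""
    term = power_term(comp_x, delta)
    comp_y = np.asarray(comp_y, dtype=float)
    n = comp_y.size
    pi_hat = float(term @ comp_y / (term @ term))
    rss = float(np.sum((comp_y - pi_hat * term) ** 2))
    k = 2  # estimated parameters: pi and the error variance (assumption)
    aic = n * np.log(rss / n) + 2 * k
    return aic + 2 * k * (k + 1) / (n - k - 1), pi_hat

def select_delta(comp_x, comp_y):
    """Evaluate delta = 0, 0.1, ..., 1 and return (best_delta, {delta: AICC})."""
    deltas = [round(0.1 * i, 1) for i in range(11)]
    table = {d: aicc_for_delta(comp_x, comp_y, d)[0] for d in deltas}
    best = min(table, key=table.get)  # smallest AICC wins
    return best, table
```

Calling `select_delta(comp_x, comp_y)` returns the δ with the smallest AICC together with the full table of 11 scores, which mirrors the kind of comparison reported in Table 6.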

Table 6 presents the results for model selection across the 11 alternative models in 1999 and 2002. Focusing on results for 1999, the model with δ = 0 obtains an AICC value of 1,188.24, while the model with δ = 0.6 attains the minimum AICC score of 1,099.60. The difference in information criterion exceeds the critical threshold of 2 points for rejection [7]. Hence, we reject the model with δ = 0 in favor of the model with δ = 0.6. Also, the model with δ = 1 (the Arnold et al. model) obtains an AICC value of 1,590.47, which is more than 2 points greater than the model with δ = 0.6. Hence, we reject the Arnold et al. model (δ = 1) in favor of the model whose δ = 0.6.

Focusing on results for 2002, the model with δ = 0 obtains an AICC value of 671.46, the model with δ = 1 obtains an AICC value of 1,167.67, and the model with δ = 0.5 attains the minimum AICC score of 669.29. The difference in information criteria between the models with δ = 0 and δ = 0.5 and the difference between the models with δ = 1 and δ = 0.5 both exceed 2. Hence, we again reject the models with δ = 0 and δ = 1 in favor of the model with δ = 0.5. Thus, our proposed approach allows flexibility in the choice of nonlinearity. Table 7 shows the estimates of π for 1999 and 2002.

We calculate the percentage increase in a newspaper output (e.g., ad space sold) in response to a 1 % change in a department’s efficiency by combining information from Table 5 (which links the individual outputs to \( {\mathrm{COMP}}_Y \) and the individual inputs to \( {\mathrm{COMP}}_X \)) and Table 7 (which links \( {\mathrm{COMP}}_Y \) and \( {\mathrm{COMP}}_X \)). Table 8 shows the results for 1999 (results for 2002 are similar).

Table 8 indicates that increases in sales force efficiency significantly increase all outputs of the newspaper, with subscriptions, pages of content produced, and classified ads benefitting the most. To boost sales force efficiency, newspaper firms should continue to invest in sales force training and coaching [43]. Table 8 also shows that higher distribution efficiency generates more outputs. Specifically, it enhances subscriptions and sales of classified ads. Indeed, when readers receive newspapers on time, their utility from staying subscribed increases, which, in turn, boosts advertisers’ inclination to buy ad space. At the current levels, an incremental increase in newsroom efficiency does not have a statistically significant impact on any of the six outputs that the newspaper produces (i.e., the effect of a 1 % increase in newsroom efficiency on each output is statistically indistinguishable from 0). This finding occurs because, first, the operating levels of newsroom efficiency are 20–30 % higher than the efficiencies of the distribution and sales force departments, and diminishing returns set in as the efficiency increases. Second, newspapers tend to be understaffed in the newsroom, and the average newsroom in our database is already performing as efficiently as it can, given its limited staff.

5 Conclusion

Efficiency analysis is important for managing multi-unit business operations, since it provides accurate and timely productivity benchmarks for sub-DMUs within firms and DMUs at large. However, the literature so far has largely ignored the efficiency analysis of DMUs with interconnected sub-DMUs. It is important to address this gap to identify sub-DMUs and interfaces that are relatively inefficient and need more management attention.

Towards this end, we first outline three requirements in the efficiency analysis of DMUs with interconnected sub-DMUs: a need to produce sub-DMU efficiency scores, to account for the interconnected input–output relationships between the sub-DMUs, and to provide statistical inference on the impact of efficiency on overall DMU outputs. A detailed review of four existing techniques (DEA, SFA, NDEA, and a two-stage DEA/OLS method) shows that each method lacks a comprehensive treatment of the problem.

Hence, we propose a new two-stage method that meets all three criteria. We generalize the existing two-stage method that uses DEA in the first stage by embedding the sub-DMU structure in a DMU. Consequently, we obtain sub-DMU level efficiency and understand the interconnectedness among sub-DMUs. To provide statistical inference, we generalize the existing two-stage method by performing nonparametric estimation in the second stage of efficiency analysis. Specifically, we propose multivariate sliced inverse regression, MSIR, which provides estimation and inference capability without requiring the users to pre-specify any link function between the multiple outputs and multiple inputs. MSIR allows researchers the flexibility to discover the relationship between DMUs’ outputs and inputs, rather than imposing any a priori relationship.

Our empirical application illustrates how the proposed method outperforms DEA as an efficiency analysis tool. The proposed method is applicable to a wide variety of efficiency analysis situations; it empowers managers to obtain sub-DMU level insights, measures reliably the impact of efficiency gains on outputs, and avoids pre-specifying the functional form between the outputs and inputs.

We close by offering three takeaways for the newspaper industry. First, newspaper departments are organized so as to perform tasks that mainly serve one consumer group (e.g., reader). The reality is that a media firm produces a joint product serving both end user groups. In this unique business model, one department’s outputs enhance the efficiency of other departments and any benchmarking analysis must appreciate this key feature. Our methodology embraces this two-sided feature while providing departmental and overall efficiency scores. Therefore, our approach is suitable to benchmark newspapers and similar media platforms and in process development efforts that follow. Second, we provide the cash-strapped and bankruptcy-ridden industry with a technique to perform benchmarking analysis to decide which newspapers and newspaper departments are most efficient. Third, further increases in newsroom efficiency do not statistically increase the outputs of the newspapers while increases in sales force and distribution efficiencies help newspaper firms. This finding occurs because newsroom efficiency is 20–30 % higher than the efficiencies of the distribution and sales force departments, and diminishing returns set in as the efficiency increases. We hope practitioners and researchers find the proposed method and results useful.

Notes

Benchmarking is a widely adopted management tool by which a firm seeks to identify and replicate best practices to enhance its business performance [4, 31, 46]. External benchmarking compares the performance of one organization with others like itself in the same industry or across industries, while internal benchmarking compares similar operations (individuals, departments, branches) within an organization.

An interface represents the point of interconnection between two organizational subsystems, e.g., departments.

Sourced from the company website [http://www.gannett.com/about/company_profile.htm]

References

Aigner D, Lovell CA, Schmidt P (1977) Formulation and estimation of stochastic frontier production function models. J Econ 6(1):21–37

Alderson W (1941) A critical analysis of recent literature dealing with marketing efficiency: discussion. J Mark 5(April):365–370

Arnold VL, Bardhan IR, Cooper WW, Kumbhakar SC (1996) New uses of DEA and statistical regressions for efficiency evaluation and estimation—with an illustrative application to public secondary schools in Texas. Ann Oper Res 66(4):255–277

Banker RD, Gadh VM, Gorr WL (1993) A Monte Carlo comparison of two production frontier estimation methods: corrected ordinary least squares and data envelopment analysis. Eur J Oper Res 67(3):332–343

Bardhan IR, Cooper WW, Kumbhakar SC (1998) A simulation study of joint uses of data envelopment analysis and statistical regressions for production function estimation and efficiency evaluation. J Prod Anal 9(3):249–278

Bughin JR, Poppe H (2005) Dwindling readership: are tabloids the answer? The McKinsey Quarterly, January 2005

Burnham KP, Anderson DR (2002) Model selection and multi-model inference: a practical information-theoretic approach. Springer, New York

Charnes A, Cooper WW, Rhodes EL (1978) Measuring the efficiency of decision making units. Eur J Oper Res 2(6):429–444

Cook DR (1998) Regression graphics: ideas for studying regressions through graphics. Wiley, New York

Duan N, Li KC (1991) Slicing regression: a link-free regression method. Ann Stat 19(2):505–530

Dutta S, Narasimhan O, Rajiv S (1999) Success in high-technology markets: is marketing capability critical? Mark Sci 18(4):547–568

Eliashberg J, Steinberg R (1993) Marketing-production joint decision-making. Handb Oper Res Manag Sci 5:827–880

Erickson GM (2011) A differential game model of the marketing-operations interface. Eur J Oper Res 211(2):394–402

Ernst H, Hoyer WD, Rübsaamen C (2010) Sales, marketing, and research-and-development cooperation across new product development stages: implications for success. J Mark 74(5):80–92

Färe R, Grosskopf S (2000) Network DEA. Socio Econ Plan Sci 34(1):35–49

Gannoun A, Girard S, Guinot C, Saracco J (2004) Sliced inverse regression in reference curves estimation. Comput Stat Data Anal 46(3):103–122

Gauri DK, Pauler JG, Trivedi M (2009) Benchmarking performance in retail chains: an integrated approach. Mark Sci 28(3):502–515

Gupta AK, Raj SP, Wilemon D (1986) A model for studying R&D–marketing interface in the product innovation process. J Mark 50(2):7–17

Hauser JR, Simester DI, Wernerfelt B (1996) Internal customers and internal suppliers. J Mark Res 33(3):268–280

Horsky D, Nelson PE (1996) Evaluation of salesforce size and productivity through efficient frontier benchmarking. Mark Sci 15(4):301–320

Hurvich CM, Tsai CL (1989) Regression and time series model selection in small samples. Biometrika 76(2):297–307

Kotler P, Rackham N, Krishnaswamy S (2006) Ending the war between sales and marketing. Harv Bus Rev 84(8):68–78

Li KC (1991) Sliced inverse regression for dimension reduction. J Am Stat Assoc 86(414):316–327

Li KC, Aragon Y, Shedden K, Agnan CT (2003) Dimension reduction for multivariate response data. J Am Stat Assoc 98(461):99–109

Luo X (2003) Evaluating the profitability and marketability efficiency of large banks: an application of data envelopment analysis. J Bus Res 56:627–635

Luo X, Donthu N (2005) Assessing advertising media spending inefficiencies in generating sales. J Bus Res 58(1):28–36

Mantrala MK, Naik PA, Sridhar S, Thorson E (2007) Uphill or downhill? Locating your firm on a profit function. J Mark 71(2):26–44

Newspaper Association of America Marketing Department Report (2006) How America shops and spends 2006. MORI Research, Minneapolis

Peer L, Nesbitt M (2004) An analysis of content in 52 U.S. Daily Newspapers Summary Report, Newspaper Readership Institute

Picard RG (1993) Economics of the daily newspaper industry. In: Alexander A, Owers J, Carveth R (eds) Media economics: a reader, 2nd ed. (Hillsdale, NJ: Lawrence Erlbaum Associates, 1998), 111–129

Rigby D, Bilodeau B (2007) Selecting management tools wisely. Harv Bus Rev 85(12):20–22

Rochet JC, Tirole J (2006) Two-sided markets: a progress report. RAND J Econ 37(3):645–667

Rouziès D, Anderson E, Kohli AK, Michaels RE, Weitz BA, Zoltners AA (2005) Sales and marketing integration: a proposed framework. J Pers Sell Sales Manag 25(2):113–122

Ross A, Dröge C (2004) An analysis of operations efficiency in large-scale distribution systems. J Oper Manag 21(6):673–688

Shaw AW (1912) Some problems in market distribution. Q J Econ 26(August):706–765

Shaw EH (2010) Revisiting and revising Alderson’s formula to measure the productivity of the aggregate marketing system. Mark Theory 10(4):347–367

Smith K (1998) Advertisers’ media selection in small newspaper markets. Newsp Res J 19(1):30–44

Soley LC, Craig RL (1992) Advertising pressures on newspapers: a survey. J Advert 21(4):1–10

Sridhar S, Mantrala MK, Naik PA, Thorson E (2011) Dynamic marketing investment strategies for platform firms. J Mark Res 48(6):929–943

Tabachnick BG, Fidell LS (2007) Using multivariate statistics, 5th edn. Allyn and Bacon, New York

Tone K, Tsutsui M (2009) Network DEA: a slacks-based measure approach. Eur J Oper Res 197(1):243–252

Wang HJ (2003) A stochastic frontier analysis of financing constraints on investment. J Bus Econ Stat 21(3):406–419

Warner C, Buchman J (1991) Broadcast and cable selling (2nd ed.), Wadsworth Inc

Wheelock DC, Wilson PW (2009) Robust nonparametric quantile estimation of efficiency and productivity change in U.S. commercial banking. J Bus Econ Stat 27(3):354–368

Wilson PW (1993) Detecting outliers in deterministic nonparametric frontier models with multiple outputs. J Bus Econ Stat 11(3):319–323

Zairi M (1998) Benchmarking for best practice. Butterworth-Heinemann, Oxford; Camp RC (1995) Business process benchmarking: finding and implementing best practices. ASQC Quality Press, Milwaukee

Appendices

1.1 Appendix 1. Obtaining Efficiency Scores Through DEA

We describe the slacks-based approach of DEA to measure the efficiency score (ρ). It provides a scalar efficiency score invariant to the units of measurement used for inputs or outputs. The dataset contains m inputs of n DMUs given by the n × m matrix X and s outputs of the n DMUs given by the n × s matrix Y. The input and output vectors of a specific DMU are given by \( (\mathbf{x}_0, \mathbf{y}_0) \), where \( \mathbf{x}_0 \) is an m × 1 vector and \( \mathbf{y}_0 \) is an s × 1 vector. A particular DMU’s production possibility set can be written as follows:
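In the standard slacks-based formulation, which the definitions here appear to follow, the set can be sketched as below. The label \( P \) is an assumption introduced for illustration.

\[ P = \left\{ (\mathbf{x}, \mathbf{y}) \;:\; \mathbf{x} \ge \mathbf{X}'\boldsymbol{\mu},\; \mathbf{y} \le \mathbf{Y}'\boldsymbol{\mu},\; \boldsymbol{\mu} \ge \mathbf{0} \right\} \]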

where μ is an n × 1 nonnegative vector. The use of μ makes the input–output vector of a particular DMU \( (\mathbf{x}_0, \mathbf{y}_0) \) comparable to the inputs and outputs of all DMUs (captured in (X, Y)). Thus, given a DMU’s input–output vector \( (\mathbf{x}_0, \mathbf{y}_0) \), any hypothetical input–output value \( (\mathbf{x}_0 \ge \mathbf{X}'\boldsymbol{\mu},\ \mathbf{y}_0 \le \mathbf{Y}'\boldsymbol{\mu}) \) is producible, where \( \mathbf{X}'\boldsymbol{\mu} \) is the least input and \( \mathbf{Y}'\boldsymbol{\mu} \) is the most output in the dataset. We rewrite a DMU’s input–output vector \( (\mathbf{x}_0, \mathbf{y}_0) \) as follows:
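Consistent with the slack definitions that follow, the two equalities can be sketched in the usual slacks-based form (an assumed reconstruction, with both slack vectors nonnegative):

\[ \mathbf{x}_0 = \mathbf{X}'\boldsymbol{\mu} + \boldsymbol{\kappa}^{-}, \qquad \boldsymbol{\kappa}^{-} \ge \mathbf{0} \]

\[ \mathbf{y}_0 = \mathbf{Y}'\boldsymbol{\mu} - \boldsymbol{\kappa}^{+}, \qquad \boldsymbol{\kappa}^{+} \ge \mathbf{0} \]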

In Eqs. 8 and 9, \( \boldsymbol{\kappa}^{-} \) denotes the m × 1 input excess slack vector and \( \boldsymbol{\kappa}^{+} \) represents the s × 1 output shortfall vector. When \( \boldsymbol{\kappa}^{-} > 0 \), the DMU is inefficient since it uses more input (than at least one other DMU in the sample) to achieve a given output level. When \( \boldsymbol{\kappa}^{+} > 0 \), the DMU is inefficient on account of producing less output (than at least one other DMU in the sample), while using a given input level. A DMU’s scalar efficiency score (ρ) is given by:
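In the standard slacks-based measure, on which this description appears to be based, the score takes the following form. The element indices \( i \) and \( r \) are notation assumed here.

\[ \rho = \frac{1 - \frac{1}{m}\sum_{i=1}^{m} \kappa_i^{-}/x_{i0}}{1 + \frac{1}{s}\sum_{r=1}^{s} \kappa_r^{+}/y_{r0}} \]

Dividing each slack by the DMU's own input or output level is what makes the score invariant to the units of measurement.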

We calculate DEA efficiency scores by finding the weights (μ) and the slack values that allow a DMU to achieve the best efficiency score relative to all other DMUs subject to the constraint that no DMU can have an efficiency score greater than 1. Specifically, we obtain the score by solving the optimization problem:
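Under the standard slacks-based measure (assumed here to be the form intended), the problem can be sketched as:

\[ \rho^{*} = \min_{\boldsymbol{\mu},\, \boldsymbol{\kappa}^{-},\, \boldsymbol{\kappa}^{+}} \; \frac{1 - \frac{1}{m}\sum_{i=1}^{m} \kappa_i^{-}/x_{i0}}{1 + \frac{1}{s}\sum_{r=1}^{s} \kappa_r^{+}/y_{r0}} \quad \text{subject to} \quad \mathbf{x}_0 = \mathbf{X}'\boldsymbol{\mu} + \boldsymbol{\kappa}^{-},\;\; \mathbf{y}_0 = \mathbf{Y}'\boldsymbol{\mu} - \boldsymbol{\kappa}^{+},\;\; \boldsymbol{\mu} \ge \mathbf{0},\;\; \boldsymbol{\kappa}^{-} \ge \mathbf{0},\;\; \boldsymbol{\kappa}^{+} \ge \mathbf{0} \]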

The minimized objective function value ρ* for a DMU serves as the efficiency score. A DMU is termed as 100 % efficient when ρ* = 1.
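To make the computation concrete, the sketch below solves a slacks-based DEA problem for one DMU with SciPy. It is an illustration under assumptions rather than the authors' code: it assumes the standard constant-returns slacks-based measure, strictly positive inputs and outputs, and the Charnes-Cooper transformation (a scaling variable `t`) to turn the fractional objective into a linear program. The function name `sbm_efficiency` is hypothetical.

```python
import numpy as np
from scipy.optimize import linprog

def sbm_efficiency(X, Y, j0):
    """Slacks-based efficiency score of DMU j0.

    X is the n x m input matrix and Y the n x s output matrix (all entries > 0).
    Decision variables, in order: [t, Lambda (n), S_minus (m), S_plus (s)],
    where Lambda = t*mu, S_minus = t*kappa_minus, S_plus = t*kappa_plus.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n, m = X.shape
    s = Y.shape[1]
    x0, y0 = X[j0], Y[j0]

    # Objective: minimize t - (1/m) * sum_i S_minus_i / x_i0
    c = np.concatenate(([1.0], np.zeros(n), -1.0 / (m * x0), np.zeros(s)))

    # Normalization: t + (1/s) * sum_r S_plus_r / y_r0 = 1
    norm_row = np.concatenate(([1.0], np.zeros(n + m), 1.0 / (s * y0)))
    # Inputs:  t*x0 - X'Lambda - S_minus = 0
    in_rows = np.hstack([x0[:, None], -X.T, -np.eye(m), np.zeros((m, s))])
    # Outputs: t*y0 - Y'Lambda + S_plus = 0
    out_rows = np.hstack([y0[:, None], -Y.T, np.zeros((s, m)), np.eye(s)])

    A_eq = np.vstack([norm_row, in_rows, out_rows])
    b_eq = np.concatenate(([1.0], np.zeros(m + s)))

    res = linprog(c, A_eq=A_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.fun  # rho*: equals 1 for an efficient DMU
```

Looping `sbm_efficiency(X, Y, j)` over all `j` gives one score per DMU. A DMU that uses twice the input of an otherwise identical efficient peer would be expected to score about 0.5.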

1.2 Appendix 2. Obtaining Efficiency Scores Through NDEA

Consider a setting with n DMUs (j = 1, …, n), each consisting of W sub-DMUs (w = 1, …, W). Let \( m_w \) and \( s_w \) be the numbers of inputs and outputs, respectively, for sub-DMU w. Let the input vector and output vector for the w-th sub-DMU be \( \mathbf{x}_{w0} \) and \( \mathbf{y}_{w0} \), respectively, where \( \mathbf{x}_{w0} \) is an \( m_w \times 1 \) vector and \( \mathbf{y}_{w0} \) is an \( s_w \times 1 \) vector. We describe the slacks-based approach of NDEA to obtain the efficiency score for DMUs as well as each of their constituent sub-DMUs.

To capture the interconnected structure, let sub-DMU w provide \( l_w \) outputs that sub-DMU t uses as inputs. The \( l_w \) outputs together form the linked intermediate output vector that establishes the connection between sub-DMU w and sub-DMU t. We denote the linked intermediate output vector by \( \mathbf{z}_{(w,t)0} \), a vector with dimensionality \( l_w \times 1 \). We now define the production possibility set of the w-th sub-DMU of a DMU as follows:

In Eq. 19, \( \boldsymbol{\mu}_w \) is an n × 1 nonnegative vector. \( \boldsymbol{\mu}_w \) enables the comparison of the input–output vector of a particular sub-DMU within a DMU \( (\mathbf{x}_{w0}, \mathbf{y}_{w0}) \) to the inputs and outputs of the same sub-DMU across all DMUs. The collective input–output vector of a particular sub-DMU across all DMUs is captured in \( (\mathbf{X}_w, \mathbf{Y}_w) \), where \( \mathbf{X}_w \) is the \( n \times m_w \) input matrix of the w-th sub-DMU across all DMUs, and \( \mathbf{Y}_w \) is the \( n \times s_w \) output matrix of the w-th sub-DMU across all DMUs.

The vector \( \boldsymbol{\mu}_w \) also enables the comparison of the linked intermediate output vector of a particular sub-DMU within a DMU, \( \mathbf{z}_{(w,t)0} \), to the collective linked intermediate output vector of the same sub-DMU across all DMUs. We denote the collective linked intermediate output vector as \( \mathbf{Z}_{(w,t)} \), an \( n \times l_w \) matrix of linked outputs that encapsulates the outputs produced by the w-th sub-DMU and used as inputs by the t-th sub-DMU. Analogous to DEA, we establish the following equality in NDEA:
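By direct analogy with the single-DMU case, and assuming the same slacks-based form, the equalities for the w-th sub-DMU can be sketched as:

\[ \mathbf{x}_{w0} = \mathbf{X}_w'\boldsymbol{\mu}_w + \boldsymbol{\kappa}_w^{-}, \qquad \mathbf{y}_{w0} = \mathbf{Y}_w'\boldsymbol{\mu}_w - \boldsymbol{\kappa}_w^{+}, \qquad \boldsymbol{\mu}_w,\ \boldsymbol{\kappa}_w^{-},\ \boldsymbol{\kappa}_w^{+} \ge \mathbf{0} \]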

where \( \boldsymbol{\kappa}_w^{-} \) is the input excess slack vector, and \( \boldsymbol{\kappa}_w^{+} \) is the output shortfall vector of the w-th sub-DMU. In addition, we introduce a linking constraint to capture the idea that the intermediate linked outputs produced by the w-th sub-DMU are beyond the control of the t-th sub-DMU (see [32], p. 4):

Now, the sub-DMU level efficiency score (\( \rho_w \)) is given by

and the DMU’s overall efficiency score across all sub-DMUs is given by

To solve for both the sub-DMU and DMU efficiencies, we determine the weights (\( \boldsymbol{\mu}_w \)) for each sub-DMU and the slack values by allowing the firm to be as efficient as possible given its constraints. To this end, we obtain \( \rho^{*} \) (DMU efficiency) and \( \rho_w^{*} \) (sub-DMU efficiency) by solving the following problem:

1.3 Appendix 3. Multivariate Sliced Inverse Regression

Consider the univariate SIR model, \( Y = g(\mathbf{X}\alpha_1, \mathbf{X}\alpha_2, \cdots, \mathbf{X}\alpha_r) \), described by Eq. 5, where Y is an n × 1 vector of observations on a single output, g(·) is an unknown function, X is an n × m matrix of m inputs, and \( \alpha_j \) (j = 1, …, r) are m × 1 vectors of coefficients (r < m). To estimate the set of vectors \( \alpha_j \), we first define the covariance matrix
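In standard sliced inverse regression, this matrix is the covariance of the inverse regression curve, which can be written (as an assumed reconstruction) as:

\[ \Sigma_\eta = \mathrm{Cov}\left[ E\left( \mathbf{X} \mid Y \right) \right] \]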

Then, we solve the generalized eigenvalue decomposition of \( \Sigma_\eta \) with respect to the covariance matrix \( \Sigma_X \). The vectors \( \alpha_j \) are the solutions to the eigenvalue decomposition:
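In the usual notation, with \( \lambda_j \) denoting the eigenvalues (a symbol assumed here), the generalized eigenvalue problem reads:

\[ \Sigma_\eta\, \alpha_j = \lambda_j\, \Sigma_X\, \alpha_j, \qquad j = 1, \ldots, r, \qquad \lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_r \]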

To implement the eigenvalue decomposition using sample data, we require a sample estimate of the matrix \( \Sigma_\eta \). Accordingly, we create H slices of Y and denote the slices as h = 1, 2, …, H. Further, we partition the corresponding rows of the X matrix into H slices. Next, we compute the means of the independent variables in each slice and denote them by \( {\overline{\mathbf{X}}}_h \). We obtain the sample estimate of \( \Sigma_\eta \) via
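Using the slice proportions and means defined in the surrounding text, the standard sliced estimator (assumed here to be the intended form) is the weighted covariance of the slice means:

\[ {\widehat{\Sigma}}_\eta = \sum_{h=1}^{H} {\widehat{p}}_h \left( {\overline{\mathbf{X}}}_h - \overline{X} \right) \left( {\overline{\mathbf{X}}}_h - \overline{X} \right)' \]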

In (C5), \( {\widehat{p}}_h \) is the proportion of observations falling into slice h, and \( \overline{X} \) is the grand mean across the entire sample. We replace \( \Sigma_\eta \) in Eq. 20 by Eq. 23 and \( \Sigma_X \) by the usual sample variance–covariance matrix.

For our purpose, we need to extract only the principal eigenvector, i.e., we only need the first of the vectors \( \alpha_j \). The principal eigenvector yields the estimated \( {\widehat{\alpha}}_1 \), which is consistent and not sensitive to the number of slices [24]. We obtain the standard errors of \( {\widehat{\alpha}}_1 \) from the square root of the diagonal values of the following matrix:

Next, to accommodate multiple outputs, we create a composite dependent variable \( \mathbf{Y}\theta_1 \), where \( \theta_1 \) is the s × 1 vector of weights in the following model:

To estimate the weights \( \theta_1 \), we switch the roles of (Y, X) in the above univariate SIR algorithm. Specifically, we consider the composite scalar variable of n observations \( {\mathrm{COMP}}_X=\mathbf{X}{\widehat{\alpha}}_1 \) as the new dependent variable and consider the matrix Y as the new independent variables. We then reapply the univariate SIR algorithm to extract the principal eigenvector of s × 1 dimension that serves as the estimate of \( \theta_1 \). We then recreate the scalar variable of n observations \( {\mathrm{COMP}}_Y=\boldsymbol{Y}{\widehat{\theta}}_1 \). Using \( {\mathrm{COMP}}_Y \) and the data matrix X, we apply the univariate SIR algorithm to obtain \( {\widehat{\alpha}}_1 \); then, by using the resulting \( {\mathrm{COMP}}_X \) and the data matrix Y, we obtain \( {\widehat{\theta}}_1 \). We alternate these two steps until the estimates \( \left({\widehat{\theta}}_1,{\widehat{\alpha}}_1\right) \) converge. By alternating these steps, MSIR reduces the dimensionality of both Y and X without knowing the link function g(⋅). Li et al. [24] developed this iterative procedure, known as the alternating SIR, to tackle multivariate response variables and proved that the algorithm converges in a finite number of steps equal to the dimensionality of \( \alpha_1 \).
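The alternating procedure can be sketched as follows. This is a minimal illustration under assumptions, not the authors' implementation: the function names `sir_direction` and `alternating_sir` are hypothetical, slices are formed with roughly equal counts, the iteration starts from equal output weights (the starting point is an assumption), and convergence is checked on the change in the output weights. Standard errors are not computed.

```python
import numpy as np
from scipy.linalg import eigh

def sir_direction(V, u, n_slices=10):
    """Principal SIR direction for predictors V (n x p) and scalar response u (n,)."""
    V = np.asarray(V, dtype=float)
    u = np.asarray(u, dtype=float)
    n, p = V.shape
    grand_mean = V.mean(axis=0)
    sigma_v = np.cov(V, rowvar=False)          # usual sample covariance matrix
    sigma_eta = np.zeros((p, p))
    # Slice on the sorted response and accumulate weighted slice-mean deviations.
    for idx in np.array_split(np.argsort(u), n_slices):
        dev = V[idx].mean(axis=0) - grand_mean
        sigma_eta += (len(idx) / n) * np.outer(dev, dev)
    # Generalized eigenproblem sigma_eta a = lambda sigma_v a (eigenvalues ascending).
    _, vecs = eigh(sigma_eta, sigma_v)
    direction = vecs[:, -1]                    # eigenvector of the largest eigenvalue
    return direction / np.linalg.norm(direction)

def alternating_sir(X, Y, n_slices=10, max_iter=50, tol=1e-6):
    """Alternate SIR on (X, Y @ theta) and (Y, X @ alpha); return (alpha, theta)."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    s = Y.shape[1]
    theta = np.ones(s) / np.sqrt(s)            # equal starting weights (assumption)
    for _ in range(max_iter):
        alpha = sir_direction(X, Y @ theta, n_slices)      # reduce the inputs
        theta_new = sir_direction(Y, X @ alpha, n_slices)  # reduce the outputs
        if theta_new @ theta < 0:              # eigenvector sign is arbitrary
            theta_new = -theta_new
        converged = np.linalg.norm(theta_new - theta) < tol
        theta = theta_new
        if converged:
            break
    return alpha, theta
```

The composites then follow as `comp_x = X @ alpha` and `comp_y = Y @ theta`. Because eigenvectors are identified only up to sign and scale, both vectors are normalized to unit length here, which is a presentation choice rather than something the text prescribes.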

Cite this article

Sridhar, S., Mantrala, M.K. & Naik, P.A. Efficiency Analysis of Marketing Organizations with Interconnected Departments. Cust. Need. and Solut. 1, 154–167 (2014). https://doi.org/10.1007/s40547-014-0014-z
