Abstract

Objectives

To identify early nutritional risk in older populations, simple screening approaches are needed. This study aimed to compare nutrition risk scores, calculated from a short checklist, with diet quality and health outcomes, both at baseline and prospectively over a 2.5-year follow-up period; the association between baseline scores and risk of mortality over the follow-up period was assessed.

Methods

The study included 86 community-dwelling older adults in Southampton, UK, recruited from outpatient clinics. At both assessments, hand grip strength was measured using a Jamar dynamometer. Diet was assessed using a short validated food frequency questionnaire; derived ‘prudent’ diet scores described diet quality. Body mass index (BMI) was calculated and weight loss was self-reported. Nutrition risk scores (range 0–17) were calculated from a checklist adapted from the DETERMINE tool.

Results

The mean age of participants at baseline (n = 86) was 78 (SD 8) years; half (53%) scored ‘moderate’ or ‘high’ nutritional risk, using the checklist adapted from DETERMINE. In cross-sectional analyses, after adjusting for age, sex and education, higher nutrition risk scores were associated with lower grip strength [difference in grip strength: − 0.09, 95% CI (− 0.17, − 0.02) SD per unit increase in nutrition risk score, p = 0.017] and poorer diet quality [prudent diet score: − 0.12, 95% CI (− 0.21, − 0.02) SD, p = 0.013]. The association with diet quality was robust to further adjustment for number of comorbidities, whereas the association with grip strength was attenuated. Nutrition risk scores were not related to reported weight loss or BMI at baseline. In longitudinal analyses there was an association between baseline nutrition risk score and lower grip strength at follow-up [fully-adjusted model: − 0.12, 95% CI (− 0.23, − 0.02) SD, p = 0.024]. Baseline nutrition risk score was also associated with greater risk of mortality [unadjusted hazard ratio per unit increase in score: 1.29 (1.01, 1.63), p = 0.039]; however, this association was attenuated after adjustment for sex and age.

Conclusions

Cross-sectional associations between higher nutrition risk scores, assessed from a short checklist, and poorer diet quality suggest that this approach may hold promise as a simple way of screening older populations. Further larger prospective studies are needed to explore the predictive ability of this screening approach and its potential to detect nutritional risk in older adults.

Introduction

The implementation of malnutrition screening, using standardised tools, has led to better recognition of the poorer health outcomes associated with malnutrition, such as sarcopenia, frailty and mortality [1,2,3]. Importantly, malnutrition is a common clinical problem in older populations [1]. Screening approaches that enable early identification of malnutrition risk in older people could be important to prevent the development of malnutrition, and the related detrimental effects on health [3]. Despite increased awareness of malnutrition, the preceding trajectories of change in dietary habits in older age, which can lead to greater nutritional risk, are poorly described [4]. Furthermore, as many malnutrition screening tools identify weight loss and thinness, they may not be designed to describe other aspects of poor nutrition, such as poor diet quality, low protein intakes, insufficient micronutrient intakes (such as vitamins D, E, C and folate) and micronutrient deficiencies (such as vitamin B12 and folate) and, therefore, lack sensitivity to identify those at risk [5, 6]. To identify signs of early nutritional risk, and to allow intervention before overt malnutrition develops, a different approach to screening is required. One such tool is the ‘Determine your Nutritional Health’ (DETERMINE) tool, developed by the US Nutrition Screening Initiative to identify and treat nutritional problems in older populations [7]. DETERMINE was designed for self-completion, requiring no specialist knowledge or equipment. The tool includes ten questions on age-related and contextual factors that are linked to poor nutrition in older age; responses are weighted to calculate an overall nutrition risk ‘score’, with thresholds given to identify categories of risk. Older adults with high nutritional risk, assessed using this tool, have been shown to be more likely to have low nutrient intakes and to report poorer health [7]. However, studies of its prediction of mortality in older populations have yielded mixed findings [8,9,10].

Therefore, the aim of the current study was to assess the use of an adapted DETERMINE checklist to calculate a nutrition risk score in a group of older community-dwelling adults in the UK. In this exploratory study, we assess the utility of this approach by determining associations of the score with hand grip strength, which is linked to malnutrition [11], diet quality, body mass index and reported weight loss, both cross-sectionally and longitudinally over a 2.5-year period, and by evaluating the association between the baseline score and risk of mortality over the follow-up period.

Methods

Participants

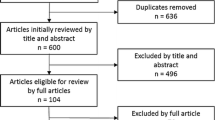

At baseline, 86 older adults were recruited to the study from three outpatient clinics in Southampton [n = 27 (31%) Comprehensive Geriatric Assessment (CGA); n = 32 (37%) syncope clinic; n = 27 (31%) fragility fracture clinic]. A total of 545 patients from these clinics were screened for eligibility; of these, 224 were eligible to take part, and 86 (38%) agreed to participate in the study. Eligibility criteria were: aged 60 years or older, not acutely unwell, capable of giving informed consent. All participants who expressed an interest were visited at baseline at home by a researcher (AP), between March 2015 and June 2016. The participants were followed up by the same researcher (AP) 2.5 years after baseline, between September 2017 and December 2018. Of the 86 participants who were visited at baseline, 8 (9%) died during the follow-up period (with date of death available); 53 (62%) received a follow-up home visit; the remaining 25 (29%) participants were not followed up for the following reasons: death with no date available (n = 1), lacking capacity to consent (n = 5), declined (n = 15), relocated (n = 2), too unwell (n = 2).

The study had ethical approval from the National Research Ethics Service Committee Southwest, 14/SW/1129. All participants gave written informed consent. The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Home visits—baseline and follow-up

Information on background characteristics, including age and age at leaving full-time education, was obtained by questionnaire at the baseline interviews. At both interviews data were collected on lifestyle, health, social, and psychological factors [12,13,14,15,16,17,18]. Participants reported their number of doctor-diagnosed comorbidities out of the following: heart attack, congestive heart failure, angina, stroke, mini-stroke or transient ischemic attack (TIA), hypertension, diabetes, asthma, depression, chronic lung disease, kidney disease, cancer, or any other serious disease. Appetite was assessed using the Simplified Nutritional Appetite Questionnaire (SNAQ) [19]. Grip strength and weight were measured.

Outcome measures assessed at baseline and follow-up

Grip strength

Maximal grip strength was measured using a Jamar handgrip dynamometer (Lafayette Instrument Company, USA). Grip strength was measured, to the nearest kg, three times in each hand and the maximum value was used for the analyses [20].

Diet quality

Diet was assessed using an administered 24-item food frequency questionnaire that was developed to describe diet quality in community-dwelling older adults [13]. Based on a participant’s reported frequencies of consumption of the listed foods, a ‘prudent’ dietary pattern score is calculated which describes compliance with this pattern [13]. High scores indicate diets characterised by frequent consumption of fruit, vegetables, wholegrain cereals and oily fish but low consumption of white bread, added sugar, full-fat dairy products, chips and processed meat, aligning with healthy eating guidance [13]. Participants’ prudent diet scores were interpreted as an indication of their diet quality.

Body mass index (BMI)

Self-reported height was recorded. Body weight was measured to the nearest 0.1 kg, with the participant wearing clothes and shoes, using portable SECA standing balance scales (model 875). BMI (kg/m2) was calculated [weight (kg)/height (m)2].
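The BMI calculation described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name `bmi` and the example participant are hypothetical, and height is assumed to have been converted to metres.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index in kg/m^2: weight divided by height squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


# Hypothetical participant: measured weight 68.4 kg, self-reported height 1.62 m.
example_bmi = round(bmi(68.4, 1.62), 1)
print(example_bmi)
```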

Weight loss

Weight loss was self-reported; participants were asked if they had lost any weight unintentionally within the past 12 months and, if so, how much weight they had lost to the nearest 0.1 kg.

Mortality

Participant deaths from any cause, from baseline until the follow-up home visit, were extracted from medical records.

Nutrition risk score

A nutrition risk score was calculated for each participant at baseline (n = 86), and at follow-up (n = 53), using a checklist adapted from the DETERMINE checklist (Table 1 shows the original DETERMINE checklist) [7]. Our adapted checklist was based on eight of the ten components assessed in DETERMINE; we omitted two items, 3 (‘I eat few fruits or vegetables or milk products’) and 9 (‘without wanting to, I have lost or gained 10 lb in the last 6 months’), as diet quality and weight loss were outcome measures in our analyses.

We applied the published weighting scores to the remaining eight components in the checklist to calculate nutrition risk scores (Table 1). However, there were some differences in the way that variables were derived, when compared with the original study. In some cases these were differences in wording such that information collected from participants was mapped onto the original DETERMINE questions to derive equivalent information. For example, DETERMINE item 2 (‘I eat fewer than 2 meals per day’) was defined from participant responses to a question in the SNAQ [19] (‘Normally I eat < 1, 1, 2, 3, > 3 meals a day’; < 1 or 1 meal per day scored 3). In other cases, we used related background information to derive an equivalent variable. For example, we assessed food insecurity using a six-item food security module [21]; participants who were food insecure (score ≥ 2) were given a weighting of 4 (item 6, Table 1).
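The scoring logic described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' implementation: Table 1 is not reproduced in this text, so the item weights are taken from the published DETERMINE checklist (they agree with the weights of 3 and 4 quoted above for items 2 and 6, and with the stated 0–17 range once items 3 and 9 are dropped). The risk categories use the thresholds given in the statistical analysis section, and all function and variable names are hypothetical.

```python
# Item weights from the published DETERMINE checklist (assumed here, since
# Table 1 is not shown). Comments paraphrase each item.
DETERMINE_WEIGHTS = {
    1: 2,   # illness or condition changed the kind or amount of food eaten
    2: 3,   # eats fewer than 2 meals per day
    3: 2,   # eats few fruits, vegetables or milk products
    4: 2,   # 3 or more alcoholic drinks almost every day
    5: 2,   # tooth or mouth problems that make eating hard
    6: 4,   # not always enough money to buy food
    7: 1,   # eats alone most of the time
    8: 1,   # takes 3 or more different drugs a day
    9: 2,   # unintentionally lost or gained 10 lb in the last 6 months
    10: 2,  # not always physically able to shop, cook or feed self
}
OMITTED_ITEMS = {3, 9}  # used as outcome measures, so left out of the score


def meals_item_from_snaq(meals_per_day: str) -> bool:
    """Map the SNAQ meals question onto item 2 ('<1' or '1' meal a day)."""
    return meals_per_day in {"<1", "1"}


def food_insecure(module_score: int) -> bool:
    """Item 6: food insecure if the six-item module score is 2 or more."""
    return module_score >= 2


def nutrition_risk_score(responses: dict[int, bool]) -> int:
    """Sum the weights of the retained items that apply to a participant."""
    return sum(
        weight
        for item, weight in DETERMINE_WEIGHTS.items()
        if item not in OMITTED_ITEMS and responses.get(item, False)
    )


def risk_category(score: int) -> str:
    """Published thresholds: low (0-2), moderate (3-5), high (6 or more)."""
    if score >= 6:
        return "high"
    if score >= 3:
        return "moderate"
    return "low"


# Hypothetical participant: one meal a day, food security score 3, eats alone.
example_responses = {
    2: meals_item_from_snaq("1"),
    6: food_insecure(3),
    7: True,
}
example_score = nutrition_risk_score(example_responses)
print(example_score, risk_category(example_score))
```

Keeping the omitted items in the weight table, and excluding them at scoring time, makes it straightforward to recompute a score that includes weight loss, as in the additional cross-sectional analyses.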

Statistical analysis

Baseline descriptive characteristics are given as mean with standard deviation (SD) for continuous normally distributed variables, median with interquartile range (IQR) for continuous variables with a skewed distribution, or counts and percentages for categorical variables, as appropriate. The calculated nutrition risk score was used as a continuous variable in regression analyses, but shown in categories for presentation (‘low’ (0–2), ‘moderate’ (3–5) and ‘high’ (≥ 6) nutritional risk, Table 1); although we did not include two of the factors in our nutrition risk score calculations we used the published DETERMINE thresholds to categorise different levels of risk [7]. The relationships between nutrition risk score and grip strength, diet quality (prudent diet score) and BMI were examined using multivariate linear regressions. Since BMI was not normally distributed, a Fisher–Yates rank-based inverse normal transformation was performed to create z-scores (FY z-scores). We also transformed the prudent diet score and grip strength variables to create z-scores (FY z-scores) to enable the comparison of effect sizes. The association between nutrition risk score and weight loss (any unintentional weight loss in preceding year: yes/no) was examined using multivariate logistic regressions. Additional cross-sectional analyses considered whether inclusion of weight loss in the calculation of the nutrition risk score made a difference in terms of its associations with grip strength and diet quality. In the follow-up sub-group, longitudinal associations between baseline nutrition risk score and follow-up level of outcome measures were examined. As we have previously shown that prudent diet scores are generally higher among older women, compared with older men, and diet quality is positively associated with education [22], we adjusted for sex and education in our multivariate models. Analyses were performed with adjustments for sex, age and age at leaving education; final models also took account of the number of comorbidities, type of clinic attended, and of follow-up time (in longitudinal analyses).

Additional analyses examined the relationship between baseline nutrition risk scores and risk of mortality in the period between baseline and follow-up home visits using Cox regression, with and without adjustment for age and sex. This was conducted among the 61 participants who either attended the follow-up home visit and were censored at this date (n = 53) or who died between the baseline and follow-up home visits with a date of death available (n = 8). Finally, receiver operating characteristic (ROC) analyses were performed to further evaluate the predictive value of nutrition risk scores in relation to low grip strength, defined using the EWGSOP2 cut-off points of < 27 kg (men) and < 16 kg (women) [2], and poor diet quality, defined as a prudent diet score in the lowest quarter of the distribution; both outcomes were considered at baseline and at follow-up. Data were analysed using Stata version 14.2.
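The ROC step can be illustrated with a simplified sketch. It classifies low grip strength using the EWGSOP2 cut-off points quoted above and computes the area under the ROC curve for the raw nutrition risk score through its rank-sum (Mann–Whitney) interpretation. This is deliberately simpler than the analysis reported, which compared models with and without the score alongside age and sex; the function names and the six participants are hypothetical.

```python
# EWGSOP2 cut-off points for low grip strength (kg), as quoted in the text.
LOW_GRIP_CUTOFF_KG = {"male": 27.0, "female": 16.0}


def maximal_grip(left_kg, right_kg):
    """Maximum of all readings taken in both hands."""
    return max(list(left_kg) + list(right_kg))


def low_grip_strength(max_grip_kg, sex):
    """True if maximal grip strength falls below the sex-specific cut-off."""
    return max_grip_kg < LOW_GRIP_CUTOFF_KG[sex]


def auc(scores, outcomes):
    """Probability that a random case scores higher than a random non-case.

    Tied scores count as half, which equals the area under the ROC curve.
    """
    cases = [s for s, y in zip(scores, outcomes) if y]
    controls = [s for s, y in zip(scores, outcomes) if not y]
    if not cases or not controls:
        raise ValueError("need at least one case and one non-case")
    wins = sum((c > k) + 0.5 * (c == k) for c in cases for k in controls)
    return wins / (len(cases) * len(controls))


# Hypothetical data: (sex, maximal grip in kg, nutrition risk score).
participants = [
    ("female", 14.0, 6),
    ("female", 22.0, 1),
    ("male", 25.0, 4),
    ("male", 35.0, 2),
    ("female", 15.5, 2),
    ("male", 30.0, 0),
]
outcomes = [low_grip_strength(grip, sex) for sex, grip, _ in participants]
scores = [score for _, _, score in participants]
example_auc = auc(scores, outcomes)
print(round(example_auc, 3))
```

An AUC of 0.5 corresponds to no discrimination. Comparing the AUC of a model with and without the score, as the authors did, additionally requires fitted models and a test for correlated ROC curves, which this sketch does not attempt.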

Results

At baseline, participants (n = 86) were aged between 60 and 93 years (mean age 78 (SD 8) years) and 53 (62%) of the study participants were women. The baseline characteristics are shown for the whole group, and according to category of nutritional risk, in Table 2. Over a third (36%) of all participants were living alone. The median nutrition risk score at baseline was 3 (IQR 1–5). Almost half (n = 40, 47%) of the participants were in the low nutritional risk category, 31 (36%) were at moderate risk and 15 (17%) were at high risk. As there were no statistically significant differences in nutrition risk scores between men and women [median nutrition risk score: 2 (IQR 1–5) in men, and 3 (IQR 1–4) in women, p = 0.276], separate analyses were not carried out.

Participants with greater nutritional risk tended to be older and to have a greater number of comorbidities (Table 2). Univariate analyses showed a strong association between age and nutrition risk score at baseline (Table 2), such that 67% (n = 10) of the high risk group were aged over 80 years, compared with 23% (n = 9) of the low risk group. A higher nutrition risk score was associated with lower grip strength and poorer diet quality, but was not related to BMI or reported weight loss. The associations with diet quality and grip strength are illustrated in Fig. 1.

Fig. 1 Diet quality [prudent diet score (z-score)] and grip strength (kg) according to category of nutritional risk at baseline [7], in older men and women studied (bars represent 95% CI for mean). Unadjusted p values for trend across the continuous nutrition risk score variable (values ranging from 0–11) among the pooled sample of men and women are shown

Table 3 shows the associations between baseline nutrition risk score and grip strength, prudent diet score, body mass index (BMI) and weight loss, in the multivariate models.

After adjusting for age, sex and age at leaving education, the associations between higher nutrition risk scores and both lower baseline grip strength and poorer baseline diet quality remained. Following further adjustment for number of comorbidities and type of clinic attended, the association between nutrition risk score and baseline prudent diet score remained, but the association with baseline grip strength was attenuated. Our final cross-sectional analyses considered the impact of the inclusion of information about weight loss in the calculation of the nutrition risk score (12 participants lost weight above the DETERMINE threshold, as set out in Table 1, item 9). However, when the recalculated scores were used in age- and sex-adjusted models, there was little change in the associations between nutrition risk scores and either grip strength or diet quality (data not shown).

In longitudinal analyses, there was an association between higher baseline nutrition risk score and lower grip strength at follow-up in the sub-group who were reassessed, which remained in the fully-adjusted model (adjusted for sex, age, age at leaving education, number of comorbidities, type of clinic attended and follow-up time). In contrast, there were no associations with the other outcomes assessed at follow-up in the fully-adjusted analysis.

Cross-sectional associations at follow-up between nutrition risk score and prudent diet score were also assessed; there was an association between higher nutrition risk score at follow-up and lower prudent diet score at follow-up, after adjustment for sex, age and age at leaving education [prudent diet score at follow-up: − 0.16, 95% CI (− 0.28, − 0.04) SD, p = 0.009]. However, unlike the baseline cross-sectional association, this was not robust to adjustment in the multivariate model in the sub-group of participants who were followed up [− 0.11, 95% CI (− 0.24, 0.03) SD, p = 0.108].

Baseline nutrition risk score was related to greater risk of mortality [unadjusted hazard ratio per unit increase in score: 1.29 (1.01, 1.63), p = 0.039] during the follow-up period. However, this association was not robust to adjustment for the effects of sex and age [hazard ratio: 1.08 (0.83, 1.40), p = 0.569].
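As a reading aid, the per-unit hazard ratios above can be rescaled to a larger difference in score. This assumes the log-linear form of the Cox model, in which the score enters as a single continuous term; the three-point difference is an illustrative choice and not an analysis reported in the study.

```latex
\mathrm{HR}(k) = \exp\!\left(k\hat{\beta}\right) = \mathrm{HR}(1)^{k},
\qquad
\mathrm{HR}_{\text{unadjusted}}(3) = 1.29^{3} \approx 2.15,
\qquad
\mathrm{HR}_{\text{adjusted}}(3) = 1.08^{3} \approx 1.26
```

The same caution applies to the rescaled values as to the per-unit estimates: the confidence interval for the adjusted hazard ratio includes 1, and the estimates rest on eight deaths with a known date.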

Our final analyses used ROC curves to evaluate the predictive ability of the nutrition risk score to identify individuals with low grip strength and poor diet quality, both at baseline and at follow-up. There were no significant differences in the area under the curve (AUC) when comparing models with sex and age as predictors and those additionally including nutrition risk scores (p > 0.1 for all comparisons) (data not shown).

Discussion

In this study we applied a checklist adapted from the DETERMINE nutrition screening tool to identify nutritional risk, and assessed its relationships with diet quality and health outcomes in a community-dwelling group of older adults in the UK. In cross-sectional analyses at baseline, greater nutritional risk was associated with lower grip strength and with poorer diet quality. However, the association with grip strength was attenuated when adjusting for number of comorbidities. We found no associations between nutrition risk scores and reported weight loss or BMI at baseline. In longitudinal analyses, greater nutritional risk at baseline was associated with lower grip strength at follow-up, even after adjustment for possible confounding factors. In contrast, there were no independent associations with diet quality at follow-up. Furthermore, additional analyses suggested no added predictive value of nutritional risk score, when added to models using sex and age as predictors of mortality or in the prediction of low grip strength or poor diet quality.

The importance of nutrition as an influence on health in older age is widely recognised [23, 24]. However, much current research focuses on malnutrition, and less is known about the preceding determinants of trajectories of change in diet in older age, and adverse changes in nutrition that may be happening before there is unintended weight loss or marked falls in body mass [25]. Early identification of nutritional risk, identifying risk before overt malnutrition has developed, should be key to prevention, prompting intervention to improve outcomes [26]. However, current malnutrition screening tools may not be designed to detect early signs of poor nutrition such as declining diet quality. The observed prevalence of poor diet quality in older populations [27,28,29], together with findings of low nutrient intakes among older adults who are not at risk of malnutrition when screened [5, 6], highlights the need for new screening approaches to identify and quantify that early risk. Although a recent review identified more than 30 malnutrition screening tools [30], surprisingly few have been developed to screen for other aspects of declining nutrition in older populations. There are validated short dietary assessment questionnaires that quantify dietary intake [13, 31, 32], but these do not take account of wider influences on diet, such as the contextual and age-related factors that are known to contribute to nutritional risk [24, 33]. Conversely, other screening methods that address some of these wider determinants of poor nutrition may not quantify nutritional risk [34,35,36]. There are, therefore, few studies with which to compare our findings directly and none, to our knowledge, in the UK. More research is needed into screening tools that could enable the identification of early signs of poor nutrition, particularly in a UK context.

Our study showed that a short set of eight questions, that can be scored easily, yielded a nutrition risk score that was related prospectively to lower grip strength, an important biomarker of morbidity and mortality [2]. Consistent with this, other studies point to the utility of this tool for the prediction of outcomes related to independence and functional capacity. In a study of US older women, higher nutritional risk assessed with DETERMINE was negatively associated with living independently in the community, and it was suggested that this tool could have potential to identify people who might be at increased risk of losing independence [37]. In a study of independent Japanese community-living older adults, high nutrition risk assessed with this tool at baseline was associated with functional decline in both activities of daily living (ADL) and instrumental ADL (IADL) over a 2-year period [38].

However, although the nutrition risk score was associated with diet quality in cross-sectional analyses, we found no prospective association with overall quality of diet over time in the follow-up sub-group. This may be due to changes in diet over the follow-up period, as a result of ageing-related factors, such as bereavement or onset of illness [24, 39], but is also consistent with mixed evidence from other settings using the DETERMINE tool to indicate differences in diet. For example, in the original study higher scores were linked to greater risk of low nutrient intakes and poorer health [7], but in a cross-sectional US study of community-dwelling older women, the DETERMINE checklist did not identify participants with low nutrient intake (those with < 75% of the recommended intake for eight selected nutrients) [40]. Our study did not find an independent association between baseline nutrition risk score and greater risk of mortality, when adjusted for sex and age. Although some studies have not found the DETERMINE tool to be a significant predictor of mortality in older populations [9, 10], in a relatively large study of older US adults (n = 978), nutritional risk calculated using this checklist was associated with all-cause hospitalizations, nonsurgical hospitalizations, and mortality, over a follow-up period of 8.5 years [8].

Because diet quality and weight loss were outcome measures in our analyses, we omitted two items of the DETERMINE checklist; this adaptation effectively lowered the overall nutrition risk scores of the participants in our study. However, the proportion of older adults categorised as being at high nutritional risk using the published thresholds (17% with score ≥ 6) was comparable to that reported among adults of similar age in the original DETERMINE study (24%) [7] and in another study of older people in the US [mean age 75.3 (SD 6.7) years], where 20.9% of participants were at high nutritional risk [8]. A similar prevalence was also found in an older European population, where 19% of the Danish participants of the SENECA (Survey in Europe of Nutrition in the Elderly, a Concerted Action) study were found to be at high nutritional risk according to the DETERMINE checklist [10]. A recent systematic review, using data from malnutrition screening tools, indicated that up to 23% of older adults in Europe could be at high risk of malnutrition, across all settings. Moreover, it showed that the prevalence of high malnutrition risk among older adults living in the community was 8.5% [41], which is considerably lower than the figures for high nutritional risk assessed using the DETERMINE tool.

The present study has a number of limitations. Firstly, this was a preliminary study to assess the use of a screening method and its potential to detect early nutritional risk. We did not carry out a power calculation in this exploratory study, and the sample size was small, limiting the statistical power of the study. Furthermore, although we were able to follow up the majority (71%) of participants assessed at baseline, prospective data on grip strength and diet quality were only available for a sub-group of participants. Secondly, there were differences in the way that we derived the variables to be scored using the DETERMINE checklist when compared with the original version, and that affected individual scores. However, we think it is unlikely that small differences in scoring method would explain the associations that we observed. Finally, we studied a small group of older men and women, recruited from outpatient clinics, who had on average more than four comorbidities; thus, study participants may not be representative of the wider population of older adults. This has implications for the generalisability of the findings, and particularly for the prevalence of higher nutritional risk we report, which may be higher in this study than in the broader community-living older adult population, which includes older people not attending outpatient clinics and with likely fewer health conditions on average.

This study found cross-sectional associations between higher nutrition risk scores, assessed from a short checklist, and poorer diet quality, both at baseline and at follow-up. This suggests that this screening method might provide useful information at the time of screening. The nutrition risk score was also associated prospectively with lower grip strength; however, its ability to predict later outcomes was uncertain, as findings suggested no added value over predictions based on age and sex. Further longitudinal research, with larger study populations, is needed to establish the predictive ability of the tool and to explore its potential to detect nutritional risk in a range of older populations. Early screening may help to address nutritional risk in a timely manner in older adults living in the community.

References

Stratton R, Smith T, Gabe S (2018) Managing malnutrition to improve lives and save money. BAPEN (British Association of Parenteral and Enteral Nutrition). https://www.bapen.org.uk/resources-and-education/publications-and-reports/malnutrition. Accessed 12 Dec 2019

Cruz-Jentoft AJ, Bahat G, Bauer J et al (2019) Sarcopenia: revised European consensus on definition and diagnosis. Age Ageing 48:16–31. https://doi.org/10.1093/ageing/afy169

Visser M, Volkert D, Corish C et al (2017) Tackling the increasing problem of malnutrition in older persons: the Malnutrition in the Elderly (MaNuEL) knowledge hub. Nutr Bull 42:178–186. https://doi.org/10.1111/nbu.12268

Lengyel CO, Jiang D, Tate RB (2017) Trajectories of nutritional risk: the Manitoba follow-up study. J Nutr Health Aging 21:604–609

Jyväkorpi SK, Pitkälä KH, Puranen TM et al (2016) High proportions of older people with normal nutritional status have poor protein intake and low diet quality. Arch Gerontol Geriatr 67:40–45. https://doi.org/10.1016/j.archger.2016.06.012

Soysal P, Smith L, Capar E et al (2019) Vitamin B12 and folate deficiencies are not associated with nutritional or weight status in older adults. Exp Gerontol 116:1–6. https://doi.org/10.1016/j.exger.2018.12.007

Posner BM, Jette AM, Smith KW et al (1993) Nutrition and health risks in the elderly: the nutrition screening initiative. Am J Public Health 83:972–978

Buys DR, Roth DL, Ritchie CS et al (2014) Nutritional risk and body mass index predict hospitalization, nursing home admissions, and mortality in community-dwelling older adults: results from the UAB Study of Aging with 8.5 years of follow-up. J Gerontol A Biol Sci Med Sci 69:1146–1153. https://doi.org/10.1093/gerona/glu024

Sahyoun NR, Jacques PF, Dallal GE et al (1997) Nutrition screening initiative checklist may be a better awareness/educational tool than a screening one. J Am Diet Assoc 97:760–764. https://doi.org/10.1016/S0002-8223(97)00188-0

Beck AM, Ovesen L, Osler M (1999) The ‘Mini Nutritional Assessment’ (MNA) and the ‘Determine Your Nutritional Health’ checklist (NSI checklist) as predictors of morbidity and mortality in an elderly Danish population. Br J Nutr 81:31–36. https://doi.org/10.1017/S0007114599000112

Jensen GL, Cederholm T, Correia MITD et al (2019) GLIM criteria for the diagnosis of malnutrition: a consensus report from the global clinical nutrition community. JPEN J Parenter Enteral Nutr 43:32–40. https://doi.org/10.1002/jpen.1440

IPAQ group (2005) Guidelines for Data Processing and Analysis of the International Physical Activity Questionnaire (IPAQ) – Short and Long Forms. https://biobank.ctsu.ox.ac.uk/crystal/crystal/docs/ipaq_analysis.pdf. Accessed 15 Nov 2018

Robinson SM, Jameson KA, Bloom I et al (2017) Development of a short questionnaire to assess diet quality among older community-dwelling adults. J Nutr Health Aging 21:247–253. https://doi.org/10.1007/s12603-016-0758-2

Fried LP, Tangen CM, Walston J et al (2001) Frailty in older adults: evidence for a phenotype. J Gerontol A Biol Sci Med Sci 56:M146–M156

Syddall HE, Martin HJ, Harwood RH et al (2009) The SF-36: a simple, effective measure of mobility-disability for epidemiological studies. J Nutr Health Aging 13:57–62

Tombaugh TN, McIntyre NJ (1992) The mini-mental state examination: a comprehensive review. J Am Geriatr Soc 40:922–935

Lubben J, Blozik E, Gillmann G et al (2006) Performance of an abbreviated version of the Lubben Social Network Scale among three European community-dwelling older adult populations. Gerontologist 46:503–513. https://doi.org/10.1093/geront/46.4.503

Baillon S, Dennis M, Lo N et al (2014) Screening for depression in Parkinson’s disease: the performance of two screening questions. Age Ageing 43:200–205. https://doi.org/10.1093/ageing/aft152

Wilson M-MG, Thomas DR, Rubenstein LZ et al (2005) Appetite assessment: simple appetite questionnaire predicts weight loss in community-dwelling adults and nursing home residents. Am J Clin Nutr 82:1074–1081. https://doi.org/10.1093/ajcn/82.5.1074

Roberts HC, Denison HJ, Martin HJ et al (2011) A review of the measurement of grip strength in clinical and epidemiological studies: towards a standardised approach. Age Ageing 40:423–429. https://doi.org/10.1093/ageing/afr051

Blumberg SJ, Bialostosky K, Hamilton WL et al (1999) The effectiveness of a short form of the Household Food Security Scale. Am J Public Health 89:1231–1234

Robinson S, Syddall H, Jameson K et al (2009) Current patterns of diet in community-dwelling older men and women: results from the Hertfordshire Cohort Study. Age Ageing 38:594–599

Drewnowski A, Evans WJ (2001) Nutrition, physical activity, and quality of life in older adults: summary. J Gerontol A Biol Sci Med Sci 56:89–94

Shlisky J, Wu D, Meydani SN et al (2017) Nutritional considerations for healthy aging and reduction in age-related chronic disease. Adv Nutr 8:17–26. https://doi.org/10.3945/an.116.013474

Volkert D, Beck AM, Cederholm T et al (2019) ESPEN guideline on clinical nutrition and hydration in geriatrics. Clin Nutr 38:10–47. https://doi.org/10.1016/j.clnu.2018.05.024

Keller HH (2007) Promoting food intake in older adults living in the community: a review. Appl Physiol Nutr Metab 32:991–1000. https://doi.org/10.1139/h07-067

Irz X, Fratiglioni L, Kuosmanen N et al (2014) Sociodemographic determinants of diet quality of the EU elderly: a comparative analysis in four countries. Public Health Nutr 17:1177–1189. https://doi.org/10.1017/s1368980013001146

Maynard M, Gunnell D, Ness AR et al (2006) What influences diet in early old age? Prospective and cross-sectional analyses of the Boyd Orr cohort. Eur J Public Health 16:316–324. https://doi.org/10.1093/eurpub/cki167

Hengeveld LM, Wijnhoven HAH, Olthof MR et al (2018) Prospective associations of poor diet quality with long-term incidence of protein-energy malnutrition in community-dwelling older adults: the Health, Aging, and Body Composition (Health ABC) study. Am J Clin Nutr 107:155–164. https://doi.org/10.1093/ajcn/nqx020

Power L, Mullally D, Gibney ER et al (2018) A review of the validity of malnutrition screening tools used in older adults in community and healthcare settings—a MaNuEL study. Clin Nutr ESPEN 24:1–13. https://doi.org/10.1016/j.clnesp.2018.02.005

Bailey RL, Miller PE, Mitchell DC et al (2009) Dietary screening tool identifies nutritional risk in older adults. Am J Clin Nutr 90:177–183. https://doi.org/10.3945/ajcn.2008.27268

Papadaki A, Scott JA (2007) Relative validity and utility of a short food frequency questionnaire assessing the intake of legumes in Scottish women. J Hum Nutr Diet 20:467–475. https://doi.org/10.1111/j.1365-277X.2007.00809.x

Nieuwenhuizen WF, Weenen H, Rigby P et al (2010) Older adults and patients in need of nutritional support: review of current treatment options and factors influencing nutritional intake. Hum Nutr Clin Nutr 29:160–169. https://doi.org/10.1016/j.clnu.2009.09.003

Brighton and Hove Food Partnership (2017) Eating well to stay healthy as you age. https://bhfood.org.uk/resources/eat-well-as-you-age/. Accessed 06/03/2019

Food and Fuel Working Party Kensington and Chelsea (2015) Food for vitality: are you getting enough nutrition? https://www.peoplefirstinfo.org.uk/leaflets-library/leaflets-for-people-in-kensington-and-chelsea/. Accessed 06/03/2019

NHS Leeds Community Healthcare, NHS Leeds, Leeds City Council (2010) Spotting the signs of malnutrition. https://www.leeds.gov.uk/docs/spotting%20the%20signs%20of%20malnutrition.pdf. Accessed 06/03/2019

Brunt AR (2007) The ability of the DETERMINE checklist to predict continued community-dwelling in rural, white women. J Nutr Elder 25(3–4):41–59. https://doi.org/10.1300/J052v25n03_04

Sugiura Y, Tanimoto Y, Imbe A et al (2016) Association between functional capacity decline and nutritional status based on the nutrition screening initiative checklist: a 2-year cohort study of Japanese community-dwelling elderly. PLoS ONE 11(11):e0166037. https://doi.org/10.1371/journal.pone.0166037

Blane D, Abraham L, Gunnell D et al (2003) Background influences on dietary choice in early old age. J R Soc Promot Health 123:204–209. https://doi.org/10.1177/146642400312300408

Brunt AR, Schafer E, Oakland MJ (1999) The ability of the DETERMINE checklist to predict dietary intake of white, rural, elderly, community-dwelling women. J Nutr Elder 18:1–19. https://doi.org/10.1300/J052v18n03_01

Leij-Halfwerk S, Verwijs MH, van Houdt S et al (2019) Prevalence of protein-energy malnutrition risk in European older adults in community, residential and hospital settings, according to 22 malnutrition screening tools validated for use in adults ≥65 years: a systematic review and meta-analysis. Maturitas 126:80–89. https://doi.org/10.1016/j.maturitas.2019.05.006

Funding

This research is supported by the National Institute for Health Research through the NIHR Southampton Biomedical Research Centre.

Ethics declarations

Conflict of interest

No author has a conflict of interest.

Ethics approval

The study had ethical approval from the UK National Research Ethics Service Committee Southwest, 14/SW/1129.

Informed consent

All participants gave written informed consent.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bloom, I., Pilgrim, A., Jameson, K.A. et al. The relationship of nutritional risk with diet quality and health outcomes in community-dwelling older adults. Aging Clin Exp Res 33, 2767–2776 (2021). https://doi.org/10.1007/s40520-021-01824-z
