Abstract

A novel method is proposed in this paper to obtain global solutions of fuzzy optimal control with fixed state terminal conditions and control bounds. The global solution implies that the optimal control solutions are valid for all the initial conditions in a region of the state space. The method makes use of Bellman’s principle of optimality and fuzzy generalized cell mapping method (FGCM). A discrete form of fuzzy master equation with a control dependent transition membership matrix is generated by using the FGCM. This allows to evaluate both the transient and the steady-state responses of the controlled system. The method, simply called FGCM with BP, is applied to three nonlinear systems leading to excellent control performances.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Uncertainties are ubiquitous [1]. They exist everywhere in nature and engineering, for example, earthquakes, ocean waves, aerodynamic forces acting on the fast moving vehicles as well as contact frictions in mechanical structures. In general, based on sampled data, uncertainties can be mathematically modeled as a random variable and a fuzzy set leading to the two theoretical categories of uncertain dynamics, i.e., stochastic and fuzzy differential equations, respectively. A response solution of uncertain systems is characterized by both its topology in the state space and its density measure in the probability and possibility space, namely probability density functions for stochastic uncertainty and membership distribution functions for fuzzy one. In this paper, we emphasize fuzzy uncertain dynamics. A major theoretical foundation of uncertain systems includes master equations describing the evolutions of density functions, also known as density dynamics. Here, an emphasis is laid on a fuzzy master equation describing the evolutions of membership distribution functions [2, 3]. This fuzzy master equation is analogous to the Fokker–Planck–Kolmogorov equation for the probability density function of stochastic processes. The solution to this equation is in general very difficult to obtain analytically. A numerical method is a key approach to obtain the response solutions of fuzzy uncertain systems [4].

Optimal control of dynamic systems is an active research topic due to its mathematical theories and engineering applications. Optimal control solutions are always difficult to find for complex systems, especially for fuzzy nonlinear systems with bounded control and state variables. Most researchers have focused on the study of fuzzy logic control based on if-then rules [5], while only slight progress has been made in the analytical numerical solution of optimal control of fuzzy nonlinear systems. It should be emphasized that the optimal control of fuzzy systems in this paper is based on fuzzy differential equations, which is different from the if-then rule. They share the concept of slicing fuzzy variables and achieve similar goals with different formations. These existing works employing the if-then rule may not match the accuracy achieved for the fuzzy dynamical system. Although the adaptation of human language provides convenience and comprehensibility, it is difficult to process in a systematic way due to the lack of a system knowledge such as fuzzy differential equations. In the field of optimal control of fuzzy dynamical systems, Zhu et al. proposed fuzzy optimal control expectation mathematical models [6] and studied the fuzzy optimal control of linear systems [7]. And based on that, a fuzzy optimal control problem for a multistage fuzzy system was studied [8]. In addition, a numerical algorithm solving fuzzy variable problems and fuzzy optimal control problems using He’s variational iteration method (VIM) was proposed [9]. These studies are impractical for most off-line applications and are computationally intensive or prohibitive. Therefore, efficient and practical numerical methods for optimal control based on fuzzy differential equations need to be developed.

The cell mapping method is an efficient numerical method for optimal control, requiring only one computational procedure. Moreover, the optimal solution obtained by the cell mapping optimal control method can be controlled by closed-loop feedback, giving the method better robustness and practical engineering implications. The cell mapping method was first applied to the optimal control of the nonlinear dynamic system by Hsu [10] and then was widely applied to the optimal control problem of deterministic systems [11,12,13] and stochastic systems. In terms of optimal control of stochastic systems: The cell mapping method based on short-time Gaussian approximation was used for the optimal control of stochastic dynamic systems [14]. A control-dependent transition probability matrix was constructed using the generalized cell mapping method. An optimal control strategy for nonlinear stochastic systems based on Bellman’s principle was subsequently proposed [15]. Based on the above research, a numerical method using dynamic programming to solve the optimal control of the stochastic dynamic system was proposed [16]. The optimal control solution obtained from the cell mapping can guarantee the global optimization of the control solution in the state space and has universal applicability to nonlinear systems. All the above studies are based on the cell mapping method and the Bellman’s principle of optimality.

As shown by the research above, it is effective and feasible to apply cell mapping to the optimal control of both deterministic systems and stochastic systems [15, 16]. In some control scenarios with uncertainty, models based on fuzzy set theory have been demonstrated to be more suitable than randomization [17]. However, the global solutions of fuzzy optimal control method based on cell mapping for nonlinear systems have not been studied. The Generalized Cell Mapping method (GCM) is a very effective tool for studying the global behavior of strongly nonlinear fuzzy systems [18, 19]. A fuzzy generalized cell mapping method (FGCM) was proposed to study the bifurcation of systems with fuzzy noise [4]. Based on this work, an adaptive interpolation fuzzy generalized cell mapping method (FGCM with AI) was proposed, which significantly improved the algorithm’s efficiency without losing accuracy [20, 21]. In addition, the adoption of GPU parallel technology makes the cell mapping method more efficient [22]. In this paper, the fuzzy generalized cell mapping method (FGCM) with parallel computing is extended to the fuzzy optimal control problem.

Based on the successful application of the fuzzy generalized cell mapping method (FGCM) to the global analysis of fuzzy systems and the efficiency of the Bellman’s principle of optimality applied to the optimal control problem with fixed terminal conditions and control bounds, the present paper proposes fuzzy generalized cell mapping with bellman’s principle method (FGCM with BP) for obtaining the global solutions of fuzzy optimal control for nonlinear systems.

The paper is organized as follows: The fuzzy optimal control is presented in Sect. 2. Section 2.1 gives the mathematical model of fuzzy optimal control. Section 2.2 gives the discrete form of optimal control model for fuzzy systems based on Bellman’s principle. Section 3 presents the method of Fuzzy Generalized Cell Mapping with Bellman’s Principle (FGCM with BP). This method can be made considerably more computationally efficient by employing parallel computing techniques. With the subdivision strategy, the control solution can be obtained with high precision. Real-time optimal feedback control schemes for fuzzy uncertainty systems have also been proposed. This scheme enables online real-time feedback control of the system with good robustness. In Sect. 4, three examples are given to illustrate the effectiveness and accuracy of the FGCM with BP for global solutions of optimal control. Finally, concluding remarks and some perspectives are given in Sect. 5.

2 Fuzzy optimal control

2.1 Problem formulation

Consider the following fuzzy optimal control of nonlinear systems:

where \(\textbf{x} \left( t \right) \in \textbf{R} ^{n}\) is the state vector, \(\textbf{u} \left( t \right) \in \textbf{R} ^{m}\) is the control input, \( S \) is fuzzy parameter, \(\textbf{x} _{0}\) is the initial state, If \( f \) satisfies Lipschitz condition with respect to \(\textbf{x}\), \(\textbf{u}\) and \( s\in \textbf{S}\), then equation(1) has a unique solution, and the solution is continuous with respect to \(\textbf{x} _{0}\) and \(\textbf{u}\), \( s \). Assuming that \( s \) cannot be accurately determined and is therefore described as a fuzzy set, Eq. (1) is the fuzzy differential equation, which can be expressed by the fuzzy differential inclusion relation [23]. The corresponding dynamic system perturbed by fuzzy noise can be expressed as:

\(\textbf{S}\) is a fuzzy set on \(\textbf{R}\), and its membership function is \(\mu _{s} \left( s \right) \in (0,1]\), where \( s\in {S}\). For \( \forall \alpha \in [0,1],[\textbf{S} ]^{\alpha } \) are non-empty compact sets on \( \textbf{R} \). Remember that the only solution of Eq. (1) is \( \textbf{x} \left( \cdot ,\textbf{x} _{0},s \right) \) and the solution of equation (2) is \(\textbf{X} \left( \cdot ,\textbf{X} _{0},S \right) \), for \( \forall \ \textbf{s} \in [\textbf{S} ]^{\alpha } \), with the relation \( \textbf{x} \left( \cdot ,\textbf{x} _{0},s \right) \in [\textbf{X} \left( \cdot ,\textbf{X} _{0},S \right) ]^{\alpha }\).

A fuzzy Poincare’ map can be obtained from Eq. (1) as

For fuzzy systems, by further discretizing a fuzzy variable, we have a FGCM in such a form as follows [4]:

The symbol “o” denotes the min-max operator, which is different from that in Markov chain. The FGCM is considered as a discrete representation of the fuzzy master equation for a continuous fuzzy process [2, 3]. The two important properties of FGCM include the existence of a normal form in the topological matrix and finite iterations to convergence of membership functions.

Let the cost function be

where \( E [ ] \) is the mathematical expectation of cost function, \( T \) is the terminal control time, \( \varphi \left( \textbf{x} \left( T \right) ,T \right) \) is the terminal cost function and \( L \left( \textbf{x}\left( t \right) ,\textbf{u} \left( t \right) \right) \) is integrable.

Optimal control is to find a control strategy \( \textbf{u} \left( t \right) \in \textbf{U} \in \textbf{R} ^{m} \) in a given initial state \(\textbf{x} \left( t_{0} \right) =\textbf{x} _{0} \) to reach the target set \( \varPsi \left( \textbf{x} \left( T \right) ,T \right) = 0\), and the cost function \( J \left( \textbf{x} _{0},\textbf{u} \left( t \right) \right) \) reaches the minimum or maximum. It should be noted that in the fuzzy optimal control problem of nonlinear systems, the cost function is a mathematical expectation value, which is related to the one-step transition membership function. The details will be illustrated later.

2.2 Bellman’s principle of optimality

Bellman’s principle is an essential basis for optimal control. For the optimal control problem, assume that \( [\textbf{X} ^{'}, \textbf{U} ^{'} ] \) is the optimal control evolution trajectory for the initial state \( \textbf{X} \left( t_{0} \right) =\textbf{x} _{0} \) over the entire time interval \( [t_{0},T ] \). Suppose \( t_{0}< \hat{t} < T \), no matter how the control input \( \textbf{u} \) in the time interval \( [t_{0},\hat{t} ] \) is selected, \( \textbf{U} ^{'}\) must be the optimal control of the state \( \textbf{X} \left( \textbf{U},\hat{t} \right) \) in the time interval \([\hat{t},T ] \) for the same optimal control problem. Let the cost function of optimal control be

Bellman’s principle can be formulated

where \(t_{0}\le \hat{t} \le T ,V\left( \textbf{x} \left( T \right) , T , T \right) =\varphi \left( \textbf{x} \left( T \right) , T \right) \)

Under discrete state space and discrete time, assuming that the system starts from \( \textbf{x} _{i} \) through the evolution of time interval \( \left[ j\tau , T \right] \), where \( \tau \) is the discrete time step. The cost function is divided into two parts in a step size \( \tau \), namely the incremental cost function and the accumulative cost function. The incremental cost function can be expressed as

The cumulative cost function from time \( \left( j+1 \right) \tau \) to the final state is

\( \left( \textbf{x} ^{'} \left( t \right) ,\textbf{u} ^{'} \left( t \right) \right) \) is the optimal solution on the time interval \( \left[ \left( j+1 \right) \tau , T \right] \). Then, the discrete form of Bellman’s principle can be expressed as

The one-step increased cost function of the system can be obtained by integrating the cost function from the initial state \( t=j\tau \) to \( t=\left( j+1 \right) \tau \), and the accumulated cost function value is obtained by adding all the one-step increase cost function values from \( t=\left( j+1 \right) \tau \) to \( t= T \) and the terminal cost function value.

According to Bellman’s principle of optimality, we can use the backward search strategy to search for the optimal solution. Starting from the terminal state and searching backward to the initial state, the global optimal solution can be then obtained.

3 Fuzzy generalized cell mapping with Bellman’s principle (FGCM with BP)

3.1 Fuzzy generalized cell mapping (FGCM)

To apply the fuzzy generalized cell mapping method (FGCM), we also need to discrete fuzzy set \( S \). We take \( M \) sampling points \( s_{k} \left( k=1,2\ldots M \right) \) from \( S \) while making sure the membership degree equals to 1 for at least one point. The state space is divided into \( N \) cells of the same size and assigned serial numbers from 1 to \( N \) in turn. A cell \( j \left( k=1,2\ldots N \right) \) represents a small domain \( \textbf{z} _{j} \) in the state space. In the same way, the control input \(\textbf{u} \) is discretized into \( N _{\mu } \) different levels: \( \left\{ \textbf{u} \left( 1 \right) ,\textbf{u} \left( 2 \right) ,\ldots ,\textbf{u} \left( N _{\mu } \right) \right\} \). And then substitute each \( \textbf{u}, t \) and \( s_{k} \left( k=1,2\ldots M \right) \in S \) into the equation (1) as a pair of certain quantities. Then, perform numerical integration to get the solution \( \textbf{x} \left( \textbf{u},t,s_{k} \right) \). Therefore, \( M \times N _{\mu } \times N _{\tau } \) trajectories will be generated starting from \( \textbf{z} _{j} \). Figure 1 illustrates the discretization of state space and trajectories among cells possessing different time intervals and fuzzy parameters.

Under a given control input \(\textbf{u} \), for the center point \( \textbf{x} _{0} \in \textbf{z} _{j} \) at different discrete times \( \left\{ t\left( 1 \right) , t\left( 1 \right) ,\ldots ,t\left( \tau \right) \right\} \), set the membership degree of each initial condition to 1, that is, \(\mu _{\textbf{x} _{0} } \left( \textbf{x} _{0} \right) =1 \). Therefore, \( M \times N _{\tau } \) trajectories will be generated starting from cell \( j \). The mapping point of each trajectory is \( \textbf{x} ^{'} \left( \textbf{u},t,s_{k} \right) \), If a total of \( m\left( 0\le m\le MN_{\tau } \right) \) trajectories enter cell \( i \), The one-step membership function of the k-th trajectory is expressed as follows:

one-step transition membership degree from cell \( j \) to cell \( i \) can be obtained.

We can get the one-step transition membership function \( p_{ij} \) of each cell under \( \left\{ \textbf{u} \left( 1 \right) , \textbf{u} \left( 2 \right) ,\ldots , \textbf{u} \left( N_{\mu } \right) \right\} \). Figure 2 explains the procedure of constructing one-step membership transition matrix, which equals to the criteria applied to select individual \( p_{ij} \) for P. The one-step transition membership function \( p_{ij} \) will be used in the adaptive backward optimal search below.

To evaluate the control performance, we need to obtain its transient and steady-state membership functions. We choose the mean value of the discrete time series as the step size used in FGCM. Suppose that \( p_{j} \left( r \right) \) is the membership degree of the system state in cell \( j \) after the \( r \) step of mapping, that is, the membership degree of the center point \( \textbf{x} _{0} \in \mathbf {{z} } _{j} \) is \( \mu _{\textbf{x} _{0} } \left( \textbf{x} _{0} \right) =p_{j} \left( r \right) \), then after another step of mapping, the membership degree of the system state from cell \( j \) to cell \( i \) is given as follows:

Now consider the mapping from all cells, then after the \( r+1 \) step of mapping, the membership degree of the system state belonging to cell \( i \) is given as follows:

Remember that the membership function vector after the \( r \) step of mapping is \( \textbf{p}(r)\), and the i-th element in the vector is \(\textbf{p}_{i} \left( r \right) \). Let the one-step transition membership matrix be \(\textbf{P} \), and the element in the i-th row and j-th column of the matrix is \( p_{ij} \). Then, there is the following formula.

where \( \textbf{P} ^{r+1} =\textbf{P} \circ \textbf{P} ^{r},\textbf{P} ^{0} =\textbf{I},\) “\(\circ \)” represents the min-max operation, and \( \textbf{p} \left( 0\right) \) is the initial membership function vector. Repeat the calculation of Eq. (15) to get the transient and steady-state membership functions.

In order to improve the computational efficiency, we adopted the parallel technique into this work. The pseudocode of this algorithm is shown in Algorithm 1. In this program, the number of threads executed simultaneously needs to be chosen based on both the hardware capacity and the system’s nature. The system cost in swapping memories between CPU and GPU could sometimes be large enough to counteract the time saved by introducing the parallel technique. Therefore, we recommend tying to minimize the average time for the kernel to execute one thread in GPU and load the results to its destination in CPU.

As stated above, the global analysis of fuzzy systems relies on the construction of one-step membership transition matrix. In the context of optimal control, one needs transition matrixes for the backward search process, which will be illustrated in the following part of this article.

After constructing the mapping database by the method above, the next step is to search of the optimal control solution of each controllable cell with this database. Based on the Bellman’s principle, this paper proposes an adaptive backward search strategy for fuzzy systems. Due to the error caused by the discretization of the state space, this paper also adopts a subdivision strategy to refine the target set.

3.2 Adaptive backward search strategy based on BP

Each cell is given a maximum (or minimum) initial accumulative cost function value before searching, and then, a backward search strategy is performed to update the accumulative cost function of each cell adaptively. The backward search strategy starts from the last segment of the time interval \( \left[ T -\tau , T \right] \). Since the terminal condition of the last time segment is known, which is the desired state of control process, we can get all local optimal solutions for all possible initial conditions \( \textbf{x} \left( T -\tau \right) \). Then, repeat this process to obtain the local optimal control solution for the next segment of the time interval \( \left[ T -2\tau , T \right] \). The optimal control solution of the time interval \( \left[ j\tau , T \right] \) is determined by minimizing (maximizing) the sum of the incremental cost function value and the accumulative cost function value in Eq. (12). In the next part, the minimum cost function value is taken as an example to illustrate this adaptive backward search strategy.

In fuzzy optimal control of nonlinear systems, the system state is represented by the membership distribution function, which could be seen as a state vector, whose dimension equals to the total cell number. An initial state \( \textbf{x} \left( n\right) \) evolves to another state \( \textbf{x} \left( n+1\right) \) under the action of given control input \(\textbf{u} \left( n\right) \). In order to select the optimal control solution for fuzzy systems, we adopt the concept of total probability and probability expectation. The specific process is introduced as follows:

For cell j under a given control input \( \textbf{u} \left( n\right) \), we define \( P_{\varPhi } \) as \( P_{\varPhi } =\varSigma _{\textbf{z} _{i} \in \varPhi } p_{ij} \). The cells where the optimal control solution are found will be added to the target cell to form an extended target cell \( \varPhi \). \( P_{\varPhi } \) represents the possibility of cell j reaching an extended target cell \( \varPhi \) under a given control input \( \textbf{u} \left( n\right) \), and only cells that satisfies \( P_{\varPhi } > P_{set} \) will be considered controllable in one specific iteration. \( P_{set} \) is set manually in advance, as it goes higher, the final controlled state tends to concentrate more around the target region, but we may risk failing to recognize more actually controllable cells.

If a total of \( m\left( 0\le m\le MN _{\tau } \right) \) trajectories enter cell i, the one-step membership function of the k-th trajectory is obtained by Eq. (11). \( J_{ij}^{k} \) is the increased cost of the k-th trajectory in m trajectories from cell j to cell i.

Then, find the minimum (or maximum) value of the following formula to determine the optimal control input \( \textbf{u} ^{'} \left( n \right) \) of cell j.

Let \( \textbf{u} ^{'} \left[ j \right] =\textbf{u} _{\textrm{max}} \) represent the initial optimal control level of each cell, and \( E \left( J^{'}[j] \right) = J _{\textrm{max}} \) represents the initial accumulative cost function value of each cell. In the process of adaptive backward search, if starting from a cell \( \textbf{z} _{j} \) and passing through n adjoining cell mappings, the target set that satisfies the formula can be reached, then this cell is called the n-step optimal controllable cell. This means that after n cell mapping, optimal control can be achieved. In summary, the steps of adaptive backward search are as follows:

-

1.

Set the cell where the target set satisfies the state of formula \(\varPsi \left( \textbf{x} \left( T \right) , T \right) =0 \) as the target cell, assign the accumulative cost function value of all the target cells to 0 and treat all the target cells as the n-step optimal controllable cell ( \(n=0 \) ).

-

2.

Find the adjoining pre-image cells of all the n-step optimal controllable cell, and find all mapping pairs that can satisfy \(P_{\varPhi } > P_{set} \) in the mapping database.

-

3.

Find the mathematical expectation of incremental cost function value corresponding to these mappings, and obtain the mathematical expectation of the cumulative cost function value of the pre-image cell by adding the mathematical expectation of the incremental cost function value and the accumulative cost of the image cell.

-

4.

If a mapping starting from the pre-image cell \(\textbf{z} _{j} \) can make the optimal accumulative cost function value smaller, then \( E \left( J^{'}[j] \right) \) is updated to this smaller value, and the corresponding optimal control input \(\textbf{u} ^{'} \left[ j \right] \) is updated at the same time.

-

5.

Let \( n=n+1 \), and set the updated cell of \( E \left( J^{'}[j] \right) \) and \( \textbf{u} ^{'} \left[ j \right] \) as the new the n-step optimal controllable cell.

-

6.

The search process is terminated when the following conditions are met, otherwise it continues to execute from step 2.

-

(1)

\( n=N \), N is the number of total cells.

-

(2)

The \( E \left( J^{'}[j] \right) \) and \( \textbf{u} ^{'} \left[ j \right] \) of all cells has not been updated in the last search.

-

(1)

It should be noted that \( E \left( J^{'}[j] \right) \) and \( \textbf{u} ^{'} \left[ j \right] \) of each cell are adaptively updated during the optimal search process, so it is an adaptive optimal search. The flowchart of the search process is shown in Fig. 3.

In a deterministic dynamic system, the optimal trajectory of the system can be determined by executing the discrete optimal control table. However, due to the influence of fuzzy noise in the fuzzy system, we cannot know the definite value of its next state. In this paper, transient membership and steady membership are used to evaluate its control performance. The specific methods are introduced as follows.

For a given initial state vector \( \textbf{x} \left( 0 \right) \), find out every item with a nonzero membership degree. Part of these items are controllable, therefore we could look up their corresponding \( \textbf{u} ^{'} \) in the discrete optimal control table. And then we could select the \( \textbf{u} ^{'} \left( 1 \right) \) which owns the largest sum of membership degrees as the next control input for traditional FGCM. After repeated iterations n times, the transient and steady-state membership of the system state can be obtained, which could be used to verify the controllability of the system.

3.3 Subdivision strategy

For a specific control process, if a certain control precision cannot be reached with the limited computational resources, the subdivision strategy could be considered a supplement for the existing method. The subdivision program checks the distance between the system state and the target set constantly, and refine the cell set as the trajectory comes closer to the target. The pseudocode of this strategy is given as follows:

As seen in the Algorithm 2, a large proportion of the state space may be considered irrelevant for this specific process and are abandoned; therefore, control solutions that come from subdivision are usually unable to generate trajectories for another initial state. Moreover, the resolution should be increased to the final goal as fast as possible, since every time the cell size is decreased, or in other words the subdivision happens, one additional integration process and backward searching is required.

After introducing the subdivision strategy, the cost distribution and the control input selection can be obtained with very high precision; hence, it can be used to refine the trajectories near the target set.

3.4 Real-time optimal feedback control

The discrete optimal control table (DOCT) can be obtained after acquiring the mapping database and adaptive backward search strategy, in which an optimal control input is selected for every controllable cell. The sampling time is fixed in the actual application of feedback control and satisfies that it is less than the integration time in FGCM with BP.

For a given initial controllable state \( \textbf{x} \left( 0 \right) \), The optimal feedback control can be performed through DOCT, and the specific process is as follows:

-

1.

Let \( n=0 \), find the cell \( \textbf{z} \left( 0 \right) \) where the initial state \( \textbf{x} \left( 0 \right) \) is located.

-

2.

Find the optimal control input \( \textbf{u} \left( n \right) \) corresponding to \( \textbf{z} \left( n \right) \) in the DOCT.

-

3.

The system state evolves from \( \textbf{x} \left( n \right) \) to \( \textbf{x} \left( n+1 \right) \) after an integration step (sampling time \( T_{s} \) )under the control input \(\textbf{u} \left( n \right) \).

-

4.

Find the cell \(\textbf{z} \left( n+1 \right) \) (the cell number has been changed ) where \( \textbf{x} \left( n+1 \right) \) is located, if \( \textbf{z} \left( n+1 \right) \) is the target cell, the calculation ends, otherwise let \(n=n+1 \), return to step 2.

The flowchart of using DOCT table for optimal feedback control is shown in Fig. 4.

The feedback control will not end until the system state reaches the target set. Each cell in the DOCT constructed by Hsu [10] has a corresponding integration time and optimal control input. Each cell in the DOCT constructed in this paper corresponds to only one optimal control input, which means a significant reduction in memory space and calculations. The DOCT table constructed in this paper can better adapt to the real-time optimal feedback control of fixed sampling time \( T_{s} \).

4 Examples

In this section, the method of FGCM with BP proposed in this paper is applied to three examples. The first example is the classic Bang-Bang shortest time control problem, which proves the effectiveness and accuracy of the method. The second example is the optimal steering of a vehicle over a vortex field. The third example is the quadratic optimal control of a single inverted pendulum on a moving cart, which demonstrates the accuracy of the optimal search method and how the control performance is evaluated.

4.1 Example 1

Consider the shortest time Bang-Bang control problem of the fuzzy system as follows:

where S is the fuzzy parameter. The target set is (0,0), the initial point is (\(-\)0.7, \(-\)1.4), the control input is [− 1,1], the cost function is the time used to reach the target set: \( J= \int \textrm{d}t=t_{\textrm{end}}-t_{\textrm{start}} \) and the selected state space is [− 2,2] \(*\) [− 2,2]. The selected state space is uniformly discretized into 100*100 cells to search for the optimal solution. For the classical problem of Bang-Bang control without any form of uncertainty, there exists both numerical results of optimal control selection and the analytical control conversion curve. Let \(\varepsilon \) = 0.02 (\(\varepsilon \) is a parameter characterizing the degree of fuzziness of S). We can get the Global distribution of the optimal control solution in Fig. 5.

After applying FGCM with BP under the conditions above, the selected optimal control solution of the state space is given in Fig. 5. The cells in red are selected − 1 to be the optimal control solution, while cells in black are selected 1 to be the optimal control solution. The blue curve represents the analytical solution of the deterministic system in Fig. 5; it is shown that results obtained by FGCM with BP method correspond well to the analytical solution of this deterministic system.

Figure 6 are the optimal trajectories of the deterministic system and fuzzy system(\(\varepsilon \)= 0.02) with an initial point of (\(-\) 0.7, \(-\) 1.4). Red line represents the trajectory with the membership of 1 extracted from the fuzzy system. Blue line represents the optimal trajectory graph in the deterministic system. It can be seen that the two trajectories have a good correspondence.

Figure 7a, b are the global distribution of incremental cost of the deterministic system and fuzzy system (\(\varepsilon \) = 0.02),respectively. Figure 7c, d are the global distribution of accumulative cost the deterministic system and fuzzy system (\(\varepsilon \) = 0.02), respectively. The comparison between two columns demonstrates that both the incremental and the accumulative cost distribution of the fuzzy system obtained by FGCM with BP has the same basic form as those of the deterministic system. However, the existence of a small fuzzy uncertainty smooths the gradient of cost distribution, allowing more cells to reach target set at a relatively low cost.

This is achieved at the cost of lowering the standard of ‘reaching target set’. The intrinsic nature of fuzzy uncertainty makes it almost impossible for the state vector to reach the target set completely, that is to say, the controlled final state vector is very likely to have nonzero items outside the precise location of the target set.

In the following part, we use FGCM with BP method to apply feedback control and observe how the system evolves into the target set from a certain initial state. Figure 8 is the steady-state membership density function (MDF) of the controlled fuzzy system (\(\varepsilon \) = 0.02). It shows that the probability of the fuzzy system reaching the terminal state is 1, indicating that the system can reach target set under the optimal control law constructed by PGCM with BP. Table 1 shows the difference in computing time cost with and without parallel computing. It can be seen from the table that the computing efficiency can be greatly improved with parallel computing.

4.2 Example 2

The optimal steering of a vehicle over a vortex field is also studied. The vehicle moves on the \( (x_{1},x_{2})\) plane with constant velocity relative to the vortex. The control u is the heading angle with respect to the positive \(x_{1}\)-axis.The velocity field is given by \( v(x_{1},x_{2})=ar/(br_{}^{2} +c )\), where \( r=\left| \textbf{ x} \right| \) is the radius from the center of the vortex. The fuzzy system is as follows:

where S is the fuzzy parameter, and the selected state space is [− 2,2] * [− 2,2]. The selected state space is uniformly discretized into 1089 cells to search for the optimal solution. Let \({\varvec{\Omega }}\) be the set of cells corresponding to the target set \({\varvec{\Psi }} =\left\{ x_{1}=2,x_{2} \right\} \), i.e. \({\varvec{\Omega }} \) has the rightmost column of cells in state space. Take \(\textbf{U} =\left\{ -\pi , -14\pi /15,\ldots , 14\pi /15\right\} , \lambda = 1, \textit{a} = 5, \textit{b} = 10, \textit{c} = 2 \), the cost function is used to reach the target set as follows:

Figure 9 shows the mean vector field of the vortex. A trajectory of a freely moving vehicle is superimposed. The center of the vortex attracts probability due to the state dependence of the diffusion and not to the existence of an attracting point at the origin. This behavior has no counterpart in a deterministic analysis. Take the initial point is (\(-\) 0.8, \(-\) 0.32), and the vector field of the mean of the controlled response is shown in Fig. 10.

Controlled trajectories can be inferred from Fig. 8. We could see that most parts of the selected region are controllable while a tiny percentage of initial conditions evolve into other regions. Most of the uncontrollable cells are located in the margin area of the selected space. This may result from a course division of cells and too small a selected region.

After proving the controllability of our method in this example, we present Fig. 11 illustrating how the variance of the fuzzy parameter affects both the transient and steady-state membership distribution function. When the fuzzy noise becomes stronger, it is clear that more cells possess nonzero memberships. However, the trajectory which represents cells with memberships of 1 remains unchanged, indicating the validness of our method.

a Controlled transient state membership distribution function when \(\varepsilon \) = 0.02, t = 0.3 unit; b Controlled transient state membership distribution function when \(\varepsilon \) = 0.1, t = 0.3 unit; c Controlled steady-state membership distribution function when \(\varepsilon \) = 0.02; d Controlled steay state membership distribution function when \(\varepsilon \) = 0.1

Figure 11a, b are controlled transient state membership distribution function when \(\varepsilon \) = 0.02, \(\varepsilon \) = 0.1, respectively, after 0.3 time units. Figure 11c, d are controlled steay state membership distribution function,respectively. In Fig. 11d, it can be observed that a discontinuity exists among the nonzero memberships of multiple cells. This results from the discretion of fuzzy parameter S. Computational power permitting, S can be divided into finer grids alleviating this obvious discontinuity. It should also be noted that this discontinuity exists for all four figures in Fig. 11, but is not visible in other three figures.

4.3 Example 3

Consider the optimal control problem of a single inverted pendulum on a moving cart under the horizontal force \( \textbf{u} \) [24], The mass of the mobile cart is M, the mass of the pendulum is m and length of the pendulum is l. The angle of the pendulum relative to the vertical upward position of the cart is \( \phi \). Assume there is no friction. We ignore the cart and only consider the dynamic equations related to the pendulum. Let \( \textbf{x} _{1} =\phi ,\textbf{x} _{2} =\dot{\phi } \), the state equation is as follows:

where \( m_{r} =m/(m+M) \), we use the parameter values in the literature [24] to facilitate comparison with its results: m = 2 kg, M =8 kg, l = 0.5 m, \(g = 9.8\,\textrm{m}/\textrm{s}^2\), s is the uncertainty parameter, the terminal state is (0,0), the initial point is (\(-\) 3.14, 0), the control input is [− 64,64] and it is divided into nine levels, the range of the selected state space is [− 8,8] \(*\) [− 10,10], and the state space is discretized into 512 \(*\) 512 cells.

The cost function is a purely quadratic:

We studied the optimal control of the fuzzy system and obtained the global distribution of the optimal accumulative cost with \(\varepsilon \) = 0.02 and \(\varepsilon \) = 0.5, respectively, in Fig. 12. The optimal accumulative cost runs from 0 (blue) to 15 (red), and the white area is the uncontrollable area in Fig. 12. From the comparison of Fig. 12a, b, it can be seen that the accumulative cost increases gradually as the uncertainty increases.

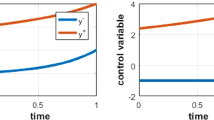

This paper obtains the discrete optimal control table through the adaptive backward optimal search method of fuzzy uncertain system. Each cell in the discrete optimal control table corresponds to an optimal control input. Figure 13 shows the global distribution of the optimal control solution when \(\varepsilon \) = 0.02.

Because there are fuzzy parameters in a fuzzy system, under a given control input, it is impossible to know which state to evolve in the next step, so it is impossible to obtain an optimal control trajectory, which is different from a deterministic system. In order to evaluate the control performance of the system, this paper adopts the transient membership degree and the steady-state membership degree. Since it is impossible to determine which cell the subsequent evolution trajectory of the system falls into under a given control input, the next control input to the system cannot be determined. This paper proposes a method by combining the membership function and the discrete optimal control table. First, the optimal control input of all the cells that may be reached in the next step need to be found in the discrete optimal control table. Then, find the optimal control input corresponding to the maximum sum of membership degrees, and use it as the feedback control input of the current state of the system. Controlled performance evaluation methods of systems is used to observe whether the system can reach the target set from an initial state, thus verifying the effectiveness and accuracy of FGCM with BP method.

For a given initial point (\(-\) 3.14, 0), Fig. 14 shows the optimal control input time history diagram. Figure 15 extracts the trajectory with membership degree of 1. To avoid redundant computation without losing accuracy, the subdivision technique is introduced when the trajectory enters a certain region surrounding the target set. That is to say, the cells are gradually downsized as it comes closer to the target set. In Fig. 15, the red curve represents the trajectory with the membership degree of 1 which is obtained by subdivision, while the black curve represents the trajectory obtained without the subdivision strategy. By using subdivision technology, the optimal control trajectory can reach the target set more accurately.

Figure 16 is the distribution of accumulative cost obtained by subdivision for cells near the target. Cells within the close range of the target set are further divided to increase the resolution, and a more accurate trajectory is generated by the method proposed. The area which is not passed through by the new trajectory is temporarily considered uncontrollable in the subdivision process. It can be clearly seen that the optimal control trajectory accurately reaches the target set. Figure 16 shows that the adaptation of subdivision strategy has greatly improved control accuracy. Figure 17 shows trajectories in the phase space with the evolution of time, which can be seen that the system is controllable. Figure 15 shows the trajectories constituted by points with membership of 1, which represents the system state falls into these cells with the highest probability at the corresponding time segment.

For systems with uncertainties, no matter stochastic or fuzzy, control methods pursuing high precision should try to concentrate the nonzero items in the final state vector to the target set, or at least lower the probabilities for cells outside the target set. However, no method has completed this task perfectly as far as we know. In the process of feedback control, the decrease in sampling time generally increases the control precision, for it adjusts the control inputs more frequently. An increase in the number of control input discretization also benefits the final control quality, while the computational cost could go up at the same scale.

Based on these considerations, our method serves well in occasions where large amounts of control processes are needed for one single environment, since our method only needs a one-time computation and searching process, before it generates solutions for all possible initial states.

5 Conclusions

Focusing on fuzzy optimal control of nonlinear systems, we propose a method of Fuzzy Generalized Cell Mapping with Bellman’s Principle (FGCM with BP) as a numerical solution. The traditional fuzzy generalized cell mapping method is further developed with the parallel technique, which serves as the foundation of the optimal control method proposed. Multiple one-step membership transition matrixes can now be obtained hundreds of times faster, which could be considered acceptable for most occasions. After the construction of the mapping database, the optimal control inputs are selected for all controllable cells though the backward searching process. Uncontrollable cells are also identified along with this process. It is worthy of noticing that a subdivision strategy is introduced to the searching process, which greatly reduce the time consumption of this stage. To implement this method successfully, one is required to methodically choose the time series used in the construction of mapping database, as stated in earlier sections.

On the completion of the backward search, the DOCT table is formed, which enables one to look up the optimal control solution for various known states of the analyzed system. In real-time feedback control, we need to constantly monitor the system state and then adjust the control inputs according to the DOCT table. Three examples are given to demonstrate the proposed method’s effectiveness.

In the Bang-Bang control system, let \( \varepsilon = 0.02 \), all results stay in the same shape with those in the deterministic system. Good correspondence is shown in control solutions transition curves, and more importantly the controlled trajectories, indicating the correctness of the method proposed. However, the cost gradient is obviously smoothed. Due to the existence of fuzzy uncertainties, cost expectations are lowered for most cells. However, the system is now almost impossible to reach the target set fully.

In the second example, the effectiveness of our approach is demonstrated again, in combination with a vector field that intuitively demonstrates nearly all possible controlled trajectories. The discontinuity is analyzed for the membership distribution function. It is brought about by the discretion of the fuzzy parameter.

In the third example, the subdivision strategy is adapted to improve the control precision for one single trajectory. Several possible ways to improve the quality of control process are proposed, including the more frequent adjustments of control inputs and the finer discretization of control inputs, meanwhile their drawbacks are also discussed. Now the final controlled state cannot stay in the target set completely due to the existence of stochastic or fuzzy uncertainties, which is of certainly great significance for real word application. Therefore, the enhancements of this method shall be our future work.

Code Availability

The code that support the findings of this study are available from the corresponding author upon reasonable request.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Křivan V, Colombo G (1998) A non-stochastic approach for modeling uncertainty in population dynamics. Bull Math Biol 60(4):721–751

Friedman Y, Sandler U (1999) Fuzzy dynamics as an alternative to statistical mechanics. Fuzzy Sets Syst 106(1):61–74

Friedman Y, Sandler U (1996) Evolution of systems under fuzzy dynamic laws. Fuzzy Sets Syst 84(1):61–74

Hong L, Sun J-Q (2006) Bifurcations of fuzzy nonlinear dynamical systems. Commun Nonlinear Sci Numer Simul 11(1):1–12

Mustafa AM, Gong Z, Osman M (2019) Fuzzy optimal control problem of several variables. Adv Math Phys 2019

Zhu Y (2009) A fuzzy optimal control model. J Uncertain Syst 3(4):270–279

Zhao Y, Zhu Y (2010) Fuzzy optimal control of linear quadratic models. Comput Math Appl 60(1):67–73

Zhu Y (2011) Fuzzy optimal control for multistage fuzzy systems. IEEE Trans Syst Man Cybern Part B (Cybern) 41(4):964–975

Mustafa AM, Gong Z, Osman M (2021) The solution of fuzzy variational problem and fuzzy optimal control problem under granular differentiability concept. Int J Comput Math 98(8):1495–1520

Hsu CS (1985) A discrete method of optimal control based upon the cell state space concept. J Optim Theory Appl 46(4):547–569

Crespo LG, Sun JQ (2000) Solution of fixed final state optimal control problems via simple cell mapping. Nonlinear Dyn 23(4):391–403

Crespo LG, Sun JQ (2003) Fixed final time optimal control via simple cell mapping. Nonlinear Dyn 31(2):119–131

Cheng Y, Jiang J (2021) A subdivision strategy for adjoining cell mapping on the global optimal control in multi-input–multi-output systems. Optimal Control Appl Methods 42(6):1556–1567

Crespo LG, Sun JQ (2002) Stochastic optimal control of nonlinear systems via short-time gaussian approximation and cell mapping. Nonlinear Dyn 28(3):323–342

Crespo LG, Sun J-Q (2003) Stochastic optimal control via bellman’s principle. Automatica 39(12):2109–2114

Crespo LG, Sun JQ (2005) A numerical approach to stochastic optimal control via dynamic programming. IFAC Proc Vol 38(1):23–28

Klir GJ, Folger TA (1987) Fuzzy sets, uncertainty, and information. Prentice-Hall Inc

Chen Y-Y, Tsao T-C (1988) A new approach for the global analysis of fuzzy dynamical systems. In: Proceedings of the 27th IEEE conference on decision and control, pp 1415–1420. IEEE

Sun JQ, Hsu CS (1990) Global analysis of nonlinear dynamical systems with fuzzy uncertainties by the cell mapping method. Comput Methods Appl Mech Eng 83(2):109–120

Liu X-M, Jiang J, Hong L, Li Z, Tang D (2019) Fuzzy noise-induced codimension-two bifurcations captured by fuzzy generalized cell mapping with adaptive interpolation. Int J Bifurc Chaos 29(11):1950151

Liu X-M, Jiang J, Hong L, Tang D (2018) Studying the global bifurcation involving Wada boundary metamorphosis by a method of generalized cell mapping with sampling-adaptive interpolation. Int J Bifurc Chaos 28(02):1830003

Xiong F-R, Qin Z-C, Ding Q, Hernández C, Fernandez J, Schütze O, Sun J-Q (2015) Parallel cell mapping method for global analysis of high-dimensional nonlinear dynamical systems. J Appl Mech 82(11):111010

Hüllermeier E (1999) Numerical methods for fuzzy initial value problems. Int J Uncertain Fuzziness Knowl Based Syst 7(05):439–461

Hauser J, Osinga H (2001) On the geometry of optimal control: the inverted pendulum example. In: Proceedings of the 2001 American control conference (Cat. No. 01CH37148), vol 2, pp 1721–1726. IEEE

Funding

Funding was provided by Natural Science Foundation of China (Grant No. 11972274) and National Natural Science Foundation of China (Grant No. 12172267).

Author information

Authors and Affiliations

Contributions

All authors contributed to this paper.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pan, F., Yan, H., Jiang, J. et al. Fuzzy optimal control of nonlinear systems by fuzzy generalized cell mapping method with Bellman’s principle. Int. J. Dynam. Control 11, 1808–1822 (2023). https://doi.org/10.1007/s40435-022-01090-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40435-022-01090-5