Abstract

We propose an adaptive optimization algorithm for operating district heating networks in a stationary regime. The behavior of hot water flow in the pipe network is modeled using the incompressible Euler equations and a suitably chosen energy equation. By applying different simplifications to these equations, we derive a catalog of models. Our algorithm is based on this catalog and adaptively controls where in the network which model is used. Moreover, the granularity of the applied discretization is controlled in a similar adaptive manner. By doing so, we are able to obtain optimal solutions at low computational costs that satisfy a prescribed tolerance w.r.t. the most accurate modeling level. To adaptively control the switching between different levels and the adaptation of the discretization grids, we derive error measure formulas and a posteriori error measure estimators. Under reasonable assumptions we prove that the adaptive algorithm terminates after finitely many iterations. Our numerical results show that the algorithm is able to produce solutions for problem instances that have not been solvable before.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

An efficient and sustainable energy sector is at the core of the fight against the climate crisis. Thus, many countries around the world strive towards an energy turnaround with the overarching goal to replace fossil fuels with energy from renewable resources such as wind and solar power. However, one then faces issues with the high volatility of the fluctuating renewable resources. To overcome this fluctuating nature of wind and solar power, two main approaches are currently seen as the most promising ones: (i) the development and usage of large-scale energy storage systems as well as (ii) sector-coupling.

In this paper, we consider the computation of optimal operation strategies for district heating networks. These networks are used to provide customers with hot water in order to satisfy their heat demand. Thus, a district heating network can be seen both as a large-scale energy storage as well as a key element of successful sector-coupling. The hot water in the pipes of a district heating network is heated in so-called depots in which, usually, waste incineration is used as the primary heat source. If, however, waste incineration is not sufficient for heating the water, gas turbines are used as well. The hot water in the pipeline system can thus be seen as an energy storage that could, for instance, also be filled using power-to-heat technologies in time periods with surplus production of renewables. On the other hand, heat-to-power can be used to smooth the fluctuating nature of renewables in time periods with only small renewable production. Consequently, district heating networks can be seen as sector-coupling entities with inherent storage capabilities.

To make such operational strategies for district heating networks possible, an efficient control of the network is required that does not compromise the heat demand of the households that are connected to the network. However, a rigorous physical and technical modeling of hot water flow in pipes leads to hard mathematical optimization problems. At the core of these problems are partial differential equations for modeling both water and heat transport. Additionally, proper models of the depot and the households further increase the level of nonlinearity in the overall model. Finally, the tracking of water temperatures across nodes of the network leads to nonconvex and nonsmooth mixing models that put a significant burden on today’s state-of-the-art optimization techniques.

In this paper, we consider the simplified setting of a stationary flow regime. For closed-loop control strategies for instationary variants of the problem we refer to [1, 28, 35] and to [15] for open-loop optimization approaches. Interestingly, the literature on mathematical optimization for district heating networks is rather sparse. An applied case study for a specific district heating network in South Wales is done in [20] and [26] provides more a general discussion of technological aspects and the potentials of district heating networks. In [30], the authors follow a first-discretize-then-optimize approach for the underlying PDE-constrained problem. For the relation between district heating networks and energy storage aspects we refer to [5, 12, 33] and the references therein. Stationary models of hot water flow are also considered in studies on the design and expansion of networks as, e.g., in [2, 7, 27]. Numerical simulation of district heating networks using a local time stepping method is studied in [3] and model order reduction techniques for the hyperbolic equations in district heating networks are discussed in [23] or [24, 25]. Finally, a port-Hamiltonian modeling approach for district heating networks is presented and discussed in [13].

Despite the mentioned simplification of considering stationary flow regimes, the optimization problems at hand are still large-scale and highly nonlinear mathematical programs with complementarity constraints (MPCCs) that are constrained by ordinary differential equations (ODEs). It turns out that these models are extremely hard to solve for realistic or even real-world district heating networks if they are presented to state-of-the-art optimization solvers. Our contribution is the development of an adaptive optimization algorithm that controls the modeling and the discretization of the hot water flow equations in the network. A similar approach has already been developed and tested for natural gas networks in [16]. The main rationale is that simplified (and thus computationally cheaper) models can lead to satisfactory (w.r.t. their physical accuracy) results for some parts of the network whereas other parts require a highly accurate modeling to obtain the required physical accuracy. The problem, however, is that it is not known up-front where which kind of modeling is appropriate. Our adaptive algorithm is based on (i) a catalog of different models of hot water flow and on (ii) different discretization grids for the underlying differential equations. The proposed method then controls the choice of the model and the discretization grid separately for every pipe in the network. The switching between different models and discretization grids is based on rigorous error measures so that we obtain a finite termination proof stating that the method computes a locally optimal point that is feasible w.r.t. the most accurate modeling level and a prescribed tolerance. Besides these theoretical contributions, we also show the effectiveness of our approach in practice and, in particular, illustrate that instances on realistic networks can be solved with the newly proposed method that have been unsolvable before.

The remainder of the paper is structured as follows. In Sect. 2 we present our modeling of district heating networks and derive the modeling catalog for hot water flow as well as the discretizations of the respective differential equations. After this, we derive exact error measures and error measure estimators in Sect. 3 both for modeling as well as discretization error measures. These are then used in Sect. 4 to set up the adaptive optimization algorithm and to prove its finite termination. The algorithm is numerically tested in Sect. 5 before we close the paper with some concluding remarks and some aspects of potential future work in Sect. 6.

2 Modeling

We model the district heating network as a directed and connected graph \(G = ({V}, {A})\), which has a special structure. First, we have a so-called forward-flow part of the network, which is used to provide the consumers with hot water. Second, the cooled water is transported back to the depot in the so-called backward-flow part. These two parts are connected via the depot in which the cooled water is heated again, and via the consumers who use the temperature difference to satisfy the thermal energy demand in the corresponding household. The set of nodes of the forward-flow part is denoted by \({V}_{\text {ff}}\) and the set of arcs of this part is denoted by \({A}_{\text {ff}}\), i.e., \(a= (u, v) \in {A}_{\text {ff}}\) implies \(u, v\in {V}_{\text {ff}}\). In analogy, the set of nodes of the backward-flow part is denoted by \({V}_{\text {bf}}\) and the set of arcs of this part is denoted by \({A}_{\text {bf}}\), i.e., \(a= (u, v) \in {A}_{\text {bf}}\) implies \(u, v\in {V}_{\text {bf}}\). The depot arc is denoted by \(a_\text {d}= (u, v)\) with \(u\in {V}_{\text {bf}}\) and \(v\in {V}_{\text {ff}}\). The consumers are modeled with arcs \(a= (u, v)\) with \(u\in {V}_{\text {ff}}\) and \(v\in {V}_{\text {bf}}\). Finally, all pipes of the forward and the backward flow part are contained in the set of pipes \( {A}_{\text {p}}= {A}_{\text {ff}}\cup {A}_{\text {bf}}\).

In the next subsection we present the model for all components of the network, i.e., for pipes, consumers, and the depot.

2.1 Pipes

We now derive an approximation for the stationary water flow in cylindrical pipes. This derivation is based on the 1-dimensional compressible Euler equations [3, 13, 24]

Equation (1a) is the continuity equation and models mass balance, whereas the pressure gradient is described by the momentum equation (1b). Here and in what follows, \(\rho \) denotes the density of water, \(v\) its velocity, and \(p\) its pressure. In (1), the quantities are to be seen as functions in space (x) and time (t), i.e., for instance, \(p= p(x,t)\). The diameter of a pipe \(a\) is denoted by \(D_a\), \(\lambda _a\) is the pipe’s friction coefficient, and \(h_a'\) denotes the slope of the pipe. Finally, g is the gravitational acceleration.

The incompressibility of water is modeled as \( 0 = \rho _{a} \frac{\partial v_a}{\partial x}\), cf. [13], which implies

Moreover, the additional PDEs

model conservation of internal energy density \(e\) and entropy density \(s\), respectively; see [13]. The water’s temperature is denoted by \(T_a\). The parameters \(k_W\) and \(T_\text {W}\) are the heat transfer coefficient and the soil or pipe wall temperature.

Since we expect the change (in time) of the pressure energy and the term of energy and power loss due to dissipation work to be small, we neglect these terms. However, if these terms are taken into account, then it is possible to reformulate these equations in a port-Hamiltonian form (see, e.g., [13]), which is more appropriate for sector coupling [17]. Finally, we are interested in the stationary state of the network. This is modeled by setting all partial derivatives w.r.t. time to zero. Hence, the System (1)–(3) simplifies to the stationary, incompressible, and 1-dimensional Euler equations for hot water pipe flow, i.e.,

Since \(\rho _a> 0\) holds, Eq. (4a) implies that \(v_a(x) = v_a\) is constant for all pipes. Using this, (4b) implies that the density \(\rho _a(x) = \rho _a\) is constant as well. In addition, we set \(\rho _a= \rho \) for all arcs \(a\) of the network. With the mass flow

and constant velocities and densities we also have that \(q_a(x) = q_a\) is constant for all pipes. In (5), \(A_a\) denotes the cross-sectional area of pipe \(a\). By subsuming the discussed simplifications we get the system

In Eq. (6a), the pressure gradient term is the only term that depends on the spatial position x. Hence, we obtain the stationary momentum and energy equation

In the following, for our optimization framework, we do not consider the entropy equation, which can be solved in a post-processing step once the optimal pressure and internal energy values have been determined.

The system is closed by the state equations

in which we set

Equation (7b) is known to be a reasonable approximation for \(e_a\in [0.2,0.5]\) GJ m\(^{-3}\), \(T_a\in [323,403]\) K, and \(p_a\in [5,25]\) bar; see, e.g., [13].

2.1.1 Model catalog

For the adaptive optimization method developed in this work we employ a catalog of models. In this catalog, System (M1a) represents the highest or first modeling level, i.e., the most accurate one.

To derive the second modeling level, we neglect the (small) term \(\lambda _a/ (2 D_a) \rho v_a^2 |v_a|\) and get

By further assuming that the first term in (M2b) dominates the second one, we can neglect the term \(4 k_W/ D_a(T_a- T_\text {W})\) and simplify System (M2) to obtain the third level as

Considering model catalogs such as the one just developed is a standard procedure in order to cope with challenging optimization models; see, e.g., [6] in the context of gas networks. Under sufficient regularity assumptions that allow for Taylor expansions, a detailed perturbation analysis and the dropping of higher-order terms would lead to a similar catalog.

2.1.2 Exact solution of the energy equation

The Eqs. (M1b) and (M2b) can be solved analytically. This is done in the following lemma and will be used later to compute exact error measures in our adaptive algorithm.

Lemma 1

The differential equation (M1b), i.e.,

with initial condition

and state Eq. (7b) has the exact solution

with

if \(4\alpha \gamma -\beta ^2 < 0\) is satisfied.

Proof

We combine (M1b) and (7b) to obtain

After re-organizing and replacing \(e_a^*\) by its definition, the equation reads

We combine Eq. (9) with the definitions in (8) and get

Equation (10) is a special type of Riccati equation with constant coefficients; see, e.g., [22]. Because \(\alpha ,\beta ,\gamma \), and \(\zeta \) do not depend on x, they can be seen as constants when integrating over x. We re-organize and integrate both sides over x, yielding

Applying a variable change in the right-hand side of (11) leads to

We may rewrite

since \(4\alpha \gamma -\beta ^2 < 0\) holds by assumption. Therefore, we have

Going back to (12) we have (see also Section 8.1 in [4])

where we set

The internal energy equation thus reduces to

By re-substituting the definition of \(C_1\) we may write

We define \(C_3 \mathrel {{\mathop :}{=}}\exp \left( -C_2\sqrt{\beta ^2- 4 \alpha \gamma }\right) \). Then, (13) leads to

The constant \(C_3\) then absorbs the ± sign such that we can write

We compute \(C_2\) using the initial condition at \(x=0\) and obtain

Finally, we combine Eqs. (14) and (15), yielding

\(\square \)

Let us further note that the condition \(4 \alpha \gamma - \beta ^2 < 0\) of the last lemma is satisfied for usual pipe parameters.

Corollary 1

The differential equation (M2b), i.e.,

with initial condition

and state Eq. (7b) has the solution

with

if \(4\alpha \gamma -\beta ^2 < 0\) is satisfied.

The proof is analogous to the one of Lemma 1. Figure 1 shows the exact solution of (M1b) for a specific pipe.

The exact solutions derived in the last lemma and corollary could, in principle, be used as constraints in a nonlinear optimization model. However, the fractions, square roots, and exponential functions would lead to a very badly posed problem resulting in an extreme numerical challenge even for state-of-the-art solvers.

Solution of Eq. (M1b) for positive velocities and the parameters \(k_W= {0.5}{\text {W\,m}^{-2}} {\hbox {K}^{-1}}\), \(\lambda _a= 0.017\), \(D_a= {0.107}\,\,\hbox {m}\), \(L_a= {100 0}\,\hbox {m}\), and \(T_\text {W}= {278}\hbox {K}\). The units of the internal energy density \(e_a\) and the velocity \(v_a\) are \({\hbox {GJ\, m}^{-3}}\) and \({\hbox {m\,s}^{-1}}\), respectively

2.1.3 Discretization

In order to solve the optimization problem, we follow the first-discretize-then-optimize approach and introduce an equidistant discretization

of the spatial domain \([0, L_a]\) using the discretization points \( x_k \in \varGamma _a\) with \(0 = x_0< x_1< \cdots < x_{n_a} = L_a\) and step size \(\Delta x_a = L_a / n_a = x_{k+1} - x_k\) for \( k = 0,1, \dotsc , n_a - 1\). We use the implicit mid-point rule to discretize the separate levels of the catalog, i.e., Systems (M1a)–(M3), as well as the state Eq. (7b). Using the abbreviation \(e_a^k \mathrel {{\mathop :}{=}}e_a(x_k)\), we obtain the discretized system

for all \(k = 1, \dotsc , n_a\). Discretizing (M2a) analogously leads to

for all \(k = 1, \dotsc , n_a\). The discretized systems (D1) and (D2) are closed by the discretized version of the state Eq. (7b), i.e., by

for all \(k = 1, \dotsc , n_a\). For System (M3), we get

for all \(k = 1, \dotsc , n_a\). In our actual computations, we replace the \(n_a\) equations for \(e\) by the single constraint \(e_a(L_a) = e_a(0)\), since a two-point discretization is always exact for this model level.

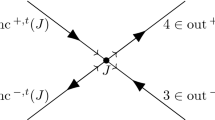

The model catalog (both for the original and the discretized version) is depicted in Fig. 2.

2.2 Nodes

In this section, we discuss the modeling of nodes in the district heating network. To this end, we mainly follow the modeling approach presented in [15]. We model mass conservation via

where \(\delta ^{\text {in}}(u)\) and \(\delta ^{\text {out}}(u)\) model the set of in- and outgoing arcs of node \(u\), respectively. We assume continuity of pressure at the nodes and obtain

where \(p_u\) is the pressure at node \(u\).

Finally, we have to model how the internal energy is mixed at the nodes of the network. To describe this, we use the perfect mixing model

with

for \(a\in \delta (u) = \delta ^{\text {in}}(u) \cup \delta ^{\text {out}}(u)\). Here and in what follows, we denote with \(e_{a:u}\) the internal energy in pipe a at its end node u. For more details and a derivation of this model we refer to [9, 13, 15, 29].

2.3 Depot and consumers

Following [15, 27], for the depot \(a_\text {d}= (u, v)\) we have the constraints

where \(p_\textrm{s}\) is the so-called stagnation pressure that is used to make the overall pressure profile in the network unique. Moreover, \(P_{\textrm{p}}\) is the power required for the pressure increase realized at the depot, \(P_{\textrm{w}}\) is the power obtained by waste incineration and \(P_{\textrm{g}}\) is the power obtained by burning natural gas. The latter two quantities are used in the depot to increase the internal energy density or, equivalently, the temperature of the water.

In order to model the consumers \(a= (u, v) \in {A}_{\text {c}}\), we use the constraints

The first constraint models how the required thermal energy \(P_a\) is obtained in dependence on the mass flow \(q_{a}\) at the consumer and the difference \(e_{a:v} - e_{a:u}\) of the internal energy density. The internal energy density at inflow conditions (\(e_{a:u}\)) needs to be larger than the given threshold \(e^{\text {ff}}_a\) and, at outflow conditions, it is fixed to the network-wide constant \(e^{\text {bf}}\). Finally, the fourth constraint states that the pressure cannot be increased at the household of a consumer.

2.4 Bounds, objective function, and model summary

To complete the model we need to incorporate some technical and physical bounds on the variables of the model and to define a proper objective function. First, we have bounds on the mass flow, i.e.,

on the nodal pressures,

and on the nodal water temperatures, i.e.,

Lastly, we incorporate bounds on power consumption, i.e.,

for given upper bounds \(P_{\textrm{p}}^+\), \(P_{\textrm{w}}^+\), and \(P_{\textrm{g}}^+\).

Our goal is to minimize the overall costs required to satisfy the heat demand of all the consumers. Thus, the objective function is given by

where \(C_{\text {p}},C_{\text {w}}\), and \(C_{\text {g}}\), respectively, correspond to the cost of pressure increase, waste incineration, and burning gas.

Taking this all together leads to the discretized and thus finite-dimensional optimization problem

This is a highly nonlinear and, depending on the chosen grids, large-scale optimization problem. Moreover, it only possesses very few degrees of freedom since almost all variables are determined by our physical modeling. Both aspects already make the problem very challenging to solve. In addition, however, the model also contains the complementarity constraints (20), which makes it an ODE-constrained mathematical program with complementarity constraints (MPCC). Solving it for real-world networks is very challenging, which is the motivation of the error measure-based adaptive algorithm that we develop in the two following sections.

3 Error measures

In this section, we introduce the error measures for the adaptive optimization algorithm that is presented in Sect. 4. Our approach is based on the work of [16] and adapted for the problem at hand. The algorithm developed here is designed to iteratively solve the nonlinear program (NLP) until its solution \( y\) is deemed to be feasible w.r.t. a prescribed tolerance. The algorithm iteratively switches the model level and the step sizes of the discretization grids for each pipe according to a switching strategy presented later on. Both the switching strategy and the feasibility check utilize the error measures in this section.

For the (NLP), four error sources can be identified: errors as introduced by the solver of the optimization problem, round-off errors, errors from switching between Systems (D1)–(D3), and errors due to selecting different step sizes of the discretization of the systems. In this work we will only consider the latter two error sources, which we refer to as model (level) errors and discretization (level) errors, respectively. For a discussion of the neglected solver and round-off errors we refer to Remark 1 below. By investigating the Systems (D1)–(D3) one finds that the only difference between them, and hence the resulting error source, is the energy equation and its discretization. This is why we base the definition of the error in each pipe a on its internal energy density \( e_a\).

In general, utilizing estimates of the error of a system allows for the assessment of the quality of their solution if an exact solution is not available. Hence, this section is used to introduce error measure estimates for the model and discretization error. However, since we have the analytic solution of the energy equations of Systems (M1a)–(M3) at hand, we can compute exact errors for the model and discretization error. Having the exact errors available allows us to compare them to the error estimates presented in this work and, hence, determine their quality.

This section is structured as follows. We start by providing the required notation in Sect. 3.1. Furthermore, the rules that are used to refine and coarsen the grids in the discretization of Systems (D1)–(D3) are introduced. In Sect. 3.2, we continue by deriving exact and estimated error measures. We then close this section by proving that the error measure estimates form upper bounds of the exact error measures in a first-order approximation.

Remark 1

Since we want to be able to employ different third-party optimization software packages in our adaptive error control we do not incorporate the errors introduced by the solvers for the optimization problem. However, if error estimates and error control for these errors are available then these can be incorporated as well. It has been observed in the application of adaptive methods for gas networks [16, 32] that round-off errors typically do not contribute much to the global error. For this reason, we also do not consider round-off errors in our adaptive procedure for district heating networks.

3.1 Notation

We start this section by introducing the required quantities and notation. In order to keep the notation lucid, we omit the usage of the subscript \( a\) as much as possible in this section. In particular, we drop the subscript \( a\) for the model level (\( \ell _a\rightarrow \ell \)), the grid size (\( \Delta x_a\rightarrow \Delta x\)), and for the set of gridpoints (\( \varGamma _a\rightarrow \varGamma \)), if not stated otherwise.

Let \( y\) denote the solution of the optimization problem (NLP). For all pipes \( a\in {A}_{\text {p}}\) it contains the approximate solution \( e_a^\ell (x_k; \Delta x) \) for model level \( \ell \) (of pipe model (D\(\ell \))) and step size \( \Delta x\) (of discretization grid \( \varGamma \)) at every grid point \( x_k \in \varGamma \), \( k = 1,\ldots , n \). In addition, for a given pipe \( a\) we denote the exact solution of model (M\(\ell \)), evaluated at \( x_k \in \varGamma \) as \( e_a^\ell (x_k) \). Furthermore, for the approximate and exact solutions we also utilize the notion of \( e_a^\ell (\varGamma ; \Delta x) \mathrel {{\mathop :}{=}}(e_a^\ell (x_1; \Delta x), \ldots , e_a^\ell (x_n; \Delta x))^\top \) and \( e_a^\ell (\varGamma ) \mathrel {{\mathop :}{=}}(e_a^\ell (x_1), \ldots , e_a^\ell (x_n))^\top \), respectively.

We continue by defining the grid refinement and coarsening rules. For a given pipe \( a\), consider a sequence of grids \( \{ \varGamma _i \} \), \( i = 0,1,2,\ldots \), with \( \varGamma _i \mathrel {{\mathop :}{=}}\{x_{k_i}\}_{k_i={0}}^{n_i} \) and \( \Delta x_{i} = x_{k_{i+1}} - x_{k_i} \) for \( k_i = {0},\ldots , n_i \). Moreover, we refer to \( \varGamma _0 \) as the reference or evaluation grid. It is defined by a given number of grid points \( n_0 \) and the corresponding step size \( \Delta x_{0} \mathrel {{\mathop :}{=}}L_a/(n_0 - 1) \). Given an arbitrary grid \( \varGamma _i \), \( i = 0,1,2, \ldots \), we perform a grid refinement step by halving its step size \( \Delta x_i \) to get \( \Delta x_{i+1} = \Delta x_i/2 \) of the refined grid \( \varGamma _{i+1} \). Conversely, we perform a grid coarsening step by doubling \( \Delta x_{i} \) of grid \( \varGamma _{i} \) to obtain the coarsened grid \( \varGamma _{i-1} \) with step size \( \Delta x_{i-1} = 2 \Delta x_{i} \). Performing grid refinement and coarsening this way ensures that for every \( i = 1, 2, \ldots \) it holds that \( \varGamma _0 \subset \varGamma _i \). Therefore, providing a fixed number of grid points \( n_0 \) enables us to use the reference grid \( \varGamma _0 \) as a common evaluation grid for every refinement and coarsening step.

3.2 Derivation of error measures

Since we want to be able to compare the errors of a solution we measure the model and discretization errors by applying the maximum norm to them, which yields the associated model and discretization error measures, respectively.

In the following, we introduce two error measures: exact error measures and error measure estimates. To this end, we consider a single pipe \( a \in {A}_{\text {p}}\). We start by defining the total exact error measure as

where we compare the approximate solution of Model (D\(\ell \)) with grid size \( \Delta x_i \) to the exact solution of Model (M1a). Note that \(e_a^\ell (\varGamma _0; \Delta x_i)\) is part of the considered solution \(y\) and that the exact error measure \(e_a^1(\varGamma _0)\) can be, e.g., computed by using the exact formulas given in Sect. 2.1.2. Second, we introduce the exact model error measure via

where we compare the solutions of models (M\(\ell \)) and (M1a). Next, we define the exact discretization error measure as

for which we compare the solution of Model (D\(\ell \)) with grid size \( \Delta x_i \) to the exact solution of Model (M\(\ell \)). We continue by introducing error measure estimates. The (total) error measure estimate is defined as the sum of a model error measure estimate and a discretization error measure estimate. That is,

with the model error measure estimate

and the discretization error measure estimate

The model error measure estimate compares two solutions with the same discretization scheme but different pipe models (D1) and (D\(\ell \)). On the other hand, the discretization error measure estimate compares two solutions of the same model but with different discretization schemes as given by the step sizes \( \Delta x_i \) and \( \Delta x_{i-1} \).

By considering the definitions (28)–(33) one finds the relation

for \( \Delta x_{i} \rightarrow 0 \).

In the following, we show that the relation (34) holds for \( \Delta x_{i} \rightarrow 0 \). In particular, we need to show that \( \nu ^{\text {d}}_a(y) \mathrel {{\dot{\le }}}\eta ^{\text {d}}_a(y) \) and \( \nu ^{\text {m}}_a(y) \mathrel {{\dot{\le }}}\eta ^{\text {m}}_a(y) \) hold, where the relation \( f_1(x) \mathrel {{\dot{\le }}}f_2(x) \) states that a function \( f_2 \) is a first-order upper bound of the function \( f_1 \) if and only if \( f_1(x) \le f_2(x) + \phi (x) \) for \( x \rightarrow 0 \) and any function \( \phi \in o(\Vert f_2\Vert _{\infty })\). The use of first-order error measure bounds that are obtained by omitting higher-order terms is standard practice in adaptive refinement methods; see, e.g., [34]. In many instances one can also obtain exact upper bounds [14], but these are typically far too pessimistic to be of practical use.

We first proceed by showing that \( \nu ^{\text {d}}_a(y) \mathrel {{\dot{\le }}}\eta ^{\text {d}}_a(y) \) holds for \( \Delta x_{i} \rightarrow 0 \). Since we utilize the implicit mid-point rule to get Systems (D1)–(D3) and the fact that its discretization error measure is of convergence order 2 (see, e.g., [21]) we can write that

where we use \( \Delta x_{i-1} = 2 \Delta x_i \). Here, the function \( c^\ell (x) \), that arises from the Taylor series expansion of the local discretization error measure, is independent of \( \Delta x_i \); see, e.g., [31]. Computing the difference between (35) and (36) yields

and, thus,

By replacing \(c^\ell (x_k) \Delta x_i^2\) in (35) with the result of (38), applying the \( \infty \)-norm over \(\varGamma _0\) on both sides, and using the triangle inequality, we find

Since \(\eta ^{\text {d}}_a(y) \in {\mathcal {O}}(\Delta x_i^2)\) holds as shown in (37), we get that \(\nu ^{\text {d}}_a(y) \mathrel {{\dot{\le }}}\eta ^{\text {d}}_a(y)\) holds for \( \Delta x_{i} \rightarrow 0\).

Finally, we show that \( \nu ^{\text {m}}_a(y) \mathrel {{\dot{\le }}}\eta ^{\text {m}}_a(y) \). The ideas are rather similar. By applying the \(\infty \)-norm over \(\varGamma _0\) and the triangle inequality to the difference between (35) with (M1a) and current model level \(\ell \in \{(M1),(M2),(M3)\}\) we get

Since \(\eta ^{\text {m}}_a(y) \in {\mathcal {O}}(1)\), we get that \(\nu ^{\text {m}}_a(y) \mathrel {{\dot{\le }}}\eta ^{\text {m}}_a(y)\) holds for \( \Delta x_{i} \rightarrow 0\).

Remark 3

(Computing error measure estimates) Observing the definitions of the error measure estimates (31)–(33) yields that not only the energy \( e_a^\ell (\varGamma _0; \Delta x_i)\), as a part of the solution \(y\), is required to compute the estimates but also the values \( e_a^1(\varGamma _0; \Delta x_i) \) and \( e_a^\ell (\varGamma _0; \Delta x_{i-1}) \), which are not given in terms of the solution \( y\). One could compute these values by recomputing the (NLP) with appropriately modified pipe levels and step sizes. However, this is computationally very costly. An alternative approach is to explicitly solve the modified (w.r.t. appropriately modified model levels and step sizes) energy equations of the Systems (D1)–(D3) by means of implicit numerical integration. Fortunately, this is not required in this work since the energy equations of the Systems (D1)–(D3) together with Eq. (16) allow for solving them algebraically for the energies \( e_a^k \), \( k = 0, 1, \ldots , n \) in linear time.

In the following section we present the algorithm that adaptively switches the previously introduced models and their discretizations by means of a switching strategy.

4 Adaptive algorithm

In this section, we present and analyze the adaptive optimization algorithm. This algorithm is based on the work in [16] and adapted for the district heating network problem studied in this paper. The algorithm iteratively solves the (NLP) while adaptively switching the pipe model levels and discretization step sizes to achieve a locally optimal solution that is feasible w.r.t. to some prescribed tolerance. The adaptive switching is implemented via marking and switching strategies, which are based on the error measures presented in the previous section.

Given an a-priori error measure tolerance \(\varepsilon > 0 \), our method aims at computing a finite sequence of solutions of the nonlinear problem (NLP) in order to achieve a solution \(y\) with an estimated average error measure less or equal to \(\varepsilon \). This motivates the following definition.

Definition 1

Let \(\varepsilon > 0 \) be a given tolerance. The solution \(y\) of the (NLP) is called \(\varepsilon \)-feasible if

where \({{\bar{\eta }}} (y) \) is called the total average error measure estimate.

The remainder of this section is structured as follows. We first provide the switching and marking strategies used by our algorithm in Sect. 4.1. Then, we present the adaptive algorithm and prove its convergence in Sect. 4.2.

4.1 Switching and marking strategies

In a nutshell, the overall algorithm follows the standard principles of adaptive refinement methods: a problem is solved, an error measure is computed, elements (here pipes) are marked to be refined, the refinement is carried out, and the new problem is solved; see, e.g., [18, 34]. In this section, we describe both the rules that are used to carry out the refinements and the strategies that are used to mark the pipes to be refined.

We now define switching strategies to compute new pipe levels \(\ell _a^\text {new}\) and new step sizes \( \Delta x_a^\text {new}\). Let \(\varepsilon > 0\) be a tolerance and \( \tau \ge 1 \) be a tuning parameter. First, we introduce the model level switching rules. Consider the pipe sets

and

The set \({A}_{\text {p}}^{>\varepsilon }\) (\({A}_{\text {p}}^{<\tau \varepsilon }\)) contains all the pipes for which the new model level \( \ell _a^\text {new}\) decreases (increases) the model error measure estimate compared to the current model level \( \ell _a\) w.r.t. the error measure tolerance \( \varepsilon \). In order to switch-up the model level (\( \ell _a^\text {new}< \ell _a\)), we apply the rule

Similarly, for down-switching of the model level (\( \ell _a^\text {new}> \ell _a\)), we apply the rule

with \(\ell _{\text {max}} = 3\) in our setting. According to the rules defined in Sect. 3.1, we apply the following grid refinement and coarsening rule:

Based on the switching strategies defined in (39)–(43) we can now present our marking strategies that decide for which pipes we switch up or down the model level and for which pipes we refine or coarsen the step size. Let the sets \( {\mathcal {R}}, {\mathcal {U}}\subseteq {A}_{\text {p}}\) represent all pipes marked for grid refinement and model level up-switching, respectively. Furthermore, let the sets \( {\mathcal {C}}, {\mathcal {D}}\subseteq {A}_{\text {p}}\) represent all pipes marked for grid coarsening and model level down-switching, respectively. To avoid unnecessary switching we use threshold parameters \(\varTheta _{\mathcal {R}}\), \(\varTheta _{\mathcal {U}}\), \(\varTheta _{\mathcal {C}}\), \(\varTheta _{\mathcal {D}}\in (0,1)\). We determine \( {\mathcal {R}}\) and \( {\mathcal {U}}\) by finding the minimum subset of pipes \( a\in {A}_{\text {p}}\) such that

and

are satisfied, where in (45), the rule in (41) is applied. Similarly, in order to determine \( {\mathcal {C}}\) and \( {\mathcal {D}}\), we have to find the maximum subset of all pipes \( a\in {A}_{\text {p}}\) such that

and

hold, where in (47), the rule in (42) is applied.

Remark 3 Note that Definition 1 is based on the total error measure estimate as introduced in the previous section. Since the total exact error measure is upper bounded by the total error measure estimate via (34) one also has that the solution \( y\) of the (NLP) is \( \varepsilon \)-feasible w.r.t. the total average exact error measure \( \bar{\nu }(y) \), i.e., \( {{\bar{\nu }}}(y) \le \varepsilon \) holds with

Thus, whenever error measure estimates are used for the switching and marking strategies, the exact error measures can be used as well.

As used before in Sect. 3.2, the first-order approximation of the discretization error measure estimator in \(x \in [0,L_a]\) of a discretization scheme of order \(\beta \) reads \(\eta ^{\text {d}}_a(x) \doteq c(x) \Delta x_a^\beta \), where c(x) is independent of \(\Delta x_a\). This allows us to write

for the new discretization error measure estimator after a grid refinement or coarsening. Since the implicit mid-point rule is used in our case, \(\beta = 2\) holds, leading to

This also naturally holds for the exact discretization error measure estimator \(\nu ^{\text {d}}_a(x)\).

Alternative to the error measure estimates that we present, one could use Richardson extrapolation based on errors on different grids to generate error measure estimates. Since our estimates are straightforward, we have not proceeded in this way.

4.2 Adaptive algorithm

In this section we present the adaptive optimization algorithm. The algorithm is formally given in Algorithm 4.2 and described in the following.

The input of the algorithm comprise of a complete description of the network, including initial and boundary conditions, the error tolerance \( \varepsilon > 0 \) as well as initial values for the parameters \( \varTheta _{\mathcal {R}}^0, \varTheta _{\mathcal {U}}^0, \varTheta _{\mathcal {C}}^0, \varTheta _{\mathcal {D}}^0 \in (0,1) \), \( \tau ^0 \le 1 \), \( \mu ^0 \in {\mathbb {N}}_+ \). The output of the algorithm is an \( \varepsilon \)-feasible solution \( y\) of the nonlinear problem (NLP) according to Definition 1.

The algorithm starts by initializing model levels and grid sizes for each pipe. It then solves the (NLP) for the first time and checks for \(\varepsilon \)-feasibility. Since it is likely that after the first iteration the feasibility check fails, the algorithm enters two nested loops: the outer loop for down-switching and coarsening and the inner loop for up-switching and refinement. In this description we will also refer to the outer loop as the k-loop and to the inner loop as the j-loop.

Next, the inner loop is entered and the up-switching and refinement sets \({\mathcal {U}}\) and \({\mathcal {R}}\) are determined. This step is followed by up-switching and refining of each pipe accordingly. Each j-loop finishes by re-solving the (NLP) with the new configuration w.r.t. pipe model levels and grid sizes and it checks for feasibility. The inner loop continues until either a feasible solution \( y\) is found or a maximum number of inner loop iterations \( \mu ^k \) is reached.

What follows in the outer loop is the computation of the coarsening and down-switching sets \( {\mathcal {C}}\) and \( {\mathcal {D}}\), respectively. This step is succeeded by updating the pipe model levels and step sizes. Similar to the inner loop, the outer loop finishes by re-solving the (NLP) and checking for feasibility.

We first show that the algorithm is finite if we only apply changes to the discretization step sizes while fixing the model levels for all pipes.

Lemma 2

Suppose that the model level \(\ell _a\in \{1, 2, 3\}\) is fixed for every pipe \(a\in {A}_{\text {p}}\). Let the resulting set of model levels be denoted by \({\mathcal {M}}\). Suppose further that \(\eta _a(y) = \eta ^d_a(y) \) holds in (31) and that every (NLP) is solved to local optimality. Consider Algorithm 4.2 without applying the model switching steps in Lines 13 and 25. Then, the algorithm terminates after a finite number of refinements in Line 16 and coarsenings in Line 28 with an \(\varepsilon \)-feasible solution w.r.t. model level set \({\mathcal {M}}\) if there exists a constant C > 0 such that

holds and if the step sizes of the initial discretizations are chosen sufficiently small.

Proof

We focus on the total discretization error measure defined as

and show that this quantity is positively bounded away from zero for one outer-loop iteration k containing \(\mu \) inner refinement steps and one coarsening step. For the sake of simplicity we drop the k index.

Hence, we first look at the influence of one inner refinement for-loop iteration \(j \in \{1,\dots ,\mu \}\) on \(\eta ^{\text {d}}(y^j)\). Thus,

where we use that \(\eta ^{\text {d}}_a(y^{j})\) equals 1/4 of \(\eta ^{\text {d}}_a(y^{j-1})\) if \(\Delta x_a\) is chosen small enough.

Summing up Eq. (50) over all \(j \in \{1,\dots ,\mu \} \) gives the total error measure decrease in the inner for-loop:

We now focus on the final coarsening step of the outer for-loop. For the sake of simplicity we say that \(y^{\mu +1}\) corresponds to the solution of the (NLP) after the coarsening step. Thus,

holds, where we again use that \(\eta ^{\text {d}}_a( y^{\mu +1})\) equals \(4 \eta ^{\text {d}}_a(y^{\mu })\) if \(\Delta x_a\) is chosen small enough.

We now prove that the total error measure decrease in each iteration of the outer for loop of Algorithm 4.2 is positive and uniformly bounded away from zero. Hence, we consider

Then, using

(44), (49), and (46), we obtain

which completes the proof. \(\square \)

Next, we show that the algorithm is finite if we only apply model level changes while the discretization step sizes are kept fixed.

Lemma 3

Suppose that the discretization stepsize \(\Delta x_a\) is fixed for every pipe \(a\in {A}_{\text {p}}\). Suppose further that \(\eta _a(y) = \eta ^{\text {m}}_a(y) \) holds in (31) and that every (NLP) is solved to local optimality. Consider Algorithm 4.2 without applying the discretization refinements in Line 16 and the coarsening step in Line 28. Then, the algorithm terminates after a finite number of model switches in Lines 13 and 25 with an \(\varepsilon \)-feasible solution with respect to the step sizes \(\Delta x_a\), \(a\in {A}_{\text {p}}\), if there exists a constant C > 0 such that

The proof of this lemma is the same as in [16], which is why we omit it here.

Lemma 4

Let \(y^\mu \) and \(y^{\mu +1}\) be the solution of the optimization problem before and after a refinement or coarsening step, respectively. Let \(\eta ^{\text {d}}_a(y)\) and \(\eta ^{\text {m}}_a(y)\) be the discretization and model error measure estimator for a given solution y of (NLP) as defined in (33) and (32). Then, if

is satisfied, it holds that

Proof

For \(x \in \Gamma _{0}\) we introduce \(\eta ^{\text {d}}_a(x;\ell _a,\Delta x_{i})\) and \(\eta ^{\text {m}}_a(x;\ell _a,\Delta x_{i})\) as the local discretization error measure estimator and the local model error measure estimator evaluated at x using the model level \(\ell _a\) and the step size \(\Delta x_{i}\) such that

holds. Since \(\eta ^{\text {d}}_a(x;\ell _a,\Delta x_{i})\) uses the same step sizes \(\Delta x_i\) and \(\Delta x_{i-1}\) for all \(\ell _a\), we have

We now focus on the coarsening step and prove Eq. (52). The proof for the refinement step is analogous to the coarsening step and is therefore not presented. By definition and due to the coarsening step, we have

Using (53), we finally obtain

\(\square \)

We also have a corresponding result for the estimators of the discretization error measure. For this result, we make the following assumption.

Assumption 1

Let \(y^\mu \) and \(y^{\mu +1}\) be the solution of the optimization problem before and after a model up- or down-switching step, respectively. Moreover, let us denote with \(\lambda ^\mu \) and \(\lambda ^{\mu +1}\) the corresponding sensitivities. Then, there exists a constant \(C > 0\) with \(\Vert \lambda ^\mu - \lambda ^{\mu +1} \Vert \le C\).

Before we now state the next lemma, we briefly discuss this assumption. Informally speaking, it states that the difference of the sensitivities (i.e., of the dual variables) of the optimization problems before and after a model up- or down-switching step is bounded by a constant. We are convinced that this assumption holds for the different models in our catalog.

Lemma 5

Let \(y^\mu \) and \(y^{\mu +1}\) respectively be the solution of the optimization problem before and after a model up or down switching step. Let \(\eta ^{\text {d}}_a(y)\) and \(\eta ^{\text {m}}_a(y)\) be the discretization and model error measure estimator for a given solution y of (NLP) as defined in (33) and (32). Finally, suppose that Assumption 1 holds. Then,

holds.

Proof

As long as Assumption 1 holds, the error measure estimate for the discretization error measure is independent of the used model and we immediately get the desired result. \(\square \)

We are now ready to prove our main theorem on the finiteness of the proposed algorithm.

Theorem 1

(Finite termination) Suppose that \( \eta ^{\text {d}}_a\ll \eta ^{\text {m}}_a\) for every \(a\in {A}_{\text {p}}\) and that every (NLP) is solved to local optimality. Moreover, suppose that Assumption 1 holds. Then, Algorithm 4.2 terminates after a finite number of refinements, coarsenings, and model switches in Lines 13, 16, 25, and 28 with an \(\varepsilon \)-feasible solution w.r.t. the reference problem if there exist constants \(C_1, C_2 > 0\) such that

hold for all k.

Proof

We first focus on the average total error measure estimator decrease between two subsequent inner loop iterations of Algorithm 4.2. Hence,

holds, where we use Lemma 4, Lemma 5, and Eq. (48). Taking the sum over all \(j = 1, \dots , \mu \) inner loop iterations gives

Next, we focus on the outer loop iterations of Algorithm 4.2. We evaluate the average total error measure increase due to the coarsening and down-switching. Hence,

where we use Lemma 4, Lemma 5, and Eq. (48).

It suffices to prove that the inner loop average total error measure decrease is always greater than the outer loop average total error measure increase, i.e.,

Using the proofs of Lemmas 2 and 3, we obtain

This concludes the proof. \(\square \)

5 Numerical results

In this section we present numerical results and for this we first discuss the software and hardware setup. Then, the considered instances are presented and, afterward, the parameterization of the adaptive algorithm is explained.

5.1 Software and hardware setup

We implemented the models in Python 3.7.4 using the Pyomo 6.2 package [10, 11] and solve the resulting NLPs using the NLP solver CONOPT4 4.24 [8], which is interfaced via the Pyomo-GAMS interface. We also tested other solvers and concluded that CONOPT4 is the most reliable solver that performs best for our application. We used the default GAMS settings. The computations were executed on a computer with an Intel(R) Core(TM) i7-8550U processor with eight threads at 1.90GHz and 16GB RAM.

5.2 Test instances

The two networks considered in this section are the so-called AROMA and STREET networks; see also [15] where they have been used as well. AROMA is an academic test network, whereas STREET is a part of an existing real-world district heating network. Both networks contain cycles but the much larger STREET network only contains a single cycle so that the overall network is almost tree-shaped. Table 1 shows the main characteristics of these networks.

The cost of waste incineration, of natural gas, and of increasing the pressure of the water in the depot are taken from [19] and are set to  ,

,  , and

, and  . Additionally, the gas and pressure power variables \(P_{\textrm{g}}\) and \(P_{\textrm{p}}\) are left unbounded above, whereas the waste power variable \(P_{\textrm{w}}\) is bounded above by 10 kW. Scarce waste incineration power \(P_{\textrm{w}}\) implies an increased consumption of costly power (\(P_{\textrm{p}}\) and \(P_{\textrm{g}}\)) to satisfy the total customer demand and thus yields a non-trivial optimization problem.

. Additionally, the gas and pressure power variables \(P_{\textrm{g}}\) and \(P_{\textrm{p}}\) are left unbounded above, whereas the waste power variable \(P_{\textrm{w}}\) is bounded above by 10 kW. Scarce waste incineration power \(P_{\textrm{w}}\) implies an increased consumption of costly power (\(P_{\textrm{p}}\) and \(P_{\textrm{g}}\)) to satisfy the total customer demand and thus yields a non-trivial optimization problem.

5.3 Parameterization of the algorithm

Table 2 shows the parameters used for obtaining the numerical results. These parameters are kept constant over the course of the iterations of the algorithm to simplify the interpretation of the results. It should be noted that the parameters do not satisfy the second inequality in Theorem 1. We choose this parameterization despite this fact because the algorithm still converges using these settings and allows for switching down the model level of more pipes and, hence, keeps the optimization model more tractable over the course of the iterations. One could, e.g., by increasing \(\mu \), easily satisfy both inequalities of Theorem 1. For the first iteration of the adaptive algorithm we use \(\Delta x_a= L_a/2\) and \(\ell _a= 3\) for all \(a\in {A}_{\text {p}}\). This forces us to take the reference grid \(\varGamma _0 = \{0,L_a\}\) for all \(a\in {A}_{\text {p}}\). The assumption that the initial granularity of the discretization is sufficiently fine is not satisfied here but does (in practice) not harm the overall convergence of the algorithm and is therefore kept large.

5.4 Discussion of the results obtained by using error measure estimators

Let us first note that none of the tested optimization solvers converges to a feasible point for both the AROMA and the STREET network when using (M1a) and \(\Delta x_a= L_a/ 10\) for all \(a\in {A}_{\text {p}}\), since this spatial discretization already leads to a highly nonlinear problem of a size that is very hard to be tackled by state-of-the-art NLP solvers.

The two upper plots in Fig. 3 show a steady decrease of the values of the error measure estimators over the course of the iterations of the adaptive algorithm. Small increases in the error measure can be observed every five iterations of the algorithm. These arise from the increase of the model level and the coarsening of the discretizations (outer loop) that is carried out after four refinement steps in which we increase the model’s accuracy (inner loop). The error measure plots thus confirm that the algorithm steadily decreases the total error measure over the course of one outer loop iteration.

The results show that the algorithm works as expected and that it terminates after a finite number of iterations with a locally optimal solution of a model that has a physical accuracy for which state-of-the-art solvers are not able to compute a solution from scratch. This is one of the most important contributions of this paper: We can solve realistic instances that have not been solvable before. Additionally, the two lower plots in Fig. 3 show the computation times for the separate models of Type (NLP) that we solve in every iteration. Although we warmstart every new problem with the solution of the previous one, we observe an increase of solution times due to the higher complexity of the successive models that we solve.

Next, Fig. 4 shows the proportion of pipes inside the sets \({\mathcal {U}}\), \({\mathcal {R}}\), \({\mathcal {D}}\), and \({\mathcal {C}}\) before solving (NLP) for every iteration of the algorithm. The discretization sets represent a larger proportion of pipes when compared to the sets for switching between the model levels. This originates from the parameter selection that favors changes of the discretization and is explained by the fact that the model level of a specific pipe can only be increased twice—unlike the discretization step size that may need to be halved more often. The right plot of Fig. 5 shows violin plots for the amount of grid points in the pipes over the iterations of the algorithm applied to the STREET network. The plot confirms the idea behind the parameter selection. Besides this, Fig. 4 illustrates that the down-switching set \({\mathcal {D}}\) stays empty until the last outer loop iteration for both networks. This is a result of the set \({A}_{\text {p}}^{<\tau \varepsilon }\) being empty for the first outer-loop iterations of the algorithm, which forces \({\mathcal {D}}\) to be empty. The amount of pipes in each model level is shown in the left plot of Fig. 5. Roughly 90% of all pipes end up in the most accurate model level whereas the remaining stay in the intermediate level.

Overall, we see that the behavior of the algorithm is comparable when applied to the two different networks, which indicates that the algorithm is robust.

5.5 Discussion of the results obtained by using exact error measures

We now compare the impact of using the error measure estimators defined in (31)–(33) when employing the exact error measures as defined in (28)–(30). To this end, we only consider the larger STREET network. Figure 6 shows the previously discussed plots using exact error measures. Both approaches need 19 iterations to reach the desired tolerance. However, when looking at the distribution of model levels, we see that in the case of using error measure estimators, a much higher proportion of pipes are modeled using the most accurate model (M1a), which is not the case for any pipe in the exact error measure case; see the bottom-left plot in Fig. 6. Thus, it seems that the error measure estimators overestimate the importance of switching to the most accurate model level. Consequently, using the error measure estimators instead of the exact error measures introduces a larger amount of nonlinearities to the models that are solved in each iteration. This is an interesting aspect and shows that it might be beneficial to use exact error measures if they are available like for the ODEs that we consider in this paper. Nevertheless, the computation times show very similar behavior for both approaches, which makes clear that using error measure estimators (especially in cases in which exact error measure formulas are not available) also leads to an effective method.

Exact error measure values (top-left), computation time (top-right), proportion of pipes inside each model level set (bottom-left), and violin plots of the quantity of grid points (bottom-right) over the course of the iterations of the adaptive algorithm using the exact error measures applied on the STREET network

5.6 Is physical accuracy worth the effort?

Let us close this section with a brief analysis of whether the physical accuracy guaranteed by our adaptive method is worth the computational effort. The answer is a clear “Yes”. To illustrate this, Fig. 7 shows the values of some forward flow variables (pressures, temperatures, and mass flows) that are part of the (NLP) of the AROMA network solved in the first iteration as well as in the last iteration of the adaptive algorithm. The parameter setup used in this test case is the same as presented in Sect. 5.2. Note that the solution of the first iteration (top figure) corresponds to a rather coarse physical modeling whereas the solution of the last iteration (bottom figure) satisfies the prescribed tolerance and is very accurate.

The difference of the solution values are obvious. The first solution has no temperature losses at all (see (M3)) and all temperature values are at the upper bound. Moreover, the mass flow values are comparably small. This changes completely in the final solution. The temperatures have decreased around 50K and mass flows have increased by up to a factor of 3. The pressures have also changed by around 10%. It is clearly visible that the physical solution and the control of the network changes significantly if the physical accuracy is increased. Thus, there is a strong need for computing highly accurate solutions if the resulting controls shall be practically useful.

6 Conclusion

In this paper, we set up a catalog of models for the hot water flow in pipes of district heating networks. For all entries of this catalog, we also derived suitable discretizations to obtain finite-dimensional optimization problems for the energy-efficient control of these networks that still ensures that the demand of all customers are satisfied. Based on these different models, we designed an iterative and adaptive optimization method that automatically adapts the model level in the catalog as well as the granularity of the discretization to finally obtain a local optimal control that is feasible w.r.t. a user-specified tolerance. We show finite termination of this algorithm and present very convincing numerical results that particularly show that we can now solve realistic instances that are not solvable with state-of-the-art commercial NLP solvers.

For our future work, we plan to extend our modeling and solution approach to the case of instationary hot water flow modeling. While we are confident that the overall ideas can be carried over to this PDE-setting, this will most likely require some more technical derivations compared to the ODE-case considered in this paper.

Data availability

Not applicable.

References

Benonysson, A., Bøhm, B., Ravn, H.F.: Operational optimization in a district heating system. Energy Convers. Manag. 36(5), 297–314 (1995). https://doi.org/10.1016/0196-8904(95)98895-T

Bordin, C., Gordini, A., Vigo, D.: An optimization approach for district heating strategic network design. Eur. J. Oper. Res. 252(1), 296–307 (2016). https://doi.org/10.1016/j.ejor.2015.12.049

Borsche, R., Eimer, M., Siedow, N.: A local time stepping method for district heating networks. Technical report (2019). https://kluedo.ub.uni-kl.de/frontdoor/deliver/index/docId/5140/file/district_heating.pdf

Bronshtein, I.N., Semendyayev, K.A., Musiol, G., Mühlig, H.: Handbook of Mathematics, 6th edn. Springer, New York (2015). https://doi.org/10.1007/978-3-540-72122-2

Colella, F., Sciacovelli, A., Verda, V.: Numerical analysis of a medium scale latent energy storage unit for district heating systems. Energy 45(1), 397–406. https://doi.org/10.1016/j.energy.2012.03.043. The 24th International Conference on Efficiency, Cost, Optimization, Simulation and Environmental Impact of Energy, ECOS (2011)

Domschke, P., Hiller, B., Lang, J., Mehrmann, V., Morandin, R., Tischendorf, C.: Gas network modeling: an overview. Technical report (2021). TRR 154 Preprint. https://opus4.kobv.de/opus4-trr154

Dorfner, J., Hamacher, T.: Large-scale district heating network optimization. IEEE Trans. Smart Grid 5(4), 1884–1891 (2014). https://doi.org/10.1109/TSG.2013.2295856

Drud, A.S.: Conopt-a large-scale grg code. ORSA J. Comput. 6(2), 207–216 (1994)

Hante, F.M., Schmidt, M.: Complementarity-based nonlinear programming techniques for optimal mixing in gas networks. Eur. J. Comput. Optim. 7(3), 299–323 (2019). https://doi.org/10.1007/s13675-019-00112-w

Hart, W.E., Watson, J.-P., Woodruff, D.L.: Pyomo: modeling and solving mathematical programs in Python. Math. Program. Comput. 3(3), 219–260 (2011). https://doi.org/10.1007/s12532-011-0026-8

Hart, W.E., Laird, C.D., Watson, J.-P., Woodruff, D.L., Hackebeil, G.A., Nicholson, B.L., Siirola, J.D.: Pyomo-optimization Modeling in Python. Springer, New York (2017). https://doi.org/10.1007/978-1-4614-3226-5

Hassine, I.B., Eicker, U.: Impact of load structure variation and solar thermal energy integration on an existing district heating network. Appl. Therm. Eng. 50(2), 1437–1446. https://doi.org/10.1016/j.applthermaleng.2011.12.037 (2013). Combined Special Issues: ECP 2011 and IMPRES 2010

Hauschild, S.-A., Marheineke, N., Mehrmann, V., Mohring, J., Badlyan, A.M., Rein, M., Schmidt, M.: Port-Hamiltonian modeling of district heating networks. In: Reis, T., Grundel, S., Schöps, S. (eds.) Progress in Differential Algebraic Equations II. Differential-Algebraic Equations Forum. Springer, New York (2020). https://doi.org/10.1007/978-3-030-53905-4_11

Konstantinov, M.M., Gu, D.W., Mehrmann, V., Petkov, P.H.: Perturbation Theory for Matrix Equations, p. 429. North Holland, Amsterdam (2003)

Krug, R., Mehrmann, V., Schmidt, M.: Nonlinear optimization of district heating networks. Optim. Eng. 22(2), 783–819 (2021). https://doi.org/10.1007/s11081-020-09549-0

Mehrmann, V., Schmidt, M., Stolwijk, J.J.: Model and discretization error adaptivity within stationary gas transport optimization. Vietnam J. Math. 46(4), 779–801 (2018). https://doi.org/10.1007/s10013-018-0303-1

Mehrmann, V., Morandin, R.: Structure-preserving discretization for port-hamiltonian descriptor systems. In: 58th IEEE Conference on Decision and Control (CDC), 9.-12.12.19, Nice, pp. 6863–6868. IEEE (2019). https://doi.org/10.1109/CDC40024.2019.9030180

Nochetto, R.H., Siebert, K.G., Veeser, A.: Theory of adaptive finite element methods: an introduction. In: Multiscale, Nonlinear and Adaptive Approximation, pp. 409–542. Springer, New York (2009). https://doi.org/10.1007/978-3-642-03413-8_12

Nussbaumer, T., Thalmann, S.: Influence of system design on heat distribution costs in district heating. Energy 101, 496–505 (2016). https://doi.org/10.1016/j.energy.2016.02.062

Pirouti, M., Bagdanavicius, A., Ekanayake, J., Wu, J., Jenkins, N.: Energy consumption and economic analyses of a district heating network. Energy 57, 149–159 (2013). https://doi.org/10.1016/j.energy.2013.01.065

Quarteroni, A., Sacco, R., Saleri, F.: Numerical Mathematics, vol. 37. Springer, New York (2010). https://doi.org/10.1007/b98885

Reid, W.T.: Riccati Differential Equations. Mathematics in Science and Engineering, vol. 86. Elsevier, Amsterdam (1972). https://doi.org/10.1016/S0076-5392(08)61166-2

Rein, M., Mohring, J., Damm, T., Klar, A.: Optimal control of district heating networks using a reduced order model. Technical report (2020). http://publica.fraunhofer.de/documents/N-596673.html

Rein, M., Mohring, J., Damm, T., Klar, A.: Parametric model order reduction for district heating networks. Proc. Appl. Math. Mech. 18(1), e201800192 (2018). https://doi.org/10.1002/pamm.201800192

Rein, M., Mohring, J., Damm, T., Klar, A.: Model order reduction of hyperbolic systems at the example of district heating networks. Technical report (2019). arXiv:1903.03342

Rezaie, B., Rosen, M.A.: District heating and cooling: review of technology and potential enhancements. Appl. Energy 93, 2–10 (2012). https://doi.org/10.1016/j.apenergy.2011.04.020

Roland, M., Schmidt, M.: Mixed-integer nonlinear optimization for district heating network expansion. Automatisierungstechnik (2020). https://doi.org/10.1515/auto-2020-0063. Special Issue “Mathematical Innovations fostering the Energy Transition—Control, Optimization and Uncertainty Quantification”

Sandou, G., Font, S., Tebbani, S., Hiret, A., Mondon, C., Tebbani, S., Hiret, A., Mondon, C.: Predictive control of a complex district heating network. In: Proceedings of the 44th IEEE Conference on Decision and Control, pp. 7372–7377 (2005). https://doi.org/10.1109/CDC.2005.1583351

Schmidt, M., Steinbach, M.C., Willert, B.M.: High detail stationary optimization models for gas networks: validation and results. Optim. Eng. 17(2), 437–472 (2016). https://doi.org/10.1007/s11081-015-9300-3

Schweiger, G., Larsson, P.-O., Magnusson, F., Lauenburg, P., Velut, S.: District heating and cooling systems—framework for modelica-based simulation and dynamic optimization. Energy 137, 566–578 (2017). https://doi.org/10.1016/j.energy.2017.05.115

Stoer, J., Bulirsch, R.: Introduction to Numerical Analysis, 3rd edn. Springer, New York (2002). https://doi.org/10.1007/978-0-387-21738-3

Stolwijk, J., Mehrmann, V.: Error analysis and model adaptivity for flows in gas networks. Analele Stiintifice ale Universitatii Ovidius Constanta, Seria Matematica 26, 231–266 (2018). https://doi.org/10.2478/auom-2018-0027

Verda, V., Colella, F.: Primary energy savings through thermal storage in district heating networks. Energy 36(7), 4278–4286 (2011). https://doi.org/10.1016/j.energy.2011.04.015

Verfürth, R.: A Posteriori Error Estimation Techniques for Finite Element Methods. OUP, Oxford (2013)

Verrilli, F., Srinivasan, S., Gambino, G., Canelli, M., Himanka, M., Del Vecchio, C., Sasso, M., Glielmo, L.: Model predictive control-based optimal operations of district heating system with thermal energy storage and flexible loads. IEEE Trans. Autom. Sci. Eng. 14(2), 547–557 (2017). https://doi.org/10.1109/TASE.2016.2618948

Acknowledgements

We acknowledge the support by the German Bundesministerium für Bildung und Forschung within the project “EiFer”. Moreover, we are very grateful to all the colleagues within the EiFer consortium for many fruitful discussions on the topics of this paper and for providing the data. We also thank the Deutsche Forschungsgemeinschaft for their support within projects A05, B03, B08, and Z01 in the Sonderforschungsbereich/Transregio 154 “Mathematical Modelling, Simulation and Optimization using the Example of Gas Networks”.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dänschel, H., Mehrmann, V., Roland, M. et al. Adaptive nonlinear optimization of district heating networks based on model and discretization catalogs. SeMA 81, 81–112 (2024). https://doi.org/10.1007/s40324-023-00332-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40324-023-00332-6