Abstract

We present an abstract framework for the eigenvalue approximation of a class of non-coercive operators. We provide sufficient conditions to guarantee the spectral correctness of the Galerkin scheme and to obtain optimal rates of convergence. The theory is applied to the convergence analysis of mixed finite element approximations of the elasticity and Stokes eigensystems.



1 Introduction

In many common applications of solid mechanics, mixed formulations derived from the Hellinger-Reissner variational principle perform better than the standard displacement-based formulation. They deliver direct and accurate approximations of the stress tensor and they are free from the locking phenomenon in the nearly incompressible case [11].

The symmetry constraint on the Cauchy stress tensor has been the main difficulty in the construction of stable conforming discretizations of stress-displacement mixed formulations. The first important progress in this direction is due to Arnold and Winther [3]. This work led to further developments in conforming mixed finite elements on simplicial and rectangular meshes in 2D and 3D; see [1, 6, 23] and the references therein. However, these mixed finite elements require the simultaneous imposition of H(div)-conformity and strong symmetry, which entails a large number of degrees of freedom and complicates the implementation of the corresponding Galerkin schemes. Moreover, they are not amenable to hybridization. To overcome this difficulty, one can either consider non-conforming or DG approximations [7, 20, 32] or relax the symmetry constraint as in [2, 4, 5, 14, 21, 31]. We point out that the latter alternative, which is the option chosen in this paper, introduces an additional variable (a Lagrange multiplier called the rotor, which approximates the skew-symmetric part of the displacement gradient) to weakly enforce the symmetry restriction at the discrete level.

The approximation of eigenvalue problems in mixed form has been the object of several papers; see part 3 of [8] and the references therein. In particular, it is known from [9] that the usual stability conditions for discrete mixed source problems (namely the ‘coercivity in the kernel’ and the inf-sup conditions) are not sufficient to ensure correct spectral approximations. Recently, a dual-mixed eigenvalue formulation of the elasticity problem with reduced symmetry has been considered in [26]. The eigenproblem resulting from this approach does not fit into any of the previously existing theories for mixed spectral problems. Nevertheless, the abstract spectral approximation theory of Descloux-Nassif-Rappaz [16] could be successfully adapted in [26] to show that the Galerkin method based on the first order Arnold-Falk-Winther element [5] is free from spurious modes and converges at optimal rates for eigenvalues and eigenvectors. The same strategy has been applied to a pseudostress formulation of the Stokes eigenproblem [28] and to a stress-pressure formulation of a fluid-structure interaction spectral problem [27].

The aim of this paper is to provide a general theory for the spectral approximation of a class of symmetric and noncoercive operators, so that the studies carried out in [26,27,28] all fit into the same framework. The analysis given here is performed according to the ideas in [26] and builds on the theory developed in [16, 17]. The resulting unified approach reveals a new criterion (see Assumption 5 below) to determine the spectral correctness of a given Galerkin approximation. This allows us to validate more families of mixed finite elements for the approximation of the elasticity eigenproblem, as mentioned in Remark 6.1.

We also highlight that the analysis considered in [26] relies on the regularity of an auxiliary elasticity source problem. Here, we can circumvent the use of this property, which allows us to treat the important case of heterogeneous material coefficients.

The paper is organized as follows: In Sect. 2 we set out the abstract spectral problem and we describe its continuous Galerkin approximation in Sect. 3. In Sect. 4 we provide sufficient conditions ensuring the spectral correctness of the approximation in the sense of [16]. In Sect. 5, we establish rates of convergence for eigenvalues and eigenfunctions. Section 6 is devoted to applications. We show that the abstract framework can be applied to the stress formulation with weak symmetry of the elasticity and Stokes eigenproblems. We present numerical results for the latter example that confirm the theoretical convergence rates.

2 An abstract eigenproblem

Let H, X be two infinite-dimensional, separable, real Hilbert spaces endowed with inner products \((\cdot , \cdot )_H\), \((\cdot , \cdot )_X\) and corresponding norms \(\Vert \cdot \Vert _H\) and \(\Vert \cdot \Vert _X\). We assume that the inclusion \(X \hookrightarrow H\) is continuous. We let \(c: X\times X \rightarrow \mathbb {R}\) be a bounded, symmetric and positive semidefinite bilinear form such that \(c(\cdot ,\cdot )+ (\cdot , \cdot )_H\) is coercive on X, i.e., there exists \(\alpha >0\) such that

$$\begin{aligned} c(v,v) + (v, v)_H \ge \alpha \Vert v\Vert _X^2 \qquad \forall v\in X. \end{aligned}$$

We introduce the closed subspace

$$\begin{aligned} K := \{u\in X:\ c(u,v) = 0\quad \forall v\in X\} \end{aligned}$$

and point out that, as \(c(\cdot ,\cdot )\) is semidefinite, we may also write \(K = {\{}u\in X:\, c(u,u) = 0{\}}\). We do not require K to be finite-dimensional. Finally, we let \(B:\, H\times H \rightarrow \mathbb {R}\) be a symmetric and bounded bilinear form and consider \(A:\, X\times X \rightarrow \mathbb {R}\) given by

$$\begin{aligned} A(u,v) := c(u,v) + B(u,v)\qquad \forall u, v\in X. \end{aligned}$$

We are interested in the following eigenvalue problem: find \(0\ne u \in X\) and \(\kappa \in \mathbb {R}\) such that

$$\begin{aligned} A(u,v) = \kappa \, B(u,v)\qquad \forall v\in X. \end{aligned}\tag{2.1}$$

Our purpose is to introduce a series of assumptions that permit us to describe, in this section, the spectrum of problem (2.1) and to analyse, in the subsequent sections, the convergence of the corresponding Galerkin approximation (3.1).

Assumption 1

We assume that

(i) there exists \(\beta _A>0\) such that \(\displaystyle \sup _{v\in X} \dfrac{A(u,v)}{\Vert v\Vert _X} \ge \beta _A \Vert u\Vert _X,\quad \forall u \in X\),

(ii) and there exists \(\beta _B>0\) such that \(\displaystyle \sup _{v\in K} \dfrac{B(u,v)}{\Vert v\Vert _H} \ge \beta _B \Vert u\Vert _H,\quad \forall u \in K\).

Assumption 1(i) and the symmetry of A imply that the linear operator \(T:\, X \rightarrow X\) defined, for all \(u\in X\), by

$$\begin{aligned} A(Tu, v) = B(u, v)\qquad \forall v\in X, \end{aligned}$$

is well-defined and bounded, cf. [18, Theorem 2.6]. The importance of the source operator T lies in the fact that its nonzero eigenvalues are the reciprocals of those of problem (2.1), with the same associated eigenfunctions. A full description of the spectrum of T will then solve eigenproblem (2.1). Since \(c(u,\cdot )\) vanishes for \(u\in K\), it is clear that \(\kappa =1\) is an eigenvalue of (2.1) associated with the eigenspace K, which can also be expressed in terms of the source operator T by the property \(\mathop {\mathrm {ker}}\nolimits (I - T) = K\). Consequently, if K is infinite-dimensional (which is the case in the applications we have in mind), T is not a compact operator.
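To make the roles of c, B, A and T concrete, the following sketch builds a finite-dimensional toy instance of (2.1). The setting is our own assumption, not taken from the paper: X = H = R^n with the Euclidean inner product, c(u,v) = u^T C v with C positive semidefinite and singular, and B symmetric positive definite (stronger than Assumption 1 (ii)). Everything is compact in finite dimension, so the sketch only illustrates the algebra: T reduces to the identity on K = ker C, and the remaining eigenvalues of T lie in (0,1).

```python
import numpy as np

# Toy finite-dimensional instance of (2.1); all data below is hypothetical.
rng = np.random.default_rng(0)
n, dim_K = 8, 3

# c(u, v) = u^T C v: symmetric positive semidefinite with a dim_K-dimensional kernel K.
G = rng.standard_normal((n - dim_K, n))
C = G.T @ G

# B(u, v) = u^T B v: symmetric positive definite in this toy (an assumption, not required by the theory).
R = rng.standard_normal((n, n))
B = R.T @ R + n * np.eye(n)

A = C + B                                   # A(u, v) = c(u, v) + B(u, v)

# Source operator: A(Tu, v) = B(u, v) for all v, i.e. A T = B.
T = np.linalg.solve(A, B)
eta = np.sort(np.linalg.eigvals(T).real)    # eigenvalues of T (real here because A and B are SPD)
kappa = np.sort(1.0 / eta)                  # eigenvalues of (2.1) are their reciprocals

# Orthonormal basis of K = ker(C): right singular vectors of the dim_K zero singular values.
_, _, Vt = np.linalg.svd(C)
K_basis = Vt[-dim_K:].T

print("eta   =", np.round(eta, 4))
print("kappa =", np.round(kappa, 4))
print("T is the identity on K:", np.allclose(T @ K_basis, K_basis))
```

In this toy, A is even coercive, which is exactly what the abstract theory does not require; the sketch only checks the structure kernel/eigenvalue 1 versus the rest of the spectrum.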

We introduce the closed subspace

$$\begin{aligned} K^{\bot _{B}} := \{u\in X:\ B(u,v) = 0\quad \forall v\in K\}. \end{aligned}$$

We point out that the orthogonality symbol \(\bot _B\) is an abuse of notation since \(B(\cdot ,\cdot )\) is generally not an inner product in H. Moreover, the symmetry of A and B implies that T is symmetric with respect to B; indeed, for all \(u, v\in X\),

$$\begin{aligned} B(Tu, v) = A(Tv, Tu) = A(Tu, Tv) = B(u, Tv). \end{aligned}$$

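Since, in addition, T reduces to the identity on K, it follows that \(B(Tu, v) = B(u, Tv) = B(u, v) = 0\) for all \(u\in K^{\bot _{B}}\) and \(v\in K\). Hence \(K^{\bot _{B}}\) is T-invariant, namely, \(T(K^{\bot _{B}}) \subset K^{\bot _{B}}\).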

Proposition 2.1

If Assumption 1 (ii) is satisfied, the splitting \(X = K \oplus K^{\bot _{B}}\) is direct and stable.

Proof

By virtue of Assumption 1 (ii), for any \(u\in X\), there exists a unique \(u_0\in K\) solution of

$$\begin{aligned} B(u_0, v) = B(u, v)\qquad \forall v\in K, \end{aligned}$$

with the a priori estimate (see [18, Theorem 2.6]) \(\Vert u_0\Vert _X \le \frac{\Vert u_0\Vert _H}{\sqrt{\alpha }}\le \frac{\Vert B\Vert }{{\sqrt{\alpha }}\beta _B} \Vert u\Vert _X\), where \(\Vert B\Vert \) stands for the norm of the bilinear form B; the first inequality follows from the coercivity of \(c(\cdot ,\cdot )+(\cdot ,\cdot )_H\) on X, since \(c(u_0,u_0)=0\). It follows that the direct decomposition \(u = u_0 + (u-u_0)\) into components \(u_0\in K\) and \(u-u_0\in K^{\bot _{B}}\) is stable. \(\square \)

As a consequence of Proposition 2.1, there exists a unique continuous projector \(P:\, X \rightarrow X\) with range \(K^{\bot _{B}}\) and kernel K. We are now going to provide a description of the spectrum of T under the following conditions.
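In finite dimension the projector P of Proposition 2.1 has an explicit matrix form. The sketch below assumes, as a hypothetical toy, X = R^n with c and B given by matrices C and B, and Z a matrix whose columns span K = ker C. Solving B(u_0, v) = B(u, v) for all v in K gives u_0 = Z (Z^T B Z)^{-1} Z^T B u, and P u = u - u_0. Invertibility of Z^T B Z is the finite-dimensional counterpart of Assumption 1 (ii). The sketch also checks that T commutes with P, reflecting the T-invariance of both K and K^{⊥_B}.

```python
import numpy as np

# Hypothetical toy data (a finite-dimensional instance of the setting of Sect. 2).
rng = np.random.default_rng(0)
n, dim_K = 8, 3
G = rng.standard_normal((n - dim_K, n))
C = G.T @ G                                   # c(u, v) = u^T C v, kernel K of dimension dim_K
R = rng.standard_normal((n, n))
B = R.T @ R + n * np.eye(n)                   # B(u, v) = u^T B v
T = np.linalg.solve(C + B, B)                 # A(Tu, v) = B(u, v) with A = C + B

_, _, Vt = np.linalg.svd(C)
Z = Vt[-dim_K:].T                             # columns span K

# u_0 in K solves B(u_0, v) = B(u, v) for all v in K:  u_0 = Z (Z^T B Z)^{-1} Z^T B u.
Q = Z @ np.linalg.solve(Z.T @ B @ Z, Z.T @ B) # projector onto K along K^{perp_B}
P = np.eye(n) - Q                             # u -> u - u_0: range K^{perp_B}, kernel K

print("P^2 = P:", np.allclose(P @ P, P))
print("range of P is B-orthogonal to K:", np.allclose(Z.T @ B @ P, 0.0))
print("T P = P T:", np.allclose(T @ P, P @ T))
```

Note that P is generally not an orthogonal projector in the Euclidean sense: it is orthogonal only with respect to B, which is why the paper speaks of an abuse of notation for the symbol ⊥_B.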

Assumption 2

We assume that

(i) the inclusion \(P(X)\hookrightarrow H\) is compact,

(ii) and the inclusion \(P(X)\cap T(X)\hookrightarrow X\) is compact.

We notice that, as \(P(X) = K^{\bot _{B}}\) is T-invariant, the inclusion \(T(P(X)) \subset P(X)\cap T(X)\) holds true and Assumption 2 (ii) implies that \(T:\, K^{\bot _{B}} \rightarrow K^{\bot _{B}}\) is compact. The following result is then a consequence of the spectral characterization of compact operators.

Theorem 2.1

Under Assumption 1 and Assumption 2 (ii), the spectrum of T decomposes as follows: \(\mathop {\mathrm {sp}}\nolimits (T)={\{}0,1{\}}\cup {\{}\eta _k{\}}_{k\in {\mathbb {N}}}\), where:

(i) \(\eta =1\) is an eigenvalue of T with associated eigenspace K, whose (finite or infinite) dimension is the multiplicity of \(\eta =1\);

(ii) \({\{}\eta _k{\}}_{k\in {\mathbb {N}}}\subset (0,1)\) is a sequence of finite multiplicity eigenvalues of T that converges to 0 and the corresponding eigenspaces lie in \(K^{\bot _{B}}\);

(iii) if T is non-injective, \(\eta =0\) is an eigenvalue of T with associated eigenspace \(\mathop {\mathrm {ker}}\nolimits (T)\).

Remark 2.1

If we assume that \(A(v,v)\ne 0\) for all \(v\in K^{\bot _{B}}{\setminus }\{0\}\), then it can also be shown that the ascent of each eigenvalue \(\eta _k\in (0,1)\) is 1, cf. [26, Proposition A.2].

3 A continuous Galerkin discretization

We introduce a family \({\{}X_h{\}}_{h>0} \subset X\) of finite dimensional subspaces of X. The continuous Galerkin discretization of the variational eigenproblem (2.1) reads as follows: find \(0\ne u_h \in X_h\) and \(\kappa _h \in \mathbb {R}\) such that

$$\begin{aligned} A(u_h, v_h) = \kappa _h\, B(u_h, v_h)\qquad \forall v_h\in X_h. \end{aligned}\tag{3.1}$$

We will use the notation

$$\begin{aligned} \delta (u, W) := \inf _{w\in W} \Vert u - w\Vert _X \end{aligned}$$

for the distance in X between an element u and a closed subspace \(W\subset X\).

Assumption 3

We assume that \(K_h \subset K\), where \( K_h := {\{}v_h\in X_h;\quad c(v_h,v_h) = 0{\}}. \)

We consider \( K_h^{\bot _B} := {\{}u_h\in X_h: \, B(u_h, v_h) = 0,\quad \forall v_h\in K_h{\}}. \) It is important to notice that \(K_h^{\bot _B}\) is generally not a subspace of \(K^{\bot _B}\). To proceed with the analysis of problem (3.1) we need the following discrete inf-sup conditions.

Assumption 4

We assume that

(i) there exists \(\beta _A'>0\) independent of h such that \(\displaystyle \sup _{v\in X_h} \dfrac{A(u,v)}{\Vert v\Vert _X} \ge \beta _A' \Vert u\Vert _X,\quad \forall u \in X_h\),

(ii) and there exists \(\beta _B'>0\) independent of h such that \(\displaystyle \sup _{v\in K_h} \dfrac{B(u,v)}{\Vert v\Vert _H} \ge \beta _B' \Vert u\Vert _H,\quad \forall u \in K_h\).
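Assumptions 1 (i) and 4 (i) are inf-sup conditions for a symmetric but possibly indefinite form. On a finite-dimensional space the constant is computable: if A_h is the matrix of A(·,·) in some basis and M_h is the (assumed available, hypothetical input) Gram matrix of the X-inner product, then with M_h = L L^T the inf-sup constant equals the smallest singular value of L^{-1} A_h L^{-T}. The sketch below implements this and shows a 2×2 form that is not coercive but has a positive inf-sup constant, whose value depends on the chosen X-norm. Checking that the constant is independent of h would require repeating the computation on a sequence of spaces X_h.

```python
import numpy as np

def discrete_inf_sup(A_h, M_h):
    """inf_u sup_v  v^T A_h u / (||u||_X ||v||_X)  for a symmetric matrix A_h, where
    ||u||_X^2 = u^T M_h u and M_h is symmetric positive definite.

    With the Cholesky factorization M_h = L L^T the constant is the smallest singular
    value of S = L^{-1} A_h L^{-T}; S is symmetric, so this equals min |eig(S)|."""
    L = np.linalg.cholesky(M_h)
    L_inv = np.linalg.inv(L)
    S = L_inv @ A_h @ L_inv.T
    return np.min(np.abs(np.linalg.eigvalsh(S)))

# Symmetric, indefinite example: A(v, v) = v_1^2 + 2 v_1 v_2 vanishes for v = (0, 1),
# so A is not coercive, yet its inf-sup constant is positive.
A_h = np.array([[1.0, 1.0], [1.0, 0.0]])
beta_euclid = discrete_inf_sup(A_h, np.eye(2))            # (sqrt(5) - 1) / 2
beta_scaled = discrete_inf_sup(A_h, np.diag([1.0, 4.0]))  # (sqrt(2) - 1) / 2: depends on the X-norm
print(beta_euclid, beta_scaled)
```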

Under Assumption 3 and Assumption 4 (ii), we can prove (as in Proposition 2.1) that the splitting \(X_h = K_h \oplus K_h^{\bot _B}\) is direct and uniformly stable with respect to h. We can also associate to this direct decomposition a unique projector \(P_h:\, X_h \rightarrow X_h\) with range \(K_h^{\bot _{B}}\) and kernel \(K_h\), which is uniformly bounded with respect to h.

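Moreover, thanks to Assumption 4 (i), the linear operator \({\tilde{T}}_h:\, X \rightarrow X_h\) defined, for all \(u\in X\), by

$$\begin{aligned} A({\tilde{T}}_h u, v_h) = B(u, v_h)\qquad \forall v_h\in X_h, \end{aligned}$$

is well-defined and uniformly bounded with respect to h. In addition, we have the Céa estimate (cf. [18, Lemma 2.28])

$$\begin{aligned} \Vert Tu - {\tilde{T}}_h u\Vert _X \le \left( 1 + \frac{\Vert A\Vert }{\beta _A'}\right) \delta (Tu, X_h)\qquad \forall u\in X. \end{aligned}\tag{3.3}$$
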
We point out that \(T_h := {\tilde{T}}_h|_{X_h}\) reduces to the identity on \(K_h\), which means that 1 is an eigenvalue of \(T_h\) with associated eigenspace \(K_h\). Moreover, \(\kappa _h\ne 0\) is an eigenvalue of Problem (3.1) if and only if \(\eta _h = 1/\kappa _h\) is an eigenvalue of \(T_h\) and the corresponding eigenspaces are the same. Finally, here again, the symmetry of \(T_h\) with respect to B implies that \(K_h^{\bot _B}\) is \(T_h\)-invariant, i.e., \(T_h(K_h^{\bot _B}) \subset K_h^{\bot _B}\). We are then in a position to provide the following spectral decomposition of \(T_h\).

Theorem 3.1

The spectrum of \(T_h\) consists of \(m:=\mathop {\mathrm {dim}}\nolimits (X_h)\) eigenvalues, repeated according to their respective multiplicities. Under Assumptions 3 and 4, it holds \(\mathop {\mathrm {sp}}\nolimits (T_h)={\{}1{\}}\cup {\{}\eta _{hk}{\}}_{k=1}^{m_0}\), with \(m_0 = m - \mathop {\mathrm {dim}}\nolimits (K_h)\). Moreover,

(i) the eigenspace associated with \(\eta _h=1\) is \(K_h\);

(ii) \(\eta _{hk}\in (0,1)\), \(k=1,\dots ,m_0 - \mathop {\mathrm {dim}}\nolimits (\ker (T_h))\), are eigenvalues with eigenspaces lying in \(K_h^{\bot _B}\);

(iii) if \(T_h\) is non-injective, \(\eta _h=0\) is an eigenvalue with corresponding eigenspace \(\mathop {\mathrm {ker}}\nolimits (T_h)\).

Proof

The result follows from the decomposition \(X_h=K_h\oplus K_h^{\bot _B}\), the fact that \(T_h|_{K_h}:K_h \longrightarrow K_h\) is the identity and the inclusion \(T_h(K_h^{\bot _B}){\subset } K_h^{\bot _B}\). \(\square \)

Remark 3.1

Here again (see Remark 2.1), if \(A(v,v) \ne 0\) for all \(v\in K_h^{\bot _B}{\setminus }\{0\}\), then the eigenvalues \(\eta _{hk}\in (0,1)\) are non-defective.
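The counting in Theorem 3.1 can be checked on a toy Galerkin space. The sketch below is ours: it reuses a hypothetical finite-dimensional instance (X = R^n, c and B given by matrices C and B with B symmetric positive definite) and chooses X_h as the span of two kernel vectors plus three generic vectors, so that K_h = X_h ∩ K has dimension 2. In this toy Assumption 3 holds automatically, and the eigenvalue 1 of T_h should have multiplicity dim K_h, with the remaining m_0 = m - dim K_h eigenvalues in (0,1).

```python
import numpy as np

# Hypothetical toy data, as in a finite-dimensional instance of Sect. 2.
rng = np.random.default_rng(0)
n, dim_K = 8, 3
G = rng.standard_normal((n - dim_K, n))
C = G.T @ G                                    # c(u, v) = u^T C v
R = rng.standard_normal((n, n))
B = R.T @ R + n * np.eye(n)                    # B(u, v) = u^T B v
A = C + B                                      # A(u, v) = c(u, v) + B(u, v)
_, _, Vt = np.linalg.svd(C)
Z = Vt[-dim_K:].T                              # basis of K = ker(C)

# X_h of dimension m = 5: two kernel directions plus three generic directions.
V = np.hstack([Z[:, :2], rng.standard_normal((n, 3))])
m = V.shape[1]

# Galerkin matrices of (3.1) in the basis V; in coordinates T_h = A_h^{-1} B_h.
A_h, B_h = V.T @ A @ V, V.T @ B @ V
T_h = np.linalg.solve(A_h, B_h)
eta_h = np.sort(np.linalg.eigvals(T_h).real)

# K_h = {v_h in X_h : c(v_h, v_h) = 0}: null space of the Galerkin matrix of c.
c_h = V.T @ C @ V
dim_K_h = int(np.sum(np.linalg.eigvalsh(c_h) < 1e-10 * np.linalg.norm(c_h)))
m0 = m - dim_K_h
print("eta_h =", np.round(eta_h, 4), " dim K_h =", dim_K_h, " m0 =", m0)
```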

4 Correctness of the spectral approximation

Henceforth, given any positive functions \(F_h\) and \(G_h\) depending on the parameter h, the abbreviation \(F_h \lesssim G_h\) means that \(F_h \le C\, G_h\) with a constant \(C>0\) independent of h. Moreover, the norm of a linear and continuous operator \(L:\, V_1 \rightarrow V_2\) between two Hilbert spaces \(V_1\) and \(V_2\) is denoted

$$\begin{aligned} \Vert L\Vert _{{\mathcal {L}}(V_1, V_2)} := \sup _{0\ne v\in V_1} \frac{\Vert Lv\Vert _{V_2}}{\Vert v\Vert _{V_1}}. \end{aligned}$$

When \(V_1 = V_2 = V\) we simply write \(\Vert L\Vert _{{\mathcal {L}}(V)}\) for \(\Vert L\Vert _{{\mathcal {L}}(V, V)}\).

The spectral approximation theory developed in [16] for non-compact operators relies essentially on the condition

$$\begin{aligned} \lim _{h\rightarrow 0} \Vert T - T_h\Vert _{{\mathcal {L}}(X_h, X)} = 0 \end{aligned}\tag{4.1}$$

to prove that \(T_h:\, X_h \rightarrow X_h\) provides a correct spectral approximation of T (in a sense that will be made precise in Theorem 4.1 below). The aim of this section is to show that the following key assumption guarantees (4.1).

Assumption 5

There exists a linear operator \(\Xi _h:\,K^{\bot _B} \rightarrow X_h\) satisfying

(i) there exists a constant \(C>0\) independent of h such that

$$\begin{aligned} \Vert \Xi _h v\Vert _H\le C \Vert v\Vert _H \quad \text {and} \quad \Vert \Xi _h v\Vert _X\le C \Vert v\Vert _X\qquad \forall v\in K^{\bot _B}, \end{aligned}$$

(ii) \(\lim _{h\rightarrow 0} \Vert (I - \Xi _h)Pv\Vert _X = 0\), \(\forall v\in X\),

(iii) and \((I -\Xi _h P) X_h \subset K_h\).

Lemma 4.1

If Assumptions 1, 3 and 4 are satisfied, the following estimate holds true

Proof

Taking into account that \(T-T_h\) vanishes identically on \(K_h\subset K\) we obtain,

Next, we deduce from the triangle inequality and Céa estimate (3.3) that

and the uniform boundedness of \({\widetilde{T}}_h\) with respect to h gives the result. \(\square \)

To achieve (4.1), let us first prove the following auxiliary result.

Lemma 4.2

Under Assumptions 1 (ii), 3, 4 (ii) and 5 (iii) it holds,

Proof

Let us first notice that, by virtue of Assumption 5 (iii),

The triangle inequality yields

where the last estimate is a consequence of (4.2), Assumption 5 (iii) and the fact that \((I - \Xi _h) Pu_h = u_h - \Xi _hP u_h -(u_h - Pu_h) \in K\). Next, we use the inf-sup condition provided by Assumption 4 (ii) to deduce from (4.3) and Assumption 3 that

and the result follows. \(\square \)

Lemma 4.3

Under Assumptions 1–5 it holds

Proof

Let us first notice that

Combining Lemma 4.1 and Lemma 4.2 with the last estimate yields

Now, by virtue of Assumption 2 (ii) and Assumption 5 (i)–(ii), \(TP:\, X \rightarrow X\) is compact and the operator \(I - \Xi _h:\, (K^{\bot _B}, \Vert \cdot \Vert _X) \rightarrow X\) is uniformly bounded and converges pointwise to zero. Hence, \((I - \Xi _h)TP:\, X\rightarrow X\) converges uniformly to zero; namely,

On the other hand, thanks to Assumption 2 (i) and Assumption 5 (i)–(ii), \(P:\, X \rightarrow H\) is compact and \(I - \Xi _h:\, (K^{\bot _B}, \Vert \cdot \Vert _H) \rightarrow X\) is uniformly bounded and converges pointwise to zero, due to the continuous embedding of X in H. Consequently, \((I - \Xi _h)P:\, X\rightarrow H\) converges uniformly to zero, i.e.,

and the result follows by using (4.5) and (4.6) in (4.4). \(\square \)
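The key step in the proof of Lemma 4.3 is that a uniformly bounded family converging pointwise to zero, composed with a compact operator, converges to zero in norm. A simple diagonal example (our own, not from the paper) shows why compactness is needed: take D = diag(1/k) on ℓ², which is compact, and let Π_n be the orthogonal projection onto the first n coordinates. Then I - Π_n tends to zero pointwise but ‖I - Π_n‖ = 1 for every n, whereas ‖(I - Π_n)D‖ = 1/(n+1) → 0. The truncation of ℓ² at N_MAX terms is an assumption of the sketch.

```python
import math

# Stand-in for l^2: sequences truncated at N_MAX terms (a hypothetical discretization).
N_MAX = 10_000
d = [1.0 / k for k in range(1, N_MAX + 1)]     # compact diagonal operator D: e_k -> e_k / k

def norm_tail_times_D(n):
    """||(I - Pi_n) D||: a diagonal operator with entries 1/k for k > n, so its norm is 1/(n + 1)."""
    return max(d[n:])

def tail_norm(v, n):
    """||(I - Pi_n) v|| for a fixed vector v."""
    return math.sqrt(sum(x * x for x in v[n:]))

v = [1.0 / k for k in range(1, N_MAX + 1)]     # a fixed element of l^2
for n in (10, 100, 1000):
    e_next = [0.0] * N_MAX
    e_next[n] = 1.0                            # e_{n+1} satisfies (I - Pi_n) e_{n+1} = e_{n+1}
    print(f"n={n:5d}  ||(I-Pi_n)D|| = {norm_tail_times_D(n):.2e}  "
          f"||(I-Pi_n)v|| = {tail_norm(v, n):.2e}  ||(I-Pi_n)e_(n+1)|| = {tail_norm(e_next, n):.1f}")
```

The last column stays equal to 1, so I - Π_n does not converge in norm; only its composition with the compact operator D does, which is the mechanism used for (I - Ξ_h)TP and (I - Ξ_h)P.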

For the sake of completeness, we conclude this section by adapting the results of [16] (see also [26]) to show that Assumptions 1–5 are sufficient to ensure the correctness of the spectral approximation. Let us first recall that the resolvent operator of T is given by

$$\begin{aligned} R_z(T) := \left( zI - T\right) ^{-1}:\, X \rightarrow X, \qquad z\in {\mathbb {C}}{\setminus }\mathop {\mathrm {sp}}\nolimits (T). \end{aligned}$$

The mapping \(z\mapsto \Vert R_z(T)\Vert _{\mathcal {L}(X)}\) is continuous on \({\mathbb {C}}{\setminus }\mathop {\mathrm {sp}}\nolimits (T)\) and goes to zero as \(|z|\rightarrow \infty \). Consequently, it is bounded on any compact subset \(F\subset {\mathbb {C}}\) satisfying \(F\cap \mathop {\mathrm {sp}}\nolimits (T) = \emptyset \), namely, there exists a constant \(C_F>0\) such that

$$\begin{aligned} \Vert R_z(T)\Vert _{\mathcal {L}(X)} \le C_F\qquad \forall z\in F. \end{aligned}\tag{4.8}$$

It is shown in [26, Lemma 1] that the same property holds true uniformly in h for the resolvent \(R_z(T_h):= \left( z I - T_h \right) ^{-1}:\, X_h \rightarrow X_h\) of the discrete source operator \(T_h\). We recall this result below.

Lemma 4.4

Let \(F\subset {\mathbb {C}}\) be an arbitrary compact subset such that \(F\cap \mathop {\mathrm {sp}}\nolimits (T) = \emptyset \). Then, if Assumptions 1-5 are satisfied, there exists \(h_0>0\) such that \(\forall h\le h_0\),

where \(C_F>0\) is the constant appearing in (4.8).

Proof

We deduce from the decomposition \( (z I - T_h) v_h = (z I - T) v_h + (T - T_h ) v_h \) and (4.8) that

and the result follows from Lemma 4.3. \(\square \)

Remark 4.1

Lemma 4.4 means that given an arbitrary compact set \(F\subset {\mathbb {C}} {\setminus }\mathop {\mathrm {sp}}\nolimits (T)\) there exists \(h_0 > 0\) such that for all \(h \le h_0\) it holds \(F\subset {\mathbb {C}}{\setminus }\mathop {\mathrm {sp}}\nolimits (T_h)\), which means that, for h small enough, the Galerkin scheme (3.1) does not introduce spurious modes.

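For E and F closed subspaces of X, we set

$$\begin{aligned} \delta (E,F) := \sup _{u\in E,\ \Vert u\Vert _X = 1} \delta (u, F), \qquad \widehat{\delta }(E,F) := \max \{\delta (E,F), \delta (F,E)\}, \end{aligned}$$

the latter being the so-called gap between subspaces E and F.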
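Both quantities are easy to evaluate when X is a Euclidean space, which is an assumption of the following sketch (the X-norms of the applications are different). Subspaces are represented by matrices whose columns span them; with orthonormal bases Q_E and Q_F, δ(E,F) is the spectral norm of (I - Q_F Q_F^T) Q_E. The second example shows why the symmetric gap is used: a subspace E strictly contained in F has δ(E,F) = 0 but gap 1, so a small gap also forces equal dimensions, as exploited in Theorem 4.1.

```python
import numpy as np

def delta(E, F):
    """delta(E, F) = sup over unit u in span(E) of dist(u, span(F)), Euclidean inner product."""
    QE, _ = np.linalg.qr(E)
    QF, _ = np.linalg.qr(F)
    residual = QE - QF @ (QF.T @ QE)       # (I - P_F) applied to an orthonormal basis of E
    return np.linalg.norm(residual, 2)     # spectral norm = largest singular value

def gap(E, F):
    """Symmetric gap: max(delta(E, F), delta(F, E))."""
    return max(delta(E, F), delta(F, E))

t = 0.3
E = np.array([[1.0], [0.0], [0.0]])
F = np.array([[np.cos(t)], [np.sin(t)], [0.0]])
print(gap(E, F), np.sin(t))                # two lines at angle t: the gap is sin(t)

G = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])   # span(G) contains span(E)
print(delta(E, G), delta(G, E), gap(E, G))          # 0, 1, 1
```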

Let \(F\subset {\mathbb {C}}{\setminus }{\{}0,1{\}}\) be a compact set whose boundary \(\Lambda \) is a smooth Jordan curve not intersecting \(\mathop {\mathrm {sp}}\nolimits (T)\). It is well known [24] that the linear and bounded operator

$$\begin{aligned} \mathcal {E} := \frac{1}{2\pi i} \int _{\Lambda }R_z(T)\, dz:\, X \rightarrow X \end{aligned}$$

is a projector onto the finite dimensional space \(\mathcal {E}(X)\) spanned by the generalized eigenfunctions associated with the finite set of eigenvalues of T enclosed by \(\Lambda \). It follows from Lemma 4.4 that, for h small enough, the linear operator

$$\begin{aligned} \mathcal {E}_h := \frac{1}{2\pi i} \int _{\Lambda }R_z(T_h)\, dz:\, X_h \rightarrow X_h \end{aligned}$$

is uniformly bounded in h. Likewise, \(\mathcal {E}_h\) is a projector onto the \(T_h\)-invariant subspace \(\mathcal {E}_h(X_h)\) corresponding to the eigenvalues of \(T_h:\, X_h \rightarrow X_h\) enclosed by \(\Lambda \). The aim now is to compare \(\mathcal {E}_h(X_h)\) to \(\mathcal {E}(X)\) in terms of the gap \(\widehat{\delta }\). The following auxiliary result is essential for this purpose.

Lemma 4.5

If Assumptions 1–5 are satisfied, there exists \(h_0>0\) such that

Proof

We reproduce here the proof given in [26, Lemma 2]. By virtue of Lemma 4.4, there exists \(h_0>0\) such that the resolvent identity

is satisfied. Hence, for any \(v_h\in X_h\),

and the result follows from (4.8) and Lemma 4.4. \(\square \)

Lemma 4.6

Assume that Assumptions 1–5 are satisfied. There exists \(h_0>0\) such that

Proof

As \(\mathcal {E}_h:\, X_h\rightarrow X_h\) is a projector, it holds \(\mathcal {E}_h u_h = u_h\) for all \(u_h\in \mathcal {E}_h(X_h)\). Hence, there exists \(h_0>0\) such that

Combining the last estimate with (4.9) gives

Using this time that \(\mathcal {E}u=u\) for all \(u\in \mathcal {E}(X)\) yields

Consequently, by virtue of the uniform boundedness of \(\mathcal {E}_h:\, X_h \rightarrow X_h\), there exists \(h_0>0\) such that

It follows that

and the result is a consequence of the last estimate, Lemma 4.5 and (4.12). \(\square \)

We are now in a position to establish the convergence properties of the eigenvalues and eigenfunctions.

Theorem 4.1

Assume that Assumptions 1–5 are satisfied. Let \(F\subset {\mathbb {C}}{\setminus }{\{}0,1{\}}\) be an arbitrary compact set with smooth boundary \(\Lambda \) satisfying \(\Lambda \cap \mathop {\mathrm {sp}}\nolimits (T) = \emptyset \). We assume that there are m eigenvalues \(\eta ^F_1, \ldots , \eta ^F_m\) of T (repeated according to their algebraic multiplicities) contained in \(\Lambda \). We also consider the eigenvalues \(\eta ^F_{1, h}, \ldots , \eta ^F_{m(h), h}\) of \(T_h:\, X_h \rightarrow X_h\) lying in F and repeated according to their algebraic multiplicities. Then, there exists \(h_0>0\) such that \(m(h) = m\) for all \(h\le h_0\) and

Moreover, if \(\mathcal {E}(X)\) is the T-invariant subspace of X spanned by the generalized eigenfunctions corresponding to the set of eigenvalues \({\{}\eta ^F_{i},\ i = 1,\ldots , m{\}}\) and \(\mathcal {E}_h(X_h)\) is the \(T_h\)-invariant subspace of \(X_h\) spanned by the eigenspaces corresponding to \({\{}\eta ^F_{i, h},\ i = 1,\ldots , m{\}}\), then \({\widehat{\delta }}(\mathcal {E}(X),\mathcal {E}_h(X_h))\rightarrow 0\) as \(h\rightarrow 0\).

Proof

We deduce from Lemma 4.6, Lemma 4.3 and Assumption 5 (ii) and from the fact that \(\mathcal {E}(X)\subset P(X)\) is a finite dimensional subspace of X that

As a consequence, \(\mathcal {E}(X)\) and \(\mathcal {E}_h(X_h)\) have the same dimension provided h is sufficiently small, cf. [24]. Finally, as the eigenvalues \({\{}\eta ^F_1, \ldots , \eta ^F_m{\}}\) are isolated, for a sufficiently small \(\epsilon >0\), we can consider \(D = \cup _{i=1}^m D_{\eta _i^F} \subset F\), where \(D_{\eta _i^F}\subset {\mathbb {C}}\), \(i=1,\ldots , m\), are disjoint closed disks centered at \(\eta _i^F\) of radius \(\epsilon \). The previous analysis shows that there exists \(h(\epsilon )>0\) such that \(\eta ^F_{1, h}, \ldots , \eta ^F_{m, h}\) all lie inside D for \(h\le h(\epsilon )\), which means that

\(\square \)

5 Asymptotic estimates for the eigenvalue and eigenfunction error

We proved in Sect. 4 that, under Assumptions 1–5, the Galerkin scheme (3.1) does not pollute the spectrum of T with spurious modes. Moreover, we established the convergence of eigenvalues and eigenfunctions with correct multiplicity. However, in practice the space \(\mathcal {E}_\eta (X)\) of generalized eigenfunctions corresponding to a given isolated eigenvalue \(\eta \ne 1\) enjoys individual smoothness properties. Therefore, in order to be able to claim that the Galerkin method (3.1) has optimal convergence rates we need to estimate the error for a particular eigenvalue \(\eta \ne 1\) and for the corresponding eigenspace \(\mathcal {E}_\eta (X)\) only in terms of \(\delta (\mathcal {E}_\eta (X), X_h)\). This question has been addressed in [17] for noncompact operators under the condition of coercivity for the bilinear form A. In this section we extend the results to the abstract framework we are considering here.

Hereafter, we focus on a particular isolated eigenvalue \(\eta \ne 1\) of T of algebraic multiplicity m and let \(D_\eta \subset \mathbb C\) be a closed disk centered at \(\eta \) with boundary \(\gamma \) such that \(D_\eta \cap \mathop {\mathrm {sp}}\nolimits (T) = {\{}\eta {\}}\). We denote by \(\mathcal {E}_\eta :=\frac{1}{2\pi i} \int _{\gamma }R_z(T)\, dz: X \rightarrow X\) the projector onto the eigenspace \(\mathcal {E}_\eta (X)\) of \(\eta \) and we define, for h small enough, the projector \(\mathcal {E}_{\eta , h}:=\frac{1}{2\pi i} \int _{\gamma }R_z( T_h)\, dz: X_h \rightarrow X_h\) onto the \(T_h\)-invariant subspace \(\mathcal {E}_{\eta , h}(X_h)\) corresponding to the m eigenvalues of \(T_h:\, X_h \rightarrow X_h\) enclosed by \(\gamma \).
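The contour-integral projectors used in this section can be approximated numerically in finite dimension. The sketch below is illustrative only and assumes a small dense matrix T and a circle |z - center| = radius as contour: with z = center + w and dz = i w dθ, the trapezoidal rule gives (1/N) Σ_k w_k (z_k I - T)^{-1}, which converges geometrically for this periodic analytic integrand. The matrix T is a hypothetical non-symmetric example with a double eigenvalue 0.5, so the result is an oblique (non-orthogonal) projector, as in the B-symmetric setting of the paper.

```python
import numpy as np

def riesz_projector(T, center, radius, n_nodes=64):
    """Trapezoidal-rule approximation of (1/(2*pi*i)) * integral of (zI - T)^{-1} dz
    over the circle |z - center| = radius; this equals (1/n_nodes) * sum_k w_k (z_k I - T)^{-1}."""
    n = T.shape[0]
    P = np.zeros((n, n), dtype=complex)
    for k in range(n_nodes):
        w = radius * np.exp(2j * np.pi * k / n_nodes)   # z_k - center
        P += w * np.linalg.inv((center + w) * np.eye(n) - T)
    return (P / n_nodes).real   # for real T the imaginary parts cancel up to round-off

# Hypothetical non-symmetric T with eigenvalues 0.9, 0.5 (double) and 0.1, diagonalizable by construction.
rng = np.random.default_rng(1)
S = np.eye(4) + 0.1 * rng.standard_normal((4, 4))
S_inv = np.linalg.inv(S)
T = S @ np.diag([0.9, 0.5, 0.5, 0.1]) @ S_inv

P = riesz_projector(T, center=0.5, radius=0.1)
P_exact = S[:, 1:3] @ S_inv[1:3, :]      # spectral projector onto the eigenspace of 0.5
print("trace(P) =", np.trace(P), " matches exact projector:", np.allclose(P, P_exact))
```

The trace of the computed projector equals the algebraic multiplicity enclosed by the contour (here 2), which is the finite-dimensional analogue of the statement m(h) = m in Theorem 4.1.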

We begin our analysis by proving an analogue of Lemma 4.4 for \(R_z({\tilde{T}}_h):= (z I- {\tilde{T}}_h)^{-1}: X\longrightarrow X\).

Lemma 5.1

Assume that Assumptions 1–5 are satisfied. Let \(F\subset {\mathbb {C}}\) be an arbitrary compact subset such that \(F\cap \mathop {\mathrm {sp}}\nolimits (T) = \emptyset \). There exist \(C'_F>0\) and \(h_0>0\) such that,

Proof

Given \(u\in X\) we let \( u_h^* = {\tilde{T}}_h u \in X_h \). We deduce from the identity

and from Lemma 4.4 that

The last estimate and the triangle inequality yield

and the result follows from the uniform boundedness of \(\Vert {\tilde{T}}_h\Vert _{\mathcal {L}(X)}\). \(\square \)

It follows from Lemma 5.1 that, for h small enough, \(R_z({\tilde{T}}_h): X\longrightarrow X\) is linear and bounded uniformly in h for all \(z\in \gamma \). Hence, the linear operator

$$\begin{aligned} \tilde{\mathcal {E}}_{\eta , h} := \frac{1}{2\pi i} \int _{\gamma }R_z({\tilde{T}}_h)\, dz:\, X \rightarrow X \end{aligned}$$

is uniformly bounded as well. It is straightforward to check that \(R_z( {\tilde{T}}_h)|_{X_h} = R_z(T_h)\), and hence \(\tilde{\mathcal {E}}_{\eta , h}|_{X_h} = \mathcal {E}_{\eta , h}\). Moreover, if \({\tilde{\eta }}\in D_{\eta }\) is an eigenvalue of \({\tilde{T}}_h\), as \({\tilde{\eta }}\ne 0\), the corresponding eigenspace is a subspace of \(\mathcal {E}_{\eta , h}(X_h)\) and \({\tilde{\eta }}\) must coincide with one of the eigenvalues \({\{}\eta _{i,h},\, i=1,\ldots , m{\}}\) of \(T_h\). We conclude that \(\tilde{\mathcal {E}}_{\eta , h}(X)= \mathcal {E}_{\eta , h}(X_h)\) is the eigenspace corresponding to the eigenvalues of \(T_h\) enclosed by \(\gamma \).

Theorem 5.1

Under Assumptions 1–5 and for h small enough, it holds

Proof

Thanks to Lemma 5.1, there exists \(h_0>0\) such that

Hence, recalling that \(\mathcal {E}_\eta (X)\) is invariant for T and hence also for \(R_z(T)\) (i.e. \(R_z(T) \mathcal {E}_\eta (X) \subset \mathcal {E}_\eta (X)\)), we have

Now, due to the fact that \(\mathcal {E}_\eta (X)\subset K^{\bot _B}\) is finite dimensional and T-invariant, we deduce from (5.2), Céa estimate (3.3) and Assumption 5 (ii) that

It follows that

On the other hand, we also deduce from (5.3) that the operator \(\tilde{\mathcal {E}}_{\eta ,h}:\ \mathcal {E}_\eta (X) \rightarrow \mathcal {E}_{\eta ,h}(X_h)\) converges uniformly to the identity, which proves that it is invertible for h small enough. We denote its inverse by \(\Lambda _{\eta ,h}:\ \mathcal {E}_{\eta ,h}(X_h) \rightarrow \mathcal {E}_\eta (X)\). It is straightforward that, if \(h_0>0\) is such that

then \(\Vert \Lambda _{\eta , h}\Vert _{\mathcal {L}(\mathcal {E}_{\eta ,h}(X_h),X)} \le 2\) and, again by (5.3),

The result follows from the last estimate and (5.4). \(\square \)

We recall that \(\kappa = 1/\eta \) is an eigenvalue of Problem (2.1) with the same m-dimensional eigenspace \(\mathcal {E}_\eta (X)\). Analogously, if \(\eta _{i,h}\), \(i=1,\ldots , m\), are the eigenvalues of \(T_h\) (repeated according to their respective algebraic multiplicities) that converge to \(\eta \), then \(\kappa _{i,h} = 1/\eta _{i,h}\) are the eigenvalues of Problem (3.1) converging to \(\kappa \) and the corresponding generalized eigenfunctions span \(\mathcal {E}_{\eta , h}(X_h)\). The last step of this section is the following theorem, in which we establish a double order of convergence for the eigenvalues. To this end we need the following assumption.

Assumption 6

Assume \(B(u,u)>0\) for all \(u\in \mathcal {E}_\eta (X){\setminus }\{0\}\).

Theorem 5.2

Under Assumptions 1–6, there exists \(h_0 > 0\) such that,

Proof

We denote by \(u_{i,h}\) an eigenfunction corresponding to \(\kappa _{i,h}\) satisfying \(\Vert u_{i,h}\Vert _X=1\). There exists an eigenfunction \(u\in \mathcal {E}_\eta (X)\) satisfying

It follows that, for h small enough, \(\Vert u\Vert _X\) is bounded from below and above by constants independent of h. Furthermore, Assumption 6 and the fact that \(\mathcal {E}_\eta (X)\) is finite-dimensional imply the existence of \(c>0\), independent of h, such that \(B(u,u)\ge c\Vert u\Vert _X^2\) for all \(u\in \mathcal {E}_\eta (X)\). Using (5.5) and the uniform boundedness of \(\Vert u\Vert _X\), it is straightforward to deduce that \(B(u_{i,h},u_{i,h})\ge \frac{c}{2}\) for h sufficiently small. We can now use the identity, which follows from (2.1), (3.1), the inclusion \(X_h\subset X\) and the symmetry of A and B,

$$\begin{aligned} (\kappa _{i,h} - \kappa )\, B(u_{i,h},u_{i,h}) = A(u - u_{i,h}, u - u_{i,h}) - \kappa \, B(u - u_{i,h}, u - u_{i,h}), \end{aligned}$$

to obtain the estimate

and the result follows. \(\square \)
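The double order of convergence of Theorem 5.2 can be observed in the simplest setting covered by the framework, which we choose only for illustration: K = {0}, X = H¹₀(0,1), H = L²(0,1), c(u,v) = (u',v') and B(u,v) = (u,v), so that (2.1) is -u'' = λu with κ = 1 + λ and κ_h - κ = λ_h - λ. This example is coercive and therefore does not exercise the non-coercive features of the theory. For continuous piecewise linear elements on a uniform mesh, the eigenfunction error in H¹ is known to be O(h), so the eigenvalue error should behave like O(h²); for this mesh the discrete eigenvalue is also known in closed form, λ_h = (6/h²)(1 - cos πh)/(2 + cos πh). The sketch below (plain Python; the helper names and the use of inverse iteration with a tridiagonal solver are our own choices) computes the first discrete eigenvalue and the ratio of successive errors when h is halved.

```python
import math

def thomas(sub, diag, sup, rhs):
    """Solve a tridiagonal linear system (no pivoting; adequate for the SPD matrices used here)."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0] = sup[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - sub[i - 1] * c[i - 1]
        c[i] = sup[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - sub[i - 1] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def tri_matvec(sub, diag, sup, x):
    """Multiply a tridiagonal matrix by a vector."""
    y = [diag[i] * x[i] for i in range(len(x))]
    for i in range(len(x) - 1):
        y[i] += sup[i] * x[i + 1]
        y[i + 1] += sub[i] * x[i]
    return y

def p1_first_eigenvalue(n_elems, iters=80):
    """Smallest eigenvalue of K u = lambda M u (P1 elements, uniform mesh of (0, 1),
    homogeneous Dirichlet conditions), computed by inverse iteration."""
    h = 1.0 / n_elems
    n = n_elems - 1                                            # interior nodes
    k_diag, k_off = [2.0 / h] * n, [-1.0 / h] * (n - 1)        # stiffness matrix of (u', v')
    m_diag, m_off = [4.0 * h / 6.0] * n, [h / 6.0] * (n - 1)   # mass matrix of (u, v)
    u = [1.0] * n
    for _ in range(iters):
        w = thomas(k_off, k_diag, k_off, tri_matvec(m_off, m_diag, m_off, u))
        norm = math.sqrt(sum(wi * wi for wi in w))
        u = [wi / norm for wi in w]
    ku = tri_matvec(k_off, k_diag, k_off, u)
    mu = tri_matvec(m_off, m_diag, m_off, u)
    return sum(a * b for a, b in zip(u, ku)) / sum(a * b for a, b in zip(u, mu))  # Rayleigh quotient

exact = math.pi ** 2                                  # first Dirichlet eigenvalue of -u'' on (0, 1)
meshes = (10, 20, 40, 80)
lam_h = [p1_first_eigenvalue(N) for N in meshes]
errors = [lh - exact for lh in lam_h]
ratios = [errors[i] / errors[i + 1] for i in range(len(errors) - 1)]
for N, err in zip(meshes, errors):
    print(f"h = 1/{N:<3d}  lambda_h - lambda = {err:.3e}")
print("error ratios when h is halved:", [round(r, 3) for r in ratios])   # close to 4 = 2^2
```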

6 Applications

We present two applications of the abstract theory developed in the previous sections. They concern dual mixed formulations for the elasticity and Stokes eigensystems.

We denote the space of real matrices of order \(d\times d\) by \(\mathbb {M}\), and define \(\mathbb {S}:= {\{}\varvec{\tau }\in \mathbb {M};\ \varvec{\tau }= \varvec{\tau }^{\texttt {t}}{\}} \) and \(\mathbb {K}:={\{}\varvec{\tau }\in \mathbb {M};\ \varvec{\tau }= -\varvec{\tau }^{\texttt {t}}{\}}\) as the subspaces of real symmetric and skew-symmetric matrices, respectively. The component-wise inner product of two matrices \(\varvec{\sigma }, \,\varvec{\tau }\in \mathbb {M}\) is defined by \(\varvec{\sigma }:\varvec{\tau }:= \mathop {\mathrm {tr}}\nolimits ( \varvec{\sigma }^{\mathtt {t}} \varvec{\tau })\), where \(\mathop {\mathrm {tr}}\nolimits \varvec{\tau }:=\sum _{i=1}^d\tau _{ii}\) and \(\varvec{\tau }^{\mathtt {t}}:=(\tau _{ji})\) stand for the trace and the transpose of \(\varvec{\tau }= (\tau _{ij})\), respectively. We also introduce the deviatoric part \(\varvec{\tau }^{\mathtt {D}}:=\varvec{\tau }-\frac{1}{d}\left( \mathop {\mathrm {tr}}\nolimits \varvec{\tau }\right) I\) of a tensor \(\varvec{\tau }\), where I stands here for the identity in \(\mathbb {M}\).
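The matrix operations above are elementary; the following plain-Python sketch, with an arbitrary sample matrix of our own choosing, checks three facts used implicitly later: a displacement gradient splits as its symmetric part (the strain) plus its skew-symmetric part (the rotation), the spaces 𝕊 and 𝕂 are orthogonal for the product ':', and deviatoric tensors are trace-free. The function names are hypothetical helpers introduced only for this illustration.

```python
def transpose(t):
    return [list(row) for row in zip(*t)]

def trace(t):
    return sum(t[i][i] for i in range(len(t)))

def ddot(s, t):
    """Component-wise inner product s : t = tr(s^t t) = sum_ij s_ij t_ij."""
    return sum(a * b for row_s, row_t in zip(s, t) for a, b in zip(row_s, row_t))

def deviatoric(t):
    """t^D = t - (tr t / d) I."""
    d = len(t)
    return [[t[i][j] - (trace(t) / d if i == j else 0.0) for j in range(d)] for i in range(d)]

def sym(t):
    """Symmetric part (t + t^t) / 2, an element of S."""
    return [[0.5 * (a + b) for a, b in zip(r, rt)] for r, rt in zip(t, transpose(t))]

def skew(t):
    """Skew-symmetric part (t - t^t) / 2, an element of K."""
    return [[0.5 * (a - b) for a, b in zip(r, rt)] for r, rt in zip(t, transpose(t))]

# Hypothetical sample value of a displacement gradient in d = 3.
grad_u = [[1.0, 2.0, 0.0], [4.0, 3.0, -1.0], [0.5, 5.0, 2.0]]
eps, rot = sym(grad_u), skew(grad_u)       # strain eps(u) and rotation r

print(ddot(eps, rot))                      # S and K are orthogonal for ":" (0 up to round-off)
print(trace(deviatoric(grad_u)))           # deviatoric tensors are trace-free
```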

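Throughout this paper, all differential operators are applied row-wise. Hence, given regular tensor fields \(\varvec{\sigma }:\Omega \rightarrow \mathbb {M}\) and vector fields \(\varvec{u}:\Omega \rightarrow \mathbb {R}^d\), we define the divergence \(\mathop {\mathbf {div}}\nolimits \varvec{\sigma }:\Omega \rightarrow \mathbb {R}^d\), the gradient \(\nabla \varvec{u}:\Omega \rightarrow \mathbb {M}\), and the linearized strain tensor \(\varvec{\varepsilon }(\varvec{u}) : \Omega \rightarrow \mathbb {S}\) as

$$\begin{aligned} (\mathop {\mathbf {div}}\nolimits \varvec{\sigma })_i := \sum _{j=1}^d \partial _j \sigma _{ij}, \qquad (\nabla \varvec{u})_{ij} := \partial _j u_i, \qquad \varvec{\varepsilon }(\varvec{u}) := \frac{1}{2}\left[ \nabla \varvec{u}+ (\nabla \varvec{u})^{\mathtt {t}}\right] . \end{aligned}$$
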
Let D be a bounded polyhedral Lipschitz domain of \(\mathbb {R}^d\) \((d=2,3)\), with boundary \(\partial D\). For \(s\in \mathbb {R}\), \(H^s(D,E)\) stands for the usual Hilbertian Sobolev space of functions with domain D and values in E, where E is either \(\mathbb {R}\), \(\mathbb {R}^d\) or \(\mathbb {M}\). In the case \(E=\mathbb {R}\) we simply write \(H^s(D)\). The norm of \(H^s(D,E)\) is denoted \(\Vert \cdot \Vert _{s,D}\) indistinctly for \(E=\mathbb {R},\mathbb {R}^d,\mathbb {M}\). We use the convention \(H^0(D, E):=L^2(D,E)\) and let \((\cdot , \cdot )_D\) be the inner product in \(L^2(D, E)\), for \(E=\mathbb {R},\mathbb {R}^d,\mathbb {M}\), namely,

$$\begin{aligned} (u,v)_D := \int _D u\, v, \qquad (\varvec{u},\varvec{v})_D := \int _D \varvec{u}\cdot \varvec{v}, \qquad (\varvec{\sigma }, \varvec{\tau })_D := \int _D \varvec{\sigma }:\varvec{\tau }. \end{aligned}$$

We denote by \(H(\mathop {\mathbf {div}}\nolimits , D, \mathbb {M})\) the space of functions in \(L^2(D, \mathbb {M})\) with divergence in \(L^2(D, \mathbb {R}^d)\). We equip this Hilbert space with the norm \(\Vert \varvec{\tau }\Vert ^2_{\mathop {\mathbf {div}}\nolimits ,D}:=\Vert \varvec{\tau }\Vert _{0,D}^2+\Vert \mathop {\mathbf {div}}\nolimits \varvec{\tau }\Vert ^2_{0,D}\). Finally, \(H(\mathop {\mathbf {div}}\nolimits ^0, D, \mathbb {M})\) stands for the subspace of divergence free tensors in \(H(\mathop {\mathbf {div}}\nolimits , D, \mathbb {M})\), i.e.,

$$\begin{aligned} H(\mathop {\mathbf {div}}\nolimits ^0, D, \mathbb {M}) := \{\varvec{\tau }\in H(\mathop {\mathbf {div}}\nolimits , D, \mathbb {M}):\ \mathop {\mathbf {div}}\nolimits \varvec{\tau }= 0 \text { in } D\}. \end{aligned}$$

6.1 Stress formulation of the elasticity eigenproblem with reduced symmetry

6.1.1 The continuous problem

Let \(\Omega \subset \mathbb {R}^d\) (\(d=2,3\)) be a bounded Lipschitz polygon/polyhedron representing a linearly elastic body with mass density \(\varrho \in L^\infty (\Omega )\) satisfying \( \varrho (x) \ge \varrho _0>0 \quad \text {a.e. in }\Omega . \) For simplicity, we assume that the structure is fixed at the boundary \(\partial \Omega \). We denote by \(\mathcal {A}(\varvec{x}): \mathbb {M}\rightarrow \mathbb {M}\) the symmetric and positive-definite 4\(\mathrm{th}\)-order tensor (known as the compliance tensor) that relates the Cauchy stress tensor \(\varvec{\sigma }\) to the strain tensor through the linear material law \(\mathcal {A}(\varvec{x})\varvec{\sigma }=\varvec{\varepsilon }(\varvec{u})\).

Our aim is to find natural frequencies \(\omega \in \mathbb {R}\) such that \(\mathop {\mathbf {div}}\nolimits \varvec{\sigma }+ \omega ^2\varrho (\varvec{x}) \varvec{u}= 0\) in \(\Omega \). Here, we combine this equilibrium equation with the constitutive law to eliminate the displacement field \(\varvec{u}\) and retain \(\varvec{\sigma }\) as the primary variable. This procedure leads to the following grad-div eigensystem: find \(0 \ne \varvec{\sigma }:\Omega \rightarrow \mathbb {S}\), \(0 \ne \varvec{r}:\Omega \rightarrow \mathbb {K}\) and frequencies \(\omega \in \mathbb {R}\) such that,

Note that we have introduced above the skew-symmetric tensor \(\varvec{r}:=\frac{1}{2}\left[ \nabla \varvec{u}-(\nabla \varvec{u})^{\mathtt {t}}\right] \) (the rotation) by writing Hooke’s law as \(\mathcal {A}\varvec{\sigma }=\nabla \varvec{u}- \varvec{r}\). This additional unknown acts as a Lagrange multiplier for the symmetry constraint.
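Rewriting Hooke's law with the rotation relies only on the pointwise splitting of the displacement gradient into its symmetric part \(\varvec{\varepsilon }(\varvec{u})\) and its skew-symmetric part \(\varvec{r}\). The NumPy sketch below is a pointwise illustration only: the random matrix `grad_u` is a hypothetical stand-in for \(\nabla \varvec{u}\) at a single point with \(d=3\). It checks that \(\nabla \varvec{u}- \varvec{r}\) recovers \(\varvec{\varepsilon }(\varvec{u})\), so both forms of the material law agree.

```python
import numpy as np

def split_gradient(grad_u: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split a d x d displacement gradient into eps(u) (symmetric) and r (skew)."""
    eps = 0.5 * (grad_u + grad_u.T)   # linearized strain tensor
    r = 0.5 * (grad_u - grad_u.T)     # rotation, used as Lagrange multiplier
    return eps, r

rng = np.random.default_rng(1)
grad_u = rng.standard_normal((3, 3))  # hypothetical gradient at one point (d = 3)
eps, r = split_gradient(grad_u)

assert np.allclose(grad_u - r, eps)   # A sigma = grad u - r is the same law as A sigma = eps(u)
assert np.allclose(eps, eps.T)        # eps(u) takes values in the symmetric matrices S
assert np.allclose(r, -r.T)           # r takes values in the skew-symmetric matrices K
```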

The variational formulation of the spectral problem (6.1) can be cast into the abstract framework presented in Sect. 2 by defining problem (2.1) with \(\kappa = 1+\omega ^2\), \(H:= L^2(\Omega , \mathbb {M})\times L^2(\Omega , \mathbb {M})\), \(X:= H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\times L^2(\Omega , {\mathbb {K}})\) and with bounded and symmetric bilinear forms \(B:\ H\times H\rightarrow \mathbb {R}\), \(c:\ X\times X\rightarrow \mathbb {R}\) and \(A:\ X\times X\rightarrow \mathbb {R}\) given by

See [26] for more details. The Hilbert spaces H and X are endowed with the norms

We point out that the continuous inclusion \(X\hookrightarrow H\) is not compact.

We now verify Assumptions 1 and 2. We begin by noticing that

is not a finite-dimensional subspace of X. It is well known that the bilinear form \({(}\varvec{\tau }, (\varvec{v}, \varvec{s}){)} \mapsto {(}\mathop {\mathbf {div}}\nolimits \varvec{\tau }, \varvec{v}{)}_\Omega + {(}\varvec{\tau }, \varvec{s}{)}_\Omega \) satisfies the inf-sup condition for the pair \({\{}H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M}), L^2(\Omega , \mathbb {R}^d)\times L^2(\Omega , \mathbb {K}){\}}\), which can be equivalently formulated as follows (cf. [10, Proposition 2]).

Lemma 6.1

There exists a linear and bounded operator \(\Theta :\, L^2(\Omega , \mathbb {R}^d)\times L^2(\Omega , \mathbb {K}) \rightarrow H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\) such that

Corollary 6.1

Assumption 1 is satisfied.

Proof

It follows from Lemma 6.1 that

for all \(\varvec{s}\in L^2(\Omega , \mathbb {K})\). This means that the bilinear form \({(}\varvec{\tau }, \varvec{s}{)}_\Omega \) satisfies the inf-sup condition for the pair \({\{} H(\mathop {\mathbf {div}}\nolimits ^0, \Omega , \mathbb {M}), L^2(\Omega , \mathbb {K}){\}}\) and, a fortiori, for the pair \({\{} H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M}), L^2(\Omega , \mathbb {K}){\}}\). Moreover, \((\varvec{\sigma }, \varvec{\tau })\mapsto {(}\mathcal {A}\varvec{\sigma }, \varvec{\tau }{)}_\Omega \) is coercive on \(H(\mathop {\mathbf {div}}\nolimits ^0, \Omega , \mathbb {M})\), while \((\varvec{\sigma }, \varvec{\tau })\mapsto {(}\mathcal {A}\varvec{\sigma }, \varvec{\tau }{)}_\Omega + {(}\varrho ^{-1}\mathop {\mathbf {div}}\nolimits \varvec{\sigma }, \mathop {\mathbf {div}}\nolimits \varvec{\tau }{)}_\Omega \) is coercive on the whole space \(H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\). By virtue of the Babuška-Brezzi theory [11], for all \(L\in X'\), the saddle point problem: find \((\varvec{\sigma },\varvec{r})\in X\) such that

is well-posed, which implies that Assumption 1 (i) is satisfied. Likewise, for all \(L_0\in K'\), the saddle point problem: find \((\varvec{\sigma },\varvec{r})\in K= H(\mathop {\mathbf {div}}\nolimits ^0, \Omega , \mathbb {M})\times L^2(\Omega ,\mathbb {K})\) such that

is well-posed. Consequently, Assumption 1 (ii) also holds true. \(\square \)
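In finite dimensions, the Babuška-Brezzi conditions used in this proof reduce to two matrix statements: the constraint block has full row rank (discrete inf-sup condition) and the leading block is coercive on its kernel. The following toy sketch is not a discretization of (6.1); the matrices `A_blk` and `B_blk` are random, hypothetical stand-ins. It computes the inf-sup constant in Euclidean norms as the smallest singular value of `B_blk` and checks that the resulting saddle-point system is uniquely solvable.

```python
import numpy as np

rng = np.random.default_rng(2)
n, m = 10, 4                                    # primal / multiplier sizes (toy values)

# Leading block: symmetric positive definite, hence coercive on ker(B_blk).
G = rng.standard_normal((n, n))
A_blk = G @ G.T + np.eye(n)

# Constraint block with full row rank m (holds almost surely for random data).
B_blk = rng.standard_normal((m, n))

# Euclidean inf-sup constant: inf_q sup_v q^T B v / (|q| |v|) = smallest singular value.
beta = np.linalg.svd(B_blk, compute_uv=False).min()

# Saddle-point matrix [[A, B^T], [B, 0]]; well-posedness = unique solvability.
K_mat = np.block([[A_blk, B_blk.T], [B_blk, np.zeros((m, m))]])
rhs = rng.standard_normal(n + m)
sol = np.linalg.solve(K_mat, rhs)

print(f"inf-sup constant beta = {beta:.3e}")
print("residual norm:", np.linalg.norm(K_mat @ sol - rhs))
```

A vanishing `beta` would signal a rank-deficient constraint, in which case the block matrix becomes singular; this is the discrete counterpart of a failing inf-sup condition.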

Thanks to Corollary 6.1, we can define the source operator \(T:\, X\rightarrow X\) in terms of problem (2.2). We recall that \(\mathop {\mathrm {ker}}\nolimits (I - T)= K\) and that the symmetry of T with respect to \(B(\cdot , \cdot )\) yields \(T(K^{\bot _B}) \subset K^{\bot _B}\). In addition, the direct and stable splitting \(X = K\oplus K^{\bot _B}\) holds true. Our aim now is to characterize the unique projector \(P:X\rightarrow X\) with range \(K^{\bot _B}\) and kernel K associated to this splitting.

For any \((\varvec{\sigma }, \varvec{r})\in X\), we consider \(P(\varvec{\sigma }, \varvec{r}):=({\widetilde{\varvec{\sigma }}}, {\widetilde{\varvec{r}}})\) with \({\widetilde{\varvec{\sigma }}} = \mathcal {A}^{-1} \varvec{\varepsilon }({\widetilde{\varvec{u}}})\) and \({\widetilde{\varvec{r}}}:=\frac{1}{2}\left[ \nabla {\widetilde{\varvec{u}}} -(\nabla {\widetilde{\varvec{u}}})^{\mathtt {t}}\right] \), where \({\widetilde{\varvec{u}}}\) is the unique solution of the classical displacement-based variational formulation of the elasticity problem in \(\Omega \) with volume load \(\mathop {\mathbf {div}}\nolimits \varvec{\sigma }\), namely, \({\widetilde{\varvec{u}}}\in H^1_0(\Omega ,\mathbb {R}^d)\) solves

We point out that \(\mathop {\mathbf {div}}\nolimits {\widetilde{\varvec{\sigma }}} = \mathop {\mathbf {div}}\nolimits \varvec{\sigma }\) by construction. In addition, Korn’s inequality provides the stability estimate

which ensures the continuity of \(P:\, X\rightarrow X\). Now, it is clear that \(P\circ P = P\) and \(\mathop {\mathrm {ker}}\nolimits {P} = K\). Besides, for any \((\varvec{\sigma }, \varvec{r})\in X\),

which proves that \(P(X)\subset K^{\bot _B}\). Finally, we notice that \((I - P)X \subset K\). Since \(P(X)\subset K^{\bot _B}\), we also have \((I - P)K^{\bot _B}\subset K^{\bot _B}\), and therefore \((I - P)K^{\bot _B}\subset K\cap K^{\bot _B} = {\{}0{\}}\). Hence, \(K^{\bot _B} = P (K^{\bot _B}) + (I - P)K^{\bot _B} = P (K^{\bot _B}) \subset P(X)\). We conclude that \(P:X\rightarrow X\) is indeed the unique continuous projector corresponding to the direct and stable decomposition \(X = K\oplus K^{\bot _B}\).
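A finite-dimensional analogue may help to visualize P. Assume, as a simplification that does not hold in general (the form B here is only defined on H and may be degenerate), that B is represented by a symmetric positive definite Gram matrix `Bmat`, and that the columns of `Kbasis` span K. Then the projector with kernel K and range \(K^{\bot _B}\) is \(I - Q\), where Q is the B-orthogonal projector onto K. The sketch below uses random, hypothetical data and checks the properties used above: \(P\circ P = P\), \(K\subset \ker P\) and \(P(X)\subset K^{\bot _B}\).

```python
import numpy as np

rng = np.random.default_rng(3)
n, k = 7, 3

G = rng.standard_normal((n, n))
Bmat = G @ G.T + n * np.eye(n)          # SPD Gram matrix of B (simplifying assumption)
Kbasis = rng.standard_normal((n, k))    # columns span the subspace K

# B-orthogonal projector onto K: Q = Kb (Kb^T B Kb)^{-1} Kb^T B.
Q = Kbasis @ np.linalg.solve(Kbasis.T @ Bmat @ Kbasis, Kbasis.T @ Bmat)
P = np.eye(n) - Q                       # kernel K, range K^{perp_B}

assert np.allclose(P @ P, P)                    # P is a projector
assert np.allclose(P @ Kbasis, 0.0)             # K lies in ker P
assert np.allclose(Kbasis.T @ Bmat @ P, 0.0)    # P(X) is B-orthogonal to K
assert np.linalg.matrix_rank(P) == n - k        # dim P(X) = dim K^{perp_B}
```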

Lemma 6.2

Assumption 2 is satisfied.

Proof

Let \({\{}(\varvec{\sigma }_n,\varvec{r}_n){\}}_n\) be a weakly convergent sequence in X. As \(P\in {\mathcal {L}}(X)\), the sequence \({\{}({\widetilde{\varvec{\sigma }}}_n, {\widetilde{\varvec{r}}}_n){\}}_n:={\{}P((\varvec{\sigma }_n,\varvec{r}_n)){\}}_n\) is also weakly convergent in X. By definition, \({\widetilde{\varvec{\sigma }}}_n = \mathcal {A}^{-1} \varvec{\varepsilon }({\widetilde{\varvec{u}}}_n)\) and \({\widetilde{\varvec{r}}}_n:=\frac{1}{2}\left[ \nabla {\widetilde{\varvec{u}}}_n -(\nabla {\widetilde{\varvec{u}}}_n)^{\mathtt {t}}\right] \), where \({\widetilde{\varvec{u}}}_n\in H^1_0(\Omega , \mathbb {R}^d)\) solves (6.4) with right-hand side \(\mathop {\mathbf {div}}\nolimits \varvec{\sigma }_n\). It follows from (6.5) that \({\widetilde{\varvec{u}}}_n\) is bounded in \(H^1_0(\Omega , \mathbb {R}^d)\) and the compactness of the embedding \(H^1(\Omega ,\mathbb {R}^d) \hookrightarrow L^2(\Omega , \mathbb {R}^d)\) implies that \({\{}{\widetilde{\varvec{u}}}_n{\}}_n\) admits a subsequence (denoted again \({\{}{\widetilde{\varvec{u}}}_n{\}}_n\) ) that converges strongly in \(L^2(\Omega ,\mathbb {R}^d)\). Next, we deduce from the Green identity

that \({\{}{\widetilde{\varvec{\sigma }}}_n{\}}_n\) is a Cauchy sequence in \(L^2(\Omega , \mathbb {M})\). Moreover, the identity

and the inf-sup condition (6.3) yield

The last estimate ensures that \({\{}{\widetilde{\varvec{r}}}_n{\}}_n\) is also a Cauchy sequence in \(L^2(\Omega , \mathbb {M})\). Since every bounded sequence in X admits a weakly convergent subsequence, we conclude that the image under P of any bounded sequence in X contains a subsequence that converges in H, which proves that Assumption 2 (i) is satisfied.

On the other hand, testing (2.2) with \((\varvec{\tau }, 0)\) and choosing the components of \(\varvec{\tau }:\Omega \rightarrow \mathbb {M}\) infinitely differentiable and compactly supported in \(\Omega \), we readily obtain that, if \((\varvec{\sigma }^*, \varvec{r}^*):= T((\varvec{\sigma }, \varvec{r}))\), then

Consequently,

and the compactness of the embedding \(T(X)\cap P(X)\hookrightarrow X\) follows. We conclude that Assumption 2 (ii) is fulfilled. \(\square \)

Finally, we notice that for all \(0\ne (\varvec{\sigma }, \varvec{r})\in P(X)\),

where we used that \(\varvec{\sigma }\) is symmetric and that \({\{}0{\}}\times L^2(\Omega , \mathbb {K})\subset K\).

We can now invoke Theorem 2.1 and Remark 2.1 to obtain the following spectral characterization of the source operator T.

Proposition 6.1

The spectrum \(\mathop {\mathrm {sp}}\nolimits (T)\) of T is given by \(\mathop {\mathrm {sp}}\nolimits (T) = {\{}0, 1{\}} \cup {\{}\eta _k{\}}_{k\in \mathbb {N}}\), where \({\{}\eta _k{\}}_k\subset (0,1)\) is a sequence of finite-multiplicity eigenvalues of T that converges to 0. The ascent of each of these eigenvalues is 1 and the corresponding eigenfunctions lie in P(X). Moreover, \(\eta =1\) is an infinite-multiplicity eigenvalue of T with associated eigenspace K and \(\eta =0\) is not an eigenvalue.

6.1.2 The discrete problem

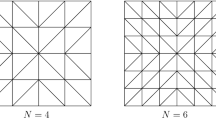

We consider a family \({\{}\mathcal {T}_h{\}}_h\) of shape regular simplicial meshes of \({\bar{\Omega }}\) satisfying the standard finite element conformity assumptions. We denote by \(h_K\) the diameter of triangles/tetrahedra \(K\in \mathcal {T}_h\) and let the parameter \(h:= \max _{K\in \mathcal {T}_h} \{h_K\}\) be the mesh size of \(\mathcal {T}_h\).

Hereafter, given an integer \(m\ge 0\) and \(D\subset \mathbb {R}^d\), \(\mathcal {P}_m(D,E)\) is the space of functions with domain D and values in E, where E is either \(\mathbb {R}\), \(\mathbb {R}^d\), \(\mathbb {M}\) or \(\mathbb {K}\), and whose scalar components are polynomials of degree at most m. Likewise, the spaces of E-valued functions with piecewise polynomial scalar components of degree \(\le m\) relative to \(\mathcal {T}_h\) are defined by

$$\begin{aligned} \mathcal {P}_m(\mathcal {T}_h,E):=\{ \varvec{v}\in L^2(\Omega ,E);\ \varvec{v}|_K\in \mathcal {P}_m(K,E)\quad \forall K\in \mathcal {T}_h\} . \end{aligned}$$

For \(k\ge 0\), we define Problem (3.1) with \(X_h:= \varvec{\mathcal {W}}_h\times \mathcal {P}_k(\mathcal {T}_h,\mathbb {K})\), where \(\varvec{\mathcal {W}}_h := \mathcal {P}_{k+1}(\mathcal {T}_h, \mathbb {M})\cap H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\). We point out that the set \({\{}\varvec{\mathcal {W}}_h, \mathcal {P}_k(\mathcal {T}_h,\mathbb {R}^d), \mathcal {P}_k(\mathcal {T}_h,\mathbb {K}){\}}\) constitutes the mixed finite element of Arnold-Falk-Winther [5] for linear elasticity. The key property ensuring the stability of this triplet of spaces is given by the following result (cf. [5, Theorem 7.1]).
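For orientation, the element-wise dimensions of the three polynomial spaces in this triplet follow from \(\mathop {\mathrm {dim}}\nolimits \mathcal {P}_m(K)=\binom{m+d}{d}\) on a d-simplex, multiplied by the number of scalar components of E (d for \(\mathbb {R}^d\), \(d^2\) for \(\mathbb {M}\), \(d(d-1)/2\) for \(\mathbb {K}\)). The helper below is only a counting sketch: it ignores the inter-element \(H(\mathop {\mathbf {div}}\nolimits )\)-conformity built into \(\varvec{\mathcal {W}}_h\), so it returns local dimensions on a single element, not global ones.

```python
from math import comb

def local_dim(m: int, d: int, target: str) -> int:
    """Dimension of P_m(K, E) on one d-simplex K, with E selected by `target`."""
    components = {"R^d": d, "M": d * d, "K": d * (d - 1) // 2}[target]
    return components * comb(m + d, d)

def afw_local_dims(k: int, d: int) -> dict[str, int]:
    """Element-wise dimensions of the stress, displacement and rotation spaces."""
    return {
        "stress": local_dim(k + 1, d, "M"),         # P_{k+1}(K, M)
        "displacement": local_dim(k, d, "R^d"),     # P_k(K, R^d)
        "rotation": local_dim(k, d, "K"),           # P_k(K, K)
    }

print(afw_local_dims(0, 2))  # {'stress': 12, 'displacement': 2, 'rotation': 1}
```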

Lemma 6.3

There exists a linear operator \(\Theta _h:\, \mathcal {P}_k(\mathcal {T}_h,\mathbb {R}^d) \times \mathcal {P}_k(\mathcal {T}_h,\mathbb {K}) \rightarrow \varvec{\mathcal {W}}_h\) that is uniformly bounded with respect to h and that satisfies

for all \((\varvec{v}_h, \varvec{s}_h)\in \mathcal {P}_k(\mathcal {T}_h,\mathbb {R}^d) \times \mathcal {P}_k(\mathcal {T}_h,\mathbb {K})\).

We point out that \(K_h = \varvec{\mathcal {W}}^0_h\times \mathcal {P}_k(\mathcal {T}_h, \mathbb {K}) \subset K\) where \(\varvec{\mathcal {W}}^0_h:= \varvec{\mathcal {W}}_h\cap H(\mathop {\mathbf {div}}\nolimits ^0,\Omega , \mathbb {M})\). The same procedure used in the proof of Corollary 6.1 can be used verbatim to deduce from Lemma 6.3 that Assumption 4 is satisfied. Moreover, as \(\{0\}\times \mathcal {P}_k(\mathcal {T}_h, \mathbb {K}) \subset K_h\), for all \(0\ne (\varvec{\sigma }_h, \varvec{r}_h)\in P_h(X_h)= K_h^{\bot _{B}}\) it holds

Consequently, the discrete counterpart of (6.6) is satisfied. Indeed, for all \(0\ne (\varvec{\sigma }_h, \varvec{r}_h)\in P_h(X_h)\) we have that

We deduce from Theorem 3.1 and Remark 3.1 the following result.

Proposition 6.2

The spectrum of \(T_h\) consists of \(m:=\mathop {\mathrm {dim}}\nolimits (X_h)\) eigenvalues, repeated according to their respective multiplicities. It holds \(\mathop {\mathrm {sp}}\nolimits (T_h)={\{}1{\}}\cup {\{}\eta _{hk}{\}}_{k=1}^{m_0}\), with \(m_0 = m - \mathop {\mathrm {dim}}\nolimits (K_h)\). The eigenspace associated to \(\eta _h=1\) is \(K_h\). The real numbers \(\eta _{hk}\in (0,1)\), \(k=1,\dots ,m_0\), are non-defective eigenvalues with eigenspaces lying in \(K_h^{\bot _B}\) and \(\eta _h=0\) is not an eigenvalue.
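The spectral structure in Proposition 6.2 can be mimicked with a small matrix toy. The sketch rests on hypothetical assumptions that are not stated in this section: the discrete form A splits as A = B + c with c symmetric positive semidefinite (suggested by the forms B and c introduced above together with \(\kappa =1+\omega ^2\)), A and B are positive definite, and \(T_h\) is characterized by \(A(T_h x, y) = B(x, y)\). Under these assumptions the eigenvalues of \(T_h\) are the generalized eigenvalues of \(Bx=\eta Ax\). They lie in \((0,1]\), and \(\eta =1\) occurs exactly on the kernel of c, the analogue of \(K_h\).

```python
import numpy as np

def source_operator_spectrum(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    """Eigenvalues eta of B x = eta A x (A SPD, B symmetric), computed through
    the Cholesky factor A = L L^T as the eigenvalues of L^{-1} B L^{-T}."""
    L = np.linalg.cholesky(A)
    L_inv = np.linalg.inv(L)
    return np.sort(np.linalg.eigvalsh(L_inv @ B @ L_inv.T))

rng = np.random.default_rng(0)
n, dim_kernel = 8, 3
G = rng.standard_normal((n, n))
B = G @ G.T + n * np.eye(n)             # SPD stand-in for the form B
V = rng.standard_normal((n - dim_kernel, n))
C = V.T @ V                             # PSD stand-in for c, 3-dimensional kernel
A = B + C                               # hypothetical splitting A = B + c
eta = source_operator_spectrum(A, B)
print(np.round(eta, 6))                 # three largest equal 1; the others lie in (0, 1)
```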

Let us now recall some well-known approximation properties of the finite element spaces introduced above. Given \(s>1/2\), the tensorial version of the canonical interpolation operator \(\Pi _h: H^s(\Omega , \mathbb {M}) \rightarrow \varvec{\mathcal {W}}_h\) associated with the Brezzi-Douglas-Marini (BDM) mixed finite element [12] satisfies the following classical error estimate (see [11, Proposition 2.5.4]):

Moreover, we have the well-known commutativity property,

where \(U_h\) stands for the \(L^2(\Omega , \mathbb {R}^d)\)-orthogonal projection onto \(\mathcal {P}_k(\mathcal {T}_h, \mathbb {R}^d)\). Therefore, if \(\mathop {\mathbf {div}}\nolimits \varvec{\tau }\in H^s(\Omega ,\mathbb {R}^d)\), we obtain

Finally, if \(S_h\) denotes the \(L^2(\Omega , \mathbb {M})\)-orthogonal projection onto \(\mathcal {P}_k(\mathcal {T}_h, \mathbb {K})\), then for any \(s>0\) it holds

We point out that one can actually extend the domain of the canonical interpolation operator \(\Pi _h\) to \(H(\mathop {\mathbf {div}}\nolimits ,\Omega ,\mathbb {M})\cap H^s(\Omega ,\mathbb {M})\), for any \(s>0\). In the case of a constant function \(\varrho \) and a constant tensor \(\mathcal {A}\), classical regularity results [15, 22] ensure the existence of \({\hat{s}}\in (0,1]\) (depending on \(\Omega \), on the boundary conditions and on the Lamé coefficients) such that the solution \(\widetilde{\varvec{u}}\) of problem (6.4) belongs to \(H^{1+s}(\Omega , \mathbb {R}^d)\cap H^1_0(\Omega , \mathbb {R}^d)\) for all \(s\in (0, {\hat{s}})\). This implies that \(P(X) \subset [H^s(\Omega , \mathbb {M})\cap H(\mathop {\mathbf {div}}\nolimits ,\Omega , \mathbb {M})]\times H^s(\Omega , \mathbb {M})\). In such a case, we can directly define the linear operator \(\Xi _h:\, K^{\bot _{B}} \rightarrow X_h\) by \(\Xi _h(\varvec{\sigma }, \varvec{r}):= (\Pi _h \varvec{\sigma }, S_h \varvec{r})\) and deduce (as shown below) that Assumption 5 is satisfied. However, instead of relying on regularity results that are difficult to establish for the elasticity system in the case of general domains, boundary conditions and material properties, we resort to the quasi-interpolation operator constructed in [13, 19, 25] by combining the BDM interpolation operator \(\Pi _h\) with a mollification technique. The resulting projector has domain \(H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\) and range \(\varvec{\mathcal {W}}_h\), and preserves all the properties of \(\Pi _h\) listed above. More precisely, we will use the tensorial version of the following result [19, Theorem 6.5] (see also [13, 25]).

Theorem 6.1

There exists a bounded and linear operator \({\mathcal {J}}_h:\, H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M}) \rightarrow \varvec{\mathcal {W}}_h\) such that

(i) \(\varvec{\mathcal {W}}_h\) is point-wise invariant under \({\mathcal {J}}_h\);

(ii) there exists \(C>0\) independent of h such that

$$\begin{aligned} \Vert \varvec{\sigma }- {\mathcal {J}}_h \varvec{\sigma }\Vert _{0,\Omega }\le C \displaystyle \inf _{\varvec{\tau }_h\in \varvec{\mathcal {W}}_h} \Vert \varvec{\sigma }- \varvec{\tau }_h\Vert _{0,\Omega },\quad \forall \varvec{\sigma }\in H(\mathop {\mathbf {div}}\nolimits , \Omega ,\mathbb {M}); \end{aligned}$$

(iii) \(\mathop {\mathbf {div}}\nolimits {\mathcal {J}}_h \varvec{\sigma }= U_h \mathop {\mathbf {div}}\nolimits \varvec{\sigma }\) for all \(\varvec{\sigma }\in H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\).

Lemma 6.4

The linear operator \(\Xi _h:\, X \rightarrow X_h\) defined by \(\Xi _h(\varvec{\sigma }, \varvec{r}):= ({\mathcal {J}}_h \varvec{\sigma }, S_h \varvec{r})\) satisfies Assumption 5.

Proof

For an arbitrary \((\varvec{\sigma }_h, \varvec{r}_h)\in X_h\) we let \(({\widetilde{\varvec{\sigma }}}, {\widetilde{\varvec{r}}} ) = P (\varvec{\sigma }_h, \varvec{r}_h) \in K^{\bot _{B}}\). It holds

Indeed, by virtue of property (iii) of Theorem 6.1 and because \(\mathop {\mathbf {div}}\nolimits {\widetilde{\varvec{\sigma }}} = \mathop {\mathbf {div}}\nolimits \varvec{\sigma }_h\), we have that

This proves Assumption 5 (iii). On the other hand, given an arbitrary \((\varvec{\sigma }, \varvec{r})\in X\) and \(({\widetilde{\varvec{\sigma }}}, {\widetilde{\varvec{r}}} ) = P (\varvec{\sigma }, \varvec{r})\in K^{\bot _{B}}\), property (ii) of Theorem 6.1 yields

and again by property (iii) of Theorem 6.1

It follows immediately from (6.12)–(6.13) and the triangle inequality that Assumption 5 (i) is satisfied. Moreover, the error estimates (6.8), (6.10) and (6.11) and classical density results ensure that Assumption 5 (ii) is a consequence of (6.13). \(\square \)

We conclude that the Galerkin method (3.1) provides a correct spectral approximation of the eigenproblem (6.1) in the sense of Theorem 4.1.

We recall that \(\eta \in (0,1)\) is an eigenvalue of T with multiplicity m if and only if \(\kappa = 1/\eta \) is an eigenvalue of problem (2.1) with the same multiplicity, and that the corresponding eigenfunctions coincide. Analogously, \(\eta _{i,h}\), \(i=1,\ldots , m\), are the eigenvalues of \(T_h\) (repeated according to their respective multiplicities) that converge to \(\eta \) if and only if \(\kappa _{i,h} = 1/\eta _{i,h}\) are the eigenvalues of problem (3.1) converging to \(\kappa \). Moreover, the corresponding eigenfunctions coincide. Taking into account that Assumption 6 is satisfied because of (6.6), the following rates of convergence for the eigenfunctions and eigenvalues are a direct consequence of Theorem 5.1, (6.6) and Theorem 5.2, together with the interpolation error estimates (6.8), (6.10) and (6.11).
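Since \(\kappa = 1+\omega ^2\) for the elasticity eigenproblem, the correspondence recalled above can be written directly in terms of natural frequencies. The display below is an elementary consequence of \(\kappa = 1/\eta \) and \(\kappa = 1+\omega ^2\) (writing \(\kappa _{i,h} = 1+\omega _{i,h}^2\) at the discrete level). It shows that the eigenvalues \(\eta \in (0,1)\) of T correspond to nonzero frequencies, that \(\eta _k\rightarrow 0\) corresponds to \(\omega _k^2\rightarrow \infty \), and that the value \(\eta =1\) (the eigenspace K) corresponds to \(\omega =0\):

$$\begin{aligned} \eta =\frac{1}{\kappa }=\frac{1}{1+\omega ^2}\in (0,1)\iff \omega ^2=\frac{1}{\eta }-1>0,\qquad \omega _{i,h}^2=\frac{1}{\eta _{i,h}}-1,\quad i=1,\ldots ,m. \end{aligned}$$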

Theorem 6.2

Let \(\eta \ne 1\) be an eigenvalue of T of algebraic multiplicity m and let \(\mathcal {E}_\eta (X)\) be the corresponding eigenspace. There exists \(h_0>0\) such that for all \(h\le h_0\), \(T_h\) admits exactly m eigenvalues \(\eta _{i,h}\), \(i=1,\ldots , m\), repeated according to their respective multiplicity, such that

where \(\mathcal {E}_{\eta , h}(X_h)\) is the \(T_h\)-invariant subspace of \(X_h\) spanned by the eigenspaces of \({\{}\eta _{i, h},\ i = 1,\ldots , m{\}}\). Moreover, if we assume that the eigenfunctions are piecewise regular with respect to a partition \(\overline{\Omega }=\cup _{j=1}^J\overline{\Omega }_j\) of \(\Omega \) into polyhedral/polygonal subdomains \(\Omega _j\), namely, if there exists \(r>1/2\) such that

then, for h small enough,

Remark 6.1

For the sake of brevity and clarity of exposition, we only considered here an approximation based on the Arnold-Falk-Winther element [5]. However, we could equally have defined the Galerkin method based on the families of mixed finite elements introduced by Cockburn-Gopalakrishnan-Guzmán (CGG(k)) in [14] and by Gopalakrishnan-Guzmán (GG(k)) in [21] for \(k\ge 1\). Indeed, the same BDM quasi-interpolation operator \({\mathcal {J}}_h\) can be used in the proof of Assumption 5 for GG(k), while the quasi-interpolation operator corresponding to the Raviart-Thomas element can be used instead for CGG(k).

6.2 Stress formulation of the Stokes eigenproblem with reduced symmetry

6.2.1 The continuous problem

We are interested in the following eigenvalue problem for the Stokes system posed in a connected Lipschitz domain \(\Omega \subset \mathbb {R}^d\): find \((\lambda ,\varvec{u}, p)\), with \(\varvec{u}\ne 0\) and \(\lambda \in \mathbb {R}\) such that,

It is interesting to notice that, in the two dimensional case, problem (6.15) is equivalent to the following buckling problem for a uniformly compressed clamped Kirchhoff plate (see [29]): find \(\lambda \in \mathbb {R}\) and \(0\ne \psi \in H_0^2(\Omega )\) such that

where \(\varvec{n}\) represents the unit outward normal vector to \(\partial \Omega \). The eigenvalue \(2\lambda \) represents in this case the buckling coefficient. The plate transverse displacement \(\psi \) is related to the Stokes velocity field by \(\frac{1}{2}\varvec{u}= \mathbf {curl}\, \psi := (\partial _2 \psi , -\partial _1 \psi )^\texttt {t}\).

Our aim is to employ a dual-mixed approach to derive a variational formulation of this problem. To this end, we introduce the stress tensor \(\varvec{\sigma }:=\varvec{\varepsilon }(\varvec{u}) - p I\) and notice that the incompressibility condition implies that \(\varvec{\sigma }^\mathtt {D}= \nabla \varvec{u}- \varvec{r}\), with \(\varvec{r}:=\frac{1}{2}\left[ \nabla \varvec{u}-(\nabla \varvec{u})^{\mathtt {t}}\right] \). We can then reformulate the problem above in terms of \(\varvec{\sigma }\) as follows: find \(\lambda \in \mathbb {R}\), \(0\ne \varvec{\sigma }:\Omega \rightarrow \mathbb {S}\), and \(0\ne \varvec{r}:\Omega \rightarrow \mathbb {K}\) such that,

We point out that the pressure p and the velocity field \(\varvec{u}\) have been eliminated from the formulation, but once \(\varvec{\sigma }\) is known they can be recovered (and also post-processed at the discrete level) by \(\varvec{u}= -\frac{1}{\lambda }\mathop {\mathbf {div}}\nolimits \varvec{\sigma }\) and \(p=-\frac{1}{d}\mathop {\mathrm {tr}}\nolimits (\varvec{\sigma })\).
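Both the identity \(\varvec{\sigma }^\mathtt {D}= \nabla \varvec{u}- \varvec{r}\) and the pressure recovery formula follow from \(\mathop {\mathrm {div}}\nolimits \varvec{u}= \mathop {\mathrm {tr}}\nolimits \varvec{\varepsilon }(\varvec{u}) = 0\). Writing the deviatoric part in its usual form \(\varvec{\sigma }^\mathtt {D}:= \varvec{\sigma }- \frac{1}{d}\mathop {\mathrm {tr}}\nolimits (\varvec{\sigma })I\), taking traces in \(\varvec{\sigma }=\varvec{\varepsilon }(\varvec{u}) - pI\) gives

$$\begin{aligned} \mathop {\mathrm {tr}}\nolimits (\varvec{\sigma })&= \mathop {\mathrm {tr}}\nolimits \varvec{\varepsilon }(\varvec{u}) - p\,\mathop {\mathrm {tr}}\nolimits (I) = \mathop {\mathrm {div}}\nolimits \varvec{u}- d\,p = -d\,p \quad \Longrightarrow \quad p=-\tfrac{1}{d}\mathop {\mathrm {tr}}\nolimits (\varvec{\sigma }),\\ \varvec{\sigma }^\mathtt {D}&= \varvec{\varepsilon }(\varvec{u}) - pI + pI = \varvec{\varepsilon }(\varvec{u}) = \nabla \varvec{u}- \varvec{r}. \end{aligned}$$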

The variational formulation (6.16) fits into the abstract framework of Sect. 2 by taking in problem (2.1) \(\kappa = 1 + \lambda \), \(H:= L^2(\Omega , \mathbb {M})\times L^2(\Omega , \mathbb {M})\), \(X:= H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\times L^2(\Omega , {\mathbb {K}})\) (endowed with their natural norms (6.2)) and by defining the bounded and symmetric bilinear forms \(B:\ H\times H\rightarrow \mathbb {R}\), \(c:\ X\times X \rightarrow \mathbb {R}\) and \(A:\ X\times X \rightarrow \mathbb {R}\) as follows:

We point out that testing problem (2.1) with \((I, 0)\in X\) gives \({(}\mathop {\mathrm {tr}}\nolimits (\varvec{\sigma }),1{)}_\Omega = 0\) for \(\kappa \ne 1\). This corresponds to the zero mean value restriction on the pressure \(p\in L^2_0(\Omega ):= {\{}q\in L^2(\Omega );\ (q,1)_\Omega = 0{\}}\) that is usually imposed to enforce uniqueness. Here, we opt for a variational insertion of this restriction in order to free the space X from the cumbersome constraint \({(}\mathop {\mathrm {tr}}\nolimits (\varvec{\tau }), 1{)}_\Omega =0\).

The following Poincaré-Friedrichs inequality is essential in our analysis.

Lemma 6.5

There exists \(\alpha >0\) depending only on \(\Omega \) such that

Proof

See [11, Proposition 9.1.1]. \(\square \)

Corollary 6.2

The bilinear form

is coercive on \(H(\mathop {\mathbf {div}}\nolimits ,\Omega ,\mathbb {M})\).

Proof

Using the \(L^2(\Omega ,\mathbb {M})\)-orthogonal decomposition \(\varvec{\tau }= \varvec{\tau }_0 + \frac{1}{d|\Omega |} {(}\mathop {\mathrm {tr}}\nolimits (\varvec{\tau }), 1{)}_\Omega I\), we deduce from Lemma 6.5 that

and the result follows. \(\square \)
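The orthogonality behind this decomposition is a one-line computation. With \(\varvec{\tau }_0 := \varvec{\tau }- \frac{1}{d|\Omega |} {(}\mathop {\mathrm {tr}}\nolimits (\varvec{\tau }), 1{)}_\Omega I\) and \((I, I)_\Omega = d|\Omega |\), one has

$$\begin{aligned} {(}\mathop {\mathrm {tr}}\nolimits (\varvec{\tau }_0), 1{)}_\Omega = (\varvec{\tau }_0, I)_\Omega = {(}\mathop {\mathrm {tr}}\nolimits (\varvec{\tau }), 1{)}_\Omega - \frac{{(}\mathop {\mathrm {tr}}\nolimits (\varvec{\tau }), 1{)}_\Omega }{d|\Omega |}\,(I,I)_\Omega = 0, \end{aligned}$$

so \(\varvec{\tau }_0\) has vanishing mean trace and is \(L^2(\Omega ,\mathbb {M})\)-orthogonal to every constant multiple of I.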

We point out that Corollary 6.2 also implies the coercivity of the bilinear form

on \(H(\mathop {\mathbf {div}}\nolimits ^0,\Omega , \mathbb {M})\). Consequently, following the steps of the proof of Corollary 6.1, we deduce that Assumption 1 is satisfied. Hence, the source operator \(T:\, X \rightarrow X\) defined by problem (2.2) is bounded and symmetric with respect to \(B(\cdot , \cdot )\). In addition, we have the direct and stable splitting \(X = K\oplus K^{\bot _B}\), where \(K := H(\mathop {\mathbf {div}}\nolimits ^0, \Omega , \mathbb {M})\times L^2(\Omega , \mathbb {K})\) is the eigenspace corresponding to the essential eigenvalue \(\eta =1\) of T. We also have that \(T(K^{\bot _B}) \subset K^{\bot _B}\). Note that T is not one-to-one. Indeed, if \((\varvec{\sigma }, \varvec{r})\in X\) is such that \(B{(}(\varvec{\sigma }, \varvec{r}), (\varvec{\tau }, \varvec{s}){)} = 0\) for all \((\varvec{\tau }, \varvec{s})\in X\), then \(\varvec{\sigma }= \varvec{\sigma }^\texttt {t}\), \({(}\varvec{\sigma }, 1{)}_\Omega = 0\) and

Taking \(\varvec{\tau }= \varvec{\sigma }^\mathtt {D}\) in (6.18) we deduce that \(\varvec{\sigma }^\mathtt {D}= 0\). This condition holds true if and only if \(\varvec{\sigma }=p I\), with \(p=\frac{1}{d}\mathop {\mathrm {tr}}\nolimits (\varvec{\sigma })\in L^2(\Omega )\) and \(\nabla p=\mathop {\mathbf {div}}\nolimits \varvec{\sigma }\in L^2(\Omega , \mathbb {R}^d)\). Testing now (6.18) with skew symmetric tensors and using a density argument we deduce that \(\varvec{r}= 0\). It follows that

is the eigenspace corresponding to the eigenvalue \(\eta = 0\) of T.

The characterization of the projector \(P:X\rightarrow X\) associated with this decomposition follows the same pattern as in the previous section. For any \((\varvec{\sigma }, \varvec{r})\in X\), we consider \(P(\varvec{\sigma }, \varvec{r})=({\widetilde{\varvec{\sigma }}}, {\widetilde{\varvec{r}}})\in X\) with \({\widetilde{\varvec{\sigma }}} :=\varvec{\varepsilon }({\tilde{\varvec{u}}}) - {\tilde{p}} I\) and \({\widetilde{\varvec{r}}} := \frac{1}{2}\left[ \nabla {\tilde{\varvec{u}}}-(\nabla {\tilde{\varvec{u}}})^{\mathtt {t}}\right] \), where \(({\tilde{\varvec{u}}}, {\tilde{p}})\in H^1_0(\Omega ,\mathbb {R}^d)\times L^2_0(\Omega )\) is the unique solution of the classical velocity-pressure variational formulation of the Stokes problem in \(\Omega \) with load \(-\mathop {\mathbf {div}}\nolimits \varvec{\sigma }\), i.e.,

It is known [11] that there exists a constant \(C>0\) such that

moreover, \(\mathop {\mathbf {div}}\nolimits {\widetilde{\varvec{\sigma }}} = \mathop {\mathbf {div}}\nolimits \varvec{\sigma }\) by construction. This ensures the continuity of \(P:\, X\rightarrow X\). Besides, it is clear that \(P\circ P = P\), \(\mathop {\mathrm {ker}}\nolimits {P} = K\), and for any \((\varvec{\sigma }, \varvec{r})\in X\),

which proves that \(P(X)\subset K^{\bot _B}\). The inclusion \(K^{\bot _B}\subset P(X)\) is a consequence of \((I - P)X \subset K\). We conclude that \(P:X\rightarrow X\) is the unique continuous projector associated to the direct and stable decomposition \(X = K\oplus K^{\bot _B}\).

Lemma 6.6

Assumption 2 is satisfied.

Proof

Let \({\{}(\varvec{\sigma }_n, \varvec{r}_n){\}}_n\) be a weakly convergent sequence in X. The continuity of \(P:\, X\rightarrow X\) implies that \({\{}({\widetilde{\varvec{\sigma }}}_n, {\widetilde{\varvec{r}}}_n){\}}_n := {\{}P(\varvec{\sigma }_n, \varvec{r}_n){\}}_n = {\{}\left( \varvec{\varepsilon }({\tilde{\varvec{u}}}_n) -{\tilde{p}}_n I, \frac{1}{2}\left[ \nabla {\tilde{\varvec{u}}}_n-(\nabla {\tilde{\varvec{u}}}_n)^{\mathtt {t}}\right] \right) {\}}_n\) also converges weakly in X, where \(({\tilde{\varvec{u}}}_n, {\tilde{p}}_n)\in H^1_0(\Omega , \mathbb {R}^d)\times L^2_0(\Omega )\) solves (6.19) with datum \(-\mathop {\mathbf {div}}\nolimits \varvec{\sigma }_n\). As a consequence of (6.20), \({\{}{\tilde{\varvec{u}}}_n{\}}_n\) is bounded in \(H^1(\Omega , \mathbb {R}^d)\). In turn, we deduce from the identities \(-\nabla {\tilde{p}}_n = \mathop {\mathbf {div}}\nolimits {\widetilde{\varvec{\sigma }}}_n = \mathop {\mathbf {div}}\nolimits \varvec{\sigma }_n\) that \({\{}{\tilde{p}}_n{\}}_n\) is also bounded in \(H^1(\Omega )\). The compactness of the embedding \(H^1(\Omega ,\mathbb {R}^d)\times H^1(\Omega ) \hookrightarrow L^2(\Omega , \mathbb {R}^d)\times L^2(\Omega )\) implies that \({\{}({\tilde{\varvec{u}}}_n, {\tilde{p}}_n){\}}_n\) admits a subsequence (still denoted \({\{}({\tilde{\varvec{u}}}_n, {\tilde{p}}_n){\}}_n\)) that converges strongly in \(L^2(\Omega , \mathbb {R}^d)\times L^2(\Omega )\). Next, it follows from (6.19) and a Green formula that

Taking \(\varvec{v}= {\tilde{\varvec{u}}}_p - {\tilde{\varvec{u}}}_q\) in the last identity yields

which proves that \({\{}\varvec{\varepsilon }( {\tilde{\varvec{u}}}_n){\}}_n\) is a Cauchy sequence in \(L^2(\Omega , \mathbb {M})\). Moreover, testing the identity \(\nabla {(}{\tilde{\varvec{u}}}_p - {\tilde{\varvec{u}}}_q{)} = ({\widetilde{\varvec{\sigma }}}_p - {\widetilde{\varvec{\sigma }}}_q)^\mathtt {D}+ {\widetilde{\varvec{r}}}_p - {\widetilde{\varvec{r}}}_q\) with \(\varvec{\tau }\in H(\mathop {\mathbf {div}}\nolimits ^0, \Omega , \mathbb {M})\) yields

We can now use the inf-sup condition (6.3) as in the proof of Lemma 6.2 to deduce that \({\{}{\widetilde{\varvec{r}}}_n{\}}_n\) is also a Cauchy sequence in \(L^2(\Omega , \mathbb {M})\). We conclude that \({\{}({\widetilde{\varvec{\sigma }}}_n, {\widetilde{\varvec{r}}}_n){\}}_n := {\{}P(\varvec{\sigma }_n, \varvec{r}_n){\}}_n\) admits a subsequence that converges strongly in H and Assumption 2 (i) follows.

Let us denote by \((\varvec{\sigma }^*,\varvec{r}^*):= T(\varvec{\sigma }, \varvec{r})\) the image of any \((\varvec{\sigma }, \varvec{r})\in X\) by the source operator T. Testing (2.2) with \((I, 0)\in X\) we deduce that \({(}\varvec{\sigma }^*,1{)}_\Omega = {(}\varvec{\sigma },1{)}_\Omega \). Testing now the same equation with \((\varvec{\tau }, 0)\), and choosing the entries of \(\varvec{\tau }:\Omega \rightarrow \mathbb {M}\) infinitely differentiable and compactly supported in \(\Omega \), we deduce that

Therefore, the subspace

is compactly embedded in X and Assumption 2 (ii) is also satisfied. \(\square \)

Before we provide the spectral description of T given by Theorem 2.1, we point out that if \(\eta \notin {\{}0,1{\}}\) is an eigenvalue of T with eigenspace \(\mathcal {E}_\eta (X)\), then

where we used that \(\varvec{\sigma }\) is symmetric and satisfies the restriction \({(}\varvec{\sigma }, 1{)}_\Omega = 0\) together with the fact that \({\{}0{\}}\times L^2(\Omega , \mathbb {K})\subset K\) and \(\mathcal {E}_\eta (X)\not \subset \ker (T)\).

Proposition 6.3

The spectrum \(\mathop {\mathrm {sp}}\nolimits (T)\) of T admits the decomposition \(\mathop {\mathrm {sp}}\nolimits (T) = {\{}0, 1{\}} \cup {\{}\eta _k{\}}_{k\in \mathbb {N}}\), where \({\{}\eta _k{\}}_k\subset (0,1)\) is a sequence of finite-multiplicity eigenvalues of T that converges to 0. The ascent of each of these eigenvalues is 1 and the corresponding eigenfunctions lie in P(X). Moreover, \(\eta =1\) is an infinite-multiplicity eigenvalue of T with associated eigenspace K and \(\eta =0\) is an eigenvalue of T with eigenspace \(\ker (T)\).

6.2.2 The discrete problem

The Galerkin scheme (3.1) is based on the same finite element spaces used in the previous section. Namely, for \(k\ge 0\), we take \(X_h:= \varvec{\mathcal {W}}_h\times \mathcal {P}_k(\mathcal {T}_h,\mathbb {K})\), with \(\varvec{\mathcal {W}}_h := \mathcal {P}_{k+1}(\mathcal {T}_h, \mathbb {M})\cap H(\mathop {\mathbf {div}}\nolimits , \Omega , \mathbb {M})\) and here again \(K_h = \varvec{\mathcal {W}}^0_h\times \mathcal {P}_k(\mathcal {T}_h, \mathbb {K}) \subset K\). It follows easily from Lemma 6.3 that Assumption 4 holds true.

Proposition 6.4

The spectrum of \(T_h\) consists of \(m:=\mathop {\mathrm {dim}}\nolimits (X_h)\) eigenvalues, repeated according to their respective multiplicities. It holds \(\mathop {\mathrm {sp}}\nolimits (T_h)={\{}1{\}}\cup {\{}\eta _{hk}{\}}_{k=1}^{m_0}\), with \(m_0 = m - \mathop {\mathrm {dim}}\nolimits (K_h)\). The eigenspace associated to \(\eta _h=1\) is \(K_h\). The eigenvalues \(\eta _{hk}\notin {\{}0,1{\}}\) are non-defective and the corresponding eigenspaces lie in \(K_h^{\bot _B}\). Moreover, \(\eta _h=0\) is an eigenvalue with eigenspace \(\ker (T_h)\).

Remark 6.2

It is straightforward to check that \(\ker (T_h)= {\{}q_hI;\ q_h \in V_h{\}}\times {\{}0{\}}\), where \(V_h := \mathcal {P}_{k+1}(\mathcal {T}_h)\cap H^1(\Omega )\cap L_0^2(\Omega )\) is the space of continuous piecewise polynomial functions of degree \(\le k+1\) with zero mean value.
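In two dimensions the size of \(\ker (T_h)\) can therefore be read off the mesh. The sketch below assumes the standard degree-of-freedom count for continuous Lagrange elements of degree \(m=k+1\ge 1\) on a conforming triangulation (one value per vertex, \(m-1\) per edge, \((m-1)(m-2)/2\) per triangle) and subtracts one for the zero-mean constraint. The function name and the mesh counts in the usage line are hypothetical illustrations.

```python
def dim_kernel_Th_2d(num_vertices: int, num_edges: int, num_triangles: int, k: int) -> int:
    """dim ker(T_h) = dim V_h, V_h = P_{k+1}(T_h) ∩ H^1(Omega) ∩ L^2_0(Omega), d = 2."""
    m = k + 1                                    # polynomial degree of V_h
    lagrange_dim = (num_vertices
                    + (m - 1) * num_edges
                    + (m - 1) * (m - 2) // 2 * num_triangles)
    return lagrange_dim - 1                      # the zero-mean constraint removes one dof

# Unit square split into two triangles: 4 vertices, 5 edges, 2 triangles.
print([dim_kernel_Th_2d(4, 5, 2, k) for k in range(3)])  # [3, 8, 15]
```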

We define \(\Xi _h:\, X \rightarrow X_h\) as in Lemma 6.4, which ensures Assumption 5 and guarantees in turn that the Galerkin method (3.1) provides a correct spectral approximation of the stress formulation (6.16) of the Stokes eigenproblem in the sense of Theorem 4.1. Moreover, Assumption 6 is satisfied thanks to (6.21). We can then rely on Theorem 5.2 to obtain the following rates of convergence for the eigenfunctions and eigenvalues.

Theorem 6.3

Let \(\eta \notin {\{}0,1{\}}\) be an eigenvalue of T of algebraic multiplicity m and let \(\mathcal {E}_\eta (X)\) be the corresponding eigenspace. There exists \(h_0>0\) such that for all \(h\le h_0\), \(T_h\) admits eigenvalues \(\eta _{i,h}\), \(i=1,\ldots , m\), repeated according to their respective multiplicities, such that

where \(\mathcal {E}_{\eta , h}(X_h)\) is the \(T_h\)-invariant subspace of \(X_h\) spanned by the eigenspaces of \({\{}\eta _{i, h},\ i = 1,\ldots , m{\}}\). Moreover, under the piecewise regularity assumption

for h small enough,

Remark 6.3

The analysis given in this section can be adapted to deal with Dirichlet-Neumann boundary conditions for eigenproblems (6.1) and (6.15) by defining the space X as in [26] and by employing the quasi-interpolation operator with partial boundary conditions recently introduced by Licht in [25, Theorem 6.3].

6.2.3 Numerical results

On the unit disk, the eigenvalues of the Stokes eigenproblem (6.15) are given by the sequence \({\{}\tfrac{1}{2}\jmath _{n\ell }^2{\}}_{n\ge 1,\, \ell \ge 1}\), where \(\jmath _{n\ell }\) is the \(\ell \)-th positive zero of the Bessel function \(J_n\) of the first kind of order n. Accurate approximations of the first four eigenvalues are given by

We use the open-source finite element software Netgen/NGSolve [30] to implement the Galerkin method (3.1) for the Stokes eigenproblem (6.15). We take advantage of the Netgen/NGSolve support for curved mixed finite elements of arbitrary order to construct \(X_h\) from H(div)-conforming parametric BDM elements associated with an exact triangulation \(\mathcal {T}_h\) of the unit disk. Note that this leads to a conforming finite element approximation \(X_h\) of X.
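The reference values \(\tfrac{1}{2}\jmath _{n\ell }^2\) on the unit disk can be reproduced independently of any finite element code. The pure-Python sketch below is our own illustration, not the procedure used to produce the accurate values quoted above: it evaluates \(J_n\) through Bessel's integral \(J_n(x)=\frac{1}{\pi }\int _0^\pi \cos (n\tau - x\sin \tau )\,d\tau \) with the periodic trapezoidal rule, locates the zeros by a sign scan followed by bisection, and returns the smallest distinct values of the sequence. How these values map onto the numbered eigenvalues \(\lambda _j\) depends on multiplicities, which the sketch does not attempt to resolve. With SciPy available, `scipy.special.jn_zeros(n, nt)` could replace the hand-written zero finder.

```python
from __future__ import annotations

import math


def bessel_j(n: int, x: float, m: int = 128) -> float:
    """J_n(x) from Bessel's integral (1/(2 pi)) * int_0^{2 pi} cos(n t - x sin t) dt.

    The integrand is smooth and 2*pi-periodic, so the trapezoidal rule with m
    equispaced nodes converges exponentially fast in m.
    """
    h = 2.0 * math.pi / m
    return sum(math.cos(n * j * h - x * math.sin(j * h)) for j in range(m)) / m


def bessel_zeros(n: int, count: int, step: float = 0.1) -> list[float]:
    """First `count` positive zeros of J_n (n >= 1): sign scan plus bisection."""
    zeros = []
    a = float(n)  # j_{n,1} > n, so the scan starts past the trivial zero at x = 0
    fa = bessel_j(n, a)
    while len(zeros) < count:
        b = a + step
        fb = bessel_j(n, b)
        if fa * fb < 0.0:  # sign change: exactly one zero in (a, b), zeros are ~pi apart
            lo, hi, flo = a, b, fa
            for _ in range(60):
                mid = 0.5 * (lo + hi)
                fmid = bessel_j(n, mid)
                if flo * fmid <= 0.0:
                    hi = mid
                else:
                    lo, flo = mid, fmid
            zeros.append(0.5 * (lo + hi))
        a, fa = b, fb
    return zeros


def stokes_disk_eigenvalues(how_many: int = 4, n_max: int = 6, l_max: int = 3) -> list[float]:
    """Smallest values of the sequence {j_{n,l}^2 / 2 : n >= 1, l >= 1}.

    n_max and l_max truncate the double sequence; they must be large enough that
    no smaller value is left out (true for how_many = 4 with the defaults).
    """
    values = sorted(0.5 * z * z for n in range(1, n_max + 1) for z in bessel_zeros(n, l_max))
    return values[:how_many]


for j, lam in enumerate(stokes_disk_eigenvalues(), start=1):
    print(f"{j}: {lam:.6f}")
```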

We denote by \(\lambda _{jh}\) the approximation of \(\lambda _j\) computed by solving problem (3.1) with A and B given by (6.17). We introduce the experimental rates of convergence

where h and \({\hat{h}}\) are two consecutive mesh sizes.
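The defining formula for the experimental rates is not reproduced here. A sketch under the assumption that it is the usual one, \(r = \log (e_h/e_{{\hat{h}}})/\log (h/{\hat{h}})\) with \(e_h := |\lambda _j - \lambda _{jh}|\), computes the rate for each pair of consecutive meshes and then their arithmetic mean, which is the quantity compared with the predicted order \(2(k+1)\). The function name is hypothetical, and the data in the usage lines are synthetic, not the values of Table 1.

```python
import math


def experimental_rates(lam_exact: float, mesh_sizes: list, lam_h: list) -> list:
    """Rates r = log(e_h / e_hhat) / log(h / hhat) for consecutive mesh sizes h > hhat.

    Assumed definition: e_h = |lam_exact - lam_h| for one fixed eigenvalue index j.
    """
    if len(mesh_sizes) != len(lam_h) or len(mesh_sizes) < 2:
        raise ValueError("need at least two meshes and one computed eigenvalue per mesh")
    errors = [abs(lam_exact - lam) for lam in lam_h]
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(mesh_sizes[i] / mesh_sizes[i + 1])
        for i in range(len(errors) - 1)
    ]


# Synthetic data (not Table 1): errors behaving like 3 h^4, i.e. k + 1 = 2, 2(k + 1) = 4.
lam = 7.34
hs = [1 / 4, 1 / 8, 1 / 16, 1 / 32]
lam_hs = [lam + 3.0 * h ** 4 for h in hs]
rates = experimental_rates(lam, hs, lam_hs)
print(rates, sum(rates) / len(rates))  # per-pair rates and their arithmetic mean
```

Averaging the per-pair rates smooths out pre-asymptotic effects on the coarsest meshes, at the price of hiding them; inspecting the individual rates alongside the mean is a cheap safeguard.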

We present in Table 1 the first four eigenvalues computed on a series of exact partitions of the unit disk \({\bar{\Omega }}\) with decreasing mesh sizes h, and for polynomial degrees \(k+1 = 1, 2, 3, 4\). We also report in the same table the arithmetic mean of the experimental rates of convergence obtained for each eigenvalue via (6.24). We observe that a convergence of order \(2(k+1)\) is attained for each eigenvalue, as predicted by the error estimate given in (6.22).

References

Adams, S., Cockburn, B.: A mixed finite element method for elasticity in three dimensions. J. Sci. Comput. 25(3), 515–521 (2005)

Amara, M., Thomas, J.M.: Equilibrium finite elements for the linear elastic problem. Numer. Math. 33(4), 367–383 (1979)

Arnold, D.N., Winther, R.: Mixed finite elements for elasticity. Numer. Math. 92(3), 401–419 (2002)

Arnold, D.N., Brezzi, F., Douglas, J., Jr.: PEERS: a new mixed finite element for plane elasticity. Jpn. J. Appl. Math. 1(2), 347–367 (1984)

Arnold, D.N., Falk, R.S., Winther, R.: Mixed finite element methods for linear elasticity with weakly imposed symmetry. Math. Comp. 76(260), 1699–1723 (2007)

Arnold, D.N., Awanou, G., Winther, R.: Finite elements for symmetric tensors in three dimensions. Math. Comp. 77(263), 1229–1251 (2008)

Arnold, D.N., Awanou, G., Winther, R.: Nonconforming tetrahedral mixed finite elements for elasticity. Math. Models Methods Appl. Sci. 24(4), 783–796 (2014)

Boffi, D.: Finite element approximation of eigenvalue problems. Acta Numer. 19, 1–120 (2010)

Boffi, D., Brezzi, F., Gastaldi, L.: On the problem of spurious eigenvalues in the approximation of linear elliptic problems in mixed form. Math. Comp. 69(229), 121–140 (2000)

Boffi, D., Brezzi, F., Fortin, M.: Reduced symmetry elements in linear elasticity. Commun. Pure Appl. Anal. 8(1), 95–121 (2009)

Boffi, D., Brezzi, F., Fortin, M.: Mixed finite element methods and applications. In: Springer Series in Computational Mathematics, vol. 44. Springer, Heidelberg (2013)

Brezzi, F., Douglas, J., Jr., Marini, L.D.: Two families of mixed finite elements for second order elliptic problems. Numer. Math. 47(2), 217–235 (1985)

Christiansen, S.H., Winther, R.: Smoothed projections in finite element exterior calculus. Math. Comp. 77(262), 813–829 (2008)

Cockburn, B., Gopalakrishnan, J., Guzmán, J.: A new elasticity element made for enforcing weak stress symmetry. Math. Comp. 79(271), 1331–1349 (2010)

Dauge, M.: Elliptic boundary value problems on corner domains: Smoothness and asymptotics of solutions. In: Lecture Notes in Mathematics, vol. 1341. Springer-Verlag, Berlin (1988)

Descloux, J., Nassif, N., Rappaz, J.: On spectral approximation. I. The problem of convergence. RAIRO Anal. Numér. 12(2), 97–112, iii (1978a)

Descloux, J., Nassif, N., Rappaz, J.: On spectral approximation. II. Error estimates for the Galerkin method. RAIRO Anal. Numér. 12(2), 113–119, iii (1978b)

Ern, A., Guermond, J.-L.: Theory and practice of finite elements. In: Applied Mathematical Sciences, vol. 159. Springer-Verlag, New York (2004)

Ern, A., Guermond, J.-L.: Mollification in strongly Lipschitz domains with application to continuous and discrete de Rham complexes. Comput. Methods Appl. Math. 16(1), 51–75 (2016)

Gopalakrishnan, J., Guzmán, J.: Symmetric nonconforming mixed finite elements for linear elasticity. SIAM J. Numer. Anal. 49(4), 1504–1520 (2011)

Gopalakrishnan, J., Guzmán, J.: A second elasticity element using the matrix bubble. IMA J. Numer. Anal. 32(1), 352–372 (2012)

Grisvard, P.: Problèmes aux limites dans les polygones. Mode d’emploi. EDF Bull. Direction Études Rech. Sér. C Math. Inform. (1), 3, 21–59 (1986)

Hu, J.: Finite element approximations of symmetric tensors on simplicial grids in \({\mathbb{R}}^n\): the higher order case. J. Comput. Math. 33(3), 283–296 (2015)

Kato, T.: Perturbation theory for linear operators. In: Classics in Mathematics. Springer-Verlag, Berlin (1995). Reprint of the 1980 edition

Licht, M.W.: Smoothed projections and mixed boundary conditions. Math. Comp. 88(316), 607–635 (2019)

Meddahi, S., Mora, D., Rodríguez, R.: Finite element spectral analysis for the mixed formulation of the elasticity equations. SIAM J. Numer. Anal. 51(2), 1041–1063 (2013)

Meddahi, S., Mora, D., Rodríguez, R.: Finite element analysis for a pressure-stress formulation of a fluid-structure interaction spectral problem. Comput. Math. Appl. 68(12, part A), 1733–1750 (2014)

Meddahi, S., Mora, D., Rodríguez, R.: A finite element analysis of a pseudostress formulation for the Stokes eigenvalue problem. IMA J. Numer. Anal. 35(2), 749–766 (2015)

Mercier, B., Osborn, J., Rappaz, J., Raviart, P.-A.: Eigenvalue approximation by mixed and hybrid methods. Math. Comp. 36(154), 427–453 (1981)

Netgen/NGSolve. Finite element library. https://ngsolve.org

Stenberg, R.: A family of mixed finite elements for the elasticity problem. Numer. Math. 53(5), 513–538 (1988)

Wu, S., Gong, S., Xu, J.: Interior penalty mixed finite element methods of any order in any dimension for linear elasticity with strongly symmetric stress tensor. Math. Models Methods Appl. Sci. 27(14), 2711–2743 (2017)

Acknowledgements

This work was funded by the Ministerio de Ciencia e Innovación, Gobierno de España (Grant no. PID2020-116287GB-I00).

Additional information

Dedicated to the memory of Francisco-Javier Sayas.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Meddahi, S. Variational eigenvalue approximation of non-coercive operators with application to mixed formulations in elasticity. SeMA 79, 139–164 (2022). https://doi.org/10.1007/s40324-021-00279-6