Abstract

We present fuzzy Adams–Bashforth methods for solving fuzzy differential equations under the concept of g-differentiability. Working with normal, convex, upper semicontinuous, compactly supported fuzzy sets in \(\mathbb {R}^n\), we obtain the general expression of the solutions and analyze the convergence of the methods. Finally, we illustrate the method by solving several fuzzy differential equations.

1 Introduction

According to the literature, the fuzzy differential equation was introduced in 1978. Kandel (1980) and Byatt and Kandel (1978) presented the fuzzy differential equation, and the literature on the subject has expanded rapidly since then. First-order linear fuzzy differential equations arise in modeling the uncertainty of dynamical systems, and their solutions have been widely studied (e.g., see Chalco-Cano and Roman-Flores 2008; Buckley and Feuring 2000; Seikkala 1987; Diamond 2002; Song and Wu 2000; Allahviranloo et al. 2009; Zabihi et al. 2023; Allahviranloo and Pedrycz 2020).

The best-known numerical methods for fuzzy differential equations have been investigated and analyzed under Hukuhara and gH-differentiability (Safikhani et al. 2023). The Hukuhara difference of two fuzzy numbers, and hence the Hukuhara derivative, exists only under special circumstances (Kaleva 1987; Diamond 1999, 2000). The gH-derivative exists under less restrictive conditions, although not always (Dubois et al. 2008). To overcome these shortcomings, Bede and Stefanini (Dubois et al. 2008) introduced the g-derivative. In 2007, Allahviranloo et al. used a predictor–corrector method under the Seikkala derivative to propose a numerical solution of fuzzy differential equations (Allahviranloo et al. 2007).

Here, we investigate the Adams–Bashforth method for solving fuzzy differential equations under g-differentiability. We restrict our study to normal, convex, upper semicontinuous, and compactly supported fuzzy sets in \(\mathbb {R}^n\).

This paper is organized as follows. Section 2 recalls the definitions used in the rest of the article. Section 3 describes the Adams–Bashforth method for solving the proposed equation. The convergence theorem is formulated and proved in Sect. 4. To check the accuracy of the method, three examples are presented in Sect. 5, and their solutions are compared with the exact solutions. The last section gives some conclusions.

2 Preliminaries

Definition 2.1

(Mehrkanoon et al. 2009) A fuzzy number \(\tilde{w}\) is a fuzzy subset of the real line with a normal, convex, and upper semicontinuous membership function of bounded support. The family of fuzzy numbers is denoted by \(R_F\).

We represent an arbitrary fuzzy number by an ordered pair of functions \((\underline{w}(\gamma ),\overline{w}(\gamma ))\), \(0\le \gamma \le 1\), which satisfy the following conditions:

- \(\underline{w}(\gamma )\) is a bounded, left-continuous, non-decreasing function of \(\gamma \) on [0, 1].

- \(\overline{w}(\gamma )\) is a bounded, left-continuous, non-increasing function of \(\gamma \) on [0, 1].

$$\begin{aligned} \underline{w}(\gamma )\le \overline{w}(\gamma ),\quad \textrm{where} ~~ \gamma \in [0,1]. \end{aligned}$$(1)

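Then, the \(\gamma \)-level set

$$\begin{aligned} {[}\tilde{w}]^{\gamma }=\{s\in \mathbb {R}{:}\, \tilde{w}(s)\ge \gamma \},\quad 0<\gamma \le 1, \end{aligned}$$

is a closed bounded interval, which is denoted by:

$$\begin{aligned} {[}\tilde{w}]^{\gamma }=[\underline{w}(\gamma ),\overline{w}(\gamma )]. \end{aligned}$$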
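As an illustration of the endpoint representation in Definition 2.1, the following minimal sketch builds the \(\gamma \)-level sets of a triangular fuzzy number and checks the monotonicity and ordering conditions on a grid. The class name `TriangularFuzzyNumber` and the helper `check_endpoint_conditions` are hypothetical names chosen for this example; they are not part of the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriangularFuzzyNumber:
    """Triangular fuzzy number with support [left, right] and peak at `peak`."""
    left: float
    peak: float
    right: float

    def level(self, gamma: float) -> tuple[float, float]:
        """Return the gamma-level set as (lower(gamma), upper(gamma))."""
        if not 0.0 <= gamma <= 1.0:
            raise ValueError("gamma must lie in [0, 1]")
        lower = self.left + gamma * (self.peak - self.left)
        upper = self.right - gamma * (self.right - self.peak)
        return lower, upper


def check_endpoint_conditions(w, samples=11):
    """Check on a gamma grid that lower is non-decreasing, upper is
    non-increasing, and lower <= upper (condition (1))."""
    gammas = [i / (samples - 1) for i in range(samples)]
    levels = [w.level(g) for g in gammas]
    lower_ok = all(a[0] <= b[0] for a, b in zip(levels, levels[1:]))
    upper_ok = all(a[1] >= b[1] for a, b in zip(levels, levels[1:]))
    ordered = all(lo <= up for lo, up in levels)
    return lower_ok and upper_ok and ordered


w = TriangularFuzzyNumber(0.0, 1.0, 2.0)
print(w.level(0.5))                  # (0.5, 1.5)
print(check_endpoint_conditions(w))  # True
```

For this particular number the level sets are \([\gamma , 2-\gamma ]\), so the level at \(\gamma =1\) collapses to the single point 1.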

Definition 2.2

(Bede and Stefanini 2013) The g-difference is defined as follows:

In Bede and Stefanini (2013), the difference between the g-derivative and the gH-derivative is investigated in detail.
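A small numerical sketch may help to see how the g-difference behaves. It assumes the level-wise characterization commonly attributed to Bede and Stefanini (2013): the \(\gamma \)-level of \(u\circleddash _g v\) is the closed hull of the interval gH-differences \([u]^\beta \circleddash _{gH}[v]^\beta \) over all \(\beta \ge \gamma \). The function names and the three-point \(\gamma \) grid are illustrative assumptions, not the authors' implementation.

```python
def gh_difference(a, b):
    """gH-difference of two intervals a = (a_lo, a_hi), b = (b_lo, b_hi)."""
    d_lo = a[0] - b[0]
    d_hi = a[1] - b[1]
    return (min(d_lo, d_hi), max(d_lo, d_hi))


def g_difference_levels(u_levels, v_levels):
    """Level sets of the g-difference on a shared, increasing gamma grid.

    u_levels[i] and v_levels[i] are the level intervals at the i-th gamma.
    The i-th result is the hull of all interval gH-differences at grid
    points beta >= gamma_i (a discretized version of the assumed formula).
    """
    diffs = [gh_difference(a, b) for a, b in zip(u_levels, v_levels)]
    result = []
    lo, hi = float("inf"), float("-inf")
    for d in reversed(diffs):          # sweep from gamma = 1 down to gamma = 0
        lo = min(lo, d[0])
        hi = max(hi, d[1])
        result.append((lo, hi))
    result.reverse()
    return result


# Level sets at gamma = 0, 0.5, 1 of two triangular fuzzy numbers.
u_levels = [(0.0, 2.0), (0.5, 1.5), (1.0, 1.0)]
v_levels = [(0.0, 3.0), (0.25, 1.75), (0.5, 0.5)]

print([gh_difference(a, b) for a, b in zip(u_levels, v_levels)])
# [(-1.0, 0.0), (-0.25, 0.25), (0.5, 0.5)]  -> not nested
print(g_difference_levels(u_levels, v_levels))
# [(-1.0, 0.5), (-0.25, 0.5), (0.5, 0.5)]   -> nested
```

In this example the level-wise gH-differences are not nested, so they do not define a fuzzy number, whereas the hulls used for the g-difference are nested by construction.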

Definition 2.3

(Bede and Stefanini 2013; Diamond 1999, The Hausdorff distance) The Hausdorff distance is defined as follows:

where \(|| \cdot ||=D(\cdot , \cdot )\) and the gH-difference \(\circleddash _{gH}\) is applied to the interval operands \([u]^\gamma \) and \([v]^\gamma \).

By definition, D is a metric on \(R_F\) with the following properties:

1. \(D(w+t, z+t)=D(w,z ) \qquad \forall w, z, t \in R_F\),

2. \(D(rw,rz)=|r|D(w,z)\qquad \forall w, z\in \ R_F, r\in R\),

3. \(D(w+t,z+d)\le D(w,z)+ D(t, d)\qquad \forall w, z, t, d \in R_F\).

Hence, \((R_F, D)\) is a complete metric space.
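The three properties can be checked numerically on sampled level sets. The sketch below assumes the usual endpoint form of the distance, \(D(u,v)=\sup _{\gamma }\max \{|\underline{u}(\gamma )-\underline{v}(\gamma )|, |\overline{u}(\gamma )-\overline{v}(\gamma )|\}\), and evaluates it on a finite \(\gamma \) grid for triangular fuzzy numbers. All function names are hypothetical helpers introduced for this example.

```python
def levels(left, peak, right, n=5):
    """Level sets of a triangular fuzzy number on a uniform grid of n gammas."""
    gammas = [i / (n - 1) for i in range(n)]
    return [(left + g * (peak - left), right - g * (right - peak)) for g in gammas]


def add(u, v):
    """Level-wise sum of two fuzzy numbers given on the same gamma grid."""
    return [(a[0] + b[0], a[1] + b[1]) for a, b in zip(u, v)]


def scale(r, u):
    """Level-wise scalar multiple; a negative r swaps the endpoints."""
    return [(min(r * lo, r * hi), max(r * lo, r * hi)) for lo, hi in u]


def distance(u, v):
    """Discretized Hausdorff distance: sup over the grid of endpoint gaps."""
    return max(max(abs(a[0] - b[0]), abs(a[1] - b[1])) for a, b in zip(u, v))


w = levels(0, 1, 2)
z = levels(1, 3, 4)
t = levels(-1, 0, 2)
d = levels(0, 0, 1)

print(distance(w, z))                                   # 2.0
print(distance(add(w, t), add(z, t)))                   # property 1: same value
print(distance(scale(-3, w), scale(-3, z)))             # property 2: 3 * D(w, z)
print(distance(add(w, t), add(z, d)),
      distance(w, z) + distance(t, d))                  # property 3: lhs <= rhs
```

A finite grid only approximates the supremum in general; for triangular numbers the endpoint gaps are linear in \(\gamma \), so the maximum is attained at a grid endpoint.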

Definition 2.4

(Bede and Stefanini 2013) Neumann’s integral of \(k{:}\, [m, n] \rightarrow R_F\) is defined level-wise by the fuzzy

Definition 2.5

(Bede and Stefanini 2013) Suppose \(k{:}\, [m,n] \rightarrow R_F\) is a function with \([k(y)]^{\gamma }=[\underline{k}_{\gamma }(y), \overline{k}_{\gamma }(y)]\). If \(\underline{k}_{\gamma }(y)\) and \(\overline{k}_{\gamma }(y)\) are differentiable real-valued functions with respect to y, uniformly for \(\gamma \in [0, 1]\), then k(y) is g-differentiable and we have

Definition 2.6

(Bede and Stefanini 2013) Let \(y_0 \in ]m, n[\) and t be such that \(y_0+t \in ]m, n[\); then the g-derivative of a function \(k{:}\, ]m, n[ \rightarrow R_F\) at \(y_0\) is defined as

If there exists \(k'_g(y_0)\in R_F\) satisfying (7), we call k generalized differentiable (g-differentiable for short) at \(y_0\). This relation depends on the existence of the g-difference \(\circleddash _g\), which is not guaranteed in general.
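For orientation, the g-derivative is usually stated in the literature (cf. Bede and Stefanini 2013) as the limit of a g-difference quotient, and under the endpoint-differentiability assumption of Definition 2.5 it admits a level-wise form. The display below is a sketch of these standard statements written in the notation of this section; it is an assumption about the intended formulas, not a verbatim copy of the numbered relations of this paper.

$$\begin{aligned} k'_g(y_0)&=\lim _{t\rightarrow 0}\frac{k(y_0+t)\circleddash _g k(y_0)}{t},\\ {[}k'_g(y)]^{\gamma }&=\Big [\inf _{\beta \ge \gamma }\min \{\underline{k}'_{\beta }(y), \overline{k}'_{\beta }(y)\},\ \sup _{\beta \ge \gamma }\max \{\underline{k}'_{\beta }(y), \overline{k}'_{\beta }(y)\}\Big ]. \end{aligned}$$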

Theorem 2.7

Suppose \(k{:}\,[m,n]\rightarrow R_F\) is a continuous function with \([k(y)]^{\gamma }=[k^{-}_{\gamma }(y), k^{+}_{\gamma }(y)]\) and g-differentiable in [m, n]. In this case, we obtain

Proof

To prove the assertion, it suffices to show the equality level-wise. Suppose k is g-differentiable; then we have

\(\square \)

Definition 2.8

(Kaleva 1990, fuzzy Cauchy problem) Suppose \(x'_g(s)=k(s,x(s))\) is a first-order fuzzy differential equation, where x is a fuzzy function of s, k(s, x(s)) is a fuzzy function of the crisp variable s and the fuzzy variable x, and \(x'_g\) is the g-derivative of x. With the initial value \(x(s_0)=\gamma _0\), we define the first-order fuzzy Cauchy problem:

Proposition 2.9

Suppose \(\textit{k, h}{:}\, [\textit{a}, \textit{b}] {\rightarrow } R_F\) are two bounded functions, then

Proof

Since \(k(y)\le \textrm{sup}_A k\) and \(h(y)\le \textrm{sup}_A h\) for every \(y \in A=[a,b]\), one obtains \(k(y)+h(y)\le \textrm{sup}_A k+\textrm{sup}_A h\). Thus, \(k+h\) is bounded from above by \(\textrm{sup}_A k+\textrm{sup}_A h\), so \(\textrm{sup}_A( k+h) \le \textrm{sup}_A k+ \textrm{sup}_A h\). The proof for the infimum is similar. \(\square \)

Definition 2.10

Let \(\{\widetilde{q}_m\}^\infty _{m=0}\) be a fuzzy sequence. Then, we define the backward g-difference \(\nabla _g \widetilde{q}_m\) as follows

So, we have

Consequently,

Proposition 2.11

For a given fuzzy sequence \({\left\{ {\widetilde{q}}_m\right\} }^{\infty }_{m=0}\), using the backward g-difference, we have

Proof

We prove by induction that, for all \(n \in \mathbb {Z}^+\),

Base case: Using Definition 2.10, for \(n = 1\), we have

Induction step: Let \( k \in \mathbb {Z}^+\) be given and suppose (14) is true for \(n=k\). Then,

Conclusion: By the principle of induction, (14) holds for all \(n\in \mathbb {Z}^+\). \(\square \)
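The role of repeated backward differences can be illustrated in the crisp (real-valued) setting, where \(\nabla q_m=q_m-q_{m-1}\) and \(\nabla ^{l}q_m=\nabla (\nabla ^{l-1}q_m)\). The sketch below is a crisp analogue only: it uses ordinary subtraction instead of the g-difference and checks numerically that \(\nabla ^{l}q_m/h^{l}\) approximates the lth derivative for samples of a smooth function. The function name is a hypothetical helper.

```python
import math


def backward_difference(values, order):
    """Return the order-th backward difference at the last entry of `values`.

    Crisp analogue: ordinary subtraction stands in for the g-difference.
    """
    if order >= len(values):
        raise ValueError("need more than `order` samples")
    work = list(values)
    for _ in range(order):
        # One pass turns q_0..q_m into (q_1 - q_0), ..., (q_m - q_{m-1}).
        work = [b - a for a, b in zip(work, work[1:])]
    return work[-1]


h = 0.01
s_m = 1.0
# Samples of exp at s_m - 3h, ..., s_m (every derivative of exp is exp).
samples = [math.exp(s_m - i * h) for i in range(3, -1, -1)]

for order in (1, 2, 3):
    ratio = backward_difference(samples, order) / h ** order
    print(order, ratio, math.exp(s_m))
```

The printed ratios approach \(e\) as h decreases, with an error that is first order in h.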

Definition 2.12

(Switching Point) A switching point is a point at which the fuzzy differentiability changes from type (i) to type (ii), or vice versa.

3 Fuzzy Adams–Bashforth method

To derive a fuzzy multistep method, we consider the solution of the initial-value problem:

To obtain the approximation \(t_{j+1}\) at the mesh point \(s_{j+1}\), where initial values

are assumed.

Integrating over the interval \([s_j,s_{j+1}]\), we get

However, without knowing \(\widetilde{x}(s)\), we cannot integrate \(\widetilde{k}(s,\widetilde{x}(s))\). Instead, we approximate \(\widetilde{k}(s, \widetilde{x}(s))\) by an interpolating polynomial \(\widetilde{q}(s)\) computed from the data points \(\left( s_0, {\widetilde{t}}_0\right) , \left( s_1,{\widetilde{t}}_1\right) ,\ldots , \left( s_j,{\widetilde{t}}_j\right) \). These data were obtained in Sect. 2.

Indeed, assuming that \(\widetilde{x}\left( s_j\right) \approx {\widetilde{t}}_j\), Eq. (17) is rewritten as

To derive a fuzzy Adams–Bashforth explicit n-step method under the notion of g-difference, we construct the backward difference polynomial \({\widetilde{q}}_{n-1}(s)\),

We assume that the nth derivatives of the fuzzy function k exist. This means that all derivatives are g-differentiable. As \({\widetilde{q}}_{n-1}(s)\) is an interpolation polynomial of degree \(n-1\), some number \({\xi }_j\) in \(\left( s_{j+1-n}, s_j\right) \) exists with

where the corresponding notation \({\widetilde{k}}^{(n)}_g (s, \widetilde{x}(s)),n\in \mathbb {N},\) exists. Moreover, the existence of this formula depends on the existence of \({\circleddash }_g\): whenever \({\circleddash }_g\) exists, the relation holds.

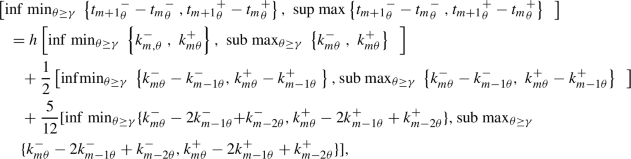

We introduce the change of variable \(s=s_j+\beta h\), with \(\textrm{d}s=h\, \textrm{d}\beta \). Substituting it into \({\widetilde{q}}_{n-1}(s)\) and the error term gives

So, we will get

The product \(\beta \left( \beta +1\right) \cdots \left( \beta +n-1\right) \) does not change sign on [0, 1], so the Weighted Mean Value Theorem can be applied to the last term in Eq. (22): for some number \({\mu }_j\) with \(s_{j+1-n}< {\mu }_j< s_{j+1}\), it becomes

So, it simplifies to

whereas

So, Eq. (20) is written as

It is also worth mentioning that the operations \(\Delta _{g}\) and \(\oplus \) are used extensively to handle the existence of the sup and inf.
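The integrals of the products \(\beta (\beta +1)\cdots (\beta +l-1)\) that appear in this derivation can be evaluated exactly. The sketch below assumes the classical (crisp) Adams–Bashforth form, in which the coefficient of the lth backward difference is \(c_l=\frac{1}{l!}\int _0^1 \beta (\beta +1)\cdots (\beta +l-1)\,\textrm{d}\beta \); it computes these coefficients with rational arithmetic and converts them into weights on \(k_m, k_{m-1}, \ldots \). The function names are hypothetical.

```python
from fractions import Fraction
from math import comb, factorial


def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists (lowest degree first)."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def ab_backward_coefficient(l):
    """c_l = (1/l!) * integral over [0, 1] of beta (beta+1) ... (beta+l-1)."""
    poly = [Fraction(1)]
    for i in range(l):
        poly = poly_mul(poly, [Fraction(i), Fraction(1)])   # factor (beta + i)
    integral = sum(c / (power + 1) for power, c in enumerate(poly))
    return integral / factorial(l)


def ab_ordinate_weights(n):
    """Weights of k_m, k_{m-1}, ..., k_{m-n+1} in the n-step formula,
    obtained by expanding each backward difference binomially."""
    cs = [ab_backward_coefficient(l) for l in range(n)]
    return [sum(cs[l] * (-1) ** i * comb(l, i) for l in range(i, n))
            for i in range(n)]


print([ab_backward_coefficient(l) for l in range(5)])
# [Fraction(1, 1), Fraction(1, 2), Fraction(5, 12), Fraction(3, 8), Fraction(251, 720)]
print(ab_ordinate_weights(3))
# [Fraction(23, 12), Fraction(-4, 3), Fraction(5, 12)]
```

For \(n=3\) this reproduces the familiar weights 23/12, -16/12, and 5/12 of the three-step formula.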

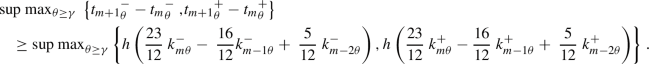

To illustrate this method, we solve the fuzzy initial value problem \({\widetilde{x}}'\left( s\right) =\widetilde{k}(s,\widetilde{x}\left( s\right) )\) by the three-step Adams–Bashforth method. To derive the three-step Adams–Bashforth technique, with \(n= 3\), we have

for \(m=2, 3,\ldots , N-1.\) So
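A crisp, endpoint-wise sketch of the three-step scheme is given below. It assumes the classical update \(t_{m+1}=t_m+\frac{h}{12}(23k_m-16k_{m-1}+5k_{m-2})\) for \(m=2,\ldots ,N-1\) and applies it separately to the lower and upper endpoints of one level set. The test problem \(x'=x\) with \(x^\gamma (0)=[\gamma , 2-\gamma ]\), the use of exact starting values, and the function names are all assumptions made for this illustration; the endpoint-wise treatment is valid only when the endpoints satisfy separate crisp equations, and it does not implement the g-difference case analysis of this section.

```python
import math


def adams_bashforth3(f, s0, starts, h, steps):
    """Crisp three-step Adams-Bashforth method.

    `starts` holds t_0, t_1, t_2 at s0, s0 + h, s0 + 2h.
    Returns the approximations t_0, ..., t_steps.
    """
    t = list(starts)
    for m in range(2, steps):
        s_m = s0 + m * h
        k0 = f(s_m, t[m])
        k1 = f(s_m - h, t[m - 1])
        k2 = f(s_m - 2 * h, t[m - 2])
        t.append(t[m] + h / 12.0 * (23.0 * k0 - 16.0 * k1 + 5.0 * k2))
    return t


def solve_level(gamma, h=0.1, steps=10):
    """Approximate both endpoints of the gamma-level at s = steps * h."""
    f = lambda s, x: x                       # assumed test problem x' = x
    ends = []
    for x0 in (gamma, 2.0 - gamma):          # lower and upper initial endpoints
        starts = [x0 * math.exp(i * h) for i in range(3)]   # exact start values
        ends.append(adams_bashforth3(f, 0.0, starts, h, steps)[-1])
    return ends[0], ends[1]


lower, upper = solve_level(0.5)
print(lower, 0.5 * math.e)   # approximation vs exact lower endpoint at s = 1
print(upper, 1.5 * math.e)   # approximation vs exact upper endpoint at s = 1
```

In practice the starting values would come from a one-step method rather than from the exact solution.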

Here, we also express our model in terms of the operations \(\Delta _{g}\) and \(\oplus \) introduced above.

By considering

As a consequence

from which we obtain

And

if we suppose that

Case 1:

and

So

and

Then, we have

Hence,

We follow

Similarly, we have

Case 2:

and

So,

and

Thus,

So that,

Then, we can say

Case 3:

and

So,

and

So,

Then,

So, we have

Case 4:

and

So,

and

And so,

Then,

We follow:

4 Convergence

We begin our discussion with the definitions of convergence and consistency for a multistep difference equation, before turning to the convergence of the method itself.

Definition 4.1

The differential fuzzy equation with initial condition

and similarly, the other models can be derived as

in which

is the \(\left( j+1\right) \)st step in a multistep method. At this step, it has a fuzzy local truncation error as follows

There exists N such that, for all \(j= n-1, n, \ldots , N-1\) and \(h=\frac{b-a}{N}\), where

Here, \(\widetilde{x}\left( s_j\right) \) denotes the exact value of the solution of the differential equation, and the approximation \({\widetilde{t}}_j\) is obtained from the difference method at the jth step.

Definition 4.2

A multistep method with local truncation error \({\widetilde{\nu }}_{j+1}\left( h\right) \) at the \((j+1)\)th step is called consistent with the differential equation it approximates if

Theorem 4.3

Let the initial-value problem

be approximated by a multistep difference method:

Suppose there exists a number \(h_0>0\) such that \(\phi \left( s_j,\widetilde{t}\left( s_j\right) \right) \) is continuous and satisfies a Lipschitz condition with constant T

Then, the difference method is convergent if and only if it is consistent. This is equivalent to

Recall the concept of convergence for a multistep method: as the step size approaches zero, the solution of the difference equation approaches the solution of the differential equation. In other words,

For the multistep fuzzy Adams–Bashforth method, we have seen that

Using Proposition 2.11, \(\nabla ^l_g{\widetilde{k}}_m=h^l\widetilde{k}^{(l)}_{m_g}\), and substituting it in Eq. (66), we have

So,

Consequently,

Under the hypotheses of the paper, \(\widetilde{k}(s_j,\widetilde{x}(s_j))\in R_F\), and by the definition of g-differentiability, \(\widetilde{k}^{(n)}(s_j,\widetilde{x}(s_j))\in R_F\). Hence, by Definition 2.1, the values \({\widetilde{k}}^{(n)}\left( s_j,\widetilde{x}\left( s_j\right) \right) \) for \(j\ge 0\) are bounded, so there exists M such that

And hence,

where

When \(h\rightarrow 0\), we will have \(Z\rightarrow 0\) so

Thus, the first condition of Definition 4.2 is satisfied. The second condition states that if the one-step method generating the starting values is also consistent, then the multistep method is consistent. Our method is therefore consistent and, by Theorem 4.3, convergent.
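The statement that the approximation approaches the exact solution as \(h\rightarrow 0\) can be observed numerically. The following sketch uses the classical crisp two-step Adams–Bashforth update \(t_{j+1}=t_j+\frac{h}{2}(3k_j-k_{j-1})\) on the assumed test problem \(x'=-x\), \(x(0)=1\) on [0, 1], with an exact second starting value, and estimates the observed order from successive halvings of h. The problem and the function names are illustrative assumptions, not taken from the examples of this paper.

```python
import math


def adams_bashforth2(f, s0, t0, t1, h, steps):
    """Crisp two-step Adams-Bashforth method; returns t_0, ..., t_steps."""
    t = [t0, t1]
    for j in range(1, steps):
        s_j = s0 + j * h
        t.append(t[j] + h / 2.0 * (3.0 * f(s_j, t[j]) - f(s_j - h, t[j - 1])))
    return t


def final_error(n_steps):
    """Absolute error at s = 1 for x' = -x, x(0) = 1, with h = 1 / n_steps."""
    h = 1.0 / n_steps
    f = lambda s, x: -x
    approx = adams_bashforth2(f, 0.0, 1.0, math.exp(-h), h, n_steps)
    return abs(approx[-1] - math.exp(-1.0))


errors = [final_error(n) for n in (20, 40, 80)]
orders = [math.log2(a / b) for a, b in zip(errors, errors[1:])]
print(errors)   # errors shrink as h is halved
print(orders)   # observed orders close to 2
```

An observed order near 2 is what the consistency argument predicts for the crisp two-step method; the fuzzy case additionally depends on the existence of the g-differences involved.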

5 Examples

Example 5.1

Consider the initial-value problem

Obviously, one can check the exact solution as follows:

Indeed, the solution is a triangular fuzzy number

So, the exact solution at the mesh point \(s=0.01\) is

With the proposed method, on the other hand, the approximate solution at \(s=0.01\) is as follows:

where \(\tilde{t}^\gamma \) is an approximation of \(\tilde{x}\).

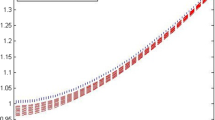

Table 1 shows the maximum errors at \(s=0.1\), \(s=0.2, \ldots , s=1\).

Suppose

Consider the initial-value problem

and

Thus, we have

where (90) are real values. Suppose

Therefore,

By (85), (86), (92), we obtain

Following the previous sections, this example has been solved by the two-step Adams–Bashforth method with \(h=0.1\) and \(N=10\). We use the following relations to solve it.

Example 5.2

Consider the initial-value problem

First, we solve the problem under gH-differentiability. The solution of the initial-value problem is \([(i)-gH]\)-differentiable on [0, 1] and \([(ii)-gH]\)-differentiable on (1, 2]. The \([(i)-gH]\)-differentiable solution is obtained by solving the following system

By solving the following system, the \([(ii)-gH]\)-differentiable solution will be achieved

We apply the Euler method to approximate the solution of the initial-value problem by

The results are presented in Table 2.

In the calculations of this method, we need to consider both \([(i)-gH]\)-differentiability and \([(ii)-gH]\)-differentiability. When we use g-differentiability, however, we do not need to check the different cases of differentiability. To solve the problem with the method proposed in this article, we have:

Or we have \(x^\gamma (0)=[\gamma , 2-\gamma ]\). The exact solution is as follows:

Table 3 shows the approximate solution obtained with the Adams–Bashforth two-step method for \(h = 1\), together with the error of the method.

Consider the initial-value problem \(\tilde{x'}=(s\ominus 1)\odot \tilde{x}^2\), where \(s\in [-1,1]\).

The exact solution is

6 Conclusion

In this paper, we proposed a method based on the concept of g-differentiability that provides a fuzzy solution. This solution is obtained from a family of Adams–Bashforth difference equations and coincides with the solutions of the fuzzy differential equations.

The g-difference is a powerful and versatile fuzzy operator that is more flexible, robust, and computationally efficient, which makes it a good choice for solving a wide range of fuzzy differential equations. It does not require checking (i)- and (ii)-differentiability. In the examples, we compare g-differentiability and gH-differentiability.

g-differentiability allows for capturing gradual changes in a fuzzy-valued function. g-differentiable functions exhibit certain degrees of smoothness and continuity, which can be useful in modeling and analyzing fuzzy systems. The choice of the parameter g in g-differentiability is crucial and depends on the specific problem, so determining an appropriate value for g requires careful consideration and analysis. gH-differentiability combines the gradual reduction of fuzziness (via the parameter g) with the Hukuhara difference (H-difference) and provides a more refined analysis of fuzzy-valued functions. It offers enhanced modeling capabilities by considering both the gradual reduction of fuzziness and the separation between fuzzy numbers or fuzzy sets. However, gH-differentiability introduces an additional level of complexity compared to g-differentiability or H-differentiability alone: the combination of gradual reduction and H-difference requires careful understanding and analysis to ensure proper application.

Data availability

There is no data available for this research.

References

Allahviranloo T, Pedrycz W (2020) Soft numerical computing in uncertain dynamic systems. Academic Press, New York

Allahviranloo T, Ahmady N, Ahmady E (2007) Numerical solution of fuzzy differential equations by predictor-corrector method. Inf Sci 177(7):1633–1647

Allahviranloo T, Kiani NA, Motamedi N (2009) Solving fuzzy differential equations by differential transformation method. Inf Sci 179(7):956–966

Bede B, Stefanini L (2013) Generalized differentiability of fuzzy-valued functions. Fuzzy Sets Syst 230:119–141. https://doi.org/10.1016/j.fss.2012.10.003

Buckley JJ, Feuring T (2000) Fuzzy differential equations. Fuzzy Sets Syst 110(1):43–54

Byatt W, Kandel A (1978) Fuzzy differential equations. In: Proceedings of the international conference on Cybernetics and Society, Tokyo, Japan, vol 1

Chalco-Cano Y, Roman-Flores H (2008) On new solutions of fuzzy differential equations. Chaos Solitons Fractals 38(1):112–119

Diamond P (1999) Time-dependent differential inclusions, cocycle attractors and fuzzy differential equations. IEEE Trans Fuzzy Syst 7(6):734–740

Diamond P (2000) Stability and periodicity in fuzzy differential equations. IEEE Trans Fuzzy Syst 8(5):583–590

Diamond P (2002) Brief note on the variation of constants formula for fuzzy differential equations. Fuzzy Sets Syst 129(1):65–71

Dubois D, Lubiano MA, Prade H, Gil MA, Grzegorzewski P, Hryniewicz O (2008) Soft methods for handling variability and imprecision, vol 48. Springer, Berlin

Kaleva O (1987) Fuzzy differential equations. Fuzzy Sets Syst 24(3):301–317

Kaleva O (1990) The Cauchy problem for fuzzy differential equations. Fuzzy Sets Syst 35(3):389–396. https://doi.org/10.1016/0165-0114(90)90010-4

Kandel A (1980) Fuzzy dynamical systems and the nature of their solutions. In: Fuzzy sets. Springer, Berlin, pp 93–121

Mehrkanoon S, Suleiman M, Majid Z (2009) Block method for numerical solution of fuzzy differential equations. In: International mathematical forum, vol 4, Citeseer, pp 2269–2280

Safikhani L, Vahidi A, Allahviranloo T, Afshar Kermani M (2023) Multi-step gh-difference-based methods for fuzzy differential equations. Comput Appl Math 42(1):27

Seikkala S (1987) On the fuzzy initial value problem. Fuzzy Sets Syst 24(3):319–330

Song S, Wu C (2000) Existence and uniqueness of solutions to Cauchy problem of fuzzy differential equations. Fuzzy Sets Syst 110(1):55–67

Zabihi S, Ezzati R, Fattahzadeh F, Rashidinia J (2023) Numerical solutions of the fuzzy wave equation based on the fuzzy difference method. Fuzzy Sets Syst 465:108537. https://doi.org/10.1016/j.fss.2023.108537

Acknowledgements

The authors are thankful to the area editor and referees for giving valuable comments and suggestions.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Additional information

Communicated by Marcos Eduardo Valle.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Soroush, S., Allahviranloo, T., Azari, H. et al. Generalized fuzzy difference method for solving fuzzy initial value problem. Comp. Appl. Math. 43, 129 (2024). https://doi.org/10.1007/s40314-024-02645-2

DOI: https://doi.org/10.1007/s40314-024-02645-2

Keywords

- Fuzzy differential equation

- Generalized differentiability

- Adams–Bashforth method

- Fuzzy difference equations