Abstract

Fractional delay differential equations (FDDEs) and time-fractional delay partial differential equations (TFDPDEs) are the focus of the present research. The FDDEs are converted into a system of algebraic equations using a novel numerical approach based on the spectral Galerkin (SG) technique. The suggested numerical technique is likewise applied to TFDPDEs. Suitable trial functions, expressed in terms of shifted Jacobi polynomials, are constructed to satisfy the initial-boundary conditions of the main problems. To the authors' knowledge, this is the first time the SG technique has been used to solve TFDPDEs. The approximate solutions of five numerical examples are provided and compared with those of other approaches and with the analytic solutions to demonstrate the accuracy of the proposed method.

1 Introduction

Unlike ordinary integration and differentiation, fractional calculus is non-local, and this property is the main reason why many researchers in various fields study its definitions, properties and applications (Kimeu (2009) and Dalir and Bashour (2010)). Also, because a large number of natural phenomena have been described using fractional calculus, such as fluid mechanics (Kulish and Jose (2002)), viscoelasticity (Meral et al. (2010)), thermoelasticity (Povstenko (2015)), media (Tarasov (2011)), continuum mechanics (Carpinteri and Mainardi (1997)), and other applications (Podlubny (1999); Kilbas et al. (2006); Sun et al. (2018)), numerous researchers have concentrated on various types of fractional differential equations (FDEs), and finding solutions to these equations has become the goal of many mathematicians. Because exact solutions are extremely difficult to find for many types of FDEs, many researchers have been interested in obtaining analytical and numerical solutions for such problems. Among the methods used are the finite difference (Hendy et al. (2021)), predictor-corrector (Kumar and Gejji (2019)), wavelet operational matrix (Yi and Huang (2014)), Galerkin-Legendre spectral (Zaky et al. (2020)), fractional-order Boubaker wavelets (Rabiei and Razzaghi (2021)), fractional Jacobi collocation (Abdelkawy et al. (2020)) and artificial neural network (Pakdaman et al. (2017)) methods. Because of the global nature of fractional calculus, it is preferable to use global numerical techniques to solve FDEs of various types.

Over the last four decades, spectral methods have developed rapidly because of their several advantages. Spectral methods allow calculations to be performed numerically with an arbitrarily high degree of accuracy. As a result, an accurate solution can be obtained with a relatively small number of degrees of freedom, and hence at a reasonable computational cost, especially for variable-coefficient and nonlinear problems. The choice of trial functions is one of the features that distinguish spectral methods from finite-element and finite-difference methods. The trial functions for spectral methods are differentiable global functions that fit well with the nonlocal definition of fractional derivatives, making them promising candidates for solving different types of fractional differential equations.
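The statement that a small number of degrees of freedom can give high accuracy for smooth solutions can be illustrated with a short experiment. The sketch below is not the method of this paper: it uses plain Chebyshev interpolation in barycentric form, and the function name `cheb_interp_error` and the test function \(\cos (\pi x)\) are chosen here purely for illustration.

```python
import math

def cheb_interp_error(f, n, samples=400):
    """Max error on [-1, 1] of the degree-n interpolant of f at Chebyshev points."""
    # Chebyshev points of the second kind and the sampled function values.
    nodes = [math.cos(j * math.pi / n) for j in range(n + 1)]
    vals = [f(x) for x in nodes]
    # Barycentric weights for these points: alternating signs, halved at the ends.
    weights = [(-1.0) ** j * (0.5 if j in (0, n) else 1.0) for j in range(n + 1)]

    def interpolant(x):
        num = den = 0.0
        for xj, fj, wj in zip(nodes, vals, weights):
            if x == xj:          # exactly on a node: return the data value
                return fj
            c = wj / (x - xj)
            num += c * fj
            den += c
        return num / den

    grid = [-1.0 + 2.0 * k / samples for k in range(samples + 1)]
    return max(abs(interpolant(x) - f(x)) for x in grid)

# Error for a smooth function as the number of degrees of freedom grows.
errors = {n: cheb_interp_error(lambda x: math.cos(math.pi * x), n) for n in (4, 8, 16)}
for n, err in errors.items():
    print(n, err)
```

The printed errors fall much faster than any fixed power of \(1/n\), which is the behaviour usually referred to as spectral accuracy.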

Spectral techniques can be separated into three principal types: Galerkin, tau, and collocation. The choice of an appropriate spectral method for a given differential equation depends on the type of the equation and on the type of the initial or boundary conditions governing it. Also, different trial functions lead to different spectral approximations, for instance, trigonometric polynomials for periodic problems; Chebyshev, Legendre, ultraspherical, and Jacobi polynomials for non-periodic problems; Jacobi rational and Laguerre polynomials for problems on the half line; and Hermite polynomials for problems on the whole line.

In the last few years, several authors have shown interest in using various spectral approaches to solve ordinary and partial FDEs. Doha et al. (2013) applied the tau and collocation spectral approaches based on shifted Jacobi polynomials for solving linear and nonlinear FDEs, respectively. Alsuyuti et al. (2019) used a modification of the SG approach for solving a class of ordinary FDEs. Abd-Elhameed et al. (2022) used the third- and fourth-kind Chebyshev polynomials as the basis functions of the Petrov-Galerkin method for treating particular types of odd-order boundary value problems. Hafez et al. (2022) introduced two efficient SG algorithms for solving multi-dimensional time-fractional advection–diffusion-reaction equations based on fractional-order Jacobi functions. Ezz-Eldien et al. (2020a) solved the two-dimensional multi-term time-fractional mixed sub-diffusion and diffusion-wave equation using the time-space spectral collocation (SC) method.

Sometimes, the current state is insufficient to describe the behavior of the process being considered, and information on previous states is needed to describe the system’s behavior. Such systems are called time-delay systems. In the last decades, much attention has been paid to time-delay systems because of their many applications in a large number of practical systems, such as economics, transportation, chemical processes, robotics, physics and engineering (Zavarei and Jamshidi (1987); Kuang (1993); Jaradat et al. (2022); Cai and Huang (2002); Bocharov and Rihan (2000); Ji and Leung (2002)). Searching for exact solutions of any system in the presence of delay is a complex process. Therefore, developing analytical and numerical techniques for various types of FDDEs has attracted the attention of many authors. Ezz-Eldien (2018) introduced the spectral tau technique for solving systems of multi-pantograph equations. Cheng et al. (2015) applied the reproducing kernel theory for solving the neutral functional-differential equation with proportional delays. Akkaya et al. (2013) introduced a numerical approach based on first Boubaker polynomials for solving FDDEs. Ahmad and Mukhtar (2015) applied the artificial neural network for the multi-pantograph differential equation. Ezz-Eldien and Doha (2019) used the SC method for pantograph Volterra integro-differential equations. Moreover, FDDEs have many applications in numerous scientific domains, such as population ecology, control systems, biology and medicine (Magin (2010); Jaradat et al. (2020); Wu (2012); Alquran and Jaradat (2019); Keller (2010)). A large number of numerical methods have been introduced to obtain the solutions of various types of FDDEs. The Haar wavelet (Amin et al. (2021)), reproducing kernel (Allahviranloo and Sahihi (2021)), generalized Adams (Zhao et al. (2021)), Lagrange interpolation (Zuniga-Aguilar et al. (2019)), finite difference (Moghaddam and Mostaghim (2014)) and Legendre wavelet (Yuttanan et al. (2021)) methods have been applied for solving various types of FDDEs. Recently, spectral methods have been applied to solve different FDDEs. Hafez and Youssri (2022) used the Gegenbauer-Gauss SC method for fractional neutral functional-differential equations. Alsuyuti et al. (2021) used the Legendre polynomials as the basis functions of the SG approach for solving a general form of multi-order fractional pantograph equations. Abdelkawy et al. (2020) used the Jacobi SC method for fractional functional differential equations of variable order. Ezz-Eldien et al. (2020b) applied the Chebyshev spectral tau method for solving multi-order fractional neutral pantograph equations.

The current study aims to develop an accurate numerical solution for the FDDEs and TFDPDEs. The numerical method is based on the SG technique in both the spatial and temporal directions. Special properties of shifted Jacobi polynomials are exploited to generate new basis functions that satisfy the problem’s initial and boundary conditions. The elements of the resulting system of algebraic equations are determined and represented in matrix form.

The article is structured as follows. In Sect. 2, the SG approach is explained, along with a full explanation of how to solve FDDEs using the resulting system of algebraic equations. For TFDPDEs, the SG approach is used in Sect. 3. The convergence and stability of the proposed numerical scheme are investigated in Sect. 4. Section 5 presents numerical solutions to five test problems and compares them with those obtained using other algorithms to demonstrate the method’s performance. Section 6 concludes with some remarks.

2 Fractional delay differential equations

In this section, we discuss the numerical approach for solving the FDDEs

subject to

where \(0\le t \le \tau ,\) \(k-1< \nu _k \le k;(k=1(1)n)\) and \(\nu _0=0,\) while \(\eta _k,\alpha _k,\beta _k\) for \(k=0(1)n-1,\) are given constants and \(D_t^{\nu }{} Y (t)\) denotes the Caputo fractional (CF) derivative of order \(\nu ,\) w.r.t. \(t\) (Podlubny (1999)), namely,

where \(\Gamma (.)\) and \(\lceil .\rceil \) are the Gamma and ceiling functions, respectively. As an SG approach, we define the following space

and then we choose the basis function as follows:

where \(J^{(\rho ,\sigma )}_{j,\tau }(t)\) denotes the shifted Jacobi polynomials defined on \([0,\tau ]\), whose orthogonality relation is given by

where \(\varsigma _{j,\jmath }\) is the well-known Kronecker delta function, and

with

and

For more details about Jacobi polynomials, one can consult (Ezz-Eldien (2016); Alsuyuti et al. (2022)). Now, we assume that the solution of the FDDEs (1)–(2) is approximated by

where

Hence, the SG technique is to find \(Y _{N_1}(t)\in \Omega _{N_1},\) such that

where

is the scalar inner product w.r.t. weighted space  with

with  . Using the approximation (8), we have

Let us denote

where \( 0\le \jmath \le N_1-n.\) Then, one can deduce that the main problem is equivalent to

where

Using (9) with the explicit analytic form of the shifted Jacobi polynomial \(J^{(\rho ,\sigma )}_{j,\tau }(t),\) we have

Applying the CF-derivative, we get

Making use of (12)–(13), and after performing some manipulations, we have

where

In virtue of (10) and (14), we have

Finally, we use a suitable solver to find the unknowns vector \({\textbf{C}}\).
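The step "Applying the CF-derivative" relies on the fact that the Caputo derivative of a monomial is known in closed form, \(D_t^{\nu }t^{k}=\frac{\Gamma (k+1)}{\Gamma (k+1-\nu )}\,t^{k-\nu }\) for integer \(k\ge \lceil \nu \rceil \) and zero otherwise. The following Python sketch, which is independent of the authors' Mathematica code, checks this rule for \(0<\nu <1\) against a direct quadrature of the integral definition. The function names are hypothetical, and the midpoint rule after a change of variable is only one simple way to handle the weakly singular kernel.

```python
import math

def caputo_monomial(k, nu, t):
    """Closed-form Caputo derivative of order nu of t**k (k a non-negative integer)."""
    if k < math.ceil(nu):
        return 0.0  # the ceil(nu)-th ordinary derivative of t**k is identically zero
    return math.gamma(k + 1) / math.gamma(k + 1 - nu) * t ** (k - nu)

def caputo_quadrature(dy, nu, t, steps=20000):
    """Caputo derivative for 0 < nu < 1 computed from the integral definition.

    dy is the ordinary first derivative Y'. The substitution
    u = (t - s)**(1 - nu) removes the weak singularity at s = t, so a plain
    midpoint rule in u is adequate.
    """
    upper = t ** (1.0 - nu)
    h = upper / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * h
        s = t - u ** (1.0 / (1.0 - nu))
        total += dy(s)
    return total * h / ((1.0 - nu) * math.gamma(1.0 - nu))

# Compare both evaluations for Y(t) = t**2, so that Y'(s) = 2 s.
nu, t = 0.5, 1.0
exact = caputo_monomial(2, nu, t)
approx = caputo_quadrature(lambda s: 2.0 * s, nu, t)
print(exact, approx)
```

Because every basis function is a polynomial in \(t\), applying this rule term by term is enough to differentiate the whole expansion.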

3 Time-fractional delay partial differential equations

In this section, we apply the numerical method for the TFDPDEs

subject to

where \(0\le x \le \ell ,\ 0\le t \le \tau ,\) \(0< \nu <1,\) while \(\mu _\iota ,\alpha _\iota ,\beta _\iota \) for \(\iota =1(1)6,\) are given constants, and \(D_t^{\nu }\,Y (x,t)\) denotes the CF-derivative of order \(\nu ,\) w.r.t. t. Defining the following spaces

and

As a time-space spectral method, we approximate the solution of the TFDPDE (17)–(18) by a truncated series of shifted Jacobi polynomials as follows

where

Also, we have to find \(Y _{N_2,N_1}(x,t)\in \mho _{N_2}\times \Omega _{N_1},\) such that

where

is the scalar inner product w.r.t. weighted space  with

with

Using (21), we get

If we denote

where \(0\le \imath \le N_2-2,\ 0\le \jmath \le N_1-1,\) then the main problem is equivalent to

where

Using (22), we can write

hence,

In the same manner as in the previous section, we get

where

and

while

where

In virtue of (26) and (32), we have

4 Theoretical analysis

This section aims to verify the effectiveness of the numerical solution obtained by the suggested approach for problems (1) and (17). We start with a review of certain auxiliary lemmas that will be needed later for the convergence and stability analysis of the proposed method.

4.1 Convergence analysis

Lemma 1

Alsuyuti et al. (2022) For any \(\rho ,\sigma >-1\) and \(j\ge nm;\,n,m\in \mathbb {N},\) then, the following nm-times repeated integration formula is valid

where  is given in (7).

Lemma 2

Alsuyuti et al. (2022) For any non-negative number \(j;\,j\ge nm\) and \(\rho ,\sigma \ge -\frac{1}{2},\) the following inequality is valid

Lemma 3

Rainville (1971) For any non-negative number \(j\) and real number \(\nu \), one has

Lemma 4

Szegö (1975) If \(\rho ,\sigma > -1\), then, \(\mid J_{j,\tau }^{(\rho ,\sigma )}(t) \mid ={\mathcal {O}}(j^\iota ),\) where \(\iota =\max (\rho ,\sigma ,-\frac{1}{2}).\)
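Lemma 4 can be illustrated numerically. The sketch below is not part of the paper: it assumes that \(J^{(\rho ,\sigma )}_{j,\tau }(t)\) is the classical Jacobi polynomial mapped from \([-1,1]\) to \([0,\tau ]\) with its standard normalisation, evaluates it from the standard explicit sum, and samples its maximum modulus on a grid. The helper names `binom`, `shifted_jacobi` and `sup_norm` are hypothetical.

```python
import math

def binom(a, k):
    """Generalised binomial coefficient C(a, k) for real a and integer k >= 0."""
    return math.gamma(a + 1.0) / (math.gamma(k + 1.0) * math.gamma(a - k + 1.0))

def shifted_jacobi(j, t, rho, sigma, tau=1.0):
    """Shifted Jacobi polynomial of degree j on [0, tau] via the explicit sum."""
    z = t / tau  # z in [0, 1] corresponds to x = 2 z - 1 in [-1, 1]
    return sum(
        binom(j + rho, j - s) * binom(j + sigma, s) * (z - 1.0) ** s * z ** (j - s)
        for s in range(j + 1)
    )

def sup_norm(j, rho, sigma, samples=400):
    """Sampled maximum of |J_j| over [0, 1], endpoints included."""
    return max(abs(shifted_jacobi(j, k / samples, rho, sigma)) for k in range(samples + 1))

# Growth of the maximum with the degree j for two parameter choices.
for j in (2, 4, 8, 16):
    print(j, sup_norm(j, 0.0, 0.0), sup_norm(j, 1.0, 1.0))
```

Under this normalisation the sampled maximum stays at 1 for \(\rho =\sigma =0\) and grows like \(j+1\) for \(\rho =\sigma =1\), in line with the bound \({\mathcal {O}}(j^\iota )\) for \(\iota =0\) and \(\iota =1\). The explicit sum suffers from cancellation for large degrees, so it is only meant for the small values of \(j\) used here.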

Theorem 1

If \(Y (t)=t^n\,f(t)\) is expanded in infinite series of the basis functions \(\psi _j(t)\) given as in (9), i.e.,

Then this series converges uniformly to \(Y (t),\) and the expansion coefficients \(c_j\) satisfy the following inequality

with \(\left| f^{(nm)}(t)\right| \le \epsilon \) where \(\epsilon \) is a positive constant.

Proof

Applying the orthogonality relation (5) to Eq. (34) under the assumption (9), then we can write

where \(\hbar ^{(\rho ,\sigma )}_{j,\tau }\) and  are given as in (6) and (7), respectively.

Now, if we assume that \(Y (t)=t^n\,f(t),\) and with the aid of the relation (9), then we have

Applying the integration by parts nm-times, and in virtue of Lemma 1, we get

Hence,

Based on Lemmas 2 and 3, and after performing some manipulations, then we get (35), which ends the proof. \(\square \)

Theorem 2

If \(Y (x,t)=t\,x\,(\ell -x)\,f(t)\,\hat{f}(x)\) is expanded in infinite series of the basis functions \(\phi _i(x)\) and \(\psi _j(t)\) given as in (22), respectively, i.e.,

Then this series converges uniformly to \(Y (x,t),\) and the expansion coefficients \(c_{ij}\) satisfy the following inequality

with \(\left| \hat{f}^{(2m)}(x)\,f^{(m)}(t)\right| \le \varepsilon \) where \(\varepsilon \) is a positive constant.

Proof

Applying the orthogonality relation (5) to Eq. (36), under the assumptions (22), then we can write

where \(\hbar ^{(\rho ,\sigma )}_{j,\tau }\) and  are given in (6) and (7), respectively.

Again, with the aid of Eq. (36) and the assumption \(Y (x,t)=t\,x\,(\ell -x)\,f(t)\,\hat{f}(x)\), the coefficients \(c_{ij}\) can be written as follows

where  is given by (23), hence,

Applying the integration by parts 2m-times and m-times with respect to x and t, respectively, and in virtue of Lemma 1, with the aid of Theorem 1 and Lemmas 2 and 3, and after performing some manipulations, we get

which ends the proof. \(\square \)
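The factor \(t\,x\,(\ell -x)\) in Theorem 2 suggests how the trial functions can build the homogeneous initial and boundary conditions into the expansion. The Python sketch below makes this concrete under explicit assumptions that are not confirmed by the text above: it takes \(\rho =\sigma =0\) (shifted Legendre polynomials) and assumes basis functions of the form \(\phi _i(x)=x(\ell -x)\) times a shifted polynomial of degree \(i\) and \(\psi _j(t)=t\) times a shifted polynomial of degree \(j\). All function names and the coefficient values are hypothetical.

```python
def shifted_legendre(k, s, length):
    """Shifted Legendre polynomial of degree k on [0, length] (three-term recurrence)."""
    x = 2.0 * s / length - 1.0
    p_prev, p = 1.0, x
    if k == 0:
        return p_prev
    for m in range(1, k):
        p_prev, p = p, ((2 * m + 1) * x * p - m * p_prev) / (m + 1)
    return p

def phi(i, x, ell):
    """Assumed spatial basis function: vanishes at x = 0 and x = ell."""
    return x * (ell - x) * shifted_legendre(i, x, ell)

def psi(j, t, tau):
    """Assumed temporal basis function: vanishes at t = 0."""
    return t * shifted_legendre(j, t, tau)

def trial_solution(coeffs, x, t, ell, tau):
    """Truncated double series sum_i sum_j c_ij * phi_i(x) * psi_j(t)."""
    return sum(
        c * phi(i, x, ell) * psi(j, t, tau)
        for i, row in enumerate(coeffs)
        for j, c in enumerate(row)
    )

# Arbitrary illustrative coefficients; any choice satisfies the conditions.
coeffs = [[1.0, -0.5], [0.25, 2.0]]
ell, tau = 2.0, 1.0
print(trial_solution(coeffs, 0.0, 0.3, ell, tau))   # boundary x = 0
print(trial_solution(coeffs, ell, 0.3, ell, tau))   # boundary x = ell
print(trial_solution(coeffs, 0.7, 0.0, ell, tau))   # initial time t = 0
```

With this form, \(\phi _i\) has degree \(i+2\) and \(\psi _j\) has degree \(j+1\), which is consistent with the index ranges \(0\le \imath \le N_2-2\) and \(0\le \jmath \le N_1-1\) used in Sect. 3, and every choice of coefficients satisfies the homogeneous conditions automatically.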

4.2 Stability analysis

Theorem 3

For the two consecutive approximations \(Y _{N_1}(t)\) and \(Y _{N_1+1}(t),\) we have the following estimate

Proof With the aid of Theorem 1 and Lemma 4, we have

\(\square \)

Theorem 4

For the two consecutive approximations \(Y _{N_2,N_1}(x,t)\) and \(Y _{N_2+1,N_1+1}(x,t),\) we have the following estimate

where \(\iota =\max (\rho ,\sigma ,-\frac{1}{2}).\)

Proof

Using a similar technique to that introduced for the proof of Theorem 3, we can prove this theorem. \(\square \)

5 Numerical verification

This section is devoted to testing the proposed algorithm. For this purpose, we present five numerical examples, together with comparisons with other techniques in the literature, to demonstrate the efficiency and high accuracy of the proposed numerical algorithm.

Example 1

Consider the following FDDE (Amin et al. (2021))

with \(Y (0)=0\) and \(Y (t)=t^2\) as the exact solution.

Amin et al. (2021) considered this problem and used the SC method based on Haar wavelets and Gauss elimination to reduce it to a system of linear algebraic equations. The best absolute errors achieved using the numerical approach presented in Amin et al. (2021) were around \(10^{-7}\) with 2048 steps; see Table 1 in Amin et al. (2021). If we apply the numerical technique presented in Sect. 2 to the above problem with \(N_1=4\) and \(\{\rho ,\sigma \}=\{1,1\},\) then the approximate solution of \(Y (t)\) can be written as follows:

and the vector \({\textbf{P}}\) can be written as:

Therefore, the FDDE (38) is equivalent to the system

where the matrices \({\textbf{A}},\ {\textbf{B}}_0\) and \({\textbf{B}}_1\) are given by:

Solving the system (40) using any suitable solver, the unknown coefficients vector \({\textbf{C}}\) can be determined as follows:

which leads to the exact solution

Example 2

Consider the fractional pantograph differential equation (Syam et al. 2021)

where

with \(Y (0)=1\) and \(Y (t)= 1+\nu \, t^2\) as the exact solution.

Syam et al. (2021) considered this problem and applied the modified operational matrix method (MOMM) for getting the numerical solution. Table 1 compares the absolute errors of \(Y (t)\) at \(\{\rho ,\sigma \}= \{0,0\}\) with distinct values of \(\nu \) against the numerical results given by the MOMM (Syam et al. 2021).

Example 3

Consider the following TFDPDE (Hosseinpour et al. 2018)

subject to

The exact solution is \(Y (x,t)= x^2 \left( t^{\frac{5}{3}}+t^{\frac{4}{3}}\right) .\)

To approximate the solution to this problem, Hosseinpour et al. (2018) introduced two numerical approaches based on the Padé approximation method with Legendre polynomials (PALP) and Müntz-Legendre polynomials (PAMLP). They used the Padé approximation and two-sided Laplace transformations with the operational matrix of fractional derivatives to transform the main problem into a system of fractional partial differential equations without delay. In Table 2, we compare the absolute errors of \(Y (x,t)\) achieved using the proposed approach against the PALP and PAMLP approaches at \(\{N_2,N_1\}=\{5,5\}\) and \(\nu =0.9.\) Figure 1 shows the approximate solution of \(Y(x, 1.8)\) at \(\{N_2,N_1\}=\{5,5\},\) \(\{\rho ,\sigma \}= \{0,0\}\) and \(\nu =0.5\).

Example 4

Consider the time-fractional neutral delay parabolic equation (Usman et al. 2020)

subject to

and select p(x, t) so that the exact solution is \(Y (x,t)=t^2 \cos (\pi x).\)

Usman et al. (2020) considered this problem and applied the SC approach to obtain the numerical solution. They constructed the operational matrices of fractional-order integration and differentiation, together with the delay operational matrix, based on shifted Gegenbauer polynomials to transform the problem into a system of algebraic equations. The most accurate results obtained by the SC approach (Usman et al. 2020) were around \(10^{-15}\) using \((N_2=20)\); see Figures 6 and 7 in Usman et al. (2020). In Table 3, we list the maximum absolute errors of \(Y (x,t)\) at \(\nu =\{0.5,0.7,0.9\},\ \{\rho ,\sigma \}=\{0,0\}\) with \(N_1=3\) and \(N_2=\{4,6,8,10,12,14,16,18\}.\) Figure 2 shows the convergence of the new numerical approach in terms of \(Log_{10}L_\infty \) for \(Y (x,t)\) at \(N_1=3,\) \(\{\rho ,\sigma \}= \{0,0\}\) and \(\nu =\{0.5,0.7,0.9\}\) with different values of \(N_2\). Figure 3 shows the approximate solution of \(Y (x,t)\) at \(\{N_2,N_1\}=\{6,6\},\) \(\{\rho ,\sigma \}= \{1,1\}\) and \(\nu =0.7\).

Fig. 1 Approximate solution of \(Y (x,t)\) at \(\{N_2,N_1\}=\{5,5\},\) \(\{\rho ,\sigma \}= \{0,0\}\) and \(\nu =0.5\) for Example 3

Fig. 2 \(Log_{10}L_\infty \) of \(Y (x,t)\) at \(N_1=3,\) \(\{\rho ,\sigma \}= \{0,0\}\) and \(\nu =\{0.5,0.7,0.9\}\) with distinct values of \(N_2\) for Example 4

Fig. 3 Approximate solution of \(Y (x,t)\) at \(\{N_2,N_1\}=\{6,6\},\) \(\{\rho ,\sigma \}= \{1,1\}\) and \(\nu =0.7\) for Example 4

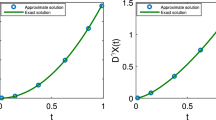

Example 5

Consider the following TFDPDE (Dehestani et al. 2019)

subject to

The exact solution is \(Y (x,t)=t^2 (2x- x^2).\)

To solve the current problem, Dehestani et al. (2019) applied the SC technique with the help of the Genocchi wavelet method. The most accurate absolute errors of \(Y (x,t)\) reported in Dehestani et al. (2019) were around \(10^{-14}\), whereas the absolute errors achieved using our algorithm were around \(10^{-16}\). Figures 4 and 5 show the absolute errors and the approximate solution, respectively, of \(Y (x,t)\) at \(\{N_2,N_1\}=\{3,3\},\) \(\{\rho ,\sigma \}= \{1,1\}\) and \(\nu =0.7\).

Fig. 4 Absolute errors of \(Y (x,t)\) at \(\{N_2,N_1\}=\{3,3\},\) \(\{\rho ,\sigma \}= \{1,1\}\) and \(\nu =0.7\) for Example 5

Fig. 5 Approximate solution of \(Y (x,t)\) at \(\{N_2,N_1\}=\{3,3\},\) \(\{\rho ,\sigma \}= \{1,1\}\) and \(\nu =0.7\) for Example 5

6 Concluding remarks

In the current study, we developed a numerical method for solving FDDEs. The suggested technique is based on the SG method and shifted Jacobi polynomials, and it converts the main problem into a system of algebraic equations. The SG approach is also applied to TFDPDEs in both the temporal and spatial directions. Moreover, the convergence and stability of this scheme are established. To our knowledge, this is the first attempt to deal with TFDPDEs using the SG algorithm or shifted Jacobi polynomials. The effectiveness of the suggested approach is validated on five test problems by comparing the outcomes with those obtained using alternative methods and with the exact solutions. The numerical results indicate that, for these examples, the suggested method is more accurate than the collocation Haar wavelet, Bernoulli wavelet operational matrix, Padé approximation Legendre polynomial, Padé approximation Müntz-Legendre polynomial, collocation Gegenbauer and collocation Genocchi wavelet methods. The codes were written and run using Mathematica version 12 on a PC with an Intel(R) Core(TM) i5-8500 CPU @ 3.00 GHz and 12.00 GB of RAM.

References

Abd-Elhameed WM, Doha EH, Alsuyuti MM (2022) Numerical treatment of special types of odd-order boundary value problems using nonsymmetric cases of Jacobi polynomials. Prog Fract Differ Appl 8(2):305–319

Abdelkawy MA, Lopes AM, Babatin MM (2020) Shifted fractional Jacobi collocation method for solving fractional functional differential equations of variable order. Chaos Solitons Fract 134:109721

Ahmad I, Mukhtar A (2015) Stochastic approach for the solution of multi-pantograph differential equation arising in cell-growth model. Appl Math Comput 261:360–372

Akkaya T, Yalcinbas S, Sezer M (2013) Numeric solutions for the pantograph type delay differential equation using first Boubaker polynomials. Appl Math Comput 219:9484–9492

Allahviranloo T, Sahihi H (2021) Reproducing kernel method to solve fractional delay differential equations. Appl Math Comput 400:126095

Alquran M, Jaradat I (2019) Delay-asymptotic solutions for the time-fractional delay-type wave equation. Phys A: Stat Mech Appl 527:121275

Alsuyuti MM, Doha EH, Ezz-Eldien SS, Bayoumi BI, Baleanu D (2019) Modified Galerkin algorithm for solving multi-type fractional differential equations. Math Meth Appl Sci 42:1389–1412

Alsuyuti MM, Doha EH, Ezz-Eldien SS, Youssef IK (2021) Spectral Galerkin schemes for a class of multi-order fractional pantograph equations. J Comput Appl Math 384:113157

Alsuyuti MM, Doha EH, Ezz-Eldien SS (2022) Galerkin operational approach for multi-dimensions fractional differential equations. Commun Nonlinear Sci Numer Simul 114:106608

Amin R, Shah K, Asif M, Khan I (2021) A computational algorithm for the numerical solution of fractional order delay differential equations. Appl Math Comput 402:125863

Bocharov GA, Rihan FA (2000) Numerical modelling in biosciences using delay differential equations. J Comput Appl Math 125:183–199

Brunner H, Huang Q, Xie H (2011) Discontinuous Galerkin methods for delay differential equations of pantograph type. SIAM J Numer Anal 48:1944–1967

Cai G, Huang J (2002) Optimal control method with time delay in control. J Sound Vibration 251:383–394

Carpinteri A, Mainardi F (1997) Fractals and fractional calculus in continuum mechanics. Springer, Wien

Cheng X, Chen Z, Zhang Q (2015) An approximate solution for a neutral functional-differential equation with proportional delays. Appl Math Comput 260:27–34

Dalir M, Bashour M (2010) Applications of fractional calculus. Appl Math Sci 4(21):1021–1032

Dehestani H, Ordokhani Y, Razzaghi M (2019) On the applicability of Genocchi wavelet method for different kinds of fractional-order differential equations with delay. Numer Linear Algebra Appl 26:e2259

Doha EH, Bhrawy AH, Baleanu D, Ezz-Eldien SS (2013) On shifted Jacobi spectral approximations for solving fractional differential equations. Appl Math Comput 219:8042–8056

Ezz-Eldien SS (2016) New quadrature approach based on operational matrix for solving a class of fractional variational problems. J Comput Phys 317:362–381

Ezz-Eldien SS (2018) On solving systems of multi-pantograph equations via spectral tau method. Appl Math Comput 321:63–73

Ezz-Eldien SS, Doha EH (2019) Fast and precise spectral method for solving pantograph type Volterra integro-differential equations. Numer Algor 81:57–77

Ezz-Eldien SS, Doha EH, Wang Y, Cai W (2020a) A numerical treatment of the two-dimensional multi-term time-fractional mixed sub-diffusion and diffusion-wave equation. Commun Nonlinear Sci Numer Simul 91:105445

Ezz-Eldien SS, Wang Y, Abdelkawy MA, Zaky MA, Aldraiweesh AA, Machado JT (2020b) Chebyshev spectral methods for multi-order fractional neutral pantograph equations. Nonlinear Dyn 100:3785–3797

Hafez RM, Youssri YH (2022) Shifted Gegenbauer-Gauss collocation method for solving fractional neutral functional-differential equations with proportional delays. Kragujev J Math 46:981–996

Hafez RM, Hammad M, Doha EH (2022) Fractional Jacobi Galerkin spectral schemes for multi-dimensional time fractional advection-diffusion-reaction equations. Eng Comput 38:841–858

Hendy AS, Zaky MA, Staelen RHD (2021) A general framework for the numerical analysis of high-order finite difference solvers for nonlinear multi-term time-space fractional partial differential equations with time delay. Appl Numer Math 169:108–121

Hosseinpour S, Nazemi A, Tohidi E (2018) A new approach for solving a class of delay fractional partial differential equations. Mediterr J Math 15:218

Jaradat I, Alquran M, Momani S, Baleanu D (2020) Numerical schemes for studying biomathematics model inherited with memory-time and delay-time. Alex Eng J 59:2969–2974

Jaradat I, Alquran M, Sulaiman TA, Yusuf A (2022) Analytic simulation of the synergy of spatial-temporal memory indices with proportional time delay. Chaos, Solitons Fractals 156:111818

Ji JC, Leung AYT (2002) Resonances of a non-linear s.d.o.f. system with two time delays in linear feedback control. J Sound Vibration 253:985–1000

Keller AA (2010) Contribution of the delay differential equations to the complex economic macrodynamics. WSEAS Trans Syst 9:358–371

Kilbas A, Srivastava H, Trujillo J (2006) Theory and applications of fractional differential equations. Elsevier, Amsterdam

Kimeu J M (2009) Fractional calculus: Definitions and applications

Kuang Y (1993) Delay differential equations with applications in population dynamics. Academic Press, New York, USA

Kulish V, Jose L (2002) Application of fractional calculus to fluid mechanics. J Fluid Eng 124:803–806

Kumar M, Gejji VD (2019) A new family of predictor-corrector methods for solving fractional differential equations. Appl Math Comput 363:124633

Magin RL (2010) Fractional calculus models of complex dynamics in biological tissues. Comput Math Appl 59:1586–1593

Meral F, Royston T, Magin R (2010) Fractional calculus in viscoelasticity: an experimental study. Commun Nonlinear Sci Numer Simul 15:939–945

Moghaddam BP, Mostaghim ZS (2014) A novel matrix approach to fractional finite difference for solving models based on nonlinear fractional delay differential equations. Ain Shams Eng J 5:585–594

Pakdaman M, Ahmadian A, Effati S, Salahshour S, Baleanu D (2017) Solving differential equations of fractional order using an optimization technique based on training artificial neural network. Appl Math Comput 293:81–95

Podlubny I (1999) Fractional differential equations. Academic Press, San Diego

Povstenko Y (2015) Fractional thermoelasticity. Springer, Cham

Rabiei K, Razzaghi M (2021) Fractional-order Boubaker wavelets method for solving fractional Riccati differential equations. Appl Numer Math 168:221–234

Rainville ED (1971) Special functions. Chelsea, New York

Sun HG, Zhang Y, Baleanu D, Chen W, Chen YQ (2018) A new collection of real world applications of fractional calculus in science and engineering. Commun Nonlinear Sci Numer Simul 64:213–231

Syam MI, Sharadga M, Hashim I (2021) A numerical method for solving fractional delay differential equations based on the operational matrix method. Chaos Solitons Fractals 147:110977

Szegö G (1975) Orthogonal polynomials, American Mathematical Society Colloquium Publications

Tarasov VE (2011) Fractional dynamics: applications of fractional calculus to dynamics of particles, fields and media. Springer, Berlin, Heidelberg

Usman M, Hamid M, Zubair T, Haq RU, Wang W, Liu MB (2020) Novel operational matrices-based method for solving fractional-order delay differential equations via shifted Gegenbauer polynomials. Appl Math Comput 372:124985

Wu J (2012) Theory and applications of partial functional differential equations. Springer, New York

Yi M, Huang J (2014) Wavelet operational matrix method for solving fractional differential equations with variable coefficients. Appl Math Comput 230:383–394

Yuttanan B, Razzaghi M, Vo TN (2021) Legendre wavelet method for fractional delay differential equations. Appl Numer Math 168:127–142

Zaky MA, Hendy AS, Macias-Diaz JE (2020) Semi-implicit Galerkin-Legendre spectral schemes for nonlinear time-space fractional diffusion-reaction equations with smooth and nonsmooth solutions. J Sci Comput 82:1–27

Zavarei MM, Jamshidi M (1987) Time delay systems: analysis, optimization and applications (North-Holland systems and control series). Elsevier, New York

Zhao J, Jiang X, Xu Y (2021) Generalized Adams method for solving fractional delay differential equations. Math Comput Simul 180:401–419

Zuniga-Aguilar CJ, Gomez-Aguilar JF, Escobar-Jimenez RF, Romero-Ugalde HM (2019) A novel method to solve variable-order fractional delay differential equations based in Lagrange interpolations. Chaos Solitons Fract 126:266–282

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Ethics declarations

Conflict of interest

This work does not have any conflicts of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alsuyuti, M.M., Doha, E.H., Bayoumi, B.I. et al. Robust spectral treatment for time-fractional delay partial differential equations. Comp. Appl. Math. 42, 159 (2023). https://doi.org/10.1007/s40314-023-02287-w

DOI: https://doi.org/10.1007/s40314-023-02287-w

Keywords

- Delay partial differential equations

- Caputo fractional derivative

- Shifted Jacobi polynomial

- Galerkin spectral method