Abstract

In this paper, we consider exponentially fitted peer methods for the numerical solution of first order differential equations and we investigate how the frequencies can be tuned in order to obtain the maximal benefit. We will show that the key is analyzing the error’s behavior. Formulae for optimal frequencies are computed. Numerical experiments show the properties of the proposed algorithm.


1 Introduction

The exponential fitting technique was introduced a few decades ago to adapt classical algorithms so that they become particularly effective for problems with oscillating or periodic solutions (Conte et al. 2014; Conte and Paternoster 2016; D’Ambrosio and Paternoster 2014a, b; D’Ambrosio et al. 2017; Ixaru and Vanden Berghe 2004; Ixaru 2012; Ixaru et al. 1997; Paternoster 2002; Simos 2001, 1998; Vanden Berghe et al. 2003). Exponentially Fitted (EF) algorithms were initially created to make classical numerical integrators more efficient: as the oscillation frequency increases, classical integrators usually need very small stepsizes to obtain accurate solutions. In contrast, EF numerical methods can achieve the same accuracy with significantly larger stepsizes, which makes them both efficient and accurate.

Despite the considerable effort devoted to Ordinary Differential Equations (ODEs), a critical question remains: how should the frequencies be chosen to maximize the benefits of EF methods? For EF multistep methods, Ixaru et al. (2001, 2002, 2003) presented an algorithm that tunes the frequencies numerically, as optimally as possible, for systems of ODEs. The optimal frequencies were derived by exploiting the expression of the error, which depends on a combination of higher-order derivatives of the solution.

The present paper considers a general class of EF two-step peer methods (Conte et al. 2018, 2020) of the form (2) for numerical integration of ODEs (1) with oscillatory solution. We investigate Ixaru’s frequency evaluation algorithm for adapted EF peer methods. We take the behavior of the leading term of the error as a starting point of the whole investigation and we develop frequency formulae.

The paper is organized as follows: in Sect. 2, we give a short review of EF peer methods. Section 3 investigates some fundamental concepts of frequency evaluation and explores the development of the EF peer method frequency assessment, while Sect. 4 describes the estimation of derivatives. Some numerical examples in Sect. 5 illustrate the efficiency of the new frequency assessment algorithm, and, lastly, some conclusions are drawn in Sect. 6. Appendix A contains the expressions of the leading term of the error.

2 EF peer methods

We consider systems of ODEs of the form

$$\begin{aligned} y'(t)=f(t,y(t)),\quad t\in [t_0,T],\quad y(t_0)=y_0, \end{aligned}\tag{1}$$

where \(f: {\mathbb {R}}\times {\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) is sufficiently smooth to ensure that the solution exists and is unique. In addition, we assume that problem (1) possesses an oscillatory solution. Let \(\{t_{n}:=t_{0}+n h,~n=0,\ldots , N \}\) be a discretization of the interval \([t_0, T]\) with fixed stepsize \(h>0\), \(0\le c_1 \le \cdots \le c_s=1\) be fixed distinct nodes and define \(t_{ni}=t_n+c_i h\), \(i=1, \ldots ,s\). Two-step peer methods can be formulated as follows:

where \(h>0\) is the stepsize, \({\mathbb {I}}\) is the identity matrix of dimension d, \({A}=\left[ {a_{ij}} \right] _{i,j=1}^{s}\), \({B}=\left[ {b_{ij}} \right] _{i,j=1}^{s}\) and \({R}=\left[ {r_{ij}} \right] _{i,j=1}^{s}\) are the coefficient matrices. The stages vector \(Y_n= \left[ Y_{ni} \right] _{i=1}^{s}\) contains the approximations \(Y_{ni} \approx y(t_{ni})\) and \(F(Y_n)= \left[ f(t_{ni},Y_{ni}) \right] _{i=1}^{s}\). As \(c_s=1\), \(Y_{ns}\) is the approximation of the solution at grid point \(t_{n+1}\).

The order of EF peer methods has been analyzed by Schmitt and Weiner (2004) by introducing the condition

and providing the following Theorem.

Theorem 1

If \(\textrm{AB}(p+1)\) is verified, the implicit s-stage peer method (2) has order of consistency p.

Corollary 1

The peer method (2) has order \(p \ge s\) if

where \({\textbf{1}}=[1,1,\dots ,1]^\mathrm{{T}}\), \(C=\text {diag}(c_1,\dots ,c_s)\), \( D=\text {diag}(1,\dots ,s)\) and

We briefly review the procedure of construction of EF peer methods introduced in Conte et al. (2018, 2020) in the following Algorithm.

3 Frequency evaluation

3.1 Basic elements

The question of how the frequencies should be tuned to achieve maximum benefit from EF methods remained unanswered for a long time. Ixaru et al. (2001, 2002, 2003) and Vanden Berghe et al. (2001a, 2001b) proposed frequency evaluation algorithms for EF multistep methods and EF Runge–Kutta methods, respectively, which tune the frequency \(\mu \) in such a way that the principal local truncation error vanishes. Therefore, the analysis of the error behavior is a required step. We refer to Ixaru et al. (2001, 2002, 2003) and Vanden Berghe et al. (2001a, 2001b) for technical details and practical remarks, and we restrict the discussion to three relevant cases: the algorithms A0, A1, and A2, each of which integrates exactly all linear combinations of the functions in its reference set.

- Algorithm A0: \(\{1,t,t^2,\dots ,t^K\},\)
- Algorithm A1: \(\{1,t,t^2,\dots ,t^K,e^{\mu t}\,\vert \,\mu \in {\mathbb {R}}\},\)
- Algorithm A2: \(\{1,t,t^2,\dots ,t^K,e^{\pm \mu t}\,\vert \, \mu \in {\mathbb {R}}\ \text {or}\ \mu \in i{\mathbb {R}}\},\)

where K is specified by the considered method. The choice A0 covers the purely algebraic classical method; Algorithm A1 is especially of importance whenever the solution exhibits a purely exponential behavior, while Algorithm A2 describes oscillatory solutions if \(\mu \) is strictly imaginary.

As noted above, analyzing the behavior of the error is a necessary step in the investigation of the frequency. We compute the expression of the leading term of the error (lte) for these algorithms by using the general procedure described in Sect. 2, with the appropriate options for K and P in the fitting space for each of the above algorithms.

3.2 Frequency evaluation for adapted EF peer methods

To start, we apply the EF peer methods derived in Algorithm 1 to the scalar equation \(y'(t)=f(t,y(t))\); the leading term of their error (lte) then assumes the form

When we apply the three algorithms \(A0,\, A1\) and A2 to the scalar equation (1), with the appropriate options for K and P in the fitting space summarized in Table 1, Eq. (13) yields the following expressions for the lte.

- Algorithm A0: in this case \(K=s\) and \(P=-1\), therefore lte is described as follows

  $$\begin{aligned} (lteA0)=\dfrac{h^{s+1}}{(s+1)!} D^{s+1} y(t); \end{aligned}\tag{14}$$

- Algorithm A1: in this case \(K=s-1\) and \(P=0\) and lte is given by the following expressions assuming s even or odd.

  - if \(s=2\), then \(K=1,P=0\)

    $$\begin{aligned} (lteA1)_i = \frac{-h^{3}}{2!} \dfrac{{\mathcal {L}}^*_{i,2}(h,{\textbf{w}})}{Z} D^2(D^2-\mu ^2) y(t);\quad i=1,2, \end{aligned}\tag{15}$$

  - if \(s=3\), then \(K=2,P=0\)

    $$\begin{aligned} (lteA1)_i = \frac{-h^{4}}{3!} \dfrac{{\mathcal {L}}^*_{i,3}(h,{\textbf{w}})}{Z} D^3(D^2-\mu ^2) y(t),\quad i=1,2,3. \end{aligned}\tag{16}$$

- Algorithm A2: for this case with \(K=s-2 \) and \(P=0 \), assuming that s is even or odd, the following expressions provide lte.

  - if \(s=2\), then \(K=0,P=0\)

    $$\begin{aligned} (lteA2)_i = -h^{3} \dfrac{{\mathcal {L}}^*_{i,1}(h,{\textbf{w}})}{Z} D(D^2-\mu ^2) y(t);\quad i=1,2, \end{aligned}\tag{17}$$

  - if \(s=3\), then \(K=1,P=0\)

    $$\begin{aligned} (lteA2)_i = \frac{-h^{4}}{2!} \dfrac{{\mathcal {L}}^*_{i,2}(h,{\textbf{w}})}{Z} D^2(D^2-\mu ^2) y(t),\quad i=1,2,3. \end{aligned}\tag{18}$$

Our principal purpose is to determine the \(\mu \) value that guarantees maximum accuracy when the classical A0 case is replaced by one of the two EF Algorithms. For the calculation of parameter \(\mu \), we restrict discussion to the expressions for the lte of A1 and A2 cases especially for \(s=2\) and \(s=3\):

In all cases, we see that the lte consists of a product of three factors, i.e.

- a general \(h^3\) factor (in the case \(s=2\)) or a general \(h^4\) factor (in the case \(s=3\)),
- a function depending on Z which tends to the classical value when Z tends to zero,
- a factor that involves two derivatives of the solution.

The important thing for our studies is the different behavior of the third factor. This differential factor can make a real difference in accuracy. Let us then introduce the following functionals:

If a \(\mu \) exists such that \({\mathcal {D}}_1 \) vanishes identically on the interval under consideration, then the version A1 corresponding to that \(\mu \) is exact. The reason is that an identically vanishing \({\mathcal {D}}_1 \) is equivalent to the differential equation \(y^{(4)}(t)-\mu ^2 y^{''}(t)=0\) for \(s=2\) and \(y^{(5)}(t)-\mu ^2 y^{(3)}(t)=0\) for \(s=3\).

In general, no constant \(\mu \) can be found such that \({\mathcal {D}}_1 \) vanishes identically, but it makes sense to look for the value of \(\mu \) that keeps the values of \({\mathcal {D}}_1 \) as close to zero as possible for \(t_n\) in the considered interval. When \({\mathcal {D}}_1\) is held close to zero, the optimal \(\mu \) is given by

In the A1 case, the frequency must be real.

For algorithm A2, the same considerations can be repeated for \({\mathcal {D}}_2\). The reason is that an identically vanishing \({\mathcal {D}}_2\) is equivalent to the differential equation \(y^{(3)}(t)-\mu ^2 y^{'}(t)=0\) for \(s=2\) and \(y^{(4)}(t)-\mu ^2 y^{''}(t)=0\) for \(s=3\). When \({\mathcal {D}}_2\) is held close to zero, the optimal \(\mu \) is given by

The frequencies are either real or imaginary if Algorithm A2 is chosen.

Formulae (20)–(21) thus provide the optimal value of \(\mu =\textrm{i}\,\omega \), where \(\omega \) is the frequency. Once the optimal value of \(\mu \) has been found, the optimal frequency \(\omega \) follows.
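As a worked illustration of the argument above, the pointwise conditions can be written out explicitly. The display below is only a sketch obtained by solving the differential equations quoted in this section for \(\mu ^2\) at a grid point \(t_n\); the labels \(\mu ^2_{A1}\) and \(\mu ^2_{A2}\) are notation assumed here for illustration, and the display is not a verbatim copy of formulae (20)–(21).

$$\begin{aligned} s=2:&\quad \mu ^2_{A1}=\frac{y^{(4)}(t_n)}{y^{''}(t_n)}, &\mu ^2_{A2}=\frac{y^{(3)}(t_n)}{y^{'}(t_n)},\\ s=3:&\quad \mu ^2_{A1}=\frac{y^{(5)}(t_n)}{y^{(3)}(t_n)}, &\mu ^2_{A2}=\frac{y^{(4)}(t_n)}{y^{''}(t_n)}. \end{aligned}$$

For instance, for \(y(t)=\cos (\omega t)\) every one of these ratios equals \(-\omega ^2\), so that \(\mu =\pm \textrm{i}\,\omega \) and the frequency of the solution is recovered wherever the denominator does not vanish.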

4 Estimation of the derivatives

The evaluation of the optimal \(\mu \) value, and hence of the optimal frequency \(\omega \), requires the total derivatives appearing in formulae (20)–(21). At first sight this seems a very simple task: the first-order derivative is the right-hand side of Eq. (1), i.e. f(t, y(t)), and the higher-order derivatives can then be obtained by repeated differentiation. This technique works well on many problems, but Ixaru et al. (2002) demonstrate that it should be avoided on stiff problems. They also demonstrate that it is sufficient to use finite difference approximations of the derivatives. Since formulae (20)–(21) contain derivatives of orders three and four (for \(s=2\)) and four and five (for \(s=3\)), we estimate the derivatives at each integration point \(t_n\) with five-point finite difference formulae for \(s=2\) and six-point finite difference formulae for \(s=3\).

Since Van de Vyver (2005) has already given the approximation of the derivatives by five-point finite difference formulae, in this work we only mention the six-point finite difference formulae, which take as input the data at \(t_{n-4}, t_{n-3},t_{n-2}, t_{n-1}, t_n\) and \(t_{n+1}\).

The input data \(y_{n-4},\, y_{n-3},\, y_{n-2},\, y_{n-1}, y_n\) are the values of the numerical solution at \(t_{n-4}, t_{n-3},t_{n-2}, t_{n-1}, t_n\). The estimate of \(y_{n+1}\) at \(t_{n+1}\) is determined by the Milne–Simpson two-step formula (Ixaru et al. 2002) as follows:

This approximation must be made with a method whose order is the same as or higher than that of the EF peer method, and the Milne–Simpson two-step formula is sufficient for this purpose. We use the result only for the calculation of the derivatives and not for the propagation of the solution.
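The six-point estimates described above can be sketched as follows. This is a minimal illustration, not the formulae used in the paper: the weights are derived here by Taylor matching on the stencil \(t_{n-4},\ldots ,t_{n+1}\), the function names `fd_weights` and `derivative_estimate` are hypothetical, and the samples are taken from an exact cosine rather than from a numerical solution completed by a Milne–Simpson value.

```python
from fractions import Fraction
from math import factorial, cos

SIX_POINT = [-4, -3, -2, -1, 0, 1]  # offsets of t_{n-4}, ..., t_n, t_{n+1} in units of h


def fd_weights(offsets, order):
    """Exact weights w_j with sum_j w_j*y(t_n + offsets[j]*h) / h**order ~ y^(order)(t_n)."""
    n = len(offsets)
    # Taylor matching: sum_j w_j * offsets[j]**k = k! if k == order else 0, k = 0..n-1.
    rows = [[Fraction(o) ** k for o in offsets]
            + [Fraction(factorial(order) if k == order else 0)]
            for k in range(n)]
    # Gauss-Jordan elimination in exact rational arithmetic (augmented matrix).
    for col in range(n):
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        p = rows[col][col]
        rows[col] = [v / p for v in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [rows[k][n] for k in range(n)]


def derivative_estimate(samples, order, h, offsets=SIX_POINT):
    """Finite difference estimate of y^(order)(t_n) from samples on the stencil."""
    weights = fd_weights(offsets, order)
    return sum(float(w) * y for w, y in zip(weights, samples)) / h ** order


# Illustration with y(t) = cos(omega*t): the ratio y''''/y'' should be near -omega**2.
omega, h, t_n = 2.0, 0.01, 1.0
samples = [cos(omega * (t_n + j * h)) for j in SIX_POINT]
mu2 = derivative_estimate(samples, 4, h) / derivative_estimate(samples, 2, h)
print(mu2)
```

With six points the fourth derivative is approximated to second order in \(h\) and the fifth derivative only to first order, so the quality of the resulting \(\mu ^2\) estimate depends on the stepsize.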

4.1 Case \(s=2\)

If \( t_n \) is a root of \( y ^ {''} (t) \), the algorithm A1 is not defined, while A2 is not defined when \( t_n\) is a root of \( y ^{'}(t) \). In a very special case, when \( t_n \) is a root of both \( y ^ {'} (t) \) and \( y ^ {''} (t) \) EF algorithms are not suitable and so the classic A0 Algorithm must be activated. In general, a logical way to choose between A1 and A2 involves comparing \( \vert y ^ {'} (t) \vert \) and \( \vert y ^ {''} (t) \vert \). If \( \vert y ^ {'} (t_n) \vert <\vert y ^ {''} (t_n) \vert \) then A1 is selected, otherwise A2.

4.2 Case \(s=3\)

In this case, algorithm A1 is not defined if \( t_n \) is a root of \( y ^ {(3)} (t) \), whereas A2 is not defined if \( t_n\) is a root of \( y ^ {''} ( t) \). If both \( y ^ {''} (t) \) and \( y ^ {(3)} (t) \) change sign within the same time interval, neither of the two EF algorithms is suitable, so the classic A0 should be enabled. In general, as in the previous case, a reasonable way to choose between A1 and A2 is to compare \( \vert y ^ {''} (t) \vert \) and \( \vert y ^ {(3)} (t) \vert \). If \( \vert y ^ {''} (t_n) \vert <\vert y^ {(3)}(t_n) \vert \) then algorithm A1 is selected, otherwise A2.
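The selection rules of Sects. 4.1 and 4.2 can be sketched in a few lines. This is an illustrative reading of the text, not the authors' implementation: the names `select_algorithm` and `z_and_omega` and the tolerance `tol` used to detect a common root are assumptions, and the \(\mu ^2\) ratios are the pointwise solutions of the differential equations quoted in Sect. 3.2.

```python
import math


def select_algorithm(derivs, s, tol=1e-12):
    """Pick A0, A1 or A2 at one grid point and return (name, mu2).

    derivs[k] holds an estimate of the k-th derivative y^(k)(t_n);
    mu2 is the pointwise estimate of mu^2 (None for A0).
    """
    low, high = abs(derivs[s - 1]), abs(derivs[s])
    if low <= tol and high <= tol:
        # Both derivatives vanish (up to tol): fall back to the classical method.
        return "A0", None
    if low < high:
        # A1, from D^s (D^2 - mu^2) y = 0. The text requires a real mu here,
        # so the caller should check that the returned ratio is nonnegative.
        return "A1", derivs[s + 2] / derivs[s]
    # A2, from D^(s-1) (D^2 - mu^2) y = 0; mu may be real or imaginary.
    return "A2", derivs[s + 1] / derivs[s - 1]


def z_and_omega(mu2, h):
    """Return Z = mu^2 h^2 and, when mu = i*omega is imaginary, the frequency omega."""
    z = mu2 * h * h
    omega = math.sqrt(-mu2) if mu2 < 0 else None
    return z, omega


# Illustration for s = 2 with y(t) = cos(w*t) at t = 1 (exact derivatives).
w, t = 0.5, 1.0
derivs = {1: -w * math.sin(w * t), 2: -w**2 * math.cos(w * t),
          3: w**3 * math.sin(w * t), 4: w**4 * math.cos(w * t)}
name, mu2 = select_algorithm(derivs, s=2)
print(name, mu2, z_and_omega(mu2, h=0.1))
```

In practice the entries of `derivs` would come from the finite difference estimates of this section rather than from exact derivatives.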

5 Numerical experiments

In this section we present numerical experiments showing the behaviour of the new “optimal EF peer methods”, whose Z-dependent coefficients are computed for the optimal \(\mu \)-values in (20)–(21). In the following examples, we apply the optimal EF peer methods, together with the classic and EF peer methods, to the test cases. In Examples 1 and 2 we compare the errors of the optimal EF implicit peer methods with those of the classic and EF implicit peer methods of Conte et al. (2020), and in Example 3 we compare our results with those reported by Ixaru et al. (2002).

The error is measured as the infinity norm of the difference between the numerical solution and the exact solution at the endpoint, and it is reported in the tables. In addition, we use the following notation for the numerical methods:

- CL = classic,
- EF = exponentially fitted,
- IM P2 = implicit peer method of order 2.

In the following examples, we use the notation reported in Conte et al. (2020). We consider \(s=2\). In this case \(K = 0\) and \(P = 0\). We fix \(c_1=0\), \(c_2=1\). According to \(Z={\mu }^2 h^2=-\omega ^2h^2\), the numerical values of \(a_{ij}\) and \(b_i\) are computed either for real or imaginary \(\mu \)-values. The corresponding optimal EF IM peer method and EF IM peer method are:

Example 1

Let us consider the Prothero–Robinson problem

whose exact solution is

The oscillating behavior of the exact solution leads us to use the EF methods with the parameter \(\mu \), which characterizes the functions belonging to the fitting space, equal to \(\mu =\textrm{i}\,\omega \). The problem is therefore integrated by the EF peer methods with the parameter \(\omega \) chosen equal to the frequency of the exact solution, i.e. \(\omega =50\).

We also consider the case in which the oscillation frequency \(\omega \) is not known exactly. In this case we compute the frequency from formulae (20)–(21), denote it by \(\omega _\mathrm{{op}}\), and employ the EF peer methods whose coefficients are computed for the frequency \(\omega _\mathrm{{op}}\), i.e. for \(\mu =i\,\omega _\mathrm{{op}}\).

Starting from the given initial conditions, we carried out the integration with the CL, EF IM, and optimal EF IM peer methods, which are constructed by the procedure described in Algorithm 1. We consider the interval \([0,\frac{\pi }{2}]\) with \(N=320, 640, 1280\) grid points. Tables 2 and 3 report the absolute errors of the considered methods for \(\lambda =-1\) (non-stiff case) and \(\lambda =-10^6\) (stiff case). The optimal EF IM peer method is much more accurate than CL and close to the EF IM peer method, irrespective of whether the problem is stiff or non-stiff.

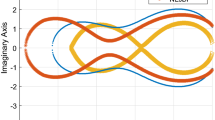

For additional confirmation, we present some graphs for both cases \(\lambda =-1\) and \(\lambda =-10^6\). In Fig. 1, we depict the variation of \(\omega _\mathrm{{op}}\) at each integration point for the problem when the A2 algorithm is chosen. As expected, Fig. 1 shows that the obtained \(\omega _\mathrm{{op}}\) is close to \(\omega =50\). Fig. 2 shows the efficiency curve for this problem obtained by the CL IM, EF IM, and optimal EF IM peer methods.

Example 2

Consider the Prothero–Robinson problem

whose exact solution is

The oscillating behavior of the exact solution leads us to use the EF methods with the parameter \(\mu =\textrm{i}\,\omega \). This system is integrated by the EF peer methods with the parameter \(\omega \) chosen equal to the frequency of the exact solution, i.e. \(\omega =100\). In this example, we also consider the case in which the oscillation frequency \(\omega \) is not known exactly, and we integrate the system by the optimal EF peer methods with \(\omega _\mathrm{{op}}\). In detail, starting from the given initial conditions, we performed the experiments with the CL, EF IM, and optimal EF IM peer methods, which are constructed by the procedure outlined in Algorithm 1. We examine the interval \([0,\frac{\pi }{2}]\) with \(N=320, 640, 1280\) grid points. The absolute errors of the considered methods are listed in Tables 4 and 5 for \(\lambda =-1\) (non-stiff case) and \(\lambda =-10^6\) (stiff case).

Whether the problem is stiff or non-stiff, the optimal EF IM peer methods perform much better than CL IM and are close to the EF IM peer methods. For further validation, we provide some graphs for both cases \(\lambda =-1 \) and \(\lambda =-10^6 \). Fig. 3 shows the efficiency curve for this problem obtained by the CL, EF, and optimal EF IM peer methods. In Fig. 4, we depict the variation of \(\omega _\mathrm{{op}}\) at each integration point for the problem when the A2 algorithm is chosen. As predicted, Fig. 4 shows that the obtained \(\omega _\mathrm{{op}}\) is close to \(\omega =100\).

Example 3

Consider the following test case

with the exact solution

This is a simple differential equation, and it helps to illustrate some interesting aspects that arise when (24) is solved by the optimal EF IM peer methods introduced in this work, whose Z-dependent coefficients are computed for the optimal \(\mu \)-values. We can construct the optimal \(\mu \) value for both the A1 and A2 algorithms by using formulae (20) and (21). For instance, when \(s=2\), formulae (20) give for A1 the constant optimal \(\mu \) value

while formulae (21) give for A2 the optimal \(\mu ^2\) value

It follows that, for the A1 algorithm with \(s=2\), we do not have all of the data needed to construct the optimal \(\mu \) value. For this reason, in this test case we construct the solution by the A2 algorithm over the whole interval. In Fig. 5, we depict the variation of the optimal \(\mu ^2\) at each integration point for the problem. As predicted, Fig. 5 shows that \(\mu ^2 \simeq 0.024\) when \(t=10,\,s=2,\,h=0.0125\), in agreement with the theoretically expected value 0.024 given by \(\varphi _2(10)\).

This test case was used by Ixaru et al. (2002), who applied EF multistep methods to Eq. (24). In Table 6, we report the absolute errors at \(t=1, t=5\) and \(t=10\), with the fixed stepsizes \(h=0.0500, 0.0250\) and \(h=0.0125\), obtained by the optimal EF IM peer methods and compare them with the results reported in Ixaru et al. (2002). From this table, we observe that for \(s = 2\) the optimal EF IM peer methods show the same accuracy behavior as the EF multistep methods of Ixaru et al. (2002).

Plot of calculated optimal \(\mu ^2\) value for problem (24) for \(s=2,\,h=0.0125\)

6 Conclusion

In this paper, we applied EF peer methods for the numerical solution of first-order ODEs and examined the problem of how the frequencies should be tuned to obtain the maximal benefit from the exponential fitting versions. To answer this question, we analyzed the error behavior of EF peer methods. We have succeeded in proposing formulae for optimal \(\mu \) values. Under this condition and with the determination of optimal \(\mu \) values, we achieved the “optimal EF peer methods”. The introduced methods were tested on some examples, and the efficiency of optimal EF peer methods was shown.

References

Conte D, Paternoster B (2016) Modified Gauss–Laguerre exponential fitting based formulae. J Sci Comput 69(1):227–243

Conte D, Ixaru LG, Paternoster B, Santomauro G (2014) Exponentially-fitted Gauss–Laguerre quadrature rule for integrals over an unbounded interval. J Comput Appl Math 255:725–736

Conte D, D’Ambrosio R, Moccaldi M, Paternoster B (2018) Adapted explicit two-step peer methods. J Numer Math 255:725–736

Conte D, Mohammadi F, Moradi L, Paternoster B (2020) Exponentially fitted two-step peer methods for oscillatory problems. Comput Appl Math. https://doi.org/10.1007/s40314-020-01202-x

D’Ambrosio R, Paternoster B (2014a) Exponentially fitted singly diagonally implicit Runge–Kutta methods. J Comput Appl Math 263:277–287

D’Ambrosio R, Paternoster B (2014b) Numerical solution of a diffusion problem by exponentially fitted finite difference methods. SpringerPlus 3:425

D’Ambrosio R, Moccaldi M, Paternoster B (2017) Adapted numerical methods for advection–reaction–diffusion problems generating periodic wavefronts. Comput Math Appl. https://doi.org/10.1016/j.camwa.2017.04.023

Ixaru LG (2012) Runge–Kutta method with equation dependent coefficients. Comput Phys Commun 183:63–69

Ixaru LG, Vanden Berghe G (2004) Exponential fitting. Kluwer, Boston

Ixaru LG, Vanden Berghe G, De Meyer H, Van Daele M (1997) Four-step exponential-fitted methods for nonlinear physical problems. Comput Phys Commun 100:56–70

Ixaru LG, Rizea M, De Meyer H, Vanden Berghe G (2001) Weights of the exponential fitting multistep algorithms for ODEs. J Comput Appl Math 132:83–93

Ixaru LG, Vanden Berghe G, De Meyer H (2002) Frequency evaluation in exponential fitting multistep algorithms for ODEs. J Comput Appl Math 140:423–434

Ixaru LG, Vanden Berghe G, De Meyer H (2003) Exponentially fitted variable two-step BDF algorithms for first order ODEs. Comput Phys Commun 150:116–128

Paternoster B (2002) Two step Runge–Kutta–Nyström methods for \(y''=f(x,y)\) and P-stability. Lect Notes Comput Sci 2331:459–466

Schmitt BA, Weiner R (2004) Parallel two-step W-methods with peer variables. SIAM J Numer Anal 42:265–282

Simos TE (1998) An exponentially-fitted Runge–Kutta method for the numerical integration of initial-value problems with periodic or oscillating solutions. Comput Phys Commun 115:1–8

Simos TE (2001) A fourth algebraic order exponentially-fitted Runge–Kutta method for the numerical solution of the Schrödinger equation. IMA J Numer Anal 21:919–931

Van de Vyver H (2005) Frequency evaluation for exponentially fitted Runge–Kutta methods. J Comput Appl Math 184:442–463

Vanden Berghe G, Ixaru LG, De Meyer H (2001a) Frequency determination and step-length control for exponentially fitted Runge–Kutta methods. J Comput Appl Math 132:95–105

Vanden Berghe G, Ixaru LG, Van Daele M (2001b) Optimal implicit exponentially fitted Runge–Kutta methods. Comput Phys Commun 140:346–357

Vanden Berghe G, Van Daele M, Van de Vyver H (2003) Exponential fitted Runge–Kutta methods of collocation type: fixed or variable knot points? J Comput Appl Math 159:217–239

Acknowledgements

The authors would like to thank the anonymous referees who provided valuable and detailed comments to improve the quality of the publication. The authors are members of the GNCS group. This work was supported by the GNCS-INDAM project and by the Italian Ministry of University and Research (MUR) through the PRIN 2017 project (no. 2017JYCLSF) Structure preserving approximation of evolutionary problems, and the PRIN 2020 project (no. 2020JLWP23) Integrated Mathematical Approaches to SocioEpidemiological Dynamics (CUP: E15F21005420006).

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.


Additional information

Communicated by Jose Alberto Cuminato.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Conte, D., Moradi, L. & Paternoster, B. Frequency evaluation for adapted peer methods. Comp. Appl. Math. 42, 78 (2023). https://doi.org/10.1007/s40314-023-02223-y
