Abstract

Pareto distributions are widely used models in economics, finance and actuarial sciences. As a result, a number of goodness-of-fit tests have been proposed for these distributions in the literature. We provide an overview of the existing tests for the Pareto distribution, focussing specifically on the Pareto type I distribution. To date, only a single overview paper on goodness-of-fit testing for Pareto distributions has been published. That paper has a much wider scope than the current paper, as it covers multiple types of Pareto distributions, and the two papers differ in a number of respects. First, the narrower focus on the Pareto type I distribution allows a larger number of tests to be included. Second, the current paper is concerned with composite hypotheses, whereas the mentioned overview considers simple hypotheses (in which the parameters of the Pareto distribution in question are specified). Third, the sample sizes considered in the two papers differ substantially. In addition, we consider two different methods of fitting the Pareto type I distribution: the method of maximum likelihood and a method closely related to moment matching. It is demonstrated that the method of estimation has a profound effect, not only on the powers achieved by the various tests, but also on the way in which numerical critical values are calculated. We show that, when using maximum likelihood, the resulting critical values are shape invariant and can be obtained using a Monte Carlo procedure. This is not the case when moment matching is employed. The paper includes an extensive Monte Carlo power study. Based on the results obtained, we recommend the use of a test based on the phi divergence together with maximum likelihood estimation.

1 Introduction and motivation

The Pareto distribution was first introduced by the economist Vilfredo Pareto in 1897 as a model for the distribution of income, see Pareto [41]. Since then the Pareto distribution has been widely used in a variety of fields including economics, finance, actuarial science, and reliability theory, see, e.g., Nofal and El Gebaly [38] as well as Ismaïl [24]. For an in-depth discussion of the Pareto distribution the interested reader is referred to Arnold [7] where the role of this distribution in the modelling of data is discussed.

The popularity of the Pareto distribution has prompted research into several generalisations of this model. Subsequently, the originally proposed distribution became known as the Pareto type I distribution in order to distinguish this model from the variants known as the Pareto types II, III and IV as well as the so-called generalised Pareto distribution. These distributions, as well as the relationships between them, are described in detail in Arnold [7].

Due to the wide range of applications of the various types of Pareto distributions, a number of tests have been developed for the hypothesis that observed data follow a Pareto distribution. This paper provides an overview of the goodness-of-fit tests specifically developed for the Pareto type I distribution available in the literature. Although numerous overview papers are available for goodness-of-fit tests for distributions such as the normal distribution, see, e.g., Bera et al. [9], and the exponential distribution, see, e.g., Allison et al. [5], the only overview paper of this kind relating to the Pareto distribution is Chu et al. [16]. The latter investigates several existing tests for the Pareto types I and II as well as the generalised Pareto distribution. However, due to the wider scope, Chu et al. [16] does not review all of the tests available for the Pareto type I distribution; several recently proposed tests are excluded from the comparisons provided. The current paper has a narrower scope and provides an overview of existing tests specifically for the Pareto type I distribution, hereafter simply referred to as the Pareto distribution.

A further distinction between Chu et al. [16] and the study presented here is that the former considers simple hypotheses in which the parameters of the Pareto distribution are specified beforehand, whereas the current paper is concerned with the testing of the composite hypothesis that data follow a Pareto distribution with unspecified parameters. Furthermore, note that the sample sizes considered in the two papers are quite distinct; while Chu et al. [16] considers the performance of tests for larger sample sizes, our focus is on the performance of the tests in the case of smaller samples. Additionally, Chu et al. [16] employs only maximum likelihood estimation, whereas the current paper uses both maximum likelihood and the adjusted method of moments estimators. In the study presented here, we compare the powers achieved by the various tests using the two different estimation techniques and we demonstrate that the parameter estimation method, perhaps surprisingly, substantially influences the powers associated with the various tests. Lastly, the critical values used in Chu et al. [16] are obtained using a bootstrap approach; in Sect. 3, we show that it is possible to obtain critical values independent of the estimated parameters when using maximum likelihood estimation. This allows us to estimate critical values without resorting to a bootstrap procedure in the case where maximum likelihood parameter estimates are employed.

In order to proceed we introduce some notation. Let \(X,X_1,X_2,\dots ,X_n\) be independent and identically distributed (i.i.d.) continuous positive random variables with an unknown distribution function F. Let \(X_{(1)}\le X_{(2)}\le \cdots \le X_{(n)}\) denote the order statistics of \(X_1,X_2,\dots ,X_n\). Denote the Pareto distribution function by

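$$\begin{aligned} F_{\beta ,\sigma }(x)=1-\left( \frac{x}{\sigma }\right) ^{-\beta },\quad x\ge \sigma , \qquad (1.1) \end{aligned}$$

and the density function by

$$\begin{aligned} f_{\beta ,\sigma }(x)=\frac{\beta \sigma ^{\beta }}{x^{\beta +1}},\quad x\ge \sigma , \end{aligned}$$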

where \(\beta >0\) is a shape parameter and \(\sigma >0\) is a scale parameter. To indicate that the distribution of a random variable X is the Pareto distribution with shape and scale parameters \(\beta \) and \(\sigma \), we make use of the following shorthand notation: \(X \sim P(\beta ,\sigma )\).
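As a concrete illustration of this notation, the following Python sketch evaluates the distribution and density functions and draws a sample by inverse transform. It assumes the standard Pareto type I form \(F_{\beta ,\sigma }(x)=1-(x/\sigma )^{-\beta }\) for \(x\ge \sigma \); the function names are hypothetical and chosen for this illustration only.

```python
import random


def pareto_cdf(x, beta, sigma):
    """F(x) = 1 - (x / sigma)^(-beta) for x >= sigma, and 0 below sigma."""
    if x < sigma:
        return 0.0
    return 1.0 - (x / sigma) ** (-beta)


def pareto_pdf(x, beta, sigma):
    """f(x) = beta * sigma^beta / x^(beta + 1) for x >= sigma, and 0 below sigma."""
    if x < sigma:
        return 0.0
    return beta * sigma ** beta / x ** (beta + 1)


def pareto_sample(n, beta, sigma, rng):
    """Inverse transform: X = sigma * (1 - U)^(-1 / beta) with U uniform on [0, 1)."""
    return [sigma * (1.0 - rng.random()) ** (-1.0 / beta) for _ in range(n)]


rng = random.Random(1)  # fixed seed so that the illustration is reproducible
xs = pareto_sample(5, beta=2.0, sigma=1.5, rng=rng)
```

Every simulated value is at least \(\sigma \), which reflects the fact that \(\sigma \) determines the support of the distribution.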

The hypothesis to be tested is that an observed data set is realised from a Pareto distribution, but we distinguish between two distinct hypothesis testing scenarios. In the first scenario, the value of \(\sigma \) in (1.1) is known while the value of \(\beta \) is unspecified. Note that \(\sigma \) determines the support of the Pareto distribution. As a result, if the support of the distribution is known, then the value of \(\sigma \) is also known. As a concrete example, consider the case of an insurance company. Typically, an insurance claim is subject to a so-called excess, meaning that the insurance company will only receive a claim if it exceeds a known, fixed value. A closely related example is considered in Sect. 5; here the monetary expenses (above a certain threshold) resulting from wind related catastrophes are examined. Another example is found in Arnold [7], where the lifetime tournament earnings of professional golfers are considered. However, only golfers with total lifetime earnings exceeding $700 000 are considered. In the second hypothesis testing scenario considered, we may be interested in modelling a phenomenon for which the support of F is unknown and the values of both \(\beta \) and \(\sigma \) require estimation. In both testing scenarios, we are interested in testing the composite goodness-of-fit hypothesis

$$\begin{aligned} H_0: F(x)=F_{\beta ,\sigma }(x) \qquad (1.2) \end{aligned}$$

for some \(\beta >0\), \(\sigma >0\) and all \(x>\sigma \). This hypothesis is to be tested against general alternatives.

The remainder of the paper is organised as follows. Section 2 provides an overview of a large number of tests for the Pareto distribution based on a wide range of characterisations of this distribution. Section 3 considers two types of estimators for the parameters of the Pareto distribution: the method of maximum likelihood as well as a method closely related to the method of moments. This section also details the estimation of critical values for the tests considered. An extensive Monte Carlo study is presented in Sect. 4. This section investigates and compares the finite sample performance of the tests in a variety of settings. Section 5 presents a practical implementation of the goodness-of-fit tests as well as the parameter estimation techniques considered. These techniques are demonstrated using a data set comprising the monetary expenses resulting from wind related catastrophes in 40 separate instances during the year 1977. Some conclusions are presented in Sect. 6.

2 Goodness-of-fit tests for the Pareto distribution

We discuss various goodness-of-fit tests for the Pareto distribution below; tests are grouped according to the characteristic of the Pareto distribution that the tests are based on. We consider tests utilising the empirical distribution function, likelihood ratios, entropy, phi-divergence, the empirical characteristic function as well as the Mellin transform. Additionally, the discussion below includes tests based on the so-called inequality curve as well as various characterisations of the Pareto distribution. All tests are omnibus tests, except where stated otherwise. To simplify notation, let \(U_j = F_{{\beta },{\sigma }}(X_j)\) and \({\widehat{U}}_j = F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(X_j)\), \(j=1,2,...,n\), where \({\widehat{\beta }}_n\) and \({\widehat{\sigma }}_n\) are consistent estimates of the shape and scale parameters of the Pareto distribution (these estimates will be discussed in Sect. 3). Under \(H_0\), we have from the probability integral transform that \(U_j \sim U[0,1]\) and that \({\widehat{U}}_j\) should be approximately standard uniformly distributed. Some of the tests below exploit this property.
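The transformed values \({\widehat{U}}_j\) can be computed as in the sketch below. It assumes the first testing scenario of Sect. 1 (known \(\sigma \)) and uses the usual maximum likelihood estimate \({\widehat{\beta }}_n=n/\sum _{j}\log (X_j/\sigma )\); this choice is an assumption made for the illustration, since the estimators actually used are only introduced in Sect. 3. The function names and the data are hypothetical.

```python
import math


def beta_mle_known_scale(xs, sigma):
    """Assumed estimator: beta_hat = n / sum(log(X_j / sigma)), with sigma known."""
    return len(xs) / sum(math.log(x / sigma) for x in xs)


def fitted_uniforms(xs, beta_hat, sigma):
    """U_hat_j = F(X_j) = 1 - (X_j / sigma)^(-beta_hat) under the fitted Pareto law."""
    return [1.0 - (x / sigma) ** (-beta_hat) for x in xs]


data = [1.2, 1.5, 2.0, 3.1, 4.8, 9.6]  # made-up values, all larger than sigma = 1
beta_hat = beta_mle_known_scale(data, sigma=1.0)
u_hat = fitted_uniforms(data, beta_hat, sigma=1.0)
```

Under the null hypothesis the values in `u_hat` should resemble a sample from the standard uniform distribution, which is the property exploited by several of the tests below.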

2.1 Tests based on the empirical distribution function (edf)

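Classical edf-based tests, such as the Kolmogorov–Smirnov, Cramér–von Mises, and Anderson–Darling tests, are based on a distance measure between parametric and non-parametric estimates of the distribution function. The non-parametric estimate of the distribution function of \(X_1,X_2,...,X_n\) used is the edf,

$$\begin{aligned} F_n(x)=\frac{1}{n}\sum _{j=1}^{n}I(X_j\le x), \end{aligned}$$

with \(I(\cdot )\) the indicator function, while the parametric estimate of the distribution function is

$$\begin{aligned} F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)=1-\left( \frac{x}{{\widehat{\sigma }}_n}\right) ^{-{\widehat{\beta }}_n},\quad x\ge {\widehat{\sigma }}_n. \end{aligned}$$

The Kolmogorov–Smirnov test statistic, corresponding to the supremum difference between \(F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}\) and \(F_n\), is

$$\begin{aligned} KS_n=\sup _{x}\left| F_n(x)-F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)\right| . \end{aligned}$$

The remaining edf test statistics considered are (weighted) \(L^2\) distances and have the following general form,

$$\begin{aligned} n\int _{-\infty }^{\infty }\left\{ F_n(x)-F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)\right\} ^2w(x)\,\textrm{d}F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x), \qquad (2.1) \end{aligned}$$

where w(x) is some weight function. Choosing \(w(x)=1\) in (2.1), we have the Cramér–von Mises test with directly calculable form

$$\begin{aligned} CM_n=\frac{1}{12n}+\sum _{j=1}^{n}\left( {\widehat{U}}_{(j)}-\frac{2j-1}{2n}\right) ^2. \end{aligned}$$

When choosing \(w(x)=\left[ F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)\{1-F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)\}\right] ^{-1}\), we obtain the Anderson–Darling test

$$\begin{aligned} AD_n=-n-\frac{1}{n}\sum _{j=1}^{n}(2j-1)\left\{ \log \left( {\widehat{U}}_{(j)}\right) +\log \left( 1-{\widehat{U}}_{(n+1-j)}\right) \right\} . \end{aligned}$$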

Finally, setting \(w(x)=[1-F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)]^{-2}\), we arrive at the so-called modified Anderson–Darling test

While the \(CM_n\), \(AD_n\) and \(MA_n\) tests are all weighted \(L^2\) distances between the parametric and non-parametric estimates of the distribution function, the weight functions used vary the importance allocated to different types of deviations between these estimates. For example, when comparing the Cramér–von Mises and Anderson–Darling tests, differences in the tail of the distribution are more heavily weighted in the case of the latter than the former. For further discussions on these edf-based tests, see Klar [27] and D’Agostino and Stephens [20]. All of the above tests reject the null hypothesis for large values of the test statistics.
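The three classical statistics can be evaluated directly from the ordered transformed values \({\widehat{U}}_{(1)}\le \cdots \le {\widehat{U}}_{(n)}\). The sketch below assumes the standard computing formulas for the Kolmogorov–Smirnov, Cramér–von Mises and Anderson–Darling statistics applied to values that are uniform under the null hypothesis; the function name is hypothetical, and the modified Anderson–Darling statistic is deliberately left out.

```python
import math


def edf_statistics(u_hat):
    """Return (KS, CM, AD) computed from fitted uniforms strictly inside (0, 1)."""
    u = sorted(u_hat)
    n = len(u)
    # Kolmogorov-Smirnov: largest deviation of the edf above and below the fitted cdf.
    ks_plus = max((j + 1) / n - u[j] for j in range(n))
    ks_minus = max(u[j] - j / n for j in range(n))
    ks = max(ks_plus, ks_minus)
    # Cramer-von Mises: 1/(12n) + sum of (U_(j) - (2j - 1)/(2n))^2, j = 1, ..., n.
    cm = 1.0 / (12 * n) + sum(
        (u[j] - (2 * j + 1) / (2 * n)) ** 2 for j in range(n)
    )
    # Anderson-Darling: -n - (1/n) sum (2j - 1){log U_(j) + log(1 - U_(n+1-j))}.
    ad = -n - sum(
        (2 * j + 1) * (math.log(u[j]) + math.log(1.0 - u[n - 1 - j]))
        for j in range(n)
    ) / n
    return ks, cm, ad


ks, cm, ad = edf_statistics([0.25, 0.75])
```

Note that the Anderson–Darling statistic requires every \({\widehat{U}}_j\) to lie strictly between 0 and 1, since the logarithms are otherwise undefined.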

2.2 Tests based on likelihood ratios

Zhang [54] proposes two general test statistics which are used to test for normality; below we adapt these tests in order to test for the Pareto distribution. The test statistics are of the form

where \(G_n(x)\) is the likelihood ratio statistic defined as

The two choices of \(\textrm{d}w(x)\) that Zhang [54] proposes, as well as the test statistics resulting from each of these choices, are presented below. The results are obtained upon setting \(F_n(X_{(j)})=(j-\frac{1}{2})/n\).

- Choosing \(\textrm{d}w(x)=\left[ F_n(x)\{1-F_n(x)\}\right] ^{-1}\textrm{d}F_n(x)\) leads to

$$\begin{aligned} ZA_n=-\sum _{j=1}^{n}\left\{ \frac{\log \left( {\widehat{U}}_{(j)}\right) }{n-j+\frac{1}{2}}+\frac{\log \left( 1-{\widehat{U}}_{(j)}\right) }{j-\frac{1}{2}}\right\} . \end{aligned}$$

- Choosing \(\textrm{d}w(x)=\left[ F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)\{1-F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)\}\right] ^{-1}\textrm{d}F_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(x)\) results in

$$\begin{aligned} ZB_n=\sum _{j=1}^{n}\left\{ \log \left( \frac{\left( {\widehat{U}}_{(j)}\right) ^{-1}-1}{(n-\frac{1}{2})/(j-\frac{3}{4})-1}\right) \right\} ^2. \end{aligned}$$

Motivated by the high powers often obtained using the modified Anderson–Darling test, we also include the choice \(\textrm{d}w(x)=\{1-F_n(x)\}^{-2}\textrm{d}F_n(x)\), which leads to the test statistic

All three of these tests reject the null hypothesis for large values of the test statistics.
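The statistics \(ZA_n\) and \(ZB_n\) displayed above depend on the data only through the ordered values \({\widehat{U}}_{(j)}\), so they are straightforward to compute. The following Python sketch transcribes the two displayed formulas; the function name is hypothetical and the third statistic is not covered.

```python
import math


def zhang_statistics(u_hat):
    """Return (ZA_n, ZB_n) from fitted uniforms strictly inside (0, 1)."""
    u = sorted(u_hat)
    n = len(u)
    # ZA_n = -sum{ log U_(j) / (n - j + 1/2) + log(1 - U_(j)) / (j - 1/2) }
    za = -sum(
        math.log(u[j - 1]) / (n - j + 0.5) + math.log(1.0 - u[j - 1]) / (j - 0.5)
        for j in range(1, n + 1)
    )
    # ZB_n = sum{ log( (1/U_(j) - 1) / ((n - 1/2)/(j - 3/4) - 1) ) }^2
    zb = sum(
        math.log((1.0 / u[j - 1] - 1.0) / ((n - 0.5) / (j - 0.75) - 1.0)) ** 2
        for j in range(1, n + 1)
    )
    return za, zb


za, zb = zhang_statistics([0.5])
```

For a single observation equal to 0.5 the second statistic vanishes, because both ratios inside the logarithm equal one.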

Building on the tests for the assumption of normality that Zhang [54] proposes, Alizadeh Noughabi [2] adapts two of these tests to test the assumption of exponentiality. Neither Zhang [54] nor Alizadeh Noughabi [2] derives the asymptotic properties of these tests; both instead present extensive Monte Carlo studies to investigate their finite sample performances. The authors found that these tests are quite powerful compared to other tests (especially the traditional edf-based tests) against a range of alternatives.

Remark

Zhang [54] also considers the test

However, we do not include \(ZD_n\) in our Monte Carlo study as \(ZA_n\) and \(ZB_n\) proved more powerful in the papers mentioned.

2.3 Tests based on entropy

A further class of tests is based on the concept of entropy, first introduced in Shannon [47]. The entropy of a random variable X with density and distribution functions f and F, respectively, is defined to be

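$$\begin{aligned} H=-\int _{0}^{\infty }f(x)\log \left( f(x)\right) \textrm{d}x=\int _{0}^{1}\log \left( \frac{\textrm{d}}{\textrm{d}p}F^{-1}(p)\right) \textrm{d}p, \qquad (2.3) \end{aligned}$$

where \(F^{-1}(\cdot )\) denotes the quantile function of X. The concept of entropy has been applied in several studies, see, e.g., Kullback [29], Kapur [26] and Vasicek [50], where, in particular, Vasicek [50] proposes using

$$\begin{aligned} H_{n,m}=\frac{1}{n}\sum _{j=1}^{n}\log \left\{ \frac{n}{2m}\left( X_{(j+m)}-X_{(j-m)}\right) \right\} \qquad (2.4) \end{aligned}$$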

as an estimator for H, where \(X_{(j)}=X_{(1)}\) for \(j<1\), \(X_{(j)}=X_{(n)}\) for \(j>n\), and m is a window width subject to \(m\le \frac{n}{2}\). We now consider two goodness-of-fit tests based on concepts related to entropy: the Kullback–Leibler divergence and the Hellinger distance, where H is estimated by \(H_{n,m}\) in the test statistic.
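A minimal sketch of this spacing-based estimator is given below. It assumes the usual form \(H_{n,m}=\frac{1}{n}\sum _{j=1}^{n}\log \{\frac{n}{2m}(X_{(j+m)}-X_{(j-m)})\}\) together with the boundary convention stated above; the function name is hypothetical, and the data must not contain ties within a window, since a zero spacing makes the logarithm undefined.

```python
import math


def vasicek_entropy(xs, m):
    """Spacing-based entropy estimate H_{n,m} with window width m <= n / 2."""
    x = sorted(xs)
    n = len(x)
    if not 1 <= m <= n / 2:
        raise ValueError("window width m must satisfy 1 <= m <= n / 2")
    total = 0.0
    for j in range(n):
        upper = x[min(j + m, n - 1)]  # X_(j+m), replaced by X_(n) beyond the sample
        lower = x[max(j - m, 0)]      # X_(j-m), replaced by X_(1) before the sample
        total += math.log(n / (2.0 * m) * (upper - lower))
    return total / n


h_est = vasicek_entropy([1.0, 2.0, 3.0, 4.0], m=1)
```

For the four equally spaced points used here the spacings are 1, 2, 2 and 1, so the estimate equals \(\frac{3}{2}\log 2\).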

The Kullback–Leibler divergence between any arbitrary density function, f, and the Pareto density, \(f_{\beta ,\sigma }\), is defined to be [see, e.g., 29]

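$$\begin{aligned} KL(f,f_{\beta ,\sigma })=\int _{0}^{\infty }f(x)\log \left( \frac{f(x)}{f_{\beta ,\sigma }(x)}\right) \textrm{d}x. \end{aligned}$$

It follows that the Kullback–Leibler divergence can also be expressed in terms of entropy:

$$\begin{aligned} KL(f,f_{\beta ,\sigma })=-H-\int _{0}^{\infty }f(x)\log \left( f_{\beta ,\sigma }(x)\right) \textrm{d}x. \qquad (2.5) \end{aligned}$$

Estimating (2.5) by the empirical quantities mentioned above, we obtain the test statistic

$$\begin{aligned} KL_{n,m}=-H_{n,m}-\frac{1}{n}\sum _{j=1}^{n}\log \left( f_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(X_j)\right) . \end{aligned}$$

This test rejects the null hypothesis for large values of \(KL_{n,m}\). The test statistic coincides with the one studied by Lequesne [30], where the author uses maximum likelihood estimation and a normalizing transformation to ensure that the test statistic lies between 0 and 1. Alizadeh Noughabi et al. [4] uses a similar test statistic in order to test the goodness-of-fit hypothesis for the Rayleigh distribution. Although the authors do not derive the limiting null distribution of the test statistic, they prove that the test is consistent against general alternatives. In their simulation study, the authors find that the test compares favourably to other competing tests.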

The Hellinger distance between two densities f and \(f_{\beta ,\sigma }\) is defined as [see, e.g., 25]

By setting \(F(x)=p\), the Hellinger distance can be expressed in terms of the quantile function as follows

From (2.3) and (2.4) it can be argued that \(\frac{\textrm{d}}{\textrm{d}p}F^{-1}(p)\) can be estimated by \(\{\frac{n}{2m}(X_{(j+m)}-X_{(j-m)})\}\). The resulting test statistic is given by

This test rejects the null hypothesis for large values of \(HD_{n,m}\).

Jahanshahi et al. [25] uses similar arguments to propose a goodness-of-fit test for the Rayleigh distribution and proves that the test is consistent in that setting. In addition, they also propose a method for obtaining the optimum value of m by minimising bias and mean square error (MSE). In a finite sample power comparison, Jahanshahi et al. [25] finds that \(HD_{n,m}\) produces the highest estimated powers against the majority of alternatives considered. In the case of alternatives considered with non-monotone hazard rates, the entropy-based tests outperform the remaining tests by some margin.

2.4 Tests based on the phi-divergence

The phi-divergence between an arbitrary density, f, and \(f_{\beta , \sigma }\) is

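$$\begin{aligned} D_\phi (f,f_{\beta , \sigma })=\int _{0}^{\infty }\phi \left( \frac{f(x)}{f_{\beta , \sigma }(x)}\right) f_{\beta , \sigma }(x)\,\textrm{d}x, \end{aligned}$$

where \(\phi : [0,\infty )\longrightarrow (-\infty ,\infty )\) is a convex function such that \(\phi (1)=0\) and \(\phi ''(1)>0\). It is further known [see, e.g., 15, 18] that if \(\phi \) is strictly convex in a neighbourhood of \(x=1\), then \(D_\phi (f,f_{\beta , \sigma })=0\) if, and only if, \(f=f_{\beta , \sigma }\). Alizadeh Noughabi & Balakrishnan [3] use this property to construct goodness-of-fit tests for a variety of different distributions. Let \(E_F[\cdot ]\) denote an expectation taken with respect to the distribution F. By noting that

$$\begin{aligned} D_\phi (f,f_{\beta , \sigma })=E_F\left[ \phi \left( \frac{f(X)}{f_{\beta , \sigma }(X)}\right) \frac{f_{\beta , \sigma }(X)}{f(X)}\right] , \end{aligned}$$

it follows that \(D_\phi (f,f_{\beta , \sigma })\) can be estimated by

$$\begin{aligned} {\widehat{D}}_\phi =\frac{1}{n}\sum _{j=1}^{n}\phi \left( \frac{\widehat{f}_h(X_j)}{f_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(X_j)}\right) \frac{f_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(X_j)}{\widehat{f}_h(X_j)}, \qquad (2.6) \end{aligned}$$

where \(\widehat{f}_h(x)=\frac{1}{nh}\sum _{j=1}^{n}k\left( \frac{x-X_j}{h}\right) \) is the kernel density estimator with kernel function \(k(\cdot )\) and bandwidth h.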

In the Monte Carlo study in Sect. 4, we use the standard normal density function as kernel and choose \(h=1.06sn^{-\frac{1}{5}}\), where s is the unbiased sample standard deviation [see, e.g., 48]. We will use the following four choices of \(\phi \):

- The Kullback–Leibler distance (DK) with \(\phi (x)=x\log (x)\).

- The Hellinger distance (DH) with \(\phi (x)=\frac{1}{2}(\sqrt{x}-1)^2\).

- The Jeffreys divergence distance (DJ) with \(\phi (x)=(x-1)\log (x)\).

- The total variation distance (DT) with \(\phi (x)=|x-1|\).

A variety of test statistics can be constructed from (2.6) using the above choices of \(\phi \). The test statistics corresponding to these choices are

All tests reject the null hypothesis for large values of the test statistics.
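To make the ingredients concrete, the sketch below computes the kernel density estimate with a standard normal kernel and bandwidth \(h=1.06sn^{-\frac{1}{5}}\), and then a plug-in statistic for the choice \(\phi (x)=x\log (x)\). The form \(\frac{1}{n}\sum _{j}\log \{\widehat{f}_h(X_j)/f_{{\widehat{\beta }}_n,{\widehat{\sigma }}_n}(X_j)\}\) used here is an assumption, obtained by replacing the expectation in the divergence by a sample mean; it is not necessarily identical to the expression for \(DK_n\) used in the paper. The function names, data and parameter values are hypothetical.

```python
import math


def rule_of_thumb_bandwidth(xs):
    """h = 1.06 * s * n^(-1/5), with s the unbiased sample standard deviation."""
    n = len(xs)
    mean = sum(xs) / n
    s = math.sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1))
    return 1.06 * s * n ** (-0.2)


def kde(x, xs, h):
    """Kernel density estimate at x with a standard normal kernel."""
    total = sum(math.exp(-0.5 * ((x - xj) / h) ** 2) for xj in xs)
    return total / (len(xs) * h * math.sqrt(2.0 * math.pi))


def dk_statistic(xs, beta_hat, sigma_hat):
    """Assumed plug-in form: mean of log(f_hat(X_j) / fitted Pareto density at X_j)."""
    h = rule_of_thumb_bandwidth(xs)
    total = 0.0
    for x in xs:
        f0 = beta_hat * sigma_hat ** beta_hat / x ** (beta_hat + 1.0)
        total += math.log(kde(x, xs, h) / f0)
    return total / len(xs)


sample = [1.1, 1.3, 1.4, 1.9, 2.6, 3.3, 5.0, 8.2]  # made-up data above sigma = 1
dk = dk_statistic(sample, beta_hat=1.5, sigma_hat=1.0)
```

Because the kernel estimate places some mass below \(\sigma \), the statistic is not exactly zero even for data that follow the fitted Pareto distribution, which is one reason why critical values are obtained by simulation.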

In addition to showing that the tests above are consistent against fixed alternatives (no derivation of the asymptotic null distribution was presented), Alizadeh Noughabi & Balakrishnan [3] also uses \(DK_n\), \(DH_n\), \(DJ_n\) and \(DT_n\) to test the goodness-of-fit hypothesis for the normal, exponential, uniform and Laplace distributions. The Monte Carlo study included in Alizadeh Noughabi & Balakrishnan [3] indicates that \(DK_n\) produces the highest powers amongst the phi-divergence type tests. As a result, only \(DK_n\) is included in the Monte Carlo study presented in Sect. 4.

2.5 A test based on the empirical characteristic function

A large number of goodness-of-fit tests have been developed for a variety of distributions based on empirical characteristic functions [see, e.g., 28, 31, 12]. For a review of testing procedures based on the empirical characteristic functions see, e.g., Meintanis [33].

Recall that the characteristic function (cf) of a random variable X with distribution \(F_{\theta }\) is given by

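$$\begin{aligned} \varphi _{\theta }(t)=E\left[ \textrm{e}^{itX}\right] =\int \textrm{e}^{itx}\,\textrm{d}F_{\theta }(x), \end{aligned}$$

with \(i=\sqrt{-1}\) the imaginary unit. The empirical characteristic function (ecf) is defined to be

$$\begin{aligned} \varphi _n(t)=\frac{1}{n}\sum _{j=1}^{n}\textrm{e}^{itX_j}. \end{aligned}$$

As a general test statistic, one can use a weighted \(L^2\) distance between the fitted cf under the null hypothesis and the ecf,

$$\begin{aligned} T_n=n\int _{-\infty }^{\infty }\left| \varphi _n(t)-\varphi _{{\widehat{\theta }}}(t)\right| ^2w(t)\,\textrm{d}t, \end{aligned}$$

where \({\widehat{\theta }}\) represents the estimated values of the parameters of the hypothesised distribution and \(w(\cdot )\) is a suitably chosen weight function ensuring that the integral is finite. Commonly used choices for the weight function are \(w(t)=\textrm{e}^{-a|t|}\) and \(w(t)=\textrm{e}^{-at^2}\), respectively derived from the kernels of the Laplace and normal density functions, where \(a>0\) is a user defined tuning parameter.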

The characteristic function of the Pareto distribution has a complicated closed form expression, making \(T_n\) intractable irrespective of the choice of the weight function. In order to circumvent this problem, we use the test proposed in Meintanis [31]. In order to perform this test, the data are transformed so as to approximately follow a standard uniform distribution under the null hypothesis. The test statistic used is a weighted \(L^2\) distance between the ecf of the transformed data \({\widehat{U}}_1,{\widehat{U}}_2,...,{\widehat{U}}_n\), denoted by \({\widehat{\varphi }}_n(t)\), and the cf of the standard uniform distribution, given by

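$$\begin{aligned} \varphi _U(t)=\frac{\textrm{e}^{it}-1}{it}. \end{aligned}$$

Meintanis [31] proposes the test

$$\begin{aligned} S_{n,a}=n\int _{-\infty }^{\infty }\left| {\widehat{\varphi }}_n(t)-\varphi _U(t)\right| ^2w(t)\,\textrm{d}t. \end{aligned}$$

Upon setting \(w({t})=\textrm{e}^{-a|t|}\), \(S_{n,a}\) simplifies to

$$\begin{aligned} S_{n,a}=\frac{1}{n}\sum _{j=1}^{n}\sum _{k=1}^{n}\frac{2a}{({\widehat{U}}_j-{\widehat{U}}_k)^2+a^2}+2n\left\{ 2\tan ^{-1}\left( \frac{1}{a}\right) -a\log \left( 1+\frac{1}{a^2}\right) \right\} -4\sum _{j=1}^{n}\left\{ \tan ^{-1}\left( \frac{{\widehat{U}}_j}{a}\right) +\tan ^{-1}\left( \frac{1-{\widehat{U}}_j}{a}\right) \right\} . \end{aligned}$$

The test rejects the null hypothesis for large values of the test statistic. Although Meintanis [31] does not explicitly use the resulting statistic to test for the Pareto distribution, it is demonstrated that this test is competitive when testing for the gamma, inverse Gaussian, and normal distributions. Meintanis et al. [34] considers the multivariate version of this class of tests, derives the limiting null distribution and also shows that it is consistent against fixed alternatives.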
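The closed form of \(S_{n,a}\) for \(w(t)=\textrm{e}^{-a|t|}\) can be evaluated without numerical integration. The sketch below uses the expression obtained by integrating \(n\int |{\widehat{\varphi }}_n(t)-\varphi _U(t)|^2\textrm{e}^{-a|t|}\textrm{d}t\) term by term, with \(\varphi _U(t)=(\textrm{e}^{it}-1)/(it)\) the cf of the standard uniform distribution; this derivation is an assumption of the illustration and should be checked against the expression in Meintanis [31]. The function name is hypothetical.

```python
import math


def meintanis_statistic(u_hat, a):
    """Weighted L2 distance between the ecf of u_hat and the uniform cf, weight exp(-a|t|)."""
    n = len(u_hat)
    # Integral of |ecf|^2: each pair contributes 2a / ((U_j - U_k)^2 + a^2).
    pair_term = sum(
        2.0 * a / ((uj - uk) ** 2 + a * a) for uj in u_hat for uk in u_hat
    ) / n
    # Integral of |uniform cf|^2, multiplied by n.
    uniform_term = 2.0 * n * (
        2.0 * math.atan(1.0 / a) - a * math.log(1.0 + 1.0 / (a * a))
    )
    # Cross term between the ecf and the uniform cf.
    cross_term = 4.0 * sum(
        math.atan(u / a) + math.atan((1.0 - u) / a) for u in u_hat
    )
    return pair_term + uniform_term - cross_term


s_value = meintanis_statistic([0.5], a=1.0)
```

Since the statistic is an integral of a squared modulus it is non-negative, which provides a quick sanity check on any implementation.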

2.6 A test based on the Mellin transform

Meintanis [32] introduces a test based on the moments of the reciprocal of the random variable X. If X follows a Pareto distribution, then \(E(X^t)\), \(t>0\), only exists when \(t<\beta \). On the other hand, the Mellin transform of X, given by

exists for all \(t>0\) if X is a Pareto random variable. Given an observed sample, the empirical Mellin transform is defined to be

If X is a \(P(\beta ,\sigma )\) random variable, then M(t) satisfies [see, e.g., 32]

Based on a random sample, D(t) can be estimated by

Meintanis [32] proposes a weighted \(L^2\) distance between \(D_n(t)\) and 0 as test statistic;

where w(t) is a suitable weight function, depending on a user-defined parameter a. After some algebra \(G_{n,a}\) simplifies to

where

Choosing \(w(t)=\textrm{e}^{-at}\), one has

and

culminating in an easily calculable test statistic.

The test rejects the null hypothesis for large values of the test statistic. Meintanis [32] proves the consistency of the test against fixed alternatives and uses a Monte Carlo study to demonstrate that the power performance of the test compares favourably to that of the classical goodness-of-fit tests.

2.7 A test based on an inequality curve

Let X be a positive random variable with distribution function F and finite mean \(\mu \). Let \(L(p)=Q(F^{-1}(p))\), with

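$$\begin{aligned} F^{-1}(p)=\inf \{x:F(x)\ge p\} \end{aligned}$$

the generalised inverse of F and

$$\begin{aligned} Q(x)=\frac{1}{\mu }\int _{0}^{x}t\,\textrm{d}F(t) \end{aligned}$$

the first incomplete moment of X. Using this notation, the inequality curve \(\lambda (p)\), \(p\in (0,1)\), is defined to be [see, e.g., 53]

$$\begin{aligned} \lambda (p)=1-\frac{\log \left( 1-L(p)\right) }{\log (1-p)}. \end{aligned}$$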

Taufer et al. [49] proposes a test based on the constant inequality curve exhibited by the \(P(\beta ,\sigma )\) distribution for some \(\sigma >0\). Taufer et al. [49] proves the following characterisation for the Pareto distribution based on \(\lambda (p)\).

Theorem 2.1

The inequality curve \(\lambda (p)\) is equal to \(\frac{1}{\beta }\) over all values of p, \(p\in (0,1)\) if, and only if, F is the Pareto distribution function, \(F_{\beta ,\sigma }\).

In order to use this characterisation to develop goodness-of-fit tests, Taufer et al. [49] uses the following approach. Defining the empirical version of Q(x) as

the estimator for L(p) becomes

where \(F_n^{-1}(p)=\inf \{x:F_n(x)\ge p\}\). Finally, an estimator for \(\lambda (p)\) is given by

The choice \(j=1,2,\dots ,n-\lfloor \sqrt{n}\rfloor \) ensures that \({\widehat{\lambda }}_j\) is a consistent estimator for \(\lambda \) [see 49]. Setting \(m=n-\lfloor \sqrt{n}\rfloor \), Theorem 2.1 states that under the null hypothesis, for any choice of \(p_j\), \(0<p_j<1\), \(j=1,2,\dots ,m\), and \(\beta >1\), we have the linear equation

with \(\beta _0=\frac{1}{\beta }\) and \(\beta _1=0\). Now, based on the data \(X_1,...,X_n\), we can obtain estimators for \(\beta _0\) and \(\beta _1\) from the regression

where \(\varepsilon _j={\widehat{\lambda }}_j-\lambda _j\).

The least squares estimators for \(\beta _0\) and \(\beta _1\) are given by

where \(\bar{p}=\frac{m+1}{2n}\) and \(S_p^2=\frac{m(m^2-1)}{12n^2}\).

Testing the hypothesis in (1.2) is equivalent to testing the hypothesis

where the null hypothesis is rejected for large values of \(|{\widehat{\beta }}_1|\). In a finite sample study, Taufer et al. [49] finds that this test is oversized in the case of small sample sizes (\(n=20\)), but achieves the nominal significance level for larger samples (\(n=100\)). The results indicate that the test compares favourably against the traditional tests in terms of estimated powers. Since the focus of the current research is on the finite sample performance of tests in the case of small samples, we do not include this test in the Monte Carlo study presented in Sect. 4.
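The regression underlying this test can be sketched as follows. The code assumes \(p_j=j/n\), the empirical Lorenz curve \(L_n(p_j)=\sum _{i\le j}X_{(i)}/\sum _{i}X_i\) and the estimator \({\widehat{\lambda }}_j=1-\log (1-L_n(p_j))/\log (1-p_j)\); these are assumptions chosen to be consistent with the values of \(\bar{p}\) and \(S_p^2\) given above, and the function name is hypothetical.

```python
import math


def inequality_curve_regression(xs):
    """Least squares fit of lambda_hat_j on p_j = j / n for j = 1, ..., m."""
    x = sorted(xs)
    n = len(x)
    m = n - math.isqrt(n)  # m = n - floor(sqrt(n)); n >= 3 is needed so that m >= 2
    total = sum(x)
    p = [j / n for j in range(1, m + 1)]
    lam = []
    cumulative = 0.0
    for j in range(m):
        cumulative += x[j]
        lorenz = cumulative / total  # empirical Lorenz curve at p_j
        lam.append(1.0 - math.log(1.0 - lorenz) / math.log(1.0 - p[j]))
    p_bar = (m + 1) / (2.0 * n)
    s_p2 = m * (m * m - 1) / (12.0 * n * n)  # equals sum of (p_j - p_bar)^2
    beta1 = sum((pj - p_bar) * lj for pj, lj in zip(p, lam)) / s_p2
    beta0 = sum(lam) / m - beta1 * p_bar
    return beta0, beta1


# Idealised "sample": Pareto quantiles with beta = 5 and sigma = 1.
n = 400
quantiles = [(1.0 - (j - 0.5) / n) ** (-1.0 / 5.0) for j in range(1, n + 1)]
beta0, beta1 = inequality_curve_regression(quantiles)
```

For these idealised Pareto quantiles the fitted intercept is close to \(1/\beta =0.2\) and the fitted slope is close to zero, in line with Theorem 2.1.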

2.8 Tests based on various characterisations of the Pareto distribution

A wide range of characterisations of the Pareto distribution is available and several have been used to develop goodness-of-fit tests. In what follows, we state these characterisations and discuss the associated test in each case. It should be noted that, although the tests below are equally useful in the situation where both parameters of the Pareto distribution are required to be estimated, the asymptotic theory was developed in the setting where the scale parameter is known. Furthermore, the majority of the tests are consistent. The exceptions are those tests employing an integral expression in which the integrand is a linear difference.

Each of the subsections below is dedicated to a characterisation and contains a brief discussion of the associated test.

2.8.1 Characterisation 1 [40]

Let X and Y be i.i.d. positive absolutely continuous random variables. The random variables X and \(\max \left\{ \frac{X}{Y}, \frac{Y}{X}\right\} \) have the same distribution if, and only if, X follows a Pareto distribution.

Obradović et al. [40] provides the proof for this characterisation and proposes two test statistics based on it. In order to specify these test statistics, denote by

the U-empirical distribution function of the random variable \(\textrm{max}\{X/Y,Y/X\}\). The test statistics are specified to be

and

Both of these tests reject the null hypothesis for large values of the test statistics. Obradović et al. [40] calculates Bahadur efficiencies for selected alternative distributions and also determines some of the locally optimal alternatives. The mentioned paper also derives the null distribution of \(T_n\) and shows that \(\sqrt{n}T_n\) converges to a centered normal random variable with variance \(\frac{5}{108}\). A limited Monte Carlo study shows that the tests \(T_n\) and \(V_n\) are competitive against the traditional \(KS_n\) and \(CM_n\) tests.
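The sufficiency part of Characterisation 1 can be verified by a short calculation, sketched here under the assumption of unit scale, \(\sigma =1\) (an assumption made for this illustration). If X and Y are i.i.d. \(P(\beta ,1)\), then \(\log X\) and \(\log Y\) are i.i.d. exponential random variables with rate \(\beta \), and the absolute difference of two such variables is again exponential with rate \(\beta \). Hence, for \(x\ge 1\),

$$\begin{aligned} P\left( \max \left\{ \frac{X}{Y},\frac{Y}{X}\right\} \le x\right) =P\left( |\log X-\log Y|\le \log x\right) =1-\textrm{e}^{-\beta \log x}=1-x^{-\beta }=F_{\beta ,1}(x). \end{aligned}$$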

2.8.2 Characterisation 2 [6]

Let \(X, X_1,...,X_n\) be i.i.d. positive absolutely continuous random variables from some distribution function F. The random variables \(\root m \of {X}\) and \(\min (X_1,...,X_m)\) have the same distribution if, and only if, F is the Pareto distribution, for all integers \(2\le m\le n\).

Using m as a tuning parameter, Allison et al. [6] proposes three classes of tests for the Pareto distribution based on the characterisation above. The test statistics used are discrepancy measures between the empirical distribution of \(\min \{X_1,...,X_m\}\) and the V-empirical distribution of \(\root m \of {X}\), defined as

Based on \(\Delta _{n,m}\), the authors propose the following test statistics

\(K_{n,m}\) and \(M_{n,m}\) reject the null hypothesis for large values of the test statistics, while \(I_{n,m}\) rejects for large values of \(|I_{n,m}|\). Allison et al. [6] derive the limiting null distribution of all three test statistics. Upon calculating and comparing the Bahadur efficiencies, Allison et al. [6] found that the test \(I_{n,m}\) has the best performance among the three in terms of local efficiency. This result is reinforced by a finite sample power study which results in the recommendation of choosing \(I_{n,m}\) with \(m=2\).
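To see why the Pareto distribution satisfies Characterisation 2, consider the following calculation, which assumes unit scale, \(\sigma =1\) (an assumption made for this illustration). For \(X,X_1,\dots ,X_m\) i.i.d. \(P(\beta ,1)\) and \(x\ge 1\),

$$\begin{aligned} P\left( \root m \of {X}>x\right) =P\left( X>x^m\right) =x^{-m\beta }=\left( x^{-\beta }\right) ^m=P\left( \min (X_1,\dots ,X_m)>x\right) , \end{aligned}$$

so that both random variables follow a \(P(m\beta ,1)\) distribution.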

Where Allison et al. [6] used empirical distribution functions to construct their tests, Ndwandwe et al. [37] propose test statistics that instead utilise empirical versions of the characteristic function. To this end, let

be the characteristic functions of \(X^{1/m}\) and \(\min (X_1,\dots ,X_m)\), respectively. Denote the empirical versions of \(\phi _m\) and \(\xi _m\) by

and

The characterisation implies that, for all \(t\in {\mathbb {R}}\) and \(2 \le m \le n\), \(\phi _m(t)=\xi _m(t)\) if, and only if, \(X \sim P(\beta )\) for some \(\beta >0\). As is usually the case in characteristic function based tests, Ndwandwe et al. [37] propose a test statistic that is a weighted \(L^2\) distance between \(\phi _{n,m}\) and \(\xi _{n,m}\):

where \(w_a(t)\) is a weight function (see Sect. 2.5 for some more detail on the weight function). Setting \({w}_a(t)=e^{-at^2}\), the test statistic \(L_{n,m,a}\) simplifies to

where

The test rejects for large values of the test statistic. Ndwandwe et al. [37] comment on the limiting null distribution of the test statistic and also demonstrate that the test is consistent against a wide range of fixed alternative distributions. A Monte Carlo study also revealed that \(L_{n,m,a}\) performed better in terms of empirical powers than the majority of the other tests that were evaluated. For implementing the test the authors recommend choosing the values of the tuning parameters as \(m=3\) and \(a=2\).

Remark: Ndwandwe et al. [37] also studied a test where \(\xi _m(t)\) is estimated by the U-statistic

However, this test was found to be less powerful than \(L_{n,m,a}\), and will not be discussed further in this paper.

2.8.3 Characterisation 3 [46]

Obradović [39] uses the following special case of Rossberg’s characterisation of the Pareto distribution to construct a goodness-of-fit test:

Let \(X_1\), \(X_2\), and \(X_3\) be i.i.d. positive absolutely continuous random variables and denote the corresponding order statistics by \(X_{(1)} \le X_{(2)} \le X_{(3)}\). If \(X_{(2)}/X_{(1)}\) and \(\min (X_1,X_2)\) are identically distributed, then \(X_1\) follows a Pareto distribution.

In order to base a test on this characterisation, Obradović [39] suggests estimating the distribution of \(X_{(2)}/X_{(1)}\) by

and the distribution of \(\min (X_1,X_2)\) by

Tests can be based on the discrepancy between \(G_n\) and \(H_n\); Obradović [39] proposes the test statistics

and

Both tests reject the null hypothesis for large values of the test statistics. Bahadur efficiencies for these tests are presented in Obradović [39] where the results show that, while no test outperforms all others, each test is found to be locally optimal against certain classes of alternatives. Obradović [39] also shows that the asymptotic null distribution of \(\sqrt{n}I_n^{[1]}\) is normal with mean 0 and variance \(\frac{52}{1125}\).

2.8.4 Characterisation 4 [39]

In addition to the tests above, Obradović [39] also proposes tests for the Pareto distribution based on the following characterisation which is linked to a characterisation of the exponential distribution due to Ahsanullah [1].

Let \(X_1,X_2\) and \(X_3\) be i.i.d. positive absolutely continuous random variables with strictly monotone distribution function and monotonically increasing or decreasing hazard function and denote the order statistics by \(X_{(1)}\le X_{(2)}\le X_{(3)}\). The random variables \(X_{(3)}/X_{(2)}\) and \(X_{(2)}/X_{(1)}\) have the same distribution if, and only if, \(X_1\) follows a Pareto distribution.

The test statistics that Obradović [39] proposes based on this characterisation are

and

where

and

Both tests reject the null hypothesis for large values of the test statistic. As with the tests given in Sect. 2.8.3, Obradović [39] concludes that, while neither of the tests is dominant against all alternatives in terms of local efficiency, both are locally optimal for certain classes of alternatives. It is again shown that the null distribution of \(I_n^{[2]}\) is asymptotically normal.

2.8.5 Characterisation 5 [51]

For a fixed k, let \(X_1,...,X_k\) be i.i.d. non-negative and bounded random variables having absolutely continuous distribution function F. The random variables \(X_1\) and \(X_{(k)}/X_{(k-1)}\) have the same distribution if, and only if, F is the Pareto distribution.

Volkova [51] provides a proof of this characterisation and derives two test statistics utilizing this characterisation:

and

where

and \(X_{(k,\{j_1,\dots ,j_k\})}\) denotes the \(k^{th}\) order statistic of the subsample \(X_{j_1},...,X_{j_k}\).

Both tests reject the null hypothesis for large values of the test statistics. In addition to deriving the conditions for local optimality of the tests, Volkova [51] also derives the null distribution of \(I_n^{(k)}\) for \(k=3\) and \(k=4\). It is shown that \(\sqrt{n}I_n^{(3)}\) and \(\sqrt{n}I_n^{(4)}\) converge, under the null, to zero mean normal random variables with variances \(\frac{11}{120}\) and \(\frac{271}{2100}\), respectively. Due to its computationally expensive nature and the large number of tests already included, we opted to exclude this test from the Monte Carlo study.

2.9 Other tests

While we tried to consider the majority of tests available for the Pareto distribution, we will now only mention four others which are outside the scope of the paper. These are a weighted quantile correlation test by Csörgö and Szabó [19], a test based on Euclidean distances by Rizzo [45], a test based on spacings by Gulati and Shapiro [22] and a Kolmogorov-type test involving a sort of “memory-less” characterization of the Pareto distribution by Milošević and Obradović [35].

In addition, it should be noted that the Pareto distribution is closely linked to the exponential distribution; if \(X \sim P(\beta ,\sigma )\), then \(Y=\log (X/\sigma )\) follows an exponential distribution with mean \(1/\beta \). As a result, we can use this transformation, with estimated values of \(\beta \) and \(\sigma \), to transform the data, and then use goodness-of-fit tests for the exponential distribution in order to test the hypothesis in (1.2). For an overview of some of the many tests available for the exponential distribution, see Allison et al. [5].
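As a small illustration of this link, the sketch below simulates Pareto data and applies the log transformation. The parameter values and seed are hypothetical, and the estimated version assumes the usual maximum likelihood estimators (sample minimum for \(\sigma \), and \(n\) divided by the sum of log ratios for \(\beta \)).

```python
import numpy as np

rng = np.random.default_rng(2)
beta, sigma = 2.0, 3.0                        # hypothetical parameter values
x = sigma * (1.0 - rng.random(100_000)) ** (-1.0 / beta)   # P(beta, sigma) sample

# Known parameters: Y = log(X / sigma) should be exponential with mean 1 / beta.
y = np.log(x / sigma)
mean_y = y.mean()

# Estimated parameters (assumed MLEs): the rescaled values have sample mean 1,
# so they can be passed to a test for the standard exponential distribution.
sigma_hat = x.min()
beta_hat = x.size / np.log(x / sigma_hat).sum()
y_hat = beta_hat * np.log(x / sigma_hat)
```

Note that `y_hat.mean()` equals 1 by construction, which is why tests for exponentiality applied to `y_hat` must account for parameter estimation when critical values are computed.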

3 Parameter and critical value estimation

In this section we discuss two popular methods for the estimation of the parameters of the Pareto distribution: the method of maximum likelihood as well as a method closely related to moment matching. The empirical results in Sect. 4 demonstrate that the choice of estimation method used has a profound effect on the powers achieved by the tests considered. As a result, it is necessary to discuss the procedures in some detail.

We consider parameter estimation in the setting where both \(\beta \) and \(\sigma \) are required to be estimated. In the testing scenario where \(\sigma \) is known, the estimated value of \(\sigma \) can simply be replaced by this known value.

For each estimation method we also discuss how the critical values are estimated.

3.1 Maximum likelihood estimators (MLEs)

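In the case where both \(\sigma \) and \(\beta \) are unknown, the MLEs of \(\sigma \) and \(\beta \) are respectively given by

\[ {\widehat{\sigma }}_n = X_{(1)} = \min (X_1,\dots ,X_n) \]

and

\[ {\widehat{\beta }}_n = \frac{n}{\sum _{j=1}^n \log \left( X_j/{\widehat{\sigma }}_n\right) }. \]

Note that if we transform \(X_1,...,X_n\) as follows:

\[ Y_j = \left( \frac{X_j}{{\widehat{\sigma }}_n}\right) ^{{\widehat{\beta }}_n}, \quad j = 1,\dots ,n, \qquad (3.1) \]

then

\[ {\widehat{\sigma }}(Y_1,\dots ,Y_n) = Y_{(1)} = 1 \]

and

\[ {\widehat{\beta }}(Y_1,\dots ,Y_n) = \frac{n}{\sum _{j=1}^n \log Y_j} = \frac{n}{{\widehat{\beta }}_n\sum _{j=1}^n \log \left( X_j/{\widehat{\sigma }}_n\right) } = 1. \]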

As can be seen above, the transformation in (3.1) ensures that, when the Pareto distribution is fitted to \(Y_1,...,Y_n\), the resulting parameter estimates are fixed at \({\widehat{\sigma }}_n = {\widehat{\beta }}_n = 1\). This enables us to approximate fixed critical values by Monte Carlo simulations not depending on \({\widehat{\sigma }}_n\) or \({\widehat{\beta }}_n\). As a result, the limit null distribution is independent of the values of \(\sigma \) and \(\beta \) if the data are transformed as in (3.1). This result essentially renders the critical values for tests for the Pareto distribution shape invariant in the case where estimation is performed using MLE.
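The invariance argument can be illustrated numerically. The sketch below assumes the standard MLEs (\({\widehat{\sigma }}_n = X_{(1)}\) and \({\widehat{\beta }}_n = n/\sum _j \log (X_j/{\widehat{\sigma }}_n)\)) and the transformation \(Y_j = (X_j/{\widehat{\sigma }}_n)^{{\widehat{\beta }}_n}\), and uses a Kolmogorov-Smirnov type distance as a stand-in test statistic. All function names, the seed and the number of replications are hypothetical choices made for illustration.

```python
import numpy as np

def pareto_mle(x):
    """Assumed MLEs for the Pareto type I distribution: (beta_hat, sigma_hat)."""
    x = np.asarray(x, dtype=float)
    sigma_hat = x.min()
    beta_hat = x.size / np.log(x / sigma_hat).sum()
    return beta_hat, sigma_hat

def standardise(x):
    """Transform the data so that the fitted parameters both equal 1."""
    beta_hat, sigma_hat = pareto_mle(x)
    return (np.asarray(x, dtype=float) / sigma_hat) ** beta_hat

def ks_statistic(y):
    """KS distance between the edf of y and the P(1, 1) df F(y) = 1 - 1/y."""
    y = np.sort(y)
    n = y.size
    f = 1.0 - 1.0 / y
    i = np.arange(1, n + 1)
    return max(np.max(i / n - f), np.max(f - (i - 1) / n))

def mc_critical_value(n, alpha=0.05, reps=10_000, beta=1.0, sigma=1.0, seed=0):
    """Monte Carlo estimate of the (1 - alpha) quantile of the null distribution."""
    rng = np.random.default_rng(seed)
    stats = np.empty(reps)
    for r in range(reps):
        x = sigma * (1.0 - rng.random(n)) ** (-1.0 / beta)   # P(beta, sigma) sample
        stats[r] = ks_statistic(standardise(x))
    return np.quantile(stats, 1.0 - alpha)

# With a common seed, the critical value should not depend on (beta, sigma).
cv_a = mc_critical_value(20, reps=2000, beta=1.0, sigma=1.0, seed=7)
cv_b = mc_critical_value(20, reps=2000, beta=3.7, sigma=12.0, seed=7)
```

Because the transformed sample depends on the underlying uniform variates only, `cv_a` and `cv_b` agree up to floating-point rounding, which is the practical meaning of fixed critical values in this setting.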

It should be noted that, if the transformation in (3.1) is used, then the sample minimum is \(Y_{(1)}=1\). This leads to computational issues for several of the tests discussed above. Specifically, the calculation of \(AD_n\), \(ZA_n\), \(ZB_n\) and \(ZC_n\) breaks down. In order to circumvent these numerical problems, we set \(Y_{(1)}=1.0001\) when computing these test statistics.

Remark

The test proposed in Taufer et al. [49], see Sect. 2.7, assumes that the mean of the Pareto distribution fitted to the transformed values is finite. Let \({{\widehat{\mu }}}_n:={{\widehat{\beta }}}_n/({{\widehat{\beta }}}_n-1)\) denote the mean of the fitted Pareto distribution; \({{\widehat{\mu }}}_n\) is finite if, and only if, \({{\widehat{\beta }}}_n>1\). Since the transformation in (3.1) results in a fitted shape parameter of 1, it leads to numerical problems with the implementation of this test. In order to obtain critical values for this test, we recommend using the transformation \(Y_j = \left( \frac{X_j}{{\widehat{\sigma }}_n}\right) ^{{\widehat{\beta }}_n/2}, \ j = 1,...,n\), which results in a fitted shape parameter of 2 and hence \({{\widehat{\mu }}}_n=2\).
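For completeness, the value of the fitted mean under the alternative transformation can be verified directly. The short derivation below assumes the usual MLE of the shape parameter, \({\widehat{\beta }}_n = n/\sum _{j=1}^n \log (X_j/{\widehat{\sigma }}_n)\) with \({\widehat{\sigma }}_n = X_{(1)}\), and uses the fact that the transformed sample minimum equals 1:

\[ {\widehat{\beta }}(Y_1,\dots ,Y_n) = \frac{n}{\sum _{j=1}^n \log Y_j} = \frac{n}{\frac{{\widehat{\beta }}_n}{2}\sum _{j=1}^n \log \left( X_j/{\widehat{\sigma }}_n\right) } = \frac{2{\widehat{\beta }}_n}{{\widehat{\beta }}_n} = 2, \qquad \frac{{\widehat{\beta }}(Y_1,\dots ,Y_n)}{{\widehat{\beta }}(Y_1,\dots ,Y_n)-1} = \frac{2}{2-1} = 2. \]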

3.2 Adjusted method of moments estimators (MMEs)

The traditional implementation of the method of moments requires that both the mean and the variance of the distribution be finite. In the case of the Pareto distribution, this implies that \(\beta >2\). As a result, the traditional method of moments estimators are not consistent when estimating the parameters of a \(P(\beta ,\sigma )\) distribution when \(\beta < 2\).

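A partial solution to the problem explained above is found when using the so-called adjusted method of moments estimators proposed in Quandt [43]. Instead of choosing parameter estimates so as to equate the first two population moments to the first two sample moments, Quandt [43] equates the first population and sample moments as well as equating the observed minimum to the expected value of the sample minimum. The resulting estimators are

\[ {\widetilde{\beta }}_n = \frac{n{\overline{X}}_n - X_{(1)}}{n\left( {\overline{X}}_n - X_{(1)}\right) } \]

and

\[ {\widetilde{\sigma }}_n = \frac{\left( n{\widetilde{\beta }}_n - 1\right) X_{(1)}}{n{\widetilde{\beta }}_n}, \]

where \({\overline{X}}_n\) denotes the sample mean.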

Note that this method only requires the assumption that the population mean is finite, meaning that we assume only that \(\beta >1\). When analysing a data set in practice, we recommend rather using the MLE in cases where the MME for \(\beta \) is close to 1.
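A minimal sketch of these estimators is given below. It assumes the closed-form solution of the two moment equations described above, namely \(\beta \sigma /(\beta -1) = {\overline{X}}_n\) and \(n\beta \sigma /(n\beta -1) = X_{(1)}\); the function name and the simulated data are hypothetical.

```python
import numpy as np

def pareto_adjusted_mme(x):
    """Adjusted method of moments estimates (beta_tilde, sigma_tilde).

    Solves E[X] = sample mean and E[X_(1)] = sample minimum for a
    Pareto type I distribution (assumed closed form).
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    x_bar = x.mean()
    x_min = x.min()
    beta_tilde = (n * x_bar - x_min) / (n * (x_bar - x_min))
    sigma_tilde = (n * beta_tilde - 1.0) * x_min / (n * beta_tilde)
    return beta_tilde, sigma_tilde

# Illustrative use on simulated P(3, 1) data.
rng = np.random.default_rng(5)
sample = (1.0 - rng.random(50)) ** (-1.0 / 3.0)
b, s = pareto_adjusted_mme(sample)

# The fitted distribution reproduces the sample mean and the sample minimum.
fitted_mean = b * s / (b - 1.0)
fitted_expected_min = sample.size * b * s / (sample.size * b - 1.0)
```

By construction the shape estimate always exceeds 1 and the scale estimate is smaller than the sample minimum, so the fitted distribution has a finite mean and covers all observations.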

Unlike the case of maximum likelihood, we are unable to obtain fixed critical values; the critical values are functions of the estimated shape parameter \({\widetilde{\beta }}_n\). We provide the following bootstrap algorithm for the estimation of critical values.

1. Based on data \(X_1,...,X_n\), estimate \(\beta \) and \(\sigma \) by \({\widetilde{\beta }}_n\) and \({\widetilde{\sigma }}_n\), respectively.
2. Obtain a parametric bootstrap sample \(X_1^*,...,X_n^*\) by sampling independently from \(F_{{\widetilde{\beta }}_n,{\widetilde{\sigma }}_n}\).
3. Calculate \({\widetilde{\beta }}_n^*={\widetilde{\beta }}(X_1^*,...,X_n^*)\), \({\widetilde{\sigma }}_n^*={\widetilde{\sigma }}(X_1^*,...,X_n^*)\), and the value of the test statistic, say \(S^*=S(X_1^*,...,X_n^*)\).
4. Repeat steps 2 and 3 B times to obtain \(S_1^*,...,S_B^*\) and obtain the order statistics \(S_{(1)}^*\le ...\le S_{(B)}^*\).
5. The estimated critical value at an \(\alpha \times 100\%\) significance level is \(\widehat{C}_n=S^*_{(\lfloor B(1-\alpha )\rfloor )}\), where \(\lfloor x \rfloor \) denotes the floor of x.
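The steps above can be sketched as follows. This is only an illustration under stated assumptions: a Kolmogorov-Smirnov type statistic stands in for the generic statistic S, the adjusted MMEs are computed from the closed form implied by the two moment equations of Quandt [43], and the index in step 5 is taken to be \(\lfloor B(1-\alpha )\rfloor \). All function names and the simulated data are hypothetical.

```python
import numpy as np

def adjusted_mme(x):
    """Adjusted method of moments estimates (assumed closed form)."""
    x = np.asarray(x, dtype=float)
    n, x_bar, x_min = x.size, x.mean(), x.min()
    beta = (n * x_bar - x_min) / (n * (x_bar - x_min))
    sigma = (n * beta - 1.0) * x_min / (n * beta)
    return beta, sigma

def ks_stat(x, beta, sigma):
    """KS distance between the edf and the fitted P(beta, sigma) df."""
    x = np.sort(np.asarray(x, dtype=float))
    n = x.size
    f = 1.0 - (sigma / x) ** beta
    i = np.arange(1, n + 1)
    return max(np.max(i / n - f), np.max(f - (i - 1) / n))

def bootstrap_critical_value(x, alpha=0.05, B=1000, seed=0):
    rng = np.random.default_rng(seed)
    n = len(x)
    beta_t, sigma_t = adjusted_mme(x)                      # step 1
    s_star = np.empty(B)
    for b in range(B):
        # Step 2: parametric bootstrap sample from the fitted distribution.
        x_star = sigma_t * (1.0 - rng.random(n)) ** (-1.0 / beta_t)
        # Step 3: re-estimate the parameters and recompute the statistic.
        beta_star, sigma_star = adjusted_mme(x_star)
        s_star[b] = ks_stat(x_star, beta_star, sigma_star)
    s_star.sort()                                          # step 4
    # Step 5: order statistic with index floor(B * (1 - alpha));
    # the small offset guards against floating-point rounding.
    k = int(np.floor(B * (1.0 - alpha) + 1e-9))
    return s_star[k - 1]

# Illustrative use on a simulated P(3, 1) sample of size 30.
rng = np.random.default_rng(42)
x = (1.0 - rng.random(30)) ** (-1.0 / 3.0)
crit = bootstrap_critical_value(x, alpha=0.05, B=500, seed=1)
observed = ks_stat(x, *adjusted_mme(x))
reject = observed > crit
```

Any other statistic from Sect. 2 could be substituted for `ks_stat`, provided it is recomputed with the re-estimated parameters in each bootstrap replication.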

We now turn our attention to the numerical powers of the tests obtained using the two estimation methods discussed above.

3.3 Other estimation methods

While the numerical results presented later in this paper are based on the MLEs and MMEs discussed above, one can also consider alternative methods of estimation. For the sake of completeness, we note that many other estimators are available, such as the L-moment estimator [23], methods that minimise some distance criterion between distribution functions [10, 11, 13, 42, 52], as well as similar minimum distance-based methods related to the \(\phi \)-divergence [8].

4 Monte Carlo results

In this section we present a Monte Carlo study in which we examine the empirical sizes as well as the empirical powers achieved by the various tests discussed in Sect. 2. Section 4.1 details the simulation setting used, including the alternative distributions considered, while Sect. 4.2 shows the numerical results obtained together with a discussion and comparison of these results.

4.1 Simulation setting

We consider four different Monte Carlo settings. In the first two of these we consider the case in which only the shape parameter of the Pareto distribution requires estimation, while both the shape and scale parameters are estimated in the third and fourth settings. Furthermore, in the first and third settings we use maximum likelihood estimation in order to obtain parameter estimates, while the adjusted method of moments is used in the second and fourth settings.

We calculate empirical sizes and powers for samples of size \(n=20\) and \(n=30\). The empirical powers are calculated against the range of alternative distributions given in Table 1. Traditionally, these alternatives have support \((0,\infty )\). In order to ensure that the simulated data have the same support as the Pareto distribution, these alternatives are shifted by 1.

The powers obtained against these alternative distributions are displayed in Tables 3, 4, 5, 6, 10, 11, 12 and 13. The highest two powers in each row (including ties) are highlighted. For ease of reference, Table 2 below gives a brief summary of the settings used in these power tables with respect to the sample size, estimation method and the number of parameters estimated.

Where MLE is used for parameter estimation, we approximate critical values using 100,000 Monte Carlo replications. Thereafter we generate 10,000 samples from each alternative distribution considered and we calculate the empirical powers as the percentages (rounded to the nearest integers) of these samples that resulted in the rejection of \(H_0\) in (1.2). In the case where MME is used in order to perform parameter estimation we are unable to calculate fixed critical values. As a result, we use the warp-speed bootstrap method proposed in Giacomini et al. [21] in order to arrive at empirical critical values and powers in this case. Under this technique, only one bootstrap sample is taken for each Monte Carlo replication, so that each Monte Carlo sample is not subjected to a large number of time-consuming bootstrap replications. The warp-speed method has been used in numerous studies to evaluate the power performance of tests, see, e.g., Cockeran et al. [17] as well as Ndwandwe et al. [36]. In this setting, we make use of 50,000 Monte Carlo samples (which also implies 50,000 bootstrap replications). All calculations were done in R v4.2.2 [44].
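The warp-speed idea can be sketched as follows. The code is an illustration in Python rather than the R code used for the study, and it rests on several assumptions: a Kolmogorov-Smirnov type statistic, the closed-form adjusted MMEs implied by the moment equations of Quandt [43], a much smaller number of replications than in the paper, and hypothetical function names and alternatives.

```python
import numpy as np

def rpareto(rng, n, beta, sigma):
    """Pareto type I variates via inverse transform."""
    return sigma * (1.0 - rng.random(n)) ** (-1.0 / beta)

def adjusted_mme(x):
    """Adjusted method of moments estimates (assumed closed form)."""
    n, x_bar, x_min = x.size, x.mean(), x.min()
    beta = (n * x_bar - x_min) / (n * (x_bar - x_min))
    sigma = (n * beta - 1.0) * x_min / (n * beta)
    return beta, sigma

def ks_stat(x, beta, sigma):
    """KS distance between the edf and the fitted P(beta, sigma) df."""
    x = np.sort(x)
    n = x.size
    f = 1.0 - (sigma / x) ** beta
    i = np.arange(1, n + 1)
    return max(np.max(i / n - f), np.max(f - (i - 1) / n))

def warp_speed_rejection_rate(sampler, n, alpha=0.05, reps=2000, seed=0):
    """One bootstrap sample per Monte Carlo replication."""
    rng = np.random.default_rng(seed)
    stats = np.empty(reps)
    boot_stats = np.empty(reps)
    for m in range(reps):
        x = sampler(rng, n)                       # Monte Carlo sample
        beta, sigma = adjusted_mme(x)
        stats[m] = ks_stat(x, beta, sigma)
        x_star = rpareto(rng, n, beta, sigma)     # single bootstrap sample
        beta_star, sigma_star = adjusted_mme(x_star)
        boot_stats[m] = ks_stat(x_star, beta_star, sigma_star)
    # The pooled bootstrap statistics supply the critical value.
    crit = np.quantile(boot_stats, 1.0 - alpha)
    return np.mean(stats > crit)

def pareto_sampler(rng, n):
    return rpareto(rng, n, 3.0, 1.0)              # null: P(3, 1)

def shifted_exp_sampler(rng, n):
    return 1.0 + rng.exponential(1.0, n)          # alternative shifted by 1

size = warp_speed_rejection_rate(pareto_sampler, n=20, seed=3)
power = warp_speed_rejection_rate(shifted_exp_sampler, n=20, seed=3)
```

The computational saving comes from the loop body: each replication costs two evaluations of the statistic instead of B + 1, which is what makes the approach feasible for a study involving many tests and alternatives.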

A final remark regarding the numerical powers associated with the tests based on characterisations of the Pareto distribution is in order. These tests, see Sect. 2.8, are typically much more computationally expensive to evaluate than the other tests considered. As a result, it is simply not feasible to calculate numerical powers for these tests using the warp-speed bootstrap. However, note that these tests do not require parameter estimation (the test statistics are not functions of the estimated parameter values) and we simply treat these tests as if parameter estimation is performed using MLE. Consequently, we are able to, once more, compute fixed critical values. The numerical powers reported in the tables are obtained based on these fixed critical values. In order to appreciate the large difference between the computational times required for the various tests, see Table 9 in Sect. 5.

4.2 Simulation results and discussion

We begin our discussion of the performance of the tests with the remark that the powers generally increase with sample size; that is, the powers associated with samples of size \(n=30\) are higher than those associated with \(n=20\). In the discussion below, we consider the results obtained using samples of size \(n=20\), before turning our attention to the cases where \(n=30\).

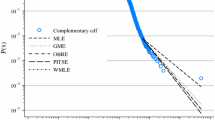

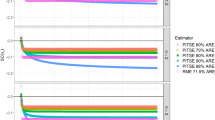

Before turning our attention to a general discussion of the empirical power results or a comparison between the results associated with the various settings considered, we discuss the results obtained using maximum likelihood estimation. The numerical results shown in Tables 3 and 5 indicate that each of the tests closely maintains the specified nominal significance level of 5%. When considering the numerical powers, it is clear that the \(DK_n\) test generally outperforms all of the competing tests against the majority of alternatives considered. In the case of samples of size \(n=20\), this impressive power performance is followed closely by that of \(KL_{n,10}\), which provides power close to those achieved by \(DK_n\). In the case where \(n=30\), \(DK_n\) still produces the highest powers, followed by \(ZC_n\).

We now turn our attention to the results in Tables 4 and 6 (as well as those in Tables 11 and 13), obtained when using the method of moments to perform parameter estimation. The tests generally fail to achieve the specified nominal significance level of 5% against the P(1, 1) distribution. Of course, the tests for which parameter estimation is not required (\(T_n\), \(I_{n,2}\), \(I_{n,3}\), \(I_n^{[1]}\) and \(I_n^{[2]}\)) do not exhibit this shortcoming. The general lack of adherence to the specified significance level can be ascribed to the fact that the first moment of the P(1, 1) distribution does not exist. For the remaining Pareto distributions considered, all of which possess a finite first moment, the sizes of the tests closely coincide with the specified nominal significance level. The results presented in Table 4 indicate that, when \(n=20\), the tests that generally exhibit the highest power are \(G_{n,2}\), \(MA_n\), and \(L_{n,m,a}\). Turning our attention to the case where \(n=30\) (see Tables 11 and 13), we see that the most powerful test is still \(G_{n,2}\) followed by \(MA_n\). However, in this case the performance of these tests is closely followed by that of \(ZB_n\).

One striking feature of the reported empirical results is the noticeably poor performance of the \(DK_n\) test when using MME. While this test achieved the highest power against the majority of the alternatives when employing MLEs for parameter estimation, it frequently produces the lowest power when using the MMEs. This illustrates the importance of the choice of the estimation method when testing the assumption of the Pareto distribution.

When considering the effects of sample size and the number of parameters to be estimated, the powers are influenced in the expected way. An increase in sample size generally results in an increase in empirical power, while the settings in which a single parameter requires estimation generally produce higher numerical powers than is the case for settings in which both parameters are estimated. When comparing the results obtained using MLE and MME, we see that one estimation method does not increase the powers associated with all of the tests. That is, changing the estimation method used from MLE to MME results in an increase in the powers of some of the tests while the other tests experience a decrease in power. The most striking example is the \(DK_n\) test which shows excellent powers when using MLE while exhibiting dismal powers when using MME.

5 Practical application

We now employ the various tests considered in order to ascertain whether or not an observed data set is compatible with the assumption of being realised from a Pareto distribution. The data set is comprised of the monetary expenses incurred as a result of wind related catastrophes in 40 separate instances during 1977, rounded to the nearest million US dollars. The data are provided in Table 7.

The rounding of the recorded values in Table 7 causes unrealistic clustering in the data which may lead to problems when testing for the Pareto distribution. In order to circumvent the associated problems, we use the de-grouping algorithm discussed in Allison et al. [6] as well as Brazauskas and Serfling [14]. This algorithm replaces the values in each group of tied observations with the expected values of the order statistics of the uniform distribution with the same range. That is, if one observes k identical integer values, x, in an interval \((l=x-1/2,u=x+1/2)\), we replace these values by

\[ x_j = l + \frac{j}{k+1}(u-l) \]

for \(j \in \{1,\dots ,k\}\). We emphasise that this de-grouping algorithm does not change the mean of the data set. The de-grouped data can be found in Table 8.
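A minimal sketch of this de-grouping step is given below. It assumes that the replacement values are the expected uniform order statistics \(l + j(u-l)/(k+1)\), \(j=1,\dots ,k\); the function name and the small data vector are hypothetical and are not taken from Table 7.

```python
import numpy as np

def degroup(values, half_width=0.5):
    """Spread tied (rounded) observations over (x - half_width, x + half_width)."""
    values = np.asarray(values, dtype=float)
    out = np.empty_like(values)
    for v in np.unique(values):
        idx = np.flatnonzero(values == v)      # positions of the k tied values
        k = idx.size
        lower, upper = v - half_width, v + half_width
        j = np.arange(1, k + 1)
        # Expected value of the j-th of k uniform order statistics on (lower, upper).
        out[idx] = lower + (upper - lower) * j / (k + 1)
    return out

rounded = np.array([2.0, 2.0, 2.0, 5.0])       # hypothetical rounded losses
spread = degroup(rounded)                      # -> [1.75, 2.0, 2.25, 5.0]
```

The replacement values within each group are symmetric around the rounded value, which is why the overall mean is unchanged; an untied observation is left as it is.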

When testing the hypothesis of the Pareto distribution for the data set, we consider each of the four settings used in the Monte Carlo study presented in Sect. 4. That is, we test the hypothesis in both the one and two parameter cases and we use MLE as well as MME in order to arrive at parameter estimates. Note that, when fitting a one parameter distribution, the support of the distribution is assumed known. Since the observed minimum is rounded to 2, we conclude that no value less than 1.5 is possible. As a result, we fix \(\sigma =1.5\) in the cases where the one parameter distribution is considered. No such assumption is necessary for the two parameter case; in this case, the value of \(\sigma \) is simply estimated from the data.

When assuming that \(\sigma =1.5\), the MLE of \(\beta \) is calculated to be \({\widehat{\beta }}_n= 0.764\) while the MME is \({\tilde{\beta }}_n=1.194\). In the case where both \(\beta \) and \(\sigma \) are estimated, the MLEs are \({\widehat{\beta }}_n=0.796\) and \({\widehat{\sigma }}_n=1.053\). The corresponding MMEs are \({\tilde{\beta }}_n=1.202\) and \({\tilde{\sigma }}_n=1.031\). The empirical p-values associated with each of these four instances are shown in Table 9. When using MMEs, the empirical p-values are obtained via a parametric bootstrap procedure employing a modified version of the algorithm presented in Sect. 3.2. In the case of MLEs, p-values are approximated using a Monte Carlo procedure; for details, see the discussion in Sect. 3.1. In both cases 10 000 samples are generated from the Pareto distribution. The results associated with each of the tests considered in Sect. 4 are shown. The column headings indicate the estimation method used as well as the number of parameters estimated. The final column in the table shows the time, in seconds, required to arrive at the reported p-values. The reported results are obtained using a 64 bit Windows 10 operating system with an AMD Ryzen 7 5800U CPU @ 1.90 GHz with 8 GB of RAM. Note the substantial computational times associated with the tests based on characterisations of the Pareto distribution.

In the interpretation of the p-values, we use a nominal significance level of 5%. When assuming a known \(\sigma \) of 1.5 and using MLE to estimate the value of \(\beta \), the majority of the test statistics do not reject the null hypothesis. The exceptions, which reject the hypothesis of the Pareto distribution, are \(ZC_n\), \(KL_{n,10}\), and \(DK_n\). In the case where parameter estimation is performed using the MME, the situation is reversed and 12 of the 20 tests considered reject the Pareto assumption. The tests not rejecting the null hypothesis in this case are \(ZC_n\), \(KL_{n,1}\), \(DK_n\), \(T_n\), \(I_{n,2}\), \(I_{n,3}\), \(I_n^{[1]}\) and \(I_n^{[2]}\).

We now turn our attention to the case where both \(\beta \) and \(\sigma \) require estimation. We start by considering the results obtained using MLE. In this case the majority of the tests do not reject the null hypothesis. Only \(AD_n\), \(ZA_n\), \(ZB_n\), \(ZC_n\), \(KL_{n,10}\) and \(DK_n\) reject the null hypothesis while the remaining 14 tests do not reject the null hypothesis. Finally, when considering the results associated with MME, we observe that the majority of the tests reject the hypothesis of the Pareto distribution. The exceptions to this are the \(ZC_n\), \(KL_{n,1}\), \(DK_n\), \(T_n\), \(I_{n,2}\), \(I_{n,3}\), \(I_n^{[1]}\), \(I_n^{[2]}\) and \(L_{n,3,2}\) tests.

When comparing the p-values associated with the practical example, some further remarks are in order. It should be noted that the estimated value of \(\beta \) is close to 1 when using the MME, while a value of less than 1 is obtained when using MLE. This raises some doubt as to the assumption implicit in the MME that the first moment exists. As a result, we put more stock in the results obtained using the MLE than those obtained using MME. When using the MLE, in both the one and two parameter cases, the majority of the tests do not reject the Pareto assumption, providing evidence in favour of the null hypothesis. As a result, we conclude that the Pareto distribution is likely an appropriate model for the data considered.

6 Concluding remarks

The goal of this study is to review the existing goodness-of-fit tests for the Pareto type I distribution based on a wide range of characteristics of this distribution. Below we provide brief descriptions of these characteristics and the tests related to them. The tests based on the edf, commonly known as the traditional tests, are the Kolmogorov–Smirnov (\(KS_n\)), Cramér–von Mises (\(CV_n\)), Anderson–Darling (\(AD_n\)) and modified Anderson–Darling (\(MA_n\)) tests. We also consider tests based on likelihood ratios. These tests are either weighted by some function of the edf (\(ZA_n\) and \(ZC_n\)) or by the distribution function under the null hypothesis with estimated parameters (\(ZB_n\)).

Next we consider the Hellinger distance (\(M_{m,n}\)) and Kullback-Leibler divergence (\(KL_{n,m}\)) tests which are based on the concept of entropy. Furthermore, we review tests based on phi-divergence. These tests are based on four distance measures; the Kullback-Leibler distance (\(DK_n\)), the Hellinger distance (\(DH_n\)), the Jeffreys divergence distance (\(DJ_n\)) as well as the Total variation distance (\(DT_n\)).

Although the Pareto distribution does not have a closed form expression for its characteristic function, we include a test, \(S_{n,a}\), utilising the characteristic function of the uniform distribution. We also discuss a test involving the Mellin transform (\(G_n\)) as well as a test based on the fact that the Pareto distribution has a constant inequality curve (\(TS_n\)). Finally, we consider a number of tests utilising different characterisations of the Pareto distribution (\(T_n\), \(I_{n,2}\), \(I_{n,3}\), \(I_n^{[1]}\), \(I_n^{[2]}\) and \(L_{n,3,2}\)).

For the Monte Carlo simulation, we consider eight different distributions (with various parameter settings) under the alternative hypothesis. Some of the tests utilised require parameter estimation. To this end, we consider the maximum likelihood estimators (MLEs) and the adjusted method of moments estimators (MMEs). The power performance of the tests is considered in the case where only the shape parameter of the Pareto distribution requires estimation as well as in the case where both the shape and scale parameters are unknown.

The numerical powers of the various test statistics are investigated and compared using a Monte Carlo study. This study shows that \(KL_{n,10}\) and \(DK_n\) produce impressive power results against a range of alternative distributions when using MLE in order to estimate the parameters of the Pareto distribution. In the case where MMEs are used to perform parameter estimation, the \(G_{n,2}\) test produces the highest powers followed by \(MA_n\). It should, however, be noted that \(G_{n,2}\) produces the lowest powers against W(0.5), LN(2.5) and the tilted Pareto distribution. When taking all of the above into account, we recommend using \(DK_n\) together with MLE when testing for the Pareto distribution in practice.

Data availability

Not available.

References

Ahsanullah, M.: A characterization of the exponential distribution by spacings. J. Appl. Prob. 15(3), 650–653 (1978)

Alizadeh Noughabi, H.: Testing exponentiality based on the likelihood ratio and power comparison. Ann. Data Sci 2(2), 195–204 (2015)

Alizadeh Noughabi, H., Balakrishnan, N.: Tests of goodness of fit based on phi-divergence. J. Appl. Stat. 43(3), 412–429 (2016)

Alizadeh Noughabi, R., Alizadeh Noughabi, H., Behabadi, A.E.M.: An entropy test for the Rayleigh distribution and power comparison. J. Stat. Comput. Simul. 84(1), 151–158 (2014)

Allison, J.S., Santana, L., Smit, N., Visagie, I.J.H.: An ‘apples to apples’ comparison of various tests for exponentiality. Comput. Stat. 32(4), 1241–1283 (2017)

Allison, J.S., Milošević, B., Obradović, M., Smuts, M.: Distribution-free goodness-of-fit tests for the Pareto distribution based on a characterization. Comput. Stat. 37(1), 403–418 (2022)

Arnold, B.C.: Pareto Distributions. CRC Press, New York (2015)

Basu, A., Harris, I.R., Hjort, N.L., Jones, M.C.: Robust and efficient estimation by minimising a density power divergence. Biometrika 85, 549–559 (1998)

Bera, A.K., Galvao, A.F., Wang, L., Xiao, Z.: A new characterization of the normal distribution and test for normality. Economet. Theory 32(5), 1216–1252 (2016)

Beran, R.J.: Minimum Hellinger distance estimates for parametric models. Ann. Stat. 5, 445–463 (1977)

Beran, R.J.: An efficient and robust adaptive estimator of location. Ann. Stat. 6, 292–313 (1978)

Betsch, S., Ebner, B.: Testing normality via a distributional fixed point property in the Stein characterization. TEST 29(1), 105–138 (2020)

Boos, D.: Minimum distance estimators for location and goodness of fit. J. Am. Stat. Assoc. 76, 663–670 (1981)

Brazauskas, V., Serfling, R.: Favorable estimators for fitting Pareto models: a study using goodness-of-fit measures with actual data. ASTIN Bull. J. IAA 33(2), 365–381 (2003)

Choi, B., Kim, K.: Testing goodness-of-fit for Laplace distribution based on maximum entropy. Statistics 40(6), 517–531 (2006)

Chu, J., Dickin, O., Nadarajah, S.: A review of goodness of fit tests for Pareto distributions. J. Comput. Appl. Math. 361, 13–41 (2019)

Cockeran, M., Meintanis, S.G., Allison, J.S.: Goodness-of-fit tests in the Cox proportional hazards model. Commun. Stat. Simul. Comput. 50(12), 4132–4143 (2021)

Csiszár, I.: On topological properties of f-divergences. Studia Scientiarum Mathematicarum Hungarica 2, 329–339 (1967)

Csörgö, S., Szabó, T.: Weighted quantile correlation tests for Gumbel, Weibull and Pareto families. Probab. Math. Stat. 29, 227–250 (2009)

D’Agostino, R., Stephens, M.: Goodness-of-fit Techniques. Marcel Dekker, New York (1986)

Giacomini, R., Politis, D.N., White, H.: A warp-speed method for conducting Monte Carlo experiments involving bootstrap estimators. Econom. Theory 29, 567–589 (2013)

Gulati, S., Shapiro, S.: Goodness-of-fit tests for Pareto distribution. Stat. Models Methods Biomed. Tech. Syst. 25, 259–274 (2008)

Hosking, J.R.M.: \({L}\)-moments: analysis and estimation of distributions using linear combinations of order statistics. J. R. Stat. Soc. Ser. B 52, 105–124 (1990)

Ismaïl, S.: A simple estimator for the shape parameter of the Pareto distribution with economics and medical applications. J. Appl. Stat. 31(1), 3–13 (2004)

Jahanshahi, S., Rad, A.H., Fakoor, V.: A goodness-of-fit test for Rayleigh distribution based on Hellinger distance. Ann. Data Sci. 3(4), 401–411 (2016)

Kapur, J.N.: Measures of Information and Their Applications. Wiley-Interscience, New York (1994)

Klar, B.: Goodness-of-fit tests for the exponential and the normal distribution based on the integrated distribution function. Ann. Inst. Stat. Math. 53(2), 338–353 (2001)

Klar, B., Meintanis, S.G.: Tests for normal mixtures based on the empirical characteristic function. Comput. Stat. Data Anal. 49(1), 227–242 (2005)

Kullback, S.: Information Theory and Statistics. Dover Publications Inc, New York (1997)

Lequesne, J.: Entropy-based goodness-of-fit test: application to the Pareto distribution. In: AIP Conference Proceedings, vol. 1553, pp. 155–162. American Institute of Physics (2013)

Meintanis, S.G.: Goodness-of-fit tests and minimum distance estimation via optimal transformation to uniformity. J. Stat. Plan. Inference 139(2), 100–108 (2009)

Meintanis, S.G.: A unified approach of testing for discrete and continuous Pareto laws. Stat. Pap. 50(3), 569–580 (2009)

Meintanis, S.G.: A review of testing procedures based on the empirical characteristic function. South Afr. Stat. J. 50(1), 1–14 (2016)

Meintanis, S.G., Gamero, M.D.J., Alba-Fernández, V.: A class of goodness-of-fit tests based on transformation. Commun. Stat. Theory Methods 43(8), 1708–1735 (2014)

Milošević, B., Obradović, M.: Two-dimensional Kolmogorov-type goodness-of-fit tests based on characterisations and their asymptotic efficiencies. J. Nonparamet. Stat. 28(2), 413–427 (2016)

Ndwandwe, L., Allison, J.S., Visagie, I.J.H.: A new fixed point characterisation based test for the Pareto distribution in the presence of random censoring. Annu. Proc. South Afri. Stat. Assoc. Conf. 1, 17–23 (2021)

Ndwandwe, L., Allison, J., Smuts, M., Visagie, I.: On a new class of tests for the Pareto distribution using Fourier methods. Stat, e566 (2023)

Nofal, Z.M., El Gebaly, Y.M.: New characterizations of the Pareto distribution. Pak. J. Stat. Oper. Res. 13, 63–74 (2017)

Obradović, M.: On asymptotic efficiency of goodness of fit tests for Pareto distribution based on characterizations. Filomat 29(10), 2311–2324 (2015)

Obradović, M., Jovanović, M., Milošević, B.: Goodness-of-fit tests for Pareto distribution based on a characterization and their asymptotics. Statistics 49(5), 1026–1041 (2015)

Pareto, V.: Cours d’economie Politique, vol. II. F. Rouge, Lausanne (1897)

Parr, W.C., Schucany, W.R.: Minimum distance and robust estimation. J. Am. Stat. Assoc. 75, 616–624 (1980)

Quandt, R.E.: Statistical discrimination among alternative hypotheses and some economic regularities. J. Region. Sci. 5(2), 1–23 (1964)

R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. (2022). https://www.R-project.org/

Rizzo, M.L.: New goodness-of-fit tests for Pareto distributions. Astin Bull. 39, 691–715 (2009)

Rossberg, H.: Characterization of the exponential and the Pareto distributions by means of some properties of the distributions which the differences and quotients of order statistics are subject to. Statistics 3(3), 207–216 (1972)

Shannon, C.E.: A mathematical theory of communication. Bell Syst. Tech. J. 27(3), 379–423 (1948)

Silverman, B.W.: Density Estimation for Statistics and Data Analysis. Routledge, London (2018)

Taufer, E., Santi, F., Espa, G., Dickson, M.M.: Graphical representations and associated goodness-of-fit tests for Pareto and log-normal distributions based on inequality curves. J. Nonparamet. Stat. 33(3–4), 464–481 (2021)

Vasicek, O.: A test for normality based on sample entropy. J. R. Stat. Soc. Ser. B (Methodol.) 38(1), 54–59 (1976)

Volkova, K.: Goodness-of-fit tests for the Pareto distribution based on its characterization. Stat. Methods Appl. 25(3), 351–373 (2016)

Wolfowitz, J.: Estimation by the minimum distance method. Ann. Inst. Stat. Math. 5, 9–23 (1953)

Zenga, M.: Proposta per un indice di concentrazione basato sui rapporti fra quantili di popolazione e quantili di reddito. Giornale degli economisti e Annali di Economia 5(6), 301–326 (1984)

Zhang, J.: Powerful goodness-of-fit tests based on the likelihood ratio. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 64(2), 281–294 (2002)

Funding

Open access funding provided by North-West University. No financial or non-financial benefits are directly or indirectly related to this work.

Ethics declarations

Conflict of interest

None of the authors has any conflict of interest whatsoever. The work of the last three authors is based on research supported by the National Research Foundation (NRF). Any opinion, finding and conclusion or recommendation expressed in this material is that of the authors and the NRF does not accept any liability in this regard.

Appendix

This appendix contains the numerical results pertaining to samples of size \(n=30\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ndwandwe, L., Allison, J.S., Santana, L. et al. Testing for the Pareto type I distribution: a comparative study. METRON 81, 215–256 (2023). https://doi.org/10.1007/s40300-023-00252-5
