Abstract

In this paper, we study the low-rank matrix completion problem, a class of machine learning problems that aims at predicting the missing entries of a partially observed matrix. Such problems appear in several challenging applications such as collaborative filtering, image processing, and genotype imputation. We compare Bayesian approaches with a recently introduced de-biased estimator, which provides a useful way to build confidence intervals of interest. From a theoretical viewpoint, the de-biased estimator comes with a sharp minimax-optimal rate of estimation error, whereas the Bayesian approach reaches this rate with an additional logarithmic factor. Our simulation studies yield interesting results: the de-biased estimator is just as good as the Bayesian estimators. Moreover, the Bayesian approaches are much more stable and can outperform the de-biased estimator in the case of small samples. In addition, we find that the empirical coverage rate of the confidence intervals obtained by the de-biased estimator for an entry is lower than that of the considered credible intervals. These results suggest further theoretical studies on the estimation error and the concentration of Bayesian methods, as such results are quite limited at present.

1 Introduction

The goal of low-rank matrix completion is to recover a low-rank matrix from its partially (noisy) observed entries. This problem has recently received increased attention due to the emergence of several challenging applications, such as recommender systems [1, 38] (particularly the famous Netflix challenge [6]), genotype imputation [12, 19], image processing [7, 18, 25] and quantum state tomography [15, 28, 30]. Different approaches, from frequentist to Bayesian methods, have been proposed and studied from theoretical and computational points of view, see for example [2, 3, 8, 9, 10, 11, 21, 23, 24, 26, 29, 34, 37, 40].

From a frequentist point of view, most of the recent methods are based on penalized optimization. Seminal results can be found in Candès and Recht [9] and Candès and Tao [10] for exact matrix completion (the noiseless case), and were further developed for the noisy case in Candès and Plan [8], Koltchinskii et al. [21] and Negahban and Wainwright [33]. Some efficient algorithms have also been studied, for example see [17, 31, 34]. More particularly, in the notable work [21], the authors studied nuclear-norm penalized estimators and provided reconstruction error rates for their methods. They also showed that these error rates are minimax-optimal (up to a logarithmic factor). Note that the error rate, i.e. the average quadratic error on the entries, of a rank-r matrix of size \( m \times p \) from n observations cannot be better than \( r\max (m,p)/n \) [21].

More recently, in a work by Chen et al. [11], de-biased estimators have been proposed for the problem of noisy low-rank matrix completion. The estimation accuracy of this estimator is shown to be sharp in the sense that it reaches the minimax-optimal rate without any additional logarithmic factor. A sharp bound has also been obtained for a different estimator in Klopp [20]; however, uncertainty quantification is not given there. More importantly, confidence intervals for the entries of the underlying matrix are also provided by using the de-biased estimators in the work by Chen et al. [11]. It is noted that conducting uncertainty quantification for matrix completion is not straightforward. This is because, in general, the solutions for matrix completion do not admit a closed form and the distributions of the estimates returned by the state-of-the-art algorithms are hard to derive.

On the other hand, uncertainty quantification can be obtained straightforwardly from a Bayesian perspective. More specifically, the unknown matrix is considered as a random variable with a specific prior distribution, and statistical inference can be obtained using the posterior distribution, for example by considering credible intervals. Bayesian methods have been studied for low-rank matrix completion mainly from a computational viewpoint [4, 5, 13, 23, 24, 37, 39, 40]. Most Bayesian estimators are based on conjugate priors, which allow the use of Gibbs sampling [4, 37] or Variational Bayes methods [24]. These algorithms are fast enough to deal with, and have actually been tested on, large datasets like Netflix [6] or MovieLens [16]. However, the theoretical understanding of Bayesian estimators is quite limited; to our knowledge, [29] and [3] are the only prominent examples. More specifically, they showed that a Bayesian estimator with a low-rank factorization prior reaches the minimax-optimal rate up to a logarithmic factor, and the paper [3] further shows that the same estimation error rate can be obtained by using a Variational Bayesian estimator.

In this paper, to understand the performance of Bayesian approaches when compared to the de-biased estimators, we perform numerical comparisons on the estimation accuracy (the estimation error, the normalized squared error and the prediction error, see Sect. 3), considering the de-biased estimator in Chen et al. [11] and the Bayesian methods [3] for which the statistical properties have been well studied. Furthermore, we examine in detail the behaviour of the confidence intervals obtained by the de-biased estimator and of the Bayesian credible intervals. Interestingly, recent works [35, 36] show that Bayesian methods are now the most accurate in practical recommender systems. Although Bayesian methods have become popular in the problem of matrix completion, their uncertainty quantification (e.g. credible intervals) has received much more limited attention in the literature.

The simulation comparisons deliver some interesting messages. More specifically, the de-biased estimator is just as good as the Bayesian estimators when we look at the estimation accuracy, although it does succeed in improving on the estimator being de-biased. On the other hand, the Bayesian approaches are much more stable than the de-biased method and, in addition, they outperform the de-biased estimator, especially in the case of small samples. Moreover, we find that the coverage rates of the 95% confidence intervals obtained using the de-biased estimator are lower than those of the 89% equal-tailed credible intervals. This evidence suggests that the Bayesian estimators may actually reach the minimax-optimal rate sharply and that the log-term could be an artifact of the proof technique (the PAC-Bayesian bounds technique). Furthermore, the concentration rate of the corresponding Bayesian posterior discussed in Alquier and Ridgway [3], which includes a log-term, might not be tight.

The rest of the paper is structured as follows. In Sect. 2 we present the low-rank matrix completion problem, then introduce the de-biased estimator and the corresponding confidence interval and provide details on the considered Bayesian estimators. In Sect. 3, simulation studies comparing the different methods are presented. We discuss our results and give some concluding remarks in the final section.

2 Low-rank matrix completion

2.1 Model

In this work, we adopt the statistical model commonly studied in the literature for noisy matrix completion [11]. Let \( M^* \in \mathbb {R}^{m\times p} \) be an unknown rank-r matrix of interest. We partially observe some noisy entries of \(M^*\) as

$$\begin{aligned} Y_{ij} = M^*_{ij} + \mathcal {E}_{ij}, \quad (i,j) \in \Omega , \end{aligned} \qquad (1)$$

where \(\Omega \subseteq \lbrace 1, \ldots , m \rbrace \times \lbrace 1, \ldots ,p \rbrace \) is a small subset of indexes and \( \mathcal {E}_{ij} \sim \mathcal {N}(0, \sigma ^2) \) are independent noise terms at the locations (i, j). Under the random sampling model, each index (i, j) is included in \( \Omega \) independently with probability \( \kappa \) (i.e., data are missing uniformly at random). The problem of estimating \( M^* \) from \( n = |\Omega | < mp \) observations is called the (noisy) low-rank matrix completion problem.
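As an illustration of this observation model, the following minimal sketch draws a rank-r matrix, includes each index in \( \Omega \) independently with probability \( \kappa \), and adds Gaussian noise to the observed entries. The function name `simulate_observations`, the standard normal factors and the dictionary representation of the observations are assumptions made for this example only.

```python
import random

def simulate_observations(m, p, r, kappa, sigma, seed=0):
    """Draw a rank-r matrix M* = U V^T and a noisy, partially observed copy.

    The N(0, 1) factors are an illustrative choice, not part of the model.
    """
    rng = random.Random(seed)
    U = [[rng.gauss(0.0, 1.0) for _ in range(r)] for _ in range(m)]
    V = [[rng.gauss(0.0, 1.0) for _ in range(r)] for _ in range(p)]
    M_star = [[sum(U[i][k] * V[j][k] for k in range(r)) for j in range(p)]
              for i in range(m)]
    # Each index (i, j) enters Omega independently with probability kappa;
    # Y stores only the observed entries, keyed by their index.
    Y = {}
    for i in range(m):
        for j in range(p):
            if rng.random() < kappa:
                Y[(i, j)] = M_star[i][j] + rng.gauss(0.0, sigma)
    return M_star, Y

M_star, Y = simulate_observations(m=20, p=30, r=2, kappa=0.5, sigma=1.0)
n = len(Y)  # number of observed entries, n = |Omega|
```

With \( \kappa = 0.5 \), roughly half of the \( mp \) entries end up in `Y`; the exact count varies with the seed.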

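Let \( \mathcal {P}_\Omega (\cdot ) : \mathbb {R}^{m\times p} \mapsto \mathbb {R}^{m\times p} \) be the orthogonal projection onto the observed entries in the index set \(\Omega \), that is,

$$\begin{aligned} \mathcal {P}_\Omega (A)_{ij} = \begin{cases} A_{ij}, & \text {if } (i,j) \in \Omega , \\ 0, & \text {otherwise.} \end{cases} \end{aligned}$$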

Notations: For a matrix \(A\in \mathbb {R}^{m\times p} \), \( \Vert A\Vert _F = \sqrt{\textrm{trace}(A^\top A)} \) denotes its Frobenius norm and \( \Vert A\Vert _* = \textrm{trace} (\sqrt{A^\top A}) \) denotes its nuclear norm. \( \left[ a \pm b\right] \) denotes the interval \( \left[ a -b, a + b \right] \). We use \( I_q \) to denote the identity matrix of dimension \(q \times q \).
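The projection \( \mathcal {P}_\Omega \) and the Frobenius norm can be sketched in a few lines. The list-of-lists matrix representation and the function names are assumptions made for this example.

```python
import math

def project_onto_observed(A, omega):
    """P_Omega(A): keep the entries indexed by omega, set the rest to zero."""
    return [[A[i][j] if (i, j) in omega else 0.0 for j in range(len(A[0]))]
            for i in range(len(A))]

def frobenius_norm(A):
    """Square root of the sum of squared entries, i.e. sqrt(trace(A^T A))."""
    return math.sqrt(sum(x * x for row in A for x in row))

A = [[3.0, 4.0], [1.0, 2.0]]
omega = {(0, 0), (0, 1)}          # only the first row is observed
PA = project_onto_observed(A, omega)
norm_PA = frobenius_norm(PA)
```

Applying `project_onto_observed` twice gives the same result as applying it once, which is the defining property of a projection.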

2.2 The de-biased estimator

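Let \(\hat{M}\) be either the solution of the following nuclear norm regularization [31]

$$\begin{aligned} \min _{Z \in \mathbb {R}^{m\times p}} \frac{1}{2} \Vert \mathcal {P}_\Omega (Z - Y) \Vert _F^2 + \lambda \Vert Z\Vert _* , \end{aligned}$$

or of the following factorization minimization [17]

$$\begin{aligned} \min _{U \in \mathbb {R}^{m\times r}, V \in \mathbb {R}^{p\times r}} \frac{1}{2} \Vert \mathcal {P}_\Omega (UV^\top - Y) \Vert _F^2 + \frac{\lambda }{2} \Vert U\Vert _F^2 + \frac{\lambda }{2} \Vert V\Vert _F^2 , \end{aligned} \qquad (2)$$

where \(\lambda >0 \) is a tuning parameter. The optimization problem in (2) can be seen as the problem of finding the MAP (maximum a posteriori) estimate in a Bayesian model where Gaussian priors are used on the columns of the factors U and V; a detailed discussion can be found in Alquier et al. [4] and Fithian and Mazumder [14].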

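Given an estimator \( \hat{M} \) as above, the de-biased estimator [11] is defined as

$$\begin{aligned} M^{db} = \textrm{Pr}_{\mathrm{rank-}r} \left[ \hat{M} - \frac{1}{\kappa } \mathcal {P}_\Omega (\hat{M} - Y) \right] , \end{aligned}$$

where \(\textrm{Pr}_{\mathrm{rank-}r} (B) = \arg \min _{A, \textrm{rank}(A) \le r} \Vert A- B\Vert _F \) is the projection onto the set of matrices of rank at most r.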
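The two steps of the de-biasing procedure can be sketched as follows, assuming the correction takes the form \( \hat{M} + \kappa ^{-1} \mathcal {P}_\Omega (Y - \hat{M}) \) given in Chen et al. [11], followed by a rank-r projection. The rank-r projection is a truncated singular value decomposition; here it is approximated by plain power iteration with deflation, which is a choice made for this self-contained example and not the method used in the dbMC package. All function names are hypothetical.

```python
import math
import random

def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def rank_r_projection(B, r, iters=500, seed=0):
    """Approximate best rank-r approximation of B in Frobenius norm,
    computed by power iteration with deflation (a simple, slow stand-in
    for a truncated SVD)."""
    rng = random.Random(seed)
    m, p = len(B), len(B[0])
    R = [row[:] for row in B]            # residual after removing components
    out = [[0.0] * p for _ in range(m)]
    for _ in range(r):
        v = [rng.gauss(0.0, 1.0) for _ in range(p)]
        Rt = transpose(R)
        for _ in range(iters):
            w = matvec(Rt, matvec(R, v))  # (R^T R) v
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:               # residual is zero along v
                break
            v = [x / norm for x in w]
        u_s = matvec(R, v)                # singular value times left vector
        for i in range(m):
            for j in range(p):
                out[i][j] += u_s[i] * v[j]
                R[i][j] -= u_s[i] * v[j]
    return out

def debias(M_hat, Y, kappa, r):
    """Y maps observed indices (i, j) to noisy values; kappa is the sampling rate."""
    Z = [row[:] for row in M_hat]
    for (i, j), y in Y.items():
        Z[i][j] += (y - M_hat[i][j]) / kappa   # M_hat + (1/kappa) P_Omega(Y - M_hat)
    return rank_r_projection(Z, r)

M_db = debias([[1.0, 0.0], [0.0, 0.0]], {(0, 0): 2.0}, kappa=0.5, r=1)
```

In this toy call the single observed residual, 2.0 - 1.0, is scaled by \( 1/\kappa = 2 \) and added back, so the corrected entry is 3 before and after the rank-1 projection.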

Remark 1

The estimation accuracy of the de-biased estimator, provided in Theorem 3 in Chen et al. [11] under some assumptions, is \( \Vert M^{db} - M^* \Vert _F^2 \le c \max (m,p)r \sigma ^2/n \), without any extra log-term, where c is a universal numerical constant.

2.2.1 Confidence interval

Let \( \hat{M} = \hat{U} \hat{\Sigma } \hat{V} ^{\top } \) be the singular value decomposition of \( \hat{M} \). Put

where

Then, given a significance level \(\alpha \in (0,1) \), the following interval

is a nearly accurate two-sided \((1-\alpha )\) confidence interval of \(M^*_{ij}\), where \( \Phi (\cdot ) \) is the CDF of the standard normal distribution. This is given in Corollary 1 in Chen et al. [11]. This method is implemented in the R package dbMC [27].
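For a single entry, an interval of this kind can be computed as in the sketch below. It assumes the usual normal form, estimate \( \pm \, \Phi ^{-1}(1-\alpha /2) \) times the square root of the entry's variance estimate; the variance is taken as a given input, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

def normal_confidence_interval(estimate, variance, alpha=0.05):
    """Two-sided (1 - alpha) interval: estimate +/- z_{1 - alpha/2} * sqrt(variance)."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)   # standard normal quantile
    half_width = z * math.sqrt(variance)
    return estimate - half_width, estimate + half_width

# Hypothetical entry: de-biased estimate 2.0 with variance estimate 0.04.
lower, upper = normal_confidence_interval(estimate=2.0, variance=0.04, alpha=0.05)
```

With \( \alpha = 0.05 \) the quantile is about 1.96, so the half-width here is about 0.39.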

2.3 The Bayesian estimators

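The Bayesian estimator studied in Alquier and Ridgway [3] is given by

$$\begin{aligned} M^{B} = \int M \, \rho _\lambda (M | Y) \, dM , \end{aligned}$$

where

$$\begin{aligned} \rho _\lambda (M | Y) \propto L(Y| M)^\lambda \pi (M) \end{aligned}$$

is the posterior and \(L(Y| M)^\lambda \) is the likelihood raised to the power \(\lambda \). Here \(\lambda \in (0,1)\) is a tuning parameter and \( \pi (M)\) is the prior distribution.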
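To see what raising the likelihood to a power \( \lambda \) does, consider a one-dimensional toy problem rather than the matrix model: Gaussian observations with known variance and a zero-mean Gaussian prior on their mean. In this conjugate case the tempered posterior is again Gaussian, and \( \lambda \) simply scales down the weight of the data. The function below is a hypothetical illustration under these assumptions.

```python
def tempered_gaussian_posterior(ys, sigma2, prior_var, lam):
    """Posterior of theta when y_i ~ N(theta, sigma2), theta ~ N(0, prior_var)
    and the likelihood is raised to the power lam.

    Returns (posterior mean, posterior variance).
    """
    precision = lam * len(ys) / sigma2 + 1.0 / prior_var
    mean = (lam * sum(ys) / sigma2) / precision
    return mean, 1.0 / precision

ys = [1.0, 2.0, 3.0]
mean_full, var_full = tempered_gaussian_posterior(ys, 1.0, 100.0, lam=1.0)
mean_temp, var_temp = tempered_gaussian_posterior(ys, 1.0, 100.0, lam=0.25)
```

Taking `lam` below 1 leaves the posterior centred near the same value but makes it wider, since the data are down-weighted relative to the prior.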

2.3.1 Priors

A popular choice for the priors in Bayesian matrix completion is to assign conditional Gaussian priors to \(U \in \mathbb {R}^{m\times K} \) and \(V \in \mathbb {R}^{p\times K} \) such that

for a fixed integer \(K \le \min (m,p) \). More specifically, for \(k \in \{1,\ldots ,K \} \), independently

where \( I_q \) is the identity matrix of dimension \(q \times q \) and a, b are some tuning parameters. This type of prior is conjugate, so that the conditional posteriors can be derived explicitly in closed form, which allows the use of the Gibbs sampler, see [37] for details. Some reviews and discussion on low-rank factorization priors can be found in Alquier [2] and Alquier et al. [4].

Remark 2

In the case that the rank r is not known, it is natural to take K as large as possible, e.g. \(K = \min (m,p) \), but this may be computationally prohibitive if K is large.

Remark 3

The estimation error for this Bayesian estimator, given under some assumptions in Corollary 4.2 in Alquier and Ridgway [3], is \( \Vert M^{B} - M^* \Vert _F^2 \le \max (m,p)r \sigma ^2/n \) up to an additional multiplicative log-term \(\log (n\max (m,p)) \). The same rate is also reached in Mai and Alquier [29], with an additional multiplicative log-term \(\log (\min (m,p)) \) and under a general sampling distribution; however, the authors considered some truncated priors.

For a given rank-r, we propose to consider the following prior, called fixed-rank-prior,

for \(k = 1, \ldots ,r\). This prior is a simplified version of the above prior. We note that for \(K > r\) the Gibbs sampler for the fixed-rank-prior will be faster than the Gibbs sampler for the above prior. Interestingly, simulation results for the Bayesian estimator with this prior are slightly better than those based on the above prior in some cases.

Remark 4

We remark that the theoretical estimation error for the Bayesian estimator with the fixed-rank-prior given in (6) remains unchanged, as follows from Corollary 4.2 in Alquier and Ridgway [3].

2.3.2 Credible intervals

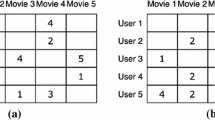

Using the Bayesian approach, credible intervals for the matrix and for functions of it (e.g. its entries) can be easily constructed using the Markov Chain Monte Carlo (MCMC) technique. Here, we focus on the equal-tailed credible interval for an entry (Fig. 1).

More precisely, the credible intervals are reported as 89% equal-tailed intervals, which are recommended by Kruschke [22] and McElreath [32] for small posterior samples, as in our situation with 500 posterior samples. We note that Salakhutdinov and Mnih [37] state that, because the data are too large to draw a sample of reasonable size, drawing only 500 observations from the Gibbs sampler took 90 hours for the Netflix dataset. Thus, we focus on the 89% equal-tailed credible intervals for 500 posterior samples. It is, however, noted that to obtain 95% intervals, an effective posterior sample size of at least 10,000 is recommended [22], which is computationally costly to run on all of our simulations. A few examples with 10,000 posterior samples are examined in Fig. 2.
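An equal-tailed interval is obtained directly from the posterior draws of an entry by cutting off the same probability mass in each tail; for the 89% level these are the 5.5% and 94.5% sample quantiles. The sketch below uses linear interpolation between order statistics, which is one of several common quantile conventions and an assumption here, as is the function name.

```python
import random

def equal_tailed_interval(samples, level=0.89):
    """Equal-tailed credible interval from posterior draws of a scalar."""
    xs = sorted(samples)
    tail = (1.0 - level) / 2.0

    def quantile(q):
        # Linear interpolation between neighbouring order statistics.
        pos = q * (len(xs) - 1)
        low = int(pos)
        high = min(low + 1, len(xs) - 1)
        return xs[low] + (pos - low) * (xs[high] - xs[low])

    return quantile(tail), quantile(1.0 - tail)

# Stand-in for 500 Gibbs draws of one entry (Gaussian draws, for illustration).
rng = random.Random(0)
draws = [rng.gauss(1.0, 0.3) for _ in range(500)]
lower, upper = equal_tailed_interval(draws, level=0.89)
```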

Fig. 1 Q–Q (quantile–quantile) plot to compare the 10,000 posterior samples for some entries against the standard normal distribution. Top row (from left to right, 3 figures) is the results from Setting I with \(r = 2,p = 100, \tau = 50\%\). Bottom row (from left to right, 3 figures) is the results from Setting I with \(r = 2,p = 1000, \tau = 50\%\)

Fig. 2 Plot to compare the limiting Gaussian distributions of the de-biased estimator and the histograms of the 10,000 posterior samples for some entries. The dotted line is the true value of the entries. Top row (from left to right, 3 figures) is the results from Setting I with \(r = 2,p = 100, \tau = 50\%\). Bottom row (from left to right, 3 figures) is the results from Setting I with \(r = 2,p = 1000, \tau = 50\%\)

3 Simulation studies

3.1 Experimental designs

In order to assess the performance of the different estimators, a series of experiments was conducted with simulated data. We fix \( m = 100 \) and vary the other dimension by taking \( p = 100 \) and \( p = 1000 \). The rank r is varied between \(r = 2 \) and \(r = 5 \).

- Setting I: In the first setting, a rank-r matrix \( M^*_{m\times p} \) is generated as the product of two rank-r matrices,

  $$\begin{aligned} M^* = U^*_{m\times r} V_{p\times r}^{*\top } , \end{aligned}$$

  where the entries of \(U^* \) and \( V^* \) are i.i.d. \( \mathcal {N} (0 , 1) \). With a missing rate \( \tau = 20\%, 50\% \) and \(80\%\), the entries of the observed set are drawn uniformly at random. This sampled set is then corrupted by noise as in (1), where the \( \mathcal {E}_{ij} \) are i.i.d. \( \mathcal {N} (0 , 1) \).

- Setting II: The second series of simulations is similar to the first one, except that the matrix \(M^*\) is no longer rank-r, but it can be well approximated by a rank-r matrix:

  $$\begin{aligned} M^*=U^*_{m\times r} V_{p\times r}^{*\top } + \frac{1}{10} A_{m\times 50} B_{p\times 50}^\top \end{aligned}$$

  where the entries of A and B are i.i.d. \( \mathcal {N} (0 , 1) \).

- Setting III: This setting is similar to Setting I but here a heavy-tailed noise is used. More specifically, the noise terms \( \mathcal {E}_{ij} \) are i.i.d. draws from the Student distribution \( t_3 \) with 3 degrees of freedom.

- Setting IV: The setup of this setting is also similar to Setting I. However, we consider a more extreme case where the entries of \(U^* \), \( V^* \) and the noise \( \mathcal {E}_{ij} \) are all drawn i.i.d. from the Student distribution with 3 degrees of freedom.
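The four settings above can be sketched as a single data generator. In this sketch a Student draw is built as a standard normal divided by the square root of a scaled chi-square variable, and the observed set is taken to be a uniformly drawn subset of exactly \( (1-\tau ) mp \) indices; both are implementation choices made for illustration, and all function names are hypothetical.

```python
import math
import random

def student_t(rng, df=3):
    """One Student-t draw: standard normal over sqrt(chi-square / df)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
    return z / math.sqrt(chi2 / df)

def random_matrix(rows, cols, draw):
    return [[draw() for _ in range(cols)] for _ in range(rows)]

def product_with_transpose(U, V):
    """Return U V^T for U (m x k) and V (p x k)."""
    return [[sum(a * b for a, b in zip(u, v)) for v in V] for u in U]

def generate_setting(setting, m, p, r, tau, seed=0):
    rng = random.Random(seed)
    normal = lambda: rng.gauss(0.0, 1.0)
    heavy = lambda: student_t(rng, 3)
    factor_draw = heavy if setting == "IV" else normal
    noise_draw = heavy if setting in ("III", "IV") else normal
    M = product_with_transpose(random_matrix(m, r, factor_draw),
                               random_matrix(p, r, factor_draw))
    if setting == "II":
        # Add the small higher-rank perturbation (1/10) A B^T with 50 columns.
        AB = product_with_transpose(random_matrix(m, 50, normal),
                                    random_matrix(p, 50, normal))
        M = [[M[i][j] + AB[i][j] / 10.0 for j in range(p)] for i in range(m)]
    # Observe a fraction 1 - tau of the entries, chosen uniformly at random.
    indices = [(i, j) for i in range(m) for j in range(p)]
    observed = rng.sample(indices, round((1.0 - tau) * m * p))
    Y = {(i, j): M[i][j] + noise_draw() for (i, j) in observed}
    return M, Y

M_star, Y = generate_setting("III", m=10, p=12, r=2, tau=0.5)
```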

Remark 5

We note that for the second series of simulations, with approximate low-rank matrices, the theory of the de-biased estimator cannot be used, whereas theoretical guarantees for Bayesian estimators are still valid, see [3]. Setting I follows exactly the minimax-optimal regime and thus allows us to assess the accuracy of the considered estimators. The last two settings (III and IV) are misspecified-model setups where no theoretical guarantee is available for any of the considered estimators.
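As a rough point of reference for these designs, the minimax benchmark \( r \max (m,p) \sigma ^2 / n \) from Sect. 1 can be evaluated for each configuration. The sketch below omits all constants and takes \( n = (1-\tau ) mp \), so the numbers only indicate orders of magnitude; the function name is hypothetical.

```python
def minimax_benchmark(m, p, r, tau, sigma2=1.0):
    """r * max(m, p) * sigma^2 / n with n = (1 - tau) * m * p observed entries."""
    n = round((1.0 - tau) * m * p)
    return r * max(m, p) * sigma2 / n

# Configurations used in the experiments: m = 100, p in {100, 1000},
# r in {2, 5} and missing rate tau in {20%, 50%, 80%}.
for p in (100, 1000):
    for r in (2, 5):
        for tau in (0.2, 0.5, 0.8):
            print(p, r, tau, minimax_benchmark(100, p, r, tau))
```

For example, with \( m = p = 100 \), \( r = 2 \) and \( \tau = 50\% \), there are 5000 observations and the benchmark is \( 2 \cdot 100 / 5000 = 0.04 \).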

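The behavior of an estimator (say \(\widehat{M}\)) is evaluated through the average squared error (ase) per entry

$$\begin{aligned} \textrm{ase} := \frac{1}{mp} \Vert \widehat{M} - M^* \Vert _F^2 \end{aligned}$$

and the relative (normalized) squared error (Nase)

$$\begin{aligned} \textrm{Nase} := \frac{\Vert \widehat{M} - M^* \Vert _F^2}{\Vert M^* \Vert _F^2} . \end{aligned}$$

We also measure the error in predicting the missing entries by using

$$\begin{aligned} \textrm{Pred} := \frac{1}{|\bar{\Omega }|} \Vert \mathcal {P}_{\bar{\Omega }} (\widehat{M} - M^*) \Vert _F^2 , \end{aligned}$$

where \( \bar{\Omega } \) is the set of unobserved entries. For each setup, we generate 100 data sets (simulation replicates) and report the average and the standard deviation of each error measure for each estimator over the replicates.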
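The three error measures can be sketched as follows, assuming the usual definitions: squared Frobenius error divided by \( mp \) for ase, divided by \( \Vert M^* \Vert _F^2 \) for the normalized error, and restricted to and averaged over the unobserved entries for the prediction error. Function names and the list-of-lists representation are choices made for this example.

```python
def squared_error(M_hat, M_star, keep=lambda i, j: True):
    """Sum of squared entrywise errors over the indices selected by keep."""
    return sum((M_hat[i][j] - M_star[i][j]) ** 2
               for i in range(len(M_star))
               for j in range(len(M_star[0]))
               if keep(i, j))

def ase(M_hat, M_star):
    """Average squared error per entry."""
    return squared_error(M_hat, M_star) / (len(M_star) * len(M_star[0]))

def nase(M_hat, M_star):
    """Squared error normalized by the squared Frobenius norm of M*."""
    return squared_error(M_hat, M_star) / sum(x * x for row in M_star for x in row)

def pred(M_hat, M_star, omega):
    """Average squared error over the unobserved entries only."""
    n_missing = len(M_star) * len(M_star[0]) - len(omega)
    return squared_error(M_hat, M_star, lambda i, j: (i, j) not in omega) / n_missing

M_star = [[1.0, 2.0], [3.0, 4.0]]
M_hat = [[1.0, 2.0], [3.0, 6.0]]
omega = {(0, 0), (0, 1), (1, 0)}      # entry (1, 1) is unobserved
errors = (ase(M_hat, M_star), nase(M_hat, M_star), pred(M_hat, M_star, omega))
```

In this toy case the only error sits on the unobserved entry, so the prediction error (4.0) is larger than the average error over all entries (1.0).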

We compare the de-biased estimator (denoted by ‘d.b’), the Bayesian estimator with the fixed-rank-prior (6) (denoted by ‘f.Bayes’) and the Bayesian estimator with the (flexible rank) prior (5) (denoted by ‘Bayes’). As a by-product of calculating the de-biased estimator through the Alternating Least Squares estimator (2), we also report the results for this estimator, denoted by ‘als’.

The ‘als’ estimator is available from the R package ‘softImpute’ [31] and is used with default options. The ‘d.b’ estimator is run with \( \lambda = 2.5\sigma \sqrt{mp} \) as in Chen et al. [11]. The ‘f.Bayes’ and ‘Bayes’ estimators are used with tuning parameter \(\lambda = 1/(4\sigma ^2) \), and the parameters for the prior of the ‘Bayes’ estimator are \(K=10,a=1, b=1/100\). The Gibbs samplers for these two Bayesian estimators are run with 500 steps and 100 burn-in steps.

3.2 Results on estimation accuracy

From the results in Tables 1 and 2, it is clear that the de-biased estimator significantly outperforms the initial estimator that it de-biases. At the same time, the de-biased estimator is just as good as the Bayesian methods in some cases.

More specifically, in Table 1, the de-biased estimator behaves similarly to the Bayesian estimators when the rate of observation is high (say, a missing rate of \(\tau = 20\%\) or \( 50\% \)). With a high missing rate, \(\tau = 80\% \), the de-biased estimator returns highly unstable results; this may be because the estimator it starts from (here, the als estimator) is unstable with few observations. However, when the dimension of the matrix increases, the differences between the de-biased estimator and the Bayesian estimators become smaller. This is also observed for the setting of approximate low-rank matrices, as in Table 2 and in Tables 5 and 6.

The ‘f.Bayes’ method yields the best results quite often in terms of all considered errors (ase, Nase and Pred) in the setting of exact low-rank matrices. However, for the setting where the true underlying matrix is approximately low-rank, in Tables 2 and 6, the ‘Bayes’ approach is slightly better than the ‘f.Bayes’ approach in some cases. This can be explained by the fact that the ‘Bayes’ approach employs a kind of approximate low-rank prior, through the Gamma prior on the variance of the factor matrices, and is thus able to adapt to the approximate low-rankness.

Results in the cases of model misspecification with heavy-tailed noise are given in Tables 3 and 4. Although the Bayesian methods, especially the ‘f.Bayes’ method, yield better results compared with ‘als’ or ‘d.b’, all methods fail in the case of highly missing data, \(\tau = 80\%\). This could be due to the fact that the considered methods are all designed for the case of Gaussian noise and thus are not robust to heavy-tailed noise, such as Student noise.

3.3 Results on uncertainty quantification

To examine the uncertainty quantification across the methods, we simulate a matrix as in Sect. 3.1 and then repeat the observation process 100 times. More precisely, we obtain 100 data sets by replicating the observation of \( 20\%, 50\% \) and \(80\%\) of the entries of the matrix \( M^* \) using uniform sampling, and each sampled set is then corrupted by noise as in (1), where the \( \mathcal {E}_{ij} \) are i.i.d. \( \mathcal {N} (0 , 1) \).

Tables 5 and 6 gather the empirical coverage rates of the confidence intervals and of the credible intervals of all methods over 100 independent experiments. More precisely, we report the 95% confidence intervals for the de-biased method. The credible interval is reported using the 89% equal-tailed interval, see [22, 32], for small posterior samples, as in our situation with 500 posterior samples. We note that to obtain 95% intervals, an effective posterior sample size of at least 10,000 is recommended [22]. A few examples from Setting I with 10,000 posterior samples are given in Fig. 2.
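The empirical coverage rate is simply the fraction of replicated intervals that contain the true value. The sketch below checks this on a toy problem, not on the matrix model: a scalar estimate with known Gaussian error, for which a 95% interval should cover the truth in about 95% of replicates. The function name and the toy setup are assumptions made for illustration.

```python
import random
from statistics import NormalDist

def empirical_coverage(intervals, truth):
    """Fraction of intervals (lower, upper) that contain the true value."""
    return sum(1 for lower, upper in intervals if lower <= truth <= upper) / len(intervals)

# Toy check: Gaussian estimates with known standard deviation.
rng = random.Random(1)
truth, sd = 0.3, 0.5
z = NormalDist().inv_cdf(0.975)      # quantile for a two-sided 95% interval
intervals = []
for _ in range(2000):
    estimate = rng.gauss(truth, sd)
    intervals.append((estimate - z * sd, estimate + z * sd))
coverage = empirical_coverage(intervals, truth)
```

A coverage rate well below the nominal level, as reported for the confidence intervals at high missing rates, indicates intervals that are too narrow or badly centred.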

A noteworthy conclusion from the results in Tables 5 and 6 is that the coverage rates of the 89% credible intervals are significantly higher than those of the 95% confidence intervals produced by the de-biased method. The credible intervals of the ‘f.Bayes’ approach show a slightly better coverage rate than those based on the ‘Bayes’ approach. It is also noted that in the setting of approximate low-rankness, Table 6, where we do not have a theoretical guarantee for the de-biased estimator, the coverage rate of the confidence intervals is very low, while the credible intervals still come with reliable coverage rates. These results further explain why Bayesian methods yield better results in accuracy, as in Tables 1 and 2.

In Fig. 2, we compare the limiting Gaussian distribution of the de-biased estimator and the posterior samples for the ‘f.Bayes’ method against the true entries of interest. It is shown that the limiting Gaussian distribution of the de-biased estimator yields a slightly sharper tail compared to the distribution of the posterior samples. In addition, Fig. 1 displays the Q–Q (quantile–quantile) plots of 10,000 posterior samples of some entries against the standard Gaussian distribution. It shows that the posterior distributions of these entries match the standard Gaussian distribution reasonably well.

Results on empirical coverage rate of the confidence intervals and of the credible intervals for Setting III and IV with heavy tail noises are gathered in Tables 7 and 8. We can see that there is a slight reduction in the empirical coverage rate of all methods compared with those in the Gaussian noise setting in Table 5. As in Setting I and II, the empirical coverage rates of confidence intervals decrease quickly as the missing rates \( \tau \) increase, while the empirical coverage rates of credible intervals remain stable.

4 Discussion and conclusion

In this paper, we have provided extensive numerical comparisons between the de-biased estimator and the Bayesian estimators in the problem of low-rank matrix completion. The results from the numerical simulations draw a systematic picture of the behaviour of these estimators. More specifically, on the estimation accuracy, the de-biased estimator is comparable to the Bayesian estimators, whereas the Bayesian estimators are much more stable and in some cases can outperform the de-biased estimator, especially in the small-sample regime. Moreover, the credible intervals cover the underlying entries reasonably well, and slightly better than the confidence intervals in exact low-rank matrix completion. However, in the case of approximate low-rankness, the confidence intervals produced by the de-biased estimator no longer work well. These results are of interest to, and can serve as a guideline for, researchers as well as practitioners in many areas where one only has access to a few observations.

On the other hand, the results in this work suggest that the considered Bayesian estimators may actually reach the minimax-optimal rate of convergence without an additional logarithmic factor. The extra log-terms could be due to the PAC-Bayesian bounds technique used to prove the theoretical properties of the Bayesian estimator. Moreover, as shown in Alquier and Ridgway [3], the same rate with a log-term is proved for the concentration of the corresponding posterior, and we conjecture, in view of the coverage of the credible intervals, that this rate could also be improved. To our knowledge, these are important questions that remain open.

Last but not least, it is also important to perform comparisons with the Variational Bayesian (VB) method in Lim and Teh [24], whose theoretical guarantees are given in Alquier and Ridgway [3], because this method is very popular for matrix completion with large datasets. This will be the objective of our future work. However, we would like to note that, in a preprint [4], the authors performed some comparisons between the Bayesian approach and the VB method. The message from their work is that we can expect VB to be more or less as accurate as Bayes, maybe slightly less, but that the credible intervals would be inaccurate (see e.g. Figure 3 in Alquier et al. [4]).

Data availability

The R codes and data used in the numerical experiments are available at: https://github.com/tienmt/UQMC.

References

1. Adomavicius, G., Tuzhilin, A.: Context-aware recommender systems. In: Recommender Systems Handbook, pp. 217–253. Springer, Berlin (2011)

2. Alquier, P.: Bayesian methods for low-rank matrix estimation: short survey and theoretical study. In: Algorithmic Learning Theory 2013. Springer, Berlin, pp 309–323 (2013)

3. Alquier, P., Ridgway, J.: Concentration of tempered posteriors and of their variational approximations. Ann. Stat. 48(3), 1475–1497 (2020)

4. Alquier, P., Cottet, V., Chopin, N., Rousseau, J.: Bayesian matrix completion: prior specification and consistency. (2014) arXiv preprint arXiv:1406.1440

5. Babacan, S.D., Luessi, M., Molina, R., Katsaggelos, A.K.: Sparse Bayesian methods for low-rank matrix estimation. IEEE Trans. Signal Process. 60(8), 3964–3977 (2012)

6. Bennett, J., Lanning, S.: The netflix prize. In: Proceedings of KDD Cup and Workshop, vol 2007, p 35 (2007)

7. Cabral, R., De la Torre, F., Costeira, J.P., Bernardino, A.: Matrix completion for weakly-supervised multi-label image classification. IEEE Trans. Pattern Anal. Mach. Intell. 37(1), 121–135 (2014)

8. Candès, E.J., Plan, Y.: Matrix completion with noise. Proc. IEEE 98(6), 925–936 (2010)

9. Candès, E.J., Recht, B.: Exact matrix completion via convex optimization. Found. Comput. Math. 9(6), 717–772 (2009)

10. Candès, E.J., Tao, T.: The power of convex relaxation: near-optimal matrix completion. IEEE Trans. Inform. Theory 56(5), 2053–2080 (2010)

11. Chen, Y., Fan, J., Ma, C., Yan, Y.: Inference and uncertainty quantification for noisy matrix completion. Proc. Natl. Acad. Sci. 116(46), 22931–22937 (2019)

12. Chi, E.C., Zhou, H., Chen, G.K., Del Vecchyo, D.O., Lange, K.: Genotype imputation via matrix completion. Genome Res. 23(3), 509–518 (2013)

13. Cottet, V., Alquier, P.: 1-bit matrix completion: Pac-bayesian analysis of a variational approximation. Mach. Learn. 107(3), 579–603 (2018)

14. Fithian, W., Mazumder, R.: Flexible low-rank statistical modeling with missing data and side information. Stat. Sci. 33(2), 238–260 (2018)

15. Gross, D., Liu, Y.-K., Flammia, S.T., Becker, S., Eisert, J.: Quantum state tomography via compressed sensing. Phys. Rev. Lett. 105(15), 150401 (2010)

16. Harper, F.M., Konstan, J.A.: The movielens datasets: history and context. Acm Trans. Interact. Intell. Syst. (TIIS) 5(4), 1–19 (2015)

17. Hastie, T., Mazumder, R., Lee, J.D., Zadeh, R.: Matrix completion and low-rank SVD via fast alternating least squares. J. Mach. Learn. Res. 16(1), 3367–3402 (2015)

18. He, K., Sun, J.: Image completion approaches using the statistics of similar patches. IEEE Trans. Pattern Anal. Mach. Intell. 36(12), 2423–2435 (2014)

19. Jiang, B., Ma, S., Causey, J., Qiao, L., Hardin, M.P., Bitts, I., Johnson, D., Zhang, S., Huang, X.: Sparrec: an effective matrix completion framework of missing data imputation for GWAS. Sci. Rep. 6(1), 1–15 (2016)

20. Klopp, O.: Matrix completion by singular value thresholding: sharp bounds. Electron. J. Stat. 9(2), 2348–2369 (2015)

21. Koltchinskii, V., Lounici, K., Tsybakov, A.B.: Nuclear-norm penalization and optimal rates for noisy low-rank matrix completion. Ann. Stat. 39(5), 2302–2329 (2011)

22. Kruschke, J.: Doing Bayesian Data Analysis: A Tutorial with R, JAGS, and Stan. Academic Press, New York (2014)

23. Lawrence, N.D., Urtasun, R.: Non-linear matrix factorization with gaussian processes. In: Proceedings of the 26th Annual International Conference on Machine Learning. ACM, pp. 601–608 (2009)

24. Lim, Y.J., Teh, Y.W.: Variational bayesian approach to movie rating prediction. In: Proceedings of KDD Cup and Workshop, vol. 7, pp. 15–21 (2007)

25. Luo, Y., Liu, T., Tao, D., Xu, C.: Multiview matrix completion for multilabel image classification. IEEE Trans. Image Process. 24(8), 2355–2368 (2015)

26. Mai, T.T.: Bayesian matrix completion with a spectral scaled student prior: theoretical guarantee and efficient sampling. (2021a). arXiv preprint arXiv:2104.08191

27. Mai, T.T.: dbMC: Confidence Interval for Matrix Completion via De-Biased Estimator. R package version 1.0.0 (2021b)

28. Mai, T.T.: An efficient adaptive mcmc algorithm for pseudo-bayesian quantum tomography. Comput. Stat., 1–17 (2022)

29. Mai, T.T., Alquier, P.: A Bayesian approach for noisy matrix completion: optimal rate under general sampling distribution. Electron. J. Stat. 9(1), 823–841 (2015)

30. Mai, T.T., Alquier, P.: Pseudo-Bayesian quantum tomography with rank-adaptation. J. Stat. Plan. Infer. 184, 62–76 (2017)

31. Mazumder, R., Hastie, T., Tibshirani, R.: Spectral regularization algorithms for learning large incomplete matrices. J. Mach. Learn. Res. 11, 2287–2322 (2010)

32. McElreath, R.: Statistical Rethinking: A Bayesian Course with Examples in R and Stan. CRC press, Boca Raton (2020)

33. Negahban, S., Wainwright, M.J.: Restricted strong convexity and weighted matrix completion: optimal bounds with noise. J. Mach. Learn. Res. 13, 1665–1697 (2012)

34. Recht, B., Ré, C.: Parallel stochastic gradient algorithms for large-scale matrix completion. Math. Program. Comput. 5(2), 201–226 (2013)

35. Rendle, S., Zhang, L., Koren, Y.: On the difficulty of evaluating baselines: A study on recommender systems (2019). arXiv preprint arXiv:1905.01395

36. Rendle, S., Krichene, W., Zhang, L., Anderson, J.: Neural collaborative filtering vs. matrix factorization revisited. In: Fourteenth ACM Conference on Recommender Systems, pp. 240–248 (2020)

37. Salakhutdinov, R., Mnih, A.: Bayesian probabilistic matrix factorization using markov chain monte carlo. In: Proceedings of the 25th International Conference on Machine learning. ACM, pp. 880–887 (2008)

38. Xiong, L., Chen, X., Huang, T.-K., Schneider, J., Carbonell, J.G.: Temporal collaborative filtering with bayesian probabilistic tensor factorization. In: Proceedings of the 2010 SIAM International Conference on Data Mining. SIAM, pp. 211–222 (2010)

39. Yang, L., Fang, J., Duan, H., Li, H., Zeng, B.: Fast low-rank Bayesian matrix completion with hierarchical gaussian prior models. IEEE Trans. Signal Process. 66(11), 2804–2817 (2018)

40. Zhou, M., Wang, C., Chen, M., Paisley, J., Dunson, D., Carin, L.: Nonparametric bayesian matrix completion. In: 2010 IEEE Sensor Array and Multichannel Signal Processing Workshop. IEEE, pp. 213–216 (2010)

Acknowledgements

The author would like to thank the editor and the anonymous referee, who kindly reviewed the earlier version of the manuscript, for providing valuable suggestions and enlightening comments that helped improve the current version of the paper. TTM is supported by the Norwegian Research Council grant number 309960 through the Centre for Geophysical Forecasting at NTNU. The author would like to thank Pierre Alquier for kindly providing useful feedback on a first draft of this paper.

Funding

Open access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital - Trondheim University Hospital)

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mai, T.T. Simulation comparisons between Bayesian and de-biased estimators in low-rank matrix completion. METRON 81, 193–214 (2023). https://doi.org/10.1007/s40300-023-00239-2
