Abstract

This paper documents the results from the highly successful Lunar flashlight Optical Navigation Experiment with a Star tracker (LONEStar). Launched in December 2022, Lunar Flashlight (LF) was a NASA-funded technology demonstration mission. After a propulsion system anomaly prevented capture in lunar orbit, LF was ejected from the Earth-Moon system and into heliocentric space. NASA subsequently transferred ownership of LF to Georgia Tech to conduct an unfunded extended mission to demonstrate further advanced technology objectives, including LONEStar. From August to December 2023, the LONEStar team performed on-orbit calibration of the optical instrument and a number of different OPNAV experiments. This campaign included the processing of nearly 400 images of star fields, Earth and Moon, and four other planets (Mercury, Mars, Jupiter, and Saturn). LONEStar provided the first on-orbit demonstrations of heliocentric navigation using only optical observations of planets. Of special note is the successful in-flight demonstration of (1) instantaneous triangulation with simultaneous sightings of two planets with the LOST algorithm and (2) dynamic triangulation with sequential sightings of multiple planets.



1 Introduction

Lunar Flashlight (LF) was a NASA technology demonstration mission [1] launched in December 2022 that had a secondary science goal of searching for water ice on the Moon [2]. LF successfully completed all of its primary objectives as a technology demonstration mission. However, following a propulsion system anomaly, the spacecraft was unable to reach the originally planned lunar orbit and the secondary (non-mandatory) science goal was lost. The vehicle instead executed an Earth flyby in May 2023 before being ejected from the Earth-Moon system and into its present heliocentric orbit. Fortunately, despite some of the challenges encountered with the innovative propulsion system, the vehicle was otherwise fully operational. Consequently, as part of an extended mission through the Georgia Institute of Technology (GT), the Lunar Flashlight team identified optical navigation as a compelling technology demonstration experiment that could be completed along the vehicle’s Earth-Moon departure trajectory. This investigation was designated the LF Optical Navigation Experiment with a Star tracker (LONEStar) and became a primary objective of the GT-led extended mission of the Lunar Flashlight spacecraft.

The art of optical navigation (OPNAV) has a colorful history [3, 4] and has played a pivotal role in the success of many planetary exploration missions, including Voyager [5, 6], Cassini [7, 8], New Horizons [9], Artemis I [10], and many others. A number of other missions have demonstrated autonomous (onboard) OPNAV during encounters with small bodies (e.g., asteroids, comets), including Deep Impact [11], OSIRIS-REx [12], and DART [13]. With the exception of Deep Space 1 (DS1) [14, 15], however, few missions have explored the efficacy of autonomous OPNAV in heliocentric orbit, very far away from the observed celestial bodies. This idea was considered for ESA's Smart-1 mission, but never actually demonstrated in flight [16]. At the time of writing, DS1 and Lunar Flashlight are the only two missions to have successfully demonstrated OPNAV-only orbit determination in heliocentric space.

The DS1 AutoNav and LONEStar experiments both navigated using line-of-sight (LOS) observations to unresolved celestial bodies. However, the results of these two projects differ in three important ways. First, DS1 AutoNav processed images and performed state estimation entirely onboard the spacecraft (the first of its kind), whereas LONEStar was conducted by human analysts on Earth. Second, the DS1 team chose to collect images of nearby asteroids (instead of distant planets) against star field backgrounds to achieve better absolute navigation performance. For LONEStar, however, no nearby asteroids were visible during the investigation and the LF team instead focused on collecting images of planets. As a consequence, LONEStar became the first flight demonstration of an OPNAV-only orbit solution using exclusively planet observations in heliocentric space. Third, DS1 AutoNav directly processed LOS measurements in a navigation filter. While this was also done for LONEStar (see Sect. 7), many of the LONEStar experiments focused on explicit triangulation for instantaneous localization or initial orbit determination. Thus, LONEStar is the first demonstration of heliocentric spacecraft localization by (1) absolute triangulation using the simultaneous observation of multiple planets (Mercury and Mars, see Sect. 5.3), (2) absolute triangulation with sequential images of two planets (Jupiter and Saturn, see Sect. 5.4), and (3) dynamic triangulation using sequential observations of multiple planets over a long period of time (Jupiter and Saturn, see Sect. 5.5).

Although LONEStar is the first flight demonstration of planet-based celestial triangulation, this fundamental idea has been proposed since at least the 1950s [17,18,19,20]. After a period of stagnation, the idea of OPNAV by explicit triangulation gained significant traction in the last decade [21,22,23,24,25,26]. LONEStar builds on this legacy of innovation, while also providing an important experimental verification of the Linear Optimal Sine Triangulation (LOST) method recently theorized by Henry and Christian [25, 26].

2 The Lunar Flashlight Mission

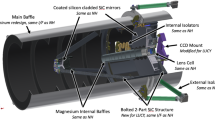

Lunar Flashlight was conceived as a small satellite technology demonstration mission developed by the Jet Propulsion Laboratory (JPL) and funded by NASA’s Space Technology Mission Directorate (STMD) [2, 27]. Lunar Flashlight’s primary mission objectives were to demonstrate several advanced small satellite technologies on a cislunar mission. Innovative components of the 6U CubeSat (see Fig. 1) included: (1) a radiation tolerant microprocessor, (2) a Deep Space Network (DSN) compatible transceiver, (3) a relatively high-power infrared laser, and (4) an experimental monopropellant propulsion system. The mission had an additional science goal to survey the lunar south pole region for evidence of surface ice in permanently shadowed regions using the onboard infrared laser. This latter science goal required orbit insertion into a highly elliptic lunar orbit.

Fig. 1 The Lunar Flashlight spacecraft. Shown here are the major spacecraft subsystems and components [28] (left) and the spacecraft during integration and testing at Georgia Tech (right)

The Lunar Flashlight team was a partnership among several different institutions. JPL managed the project and developed several of the key subsystems, including the radiation tolerant microprocessor, radio transceiver, and laser instrument. NASA’s Marshall Space Flight Center and Georgia Tech designed and built the experimental propulsion system [29, 30]. Additionally, spacecraft integration, testing, and mission operations were conducted at Georgia Tech [1].

Lunar Flashlight was launched on 2022-DEC-11 and deployed into a trajectory which would take it to cislunar space. The original concept of operations (see Fig. 2) required multiple trajectory correction maneuvers (TCMs) to insert the spacecraft into a target near rectilinear halo orbit (NRHO) around the Moon. However, an anomaly with the propulsion system prevented the spacecraft from achieving the target NRHO. After several attempts to recover the propulsion system, the official mission was declared over in May 2023 after successfully completing all of its technology demonstration objectives. After an Earth flyby, the spacecraft's trajectory exited the Earth-Moon system and entered into heliocentric orbit (see Fig. 3). The current LF orbit has a 15-year synodic period with Earth, and the spacecraft will return to the Earth-Moon vicinity in 2038. Other than the propulsion system, the spacecraft was functioning nominally, and NASA officially transferred the spacecraft to Georgia Tech for additional experimentation in August 2023 [31].

Fig. 2 Original Lunar Flashlight mission concept of operations [32]

Fig. 3 Visualization of as-flown Lunar Flashlight trajectory as seen in Earth-centered ecliptic International Celestial Reference Frame (ICRF). After about 5 months within the Earth-Moon system (left), LF was ejected into a heliocentric trajectory (right). Shown in dark blue is the portion of the trajectory corresponding to the LONEStar imaging campaign. Tick marks indicate the LONEStar elapsed time relative to the reference epoch 2023-JUL-22 00:00:00 UTC. The Moon's orbit is shown in red

The GT-led LF extended mission began in mid-2023 and lasted until December 2023. Since an abundance of activities had been conducted using the propulsion unit during the primary mission, the on-orbit demonstration of a number of novel OPNAV techniques was chosen as the primary extended mission objective. Results from other mission activities are available elsewhere [28].

LF was controlled directly by GT students from a Mission Operations Center (MOC) located on the GT campus in Atlanta, Georgia (Fig. 4) [33]. To the authors' knowledge, Lunar Flashlight is the first interplanetary spacecraft to be operated by an operations team composed entirely of students. During the course of the LF mission, GT conducted more than 500 Deep Space Network (DSN) contacts with the spacecraft, consisting of both “autonomous” and “operator-in-the-loop” interactions.

Lunar Flashlight operations relied on Earth-based radiometric navigation with the DSN. The JPL-led Lunar Flashlight Navigation Team processed two-way Doppler, two-way ranging, and delta-differential one-way ranging (DDOR) navigation observables from the DSN between launch (2022-DEC-11) and about 2023-JUN-27 14:00:00 UTC. The portion of the trajectory covering the LONEStar experiment, however, is based primarily on DSN observations between 2023-MAY-24 and 2023-JUN-27. The final DSN-based navigation solution was delivered in late June 2023 [34]. This product served as the reference for LONEStar and is referred to as the reference trajectory from this point forward. LF did not attempt to use any onboard thrusters after 2023-MAY-05, which was 19 days before the start of the final DSN tracking data arc. As a result, the reference trajectory was not perturbed by propulsive maneuvers and was found to be valid for the entire LONEStar investigation.

3 OPNAV Instrument

3.1 Overview of Instrument

The Blue Canyon Technologies XACT-50 is an essential component of the LF guidance, navigation, and control (GNC) system [35, 36]. While intended for attitude determination, the star tracker within the XACT-50 module is also capable of acting as a camera. This instrument has a \(1024 \times 1280\) pixel image sensor with overall specifications as shown in Table 1. The camera is mounted on the −Y face of the LF bus, with the boresight rotated by 10 degrees from the face normal about the LF +X axis (i.e., canted away from the solar panels), as shown in Fig. 5.

Consistent with Fig. 5, and following the conventions of Ref. [37], the camera frame is defined to have the + Z axis along the boresight direction (positive out of the camera). Moreover, when looking out of the camera at the image plane, the +X axis is to the right (direction of increasing image columns) and the +Y axis is down (direction of increasing image rows). This orientation is illustrated in Fig. 6.

The XACT contains a buffer capable of storing up to five images at a time. Once the buffer is full, the five images must be offloaded to the LF flight computer before additional images can be collected. This set of five sequential images is referred to as an Image Block. The time between sequential images within a single Image Block varied, but could be as short as about five seconds. An entire Image Block sequence (including five images and associated metadata) took about 110 minutes to transfer from the XACT buffer to the LF flight computer. To ensure a complete transfer of data, the start of any new Image Block was always scheduled at least 10 minutes after the conclusion of data transfer for the previous Image Block. As a result, two sequential Image Blocks were always separated by at least 120 minutes. Multiple coordinated Image Blocks in a row are referred to as an OPNAV Pass.

In most cases, each LONEStar image was given an alphanumeric label with the first three numbers corresponding to the Image Block, followed by a letter a-e denoting the image number within that imaging block. For example, image 536b is the second image within Image Block 536. In some instances, the target was changed in the middle of an Image Block—for example, image 593b (the second image within Image Block 593) captured Jupiter, but the subsequent three images in the Image Block captured Saturn. In these cases, the number is iterated by one to correspond to a new target, and the letter reindexes from a (therefore, these final three images capturing Saturn were labeled 594a/b/c).
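The labeling rule is easy to misapply when the target changes partway through a block. The Python sketch below encodes it under two assumptions. First, labels are always three digits followed by a single letter a–e, and anything else is rejected. Second, each image is tagged with its pointing target. `parse_label` and `label_block` are hypothetical helper names and are not part of any LONEStar software.

```python
import re
from typing import List, NamedTuple


class ImageLabel(NamedTuple):
    block: int  # Image Block number (bumped by one after a mid-block target change)
    index: int  # 1-based position of the image: 'a' -> 1, ..., 'e' -> 5


# Hypothetical pattern: exactly three digits followed by one letter a-e.
_LABEL_RE = re.compile(r"^(\d{3})([a-e])$")


def parse_label(label: str) -> ImageLabel:
    """Split a LONEStar-style label such as '536b' into (block, index)."""
    m = _LABEL_RE.match(label.strip())
    if m is None:
        raise ValueError(f"not a LONEStar-style image label: {label!r}")
    return ImageLabel(int(m.group(1)), ord(m.group(2)) - ord("a") + 1)


def label_block(block: int, targets: List[str]) -> List[str]:
    """Label the images of one Image Block in capture order.

    Whenever the pointing target changes, the number is incremented by one
    and the letter restarts at 'a'.
    """
    labels: List[str] = []
    number, letter = block, 0
    for k, target in enumerate(targets):
        if k > 0 and target != targets[k - 1]:
            number, letter = number + 1, 0
        labels.append(f"{number:03d}{chr(ord('a') + letter)}")
        letter += 1
    return labels
```

Apply `label_block(593, ["Jupiter", "Jupiter", "Saturn", "Saturn", "Saturn"])` to the Image Block 593 example, treating the first image as part of the Jupiter pointing. It returns `['593a', '593b', '594a', '594b', '594c']`.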

3.2 Lunar Flashlight Imaging Operations

LONEStar included several OPNAV experiments. Each of these experiments required a particular sequencing of images within each Image Block and OPNAV Pass to achieve the desired objectives. The timing, pointing, and camera settings for each image were collaboratively designed between the LF Operations and OPNAV teams.

3.2.1 LONEStar Imaging Campaign

The LONEStar Imaging Campaign (see Fig. 7) was scheduled from late July 2023 through late November 2023. The campaign began by capturing a series of star field images for geometric calibration (see Sect. 4.4). After acquiring star field images, attention was turned to OPNAV activities. The Earth and Moon (usually close enough to one another to be seen together in a single image) were imaged regularly for the remainder of the LONEStar campaign. Favorable geometry enabled Mercury and Mars to be captured in a single frame for most of August 2023. Following the conclusion of the Mercury and Mars imaging campaign, LONEStar turned to imaging Jupiter and Saturn. Since the apparent angle between Jupiter and Saturn was too large for concurrent imaging (varying from 66 to 72 deg throughout the campaign), these planets were imaged sequentially. The LONEStar experiments required these images to be taken in close succession, thus dictating that they be collected within a single Image Block (since Image Blocks are separated from one another by at least 120 min). Consequently, within the same Image Block, LF was commanded to capture two images of one planet (either Jupiter or Saturn) and three of the other.

Not all of the images captured were usable for subsequent OPNAV analysis. For each target, many early Image Blocks consisted of sweeps through different exposure times and gains in order to best expose the celestial body. Only a subset of these images capture the target at an appropriate, OPNAV-quality exposure. Thus, the OPNAV results in the following sections sometimes have considerably fewer data points than one might infer from Fig. 7 alone.

A large interruption of the imaging campaign occurred in September (shown in red in Fig. 7). During this time, the spacecraft experienced a safe mode event following a latch-up believed to have been caused by a charged-particle radiation event. The recovery and subsequent investigation took 15 days, during which no images were captured or downlinked.

3.2.2 Image Block Design

An ideal OPNAV image contains a well-exposed celestial body in the foreground against a backdrop of well-exposed stars to anchor the camera's inertial attitude. In most cases, however, the dynamic range of the camera is insufficient to detect stars without overexposing the planets. The usual solution, which was employed by LONEStar, is to bracket short-exposure OPNAV images (where no stars are visible) on both sides with longer-exposure star images (where the celestial body is overexposed). The attitude of the intermediate OPNAV image is then estimated by interpolating between the attitudes computed from the surrounding star images. Following this approach, the first and fifth (last) images of each LONEStar Image Block are almost always long-exposure star images. The second, third, and fourth images were typically taken with a much shorter exposure time and/or lower sensor gain setting to capture the OPNAV target without saturation. The time between sequential images in a single Image Block varied, but was never shorter than about 5 s.
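The text does not say which interpolation scheme was used. One common choice, assumed here, is spherical linear interpolation (SLERP) of the attitude quaternions, parameterized linearly in time. This implicitly assumes a constant angular rate between the two bracketing star images. The function names below are illustrative only.

```python
import numpy as np


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions q0 and q1.

    Works with any consistent component ordering (scalar-first or -last);
    t = 0 returns q0 and t = 1 returns q1.
    """
    q0 = np.asarray(q0, float) / np.linalg.norm(q0)
    q1 = np.asarray(q1, float) / np.linalg.norm(q1)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:  # q and -q encode the same rotation; take the shorter arc
        q1, dot = -q1, -dot
    if dot > 0.9995:  # nearly identical attitudes: normalized linear blend is accurate
        q = q0 + t * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)  # half of the rotation angle between the two attitudes
    return (np.sin((1.0 - t) * theta) * q0 + np.sin(t * theta) * q1) / np.sin(theta)


def interpolate_attitude(t_star0, q_star0, t_star1, q_star1, t_opnav):
    """Attitude at t_opnav from the star-image attitudes that bracket it.

    Interpolating linearly in time assumes a constant angular rate between
    the two star images.
    """
    frac = (t_opnav - t_star0) / (t_star1 - t_star0)
    return slerp(q_star0, q_star1, frac)
```

Suppose the two star images differ by a 90° rotation about one axis. An OPNAV image taken halfway between them is then assigned the 45° attitude.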

For each image within an Image Block, the LF Operations and OPNAV teams determined the camera boresight direction necessary to acquire the desired OPNAV image. Along with vehicle-level constraints (e.g., the Sun keep-out zone (KOZ) of the laser payload's detector), the OPNAV pointing direction was used to construct a commanded LF body-frame quaternion. This commanded quaternion was visually inspected using the JPL-developed TBALL software application. If the LF Operations team found it acceptable, it was added to the Image Block sequence.

3.2.3 Sequencing, Uplink/Downlink, and Formatting

Once an Image Block sequence was developed by the LF Operations and OPNAV teams, it was incorporated into the LF Master Events Timeline (MET). The MET was then parsed by a customized LF sequence generator that produced the commands that were uplinked to the spacecraft during each human-in-the-loop DSN contact.

Following the successful completion of an LF OPNAV Image Block sequence, the LF Operations team facilitated the downlink of each image via the DSN. Each image downlink was scheduled into the MET, which allowed the team to easily adjust for the current downlink data rate, other scheduled activities, and contact duration. The time required to downlink a single image varied from 7.3 min (at 32,000 bits per second) to 58.3 min (at 4,000 bits per second). See Fig. 8 for a timeline of the data rates used to downlink images during LONEStar. The team extended the time spent at higher data rates by commanding the spacecraft to point its antenna toward Earth during contacts. The effect of this change is visible as the jump back up to 16,000 bits per second around day 94 of the LONEStar experiment.
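The quoted downlink durations are consistent with the raw size of one packed image. The check below makes two assumptions. First, each image is 1024 × 1280 pixels stored as three 10-bit pixels per 4 bytes, which is the packing used for the downlinked files. Second, framing, headers, and protocol overhead are ignored.

```python
import math

ROWS, COLS = 1024, 1280             # XACT image sensor size in pixels
PIXELS_PER_WORD, WORD_BYTES = 3, 4  # three 10-bit pixels packed into every 4 bytes


def packed_image_bits(rows: int = ROWS, cols: int = COLS) -> int:
    """Bits in one packed image, ignoring headers and link-layer overhead."""
    words = math.ceil(rows * cols / PIXELS_PER_WORD)
    return words * WORD_BYTES * 8


def downlink_minutes(rate_bps: float) -> float:
    """Idealized time to downlink one packed image at the given bit rate."""
    return packed_image_bits() / rate_bps / 60.0


for rate in (32_000, 16_000, 4_000):
    print(f"{rate} bps: {downlink_minutes(rate):.1f} min")
# prints "32000 bps: 7.3 min", "16000 bps: 14.6 min", "4000 bps: 58.3 min"
```

The near-exact agreement with the 7.3 and 58.3 min figures suggests that the per-image link overhead was small. That inference rests on the assumptions above.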

Images were downlinked as a binary file, with three 10-bit pixels packed into every 4 bytes. These binary files were then unpacked and converted into TIFF images for subsequent processing. Additionally, the spacecraft telemetry, event records, and sequences were downlinked and parsed to provide information within a metadata file for each downlinked image including the commanded quaternion, image capture time, camera settings, and sequenced commands.
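The exact bit layout of the packed files is not given. The sketch below assumes one plausible layout: three pixels in the low 30 bits of each little-endian 32-bit word, followed by two padding bits. The shifts and byte order would need to be adapted to the real XACT format. The `pack_10bit` inverse is included only to make the round trip testable.

```python
import numpy as np


def unpack_10bit(raw: bytes, rows: int, cols: int) -> np.ndarray:
    """Unpack three 10-bit pixels per 32-bit word into a (rows, cols) uint16 image.

    ASSUMED layout (hypothetical, not the documented XACT format): each
    little-endian 32-bit word holds pixel k in bits 10k..10k+9 for k = 0, 1, 2,
    and the top two bits are padding.
    """
    n_pix = rows * cols
    n_words = -(-n_pix // 3)  # ceiling division
    words = np.frombuffer(raw, dtype="<u4", count=n_words)
    pix = np.empty((n_words, 3), dtype=np.uint16)
    for k in range(3):
        pix[:, k] = (words >> (10 * k)) & 0x3FF  # keep the 10 bits of pixel k
    return pix.reshape(-1)[:n_pix].reshape(rows, cols)


def pack_10bit(img: np.ndarray) -> bytes:
    """Inverse of unpack_10bit under the same assumed layout."""
    flat = img.astype(np.uint32).reshape(-1)
    flat = np.concatenate([flat, np.zeros((-len(flat)) % 3, np.uint32)])  # pad to a multiple of 3
    trip = flat.reshape(-1, 3)
    words = trip[:, 0] | (trip[:, 1] << 10) | (trip[:, 2] << 20)
    return words.astype("<u4").tobytes()
```

After unpacking, the array can be written to TIFF with any imaging library that supports 16-bit grayscale.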

4 Camera Calibration

Accurate OPNAV requires a well-calibrated camera. Of particular concern is the geometric calibration of the camera, which establishes the relationship between three-dimensional (3D) directions and two-dimensional (2D) image points. Such a correspondence enables observed points to be mapped to physical line-of-sight (LOS) directions, which in turn may be processed by the desired OPNAV algorithms. Following standard practice, in-flight geometric calibration for LONEStar was performed using images of star fields.

4.1 Point Spread Function (PSF) Characterization

As is typical for star trackers [38], the XACT camera is intentionally defocused to improve star centroiding performance. The shape of the resulting blur (i.e., the impulse response in the spatial domain) is described by the point spread function (PSF), which is often approximated with a bivariate Gaussian distribution [39, 40]. Characterization of the PSF shape is an essential camera calibration task. Indeed, many OPNAV image processing techniques (e.g., subpixel horizon localization [41], image restoration by deconvolution) require knowledge of the PSF as an input to the algorithm.

Following historical precedent, the PSF was initially modeled as a circularly symmetric, bivariate Gaussian above a constant background

where \(J_B\) is the background brightness, \(J_0\) is the PSF amplitude, \((u_c,v_c)\) is the PSF center location, (u, v) are the coordinates in the image defined in accordance with Fig. 6, and \(\sigma\) is the PSF width. The units of \(J,J_B,J_0\) are assumed to be digital number (DN).

The fit residuals for this model, however, were not always as low as desired, and so a bivariate Laplace distribution (which has higher kurtosis) was also considered

where w is the scale parameter of the Laplace distribution.
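For reference, the two models can be written in a standard form under two assumptions. First, \(J_0\) scales an unnormalized profile. Second, the Laplace model is taken to be radially symmetric; a separable form in \(|u-u_c|+|v-v_c|\) would also be possible.

```latex
\begin{aligned}
J(u,v) &= J_B + J_0 \exp\!\left[-\frac{(u-u_c)^2 + (v-v_c)^2}{2\sigma^2}\right] && \text{(Gaussian)}\\
J(u,v) &= J_B + J_0 \exp\!\left[-\frac{\sqrt{(u-u_c)^2 + (v-v_c)^2}}{w}\right] && \text{(Laplace, radial form assumed)}
\end{aligned}
```

Along any line through the center, the Laplace profile falls to half its peak at a distance \(w\ln 2\), so its FWHM is \(2\ln(2)\,w\). The Gaussian FWHM is \(2\sqrt{2\ln 2}\,\sigma \approx 2.355\,\sigma\).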

For both models, the five free parameters of the model are estimated by minimizing the residuals between the predicted and observed PSFs in small patches centered about each star. To match against the observed PSF (which is quantized), the continuous PSF is numerically integrated over the bounds of each pixel. The resulting nonlinear least-squares problem is solved with the Levenberg–Marquardt algorithm (LMA). Example results from two stars used in the calibration process are shown in Fig. 9.
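The fitting procedure can be sketched in Python as follows. The per-pixel integral is approximated with a 5 × 5 midpoint rule, and the fit calls SciPy's `least_squares` with `method="lm"` (MINPACK's Levenberg–Marquardt). Several choices are assumptions made for illustration: the subsampling density, the initial guess, the convention that pixel centers sit at integer coordinates, and the radially symmetric Laplace form.

```python
import numpy as np
from scipy.optimize import least_squares

SUB = 5  # sub-samples per pixel axis (midpoint rule for the pixel integral)


def psf_model(params, shape, kind="laplace"):
    """Pixel-integrated PSF on a (rows, cols) patch.

    params = (J_B, J_0, u_c, v_c, width); width is sigma (Gaussian) or w (Laplace).
    Assumes pixel centers sit at integer (u, v) = (column, row) coordinates.
    """
    jb, j0, uc, vc, width = params
    rows, cols = shape
    d = (np.arange(SUB) + 0.5) / SUB - 0.5  # sub-sample offsets within one pixel
    u = np.arange(cols)[None, :, None, None] + d[None, None, None, :]
    v = np.arange(rows)[:, None, None, None] + d[None, None, :, None]
    r2 = (u - uc) ** 2 + (v - vc) ** 2  # shape (rows, cols, SUB, SUB)
    if kind == "gauss":
        prof = np.exp(-r2 / (2.0 * width ** 2))
    else:  # assumed radially symmetric Laplace form
        prof = np.exp(-np.sqrt(r2) / width)
    return jb + j0 * prof.mean(axis=(2, 3))  # sub-sample mean approximates the pixel integral


def fit_psf(patch, kind="laplace"):
    """Levenberg-Marquardt fit of the five PSF parameters to an image patch."""
    patch = np.asarray(patch, float)
    bg = np.median(patch)
    row, col = np.unravel_index(np.argmax(patch), patch.shape)  # start at brightest pixel
    x0 = [bg, patch.max() - bg, float(col), float(row), 1.0]
    res = least_squares(lambda p: (psf_model(p, patch.shape, kind) - patch).ravel(),
                        x0, method="lm")
    x = res.x.copy()
    x[4] = abs(x[4])  # the Gaussian width enters squared, so report it as positive
    return x


def fwhm(width, kind="laplace"):
    """FWHM of the continuous profile along a line through its center."""
    if kind == "gauss":
        return 2.0 * np.sqrt(2.0 * np.log(2.0)) * width
    return 2.0 * np.log(2.0) * width
```

For instance, `fwhm(0.72)` evaluates to about 0.998 pixels.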

Fig. 9 Comparison of best-fit Gaussian PSF (left) and best-fit Laplace PSF (middle) with actual LF image patches (right). Top row shows an example patch of LF image 191g centered around star HIP 52419 (\(\theta\) CAR, visual magnitude of 2.74). Bottom row shows an example patch of LF image 432g centered around star HIP 68815 (visual magnitude of 5.69)

The PSFs for 108 stars from 19 images were used to characterize the PSF throughout the sensor FOV. The post-fit residuals were found to be smaller for the Laplace PSF than for the Gaussian PSF for 107 of the 108 stars. The PSF estimates were rather sparse and noisy (for both the Gaussian and Laplace models), and so a median filter was used to reject outliers and construct a smoothed visualization of PSF variation. Results from this analysis for the Laplace PSF are shown in Fig. 10. The average PSF width is found to be about \(w = 0.72\) pixels, which corresponds to a full width at half maximum (FWHM) of about \(2\ln(2)\,w = 2\ln(2)(0.72) \approx 1.0\) pixel.

4.2 Camera Model

The camera calibration procedure aims to estimate the free parameters in a model that maps directions in the International Celestial Reference Frame (ICRF) into image pixel coordinates. Thus, before proceeding further, it is appropriate to describe this model in more detail.

Consider a celestial object (e.g., star, planet) with an ICRF direction given by \({\varvec{{e}}}_i\). Since the LF spacecraft is moving at a speed of around 29-30 km/s relative to the Solar System Barycenter (SSB), it is important to account for stellar aberration. Defining the LF spacecraft’s ICRF velocity as \({\varvec{{v}}}_I\), the observer’s velocity as a fraction of the speed-of-light may be computed as \(\varvec{\beta }= {\varvec{{v}}}_I / c\). Thus, to first order in \(||\varvec{\beta }||\), the apparent ICRF direction \({\varvec{{e}}}_i'\) is given by [42]

The apparent ICRF direction \({\varvec{{e}}}_i'\) must be rotated into the camera frame. Let the rotation from ICRF to the camera frame be given by \({\varvec{{T}}}_C^I\), such that the apparent LOS direction in the camera frame \({\varvec{{a}}}_i'\) is given by

The apparent direction in the camera frame \({\varvec{{a}}}_i'\) may be projected onto the image plane with the pinhole camera model, which may be compactly represented with the proportionality relationship [37]

where \(\bar{{\varvec{{x}}}}_i\) is the apparent image plane location in homogeneous coordinates. Recall here that the image plane is a fictitious plane located outside the camera at \(z_C=1\) (see Fig. 6).

The idealized image plane coordinates \(\bar{{\varvec{{x}}}}_i\) must then be perturbed due to imperfections in the optical system. The LONEStar project accounts for this with the Brown–Conrady model [43,44,45], which is the same model used for the Orion OPNAV camera on Artemis I [46, 47] and OSIRIS-REx TAGCAMS [48]. That is, the distorted (observed) image plane coordinates may be computed as

where \(r_i^2=x_i^2+y_i^2\). This can be written in homogeneous coordinates as \(\bar{{\varvec{{x}}}}_{d_i}=[{\varvec{{x}}}_{d_i};1]\). Finally, the distorted image plane coordinates may be converted to pixel coordinates with the camera calibration matrix \({\varvec{{K}}}\) as

where \(d_x\) and \(d_y\) are the ratio of focal length to pixel pitch and \((u_p, v_p)\) are the pixel coordinates where the camera boresight pierces the image plane. If the pixels are square, then \(d_x = d_y\). Moreover, assuming small angles, the instantaneous field of view (IFOV) of a single pixel is \(IFOV \approx 1 / d_x\) radians.

Thus, in total, the camera model used for LONEStar has nine free parameters: five lens distortion parameters from the Brown-Conrady model (\(k_1,k_2,k_3,p_1,p_2\)) and four parameters for transforming from image plane to pixel coordinates (\(d_x,d_y,u_p,v_p\)).
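The chain of mappings in this section can be summarized in a short Python sketch. It makes three assumptions rather than transcribing the LONEStar code. First, the decentering terms follow the common OpenCV-style sign convention. Second, \({\varvec{{K}}}\) has zero skew. Third, aberration uses the first-order form \({\varvec{{e}}}' \approx {\varvec{{e}}} + \varvec{\beta } - ({\varvec{{e}}}^T\varvec{\beta }){\varvec{{e}}}\) followed by renormalization. All function names are illustrative.

```python
import numpy as np

C_KM_S = 299_792.458  # speed of light, km/s


def aberrate(e, v_kms):
    """First-order stellar aberration: e' ~ e + beta - (e . beta) e, renormalized."""
    beta = np.asarray(v_kms, float) / C_KM_S
    e = np.asarray(e, float)
    ep = e + beta - np.dot(e, beta) * e
    return ep / np.linalg.norm(ep)


def brown_conrady(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Distort ideal image-plane coordinates (OpenCV-style signs assumed)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def calibration_matrix(dx, dy, up, vp):
    """Zero-skew camera calibration matrix K."""
    return np.array([[dx, 0.0, up], [0.0, dy, vp], [0.0, 0.0, 1.0]])


def project(e_icrf, T_CI, K, dist=None, v_kms=(0.0, 0.0, 0.0)):
    """ICRF unit vector -> pixel (u, v): aberration, rotation, pinhole, distortion, K."""
    a = T_CI @ aberrate(e_icrf, v_kms)  # apparent LOS in the camera frame
    if a[2] <= 0.0:
        raise ValueError("direction is behind the camera")
    x, y = a[0] / a[2], a[1] / a[2]  # pinhole: image plane at z_C = 1
    xd, yd = brown_conrady(x, y, **(dist or {}))
    u, v, _ = K @ np.array([xd, yd, 1.0])
    return u, v
```

At 30 km/s, a star 90° from the velocity vector is displaced by about \(1.0 \times 10^{-4}\) rad (roughly 20.6 arcsec) in this first-order model.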

4.3 Image Processing

The calibration procedure requires as an input the measured pixel locations of stars in an image. This is an image processing task which aims to find the center of brightness of purposefully defocused stars. The LF OPNAV team implemented a standard star detection and centroiding algorithm.

Many star field images, especially those near the Sun or Earth, experienced a noticeable amount of stray light. This was expected given the instrument's specifications. To remove the resulting background gradient, a field flattening operation was performed: a median filter was applied to construct a background image, which was then subtracted from the original image. A global threshold was then applied to the flattened image to form a binary image containing clusters of potential stars. Next, a connected components analysis was used to find contiguous groups of pixels in the binary image, and only groups having \(n \ge 9\) total pixels were considered candidate stars. Final star centroids were then computed for each group of pixels using a simple center of brightness (COB) computation [49, 50].

where \(DN_i\) is the digital number representing the intensity of the i-th pixel in the group. Non-star centroids were rejected by reprojecting the Hipparcos star catalog into the image (using the best available attitude estimate) and only retaining measured centroids that corresponded to a catalog star. Catalog correspondence was determined via a distance threshold of 25 pixels (arbitrarily selected to be large enough to accommodate differences between the actual and commanded attitudes) paired with a nearest-neighbor ratio test, enforcing that the distance to the second-closest catalog star must be at least twice as great. An example output of this procedure is shown in Fig. 11, which shows a \(9 \times 9\) section of a star field image centered on a star candidate.
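The detection, centroiding, and catalog-matching steps can be sketched with NumPy and `scipy.ndimage`. Three values come from the text: the nine-pixel minimum, the 25-pixel gate, and the factor-of-two ratio test. The median-filter window and the DN threshold are hypothetical tuning values. The COB weights are taken to be background-subtracted DN values, which is an assumption. The reprojected catalog positions are passed in as an input rather than computed here.

```python
import numpy as np
from scipy import ndimage


def detect_stars(img, median_size=31, threshold=50.0, min_pixels=9):
    """Return COB centroids (u, v) = (column, row) of candidate stars.

    median_size and threshold (DN above background) are hypothetical values;
    the min_pixels = 9 rule follows the text.
    """
    img = np.asarray(img, float)
    background = ndimage.median_filter(img, size=median_size)  # stray-light estimate
    flat = img - background                                   # field flattening
    labels, n = ndimage.label(flat > threshold)               # connected components
    centroids = []
    for lab in range(1, n + 1):
        rows, cols = np.nonzero(labels == lab)
        if rows.size < min_pixels:
            continue  # too few pixels to be a defocused star
        w = flat[rows, cols]  # COB weights (background-subtracted DN)
        centroids.append((np.sum(w * cols) / np.sum(w), np.sum(w * rows) / np.sum(w)))
    return np.array(centroids).reshape(-1, 2)


def match_to_catalog(measured, predicted, max_dist=25.0, ratio=2.0):
    """Nearest-neighbor matching with a distance gate and a ratio test.

    predicted: (m, 2) catalog stars reprojected with the best available attitude.
    Returns (measured_index, catalog_index) pairs.
    """
    predicted = np.asarray(predicted, float)
    pairs = []
    if len(predicted) == 0:
        return pairs
    for i, c in enumerate(np.asarray(measured, float)):
        d = np.linalg.norm(predicted - c, axis=1)
        order = np.argsort(d)
        nearest = d[order[0]]
        second = d[order[1]] if len(d) > 1 else np.inf
        if nearest <= max_dist and second >= ratio * nearest:
            pairs.append((i, int(order[0])))
    return pairs
```

Rejecting ambiguous matches with the ratio test trades a few lost stars for robustness in dense fields. This matters because the commanded attitude can differ noticeably from the actual one.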

Fig. 11 LF star centroiding was performed using a center of brightness (COB) algorithm. A segment of LF image 191d centered around star HIP 56561 (\(\lambda\) CEN, visual magnitude of 3.11) is shown on the left. The star border used by the COB algorithm (cyan outline) and the corresponding centroid (cyan dot) are shown on the right. Each pixel is annotated with its digital number (DN)

4.4 Geometric Calibration

Geometric calibration is a parameter estimation problem that seeks to find camera model coefficients which bring projections of known star LOS directions into alignment with the imaged candidate stars. The objective here is to estimate (or fix) the nine free parameters in the model described in Sect. 4.2.

Ground truth ICRF star LOS directions were constructed using the Hipparcos catalog [51] and the standard five-parameter model given by [52]

This model accounts for perturbations in the reference SSB direction due to both (1) the star’s proper motion (\(\mu _\alpha\) and \(\mu _\delta\)) since the Hipparcos catalog epoch and (2) the annual parallax (\(\varpi\)) due to LF’s position. Observed star LOS directions were acquired from a total of 35 star field images, with 282 stars uniquely identified across these images using the procedure described in Sect. 4.3.
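A linearized version of the five-parameter model can be written in Python as follows. It assumes Hipparcos conventions: the right-ascension proper motion already includes the \(\cos \delta\) factor, and the catalog epoch is J1991.25, so `dt_years` would be measured from 1991.25. It ignores radial velocity and takes the observer's barycentric position in au. The function and argument names are illustrative.

```python
import numpy as np

MAS = np.pi / (180.0 * 3600.0 * 1000.0)  # one milliarcsecond in radians


def star_direction(ra_deg, dec_deg, pm_ra_mas_yr, pm_dec_mas_yr, plx_mas,
                   dt_years, obs_pos_au):
    """Observer-centred ICRF unit vector of a catalog star (before aberration).

    Linearized five-parameter model: proper motion over dt_years since the
    catalog epoch plus parallax for an observer at barycentric position
    obs_pos_au (ICRF, au). pm_ra_mas_yr is assumed to include cos(dec), as in
    the Hipparcos catalog; radial velocity and light-time terms are ignored.
    """
    a, d = np.radians(ra_deg), np.radians(dec_deg)
    u0 = np.array([np.cos(d) * np.cos(a), np.cos(d) * np.sin(a), np.sin(d)])
    p_hat = np.array([-np.sin(a), np.cos(a), 0.0])  # toward increasing RA
    q_hat = np.array([-np.sin(d) * np.cos(a), -np.sin(d) * np.sin(a), np.cos(d)])  # toward +Dec
    u = (u0
         + dt_years * MAS * (pm_ra_mas_yr * p_hat + pm_dec_mas_yr * q_hat)  # proper motion
         - plx_mas * MAS * np.asarray(obs_pos_au, float))  # parallax from observer offset
    return u / np.linalg.norm(u)
```

For an observer 1 au from the barycenter, a star with a 1 arcsec parallax shifts by up to 1 arcsec. For most catalog stars, the correction is well below that.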

With corresponding Hipparcos ICRF directions \(\{{\varvec{{e}}}_i\}_{i=1}^n\) and measured image centroids \(\{\bar{{\varvec{{u}}}}_i\}_{i=1}^n\) in hand, camera parameter estimation was performed by minimizing the reprojection errors of the Hipparcos star directions into the digital image. This was done by optimizing over the nine parameters of the camera model and corrections to the a priori attitudes of the 35 star field images. This nonlinear least-squares problem was solved using LMA.

LONEStar introduced a few assumptions to reduce the number of estimated camera parameters. First, the sensor pixels were assumed to be square and evenly spaced, leading to \(d_x = d_y\). Furthermore, the optical center was taken to be the geometrical center of the image plane, and thus \(u_p\) and \(v_p\) were considered fixed exactly at the image center. In doing so, errors in boresight location were absorbed into the image attitude corrections as discussed in Ref. [46]. This left \(d_x\) as the only intrinsic parameter to be estimated.

It is also possible to simplify the parameterization of the full Brown-Conrady model. After experimentation similar to that in Ref. [46], it was found that only the decentering coefficients, \(p_1\) and \(p_2\), and the first radial coefficient, \(k_1\), were important. Thus, the LONEStar camera model assumed \(k_2 = k_3 = 0\).

Including image attitude correction in the calibration process is essential and was found to significantly enhance the quality of the in-flight LONEStar calibration.
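The joint estimation can be sketched as a single nonlinear least-squares problem. It covers the reduced intrinsic set \((d_x, k_1, p_1, p_2)\) and one attitude correction per image. In this hypothetical Python version, each image's a priori ICRF-to-camera rotation is corrected by a small rotation vector, and \(u_p, v_p\) are held fixed. The OpenCV-style Brown–Conrady sign convention is again assumed.

```python
import numpy as np
from scipy.optimize import least_squares
from scipy.spatial.transform import Rotation


def project_pixels(a_cam, dx, k1, p1, p2, up, vp):
    """Camera-frame directions (n, 3) -> pixels (n, 2).

    Pinhole projection, Brown-Conrady distortion reduced to k1, p1, p2
    (k2 = k3 = 0, OpenCV-style decentering signs assumed), and square pixels
    (d_y = d_x) with a fixed principal point (up, vp).
    """
    x = a_cam[:, 0] / a_cam[:, 2]
    y = a_cam[:, 1] / a_cam[:, 2]
    r2 = x ** 2 + y ** 2
    xd = x * (1.0 + k1 * r2) + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x ** 2)
    yd = y * (1.0 + k1 * r2) + p1 * (r2 + 2.0 * y ** 2) + 2.0 * p2 * x * y
    return np.column_stack([dx * xd + up, dx * yd + vp])


def residuals(theta, images, up, vp):
    """Stacked reprojection errors (pixels) over all calibration images."""
    dx, k1, p1, p2 = theta[:4]
    errs = []
    for j, (T_prior, e_icrf, uv_meas) in enumerate(images):
        dtheta = theta[4 + 3 * j: 7 + 3 * j]  # small rotation-vector attitude correction
        T = Rotation.from_rotvec(dtheta).as_matrix() @ T_prior
        errs.append(project_pixels(e_icrf @ T.T, dx, k1, p1, p2, up, vp) - uv_meas)
    return np.concatenate(errs).ravel()


def calibrate(images, dx0, up, vp):
    """Jointly estimate (dx, k1, p1, p2) and one attitude correction per image.

    images: list of (T_prior (3, 3) ICRF-to-camera rotation,
                     e_icrf (n, 3) catalog unit vectors,
                     uv_meas (n, 2) measured centroids).
    """
    theta0 = np.concatenate([[dx0, 0.0, 0.0, 0.0], np.zeros(3 * len(images))])
    sol = least_squares(residuals, theta0, args=(images, up, vp),
                        method="lm", x_scale="jac")
    return sol.x[:4], sol.x[4:].reshape(-1, 3), sol
```

Because \(u_p\) and \(v_p\) are fixed, any boresight offset is absorbed by the per-image rotation corrections rather than by the intrinsics.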

Prior to calibration (assuming a perfect pinhole camera), a clear pincushion distortion pattern was observed (see left frames of Figs. 12 and 13). This idealized model leads to reprojection errors on the order of about 5-10 pixels (see left frame of Fig. 14), depending on the distance from the image center. Application of the LONEStar calibration procedure described here reduces the reprojection error to the subpixel level across the entire image (see right frame of Fig. 14). Qualitatively, the high-level pincushion distortion is entirely removed in the right frame of Fig. 13. Moreover, the residuals in Fig. 12 appear to be randomly directed after calibration, indicating that the global structure of the lens distortion has been removed.

Fig. 14 Magnitude of star centroid residuals as a function of radius from the center of the image. Pre-calibration residuals (left) are substantially reduced after the calibration is applied (right). Post-calibration residuals are subpixel across the entire image, indicating that the distortions are well-explained by the Brown–Conrady model

4.5 OPNAV System Effective Dynamic Range

It is necessary to characterize the effective dynamic range of the end-to-end OPNAV system. The effective dynamic range (i.e., the difference between the brightest and dimmest object an optical system can detect) depends on both the camera specifications and the image processing algorithms. For example, the centroiding algorithm used by LONEStar (see Sect. 4.3) is designed for relatively high signal-to-noise ratio (SNR) objects, and a larger effective dynamic range could likely be achieved with low-SNR star centroiding algorithms (e.g., image coadding [53]). The same 35 star field images used for calibration were interrogated to empirically bound the effective dynamic range for the LONEStar experiment. For each calibration image, this analysis considered only the stars with no saturated pixels that were also successfully matched to a known star from the Hipparcos catalog. The difference in Hipparcos visual magnitude between the brightest and dimmest successfully matched stars was computed for each image, giving an empirical lower bound on the dynamic range. The system-level dynamic range was found to be approximately \(\Delta m = 5\), which corresponds to a brightness ratio of \(10^{0.4\Delta m} = 100\).

5 Celestial Triangulation with Distant Planets

5.1 Overview of Celestial Triangulation

The choice of triangulation algorithm for a particular spacecraft localization problem is important. Different triangulation algorithms minimize different cost functions and, consequently, are not interchangeable when LOS measurements, planet ephemerides, and camera attitude estimates are noisy. A comprehensive study of triangulation methods is provided in Ref. [25], including derivations of the analytic error covariance for each. Further study of triangulation performance with different types of uncertainty may be found in Ref. [54]. For the purposes of LONEStar, however, three triangulation algorithms are of primary concern: the Direct Linear Transform (DLT), the midpoint algorithm, and Linear Optimal Sine Triangulation (LOST).

5.1.1 Direct Linear Transform (DLT)

Suppose that a spacecraft with ICRF position \({\varvec{{r}}}_I\) views a distant planet at position \({\varvec{{p}}}_{I_i}\). It follows, then, that the ICRF LOS direction from the spacecraft to the planet is a point in \(\mathbb {P}^2\) given by

Without (yet) considering the optimality in the presence of noisy measurements, the DLT proceeds with the purely geometric argument that the troublesome proportionality may be removed by taking the cross-product of both sides with the known measurement \(\varvec{\ell }_{C_{i}}\). Doing so,

hence

For many LOS observations \(\{\varvec{\ell }_{C_i}\}_{i=1}^n\) this relation may be stacked into a linear system to solve for the unknown \({\varvec{{r}}}_I\)

In this work, since the planet positions \({\varvec{{p}}}_{I_i}\) are assumed known from the DE440 ephemeris files [55], it is possible to directly solve for \({\varvec{{r}}}_I\).

Note, however, that the scaling of the LOS direction is arbitrary and it is often helpful to describe a particular scaling of the LOS direction as \(\varvec{\ell }_{C_i} \propto w_i {\varvec{{a}}}_i\) where, as before, \({\varvec{{a}}}_i\) is a camera frame unit vector. Consequently, the DLT may be written as

where different choices of the LOS scaling parameter \(w_i\) (which act as weights on corresponding measurements) will lead to different answers in the presence of measurement noise. It will now be shown how different cost functions lead to different choices of \(w_i\).
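To make the stacked system concrete, the sketch below builds and solves the weighted DLT by linear least squares. It assumes the LOS unit vectors have already been rotated into ICRF with the camera attitude (so the attitude matrix is folded into `los_inertial`) and that planet positions come from an ephemeris such as DE440; the helper names are illustrative, not taken from the LONEStar software. With noise-free measurements every choice of weights returns the same position, whereas with noisy measurements the weights change the answer, which is the point made above.

```python
import numpy as np

def skew(v):
    """Cross-product matrix [v x], so that skew(v) @ u equals np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def dlt_triangulate(los_inertial, planet_pos, weights=None):
    """Weighted DLT: stack w_i [a_i x] r = w_i [a_i x] p_i and solve in the least-squares sense."""
    n = len(los_inertial)
    w = np.ones(n) if weights is None else np.asarray(weights, float)
    H = np.vstack([w[i] * skew(los_inertial[i]) for i in range(n)])
    y = np.concatenate([w[i] * skew(los_inertial[i]) @ planet_pos[i] for i in range(n)])
    r, *_ = np.linalg.lstsq(H, y, rcond=None)  # each 3x3 block has rank 2; lstsq handles the redundancy
    return r
```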

5.1.2 Midpoint Algorithm

An inertial LOS measurement describes a direction in 3D space. If that LOS direction is constrained to pass through a particular 3D point (e.g., the location of the observed planet at a specified time), the result is a line of position (LOP) on which the spacecraft must lie. If the LOS measurements are perfect, two (or more) LOPs intersect exactly at a point. If the LOS measurements are noisy, the LOPs generally do not intersect at all. In this case, one reasonable cost function for triangulation is to find the spacecraft location \({\varvec{{r}}}_I\) that minimizes the sum of the squared perpendicular distances to the LOPs. This is called the midpoint algorithm since, for two LOPs, the resulting estimate lies halfway along the shortest line segment connecting the two LOPs.

To proceed, recognize that the perpendicular distance of a point \({\varvec{{r}}}_I\) from a LOP with direction \({\varvec{{e}}}_i\) through point \({\varvec{{p}}}_{I_i}\) may be computed as

The midpoint cost function proposed above would then be

Applying the first differential condition and recalling that \(\left[ {\varvec{{a}}}_i \times \right] ^4 = -\left[ {\varvec{{a}}}_i \times \right] ^2\) produces an optimal estimate of \({\varvec{{r}}}_I\) satisfying

Rewriting slightly yields [56]

which is nothing more than the normal equations for the DLT with \(w_i = 1\). Thus, in practice, the midpoint solution is found by solving the equivalent linear system (i.e., Eq. (14) with \(w_i = 1\))
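Because the midpoint solution reduces to the DLT normal equations with unit weights, it can be computed directly from 3×3 sums. A minimal sketch, assuming unit LOS vectors already expressed in ICRF and using the identity \(\left[ {\varvec{{a}}}_i \times \right]^T \left[ {\varvec{{a}}}_i \times \right] = {\varvec{{I}}} - {\varvec{{a}}}_i {\varvec{{a}}}_i^T\) for unit \({\varvec{{a}}}_i\) (the function name is illustrative):

```python
import numpy as np

def midpoint_triangulate(los_inertial, planet_pos):
    """Minimize the sum of squared perpendicular distances from r to every LOP.

    Normal equations: sum(I - a_i a_i^T) r = sum((I - a_i a_i^T) p_i).
    """
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for a, p in zip(los_inertial, planet_pos):
        a = np.asarray(a, float) / np.linalg.norm(a)
        P = np.eye(3) - np.outer(a, a)  # projector onto the plane perpendicular to this LOP
        A += P
        b += P @ np.asarray(p, float)
    return np.linalg.solve(A, b)
```

For two skew LOPs the result is the midpoint of their common perpendicular: one line along the x-axis and one parallel to the y-axis at height z = 1 give (0, 0, 0.5).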

5.1.3 Linear Optimal Sine Triangulation (LOST)

In cases where measurements are simultaneous (e.g., two celestial bodies in one image) and where errors in the LOS directions are dominated by centroid localization in the image, the maximum likelihood estimate (MLE) may be found in closed form with the LOST algorithm. Thus, for the LOST algorithm, one seeks to minimize the cost function

which is nothing more than the reprojection errors as weighted by the measurement covariance. The analytic solution to this optimization problem is given in Ref. [25] and only the final result is shown here.

Assuming the centroiding errors are isotropic and uncorrelated, the LOST weighting of the DLT is

To avoid unnecessary normalization of image plane coordinates, note that \(\bar{{\varvec{{x}}}}_i = \Vert \bar{{\varvec{{x}}}}_i \Vert {\varvec{{a}}}_i\) such that one may write \(w_i \left[ {\varvec{{a}}}_i \times \right] = q_i \left[ \bar{{\varvec{{x}}}}_i \times \right]\) where

The LOST algorithm removes the redundant row in \(q_i \left[ \bar{{\varvec{{x}}}}_i \times \right]\) with the \(2 \times 3\) matrix \({\varvec{{S}}}= \left[ {\varvec{{I}}}_{2 \times 2} , {\textbf {0}}_{2 \times 1} \right]\), such that

where \({\varvec{{p}}}_{I_i}\) is the location of the planet when the observed light was reflected by the body (some time \(\Delta t\) before the time of image capture).

Finally, an important feature of the LOST method is that light time-of-flight (LTOF) may be accounted for directly within the triangulation solution to within a few milliarcseconds without iteration. Introducing the term

where \({\varvec{{v}}}_i\) is the velocity of the observed celestial body and c is the speed of light, the LTOF-corrected triangulation solution is simply [26]

where \({\varvec{{p}}}_i^+\) is the planet location at the time of image capture.

5.2 Image Processing and Attitude Detection

Successful triangulation relies on ICRF LOS measurements to the observed planets. The distant planets observed by LONEStar (Mercury, Mars, Saturn, Jupiter) were unresolved and appear similar to stars. This allows for use of the same centroiding algorithm as described in Sect. 4.3, but with some minor adjustments to brightness and cluster size thresholding.

The planet centroid location provides a LOS measurement in the camera frame that must be transformed into ICRF for triangulation and navigation. Ideally, the attitude could be determined from background stars within the OPNAV image itself. However, the LONEStar effective dynamic range (see Sect. 4.5) and viewing geometry (see scenario-specific discussions) prevented concurrent imaging of planets and stars. When possible, short exposure OPNAV images were bracketed on both sides with long exposure star images. The attitude at the intermediate time of the OPNAV image was then estimated using spherical linear interpolation (SLERP) [57]. For some OPNAV images, it was only possible to obtain one corresponding long exposure star image, in which case the attitude obtained from that single image was utilized. For example, when performing sequential imaging of Jupiter and Saturn, the limitation of five images per Image Block means that only one of the OPNAV images could be bracketed on both sides with star images (usually Jupiter). The remaining planet OPNAV image (usually Saturn) had only a single star image, and thus only a single attitude reference.
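A minimal SLERP sketch for the bracketing step, assuming scalar-last unit quaternions [x, y, z, w] and image timestamps in seconds (both conventions are assumptions; the LONEStar attitude representation is not specified here). For the arcsecond-level attitude changes reported in Sect. 5.4 the two endpoints are nearly identical, so the code falls back to normalized linear interpolation, which is numerically safer and practically indistinguishable at such small angles.

```python
import numpy as np

def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions q0 (t = 0) and q1 (t = 1)."""
    q0 = np.asarray(q0, float) / np.linalg.norm(q0)
    q1 = np.asarray(q1, float) / np.linalg.norm(q1)
    dot = np.dot(q0, q1)
    if dot < 0.0:          # q and -q are the same rotation; take the shorter arc
        q1, dot = -q1, -dot
    if dot > 0.9995:       # nearly identical attitudes: normalized linear interpolation
        q = q0 + t * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1.0 - t) * theta) * q0 + np.sin(t * theta) * q1) / np.sin(theta)

def interpolate_attitude(t_img, t_a, q_a, t_b, q_b):
    """Attitude at the OPNAV image time from the two bracketing star-image attitudes."""
    tau = (t_img - t_a) / (t_b - t_a)
    return slerp(q_a, q_b, tau)
```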

Attitude determination with these long-exposure star field images was computed as an update to the commanded attitude from LF telemetry. Star LOS measurements were obtained and matched against a Hipparcos reprojection computed with the known commanded attitude. In some scenarios (particularly with images of Mercury and Mars), excessive background light necessitated a low signal-to-noise threshold when screening star candidates, resulting in a multitude of false (non-star) candidates. Matching between star candidates and known catalog stars was therefore a multi-step process, which proved effective at rejecting false-positive stars even in exceptionally noisy images. First, the nearest catalog neighbors to each candidate are obtained, and a simple distance threshold filters out candidates with no nearby catalog star. Then, a nearest-neighbor ratio test is conducted for each candidate, ensuring that its match to a nearby catalog entry is unique. Finally, since any attitude offset from the reprojection should manifest as a pixel shift of consistent direction and magnitude across the entire image, the median pixel residual direction and magnitude are computed from the set of potentially valid observation-catalog pairs, and only pairs within a specified tolerance of these median values are retained.
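A minimal sketch of the three-step screening, assuming candidate centroids and reprojected catalog stars are given as pixel coordinates (at least two catalog stars). The thresholds are hypothetical defaults, and step 3 is simplified: instead of separate median direction and median magnitude tests, it compares each residual vector with the component-wise median residual vector, which captures the same "consistent shift" idea while avoiding ill-defined directions for near-zero residuals.

```python
import numpy as np

def match_stars(cand_px, cat_px, max_dist=5.0, ratio=0.5, tol_px=1.5):
    """Return (candidate index, catalog index) pairs that survive all three screening steps."""
    cand_px = np.asarray(cand_px, float)
    cat_px = np.asarray(cat_px, float)
    # Pairwise pixel distances between every candidate and every reprojected catalog star.
    d = np.linalg.norm(cand_px[:, None, :] - cat_px[None, :, :], axis=2)
    order = np.argsort(d, axis=1)
    pairs = []
    for i in range(len(cand_px)):
        j1, j2 = order[i, 0], order[i, 1]
        if d[i, j1] > max_dist:            # step 1: no catalog star nearby
            continue
        if d[i, j1] > ratio * d[i, j2]:    # step 2: nearest match is not clearly unique
            continue
        pairs.append((i, int(j1)))
    if not pairs:
        return []
    # Step 3: a small attitude error shifts true matches by roughly the same pixel vector,
    # so keep only pairs whose residual lies close to the median residual.
    res = np.array([cand_px[i] - cat_px[j] for i, j in pairs])
    med = np.median(res, axis=0)
    keep = np.linalg.norm(res - med, axis=1) <= tol_px
    return [pair for pair, ok in zip(pairs, keep) if ok]
```

The uniform-shift assumption holds best for small attitude errors and a narrow FOV; a large roll error about the boresight would instead produce a rotational residual pattern.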

5.3 Instantaneous Triangulation: Mercury and Mars

For a brief period of time in August 2023, favorable geometry allowed Lunar Flashlight to simultaneously observe Mercury and Mars in a single image. Six simultaneous observations of Mercury and Mars were successfully acquired as part of the LONEStar imaging campaign. The apparent path of both Mercury and Mars (as seen from LF) against the star field background may be seen in Fig. 15, with the resulting measured LOS directions to each planet shown as dots on the celestial sphere. This was the only time during the LONEStar imaging campaign when two distant planets were simultaneously visible with the camera. By capturing two planets in the same image (see Fig. 16), it is possible to demonstrate instantaneous triangulation using the LOST algorithm.

Although the August 2023 geometry permitted simultaneous imaging of two planets (apparent inter-planet angle of about 4.5–5.5 deg, see Table 2), the lighting conditions were challenging. Indeed, the Sun was within the camera's manufacturer-recommended 45 deg Sun KOZ for the entirety of this imaging opportunity. The lighting was best (although still not good) at earlier dates and deteriorated as time advanced and the angle between the planet and Sun decreased (as can be seen from top to bottom in Fig. 17 and Table 2). Stray light from the Sun was a pervasive and performance-limiting effect for all of the Mercury and Mars OPNAV images. Nevertheless, the LONEStar team was still able to compute OPNAV solutions, a notable result considering that the images were captured substantially outside of the instrument's recommended operating conditions.

The instrument did not have sufficient dynamic range (see Sect. 4.5) to simultaneously capture well-exposed planets and background stars, and thus pairs of short- and long-exposure images were required. The long- and short-exposure images were separated by about 5 s. For the Mercury and Mars observations, it was generally not possible to bracket the short-exposure planet images on both sides with long-exposure star images. An attitude stability study performed with images of Jupiter and Saturn (see Sect. 5.4) suggests that the LF pointing errors over a 5 s interval are likely on the order of 15–20 arcsec (i.e., about 0.5 pixel).

Short-exposure images were of reasonable quality even within the Sun KOZ, with properly exposed planets that could be easily centroided (see Fig. 18). These short-exposure images had no detectable stars. Conversely, long exposure times revealed numerous stars in each image, but the planets became saturated and a significant amount of stray light saturated entire regions of the image. Despite these challenges, star centroiding, matching, and attitude determination were possible (see Fig. 19; note that the Bayer designations in this figure and all subsequent figures were determined from Ref. [58]). Unfortunately, as the Sun angle \(\phi\) decreased, so too did the quality of the attitude correction that could be derived from these long-exposure images, in turn degrading the triangulation solution.

Three stars from a long-exposure image of Mars and Mercury. The \(\circ\) indicates a measured centroid and the \(\diamond\) indicates a centroid projection from the Hipparcos star catalog. Note that the brightness of each patch around these stars has been renormalized such that the darkest pixel is black and the brightest is white

For simultaneous LOS measurements, LOST provides the statistically optimal triangulation solution without iteration. Performance of the LOST algorithm (see Eq. (23) and Ref. [25]) for localizing LF using each of the six images containing both Mercury and Mars is summarized in Table 3. Residuals are reported relative to the reference trajectory.

Assuming isotropic centroid errors with image-plane standard deviation \(\sigma _x=(\sigma _u)(IFOV)\), the error covariance of the MLE triangulated solution (whether by LOST or a classical iterative scheme) is given by [25]

where everything in this equation is known and \({\varvec{{P}}}_r\) may be readily computed. In the special case of a narrow FOV camera and only two observations, the generic covariance may be simplified and the total error \(\sigma _{tot} = \sqrt{Tr({\varvec{{P}}})}\) may be approximated as [22]

Note that the simplified total error in Eq. (27) from Ref. [22] was derived in a completely different manner than the generic covariance in Eq. (26) from Ref. [25]. Nevertheless, a simple analysis shows the two results to be consistent, as demonstrated numerically in Table 4. The Mahalanobis distances for these cases are somewhat large, likely due to the poor attitude knowledge resulting from collecting images within the sensor's Sun KOZ.
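The quantities in Table 4 (total error \(\sqrt{Tr({\varvec{{P}}})}\) and Mahalanobis distance) can be computed from any position covariance. The sketch below uses the standard first-order Fisher information for bearings-only localization with small, isotropic angular errors, \(\sum_i ({\varvec{{I}}} - {\varvec{{a}}}_i{\varvec{{a}}}_i^T)/(\sigma_i^2 \rho_i^2)\), where \(\rho_i\) is the range to body i. This is an independent sketch, not a transcription of Eq. (26), which may differ in form; the ranges would in practice come from the triangulated estimate, and all names are illustrative.

```python
import numpy as np

ARCSEC_TO_RAD = np.pi / (180.0 * 3600.0)

def angular_sigma(sigma_px, ifov_arcsec):
    """Centroid standard deviation in pixels -> angular standard deviation in radians."""
    return sigma_px * ifov_arcsec * ARCSEC_TO_RAD

def triangulation_covariance(los_inertial, ranges, sigma_ang):
    """First-order covariance of a position fix from unit LOS vectors with isotropic angular noise."""
    info = np.zeros((3, 3))
    for a, rho, s in zip(los_inertial, ranges, sigma_ang):
        a = np.asarray(a, float) / np.linalg.norm(a)
        info += (np.eye(3) - np.outer(a, a)) / (s * rho) ** 2  # no information along the LOS
    return np.linalg.inv(info)

def total_error(P):
    return float(np.sqrt(np.trace(P)))

def mahalanobis(err, P):
    """Error normalized by the covariance; values well above ~3 suggest an inconsistent covariance."""
    err = np.asarray(err, float)
    return float(np.sqrt(err @ np.linalg.solve(P, err)))
```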

Additionally, it is important to consider light time-of-flight (LTOF) effects. There are many ways to account for LTOF, and results for two such methods are summarized in Table 5. The first method uses an iterative approach to backdate the ephemeris query to the time when the photons were reflected by the celestial body, rather than the time of image collection. This was mechanized for LONEStar using the converged Newtonian (CN) option within the SPICE toolkit [59, 60]. Another option is the analytic, non-iterative LTOF correction that may be written directly into LOST (see Eq. (25) and Ref. [26]). Since both LTOF corrections give essentially the same solution, all subsequent discussions use the non-iterative method from Ref. [26].
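The iterative option amounts to a fixed-point iteration on the light time \(\tau = \Vert {\varvec{{p}}}(t - \tau) - {\varvec{{r}}} \Vert / c\). A minimal sketch in the spirit of SPICE's converged Newtonian correction, assuming the caller supplies a hypothetical `planet_pos_fn(t)` that returns the planet's ICRF position in km (e.g., wrapped around an ephemeris query) and ignoring stellar aberration; this is not SPICE code. Because planetary speeds are tiny compared with c, each iteration shrinks the error by a factor of at most about v/c, so only a few iterations are needed.

```python
import numpy as np

C_KM_S = 299792.458  # speed of light, km/s

def light_time_corrected_position(planet_pos_fn, r_obs, t_obs, tol=1e-9, max_iter=10):
    """Planet position at the time the observed light left it, and the light time in seconds."""
    tau = 0.0
    for _ in range(max_iter):
        p = planet_pos_fn(t_obs - tau)                  # backdate the ephemeris query
        tau_new = np.linalg.norm(p - r_obs) / C_KM_S    # light time for that position
        if abs(tau_new - tau) < tol:
            tau = tau_new
            break
        tau = tau_new
    return planet_pos_fn(t_obs - tau), tau
```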

5.4 Sequential Triangulation: Jupiter and Saturn

In most cases, simultaneous access to two or more planet LOS measurements is not achievable, which makes instantaneous triangulation of the type discussed in Sect. 5.3 impossible. However, if two or more planets can be viewed in rapid succession, an acceptable triangulation solution may still be formed. LONEStar demonstrated this concept on sequential images of Jupiter and Saturn.

LONEStar performs triangulation with sequential images using the midpoint algorithm. The selection of the midpoint algorithm instead of LOST is intentional. While LOST (and its variants) provide statistically optimal triangulation, the maximum likelihood cost function on which LOST is based fundamentally assumes that the observer resides at a single point. With sequential images from a moving spacecraft, however, each observation originates from a different point and even perfect LOPs will not intersect in a point. When there are only two LOP observations that are very close in time, a reasonable estimate of the spacecraft’s location at a time halfway between the observations is the point halfway along the line having the shortest distance between the two LOPs (see Fig. 20). This is exactly the position that the midpoint algorithm provides (see Sect. 5.1.2). Of special note is that the midpoint algorithm only makes sense when there are exactly two images, and it would not be appropriate for sequential triangulation with three (or more) sequential images. See Sect. 5.5 for a discussion of how to process many sequential images.

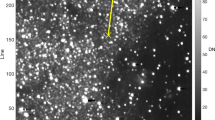

The apparent magnitude of Jupiter as seen from Lunar Flashlight was about \(m \approx -2.85\) during days 80–120 of the LONEStar imaging campaign. During this same period, Jupiter appeared to be moving across a rather dim portion of the constellation Aries, adjacent to a bright portion of the constellation Cetus (see Fig. 21). The brightest star within any of the Jupiter OPNAV images (HIP 14135, \(\alpha\) CET) had a visual magnitude of \(m = 2.54\). Thus, the magnitude separation between the brightest star and Jupiter is \(\Delta m \approx 2.54 - (-2.85) = 5.39\). Since the effective dynamic range of the system is only \(\Delta m \approx 5\), this separation lies just beyond the limits of the LONEStar pipeline, and the system cannot detect this star without also saturating Jupiter. Consequently, Jupiter observations required separate images for Jupiter and for stars, as shown in Fig. 22. The star field images routinely matched about 7–12 stars to the Hipparcos database (e.g., Fig. 23).

A pair of sequential short exposure (top) and long exposure (bottom) images of Jupiter. The OPNAV LOS measurement is obtained from the top image where Jupiter is properly exposed, while the camera attitude is obtained from the bottom image where stars are visible. The \(\circ\) indicates a measured centroid, \(\diamond\) indicates a centroid projection from the reference trajectory, and \(\times\) indicates the reprojection from the OPNAV-produced solution. Each zoomed patch has been renormalized such that the darkest pixel is black and the brightest is white

Saturn appeared substantially dimmer than Jupiter. The apparent magnitude of Saturn as seen from Lunar Flashlight was about \(m \approx 0.64\)–\(0.82\) during days 80–120 of the LONEStar imaging campaign. Saturn appeared to transit across a very small portion of the constellation Aquarius (see Fig. 24), near a number of modestly bright stars (notably \(\theta\) AQR at \(m = 4.17\) and \(\iota\) AQR at \(m = 4.29\)). The difference in apparent visual magnitude between Saturn and these bright stars is \(\Delta m \gtrsim 3.35\), which is comfortably within the effective dynamic range of the LONEStar system (\(\Delta m \approx 5\)). Consequently, two or three background stars are usually visible in the Saturn images (e.g., Fig. 25). The small number of background stars, however, led to relatively poor attitude determination performance. Indeed, experimentation demonstrated that better image alignment could be achieved by computing the attitude from long-exposure star images (which typically contained 7–12 matched stars), using the same procedure as for Jupiter.
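The exposure reasoning for Jupiter and Saturn reduces to comparing a magnitude gap with the empirical dynamic range from Sect. 4.5. A minimal check using the magnitudes quoted above (the helper names are illustrative); for scale, the Jupiter gap of 5.39 mag is a brightness ratio of roughly 143, versus 100 for 5 mag.

```python
DYNAMIC_RANGE_MAG = 5.0  # empirical effective dynamic range (Sect. 4.5)

def magnitude_gap(planet_mag, star_mag):
    """How many magnitudes fainter the star is than the planet."""
    return star_mag - planet_mag

def fits_in_one_exposure(planet_mag, star_mag, dynamic_range=DYNAMIC_RANGE_MAG):
    """True if the star can be detected without saturating the planet."""
    return magnitude_gap(planet_mag, star_mag) <= dynamic_range

jupiter_gap = magnitude_gap(-2.85, 2.54)  # Jupiter vs. alpha Cet
saturn_gap = magnitude_gap(0.82, 4.17)    # Saturn at its faintest vs. theta Aqr
```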

Example of a short-exposure image of Saturn, demonstrating that stars and Saturn are simultaneously observable. The \(\circ\) indicates a measured centroid, \(\diamond\) indicates a centroid projection from the reference trajectory, and \(\times\) indicates the reprojection from the OPNAV-produced solution. Each zoomed patch has been renormalized such that the darkest pixel is black and the brightest is white

As can be seen from the observed paths of Jupiter and Saturn across the celestial sphere (Figs. 21 and 24), the apparent angle between them was about 70 deg (see Table 6). This angle was measured in practice by first imaging Jupiter (with star field images), and then slewing the spacecraft to subsequently image Saturn (with star field images) within the same Image Block. The time between sequential Jupiter and Saturn images was about 135 seconds for the six observation pairs summarized in Table 6.

Two long-exposure images of Jupiter used to bracket the attitude of short-exposure OPNAV image 590b. The filled circle indicates the centroid observed in the corresponding image, with the unfilled circle indicating the centroid location in the other bracketing image. Approximately one pixel of attitude drift is observed between the image on the left (590a) and the image on the right (590c)

Within a particular Image Block, since the planet observations are separated by a slewing maneuver, only one of Jupiter or Saturn could be bracketed on both sides with star field images; the other planet only had a star field image on one side. Bracketing was usually performed with Jupiter since the Saturn images contained a few background stars. In one case (608b, 607b), one of the star field images could not be downlinked and thus attitude bracketing was not possible with either planet.

The time between a short-exposure planet image and its corresponding long-exposure star image was about 5 s. Consequently, the time between the two long-exposure star images bracketing a short-exposure planet image was about 10 s. The change in attitude across a bracket may be used to study the pointing stability during the LONEStar experiment, which is especially important for understanding the OPNAV pointing performance when bracketing with two star images is not possible (i.e., for one-sided attitude determination). As can be seen in Table 6, the attitude change between the bracketing star images was about 30–45 arcsec over the 10 s interval, which is equivalent to about one pixel (the IFOV is about 36.6 arcsec; see Table 1). This suggests an attitude rate of about 3.0–4.5 arcsec/s during OPNAV operations. The apparent movement of stars (by about one pixel) between two bracketing star images for Jupiter may be seen in Fig. 26. By interpolating the attitude at the midpoint, the attitude error associated with the bracketed planet image is substantially reduced.
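The bracket arithmetic is easy to reproduce. A minimal sketch, assuming a constant attitude rate across each bracket (an assumption, not a measured property), which also yields a rough estimate of the error incurred when only a single star image, 5 s away, is available:

```python
IFOV_ARCSEC = 36.6  # per-pixel instantaneous field of view (Table 1)

def drift_summary(delta_arcsec, interval_s, ifov_arcsec=IFOV_ARCSEC):
    """Average attitude rate (arcsec/s) and equivalent pixel shift for an attitude change."""
    return delta_arcsec / interval_s, delta_arcsec / ifov_arcsec

def one_sided_error(delta_arcsec, bracket_s=10.0, offset_s=5.0):
    """Constant-rate attitude error when the only star image is offset_s from the planet image."""
    return delta_arcsec * offset_s / bracket_s
```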

Results of triangulation using the midpoint algorithm with the measured LOS directions to Jupiter (red dots in Fig. 21) and Saturn (purple dots in Fig. 24) may be found in Table 7. The covariance of the midpoint algorithm is analytically computed using the methods of Ref. [25], and the total error \(\sqrt{Tr({\varvec{{P}}})}\) and Mahalanobis distance are reported in Table 8. The analytic approximation of total error from Eq. (27) and Ref. [22] could not be used here since different centroiding errors were assumed for Jupiter (0.5 px) and Saturn (0.25 px). In addition to planet centroiding errors, these Mahalanobis distance numbers assume a pointing error of about 0.25 px.

5.5 Dynamic Triangulation: Jupiter and Saturn

With only a single narrow-FOV camera, instantaneous triangulation by the simultaneous observation of two (or more) planets is an infrequent occurrence. This constraint may be relaxed by imaging two planets in rapid succession and triangulating with the midpoint algorithm, as was done in Sect. 5.4 with Jupiter and Saturn. In many cases, however, it is operationally inconvenient to observe two (or more) planets in rapid succession. Dynamic triangulation permits much longer intervals between sequential observations, substantially simplifying OPNAV operations. It accomplishes this through a two-step process: (1) generating an initial guess with a simplified dynamical model and (2) refining this guess using the full dynamical model.

The first step in the process is generating the initial guess, which follows the generic framework of Ref. [25]. The principal concern here is that the initial guess be good enough for the refinement process in step 2 to converge to the correct state. For short timespans (relative to the orbital dynamics), it is often convenient and practical to simply assume rectilinear motion to construct the initial guess, as was done in these LONEStar experiments.

To generate the initial guess, first assume a linearized dynamical model which permits a solution of the form

where \(\varvec{\Phi }_{(\cdot )}\) are \(3 \times 3\) submatrices of the full \(6 \times 6\) state transition matrix (STM). To obtain only the position at some time \(t_i\) for use in triangulation, the top three rows of this expression may be compactly written as

where \(\varvec{\Phi }_r = [\varvec{\Phi }_{rr} \ \varvec{\Phi }_{rv}]\). This may be substituted into the DLT (see Eq. (23)) to obtain a linear system in the state at the arbitrarily chosen reference time

Thus, the initial dynamic triangulation problem estimates the spacecraft’s full translational state (both position and velocity) at the reference time, rather than only the position.
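Under the rectilinear assumption the STM blocks are simply \(\varvec{\Phi }_{rr} = {\varvec{{I}}}\) and \(\varvec{\Phi }_{rv} = (t_i - t_0){\varvec{{I}}}\), so each LOS contributes \(\left[ {\varvec{{a}}}_i \times \right] \varvec{\Phi }_r(t_i) {\varvec{{x}}}_0 = \left[ {\varvec{{a}}}_i \times \right] {\varvec{{p}}}_{I_i}\), and stacking gives a linear system in the six-element state. A minimal sketch, assuming inertial unit LOS vectors and unit weights (the LOST or midpoint weightings of Sect. 5.1 could be substituted). At least three non-degenerate sightings are needed because each contributes only two independent equations; units are whatever the caller uses consistently (AU and days in the check below), and the names are illustrative.

```python
import numpy as np

def skew(v):
    """Cross-product matrix [v x]."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def dynamic_triangulation_rectilinear(times, los_inertial, planet_pos, t0):
    """Initial guess (r0, v0) at reference time t0, assuming r(t) = r0 + (t - t0) v0."""
    rows, rhs = [], []
    for t, a, p in zip(times, los_inertial, planet_pos):
        a = np.asarray(a, float) / np.linalg.norm(a)
        Phi_r = np.hstack([np.eye(3), (t - t0) * np.eye(3)])  # top three rows of the 6x6 STM
        rows.append(skew(a) @ Phi_r)
        rhs.append(skew(a) @ np.asarray(p, float))
    x0, *_ = np.linalg.lstsq(np.vstack(rows), np.concatenate(rhs), rcond=None)
    return x0[:3], x0[3:]
```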

This initial guess is then refined by minimizing the reprojection error with a nonlinear least-squares solver (e.g., LMA) and full dynamical model. This refinement follows the same batch estimation (i.e., orbit determination) procedure as discussed in Sect. 7.
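A minimal sketch of the refinement step, assuming a hand-rolled Levenberg–Marquardt loop (a library solver would normally be used) and a residual formed from the difference between measured and predicted unit LOS vectors, which approximates the angular reprojection error for small errors. `propagate_rectilinear` is a hypothetical placeholder for the full dynamical model; in practice it would be replaced by a numerical integrator with the appropriate force model. All names are illustrative.

```python
import numpy as np

def propagate_rectilinear(x0, dt):
    """Placeholder dynamics returning position only; swap in a full force model for real use."""
    return x0[:3] + dt * x0[3:]

def los_residuals(x0, times, los_meas, planet_pos, t0, propagate=propagate_rectilinear):
    """Stacked differences between measured and predicted unit LOS vectors."""
    res = []
    for t, a, p in zip(times, los_meas, planet_pos):
        d = np.asarray(p, float) - propagate(x0, t - t0)
        res.append(np.asarray(a, float) / np.linalg.norm(a) - d / np.linalg.norm(d))
    return np.concatenate(res)

def numerical_jacobian(fun, x, h=1e-6):
    """Central-difference Jacobian of a vector function."""
    f0 = fun(x)
    J = np.zeros((f0.size, x.size))
    for j in range(x.size):
        step = h * (1.0 + abs(x[j]))
        xp, xm = x.copy(), x.copy()
        xp[j] += step
        xm[j] -= step
        J[:, j] = (fun(xp) - fun(xm)) / (2.0 * step)
    return J

def levenberg_marquardt(fun, x0, lam=1e-3, max_iter=200, tol=1e-12):
    """Minimize ||fun(x)||^2 with Marquardt-scaled damping."""
    x = np.asarray(x0, float).copy()
    f = fun(x)
    cost = f @ f
    for _ in range(max_iter):
        J = numerical_jacobian(fun, x)
        A, g = J.T @ J, J.T @ f
        step = np.linalg.solve(A + lam * np.diag(np.diag(A)), -g)
        f_new = fun(x + step)
        cost_new = f_new @ f_new
        if cost_new < cost:            # accept: behave more like Gauss-Newton
            x, f, cost = x + step, f_new, cost_new
            lam *= 0.3
            if np.linalg.norm(step) < tol * (1.0 + np.linalg.norm(x)):
                break
        else:                          # reject: behave more like gradient descent
            lam *= 10.0
            if lam > 1e16:
                break
    return x

def refine_state(x_init, times, los_meas, planet_pos, t0, propagate=propagate_rectilinear):
    fun = lambda x: los_residuals(x, times, los_meas, planet_pos, t0, propagate)
    return levenberg_marquardt(fun, x_init)
```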

To demonstrate this approach, a subset of four measurements from Sect. 5.4 was chosen (two of Jupiter, images 588b and 598b, and two of Saturn, images 584b and 594b), with at least 2.5 days between consecutive measurements. This sequence of measurements spans a total of about 13 days, which is short enough (for heliocentric orbits) to assume rectilinear motion when generating the initial guess with Eq. (30). The initial state estimate constructed in this way yields the state vector residuals shown in the top rows of Tables 9 and 10, with a position residual of 774,503 km (121.43 Earth radii) and a velocity residual of 3452 m/s.

Minimizing the reprojection error (step 2) substantially reduces the measurement residuals (left frame of Fig. 27) and state residuals (Tables 9 and 10), yielding a final state estimate with a position residual of 137,674 km (21.59 Earth radii) and a velocity residual of 132 m/s, improvements by factors of roughly 5.6 and 26, respectively. Fig. 27 illustrates how successive steps within the dynamic triangulation process converge to a result in excellent agreement with the reference trajectory. These results show that dynamic triangulation can use infrequent LOS measurements to perform celestial triangulation for the purposes of IOD.

It is important to remember that the residuals in Tables 9 and 10 are only an IOD solution produced with four measurements over a relatively short period of time. The IOD result is not used to navigate directly, but only to generate the initial guess for a batch orbit determination that processes many measurements (e.g., more than four observations, more targets than just Jupiter and Saturn) over a longer period of time (e.g., longer than 13 days). The LONEStar batch orbit determination solution is discussed in Sect. 7.

6 Optical Navigation with the Earth and Moon

6.1 Earth and Moon Viewing Geometry

Images of the Earth and Moon were collected throughout the entirety of the LONEStar imaging campaign (see Fig. 7 in Sect. 3.2.1). During this time, the Earth and Moon were always visible together in a single OPNAV image. Over the approximately 100-day span of imaging, the Earth-Moon system appeared to transit the constellations Sagittarius, Capricornus, Aquarius, and Pisces from the vantage point of LF (see Figs. 28 and 29). Early in the LONEStar imaging campaign, the Moon's orbit had an apparent angular diameter of 10.3 deg (bottom right of Fig. 28). As the distance between LF and the Earth-Moon system increased, however, the apparent angular diameter of the Moon's orbit dropped to only 2.5 deg (top left of Fig. 28).

Apparent motion of the Moon (dark gray) on the celestial sphere as seen by Lunar Flashlight. The path of the Earth is shown in light blue for reference. The measured LOS directions to the Moon obtained from LONEStar OPNAV images are shown as dark gray dots. Tick marks along the Moon’s track indicate elapsed time from the LONEStar reference epoch

Apparent motion of the Earth (blue) on the celestial sphere as seen by Lunar Flashlight. The path of the Moon is shown in light gray for reference. The measured LOS directions to the Earth obtained from LONEStar OPNAV images are shown as blue dots. Tick marks along the Earth’s track indicate elapsed time from the LONEStar reference epoch

Two images were acquired before the LONEStar imaging campaign began, prior to LF's ejection from the Earth-Moon system (see Fig. 30). Disk-resolved imagery of the Earth remained available for much of the LONEStar imaging campaign. Although the intentional XACT defocusing does not permit crisp imagery of Earth, a number of prominent features remain visible. In particular, the bright regions of Earth have been directly correlated with prominent cloud patterns from NOAA data [61, 62] at the specific image times. The LONEStar Earth images coincided with the northern hemisphere's 2023 hurricane season, and LF witnessed a number of named weather patterns from heliocentric space. A sampling of images with continent overlays (using the OPNAV-derived state estimates presented in subsequent sections) and callouts to known weather patterns may be found in Fig. 31.

6.2 Moon Localization

As the LF trajectory departed the Earth-Moon system (see Fig. 3), the Moon transitioned from a resolved to an unresolved body during the LONEStar imaging campaign, its apparent diameter shrinking from 10.4 pixels on 2023-AUG-10 to 2.2 pixels on 2023-NOV-08. Even at its closest, the Moon was too small for effective horizon-based OPNAV. Thus, both template matching and centroiding (with photocenter offset correction) were explored for generating lunar LOS measurements.

To centroid via template matching, 2-D renderings of the lunar surface were created to match the appearance of the Moon in the raw image. The Moon was rendered as a constant albedo sphere using the lunar-Lambert reflectance model [63]

where i is the incidence angle, e is the emission angle, and g is the phase angle. Following the heuristic observations of Ref. [64], the phase-dependent blending of the Lambertian and Lommel-Seeliger models was assumed to be \(\beta (g) = \text {exp}\left( -g / 60^{\circ } \right)\). The rendered sphere was then rotated into LF's camera frame such that the orientation of the illuminated sphere was representative of the true image.
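A minimal rendering sketch, assuming the commonly used form of the lunar-Lambert law in which \(\beta (g)\) weights the Lommel-Seeliger term \(2\cos i/(\cos i + \cos e)\) and \(1 - \beta (g)\) weights the Lambertian term \(\cos i\) (overall albedo scaling is dropped because NCC normalizes brightness anyway). It point-samples pixel centers of an orthographic disk; the per-pixel integration and PSF defocus described next are omitted. The axis convention (column, row, toward the camera) and the function names are assumptions for illustration.

```python
import numpy as np

def lunar_lambert(cos_i, cos_e, g_deg):
    """Relative lunar-Lambert reflectance with beta(g) = exp(-g / 60 deg)."""
    beta = np.exp(-g_deg / 60.0)
    denom = np.maximum(cos_i + cos_e, 1e-12)   # guard against division by zero at the limb
    lommel_seeliger = 2.0 * cos_i / denom
    return beta * lommel_seeliger + (1.0 - beta) * cos_i

def render_moon(radius_px, sun_dir, size):
    """Point-sampled orthographic render of a uniform-albedo sphere centered in a size x size image.

    sun_dir points from the Moon toward the Sun in (column, row, toward-camera) axes.
    """
    s = np.asarray(sun_dir, float)
    s = s / np.linalg.norm(s)
    g_deg = np.degrees(np.arccos(np.clip(s[2], -1.0, 1.0)))  # phase angle: Sun vs. camera direction
    c = (size - 1) / 2.0
    rows, cols = np.indices((size, size))
    x = (cols - c) / radius_px
    y = (rows - c) / radius_px
    rho2 = x**2 + y**2
    nz = np.sqrt(np.clip(1.0 - rho2, 0.0, None))   # surface-normal component toward the camera
    cos_i = x * s[0] + y * s[1] + nz * s[2]
    lit = (rho2 < 1.0) & (cos_i > 0.0)
    img = np.zeros((size, size))
    img[lit] = lunar_lambert(cos_i[lit], nz[lit], g_deg)
    return img
```

At zero phase the model reduces to pure Lommel-Seeliger, which renders a uniformly bright disk with no limb darkening.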

To construct a template at the appropriate scale, the lunar-Lambert disk was numerically integrated over the extent of each pixel. Determining the appropriate bounds for this discretization depends on an a priori estimate of the Moon’s diameter in the image, which was obtained from the reference trajectory (though a functionally equivalent answer could come directly from image processing). The discretized (i.e., pixelated) disk was then defocused using the PSF from Sect. 4.1 to arrive at the template that was used for normalized cross-correlation. This process is illustrated in Fig. 32.

Template matching is performed using normalized cross-correlation (NCC) [65]. During the cross-correlation process, NCC normalizes the brightness of both the image and template, which circumvents the need to consider the absolute brightness of the Moon in the real image. The output of the NCC algorithm is an image-sized array of correlation scores indicating the agreement between a local image patch and the template. A typical correlation peak is shown in Fig. 33. Subpixel template registration (i.e., Moon centroiding) is achieved by fitting a paraboloid to the correlation peak and finding the maximum.
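A minimal sketch of NCC template matching with paraboloid peak refinement, assuming a brute-force search (adequate for small search windows; production code would typically use an FFT-based or library implementation) and a least-squares quadratic fit over the 3×3 neighborhood of the integer peak. The function names are illustrative; the template could be, for example, a defocused lunar-Lambert render as described above.

```python
import numpy as np

def ncc_map(image, template):
    """NCC score for every placement where the template fits entirely inside the image."""
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t**2).sum())
    out = np.zeros((image.shape[0] - th + 1, image.shape[1] - tw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            p = image[r:r + th, c:c + tw]
            p = p - p.mean()                       # removes the patch's mean brightness
            denom = np.sqrt((p**2).sum()) * t_norm
            out[r, c] = (p * t).sum() / denom if denom > 0 else 0.0
    return out

def subpixel_peak(score):
    """Refine the integer peak by fitting z = a + bx + cy + dx^2 + exy + fy^2 to its 3x3 neighborhood."""
    r0, c0 = np.unravel_index(np.argmax(score), score.shape)
    r0 = int(np.clip(r0, 1, score.shape[0] - 2))
    c0 = int(np.clip(c0, 1, score.shape[1] - 2))
    dy, dx = np.mgrid[-1:2, -1:2]
    dx, dy = dx.ravel(), dy.ravel()
    z = score[r0 - 1:r0 + 2, c0 - 1:c0 + 2].ravel()
    A = np.column_stack([np.ones(9), dx, dy, dx**2, dx * dy, dy**2])
    _, b, c, d, e, f = np.linalg.lstsq(A, z, rcond=None)[0]
    # Stationary point of the paraboloid: [[2d, e], [e, 2f]] @ [x, y] = -[b, c]
    off_x, off_y = np.linalg.solve(np.array([[2 * d, e], [e, 2 * f]]), -np.array([b, c]))
    return r0 + float(np.clip(off_y, -1, 1)), c0 + float(np.clip(off_x, -1, 1))

def locate(image, template):
    """Sub-pixel (row, col) of the template center at the best-matching placement."""
    r, c = subpixel_peak(ncc_map(image, template))
    return r + (template.shape[0] - 1) / 2.0, c + (template.shape[1] - 1) / 2.0
```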

A zoom-in on the Moon (middle) within LF image 544b (left). The peak of the cross-correlation heatmap (right) corresponds to the \(\circ\) marker in the middle image. As a comparison, the \(\square\) marker shows the phase-corrected COB and the \(\diamond\) marker is the reprojection of the Moon’s center from the reference trajectory. A reprojection of the Moon’s horizon is also shown

An alternative to the NCC-based template matching method is center of brightness (COB) with a phase-dependent photocenter offset correction. Upon identifying a large contiguous cluster of bright pixels as the Moon (e.g., by proximity to the Moon’s expected location), the COB is computed in the same way as for unresolved objects (see Eq. (8)). At non-zero phase angles, the COB is biased away from the geometric center in the direction of the Sun. As such, a photocenter offset correction may be applied in the direction opposing the Sun to compensate for this bias. Given the direction from the Sun in the image plane \({\varvec{{u}}}_{illum}\) (i.e., the \(2 \times 1\) direction of incoming sunlight) and apparent radius of the Moon \(R_M\) in pixels, the photocenter offset correction for a partially illuminated Lambertian sphere is given by [66, 67]

An example is shown in Fig. 34. As Fig. 35 shows, the photocenter offset correction brings the raw COB into closer agreement with the NCC centroid.
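The COB-plus-correction alternative can be sketched as below. The sketch assumes, hypothetically, that a boolean `mask` already isolates the Moon's pixel cluster, that the background has been removed so that Eq. (8) reduces to an intensity-weighted mean, and that coordinates are ordered (row, col). The phase-dependent offset expression of [66, 67] is not reproduced here, so its magnitude is passed in as `offset_px`. Only the direction handling is illustrated: the shift is applied along the incoming-sunlight direction, away from the Sun. Function names are illustrative.

```python
import numpy as np


def center_of_brightness(image, mask):
    """Intensity-weighted centroid (row, col) of the masked pixels."""
    rows, cols = np.nonzero(mask)
    w = image[rows, cols].astype(float)
    return np.array([np.sum(w * rows), np.sum(w * cols)]) / np.sum(w)


def photocenter_corrected_cob(image, mask, u_illum, offset_px):
    """Shift the COB by offset_px along the incoming-sunlight direction.

    u_illum: image-plane (row, col) direction of incoming sunlight,
             i.e., pointing away from the Sun (need not be unit length).
    offset_px: correction magnitude from the phase-dependent expression
               of Refs. [66, 67], supplied by the caller (not computed here).
    """
    u = np.asarray(u_illum, float)
    u = u / np.linalg.norm(u)
    # The raw COB is biased toward the Sun, so move it the opposite way
    return center_of_brightness(image, mask) + offset_px * u
```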

Orbit determination was attempted with both the NCC centroids and offset corrected COB centroids, and NCC was found to consistently provide lower post-fit residuals. Thus, all subsequent triangulation and orbit determination results assume lunar LOS measurements produced by NCC with a lunar-Lambert template.

Comparison of different Moon centroiding algorithms (right) on LF image 596d (left). In the zoom-in on the right, the NCC centroid is the \(\circ\) marker, the reprojection of the reference trajectory solution is the \(\diamond\) marker, the uncorrected COB centroid is the \(\triangle\) marker, and the photocenter-corrected COB centroid is the \(\square\) marker

6.3 Earth Localization

OPNAV measurements of Earth were complicated by the body’s apparent size, optical effects, cloud cover, and atmosphere. Near the beginning of the LONEStar campaign (2023-AUG-10), the Earth’s apparent diameter was almost 35 pixels, and it decreased to a minimum of 11 pixels by the end of the mission (2023-NOV-08). Thus, Earth was processed as a resolved body for the duration of the experiment. A noticeable coma was often present, which complicated the use of rudimentary image processing techniques. Additionally, template matching techniques (e.g., as used for the Moon in Sect. 6.2) were deemed inappropriate because the constantly evolving cloud cover played a dominant role in the Earth’s appearance. For these reasons, Earth localization was accomplished via horizon-based OPNAV, specifically with the Christian-Robinson algorithm (CRA) [68] in the contemporary formulation described in Ref. [37].

A significant challenge to using a horizon-based method on the Earth is the presence of its atmosphere, which is well-known to artificially shift the apparent location of the lit limb [69]. Such challenges related to Earth-based OPNAV were also studied during Artemis I [10]. However, given the intentional defocusing of the LF camera and small apparent diameter of Earth, the contribution of atmospheric effects to limb localization error is comparatively small.
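A rough scale argument supports the last sentence. If Earth's apparent diameter is treated as spanning its equatorial diameter, one pixel at the limb covers \(2R/D\) kilometers for a \(D\)-pixel disk. The snippet below evaluates this at the 35- and 11-pixel extremes quoted above. The 50 km limb shift is a purely hypothetical value chosen for illustration, not a measured atmospheric effect, and the helper names are illustrative.

```python
R_EARTH_KM = 6378.137  # WGS-84 equatorial radius (km)


def km_per_pixel(apparent_diameter_px, radius_km=R_EARTH_KM):
    """Approximate distance spanned by one pixel at the Earth's limb."""
    return 2.0 * radius_km / apparent_diameter_px


def limb_shift_px(shift_km, apparent_diameter_px):
    """Pixel displacement produced by an apparent limb shift of shift_km."""
    return shift_km / km_per_pixel(apparent_diameter_px)


HYPOTHETICAL_SHIFT_KM = 50.0  # illustrative only; not a value from this paper
for d_px in (35.0, 11.0):     # apparent diameters quoted in Sect. 6.3
    print(f"{d_px:.0f} px disk: {km_per_pixel(d_px):.1f} km/pixel, "
          f"{limb_shift_px(HYPOTHETICAL_SHIFT_KM, d_px):.3f} px shift")
```

At these scales, a shift of a few tens of kilometers is a small fraction of a pixel. That fraction is smaller still relative to the multi-pixel blur introduced by the intentional defocus.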

Earth localization pipeline. From left to right: a zoomed view of the Earth in frame, b scanlines in illumination direction to create a coarse mask of edge points, c Sobel edges (unfiltered), d the resultant subpixel edge estimates (white+), the CRA centroid (\(\circ\)), the reprojection of the reference trajectory solution (\(\diamond\)), and the overlaid reprojected ellipsoid

A straightforward pipeline similar to that of Ref. [70] was implemented, as depicted in Fig. 36. The image was first scanned in the direction of illumination to yield a coarse mask of possible edge points, and edges were then extracted from the image with the Sobel edge detector. Once these edges were filtered against the coarse mask, a denser scan in the direction of illumination was conducted to remove any edges not lying on the lit limb itself. The resulting pixel-level lit-limb points were then refined to subpixel accuracy via a Zernike moment-based method [41, 70], with the kernel width informed by the PSF characterization in Sect. 4.1. The subpixel horizon points were then used within the CRA to produce the OPNAV measurement. Although the CRA provides the full position vector from the camera to the Earth, only its direction (i.e., a LOS measurement) was used for LONEStar. This method consistently yielded an estimate within about 1.5 pixels of the location predicted by reprojecting the reference trajectory (see Fig. 37).
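For a spherical body, the core idea behind the CRA can be illustrated in a few lines. Every unit ray \(\mathbf{s}_i\) to a limb point makes the same angle \(\theta\) with the direction \(\hat{\mathbf{r}}\) to the body's center. The vector \(\mathbf{n} = \hat{\mathbf{r}}/\cos\theta\) therefore satisfies \(\mathbf{s}_i^T\mathbf{n} = 1\) for all \(i\) and can be found by linear least squares. The direction \(\mathbf{n}/\|\mathbf{n}\|\) alone gives the LOS measurement, which is the quantity LONEStar used. The range additionally requires the radius. This is a simplified sketch: the full CRA [68, 37] handles a triaxial ellipsoid through a shape-matrix transformation, and the conversion of subpixel edge points to camera-frame rays (through the camera model of Sect. 4.2) is assumed to have been done already. The function name is hypothetical.

```python
import numpy as np


def horizon_center_sphere(horizon_rays, radius=None):
    """Sphere-only, CRA-style horizon OPNAV sketch.

    horizon_rays: (N, 3) camera-frame vectors toward lit-limb points.
    Returns the unit LOS to the body center, and also the camera-to-center
    vector if the body radius is given.
    """
    s = np.asarray(horizon_rays, float)
    s = s / np.linalg.norm(s, axis=1, keepdims=True)          # unit limb rays
    n, *_ = np.linalg.lstsq(s, np.ones(len(s)), rcond=None)   # solve s_i . n = 1
    los = n / np.linalg.norm(n)                               # radius-independent
    if radius is None:
        return los
    # |n|^2 = 1/cos^2(theta), so n.n - 1 = tan^2(theta) and range = R / sin(theta)
    return los, radius * n / np.sqrt(n @ n - 1.0)
```

Only an arc of the limb is needed, as in a partially lit Earth, provided the points are not collinear in the image.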

6.4 Celestial Triangulation with the Earth and Moon

During the LONEStar imaging campaign, the Earth and Moon were captured simultaneously in a single image 21 times, yielding the largest observation dataset of the mission. Since both celestial bodies were seen in the same image, LOST is the appropriate triangulation method for LF localization in this case. Figure 38 shows short-exposure images from three such observations, illustrating the evolution of the appearance of these bodies throughout the campaign.
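The linear structure that makes simultaneous two-body triangulation tractable can be sketched as follows. Each inertial LOS unit vector \(\mathbf{a}_i\) to a body at known position \(\mathbf{p}_i\) implies \([\mathbf{a}_i \times](\mathbf{p}_i - \mathbf{r}) = \mathbf{0}\), and stacking these constraints gives a linear system for the camera position \(\mathbf{r}\). The row weights used here, \(1/(\sigma_i \rho_i)\) with ranges \(\rho_i\) re-estimated from the previous solution, are a simplification. This is not the exact LOST weighting, and the function names are hypothetical. The LOS vectors are assumed to have already been rotated into the inertial frame using the estimated attitude.

```python
import numpy as np


def skew(a):
    """Cross-product matrix: skew(a) @ v == np.cross(a, v)."""
    return np.array([[0.0, -a[2], a[1]],
                     [a[2], 0.0, -a[0]],
                     [-a[1], a[0], 0.0]])


def _weighted_solve(A_list, P, w):
    # Normal equations: sum w_i^2 A_i^T A_i r = sum w_i^2 A_i^T A_i p_i
    M = sum(wi**2 * Ai.T @ Ai for wi, Ai in zip(w, A_list))
    b = sum(wi**2 * Ai.T @ Ai @ pi for wi, Ai, pi in zip(w, A_list, P))
    return np.linalg.solve(M, b)


def triangulate(los_dirs, body_positions, sigmas, reweight_iters=2):
    """Weighted linear triangulation from >= 2 non-parallel inertial LOS vectors."""
    A_list = [skew(np.asarray(a, float) / np.linalg.norm(a)) for a in los_dirs]
    P = [np.asarray(p, float) for p in body_positions]
    r = _weighted_solve(A_list, P, np.ones(len(P)))   # unweighted first pass
    for _ in range(reweight_iters):
        # Angular error sigma_i at range rho_i -> position error ~ sigma_i * rho_i
        w = [1.0 / (s * np.linalg.norm(p - r)) for s, p in zip(sigmas, P)]
        r = _weighted_solve(A_list, P, w)
    return r
```

With two bodies, the system is solvable as long as the two LOS directions are not parallel. This is why the Earth-Moon separation angle governs the solution quality.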

In all cases, the Image Block consisted of two bracketing long-exposure images, used for attitude determination, surrounding three short-exposure images, each with small variations in exposure time and image sensor gain. Both long-exposure images were downlinked in all cases, with the exception of OPNAV Block 596, where only long-exposure image 596e was successfully downlinked. As such, the attitude for each OPNAV measurement was interpolated using SLERP from bracketing star images for 20 of the 21 observations.
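Attitude interpolation between the bracketing star images can be sketched with standard quaternion SLERP. The quaternion convention (scalar-last here) only needs to be used consistently. The helper names, the linear mapping from image time to interpolation fraction, and the near-parallel fallback threshold are assumptions for illustration.

```python
import numpy as np


def slerp(q0, q1, tau):
    """Spherical linear interpolation between unit quaternions, tau in [0, 1]."""
    q0 = np.asarray(q0, float) / np.linalg.norm(q0)
    q1 = np.asarray(q1, float) / np.linalg.norm(q1)
    dot = np.dot(q0, q1)
    if dot < 0.0:              # q and -q are the same attitude; take the short arc
        q1, dot = -q1, -dot
    if dot > 0.9995:           # nearly identical attitudes: normalized lerp
        q = q0 + tau * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1.0 - tau) * theta) * q0 + np.sin(tau * theta) * q1) / np.sin(theta)


def attitude_at(t, t0, q0, t1, q1):
    """Attitude at image time t from bracketing star-image attitudes (t0, q0), (t1, q1)."""
    return slerp(q0, q1, (t - t0) / (t1 - t0))
```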

Attitude determination from the long-exposure images initially appeared uniquely challenging in this context (as compared to the distant planets), given the proximity and relative brightness of the Earth and Moon in these images. Figure 39 illustrates that the Earth and Moon (and a great number of pixels in their vicinity) are saturated in these instances, while optical effects within the camera produce significant artifacts elsewhere in the image as well. In most cases, however, many stars are still visible elsewhere in the image (see Figs. 39 and 40). The robust image processing pipeline discussed in Sect. 5.2 was sufficient to localize these visible stars while handling all adverse optical effects. Prominent optical artifacts are visible in both Figs. 39 and 40, including large bright spots thought to result from internal reflections within the camera’s optical system. Nevertheless, the LONEStar system described here was able to perform successful attitude determination with no special accommodations.

Selected long-exposure Earth-Moon images. The \(\circ\) markers indicate the observed centroids and the \(\diamond\) markers indicate centroid reprojections from the reference trajectory. Note that the brightness of each patch around these stars has been renormalized such that the darkest pixel is black and the brightest is white

Numerical results for the example images presented in Figs. 38 and 39 are given in Tables 11 and 12. Naturally, given the relative proximity of the Earth and Moon, the residuals are significantly lower than in the triangulation scenarios with distant planets. When normalized by range, however, these results are consistent with (i.e., of the same order of magnitude as) the sequential triangulation results for Jupiter and Saturn. Even as LF grew more distant from the Earth-Moon system, the apparent angle between these two bodies was still well estimated, resulting in excellent triangulation residuals relative to the reference trajectory. Note that the time between bracketing star images was longer in these scenarios, with about 20 seconds between the bounding images. When this is taken into account, the pointing drift rates observed in these images are consistent with those determined in Sect. 5.4. In the case of 580b, the bracketing star angle was much smaller, but this appears to have been coincidental.
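One way to turn two bracketing attitudes into a drift rate is sketched below. It reads the "bracketing star angle" as the rotation angle between the two star-image attitudes, which is an interpretation for illustration. The angle is computed with an atan2 form that stays accurate for arcsecond-level rotations, where \(2\arccos(|\mathbf{q}_0 \cdot \mathbf{q}_1|)\) loses precision. Function names are hypothetical.

```python
import numpy as np


def rotation_angle(q0, q1):
    """Rotation angle (rad) taking attitude q0 to q1 (quaternions, any norm)."""
    q0 = np.asarray(q0, float) / np.linalg.norm(q0)
    q1 = np.asarray(q1, float) / np.linalg.norm(q1)
    if np.dot(q0, q1) < 0.0:   # q and -q represent the same attitude
        q1 = -q1
    # 4D angle between unit quaternions is 2*atan2(|q1-q0|, |q1+q0|);
    # the corresponding 3D rotation angle is twice that.
    return 4.0 * np.arctan2(np.linalg.norm(q1 - q0), np.linalg.norm(q1 + q0))


def drift_rate_arcsec_per_s(q0, q1, dt_s):
    """Mean pointing drift rate between two bracketing star-image attitudes."""
    return np.degrees(rotation_angle(q0, q1)) * 3600.0 / dt_s
```

For a fixed drift rate, the bracketing angle grows in proportion to the time between star images. The roughly 20 s spacing in these blocks therefore has to be divided out before the result is compared with Sect. 5.4.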

The particular geometry of the Earth-Moon system, as it relates to LF, leads to useful insights regarding the covariance of these measurements. Specifically, the covariance is a function of both the apparent diameter of the bodies in question (and thus their range) and the apparent angle between them. While the range and apparent angle of other targets were roughly constant throughout the LONEStar campaign, the orbital motion of the Moon about Earth caused the apparent angle between the two to vary periodically. Combined with LF’s motion away from the Earth-Moon system, this made the covariance change considerably with time. Figure 41 shows the norm of the triangulation residuals for each Earth-Moon OPNAV pass, along with a continuous estimate of the instantaneous total error \(\sqrt{Tr({\varvec{{P}}})}\) from Eq. (26). The total error is plotted as a smooth function (not just at the measurement times) to show how the changing geometry affects the instantaneous triangulation performance. The covariance computations assume 0.5 pixels of pointing error and 0.25–1.0 pixels of centroiding error for the Moon and Earth (depending on apparent diameter). The spikes in this curve correspond to the Moon passing close to the Earth, where the apparent angle between them becomes small and the triangulation geometry becomes poor. As expected, the OPNAV passes with higher residuals are strongly correlated with regions of larger OPNAV covariance. The associated Mahalanobis distance for each case is shown beneath these results in Fig. 41; most measurements have a Mahalanobis distance below two.
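The dependence of the covariance on range and apparent separation can be illustrated with the standard first-order bearings-only information matrix. In this form, a unit LOS \(\mathbf{a}_i\) to a body at range \(\rho_i\) with angular standard deviation \(\sigma_i\) contributes \((\mathbf{I} - \mathbf{a}_i\mathbf{a}_i^T)/(\sigma_i\rho_i)^2\). This may differ in detail from Eq. (26). Angular errors are given in radians; converting from pixels would require the camera's instantaneous field of view, which is not restated here. The helper names are hypothetical.

```python
import numpy as np


def triangulation_covariance(los_dirs, ranges, sigmas_rad):
    """First-order position covariance for simultaneous LOS triangulation."""
    info = np.zeros((3, 3))
    for a, rho, s in zip(los_dirs, ranges, sigmas_rad):
        a = np.asarray(a, float) / np.linalg.norm(a)
        # Each LOS constrains only the two directions perpendicular to it
        info += (np.eye(3) - np.outer(a, a)) / (s * rho) ** 2
    return np.linalg.inv(info)


def total_error(P):
    """Scalar summary sqrt(trace(P))."""
    return float(np.sqrt(np.trace(P)))


def mahalanobis(residual, P):
    """Mahalanobis distance of a position residual under covariance P."""
    e = np.asarray(residual, float)
    return float(np.sqrt(e @ np.linalg.solve(P, e)))
```

As the Earth-Moon separation shrinks, the two information terms lose sensitivity along nearly the same direction, so \(\sqrt{Tr(\mathbf{P})}\) grows sharply. This is the mechanism behind the spikes described above.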

7 OPNAV-Only Orbit Determination

The results of Sects. 5 and 6 focused on instantaneous localization of LF using triangulation, CRA, and related techniques. While such instantaneous solutions are valuable, it is equally important to process the planet LOS measurements directly in a navigation filter to produce an orbit determination (OD) solution. Using a batch filter [71], an OPNAV-only OD solution was produced for two scenarios. The first scenario used only the distant planet observations (Mercury, Mars, Jupiter, Saturn), which represents OPNAV-only navigation performance within the inner Solar System while far from all the observed bodies (e.g., for an exploration mission during interplanetary cruise). The second scenario used observations of the Earth and Moon in addition to all the distant planet observations, which represents the best OPNAV-only OD solution for LF possible with the LONEStar data set.

7.1 Batch Filter Design

The LONEStar batch OD solution is produced by solving a weighted nonlinear least-squares problem without any a priori information from the reference trajectory. A maximum likelihood estimate is constructed by finding the LF state at a single reference time that best explains the entire sequence of OPNAV measurements over the 97-day imaging campaign (17 LOS measurements for Scenario 1 and 59 LOS measurements for Scenario 2). This is accomplished by minimizing the negative log likelihood function of the observations

which is essentially a sum of the squared Mahalanobis distances of the measurement residuals. In Eq. (33), the state \({\varvec{{x}}}_i\) is computed by propagating the reference state \({\varvec{{x}}}_0\) from the reference time \(t_0\) to the measurement time \(t_i\). The measurement model \(h({\varvec{{x}}})\) is the full camera model from Sect. 4.2, which includes projective geometry, lens distortions, conversion to pixel coordinates, stellar aberration, and light time-of-flight. Attitude was not estimated by the filter; instead, it was determined from the star field background using the procedures discussed in Sect. 5.2.
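The structure of the batch estimator can be illustrated with a toy problem. The sketch below stacks whitened LOS residuals, so that half their sum of squares equals the Gaussian negative log likelihood up to a constant. It then minimizes this quantity over the reference state by Gauss-Newton with a finite-difference Jacobian. Several simplifications are assumptions and not part of the LONEStar design: propagation is force-free (a stand-in for the N-body plus SRP model), residuals are differences of unit LOS vectors rather than pixel residuals from the full camera model, the observed bodies are fixed points, and \(\beta\) is not estimated. All names are hypothetical.

```python
import numpy as np


def propagate(x0, dt):
    """Hypothetical force-free propagation; stands in for the real dynamics."""
    return x0[:3] + x0[3:] * dt


def unit(v):
    return v / np.linalg.norm(v)


def whitened_residuals(x0, times, bodies, meas, sigmas):
    """Stacked (measured - predicted) unit-LOS residuals divided by sigma."""
    return np.concatenate([
        (a - unit(p - propagate(x0, t))) / s
        for t, p, a, s in zip(times, bodies, meas, sigmas)
    ])


def neg_log_likelihood(x0, *data):
    """Gaussian NLL up to a constant: half the summed squared Mahalanobis distances."""
    r = whitened_residuals(x0, *data)
    return 0.5 * float(r @ r)


def gauss_newton(x_init, data, iters=25, h=1e-7):
    """Minimize the NLL over the reference state x0 = [position, velocity]."""
    x = np.asarray(x_init, float).copy()
    for _ in range(iters):
        r = whitened_residuals(x, *data)
        J = np.empty((r.size, x.size))
        for k in range(x.size):                  # forward-difference Jacobian
            dx = np.zeros_like(x)
            dx[k] = h * max(1.0, abs(x[k]))
            J[:, k] = (whitened_residuals(x + dx, *data) - r) / dx[k]
        step = np.linalg.lstsq(J, -r, rcond=None)[0]
        x += step
        if np.linalg.norm(step) < 1e-12 * max(1.0, np.linalg.norm(x)):
            break
    return x
```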

The dynamical model used by the batch filter to propagate the state included gravitational attraction from the major bodies of the Solar System (the Sun, planets, and the Moon) and solar radiation pressure (SRP). For simplicity, a cannonball model was used for the SRP acting on the spacecraft. Given the influence of SRP on the trajectory, the SRP ballistic coefficient \(\beta = C_R A / m\) was estimated as part of the orbit determination process. SRP acceleration was modeled according to [72]

where \(P = 4.56 \times 10^{-6}\ \mathrm{N\,m^{-2}}\) is the solar radiation pressure at 1 AU and \({\varvec{{r}}}_S\) is the position of the Sun with respect to the SSB. The LF dynamics were modeled in the ICRF with an origin at the SSB. Because this origin is inertial, the gravitational acceleration of the N-body problem reduces to a sum of the direct accelerations from each body, with no indirect terms. Thus, the total acceleration is given by

where the positions of the Solar System bodies were obtained from the DE440 ephemerides [55].
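The force model described above can be sketched as follows. The sketch assumes the common cannonball form, in which SRP acts along the Sun-to-spacecraft direction and scales with the inverse square of heliocentric distance, with \(P\) referenced to 1 AU. Body positions (e.g., from DE440) and gravitational parameters are supplied by the caller; how they are retrieved is outside this sketch. The function names are hypothetical.

```python
import numpy as np

AU_M = 1.495978707e11   # astronomical unit (m)
P_SRP = 4.56e-6         # solar radiation pressure at 1 AU (N m^-2), as in the text


def srp_accel(r, r_sun, beta):
    """Cannonball SRP acceleration (m/s^2); beta = C_R * A / m in m^2/kg."""
    d = np.asarray(r, float) - np.asarray(r_sun, float)   # Sun -> spacecraft
    dist = np.linalg.norm(d)
    return beta * P_SRP * (AU_M / dist) ** 2 * d / dist   # pushes away from the Sun


def total_accel(r, bodies, r_sun, beta):
    """Point-mass N-body gravity in an SSB-centered inertial frame plus SRP.

    bodies: iterable of (mu [m^3/s^2], position [m] relative to the SSB),
    including the Sun itself; r_sun is passed separately for the SRP term.
    """
    r = np.asarray(r, float)
    a = np.zeros(3)
    for mu, r_k in bodies:
        d = r - np.asarray(r_k, float)
        a -= mu * d / np.linalg.norm(d) ** 3    # direct term only (inertial origin)
    return a + srp_accel(r, r_sun, beta)
```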

The measurement error covariance was determined empirically by inspection of the triangulation residuals. Measurement errors were assumed to be zero-mean, Gaussian, and isotropic, with standard deviations of 0.25 pixel (Saturn), 0.5 pixel (Jupiter), 0.75 pixel (Mercury and Mars), and 0.25–1.0 pixel (Earth and Moon, depending on apparent radius). These errors include both image processing (i.e., centroiding) and pointing (i.e., attitude knowledge) errors.

7.2 Scenario 1: OPNAV-Only OD with Distant Planets

The OPNAV-only distant-planet OD solution included 1 Mercury observation, 1 Mars observation, 8 Jupiter observations, and 7 Saturn observations. The converged LMA solution from dynamic triangulation (discussed in Sect. 5.5) was used as the initial guess for the batch estimation. The resulting OPNAV-only OD solution was compared with the reference trajectory, with residuals shown in Fig. 42. A difference of about 6–11 Earth radii between the two OD solutions is observed over the 97-day period. This performance is achieved despite the comparatively large ranges to the distant planets at this time: 0.9–1.4 AU (Mercury), 2.4–2.5 AU (Mars), 4.0–4.9 AU (Jupiter), and 8.8–9.2 AU (Saturn). Because the OPNAV OD residuals are an order of magnitude larger than the radiometric OD residuals, the reference trajectory from Earth-based tracking appears to be a reasonable surrogate for the true orbit in this scenario.
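For a sense of scale, the 6–11 Earth radii difference can be converted to kilometers. Via the small-angle relation (offset divided by range), it can also be converted to the LOS shift it would produce if it lay perpendicular to the line of sight at the quoted Jupiter and Saturn ranges. This is a back-of-envelope illustration only, since the OD difference is not generally perpendicular to every LOS. The helper names are hypothetical.

```python
import math

R_EARTH_KM = 6378.137              # equatorial Earth radius (km)
AU_KM = 149_597_870.7              # astronomical unit (km)
RAD_TO_ARCSEC = 648000.0 / math.pi


def earth_radii_to_km(n_radii):
    return n_radii * R_EARTH_KM


def subtended_arcsec(offset_km, range_au):
    """Small-angle LOS shift (arcsec) from an offset perpendicular to the LOS."""
    return offset_km / (range_au * AU_KM) * RAD_TO_ARCSEC


for n in (6, 11):
    km = earth_radii_to_km(n)
    for body, rng in (("Jupiter", 4.0), ("Saturn", 8.8)):
        print(f"{n} R_E = {km:,.0f} km -> {subtended_arcsec(km, rng):.1f} arcsec "
              f"at {body} ({rng} AU)")
```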

Finally, as one would expect, the OPNAV-only OD solution demonstrated substantially better agreement with the OPNAV observables. This is evident in the smaller measurement residuals for the OPNAV-only OD solution (blue dots) compared with those for the reference trajectory (red dots) in Fig. 43.

7.3 Scenario 2: OPNAV-Only OD with Earth, Moon, and Distant Planets