Abstract

Background

While the burgeoning researcher and practitioner interest in physical literacy has stimulated new assessment approaches, the optimal tool for assessment among school-aged children remains unclear.

Objective

The purpose of this review was to: (i) identify assessment instruments designed to measure physical literacy in school-aged children; (ii) map instruments to a holistic construct of physical literacy (as specified by the Australian Physical Literacy Framework); (iii) document the validity and reliability for these instruments; and (iv) assess the feasibility of these instruments for use in school environments.

Design

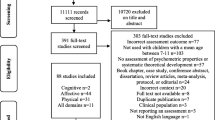

This systematic review (registered with PROSPERO on 21 August, 2022) was conducted in accordance with the Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) statement.

Data Sources

Reviews of physical literacy assessments published in the past 5 years (2017 onwards) were initially used to identify relevant assessments. Following that, a search (20 July, 2022) of six databases (CINAHL, ERIC, GlobalHealth, MEDLINE, PsycINFO, SPORTDiscus) was conducted for assessments that were missed by those reviews or published since them. Each step of screening involved evaluation by two authors, with any issues resolved through discussion with a third author. Nine instruments were identified from eight reviews. The database search identified 375 potential papers, of which 67 full-text papers were screened, resulting in 39 papers relevant to a physical literacy assessment.

Inclusion and Exclusion Criteria

Instruments were classified against the Australian Physical Literacy Framework and needed to have assessed at least three of the Australian Physical Literacy Framework domains (i.e., psychological, social, cognitive, and/or physical).

Analyses

Instruments were assessed for five aspects of validity (test content, response processes, internal structure, relations with other variables, and the consequences of testing). Feasibility in schools was documented according to time, space, equipment, training, and qualifications.

Results

Assessments with more validity/reliability evidence, according to age, were as follows: for children, the Physical Literacy in Children Questionnaire (PL-C Quest) and Passport for Life (PFL). For older children and adolescents, the Canadian Assessment for Physical Literacy (CAPL version 2). For adolescents, the Adolescent Physical Literacy Questionnaire (APLQ) and Portuguese Physical Literacy Assessment Questionnaire (PPLA-Q). Survey-based instruments were appraised to be the most feasible to administer in schools.

Conclusions

This review identified optimal physical literacy assessments for children and adolescents based on current validity and reliability data. Instrument validity for specific populations was a clear gap, particularly for children with disability. While survey-based instruments were deemed the most feasible for use in schools, a comprehensive assessment may arguably require objective measures for elements in the physical domain. If a physical literacy assessment in schools is to be performed by teachers, this may require linking physical literacy to the curriculum and developing teachers’ skills to develop and assess children’s physical literacy.

This review identified physical literacy assessments for children and adolescents based on a definition of physical literacy that incorporates physical, psychological, social, and cognitive domains.

Assessments with more validity/reliability evidence were the: Canadian Assessment for Physical Literacy version 2, Adolescent Physical Literacy Questionnaire, Passport for Life, Physical Literacy in Children Questionnaire, and Portuguese Physical Literacy Assessment Questionnaire.

Survey-based instruments were the most feasible to administer in schools.

Findings will be useful for researchers and practitioners who wish to assess children’s physical literacy in a school setting and need information on how instruments are classified in terms of current validity, reliability, and feasibility data.

1 Introduction

There has been a surge of research interest in physical literacy in children and youth in the past 5 years (Web of Science: < 80 per year in 2014/15, 100 + in 2016/2017, 170 + in 2018/19, 250 + articles each year in 2020/21, and 800 + articles in 2022), which can partly be explained by the hypothesis that possessing greater physical literacy will enhance an individual’s likelihood of participating in lifelong physical activity [1]. Physical literacy has been defined in various ways [2,3,4,5] and for this paper, we have selected the Australian definition: “Physical literacy is lifelong holistic learning acquired and applied in movement and physical activity contexts. It reflects ongoing changes integrating physical, psychological, social and cognitive capabilities. It is vital in helping us lead healthy and fulfilling lives through movement and physical activity. A physically literate person is able to draw on their integrated physical, psychological, social and cognitive capabilities to support health promoting and fulfilling movement and physical activity — relative to their situation and context — throughout the lifespan,” as described in the Australian Physical Literacy Framework (APLF) [6, 7]. The APLF incorporates four domains (physical, psychological, cognitive, and social) and 30 elements of physical literacy within these domains that are based on the capabilities/capacities known to influence human movement [7].

This research interest is also reflected in publications and debate regarding how and whether to assess physical literacy [8,9,10,11]. This review follows a pragmatic perspective, maintaining that assessment is important to understand any individual, at any point, on their physical literacy journey and how they can best be supported. While there have been several reviews on physical literacy instruments [8,9,10,11], no review has comprehensively documented the validity and reliability of developed instruments for school-age children and youth. When selecting assessment instruments, it is important to be able to understand the degree of available validity evidence for the context, for example, the school setting. This enables an instrument to be selected based on its measurement properties. We can be more confident of our findings if the physical literacy measurements we use have stronger validity and reliability evidence. Another important aspect of the choice and use of instruments is their feasibility for collecting data in the given context [10, 12].

A recent scoping review identified that some of the latest approaches to defining and assessing physical literacy encompassed notions regarding physical, psychological, cognitive, and social learning [13]. While many instruments assess component parts of physical literacy [12, 14], for example, movement skills, our purpose was to capture instruments that have been purposefully designed to measure physical literacy as a holistic construct. The APLF is our benchmark of a holistic assessment model, as it incorporates four domains (physical, psychological, cognitive, and social) unlike many other instruments [8,9,10,11]. In addition, this work was commissioned by the Australian Sports Commission (the funders of the APLF) to identify and understand which instruments developed for use in school-aged children best mapped to the APLF. Thus, the purpose of this review was to: (i) identify instruments designed to measure physical literacy in school-aged children; (ii) map these instruments to the APLF; (iii) document the validity and reliability for these instruments; and (iv) assess the feasibility of use of these instruments in school contexts.

2 Methods

2.1 Initial Search of Reviews

Reviews (narrative and systematic) of physical literacy instruments in the past 5 years (2017 onwards) [located through Google Scholar using the terms ‘physical literacy’ and ‘review’ on 20 July, 2022] were used to identify instruments (subjective or objective) specifically designed for the purpose of assessing physical literacy in school-aged children in the school setting. Having ‘physical literacy’ in the name of an instrument was not, on its own, sufficient to meet the review inclusion requirements. As the aim was to identify instruments designed to measure a holistic construct of physical literacy, instruments needed to assess at least three domains of the APLF (i.e., psychological, social, cognitive, and/or physical). Instruments that met these criteria and addressed additional elements outside of the APLF were also included.
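The domain criterion above can be expressed as a simple check. The following is a minimal sketch for illustration only, not the procedure the authors used; the function name `meets_domain_criterion` and the example inputs are hypothetical.

```python
# The four APLF domains named in the review.
APLF_DOMAINS = {"physical", "psychological", "social", "cognitive"}


def meets_domain_criterion(domains_assessed, minimum=3):
    """Return True if an instrument covers at least `minimum` APLF domains.

    Domains outside the APLF (e.g., a physical activity behavior domain)
    are ignored for this count; they neither help nor hurt eligibility.
    """
    covered = APLF_DOMAINS & {d.strip().lower() for d in domains_assessed}
    return len(covered) >= minimum


# Hypothetical inputs, not data from the review.
single_domain = meets_domain_criterion(["physical"])
three_domains = meets_domain_criterion(["Physical", "Psychological", "Cognitive"])
print(single_domain, three_domains)
```

Under this sketch, an instrument that assesses only movement skills would be excluded even if it is branded as a physical literacy tool, which mirrors the point made above about instrument names.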

2.2 Search Terms and Databases

Searches were conducted by health faculty librarians on 20 July, 2022 for physical literacy instruments in school-aged children (not preschool or early years) that may have been missed by the existing reviews from the past 5 years or published since them. Six databases were searched (CINAHL, ERIC, GlobalHealth, MEDLINE, PsycINFO, SPORTDiscus) [date range 1 June, 2017 to 30 June, 2022]. The search strategy, including all identified keywords and relevant subject headings (e.g., MeSH and Thesaurus terms), was adapted for each included information source. The key concepts and search terms were Concept 1: ‘Child’, Concept 2: ‘School’, and Concept 3: ‘Physical literacy’. Please see the Electronic Supplementary Material for the final search plan including alternative terms for the concepts. Table 1 reports the inclusion criteria for the review. Each screening step involved two authors, with any issues resolved through discussion with a third author.

2.3 Instrument Synthesis

Instruments that met the included criteria were classified against the APLF (by one author and then checked with a second author) in terms of which of the 30 elements they assessed. Within the coding process, it was possible for two (or more) items in an instrument to be matched to only one element in the APLF. For example, motivation might be assessed by more than one survey item within an instrument/assessment. The converse could also apply if the item was assessed as meeting more than one of the APLF elements. For example, the item might measure psychological aspects of engagement and enjoyment and social aspects of collaboration. If instruments assessed additional elements to those assessed in the APLF, they were mapped to the appropriate domain or new domains were created.
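The many-to-many coding described above (several items matched to one element, or one item matched to several elements) can be sketched as follows. This is an illustrative assumption about how such a coding could be stored and tallied, not the authors' coding sheet; the item identifiers and the `item_coding` structure are hypothetical, while the element names come from the examples in the text.

```python
from collections import defaultdict

# Hypothetical coding of survey items to (domain, element) pairs.
item_coding = {
    "item_1": [("psychological", "motivation")],
    "item_2": [("psychological", "motivation")],  # second item, same element
    "item_3": [  # one item matched to two elements
        ("psychological", "engagement and enjoyment"),
        ("social", "collaboration"),
    ],
}


def elements_by_domain(coding):
    """Collect the distinct elements an instrument assesses in each domain."""
    found = defaultdict(set)
    for pairs in coding.values():
        for domain, element in pairs:
            found[domain].add(element)
    # Sets remove duplicates, so an element is counted once however many
    # items are matched to it.
    return {domain: sorted(elements) for domain, elements in found.items()}


coverage = elements_by_domain(item_coding)
print(coverage)
```

Counting distinct elements rather than items keeps an instrument with many items on one element from appearing to cover more of the framework than it does.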

2.4 Instrument Validity and Reliability

The Standards for Educational and Psychological Testing [15] provided the theoretical framework for assessing validity and reliability. These standards espouse that rather than ‘validating an instrument’, validation is a process involving ongoing evidence about the property of test scores and the interpretations that stem from instrument use within a context. The Standards discuss validity in terms of five aspects: test content (from a literature review and content validity with experts), response processes (face validity), internal structure (internal consistency, test–retest and/or inter-rater reliability, construct validity), relations with other variables, and the consequences of testing (screening potential). Specifically, for relations with other variables, age, sex, motor skill competence, physical literacy, and physical literacy over time were considered and reported on. Physical activity was not included, as this was not always considered part of the definition of physical literacy. The included instruments were assessed for each of these validity aspects (by one author and then checked with a second author) and then the evidence was categorized as: supported (✓), partially supported (~), not supported (x), or not yet tested/reported (–). Please see Table 2 regarding how this was operationalized for this review.
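Internal consistency in the included studies is typically reported as Cronbach’s α. As a reminder of what that statistic summarizes, the following minimal sketch computes the standard formula, α = (k / (k − 1)) × (1 − Σ item variances / variance of total scores), from a small table of item scores. It is not taken from any of the reviewed papers; the function name and the example data are hypothetical, and the sample variance is assumed throughout.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for rows of respondent scores (one list per respondent).

    Assumes every row has the same number of items (k >= 2) and that
    total scores are not all identical (otherwise alpha is undefined).
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: two items that rank three respondents identically.
example = [[1, 1], [2, 2], [3, 3]]
print(cronbach_alpha(example))
```

Because α depends on the number of items as well as their intercorrelation, values from short subscales are not directly comparable with those from longer scales.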

2.5 Feasibility

Feasibility within a school environment for each physical literacy assessment with more than test content evidence was assessed using a modified matrix developed previously [10]. Instruments with less validity evidence were not considered for feasibility, as an instrument arguably needs reliability and validity to be established first. This process documented feasibility according to cost efficiency (time, space, equipment, training, and qualifications required), but not acceptability in the way the previous framework conceptualized it (i.e., participant understanding, completed assessments [10]), as this is considered as test content evidence within the validity framework [15].

3 Results

3.1 Identification of Instruments from the Google Scholar Search of Prior Reviews

Eight systematic or narrative reviews were identified (Fig. 1). These reviews included nine instruments (highlighted in underline and italics in this section) relevant for potential inclusion. Edwards et al. [8] used the global search term “physical literacy” to identify relevant instruments. Instruments did not meet our inclusion criteria if they typically focused on one domain of the APLF, particularly the physical (n = 22), the affective [also termed psychological] (n = 8), or the cognitive (n = 5) domains. The social domain was typically not assessed [8]. The Canadian Assessment for Physical Literacy CAPL (version 1) [16,17,18] was the only assessment that covered more than one domain of physical literacy but did not meet our inclusion criteria as it is not the most recent version of the CAPL.

Liu and Chen [19] undertook a narrative approach to physical literacy assessment that identified eight instruments. The Perceived Physical Literacy Inventory (PPLI) [20, 21], the Canadian Assessment for Physical Literacy version 2 (streamlined to 14 protocols rather than 25) [22,23,24,25,26], Passport for Life [27], and the Physical Literacy Assessment for Youth, specifically PLAYfun, PLAYbasic (a shortened version of PLAYfun), PLAYself, and PLAYcoach (counted as four instruments) [28] met our criteria. PLAYparent was not included as our focus was on assessments that could be performed in school. PLAYcoach was seen as potentially relevant as a coach might be engaged in a sport program at school. Four did not meet our criteria, with two designed for the early years, one focused only on movement skills, and another was not explicitly designed to assess physical literacy [19].

Kaioglou and Venetsanou [9] conducted a review on physical literacy assessment instruments for use with children engaged in gymnastics and identified two approaches to physical literacy assessment: the first was to develop and use multi-component assessment instruments, and the second was to use existing standardized instruments. The first approach aligns with our inclusion criteria and the Canadian assessments already identified (PLAY tools, Passport for Life, and the Canadian Assessment of Physical Literacy) were the only instruments they identified that used this approach.

Shearer et al. [10] aimed to identify child assessments of physical literacy elements that were not necessarily branded as physical literacy assessments. Of the 52 potential assessment instruments identified, only the three named as physical literacy assessments were considered comprehensive by Shearer et al. [10] and met our inclusion criteria. These assessments (the Canadian Assessment for Physical Literacy, Passport for Life, and the Physical Literacy Assessment for Youth) have already been identified for inclusion in our review. Essiet et al. [14] also took a wide systematic approach beyond the physical activity and sport-related literature; however, the authors did not report any teacher proxy-report physical literacy instruments.

Jean de Dieu and Zhou [11] conducted a narrower systematic search and identified ten instruments, including four already identified in previous reviews [10, 19]. Two instruments mentioned in prior reviews did not meet our inclusion criteria. Additionally, Jean de Dieu and Zhou [11] identified the observed model of physical literacy [29] but this was not included in our synthesis, as it was still at the conceptual model stage. The instruments newly identified were the Chinese Assessment and Evaluation of Physical Literacy (CAEPL) [30] and the International Physical Literacy Association (IPLA) Physical Literacy Charting Tool (published 13 December, 2018, on the IPLA website https://www.physical-literacy.org.uk/library/charting-physical-literacy-journey-tool/). In the same year, Young et al. [31] published a review aiming to investigate physical literacy assessments in physical education, sport, or public health. The six identified assessment instruments were all identified in previous reviews for inclusion in our synthesis.

3.2 Identification of Instruments from the Database Search

Through the database search, 39 papers relevant to the physical literacy assessment were identified. A total of 29 papers reported instrument reliability and/or validity. A total of 27 papers included information regarding the feasibility of assessment in schools [20 papers reported in Sect. 3.9 regarding the feasibility aspects captured in instruments and seven papers reported in the discussion on broader aspects of feasibility in schools (see Sect. 4)]. There was crossover between articles reporting validity and feasibility (Fig. 1).

Five additional assessments that were not included in the prior reviews met our inclusion criteria. These include the Adolescent Physical Literacy Questionnaire (APLQ) [32], the Physical Literacy in Children Questionnaire (PL-C Quest) designed for primary school-aged children [33, 34], the Physical Literacy self-Assessment Questionnaire (PLAQ) [35], and the Portuguese Physical Literacy Assessment Questionnaire (PPLA-Q) designed for adolescents in Grades 10–12 (aged 15–18 years) [36, 37]. One PhD thesis was also identified; Dong [38] developed the Perceptions of Physical Literacy for Middle-School Students (PPLMS).

3.3 Instruments Included in Our Synthesis

A total of 14 instruments were included in our synthesis (nine from prior reviews and five from the updated search). They are referred to by their acronyms, which are listed alongside instrument details in Table 3: (1) APLQ, (2) CAEPL, (3) CAPL version 2, (4) IPLA, (5) PFL, (6) PLAQ, (7) PLAYbasic, (8) PLAYcoach, (9) PLAYfun, (10) PLAYself, (11) PL-C Quest, (12) PPLA-Q, (13) PPLI, and (14) PPLMS. Six were from Canada, three from China, one from Australia, one each from Iran, Portugal, the UK, and the USA. There were seven self-report instruments, one designed for children (PL-C Quest), five designed for adolescents (APLQ, CAEPL, PPLA-Q, PPLI, and PPLMS), and one without an age specification (IPLA). One proxy-report instrument was designed for coaches (PLAYcoach). A further four had mixed assessment approaches including self-report and observation (CAPL version 2, PFL, PLAYfun & self).

3.4 Mapping Instruments Against the APLF

Table 4 shows the APLF elements each instrument assessed; elements in italics are additional to those specified in the APLF. The instrument that assessed the most elements of the APLF was the PL-C Quest, which was designed to map to the APLF and therefore assessed the 30 APLF elements. The PFL (n = 20) and the PLAQ (n = 18) assessed the next highest number of elements, with both assessing all four domains of the APLF. The APLQ assessed 11 elements across four domains. The PPLI and the IPLA instruments assessed fewer elements (n = 8), but still across all four domains.

The physical domain was the most assessed overall (n = 65), followed by the psychological (n = 53), cognitive (n = 29), and social (n = 19). The most assessed elements (defined as being in at least six of the 14 assessments) in the physical domain were movement skills, cardiovascular endurance, and then, object manipulation, and stability/balance. The most assessed psychological elements were motivation and confidence, engagement and enjoyment, and self-regulation (emotional) and self-perception. The most assessed cognitive element was content knowledge. The most assessed social element was relationships.

3.5 Environmental Context

In eight assessments (IPLA, PFL, PLAY [all four] instruments, PL-C Quest, and the PPLI), the environmental context (e.g., land, snow, ice, water) was either specifically referred to or diversity in the environment was inherent in the items. The PPLI differed from the other instruments in that it did not refer to land or water as the environmental context, but specifically to ‘wild natural survival’.

3.6 Additional Domains/Elements of Interest Identified to the APLF

Eight instruments measured physical activity behavior, which is not specified as an element in the APLF. Some instruments had survey items covering a broad range of physical activity time periods and contexts. For instance, the APLQ asked about: hours of physical activity or exercise during the week and per day, and whether they did physical activity and exercise outside of school time or as a regular habit. The CAEPL included the domain of physical activity and exercise behavior in terms of: moderate- to vigorous-intensity physical activity, organized sports, active play, active transport, and experience in games/sports/events (within school/between schools/regional-national). The IPLA instrument included 15 survey items that investigated active participation (how often at school/home) in five movement domains: team sport (e.g., hockey, soccer), individual sport (e.g., golf, swimming), dance, gymnastics, and fitness activities (e.g., jogging, yoga). The PLAQ refers to: participation in sports activities (including sports classes and extracurricular activities) and games no less than five times a week, and sports activities (including physical education classes and extracurricular activities) being not less than 1 h per day. The PPLMS asked about: frequency of aerobic exercises for at least 60 min per day and a minimum of five times per week, whether sports were played for at least 60 min per day, frequency of participation in a physical activity program, and participation in physical activities for at least 60 min every day.

Two instruments had survey items that were more limited in the context. Passport for Life had items on the number of physical education classes per week, and time in physical activity each day and PLAYcoach asked coaches about the physical activities and sports that an individual person participated in, but this information was not included in the overall score of the instrument items.

One instrument used a device-based assessment of physical activity. The CAPL version 2 used pedometers (steps each day over 7 days) and had an item asking the number of days with at least 60 min of moderate-to-vigorous physical activity. The CAPL also asked participants about the number of days in the past week that they were physically active for at least 60 min per day; recommended by their Delphi process [17].

Two of these eight assessments also included sedentary behavior. The CAEPL included screen-based time and homework time (this was included in their final model even though it did not reach expert agreement during content development of the instrument). The PLAQ had an item stating: “I spend more than 2 h on the electronic screen every day.”

In terms of the additional elements identified (beyond the APLF), in the physical domain, items referring to specific sports skills were included in two instruments (APLQ, CAEPL). Body composition was included in one instrument (CAEPL), while power and body image were each included in one instrument. Some elements that are part of the APLF were only assessed by the two instruments directly aligned to the APLF (reaction time, connection to place).

3.7 Reliability and Validity Evidence for the Selected Instruments

A summary of validity and reliability evidence for each instrument is presented in Table 5. A narrative description of this evidence is presented below.

3.7.1 Instruments with Evidence of Test Content Only

Several instruments had evidence of test content only with one article located for each instrument. The CAEPL for school-aged children is in the conceptual stages of an assessment approach [30]. The IPLA instrument is available on the IPLA website (https://www.physical-literacy.org.uk/library/charting-physical-literacy-journey-tool/, accessed 14 July, 2022), and is developed from theoretical perspectives but no published validity or reliability data could be located. One paper that appears relevant to the IPLA approach highlighted considerations that organizations could make to develop methods to chart individuals’ progress [39].

3.7.2 Instruments with Evidence of Two Validity Aspects

The PLAQ (one article located [35]) used a grounded theory approach with students, parents, teachers, and experts to develop their physical literacy evaluation indicators for Chinese children in Grades 3–6, but they did not report a literature review; therefore, this was rated as partially meeting evidence for test content [35]. Internal structure was investigated using a factor analysis in a large sample (n = 1179) of Chinese children from randomly selected primary schools [35]. After an exploratory factor analysis, 16 items with low loadings were deleted and 44 items were retained. A confirmatory factor analysis then confirmed the structure (physical competence, affective, knowledge and understanding, physical activity) of the 44 reduced items [35].

Evidence for two validity aspects for the PPLI scale (three articles located [20, 21, 40]) in Hong Kong adolescents aged 11–19 years was reported [20]. Partial evidence for internal structure (a satisfactory three-factor structure but no information on reliability) and partial support for relations with other variables (male individuals had higher physical literacy levels than female individuals but perceptions of physical literacy were not impacted by age) was reported [20]. A Turkish translation of the PPLI (renamed as the Perceived Physical Literacy Scale for Adolescents [PPLSA]), tested with 12- to 19-year-old adolescents, provided further evidence of internal structure (a three-factor model structure with acceptable fit; internal consistency of 0.90 for the whole scale; test–retest reliability ranging between 0.77 and 0.96) [40]. An earlier paper (2016) reported validity evidence of the PPLI in reference to teachers’ completion on behalf of themselves and thus this evidence was not considered as supportive of our population of interest (children) [21].

The PLAY instruments also have a range of publications with validity evidence (five articles reported in this section [28, 41,42,43,44] and one article mentioned in Sect. 3.8. [45]). There was mixed evidence, depending on the instrument, from mainly Canadian populations and one Croatian population [44]. Evidence for test content was not identified for any of the PLAY instruments. Evidence of internal structure for PLAYfun was reported in 7- to 14-year-old individuals, with support for inter-rater agreement (ICC = 0.87) and a five-factor structure with satisfactory model fit [41]. There was also evidence for relations with other variables for sex and age (scores increased with age, and boys scored higher in subscales such as object control). PLAYfun and PLAYbasic were investigated in children aged 8–14 years living in remote Canadian communities and further evidence of internal structure for PLAYfun (inter-rater reliability ICC = 0.78 and 0.82; α = 0.83–0.87) was provided [42]. Internal structure for PLAYbasic was partially supported (inter-rater reliability ICC = 0.72 and 0.79; α = 0.56–0.65). Relations with other variables was reported again for age in terms of positive correlations [PLAYfun (r = 0.23–0.39) and PLAYbasic (r = 0.21–0.34)]. Additionally, both these PLAY instruments had large positive correlations with the Canadian Agility and Movement Skill Assessment (CAMSA) motor skill obstacle course (PLAYfun r = 0.47–0.60, PLAYbasic r = 0.40–0.61) and small-to-moderate correlations with a self-reported measure of physical activity (PLAYfun r = 0.24–0.44, PLAYbasic r = 0.20–0.42). A suite of PLAY instruments was tested in children aged 8–13 years [28]. Evidence of internal structure was supported for PLAYfun (internal consistency, α > 0.70; inter-rater reliability, ICC > 0.80) but only partially supported for PLAYbasic (α = 0.47; inter-rater reliability ICC > 0.80). Test–retest reliability and factor validity were not assessed. There was also evidence of relations with other variables (male individuals scoring higher on PLAYbasic and PLAYfun total scores; age positively correlated with PLAYbasic and PLAYfun [r = 0.16–0.32]). PLAYfun and PLAYbasic were also both positively correlated (r = 0.19–0.59) with another measure of motor competence (BOT-2).

Evidence of internal structure for PLAYself in children (aged 8–14 years) has been reported [43] with good reliability (α = 0.80, and test–retest reliability over 7 days, 0.87) and while the initial fit statistics were not ideal, when two items were removed the final fit statistics were satisfactory [43]. In the Croatian population of individuals aged 14–18 years, PLAYself had acceptable internal consistency for the components (the total score was not reported) and good test–retest reliability (0.85) [44]. Construct validity was confirmed according to the factor analysis of two significant factors; no other forms of construct validity were tested [44].

There was no evidence of relations with other variables (male individuals did not score differently to female individuals for the total PLAYself score) [43, 44]. No published validity evidence could be located for PLAYcoach [28].

3.7.3 Instruments with Evidence of at Least Three Validity Aspects

There is a body of validity evidence for the CAPL version 2 (11 articles in total, ten described in this section [18, 23, 25, 26, 44, 46,47,48,49,50], and one mentioned in Sect. 3.8. [45]). Evidence for test content has been published for Canadian children for: the movement skills assessment component (the CAMSA [18]), the domains of motivation and confidence [26], and the CAPL version 2 approach [23].

A Danish validation recently published evidence for response process. Elsborg et al. [46] selected Danish children in the lowest grade level (second grade), on the basis that they may have the most difficulty completing the assessment, and then conducted a pilot study of both the physical tests and the survey, followed by cognitive interviewing. As a result, the questionnaire administration was modified from paper to video-assisted (pictures and audio) for the children to complete unassisted on a tablet/computer.

Evidence regarding internal structure is supported overall, while internal consistency values show mixed evidence. The motivation and confidence domains are referred to in one paper [26], but these data could not be located in the additional files. However, the Danish study reported the motivation and confidence domains had good reliability (i.e., α = 0.90) [46]. A Chinese validity study also reported that motivation and confidence showed good internal consistency (α = 0.82), but the knowledge and understanding domain did not perform as well in that study (α = 0.52) [47]. The knowledge and understanding domain was assessed for test–retest reliability in a Croatian population of 14- to 18-year-old individuals with mixed results at the item level (total score not reported) [44].

Test–retest reliability for the CAMSA can be considered as partially supported, with excellent values reported for the completion time (ICC = 0.99) but lower values for the skill score reliability (ICC = 0.46 over a 2- to 4-day test interval and ICC = 0.74 over a longer interval) [18]. Published test–retest reliability for other aspects of the CAPL version 2 was not identified.

Evidence for the factorial structure of the domains of motivation and confidence [26], the factor structure of CAPL scores, and the contribution of each domain to the overall physical literacy score has been reported [25]. Subsequent Danish [46] and Chinese studies have reported acceptable model fits and factor loadings [47].

Evidence of relations with other variables for the CAPL version 2 is also generally supported. Convergent validity regarding motor skills has been reported for the CAMSA in Canadian (i.e., higher scores with increasing age and in boys) [18], Greek [49, 50], and Chinese children [47]. The Danish study also examined relations with other variables, with the CAPL version 2 score explaining 31.4% of the variance in physical education teacher ratings [46]. The CAPL was also modified (new protocols for the CAMSA and knowledge and understanding) for use with adolescents (aged 12–16 years) in Grades 7–9 (CAPL 789), with evidence of relations with other variables (i.e., physical competence increased with age and boys performed better on the CAMSA) [48]. In the Croatian sample, the knowledge and understanding domain did not show a difference according to sex [44].

Three articles regarding validity were located for the PPLA-Q [36, 37, 51]. Note that one article appears as a preprint that has not been peer reviewed [36]. Content evidence (literature review, an analysis of the APLF, and expert validation) for the PPLA-Q for adolescents in Grades 10–12 (age 15–18 years) and response process evidence (gathered from interviews with students in the target age group) have been reported [37]. Internal structure was only partially evident in this paper (internal consistency > 0.70 in 10 of 16 scales, although problematic items were modified and tested with further cognitive interviews). In a subsequent paper that aimed to investigate the cognitive module of the PPLA-Q, more evidence concerning internal structure was provided (final model fit the data); however, the test–retest reliability was classified as poor to moderate (data not shown) [51]. Another paper aiming to test construct validity of the psychological and social modules of the PPLA-Q reported evidence of internal structure (as assessed through item dimensionality and convergent and discriminant validity and reliability, i.e., internal consistency > 0.80; test–retest reliability values between 0.66 and 0.92 across the eight scales) [36]. Therefore, the PPLA-Q was considered to meet the criteria for internal structure overall. Evidence of relations with other variables was partially supported for sex, with evidence of differential item and test functioning across sex groups reported in one item but with no significant effect at the test level [36].

The PL-C Quest (two articles located [33, 34]) has evidence of test content (literature review, experts) and response processes (interviews with children) in Australian school children aged 5–12 years [34]. A subsequent paper provided evidence in Australian children aged 7–12 years for internal structure (internal consistency, α = 0.92; test–retest reliability over 16 days, ICC = 0.83; satisfactory fit for a Confirmatory Factor Analysis model with four domains and a higher order factor of physical literacy) [33]. Relations with other variables was partially supported as boys reported higher values in some of the items relating to the physical domain, but not for the movement skill items.

Validity evidence for the APLQ was reported in one article, based on a large sample of Iranian adolescents aged 12–18 years [32]. Test content (literature review, experts), response process (adolescent opinion), and internal structure (internal consistency α = 0.95; test–retest reliability over 11 days, ICC = 0.99; construct validity confirmed three factors: psychological and behavioral, knowledge and awareness, and physical competence and physical activity) were all supported. There was some evidence for relations with other variables (correlated with the PPLI, r = 0.79 for the total score).

Three articles were located for the PFL (two in this section [27, 52] and one described in Sect. 3.8 [45]). Lodewyk and Mandigo [27] published test content evidence (consultative process and expert feedback) for the PFL in Canadian children and adolescents (Grades 4–9, age not reported). Data from a pilot test of a draft of the Grade 10–12 PFL (sample of 642 students) were part of the development process. Feedback resulted in minor modifications to the wording of some items [52].

Some evidence of response processes was also reported. While more than 90% of teachers reported Grade 7–9 students were able to understand the assessments, this percentage was lower for Grade 4 and 5 students (living skills: 71%; active participation: 66%) [27]. Teachers reported that Grade 10–12 students could follow and understand the active participation and living skills items [52].

There was support for the internal structure for the younger students in terms of reliability [internal consistency (> 0.60); inter-rater agreement (0.65–0.82); test–retest reliability (r = 0.72–0.89)] and initial partial support for construct validity (each item within each scale had strong factor loadings [0.53–0.81] and scale correlations within each PFL component had positive significant associations) [27]. For students in grades 10–12, there was also support for reliability [internal consistency (α > 0.83)]. Further, each item (bar two that were later omitted) had at least a satisfactory factor loading (0.30–0.81), and the extracted factor explained a satisfactory proportion of variance [52]. Finally, there was some evidence for relations with other variables, as authors reported predictive consistency between scales and components over the testing period of 2 years for the different year groups [52].

For the PPLMS (one PhD thesis located [38]), evidence of content validity was based on a construct map and literature review. All scale items were aligned with the National Standards and grade level outcomes for K-12 PE published by SHAPE and theories of physical literacy prescribed by Whitehead. Expert feedback was provided by academic staff [38]. There was evidence of internal structure. There was good internal consistency reliability for each subscale and the total 22-item instrument (0.93) and adequate construct validity (an exploratory factor analysis found a 22-item instrument with four subscales and a subsequent confirmatory factor analysis confirmed the first model [χ²/df = 1.487, root mean square error of approximation = 0.067, standardized root mean square residual = 0.062, Tucker Lewis Index = 0.903, Comparative Fit Index = 0.914]). All items loaded greater than 0.40 in the final model [38].
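As a rough illustration of how the fit indices reported for the PPLMS can be read, the sketch below compares them against commonly cited rule-of-thumb cutoffs. The cutoffs are assumptions chosen for illustration (conventions differ between authors, and stricter values such as 0.95 for the Comparative Fit Index are also in use); they are not the criteria applied in the thesis [38]. All names in the code are hypothetical.

```python
# Rule-of-thumb cutoffs (assumed for illustration; not taken from the thesis)
CUTOFFS = {
    "chi2_df": ("max", 3.0),   # chi-square / degrees of freedom
    "rmsea": ("max", 0.08),    # root mean square error of approximation
    "srmr": ("max", 0.08),     # standardized root mean square residual
    "tli": ("min", 0.90),      # Tucker Lewis Index
    "cfi": ("min", 0.90),      # Comparative Fit Index
}

# Values reported for the PPLMS confirmatory factor analysis [38]
pplms_fit = {
    "chi2_df": 1.487,
    "rmsea": 0.067,
    "srmr": 0.062,
    "tli": 0.903,
    "cfi": 0.914,
}


def check_fit(indices, cutoffs=CUTOFFS):
    """Return {index name: True/False} for whether each index meets its cutoff."""
    results = {}
    for name, value in indices.items():
        direction, limit = cutoffs[name]
        results[name] = value <= limit if direction == "max" else value >= limit
    return results


for name, ok in check_fit(pplms_fit).items():
    print(f"{name}: {'meets' if ok else 'does not meet'} the assumed cutoff")
```

Under these lenient cutoffs every index is met, which is consistent with the description of construct validity as adequate. With a stricter 0.95 threshold the Tucker Lewis Index and Comparative Fit Index would fall short.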

3.8 Gaps in Evidence

Only one study published consequences evidence [45]. That study evaluated the sensitivity and specificity of 40 screening tasks (including the PFL and PLAY motor skills, older version from 2013) to determine which tasks could identify children in need of support. For the CAPL (version 1), children with a low or high body mass index z-score and children with a predilection score towards physical activity of less than 31.5/36 points were the most likely to have a CAPL physical literacy score below the 30th percentile [45]. While two of the instruments in this paper were not current versions, these findings are reported here as this was the only evidence located related to this validity aspect. No study reported on using any of the included instruments in children with disability.
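For readers less familiar with screening statistics, the sketch below shows how sensitivity and specificity are derived when a screening task is compared with a reference classification (here, whether a child falls below a chosen physical literacy percentile). The counts are hypothetical and are not taken from the cited study [45].

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from the four cells of a 2x2 table.

    tp: children needing support who were flagged by the screening task
    fn: children needing support who were missed
    tn: children not needing support who were not flagged
    fp: children not needing support who were flagged
    """
    sensitivity = tp / (tp + fn)  # proportion of true cases detected
    specificity = tn / (tn + fp)  # proportion of non-cases correctly cleared
    return sensitivity, specificity


# Hypothetical screening results for 200 children
sens, spec = sensitivity_specificity(tp=40, fn=10, tn=120, fp=30)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

A screening task intended to identify children in need of support would usually prioritize sensitivity, accepting some false positives so that fewer children who need support are missed.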

3.9 Feasibility of the Physical Literacy Assessment Instruments

Only the instruments with more than one aspect of validity evidence were considered for feasibility (i.e., 1. APLQ, 3. CAPL version 2, 5. PFL, 6. PLAQ, 7. PLAYbasic, 8. PLAYcoach, 9. PLAYfun, 10. PLAYself, 11. PL-C Quest, 12. PPLA-Q, 13. PPLI, and 14. PPLMS). Please see Table 6 for information on feasibility. The physical literacy assessment instruments need to be considered separately in terms of their approach. The instruments with mixed assessment approaches that include observation require more time to administer.

Considering just the assessment approaches that use a survey only, the shortest took 8–10 min to complete/administer (PPLI), followed by the PL-C Quest (median 11.5 min), and then the PPLA-Q (27 min). The remainder did not report a completion time (APLQ, PLAQ, PPLMS).

The PLAY instruments seem to take the least time, with the objective components (PLAYfun or PLAYbasic) taking 5–10 min and the seated component (PLAYself) also taking 5–10 min to administer; no administration time is reported for PLAYcoach. However, one study noted that the PLAY tools were time consuming as a whole package [53]. The motor skill component of the CAPL version 2 (the CAMSA) can be completed quickly by a whole class group rather than one-on-one (25 min for 20 children), but it is not clear how long the entire CAPL version 2 takes to complete. One study described the time required to complete the CAPL version 2 as burdensome [23].

A recent paper documents an R analysis package [54] that automates the results process (capl R package [open source], to compute and visualize scores and interpretations from raw data). This could potentially assist feasibility for researchers, but is unlikely to improve the feasibility of administration in school settings by teachers, as specialist knowledge would be required to run the package. The whole PFL assessment is reported to take between two and six lessons to complete for a class group of children, with this being reported as an unreasonable amount of time [27]. These instruments (CAMSA, PFL, PLAYbasic, and PLAYfun) also require space, equipment for the objective components, and a level of training for administering these sections. The CAMSA requires two staff to administer and while the number of staff is not reported for PLAYfun/PLAYbasic and the PFL, it is likely that two staff would also be needed for a class, i.e., one to administer and one to supervise the remaining children.

4 Discussion

This review identified 14 tools, mainly from Canada, designed to measure physical literacy in children and adolescents. Overall, the assessment approaches with more validity evidence (at least three to four validity aspects according to the standards developed by the American Educational Research Association [15]) were the PL-C Quest and PFL for children, the CAPL version 2 for older children/younger adolescents and the APLQ and PPLA-Q for adolescents. Note that for the PPLA-Q, one supporting article did not appear to be peer reviewed [36]. Additionally, whilst the PPLMS did have three to four aspects of validity for middle school-aged children, data were also not peer reviewed [38].

Even though these assessments had more validity and reliability evidence than other assessments, there was little consequences evidence. A recent paper has begun to question the consequential validity of physical literacy assessment instrument use (specifically CAPL version 2) in physical education settings [55]. It is also questionable whether determining a ‘cut-off’ for poor physical literacy is useful within a strengths-based approach to physical literacy. There was also a lack of evidence regarding the sensitivity of instruments to change. This is an important consideration when using instruments to measure change after an intervention. It is important to also note that seeking validity evidence is a journey, and thus some instruments developed more recently have not had the same time frame to develop validity evidence.

A clear gap for all assessments is validity and reliability evidence for instruments when the population includes children with disability. For example, one of the studies on the PFL noted that a gap was understanding students with special needs [52]. Instruments such as the PL-C Quest may offer opportunities here for children with intellectual disability because of the pictorial nature. There is emerging evidence of its utility for this population from a dissertation where it was used with adults with intellectual disability [56]. However, considering the diversity of disability experienced by children, adaptations of physical literacy assessment instruments may need to be tailored to individual disability populations, and this is an area that warrants further investigation.

When considering instrument breadth in terms of domain, the PL-C Quest was designed to map to the APLF and therefore assessed four domains (and 30 elements) of physical literacy. Other instruments that assessed more than ten elements across all four APLF domains were the PFL, PLAQ, PPLA-Q, CAEPL, CAPL version 2, and APLQ. Some instruments added additional domains and/or elements to those included in the APLF, potentially adding to a holistic mapping of physical literacy. Eight assessments incorporated physical activity (including sedentary behavior for two instruments) as an additional domain to the APLF. The position of the expert panel during the initial development of the APLF was that physical activity can be considered a consequence and/or antecedent of physical literacy, but not as an essential domain of physical literacy [57]. What this means in practical terms is that an individual may have high levels of physical literacy but not be active at that present time because of an injury or other personal circumstances, and thus the activity level is not always a reflection of an individual’s physical literacy.

Another aspect of instrument breadth or holism is the range of elements assessed. An additional element in the physical domain (specific sports skills) was added to three instruments (APLQ, CAEPL, PPLMS), with these instruments designed for adolescent populations. The addition of sports may make the instruments more relevant to adolescents, as the context of skill performance is then acknowledged. This supports the psychological theory that as children cognitively develop, their capacity to self-report in the physical domain changes to one that is more differentiated [58]. Other additional elements to the APLF were quite rare, i.e., power and body image were each added to one instrument and body composition was added to one instrument. Power could be a relevant addition to a holistic framework, although this would increase the number of physical elements and this domain already outweighs the other domains. Body image may be an important psychological element to consider including in a holistic physical literacy framework, as a scoping review identified positive body image as linked to physical activity and sport behaviors in adolescents (30% of the study samples) [59]. Including body composition as an element is like including physical activity behavior as a domain, in that it can be perceived as reflecting a potential outcome and/or precipitator of physical literacy rather than necessarily being an indicator of physical literacy.

Survey-based instruments are the most feasible to administer in school settings and they can potentially reach larger populations/samples as a result, with the shortest being the PPLI and PL-C Quest. However, a key reason these instruments are shorter is that they do not provide an objective assessment of movement skills or fitness and therefore do not require more than one teacher to administer. Some instruments included an objective assessment of motor skill (CAPL version 2 and PLAY instruments, PFL), with the CAMSA (part of the CAPL version 2) reasonably efficient to administer as it is done as a class group (although two teachers are needed). Motor skill competence is an important component of physical literacy [1], and objective assessment is very well developed in the motor competence field with a plethora of reliable and valid assessment approaches to choose from [60,61,62]. Similarly, an objective assessment of cardiorespiratory and muscular fitness could be considered important to include. When using motor skill assessments as part of a physical literacy assessment, it is worth considering using a strength-based approach as opposed to deficiency testing.

A broader consideration of feasibility (seven articles located) is whether school personnel have the capacity, interest, and requirement to implement a physical literacy assessment. This discussion goes beyond the choosing of assessments for the school setting [12]. The need for teachers’ assessment of physical literacy in schools has been advocated whilst recognizing that Australian teachers had varying levels of understanding of the concept [63]. Two other Australian studies reported that health and physical education teachers’ understanding and operationalization of physical literacy in practice is limited, despite them largely being supportive of physical literacy [64, 65]. One of these studies recommended greater investment in studies that demonstrate how physical literacy supports the objectives of health and physical education [64].

Not having an explicit link to the curriculum is likely to be a primary barrier to physical literacy assessment in schools [65]. The instruments we have reviewed may have been originally designed to meet the needs of a particular curriculum. However, if such information was not explicitly reported in the articles identified in our search, then it is not reported here. This problem is compounded when teachers’ personal physical capabilities are underdeveloped, as reported in a study of 57 pre-service teachers [66]. These authors contend greater attention to practical and physical learning experiences is required to develop teaching competencies [66]. A potential solution is for physical literacy to be introduced as an additional proposition in the curricula (joining educative outcomes, strengths-based approach, health literacy, critical inquiry, and valuing movement) [67]. However, this contrasts with those who argue for the introduction of physical literacy as a general capability in the health and physical education curriculum, highlighting the ongoing discussion and divergence around the enactment of physical literacy in schools [68].

The strengths of this review include a thorough search, a comprehensive approach to validity assessment, and broad coverage of feasibility. Mapping instruments to the APLF may be seen as a limitation depending on what definition of physical literacy the reader subscribes to, but even so, for those interested in physical literacy assessments that span multiple domains, this process should still have value. It also provides a template approach for others wishing to follow a similar process with other frameworks. It is important for transparency to anchor any physical literacy paper within the definition subscribed to. For example, an earlier paper conducted a conceptual critique of three Canadian physical literacy assessment instruments for school-aged children in terms of how well they related to Whitehead’s conception of physical literacy [53]. Reporting the theoretical standpoint and definition of physical literacy has also been recommended for the reporting of physical literacy interventions [69]. Even though our focus for this review was school-aged children, physical literacy is a lifespan concept, and documenting the validity and reliability of instruments to assess physical literacy in the early years and in adults is also a worthy future endeavor.

5 Conclusions

A total of 14 physical literacy assessment instruments were identified, with at least five (APLQ, PFL, PL-C Quest, PPLA-Q, and PPLMS) having evidence for at least three validity aspects. Three instruments assessed four domains of the APLF and more than half the elements (the PL-C Quest, PFL, and the PLAQ). Survey-based instruments were the most feasible to administer in schools, although a comprehensive assessment may arguably include some objective assessments.

References

Cairney J, Dudley D, Kwan M, Bulten R, Kriellaars D. Physical literacy, physical activity and health: toward an evidence-informed conceptual model. Sports Med. 2019;49(3):371–83. https://doi.org/10.1007/s40279-019-01063-3.

Edwards LC, Bryant AS, Keegan RJ, Morgan K, Jones AM. Definitions, foundations and associations of physical literacy: a systematic review. Sports Med. 2017;47(1):113–26. https://doi.org/10.1007/s40279-016-0560-7.

Jurbala P. What is physical literacy, really? Quest. 2015;67(4):367–83.

Shearer C, Goss H, Edwards L, Keegan RJ, Knowles ZR, Boddy LM, et al. How is physical literacy defined? A contemporary update. J Teach Phys Educ. 2018. https://doi.org/10.1123/jtpe.2018-0136.

Tremblay MS, Costas-Bradstreet C, Barnes JD, Bartlett B, Dampier D, Lalonde C, et al. Canada’s Physical Literacy Consensus Statement: process and outcome. BMC Public Health. 2018;18(Suppl 2):1034. https://doi.org/10.1186/s12889-018-5903-x.

Keegan RJ, Barnett LM, Dudley DA. Physical literacy: informing a definition and standard for Australia. Canberra: Australian Government, Australian Sports Commission; 2017.

Sport Australia. Australian physical literacy framework: Australian Government, 2020. https://www.sportaus.gov.au/__data/assets/pdf_file/0019/710173/35455_Physical-Literacy-Framework_access.pdf. [Accessed 15 Apr 2020].

Edwards LC, Bryant AS, Keegan RJ, Morgan K, Cooper S-M, Jones AM. ‘Measuring’ physical literacy and related constructs: a systematic review of empirical findings. Sports Med. 2018;48(3):659–82. https://doi.org/10.1007/s40279-017-0817-9.

Kaioglou V, Venetsanou F. How can we assess physical literacy in gymnastics? A critical review of physical literacy assessment tools. Sci Gymnast J. 2020;12(1):27–47.

Shearer C, Goss HR, Boddy LM, Knowles ZR, Durden-Myers EJ, Foweather L. Assessments related to the physical, affective and cognitive domains of physical literacy amongst children aged 7–11.9 years: a systematic review. Sports Med Open. 2021;7(1):37. https://doi.org/10.1186/s40798-021-00324-8.

Jean de Dieu H, Zhou K. Physical literacy assessment tools: a systematic literature review for why, what, who, and how. Int J Environ Res Public Health. 2021;18(15):7954. https://doi.org/10.3390/ijerph18157954.

Barnett LM, Dudley DA, Telford RD, Lubans DR, Bryant AS, Roberts WM, et al. Guidelines for the selection of physical literacy measures in physical education in Australia. J Teach Phys Educ. 2019;38(2):119–25. https://doi.org/10.1123/jtpe.2018-0219.

Martins J, Onofre M, Mota J, Murphy C, Repond R-M, Vost H, et al. International approaches to the definition, philosophical tenets, and core elements of physical literacy: a scoping review. Prospects. 2021;50(1):13–30. https://doi.org/10.1007/s11125-020-09466-1.

Essiet IA, Lander NJ, Salmon J, Duncan MJ, Eyre EL, Ma J, et al. A systematic review of tools designed for teacher proxy-report of children’s physical literacy or constituting elements. Int J Behav Nutr Phys Act. 2021;18(1):1–48. https://doi.org/10.1186/s12966-021-01162-3.

American Educational Research Association, American Psychological Association, National Council on Measurement in Education, Joint Committee on Standards for Educational and Psychological Testing. Standards for educational and psychological testing. Washington: American Educational Research Association; 2014.

Longmuir PE, Boyer C, Lloyd M, Yang Y, Boiarskaia E, Zhu W, et al. The Canadian Assessment of Physical Literacy: methods for children in grades 4 to 6 (8 to 12 years). BMC Public Health. 2015;15(1):767. https://doi.org/10.1186/s12889-015-2106-6.

Francis CE, Longmuir PE, Boyer C, Andersen LB, Barnes JD, Boiarskaia E, et al. The Canadian Assessment of Physical Literacy: development of a model of children’s capacity for a healthy, active lifestyle through a Delphi process. J Phys Act Health. 2016;13(2):214–22. https://doi.org/10.1123/jpah.2014-0597.

Longmuir PE, Boyer C, Lloyd M, Borghese MM, Knight E, Saunders TJ, et al. Canadian Agility and Movement Skill Assessment (CAMSA): validity, objectivity, and reliability evidence for children 8–12 years of age. J Sport Health Sci. 2017;6(2):231–40. https://doi.org/10.1016/j.jshs.2015.11.004.

Liu Y, Chen S. Physical literacy in children and adolescents: definitions, assessments, and interventions. Eur Phys Educ Rev. 2020;27(1):96–112. https://doi.org/10.1177/1356336X20925502.

Sum RKW, Cheng C-F, Wallhead T, Kuo C-C, Wang F-J, Choi S-M. Perceived physical literacy instrument for adolescents: a further validation of PPLI. J Exerc Sci Fit. 2018;16(1):26–31. https://doi.org/10.1016/j.jesf.2018.03.002.

Sum RKW, Ha ASC, Cheng CF, Chung PK, Yiu KTC, Kuo CC, et al. Perceived physical literacy instrument. APA PsycTests. 2016. https://doi.org/10.1037/t63437-000.

Tremblay MS, Longmuir PE. Conceptual critique of Canada’s physical literacy assessment instruments also misses the mark. Meas Phys Educ Exerc Sci. 2017;21(3):174–6. https://doi.org/10.1080/1091367X.2017.1333002.

Longmuir PE, Gunnell KE, Barnes JD, Belanger K, Leduc G, Woodruff SJ, et al. Canadian Assessment of Physical Literacy Second Edition: a streamlined assessment of the capacity for physical activity among children 8 to 12 years of age. BMC Public Health. 2018;18(2):1047. https://doi.org/10.1186/s12889-018-5902-y.

Longmuir PE, Woodruff SJ, Boyer C, Lloyd M, Tremblay MS. Physical Literacy Knowledge Questionnaire: feasibility, validity, and reliability for Canadian children aged 8 to 12 years. BMC Public Health. 2018;18(2):1035. https://doi.org/10.1186/s12889-018-5890-y.

Gunnell KE, Longmuir PE, Barnes JD, Belanger K, Tremblay MS. Refining the Canadian Assessment of Physical Literacy based on theory and factor analyses. BMC Public Health. 2018;18(2):1044. https://doi.org/10.1186/s12889-018-5899-2.

Gunnell KE, Longmuir PE, Woodruff SJ, Barnes JD, Belanger K, Tremblay MS. Revising the motivation and confidence domain of the Canadian Assessment of Physical Literacy. BMC Public Health. 2018;18(2):1045. https://doi.org/10.1186/s12889-018-5900-0.

Lodewyk KR, Mandigo JL. Early validation evidence of a Canadian practitioner-based assessment of physical literacy in physical education: passport for Life. Phys Educ. 2017;74(3):441. https://doi.org/10.18666/TPE-2019-V76-I3-8850.

Caldwell HA, Di Cristofaro NA, Cairney J, Bray SR, Timmons BW. Measurement properties of the Physical Literacy Assessment for Youth (PLAY) tools. Appl Physiol Nutr Metab. 2021;99(999):1–8. https://doi.org/10.1139/apnm-2020-0648.

Dudley DA. A conceptual model of observed physical literacy. Phys Educ. 2015. https://doi.org/10.18666/TPE-2015-V72-I5-6020.

Chen S-T, Tang Y, Chen P-J, Liu Y. The Development of Chinese Assessment and Evaluation of Physical Literacy (CAEPL): a study using Delphi method. Int J Environ Res Public Health. 2020;17(8):2720. https://doi.org/10.3390/ijerph17082720.

Young L, O’Connor J, Alfrey L, Penney D. Assessing physical literacy in health and physical education. Curric Stud Health Phys Educ. 2021;12(2):156–79. https://doi.org/10.1080/25742981.2020.1810582.

Mohammadzadeh M, Sheikh M, Houminiyan Sharif Abadi D, Bagherzadeh F, Kazemnejad A. Design and psychometrics evaluation of Adolescent Physical Literacy Questionnaire (APLQ). Sport Sci Health. 2022;18(2):397–405. https://doi.org/10.1007/s11332-021-00818-8.

Barnett LM, Mazzoli E, Bowe SJ, Lander N, Salmon J. Reliability and validity of the PL-C Quest, a scale designed to assess children’s self-reported physical literacy. Psychol Sport Exerc. 2022. https://doi.org/10.1016/j.psychsport.2022.102164.

Barnett LM, Mazzoli E, Hawkins M, Lander N, Lubans DR, Caldwell S, et al. Development of a self-report scale to assess children’s perceived physical literacy. Phys Educ Sport Pedagogy. 2022;27(1):91–116. https://doi.org/10.1080/17408989.2020.1849596.

YongKang W, QianQian F. The Chinese assessment of physical literacy: based on grounded theory paradigm for children in grades 3–6. PLoS One. 2022. https://doi.org/10.1371/journal.pone.0262976.

Mota J, Martins J, Onofre M. Portuguese Physical Literacy Assessment Questionnaire (PPLA-Q) for adolescents (15–18 years) from grades 10–12: validity and reliability evidence of the psychological and social modules using Mokken Scale analysis. 2022. https://doi.org/10.21203/rs.3.rs-1458709/v3.

Mota J, Martins J, Onofre M. Portuguese Physical Literacy Assessment Questionnaire (PPLA-Q) for adolescents (15–18 years) from grades 10–12: development, content validation and pilot testing. BMC Public Health. 2021;21(1):1–22. https://doi.org/10.1186/s12889-021-12230-5.

Dong X. Measuring middle-school students’ physical literacy: instrument development: Barry University; 2021. ProQuest Dissertations Publishing. Available from: https://www.proquest.com/openview/6a4e01c27efe1b37104e7b3955feae2b/1?pq-origsite=gscholar&cbl=18750&diss=y. [Accessed 9 Jun 2023].

Green NR, Roberts WM, Sheehan D, Keegan RJ. Charting physical literacy journeys within physical education settings. J Teach Phys Educ. 2018;37(3):272–9. https://doi.org/10.1123/jtpe.2018-0129.

Yılmaz A, Kabak S. Perceived Physical Literacy Scale for Adolescents (PPLSA): validity and reliability study. Int J Literacy Educ. 2021;9(1):159–71. https://doi.org/10.7575/aiac.ijels.

Cairney J, Veldhuizen S, Graham JD, Rodriguez C, Bedard C, Bremer E, et al. A construct validation study of PLAYfun. Med Sci Sports Exerc. 2018;50(4):855–62. https://doi.org/10.1249/mss.0000000000001494.

Stearns JA, Wohlers B, McHugh T-LF, Kuzik N, Spence JC. Reliability and validity of the PLAYfun tool with children and youth in northern Canada. Meas Phys Educ Exerc Sci. 2019;23(1):47–57. https://doi.org/10.1080/1091367X.2018.1500368.

Jefferies P, Bremer E, Kozera T, Cairney J, Kriellaars D. Psychometric properties and construct validity of PLAYself: a self-reported measure of physical literacy for children and youth. Appl Physiol Nutr Metab. 2020. https://doi.org/10.1139/apnm-2020-0410.

Gilic B, Malovic P, Sunda M, Maras N, Zenic N. Adolescents with higher cognitive and affective domains of physical literacy possess better physical fitness: the importance of developing the concept of physical literacy in high schools. Children. 2022;9(6):796. https://doi.org/10.3390/children9060796.

Longmuir PE, Prikryl E, Rotz HL, Boyer C, Alpous A. Predilection for physical activity and body mass index z-score can quickly identify children needing support for a physically active lifestyle. Appl Physiol Nutr Metab. 2021;46(10):1265–72. https://doi.org/10.1139/apnm-2020-1103.

Elsborg P, Melby PS, Kurtzhals M, Tremblay MS, Nielsen G, Bentsen P. Translation and validation of the Canadian Assessment of Physical Literacy-2 in a Danish sample. BMC Public Health. 2021;21(1):1–9. https://doi.org/10.1186/s12889-021-12301-7.

Li MH, Sum RKW, Tremblay M, Sit CHP, Ha ASC, Wong SHS. Cross-validation of the Canadian Assessment of Physical Literacy second edition (CAPL-2): the case of a Chinese population. J Sports Sci. 2020;38(24):2850–7. https://doi.org/10.1080/02640414.2020.1803016.

Blanchard J, Van Wyk N, Ertel E, Alpous A, Longmuir PE. Canadian Assessment of Physical Literacy in grades 7–9 (12–16 years): preliminary validity and descriptive results. J Sports Sci. 2020;38(2):177–86. https://doi.org/10.1080/02640414.2019.1689076.

Dania A, Kaioglou V, Venetsanou F. Validation of the Canadian Assessment of Physical Literacy for Greek children: understanding assessment in response to culture and pedagogy. Eur Phys Educ Rev. 2020;26(4):903–19. https://doi.org/10.1177/1356336X20904079.

Kaioglou V, Dania A, Venetsanou F. How physically literate are children today? A baseline assessment of Greek children 8–12 years of age. J Sports Sci. 2020;38(7):741–50. https://doi.org/10.1080/02640414.2020.1730024.

Mota J, Martins J, Onofre M. Portuguese Physical Literacy Assessment Questionnaire (PPLA-Q) for adolescents (15–18 years) from grades 10–12: item response theory analysis of the content knowledge questionnaire. 2022. https://doi.org/10.21203/rs.3.rs-1458688/v2.

Lodewyk KR. Early validation evidence of the Canadian practitioner-based assessment of physical literacy in secondary physical education. Phys Educ. 2019;76(3):634–60. https://doi.org/10.18666/TPE-2019-V76-I3-8850.

Robinson DB, Randall L. Marking physical literacy or missing the mark on physical literacy? A conceptual critique of Canada’s physical literacy assessment instruments. Meas Phys Educ Exerc Sci. 2017;21(1):40–55.

Barnes JD, Guerrero MD. An R package for computing Canadian Assessment of Physical Literacy (CAPL) scores and interpretations from raw data. PLoS ONE. 2021;16(2):e0243841. https://doi.org/10.1371/journal.pone.0243841.

Dudley D, Cairney J. How the lack of content validity in the Canadian Assessment of Physical Literacy is undermining quality physical education. J Teach Phys Educ. 2022;1(aop):1–8. https://doi.org/10.1123/jtpe.2022-0063.

St John LR. From exercise to physical literacy measurement in individuals with intellectual disabilities. Canada: University of Toronto; 2022. https://tspace.library.utoronto.ca/handle/1807/125684. [Accessed 9 Jun 2023].

Keegan RJ, Barnett LM, Dudley DA, Telford RD, Lubans DR, Bryant AS, et al. Defining physical literacy for application in Australia: a modified Delphi method. J Teach Phys Educ. 2019;38(2):105–18. https://doi.org/10.1123/jtpe.2018-0264.

Dreiskaemper D, Utesch T, Tietjens M. The perceived motor competence questionnaire in childhood (PMC-C). J Mot Learn Dev. 2018;6(s2):S264–80. https://doi.org/10.1123/jmld.2016-0080.

Sabiston C, Pila E, Vani M, Thogersen-Ntoumani C. Body image, physical activity, and sport: a scoping review. Psychol Sport Exerc. 2019;42:48–57. https://doi.org/10.1016/j.psychsport.2018.12.010.

Barnett LM, Stodden DF, Hulteen RM, Sacko RS. 19: motor competency assessment. In: Brusseau TA, editor. The Routledge handbook of pediatric physical activity. Routledge; 2020. p. 384–408.

Hulteen RM, Barnett LM, True L, Lander NJ, del Pozo Cruz B, Lonsdale C. Validity and reliability evidence for motor competence assessments in children and adolescents: a systematic review. J Sports Sci. 2020. https://doi.org/10.1080/02640414.2020.1756674.

Scheuer C, Herrmann C, Bund A. Motor tests for primary school aged children: a systematic review. J Sports Sci. 2019;37(10):1097–112. https://doi.org/10.1080/02640414.2018.1544535.

Essiet IA, Salmon J, Lander NJ, Duncan MJ, Eyre ELJ, Barnett LM. Rationalizing teacher roles in developing and assessing physical literacy in children. Prospects. 2020. https://doi.org/10.1007/s11125-020-09489-8.

Harvey S, Pill S. Exploring physical education teachers ‘everyday understandings’ of physical literacy. Sport Educ Soc. 2018. https://doi.org/10.1080/13573322.2018.1491002.

Essiet I, Lander NJ, Warner E, Eyre ELJ, Duncan MJ, Barnett LM. Primary school teachers’ perceptions of physical literacy assessment: a mixed-methods study. J Teach Phys Educ. 2022. https://doi.org/10.1080/17408989.2022.2028760.

Dinham J, Williams P. Developing children’s physical literacy: how well prepared are prospective teachers? Aust J Teach Educ. 2019;44(6):53–68. https://doi.org/10.14221/ajte.2018v44n6.4.

Brown TD, Whittle RJ. Physical literacy: a sixth proposition in the Australian/Victorian curriculum: health and physical education? Curric Stud Health Phys Educ. 2021;12(2):180–96. https://doi.org/10.1080/25742981.2021.1872036.

Macdonald D, Enright E, McCuaig L. Re-visioning the Australian curriculum for health and physical education. In: Lawson HA, editor. Redesigning physical education. London: Routledge; 2018. p. 196–209.

Carl J, Barratt J, Arbour-Nicitopoulos KP, Barnett LM, Dudley DA, Holler P, et al. Development, explanation, and presentation of the Physical Literacy Interventions Reporting Template (PLIRT). Int J Behav Nutr Phys Act. 2023;20(1):21.

Acknowledgements

We acknowledge the Deakin University library team of Fiona Russell, Sarah Brunton, Rachel West and Blair Kelly for conducting the literature search.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Funding

This review was part of commissioned work for the Australian Sports Commission. LA is supported by an Australian Research Council Discovery Early Career Researcher Award (DE220100847). JS is supported by a Leadership Level 2 Fellowship, National Health and Medical Research Council (1026216, 1176885).

Conflict of interest

This review was supported by funding from the Australian Sports Commission. The Australian Sports Commission funded development of the Australian Physical Literacy Framework and the Physical Literacy for Children Questionnaire. Three authors of this review (LMB, DD, RK) were involved in developing the Australian Physical Literacy Framework, and four authors of this review (LMB, RK, JS, DD) were involved in developing the Physical Literacy for Children Questionnaire.

Ethics Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Availability of Data and Material

All data generated or analyzed during this study are included in this published article (and its supplementary information files).

Code Availability

Not applicable.

Authors’ Contributions

LMB, DD, and RK conceived the idea for this review with input from NR, LA, and JS. University librarians conducted the literature search with author input. LMB, AJ, and KWM selected the articles for inclusion in the review. LMB conducted the mapping to the APLF and compiled the validity and feasibility results. DD, AJ, and RK reviewed the mapping to the APLF and the validity aspects. LMB extracted data and wrote the first draft of the manuscript, except for the broader feasibility section, for which AJ wrote the first draft. All authors revised the original manuscript, and all authors read and approved the final version.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Barnett, L.M., Jerebine, A., Keegan, R. et al. Validity, Reliability, and Feasibility of Physical Literacy Assessments Designed for School Children: A Systematic Review. Sports Med 53, 1905–1929 (2023). https://doi.org/10.1007/s40279-023-01867-4

DOI: https://doi.org/10.1007/s40279-023-01867-4