Abstract

Introduction

The pace of development and the growing complexity of digital health interventions (DHIs) in recent years have, to some extent, outpaced the methodological development in economic evaluation and costing. In particular, the choice of cost components included in the intervention or program costs of DHIs has received scant attention. The aim of this study was to build a literature-informed checklist of program cost components of DHIs. The checklist was then tested by applying it to an empirical case, Mamma Mia, a DHI developed to prevent perinatal depression.

Method

A scoping review with a structured literature search identified peer-reviewed literature from 2010 to 2022 that offers guidance on program costs of DHIs. Relevant guidance was summarized and extracted elements were organized into categories of main cost components and their associated activities following the standard three-step approach, that is, activities, resource use and unit costs.

Results

Of the 3448 records reviewed, 12 studies met the criteria for data extraction. The main cost categories identified were development, research, maintenance, implementation and health personnel involvement (HPI). Costs are largely considered to be context-specific; they may decrease as the DHI matures and vary with the number of users.

The five categories and their associated activities constitute the checklist. The checklist was applied to estimate program costs per user for Mamma Mia Self-Guided and Blended, the latter including additional guidance from public health nurses during standard maternal check-ups. Excluding research, the program cost per mother was more than twice as high for Blended as for Self-Guided (€140.5 versus €56.6, 2022 Euros) owing to higher implementation and HPI costs. Including research increased the program costs to €190.8 and €106.9, respectively. One-way sensitivity analyses showed that the estimates were sensitive to changes in the number of users, the lifespan of the app, salaries and the license fee.

Conclusion

The checklist can help increase transparency of cost calculation and improve future comparison across studies.

Plain Language Summary

Estimating program or intervention costs of digital health interventions (DHIs) can be challenging without a checklist. We reviewed the scientific literature to identify the key cost categories of DHIs: development, research, maintenance, implementation and health personnel involvement. We also summarized relevant information on resource use and unit costs for each of these categories. Applying this checklist to Mamma Mia, a DHI aimed at preventing perinatal depression, we find that, including research costs, the total cost per user is €106.9 for Mamma Mia Self-Guided and €190.8 for Mamma Mia Blended. Our checklist enhances the visibility of DHI cost components and can help analysts estimate costs more accurately for economic evaluations.


Comparing costs in published economic evaluations of digital health interventions is challenging due to limited transparency in the program costs reported.

We have built a literature-informed checklist that categorizes program cost components of digital health interventions according to the standard three-step model: activities, resource use and unit costs.

The checklist proved useful in estimating costs of a specific DHI, Mamma Mia, and has the potential to enhance transparency in future economic evaluations.

1 Introduction

Economic evaluations of digital health interventions (DHIs) have increased in recent decades following the widespread development and uptake of DHIs. It is challenging to apply the standard methods of cost and effect estimation to DHIs, since these technologies tend to develop both iteratively and quickly, may have multiple intended users and may have a mixed set of potential control conditions [1, 2]. In the published literature, the choice of cost components included in DHI intervention or program costs has received inadequate attention [3]. Whether research and development costs should be included is a matter of debate [4]. Furthermore, which costs should actually be included is often unclear [3, 5]. This makes it difficult to compare the cost effectiveness of DHIs, pool estimates from different sources, or transfer estimates to other contexts [5, 6]. Gomes and colleagues have provided methodological recommendations for economic evaluation of DHIs, but comprehensive guidance on program costs is lacking [7].

DHIs have evolved from simple video or telephone consultations to programs that can monitor health in real time, motivate behavior change, assist health decision making and manage information sharing between several agents [2]. DHIs can potentially increase access to healthcare at low cost, which is invaluable given the global challenge of inequities in healthcare [8]. With the advent of artificial intelligence and self-guided technologies, the role of DHIs in assisting health systems is likely only to increase [9, 10]. Before implementation, decision makers need evidence-based assessments that comply with the established principles of economic evaluation, in which program costs play a crucial role [11, 12].

The standard practice in health economic evaluations is to quantify all relevant costs and consequences of the choice between options. In this study, we focused specifically on intervention costs, or program costs. In line with Drummond and colleagues (2015), we defined program costs as the total cost of a program, that is, the “cost of producing a particular quantity of output” [13]. Program costs comprise both fixed and variable costs. We distinguished program costs from other economic considerations, such as the cost consequences of a preventive intervention, the societal or productivity gains of an intervention, or the costs accrued due to life-years gained. Program costs are concerned only with the production of a unit of good or service [13].
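To make the distinction between fixed and variable costs concrete, the following minimal Python sketch computes the total and average program cost for a given number of users. It assumes a linear variable cost per user; the function names and all figures are hypothetical, chosen only to illustrate the arithmetic rather than to describe any particular DHI.

```python
def total_program_cost(fixed: float, variable_per_user: float, users: int) -> float:
    """Total cost of serving `users` users: fixed costs plus variable costs."""
    return fixed + variable_per_user * users


def average_cost_per_user(fixed: float, variable_per_user: float, users: int) -> float:
    """Average (per-user) program cost; undefined for zero users."""
    if users <= 0:
        raise ValueError("users must be positive")
    return total_program_cost(fixed, variable_per_user, users) / users


if __name__ == "__main__":
    # Hypothetical DHI: EUR 100,000 fixed per year and EUR 5 variable cost per user.
    for n in (1_000, 10_000):
        print(f"{n:>6} users: EUR {average_cost_per_user(100_000, 5.0, n):.2f} per user")
```

Because the fixed component is spread over more users, the average cost falls as uptake grows; in practice, variable costs of DHIs are rarely this tidy.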

In many cases, program costs are assumed to be static [13], whereas for DHIs, program costs may be dynamic. For example, DHIs are often developed iteratively, with frequent updates to adapt to users’ wants and needs. The costs of scaling up to new users may be low, but total costs may increase as more stakeholders are involved [14]. This does not mean that methods for economic evaluation must be altered for DHIs; after all, DHIs compete for the same pool of resources as other technologies [14]. However, the unique cost structure of DHIs requires further attention and methodological development.

Recent literature reviews have shed light on the heterogeneity in program costs of DHIs reported in published economic evaluations. Jacobs and Barnett highlighted inconsistencies in cost reporting and underscored that estimating these costs is a particular challenge for telemedicine and new technologies [15]. Mitchell and colleagues reported difficulty in comparing the cost effectiveness of various interventions, particularly owing to how program costs were reported [3]. Kidholm and Kristensen made a similar observation and summarized the variability in program costs in tabular form [5]. Although these studies also offered some solutions (for example, Mitchell and colleagues (2021) proposed an overall cost components checklist), the question of program costs of DHIs has not been systematically explored in the scientific literature thus far [3].

Existing methodological guidance on costing does not adequately capture the complexity of program cost estimation for DHIs. For example, the ISPOR best practices guideline for estimating drug costs in economic evaluations recommends estimating marginal costs based on the drug’s negotiated price [16]. In the case of DHIs, there is often no negotiated price, and the price, or license fee, may not capture all aspects of the program costs. Similarly, frameworks developed for complex interventions have not sufficiently addressed program cost estimation [17,18,19,20]. Methodological guidance is emerging for specific cost components, such as implementation costs of public health programs [21, 22], but such guidance cannot account for the full breadth of program costs of DHIs. Therefore, the existing scientific literature is insufficient to help standardize the estimation and reporting of program costs of DHIs.

The specific challenges of DHIs are addressed by regulatory bodies only to a small extent [23]. Leading guidelines for DHIs, such as those developed by the National Institute for Health and Care Excellence (NICE), UK [11, 24], the Food and Drug Administration, USA [25] and the Medical Device Coordination Group of the European Commission [26], focus on classifying DHIs by risk category and offer methodological recommendations for measuring clinical outcomes and effectiveness. The questions of cost structure, criteria for cost inclusion and methods for program cost estimation have not been specifically addressed [27].

The aim of this study is to build a checklist of program cost components of DHIs that is specific to health economic evaluations. The checklist is founded on the standard three-step approach to costing: identify activities, measure resource use and value that resource use with appropriate unit costs. A key challenge is deciding which activities to include. The basis for the checklist is a scoping review: the data extracted from the review inform the checklist’s main cost components and their associated activities and resource use. Methodological challenges pertinent to program cost estimation were charted and analyzed. The scoping review also shed light on factors and assumptions that must be considered as part of the cost estimation. To test the application of the checklist, we used Mamma Mia as an example case. Mamma Mia is a DHI developed to prevent perinatal depression and increase subjective well-being. The paper is organized in two parts: the scoping review and the resulting checklist, followed by the empirical case study.
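The three-step approach can be expressed as a simple data structure in which each activity carries its measured resource use and unit cost, and costs are then aggregated by category. The sketch below is illustrative only: the `Activity` class, the category labels and all quantities and prices are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """One line of a cost checklist."""
    category: str       # cost category, e.g. "implementation"
    name: str           # step 1: the identified activity
    quantity: float     # step 2: measured resource use (e.g. hours)
    unit_cost: float    # step 3: value per unit of resource (e.g. EUR per hour)

    @property
    def cost(self) -> float:
        return self.quantity * self.unit_cost


def cost_by_category(activities: list[Activity]) -> dict[str, float]:
    """Sum activity costs within each cost category."""
    totals: dict[str, float] = {}
    for activity in activities:
        totals[activity.category] = totals.get(activity.category, 0.0) + activity.cost
    return totals


if __name__ == "__main__":
    # Hypothetical activities, quantities and prices.
    items = [
        Activity("implementation", "train health personnel (hours)", 40, 55.0),
        Activity("implementation", "promote the DHI (hours)", 20, 45.0),
        Activity("maintenance", "hosting (months)", 12, 300.0),
    ]
    print(cost_by_category(items))
```

Keeping activities, resource use and unit costs as separate fields makes it easy to report each step transparently and to update one input without re-deriving the others.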

2 Method

2.1 Scoping Review

We performed a scoping review to identify and analyze the literature on the costing of DHIs. The detailed protocol for our scoping review is available elsewhere [28]. The review was performed in accordance with the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) [29].

The primary inclusion criteria were reviews (systematic, scoping or other) and studies that examined and offered guidance on program costs of DHIs. We defined DHIs in line with the NICE criteria for technologies suitable for the Evidence Standards Framework, that is, smartphone applications, standalone software, digital diagnostic tools and analytical programs for medical devices [24]. Telemedicine, telehealth, mHealth and eHealth were also included. Studies that focused on the effectiveness or cost effectiveness of DHIs and did not discuss program costs of DHIs were excluded. The review included qualitative as well as quantitative studies.

To identify relevant studies, a structured literature search was conducted in MEDLINE, Embase, Web of Science and Google Scholar in January 2023. The two main concepts, ‘digital health interventions’ and ‘cost estimation’, were searched as thesaurus or free-text terms in the title, abstract and keyword fields. We included records from January 2010 onwards. This start date was a pragmatic choice: the term e-Health first appeared in 2000 [30], mHealth first appeared in 2002 [31], and research in mHealth technologies experienced an upsurge between 2007 and 2008 [31].

The resulting titles and abstracts were compiled in an EndNote library and, after duplicate removal, uploaded to PICO Portal [32], an artificial intelligence (AI)-based software for systematic reviews. The first author screened all abstracts, whereas the last author screened the articles identified as relevant by the first author. Articles identified as relevant by both authors were retrieved for full-text review and data extraction. We used the ‘charting approach’ to summarize each study’s findings [33]. The fields of data extracted from the selected papers were:

1. Study title
2. Author(s)
3. Year
4. Study type: review or guidance
5. Did the study describe or use a guideline or framework?
6. What are the program cost categories of DHIs considered?
7. Which resources and activities are associated with program cost categories?
8. Are methodological approaches to estimate program costs described?
9. In order to report costs, which specific challenges are described?
10. Which specific factors influence program costs?
11. What are the study’s overall methodological recommendations?
12. Are there any specific recommendations regarding which cost categories to include (or exclude) in an economic evaluation?

2.2 Analytical Approach

After charting the studies, the authors critically appraised the extracted data to identify relevant program cost categories. Subsequently, the information recorded in the review chart was reorganized to complete the checklist of program cost components, that is, a tabular delineation of DHI program costs with corresponding comments on activities, resource use and unit costs. The checklist is primarily derived from the literature review, but relevant additional items were suggested by the authors and finalized through discussion.

The checklist was then applied to Mamma Mia to estimate the DHI’s program costs per user. We performed several one-way deterministic sensitivity analyses to explore how varying selected cost inputs influenced the program cost components of the checklist. Figure 1 summarizes our analytical approach.

3 Results

3.1 Scoping Review

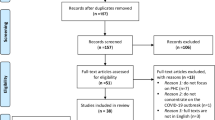

The structured search yielded 6614 records, which were reduced to 3448 after duplicate removal in EndNote v20.4.1 [34]. PICO Portal [32] removed an additional 53 duplicate records. The first author screened 3395 records and shortlisted 176 abstracts, which were then reviewed by the last author. After a further 135 abstracts were excluded, both authors concurrently reviewed the full text of 41 studies. Of these, 12 were identified as relevant and were included in the data extraction. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) diagram (Fig. 2) details the screening process for the scoping review [35]. The data extraction results from the charting process are summarized in Table 1. The full data extraction chart is provided in the electronic supplementary material (ESM).
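The record counts reported above can be checked for internal consistency with a few lines of Python. The dictionary keys are our own hypothetical labels for the screening stages; the numbers are those stated in the text.

```python
# Record counts at each screening stage, as reported in the text.
FLOW = {
    "identified": 6614,
    "after_endnote_dedup": 3448,
    "pico_portal_duplicates": 53,
    "screened": 3395,
    "shortlisted": 176,
    "excluded_on_second_review": 135,
    "full_text": 41,
    "included": 12,
}


def check_flow(flow: dict[str, int]) -> list[str]:
    """Return a list of inconsistencies between successive screening stages."""
    problems: list[str] = []
    if flow["after_endnote_dedup"] - flow["pico_portal_duplicates"] != flow["screened"]:
        problems.append("screened count does not match duplicate removal")
    if flow["shortlisted"] - flow["excluded_on_second_review"] != flow["full_text"]:
        problems.append("full-text count does not match abstract exclusions")
    if not flow["included"] <= flow["full_text"] <= flow["shortlisted"]:
        problems.append("stage counts are not non-increasing")
    return problems


if __name__ == "__main__":
    print("duplicates removed in EndNote:", FLOW["identified"] - FLOW["after_endnote_dedup"])
    print("inconsistencies:", check_flow(FLOW) or "none")
```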

Among the 12 studies included in the data extraction, there were three systematic reviews [36,37,38], two scoping reviews [39, 40], one review [41] and six guidance or methodology studies [7, 15, 42,43,44,45]. While these studies discussed program costs of DHIs from different angles, they did not focus exclusively on program costs. Five studies referred to existing guidelines, but none of these was specific to program costs [15, 36,37,38, 44].

The studies examined program costs from various perspectives. Some used the term development costs explicitly [7, 44, 45], whereas others used synonymous concepts such as capital [37, 43], technology [38], equipment [15, 39, 41, 42] or production-related expenses [36]. Certain studies touched upon the costs of implementation [15, 45], user engagement [7, 36, 40] and dissemination [37]. Costs of maintenance [7, 37,38,39, 44], hosting [37] and updates [45] were also taken into account. The costs of health personnel training and support were a recurring theme in the literature [15, 37, 41,42,43]. While the included studies did not specifically mention research costs, they underscored the importance of evidence generation for measuring effectiveness.

The studies highlighted that DHIs evolve quickly [7, 15, 41, 44, 45] and their program costs are often context specific [36, 39]. This makes it difficult to pool estimates from different sources [37]. Two systematic reviews reported a lack of methodological guidance on program cost estimation and heterogeneity in cost components included in the published estimates [36, 37].

3.2 Literature-Informed Checklist of Program Cost Components

Following the scoping review, we developed a checklist that categorizes the program costs of DHIs and lists common activities associated with each cost category (see Table 2). The checklist also details resource use and types of unit costs that can inform the cost estimation. We have commented on the methodological considerations and relevant cost analyses for each cost category.

The main cost categories identified through the full-text review were development, research, maintenance, implementation and health personnel involvement. Development comprises the conception, planning, content and service design, software development and testing of the intervention [7, 15, 37, 38, 40, 41, 43,44,45]. Research entails evidence generation on usability, patient satisfaction, clinical efficacy and cost effectiveness, including feasibility studies and pilot testing, using trial designs such as randomized controlled trials (RCTs) or natural experiments [15, 40, 41, 44]. The technical maintenance of a DHI involves data management, privacy protection, hosting, updating, troubleshooting and help desks [7, 15, 37, 39, 40, 44]. Implementation encompasses a large set of activities to promote the DHI and align it with an organization’s workflow, patient pathway, legal requirements and technical system [7, 36,37,38,39,40,41,42, 44, 45], for both the healthcare provider and the user. Some DHIs also require health personnel involvement as part of service delivery [7, 15, 38, 41,42,43,44], in the form of guidance, consultation, examination, diagnosis or screening performed through or alongside the DHI.
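For analysts who keep cost data in code, the five categories can be encoded as a simple lookup and used to flag which categories an evaluation has costed, deliberately excluded or left unreported. This is a hypothetical sketch: the activity lists paraphrase the text above, and the three status labels are our own illustration of how the checklist might support transparent reporting.

```python
from typing import Optional

# Main cost categories and example activities, paraphrasing the text.
CHECKLIST: dict[str, list[str]] = {
    "development": ["conception", "planning", "content and service design",
                    "software development", "testing"],
    "research": ["usability", "patient satisfaction", "clinical efficacy",
                 "cost effectiveness", "feasibility studies", "pilot testing"],
    "maintenance": ["data management", "privacy protection", "hosting",
                    "updating", "troubleshooting", "help desk"],
    "implementation": ["promotion", "workflow alignment", "patient pathway alignment",
                       "legal requirements", "technical system integration"],
    "health personnel involvement": ["guidance", "consultation", "examination",
                                     "diagnosis", "screening"],
}


def coverage(reported: dict[str, Optional[float]]) -> dict[str, str]:
    """Classify each checklist category for a given evaluation.

    `reported` maps a category to its estimated cost, or to None when the
    category was deliberately excluded (an exclusion that should be justified).
    Categories absent from `reported` are flagged as not reported.
    """
    status: dict[str, str] = {}
    for category in CHECKLIST:
        if category not in reported:
            status[category] = "not reported"
        elif reported[category] is None:
            status[category] = "excluded (justify)"
        else:
            status[category] = "included"
    return status


if __name__ == "__main__":
    # Hypothetical evaluation that costs three categories and excludes research.
    example = {"development": 120_000.0, "maintenance": 30_000.0,
               "implementation": 15_000.0, "research": None}
    for category, label in coverage(example).items():
        print(f"{category}: {label}")
```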

3.3 Empirical Case Study—Mamma Mia

The Mamma Mia app is a smartphone- and tablet-based program for the prevention of perinatal depression. The program comprises 44 interactive weekly sessions, which start in mid-pregnancy and continue until 6 months postpartum [51]. The app was originally designed as an internet-based program and underwent a feasibility study, which helped tailor the program’s content and presentation [52]. The intervention was then evaluated in an RCT, in which it was found to be effective in preventing perinatal depression as an add-on to standard treatment compared with standard treatment alone [51].

Mamma Mia is currently being evaluated in a second RCT (ISRCTN11387924), which compares the effectiveness and cost effectiveness of the app with added guidance from health personnel (‘Mamma Mia Blended’) against the standalone app (‘Mamma Mia Self-Guided’). Mothers in the blended group follow the same program as the self-guided group, except that they receive additional guidance from midwives and public health nurses during standard maternal check-ups. The health personnel do not have access to the mothers’ responses in the app. Mothers who indicate a moderate or high risk of depression in the app’s built-in depression screening are recommended to seek social support (e.g., partner, friends or relatives), consult their general practitioner, or call a mental health helpline. The symptom scores from the depression screening are not communicated to health personnel; however, health personnel are trained in the program contents and in providing supportive counselling related to perinatal depression.

We applied the checklist from the scoping review to estimate the program costs per user of Mamma Mia Self-Guided and Blended. Mamma Mia Self-Guided is available to all women in Norway, whereas Blended is only available as part of the ongoing RCT. Costs for Self-Guided represent the resource use of the standalone app, whereas Blended adds the cost of health personnel involvement. Costs were annuitized using straight-line depreciation, assuming a lifespan of 5 years and no salvage value. The estimates were made in Norwegian kroner and converted to 2022 Euros (1 Euro = 10.10 NOK) [53]. Resource use and unit costs for each cost category of Mamma Mia are summarized in the ESM (ESM Table 3).
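The annuitization and currency conversion described above amount to two simple operations. The sketch below assumes straight-line depreciation over 5 years with no salvage value and the stated rate of 10.10 NOK per Euro; the function names and the capital amount in the example are hypothetical.

```python
NOK_PER_EUR = 10.10  # 2022 exchange rate used in the text


def straight_line_annual_cost(capital_cost: float, lifespan_years: float = 5,
                              salvage_value: float = 0.0) -> float:
    """Annual cost under straight-line depreciation (undiscounted)."""
    if lifespan_years <= 0:
        raise ValueError("lifespan_years must be positive")
    return (capital_cost - salvage_value) / lifespan_years


def nok_to_eur(amount_nok: float, nok_per_eur: float = NOK_PER_EUR) -> float:
    """Convert Norwegian kroner to Euros at a fixed exchange rate."""
    return amount_nok / nok_per_eur


if __name__ == "__main__":
    capital_nok = 1_010_000  # hypothetical one-off cost in NOK
    annual_eur = straight_line_annual_cost(nok_to_eur(capital_nok))
    print(f"annual cost: EUR {annual_eur:,.0f}")
```

Straight-line depreciation ignores the opportunity cost of capital; discounting-based alternatives are discussed in Section 4.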

We made top-down estimates of the costs of development, research and maintenance. The 1-year license fee of €474,920 paid by the Norwegian Directorate of Health covers the costs of both development and maintenance. Total research costs from national projects are estimated at €2,177,657 over the app’s lifetime.
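The top-down figures above can be converted to per-user costs as follows. This is a sketch under our own assumptions: the annual license fee and the research costs, annualized straight-line over 5 years, are both divided by the 8656 self-guided users reported for 2022. The text does not spell out the denominator, but under these assumptions research adds about €50.3 per user, which matches the difference between the reported totals with and without research (€106.9 minus €56.6).

```python
LICENSE_FEE_EUR = 474_920        # 1-year license fee (development + maintenance)
RESEARCH_TOTAL_EUR = 2_177_657   # research costs over the app's lifetime
LIFESPAN_YEARS = 5               # straight-line annuitization, no salvage value
USERS_2022 = 8_656               # self-guided users in 2022 (assumed denominator)


def per_user(annual_cost_eur: float, users: int) -> float:
    """Spread an annual cost evenly over the users in that year."""
    if users <= 0:
        raise ValueError("users must be positive")
    return annual_cost_eur / users


license_per_user = per_user(LICENSE_FEE_EUR, USERS_2022)
research_per_user = per_user(RESEARCH_TOTAL_EUR / LIFESPAN_YEARS, USERS_2022)

if __name__ == "__main__":
    print(f"license (development + maintenance): EUR {license_per_user:.1f} per user")
    print(f"research: EUR {research_per_user:.1f} per user")
```

Together these two components come to about €105 per user, or roughly 55% of the €190.8 Blended + Research total, in line with the share reported in the Discussion.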

Implementation costs are threefold: overall implementation costs (Self-Guided and Blended), program administration (Blended) and training of health personnel (Blended). For the overall implementation costs, two authors concurrently reviewed the Expert Recommendations for Implementing Change (ERIC) taxonomy [50], marked the activities that were performed for Mamma Mia and estimated the annual hours spent based on a predefined intensity gradient (see ESM). Implementation costs related to program administration in well-baby clinics and to health personnel training are based on the ongoing RCT’s implementation guidelines [54], supplemented with expert opinion.

As for the cost of health personnel involvement (Blended only), the RCT guidelines indicate that each mother receives four guidance sessions in total. We assume that 30% of the national average consultation time for a routine maternal checkup [55] would be spent on Mamma Mia-related guidance.
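The health personnel involvement cost per mother follows from the number of sessions, the share of each consultation spent on Mamma Mia and the personnel’s hourly cost. In the sketch below, the four sessions and the 30% share come from the text; applying the share to every session is our assumption, and the consultation length and hourly cost are hypothetical placeholders, since the actual inputs are summarized in the ESM.

```python
SESSIONS_PER_MOTHER = 4        # guidance sessions per mother (RCT guidelines)
SHARE_OF_CONSULTATION = 0.30   # share of a routine check-up spent on Mamma Mia


def hpi_cost_per_mother(consultation_minutes: float, hourly_cost_eur: float,
                        sessions: int = SESSIONS_PER_MOTHER,
                        share: float = SHARE_OF_CONSULTATION) -> float:
    """Personnel cost per mother, assuming the same share applies to every session."""
    hours = sessions * share * consultation_minutes / 60
    return hours * hourly_cost_eur


if __name__ == "__main__":
    # Hypothetical inputs: a 30-minute check-up and EUR 50 per hour.
    print(f"EUR {hpi_cost_per_mother(30, 50):.2f} per mother")
```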

To estimate the cost per user, we used information from Mamma Mia’s developer, ChangeTech. A total of 8656 women, or roughly 16% of pregnant women in Norway, used the Mamma Mia app on a self-guided basis in 2022 [56]. Applying this percentage to the average number of women per midwife in municipal health services in Norway [57], we assume that 15 mothers per health professional use the app in the Blended group.
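The assumption of 15 mothers per health professional, and its use in spreading per-professional costs such as training across mothers, can be sketched as below. The 16% uptake comes from the text; the number of women per midwife (94) and the training cost per professional (€600) are hypothetical placeholders chosen only for illustration.

```python
UPTAKE_SHARE = 0.16  # share of pregnant women in Norway using the app in 2022


def mothers_per_professional(women_per_midwife: float,
                             uptake_share: float = UPTAKE_SHARE) -> float:
    """Expected number of app users in one professional's caseload."""
    return uptake_share * women_per_midwife


def per_mother(cost_per_professional_eur: float, mothers: float) -> float:
    """Spread a per-professional cost (e.g. training) across that caseload."""
    if mothers <= 0:
        raise ValueError("mothers must be positive")
    return cost_per_professional_eur / mothers


if __name__ == "__main__":
    caseload = round(mothers_per_professional(94))  # hypothetical caseload size
    print(caseload, "mothers per professional")
    print(f"training: EUR {per_mother(600, caseload):.1f} per mother")  # hypothetical cost
```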

Based on the aforementioned estimates of resource use, unit costs and number of users, we find that if research is not included, the total cost per mother is €56.6 for Mamma Mia Self-Guided and €140.5 for Mamma Mia Blended. If research is included, the total costs are €106.9 and €190.8, respectively (see Table 3).

3.3.1 Sensitivity Analyses

We performed one-way sensitivity analyses on the ‘Blended + Research’ costs (Table 3) to examine the effect of varying the following items on the total cost per mother: salary of health personnel, guidance time per mother, license fee (annualized), implementation time, lifespan of the app, number of users and women per health personnel. We found that our cost per mother estimates are especially sensitive to changes in the number of users, lifespan of the app, personnel salary, women per health personnel and the license fee (see Table 4).
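A one-way deterministic sensitivity analysis varies one input at a time while holding all others at their base-case values. The sketch below applies this to a deliberately simplified cost-per-mother model that omits overall implementation and program administration. The license fee, research costs, lifespan, number of users, sessions and women per health professional are taken from the text, whereas guidance hours, salary, training cost and all low/high ranges are hypothetical, so the output does not reproduce Table 4.

```python
from typing import Callable

Params = dict[str, float]

BASE: Params = {
    "license_fee": 474_920,                 # from the text
    "research_total": 2_177_657,            # from the text
    "lifespan": 5,                          # from the text
    "users": 8_656,                         # from the text
    "women_per_personnel": 15,              # from the text
    "sessions": 4,                          # from the text
    "guidance_hours_per_session": 0.15,     # hypothetical
    "salary_per_hour": 50.0,                # hypothetical
    "training_cost_per_personnel": 600.0,   # hypothetical
}

# Hypothetical low/high values for the inputs being varied.
RANGES: dict[str, tuple[float, float]] = {
    "users": (4_328, 17_312),
    "lifespan": (3, 7),
    "salary_per_hour": (40.0, 60.0),
    "women_per_personnel": (10, 20),
    "license_fee": (379_936, 569_904),
}


def cost_per_mother(p: Params) -> float:
    """Simplified 'Blended + Research' cost per mother (illustrative only)."""
    license_pu = p["license_fee"] / p["users"]
    research_pu = p["research_total"] / p["lifespan"] / p["users"]
    guidance = p["sessions"] * p["guidance_hours_per_session"] * p["salary_per_hour"]
    training = p["training_cost_per_personnel"] / p["women_per_personnel"]
    return license_pu + research_pu + guidance + training


def one_way(model: Callable[[Params], float], base: Params,
            ranges: dict[str, tuple[float, float]]) -> dict[str, tuple[float, float]]:
    """Re-run the model with each input set to its low and high value in turn."""
    results: dict[str, tuple[float, float]] = {}
    for name, (low, high) in ranges.items():
        results[name] = (model({**base, name: low}), model({**base, name: high}))
    return results


if __name__ == "__main__":
    print(f"base case: EUR {cost_per_mother(BASE):.1f}")
    results = one_way(cost_per_mother, BASE, RANGES)
    # Order inputs by the width of their swing, as in a tornado diagram.
    for name, (at_low, at_high) in sorted(
            results.items(), key=lambda kv: abs(kv[1][1] - kv[1][0]), reverse=True):
        print(f"{name:>20}: EUR {at_low:.1f} to {at_high:.1f}")
```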

4 Discussion

This study illustrates the challenges and complexity of estimating the program costs of DHIs. To our knowledge, it is the first study that examines the existing literature on program costs of DHIs, builds a simplified checklist for understanding common cost components and then applies the cost checklist to a case. In the process, we have identified the main cost categories, factors that influence the costs of DHIs, such as the number of users and the assumed lifespan of the technology, and explored how uncertainty surrounding these estimates affected the calculated cost. The program cost checklist is intended for economic evaluations but may also be a useful tool for planning the development, implementation and maintenance of DHIs, and for efficiency measurement.

The challenge of heterogeneous costing in economic evaluations has been recognized. The comprehensive PECUNIA project represents a network of partners that aims to establish standardized costing and outcome assessment measures with a multi-sectoral and multi-national approach [58]. In the digital health arena, efforts are underway to define DHIs [59], classify them according to risk [60], or build frameworks to assess their value [61]. As indicated by our scoping review, the question of DHI program costs has been raised from various perspectives, but comprehensive guidance is lacking [62]. Our proposed program cost checklist helps bridge this gap, and we have illustrated its application through a case study.

Our case example, Mamma Mia, may be considered a Tier B intervention in the NICE Evidence Standards Framework (i.e. informs the patients about a condition, collects information on patients’ subjective wellbeing and records measurable patient outcomes) [24]. However, our cost checklist is adaptable to a wide range of technologies. For instance, DHIs involving artificial intelligence may completely replace the need for health personnel involvement but are also costly to develop and maintain. This cost trade-off between development and health personnel guidance, which has been emphasized by Michie and colleagues [45], can be accommodated within our checklist.

A rapid digital transformation is underway, aiming to empower patients and enhance health personnel efficiency [63]. The level of health personnel involvement varies between DHIs, and the attribution of their resource use is a topic of inquiry in economic evaluations [3]. Mamma Mia Blended has relatively low health personnel involvement, but we find that the program cost per user more than doubles when health personnel are involved (€140.5 vs €56.6, 2022 Euros), taking into account both personnel involvement and implementation. For guided DHIs led entirely by clinicians or therapists, program costs are likely to be higher still.

There is currently a debate on whether DHIs should be subjected to the same standards for evidence generation for cost effectiveness as pharmaceuticals, medical devices, or non-digital health interventions [1]. The debate arises due to the relatively short lifespans of DHIs, paired with their quick evolution. Methods for health technology assessment analyses of early effectiveness are being developed to accommodate the rapid growth of DHIs [64]. Referring to the NICE Evidence Standards Framework, Greaves and colleagues argued that evidence standards for DHIs should not be compromised for expediency [12]. Likewise, we emphasize that while the cost estimation of DHIs may be an iterative process, the justification of costs must comply with the established standards of health economic evaluations.

Program costs of DHIs are dynamic. For instance, development costs may decrease with each iteration due to enhanced efficiency [65]. Maintenance costs may also decline as the price-performance ratio of equipment improves (Moore’s Law) [47]. Likewise, implementation expenses may decline as the DHI matures. Given the iterative development and implementation of DHIs, economic evaluations performed in the early phases of the DHI lifecycle become less relevant in later stages [40, 44, 45]. Therefore, a clear recommendation is to perform iterative cost analysis, that is, to use flexible data collection methods to make rapid and frequent cost estimates throughout the DHI’s lifecycle [7, 15, 41, 44, 45]. Our checklist can be used as a tool for iterative costing, since it allows individual cost components to be estimated and updated sequentially as the DHI matures. Specific methods for iterative costing are discussed elsewhere [66, 67].

Several studies in our scoping review underscored the relationship between economies of scale and cost effectiveness of DHIs [36, 38, 41]. Since most DHIs have large upfront costs—development and research—unsurprisingly, the cost per user decreases as the number of users increases. However, for cost components that are partially variable, such as maintenance or implementation, the changes in cost per user may be stepwise rather than linear. For example, costs per user initially decrease as demand increases owing to increased efficiency of existing servers or health personnel. Eventually these costs increase as server capacity is upgraded or new health personnel are trained [47].
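The stepwise pattern can be illustrated by assuming that a semi-variable resource, such as server capacity or trained personnel, is acquired in discrete blocks that each serve a fixed number of users. The function name and all parameter values below are hypothetical.

```python
import math


def cost_per_user(users: int, fixed_cost: float, step_cost: float,
                  users_per_step: int) -> float:
    """Average cost when capacity is bought in blocks of `users_per_step` users."""
    if users <= 0 or users_per_step <= 0:
        raise ValueError("users and users_per_step must be positive")
    steps = math.ceil(users / users_per_step)  # blocks needed to serve all users
    return (fixed_cost + steps * step_cost) / users


if __name__ == "__main__":
    # Hypothetical: EUR 100,000 fixed, EUR 20,000 per block of 5,000 users.
    for n in (2_500, 5_000, 5_001, 10_000):
        print(f"{n:>6} users: EUR {cost_per_user(n, 100_000, 20_000, 5_000):.2f} per user")
```

Average cost falls within each block but jumps when an additional block is needed (here between 5,000 and 5,001 users), so cost per user is not a smooth function of demand.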

There are several challenges in determining the number of users for the cost per user estimate. First, it may be difficult to retrieve or project this information. Second, the definition of a user is elusive. Many DHIs require active and sustained engagement by the user, so the level of engagement or program compliance may need to be considered in addition to the absolute number of users [36]. While many studies estimate the number of users based on RCT participants, this can overestimate the costs per user [7]. One solution is to update the number of users as the app matures, that is, iterative costing. In our empirical analysis, we obtained the number of registered users from Mamma Mia’s developer and used a mixed approach to predict the number of users in the blended group in a Norwegian setting. Our sensitivity analysis underscored the importance of these assumptions. Estimating the number of users therefore requires further methodological guidance.

The choice of annuitization method influences the program cost estimate [38]. Bergmo recommends annuitizing costs using discount factors in order to account for both the depreciation period and the opportunity cost of capital [42]. Luxton similarly stresses incorporating opportunity costs in the annuity estimation by multiplying the asset’s undepreciated value by the interest rate [43]. Connected to the question of annuitization is the expected lifetime of the app and its associated capital resources. One study in our scoping review assumed an expected life of 5 years for its case DHI, which is in line with the 3–5-year assumption reported in another scoping review [5]. So far, these lifespan estimates are based on assumptions; the program cost estimate would be strengthened by a more systematic, evidence-based approach to estimating the expected life of a DHI.
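The sketch below contrasts three ways of spreading a capital cost over a DHI’s lifespan: straight-line depreciation; an equivalent annual cost based on the standard annuity factor, which discounts at the opportunity cost of capital in the spirit of the approach recommended by Bergmo; and straight-line depreciation plus interest on the undepreciated value. The last function is our reading of the approach attributed to Luxton above, not a reproduction of that paper, and the capital cost and 4% rate are hypothetical.

```python
def straight_line(capital: float, years: int) -> float:
    """Equal, undiscounted share of the capital cost in each year."""
    return capital / years


def equivalent_annual_cost(capital: float, years: int, rate: float) -> float:
    """Annuitize with the annuity factor A(n, r) = (1 - (1 + r) ** -n) / r."""
    if rate == 0:
        return capital / years
    annuity_factor = (1 - (1 + rate) ** -years) / rate
    return capital / annuity_factor


def depreciation_plus_interest(capital: float, years: int, rate: float) -> list[float]:
    """Yearly cost: straight-line depreciation plus interest on the undepreciated value."""
    depreciation = capital / years
    costs = []
    for year in range(years):
        undepreciated = capital - depreciation * year  # value at the start of the year
        costs.append(depreciation + undepreciated * rate)
    return costs


if __name__ == "__main__":
    capital, years, rate = 100_000, 5, 0.04  # hypothetical inputs
    print(f"straight line:          EUR {straight_line(capital, years):,.0f} per year")
    print(f"equivalent annual cost: EUR {equivalent_annual_cost(capital, years, rate):,.0f} per year")
    print("depreciation + interest:", [round(c) for c in depreciation_plus_interest(capital, years, rate)])
```

With a positive discount rate, both discounting-based methods give higher annual costs than straight-line depreciation, which is one reason the choice of method matters for the program cost estimate.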

Reviews of economic evaluations of DHIs reveal a lack of transparency in the costs that are included [65, 68]. Many economic evaluations disregard development costs [3], but Sülz and colleagues warn that excluding research and development costs assumes that these costs are sunk, whereas in reality they may be recurring [40]. Jacobs and Barnett recommend including research and development costs in the societal perspective [15]. A broader approach is to include development costs if the decision involves the creation of a new DHI [7, 44]. In our empirical case study, we found that development, research and maintenance account for roughly 55% of the app’s program costs per user. Disregarding them without justification can bias the results of an economic evaluation. Similarly, implementation costs are often ignored in economic evaluations but can represent a substantial burden for the health system [69]. In the case of Mamma Mia, implementation costs represent a significant proportion of the overall costs (25%).

The Second Panel on Cost-Effectiveness in Health and Medicine recommends that health economic evaluations report reference cases from both the health care sector perspective and the societal perspective [70]. Naturally, all program costs are relevant in the societal perspective. In the health care sector perspective, the choice of program cost categories can be complex as these costs may be distributed across various budgets [41]. For example, in the case of Mamma Mia, research was performed outside the scope of the health care sector, and as such these costs were not included in the license fee. Similarly, when comparing privately versus publicly funded health systems, the program costs may be experienced by different groups. Mamma Mia was provided at no cost to the user since the license was purchased by a national payer. In a privately funded health system, the user may be expected to pay for the DHI either directly, or indirectly via an insurer or health plan manager.

4.1 Limitations

Our scoping review and the resulting checklist are a first step toward developing guidelines for cost estimation of DHIs. We underscore that methodological guidance on economic evaluations of DHIs is still emerging, and our checklist reflects the weaknesses of this nascent literature. While our broad search yielded several relevant studies, our scoping review is not exhaustive, and the proposed checklist could be refined with input from experts and practitioners in the field.

In our empirical estimation, we could not separate maintenance costs from the license fee. A pragmatic way to separate the one-off (development) and recurring (maintenance) components of the license fee is to examine how the fee changes from year to year. For example, if the license is renewed in subsequent years at a reduced fee, this could indicate that the original license fee primarily covered development costs, whereas the subsequent fees cover maintenance costs. Scientific literature on the estimation of maintenance costs is lacking [71], but an industry rule of thumb is to expect maintenance to account for 15–25% of the development costs in the long term [72].
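The year-over-year reasoning above can be sketched as follows, under two loudly stated assumptions: the first-year fee covers development plus one year of maintenance, and every renewal fee covers maintenance only. The rule of thumb is read here as annual maintenance relative to the development cost, which is itself an interpretation, since the period is not specified beyond "in the long term". The fee values and function names are hypothetical, not Mamma Mia data.

```python
def split_license_fees(annual_fees: list[float]) -> tuple[float, float]:
    """Split a series of annual license fees into development and maintenance.

    Hypothetical assumption: the first-year fee covers development plus one
    year of maintenance, and each renewal fee covers maintenance only.
    """
    if len(annual_fees) < 2:
        raise ValueError("at least one renewal year is needed to infer a split")
    renewals = annual_fees[1:]
    maintenance = sum(renewals) / len(renewals)  # mean annual renewal fee
    development = annual_fees[0] - maintenance
    if development <= 0:
        raise ValueError("fee did not fall on renewal; split is not identifiable")
    return development, maintenance


def check_rule_of_thumb(development: float, maintenance: float,
                        low: float = 0.15, high: float = 0.25) -> tuple[bool, float]:
    """Compare annual maintenance with the 15-25% of development rule of thumb."""
    ratio = maintenance / development
    return low <= ratio <= high, ratio


if __name__ == "__main__":
    fees = [120_000.0, 20_000.0, 20_000.0]  # hypothetical fees, not Mamma Mia data
    development, maintenance = split_license_fees(fees)
    within, ratio = check_rule_of_thumb(development, maintenance)
    print(f"development ~ {development:,.0f}, maintenance ~ {maintenance:,.0f}/year")
    print(f"maintenance/development = {ratio:.0%} (within 15-25%: {within})")
```

For these illustrative fees, the split gives a development component of 100,000 and maintenance of 20,000 per year, a 20% ratio that falls inside the rule-of-thumb range. If the fee does not fall on renewal, the split is not identifiable from fees alone and the function raises an error instead of guessing.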

5 Conclusion

The program cost checklist and our pragmatic case study illuminate the cost components that should be identified and considered when estimating program costs within economic evaluations of DHIs. The perspective, time horizon and comparator of an economic evaluation will influence which costs are included, but applying the checklist will increase transparency and facilitate comparison across studies. Reporting according to the checklist may help future analysts better justify the costs they include in economic evaluations.

References

1. Guo C, Ashrafian H, Ghafur S, Fontana G, Gardner C, Prime M. Challenges for the evaluation of digital health solutions—a call for innovative evidence generation approaches. NPJ Digit Med. 2020;3:110.

2. Murray E, Hekler EB, Andersson G, Collins LM, Doherty A, Hollis C, et al. Evaluating digital health interventions: key questions and approaches. Am J Prev Med. 2016;51(5):843–51.

3. Mitchell LM, Joshi U, Patel V, Lu C, Naslund JA. Economic evaluations of internet-based psychological interventions for anxiety disorders and depression: a systematic review. J Affect Disord. 2021;284:157–82.

4. Donker T, Blankers M, Hedman E, Ljótsson B, Petrie K, Christensen H. Economic evaluations of Internet interventions for mental health: a systematic review. Psychol Med. 2015;45(16):3357–76.

5. Kidholm K, Kristensen MBD. A scoping review of economic evaluations alongside randomised controlled trials of home monitoring in chronic disease management. Appl Health Econ Health Policy. 2018;16(2):167–76.

6. Bongiovanni-Delarozière I, Le Goff-Pronost M. Economic evaluation methods applied to telemedicine: From a literature review to a standardized framework. Eur Res Telemed La Recherche Européenne en Télémédecine. 2017;6(3):117–35.

7. Gomes M, Murray E, Raftery J. Economic evaluation of digital health interventions: methodological issues and recommendations for practice. Pharmacoeconomics. 2022;40(4):367–78.

8. The World Bank. Digital-in-health: unlocking the value for everyone. 2023.

9. Smith AA, Li R, Tse ZTH. Reshaping healthcare with wearable biosensors. Sci Rep. 2023;13(1):4998.

10. Chen C, Ding S, Wang J. Digital health for aging populations. Nat Med. 2023;29(7):1623–30.

11. Unsworth H, Dillon B, Collinson L, Powell H, Salmon M, Oladapo T, et al. The NICE Evidence Standards Framework for digital health and care technologies—developing and maintaining an innovative evidence framework with global impact. Digit Health. 2021;7:20552076211018616.

12. Greaves F, Joshi I, Campbell M, Roberts S, Patel N, Powell J. What is an appropriate level of evidence for a digital health intervention? Lancet. 2018;392(10165):2665–7.

13. Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the economic evaluation of health care programmes. Oxford: Oxford University Press; 2015.

14. Tarricone R, Petracca F, Cucciniello M, Ciani O. Recommendations for developing a lifecycle, multidimensional assessment framework for mobile medical apps. Health Econ. 2022;31(S1):73–97.

15. Jacobs JC, Barnett PG. Emergent challenges in determining costs for economic evaluations. Pharmacoeconomics. 2017;35(2):129–39.

16. Garrison LP Jr, Mansley EC, Abbott TA 3rd, Bresnahan BW, Hay JW, Smeeding J. Good research practices for measuring drug costs in cost-effectiveness analyses: a societal perspective: the ISPOR Drug Cost Task Force report—Part II. Value Health. 2010;13(1):8–13.

17. Payne K, Thompson AJ. Economic evaluations of complex interventions. In: Complex interventions in health. Routledge; 2015. p. 248–57.

18. Skivington K, Matthews L, Simpson SA, Craig P, Baird J, Blazeby JM, et al. A new framework for developing and evaluating complex interventions: update of Medical Research Council guidance. BMJ. 2021;374:n2061.

19. O’Cathain A, Croot L, Duncan E, Rousseau N, Sworn K, Turner KM, et al. Guidance on how to develop complex interventions to improve health and healthcare. BMJ Open. 2019;9(8):e029954.

20. Richards DA. The complex interventions framework. In: Complex interventions in health: an overview of research methods. Abingdon: Routledge; 2015. p. 1–15.

21. Malhotra A, Thompson RR, Kagoya F, Masiye F, Mbewe P, Mosepele M, et al. Economic evaluation of implementation science outcomes in low- and middle-income countries: a scoping review. Implement Sci. 2022;17(1):76.

22. Sohn H, Tucker A, Ferguson O, Gomes I, Dowdy D. Costing the implementation of public health interventions in resource-limited settings: a conceptual framework. Implement Sci. 2020;15(1):86.

23. Vervoort D, Tam DY, Wijeysundera HC. Health technology assessment for cardiovascular digital health technologies and artificial intelligence: why is it different? Can J Cardiol. 2022;38(2):259–66.

24. National Health Service. Evidence standards framework (ESF) for digital health technologies. In: National Institute for Health and Care Excellence (NICE), editor. 2018.

25. U.S. Food and Drug Administration. The Software Precertification (Pre-Cert) pilot program: tailored total product lifecycle approaches and key findings. Online: U.S. Food and Drug Administration; 2022. p. 30.

26. European Commission. Guidance on Qualification and Classification of Software in Regulation (EU) 2017/745—MDR and Regulation (EU) 2017/746—IVDR. In: Medical Device Coordination Group, editor. Online: European Commission; 2019. p. 28.

27. Kolasa K, Kozinski G. How to value digital health interventions? A systematic literature review. Int J Environ Res Public Health. 2020;17(6):2119.

28. Khan ZA, Kidholm K, Pedersen SA, Drozd F, Haga SM, Sundrehagen T, Olavesen ES, Halsteinli V. Program costs of digital health interventions. Web: Open Science Framework; 2023.

29. Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73.

30. Pagliari C, Sloan D, Gregor P, Sullivan F, Detmer D, Kahan JP, et al. What Is eHealth (4): a scoping exercise to map the field. J Med Internet Res. 2005;7(1):e9.

31. Fiordelli M, Diviani N, Schulz PJ. Mapping mHealth research: a decade of evolution. J Med Internet Res. 2013;15(5):e95.

32. PICO Portal. PICO Portal. New York.

33. Westphaln KK, Regoeczi W, Masotya M, Vazquez-Westphaln B, Lounsbury K, McDavid L, et al. From Arksey and O’Malley and Beyond: customizations to enhance a team-based, mixed approach to scoping review methodology. MethodsX. 2021;8:101375.

34. The EndNote Team. EndNote. EndNote 20 ed. Philadelphia: Clarivate; 2013.

35. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

36. Hazel CA, Bull S, Greenwell E, Bunik M, Puma J, Perraillon M. Systematic review of cost-effectiveness analysis of behavior change communication apps: assessment of key methods. Digit Health. 2021;7:13.

37. Jankovic D, Bojke L, Marshall D, Saramago Goncalves P, Churchill R, Melton H, et al. Systematic review and critique of methods for economic evaluation of digital mental health interventions. Appl Health Econ Health Policy. 2021;19(1):17–27.

38. Kumar G, Falk DM, Bonello RS, Kahn JM, Perencevich E, Cram P. The costs of critical care telemedicine programs: a systematic review and analysis. Chest. 2013;143(1):19–29.

39. Hilty DM, Serhal E, Crawford A. A telehealth and telepsychiatry economic cost analysis framework: scoping review. Telemed J E Health. 2022;30.

40. Sülz S, van Elten HJ, Askari M, Weggelaar-Jansen AM, Huijsman R. eHealth applications to support independent living of older persons: scoping review of costs and benefits identified in economic evaluations. J Med Internet Res. 2021;23(3):e24363.

41. Huter K, Krick T, Rothgang H. Health economic evaluation of digital nursing technologies: a review of methodological recommendations. Health Econ Rev. 2022;12(1).

42. Bergmo TS. How to measure costs and benefits of eHealth interventions: an overview of methods and frameworks. J Med Internet Res. 2015;17(11):e254.

43. Luxton DD. Considerations for planning and evaluating economic analyses of telemental health. Psychol Serv. 2013;10(3):276–82.

44. McNamee P, Murray E, Kelly MP, Bojke L, Chilcott J, Fischer A, et al. Designing and undertaking a health economics study of digital health interventions. Am J Prev Med. 2016;51(5):852–60.

45. Michie S, Yardley L, West R, Patrick K, Greaves F. Developing and evaluating digital interventions to promote behavior change in health and health care: recommendations resulting from an international workshop. J Med Internet Res. 2017;19(6):e232.

46. Joshi U, Naslund JA, Anand A, Tugnawat D, Vishwakarma R, Bhan A, et al. Assessing costs of developing a digital program for training community health workers to deliver treatment for depression: a case study in rural India. Psychiatry Res. 2022;307:114299.

47. Paterson L, Rennick-Egglestone S, Gavan SP, Slade M, Ng F, Llewellyn-Beardsley J, et al. Development and delivery cost of digital health technologies for mental health: application to the narrative experiences online intervention. Front Psychiatry. 2022;13.

48. Monashefsky A, Alon D, Baranowski T, Barreira TV, Chiu KA, Fleischman A, et al. How much did it cost to develop and implement an eHealth intervention for a minority children population that overlapped with the COVID-19 pandemic. Contemp Clin Trials. 2023;125.

49. Cidav Z, Mandell D, Pyne J, Beidas R, Curran G, Marcus S. A pragmatic method for costing implementation strategies using time-driven activity-based costing. Implement Sci. 2020;15(1):28.

50. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21.

51. Haga SM, Drozd F, Lisøy C, Wentzel-Larsen T, Slinning K. Mamma Mia—a randomized controlled trial of an internet-based intervention for perinatal depression. Psychol Med. 2019;49(11):1850–8.

52. Haga SM, Drozd F, Brendryen H, Slinning K. Mamma Mia: a feasibility study of a web-based intervention to reduce the risk of postpartum depression and enhance subjective well-being. JMIR Res Protoc. 2013;2(2):e29.

53. European Central Bank. Euro foreign exchange reference rates [Euro to Norwegian kroner average conversion rate 2022]. Web; 2022. https://www.ecb.europa.eu/stats/policy_and_exchange_rates/euro_reference_exchange_rates/html/eurofxref-graph-nok.en.html. Accessed 22 June 2023.

54. Olavesen ES, Sundrehagen S, Haga SM, Hartmann K, Randen I, Staksrud G, Drozd F. Mamma Mia—a practical guide for health promotion and prevention. Oslo: Regional Centre for Child and Adolescent Mental Health, Eastern and Southern Norway; 2021.

55. The Norwegian Directorate of Health. Tools for calculating staffing in child health clinics and school health services. Online; 2022 (updated 27 June 2022). https://www.helsedirektoratet.no/tema/helsestasjons-og-skolehelsetjenesten/verktoy-beregning-bemanning. Accessed 5 Feb 2023.

56. Statistics Norway. Births. Web: Statistics Norway; 2023.

57. The Norwegian Directorate of Health. Midwives in Municipalities [Translated]. Web: The Norwegian Directorate of Health; 2021. https://www.helsedirektoratet.no/rapporter/tilgang-pa-og-behov-for-jordmodre/jordmodre-i-helsetjenestene/jordmodre-i-kommunene. Accessed 3 Feb 2023.

58. Pokhilenko I, Janssen LMM, Paulus ATG, Drost RMWA, Hollingworth W, Thorn JC, et al. Development of an instrument for the assessment of health-related multi-sectoral resource use in Europe: the PECUNIA RUM. Appl Health Econ Health Policy. 2023;21(2):155–66.

59. Burrell A, Zrubka Z, Champion A, Zah V, Vinuesa L, Holtorf A-P, et al. How useful are digital health terms for outcomes research? An ISPOR Special Interest Group Report. Value Health. 2022;25(9):1469–79.

60. Unsworth H, Wolfram V, Dillon B, Salmon M, Greaves F, Liu X, et al. Building an evidence standards framework for artificial intelligence-enabled digital health technologies. Lancet Digit Health. 2022;4(4):e216–7.

61. Haig M, Main C, Chávez D, Kanavos P. A value framework to assess patient-facing digital health technologies that aim to improve chronic disease management: a Delphi approach. Value Health. 2023;26:1474.

62. Benedetto V, Filipe L, Harris C, Spencer J, Hickson C, Clegg A. Analytical frameworks and outcome measures in economic evaluations of digital health interventions: a methodological systematic review. Med Decis Making. 2023;43(1):125–38.

63. Socha-Dietrich K. Empowering the health workforce to make the most of the digital revolution. 2021.

64. Grutters JPC, Govers T, Nijboer J, Tummers M, van der Wilt GJ, Rovers MM. Problems and promises of health technologies: the role of early health economic modeling. Int J Health Policy Manag. 2019;8(10):575–82.

65. Wilkinson T, Wang M, Friedman J, Prestidge M. A framework for the economic evaluation of digital health interventions. 2023.

66. Ohinmaa A, Hailey D, Roine R. Elements for assessment of telemedicine applications. Int J Technol Assess Health Care. 2001;17(2):190–202.

67. LeFevre AE, Shillcutt SD, Broomhead S, Labrique AB, Jones T. Defining a staged-based process for economic and financial evaluations of mHealth programs. Cost Effect Resour Alloc. 2017;15:1–16.

68. Gentili A, Failla G, Melnyk A, Puleo V, Tanna GLD, Ricciardi W, Cascini F. The cost-effectiveness of digital health interventions: a systematic review of the literature. Front Public Health. 2022;10:787135.

69. Snoswell CL, Taylor ML, Caffery LJ. Why telehealth does not always save money for the health system. J Health Organ Manag. 2021;35(6):763–75.

70. Sanders GD, Neumann PJ, Basu A, Brock DW, Feeny D, Krahn M, et al. Recommendations for conduct, methodological practices, and reporting of cost-effectiveness analyses: second panel on cost-effectiveness in health and medicine. JAMA. 2016;316(10):1093–103.

71. Nagappan M, Shihab E. Future trends in software engineering research for mobile apps. In: 2016 IEEE 23rd international conference on software analysis, evolution, and reengineering (SANER); 2016: IEEE.

72. Georgiou M. Cost of Mobile App Maintenance in 2023 and Why It’s Needed [Blog]. Web; 2023. https://imaginovation.net/blog/importance-mobile-app-maintenance-cost/. Accessed 3 Oct 2023.

Acknowledgements

The authors thank ChangeTech for providing vital information for the empirical cost analysis. We are grateful to Gudrun Bjørnelv and Christina Edwards for reviewing a later version of the manuscript and offering feedback regarding organization of sections.

Funding

Open access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital - Trondheim University Hospital).

Ethics declarations

Funding

This study is funded by the Center for Research-Based Innovation on Mobile Mental Health (ForHelse), which is part of the Center for Research Based Innovations Scheme IV of the Research Council of Norway (project number: 309264).

Conflicts of interest

The authors declare no conflicts of interest.

Availability of data and material

All data used in this study are presented in the text of the paper or the supplemental materials.

Ethics approval

No specific ethics approval was sought because this study does not collect or report data from human subjects or biological material.

Author contributions

VH, ZK and KK conceptualized the study and defined the methodology. SP performed the structured search for the scoping review, whereas ZK and VH performed the formal analysis of retrieved records (i.e. screening records, data extraction and categorization for the cost checklist). ZK, VH, FD, SH, EO and TS investigated data for the empirical cost analysis, and ZK performed the analysis. VH organized resources for the project and was responsible for project administration and supervision. ZK wrote the first draft of the manuscript, which all authors reviewed and edited. All authors approved the final manuscript.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Khan, Z.A., Kidholm, K., Pedersen, S.A. et al. Developing a Program Costs Checklist of Digital Health Interventions: A Scoping Review and Empirical Case Study. PharmacoEconomics 42, 663–678 (2024). https://doi.org/10.1007/s40273-024-01366-y

DOI: https://doi.org/10.1007/s40273-024-01366-y