Abstract

Background and Objectives

The aim was to systematically review whether the reporting and analysis of trial-based cost-effectiveness evaluations in the field of obstetrics and gynaecology comply with guidelines and recommendations, and whether this has improved over time.

Data Sources and Selection Criteria

A literature search was performed in MEDLINE, the NHS Economic Evaluation Database (NHS EED) and the Health Technology Assessment (HTA) database to identify trial-based cost-effectiveness evaluations in obstetrics and gynaecology published between January 1, 2000 and May 16, 2017. Studies performed in middle- and low-income countries and studies related to prevention, midwifery, and reproduction were excluded.

Data Collection and Analysis

Reporting quality was assessed using the Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement (a modified version with 21 items, as we focused on trial-based cost-effectiveness evaluations), and statistical quality was assessed using a literature-based list of criteria (8 items). Exploratory regression analyses were performed to assess the association between reporting and statistical quality scores and publication year.

Results

The electronic search resulted in 5482 potentially eligible studies. Forty-five studies fulfilled the inclusion criteria, 22 in obstetrics and 23 in gynaecology. Twenty-seven (60%) studies did not adhere to 50% (n = 10) or more of the reporting quality items and 32 studies (71%) did not meet 50% (n = 4) or more of the statistical quality items. As for the statistical quality, no study used the appropriate method to assess cost differences, no advanced methods were used to deal with missing data, and clustering of data was ignored in all studies. No significant improvements over time were found in reporting or statistical quality in gynaecology, whereas in obstetrics a significant improvement in reporting and statistical quality was found over time.

Limitations

The focus of this review was on trial-based cost-effectiveness evaluations in obstetrics and gynaecology, so further research is needed to explore whether results from this review are generalizable to other medical disciplines.

Conclusions and Implications of Key Findings

The reporting and analysis of trial-based cost-effectiveness evaluations in gynaecology and obstetrics is generally poor. Since this can result in biased results, incorrect conclusions, and inappropriate healthcare decisions, there is an urgent need for improvement in the methods of cost-effectiveness evaluations in this field.


The quality of the statistical analysis and reporting of trial-based cost-effectiveness evaluations in obstetrics and gynaecology is poor with only a minority of studies presenting measures of statistical uncertainty around cost-effectiveness estimates.

Exploratory analyses indicated that there have been no significant improvements over time in reporting or statistical quality in gynaecology, whereas in obstetrics a significant improvement in reporting and statistical quality was found over time.

Improvement in reporting and statistical quality of trial-based cost-effectiveness evaluations is needed to ensure reliable results and conclusions as well as efficient allocation of scarce resources in healthcare.

1 Background

To inform decisions about the allocation of scarce healthcare resources, decision makers need information on the relative efficiency of alternative healthcare interventions, which can be provided by cost-effectiveness evaluations [1]. These cost-effectiveness evaluations are increasingly being conducted alongside controlled clinical trials (i.e. so-called trial-based cost-effectiveness evaluations) [2]. Failure to adequately conduct, analyse and/or report such cost-effectiveness evaluations can lead to biased conclusions, resulting in inappropriate healthcare decision making, and thus a possible waste of scarce resources.

A growing number of cost-effectiveness evaluations in obstetrics and gynaecology are being conducted. To illustrate, a basic MEDLINE search combining search terms related to ‘obstetrics’ and ‘gynaecology’ and the MeSH term ‘cost-benefit analysis’ showed an increase in the number of published cost-effectiveness evaluations per year, from 32 in 2000 to 112 in 2015. A large share of these cost-effectiveness evaluations were conducted alongside a clinical trial. Interventions compared in these trials often concern induction of labour, hysterectomy (i.e. surgical removal of the uterus) and care arrangement (e.g. specialist nurse providing treatment vs physician providing treatment). Outcomes of these cost-effectiveness evaluations are usually expressed in clinical outcomes; for example, the number of caesarean sections or admission to intensive care. Costs associated with these interventions usually consist of materials used and occupation of caregiver or labour/operating room. Properly conducted cost-effectiveness evaluations in obstetrics and gynaecology can help to prevent wastage of scarce resources. This is important since obstetrics/gynaecology is a major contributor to total healthcare costs. For example, in a Dutch economic analysis comparing methods of induction, the costs of this specific obstetric procedure were estimated to be €1.4 million [3].

Reviews on the reporting and statistical methodology of trial-based cost-effectiveness evaluations show that major deficiencies are generally present in the way in which such evaluations are reported [4,5,6,7] and analysed [8,9,10]. This led Doshi et al. [8] to conclude that the results of trial-based cost-effectiveness evaluations need to be interpreted with caution due to the poor quality of the statistical approach. The majority of these reviews, however, only evaluated reporting quality [4,5,6,7] of trial-based cost-effectiveness evaluations and the only reviews that evaluated the statistical quality [8,9,10] were conducted over a decade ago. In the meantime, however, guidelines and recommendations [11,12,13,14] for trial-based cost-effectiveness evaluations have been updated and more researchers have been trained in the conduct of cost-effectiveness evaluations. In the field of obstetrics and gynaecology, methodological reviews showed similar characteristics (i.e. only evaluated reporting quality) [15, 16].

1.1 Objectives

This study aimed to explore whether the quality of reporting and the statistical methods of trial-based cost-effectiveness evaluations in obstetrics and gynaecology are in accordance with the most recent guidelines and recommendations, and whether both have improved over the past 16 years.

2 Methods

This systematic review, conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [17], included trial-based cost-effectiveness evaluations in the field of obstetrics and gynaecology that were published from January 1, 2000 up to May 16, 2017. A search was conducted in MEDLINE, the National Health Service Economic Evaluation Database (NHS EED), and the Health Technology Assessment (HTA) database. The earliest guidelines were developed in 1996 [18]; therefore, the year 2000 was used as the start date to allow time for the guidelines to be implemented.

2.1 Search Strategy

Databases were searched with terms related to the research field (e.g. ‘gynaecology’, ‘obstetrics’ or ‘pregnancy’) and study design (e.g. ‘cost-utility analysis’, ‘economic evaluation’, ‘cost effectiveness’ or ‘economic analysis’) in the title, abstract, and MeSH headings or keywords. The full PubMed search is available in Appendix S1 (see electronic supplementary material [ESM]). The electronic search was supplemented by searching reference lists of relevant review articles and of the retrieved full texts. During the search, a search log was kept consisting of keywords used, searched databases and search results. Titles and abstracts of the retrieved studies were stored in an electronic database using EndNote X7.4® (Thomson Reuters, New York, NY, US).

2.2 Study Selection

Two reviewers (ME and JMvD) independently screened titles and abstracts of identified studies for eligibility. Studies were included if they reported an economic evaluation alongside a controlled trial in obstetrics or gynaecology and concerned a cost-effectiveness analysis (CEA) and/or a cost-utility analysis (CUA). Cost-benefit analyses and cost-minimization analyses were excluded since healthcare decision makers are typically interested in CEAs and CUAs, and because statistical methods may differ across these kinds of economic evaluations [1]. Both randomized and non-randomized studies were included in the review. Papers had to be published as full papers and written in English. Furthermore, this systematic review focused on therapeutic procedures (e.g. surgical treatments, induction of labour, etc.) in obstetrics and gynaecology. Therefore, studies describing interventions related to prevention and screening as well as training of healthcare staff were excluded. Moreover, studies related to reproductive medicine (i.e. fertility) were also excluded. Finally, we specifically focused on high-income countries (e.g. countries in Europe and North America). We expected cost-effectiveness evaluations from low-/middle-income countries to be of systematically lower quality and therefore to receive significantly lower scores; moreover, most cost-effectiveness evaluations (i.e. 83% of the total published) are conducted in high-income countries [19]. Cost-effectiveness evaluations from low-/middle-income countries typically face methodological issues, such as scarce and low-quality data, trials that do not prioritize economics and the absence of cost accounting systems [20], which makes it difficult to compare evaluations between high-income and low-/middle-income countries.

Full texts were retrieved when studies fulfilled the inclusion criteria or if uncertainty remained about the inclusion of a specific study. All full texts were read and checked for eligibility by two independent reviewers (ME and JMvD). To resolve disagreement between the two reviewers, a consensus procedure was used. A third reviewer (JEB) was consulted when disagreements persisted.

2.3 Data Extraction

Two reviewers (ME and JMvD) independently extracted data from the included studies using a standardized extraction form. Agreement between the reviewers was checked during a face-to-face meeting, and a consensus procedure was used involving a third reviewer (JEB) if necessary. The first part of the extraction form focused on general study characteristics (e.g. year of publication, country), healthcare delivery (i.e. primary or secondary care), medical discipline (i.e. obstetrics or gynaecology), and the design of the trial (i.e. non-randomized study [NRS] or randomized controlled trial [RCT]). The second part focused on cost-effectiveness evaluation design aspects: type of evaluation (i.e. CEA or CUA), study perspective (e.g. healthcare perspective, societal perspective), study population, follow-up period, comparator and outcome measures. The third part focused on the statistical approach of the trial-based cost-effectiveness evaluation and is described in Sect. 2.5.

2.4 Reporting Quality of Trial-Based Cost-Effectiveness Evaluations

Reporting quality was assessed using the Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement [11], which provides concrete recommendations to optimize the reporting of cost-effectiveness evaluations. Recommendations are subdivided into six main categories: (1) title and abstract, (2) introduction, (3) methods, (4) results, (5) discussion and (6) other. For a detailed description of the CHEERS statement, the reader is referred to Husereau et al. [11]. The full CHEERS statement is provided in Appendix S2 (see ESM). As the focus of this study was to evaluate trial-based cost-effectiveness evaluations, modelling-related criteria in the statement were omitted (i.e. items 15, 16 and 18). This resulted in a modified CHEERS statement with 21 items that were answered with ‘yes/no’. Studies fulfilling the criteria mentioned in an item were scored ‘yes’ and assigned a score of 1 for that item (‘no’ was scored as 0). Answers were compared between the two reviewers and disagreements were discussed until consensus was reached. An overall reporting quality score ranging from 0 to 21 was calculated by adding up the number of items that were scored ‘yes’.

2.5 Quality of the Statistical Approach of Trial-Based Cost-Effectiveness Evaluations

To evaluate the quality of the statistical approach, four quality domains were identified based on existing guidelines [12,13,14]. These domains, including their subdomains, are described below.

1. Analysis of incremental costs: This domain consisted of three sub-domains. First, we assessed whether the cost difference was presented (‘yes/no’). Studies presenting cost differences were scored as handling this sub-domain appropriately (score = 1); all others as inappropriate (score = 0). Second, we assessed the method for estimating the statistical uncertainty surrounding the cost difference, while accounting for the skewed distribution of cost data. Studies using non-parametric bootstrapping or a gamma distribution in combination with multivariable regression methods were scored as handling this sub-domain appropriately (score = 1); all others as inappropriate (score = 0) [14, 21,22,23]. Third, trial-based cost-effectiveness evaluations are typically underpowered for economic outcomes [24]. Consequently, researchers are recommended to use estimation (i.e. confidence intervals) rather than hypothesis testing (i.e. p values) [25]. Therefore, studies presenting confidence intervals were scored as handling this sub-domain appropriately (score = 1); all others as inappropriate (score = 0). An overall domain score was calculated by adding up the studies’ scores per sub-domain (1 point per correct sub-domain, maximum score = 3).

2. Analysis of cost-effectiveness: This domain consisted of three sub-domains. First, we assessed whether the authors presented an incremental cost-effectiveness ratio (ICER) (‘yes/no’). Studies presenting an ICER were scored as handling this sub-domain appropriately (score = 1); all others as inappropriate (score = 0). Second, the method for dealing with sampling uncertainty surrounding the ICER was assessed. Non-parametric bootstrapping is considered the most appropriate method and is recommended by current guidelines [12,13,14]. Therefore, studies using non-parametric bootstrapping were scored as handling this sub-domain appropriately (score = 1); all others as inappropriate (score = 0). Third, we assessed whether the presentation of the uncertainty surrounding the ICER was adequate. Bootstrapped cost and effect data can be plotted in a cost-effectiveness plane (CE plane), which graphically presents the uncertainty surrounding the ICER [26]. Furthermore, the joint uncertainty surrounding costs and effects can be presented in a cost-effectiveness acceptability curve (CEAC) [27]. Presentation of 95% confidence intervals around ICERs is not considered appropriate due to interpretation issues when statistical uncertainty surrounding the ICER is distributed across more than one quadrant in the CE plane [28]. Studies presenting a CE plane and a CEAC without 95% confidence intervals around ICERs were scored as handling this sub-domain appropriately (score = 1); all others as inappropriate (score = 0). An overall domain score was calculated by adding up the studies’ scores per sub-domain (1 point per correct sub-domain, maximum score = 3).

3. Handling of missing data: Multiple imputation (MI) is currently considered the most appropriate method for dealing with missing cost data [13, 14], while maximum likelihood approaches (e.g. expectation-maximization algorithm) are also considered to result in valid estimates [13, 29]. However, this only applies when the missingness of data has a relationship with observed factors among participants, but not with unobserved factors. This is often referred to as the Missing At Random (MAR) assumption [25, 30, 31]. Studies using one of these approaches were classified as handling this domain appropriately (score = 1); all others as inappropriate (score = 0). Furthermore, studies with only a small amount of missing data (i.e. in our review we used a threshold of ≤5%) that used a complete-case analysis were also classified as handling this domain appropriately (score = 1). When >5%, but <10% of data is missing, simpler imputation techniques might be preferred over MI, purely for practical reasons [32].

4. Addressing uncertainty (sensitivity analysis): Three types of uncertainty are inherent to trial-based cost-effectiveness evaluations: parameter uncertainty (i.e. uncertainty due to variables that might influence results, such as unit costs), methodological uncertainty (i.e. uncertainty due to the use of different methods for analysis) and subgroup uncertainty (i.e. uncertainty due to possible differences across subgroups of participants) [33, 34]. To assess the impact of these types of uncertainty on the robustness of the results, sensitivity analyses should be undertaken [25]. Studies performing at least one of the three types of sensitivity analyses were classified as handling this domain appropriately (score = 1); all others as inappropriate (score = 0).
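The bootstrap-based methods named in domains 1 and 2 can be illustrated with a minimal sketch. It assumes participant-level costs and effects per trial arm, uses simulated data (not data from any included study), and applies a simple percentile interval; guidelines may prefer other bootstrap intervals, such as bias-corrected and accelerated ones. All function names (`bootstrap_cea`, `percentile_ci`, `ceac`) are hypothetical and chosen for illustration only.

```python
import numpy as np


def bootstrap_cea(cost_t, eff_t, cost_c, eff_c, n_boot=2000, seed=0):
    """Resample participants within each arm and return bootstrapped
    incremental costs and effects (intervention minus control)."""
    rng = np.random.default_rng(seed)
    cost_t, eff_t = np.asarray(cost_t, float), np.asarray(eff_t, float)
    cost_c, eff_c = np.asarray(cost_c, float), np.asarray(eff_c, float)
    d_cost = np.empty(n_boot)
    d_eff = np.empty(n_boot)
    for b in range(n_boot):
        # Use the same resampled participants for costs and effects so
        # that their correlation is preserved in every replicate.
        i_t = rng.integers(0, len(cost_t), len(cost_t))
        i_c = rng.integers(0, len(cost_c), len(cost_c))
        d_cost[b] = cost_t[i_t].mean() - cost_c[i_c].mean()
        d_eff[b] = eff_t[i_t].mean() - eff_c[i_c].mean()
    return d_cost, d_eff


def percentile_ci(samples, level=0.95):
    """Percentile bootstrap confidence interval."""
    alpha = (1.0 - level) / 2.0
    lo, hi = np.quantile(samples, [alpha, 1.0 - alpha])
    return float(lo), float(hi)


def ceac(d_cost, d_eff, thresholds):
    """Share of replicates with a positive incremental net monetary
    benefit at each willingness-to-pay threshold."""
    return np.array([np.mean(wtp * d_eff - d_cost > 0) for wtp in thresholds])


# Simulated, purely illustrative trial data.
rng = np.random.default_rng(42)
cost_c = rng.gamma(shape=2.0, scale=1000.0, size=150)  # right-skewed costs
cost_t = rng.gamma(shape=2.0, scale=1100.0, size=150)
eff_c = rng.binomial(1, 0.60, size=150)  # e.g. 1 = adverse event avoided
eff_t = rng.binomial(1, 0.70, size=150)

d_cost, d_eff = bootstrap_cea(cost_t, eff_t, cost_c, eff_c)
point_icer = (cost_t.mean() - cost_c.mean()) / (eff_t.mean() - eff_c.mean())
ci_cost = percentile_ci(d_cost)  # 95% CI around the mean cost difference
thresholds = np.array([0.0, 1000.0, 5000.0, 20000.0])
curve = ceac(d_cost, d_eff, thresholds)
```

Plotting `d_eff` against `d_cost` gives the CE plane, and plotting `curve` against `thresholds` gives the CEAC. The ICER is reported as a point estimate only, in line with the recommendation not to present confidence intervals around it.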

An overall quality score of the statistical approach, ranging from 0 to 8, was calculated per study by adding up the number of overall sub-domains that were scored ‘yes’. See Table 1 for a summary of appropriate methods per domain.
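As a worked illustration of how the eight points add up (3 + 3 + 1 + 1), the scoring rules above can be sketched as follows. The class and field names are hypothetical and simply mirror the criteria in the text; this is not the extraction form used in the review.

```python
from dataclasses import dataclass


@dataclass
class StudyMethods:
    # Hypothetical field names mirroring the criteria described above.
    cost_difference_presented: bool
    cost_uncertainty_appropriate: bool  # bootstrap or gamma-based regression
    cost_ci_presented: bool
    icer_presented: bool
    icer_bootstrapped: bool
    ce_plane: bool
    ceac: bool
    icer_ci_presented: bool
    missing_fraction: float  # proportion of missing data, 0 to 1
    missing_method: str      # "mi", "ml", "complete_case" or anything else
    any_sensitivity_analysis: bool


def statistical_quality_score(s: StudyMethods) -> int:
    """Return the overall statistical quality score (0 to 8)."""
    # Domain 1: analysis of incremental costs (maximum 3).
    costs = (int(s.cost_difference_presented)
             + int(s.cost_uncertainty_appropriate)
             + int(s.cost_ci_presented))
    # Domain 2: analysis of cost-effectiveness (maximum 3).
    cea = (int(s.icer_presented)
           + int(s.icer_bootstrapped)
           + int(s.ce_plane and s.ceac and not s.icer_ci_presented))
    # Domain 3: MI or maximum likelihood, or complete-case analysis
    # when at most 5% of the data are missing (maximum 1).
    missing_ok = (s.missing_method in {"mi", "ml"}
                  or (s.missing_method == "complete_case"
                      and s.missing_fraction <= 0.05))
    # Domain 4: at least one sensitivity analysis (maximum 1).
    return costs + cea + int(missing_ok) + int(s.any_sensitivity_analysis)


# Hypothetical study: bootstrapped ICER with CE plane and CEAC, but it also
# reports a CI around the ICER and performs no sensitivity analysis.
example = StudyMethods(True, False, True, True, True, True, True, True,
                       0.04, "complete_case", False)
example_score = statistical_quality_score(example)  # 2 + 2 + 1 + 0 = 5
```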

2.6 Statistical Analysis

Descriptive statistics were used to describe the reporting and statistical quality of the included studies. To explore whether quality improved over time, linear regression analyses were performed separately for each medical discipline (i.e. obstetrics and gynaecology): one with the overall reporting quality score as the dependent variable and one with the overall quality score of the statistical approach as the dependent variable. The year of publication was used as the independent variable. Analyses were conducted using STATA 14®.
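The regression equation itself is not reproduced in this text. Under the description above (one quality score as outcome, publication year as the only predictor, fitted per discipline), a simple linear model of the following form would match. The notation is illustrative and is an assumption, not the authors' own specification.

```latex
\[
Q_{i} = \beta_{0} + \beta_{1}\,\mathrm{Year}_{i} + \varepsilon_{i}
\]
```

Here $Q_{i}$ is the quality score of study $i$ (reporting score, 0 to 21, or statistical score, 0 to 8), $\mathrm{Year}_{i}$ is its year of publication, $\beta_{1}$ is the average change in score per publication year, and $\varepsilon_{i}$ is the residual.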

3 Results

3.1 Literature Search and Study Selection

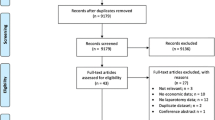

The electronic search identified 5482 potentially eligible studies. After removing 246 duplicates, 5236 studies were screened on title and abstract. The reviewers disagreed on the inclusion of 112 (2%) studies, resulting in an inter-rater agreement of 98%. Seventy-one studies were retrieved for full-text screening. In four cases, consensus was reached by asking a third reviewer. After the full-text screening, 44 studies [35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78] were included. One study [79] was identified through reference checking and was also included in the review (Fig. 1). This resulted in 45 studies included for review.

3.2 Study Characteristics

Study characteristics are reported in Table 2. Just over half of the studies were conducted in gynaecology (51%; n = 23). Most studies conducted a CEA (87%; n = 39), and five (11%) studies [46, 49, 52, 69, 77] conducted a CUA. One (2%) study [79] conducted both a CEA and a CUA. The hospital perspective was used in 28 (62%) studies [35,36,37,38, 40,41,42,43,44,45, 47,48,49,50, 53, 55, 59, 60, 62, 65,66,67,68, 71, 73,74,75, 78], followed by the healthcare perspective (22%; n = 10) [39, 46, 51, 52, 54, 58, 63, 69, 70, 77] and the societal perspective (11%; n = 5) [56, 57, 64, 76, 79]. In two (4%) studies [61, 72], the perspective was unclear. Twenty-eight (62%) studies [35, 38,39,40,41, 48, 50, 52, 54, 55, 57, 58, 61,62,63,64,65,66, 69,70,71,72,73,74,75,76,77, 79] were conducted alongside an RCT and 17 (38%) [36, 37, 42,43,44,45,46,47, 49, 51, 53, 56, 59, 60, 67, 68, 78] alongside an NRS. Sample sizes ranged from 35 [55] to 9996 participants [71] and the duration of follow-up ranged from 24 hours [66] to 36 months [47]. The majority of studies were conducted in Europe (66%; n = 27) [35, 37, 39,40,41, 43, 47, 50,51,52, 57,58,59, 61,62,63,64,65, 67,68,69,70, 74,75,76, 78, 79] and North America (29%; n = 12) [36, 38, 42, 44,45,46, 48, 49, 53, 56, 60, 66]. Two (4%) studies [54, 71] were conducted over multiple countries and one (2%) study [55] did not report the country where the study was conducted, but the authors’ affiliation was from the Republic of Ireland.

3.3 Reporting Quality of the Trial-Based Cost-Effectiveness Evaluations

Results of the reporting quality assessment are presented in Table 3. The overall reporting quality score (with a maximum of 21) ranged from 1 to 17 (mean 8.8; SD 4.8; median 8). Twenty-seven (60%) studies [35,36,37,38,39, 42,43,44,45,46,47, 49,50,51, 53, 55, 56, 58,59,60,61,62, 66,67,68, 72, 78] did not adhere to ≥50% of the items (i.e. having a score ≤10) of the CHEERS statement; one (2%) study [76] had a score of 17 (81% of the items were scored positively). Criteria that were often adequately described in the studies were the title (n = 40; 89%), the target population (n = 30; 67%) and the comparators (n = 33; 73%). Criteria that were least appropriately described were the abstract (n = 4; 9%), setting and location (n = 4; 9%) and choice of health outcomes (n = 6; 13%).

3.4 Quality of the Statistical Approach of Trial-Based Cost-Effectiveness Evaluations

Results of the quality assessment of the statistical approach are presented in Table 4. The overall quality score of the statistical approach per study ranged from 0 to 6 (see Table 4 and Appendix S3 in ESM for scores per sub-domain). Six (13%) studies [36, 37, 46, 56, 60, 78] did not use any of the recommended methods (i.e. overall quality score = 0). Furthermore, 32 (71%) studies [35,36,37,38,39,40, 42,43,44,45,46,47,48,49,50,51, 53, 55, 56, 58,59,60,61,62, 65,66,67,68, 70, 72, 76, 78] did not adhere to ≥ 50% of the statistical quality items (i.e. having a score ≤4). None of the studies (see Appendix S3, ESM) used the recommended statistical method to assess the cost differences between interventions. Furthermore, no study used more advanced methods for handling missing data (i.e. multiple imputation or maximum likelihood approaches). When there was <10% missing data, simpler techniques were used in 16 (36%) studies [39, 45, 48, 49, 54, 55, 57,58,59, 62, 63, 66, 68, 73, 75]. Of note, no study accounted for the clustered nature of the data by using methods that correct for clustering.

3.5 Improvement in Quality Over Time

Exploratory analyses showed that the reporting and statistical quality scores of studies in gynaecology did not significantly improve over time. However, the statistical quality and reporting quality scores in obstetric studies did significantly improve over time. Goodness-of-fit estimates showed that the amount of variance in quality scores explained by time was limited (Table 5).

4 Discussion

4.1 Main Findings

The majority of cost-effectiveness evaluations in obstetrics and gynaecology do not comply with current reporting guidelines and recommendations for statistical methods in trial-based cost-effectiveness evaluations. Furthermore, exploratory analyses indicated that there have not been significant improvements over time in reporting and statistical quality of trial-based cost-effectiveness evaluations in gynaecology. In obstetrics, the quality of reporting and analysis slightly improved over time.

4.2 Interpretation of the Findings

None of the included studies fully complied with the CHEERS statement’s reporting criteria [11] and the median reporting quality score of the included studies was relatively low (i.e. median 8, scale 0–21). This indicates that essential reporting components were missing, which can lead to faulty conclusions by researchers and healthcare decision makers. In particular, the failure to describe the setting in which the studies were performed (i.e. the place and setting in which the resource allocation decision needs to be made such as country, primary or secondary care and healthcare system) makes it difficult to assess the relevance or transferability of cost-effectiveness evaluation results [80].

None of the included studies fully complied with the statistical recommendations extracted from existing guidelines [12,13,14]. Various statistical pitfalls of the included studies are noteworthy. First, some studies presented an analysis based on median costs instead of mean costs, yet the median is a measure that is not easily interpretable or usable for healthcare decision makers [25, 81, 82]. Second, ICERs were reported by fewer than half of the studies. Moreover, since ICERs have well-known interpretation problems, reporting 95% confidence intervals around ICERs is not recommended [26, 28] and presentation of uncertainty using CE planes and/or CEACs is preferred. Nonetheless, only a small number of studies adequately presented the statistical uncertainty around the ICERs. Last, one third of the included studies relied on naïve and outdated statistical techniques for dealing with missing data (e.g. mean imputation, last observation carried forward) rather than using more advanced and valid methods such as multiple imputation and maximum likelihood approaches [83, 84]. These shortcomings in the quality of the included studies may result in either under- or overestimated cost-effectiveness outcomes.

4.3 Strengths and Limitations

A strength of this review is the systematic way in which studies were included and assessed, increasing the validity of the review. Also, to the best of our knowledge, this is the first review that combined the assessment of reporting quality with a comprehensive and in-depth evaluation of the statistical methods based on up-to-date national and international recommendations. However, several limitations need to be mentioned as well. First, in order to keep this review manageable, we focused on trial-based cost-effectiveness evaluations in obstetrics and gynaecology. Further research is needed to assess whether these results are representative of trial-based cost-effectiveness evaluations in other clinical areas. Second, reviewers may have been subjective in their judgements of quality, because they were not blinded for authors, authors’ affiliations and journals. However, the quality assessments were done using objective criteria [11,12,13,14] by two independent reviewers. Third, considering the large developments in the methods of trial-based cost-effectiveness evaluations, early studies may be at a disadvantage. However, reporting guidelines have been available since 1996 [18, 85] and have not changed substantially since. Nonetheless, lower statistical quality scores may be the result of a lack of concrete, up-to-date statistical recommendations [86, 87]. Last, some of the included studies lacked transparency in how they designed and conducted their trial-based cost-effectiveness evaluations (i.e. poor reporting quality). This made it difficult to extract some of the data necessary to appropriately evaluate the quality of included studies, which affected the overall quality score negatively.

4.4 Comparison with the Literature

Our study adds to existing reviews in several ways. First, the majority of the previous reviews only assessed reporting quality and only a small number of reviews [8,9,10], which were conducted over a decade ago, evaluated the statistical quality of the included studies. Since then, however, statistical methods have improved considerably. Moreover, compared with previously conducted reviews in obstetrics and gynaecology, we performed an in-depth evaluation of the statistical methods.

Regardless, results of this systematic review are in line with those of previously conducted reviews, which concluded that the reporting and quality of the statistical approach of trial-based cost-effectiveness evaluations are typically poor [4,5,6,7,8,9, 15, 16]. However, these earlier methodological reviews in the field of obstetrics and gynaecology concluded that their quality improved over the last decades. This is in contrast with our exploratory analyses, which only showed a significant quality improvement over time in obstetrics and not in gynaecology. This discrepancy may be explained by our strict assessment of quality based on the most up-to-date evidence. All in all, our review suggests that, even though various efforts have been made during the last decade to improve the reporting and statistical quality of trial-based cost-effectiveness evaluations, there is still substantial room for improvement in the area of obstetrics and gynaecology. Further research should indicate whether this applies to other medical disciplines as well.

4.5 Implications for Further Research and Practice

Future trial-based cost-effectiveness evaluations should increase their adherence to available guidelines and recommendations to improve their credibility. Up to now, however, no criteria list of statistical quality has been available. For this review, we developed a criteria list based on current evidence, but items were not weighted in terms of their opportunity cost; that is, the risk of taking the wrong decision. For example, failure to adequately handle missing data will affect decisions more than evaluating cost differences using a Mann–Whitney U test. Therefore, we urgently recommend the development of a criteria list to assess statistical quality of trial-based cost-effectiveness evaluations, including a weighting system, that can be used by researchers, policy makers, reviewers and journal editors. Also, none of the most frequently used statistical software packages (e.g. SPSS, STATA, SAS, R) includes easy-to-use scripts for performing state-of-the-art trial-based cost-effectiveness evaluations. As such, authors are encouraged to (publicly) share their ‘advanced’ trial-based cost-effectiveness evaluation scripts.

5 Conclusion

This study indicated that the reporting and statistical quality of trial-based cost-effectiveness evaluations in obstetrics and gynaecology is generally poor. Since this can result in biased results, incorrect conclusions, and inappropriate healthcare decisions, there is an urgent need for improvement in the methods of cost-effectiveness evaluations in this field.

Data Availability Statement

The authors provide the readers of this article with a data extraction sheet in which information about all included studies is summarized. This file is added as electronic supplementary material.

References

Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the economic evaluation of health care programmes. 4th ed. Oxford: Oxford University Press; 2015.

Glick H, Doshi JA, Sonnad SS, Polsky D. Economic evaluation in clinical trials. 2nd ed. Oxford: Oxford University Press; 2015.

Vijgen SM, Boers KE, Opmeer BC, Bijlenga D, Bekedam DJ, Bloemenkamp KW, et al. Economic analysis comparing induction of labour and expectant management for intrauterine growth restriction at term (DIGITAT trial). Eur J Obstet Gynecol Reprod Biol. 2013;170(2):358–63.

Neumann PJ, Fang CH, Cohen JT. 30 years of pharmaceutical cost-utility analyses: growth, diversity and methodological improvement. Pharmacoeconomics. 2009;27(10):861–72.

Neumann PJ, Greenberg D, Olchanski NV, Stone PW, Rosen AB. Growth and quality of the cost-utility literature, 1976–2001. Value Health. 2005;8(1):3–9.

Neumann PJ, Stone PW, Chapman RH, Sandberg EA, Bell CM. The quality of reporting in published cost-utility analyses, 1976–1997. Ann Intern Med. 2000;132(12):964–72.

Rosen AB, Greenberg D, Stone PW, Olchanski NV, Neumann PJ. Quality of abstracts of papers reporting original cost-effectiveness analyses. Med Decis Mak. 2005;25(4):424–8.

Doshi JA, Glick HA, Polsky D. Analyses of cost data in economic evaluations conducted alongside randomized controlled trials. Value Health. 2006;9(5):334–40.

Barber JA, Thompson SG. Analysis and interpretation of cost data in randomised controlled trials: review of published studies. BMJ. 1998;317(7167):1195–200.

Udvarhelyi IS, Colditz GA, Rai A, Epstein AM. Cost-effectiveness and cost-benefit analyses in the medical literature—are the methods being used correctly. Ann Intern Med. 1992;116(3):238–44.

Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, et al. Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement. Value Health. 2013;16(2):e1–5.

National Institute for Health and Care Excellence (NICE). Guide to the methods of technology appraisal London, United Kingdom: NICE UK; 2013 [updated 04/04/13; cited 2016 20/04/16]. https://www.nice.org.uk/article/pmg9/resources/non-guidance-guide-to-the-methods-of-technology-appraisal-2013-pdf.

Zorginstituut Nederland. Richtlijn voor het uitvoeren van economische evaluaties in de gezondheidszorg. Diemen: Zorginstituut Nederland; 2015.

Ramsey SD, Willke RJ, Glick H, Reed SD, Augustovski F, Jonsson B, et al. Cost-effectiveness analysis alongside clinical trials II—an ISPOR Good Research Practices Task Force report. Value Health. 2015;18(2):161–72.

Subak LL, Caughey AB, Washington AE. Cost-effectiveness analyses in obstetrics and gynecology. Evaluation of methodologic quality and trends. J Reprod Med. 2002;47(8):631–9.

Vijgen SM, Opmeer BC, Mol BW. The methodological quality of economic evaluation studies in obstetrics and gynecology: a systematic review. Am J Perinatol. 2013;30(4):253–60.

Moher D, Liberati A, Tetzlaff J, Altman DG, Group Prisma. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097.

Drummond MF, Jefferson TO. Guidelines for authors and peer reviewers of economic submissions to the BMJ. Br Med J. 1996;313(7052):275–83.

Pitt C, Goodman C, Hanson K. Economic evaluation in global perspective: a bibliometric analysis of the recent literature. Health Econ. 2016;25:9–28.

Pitt C, Vassall A, Teerawattananon Y, Griffiths UK, Guinness L, Walker D, et al. Foreword: health economic evaluations in low- and middle-income countries: methodological issues and challenges for priority setting. Health Econ. 2016;25(Suppl 1):1–5.

Thompson SG, Barber JA. How should cost data in pragmatic randomised trials be analysed? BMJ. 2000;320(7243):1197–200.

Manning WG, Basu A, Mullahy J. Generalized modeling approaches to risk adjustment of skewed outcomes data. J Health Econ. 2005;24(3):465–88.

Barber J, Thompson S. Multiple regression of cost data: use of generalised linear models. J Health Serv Res Policy. 2004;9(4):197–204.

Briggs A. Economic evaluation and clinical trials: size matters. BMJ. 2000;321(7273):1362–3.

van Dongen JM, van Wier MF, Tompa E, Bongers PM, van der Beek AJ, van Tulder MW, et al. Trial-based economic evaluations in occupational health: principles, methods, and recommendations. J Occup Environ Med. 2014;56(6):563–72.

Black WC. The CE plane: a graphic representation of cost-effectiveness. Med Decis Mak. 1990;10(3):212–4.

Fenwick E, O’Brien BJ, Briggs A. Cost-effectiveness acceptability curves—facts, fallacies and frequently asked questions. Health Econ. 2004;13(5):405–15.

Briggs AH, O’Brien BJ, Blackhouse G. Thinking outside the box: recent advances in the analysis and presentation of uncertainty in cost-effectiveness studies. Annu Rev Public Health. 2002;23:377–401.

Oostenbrink JB, Al MJ. The analysis of incomplete cost data due to dropout. Health Econ. 2005;14(8):763–76.

Sterne JA, White IR, Carlin JB, Spratt M, Royston P, Kenward MG, et al. Multiple imputation for missing data in epidemiological and clinical research: potential and pitfalls. BMJ. 2009;338:b2393.

Rubin DB. Multiple imputation for nonresponse in surveys. New York: Wiley; 1987.

Eekhout I, de Vet HC, Twisk JW, Brand JP, de Boer MR, Heymans MW. Missing data in a multi-item instrument were best handled by multiple imputation at the item score level. J Clin Epidemiol. 2014;67(3):335–42.

Briggs A, Sculpher M, Buxton M. Uncertainty in the economic evaluation of health care technologies: the role of sensitivity analysis. Health Econ. 1994;3(2):95–104.

Sculpher M. Subgroups and heterogeneity in cost-effectiveness analysis. Pharmacoeconomics. 2008;26(9):799–806.

Bernitz S, Aas E, Oian P. Economic evaluation of birth care in low-risk women. A comparison between a midwife-led birth unit and a standard obstetric unit within the same hospital in Norway. A randomised controlled trial. Midwifery. 2012;28(5):591–9.

Bienstock JL, Ural SH, Blakemore K, Pressman EK. University hospital-based prenatal care decreases the rate of preterm delivery and costs, when compared to managed care. J Matern-Fetal Med. 2001;10(2):127–30.

Bogliolo S, Ferrero S, Cassani C, Musacchi V, Zanellini F, Dominoni M, et al. Single-site versus multiport robotic hysterectomy in benign gynecologic diseases: a retrospective evaluation of surgical outcomes and cost analysis. J Minim Invas Gynecol. 2016;23(4):603–9.

Brooten D, Youngblut JM, Brown L, Finkler SA, Neff DF, Madigan E. A randomized trial of nurse specialist home care for women with high-risk pregnancies: outcomes and costs. Am J Manag Care. 2001;7(8):793–803.

Dawes HA, Docherty T, Traynor I, Gilmore DH, Jardine AG, Knill-Jones R. Specialist nurse supported discharge in gynaecology: a randomised comparison and economic evaluation. Eur J Obstet Gynecol Reprod Biol. 2007;130(2):262–70.

Eddama O, Petrou S, Regier D, Norrie J, MacLennan G, Mackenzie F, et al. Study of progesterone for the prevention of preterm birth in twins (STOPPIT): findings from a trial-based cost-effectiveness analysis. Int J Technol Assess Health Care. 2010;26(2):141–8.

Eddama O, Petrou S, Schroeder L, Bollapragada SS, Mackenzie F, Norrie J, et al. The cost-effectiveness of outpatient (at home) cervical ripening with isosorbide mononitrate prior to induction of labour. BJOG Int J Obstet Gynaecol. 2009;116(9):1196–203.

El Hachem L, Andikyan V, Mathews S, Friedman K, Poeran J, Shieh K, et al. Robotic single-site and conventional laparoscopic surgery in gynecology: clinical outcomes and cost analysis of a matched case-control study. J Minim Invas Gynecol. 2016;23(5):760–8.

El-Sayed MM, Mohamed SA, Jones MH. Cost-effectiveness of ultrasound use by on-call registrars in an acute gynaecology setting. J Obstet Gynaecol. 2011;31(8):743–5.

Eltabbakh GH, Shamonki MI, Moody JM, Garafano LL. Hysterectomy for obese women with endometrial cancer: laparoscopy or laparotomy? Gynecol Oncol. 2000;78(3 Part 1):329–35.

Eltabbakh GH, Shamonki MI, Moody JM, Garafano LL. Laparoscopy as the primary modality for the treatment of women with endometrial carcinoma. Cancer. 2001;91(2):378–87.

Evans KD. A cost utility analysis of sonohysterography compared with hysteroscopic evaluation for dysfunctional uterine bleeding. J Diagn Med Sonogr. 2000;16(2):68–72.

Fernandez H, Kobelt G, Gervaise A. Economic evaluation of three surgical interventions for menorrhagia. Hum Reprod. 2003;18(3):583–7.

Guo Y, Longo CJ, Xie R, Wen SW, Walker MC, Smith GN. Cost-effectiveness of transdermal nitroglycerin use for preterm labor. Value Health. 2011;14(2):240–6.

Horowitz NS, Gibb RK, Menegakis NE, Mutch DG, Rader JS, Herzog TJ. Utility and cost-effectiveness of preoperative autologous blood donation in gynecologic and gynecologic oncology patients. Obstet Gynecol. 2002;99(5 Pt 1):771–6.

Jack SA, Cooper KG, Seymour J, Graham W, Fitzmaurice A, Perez J. A randomised controlled trial of microwave endometrial ablation without endometrial preparation in the outpatient setting: patient acceptability, treatment outcome and costs. BJOG Int J Obstet Gynaecol. 2005;112(8):1109–16.

Jakovljevic M, Varjacic M, Jankovic SM. Cost-effectiveness of ritodrine and fenoterol for treatment of preterm labor in a low-middle-income country: a case study. Value Health. 2008;11(2):149–53.

Kilonzo MM, Sambrook AM, Cook JA, Campbell MK, Cooper KG. A cost-utility analysis of microwave endometrial ablation versus thermal balloon endometrial ablation. Value Health. 2010;13(5):528–34.

Kovac SR. Decision-directed hysterectomy: a possible approach to improve medical and economic outcomes. Int J Gynecol Obstet. 2000;71(2):159–69.

Lain SJ, Roberts CL, Bond DM, Smith J, Morris JM. An economic evaluation of planned immediate versus delayed birth for preterm prelabour rupture of membranes: findings from the PPROMT randomised controlled trial. BJOG: Int J Obstet Gynaecol. 2017;124(4):623–30.

Lalchandani S, Baxter A, Phillips K. Is helium thermal coagulator therapy for the treatment of women with minimal to moderate endometriosis cost-effective: a prospective randomised controlled trial. Gynecol Surg. 2005;2(4):255–8.

Lenihan JP, Kovanda C, Cammarano C. Comparison of laparoscopic-assisted vaginal hysterectomy with traditional hysterectomy for cost-effectiveness to employers. Am J Obstet Gynecol. 2004;190(6):1714–20.

Liem SM, van Baaren GJ, Delemarre FM, Evers IM, Kleiverda G, van Loon AJ, et al. Economic analysis of use of pessary to prevent preterm birth in women with multiple pregnancy (ProTWIN trial). Ultrasound Obstet Gynecol. 2014;44(3):338–45.

Lumsden MA, Twaddle S, Hawthorn R, Traynor I, Gilmore D, Davis J, et al. A randomised comparison and economic evaluation of laparoscopic-assisted hysterectomy and abdominal hysterectomy. Br J Obstet Gynaecol. 2000;107(11):1386–91.

Marino P, Houvenaeghel G, Narducci F, Boyer-Chammard A, Ferron G, Uzan C, et al. Cost-effectiveness of conventional vs robotic-assisted laparoscopy in gynecologic oncologic indications. Int J Gynecol Cancer: Off J Int Gynecol Cancer Soc. 2015;25(6):1102–8.

Morrison JC, Chauhan SP, Carroll CS Sr, Bofill JA, Magann EF. Continuous subcutaneous terbutaline administration prolongs pregnancy after recurrent preterm labor. Am J Obstet Gynecol. 2003;188(6):1460–5 (discussion 5–7).

Niinimaki M, Karinen P, Hartikainen AL, Pouta A. Treating miscarriages: a randomised study of cost-effectiveness in medical or surgical choice. BJOG : Int J Obstet Gynaecol. 2009;116(7):984–90.

Palomba S, Russo T, Falbo A, Manguso F, D’Alessandro P, Mattei A, et al. Laparoscopic uterine nerve ablation versus vaginal uterosacral ligament resection in postmenopausal women with intractable midline chronic pelvic pain: a randomized study. Eur J Obstet Gynecol Reprod Biol. 2006;129(1):84–91.

Petrou S, Taher SE, Abangma G, Eddama O, Bennett P. Cost-effectiveness analysis of prostaglandin E2 gel for the induction of labour at term. BJOG Int J Obstet Gynaecol. 2011;118(6):726–34.

Petrou S, Trinder J, Brocklehurst P, Smith L. Economic evaluation of alternative management methods of first-trimester miscarriage based on results from the MIST trial. BJOG Int J Obstet Gynaecol. 2006;113:879–89.

Prick BW, Duvekot JJ, van der Moer PE, van Gemund N, van der Salm PC, Jansen AJ, et al. Cost-effectiveness of red blood cell transfusion vs. non-intervention in women with acute anaemia after postpartum haemorrhage. Vox Sanguinis. 2014;107(4):381–8.

Ramsey PS, Harris DY, Ogburn PL Jr, Heise RH, Magtibay PM, Ramin KD. Comparative efficacy and cost of the prostaglandin analogs dinoprostone and misoprostol as labor preinduction agents. Am J Obstet Gynecol. 2003;188(2):560–5.

Relph S, Bell A, Sivashanmugarajan V, Munro K, Chigwidden K, Lloyd S, et al. Cost effectiveness of enhanced recovery after surgery programme for vaginal hysterectomy: a comparison of pre and post-implementation expenditures. Int J Health Plan Manag. 2014;29(4):399–406.

Sarlos D, Kots L, Stevanovic N, Schaer G. Robotic hysterectomy versus conventional laparoscopic hysterectomy: outcome and cost analyses of a matched case-control study. Eur J Obstet Gynecol Reprod Biol. 2010;150(1):92–6.

Sculpher M, Manca A, Abbott J, Fountain J, Mason S, Garry R. Cost effectiveness analysis of laparoscopic hysterectomy compared with standard hysterectomy: results from a randomised trial. BMJ. 2004;328:134–7.

Sculpher M, Thompson E, Brown J, Garry R. A cost effectiveness analysis of goserelin compared with danazol as endometrial thinning agents. Br J Obstet Gynaecol. 2000;107(3):340–6.

Simon J, Gray A, Duley L. Cost-effectiveness of prophylactic magnesium sulphate for 9996 women with pre-eclampsia from 33 countries: economic evaluation of the Magpie Trial. BJOG: Int J Obstet Gynaecol. 2006;113(2):144–51.

Sjostrom S, Kopp Kallner H, Simeonova E, Madestam A, Gemzell-Danielsson K. Medical abortion provided by nurse-midwives or physicians in a high resource setting: a cost-effectiveness analysis. PLoS One. 2016;11(6):e0158645.

ten Eikelder MLG, van Baaren G-J, Rengerink KO, Jozwiak M, de Leeuw JW, Kleiverda G, et al. Comparing induction of labour with oral misoprostol or Foley catheter at term: cost effectiveness analysis of a randomised controlled multi-centre non-inferiority trial. BJOG: Int J Obstet Gy. 2017. doi:10.1111/1471-0528.14706.

van Baaren G-J, Broekhuijsen K, van Pampus MG, Ganzevoort W, Sikkema JM, Woiski MD, et al. An economic analysis of immediate delivery and expectant monitoring in women with hypertensive disorders of pregnancy, between 34 and 37 weeks of gestation (HYPITAT-II). BJOG: Int J Obstet Gynaecol. 2017;124:453–61.

van Baaren GJ, Jozwiak M, Opmeer BC, Oude Rengerink K, Benthem M, Dijksterhuis MG, et al. Cost-effectiveness of induction of labour at term with a Foley catheter compared to vaginal prostaglandin E(2) gel (PROBAAT trial). BJOG: Int J Obstet Gynaecol. 2013;120(8):987–95.

Vijgen SM, Koopmans CM, Opmeer BC, Groen H, Bijlenga D, Aarnoudse JG, et al. An economic analysis of induction of labour and expectant monitoring in women with gestational hypertension or pre-eclampsia at term (HYPITAT trial). BJOG: Int J Obstet Gynaecol. 2010;117(13):1577–85.

Walker KF, Dritsaki M, Bugg G, Macpherson M, McCormick C, Grace N, et al. Labour induction near term for women aged 35 or over: an economic evaluation. BJOG Int J Obstet Gynaecol. 2017;124(6):929–34.

Yoong W, Fadel MG, Walker S, Williams S, Subba B. Retrospective cohort study to assess outcomes, cost-effectiveness, and patient satisfaction in primary vaginal ovarian cystectomy versus the laparoscopic approach. J Minim Invas Gynecol. 2016;23(2):252–6.

Bijen CB, Vermeulen KM, Mourits MJ, Arts HJ, Ter Brugge HG, van der Sijde R, et al. Cost effectiveness of laparoscopy versus laparotomy in early stage endometrial cancer: a randomised trial. Gynecol Oncol. 2011;121(1):76–82.

van Dongen JM. Economic evaluations of worksite health promotion programs [Doctoral dissertation]. Amsterdam: Vrije Universiteit Amsterdam; 2014.

Barber JA, Thompson SG. Analysis of cost data in randomized trials: an application of the non-parametric bootstrap. Stat Med. 2000;19(23):3219–36.

Briggs AH, Gray AM. Handling uncertainty when performing economic evaluation of healthcare interventions. Health Technol Assess. 1999;3(2):1–134.

Burton A, Billingham LJ, Bryan S. Cost-effectiveness in clinical trials: using multiple imputation to deal with incomplete cost data. Clin Trials. 2007;4(2):154–61.

MacNeil Vroomen J, Eekhout I, Dijkgraaf MG, van Hout H, de Rooij SE, Heymans MW, et al. Multiple imputation strategies for zero-inflated cost data in economic evaluations: which method works best? Eur J Health Econ. 2016;17(8):939–50.

Evers S, Goossens M, de Vet H, van Tulder M, Ament A. Criteria list for assessment of methodological quality of economic evaluations: Consensus on Health Economic Criteria. Int J Technol Assess Health Care. 2005;21(2):240–5.

Norwegian Medicines Agency. Guidelines on how to conduct Pharmacoeconomic Analyses. Oslo, Norway: Norwegian Medicines Agency; 2012. p. 77.

Collège des Economistes de la Santé. French Guidelines for the Economic Evaluation of Healthcare Technologies. Paris, France: Collège des Economistes de la Santé; 2004. p. 90.

Lin DY, Feuer EJ, Etzioni R, Wax Y. Estimating Medical Costs from Incomplete Follow-Up Data. Biometrics. 1997;53(2):419.

Author information

Contributions

ME: study rationale and design, literature selection, data extraction, interpretation and reflection, writing the manuscript. JvD: study rationale and design, literature selection, data extraction, interpretation and reflection, reviewing the manuscript. JH: interpretation and reflection, reviewing the manuscript. MvT: study rationale and design, interpretation and reflection, reviewing the manuscript. JEB: study rationale and design, literature selection, interpretation and reflection, reviewing the manuscript.

Ethics declarations

Disclosure of potential conflicts of interest

ME reports no conflict of interest. JMvD reports no conflict of interest. JEB reports no conflict of interest. JAF has received grants from ZonMw, NOW, Samsung, Celenova and Pelgrem, outside the submitted work. MWT’s institution received research grants from several government research agencies and professional organizations and his travel expenses were covered by organizing professional organizations. He received honoraria for reviewing grant proposals from Swedish and Canadian governmental grant agencies. He has not received any honoraria or travel expenses from the industry.

Ethics approval and informed consent

This was a systematic review of previously published data and therefore does not require ethical approval and/or informed consent.

Funding

None.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

El Alili, M., van Dongen, J.M., Huirne, J.A.F. et al. Reporting and Analysis of Trial-Based Cost-Effectiveness Evaluations in Obstetrics and Gynaecology. PharmacoEconomics 35, 1007–1033 (2017). https://doi.org/10.1007/s40273-017-0531-3

DOI: https://doi.org/10.1007/s40273-017-0531-3