Abstract

Objective

Accurate representation of study findings is crucial to preserve public trust. The language used to describe results could affect perceptions of the efficacy or safety of interventions. We sought to compare the adjectives used in clinical trial reports of industry-authored and non-industry-authored research.

Methods

We included studies in PubMed that were randomized trials and had an abstract. Studies were classified as “non-industry-authored” when all authors had academic or governmental affiliations, or as “industry-authored” when any of the authors had industry affiliations. Abstracts were analyzed using a part-of-speech tagger to identify adjectives. To reduce the risk of false positives, the analysis was restricted to adjectives considered relevant to “coloring” (influencing interpretation) of trial results. Differences between groups were determined using exact tests, stratifying by journal.

Results

A total of 306,007 publications met the inclusion criteria. We were able to classify 16,789 abstracts; 9,085 were industry-authored research, and 7,704 were non-industry-authored research. We found a differential use of adjectives between industry-authored and non-industry-authored reports. Adjectives such as “well tolerated” and “meaningful” were more commonly used in the title or conclusion of the abstract by industry authors, while adjectives such as “feasible” were more commonly used by non-industry authors.

Conclusions

There are differences in the adjectives used when study findings are described in industry-authored reports compared with non-industry-authored reports. Authors should avoid overusing adjectives that could be inaccurate or result in misperceptions. Editors and peer reviewers should be attentive to the use of adjectives and assess whether the usage is context appropriate.

The language used to describe results could affect perceptions of the efficacy or safety of interventions.

There are differences in the adjectives used when study findings are described in industry-authored reports compared with non-industry-authored reports.

Authors should avoid overusing adjectives that could be inaccurate or result in misperceptions.

Editors and peer reviewers should be attentive to the use of adjectives and assess whether the usage is context appropriate.

1 Background

Accurate understanding of the efficacy and safety of health interventions is crucial for public health. Major impediments to such understanding include selective reporting of trial results and inadequate reporting of trial results. Publication of only studies that show benefit, known as publication bias, leads to overestimation of the efficacy of interventions. Inadequate reporting of trial results limits the ability of the reader to assess the validity of trial findings [1, 2]. The CONSORT initiative [3] has led to improvements in the quality of reporting of trial results [4, 5]. In addition, mandatory registration of clinical trials and mandatory publication of trial results are strategies implemented to diminish the impact of publication bias [6].

How trial results are described in publications may influence the reader’s perception of the efficacy and safety of interventions. For example, an intervention can be portrayed as beneficial in the publication despite having failed to differentiate statistically from placebo. In this type of bias, called spin bias, the reader is distracted from the non-significant results [7]. The language used to describe trial results could also affect perceptions of the efficacy or safety of health interventions as well as the quality of the study. We studied the vocabulary used to report trial results and compared it between two authorship groups (industry versus non-industry).

2 Objective

The objective of this study was to compare the adjectives used to report results of clinical trials between industry authors and non-industry (academic and government) authors. We focused on adjectives because their use adds “color” (potentially biasing interpretation) to the description of study findings.

3 Methods

3.1 Inclusion Criteria

We included studies indexed in PubMed that were randomized, controlled trials; assessed humans; had an abstract; and were published in English. The search was conducted on October 7, 2013, without any time limit (all articles present in PubMed until that time). The PubMed query used to identify the studies was “English[lang] AND Randomized Controlled Trial[ptyp] AND humans[MeSH Terms] AND has abstract[text]”.

3.2 Classification of Abstracts

Studies were classified as industry-authored or non-industry-authored (academia and government), depending on the affiliation of the authors, using an automated algorithm. To determine the affiliation of an author, the affiliation field in PubMed was used to scan for word patterns indicating an industry (e.g., “janssen”, “johnson & johnson”), academic affiliation (e.g., “university”, “school”) or government (e.g., “centers? for disease control”, “u\\.?s\\.? agency”). Because the PubMed affiliation field contains the affiliation of only one of the authors and therefore could not be used as conclusive evidence in papers written by multiple authors, we supplemented the search for the authors’ affiliation, using PubMed Central®. PubMed Central is a free full-text archive of biomedical journals and therefore lists the affiliations of each one of the authors of a manuscript. Appendix 1 contains the complete list of patterns used for the abstract classification.

For the abstracts not included in PubMed Central, we developed an algorithm to predict the affiliation of the authors. We assumed that if an author had a particular affiliation in one manuscript, that author would also have that affiliation in any other manuscript written by that author in the same year. Because there are no unique identifiers for authors in PubMed, we used an author name disambiguation algorithm similar to Authority [8], which models the probability that two articles sharing the same author name were written by the same individual. The probability was estimated using a random forest [9] classifier using these features as input: length of author name, author name frequency in Medline, similarity in MeSH terms, words in the title or words in the abstract, whether the paper was in the same journal, overlap of other authors, and time between publication in years. The classifier was trained on a set where positive cases were identified using author e-mail addresses (only available for very few authors), and negative control cases were identified based on mismatch in author first name. The probability was subsequently used in a greedy cluster algorithm to group all papers by an author.

An abstract was classified as non-industry-authored when all authors of the publication had academic or government affiliations. An abstract was classified as industry-authored when any of the authors in the publication had an industry affiliation. Publications in which the algorithm found none of the patterns to classify an author, or found an author with affiliations to both industry and academia or government, were excluded from the analysis.
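The classification rule above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the two pattern sets are a small subset of Appendix 1, the function names `classify_author` and `classify_abstract` are hypothetical, and the order of the checks (exclusion before the industry rule) is an assumption, since the text does not say how a paper with one industry author and one unclassifiable author was handled.

```python
import re

# Small illustrative subset of the Appendix 1 patterns (not the full list).
INDUSTRY = re.compile(
    r"janssen|pfizer|novartis|johnson (&|and) johnson|pharmaceuticals", re.I
)
ACADEMIC_GOV = re.compile(
    r"university|hospital|school|centers? for disease control|u\.?s\.? agency", re.I
)


def classify_author(affiliation):
    """Label one affiliation string as industry, non-industry, both, or unknown."""
    is_industry = bool(INDUSTRY.search(affiliation))
    is_academic_gov = bool(ACADEMIC_GOV.search(affiliation))
    if is_industry and is_academic_gov:
        return "both"
    if is_industry:
        return "industry"
    if is_academic_gov:
        return "non-industry"
    return "unknown"


def classify_abstract(affiliations):
    """Return 'industry-authored', 'non-industry-authored', or None (excluded).

    Assumption: any unclassifiable or dual-affiliation author excludes the paper.
    """
    labels = [classify_author(a) for a in affiliations]
    if not labels or "unknown" in labels or "both" in labels:
        return None
    if "industry" in labels:
        return "industry-authored"
    return "non-industry-authored"
```

Under these assumptions, a paper with one Janssen author and one university author is labeled industry-authored, while a paper with any author whose affiliation matches no pattern is dropped.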

To assess the accuracy of the algorithm that predicted author affiliation, we selected a random sample of 250 abstracts and manually checked the affiliation of each one of the authors in the full manuscript and compared these results with the algorithm’s classification.

3.3 Adjective Selection

To compare the use of language between industry and non-industry authors, we downloaded the Medline database. The abstracts that met the inclusion criteria were run through the part-of-speech tagger of OpenNLP [10], which allowed us to classify the adjectives. OpenNLP uses the Penn Treebank tagset, and we considered all tokens with tags JJ (adjective), JJR (adjective, comparative) and JJS (adjective, superlative) [11]. OpenNLP is an open-source machine learning-based toolkit for the processing of natural language text, made available by the Apache Foundation. It has an overall accuracy of around 97 % [12]. We focused on abstracts because more people read the abstract than the whole article, and because only abstracts are available in Medline.
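As a rough illustration of the tag filter described above, the following Python sketch keeps only tokens tagged JJ, JJR, or JJS. It assumes the abstract has already been tagged (for example by OpenNLP) and is supplied as a list of (token, tag) pairs; the function name and the input format are hypothetical, and no tagger is called here.

```python
from collections import Counter

# Penn Treebank adjective tags named in the text.
ADJECTIVE_TAGS = {"JJ", "JJR", "JJS"}


def extract_adjectives(tagged_tokens):
    """Return lower-cased tokens whose tag is an adjective tag.

    `tagged_tokens` is assumed to be an iterable of (token, tag) pairs
    produced by some part-of-speech tagger.
    """
    return [token.lower() for token, tag in tagged_tokens if tag in ADJECTIVE_TAGS]


def adjective_counts(tagged_tokens):
    """Count each adjective, counting repeated uses separately."""
    return Counter(extract_adjectives(tagged_tokens))
```

For instance, for the pairs `("safe", "JJ")` and `("better", "JJR")` mixed with nouns and verbs, only those two tokens are returned.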

After extracting all adjectives from the abstracts, we selected a set of adjectives we considered relevant to coloring the results of a trial. This selection was performed independently by two authors (MSC and MS), after which discrepancies were resolved in conference. All subsequent analyses were limited to this set to reduce the risk of false positives. Examples of excluded adjectives are “viscous” and “intellectual”. The list of adjectives included and excluded is shown in Appendix 2.

3.4 Location in the Abstract

To locate where in the abstract the differences in adjective use occurred, we looked separately at the title and conclusion. The title is clearly identified in PubMed records. For unstructured abstracts, the conclusion was considered to be in the last two sentences of the abstract. Sentences were detected using the OpenNLP toolkit [10].
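The last-two-sentences rule for unstructured abstracts can be sketched as follows. The study used the OpenNLP sentence detector; the regular-expression splitter below is a naive stand-in chosen only to keep the example self-contained, and it will mis-split text containing abbreviations such as "vs." followed by a capitalized word. The function name is hypothetical.

```python
import re

# Naive boundary: sentence-ending punctuation, whitespace, then a capital letter.
_SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+(?=[A-Z])")


def conclusion_sentences(abstract, n=2):
    """Return the last `n` sentences of an unstructured abstract."""
    parts = _SENTENCE_BOUNDARY.split(abstract.strip())
    sentences = [s.strip() for s in parts if s.strip()]
    return sentences[-n:]
```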

3.5 Analysis

To determine whether an adjective was used more often by industry or non-industry authors, we used an exact test for contingency tables [13], stratifying by journal to adjust for any differences in language in the different journals. This test is similar to the well-known Mantel–Haenszel test in that it tests for an overall difference between groups through differences within strata, but it uses an exact method, making it more robust for small numbers within each stratum. We further restricted the adjectives to those that were present in at least 100 papers in our final data set. Because of the large number of tests, we corrected for multiple testing, using Holm’s technique [14].
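Holm's step-down correction [14] is simple enough to sketch directly. The Python function below is an illustrative implementation, not the authors' code: it returns Holm-adjusted p-values in the original order, so an adjective would be declared significant when its adjusted value falls below the chosen alpha. The function name is hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p-values, in the same order as the input."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is multiplied by m - k + 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

With three tests and raw p-values 0.01, 0.04, and 0.03, the adjusted values are 0.03, 0.06, and 0.06: the smallest is multiplied by 3, the next by 2, and the largest inherits 0.06 through the monotonicity step.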

We also calculated a relative estimate of use for each adjective. Values >1 mean that the adjective is used more often by industry. Values <1 mean that the adjective is used more often by academia and government. A value of exactly 1.0 would indicate equal use by both groups of authors. We report 95 % confidence intervals (CIs), but these intervals are not adjusted for multiple testing.
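To make the interpretation of a journal-stratified relative measure concrete, the sketch below computes a Mantel–Haenszel pooled odds ratio. This is a swapped-in, simpler technique used only for illustration: the text does not state that the relative estimate is an odds ratio, and the study relied on an exact method [13] rather than this formula. The function name and the layout of each stratum tuple are assumptions.

```python
def mantel_haenszel_odds_ratio(strata):
    """Pooled odds ratio across strata (here, one stratum per journal).

    Each stratum is a tuple (a, b, c, d), assumed to mean:
      a = industry abstracts using the adjective
      b = industry abstracts not using it
      c = non-industry abstracts using the adjective
      d = non-industry abstracts not using it
    """
    numerator = 0.0
    denominator = 0.0
    for a, b, c, d in strata:
        n = a + b + c + d
        if n == 0:
            continue  # an empty journal contributes nothing
        numerator += a * d / n
        denominator += b * c / n
    if denominator == 0:
        raise ValueError("pooled odds ratio is undefined for these strata")
    return numerator / denominator
```

A result above 1 corresponds to more frequent use by industry within journals, and a result below 1 to more frequent use by non-industry authors, matching the reading given in the text.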

We further computed the average number of “colored” adjectives used in the title and abstract, where any adjective that was used multiple times in an abstract was counted multiple times. For this analysis, we used all adjectives we considered relevant, including those that appeared in fewer than 100 articles.

3.6 Source of Funding

Using authors’ affiliation is one way to classify studies as either industry or non-industry. Funding of the study is another way. PubMed identifies financial support of the research, but it would only allow for a US-government and non-US-government funding classification. Sources of financial support are often listed in the full manuscript. For the subset of abstracts that had the full-text articles in PubMed Central, we identified the source of funding and then compared that classification with our affiliation-based classification to provide an estimate of the degree of potential discordance. For example, a trial conducted by an academic institute may be authored by academicians only but funded by a pharmaceutical company. Under the affiliation classification, the research would be considered as non-industry, while under the sponsorship classification, it would be considered as industry. Because the information was only available for a limited number of abstracts, we could not conduct sensitivity analyses, but we are reporting the findings.

4 Results

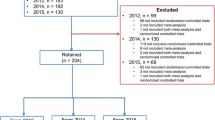

A total of 306,007 publications met the inclusion criteria. We were able to classify 16,789 abstracts; 9,085 were classified as industry, and 7,704 were classified as non-industry. The algorithm correctly identified 235 of the 250 manuscripts sampled for accuracy (15 were incorrectly assessed as non-industry-authored), indicating that the accuracy of the classifying algorithm was 94 % with a Kappa value of 0.88 (Table 1).
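The accuracy and kappa figures follow from a 2×2 agreement table between the manual check and the algorithm. The Python sketch below shows the arithmetic; the cell counts in the usage note are hypothetical, chosen only to be consistent with the reported 235 of 250 correct and 15 industry papers labeled as non-industry. The actual counts are in Table 1, which is not reproduced here.

```python
def accuracy_and_kappa(tp, fn, fp, tn):
    """Observed agreement and Cohen's kappa for a 2x2 table.

    tp: industry by manual check and by algorithm
    fn: industry by manual check, non-industry by algorithm
    fp: non-industry by manual check, industry by algorithm
    tn: non-industry by both
    """
    n = tp + fn + fp + tn
    observed = (tp + tn) / n
    # Agreement expected by chance, from the row and column totals.
    expected = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / n ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa
```

With the hypothetical split `accuracy_and_kappa(110, 15, 0, 125)`, observed agreement is 235/250 = 0.94, chance agreement is 0.5, and kappa is (0.94 − 0.5)/(1 − 0.5) = 0.88.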

The abstracts were published from 1981 to 2013, and 92.5 % were published in 2000 or after. The abstracts were published in 1,788 journals, and 50 % were published in 98 journals. Appendix 3 provides the list of journals, with the number of abstracts by journal.

The 16,789 abstracts had a total of 4,690 adjectives: 298 were considered relevant by both of the authors (see Appendix 4), and 72 adjectives were present in at least 100 papers in our final data set and were analyzed (Table 2). With few exceptions, these were positive adjectives.

The use of adjectives differed between industry and non-industry (Table 3). Ten adjectives located in the title or conclusion, and 15 adjectives located anywhere in the abstract, had relative use values >1, indicating preferential use by industry. Most notably, adjectives such as “well tolerated” and “meaningful” were more commonly used by industry-authored reports in the title or conclusion of the abstracts [relative use 5.20 (CI 2.73–10.03) and 3.08 (CI 1.73–5.44), respectively], whereas adjectives such as “feasible” were more commonly used in abstracts classified as non-industry-authored [relative use 0.34 (CI 0.18–0.6)]. Adjectives such as “successful” and “usual” were also more commonly used by non-industry, when considering the abstract overall [relative use 0.46 (CI 0.31–0.68) and 0.40 (CI 0.30–0.53), respectively] (Table 3).

Examples of the contexts in which the adjectives were used in the title or conclusion of the abstract are presented in Table 4.

On average, there were 2.6 “colored” adjectives in each abstract, and this number was the same for both industry-authored and non-industry-authored research.

4.1 Source of Funding

When we estimated the degree of potential discordance between abstracts classified by author affiliation or by source of funding, we found that of the 16,789 abstracts that we could classify as industry-authored or non-industry-authored research, only 189 (1.1 %) had the full text available in PubMed Central and disclosed either partial or total funding by industry; 16 % of these studies were classified as being from non-industry when looking at authors’ affiliations.

5 Discussion

There are differences in the adjectives used when study findings are described in industry-authored compared with non-industry-authored reports. Certain adjectives are five times more commonly used by industry, although, on average, both groups of authors use the same number of “colored” adjectives.

The differences in the adjectives used that were noted in the present study support anecdotal evidence about the way results of clinical trials are reported by industry. The Medical Publishing Insights and Practices (MPIP) initiative in 2012 recommended avoiding broad statements such as “generally safe and well tolerated” [15] when reporting trial results, which is precisely the type of language we found to be more commonly used by industry. The MPIP initiative was founded by members of the pharmaceutical industry and the International Society for Medical Publication Professionals to elevate trust, transparency, and integrity in publishing industry-sponsored studies. “Well tolerated” was the adjective with the largest relative use in industry-authored manuscripts compared with academic or government-authored manuscripts. Describing an intervention in this way may be accurate in certain circumstances, considering the nature of the trials conducted by industry, but the description might not be generalizable to the broader population when the trial is small, when relatively “healthy” or “stable” participants are recruited (compared with the broader population with the target indication), or when the follow-up is short.

The use of those adjectives (such as “acceptable”, “meaningful”, “potent”, or “safe”) more commonly present in industry-authored reports than in non-industry-authored reports could suggest that industry-authored reports tend to focus on the positive aspects of the health intervention being evaluated. However, the differences in adjective use could also reflect variations in the types of trials conducted by industry and academic or governmental institutions. Industry studies tend to focus on drugs or devices, while non-industry work is likely to be more inclusive of other types of health interventions. By controlling for journal, we adjusted partially for potential differences in the studies.

We used the affiliation of the authors to classify publications as industry-authored and non-industry-authored. Even if one author out of many was from industry, the paper was classified as industry-authored. Although this approach could seem extreme, it has also been recommended by others [16]. It might seem preferable to look at the source of funding instead of the author affiliation; however, this approach too has its shortcomings. Funding mechanisms are complex, some journals do not report funding sources, reporting of all sources of financial support is often incomplete, and there are different levels of support, from unrestricted educational grants to support that includes input of the manufacturers into trial designs, conduct of the analysis and publication [16, 17]. We assessed how many of the abstracts in which the full manuscript reported support from industry were classified as being non-industry in origin. We found that 16 % of those papers with industry support were classified as being from non-industry. It is difficult to predict the direction of bias related to any potential misclassification because of the shortcomings listed above, but in the worst-case scenario, it would lead to an underestimation of the relative measure and consequently to a smaller set of adjectives because of loss of power.

The results of this study are based on assessing the abstract instead of the full paper. Many readers just read the abstract of the published article and may be influenced by it, so we argue that it is an important place to look for differences in reporting style. The results of a study that assessed the impact of “spin” on interpretation of cancer trials showed that clinicians’ interpretation was affected by reading the abstracts of the trials [18]. In addition, the study focused on randomized, controlled trials; thus, the findings may not apply to other types of study designs.

The present study was limited to counting adjectives and assessing the difference in those counts in industry-authored and non-industry-authored reports. Although we have provided some examples to illustrate how they were used, the study ignored the context of the usage. A thematic analysis could allow detection of patterns, and a critical review of the full text of the paper could determine whether the use of the adjectives was indeed appropriate in view of the data or what is known in the field.

6 Conclusion

We assessed a very large number of abstracts and found differences in how study findings are described by industry-affiliated authors as compared with non-industry-affiliated authors. The language used to describe trial results could affect perceptions of the efficacy or safety of health interventions. Authors should avoid overusing adjectives that could be inaccurate or potentially lead to misperceptions. Editors and peer reviewers should be attentive to the use of adjectives in the abstract (and the manuscript in its entirety) and assess whether their use is appropriate.

References

1. Dickersin K. The existence of publication bias and risk factors for its occurrence. JAMA. 1990;263(10):1385–9.

2. Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337(8746):867–72.

3. Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomized trials. Ann Intern Med. 2010;152(11):726–32.

4. Han C, Kwak KP, Marks DM, Pae CU, Wu LT, Bhatia KS, et al. The impact of the CONSORT statement on reporting of randomized clinical trials in psychiatry. Contemp Clin Trials. 2009;30(2):116–22.

5. Alvarez F, Meyer N, Gourraud PA, Paul C. CONSORT adoption and quality of reporting of randomized controlled trials: a systematic analysis in two dermatology journals. Br J Dermatol. 2009;161(5):1159–65.

6. De Angelis C, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med. 2004;351(12):1250–1.

7. Vera-Badillo FE, Shapiro R, Ocana A, Amir E, Tannock IF. Bias in reporting of end points of efficacy and toxicity in randomized, clinical trials for women with breast cancer. Ann Oncol. 2013;24(5):1238–44.

8. Torvik VI, Smalheiser NR. Author name disambiguation in Medline. ACM Trans Knowl Discov Data. 2009;3(3). pii: 11.

9. Breiman L. Random forests. Mach Learn. 2001;45:5–32.

10. The Apache Software Foundation. OpenNLP. The Apache Software Foundation. http://opennlp.apache.org/index.html. 2010. Cited 28 Oct 2013.

11. Penn Treebank Project. Alphabetical list of part-of-speech tags used in the Penn Treebank Project. https://www.ling.upenn.edu/courses/Fall_2003/ling001/penn_treebank_pos.html. 2015. Accessed 20 Feb 2015.

12. Ferraro JP, Daume H III, Duvall SL, Chapman WW, Harkema H, Haug PJ. Improving performance of natural language processing part-of-speech tagging on clinical narratives through domain adaptation. J Am Med Inform Assoc. 2013;20(5):931–9.

13. Martin DO, Austin H. An exact method for meta-analysis of case-control and follow-up studies. Epidemiology. 2000;11(3):255–60.

14. Holm S. A simple sequentially rejective multiple test procedure. Scand J Stat. 1979;6(2):65–70.

15. Mansi BA, Clark J, David FS, Gesell TM, Glasser S, Gonzalez J, et al. Ten recommendations for closing the credibility gap in reporting industry-sponsored clinical research: a joint journal and pharmaceutical industry perspective. Mayo Clin Proc. 2012;87(5):424–9.

16. van Lent M, Overbeke J, Out HJ. Recommendations for a uniform assessment of publication bias related to funding source. BMC Med Res Methodol. 2013;13(1):120.

17. Schwartz RS, Curfman GD, Morrissey S, Drazen JM. Full disclosure and the funding of biomedical research. N Engl J Med. 2008;358(17):1850–1.

18. Boutron I, Altman DG, Hopewell S, Vera-Badillo F, Tannock I, Ravaud P. Impact of spin in the abstracts of articles reporting results of randomized controlled trials in the field of cancer: the SPIIN randomized controlled trial. J Clin Oncol. 2014;32(36):4120–6.

Acknowledgments and declaration of competing interests

All authors are employees of Janssen Research & Development, LLC, except for Jesse A. Berlin, who is an employee of Johnson & Johnson, the parent company of Janssen Research & Development, LLC.

Author Contributions

MSC: study conception and design, interpretation of study findings, manuscript preparation.

JAB: interpretation of study findings, revising manuscript critically for important intellectual content.

SCG: interpretation of study findings, revising manuscript critically for important intellectual content.

WPB: interpretation of study findings, revising manuscript critically for important intellectual content.

MS: study design and execution, analysis, interpretation of study findings, manuscript preparation.

All authors read and approved the final manuscript.

Appendices

Appendix 1: Industry and academic/governmental affiliation patterns

| Industry patterns | Industry patterns | Academic/government patterns | Academic/government patterns |
|---|---|---|---|
| Abbott | Merz | Clinic | School |
| Amgen | Mundipharma | Clinique | Sjukhuset |
| Astrazeneca | Nitec | College | Universidad |
| Aventis | Nordis | Ecole | Universidade |
| Bayer | Novartis | Foundation | Universitaires |
| Boehringer | Pfizer | Gasthuis | Universitária |
| Centocor | Pharmaceuticals | Hôpital | Universitat |
| Genentech | Procter | Hospital | Universite |
| Glaxo | Sanofi | Hospitalario | Université |
| Glaxosmithkline | Shionogi squibb | Hospitales | Universiteit |
| Gsk | Takeda | Hospitalier | Universiti |
| Humax | Ucb | Hospitaller | University |
| Janssen | Vifor | Karolinska | Ziekenhuis |
| Kaken | Zymogenetics | Kliniek | Centers? for disease control |
| Lilly | Industrie farmaceutiche | Kliniken | Centro de salud |
| Mcneil | Johnson (&\|and) johnson | Klinikum | Centro médico |
| Medimmune | Pharmaceutical company | Krankenhaus | Memorial sloan kettering cancer center |
| Medtronic | ([^a][^a-z]\|^)roche([^a-z]\|$) | National | u\\.?s\\.? agency |
| | | Nazionale | Health department |
| | | Policlinico | Food and drug administration |
| | | Polyclinique | |

Appendix 2: All adjectives present in the analyzed abstracts

1000-mg

100-mg

100-mm

100-point

10-cm

10-day

10-fold

10-h

10-item

10mg

10-mg

10-min

10-minute

10-mm

10-month

10-point

10th

10-valent

10-week

10-year

11-day

11-fold

11-point

11-week

120-mg

12-day

12-h

12-hour

12-item

12-lead

12-mg

12-month

12-months

12th

12-week

12-wk

13-week

14-day

14-week

150-mg

15-day

15-item

15-min

15-minute

15-month

15th

15-year

16-week

17beta-estradiol

17-item

18-month

18-week

1b

1c

1-compartment

1-day

1-g

1-h

1-hour

1-infected

1-mg

1-month

1-point

1rm

1s

1-sided

1st

1-week

1-year

1-yr

200mg

200-mg

20-item

20mg

20-mg

20-min

20-minute

20-month

20-week

21-day

21-item

23f

23-valent

24h

24-h

24-hour

24-hr

24-month

24-months

24-week

250-mg

25-hydroxyvitamin

25-item

25-mg

25th

26-week

28-day

28-item

28-joint

28-week

2-arm

2-compartment

2d

2-day

2-dose

2-fold

2-g

2-h

2-hour

2-hr

2max

2-mg

2-minute

2-month

2-part

2-period

2-phase

2-point

2-sequence

2-sided

2-stage

2-way

2-week

2-wk

2-year

2-yr

300-mg

30-day

30-fold

30-item

30-mg

30-min

30-minute

30-month

30-second

30-week

32-week

35-day

36-item

36-week

3-arm

3d

3-d

3-day

3-dimensional

3-dose

3-drug

3-fold

3-h

3-hour

3-hydroxy-3-methylglutaryl

3mg

3-mg

3-month

3-months

3-period

3rd

3-treatment

3-way

3-week

3-weekly

3-wk

3-year

400mg

400-mg

40-mg

40-week

45-min

46-week

48-h

48-hour

48-week

4-day

4-fold

4-h

4-hour

4-mg

4-month

4-period

4-point

4th

4-way

4-week

4-weeks

4-wk

4-year

4-yr

500-mg

50mg

50-mg

50-week

52-week

54-week

5alpha-reductase

5-day

5-fluorouracil

5-fold

5-fu

5-hmt

5-ht

5-ht3

5-httlpr

5-hydroxyindoleacetic

5-hydroxymethyl

5-hydroxytryptamine

5-lipoxygenase

5mg

5-mg

5-minute

5-month

5-period

5-point

5th

5-way

5-week

5-year

5-year-old

5-yr

600-mg

60-mg

60-min

6-day

6-h

6-hour

6-min

6-minute

6-month

6-months

6mwt

6-point

6th

6-week

6-wk

6-year

70-kg

70-mg

72-h

72-hour

750-mg

75-g

75-mg

75th

7-day

7-point

7-valent

7vcrm

7-week

7-year

800-mg

80-mg

85th

8-day

8-fold

8-h

8-hour

8-mg

8-month

8th

8-week

90-day

90-minute

90th

95th

96-week

9-day

9-month

9-week

µg

µ-opioid

a1c

abacavir

abatacept-treated

abbreviated

abciximab

abdominal

aberrant

abiraterone

able

abnormal

aboriginal

above

abrupt

absent

absolute

absorbable

abstinent

abstract

abused

academic

acarbose

acceptable

accepted

accessible

accidental

accompanying

accurate

acellular

acetabular

acetic

acetylsalicylic

achievable

achieved

acid

acidic

acid-related

acid-suppressive

acne

acoustic

acr20

acromegaly

acting

actinic

activate

activated

active

active-comparator-controlled

active-control

active-controlled

activity-related

actual

acute

acutely

acute-phase

adalimumab

adalimumab-treated

adaptive

adas-cog

added

additional

additive

add-on

adenosine

adequate

adherent

adhesive

adipose

adjacent

adjudicated

adjunct

adjunctive

adjustable

adjusted

adjuvant

adjuvanted

administrative

adolescent

adp-induced

adrenal

adrenergic

adult

advanced

advantageous

adverse

advisable

aerobic

aerosol

aerosolized

aesthetic

afebrile

affected

affective

affordable

aforementioned

african

african-american

age-adjusted

age-appropriate

aged

age-dependent

age-matched

age-related

age-specific

aggregate

aggressive

aging

agitated

agonist

ai

a-i

air

airborne

al

alanine

alcohol-dependent

alcohol-free

alcoholic

alcohol-related

aldosterone

alendronate

alert

alive

all-cause

allele

allelic

allergen-specific

allergic

allometric

allosteric

all-patients-treated

alone

alpha-linolenic

altered

alternate

alternative

alveolar

ambient

ambiguous

ambulatory

ameliorate

amenable

american

amg

amino

amino-terminal

aminotransferase

amiodarone

amlodipine

amodiaquine

amplitude

amprenavir

amyloid

anabolic

anaemic

anaerobic

anaesthetic

analgesic

analogous

analysed

analytic

analytical

anamnestic

anastomotic

anastrozole

anatomic

anatomical

anchor-based

ancillary

anecdotal

anemic

anesthetic

anesthetized

aneurysmal

anginal

angiogenic

angiographic

angioplasty

angiotensin-converting

angry

angular

animal

ankle-brachial

ankylosing

annual

annular

antacid

antagonistic

antecedent

antenatal

anterior

anteroposterior

anthropometric

anti-aging

anti-ama1

antianginal

anti-angiogenic

antiarrhythmic

anti-atherogenic

antibacterial

antibiotic

anticalculus

anticholinergic

anticipated

anticipatory

anti-circumsporozoite

anticoagulant

antidepressant

antidepressive

antidiabetic

anti-diabetic

antiemetic

antiepileptic

anti-factor

antifracture

antifungal

antigen

antigenic

antigen-specific

anti-hav

anti-hbs

anti-hcv

antihistamine

anti-hiv

anti-hpv

anti-hpv-16

anti-human

antihyperglycaemic

antihyperglycemic

antihypertensive

anti-hypertensive

anti-ige

antiinflammatory

anti-inflammatory

anti-influenza

anti-interleukin-1ß

antimalarial

anti-malarial

antimicrobial

antimigraine

antimuscarinic

antimycobacterial

antineoplastic

antinociceptive

antioxidant

antioxidative

antiparkinsonian

antiplaque

anti-plaque

antiplatelet

anti-platelet

anti-prp

antipsychotic

antipyretic

antipyrine

antiresorptive

antiretroviral

anti-retroviral

antiretroviral-experienced

antiretroviral-naïve

antiretroviral-naive

antirheumatic

anti-rheumatic

antisecretory

antisense

antiseptic

antithrombin

antithrombotic

anti-tnf

anti-tnf-alpha

antitumor

anti-tumor

antitumour

anti-tumour

anti-vegf

antiviral

anti-xa

antral

anxiolytic

anxious

aortic

aphthous

apical

apoa-i

apolipoprotein

apoptotic

apparent

applicable

appreciable

appropriate

approved

approximate

aprepitant

aqueous

arachidonic

arbitrary

ar-c124910xx

arginine-stimulated

aripiprazole

aripiprazole-treated

arithmetic

armed

aromatase

arousal

arrhythmic

artemether-lumefantrine

artemisinin-based

arterial

arteriovenous

artesunate

artesunate-amodiaquine

arthroplasty

arthroscopic

articular

artificial

as03a-adjuvanted

as03-adjuvanted

as04-adjuvanted

ascending-dose

ascorbic

asenapine

aseptic

asexual

asian

as-needed

aspirate

aspirin

aspirin-treated

assertive

assessable

assessor-blind

assessor-blinded

assigned

assisted

associated

associative

asthma

asthma-related

asthma-specific

asthmatic

as-treated

asymmetric

asymptomatic

at

atenolol-based

atherogenic

atherosclerotic

atherothrombotic

athletic

atomoxetine

atomoxetine-treated

atopic

atorvastatin

atp-binding

atrial

atrioventricular

at-risk

atrophic

attentional

attenuated

attractive

attributable

atypical

auc

auc0-t

audio

auditory

augmented

auricular

australian

autobiographical

autogenous

autoimmune

autologous

automated

automatic

autonomic

autonomous

available

average

aversive

avian

awake

awakening

aware

axial

ß

ß2-agonist

ß-cell

azimilide

azithromycin

azole

back

backward

bacterial

bactericidal

bacteriologic

bacteriological

bad

balanced

bare

baroreflex

basal

basal-bolus

base

baseline

baseline-adjusted

baseline-corrected

baseline-to-endpoint

basic

b-cell

bcr-abl

bdi-ii

behavioral

behavioural

belgian

beneficial

benign

best

best-corrected

beta

beta-1b

beta2-agonist

beta-adrenergic

beta-agonist

beta-carotene

beta-cell

beta-haemolytic

better

between-patient

between-subject

bg

bi

bid

bidirectional

biexponential

big

bilateral

biliary

bilingual

bimanual

b-inactivated

binary

binding

binding-adjusted

binocular

binomial

bioactive

bioavailable

biochemical

bioelectrical

bioequivalent

biologic

biological

biomechanical

biomedical

biopsy

biopsy-confirmed

biopsy-proven

bioresorbable

biphasic

bipolar

bis-guided

bispectral

bisphosphonate

bivalent

bivariate

biventricular

biweekly

bi-weekly

black

bladder-related

blend-a-med

blended

blind

blinded

blinded-endpoint

blinding

blue

blunt

blunted

blurred

bodily

body

bolus

bone-specific

bony

borderline

botanical

bound

bovine

bp-lowering

brachial

brain-derived

branded

brazilian

breast-fed

brief

bright

brisk

british

broad

broader

broad-spectrum

bronchial

bronchoalveolar

bronchodilatory

bronchopulmonary

bronchoscopic

brown

buccal

budesonide

buffered

buoyant

bupivacaine

buspirone

busy

ca

caesarean

caffeinated

caffeine

calculated

calibrated

caloric

canadian

cancer-related

cancer-specific

cannabinoid

capable

capsaicin-induced

carbohydrate

carbonic

carcinoembryonic

cardiac

cardinal

cardiometabolic

cardioprotective

cardiopulmonary

cardiorespiratory

cardio-respiratory

cardiovascular

care-as-usual

care-based

careful

carer

carious

carotid

carpal

carvedilol

castle

castration-resistant

casual

catabolic

categorical

catheter-related

cationic

caucasian

causal

causative

cause-specific

ccr5-tropic

cecal

cefaclor-treated

cefuroxime

celecoxib

cell-derived

cell-mediated

cellular

cemented

cementless

central

centralised

centralized

cephalic

ceramic

cerebral

cerebrospinal

cerebrovascular

cerivastatin

certain

certolizumab

certoparin

cervical

cervicovaginal

cesarean

cetuximab

cgi-i

challenging

characteristic

chd

cheaper

chemical

chemotherapeutic

chemotherapy-induced

chemotherapy-naive

chewable

chi

child-pugh

chimeric

chinese

chiropractic

chi-square

chi-squared

chloroquine

cholesterol-lowering

cholinergic

choroidal

chronic

ci

cilomilast

cimetidine

ciprofloxacin

circadian

circular

circumferential

circumsporozoite

cirrhotic

cisplatin

cisplatin-induced

citrate

citric

c-kit

clarithromycin

classic

classical

classified

clean

cleansing

clear

clearer

clinical

clinic-based

clinician

clinician-administered

clinician-rated

clonal

clonidine

clopidogrel

clopidogrel-treated

close

closed

closed-loop

closer

closest

clustered

cluster-randomised

cluster-randomized

cm

cmax

co2

coadministered

co-administered

coated

cochran-mantel-haenszel

coefficient

cognitive

cognitive-behavioral

cognitive-behavioural

coherent

cohort

cold

cold-adapted

colic

collaborative

collagen-induced

collective

colloid

colonic

colonoscopy

colony-forming

colony-stimulating

colorectal

colposcopic

combined

comfortable

commercial

common

commonest

community-acquired

community-based

community-dwelling

community-living

comorbid

co-morbid

comparable

comparative

comparator-controlled

comparator-treated

compartmental

compatible

compelling

compensated

compensatory

competent

competitive

complementary

complete

complex

compliant

complicated

component

composite

comprehensive

compressed

comprised

compromised

compulsive

compulsory

computational

computed

computer-assisted

computer-based

computer-generated

computerised

computerized

computer-tailored

concentrated

concentration-dependent

concentration-effect

concentration-time

concentration-versus-time

concentric

conceptual

concerned

conclusive

concomitant

concrete

concurrent

conditional

condition-specific

confident

confirmative

confirmatory

confirmed

conflicting

confounding

congenital

congestive

congruent

conjugate

conjugated

conjunctival

connective

conscious

consecutive

consensus-based

consenting

consequent

conservative

considerable

consistent

constant

constitutional

constraint-induced

contemporary

content

contextual

contingent

continual

continued

continuous

contraceptive

contradictory

contralateral

contrary

contrast-enhanced

controlled

controversial

convenient

conventional

convergent

convincing

cooperative

coordinated

copd

coprimary

co-primary

core

corneal

coronal

coronary

correct

corrective

correlate

correlated

corresponding

cortical

corticospinal

corticosteroid

cortisol

cosmetic

cost

cost-effective

costly

cost-saving

counterbalanced

covariate

cox-2

c-peptide

cranial

c-reactive

creatine

creatinine

creative

credible

crevicular

critical

cross-clade

cross-cultural

cross-linked

crossover

cross-over

cross-reactive

cross-sectional

crp

crucial

cruciate

crude

crushed

crystalloid

ct

c-terminal

ctx-ii

cubic

cued

cultural

culture-confirmed

cultured

culture-positive

cumulative

curative

current

customary

customized

cutaneous

cut-off

cv

cyclic

cycling

cyclooxygenase-2

cyclo-oxygenase-2

cyclophosphamide

cyclosporine

cyp

cyp3a

cystatin

cystic

cytogenetic

cytokine

cytological

cytoplasmic

cytotoxic

d

dabigatran

daily

d-amphetamine

danish

dapagliflozin

dapoxetine

daptomycin

darifenacin

dark

data-driven

day-1

daytime

day-to-day

dbp

d-conjugate

d-dimer

debatable

debilitating

decaffeinated

deceased

decisional

decision-making

declarative

decompensated

decreased

deep

deeper

defective

deficient

definite

definitive

degenerative

delayed

deleterious

deliberate

delta

deltoid

delusional

demographic

demonstrable

dendritic

denosumab-treated

dense

dental

dentinal

dependant

dependent

depressant

depressed

depression-related

depressive

derivative

dermal

dermatological

dermatology-specific

descriptive

design

desirable

desloratadine

desvenlafaxine

detailed

detectable

detrimental

devastating

developed

developmental

devoid

dexlansoprazole

dextromethorphan

diabetes-related

diabetes-specific

diabetic

diagnosed

diagnostic

diaphyseal

diary

diastolic

dichotomous

didactic

dietary

diet-induced

diet-treated

different

differential

differentiate

differentiated

differing

difficile

difficult

difficult-to-treat

diffuse

digestive

digit

digital

digoxin

dihydroartemisinin-piperaquine

dilated

diminished

diphtheria-tetanus-acellular

dipropionate

direct

direct-acting

directional

dirithromycin-treated

disabled

disabling

disadvantaged

disappointing

discernible

discontinued

discordant

discrete

discriminant

discriminative

discriminatory

diseased

disease-free

disease-modifying

disease-related

disease-specific

disordered

disparate

dispersible

dispositional

disproportionate

disruptive

dissatisfied

disseminated

dissociable

distal

distant

distilled

distinct

distinctive

distressing

distribution-based

disturbed

diuretic

diurnal

divergent

diverse

dlqi

docetaxel

docosahexaenoic

doctor-patient

domestic

dominant

donepezil

dopamine

dopaminergic

dorsal

dorsolateral

dorzolamide

dorzolamide-timolol

dose-adjusted

dose-dependent

dose-effect

dose-escalating

dose-finding

dose-independent

dose-limiting

dose-linear

dose-normalized

dose-proportional

dose-ranging

dose-related

dosing

double

double-blind

double-blinded

double-dummy

double-masked

doubtful

down-regulated

downward

doxorubicin

dpp-4

dramatic

drop-out

drug-eluting

drug-free

drug-induced

drug-naïve

drug-naive

drug-related

drug-resistant

dry

dsm-iii-r

dsm-iv

dsm-iv-defined

dsm-iv-tr

dsm-iv-tr-defined

dual

dual-chamber

dual-energy

dual-therapy

ductal

due

duloxetine

duloxetine-treated

duodenal

durable

dutasteride

dutch

dyadic

dye

dynamic

dysfunctional

dyslipidemic

dyspeptic

dysphoric

e2

earlier

earliest

early

early-onset

early-phase

early-stage

easier

east

eastern

easy

eccentric

echocardiographic

ecological

economic

economical

ed

educational

eeg

efalizumab

effective

effect-site

effervescent

efficacious

efficient

egfr

eicosapentaenoic

eighth

eight-week

eighty

elastic

elderly

e-learning

elective

electric

electrical

electrocardiographic

electrochemical

electroconvulsive

electroencephalographic

electrolyte

electromagnetic

electromyographic

electronic

electrophysiological

elemental

elementary

eletriptan

elevated

elicited

eligible

elispot

elusive

embedded

embolic

emergent

emetic

emetogenic

emotional

empiric

empirical

empty

emptying

enalapril

enantioselective

encapsulated

encouraging

end-diastolic

endemic

end-expiratory

endocrine

end-of-study

endogenous

endometrial

endoscopic

endothelial

endothelium-dependent

endotracheal

endovascular

endpoint

end-point

end-stage

end-systolic

end-tidal

energy-restricted

english

english-speaking

enhanced

enjoyable

enlarged

enormous

enough

enriched

enrolled

enteral

enteric

enteric-coated

enterohepatic

entire

environmental

enzymatic

enzyme

enzyme-linked

eortc

eosinophil

eosinophilic

epicardial

epidemic

epidemiological

epidermal

epidural

epigastric

episodic

epithelial

eq-5d

equal

equianalgesic

equipotent

equivalent

equivocal

er

erectile

ergogenic

ergonomic

erlotinib

erosive

er-positive

ertapenem

erythrocyte

erythromycin

erythromycin-treated

erythropoiesis-stimulating

erythropoietic

esomeprazole

esophageal

essential

established

estimable

estimated

etanercept

etexilate

ethical

ethinyl

ethnic

etoposide

etoricoxib

euglycaemic

euglycemic

euglycemic-hyperinsulinemic

european

euroqol

euroqol-5d

evaluable

evaluated

evaluative

evaluator-blind

evaluator-blinded

event

event-driven

event-free

event-related

eventual

everolimus

every-2-week

everyday

evidence-based

evident

evoked

evolutionary

exacerbated

exact

exaggerated

examiner-blind

examiner-blinded

excellent

excess

excessive

excised

excisional

excitatory

exciting

exclusive

excreted

executive

exenatide

exercise-based

exercise-induced

exercise-related

exert

exertional

exhaled

exhibited

existing

exogenous

expanded

expected

expensive

experienced

experimental

expert

expiratory

explanatory

explicit

explorative

exploratory

exponential

exposed

expressive

extended

extensive

external

extra

extracellular

extracorporeal

extrahepatic

extrapyramidal

extravascular

extreme

extrinsic

ezetimibe

face-to-face

facial

facilitated

facility-based

factorial

faecal

failed

fair

fall-related

false-positive

familial

familiar

family-based

family-focused

famotidine

fast

fasted

faster

fastest

fasting

fast-track

fat

fatal

fat-free

fat-rich

fatty

favorable

favourable

fda-approved

fearful

feasible

febrile

febuxostat

fecal

fellow

female

feminine

femoral

fenofibrate

fenofibric

fentanyl

fermented

ferric

ferrous

fesoterodine

fetal

fev1

few

fewer

fexofenadine

fibrate

fibre

fibrinolytic

fibroblast

fidaxomicin

fifth

fifty

fifty-eight

filamentous

film-coated

final

financial

finasteride

fine

finnish

firm

first

first-degree

first-dose

first-episode

first-generation

first-in-class

first-in-human

first-in-man

first-line

first-order

first-pass

first-phase

first-time

first-year

five-day

five-period

five-point

five-way

five-year

fixed

fixed-dose

flare

flat

flexible

flexible-dose

flow-mediated

fluconazole

fluid

flu-like

fluorescent

fluoride

fluoroscopic

fluoxetine

fluoxetine-treated

flush

flushing

fmri

focal

folate

folfiri

folic

folinic

follicle-stimulating

follicular

followed-up

following

followup

follow-up

food-effect

forced

force-titrated

forearm

foreign

formal

formative

former

formoterol

formula-fed

fortified

forty-eight

forward

four-arm

four-day

fourfold

four-fold

four-month

four-period

four-point

fourth

four-treatment

four-way

four-week

four-year

foveal

fractional

fractionated

fracture

fragile

fragmented

frail

frailty

free

free-living

free-radical

french

frequent

fresh

frontal

front-line

frozen

full

full-scale

full-term

full-time

fulvestrant

fumarate

functional

fundamental

fundus

fungal

furoate

furosemide

further

future

g

gag-specific

gamma-aminobutyric

gaseous

gastric

gastroduodenal

gastroesophageal

gastrointestinal

gastro-intestinal

gastrointestinal-related

gastro-oesophageal

gefitinib

gel

gelatinase-associated

gel-forming

genant-modified

gender-matched

general

generalisable

generalised

generalizable

generalized

gene-related

generic

genetic

genital

genome

genome-wide

genomic

genotype

genotyped

genotypic

gentamicin

gentle

genuine

geographic

geographical

geometric

geriatric

german

gestational

gh

gh-deficient

gh-releasing

gh-treated

gi

glargine

glaxosmithkline

global

glomerular

glp-1

glucagon-like

glucocorticoid

glucocorticoid-induced

glucodynamic

glucoregulatory

glucose

glucose-6-phosphate

glucose-dependent

glucose-lowering

glucose-related

glucose-stimulated

glutamate

glutamatergic

gluteal

glycaemic

glycated

glycemic

glycosylated

goal-directed

gold

golimumab

good

go-raise

gout

g-protein-coupled

graded

gradual

gram-negative

gram-positive

granulocyte

graphic

graphical

gray

great

greater

greatest

green

grey

gross

group-based

guideline-based

gynaecological

gynecological

h

h1n1

h2-receptor

h5n1

habitual

haematological

haemodynamic

haemoglobin

haemophilus

haemostatic

half

half-life

haloperidol

haloperidol-controlled

haloperidol-treated

ham-d-17

hand-foot

hand-held

hands-on

happy

haptic

haq-di

hard

harmful

harmonic

hazard

hazardous

hb

hba1c

hbeag-positive

hcg

hcl

hcv-infected

hdl-c

head-to-head

healing

healthcare

health-care

healthful

healthier

health-promoting

health-related

healthy

heartburn-free

heat-sensitive

heavy

hedonic

heightened

helminth

helpful

hematological

hematopoietic

hemodynamic

hemorrhagic

hemostatic

hepatic

hepatocellular

heptavalent

her2-negative

her2-positive

herbal

hereditary

heterogeneous

heterologous

heterosexual

heterozygous

hexavalent

hg

hidden

hierarchical

high

high-calcium

high-calorie

high-carbohydrate

high-density

high-dose

high-energy

higher

higher-dose

higher-order

highest

high-fat

high-gi

high-grade

high-intensity

high-level

high-performance

high-pressure

high-protein

high-quality

high-resolution

high-risk

high-sensitivity

high-throughput

hippocampal

hispanic

histological

histomorphometric

histopathological

historic

historical

hiv-1

hiv-1-infected

hiv-infected

hiv-negative

hiv-positive

hiv-related

hiv-seronegative

hiv-specific

hmg-coa

hoc

holistic

home-based

homeopathic

homeostatic

homogeneous

homogenous

homologous

homozygous

horizontal

hormonal

hormone-receptor-positive

hormone-sensitive

hospital-acquired

hospital-based

hospitalized

hot

hourly

hp

hpv

hr

hrqol

hscrp

hs-crp

hsv-2

http

human

human-bovine

humanized

humoral

hyaluronic

hybrid

hydrated

hydrochloride

hydrochlorothiazide

hydrocodone

hydrolyzed

hydrophilic

hydroxy

hydroxyapatite

hydroxyapatite-coated

hydroxychloroquine

hydroxynefazodone

hyperbaric

hypercholesterolaemic

hypercholesterolemic

hyperglycaemic

hyperglycemic

hyperinsulinaemic

hyperinsulinemic

hyperinsulinemic-euglycemic

hyperlipidemic

hypertensive

hypertonic

hypertrophic

hypnotic

hypoactive

hypocaloric

hypoglycaemic

hypoglycemic

hypogonadal

hypotensive

hypothalamic

hypothalamic-pituitary-adrenal

hypothesized

hypothetical

hypoxic

iatrogenic

ibandronate

ibuprofen

icu

ideal

identical

idiopathic

ifnβ-1b

ige-mediated

igfbp-3

igf-i

i-ii

il-1

il-10

il-1β

il-1beta

il-2

ileal

iliac

ill

illicit

illusory

iloperidone

imiquimod

immature

immediate

immediate-release

immigrant

immune

immune-mediated

immune-related

immunized

immunocompetent

immunocompromised

immunogenic

immunohistochemical

immunologic

immunological

immunomodulatory

immunoreactive

immunosorbent

immunostimulatory

immunosuppressant

immunosuppressive

immunotherapy

impacted

impaired

impeding

imperative

implant

implantable

implanted

implementation

implicit

important

impossible

improved

improving

impulsive

inaccurate

inactivated

inactive

inadequate

inadvertent

inappropriate

inattentive

incisional

inclusive

incompatible

incomplete

inconclusive

incongruent

inconsistent

incontinent

incorporate

incorporated

incorporating

incorrect

increased

incremental

incurable

indacaterol

independent

in-depth

indexed

indian

indicated

indicative

indigenous

indirect

indistinguishable

individual

individualised

individualized

individual-level

individually-tailored

indocyanine

indole

indomethacin

indoor

induced

inducible

inductive

industrial

industrialized

ineffective

inefficient

ineligible

inert

inexpensive

inexperienced

infant

infantile

infected

infection-related

infectious

infective

inferior

infertile

infinite

inflamed

inflammatory

infliximab

infliximab-treated

influential

influenza

influenzae

influenza-like

informal

informational

informative

informed

infrared

infrequent

infused

infusional

infusion-related

ingested

inguinal

inhalational

inhaled

inhaler

inherent

inhibited

inhibiting

inhibitor

inhibitor-1

inhibitor-based

inhibitory

in-home

in-hospital

initial

initiating

injectable

injected

injection-site

injured

injurious

innate

inner

inner-city

innovative

inoperable

inotropic

inpatient

in-patient

in-person

insecticide-treated

insensitive

insignificant

insoluble

inspiratory

inspired

instantaneous

in-stent

institutional

instructional

instructive

instrumental

instrumented

insufficient

insulin-dependent

insulinemic

insulin-independent

insulin-induced

insulin-like

insulin-mediated

insulin-naïve

insulin-naive

insulinogenic

insulin-resistant

insulin-sensitizing

insulin-stimulated

insulin-treated

intact

intake

integral

integrated

integrative

intellectual

intended

intense

intensive

intentional

intention-to-treat

intent-to-treat

interactive

intercellular

interchangeable

intercompartmental

intercostal

interdisciplinary

interested

interesting

interferon

interferon-inducible

interim

interindividual

inter-individual

interleukin

interleukin-1

interleukin-10

interleukin-1β

interleukin-2

intermediate

intermediate-acting

intermittent

internal

international

internet

internet-based

internet-delivered

interpatient

inter-patient

interpersonal

interpretable

interproximal

interquartile

interrupted

interstitial

intersubject

inter-subject

interventional

interview-based

intestinal

intestine

intimal

intimate

intolerable

intolerant

intra

intraabdominal

intra-abdominal

intra-arterial

intraarticular

intra-articular

intrabony

intracellular

intracerebral

intracoronary

intracortical

intracranial

intractable

intracytoplasmic

intradermal

intraepithelial

intragastric

intrahepatic

intraindividual

intra-individual

intramuscular

intramyocellular

intranasal

intraocular

intraoperative

intra-operative

intraoral

intraperitoneal

intrapulmonary

intrasubject

intra-subject

intrathecal

intratumoral

intrauterine

intravaginal

intravascular

intravenous

intraventricular

intravitreal

in-treatment

intriguing

intrinsic

intrusive

intubated

intuitive

invariant

invasive

inverse

inverted

investigational

investigative

investigator-administered

investigator-blind

investigator-blinded

investigator-initiated

investigator-rated

in-vitro

in-vivo

involuntary

involved

iodine

iohexol

iontophoretic

iop-lowering

ipilimumab

ipsilateral

iranian

irinotecan

irregular

irrelevant

irrespective

irreversible

irritable

irritant

ischaemic

ischemic

isocaloric

isoenergetic

isokinetic

isolated

isometric

isosorbide-5-mononitrate

isotonic

isrctn

italian

iterative

iu

japanese

joint

jugular

juvenile

kaplan-meier

kcal

ketoconazole

key

kg

kg-1

kinematic

kinetic

known

korean

l

l1

labelled

laboratory-based

laboratory-confirmed

lacosamide

lactic

lactobacilli

lactobacillus

lai

lamivudine

lamotrigine

lansoprazole

laparoscopic

large

larger

large-scale

largest

laropiprant

laryngeal

laryngoscopy

last

lasting

last-observation-carried-forward

latanoprost

late

late-life

latency

latent

late-onset

late-phase

later

lateral

latest

late-stage

latin

latin-square

latter

lavage

laxative

ldl

ldl-c

ldl-cholesterol

ldl-c-lowering

le

lead

lead-in

lean

learned

least

least-square

led

left

left-sided

legal

leisure-time

lengthy

leptin

lersivirine

lesional

less

lesser

lethal

liberal

licensed

lifelong

lifestyle

life-threatening

light

lighter

likely

likert

limbic

limited

linear

linezolid

lingual

linguistic

linoleic

lipid

lipid-altering

lipid-lowering

lipid-modifying

lipophilic

liposomal

liquid

lispro

little

live

live-attenuated

living

local

localised

localized

locoregional

logarithmic

logical

logistic

logistical

log-linear

log-rank

log-transformed

long

long-acting

long-chain

longer

longer-term

longest

longitudinal

long-lasting

long-standing

longterm

long-term

loose

loperamide

lopinavir

loracarbef

loracarbef-treated

loratadine

lot-to-lot

lovastatin

low

low-active

low-back

low-calorie

low-carbohydrate

low-cost

low-density

low-dose

lower

lower-dose

lowest

low-fat

low-gi

low-glycemic

low-grade

low-income

low-intensity

low-level

low-molecular-weight

low-risk

low-trauma

lumbar

luminal

lumiracoxib

lupus

luteal

lymph

lymphatic

lymphoblastic

lymphocyte

lymphoproliferative

macronutrient

macrovascular

macular

magnetic

main

major

maladaptive

malaria-endemic

malarial

malaria-naïve

male

maleate

malignant

malnourished

mammalian

mammographic

manageable

mandatory

mandible

mandibular

manic

manifest

manipulative

mantel-haenszel

mantle

manual

manualized

many

marginal

marital

marked

masked

mass

matching

maternal

mathematical

mature

maximal

maximum

maximum-tolerated

mcg

mcrc

meal-induced

meal-related

mealtime

mean

meaningful

measurable

measured

mechanical

mechanism-based

mechanistic

medial

median

mediastinal

mediate

mediated

mediating

medical

medication-free

medicinal

medium

medium-term

mefloquine

mellitus

memantine

meningococcal

menopausal

menstrual

mental

metabolic

metabolite

metallic

metastatic

metered

metered-dose

metformin

metformin-treated

methicillin-resistant

methicillin-susceptible

methodological

methotrexate

methotrexate-naive

metoprolol

metric

metronidazole

metropolitan

mexican

mf59-adjuvanted

mg

micro

microbial

microbiologic

microbiological

microcirculatory

microg

micronized

micronutrient

microscopic

microsomal

microvascular

mid

middle

middle-aged

migraine

migraine-associated

migraine-related

migraine-specific

mild

milder

mild-moderate

mild-to-moderate

military

milk-based

mimetic

mindfulness-based

minimal

mini-mental

minimum

minor

minute

mismatched

missing

mitochondrial

mitogen-activated

mitral

mitt

mixed

mixed-effect

mixed-effects

mixed-model

ml

ml-1

mm

mmhg

mmol

mmp-3

mobile

mobile-bearing

mobility

mock

modafinil

modal

model-based

modeled

modeling

modelled

modelling

moderate

moderated

moderate-dose

moderate-fat

moderate-intensity

moderate-to-severe

moderate-to-vigorous

moderating

modern

modest

modifiable

modified

modular

modulate

modulated

modulating

modulatory

moisturizing

molar

molecular

monetary

monitored

monocentric

monoclonal

monocular

monogamous

mononuclear

monophasic

monotherapy

monounsaturated

monovalent

monoxide-confirmed

montelukast

monthly

moral

more

moreover

morphine-induced

morphological

morphometric

most

motivated

motivational

moxifloxacin

mrcc

mri

mrna

mtx

much

mucous

mug

multi

multicenter

multi-center

multicentered

multicentre

multi-centre

multicentric

multicompartmental

multicomponent

multi-component

multidimensional

multidisciplinary

multi-disciplinary

multidose

multi-dose

multidrug

multidrug-resistant

multi-ethnic

multifaceted

multi-faceted

multifactorial

multi-factorial

multifocal

multifunctional

multi-institutional

multi-item

multikinase

multilevel

multi-level

multimodal

multinational

multinomial

multiple

multiple-ascending

multiple-choice

multiple-dose

multiplex

multi-professional

multisite

multi-site

multi-stage

multitargeted

multivalent

multivariable

multivariable-adjusted

multivariate

multivariate-adjusted

municipal

mu-opioid

muscular

musculoskeletal

musical

mutant

mutated

mutational

mutual

mycobacterial

mycophenolate

mycophenolic

myelodysplastic

myelogenous

myeloid

myocardial

n-3

naïve

naive

naproxen

narrative

narrow

nasal

nasogastric

nasopharyngeal

nateglinide

national

nationwide

native

natriuretic

natural

naturalistic

nausea

n-back

near

nearest

near-infrared

near-maximal

nebulized

necessary

necrotic

nefazodone

negative

negatively

negligible

nelfinavir

neoadjuvant

neointimal

neonatal

neoral

neovascular

nephrotic

nervous

net

neural

neuraminidase

neurobehavioral

neurobiological

neurochemical

neurocognitive

neurodevelopmental

neuroendocrine

neurogenic

neurohormonal

neuroimaging

neuroleptic

neurologic

neurological

neuromuscular

neuronal

neuropathic

neurophysiological

neuroprotective

neuropsychiatric

neuropsychological

neurosensory

neurosurgical

neurotrophic

neurovascular

neutral

neutralizing

neutropenic

neutrophil

new

newborn

newer

new-onset

next

next-day

next-generation

ng

niacin

niacin-induced

nicotine

nicotinic

nifedipine

nighttime

night-time

nilotinib

ninety-eight

ninth

nitrate

nitric

nitrous

nizatidine

nm

nnrti-resistant

nociceptive

nocturnal

nodal

node-positive

no-effect

nominal

non

nonadherent

nonadjuvanted

non-adjuvanted

nonalcoholic

non-alcoholic

non-blind

nonblinded

non-blinded

noncancer

noncardiac

non-clinical

non-communicable

noncomparative

noncompartmental

non-compartmental

non-coronary

nondepressed

nondiabetic

non-diabetic

non-disabled

non-dominant

non-elderly

non-exercise

nonfasting

nonfatal

non-fatal

non-hdl

non-hdl-c

non-hematological

non-high-density

non-hispanic

non-hodgkin

nonhuman

noninfectious

noninferior

non-inferior

non-inferiority

non-inflammatory

non-insulin

non-insulin-dependent

non-intervention

noninvasive

non-invasive

non-japanese

nonlinear

non-linear

nonlipid

non-malignant

non-medical

nonmetastatic

non-motor

nonmyeloid

non-neuropathic

nonnucleoside

non-nucleoside

nonobese

non-obese

nonocular

non-opioid

non-palpable

nonparametric

non-parametric

nonpeptide

non-peptide

non-pharmaceutical

nonpharmacological

non-pharmacological

non-platinum

nonpregnant

non-pregnant

non-prescription

nonprogressive

non-progressive

nonpsychotic

non-randomised

nonrandomized

non-randomized

nonrenal

non-responder

non-response

nonresponsive

nonselective

non-selective

nonserious

non-serious

non-severe

nonsignificant

non-significant

non-small

non-small-cell

nonsmoking

non-smoking

nonspecific

non-specific

nonsquamous

non-squamous

nonsteroidal

non-steroidal

nonstructural

non-st-segment

non-supervised

nonsurgical

non-surgical

non-tailored

non-traumatic

non-treatment

nontypeable

non-typeable

non-vaccine

non-verbal

nonvertebral

noradrenergic

nordic

norepinephrine

norethindrone

norethisterone

norfloxacin

norgestimate

normal

normalised

normalized

normal-weight

normative

normotensive

north

northern

norwegian

nosocomial

notable

noteworthy

noticeable

no-treatment

novel

noxious

nph

n-telopeptide

n-terminal

nuclear

nucleoside

nucleotide

nucleus

null

nulliparous

numeric

numerical

numerous

nurse-delivered

nurse-led

nutrient

nutritional

obese

obesity-related

objective

objectively

observable

observational

observed

observer-blind

observer-blinded

observer-masked

obsessive-compulsive

obstetric

obstetrical

obstructive

obtainable

obvious

oc

occasional

occipital

occlusal

occlusive

occult

occupational

ocular

odd

oesophageal

oestradiol

off-drug

office-based

off-label

off-pump

olanzapine

olanzapine-fluoxetine

olanzapine-treated

old

older

oldest

oleic

oligomeric

oligonucleotide

olive

omalizumab-treated

omega-3

omeprazole

once-a-day

once-daily

once-monthly

once-weekly

once-yearly

oncogenic

on-demand

one-compartment

one-fifth

one-half

one-hour

one-month

one-sided

one-tailed

one-third

one-time

one-to-one

one-way

one-week

one-year

ongoing

on-going

online

on-line

only

onset

on-site

on-study

on-treatment

oocyte

opaque

open

open-angle

open-ended

open-label

open-labeled

operable

operational

operative

ophthalmic

opiate

opioid

opioid-induced

opioid-related

opportunistic

opposite

oppositional

opsonophagocytic

optic

optical

optimal

optimised

optimized

optimum

optional

or

oral

oral-dose

orange

orbitofrontal

ordinal

ordinary

organic

organisational

organizational

original

oropharyngeal

orthopaedic

orthopedic

orthostatic

oscillatory

oseltamivir

osmotic

osseous

osteoblast

osteoclast

osteopenic

osteoporotic

otamixaban