Abstract

Background and Objective

Missing data and unmeasured confounding are key challenges for external comparator studies. This work evaluates bias and other performance characteristics depending on missingness and unmeasured confounding by means of two case studies and simulations.

Methods

Two case studies were constructed by taking the treatment arms from two randomised controlled trials and an external real-world data source that exhibited substantial missingness. The indications of the randomised controlled trials were multiple myeloma and metastatic hormone-sensitive prostate cancer. Overall survival was taken as the main endpoint. The effects of missing data and unmeasured confounding were assessed for the case studies by reporting estimated external comparator versus randomised controlled trial treatment effects. Based on the two case studies, simulations were performed broadening the settings by varying the underlying hazard ratio, the sample size, the sample size ratio between the experimental arm and the external comparator, the number of missing covariates and the percentage of missingness. Thereby, bias and other performance metrics could be quantified dependent on these factors.

Results

For the multiple myeloma external comparator study, results were in line with the randomised controlled trial, despite missingness and potential unmeasured confounding, while for the metastatic hormone-sensitive prostate cancer case study missing data led to a low sample size, leading overall to inconclusive results. Furthermore, for the metastatic hormone-sensitive prostate cancer study, missing data in important eligibility criteria led to further limitations. Simulations were successfully applied to gain a quantitative understanding of the effects of missing data and unmeasured confounding.

Conclusions

This exploratory study confirmed external comparator strengths and limitations by quantifying the impact of missing data and unmeasured confounding using case studies and simulations. In particular, missing data in key eligibility criteria were seen to limit the ability to derive the external comparator target analysis population accurately, while simulations demonstrated the magnitude of bias to expect for various settings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Missing data and unmeasured confounding are key challenges for external comparator studies. |

Their effects have been quantified in this paper by means of two case studies and simulations. |

1 Introduction

Randomised controlled trials (RCTs) are the gold standard design for studies supporting drug approvals. In cases where RCTs are unfeasible, alternative designs may be necessary. One alternative approach is a single-arm trial (SAT). However, without an internal control group, the relative treatment effect cannot be estimated by means of a direct comparison. Instead, the SAT context can be provided by aggregated data from the literature or by compiling an external comparator (EC) from real-world data (RWD). Sometimes, previous RCTs can be utilised to compile individual patient-level comparator data as well.

The general concept of EC studies has been described previously [1,2,3,4,5,6,7,8,9,10,11,12,13], including sources of bias, methodological approaches and terminology considerations. Relevant regulatory guidelines describe the potential uses and limitations of RWD in regulatory decision making [14,15,16,17,18,19,20]. Recently, the US Food and Drug Administration published a dedicated draft guideline specific to the EC approach [21], and the European Medicines Agency (EMA) published a draft reflection paper on SATs as pivotal evidence [22]. Both guidance documents reflect that SATs can, in rare instances, be the basis for marketing authorisation, but acknowledge that conclusions on efficacy from SATs have strong limitations and uncertainties. Reviews of Food and Drug Administration and EMA approvals of submissions utilising EC data are available [23, 24] and findings were summarised elsewhere [1]. Comments on regulatory aspects have been published as well [25,26,27], including specific challenges within oncology [25, 26], and utilisation for efficacy/effectiveness versus safety purposes, as well as operational, technical and methodological challenges [27]. A regulatory purpose, however, is not the only use case for the EC design, as health technology assessment submissions and ECs for internal decision making are possible use cases as well. Additionally, if an EC study is submitted for regulatory purposes, this may not take place in lieu of a pivotal trial, but as additional information to increase the ability to contextualise and interpret SAT findings [12].

Because the EC study design has been gaining traction in recent years, the importance of validating relatively new approaches by means of a prospective and controlled setting has been suggested [28], which constitutes the objective of this research project (FWC EMA/2017/09/PE, Lot 4). External comparator strengths and limitations were explored, focusing specifically on the impact of missing values in covariates and unmeasured confounding. Oncological indications were chosen, and causal inference methods as described in [29, 30] were applied. Oncology is a therapeutic area in which SATs are used more frequently than in some other areas [23, 31,32,33].

Prior EC research has been summarised [1] and includes studies in which results from RWD sources were compared to corresponding clinical trial results. Specifically, case studies where comparable overall survival (OS) outcomes between RWD and RTCs have been reported [34, 35], including a recent case study in non-small-cell lung cancer [36]. One further study investigated the use of real world (RW) healthcare databases for EC purposes and listed eight examples of RWD versus RCT results with “encouraging examples of alignment” [37]. Utilisation of healthcare claims data for replicating RCT results has also been described [38, 39], and the consequences of missingness for OS estimations in electronic health records are available as well [40]. While OS is typically measured in a comparable way across study types, other endpoints including progression can suffer from differential measurement methods, leading to potentially inconsistent treatment effect estimates across RWD and controlled clinical trials. Nonetheless, OS is not per se suitable to isolate a treatment effect without external data, as per the reflection paper recently published by the EMA [22], mainly due to potential biases related to the definition of the start of the observation period. There is also an example in the literature where inconsistent RCT versus observational OS results were reported [41]. Consequently, the statistical properties and operational characteristics of OS in EC studies, where data quality and assumptions on confounders are critical, warrant further investigation.

The present study differs from previously described research by applying a strong methodological focus on the role and effects of missing data and unmeasured confounding. Results are stratified by the different marginal estimators that may be chosen to estimate the treatment effect. The latter being the average treatment effect (ATE), the average treatment effect on the treated (ATT) and the average treatment effect on the overlap population (ATO).

2 Methods

The aim of this project was to identify at least two EC studies for at least two different indications by utilising the treatment arms from two completed RCTs and by compiling corresponding EC RWD. The following criteria were used to select RCTs: OS was required to be available because other key oncology outcomes such as progression-based endpoints are often measured differently in RCTs and RWD. Our investigation was limited to studies reporting statistically significant differences in OS to permit an added value of an evaluation of treatment effects both under the alternative hypothesis (by comparing the RCT treatment cohort with the EC) and the null hypothesis (by comparing the internal RCT control with the EC). Individual patient data of both study arms had to be available (sometimes only control data are available in data portals) with a sufficiently large sample size. The studies were required to be relatively contemporary with randomisation taking place ideally from 2010 onwards.

Based on the above criteria, two RCTs were identified in data portals meeting almost all of the criteria and these two RCTs were selected for analysis. One RCT was performed in the indication of multiple myeloma (MM) [42] and one in the indication of metastatic hormone-sensitive prostate cancer (mHSPC) [43, 44]. The MM RCT had one criterion that was not fully met, as the RCT randomised patients between 2008 and 2012. However, this was considered to be acceptable because no other RCTs except the mHSPC trial could be identified meeting all criteria. Criteria were also defined for the RWD selection, where the main points were direct access to individual patient-level data, and the availability of OS with a sufficient follow-up time.

2.1 Data Sources

For this research project, the RCT data were accessed from two different data portals. Specifically, the identified MM data were retrieved from Project Data Sphere (PDS) [45] and mHSPC data from Yale Open Data Access (YODA) [46] data portals. The EC data were compiled utilising the Guardian Research Network (GRN) database [47]. The GRN data are owned by a network of regional community health systems in the USA with experienced cancer research programmes. The network collaborates with community hospitals to organise patient electronic medical record data to facilitate trials and contribute to the understanding of RW trends in oncology care and outcomes. The network centres treat about 400,000 new oncology patients every year. Data regarding clinical characteristics, treatments and outcomes for these patients are collected as soon as they are diagnosed and treated in one of the centres and continues unless patients move to specialised treatment centres.

Mortality data for the GRN RW dataset are obtained by one of two sources: (1) direct entry into health system electronic health records (which occurs either when the patient dies while hospitalised or from the health system’s regular records updates) or (2) linkage to the Social Security Death Index. The GRN uses the most conservative approach to linking patients to the Social Security Death Index (matching last name, matching social security number, and matching date of birth). This approach enables access to mortality data for patients who have transferred their care to outside of the system.

2.2 Statistical Approaches for Case Studies

The endpoint OS was analysed for both case studies. For the mHSPC case study, also the endpoint time to next treatment or death (TTND) was available. The analysis was performed by applying propensity score (PS) weighting and different marginal estimators, namely the ATE, ATT and ATO. The ATT normalises the estimated treatment effect according to the SAT baseline distribution of prognostic factors, while the ATE standardises the treatment effect according to the overall baseline distribution (SAT plus EC). ATT weighting is sometimes also referred to as the standardised mortality/morbidity ratio weighting and ATE as the inverse probability of treatment weighting, though inverse probability of treatment weighting can also be interpreted to constitute a more general term. The ATO is a more recently proposed marginal estimator, which weights patients highest when the probability of receiving the treatment (modelled by baseline covariates) is around 50%, while for probabilities approaching either 0% or 100% weights are shrinking. This approach aims to compare patients in clinical equipoise, see also [1] for a full methodological discussion.

The analysis was performed by using a multivariable logistic regression model to estimate propensity scores. The covariates listed in Tables 1 and 2 of the Electronic Supplementary Material (ESM) were used, based on discussions with subject matter experts. Interaction terms or higher moments were not included in the logistic regression model. Afterwards, PS-weighted Cox models (assuming the proportional hazards [PH] assumption) and restricted mean survival time (RMST) analyses based on PS-weighted Kaplan–Meier curves (not assuming the PH assumption) were applied [1]. The RMST models restricted the mean survival time at the minimum of the two maximum (censored) event times per cohort, making full use of the available data. Deviations from the PH assumption were tested based on a significance test using scaled Schoenfeld residuals. In the case of a significant test results, the robust RMST approach was preferred over the weighted Cox model. In addition to comparing the EC study results with RCT results, the direct comparison of the EC and internal control cohorts was performed by using the same weighted models as outlined above.

Multiple imputation (MI) within cohorts was applied in the main analysis to handle missing covariate data, and sensitivity analyses were applied to investigate the robustness of results depending on the missing data handling method. The first analysis was performed by only considering covariates in the PS model, which had less than 35% (for MM) and less than 40% (for mHSPC) missingness within the EC, respectively, following a proposal from Jacobsen et al. [48]. Analyses without MI were performed in addition, using only covariates without substantial missingness, which were age and sex for MM, and age and time from metastatic disease to index date for mHSPC. See Sects. 2.5 and 2.7 for the definitions of the index dates and note that all patients with mHSPC were male. For the mHSPC case study, a further sensitivity analysis was performed by using the date of the metastatic disease diagnosis as an alternative index date in order to investigate the robustness of results regarding different index date definitions.

The analysis included all treated eligible patients and followed as specified in the protocol a treatment policy estimand approach for intercurrent events [49] with censoring being applied at the last date of follow-up. Applying a hypothetical estimand strategy by means of inverse probability of censoring weighting would constitute a meaningful alternative estimand approach as well, addressing a scientifically different question. See also Sect. 2.3.2 for further methodological details.

2.3 Statistical Approaches for Simulations

Simulated data were analysed in the same way as case study data, except that owing to the PH assumption being known to be fulfilled, no additional RMST analysis was necessary. The R programming code for the performed simulations is available in the ESM.

2.3.1 Data Generation

The simulations followed a published framework [50] (Table 3 in the ESM). Covariates including their means, variances and correlations were simulated according to the observed values of the two case studies using multivariate normal distributions. For categorical covariates, the simulated normal variables were transformed to category frequencies by using the cumulative distribution function. See Tables 1 and 2 in the ESM for the list of used covariates. Survival times based on covariate values were simulated parametrically using a Cox-Gompertz model [51] using the software R, version 3.6.3. Censoring was simulated to occur at the maximum observed follow-up time, i.e. at the end of the observational period.

The true treatment effect (hazard ratio [HR]) was defined upfront, and performance metrics were derived stratified by the marginal estimators ATE, ATT and ATO. Based on the MM and mHSPC case studies, scenarios were broadened by varying the underlying HR (0.6, 0.7, 0.8, 0.9, 1.0), the sample size (2 × 50, 2 × 100, 2 × 200), the sample size ratio between the SAT and the EC (1:1, 1:2, 1:3), the number of missing EC covariates (0, 1, 2, 3, 4) and the percentage of missingness in EC covariates (25%, 50%, 75%, 100%), leading to 900 scenarios for each case study. These scenarios were chosen to investigate a range of realistic EC study use cases. As 100% missingness is equivalent to a covariate not being available in the EC, this setting constitutes unmeasured confounding. Note that trial data were set to have no missingness, leading to all of the trial covariates being always fully available.

2.3.2 Data Analysis

All analysis assumptions were aligned with the actual data generation process, including the assumption of PH and data being missing at random. Analysis approaches were also in line with the case studies’ analyses. The propensity scores were estimated by a multivariable logistic regression model, regressing the covariates (see Tables 1 and 2 in the ESM) on the two cohorts (SAT/EC). The four most significant covariates were chosen to set up missingness and unmeasured confounding scenarios. For scenarios involving one to three covariates, a random selection approach was applied for each simulation repetition to randomly select which of the one to three covariates were affected by missingness or unmeasured confounding. This resulted in a random mix of missing prognostic factors, averaging the effect of a randomly missing covariate.

Multiple imputation with 20 imputations when the missingness was ≤ 50%, and otherwise with 50 imputations was applied to handle missing baseline covariates. Bootstrap with 50 bootstrap samples was used to estimate the standard errors of the ATE, ATT and ATO effect estimates. Bootstrap was performed within each MI dataset, such that one simulation run consisted of 20 or 50 (MI) × 50 (bootstrap) = 1000 (2500) runs for just one scenario. Because there are 900 scenarios for different parameter constellations and two case study set-ups, the resulting number has to be multiplied further by 2 × 900, leading to a simulation run number between 1.8 and 4.5 million. These calculations are finally repeated by 200 simulation runs, leading overall to > 360 millions of iterations overall. A weighted Cox model was applied estimating the HR using weights according to the ATE, ATT and ATO. A one-sided test with significance level of 2.5% was applied for testing purposes.

2.3.3 Performance Metrics

For simulations, the bias, type I error and the 95% confidence interval (CI) coverage were key performance metrics, while power considerations were not included, as they are misleading in case of bias. Bias was defined as the log estimated HR minus the true log HR, such that a negative bias denotes a bias away from the null hypothesis, i.e. in favour of the experimental treatment. The 95% CI coverage has the advantage of being a more holistic assessment compared with the bias as it also includes the dimension of the variability of the estimate. Still, each of the performance metrics is of interest and all derived results are presented.

2.4 MM RCT Data

The phase III, open-label MM RCT compared bortezomib with lenalidomide and dexamethasone versus lenalidomide and dexamethasone alone in patients with newly diagnosed MM without intent for an immediate autologous stem-cell transplant. The original trial results have been published before [42]. The RCT was conducted in three countries (USA, Puerto Rico and Saudi Arabia) and is registered with ClinicalTrials.gov (NCT00644228). Randomisation occurred from April 2008 to February 2012 applying a 1:1 randomisation scheme. This resulted in the randomisation of 264 treated and 261 control patients, from which 242 treated and 229 control patients were eligible for analysis.

The randomisation was stratified based on the International Staging System (I, II or III) and the intention to have a transplant (yes/no). A stratified Cox analysis of the RCT for the endpoint OS resulted in HR = 0.71, 95% CI 0.52, 0.96 with a two-sided p-value of 0.025. Also, a multivariable age-adjusted analysis was reported (Table 2 in [42]), which resulted in HR = 0.74, 95% CI 0.55, 1.00 with a two-sided p-value of 0.048.

2.5 MM EC Data

The eligibility criteria from the RCT were applied to the EC to the extent possible (Fig. 1 and Table 4 in the ESM). Because the GRN dataset included a sufficient sample size of RW patients receiving the experimental treatment, it was also possible to compile a treated RW cohort in addition to the comparator RW cohort, which was instrumental for performing further comparisons. Overall, 461 EC patients and 284 treated patients could be identified from the RWD (Table 5 in the ESM). The comparator cohort index date was defined as the initiation date of a regime with lenalidomide and dexamethasone as first-line treatment, with the index date being based on the date of lenalidomide. Index dates ranged from 2008 to 2020 with most being between 2010 and 2017. The initial data extraction and enhancement of data from GRN took place in July and August 2021 and thus follow-up data included the data available in the database until that point in time. The treatment index date definition is the same as the comparator index date definition, but with bortezomib added as well.

2.6 mHSPC RCT Data

The original mHSPC RCT results have been published before [43, 44]. This phase III, double-blind, placebo-controlled trial testing abiraterone plus prednisone in high-risk patients with mHSPC was conducted in Europe, Africa, South America, Canada, Mexico and the Asia-Pacific region and is registered with ClinicalTrials.gov (NCT01715285). Randomisation occurred from February 2013 to December 2014 applying a 1:1 randomisation scheme. This resulted in the randomisation of 605 treated and 604 control patients, respectively, from which 597 treated and 602 control patients were included in the intention-to-treat population.

The randomisation was stratified based on measurable visceral disease and Eastern Cooperative Oncology Group (ECOG) score (< 2 vs 2). The stratified primary RCT analysis for the endpoint OS resulted in HR = 0.62, 95% CI 0.51, 0.76 [43] and subsequently for more mature survival data in HR = 0.66, 95% CI 0.56, 0.78 [44] with a two-sided p value of < 0.0001. These more mature data, however, also included 72 patients (12% of the control group), who crossed over to the experimental treatment. These patients did not experience a progression before switching, such that this patient group may constitute a more robust subset of patients. It is not clear whether the treatment effect for the more mature data set should be expected to be closer to the null hypothesis or farer away.

RCT results were recalculated using the available mature RCT data because these data were used for the EC approach. This was achieved by setting the index date to the first treatment date with androgen deprivation therapy instead of the randomisation date, as this date could be assessed for both the RCT treatment arm and the EC patients (see also Sect. 2.7 for index date information). Additionally, not all stratification data were available in the EC, such that an unstratified analysis approach was applied. This resulted in HR = 0.66, 95% CI 0.57, 0.78, which was nearly identical to the original result.

2.7 mHSPC EC Data

The rather complex eligibility criteria from the RCT were applied to the EC as far as possible (Fig. 2 and Table 6 in the ESM), leading to 92 EC patients (Table 7 in the ESM). The eligibility criteria were complex because the definition of being at high risk was based on a combination of three criteria (Gleason score > 7, three or more bone lesions, visceral disease), where at least two criteria must have been met. This was difficult to translate to all RWD patients because of missing values. The patients’ status of visceral disease was not available in the data source and needed to be approximated by a manual review identifying patients with lung/liver disease involvement (see Table 8 in the ESM). Further, exact counts of bone lesions were only consistently available in the RCT but rarely in the EC (instead the term ‘multiple’ was often used, which was considered as fulfilling the criteria of having three or more bone lesions). This limited the ability to check comparability of groups.

Because of the relatively recent approval of the study drug, no meaningful RW treatment cohort could be derived. However, there was the opportunity to analyse the endpoint TTND in addition to OS, where the greater number of events allowed for an analysis with adequate statistical power.

The index date was defined as the first date a patient with metastatic prostate cancer was initiated on androgen deprivation therapy, as androgen deprivation therapy was the only common aspect between the treatment and control (placebo) arms of the mHSPC RCT. It ranged from 2010 to 2019 with most index dates being between 2013 and 2018. The initial data extraction and enhancement of data from GRN took place in July and August 2021 and thus follow-up data included the data available in the database until that point in time.

It was also possible to define an alternative index date (the date of metastatic disease diagnosis), such that the robustness of results could be evaluated by using a different index date. For this sensitivity analysis, 78 out of 92 patients could be included owing to the need to harmonise the time from diagnosis of metastatic disease to index date between the EC and the RCT. This harmonisation was achieved by including EC patients in the analysis if the time from diagnosis of metastatic disease to the index date was 12 months or less. Note, that for the main analysis the time from diagnosis of metastatic disease was an actual baseline covariate (and not a post-baseline observation), which could be successfully incorporated into the PS model, such that no additional standardisation for the main analysis was necessary.

3 Results

3.1 MM Case Study Results

Figure 1 displays unweighted (unadjusted) survival curves for the four cohorts, and Fig. 2 shows the ATE-adjusted Kaplan–Meier curves. The RCT results were recalculated without stratification, as stratification data were not available in the EC. This resulted in a revised RCT HR = 0.69, 95% CI 0.51, 0.93 with a two-sided p value of 0.014 (Table 1), as compared to the original RCT result of HR = 0.71 and the multivariable adjusted RCT estimate of HR = 0.74. Table 1 shows a comparison of point estimates between the EC and RCT results (ATE HR = 0.76, ATT HR = 0.84 and ATO HR = 0.87). The PH assumption was doubtful to be met with p = 0.002, p = 0.035 and p = 0.064 for ATE, ATT and ATO (Table 1). The RCT RMST treatment difference was 5.6 months (p = 0.025, two-sided), compared with 5.9 months for the EC using the ATE (p = 0.016). As for the HR, the ATO-weighted and ATT-weighted RMST results were again closer to the null hypothesis (3.2 and 3.4 months treatment difference, respectively) when comparing with the ATE (Table 1).

See Table 9 in the ESM for the distribution of demographic and baseline characteristics. When checking the covariate balance, the standardised mean differences were observed to be < 0.11, indicating an improved balance of measured covariates across treatment groups after applying PS weights (Table 10 in the ESM). Note that balance in unmeasured covariates cannot be assessed. Propensity score and weight distributions are displayed in Fig. 3 and Table 11 in the ESM.

Table 12 in the ESM shows ATE results applying a model without MI, and a MI model including only covariates with missingness < 35%. These results were similar to the original ATE analysis results.

Interestingly, the PH assumption appeared reasonable within both data sources (within the RCT and within the RW data) but seemed to be compromised when using one arm from the RCT and a comparator cohort from the RW data (Figs. 1 and 2), showing the potential for the non-robustness of the PH assumption when mixing two data sources in the EC setting. Figure 4 in the ESM shows an ATE comparison of the internal control and the EC, where the potential deviation from the PH assumption becomes visible.

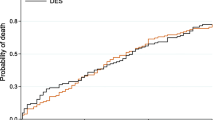

3.2 mHSPC Case Study Results

Figure 3 displays unweighted (unadjusted) survival curves, and Fig. 4 the ATE-adjusted Kaplan–Meier curves, for which the proportionality of hazards was considered to be a reasonable assumption (Table 2). Table 2 in the ESM displays the association of PS covariates with OS as per the RCT. Table 2 reports ATE, ATT and ATO estimates together with the RCT results. The RCT estimate of HR = 0.64 was seen to be empirically lower than the ATE, ATT and ATO results (HR range 0.81–0.86). Similarly, the RMST RCT difference of 6.5 months was empirically higher than the ATE, ATT and ATO estimates, which ranged from 2.2 to 3.5 months.

Average treatment effect (ATE)-weighted overall survival comparing the randomised controlled trial (RCT) treatment arm with the real-world data (RWD) comparator cohort, Kaplan–Meier curves for the metastatic hormone-sensitive prostate cancer case study. The grey line at the 50% mark denotes a reference line for corresponding median overall survival times

See Table 7 in the ESM for the distribution of demographic and baseline clinical characteristics. Most standardised mean differences were observed to be ≤ 0.20, except for ECOG (0.25 for ATE and 0.29 for ATT), indicating a limited balance in measured characteristics across treatment groups after PS weighting (Table 13 in the ESM). Propensity score and weight distributions are displayed in Fig. 5 and Table 14 in the ESM.

For the ATE, different strategies for handling missing data were applied as a sensitivity analysis. These led to similar estimates of HR = 0.84–0.87 (see Table 15 in the ESM) with the PH assumption being reasonable and RMST differences that ranged between 1.6 and 2.5 months.

A direct comparison of the EC with the internal RCT control group showed numerical but nominally non-significant differences after weighting when using the Cox model (HR = 1.30, 95% CI 0.85, 1.99, p = 0.22) and RMST differences (−4.4, 95% CI −9.6, 0.8, p = 0.097), see Fig. 6 and Table 16 in the ESM. Note that the p-value testing the PH assumption for this comparison was p = 0.28 and Kaplan–Meier curves did not yield strong signals for non-proportionality. When applying the alternative index date, the adjusted analysis resulted in HR = 0.88, 95% CI 0.53, 1.44 for ATE, HR = 0.91, 95% CI 0.54, 1.55 for ATT and HR = 0.78, 95% CI 0.54, 1.13 for ATO, respectively (Table 17 in the ESM).

The TTND analyses showed that many RW patients stayed with androgen deprivation therapy alone just for a short time period (in around 20% of patients a subsequent treatment was initiated in the first 3 months), which was different to the RCT. The unweighted HR for TTND was HR = 0.40, 95% CI 0.31, 0.52, as compared to the estimate from the RCT (HR = 0.47, 95% CI 0.41, 0.55 (Table 18 in the ESM). The ATE-weighted analysis resulted in HR = 0.41, 95% CI 0.30, 0.58, and ATT and ATO point estimates were HR = 0.43 and HR = 0.41, respectively. The original RCT RMST difference result was 13.8 months (95% CI 11.1, 16.5) as compared to the ATE, ATT and ATO estimates that ranged from 16.5 to 17.2 months. Corresponding unweighted and weighted TTND figures are available in Figs. 7 and 8 in the ESM.

3.3 Simulation Results

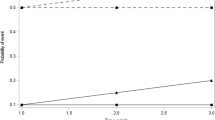

In Figs. 5a, b 6a, b, 7a, b, the performance metrics bias, type I error and the true coverage proportion of the 95% CI are displayed for the ATE, ATT and ATO, including also the naïve unweighted analysis where no adjustment is applied at all. It should be noted that in the figures the unweighted results do fluctuate slightly for different missingness scenarios because of the nature of performing simulations (using data following a random distribution), though they are constant from a theoretical perspective, as they are independent of any missingness adjustment.

a Mean bias and 95% confidence interval for the natural logarithm of the hazard ratio [log(HR)] by marginal estimator and factor in case of no unmeasured confounding. The horizontal dashed lines denote a bias of zero. The upper part of the figure is based on the multiple myeloma case study and the lower part of the figure is based on the metastatic hormone-sensitive prostate cancer study. b Mean bias and 95% confidence interval for the natural logarithm of the hazard ratio [log(HR)] by marginal estimator and factor in case of unmeasured confounding. The horizontal dashed lines denote a bias of zero. The upper part of the figure is based on the multiple myeloma case study and the lower part of the figure is based on the metastatic hormone-sensitive prostate cancer study

a Mean type I error and 95% confidence interval for the natural logarithm of the hazard ratio [log(HR)] by marginal estimator and factor in case of no unmeasured confounding. The horizontal dashed lines denote the nominal type I error of 2.5%. The upper part of the figure is based on the multiple myeloma case study and the lower part of the figure is based on the metastatic hormone-sensitive prostate cancer study. b Mean type I error and 95% confidence interval for the natural logarithm of the hazard ratio [log(HR)] by marginal estimator and factor in case of unmeasured confounding. The horizontal dashed lines denote the nominal type I error of 2.5%. The upper part of the figure is based on the multiple myeloma case study and the lower part of the figure is based on the metastatic hormone-sensitive prostate cancer study

a Mean 95% confidence interval coverage percentage and 95% confidence interval for the natural logarithm of the hazard ratio [log(HR)] by marginal estimator and factor in case of no unmeasured confounding. The horizontal dashed lines denote the nominal two-sided coverage of 95%. The upper part of the figure is based on the multiple myeloma case study and the lower part of the figure is based on the metastatic hormone-sensitive prostate cancer study. b Mean 95% confidence interval coverage percentage and 95% confidence interval for the natural logarithm of the hazard ratio [log(HR)] by marginal estimator and factor in the case of unmeasured confounding. The horizontal dashed lines denote the nominal two-sided coverage of 95%. The upper part of the figure is based on the multiple myeloma case study and the lower part of the figure is based on the metastatic hormone-sensitive prostate cancer study

In the figures, the performance metrics bias, type I error and 95% CI coverage are displayed with their 95% CIs for a given factor as the average over all scenarios within the specified factor. As an example, the scenarios with one missing variable are averages over all scenarios where one variable is missing, at 25%, 50% or 75%. As another example, the scenarios with 25% missingness are averages over all scenarios with zero to four missing covariates. The case of no missing data can be looked at in the last panel labelled “Missing Variables” for a zero number of missing variables (Figs. 5a, 6a and 7a).

3.3.1 Bias

Ideally, the bias in the simulations would be zero. However, when introducing missing data and unmeasured confounding, some bias was observed (Fig. 5a, b). This bias was observed to be away from the null hypothesis (i.e. in favour of the experimental treatment).

Figure 5a shows that the bias was quite similar for varying HR. Low sample size and trial/comparator ratio may increase the bias especially for the ATT. The larger the missingness and unmeasured confounding, the larger the bias and the more differences between marginal estimators are seen. This is true for both the percentage of missingness within a variable and the number of variables with missing data. Overall, the average bias was lowest for the ATE, followed by the ATO and finally by the ATT.

Scenarios displaying unmeasured confounding (Fig. 5b) did not show any difference between marginal estimators. This is comprehensible because covariates being 100% missing are not associated with any reweighting of MI-derived random values when using within-cohort MI. Numerical estimates show a mean logarithm of the HR bias of around −0.25 for MM and −0.15 for mHSPC (Table 19 in the ESM). For scenarios without any missingness, the bias is approximately zero for all marginal estimators (see also Table 19 in the ESM), as expected.

3.3.2 Type I Error

In the ideal case, the empirical type I error in the simulations would be 2.5% (one-sided), while in the case of unmeasured confounding, a higher average type I error can be expected due to the observed bias in favour of the experimental treatment. Indeed, the average type I error for unmeasured confounding was seen to be 38% for MM and 22–25% for mHSPC (Table 19 in the ESM).

For scenarios without any missingness, the type I error was not significantly different from 2.5%, as expected, and the ATE resulted in estimates that were closest to the nominal alpha of 2.5% (Table 19 in the ESM). For missingness scenarios, the observed type I error differed for the applied marginal estimators. Concretely, the ATE type I error was on average 2.6% and 1.6% for the MM and mHSPC case study simulations, while for ATT this was 14.0% and 4.3%, respectively. The ATO type I error was between the other two marginal estimators by being 8.2% and 2.9% for MM and mHSPC study simulations, respectively (Table 19 in the ESM).

Figure 6a, b show that the type I error increased for increasing sample size and for increasing trial/comparator sample size ratio as expected, as biased analyses in favour of the experimental treatment are known to result in increased type I error rates when the power increases. Figure 6a shows the pattern that the more extreme the missingness, the more differences between marginal estimators: for the simulations based on the MM study, the larger the missingness (for both the percentage of missingness within a variable and the number of variables with missing data), the higher the type I error when applying the ATT and ATO, while the ATE did adhere to the nominal alpha level. For the simulations based on the mHSPC study, the differences were less pronounced, with both ATE and ATO adhering to the nominal alpha level of 2.5%.

3.3.3 True Coverage of the 95% CI

Ideally, the true coverage of the 95% CI would be 95%. Indeed, for scenarios with complete data, the true coverage was close to 95% for all marginal estimators, as expected, while for unmeasured confounding scenarios the coverage was estimated to be below 95%. There was no difference between marginal estimators in the case of unmeasured confounding (Fig. 7b), while for missing data scenarios the ATE performed best, ATO performed second best and ATT performed worst (Fig. 7a). Concretely, ATE coverage was 95% for MM and 96% for mHSPC, while ATT coverage was only 82% for MM and 90% for mHSPC (Table 19 in the ESM). The empirical ATO coverage was between ATE and ATT estimates by being 87% for MM and 94% for mHSPC (Table 19 in the ESM).

3.3.4 Overall Result Patterns

The observed relative performance patterns were similar for the two case study set-ups, and the observed absolute performance differences were in line with the different number of explanatory covariates, the covariates having different levels of impact and different covariance matrices. A larger bias, type I error and a lower 95% CI coverage was seen in the MM-based simulations compared with the mHSPC-based simulations.

Descriptive statistics for all performance metrics are available in Table 19 in the ESM, together with descriptive p values. Overall, as measured by averages, the ATE performed best (p < 0.001) compared with ATT and ATO regarding all the applied performance characteristics of bias, type I error and 95% CI coverage. Though there was barely any difference within each of the unmeasured confounding scenarios, substantial differences were seen for the missing data scenarios, especially when the missingness was large. However, Table 20 in the ESM displays proportions of how often a specific marginal estimator performed best, and there were a number of scenarios where the ATT and ATO performed best, such that the adequate average performance of the ATE cannot be generalised to all scenarios. As an example, in terms of the performance metric ‘bias’, the ATE performed best for the simulated MM case study in 60% of scenarios, but ATO performed best in 38% of the scenarios and the ATT in 2% of scenarios. For the simulations based on the mHSPC case study, this was similar by the ATE performing best in 57% of the scenarios, ATO performing best in 39% of scenarios and the ATT performing best in 4% of scenarios (Table 20 in the ESM).

Notably, the ATE showed adequate performance regarding all of the performance measures bias, type I error and 95% CI coverage in a range of scenarios with no unmeasured confounding. With unmeasured confounding, all methods could remove a portion of the bias, but residual bias remained. As an example, if the four most important covariates had 100% missingness (constituting very strong unmeasured confounding), the results were closer to the RCT results (ATE, ATT and ATO bias approximately −0.24 in the mHSPC scenarios and approximately −0.40 in the MM scenarios) than the unadjusted results (bias around −0.38 and −0.59 in the MM and mHSPC settings, respectively), although notable differences remain (Fig. 5b, mHSPC part), of course, because of the intense unmeasured confounding.

For missing data scenarios, there are two potential reasons why estimates were seen to be biased, especially for high missingness, despite the fact that the data generation and analysis were aligned in their assumptions (including data being missing at random). First, a low sample size and sparse categories of baseline data may result in practical problems when setting up a PS model. Second, when comparing ATE versus ATT, the ATE has the advantage of being able to distribute weights across both cohorts, while the ATT approach restricts itself by only weighting comparator patients. This led in the simulations to more extreme ATT weights compared with the ATE, which is comprehensible because the weights have to be distributed to a subset of the data: the fewer the observations to be reweighted (just the comparator group), the more extreme some weights may become. Apparently, this made the ATT approach less robust when using MI (especially in case of high missingness), where the generated random MI values were weighted on average in a more extreme way. Note that all of the missingness occurred in the comparator cohort only, such that stable and constant ATT weights in the treatment cohort are less relevant, while high weights for randomly generated MI values in the comparator cohort are undesirable. The finding that the ATO performs between ATE and ATT is consistent with the literature, which states that “the average treatment effect for the overlap population (ATO) […] approximates ATT if propensity to treatment is small, and approximates ATE if treated and untreated groups are nearly balanced in size” [52].

4 Discussion

There were several major limitations observed in this project. The most significant limitation for the case studies was the different level of RW data completeness, standardisation and quality compared with RCT settings:

-

The proportion of missing values for the covariates used for PS modelling ranged for the MM EC from 25% to 75% (except for age and sex, see Table 9 in the ESM) and from 33 to 85% for the mHSPC EC (except for age and time from metastatic disease to index date, see Table 8 in the ESM).

-

Not all RCT inclusion and exclusion criteria could be applied in the EC because of missing or unmeasured data (e.g. ECOG being largely missing in the RW data source). Specifically, the critical mHSPC RCT variable of ‘visceral disease’, which was one out of three variables used to define the inclusion criterion of being at high risk, was not recorded in the RW data. Similarly, the number of bone lesions was often not available as an exact count. Reasons for missingness include that not all variables were mandatory to be entered as structured data in all centres in the network, that variables of interest were part of the structured dataset but were not always available as part of routine practice, and that certain assessments and/or treatment procedures took place outside of the network.

-

The RCTs collected clear baseline measurements, but for the EC a look-back period had to be specified as a retrospective time period to define which measurements were acceptable to be considered as baseline variables. This period may be chosen to be different for different classes of measurements (such as laboratory values, ECOG, severity of disease such as the Gleason score or number of bone lesions). However, often multiple reasonable definitions of look-back periods are possible, which may lead to defendable but also to somewhat arbitrary choices. Additionally, even if the look-back period is defined in an optimal way in some sense, still, measurement error may arise by actual values at true baseline being different from previously recorded values (in addition to other sources of measurement error).

-

While RCT data cleaning follows the highest data management standards, RW data standards are more pragmatic, which may lead to data quality issues generally, not necessarily restricted to baseline variables only. Additionally, some RW measurements (e.g. some laboratory measurements) may only be conducted if the patient has a specific condition, leading to the possibility that missingness of baseline covariates may not occur at random if the variables describing the conditions for performing a measurement are not part of the data source.

The second major limitation was the reduced comparability of cohorts:

-

For the mHSPC study, the EC included patients with an initial diagnosis of mHSPC, which were only required to become metastatic before the index date, while the RCT included only patients with an initial diagnosis of metastatic PC. This may have resulted in differences of measured or unmeasured patient and clinical characteristics, and importantly especially in those for which no exact adjustment could be performed (such as the incomplete number of bone lesions in the EC).

-

Comparability of subsequent treatment patterns is limited as well. The TTND analysis for the mHSPC case study revealed differences in the timing of treatment switching, which may have impacted OS. This effect may have played a larger role in the mHSPC case study than in the MM case study because the former had a placebo group while the latter had an active comparator. It is important to note that especially in the EC context, the long-term endpoint OS may be sensitive to differences in subsequent treatments. Therefore, adding the endpoint TTND along with descriptive statistics of subsequent therapies could be helpful in general for interpreting EC results.

-

The EC populations came from the USA, whereas the RCTs were conducted in a mix of different countries (especially for the mHSPC case study). Additionally, the calendar years of the index dates were different in the ECs. Regional, temporal and also site-type differences may impact OS because of differences in healthcare, including diagnostic pathways. The influence of differing index date year distributions between treatment and EC cohorts was tested in sensitivity analyses by adding the index date to a TTE regression model for the EC data (results not shown), but the index date was far away from being statistically significant (p ≥ 0.25 for both studies).

Limitations were also identified for the conducted simulations:

-

Conclusions based on averages cannot be automatically extrapolated to all scenarios, and there is also an infinite number of situations outside of the considered 2*900 scenarios.

-

Only two case studies were used as input information for the simulations and these studies included specific characteristics of covariates and their correlations.

-

Other case study set-ups may lead to different results, though it has to be noted that the observed patterns were similar for the two indications.

-

Although the range of assumed values for key input parameters were informed by the MM and mHSPC case studies, other parameter ranges are possible to consider in addition.

-

The PS model was applied by matching the true data-generating mechanism but the effect of using a wrong PS model (e.g. by leaving out or adding covariates, interaction terms or higher moments) was not investigated.

The following points are considered highlights regarding the derived simulation results:

-

As anticipated, the results of the simulations showed that the bias increased with the magnitude of the unmeasured confounding, and exact numerical estimates of this relationship could be derived to gain a quantitative instead of a mere qualitative understanding. Concretely, the bias was about −0.1 per additional missing covariate for the MM case study while it was ~ −0.06 for the mHSPC case study.

-

Multiple imputation for handling missing baseline data in the absence of unmeasured confounding was possible to be applied even in case of extensive missingness, as described previously [53], while dropping a covariate from the PS model completely owing to a certain amount of missingness may result in a new source of bias.

-

The simulations suggested differences when comparing ATE with ATO and ATT in case of PS weighting and MI. However, the choice of the marginal estimator is primarily based on the research question to be addressed and should not rely exclusively on statistical performance.

-

The MI algorithm should reflect the two underlying data generation processes of the two cohorts exactly, such that within-cohort MI was performed to apply the most rigid approach. However, a mixture of within-cohort and across-cohort MI seems to be a possible concept as well. Concretely, within-cohort MI could be applied for covariates with limited missingness first, while thereafter across-cohort imputation could be performed for covariates being missing with a high percentage, exceeding a certain threshold. Such an approach borrows information from the trial cohort for those EC covariates which exhibit extreme missingness up to 100%. This could potentially result in a better approximation and smaller bias for high missingness and unmeasured confounding scenarios. The general across-cohort MI approach is rather discouraged because of possibly different correlation structures in the two data sources but could constitute a workable approximation especially in the case of a low sample size. We are currently conducting corresponding follow-up research to quantify the performance of modified MI strategies.

-

The PH assumption was often compromised in the analyses of the two case studies, such that it is recommended to not rely primarily on the Cox model and to apply a method from a range of different approaches (e.g. RMST models or non-parametric RMST estimations by means of weighted Kaplan–Meier curves, as performed in this research paper).

Further, additional general data and data access limitations became apparent:

-

The RW data used in this study were not specifically compiled to address the two research questions addressed by the RCTs, which may have contributed to the high degree of missingness for relevant variables. Further, there was intentionally no feasibility step to check whether the data is fit-for-purpose or to minimise missingness because demonstrating effects of missing data and unmeasured confounding was the purpose of the research project.

-

The two RW data situations are relevant examples for scarce data availability scenarios, such as in rare cancers, newly identified indications, or RW EC approaches based on less than comprehensive data sources. Where possible, some RW EC approaches might produce more complete data and reduced potential for bias, for example by conducting a retrospective chart review study with elaborated data management standards, or by conducting a fully prospective RW study.

-

Though RCT data access has been improved by the existence of data portals, the process of making the data analysis ready is still a tedious and lengthy process. It is a limitation that frequently just control but no treatment group data are available from data portals, while often new RCT data are added only a long time after a successful submission, leading to a rather outdated database. Additionally, potentially owing to patient de-identification, typically not all parts of the data are shared, which can be problematic. Finally, some minor deviations from publications and the available data were seen, possibly due to updating the data after a while when additional follow-up data were available, where also some additional data cleaning activities may have been performed. We suggest that together with the RCT datasets access should also be granted to the protocol, the statistical analysis plan and trial report(s) as a minimum. Additional guidance from the data providers on variable definitions can be helpful as well. Additionally, RCTs in data portals tend to be relatively old with the implication that treatments may no longer be used, or they may have evolved/changed over time.

Note that the findings on unmeasured confounding and missing data are also of relevance in closely related fields, for example for indirect comparisons (e.g. by means of matching-adjusted indirect comparisons), which are frequently carried out based on a mixture of patient-level and aggregated data.

This work provides also further insights on the complexities of OS data, confirming that the estimation of treatment effects on this endpoint requires careful considerations, also in terms of (timings of) subsequent therapies. Mechanisms that may influence OS are complex and special care needs to be applied when investigating effects on this endpoint.

External comparators may yield more informative results about indications and treatments for which there is good knowledge and data availability for important covariates, and less so when data on important covariates are not readily available, or the identification of important covariates is less well known or still growing, such as in rare cancers or newly identified, molecularly driven indications. This highlights the importance of performing in-depth feasibility assessments and obtaining statistical, medical and epidemiological expert input to determine and assess the availability of critical data including eligibility criteria, covariates and endpoints.

The research confirmed as expected that EC analyses of OS are sensitive to violations of critical assumptions, in particular the assumption of no unmeasured confounding. In a comprehensive simulation framework based on real case studies, this publication quantified the extent to which study results may be affected.

5 Conclusions

This research project was undertaken to explore the strengths and limitations of ECs by evaluating operational characteristics dependent on missing data and unmeasured confounding. Eligibility criteria could be matched well for the MM case study, while there were issues for the complex mHSPC criterion of patients being at high risk, including missing precise information for visceral disease and number of bone lesions. Hence, the mHSPC EC case study clearly demonstrated the impact of missing eligibility data. Correspondingly, the two case studies provided valuable scientific insights about which EC studies may have greater challenges than others. As a result, researchers designing a new EC study should consider whether key eligibility criteria and key covariates are available in a specific RW dataset, highlighting again the need for thorough feasibility assessments. The simulations were able to successfully quantify effects of missing data and unmeasured confounding, hinting also at the possibility of missing data handling approaches specifically customised for EC studies.

In summary, missing data in important eligibility criteria and unmeasured confounding due to non-available EC covariates were confirmed to constitute the most challenging factors for EC studies, which require thorough considerations, including a careful go/no-go decision to use or not to use the candidate dataset for analysis.

References

Rippin G, Ballarini N, Sanz H, Largent J, Quinten C, Pignatti F. A review of causal inference for external comparator arm studies. Drug Saf. 2022;45(8):815–37. https://doi.org/10.1007/s40264-022-01206-y.

Gray CM, Grimson F, Layton D, et al. A framework for methodological choice and evidence assessment for studies using external comparators from real-world data. Drug Saf. 2020;43:623–33. https://doi.org/10.1007/s40264-020-00944-1.

Burcu M, Dreyer NA, Franklin JM, et al. Real-world evidence to support regulatory decision-making for medicines: considerations for external control arms. Pharmacoepidemiol Drug Saf. 2020;29:1228–35. https://doi.org/10.1002/pds.4975.

Friends of Cancer Research, Beckers F, Capra W, Cassidy A, et al. Characterizing the use of external controls for augmenting randomized control arms and confirming benefit. White Paper, 2019. https://www.focr.org/sites/default/files/Panel-1_External_Control_Arms2019AM.pdf. Accessed 25 Mar 2022.

Schmidli H, Häring DA, Thomas M, et al. Beyond randomized clinical trials: use of external controls. Clin Pharmacol Ther. 2019;107(4):806–16. https://doi.org/10.1002/cpt.1723.

Mack C, Christian JC, Brinkley E, et al. When context is hard to come by: external comparators and how to use them. Ther Innov Regul Sci. 2020;54(4):932–8. https://doi.org/10.1007/s43441-019-00108-z.

Thorlund K, Dron L, Park JJH, et al. Synthetic and external controls in clinical trials—a primer for researchers. Clin Epidemiol. 2020;12:457–67. https://doi.org/10.2147/CLEP.S242097.

Lodi S, Phillips A, Lundgren J, et al. Effect estimates in randomized trials and observational studies: comparing apples with apples. Am J Epidemiol. 2019;188:1569–77. https://doi.org/10.1093/aje/kwz100.

Ghadessi M, Tang R, Zhou J, et al. A roadmap to using historical controls in clinical trials—by Drug Information Association Adaptive Design Scientific Working Group (DIA-ADSWG). Orphanet J Rare Dis. 2020;15(1):1–19. https://doi.org/10.1186/s13023-020-1332-x.

Burger HU, Gerlinger C, Harbron C, et al. The use of external controls: to what extent can it currently be recommended? Pharm Stat. 2021;20:1002–16. https://doi.org/10.1002/pst.2120.

Seeger JD, Davis KJ, Innacone MR, et al. Methods for external control groups for single arm trials or long-term uncontrolled extensions to randomized clinical trials. Pharmacoepidemiol Drug Saf. 2020;29:1382–92. https://doi.org/10.1002/pds.5141.

Rippin G, Largent J, Hoogendoorn WE, Sanz H, Bosco J, Mack C. External comparator cohort studies: clarification of terminology. Front Drug Saf Regul. 2024;3:1341894. https://doi.org/10.3389/fdsfr.2023.1321894.

Rippin G. External comparators and estimands. Front Drug Saf Regul. 2024;3:1332040. https://doi.org/10.3389/fdsfr.2023.1332040.

European Medicines Agency, Committee for Medicinal Products for Human Use. Guideline on clinical trials in small populations. CHMP/EWP/83561/2005, 2006. https://www.ema.europa.eu/en/clinical-trials-small-populations. Accessed 25 Mar 2022.

European Medicines Agency, International Council on Harmonization (ICH). ICH Topic E10: choice of control groups in clinical trials. CPMP/ICH/364/96, 2001. https://www.ema.europa.eu/en/documents/scientific-guideline/ich-e-10-choice-control-group-clinical-trials-step-5_en.pdf. Accessed 25 Mar 2022.

US Food and Drug Administration. Framework for FDA’s real-world evidence, 2018. https://www.fda.gov/media/120060/download. Accessed 25 Mar 2022.

Gottlieb S. Statement from FDA Commissioner Scott Gottlieb, M.D., on FDA’s new strategic framework to advance use of real-world evidence to support development of drugs and biologics. FDA press release, 2018. Available from: https://www.fda.gov/news-events/press-announcements/statement-fda-commissioner-scott-gottlieb-md-fdas-new-strategic-framework-advance-use-real-world. Accessed 25 Mar 2022.

US FDA. Real-world data: Assessing electronic health records and medical claims data to support regulatory decision-making for drug and biological products. Draft guidance for industry, 2021. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/real-world-data-assessing-electronic-health-records-and-medical-claims-data-support-regulatory. Accessed 25 Mar 2022.

European Medicines Agency. Reflection paper on use of real-world data in non-interventional studies to generate real-world evidence – Scientific guideline. (MA/CHMP/150527/2024), 2024. https://www.ema.europa.eu/en/reflection-paper-use-realworld-data-non-interventional-studies-generate-real-world-evidence-scientific-guideline. Accessed 1 July 2024.

US Food and Drug Administration. Real-world evidence: Considerations regarding non-interventional studies for drug and biological products. Draft guidance, 2024. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/realworld-evidence-considerations-regarding-non-interventional-studies-drug-and-biological-products. Accessed 1 July 2024.

US FDA. Considerations for the design and conduct of externally controlled trials for drug and biological products. Draft guidance for industry, 2023. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/considerations-design-and-conduct-externally-controlled-trials-drug-and-biological-products. Accessed 11 Feb 2023.

EMA. Reflection paper on establishing efficacy based on single-arm trials submitted as pivotal evidence in a marketing authorization (EMA/CHMP/564424/2021). Draft guidance for industry, 2023. https://www.ema.europa.eu/en/documents/scientific-guideline/reflection-paper-establishing-efficacy-based-single-arm-trials-submitted-pivotal-evidence-marketing_en.pdf. Accessed 7 Sep 2023.

Largent JA, Velentgas P. External comparators supporting regulatory submissions 2017–2019. Pharmacoepidemiol Drug Saf. 2020;29(Suppl. 3):Abstract 4848.

Jahanshahi M, Gregg K, Davis G, Ndu A, Miller V, Vockley J, et al. The use of external controls in FDA regulatory decision making. Ther Innov Regul Sci. 2021;55:1019–35. https://doi.org/10.1007/s43441-021-00302-y.

Khozin S, Blumenthal GM, Pazdur R. Real-world data for clinical evidence generation in oncology. JNCI J Natl Cancer Inst. 2017;109(11):1–5. https://doi.org/10.1093/jnci/djx187.

Skovlund E, Leufkens HGM, Smyth JF. The use of real-world data in cancer drug development. Eur J Cancer. 2018;101:69–76. https://doi.org/10.1016/j.ejca.2018.06.036.

Cave A, Kurz X, Arlett P. Real-World data for regulatory decision making: challenges and possible solutions for Europe. Clin Pharmacol Ther. 2019;106(1):36–8.

Eichler HG, Koenig F, Arlett P, et al. Are novel, nonrandomized analytic methods fit for decision making? The need for prospective, controlled, and transparent validation. Clin Pharmacol Ther. 2020;107(4):773–9. https://doi.org/10.1002/cpt.1638.

Faries DE, Zhang X, Zbigniew K, et al. Real world health care data analysis SAS. Cary: SAS Institute; 2020.

Hernán MA, Robins JM. Causal inference: what if. Boca Raton: Chapman & Hall/CRC; 2020.

Mishra-Kalyani PS, Kordestani LA, Rivera DR, Singh H, Ibrahim A, DeClaro RA, et al. Control arms in oncology: current use and future directions. Ann Oncol. 2022;33(4):376–83. https://doi.org/10.1016/j.annonc.2021.12.015.

Goring S, Taylor A, Müller K, et al. Characteristics of non-randomised studies using comparisons with external controls submitted for regulatory approval in the USA and Europe: a systematic review. BMJ Open. 2019;9: e024895. https://doi.org/10.1136/bmjopen-2018-024895.

Stewart M, Norden AD, Dreyer N, et al. An exploratory analysis of real-world endpoints for assessing outcomes among immunotherapy-treated patients with advanced non-small-cell lung cancer. JCO Clin Cancer Inform. 2019;3:1–15. https://doi.org/10.1200/CCI.18.00155.

Davies J, Martinec M, Delmar P, et al. Comparative effectiveness from a single-arm trial and real-world data: alectinib versus ceritinib. J Comp Eff Res. 2018;7(9):855–65. https://doi.org/10.2217/cer-2018-0032.

Carrigan G, Whipple S, Capra WB, et al. Using electronic health records to derive control arms for early phase single-arm lung cancer trials: proof-of-concept in randomized controlled trials. Clin Pharmacol Ther. 2019;107(2):369–77. https://doi.org/10.1002/cpt.1586.

Yin X, Mishra-Kalyani PS, Sridharab R, Stewart MD, Stuart EA, Davi RC. Exploring the potential of external control arms created from patient level data: a case study in non-small cell lung cancer. J Biopharm Stat. 2022;32:204–18. https://doi.org/10.1080/10543406.2021.2011901.

Franklin JM, Glynn RJ, Martin D, et al. Evaluating the use of nonrandomized real-world data analyses for regulatory decision making. Clin Pharmacol Ther. 2019;105(4):867–77. https://doi.org/10.1002/cpt.1351.

Franklin JM, Pawar A, Martin D, et al. Nonrandomized real-world evidence to support regulatory decision making: process for a randomized trial replication project. Clin Pharmacol Ther. 2020;107(4):817–26. https://doi.org/10.1002/cpt.1633.

Wang SV, Schneeweiss S; RCT-DUPLICATE Initiative. Emulation or randomized clinical trials with nonrandomized database analyses. results of 32 clinical trials. JAMA 2023;329(16):1376–85. https://doi.org/10.1001/jama.2023.4221.

Carrigan G, Whipple S, Taylor MD, et al. An evaluation of the impact of missing deaths on overall survival analyses of advanced non-small cell lung cancer patients conducted in an electronic health records database. Pharmacoepidemiol Drug Saf. 2019;28:572–81. https://doi.org/10.1002/pds.4758.

Dávila LA, Weber K, Bavendiek U, Bauersachs J, Wittes J, Yusuf S, Koch A. Digoxin–mortality: randomized vs. observational comparison in the DIG trial. Eur Heart J. 2019;40;3336–41. https://doi.org/10.1093/eurheartj/ehz395.

Durie BGM, Hoering A, Abidi MH, Rajkumar SV, Epstein J, Kahanic SP, et al. Bortezomib with lenalidomide and dexamethasone versus lenalidomide and dexamethasone alone in patients with newly diagnosed myeloma without intent for immediate autologous stem-cell transplant (SWOG S0777): a randomised, open-label, phase 3 trial. Lancet. 2017;389(10068):519–27. https://doi.org/10.1016/S0140-6736(16)31594-X.

Fizazi K, Tran N, Fein L, Matsubara N, Rodriguez-Antolin A, Alekseev BY et al.; LATITUDE Investigators. Abiraterone plus prednisone in metastatic, castration-sensitive prostate cancer. N Engl J Med. 2017;377(4):352–60. https://doi.org/10.1056/NEJMoa1704174.

Fizazi K, Tran N, Fein L, Matsubara N, Rodriguez-Antolin A, Alekseev BY, et al. Abiraterone acetate plus prednisone in patients with newly diagnosed high-risk metastatic castration-sensitive prostate cancer (LATITUDE): final overall survival analysis of a randomised, double-blind, phase 3 trial. Lancet Oncol. 2019;20(5):686–700. https://doi.org/10.1016/S1470-2045(19)30082-8.

PDS data portal. https://projectdatasphere.org/projectdatasphere/html/home. Accessed 21 Feb 2024.

YODA data portal. http://yoda.yale.edu. Accessed 21 Feb 2024.

Guardian Network Research. https://www.guardianresearch.org. Accessed 21 Feb 2024.

Jacobsen JC, Gluud C, Wetterslev J, Winkel P. When and how should multiple imputation be used for handling missing data in randomised clinical trials: a practical guide with flowcharts. BMC Med Res Meth. 2017;17:162. https://doi.org/10.1186/s12874-017-0442-1.

ICH E9(R1) Expert Working Group. ICH E9(R1) addendum on estimands and sensitivity analysis in clinical trials to the guideline on statistical principles for clinical trials. EMA/CHMP/ICH/436221/2017, 2020. https://www.ema.europa.eu/en/documents/scientific-guideline/ich-e9-r1-addendum-estimands-sensitivity-analysis-clinical-trials-guideline-statistical-principles_en.pdf. Accessed 25 Mar 2022.

Morris TP, White IR, Crowther MJ. Using simulation studies to evaluate statistical methods. Stat Med. 2019;38(11):2074–102. https://doi.org/10.1002/sim.8086.

Bender R, Augustin T, Blettner M. Generating survival times to simulate Cox proportional hazards models. Stat Med. 2005;24(11):1713–23. https://doi.org/10.1002/sim.2059.

Hajage D, Chauvet G, Belin L, et al. Closed-form variance estimator for weighted propensity score estimators with survival outcome. Biom J. 2018;60(6):1151–63.

Madley-Dowd P, Hughes R, Tilling K, Heron J. The proportion of missing data should not be used to guide decisions on multiple imputation. J Clin Epidemiol. 2019;110:63–73. https://doi.org/10.1016/j.jclinepi.2019.02.016.

Acknowledgements

This publication or is based on information obtained from http://www.projectdatasphere.org, which is maintained by Project Data Sphere, LLC. Neither Project Data Sphere, LLC nor the owner(s) of any information from the web site have contributed to, approved or are in any way responsible for the contents of this publication. The manuscript was prepared using data from the dataset “Analysis Dataset (NCT00644228-D1-Dataset.csv) ” from the NCTN/NCORP Data Archive of the National Cancer Institute’s National Clinical Trials Network. Data were originally collected from clinical trial NCT number NCT00644228 “Bortezomib with lenalidomide and dexamethasone versus lenalidomide and dexamethasone alone in patients with newly diagnosed myeloma without intent for immediate autologous stem-cell transplant (SWOG S0777): a randomised, open-label, phase 3 trial”. All analyses and conclusions in this manuscript are the sole responsibility of the authors and do not necessarily reflect the opinions or views of the clinical trial investigators, the National Clinical Trials Network or the National Cancer Institute. This publication is also based on data obtained from the Yale University Open Data Access Project, which has an agreement with Janssen Research & Development, L.L.C.. Specifically, the study was carried out under YODA Project # 2021-4649. The interpretation and reporting of research using this data are solely the responsibility of the authors and do not necessarily represent the official views of the Yale University Open Data Access Project or Janssen Research & Development, L.L.C.. Further, RW data were provided by the Guardian Research Network [45], which is a non-profit consortium of 110 community oncology centres in the USA with a database containing patients’ entire medical history, including all demographics, diagnoses, laboratory tests, medications, procedures, encounters and notes of all types (clinical, radiology reports). The authors thank the Project Data Sphere, Yale Open Data Access and the Guardian Research Network for enabling data access, and Andrea McCracken (Guardian Research Network), Michael O’Kelly (IQVIA) and Ettore Mari (IQVIA) for supporting this research.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Funding

This work was funded by the European Medicines Agency (EMA/2017/09/PE, Lot 4).

Conflicts of interest/competing interests

The authors have no conflicts of interests that are directly relevant to the content of this article.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Availability of data and material

Randomised controlled trial data sharing is not permitted by the policies of Project Data Sphere, LLC [45] and Yale Open Data Access [46], which had to be signed by IQVIA at the start of this research project.

Code availability

The programming code for simulations is available in the ESM.

Authors’ contributions

The main author directed all research efforts and drafted the first version of the publication. Contributing authors revised and added to the first version. Each author had participated sufficiently in the work to take responsibility of portions of the content. All authors read and approved the final manuscript.

Disclaimer

The views expressed in this article are the personal views of the authors and may not be understood or quoted as being made on behalf of or reflecting the position of the regulatory agency/agencies or organisations with which the authors are employed/affiliated.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Rippin, G., Sanz, H., Hoogendoorn, W.E. et al. Examining the Effect of Missing Data and Unmeasured Confounding on External Comparator Studies: Case Studies and Simulations. Drug Saf (2024). https://doi.org/10.1007/s40264-024-01467-9

Accepted:

Published:

DOI: https://doi.org/10.1007/s40264-024-01467-9